A Forest Fire Recognition Method Based on Modified Deep CNN Model

Abstract

1. Introduction

2. Materials and Methods

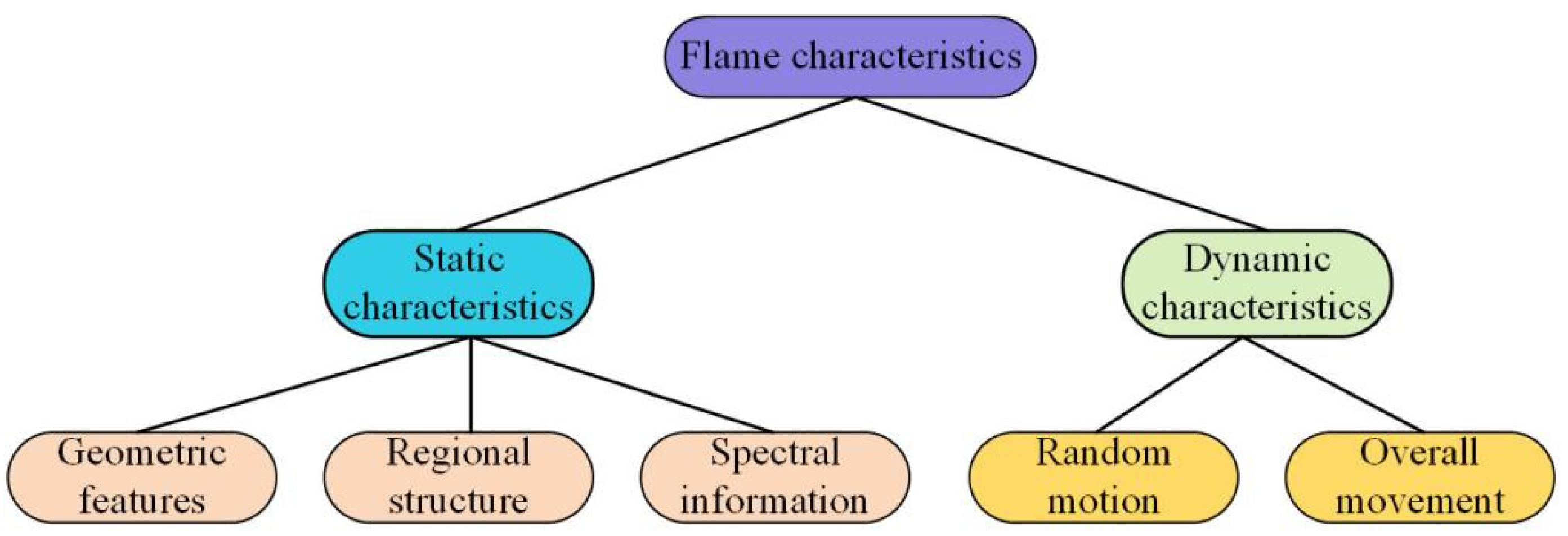

2.1. Image Flame Features and Model Selection

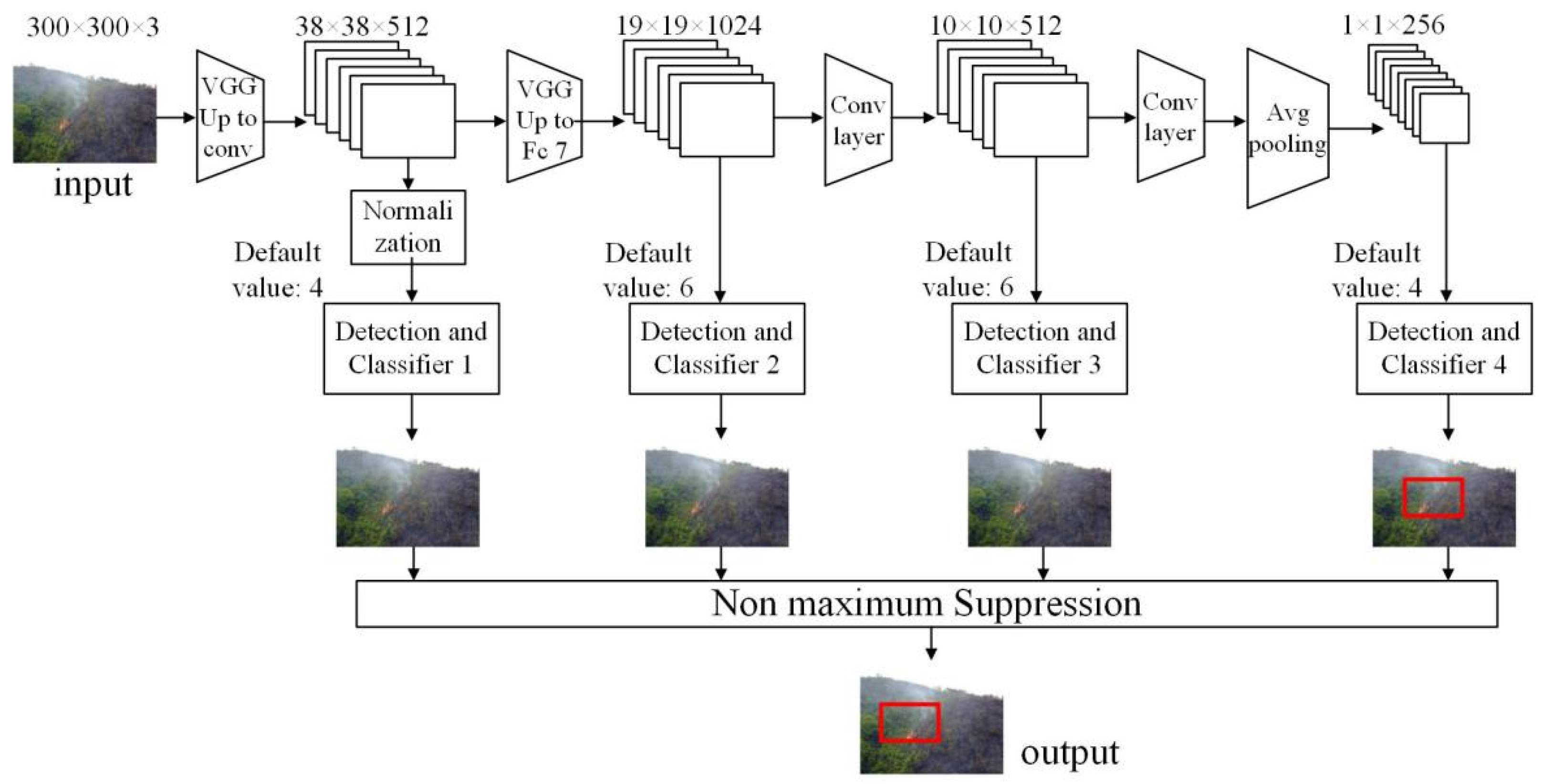

2.2. Modified Deep CNN Model for Forest Fire Recognition

- (1) Input layer: The main task of the input layer is to preprocess the original image. AlexNet requires an input size of 227 × 227. Because the sample set in this study was collected from different sources, the sample images vary in size. Therefore, all images were resized to the required input size, which also reduces the computational complexity.

- (2) Convolutional layer: Five convolutional layers are used in this study. The convolutional layer is the most important part of the network, and its core is the convolutional kernel (or filter). A kernel has two attributes that can be set manually: size and depth. Because the sample set in this study was self-built and small, a large depth is unsuitable, as it would increase the risk of overfitting. Convolution extracts image features while reducing the spatial dimensionality, and stacking convolutional layers extracts features at progressively deeper levels. After each convolution, an activation (correction) function is applied to the result. Commonly used correction functions include sigmoid, the rectified linear unit (ReLU), softplus, and tanh. Their curves are shown below:

- (3)

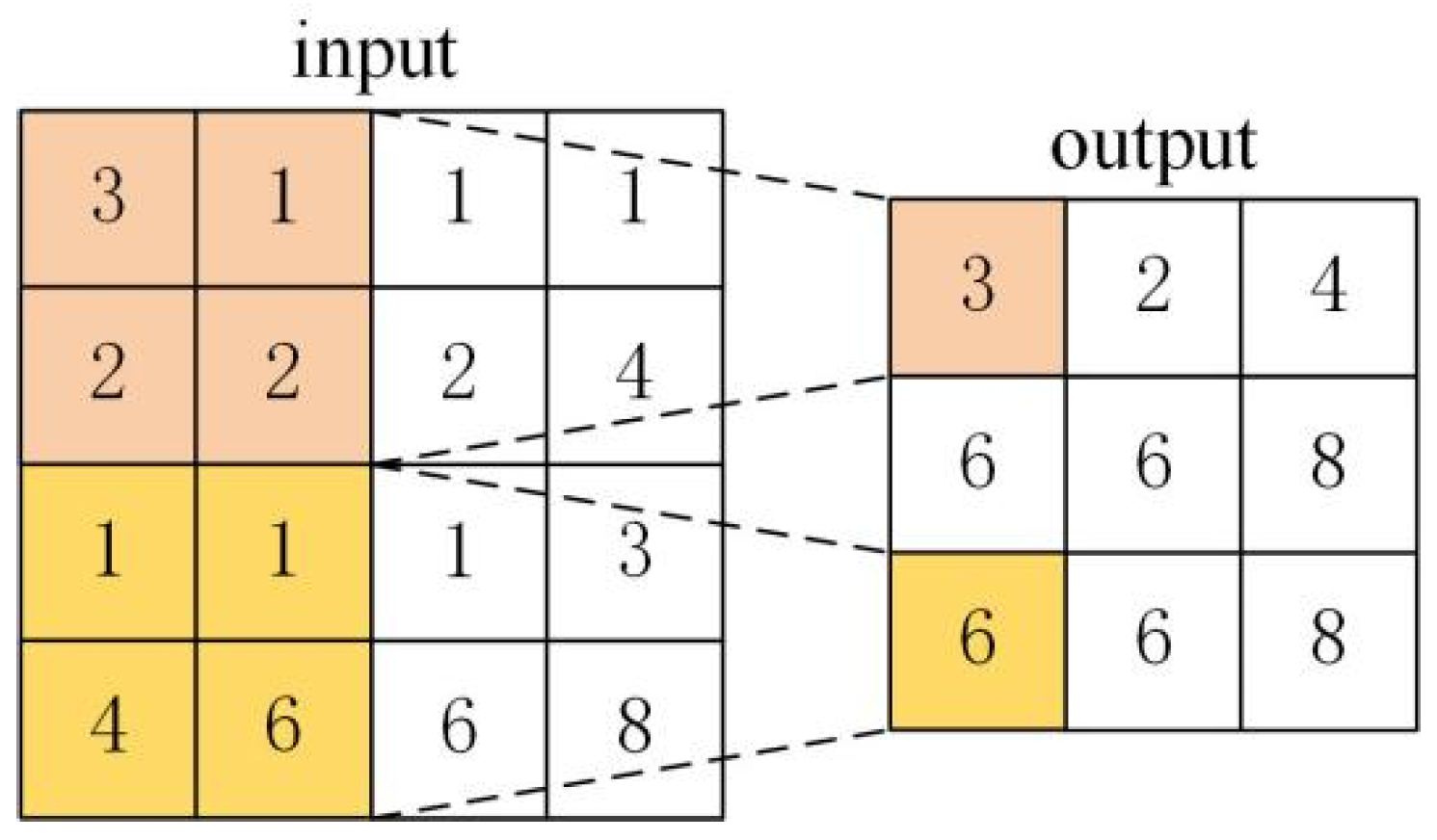

- Pooling layer: The pooling layer is usually followed by the convolutional layer; it is used to reduce the size of the matrix, preserve the main features while reducing the parameters of the next layer, and reduce the computational complexity to prevent overfitting. Max pooling and average pooling methods are used often. For image recognition, the max pooling method can reduce the mean shift caused by convolutional layer parameter errors. It can retain more texture information, which is also important in image processing. The principle is shown in Figure 4.

- (4) Fully connected layer: The fully connected layers perform the final classification. To determine whether the target is a flame, the output of the fully connected layers is divided into two categories, 0 and 1, which represent non-fire and fire-source images, respectively. The convolutional and pooling layers greatly reduce the number of neurons fed into the fully connected layers. For example, in AlexNet, a 227 × 227 input image with 3 color channels is reduced to a 6 × 6 × 256 feature map (9216 values) before the first fully connected layer, and the first two fully connected layers each contain 4096 neurons. Finally, the number of softmax outputs is set by the actual number of classification labels. In the fully connected layers, a dropout mechanism randomly deactivates some neurons during training, which saves computation and helps prevent overfitting.
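The activation functions listed in item (2) and the two pooling methods in item (3) can be illustrated with a minimal plain-Python sketch. It is not the authors' implementation; the 4 × 4 feature map, the 2 × 2 pooling window, and the stride of 2 are illustrative assumptions.

```python
import math


# Activation ("correction") functions, applied element-wise after convolution.
def sigmoid(x: float) -> float:
    # Two branches avoid overflow in math.exp for large |x|.
    if x >= 0.0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def softplus(x: float) -> float:
    # Numerically stable form of log(1 + e^x), a smooth approximation of ReLU.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def tanh(x: float) -> float:
    return math.tanh(x)


def pool2d(fmap, size=2, stride=2, mode="max"):
    """Max or average pooling over a 2D feature map (list of equal-length rows), no padding."""
    rows, cols = len(fmap), len(fmap[0])
    out = []
    for i in range(0, rows - size + 1, stride):
        out_row = []
        for j in range(0, cols - size + 1, stride):
            window = [fmap[i + di][j + dj] for di in range(size) for dj in range(size)]
            out_row.append(max(window) if mode == "max" else sum(window) / len(window))
        out.append(out_row)
    return out


# Hypothetical 4 x 4 convolution output.
fmap = [
    [1.0, 3.0, 2.0, -2.0],
    [4.0, 6.0, 1.0, 1.0],
    [-1.0, 2.0, 5.0, 7.0],
    [1.0, 1.0, 3.0, 2.0],
]
activated = [[relu(v) for v in row] for row in fmap]  # negatives clipped to 0.0
print(pool2d(activated, mode="max"))      # [[6.0, 2.0], [2.0, 7.0]]
print(pool2d(activated, mode="average"))  # [[3.5, 1.0], [1.0, 4.25]]
```

Max pooling keeps the strongest response in each window, whereas average pooling blends the whole window, which is why max pooling tends to retain sharper texture detail.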

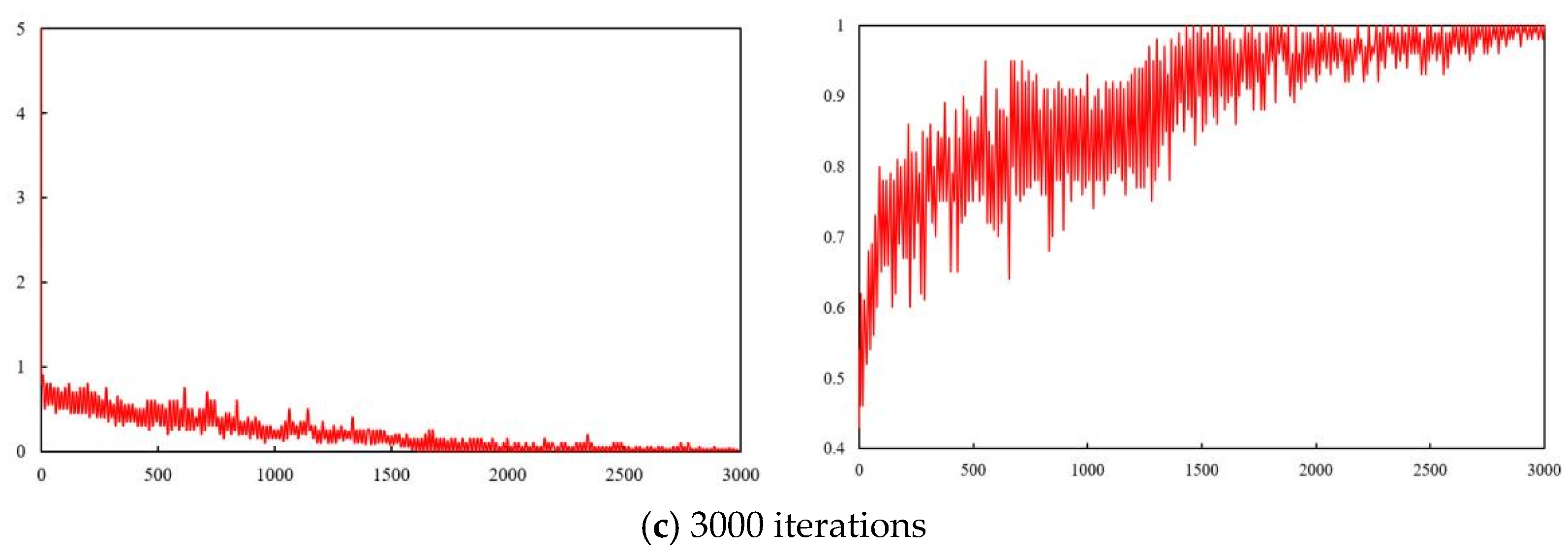
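For the two-class output described in item (4), a numerically stable softmax and dropout can be sketched in plain Python. The logits and hidden activations are hypothetical values, the index convention (0 = non-fire, 1 = fire) follows item (4), and inverted dropout (scaling the kept units during training) is one common formulation, assumed here rather than taken from the paper.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax: subtract the largest logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dropout(activations, p, rng, training=True):
    """Inverted dropout: during training, zero each unit with probability p and
    scale the kept units by 1 / (1 - p); at inference, return the input unchanged."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() >= p else 0.0 for a in activations]


# Hypothetical logits from the last fully connected layer for one image.
# Index 0 = non-fire, index 1 = fire.
logits = [0.4, 1.9]
probs = softmax(logits)
label = max(range(len(probs)), key=lambda i: probs[i])
print([round(q, 3) for q in probs], "fire" if label == 1 else "non-fire")  # [0.182, 0.818] fire

rng = random.Random(0)
hidden = [0.8, 1.2, 0.5, 2.0]
print(dropout(hidden, p=0.5, rng=rng))                  # training: each unit zeroed or doubled
print(dropout(hidden, p=0.5, rng=rng, training=False))  # inference: [0.8, 1.2, 0.5, 2.0]
```

Dropout is active only during training; at inference all neurons contribute, and the 1 / (1 − p) scaling keeps the expected activation the same in both modes.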

2.3. Parameter Selection

- (1)

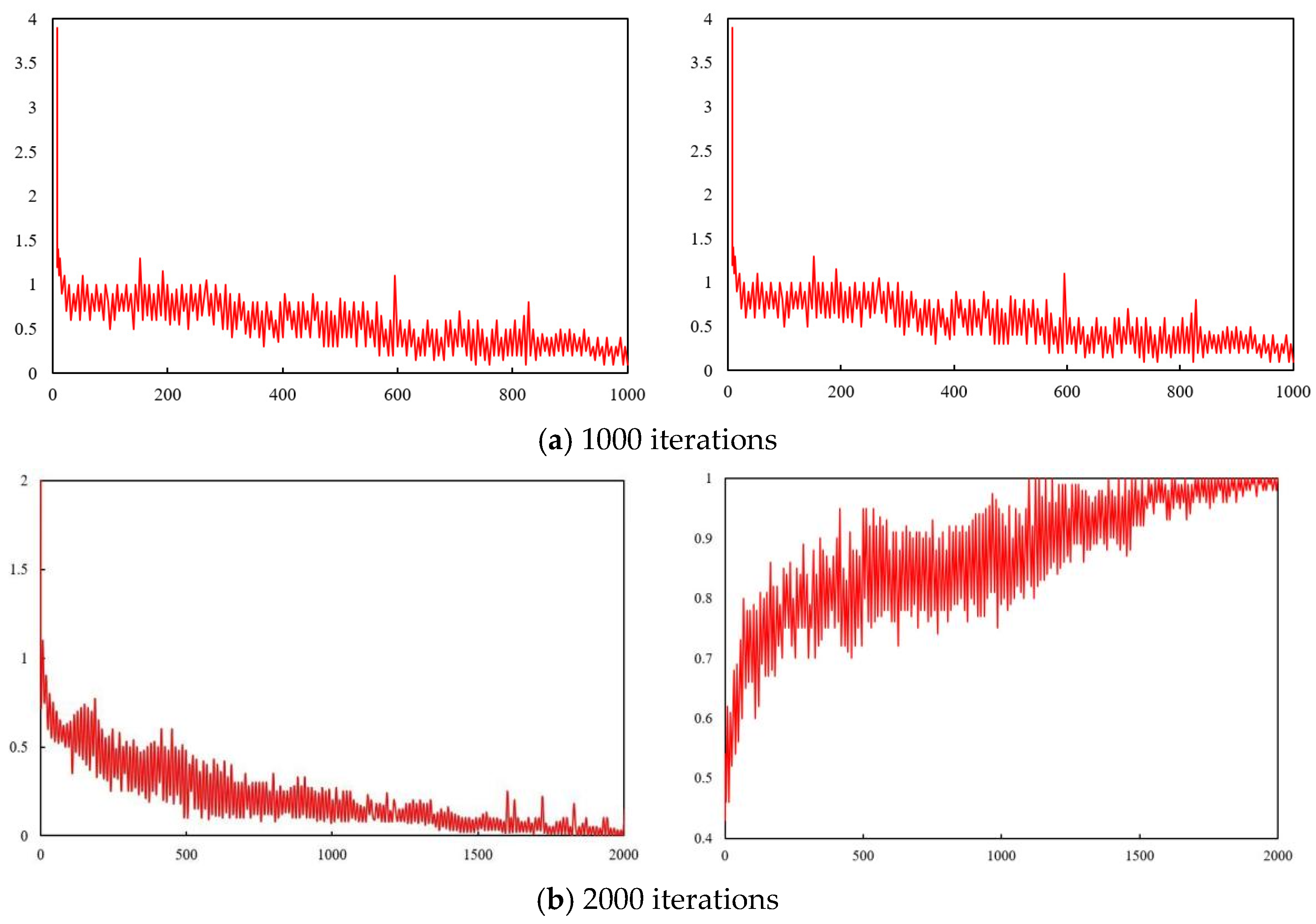

- Selection of Number of Iterations

- (2) Comparative Experiments on Different Learning Parameters

3. Results and Discussion

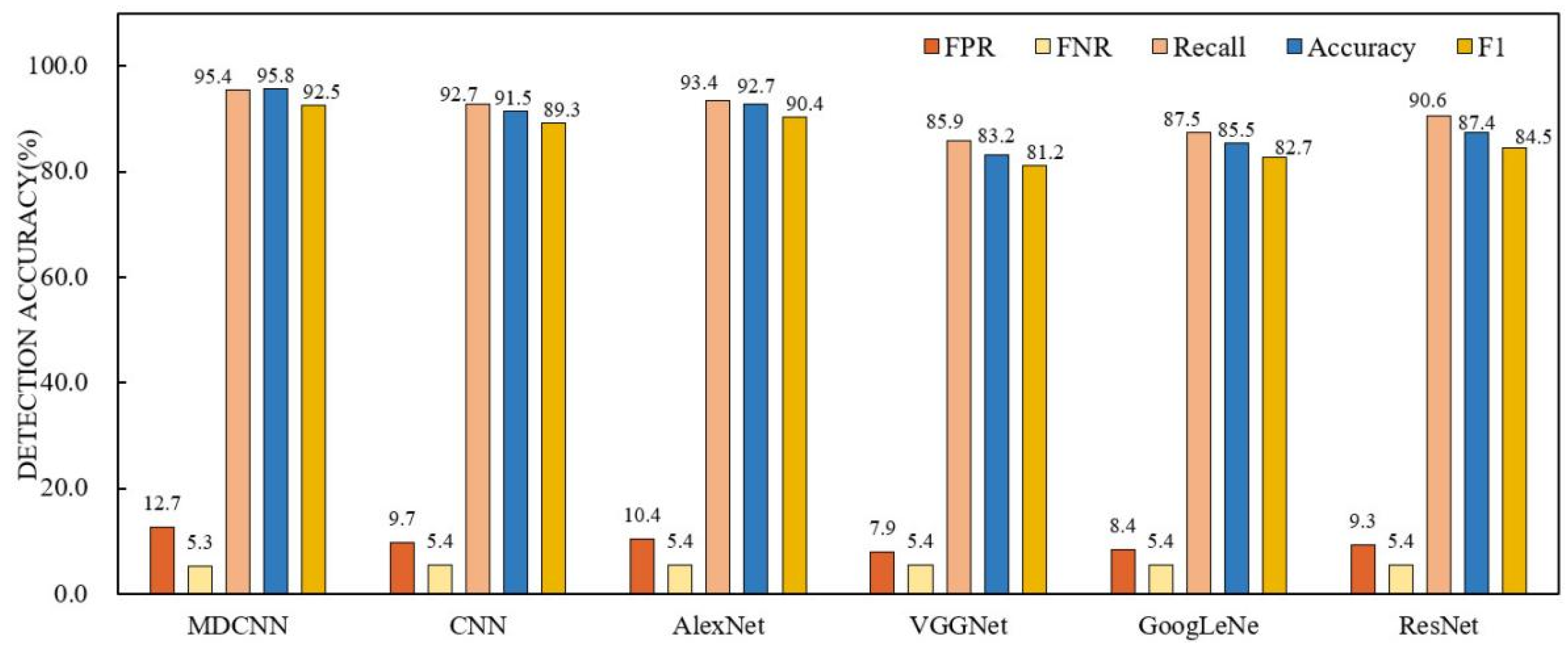

3.1. Experimental Calculation and Result Analysis

- False Negative Rate (FNR): 5.3%

- False Positive Rate (FPR): 12.7%

- Recall Rate: 95.4%

- Accuracy Rate: 95.8%

| Model | FPR (%) | FNR (%) | Recall (%) | Accuracy (%) | F1 |
|---|---|---|---|---|---|
| MDCNN | 12.7 | 5.3 | 95.4 | 95.8 | 92.5 |
| CNN | 9.7 | 5.4 | 92.7 | 91.5 | 89.3 |
| AlexNet | 10.4 | 5.4 | 93.4 | 92.7 | 90.4 |
| VGGNet | 7.9 | 5.4 | 85.9 | 83.2 | 81.2 |
| GoogLeNet | 8.4 | 5.4 | 87.5 | 85.5 | 82.7 |
| ResNet | 9.3 | 5.4 | 90.6 | 87.4 | 84.5 |
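The metrics above are not defined in this section, so the following sketch assumes the standard confusion-matrix definitions with fire as the positive class. Under these definitions FNR equals 1 − recall, so the paper's own definitions may differ from the ones shown. The counts are hypothetical.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard confusion-matrix metrics, treating 'fire' as the positive class."""
    recall = tp / (tp + fn)                 # fire images correctly detected
    fnr = fn / (tp + fn)                    # fire images missed (= 1 - recall)
    fpr = fp / (fp + tn)                    # non-fire images flagged as fire
    precision = tp / (tp + fp)              # fire detections that are real fires
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"recall": recall, "FNR": fnr, "FPR": fpr,
            "precision": precision, "accuracy": accuracy, "F1": f1}


# Hypothetical counts for illustration only (not the paper's data).
metrics = binary_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

F1 combines precision and recall, so a model can have high recall and still a lower F1 if its false positive count is large.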

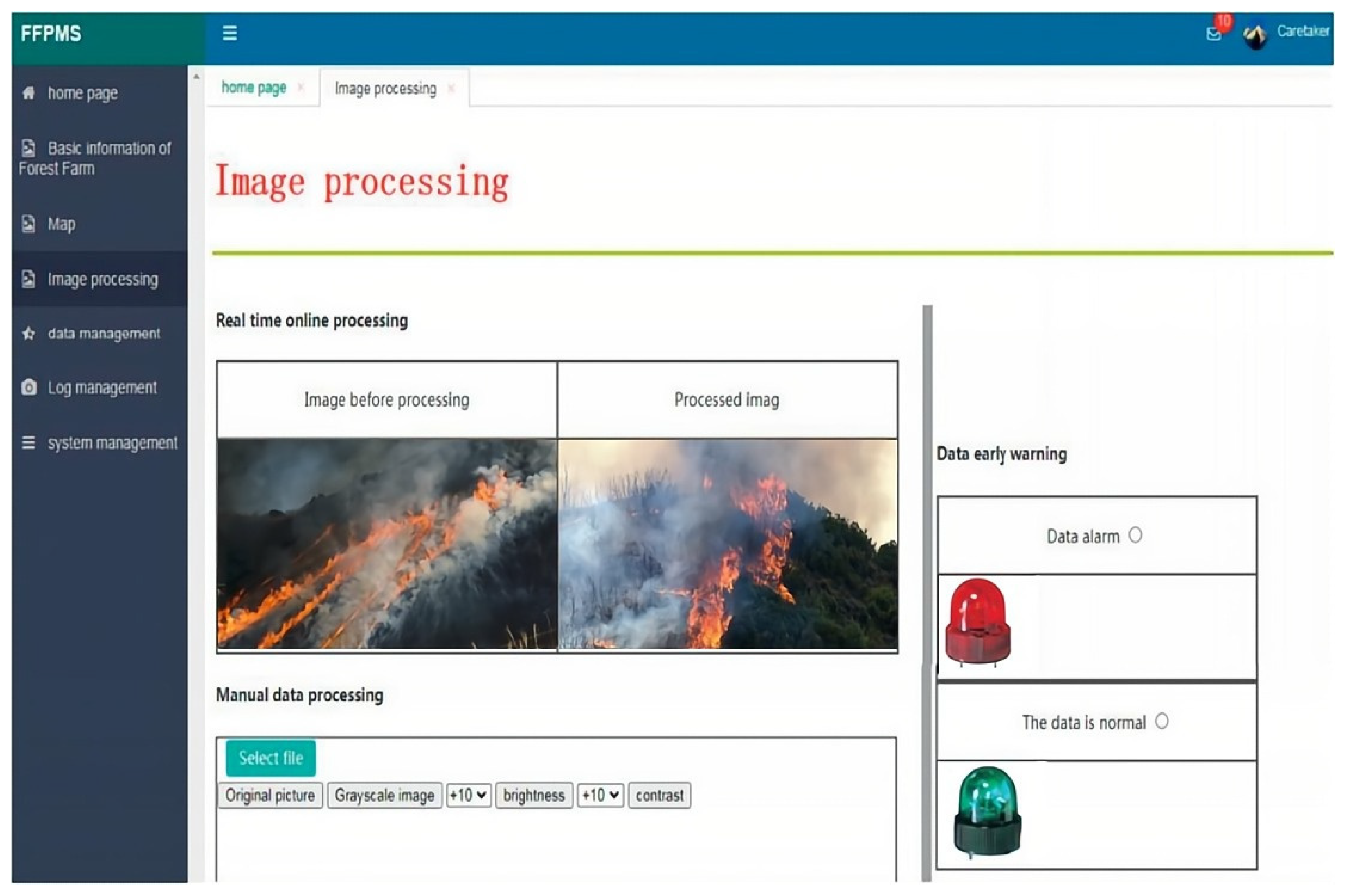

3.2. Anti-Interference Experiment

4. Conclusions

- (1) A forest fire recognition model was developed using a modified deep CNN, and extensive training produced a highly accurate recognition model for fire video images. The model's accuracy and generalization capability were assessed on a diverse set of fire and non-fire scenarios.

- (2) To reduce false recognitions caused by scenes that resemble fire, a method based on the bounding-box coordinates in consecutive frames was implemented. This approach effectively reduces interference from static scenes and improves the recognition capability of the model.

- (3) The model performed well in flame detection across multiple metrics. On the flame image set it achieved a false alarm rate of only 0.563%, and overall it achieved a false positive rate of 12.7% and a false negative rate of 5.3%. Its recall rate of 95.4% indicates high sensitivity: the model identified the large majority of actual flame instances. Its overall accuracy of 95.8% indicates reliable classification of both flame and non-flame instances. These results support the effectiveness of the proposed method in improving the precision of flame detection, a task that is typically prone to errors.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Wahyono; Harjoko, A.; Dharmawan, A.; Adhinata, F.D.; Kosala, G.; Jo, K.-H. Real-Time Forest Fire Detection Framework Based on Artificial Intelligence Using Color Probability Model and Motion Feature Analysis. Fire 2022, 5, 23.

- [2] Alkhatib, A.A.A.; Abdelal, Q.; Kanan, T. Wireless Sensor Network for Forest Fire Detection and Behavior Analysis. Int. J. Adv. Soft Comput. Its Appl. 2021, 13, 82–104.

- [3] Apriani, Y.; Oktaviani, W.A.; Sofian, I.M. Design and Implementation of LoRa-Based Forest Fire Monitoring System. J. Robot. Control 2022, 3, 236–243.

- [4] Guede-Fernández, F.; Martins, L.; de Almeida, R.V.; Gamboa, H.; Vieira, P. A Deep Learning Based Object Identification System for Forest Fire Detection. Fire 2021, 4, 75.

- [5] Majid, S.; Alenezi, F.; Masood, S.; Ahmad, M.; Gündüz, E.S.; Polat, K. Attention based CNN model for fire detection and localization in real-world images. Expert Syst. Appl. 2021, 189, 116114.

- [6] Abid, F. A Survey of Machine Learning Algorithms Based Forest Fires Prediction and Detection Systems. Fire Technol. 2020, 57, 559–590.

- [7] Avazov, K.; Hyun, A.E.; Sami S, A.A.; Khaitov, A.; Abdusalomov, A.B.; Cho, Y.I. Forest Fire Detection and Notification Method Based on AI and IoT Approaches. Futur. Internet 2023, 15, 61.

- [8] Azevedo, B.F.; Brito, T.; Lima, J.; Pereira, A.I. Optimum Sensors Allocation for a Forest Fires Monitoring System. Forests 2021, 12, 453.

- [9] Parajuli, A.; Manzoor, S.A.; Lukac, M. Areas of the Terai Arc landscape in Nepal at risk of forest fire identified by fuzzy analytic hierarchy process. Environ. Dev. 2023, 45, 100810.

- [10] Dutta, S.; Vaishali, A.; Khan, S.; Das, S. Forest Fire Risk Modeling Using GIS and Remote Sensing in Major Landscapes of Himachal Pradesh. In Ecological Footprints of Climate Change: Adaptive Approaches and Sustainability; Springer International Publishing: Cham, Switzerland, 2023; pp. 421–442.

- [11] Singo, M.V.; Chikoore, H.; Engelbrecht, F.A.; Ndarana, T.; Muofhe, T.P.; Mbokodo, I.L.; Murungweni, F.M.; Bopape, M.-J.M. Projections of future fire risk under climate change over the South African savanna. Stoch. Environ. Res. Risk Assess. 2023, 37, 2677–2691.

- [12] Yandouzi, M.; Grari, M.; Idrissi, I.; Boukabous, M.; Moussaoui, O.; Azizi, M.; Ghoumid, K.; Elmiad, A.K. Forest Fires Detection using Deep Transfer Learning. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 0130832.

- [13] Feizizadeh, B.; Omarzadeh, D.; Mohammadnejad, V.; Khallaghi, H.; Sharifi, A.; Karkarg, B.G. An integrated approach of artificial intelligence and geoinformation techniques applied to forest fire risk modeling in Gachsaran, Iran. J. Environ. Plan. Manag. 2022, 66, 1369–1391.

- [14] Alkhatib, R.; Sahwan, W.; Alkhatieb, A.; Schütt, B. A Brief Review of Machine Learning Algorithms in Forest Fires Science. Appl. Sci. 2023, 13, 8275.

- [15] Arteaga, B.; Díaz, M.; Jojoa, M. Deep Learning Applied to Forest Fire Detection. In Proceedings of the IEEE International Symposium on Signal Processing and Information Technology, Louisville, KY, USA, 9–11 December 2020.

- [16] Khan, A.; Hassan, B.; Khan, S.; Ahmed, R.; Abuassba, A. DeepFire: A Novel Dataset and Deep Transfer Learning Benchmark for Forest Fire Detection. Mob. Inf. Syst. 2022, 2022, 5358359.

- [17] Sathishkumar, V.E.; Cho, J.; Subramanian, M.; Naren, O.S. Forest fire and smoke detection using deep learning-based learning without forgetting. Fire Ecol. 2023, 19, 1–17.

- [18] Crowley, M.A.; Stockdale, C.A.; Johnston, J.M.; Wulder, M.A.; Liu, T.; McCarty, J.L.; Rieb, J.T.; Cardille, J.A.; White, J.C. Towards a whole-system framework for wildfire monitoring using Earth observations. Glob. Chang. Biol. 2022, 29, 1423–1436.

- [19] Michael, Y.; Helman, D.; Glickman, O.; Gabay, D.; Brenner, S.; Lensky, I.M. Forecasting fire risk with machine learning and dynamic information derived from satellite vegetation index time-series. Sci. Total Environ. 2020, 764, 142844.

- [20] Mao, W.; Wang, W.; Dou, Z.; Li, Y. Fire Recognition Based on Multi-Channel Convolutional Neural Network. Fire Technol. 2018, 54, 531–554.

- [21] Thach, N.N.; Ngo, D.B.-T.; Xuan-Canh, P.; Hong-Thi, N.; Thi, B.H.; Nhat-Duc, H.; Dieu, T.B. Spatial pattern assessment of tropical forest fire danger at Thuan Chau area (Vietnam) using GIS-based advanced machine learning algorithms: A comparative study. Ecol. Inform. 2018, 46, 74–85.

- [22] Vikram, R.; Sinha, D.; De, D.; Das, A.K. EEFFL: Energy efficient data forwarding for forest fire detection using localization technique in wireless sensor network. Wirel. Networks 2020, 26, 5177–5205.

- [23] Moayedi, H.; Mehrabi, M.; Bui, D.T.; Pradhan, B.; Foong, L.K. Fuzzy-metaheuristic ensembles for spatial assessment of forest fire susceptibility. J. Environ. Manag. 2020, 260, 109867.

- [24] Peruzzi, G.; Pozzebon, A.; Van Der Meer, M. Fight Fire with Fire: Detecting Forest Fires with Embedded Machine Learning Models Dealing with Audio and Images on Low Power IoT Devices. Sensors 2023, 23, 783.

- [25] Grari, M.; Yandouzi, M.; Idrissi, I.; Boukabous, M. Using IoT and ML for Forest Fire Detection, Monitoring, and Prediction: A Literature Review. J. Theor. Appl. Inf. Technol. 2022, 100, 5445–5461.

- [26] Nikhil, S.; Danumah, J.H.; Saha, S.; Prasad, M.K.; Rajaneesh, A.; Mammen, P.C.; Ajin, R.S.; Kuriakose, S.L. Application of GIS and AHP Method in Forest Fire Risk Zone Mapping: A Study of the Parambikulam Tiger Reserve, Kerala, India. J. Geovis. Spat. Anal. 2021, 5, 1–14.

- [27] Abdusalomov, A.B.; Islam, B.M.S.; Nasimov, R.; Mukhiddinov, M.; Whangbo, T.K. An Improved Forest Fire Detection Method Based on the Detectron2 Model and a Deep Learning Approach. Sensors 2023, 23, 1512.

- [28] Saydirasulovich, S.N.; Mukhiddinov, M.; Djuraev, O.; Abdusalomov, A.; Cho, Y.-I. An Improved Wildfire Smoke Detection Based on YOLOv8 and UAV Images. Sensors 2023, 23, 8374.

- [29] Ntinopoulos, N.; Sakellariou, S.; Christopoulou, O.; Sfougaris, A. Fusion of Remotely-Sensed Fire-Related Indices for Wildfire Prediction through the Contribution of Artificial Intelligence. Sustainability 2023, 15, 11527.

- [30] Nguyen, H.D. Hybrid models based on deep learning neural network and optimization algorithms for the spatial prediction of tropical forest fire susceptibility in Nghe An province, Vietnam. Geocarto Int. 2022, 37, 11281–11305.

- [31] Garcia, T.; Ribeiro, R.; Bernardino, A. Wildfire aerial thermal image segmentation using unsupervised methods: A multilayer level set approach. Int. J. Wildland Fire 2023, 32, 435–447.

- [32] Deshmukh, A.A.; Sonar SD, B.; Ingole, R.V.; Agrawal, R.; Dhule, C.; Morris, N.C. Satellite Image Segmentation for Forest Fire Risk Detection using Gaussian Mixture Models. In Proceedings of the 2023 2nd International Conference on Applied Artificial Intelligence and Computing (ICAAIC), Salem, India, 4–6 May 2023; pp. 806–811.

- [33] Dinh, C.T.; Nguyen, T.H.; Do, T.H.; Bui, N.A. Research and Evaluate some Deep Learning Methods to Detect Forest Fire based on Images from Camera. In Proceedings of the 12th Conference on Information Technology and Its Applications (CITA 2023), Danang, Vietnam, 28–29 July 2023; pp. 181–191. Available online: https://elib-vku-udn-vn.translate.goog/handle/123456789/2683?mode=full&_x_tr_sch=http&_x_tr_sl=vi&_x_tr_tl=sr&_x_tr_hl=sr-Latn&_x_tr_pto=sc (accessed on 20 November 2023).

- [34] Tupenaite, L.; Zilenaite, V.; Kanapeckiene, L.; Gecys, T.; Geipele, I. Sustainability assessment of modern high-rise timber buildings. Sustainability 2021, 13, 8719.

- [35] Reder, S.; Mund, J.P.; Albert, N.; Waßermann, L.; Miranda, L. Detection of Windthrown Tree Stems on UAV-Orthomosaics Using U-Net Convolutional Networks. Remote Sens. 2021, 14, 75.

- [36] Šerić, L.; Ivanda, A.; Bugarić, M.; Braović, M. Semantic Conceptual Framework for Environmental Monitoring and Surveillance—A Case Study on Forest Fire Video Monitoring and Surveillance. Electronics 2022, 11, 275.

- [37] Tran, D.Q.; Park, M.; Jeon, Y.; Bak, J.; Park, S. Forest-Fire Response System Using Deep-Learning-Based Approaches with CCTV Images and Weather Data. IEEE Access 2022, 10, 66061–66071.

- [38] Casal-Guisande, M.; Bouza-Rodríguez, J.-B.; Cerqueiro-Pequeño, J.; Comesaña-Campos, A. Design and Conceptual Development of a Novel Hybrid Intelligent Decision Support System Applied towards the Prevention and Early Detection of Forest Fires. Forests 2023, 14, 172.

- [39] Saha, S.; Bera, B.; Shit, P.K.; Bhattacharjee, S.; Sengupta, N. Prediction of forest fire susceptibility applying machine and deep learning algorithms for conservation priorities of forest resources. Remote Sens. Appl. Soc. Environ. 2023, 29, 100917.

- [40] Alsheikhy, A.A. A Fire Detection Algorithm Using Convolutional Neural Network. J. King Abdulaziz Univ. Eng. Sci. 2022, 32, 39.

- [41] Casallas, A.; Jiménez-Saenz, C.; Torres, V.; Quirama-Aguilar, M.; Lizcano, A.; Lopez-Barrera, E.A.; Ferro, C.; Celis, N.; Arenas, R. Design of a Forest Fire Early Alert System through a Deep 3D-CNN Structure and a WRF-CNN Bias Correction. Sensors 2022, 22, 8790.

- [42] Ryu, J.; Kwak, D. A Study on a Complex Flame and Smoke Detection Method Using Computer Vision Detection and Convolutional Neural Network. Fire 2022, 5, 108.

- [43] Chaturvedi, S.; Khanna, P.; Ojha, A. A survey on vision-based outdoor smoke detection techniques for environmental safety. ISPRS J. Photogramm. Remote Sens. 2022, 185, 158–187.

| Layer Name | Kernel Size / Neurons | Stride | Input Size |
|---|---|---|---|
| Conv1 | 11 × 11 | 4 | 224 × 224 × 3 |
| Max-pool1 | 3 × 3 | 2 | 55 × 55 × 96 |
| Conv2 | 5 × 5 | 1 | 27 × 27 × 96 |
| Max-pool2 | 3 × 3 | 2 | 27 × 27 × 256 |
| Conv3 | 3 × 3 | 1 | 13 × 13 × 256 |
| Conv4 | 3 × 3 | 1 | 13 × 13 × 384 |
| Conv5 | 3 × 3 | 1 | 13 × 13 × 384 |
| Max-pool3 | 3 × 3 | 2 | 13 × 13 × 256 |
| Fc1 | 2048 | / | 4096 |
| Fc2 | 2048 | / | 4096 |
| Fc3 | 1000 | / | 4096 |
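The input sizes in this table can be checked with the standard output-size formula for a convolution or pooling window, floor((n + 2p − k) / s) + 1, where n is the input width, k the kernel size, s the stride, and p the padding. The table does not list padding, so the sketch assumes the paddings used in torchvision's AlexNet (2 for Conv1 and Conv2, 1 for Conv3 to Conv5, 0 for pooling). With these values a 224 × 224 input reproduces every input size in the table, and a 227 × 227 input with no padding in Conv1 yields the same 55 × 55 output, consistent with the 227 × 227 input size given for the input layer.

```python
def out_size(n, kernel, stride, padding=0):
    """Output width of a convolution or pooling window: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, output channels or None for pooling).
# Kernels, strides, and channels follow the table; paddings are assumed
# (torchvision's AlexNet values), since the table does not list them.
LAYERS = [
    ("Conv1", 11, 4, 2, 96),
    ("Max-pool1", 3, 2, 0, None),
    ("Conv2", 5, 1, 2, 256),
    ("Max-pool2", 3, 2, 0, None),
    ("Conv3", 3, 1, 1, 384),
    ("Conv4", 3, 1, 1, 384),
    ("Conv5", 3, 1, 1, 256),
    ("Max-pool3", 3, 2, 0, None),
]


def trace(n, channels, layers=LAYERS):
    """Return (layer name, output width, output channels) for each layer."""
    shapes = []
    for name, k, s, p, c_out in layers:
        n = out_size(n, k, s, p)
        if c_out is not None:  # pooling keeps the channel count
            channels = c_out
        shapes.append((name, n, channels))
    return shapes


for name, n, c in trace(224, 3):
    print(f"{name:<10} output {n} x {n} x {c}")

print(out_size(227, 11, 4))  # 55: a 227 x 227 input without padding also gives 55 x 55
```

Each layer's output matches the next row's input size in the table, and the final 6 × 6 × 256 map flattens to 9216 values before the first fully connected layer.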

| Name | Training Environment |
|---|---|
| CPU | Intel® Xeon® Gold 6240 @ 2.59 GHz |
| GPU | NVIDIA GeForce RTX 3090 (24 GB) |
| RAM | 128 GB |
| PyCharm version | 2020.3.2 |
| Python version | 3.7.10 |
| PyTorch version | 1.6.0 |
| CUDA version | 11.1 |
| cuDNN version | 8.0.5 |

| Image Category | Description |
|---|---|
| Category 1 | Outdoor fire source with large flames and thick smoke |
| Category 2 | Fire source with large flames and less background interference |
| Category 5 | Outdoor burning image (interference) |
| Category 6 | Outdoor lighter image (interference) |
| Category 7 | Evening sunset (interference) |
| Category 8 | Picture of car lights (interference) |

| Image Source | Total Frames | Flame Frames | False Alarm Rate (Literature [41]) | False Alarm Rate (Literature [42]) | False Alarm Rate (Literature [43]) | False Alarm Rate (Proposed Model) |
|---|---|---|---|---|---|---|
| Interference term | 315 | 0 | 2.64% | 5.28% | 15.9% | 0% |
| Flame image | 350 | 200 | 5.17% | 17.3% | 31.23% | 0.563% |
| Video 1 | 800 | 364 | 17.6% | 35.6% | 46.53% | 11.87% |

| Picture | Probability Value | State |
|---|---|---|
| a | 0.653 | Fire |
| b | 0.765 | Fire |
| c | 0.779 | Fire |
| d | 0.875 | Fire |
| e | 0.231 | Non-fire |
| f | 0.187 | Non-fire |
| g | 0.138 | Non-fire |
| h | 0.327 | Non-fire |
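The decision threshold behind the State column is not stated. Any threshold above 0.327 and at most 0.653 reproduces the table; the minimal sketch below assumes 0.5.

```python
# Probability values copied from the table; the 0.5 threshold is an assumption.
THRESHOLD = 0.5
PROBABILITIES = {"a": 0.653, "b": 0.765, "c": 0.779, "d": 0.875,
                 "e": 0.231, "f": 0.187, "g": 0.138, "h": 0.327}


def state(probability, threshold=THRESHOLD):
    """Map the model's fire probability to the table's State label."""
    return "Fire" if probability >= threshold else "Non-fire"


for picture, p in PROBABILITIES.items():
    print(picture, p, state(p))
```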

| Figure | a | b | c | d | e | f |
|---|---|---|---|---|---|---|
| (x_min, y_min) | 93, 142 | 94, 142 | 86, 145 | 0 | 0 | 206.5 |
| (x_max, y_max) | 367, 198 | 374, 196 | 385, 204 | 0 | 0 | 473.92 |
| d (px) | 38.2 | 3.35 | 13.36 | 0 | 0 | 0 |
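The frame-to-frame idea behind this table can be sketched as follows: a flickering flame's bounding box tends to shift between consecutive frames, whereas a static fire-like object such as a lamp stays put. The sketch measures the pixel shift of the box center between frames and flags a track as static interference when every shift stays below a threshold. This is a hypothetical illustration, not the authors' method: the center-shift measure, the 2-pixel threshold, and the sample tracks are assumptions, and d (px) in the table may be defined differently.

```python
import math


def center(box):
    """Center (x, y) of a box given as (x_min, y_min, x_max, y_max) in pixels."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)


def center_shift(prev_box, box):
    """Euclidean distance in pixels between the centers of two boxes."""
    (x0, y0), (x1, y1) = center(prev_box), center(box)
    return math.hypot(x1 - x0, y1 - y0)


def is_static(track, min_shift_px=2.0):
    """Hypothetical rule: a track whose box center never moves by min_shift_px
    or more between consecutive frames is treated as static interference."""
    shifts = [center_shift(a, b) for a, b in zip(track, track[1:])]
    return bool(shifts) and max(shifts) < min_shift_px


# Hypothetical four-frame tracks: (x_min, y_min, x_max, y_max) per frame.
flame = [(100, 140, 360, 200), (96, 136, 372, 198), (104, 146, 366, 206), (92, 138, 380, 196)]
lamp = [(300, 50, 340, 80), (300, 50, 340, 80), (301, 50, 341, 80), (300, 51, 340, 81)]
print(is_static(flame), is_static(lamp))  # False True
```

A single-frame track has no shifts to measure, so the sketch does not flag it as static.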

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zheng, S.; Zou, X.; Gao, P.; Zhang, Q.; Hu, F.; Zhou, Y.; Wu, Z.; Wang, W.; Chen, S. A Forest Fire Recognition Method Based on Modified Deep CNN Model. Forests 2024, 15, 111. https://doi.org/10.3390/f15010111

Zheng S, Zou X, Gao P, Zhang Q, Hu F, Zhou Y, Wu Z, Wang W, Chen S. A Forest Fire Recognition Method Based on Modified Deep CNN Model. Forests. 2024; 15(1):111. https://doi.org/10.3390/f15010111

Chicago/Turabian Style: Zheng, Shaoxiong, Xiangjun Zou, Peng Gao, Qin Zhang, Fei Hu, Yufei Zhou, Zepeng Wu, Weixing Wang, and Shihong Chen. 2024. "A Forest Fire Recognition Method Based on Modified Deep CNN Model" Forests 15, no. 1: 111. https://doi.org/10.3390/f15010111

APA Style: Zheng, S., Zou, X., Gao, P., Zhang, Q., Hu, F., Zhou, Y., Wu, Z., Wang, W., & Chen, S. (2024). A Forest Fire Recognition Method Based on Modified Deep CNN Model. Forests, 15(1), 111. https://doi.org/10.3390/f15010111