1. Introduction

The number of uncontrolled wildfires around the globe is increasing due to the rise in global temperature and the extreme weather brought on by climate change in recent years [1,2]. Longer periods of high temperatures lead to drier seasons and the accumulation of combustible material in forests. These conditions yield an increase in uncontrolled and harmful wildfires, which can be worsened by strong winds or thunderstorms. Without active and stringent measures to monitor and manage forest regions, it can be difficult to prevent and contain uncontrolled wildfires and the resulting irreversible damage. This is an especially challenging undertaking due to the vast size of typical forests, the danger posed, and the highly intensive labor required to fight fires or carry out preventive maintenance.

Information collected using remote sensing methods (e.g., satellites or aircraft) can be used to accurately monitor forest environments while reducing labor and operational costs [3,4]. In particular, uncrewed aerial vehicles (UAVs) have gained attention in forestry research and operations (among other industrial applications) since they are easily deployable, provide a time-flexible operation, and can produce excellent spatial resolution measurements with different sensing modalities (e.g., visual, thermal, spectral or laser range) [5]. UAVs have garnered interest as a promising remote sensing modality due to their accessibility, rapid maneuverability, operational range, low operational costs, and ability to provide high-resolution data. Environmental reconstruction from sensor data collected by UAVs can help create higher-fidelity virtual copies of the environment (digital twins) holding information that may be beneficial for inventorying and mapping purposes. UAVs are also especially suitable for assisting humans working in large, dangerous areas where access is limited. These characteristics have led researchers to put significant effort into UAV-based solutions to aid in firefighting tasks [6,7], fire scouting and detection [5,8], or fire behavior analysis and prediction [9].

A promising approach is to mitigate wildfires by reducing the accumulation of highly combustible biomass, ranging from smaller material, such as dried pine needles, grasses, and small twigs, to larger wood pieces. Robotic forestry maintenance can play a key role in prevention tasks, especially when teaming aerial and ground vehicles. Recently, researchers have proposed and investigated a collaborative system in which a UAV surveys the environment to generate a multi-layer map. This map comprises information including traversability and areas with an accumulation of combustible material. An uncrewed ground vehicle (UGV) then receives this information and removes the highly combustible material from the forest [10].

The integration of fuel mapping plays a vital role in ensuring the quality of forest management and the conservation of local flora and fauna. By accurately identifying and categorizing flammable materials based on the United States Department of Agriculture (USDA) fire behavior fuel model [11] (the Anderson model), we can improve the precision of the heavy-duty machines used for mulching and minimize the risk of false positives [12]. This comprehensive approach, encompassing geometry, semantics, and temporal information, allows us to create a global map that captures the different fuel models of grasslands, shrublands, timber, and slash areas. With this knowledge, we can implement targeted management and conservation strategies to protect our forests and promote sustainable practices.

This study seeks to expand on previous works [13,14] by developing a perception system for UAVs in wildfire prevention efforts. To enable the implementation of such a system, we have designed a custom payload that contains different sensing modalities and a framework that includes simultaneous localization and mapping (SLAM), semantic segmentation, and fuel mapping. In our work, we have extensively tested our system on two study sites and validated our SLAM implementations and semantic mapping with qualitative and quantitative results.

2. Related Work

Developments in remote sensing technology allow conditions and changes over large geographic areas to be monitored and analyzed, at a given time or period. Remote sensing has been useful for forest wildfire applications and has been extensively studied. Previous efforts have successfully applied remote sensing, in the form of satellite imagery, in all stages of forest fire efforts, such as mapping efforts for fuel type [15,16,17], burned area [18,19], vegetation recovery [20], assessments of fire risk [21,22] and fire/burn severity [23,24], and detection of fires [25,26].

In the agricultural domain, UAVs have been used to map diseases [27,28] and implement precision agriculture tasks [29]. When applied in the forestry domain, UAVs are especially appealing since they allow regular, frequent, and on-demand data acquisition, can host a diverse range of sensors according to the application, and can be used in real-time operations [30]. Since forest environments are dynamic, data acquisition must be accurate and up-to-date. In this respect, UAVs provide a significant advantage over traditional remote sensing techniques such as satellite imagery.

Work on applying UAVs to forestry applications has gained traction over the years. Hyyppä et al. [31] explored the use of UAVs and a SLAM system to collect information using a Light Detection and Ranging (LiDAR) sensor, including stems of individual trees, stem curves, and the diameters at breast height (DBH). Corte et al. [32] employed machine learning techniques to design accurate predictive models from UAV-collected LiDAR data to conduct forest inventories. Previous works have also used low-altitude aerial images and LiDAR data to construct a local-scale model of the forest canopy surface, with information about the elevation of vegetation, through photogrammetry and structure-from-motion approaches [33]. Researchers have also examined using UAVs to monitor fire and its effects [34,35], identify spatial gaps [36], and assess soil disturbances [37].

Due to the large scale of forest environments, past research on forest inventory applications opted for using UAV data collected over small areas [31,33,38,39]. Results from these works have demonstrated the promising potential of using UAVs to map and estimate forest resources. Further, the authors of [40] investigated integrating partial-coverage UAV data with field plots to estimate forest resource parameters (e.g., volume and biomass) as a means to address the issue of mapping large-scale environments. Fusing semantic information is especially important for forest fire mitigation efforts, as it yields a 3D representation containing classification information, such as the location of flammable vegetation and other classes, in a globally registered map of the forest environment. While semantic mapping has gained traction in recent years and has been applied in areas such as autonomous driving [41,42] or assistive robotics [43,44], implementing semantic mapping directly in the forest domain is still a work in progress, to the best of our knowledge. Past research in this area has investigated using high-resolution RGB images acquired by UAVs to classify shrubs [45] and tree species [46] in a heterogeneous forest environment. More recently, researchers have used classification approaches to target the forest wildfire issue.

Automating survey methods that involve deploying autonomous robotic systems to navigate in the field requires the robots to have a robust Simultaneous Localization and Mapping (SLAM) system to estimate their state in 3D space. Over the years, SLAM research has matured with the development of different systems based on various combinations of sensor modalities [47]. LiDAR Odometry and Mapping (LOAM) [48] is a state-of-the-art loosely-coupled LiDAR and inertial-based SLAM algorithm. In tightly-coupled sensor fusion frameworks, by contrast, sensor data from different modalities are jointly processed and combined at a lower level, usually through a filtering or optimization framework, to estimate the robot’s pose and update the map. However, tightly-coupled frameworks require more complex algorithms and computational resources to achieve joint estimation and optimization. LiDAR inertial odometry via smoothing and mapping (LIO-SAM) [49] is a tightly-coupled LiDAR and inertial-based SLAM algorithm which expands on the LOAM framework by including loop closure methods and feedback from absolute measurements such as the Global Positioning System (GPS) and compass heading. Works have also looked into fusing LiDAR, visual, and inertial modalities, such as [50], which featured a visual-inertial odometry (VIO) system and a LiDAR odometry (LO) system. The VIO subsystem is tightly-coupled and serves as a motion model for the LiDAR mapping, which dewarps LiDAR points and registers the scans to the global map. Various works have implemented SLAM systems, primarily LiDAR and inertial-based, in the forest environment for tree detection and tree trunk estimation [51] and 3D forestry inventory mapping [52,53].

3. Materials and Methods

The design and development of the complete system included customized hardware and software components. The hardware includes a payload designed to allow easy mounting on diverse types of drones. It also contains different types of sensors that provide spectral, visual, and range information. The software stack includes synchronization of the sensor data acquisition, data logging, and processing. Details of the individual components are discussed in the following sections.

3.1. Sensing Payload

We designed and deployed a sensing payload comprising an inertial measurement unit (IMU; VN-200 Rugged GNSS/INS), a three-dimensional Light Detection and Ranging (LiDAR) scanner with 32 channels (Velodyne VLP-32), a 20.2 Megapixel stereo camera with 8.5 mm focal length lenses (Allied Vision Alvium 1800U-2040c), a multispectral camera (Mapir Survey3W, Red+Green+near-infrared channels), and two onboard computers (NVIDIA Jetson Xavier Development Kit and Intel Core i7 NUC). Given the highly unstructured, dynamic, and changing forest environment, this sensing payload was designed to be lightweight and to have robust sensing capabilities while also accommodating future research. Because perception work in forestry is scarce, the chosen sensors allow for future work and ablation studies on the best sensor combination for important decision-making modules such as semantic mapping, navigation, and SLAM. The LiDAR, stereo cameras, and IMU were chosen so that sensing modalities with different fields of view and spectral characteristics are available on board. This helps to account for conditions that may degrade the performance of any single sensor. For instance, the stereo cameras have horizontal and vertical fields of view of 60.4° and 44.9°, respectively, while the LiDAR has horizontal and vertical fields of view of 360° and 40°, respectively. We also opted for two onboard computers to divide the computing load for recording and processing the data. The Xavier board is intended to run machine learning models, while the NUC computer handles all the heavy CPU calculations (e.g., SLAM processing).

Figure 1 shows a 3D rendering of the payload and the mounting of its different components.

The sensing payload was designed for easy mounting on any aerial platform and can be tilted at different viewpoints ranging from horizon to nadir. Furthermore, the components are mounted in a modular way, allowing easy integration and removal of each one, if necessary, for operational constraints (e.g., limited weight capacity). In order to minimize weight, the sensing payload is configured to be powered using the UAV’s batteries. Hence, a power distribution board was used to distribute the appropriate voltages to each component.

Table 1 shows all the sensors in the payload and their operational frequency. The total weight of the payload is 3.832 kg, without factoring in the weight of the landing gears and the mounting plate. Altogether, the electrical and mechanical properties of the payload allowed for an operation of 18–20 min when mounted on a DJI Matrice 600 (using six TB47S batteries, each with 4500 mAh) and 30–35 min when mounted on an Alta X (using two 16,000 mAh batteries).

3.2. Simultaneous Localization and Mapping

We used the LiDAR, IMU, and Global Navigation Satellite System (GNSS) measurements to estimate the UAV’s 6-degrees-of-freedom pose (odometry) during the flight. Thus, the estimated state of the robot is represented as a 6-dimensional vector corresponding to the drone’s position and orientation in space. We employed the estimated odometry to incrementally build a three-dimensional (3D) environment map in the form of a point cloud. Accurate pose estimation is crucial for building a global map such as the fuel map described in Section 3.3.

In this work, we used Fast LiDAR Inertial Odometry with Scan Context (FASTLIO-SC) [54,55] and LiDAR Inertial Odometry via Smoothing and Mapping (LIOSAM) [49], two state-of-the-art SLAM implementations that fuse the sensor measurements using a tightly coupled approach. We decided to focus on LiDAR inertial-based SLAM systems because cameras are greatly affected by lighting conditions and motion blur. Results from [14] also reported that visual odometry had a higher RMSE than LiDAR odometry. LIOSAM performs state smoothing and scan-matching-based mapping using a factor graph that introduces relative and absolute measurements as factors [49]. Relative measurements, such as IMU and LiDAR data, are fused to optimize the odometry, which is further improved by GNSS absolute positioning (when available). In particular, the IMU information allows LIOSAM to estimate an initial guess of the LiDAR’s position, velocity, and orientation through a preintegration stage, which integrates the IMU measurements over each LiDAR scan period. LIOSAM uses this initial pose guess for de-skewing the LiDAR point clouds and computing the optimized odometry. The odometry prior for the optimization is obtained by performing local scan matching between consecutive LiDAR scans using corner and surface features. The GNSS measurements are included in the LiDAR optimization stage to enhance pose estimation. Finally, the optimized pose is fed back to the preintegration stage to determine the IMU bias (providing the tight coupling).

Conversely, FASTLIO-SC fuses LiDAR and IMU measurements with a tightly-coupled iterated extended Kalman filter, which enables robust navigation in fast-motion, noisy, or occluded environments [54]. Specifically, the iterated extended Kalman filter is implemented using the Iterated Kalman Filters on Manifolds toolkit, based on the premise that the robotic system evolves on an SO(3) manifold [56]. In addition, FASTLIO-SC uses a new formula to compute the Kalman gain whose cost depends on the dimension of the system state instead of the dimension of the measurement. In this context, the iterated extended Kalman filter uses LiDAR and IMU measurements as input for estimating the LiDAR odometry. Next, the LiDAR odometry is improved by GNSS measurements and the movement restrictions imposed by a scan context approach [55]. This information is fused using a factor graph strategy which outputs an optimized LiDAR odometry. In contrast to LIOSAM, the optimized LiDAR odometry is not used as feedback for estimating the IMU bias. A visual representation of the architecture of these two approaches is depicted in Figure 2.
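For reference, the Kalman gain reformulation mentioned for FASTLIO-SC can be sketched in generic filter notation. The symbols are assumptions introduced here and are not defined in this section: $P$ is the predicted state covariance, $H$ the measurement Jacobian, and $R$ the measurement noise covariance. The two forms are equivalent by the matrix inversion lemma when $P$ and $R$ are invertible; the exact expressions used by the filter are given in [54].

```latex
% Standard gain: inverts an m x m matrix, where m is the measurement dimension
% (for LiDAR, m grows with the number of points in the scan).
K = P H^{\top} \left( H P H^{\top} + R \right)^{-1}

% Equivalent gain: inverts an n x n matrix, where n is the state dimension.
% R is typically diagonal, so R^{-1} is cheap to form.
K = \left( H^{\top} R^{-1} H + P^{-1} \right)^{-1} H^{\top} R^{-1}
```

Since a LiDAR scan contributes far more measurements than there are state variables, the second form is the one whose cost scales with the state dimension.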

Time synchronization among the sensors and computers is a critical issue and significantly impacts the quality of the SLAM system. We designed a custom time synchronization board featuring a Teensy microcontroller that synchronizes the computers, the LiDAR, the IMU, and the cameras by generating pulse-per-second (PPS) signals that simulate the ones obtained from a GNSS receiver. These signals comprise square-wave pulses used to trigger the cameras and the LiDAR, together with messages encoded using the National Marine Electronics Association (NMEA) string protocol for serial communication. Having the microcontroller mimic GNSS signals is desirable in this application since forest environments can contain areas in which GNSS readings (and thus the associated PPS signals) are unreliable.
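To illustrate the NMEA side of this scheme, the sketch below builds a time-only sentence of the kind that could accompany each PPS pulse. It is a minimal example, not the firmware running on the synchronization board: the choice of the GPRMC sentence type, the empty position fields, and the function names `nmea_checksum` and `build_gprmc` are assumptions made for illustration. The checksum rule (XOR of all characters between `$` and `*`, written as two hexadecimal digits) is part of the NMEA sentence format.

```python
from datetime import datetime
from functools import reduce


def nmea_checksum(payload: str) -> str:
    """Return the XOR of every character between '$' and '*' as two hex digits."""
    value = reduce(lambda acc, ch: acc ^ ord(ch), payload, 0)
    return f"{value:02X}"


def build_gprmc(stamp: datetime) -> str:
    """Build a GPRMC sentence that carries only time and date (hypothetical helper).

    Field order: time, status, lat, N/S, lon, E/W, speed, course, date,
    magnetic variation, variation direction. Position fields are left empty.
    """
    fields = [
        "GPRMC",
        stamp.strftime("%H%M%S"),  # UTC time of the matching PPS edge
        "A",                       # status flag: data valid
        "", "", "", "",            # latitude, N/S, longitude, E/W
        "", "",                    # speed over ground, course
        stamp.strftime("%d%m%y"),  # date
        "", "",                    # magnetic variation and its direction
    ]
    payload = ",".join(fields)
    return f"${payload}*{nmea_checksum(payload)}"


if __name__ == "__main__":
    print(build_gprmc(datetime(2023, 6, 15, 12, 35, 19)))
```

In such a setup, the sentence would be sent over the serial line shortly after each pulse so that a receiving device can attach an absolute time to the pulse edge.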

3.2.1. Photogrammetry

Photogrammetry is a technique in computer vision used to estimate the structure of a scene from a collection of images with optional GNSS information. Most modern approaches do not require any prior sensor calibration, which makes the tools robust and easy to deploy. Over the last decade, both industry and academia have developed easy-to-use software which enables wide-scale adoption of this technology to perform surveys in diverse domains such as agriculture, construction, or forestry. An important characteristic of photogrammetry is that it is solely an offline process, which requires all the images to be available before computation begins. We use photogrammetry as a benchmark to evaluate the performance of the online methods we developed in this work.

In this work, we used Agisoft Metashape [57] 2.0.2, a widely used commercial structure-from-motion software package. We downsampled our images temporally so that they were at least half a second apart, because they would be highly redundant otherwise. For our collection in the most representative site (see Section 4.1), this resulted in 886 images. Each image was tagged with the RTK GNSS position whose timestamp most closely matched the image timestamp. For the initial step of estimating the camera parameters and computing the sparse geometry, we used the parameters optimized for forestry found by [58]. Then we performed meshing using the “High” quality and “High” face count settings. The pose estimation took 10 min and the meshing process took 32 min on a cloud server with an AMD EPYC-Milan CPU, 60 GB of RAM, and a GRID A100X-20C GPU.
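The two preprocessing steps described above (temporal downsampling and nearest-timestamp GNSS tagging) can be sketched as follows. This is an illustrative example under simple assumptions: timestamps are given in seconds, and the function names `downsample_by_time` and `nearest_index` are hypothetical rather than taken from the authors' pipeline.

```python
import bisect


def downsample_by_time(timestamps, min_gap=0.5):
    """Keep only timestamps that are at least `min_gap` seconds after the last kept one."""
    kept = []
    for t in sorted(timestamps):
        if not kept or t - kept[-1] >= min_gap:
            kept.append(t)
    return kept


def nearest_index(sorted_times, t):
    """Return the index of the entry in `sorted_times` that is closest in time to `t`."""
    i = bisect.bisect_left(sorted_times, t)
    if i == 0:
        return 0
    if i == len(sorted_times):
        return len(sorted_times) - 1
    # Choose between the neighbors on either side of the insertion point.
    return i if sorted_times[i] - t < t - sorted_times[i - 1] else i - 1


if __name__ == "__main__":
    image_times = [0.0, 0.1, 0.5, 0.75, 1.0, 1.5]
    gnss_times = [0.0, 0.4, 0.9, 1.6]
    for t in downsample_by_time(image_times):
        print(t, "->", gnss_times[nearest_index(gnss_times, t)])
```

A binary search is used for the lookup because GNSS logs are already time-ordered, which keeps the tagging step cheap even for long flights.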

3.3. Fuel Mapping

In this work, fuel mapping involves three tightly coupled modules: semantic segmentation, LiDAR camera registration and odometry, and global map representation. To achieve accurate semantic segmentation, we employ the SegNeXt network, a transformer architecture that was specifically chosen for its state-of-the-art performance on popular benchmark datasets and its excellent generalization capabilities, which are particularly beneficial given our limited number of real-world training images [59]. This network predicts a class for each pixel in the image, providing a semantically labeled image for three compressed classes: fuel, canopies, and background. We trained this neural network (NN) starting from weights pre-trained on the CityScapes dataset [60] and using 40 RGB images collected in our deployments. While 40 images were used for training, the actual number of instances is higher, since a class can appear multiple times in a single image. The RGB images have a higher resolution than our multispectral images and allow for transfer learning from weights pre-trained on other datasets. While there is ongoing research on using GRN (Green, Red, Near Infrared) images with weights pre-trained on RGB datasets, given the lack of state-of-the-art research and the scope of this paper, we decided to use RGB images instead.

As mentioned in Section 1, we used the Anderson fuel model [11], which is comprised of 15 classes as listed in Table 2, to train our semantic segmentation model, which is publicly available (all models are available at https://github.com/Kantor-Lab/Safeforest_CMU_data_dvc/tree/public_models (accessed on 15 June 2023)). However, the classes were aggregated into three compressed classes in the post-processing steps, given that we did not observe all of them in the testing sites and that fine-grained training has been shown to improve the performance of convolutional neural networks [61]. Additionally, the aggregated classes simplified the labeling of the validation map for the qualitative comparison. We chose these three classes because they make visual inspection of fuel regions easier, as the site’s bare-earth paths and clusters of canopies allow for clearer boundaries between the chosen classes. Moreover, the other non-forest-related classes were aggregated as background, as they fall outside the scope of our current proposed system.

Subsequently, to transform the semantic image into a global map, we integrated LiDAR and camera data. This step is essential to ensure that the map projection of the semantic classes is accurate enough for the ground robot to mulch all potentially flammable materials, as our main goal is to prevent harmful wildfires. Through LiDAR camera registration and odometry, we determined which LiDAR points fall within the camera’s field of view, enabling the registration of image pixels in the global coordinate system. Using the extrinsic calibration of the LiDAR relative to the camera, we transform the LiDAR measurements into the camera’s coordinate frame. Using the calibrated camera intrinsic parameters, we then project each LiDAR point onto the image plane. Points falling within the camera’s field of view are assigned the classification labels of the corresponding pixels in the semantically labeled image. The resulting semantically-textured point cloud is then transformed into the inertial reference frame using the drone’s current pose estimated by our SLAM system. This integration of LiDAR, camera, and SLAM data allows us to create a comprehensive global representation that captures the fuel characteristics of the environment.
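The projection and labeling steps described above can be sketched with NumPy as follows. This is a minimal illustration rather than the authors' implementation: it assumes an ideal pinhole camera without lens distortion, homogeneous 4x4 transforms, and an integer label image, and the names `label_lidar_points`, `to_world`, `T_cam_lidar`, and `T_world_lidar` are hypothetical.

```python
import numpy as np


def label_lidar_points(points_lidar, T_cam_lidar, K, label_image):
    """Project LiDAR points into a label image and copy the per-pixel classes.

    points_lidar: (N, 3) points in the LiDAR frame.
    T_cam_lidar:  (4, 4) extrinsic transform from the LiDAR to the camera frame.
    K:            (3, 3) pinhole intrinsic matrix (lens distortion is ignored).
    label_image:  (H, W) integer class id per pixel.
    Returns the points that land inside the image and their class ids.
    """
    points_lidar = np.asarray(points_lidar, dtype=float)
    homogeneous = np.hstack([points_lidar, np.ones((len(points_lidar), 1))])
    points_cam = (T_cam_lidar @ homogeneous.T).T[:, :3]

    # Discard points behind the camera before dividing by depth.
    in_front = points_cam[:, 2] > 0
    points_lidar, points_cam = points_lidar[in_front], points_cam[in_front]

    pixels = (K @ points_cam.T).T
    u = np.round(pixels[:, 0] / pixels[:, 2]).astype(int)
    v = np.round(pixels[:, 1] / pixels[:, 2]).astype(int)

    # Keep only the points whose projection falls inside the image bounds.
    height, width = label_image.shape
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    return points_lidar[inside], label_image[v[inside], u[inside]]


def to_world(points, T_world_lidar):
    """Move LiDAR-frame points into the global frame using the SLAM pose."""
    points = np.asarray(points, dtype=float)
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    return (T_world_lidar @ homogeneous.T).T[:, :3]
```

In this sketch, `T_world_lidar` would be the pose reported by the SLAM system at the scan timestamp, so the labeled points from successive scans accumulate in one common frame.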

To ensure the efficient creation of a large-scale global map in real time, it is necessary to use lightweight map data structures that can handle the voluminous information generated. Various methods such as Octree [62], Truncated Signed Distance Function (TSDF) [63], and OpenVDB [64] were considered for our research. After a careful cost-benefit analysis considering factors such as map resolution, semantic information, and computational efficiency, we identified two methods as the most suitable for our scenario: semantic OctoMap [65,66] and Unknown Free Occupied Map (UFOMap) [67]. Of the two, UFOMap emerged as the preferred choice due to its significant speed advantage over OctoMap, being 26 times faster and reducing memory consumption by 38% [67]. Both methods use the Octree data structure, which offers a significant reduction in memory requirements compared to point clouds while maintaining high map quality. They convert the point cloud into voxels with a resolution of 0.1 m while preserving the predicted classifications. The global octree is continuously updated by incorporating newly generated semantic point clouds. By leveraging the lightweight Octree map data structure from UFOMap, we can create a comprehensive semantic global representation of the environment in real time.
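The idea of voxelizing a labeled point cloud at 0.1 m while keeping one class per voxel can be illustrated with the sketch below. It deliberately uses a flat hash grid instead of an octree and does not reproduce the UFOMap or OctoMap APIs; the class `SemanticVoxelGrid` and its majority-vote label fusion are assumptions made for illustration only.

```python
import math
from collections import Counter, defaultdict


class SemanticVoxelGrid:
    """Sparse voxel grid that stores per-voxel class votes (not an octree)."""

    def __init__(self, resolution=0.1):
        self.resolution = resolution
        self.votes = defaultdict(Counter)

    def key(self, point):
        """Integer voxel index containing a 3D point given in meters."""
        return tuple(math.floor(c / self.resolution) for c in point)

    def insert(self, points, labels):
        """Add a labeled point cloud; each point votes for its class in its voxel."""
        for point, label in zip(points, labels):
            self.votes[self.key(point)][label] += 1

    def label_at(self, point):
        """Most frequently observed class in the voxel, or None if it is unobserved."""
        counts = self.votes.get(self.key(point))
        return counts.most_common(1)[0][0] if counts else None


if __name__ == "__main__":
    grid = SemanticVoxelGrid(resolution=0.1)
    grid.insert(
        [(0.02, 0.03, 0.04), (0.05, 0.06, 0.07), (0.08, 0.01, 0.02)],
        ["fuel", "fuel", "canopy"],
    )
    print(grid.label_at((0.01, 0.01, 0.01)))
```

An octree adds a hierarchy on top of this indexing so that large homogeneous regions can be stored in a single coarse node, which is the source of the memory savings discussed above.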

4. Results

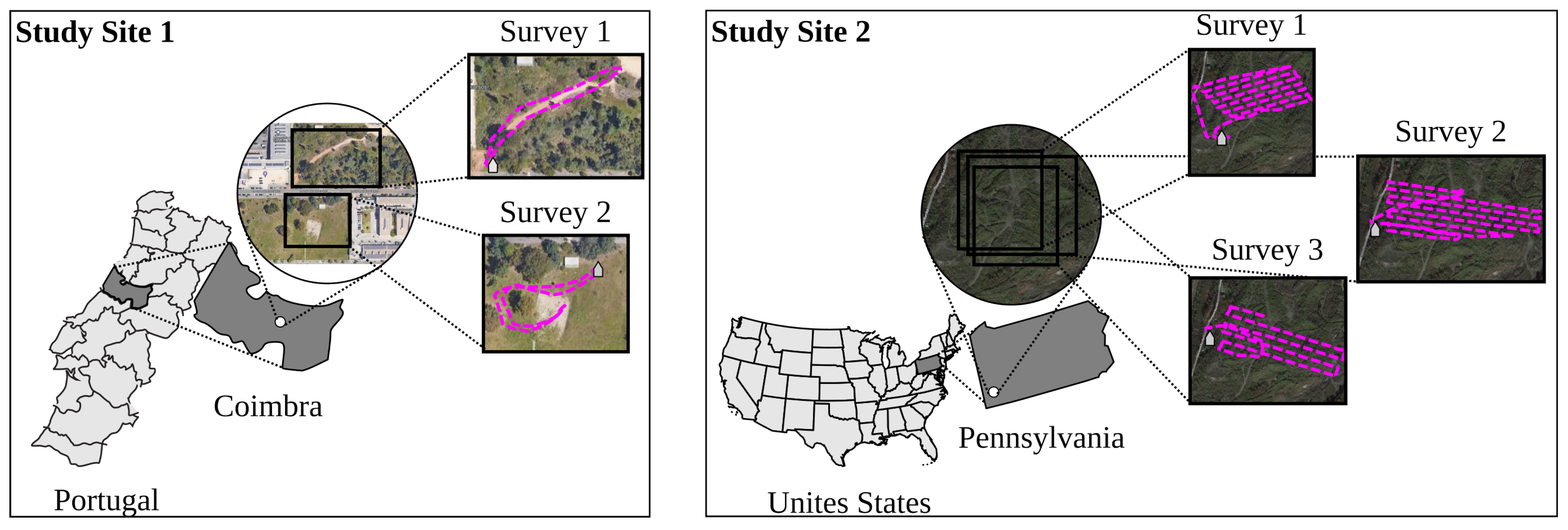

In order to evaluate our localization and mapping methods, we conducted two data collection campaigns in semi-urban and rural sites (Figure 3). The semi-urban site is located in Coimbra, Portugal (

N

W). The rural site is located in Pittsburgh, United States (

N

W). We chose these two locations in order to test the quality of the generated maps in scenarios with relatively low and high complexity (see Figure 3). As the Coimbra test site is a semi-urban environment, it contains more structured landscapes, making it a low-complexity scenario for our application. In contrast, the rural test site is unstructured and wild, posing a more challenging condition for the feature extraction and subsequent scan matching of the employed SLAM approaches.

The surveys performed at the Coimbra test site were flown manually by an experienced UAV pilot. On the other hand, for the rural test site, which covers a larger area, we decided to use the UAV’s autopilot. For each survey, the altitude, ground speed, and lateral distance were about 30 m, 3.0 m/s, and 20 m, respectively.

4.1. Simultaneous Localization and Mapping (SLAM)

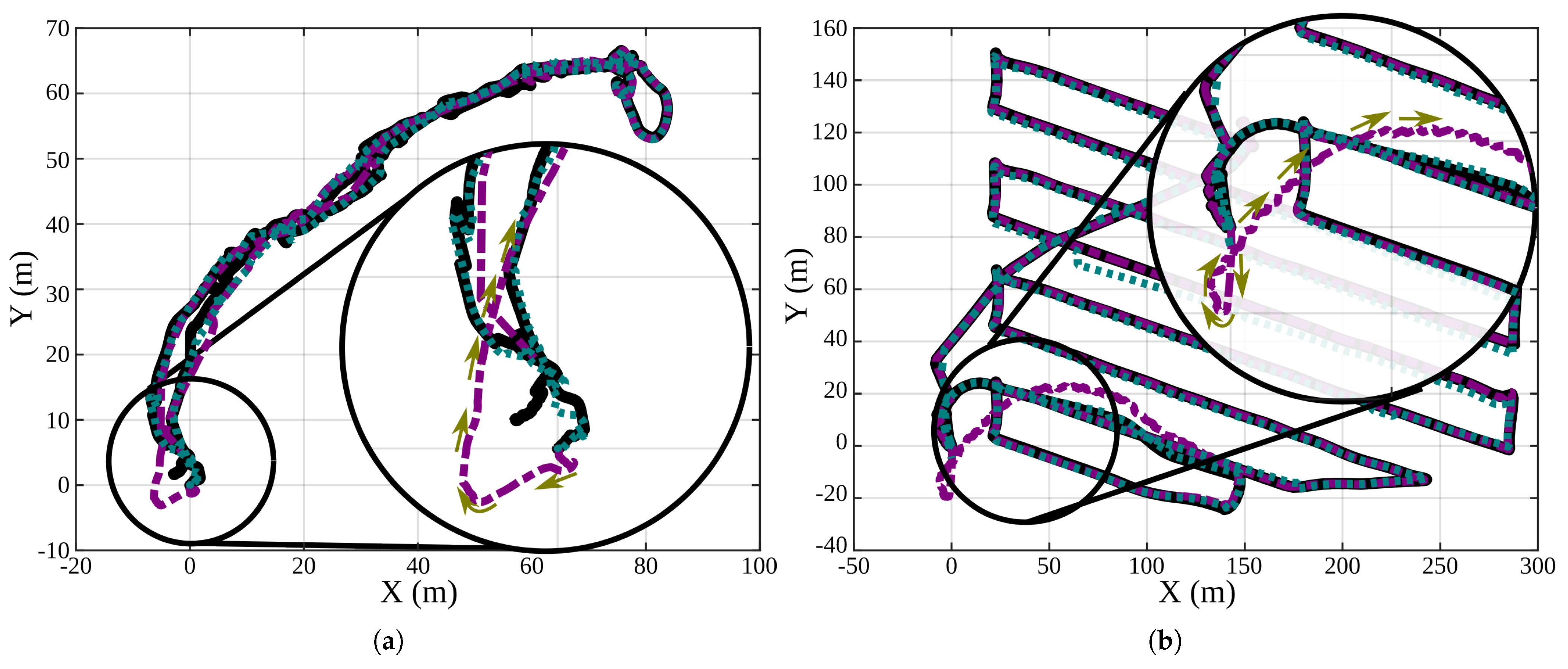

In order to evaluate the quality and suitability of the SLAM approaches for mapping forest regions, we separated the localization and mapping results. The system localization was evaluated by comparing the pose estimations given by LIOSAM and FASTLIO-SC with position measurements from a centimeter-level accuracy real-time kinematics (RTK) device. The main metric employed in this case is the root mean squared error (RMSE) calculated over a complete trajectory. In all the surveys, it was found that LIOSAM outputs lower RMSE than FASTLIO-SC, as Table 3 shows.
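The translational RMSE over a trajectory can be computed as in the following sketch. It assumes that the estimated and RTK positions have already been associated by timestamp and expressed in the same coordinate frame, which is a preprocessing step not shown here; the function name `translational_rmse` is hypothetical.

```python
import numpy as np


def translational_rmse(estimated, reference):
    """Root mean squared Euclidean position error over a complete trajectory.

    estimated, reference: (N, 3) arrays of time-aligned positions in meters.
    """
    estimated = np.asarray(estimated, dtype=float)
    reference = np.asarray(reference, dtype=float)
    errors = np.linalg.norm(estimated - reference, axis=1)
    return float(np.sqrt(np.mean(errors ** 2)))


if __name__ == "__main__":
    slam = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
    rtk = [[0.0, 0.0, 0.0], [1.0, 0.0, 2.0]]
    print(translational_rmse(slam, rtk))
```

Because the errors are squared before averaging, a short segment with large drift (for example, a wrong initial heading) raises the RMSE more than the same total error spread evenly along the flight.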

Regarding translational RMSEs, LIOSAM outperforms FASTLIO-SC, showing errors between 0.28 m and 1.92 m. A lower RMSE indicates that the implemented algorithm estimates the vehicle’s odometry closer to the actual trajectory. Consequently, the drift between the vehicle’s actual position and the position estimated by LIOSAM is lower than that achieved by FASTLIO-SC. Specifically, from Figure 4 one can observe that LIOSAM produces optimized odometry that closely aligns with the GNSS measurements. Conversely, FASTLIO-SC’s odometry differs significantly from the ground truth measurements at the beginning of each survey. This initial deviation is attributed to the heading initialization used by FASTLIO-SC, which differs from the true yaw orientation of the platform. As a result, the RMSE of FASTLIO-SC increases, leading to lower performance than LIOSAM, as shown in Table 3.

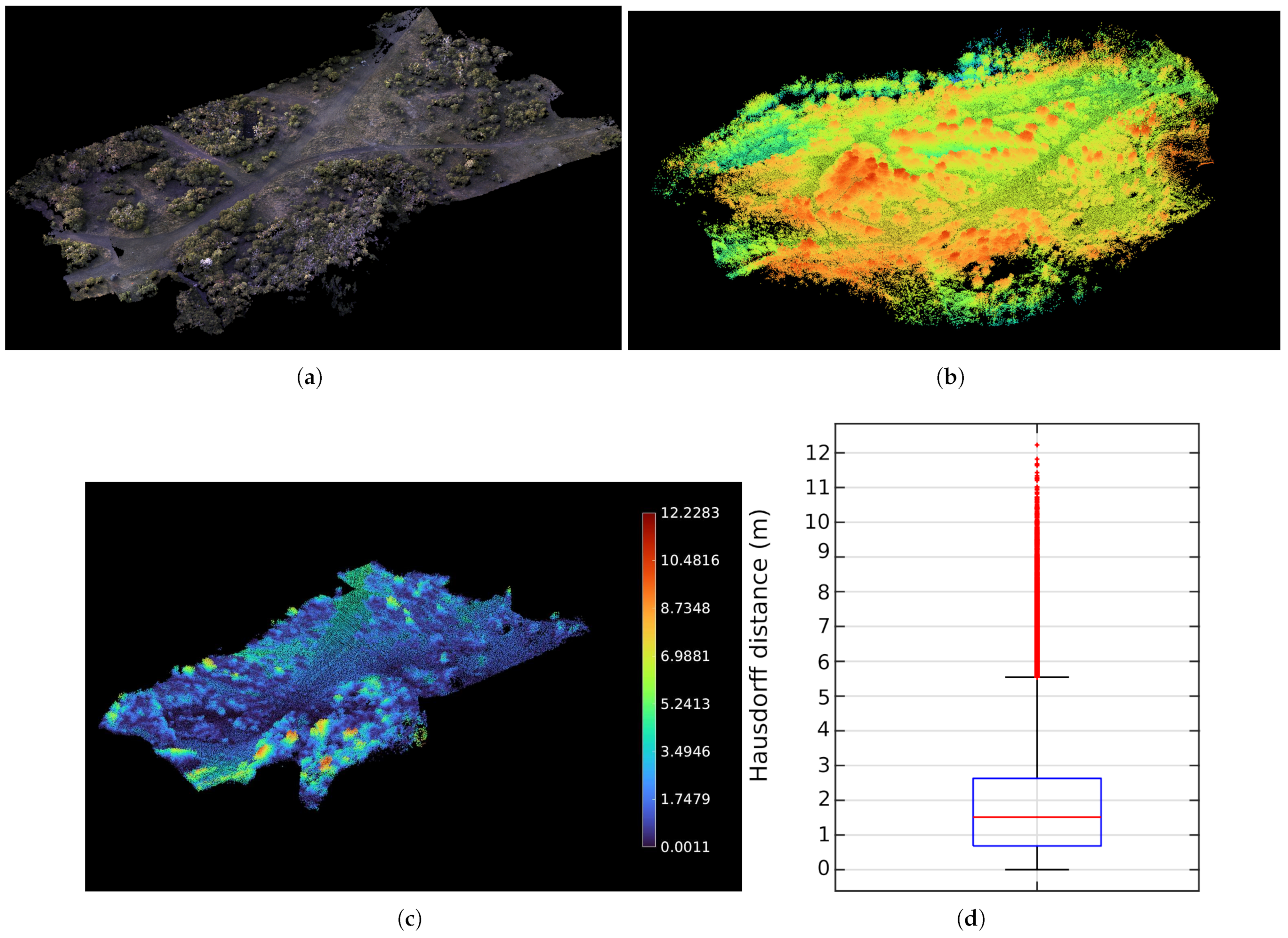

Based on the above, noting that previous works have reported that in forested regions one could anticipate RMSE values ranging from

m to

m [

68], and considering that the RMSEs yielded by LIOSAM in both study sites account for less than

of the overall trajectory, we decided to assess LIOSAM’s mapping outcomes further. Specifically, LIOSAM was tested using data collected from study site 2, a rural environment that closely resembles a forested area due to its predominant composition of low and high vegetation. Here, we employed the mesh generated by the Agisoft photogrammetry software as the baseline, as mentioned in Section 3.2.1. In order to obtain a strong indication of the quality of the generated map regarding its morphological, visual, and geometric aspects, two approaches were used: (i) a cloud-to-cloud comparison with the photogrammetry output and (ii) measurements of geometric variables (i.e., distances and areas) in the different maps.

The cloud-to-cloud evaluation is based on a quantitative distance measure and consisted of first converting the mesh into a point cloud (i.e., the reference cloud). Subsequently, the point cloud generated with LIOSAM was translated and rotated so that the two clouds were spatially aligned. Finally, we measured the geometric difference between them using a nearest neighbor criterion similar to the Hausdorff metric [69]. The key difference is that a local model around the closest point in the reference cloud is computed to approximate its surface and thus obtain a more accurate estimation of the distance. This calculation was done using the CloudCompare software [70].
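The nearest neighbor part of this comparison can be sketched as below. This simplified example only computes the distance from each compared point to its closest reference point by brute force; it does not include the local surface model used by CloudCompare, and it assumes the two clouds are already aligned. The function name `cloud_to_cloud_distances` and the chunk size are illustrative choices.

```python
import numpy as np


def cloud_to_cloud_distances(compared, reference, chunk=1024):
    """Distance from every compared point to its nearest reference point.

    compared:  (N, 3) points of the cloud under evaluation (e.g., the SLAM map).
    reference: (M, 3) points of the reference cloud (e.g., sampled from the mesh).
    The compared cloud is processed in chunks to bound memory use.
    """
    compared = np.asarray(compared, dtype=float)
    reference = np.asarray(reference, dtype=float)
    distances = np.empty(len(compared))
    for start in range(0, len(compared), chunk):
        block = compared[start:start + chunk]
        # Pairwise differences between this block and all reference points.
        diff = block[:, None, :] - reference[None, :, :]
        distances[start:start + chunk] = np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)
    return distances


if __name__ == "__main__":
    ref = [[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]]
    slam = [[0.0, 0.0, 1.0], [9.0, 0.0, 0.0], [5.0, 0.0, 0.0]]
    d = cloud_to_cloud_distances(slam, ref)
    print(d, np.median(d))
```

For clouds with millions of points, a spatial index such as a k-d tree or octree would replace the brute-force search, and summary statistics such as the median can then be read from the resulting distance array.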

Figure 5 shows the reference cloud (top left) and the raw SLAM cloud in two formats: colorized according to its height (top right) and colorized according to its distance to the reference (bottom). In general, it can be seen that various parts of the generated map are similar to the reference under this metric (blue-colored points), but the distance increases on some of the treetops. Furthermore, the boxplot shows a median of 1.51 m, with outliers for errors above 5.54 m. These outcomes indicate that height is one of the most uncertain variables to measure in these maps. This outcome is somewhat expected, since the treetops in the LIOSAM point cloud are less dense and noisier. Also, the height estimation derived from photogrammetry can be subject to large errors, which makes vertical distances in higher structures (like trees) more uncertain [71]. For this reason, we consider this evaluation qualitative.

An important thing to note in Figure 5 is the size of the maps. Since the baseline uses only the left camera images, the map is limited by their field of view. In contrast, the SLAM map spans a larger region since the LiDAR has a range of up to 200 m (in this work we limited the LiDAR range to 100 m) with a wider field of view.

Given that the previous evaluation is mostly qualitative, the second evaluation of the maps consisted of measuring two main geometric variables: the area of characteristic regions and the length between two landmarks. Since the photogrammetry map is prone to errors introduced by the feature matching and geolocalization (see Section 3.2.1), we considered measurements taken in the Google Earth software [72] as the main baseline. Its satellite imagery provides a resolution of 0.15 m, which is accurate enough considering that the total testing area covers around 3.000 m². On the other hand, the reference and SLAM maps have a resolution of 1 cm and 30 cm, respectively. Considering that the baseline and the SLAM maps span different areas, we chose regions and landmarks that appear in both, as Figure 6 shows.

The results of this evaluation show that, in general, the measurements obtained using the LIOSAM map are closer to the ground truth, as Table 4 summarizes. This outcome, along with the qualitative evaluation and the assessment of the odometry estimation, allows us to infer that the geometry and scale produced by the LIOSAM algorithm are suitable for generating accurate 3D models of forest regions online. In our tests, we also observed that using the GNSS signal as an additional observation in the estimation scheme of LIOSAM greatly improves its odometry estimations and, consequently, the generated map. Finally, it is also noteworthy that the larger errors obtained with the photogrammetry map do not imply that it is unsuitable for this application. Some practical strategies can be used to improve the accuracy of such maps. For example, having geo-referenced landmarks in the form of ground control points greatly helps to improve the optimization process during the feature matching and reprojection stages.

4.2. Fuel Mapping

In this section, we detail how we evaluate the critical individual modules necessary for the final map, specifically semantic segmentation and fuel mapping. We address the current drawbacks of each technique, our setting limitations, and our solutions to overcome them.

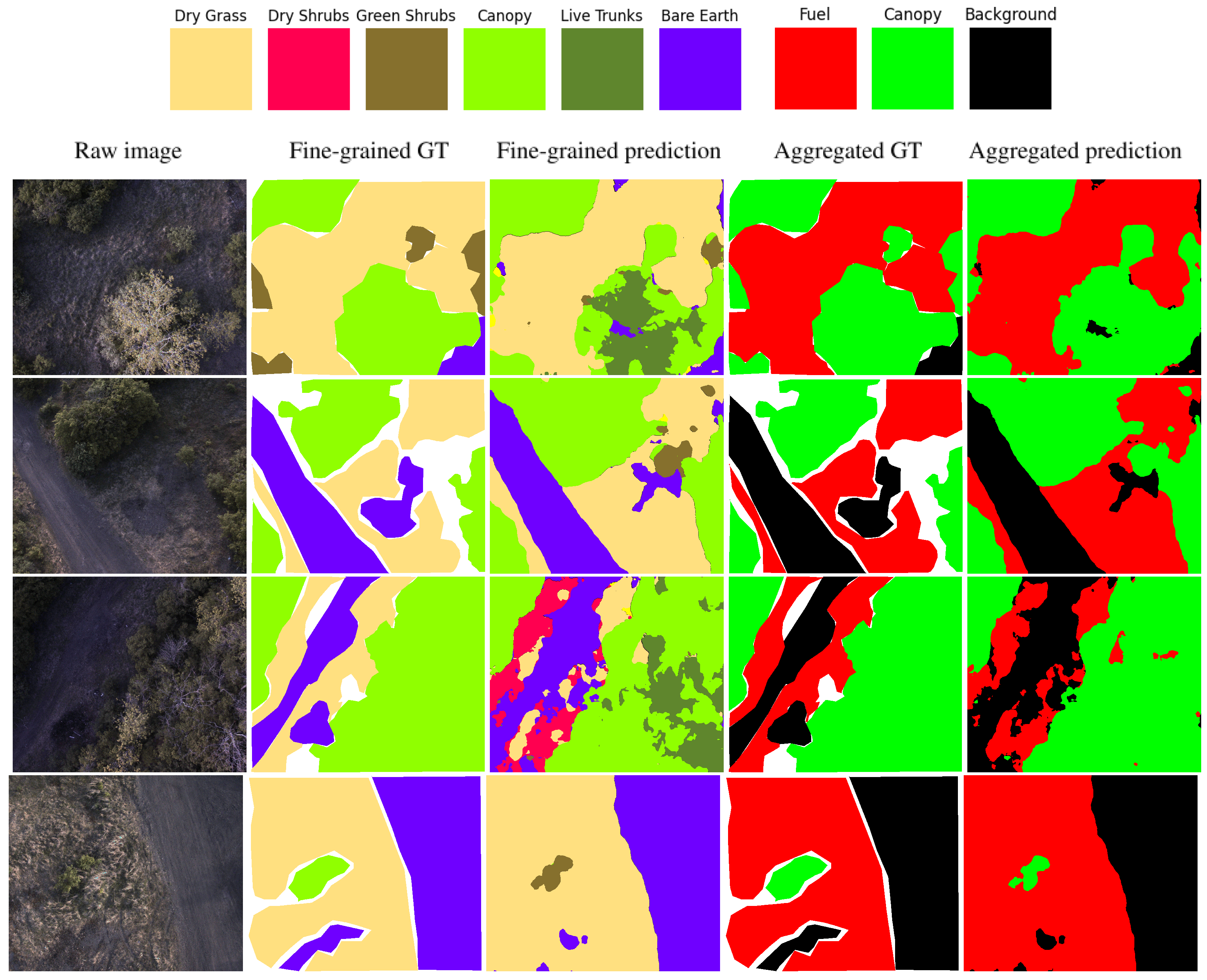

In our evaluation of the semantic segmentation model, we utilized a small annotated test set derived from the semantic mapping mission at the rural test site. For this purpose, we annotated 17 images using the same strategy employed during training; these images were not included in the training dataset. To protect the flora, we labeled fuel conservatively, underestimating it to avoid situations in which the ground vehicle would mulch canopies. As in the training case, although there are only 17 images, the actual number of instances used for testing is higher because a class can appear more than once in a single image. We analyzed the semantic segmentation using precision, recall, and intersection over union (IoU) metrics to assess the fuel classification in our system. Precision and recall were chosen because they quantify false positives and false negatives, respectively, while IoU estimates the general quality of the segmentation. We decided to focus mainly on precision because it penalizes false positives, which is ideal for fuel classification in order to avoid mulching green vegetation and to protect other important dynamic classes such as humans and animals.
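For a single class, the three metrics can be computed from pixel-wise true positives (TP), false positives (FP), and false negatives (FN) as precision = TP/(TP+FP), recall = TP/(TP+FN), and IoU = TP/(TP+FP+FN). The sketch below illustrates this on label images; the function name `class_metrics` and the use of NaN for undefined ratios are choices made for this example, not details taken from the evaluation code.

```python
import numpy as np


def class_metrics(predicted, truth, class_id):
    """Pixel-wise precision, recall, and IoU for one class.

    predicted, truth: integer label images of identical shape.
    Ratios with a zero denominator are reported as NaN.
    """
    pred_mask = np.asarray(predicted) == class_id
    true_mask = np.asarray(truth) == class_id

    tp = int(np.sum(pred_mask & true_mask))
    fp = int(np.sum(pred_mask & ~true_mask))
    fn = int(np.sum(~pred_mask & true_mask))

    def ratio(numerator, denominator):
        return numerator / denominator if denominator else float("nan")

    return {
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "iou": ratio(tp, tp + fp + fn),
    }


if __name__ == "__main__":
    truth = [[1, 1], [0, 0]]
    predicted = [[1, 0], [1, 0]]
    print(class_metrics(predicted, truth, class_id=1))
```

A conservative fuel detector in the sense described above would trade recall for precision: it misses some fuel pixels (more FN) but rarely marks canopy or background as fuel (few FP).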

It is worth noting that certain classes, which were included in the original 15 classes, are absent from the evaluation in Table 5. This is because these classes were not present in the test set, as seen in Figure 7. Consequently, these classes were excluded from the results. We also accounted for the scarcity of training instances by adding class weights for rarely occurring classes during training to reduce dataset shift issues. Even with these techniques, there is a noticeable gap in performance between the common classes and the rare classes, which could stem from the lack of expert annotators to ensure consistency for each subclass, the need for additional class imbalance techniques, or the need for more training instances. However, considering that a ground vehicle can explore further and that precision is high for the known fuel classes, we conclude that the current results meet the threshold we need to find fuel regions for further inspection.
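One common way to derive class weights of this kind is inverse-frequency weighting, sketched below. This is an assumption for illustration only: the exact weighting scheme used in training is not detailed here, and the function name, the smoothing term `eps`, and the pixel counts are hypothetical.

```python
import numpy as np

def inverse_frequency_weights(pixel_counts, eps=1.0):
    """Weight each class inversely to its share of labeled pixels.

    eps is a hypothetical smoothing term that keeps weights finite for
    classes with zero labeled pixels. Weights are rescaled to mean 1.
    """
    counts = np.asarray(pixel_counts, dtype=float) + eps
    freq = counts / counts.sum()
    weights = 1.0 / freq
    return weights / weights.mean()

# Hypothetical pixel counts for a common, an uncommon, and a rare class.
weights = inverse_frequency_weights([900, 90, 10])
```

The resulting vector can be passed to a weighted loss so that errors on rare classes contribute more to the gradient than errors on common ones.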

To provide further insight into the model performance, we show qualitative predictions on the test set in Figure 8.

Finally, we present the results of the semantic mapping experiment conducted at the most representative test site (the rural location in Pittsburgh, United States). Because ground truth for the geometric and semantic information was not available at the test sites, we could not compute quantitative metrics for semantic mapping in large-scale forestry environments. Obtaining quantitative results would require a high-precision laser scanner such as the Leica NOVA MS50 or BLK360 [73], or ground control points for photogrammetry. Therefore, we devised a qualitative test to evaluate our full framework by comparing the generated UFOMap with a manually labeled semantic mesh from an orthomosaic to assess the accuracy of our global map.

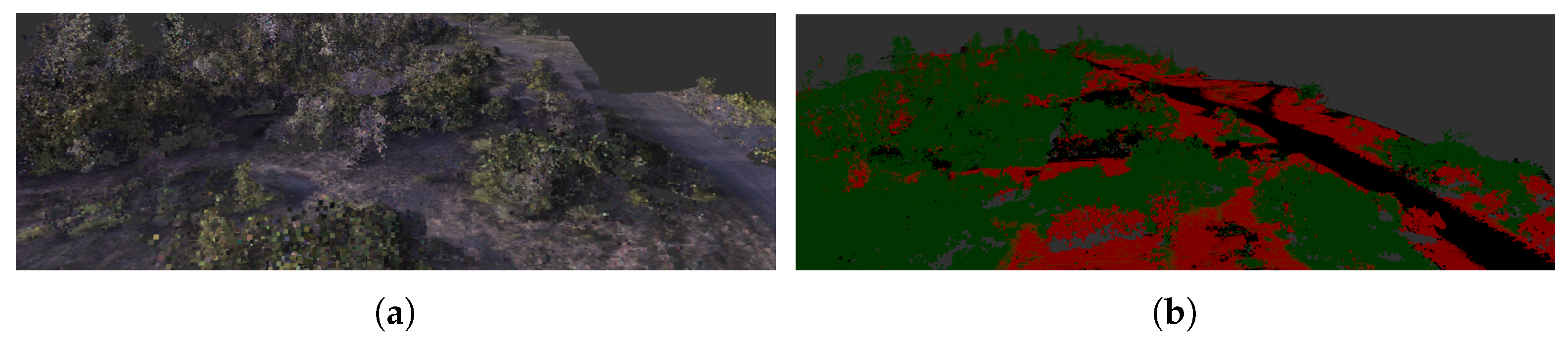

Figure 9 showcases the semantic UFOMap results, including the orthomosaic ground truth labels for the compressed classes (fuel in red, canopy in green, and background in black), along with the RGB UFOMap representing the terrain baseline. Additionally, Figure 10 shows a close-up of both the image and the segmentation for a clearer comparison between the semantic classes and the original RGB image.

5. Discussion

In the two test sites surveyed in this work, LIOSAM demonstrates superior performance in terms of RMSE compared to FASTLIO-SC. Specifically, we noticed that LIOSAM might outperform FASTLIO-SC because of two key aspects: IMU heading initialization and efficient odometry updates, which facilitate the integration of GNSS measurements within the optimization loop. The positive impact of heading initialization and odometry updates can be observed in the quantitative and qualitative results depicted in Table 3 and Figure 4.

Although the experiments in the current work show that LIOSAM achieves better odometry and mapping outcomes, it should be noted that the average RMSE for the Pittsburgh study site is higher than for the Coimbra study site. Although the flights at the two test sites were different (autonomous in Pittsburgh, manually piloted in Coimbra), the main factor behind the higher errors in the Pittsburgh surveys might be the lack of reliable features, which are needed for the LiDAR odometry to stay close to the actual trajectory. Specifically, LIOSAM extracts corner and surface features from LiDAR scans to perform scan matching between point clouds and retrieve LiDAR odometry. In the rural site, such features might not be reliable enough to yield accurate LiDAR odometry. Note that in the semi-urban environment, the Coimbra study site, where corner features are more abundant than in a rural area, the average RMSE is below 1 m, which is acceptable considering the size of the surveyed area and the distance flown.
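To make the notion of corner and surface features concrete, the sketch below scores each point of a single scan ring by how much its range differs from that of its neighbors, in the spirit of LOAM-style front ends. It is a simplified illustration rather than the LIOSAM implementation: the function names, the window size, and both thresholds are assumptions chosen for the example.

```python
import numpy as np

def ring_curvature(ranges, half_window=5):
    """Squared range difference between each point and its ring neighbors."""
    r = np.asarray(ranges, dtype=float)
    curvature = np.full(r.size, np.nan)  # borders lack a full window
    for i in range(half_window, r.size - half_window):
        window = r[i - half_window:i + half_window + 1]
        # Sum of the neighbors' ranges minus (window size - 1) times the point's range.
        diff = window.sum() - (2 * half_window + 1) * r[i]
        curvature[i] = diff ** 2
    return curvature

def classify_features(curvature, edge_threshold=1.0, surf_threshold=0.1):
    """Label points as 'corner', 'surface', or 'none' (thresholds are hypothetical)."""
    valid = ~np.isnan(curvature)
    c = np.where(valid, curvature, 0.0)
    labels = np.full(curvature.shape, "none", dtype=object)
    labels[valid & (c > edge_threshold)] = "corner"
    labels[valid & (c < surf_threshold)] = "surface"
    return labels

# Synthetic ring: a flat wall at 10 m followed by a closer wall at 5 m.
ring = np.r_[np.full(20, 10.0), np.full(20, 5.0)]
labels = classify_features(ring_curvature(ring))
```

On a structured scene such as this synthetic wall, the points split cleanly into surfaces and corners. Foliage tends to produce irregular ranges, so fewer points would fall into stable corner or surface categories, which is consistent with the behavior discussed above.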

Regarding FASTLIO-SC, it performs direct registration of LiDAR scans; thus, it does not need to compute corner or surface features. However, the experiments performed at both study sites show that its main drawback might be related to the initial yaw orientation. In particular, the FASTLIO-SC yaw angle does not align with the actual IMU heading angle. This misalignment severely affects the estimated odometry because it starts deviating from the actual location of the platform; see Figure 4. For instance, one of the worst deviations occurs at the Coimbra study site, where the RMSE on the X axis rises to 42.51 m.
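The effect of an initial yaw offset on the per-axis RMSE can be illustrated with a short sketch. It assumes that the estimated and reference trajectories are already time-synchronized and expressed in the same local frame. The function names, the synthetic 100 m straight path, and the 10° offset are hypothetical and are not taken from our surveys.

```python
import numpy as np

def rmse_per_axis(estimated, reference):
    """Root mean squared error along X, Y, and Z for matched N x 3 positions."""
    err = np.asarray(estimated, dtype=float) - np.asarray(reference, dtype=float)
    return np.sqrt(np.mean(err ** 2, axis=0))

def rotate_yaw(points, yaw_rad):
    """Rotate N x 3 points about the Z axis by yaw_rad."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    rotation = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return np.asarray(points, dtype=float) @ rotation.T

# Synthetic reference: a 100 m straight path along X, sampled every metre.
x = np.linspace(0.0, 100.0, 101)
reference = np.column_stack([x, np.zeros_like(x), np.zeros_like(x)])

# Same path as seen by an estimator whose initial heading is off by 10 degrees.
estimated = rotate_yaw(reference, np.deg2rad(10.0))
rmse_xyz = rmse_per_axis(estimated, reference)
```

Because the lateral error grows linearly with the distance travelled, even a modest heading offset at initialization produces errors of several metres on a path of this length, and the axis that absorbs the error depends on the direction of flight.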

Based on the above, LIOSAM might be more suitable than FASTLIO-SC for performing localization and mapping tasks in semi-urban and rural areas. Nevertheless, we suggest continuing to evaluate the limitations and advantages of both SLAM approaches in rural and forest environments in future work. Further assessment of these algorithms might yield insights toward a new robust SLAM strategy for these environments.

Our semantic segmentation experiments show that a small number of labeled images from a similar region can result in relatively accurate predictions for that region. Overall, the predictions in Figure 8 show fairly good agreement with the ground truth. The errors often occurred when the textures were indicative of the predicted class, suggesting that the model struggles with more global reasoning. This could be further investigated with a comparison study between RGB and multispectral cameras to determine which factor contributes more to segmentation quality: the channel choice or the higher resolution. This was not possible for this paper as we did not have enough annotators for both sensors. By observing both the raw classes and the classes aggregated by the rules defined in Table 2, we see that many of the prediction errors fall within the same aggregate class. Given the variability of vegetation by geographic area and the different objectives of mapping, it seems that training many site-specific models may be easier than expecting one fixed model to generalize worldwide. This observation agrees with the result of our previous work [14], where it was shown that increasing the number of training samples only marginally increased the segmentation accuracy.
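The observation about aggregate classes can be checked with a simple lookup, sketched below. The raw-to-aggregate mapping shown here is hypothetical and stands in for the rules of Table 2, and the labels are toy values rather than our predictions.

```python
import numpy as np

# Hypothetical lookup: index = raw class id, value = aggregate class id
# (0 = background, 1 = fuel, 2 = canopy). The actual rules are those of Table 2.
RAW_TO_AGG = np.array([0, 1, 1, 2, 2])

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the ground truth."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))

# Toy labels: two errors confuse raw classes that share an aggregate class.
raw_true = np.array([1, 1, 2, 3, 4, 0])
raw_pred = np.array([2, 1, 2, 4, 4, 0])

raw_acc = accuracy(raw_true, raw_pred)
agg_acc = accuracy(RAW_TO_AGG[raw_true], RAW_TO_AGG[raw_pred])
```

In this toy case, every raw-class error disappears after aggregation, so the aggregate accuracy is higher than the raw accuracy. The same comparison on real predictions indicates how many errors matter for the fuel, canopy, and background decision.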

In the fuel mapping results, we observed that capturing the map from a higher altitude, specifically between 30 m and 40 m above the ground, with resolutions below 0.1 m did not yield a cohesive and dense map due to limitations in the LiDAR density. Furthermore, the mapping process faced challenges related to odometry dropouts as the map expanded and to the computational load required for neural network operations. Despite these challenges, a high map density was achieved by collecting data points at a frequency of approximately 4 Hz, ensuring a sufficient representation of the environment, as seen in Figure 9.

A visual comparison of Figure 9b,c (the semantic UFOMap and the ground truth map) revealed a general alignment. Nonetheless, specific issues were identified, particularly in localized areas, such as thin layers of background misidentified as canopy. These issues could be attributed to factors such as pose drift, abrupt jumps resulting from the lack of loop closure updates in the odometry messages employed, and the semantic mapping itself. Additionally, using detection without tracking had a negative impact on the segmentation quality of the map. Incorporating tracking mechanisms would enhance the robustness and accuracy of the mapping process, thereby contributing to a more reliable global representation system.
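One simple way to make voxel labels less sensitive to single-frame segmentation errors is to accumulate per-voxel class votes over time and report the majority class. The sketch below illustrates this idea with a plain dictionary. It is not the UFOMap API and not the fusion rule used in our framework; the class name, the resolution, and the labels are hypothetical.

```python
from collections import defaultdict

import numpy as np

class SemanticVoxelVotes:
    """Toy voxel grid that keeps a vote count per class for each voxel."""

    def __init__(self, resolution, num_classes):
        self.resolution = resolution
        self.num_classes = num_classes
        self.votes = defaultdict(lambda: np.zeros(num_classes, dtype=int))

    def key(self, point):
        """Integer voxel index of a 3D point at the grid resolution."""
        idx = np.floor(np.asarray(point, dtype=float) / self.resolution)
        return tuple(int(v) for v in idx)

    def insert(self, points, labels):
        """Add one vote per labeled point to the voxel that contains it."""
        for point, label in zip(points, labels):
            self.votes[self.key(point)][label] += 1

    def label(self, point):
        """Majority class of the voxel containing the point, or None if unseen."""
        k = self.key(point)
        if k not in self.votes:
            return None
        return int(np.argmax(self.votes[k]))

# Three observations label a voxel as class 1 and one outlier labels it as class 2.
grid = SemanticVoxelVotes(resolution=0.5, num_classes=3)
grid.insert([(0.1, 0.1, 0.1)] * 3, [1, 1, 1])
grid.insert([(0.2, 0.3, 0.4)], [2])
fused = grid.label((0.25, 0.25, 0.25))
```

Majority voting only smooths label noise within a voxel. It does not correct pose drift, since points registered with a wrong pose still vote in the wrong voxel.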

Importantly, despite room for improvement, the generated global map demonstrated a conservative approach to fuel classification, prioritizing the preservation of tree cover and minimizing the risk of erroneously identifying and removing trees. This approach aligns with the objective of accurately identifying fuel regions for wildfire prevention. These observations provide valuable insights into the challenges associated with semantic mapping. They also underscore the significance of addressing loop closure limitations, improving tracking mechanisms, and optimizing resolution parameters to achieve a cohesive and detailed global map representation.

6. Conclusions

This paper presents a comprehensive system for monitoring forests using UAVs. The system includes a custom sensor payload with LiDAR, stereo cameras, IMU, and a multispectral camera mounted on a drone to collect reliable data in challenging forest environments. The payload is designed to be lightweight and adaptable to different drones, providing flexibility. The study implements two SLAM algorithms, LIOSAM and FASTLIO-SC, for accurate localization and mapping using LiDAR, GNSS, and IMU data. Photogrammetry is used as a benchmark to evaluate the performance of the developed online methods. The integration of fuel mapping, which includes semantic segmentation, LiDAR camera registration, and odometry, is discussed, as well as the adaptation of the global map representation. The paper concludes by highlighting qualitative tests and a comparison of the generated semantic maps with manually labeled data. Overall, this system provides a comprehensive solution for forest monitoring with drones, enabling accurate data collection and mapping for effective forest management and conservation.

Future work needs to address the limitations identified in our current framework. Alternative initialization strategies should be explored to improve the odometry performance of FASTLIO-SC. Improving the alignment accuracy between the SLAM map and the reference cloud, especially with respect to tree canopy, is essential for a more accurate representation of forested areas. The accuracy of photogrammetry maps can be improved by including ground control points, which will also be used to improve the evaluation of the mapping results. In this context, the in situ collection of geometric variables such as distances and areas will provide ground truth for mapping evaluation. The fuel mapping experiment faced challenges related to map coherence, odometry dropouts, and semantic segmentation results. Future research should focus on improving semantic mapping loop closure, implementing tracking for the semantic voxels, adding the multispectral camera for detection, and optimizing resolution parameters to overcome these challenges. Advances in the performance of SLAM, map alignment accuracy, geometric measurements, semantic segmentation, and fuel classification are critical to developing a robust and accurate forest mapping system that facilitates effective forest management and conservation efforts.

Author Contributions

Conceptualization, F.Y. and G.K.; data curation, M.E.A., D.R., W.K., T.A.-R. and F.Y.; formal analysis, M.E.A., D.R. and T.A.-R.; investigation, M.E.A., D.R., W.K., T.A.-R. and F.Y.; methodology, all authors; project administration, G.K. and F.Y.; resources, G.K. and F.Y.; software, M.E.A., D.R., W.K., T.A.-R. and F.Y.; supervision, G.K. and F.Y.; validation, M.E.A., D.R., T.A.-R. and F.Y.; visualization, M.E.A., D.R., W.K., T.A.-R. and F.Y.; writing—original draft, all authors; writing—review & editing, M.E.A., T.A.-R. and F.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been supported by a CMU Portugal Affiliated Ph.D. grant (ref. PRT/BD/152194/2021) from the Portuguese Foundation for Science and Technology (FCT) and the Project of the Central Portugal Region and cofunded by the program Portugal 2020, under the reference CENTRO-01-0247-FEDER-045931.

Data Availability Statement

Data that support the findings of this study are available on request from the corresponding author, M.E.A. The data are not publicly available because permission is required to share data from certain locations.

Acknowledgments

We extend our sincere gratitude to my Ph.D. supervisors, David Portugal, Joao Filipe Ferreira, and Paulo Peixoto, for their invaluable guidance, support, and expertise throughout this research project. Their unwavering commitment to excellence and their dedication to my academic growth have been instrumental in the successful completion of this scientific journal paper. We also thank Babak B. Chehreh for their invaluable help piloting the UAV for acquiring our datasets in Portugal.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| UAV | Uncrewed Aerial Vehicle |
| GNSS | Global Navigation Satellite System |
| IMU | Inertial Measurement Unit |
| LiDAR | Light Detection and Ranging |
| RGB | Red, Green, Blue |
| GPU | Graphics Processing Unit |
| SLAM | Simultaneous Localization and Mapping |
| CPU | Central Processing Unit |
| PPS | Pulse Per Second |
| GPS | Global Positioning System |
| NMEA | National Marine Electronics Association |
| NN | Neural Network |
| TSDF | Truncated Signed Distance Function |
| UFOMap | Unknown Free Occupied Map |
| IoU | Intersection over Union |
| RTK | Real Time Kinematics |
| RMSE | Root Mean Squared Error |
| LIOSAM | Lidar Inertial Odometry via Smoothing and Mapping |
| FASTLIO-SC | Fast Lidar Inertial Odometry with Scan Context |

References

- Jain, P.; Castellanos-Acuna, D.; Coogan, S.C.; Abatzoglou, J.T.; Flannigan, M.D. Observed increases in extreme fire weather driven by atmospheric humidity and temperature. Nat. Clim. Chang. 2022, 12, 63–70.

- Jolly, W.M.; Cochrane, M.A.; Freeborn, P.H.; Holden, Z.A.; Brown, T.J.; Williamson, G.J.; Bowman, D.M.J.S. Climate-induced variations in global wildfire danger from 1979 to 2013. Nat. Commun. 2015, 6, 7537.

- He, Y.; Chen, G.; Potter, C.; Meentemeyer, R.K. Integrating multi-sensor remote sensing and species distribution modeling to map the spread of emerging forest disease and tree mortality. Remote Sens. Environ. 2019, 231, 111238.

- Bright, B.C.; Hudak, A.T.; McCarley, T.R.; Spannuth, A.; Sánchez-López, N.; Ottmar, R.D.; Soja, A.J. Multitemporal lidar captures heterogeneity in fuel loads and consumption on the Kaibab Plateau. Fire Ecol. 2022, 18, 18.

- Yuan, C.; Liu, Z.; Zhang, Y. UAV-based forest fire detection and tracking using image processing techniques. In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; pp. 639–643.

- Roldán-Gómez, J.J.; González-Gironda, E.; Barrientos, A. A Survey on Robotic Technologies for Forest Firefighting: Applying Drone Swarms to Improve Firefighters’ Efficiency and Safety. Appl. Sci. 2021, 11, 363.

- Viegas, C.; Chehreh, B.; Andrade, J.; Lourenço, J. Tethered UAV with combined multi-rotor and water jet propulsion for forest fire fighting. J. Intell. Robot. Syst. 2022, 104, 21.

- Chen, Y.; Zhang, Y.; Xin, J.; Yi, Y.; Liu, D.; Liu, H. A UAV-based Forest Fire Detection Algorithm Using Convolutional Neural Network. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 10305–10310.

- Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M.A. Computer vision for wildfire research: An evolving image dataset for processing and analysis. Fire Saf. J. 2017, 92, 188–194.

- Couceiro, M.S.; Portugal, D.; Ferreira, J.F.; Rocha, R.P. SEMFIRE: Towards a new generation of forestry maintenance multi-robot systems. In Proceedings of the 2019 IEEE/SICE International Symposium on System Integration (SII), Paris, France, 14–16 January 2019; pp. 270–276.

- Anderson, H.E. Aids to Determining Fuel Models for Estimating Fire Behavior; U.S. Department of Agriculture, Forest Service, Intermountain Forest and Range Experiment Station: Washington, DC, USA, 1981.

- Andrada, M.E.; Ferreira, J.; Portugal, D.; Couceiro, M. Testing Different CNN Architectures for Semantic Segmentation for Landscaping with Forestry Robotics. In Proceedings of the Workshop on Perception, Planning and Mobility in Forestry Robotics, Online, 29 October 2020.

- Andrada, M.E.; Ferreira, J.F.; Portugal, D.; Couceiro, M.S. Integration of an Artificial Perception System for Identification of Live Flammable Material in Forestry Robotics. In Proceedings of the 2022 IEEE/SICE International Symposium on System Integration (SII), Narvik, Norway, 9–12 January 2022; pp. 103–108.

- Russell, D.J.; Arevalo-Ramirez, T.; Garg, C.; Kuang, W.; Yandun, F.; Wettergreen, D.; Kantor, G. UAV Mapping with Semantic and Traversability Metrics for Forest Fire Mitigation. In Proceedings of the ICRA 2022 Workshop in Innovation in Forestry Robotics: Research and Industry Adoption, Philadelphia, PA, USA, 23 May 2022.

- Marino, E.; Ranz, P.; Tomé, J.L.; Ángel Noriega, M.; Esteban, J.; Madrigal, J. Generation of high-resolution fuel model maps from discrete airborne laser scanner and Landsat-8 OLI: A low-cost and highly updated methodology for large areas. Remote Sens. Environ. 2016, 187, 267–280.

- Mitri, G.H.; Gitas, I.Z. Fire type mapping using object-based classification of Ikonos imagery. Int. J. Wildland Fire 2006, 15, 457–462.

- Peterson, S.H.; Franklin, J.; Roberts, D.A.; van Wagtendonk, J.W. Mapping fuels in Yosemite National Park. Can. J. For. Res. 2013, 43, 7–17.

- Katagis, T.; Gitas, I.Z.; Toukiloglou, P.; Veraverbeke, S.; Goossens, R. Trend analysis of medium- and coarse-resolution time series image data for burned area mapping in a Mediterranean ecosystem. Int. J. Wildland Fire 2014, 23, 668–677.

- Chuvieco, E.; Mouillot, F.; van der Werf, G.R.; San Miguel, J.; Tanase, M.; Koutsias, N.; García, M.; Yebra, M.; Padilla, M.; Gitas, I.; et al. Historical background and current developments for mapping burned area from satellite Earth observation. Remote Sens. Environ. 2019, 225, 45–64.

- Pérez-Cabello, F.; Montorio, R.; Alves, D.B. Remote sensing techniques to assess post-fire vegetation recovery. Curr. Opin. Environ. Sci. Health 2021, 21, 100251.

- Chuvieco, E.; Aguado, I.; Yebra, M.; Nieto, H.; Salas, J.; Martín, M.P.; Vilar, L.; Martínez, J.; Martín, S.; Ibarra, P.; et al. Development of a framework for fire risk assessment using remote sensing and geographic information system technologies. Ecol. Model. 2010, 221, 46–58.

- Ozenen Kavlak, M.; Cabuk, S.N.; Cetin, M. Development of forest fire risk map using geographical information systems and remote sensing capabilities: Ören case. Environ. Sci. Pollut. Res. 2021, 28, 33265–33291.

- Kurbanov, E.; Vorobev, O.; Lezhnin, S.; Sha, J.; Wang, J.; Li, X.; Cole, J.; Dergunov, D.; Wang, Y. Remote sensing of forest burnt area, burn severity, and post-fire recovery: A review. Remote Sens. 2022, 14, 4714.

- Morgan, P.; Keane, R.E.; Dillon, G.K.; Jain, T.B.; Hudak, A.T.; Karau, E.C.; Sikkink, P.G.; Holden, Z.A.; Strand, E.K. Challenges of assessing fire and burn severity using field measures, remote sensing and modelling. Int. J. Wildland Fire 2014, 23, 1045–1060.

- Wooster, M.J.; Roberts, G.J.; Giglio, L.; Roy, D.P.; Freeborn, P.H.; Boschetti, L.; Justice, C.; Ichoku, C.; Schroeder, W.; Davies, D.; et al. Satellite remote sensing of active fires: History and current status, applications and future requirements. Remote Sens. Environ. 2021, 267, 112694.

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A review on early forest fire detection systems using optical remote sensing. Sensors 2020, 20, 6442.

- Vivaldini, K.C.; Martinelli, T.H.; Guizilini, V.C.; Souza, J.R.; Oliveira, M.D.; Ramos, F.T.; Wolf, D.F. UAV route planning for active disease classification. Auton. Robot. 2019, 43, 1137–1153.

- Vasavi, P.; Punitha, A.; Rao, T.V.N. Crop leaf disease detection and classification using machine learning and deep learning algorithms by visual symptoms: A review. Int. J. Electr. Comput. Eng. 2022, 12, 2079.

- Shamshiri, R.R.; Hameed, I.A.; Balasundram, S.K.; Ahmad, D.; Weltzien, C.; Yamin, M. Fundamental research on unmanned aerial vehicles to support precision agriculture in oil palm plantations. In Agricultural Robots—Fundamentals and Applications; IntechOpen: London, UK, 2018; pp. 91–116.

- Guimarães, N.; Pádua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry Remote Sensing from Unmanned Aerial Vehicles: A Review Focusing on the Data, Processing and Potentialities. Remote Sens. 2020, 12, 1046.

- Hyyppä, E.; Hyyppä, J.; Hakala, T.; Kukko, A.; Wulder, M.A.; White, J.C.; Pyörälä, J.; Yu, X.; Wang, Y.; Virtanen, J.P.; et al. Under-canopy UAV laser scanning for accurate forest field measurements. ISPRS J. Photogramm. Remote Sens. 2020, 164, 41–60.

- Corte, A.P.D.; Souza, D.V.; Rex, F.E.; Sanquetta, C.R.; Mohan, M.; Silva, C.A.; Zambrano, A.M.A.; Prata, G.; Alves de Almeida, D.R.; Trautenmüller, J.W.; et al. Forest inventory with high-density UAV-Lidar: Machine learning approaches for predicting individual tree attributes. Comput. Electron. Agric. 2020, 179, 105815.

- Dandois, J.P.; Ellis, E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013, 136, 259–276.

- Casbeer, D.; Beard, R.; McLain, T.; Li, S.M.; Mehra, R. Forest fire monitoring with multiple small UAVs. In Proceedings of the 2005 American Control Conference, Portland, OR, USA, 8–10 June 2005; Volume 5, pp. 3530–3535.

- Skorput, P.; Mandzuka, S.; Vojvodic, H. The use of Unmanned Aerial Vehicles for forest fire monitoring. In Proceedings of the 2016 International Symposium ELMAR, Zadar, Croatia, 12–14 September 2016; pp. 93–96.

- Getzin, S.; Nuske, R.S.; Wiegand, K. Using Unmanned Aerial Vehicles (UAV) to Quantify Spatial Gap Patterns in Forests. Remote Sens. 2014, 6, 6988–7004.

- Talbot, B.; Rahlf, J.; Astrup, R. An operational UAV-based approach for stand-level assessment of soil disturbance after forest harvesting. Scand. J. For. Res. 2018, 33, 387–396.

- Lisein, J.; Pierrot-Deseilligny, M.; Bonnet, S.; Lejeune, P. A Photogrammetric Workflow for the Creation of a Forest Canopy Height Model from Small Unmanned Aerial System Imagery. Forests 2013, 4, 922–944.

- Puliti, S.; Ørka, H.O.; Gobakken, T.; Næsset, E. Inventory of Small Forest Areas Using an Unmanned Aerial System. Remote Sens. 2015, 7, 9632–9654.

- Puliti, S.; Ene, L.T.; Gobakken, T.; Næsset, E. Use of partial-coverage UAV data in sampling for large scale forest inventories. Remote Sens. Environ. 2017, 194, 115–126.

- Paz, D.; Zhang, H.; Li, Q.; Xiang, H.; Christensen, H.I. Probabilistic Semantic Mapping for Urban Autonomous Driving Applications. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 2059–2064.

- Cheng, Q.; Zeller, N.; Cremers, D. Vision-Based Large-scale 3D Semantic Mapping for Autonomous Driving Applications. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 9235–9242.

- Kostavelis, I.; Gasteratos, A. Semantic mapping for mobile robotics tasks: A survey. Robot. Auton. Syst. 2015, 66, 86–103.

- Luo, R.C.; Chiou, M. Hierarchical Semantic Mapping Using Convolutional Neural Networks for Intelligent Service Robotics. IEEE Access 2018, 6, 61287–61294.

- Trenčanová, B.; Proença, V.; Bernardino, A. Development of Semantic Maps of Vegetation Cover from UAV Images to Support Planning and Management in Fine-Grained Fire-Prone Landscapes. Remote Sens. 2022, 14, 1262.

- Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping forest tree species in high resolution UAV-based RGB-imagery by means of convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 170, 205–215.

- Debeunne, C.; Vivet, D. A review of visual-LiDAR fusion based simultaneous localization and mapping. Sensors 2020, 20, 2068.

- Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. In Proceedings of the Robotics: Science and Systems, Berkeley, CA, USA, 12–16 July 2014; Volume 2, pp. 1–9.

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; IEEE: Piscataway, NJ, USA, 2020; pp. 5135–5142.

- Shao, W.; Vijayarangan, S.; Li, C.; Kantor, G. Stereo visual inertial lidar simultaneous localization and mapping. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 370–377.

- Ringdahl, O.; Hohnloser, P.; Hellström, T.; Holmgren, J.; Lindroos, O. Enhanced Algorithms for Estimating Tree Trunk Diameter Using 2D Laser Scanner. Remote Sens. 2013, 5, 4839–4856.

- Shao, J.; Zhang, W.; Mellado, N.; Wang, N.; Jin, S.; Cai, S.; Luo, L.; Lejemble, T.; Yan, G. SLAM-aided forest plot mapping combining terrestrial and mobile laser scanning. ISPRS J. Photogramm. Remote Sens. 2020, 163, 214–230.

- Qian, C.; Liu, H.; Tang, J.; Chen, Y.; Kaartinen, H.; Kukko, A.; Zhu, L.; Liang, X.; Chen, L.; Hyyppä, J. An Integrated GNSS/INS/LiDAR-SLAM Positioning Method for Highly Accurate Forest Stem Mapping. Remote Sens. 2017, 9, 3.

- Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. Fast-lio2: Fast direct lidar-inertial odometry. IEEE Trans. Robot. 2022, 38, 2053–2073.

- Kim, G.; Kim, A. Scan context: Egocentric spatial descriptor for place recognition within 3d point cloud map. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 4802–4809.

- He, D.; Xu, W.; Zhang, F. Kalman filters on differentiable manifolds. arXiv 2021, arXiv:2102.03804.

- Agisoft. Agisoft Metashape. Available online: https://www.agisoft.com/ (accessed on 5 July 2023).

- Young, D.J.; Koontz, M.J.; Weeks, J.M. Optimizing aerial imagery collection and processing parameters for drone-based individual tree mapping in structurally complex conifer forests. Methods Ecol. Evol. 2022, 13, 1447–1463.

- Guo, M.H.; Lu, C.Z.; Hou, Q.; Liu, Z.; Cheng, M.M.; Hu, S.M. SegNeXt: Rethinking Convolutional Attention Design for Semantic Segmentation. arXiv 2022, arXiv:2209.08575.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Chen, Z.; Ding, R.; Chin, T.; Marculescu, D. Understanding the Impact of Label Granularity on CNN-based Image Classification. arXiv 2019, arXiv:1901.07012.

- Meagher, D. Geometric modeling using octree encoding. Comput. Graph. Image Process. 1982, 19, 129–147.

- Newcombe, R.A.; Fitzgibbon, A.; Izadi, S.; Hilliges, O.; Molyneaux, D.; Kim, D.; Davison, A.J.; Kohli, P.; Shotton, J.; Hodges, S. KinectFusion: Real-time dense surface mapping and tracking. In Proceedings of the 2011 10th IEEE International Symposium on Mixed and Augmented Reality, Basel, Switzerland, 26–29 October 2011; pp. 127–136.

- Museth, K.; Lait, J.; Johanson, J.; Budsberg, J.; Henderson, R.; Alden, M.; Cucka, P.; Hill, D.; Pearce, A. OpenVDB: An open-source data structure and toolkit for high-resolution volumes. In Proceedings of the ACM SIGGRAPH 2013 Courses—SIGGRAPH ’13, New York, NY, USA, 21–25 July 2013; p. 1.

- Xuan, Z.; David, F. Real-Time Voxel Based 3D Semantic Mapping with a Hand Held RGB-D Camera. 2018. Available online: https://github.com/floatlazer/semantic_slam (accessed on 10 July 2023).

- Hornung, A.; Wurm, K.M.; Bennewitz, M.; Stachniss, C.; Burgard, W. OctoMap: An efficient probabilistic 3D mapping framework based on octrees. Auton. Robot. 2013, 34, 189–206.

- Duberg, D.; Jensfelt, P. UFOMap: An Efficient Probabilistic 3D Mapping Framework That Embraces the Unknown. IEEE Robot. Autom. Lett. 2020, 5, 6411–6418.

- Pierzchała, M.; Giguère, P.; Astrup, R. Mapping forests using an unmanned ground vehicle with 3D LiDAR and graph-SLAM. Comput. Electron. Agric. 2018, 145, 217–225.

- Taha, A.A.; Hanbury, A. An efficient algorithm for calculating the exact Hausdorff distance. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 2153–2163.

- CloudCompare: 3D Point Cloud and Mesh Processing Software Home Page. Available online: https://www.cloudcompare.org/ (accessed on 15 June 2023).

- Ali-Sisto, D.; Gopalakrishnan, R.; Kukkonen, M.; Savolainen, P.; Packalen, P. A method for vertical adjustment of digital aerial photogrammetry data by using a high-quality digital terrain model. Int. J. Appl. Earth Obs. Geoinf. 2020, 84, 101954.

- Google Earth. Available online: https://earth.google.com (accessed on 5 July 2023).

- Jiao, J.; Wei, H.; Hu, T.; Hu, X.; Zhu, Y.; He, Z.; Wu, J.; Yu, J.; Xie, X.; Huang, H.; et al. FusionPortable: A Multi-Sensor Campus-Scene Dataset for Evaluation of Localization and Mapping Accuracy on Diverse Platforms. arXiv 2022, arXiv:2208.11865.

Figure 1.

Sensing payload.

Figure 2.

General scheme of the implemented localization and mapping approaches. Note that LIOSAM and FASTLIO-SC outputs are odometry and a 3D map in the form of a point cloud.

Figure 3.

The study sites are located in Coimbra, Portugal, and Pittsburgh, United States. The magenta line depicts the path followed by the UAV. It is important to highlight that the UAV was operated remotely by a human expert during the aerial surveys conducted at the Coimbra study site. In contrast, for the Pittsburgh study site, the UAV flights were carried out using the UAV autopilot system.

Figure 4.

Qualitative odometry results of the LIOSAM and FASTLIO-SC approaches. To avoid overcrowding the figure, two representative cases from the study sites are shown: (a) Survey 2 for the semi-urban area and (b) Survey 2 for the rural area. The black solid line represents the RTK measurements, the cyan dotted line is the LIOSAM odometry, and the magenta dashed line depicts the FASTLIO-SC odometry. Yellow arrows depict the odometry direction estimated by FASTLIO-SC.

Figure 5.

Point cloud maps of (a) the photogrammetry baseline and (b) the SLAM outcome. The two maps were compared using the Hausdorff distance, whose result is visualized as (c) the SLAM map colored according to this metric and (d) a boxplot showing the error distribution. Note that the baseline cloud is colored by RGB values and the SLAM cloud by height values.

Figure 6.

Landmarks and regions used to validate the spatial correctness of the SLAM map. Given that the photogrammetry baseline and the SLAM map span different areas, we chose regions that appear in both. (a) shows the areas used to validate the SLAM map, which lie in the intersection of the baseline and the SLAM map; (b) shows the specific segments or landmarks used for detailed comparison.

Figure 7.

Fraction of pixels per class for (a) the train set and (b) the test set. Note that the three dominant classes, Canopy, Bare Earth, and Dry Grass, are common to both collections, but their relative frequencies differ somewhat. These correspond to our aggregate Vegetation, Background, and Fuel classes, respectively. The fractions of the other classes are fairly small, and some classes from the training set are entirely absent from the test set.

Figure 8.

Qualitative semantic mapping results from the test set. The results are shown both for the predicted classes and the aggregated ones, with colors visualized in the top rows. White regions in the ground truth (GT) represent areas that were ambiguous to the human annotator. Overall the predictions match the ground truth well and boundaries are well-defined. Note that many regions of confusion, such as canopy-to-trunk and green shrub-to-canopy, fall within the same coarse classes for our mapping purposes.

Figure 9.

UFOMaps of (a) the Colored Image baseline, (b) the UFOMap semantic outcome, using red for the fuel classes, green for the canopy classes, and black for the background classes, and (c) the hand-labeled orthomosaic ground truth for the semantic classes.

Figure 10.

UFOMaps from a closer view of (a) the Colored Image baseline and (b) the UFOMap semantic outcome, using red for the fuel classes, green for the canopy classes, and black for the background classes.

Table 1.

Sensors and Operation Frequency.

| Sensor Name | Sensor Type | Frequency (Hz) | Voltage (V) |
|---|---|---|---|
| VN-200 Rugged GNSS/INS | IMU | 400 | – |
| Velodyne VLP-32C | LiDAR | 10 | – |
| Allied Vision Alvium 1800 U-2040c | RGB camera | 10 | 5.0 |
| Mapir Survey 3 | Multispectral camera | 15 | 5.0 |

Table 2.

Classes used in semantic segmentation. These are inspired by relevant classes from the Anderson fuel model [11] and also include additional classes relevant to our application.

| Fine-Grained Class | Coarse-Grained Class |
|---|---|
| Dry Grass | Fuel |
| Green Grass | Fuel |
| Dry Shrubs | Fuel |
| Wood Pieces | Fuel |
| Litterfall | Fuel |
| Timber Litter | Fuel |
| Green Shrubs | Canopy |
| Canopy | Canopy |
| Live Trunks | Canopy |
| Bare Earth | Background |
| People | Background |
| Sky | Background |
| Blurry | Background |
| Drone | Background |
| Obstacle | Background |
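The fine-to-coarse aggregation in Table 2 can be expressed as a simple lookup. The following is a minimal sketch, not the authors' implementation: the dictionary is transcribed from the table, while the function name `aggregate_labels` and the list-of-rows label layout are assumptions made for illustration.

```python
# Fine-to-coarse class mapping transcribed from Table 2.
FINE_TO_COARSE = {
    "Dry Grass": "Fuel",
    "Green Grass": "Fuel",
    "Dry Shrubs": "Fuel",
    "Wood Pieces": "Fuel",
    "Litterfall": "Fuel",
    "Timber Litter": "Fuel",
    "Green Shrubs": "Canopy",
    "Canopy": "Canopy",
    "Live Trunks": "Canopy",
    "Bare Earth": "Background",
    "People": "Background",
    "Sky": "Background",
    "Blurry": "Background",
    "Drone": "Background",
    "Obstacle": "Background",
}


def aggregate_labels(fine_label_map):
    """Map a 2D grid (list of rows) of fine-grained class names to coarse classes.

    The grid layout is a hypothetical stand-in for a per-pixel label image.
    """
    return [[FINE_TO_COARSE[name] for name in row] for row in fine_label_map]


# Tiny 2x2 example "image" of fine-grained predictions.
example = [["Dry Grass", "Canopy"], ["Sky", "Live Trunks"]]
coarse = aggregate_labels(example)
```

Because confusions such as canopy-to-trunk collapse into the same coarse class, errors between those fine-grained classes disappear after this aggregation.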

Table 3.

Quantitative odometry results of LIOSAM and FASTLIO-SC approaches on forested regions. Bold values denote the lowest RMSE. The last row shows the average RMSE as a percentage of the flight distance for each survey.

| Study site | Coimbra | | | | Pittsburgh | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Survey | Survey 1 | | Survey 2 | | Survey 1 | | Survey 2 | | Survey 3 | |
| Flight distance (m) | 175.2 | | 424.4 | | 1535.5 | | 2421.1 | | 1188.6 | |
| Method | LIOSAM | FASTLIO-SC | LIOSAM | FASTLIO-SC | LIOSAM | FASTLIO-SC | LIOSAM | FASTLIO-SC | LIOSAM | FASTLIO-SC |
| RMSE X (m) | 0.26 | 1.79 | 0.67 | 42.51 | 1.33 | 2.67 | 0.73 | 0.75 | 0.72 | 1.80 |
| RMSE Y (m) | 0.24 | 3.49 | 0.90 | 27.50 | 0.79 | 5.39 | 1.51 | 5.70 | 0.40 | 6.02 |
| RMSE Z (m) | 0.33 | 4.13 | 0.63 | 7.88 | 1.60 | 4.64 | 3.53 | 3.00 | 1.91 | 5.88 |
| Average RMSE (m) | 0.28 | 3.14 | 0.73 | 25.96 | 1.24 | 4.23 | 1.92 | 3.15 | 1.01 | 4.57 |
| Average RMSE (% of flight distance) | 0.16 | 1.79 | 0.30 | 10.70 | 0.08 | 0.28 | 0.08 | 0.13 | 0.08 | 0.38 |
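As a worked example of how the last two rows of Table 3 relate to the per-axis values, the sketch below recomputes them for the Coimbra Survey 1 columns. It assumes that the average is the arithmetic mean of the X, Y, and Z RMSE values and that the percentage is that average divided by the flight distance; the function names are hypothetical.

```python
def average_rmse(rmse_x, rmse_y, rmse_z):
    """Arithmetic mean of the per-axis RMSE values (assumed definition)."""
    return (rmse_x + rmse_y + rmse_z) / 3.0


def rmse_percent_of_distance(avg_rmse, flight_distance_m):
    """Average RMSE expressed as a percentage of the flight distance."""
    return 100.0 * avg_rmse / flight_distance_m


# Coimbra, Survey 1 (flight distance 175.2 m), values taken from Table 3.
flight_distance = 175.2
liosam_avg = average_rmse(0.26, 0.24, 0.33)
fastlio_avg = average_rmse(1.79, 3.49, 4.13)

liosam_pct = rmse_percent_of_distance(liosam_avg, flight_distance)
fastlio_pct = rmse_percent_of_distance(fastlio_avg, flight_distance)

print(round(liosam_avg, 2), round(liosam_pct, 2))
print(round(fastlio_avg, 2), round(fastlio_pct, 2))
```

Normalizing by flight distance makes surveys of very different lengths comparable, which is why the percentage is reported alongside the absolute RMSE.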

Table 4.

Quantitative evaluation of the map resulting from our SLAM implementation. Area regions are measured in m² and length segments are measured in m. Absolute errors are computed with respect to the Google Earth measurements.

| Measurement Type | Google Earth | Photogrammetry | Absolute Error (Photogrammetry) | LIOSAM Map | Absolute Error (LIOSAM Map) |
|---|---|---|---|---|---|
| Area region 1 | 308.81 | 489.24 | 180.43 | 322.00 | 13.19 |
| Area region 2 | 3021.28 | 3144.47 | 123.19 | 3116.16 | 94.88 |
| Area region 3 | 4561.49 | 4742.12 | 180.63 | 4591.95 | 30.46 |
| Area region 4 | 6293.18 | 6216.12 | 77.06 | 6268.21 | 24.97 |
| Area region 5 | 1156.96 | 1161.97 | 5.01 | 1201.53 | 44.57 |
| Length segment 1 | 78.09 | 91.22 | 13.13 | 77.29 | 0.80 |
| Length segment 2 | 31.36 | 37.78 | 6.42 | 32.59 | 1.23 |
| Length segment 3 | 78.83 | 92.07 | 13.24 | 79.03 | 0.20 |
| Length segment 4 | 154.97 | 182.03 | 27.06 | 154.96 | 0.01 |
| Length segment 5 | 86.49 | 99.66 | 13.17 | 88.84 | 2.35 |
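The absolute-error columns of Table 4 are consistent with taking the Google Earth measurement as the reference. The sketch below reproduces two rows under that assumption; the helper name `absolute_error` and the tuple layout are illustrative only.

```python
def absolute_error(measured, reference):
    """Absolute difference between a map measurement and the reference value."""
    return abs(measured - reference)


# (label, Google Earth reference, photogrammetry, LIOSAM map), from Table 4.
rows = [
    ("Area region 1", 308.81, 489.24, 322.00),
    ("Length segment 4", 154.97, 182.03, 154.96),
]

errors = {}
for label, reference, photogrammetry, liosam in rows:
    errors[label] = (
        round(absolute_error(photogrammetry, reference), 2),
        round(absolute_error(liosam, reference), 2),
    )
    print(label, errors[label])
```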

Table 5.

Evaluation results of the SegNext network with the Anderson Fuel Model as a base for semantic segmentation in a forestry environment.

| Class | IoU (%) | Precision (%) | Recall (%) |
|---|---|---|---|
| Canopy | 70.05 | 84.77 | 80.13 |
| Dry Grass | 79.7 | 93.75 | 84.17 |
| Bare Earth | 78.53 | 88.12 | 87.83 |
| Green Shrubs | 3.27 | 21.72 | 3.71 |
| Green Grass | 0.0 | 0.0 | 0.0 |
| Dry Shrubs | 0.0 | 0.0 | 0.0 |
| Live Trunks | 0.05 | 0.05 | 84.09 |
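The three metrics in Table 5 are not independent. Assuming the standard per-class definitions, Precision = TP/(TP + FP), Recall = TP/(TP + FN), and IoU = TP/(TP + FP + FN), it follows that 1/IoU = 1/Precision + 1/Recall − 1. The sketch below uses this identity to recover IoU from the tabulated precision and recall; the function name is hypothetical, and the evaluation code used to produce the table is not shown in this article.

```python
def iou_from_precision_recall(precision_pct, recall_pct):
    """Recover IoU (in %) from precision and recall (in %).

    Uses 1/IoU = 1/P + 1/R - 1, which follows from the standard
    TP/FP/FN definitions. Returns 0.0 when either input is zero.
    """
    p = precision_pct / 100.0
    r = recall_pct / 100.0
    if p == 0.0 or r == 0.0:
        return 0.0
    return 100.0 * (p * r) / (p + r - p * r)


canopy_iou = iou_from_precision_recall(84.77, 80.13)
bare_earth_iou = iou_from_precision_recall(88.12, 87.83)
green_grass_iou = iou_from_precision_recall(0.0, 0.0)
print(round(canopy_iou, 2), round(bare_earth_iou, 2), green_grass_iou)
```

The identity also explains the Live Trunks row: a very low precision bounds the IoU from above regardless of how high the recall is.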

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).