Tree Species Identification in Urban Environments Using TensorFlow Lite and a Transfer Learning Approach

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

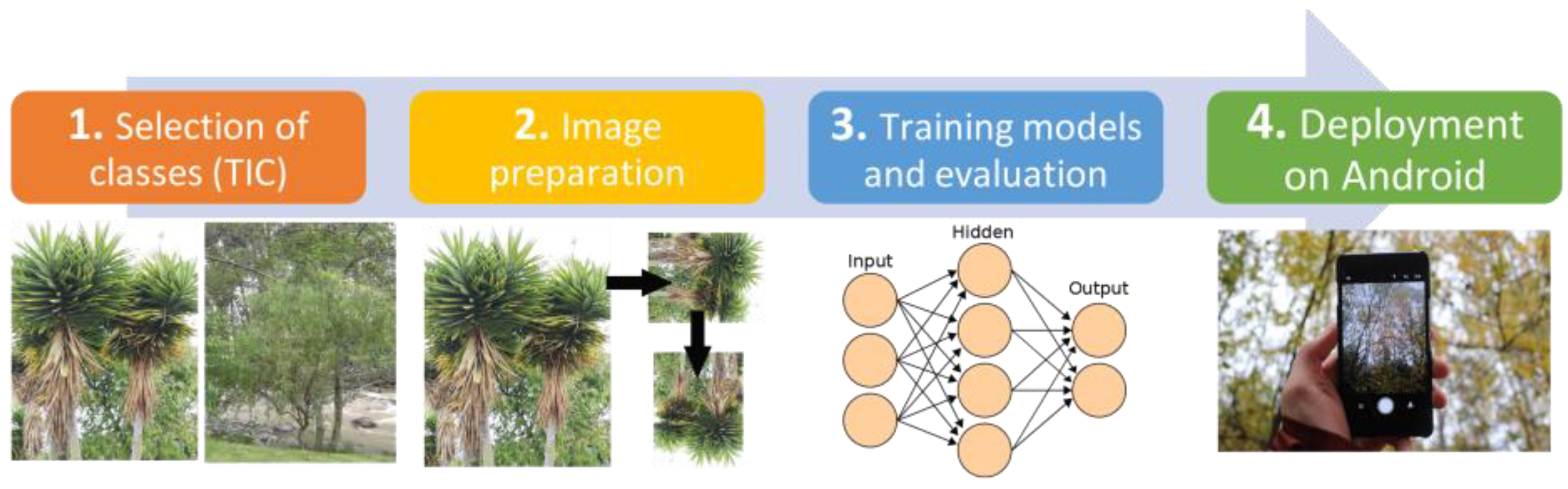

2.2. Methodology

2.2.1. Class Selection

2.2.2. Image Selection and Preparation

2.2.3. Training and Testing Models

2.2.4. Deployment of the Model in Android Application

3. Results

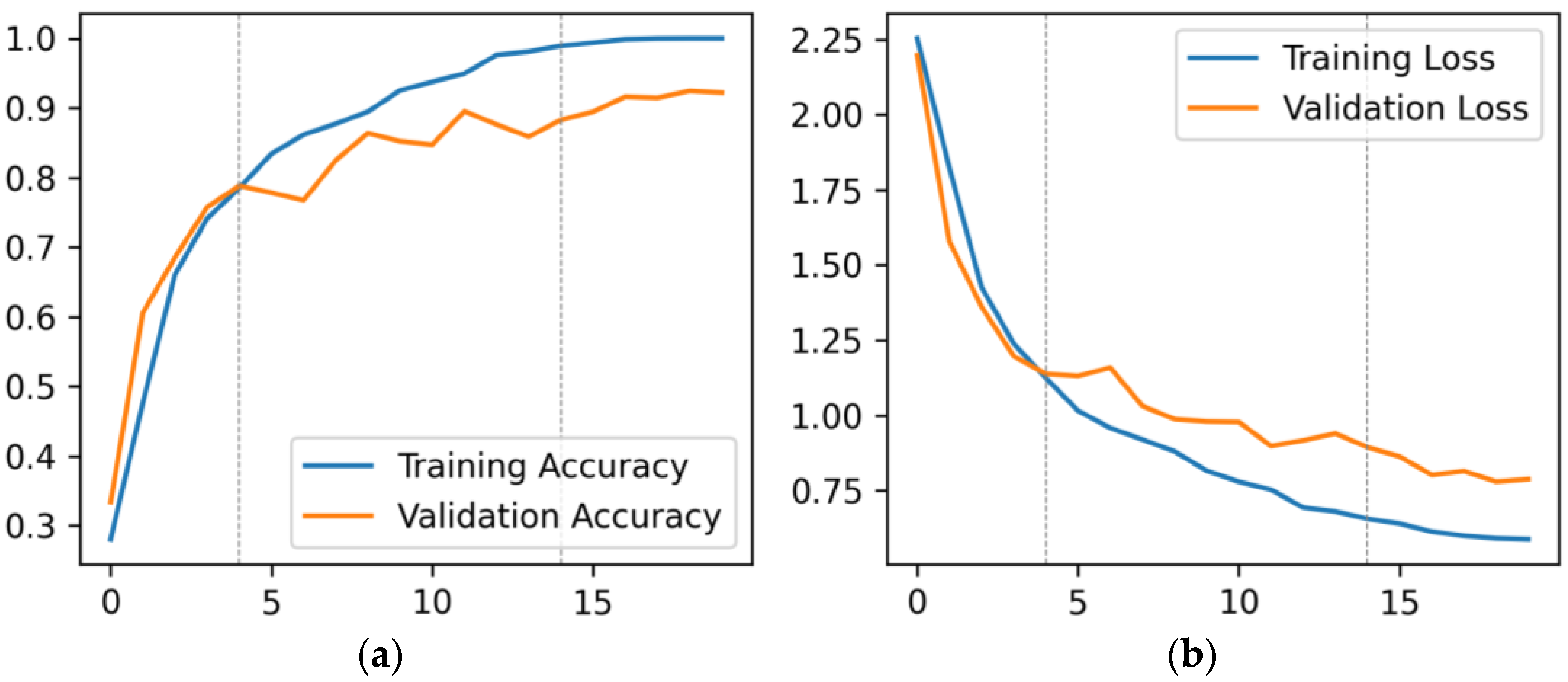

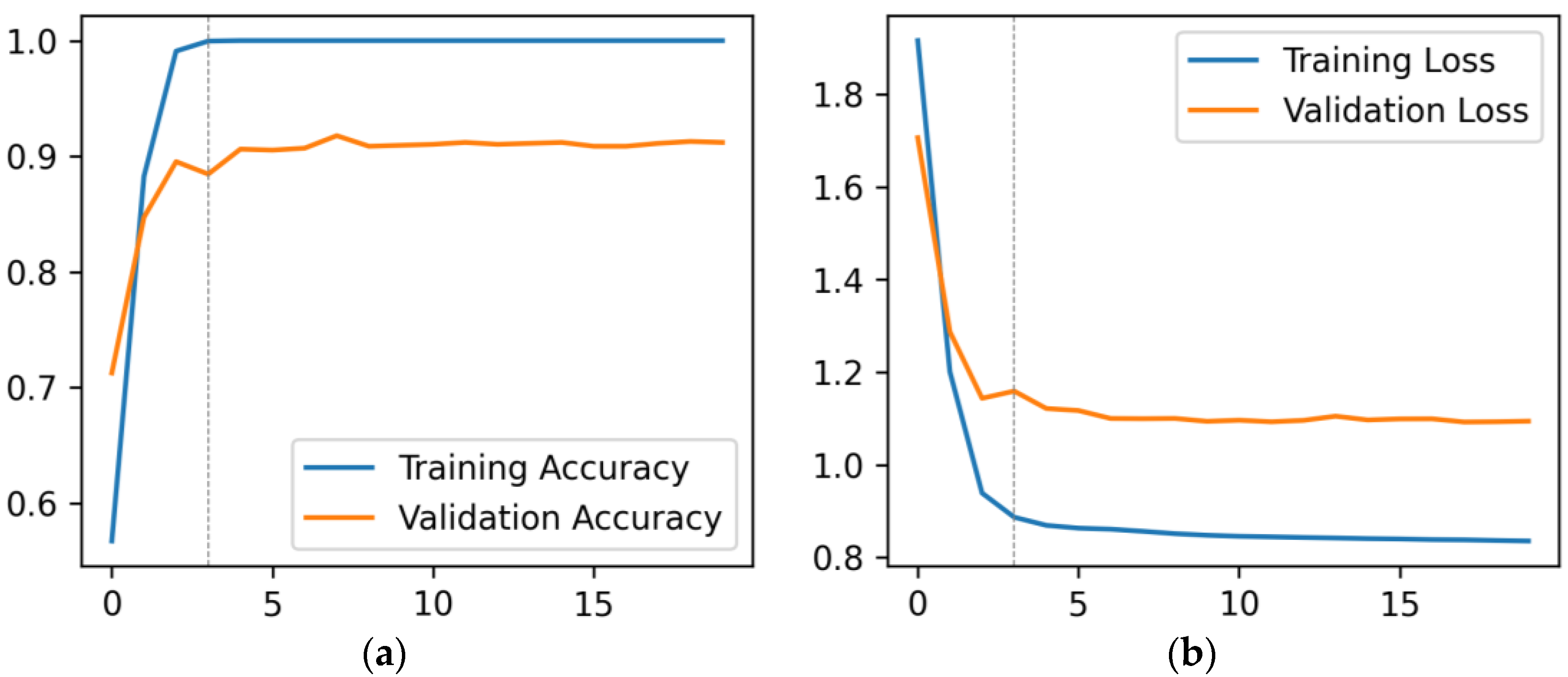

3.1. Learning Curves of the Models

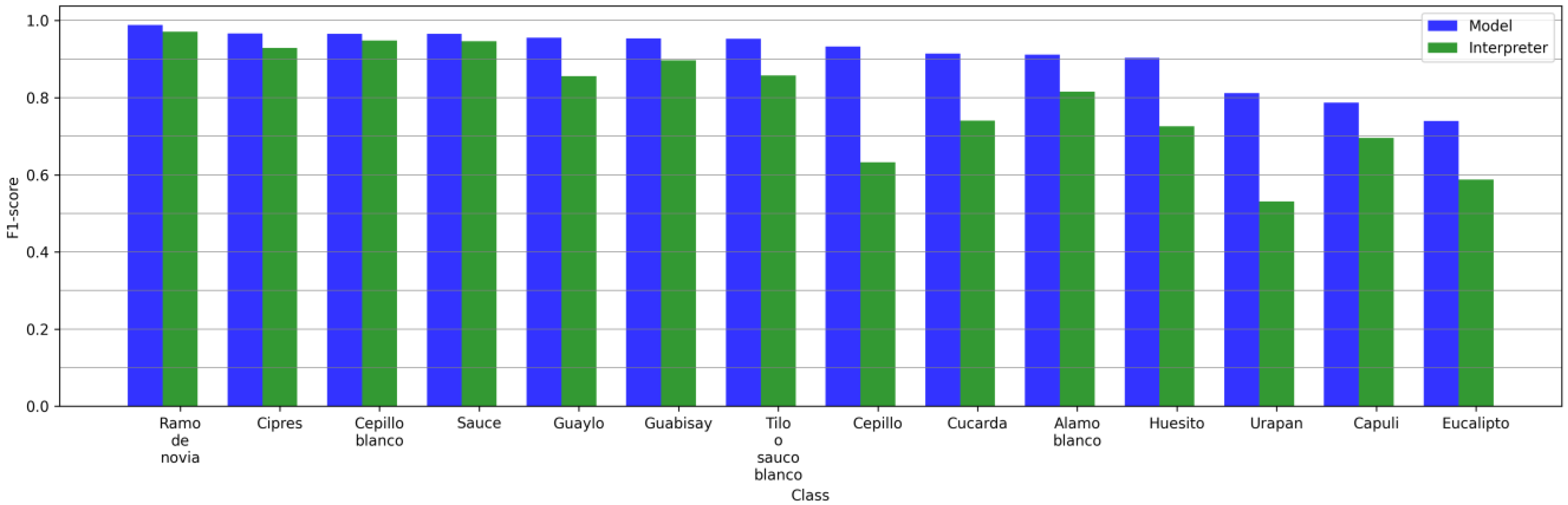

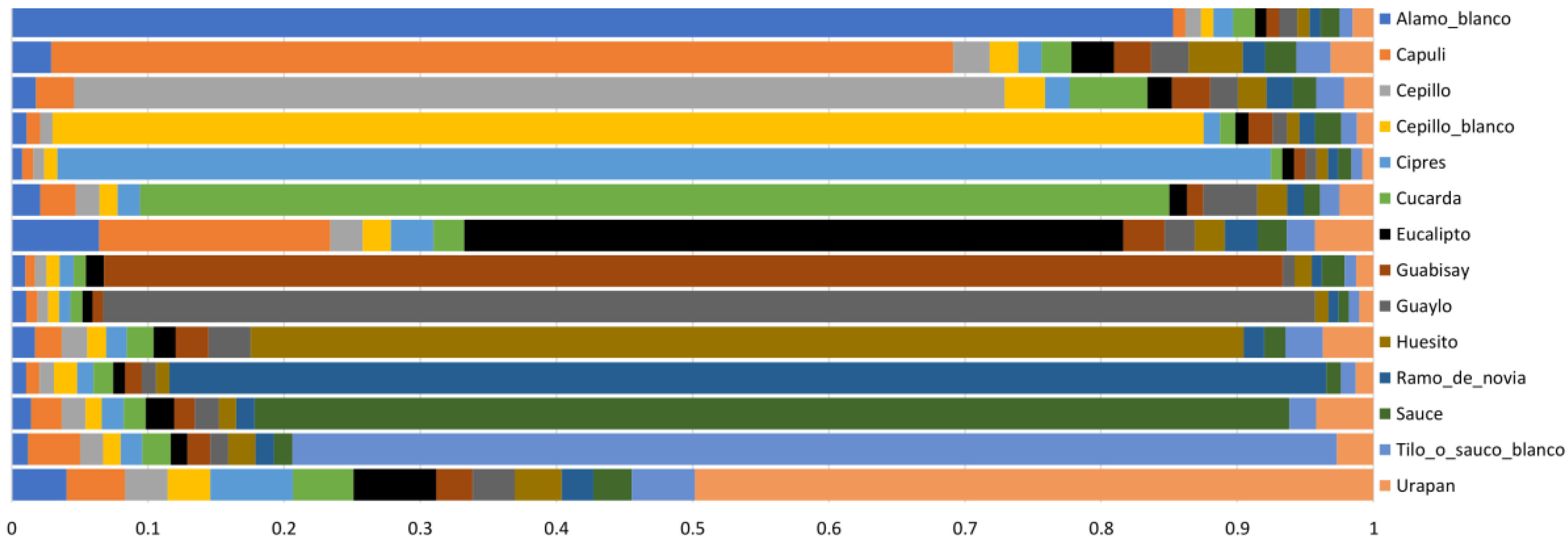

3.2. Class Accuracy with the Best Model

3.3. Model Deployment in Android Devices

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Salam, A. Internet of Things for Sustainable Forestry. In Internet of Things; Springer: Cham, Switzerland, 2020; pp. 243–271. ISBN 9783030352905.

2. Solomou, A.D.; Topalidou, E.T.; Germani, R.; Argiri, A.; Karetsos, G. Importance, Utilization and Health of Urban Forests: A Review. Not. Bot. Horti Agrobot. Cluj-Napoca 2019, 47, 10–16.

3. Pacheco, D.; Ávila, L. Inventario de Parques y Jardines de La Ciudad de Cuenca Con UAV y Smartphones. In Proceedings of the XVI Conferencia de Sistemas de Información Geográfica, Cuenca, Spain, 27–29 September 2017; pp. 173–179.

4. Pacheco, D. Drones En Espacios Urbanos: Caso de Estudio En Parques, Jardines y Patrimonio. Estoa 2017, 11, 159–168.

5. Das, S.; Sun, Q.C.; Zhou, H. GeoAI to Implement an Individual Tree Inventory: Framework and Application of Heat Mitigation. Urban For. Urban Green. 2022, 74, 127634.

6. Branson, S.; Wegner, J.D.; Hall, D.; Lang, N.; Schindler, K.; Perona, P. From Google Maps to a Fine-Grained Catalog of Street Trees. ISPRS J. Photogramm. Remote Sens. 2018, 135, 13–30.

7. Wäldchen, J.; Mäder, P. Plant Species Identification Using Computer Vision Techniques: A Systematic Literature Review. Arch. Comput. Methods Eng. 2018, 25, 507–543.

8. Tree Inventory Project | Portland. Available online: https://www.portland.gov/trees/get-involved/treeinventory (accessed on 11 November 2022).

9. Volunteers Count Every Street Tree in New York City | US Forest Service. Available online: https://www.fs.usda.gov/features/volunteers-count-every-street-tree-new-york-city-0 (accessed on 11 November 2022).

10. Ávila Pozo, L.A. Implementación de un Sistema de Inventario Forestal de Parques Urbanos en la Ciudad de Cuenca. Universidad-Verdad 2017, 73, 79–89.

11. Nielsen, A.B.; Östberg, J.; Delshammar, T. Review of Urban Tree Inventory Methods Used to Collect Data at Single-Tree Level. Arboric. Urban For. 2014, 40, 96–111.

12. Wäldchen, J.; Mäder, P. Machine Learning for Image Based Species Identification. Methods Ecol. Evol. 2018, 9, 2216–2225.

13. Goyal, N.; Kumar, N.; Gupta, K. Lower-Dimensional Intrinsic Structural Representation of Leaf Images and Plant Recognition. Signal Image Video Process. 2022, 16, 203–210.

14. Azlah, M.A.F.; Chua, L.S.; Rahmad, F.R.; Abdullah, F.I.; Alwi, S.R.W. Review on Techniques for Plant Leaf Classification and Recognition. Computers 2019, 8, 77.

15. Kaya, A.; Keceli, A.S.; Catal, C.; Yalic, H.Y.; Temucin, H.; Tekinerdogan, B. Analysis of Transfer Learning for Deep Neural Network Based Plant Classification Models. Comput. Electron. Agric. 2019, 158, 20–29.

16. Rodríguez-Puerta, F.; Barrera, C.; García, B.; Pérez-Rodríguez, F.; García-Pedrero, A.M. Mapping Tree Canopy in Urban Environments Using Point Clouds from Airborne Laser Scanning and Street Level Imagery. Sensors 2022, 22, 3269.

17. Xia, X.; Xu, C.; Nan, B. Inception-v3 for Flower Classification. In Proceedings of the 2nd International Conference on Image, Vision and Computing (ICIVC 2017), Chengdu, China, 2–4 June 2017; pp. 783–787.

18. Leena Rani, A.; Devika, G.; Vinutha, H.R.; Karegowda, A.G.; Vidya, S.; Bhat, S. Identification of Medicinal Leaves Using State of Art Deep Learning Techniques. In Proceedings of the 2022 IEEE International Conference on Distributed Computing and Electrical Circuits and Electronics (ICDCECE 2022), Ballari, India, 23–24 April 2022; pp. 11–15.

19. Carpentier, M.; Giguere, P.; Gaudreault, J. Tree Species Identification from Bark Images Using Convolutional Neural Networks. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 1075–1081.

20. Muñoz Villalobos, I.A.; Bolt, A. Diseño y Desarrollo de Aplicación Móvil Para La Clasificación de Flora Nativa Chilena Utilizando Redes Neuronales Convolucionales. AtoZ Novas Práticas Inf. Conhecimento 2022, 11, 1–13.

21. Adedoja, A.; Owolawi, P.A.; Mapayi, T. Deep Learning Based on NASNet for Plant Disease Recognition Using Leave Images. In Proceedings of the 2nd International Conference on Advances in Big Data, Computing and Data Communication Systems (icABCD 2019), Winterton, South Africa, 5–6 August 2019.

22. Zhang, R.; Zhu, Y.; Ge, Z.; Mu, H.; Qi, D.; Ni, H. Transfer Learning for Leaf Small Dataset Using Improved ResNet50 Network with Mixed Activation Functions. Forests 2022, 13, 2072.

23. Vilasini, M.; Ramamoorthy, P. CNN Approaches for Classification of Indian Leaf Species Using Smartphones. Comput. Mater. Contin. 2020, 62, 1445–1472.

24. Wang, Y.; Zhang, W.; Gao, R.; Jin, Z.; Wang, X. Recent Advances in the Application of Deep Learning Methods to Forestry. Wood Sci. Technol. 2021, 55, 1171–1202.

25. Cetin, Z.; Yastikli, N. The Use of Machine Learning Algorithms in Urban Tree Species Classification. ISPRS Int. J. Geo-Inf. 2022, 11, 226.

26. Lasseck, M. Image-Based Plant Species Identification with Deep Convolutional Neural Networks. In CEUR Workshop Proceedings, Bloomington, IN, USA, 20–21 January 2017; Volume 1866.

27. Liu, J.; Wang, X.; Wang, T. Classification of Tree Species and Stock Volume Estimation in Ground Forest Images Using Deep Learning. Comput. Electron. Agric. 2019, 166, 105012.

28. Gajjar, R.; Gajjar, N.; Thakor, V.J.; Patel, N.P.; Ruparelia, S. Real-Time Detection and Identification of Plant Leaf Diseases Using Convolutional Neural Networks on an Embedded Platform. Vis. Comput. 2022, 38, 2923–2938.

29. Gogul, I.; Kumar, V.S. Flower Species Recognition System Using Convolution Neural Networks and Transfer Learning. In Proceedings of the Fourth International Conference on Signal Processing, Communication and Networking (ICSCN 2017), Chennai, India, 16–18 March 2017; pp. 1–6.

30. Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in Vegetation Remote Sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

31. Pang, B.; Nijkamp, E.; Wu, Y.N. Deep Learning With TensorFlow: A Review. J. Educ. Behav. Stat. 2020, 45, 227–248.

32. Kim, T.K.; Kim, S.; Won, M.; Lim, J.H.; Yoon, S.; Jang, K.; Lee, K.H.; Park, Y.D.; Kim, H.S. Utilizing Machine Learning for Detecting Flowering in Mid-Range Digital Repeat Photography. Ecol. Modell. 2021, 440, 109419.

33. Figueroa-Mata, G.; Mata-Montero, E. Using a Convolutional Siamese Network for Image-Based Plant Species Identification with Small Datasets. Biomimetics 2020, 5, 8.

34. Paper, D. Simple Transfer Learning with TensorFlow Hub. In State-of-the-Art Deep Learning Models in TensorFlow; Apress: Berkeley, CA, USA, 2021; pp. 153–169.

35. Creador de Modelos TensorFlow Lite. Available online: https://www.tensorflow.org/lite/models/modify/model_maker (accessed on 4 August 2022).

36. Shorten, C.; Khoshgoftaar, T.M. A Survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

37. TensorFlow Hub. Available online: https://www.tensorflow.org/hub (accessed on 18 January 2023).

38. TensorFlow Lite Model Maker. Available online: https://www.tensorflow.org/lite/models/modify/model_maker (accessed on 18 January 2023).

39. Reda, M.; Suwwan, R.; Alkafri, S.; Rashed, Y.; Shanableh, T. AgroAId: A Mobile App System for Visual Classification of Plant Species and Diseases Using Deep Learning and TensorFlow Lite. Informatics 2022, 9, 55.

40. Kumar, A.; Singh, S.B.; Satapathy, S.C.; Rout, M. MOSQUITO-NET: A Deep Learning Based CADx System for Malaria Diagnosis along with Model Interpretation Using GradCam and Class Activation Maps. Expert Syst. 2022, 39, e12695.

41. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-Cam: Why Did You Say That? Visual Explanations from Deep Networks via Gradient-Based Localization. Rev. Hosp. Clínicas 2016, 17, 331–336.

42. Minga, D.; Verdugo, A. Árboles y Arbustos de Los Ríos de Cuenca; Universidad del Azuay: Cuenca, Ecuador, 2016; ISBN 978-9978-325-42-1.

43. Populus alba in Flora of China @ efloras.org. Available online: http://www.efloras.org/florataxon.aspx?flora_id=2&taxon_id=200005643 (accessed on 10 May 2022).

44. Sánchez, J. Flora Ornamental Española. Available online: http://www.arbolesornamentales.es/ (accessed on 15 October 2022).

45. Mahecha, G.; Ovalle, A.; Camelo, D.; Rozo, A.; Barrero, D. Vegetación Del Territorio CAR, 450 Especies de Sus Llanuras y Montañas; Corporación Autónoma Regional de Cundinamarca (Bogotá): Bogotá, Colombia, 2004; ISBN 958-8188-06-7.

46. Guillot, D.; Van der Meer, P. El Género Yucca L. En España; Jolube: Jaca, Spain, 2009; Volume 2, ISBN 978-84-937291-8-9.

47. Tilo (Sambucus canadensis) · iNaturalist Ecuador. Available online: https://ecuador.inaturalist.org/taxa/84300-Sambucus-canadensis (accessed on 4 January 2023).

48. Fresno (Fraxinus uhdei) · iNaturalist Ecuador. Available online: https://ecuador.inaturalist.org/taxa/134212-Fraxinus-uhdei (accessed on 4 January 2023).

49. ResNet V2 101 | TensorFlow Hub. Available online: https://tfhub.dev/google/imagenet/resnet_v2_101/feature_vector/5 (accessed on 6 February 2023).

50. Ab Wahab, M.N.; Nazir, A.; Ren, A.T.Z.; Noor, M.H.M.; Akbar, M.F.; Mohamed, A.S.A. EfficientNet-Lite and Hybrid CNN-KNN Implementation for Facial Expression Recognition on Raspberry Pi. IEEE Access 2021, 9, 134065–134080.

51. Mezgec, S.; Seljak, B.K. NutriNet: A Deep Learning Food and Drink Image Recognition System for Dietary Assessment. Nutrients 2017, 9, 657.

52. Object Detection with TensorFlow Lite Model Maker. Available online: https://www.tensorflow.org/lite/models/modify/model_maker/object_detection (accessed on 6 February 2023).

53. Zheng, H.; Sherazi, S.W.A.; Son, S.H.; Lee, J.Y. A Deep Convolutional Neural Network-based Multi-class Image Classification for Automatic Wafer Map Failure Recognition in Semiconductor Manufacturing. Appl. Sci. 2021, 11, 9769.

54. sklearn.metrics.cohen_kappa_score: scikit-learn 1.2.1 Documentation. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.metrics.cohen_kappa_score.html (accessed on 2 January 2023).

55. Recognize Flowers with TensorFlow Lite on Android. Available online: https://codelabs.developers.google.com/codelabs/recognize-flowers-with-tensorflow-on-android#0 (accessed on 6 September 2022).

56. Shah, V.; Sajnani, N. Multi-Class Image Classification Using CNN and TFLite. Int. J. Res. Eng. Sci. Manag. 2020, 3, 65–68.

57. TensorFlow Lite Inference. Available online: https://www.tensorflow.org/lite/guide/inference (accessed on 16 February 2023).

58. Homan, D.; du Preez, J.A. Automated Feature-Specific Tree Species Identification from Natural Images Using Deep Semi-Supervised Learning. Ecol. Inform. 2021, 66, 101475.

59. Natesan, S.; Armenakis, C.; Vepakomma, U. ResNet-Based Tree Species Classification Using UAV Images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. (ISPRS Arch.) 2019, 42, 475–481.

60. Dixit, A.; Nain Chi, Y. Classification and Recognition of Urban Tree Defects in a Small Dataset Using Convolutional Neural Network, ResNet-50 Architecture, and Data Augmentation. J. For. 2021, 8, 61–70.

61. Zhang, C.; Xia, K.; Feng, H.; Yang, Y.; Du, X. Tree Species Classification Using Deep Learning and RGB Optical Images Obtained by an Unmanned Aerial Vehicle. J. For. Res. 2021, 32, 1879–1888.

62. Chen, L.; Li, S.; Bai, Q.; Yang, J.; Jiang, S.; Miao, Y. Review of Image Classification Algorithms Based on Convolutional Neural Networks. Remote Sens. 2021, 13, 4712.

63. Suwais, K.; Alheeti, K.; Al_Dosary, D. A Review on Classification Methods for Plants Leaves Recognition. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 92–100.

64. Shaji, A.P.; Hemalatha, S. Data Augmentation for Improving Rice Leaf Disease Classification on Residual Network Architecture. In Proceedings of the 2nd International Conference on Advances in Computing, Communication and Applied Informatics (ACCAI 2022), Chennai, India, 28–29 January 2022.

65. Mikołajczyk, A.; Grochowski, M. Data Augmentation for Improving Deep Learning in Image Classification Problem. In Proceedings of the 2018 International Interdisciplinary PhD Workshop (IIPhDW 2018), Swinoujscie, Poland, 9–12 May 2018; pp. 117–122.

66. Barrientos, J.L. Leaf Recognition with Deep Learning and Keras Using GPU Computing; Engineering School, University Autonomous of Barcelona: Barcelona, Spain, 2018.

67. TensorFlow Model Optimization | TensorFlow Lite. Available online: https://www.tensorflow.org/lite/performance/model_optimization (accessed on 22 February 2023).

| Common Name | Scientific Name | Description | Origin |
|---|---|---|---|
| Alamo blanco | Populus alba L. | Tree that reaches up to 15 m in height, with a straight trunk and greenish white outer bark that cracks over the years [43]. | I |
| Capuli | Prunus serotina Ehrh. | Tree that reaches 8 to 15 m in height and 30 to 50 cm in diameter at breast height (DBH). It has fissured outer bark, alternate branching, and a globose crown [42]. | N |
| Cepillo | Callistemon lanceolatus (Sm.) Sweet | Tree 2 to 7 m in height with dark brown fissured outer bark. It has elongated, linear leaves that are pubescent when young [42]. | I |
| Cepillo blanco | Melaleuca armillaris (Sol. ex Gaertn.) Sm. | Tree 2 to 6 m in height with a straight trunk and grayish brown fibrous outer bark. It has very thin, linear leaves with an acute apex [44]. | I |
| Cipres | Cupressus macrocarpa Hartw. | Tree that can reach 20 m in height and about 60 cm in diameter. It has a straight trunk and thin bark with longitudinal fissures. Its leaves are very small, scale-like, and arranged in opposite pairs [42]. | I |
| Cucarda | Hibiscus rosa-sinensis L. | Shrub 2 to 5 m in height with branched stems. It has simple leaves that are rounded at the base and elongated towards the apex. Its flowers occur in various colors, most commonly red or pink [42]. | I |
| Eucalipto | Eucalyptus globulus Labill. | Tree that can exceed 60 m in height. In some specimens, the outer bark is light brown with a skin-like appearance and peels off in strips, leaving gray or brownish spots on the inner bark [42]. | I |
| Guabisay | Podocarpus sprucei Parl. | Tree that grows up to 15 m in height in natural environments. It has a straight trunk and grayish brown fissured outer bark. Its leaves are simple, alternate, and linear, with a hard, pointed apex [42]. | N |
| Guaylo | Delostoma integrifolium D. Don | Tree that reaches up to 6 m in height with a cylindrical trunk and smooth bark. It has simple, opposite, elliptic to oblong-elliptic leaves with entire margins, and terminal inflorescences of a few clustered flowers [42]. | N |
| Huesito | Pittosporum undulatum Vent. | Tree 3 to 5 m in height with grayish brown outer bark of granular texture. It has a dense or semi-dense, light green crown of globose or ellipsoidal shape [45]. | I |
| Ramo de novia | Yucca gigantea Lem. | Tree 3 to 6 m in height that usually develops several stems when mature. Its trunk is swollen at the base, tapers towards the middle, and has rough grayish brown outer bark [46]. | I |
| Sauce | Salix humboldtiana | Tree that reaches 5 to 12 m in height and 50 cm in diameter. It has a tortuous trunk with cracked outer bark and a wide, irregular crown with alternate branching [42]. | I |
| Tilo o Sauco blanco | Sambucus mexicana C. Presl ex DC. | Deciduous shrub that reaches 3 m or more in height. Its leaves are arranged in opposite pairs [47]. | I |
| Urapan | Fraxinus excelsior L. | Tree that grows up to 35 m in height, with an irregular crown and deciduous foliage. It has opposite, pinnately compound leaves with finely serrated leaflets [48]. | I |

Origin: I, introduced; N, native.

| Class | Original Images | Rotation 90° | Rotation 180° | Rotation 270° | Rotated Images | % Images Rotated |
|---|---|---|---|---|---|---|
| Alamo blanco | 295 | 15 | 15 | 15 | 45 | 13% |
| Capuli | 340 | 0 | 0 | 0 | 0 | 0% |
| Cepillo | 340 | 0 | 0 | 0 | 0 | 0% |
| Cepillo blanco | 340 | 0 | 0 | 0 | 0 | 0% |
| Cipres | 271 | 23 | 23 | 23 | 69 | 20% |
| Cucarda | 322 | 18 | 6 | 6 | 6 | 5% |
| Eucalipto | 249 | 31 | 30 | 30 | 91 | 27% |
| Guabisay | 340 | 0 | 0 | 0 | 0 | 0% |
| Guaylo | 289 | 17 | 17 | 17 | 51 | 15% |
| Huesito | 208 | 44 | 44 | 44 | 132 | 39% |
| Ramo de novia | 172 | 56 | 56 | 56 | 168 | 49% |
| Sauce | 340 | 0 | 0 | 0 | 0 | 0% |
| Tilo o sauco blanco | 340 | 0 | 0 | 0 | 0 | 0% |
| Urapan | 340 | 0 | 0 | 0 | 0 | 0% |
| Total | 4186 | 202 | 187 | 185 | 574 | |
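For most classes the rotation counts follow a simple bookkeeping rule: each class is topped up to 340 images (the size of the largest classes), and the shortfall is spread as evenly as possible over the three angles. For example, Eucalipto needs 340 - 249 = 91 extra images, split as 31 + 30 + 30. The sketch below expresses that rule in plain Python. It is an assumption inferred from the table, not the authors' code: the helper names (`split_rotations`, `rotate`), giving any remainder to 90° first, and using a nested list of pixel values as a stand-in for an image are all hypothetical choices.

```python
from typing import Dict, List

# Assumption: every class is topped up to this size (the largest classes have 340 images).
TARGET_PER_CLASS = 340
ANGLES = (90, 180, 270)

# Original image counts per class, copied from the table.
ORIGINAL_COUNTS = {
    "Alamo blanco": 295, "Capuli": 340, "Cepillo": 340, "Cepillo blanco": 340,
    "Cipres": 271, "Cucarda": 322, "Eucalipto": 249, "Guabisay": 340,
    "Guaylo": 289, "Huesito": 208, "Ramo de novia": 172, "Sauce": 340,
    "Tilo o sauco blanco": 340, "Urapan": 340,
}


def split_rotations(n_original: int, target: int = TARGET_PER_CLASS) -> Dict[int, int]:
    """Hypothetical helper: spread the shortfall (target - n_original) as evenly
    as possible over the three angles, giving any remainder to the first angles."""
    shortfall = max(0, target - n_original)
    base, remainder = divmod(shortfall, len(ANGLES))
    return {angle: base + (1 if i < remainder else 0) for i, angle in enumerate(ANGLES)}


def rot90(grid: List[List[int]]) -> List[List[int]]:
    """Rotate a 2-D pixel grid 90 degrees counter-clockwise (same result as numpy.rot90 with k=1)."""
    return [list(row) for row in zip(*grid)][::-1]


def rotate(grid: List[List[int]], angle: int) -> List[List[int]]:
    """Rotate by a multiple of 90 degrees by repeating the quarter turn."""
    if angle % 90 != 0:
        raise ValueError("only multiples of 90 degrees are supported")
    for _ in range((angle // 90) % 4):
        grid = rot90(grid)
    return grid


if __name__ == "__main__":
    for name, n in ORIGINAL_COUNTS.items():
        plan = split_rotations(n)
        print(f"{name:20s} {n:4d} -> {plan} (+{sum(plan.values())})")
    total = sum(sum(split_rotations(n).values()) for n in ORIGINAL_COUNTS.values())
    print("Total rotated images:", total)
```

Under this assumption the class sizes all come out at 340 and the overall number of rotated images is 574, as in the table's total row. The table does not say which source images were chosen for rotation, so that step is deliberately left out.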

| Base Model | Full Training, Model Acc | Full Training, Model Kappa | Full Training, Interpreter Acc | Full Training, Interpreter Kappa | Top Layer Training, Model Acc | Top Layer Training, Model Kappa | Top Layer Training, Interpreter Acc | Top Layer Training, Interpreter Kappa |
|---|---|---|---|---|---|---|---|---|
| ResNet V2 101 | 0.912 | 0.905 | 0.801 | 0.785 | 0.750 | 0.731 | 0.678 | 0.653 |
| EfficientNet-Lite | 0.922 | 0.916 | 0.770 | 0.752 | 0.780 | 0.763 | 0.648 | 0.621 |
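The Kappa columns report Cohen's kappa, which discounts the agreement expected by chance: κ = (p_o - p_e) / (1 - p_e), where p_o is the overall accuracy and p_e = Σ_c (row_c × col_c) / N², with row_c and col_c the real and predicted totals of class c and N the number of test images. The reference list points to scikit-learn's `cohen_kappa_score` for this metric; the dependency-free sketch below computes the same unweighted statistic directly from a confusion matrix. The function name is illustrative, not taken from the paper.

```python
from typing import Sequence, Tuple


def accuracy_and_kappa(cm: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Overall accuracy and unweighted Cohen's kappa from a square confusion
    matrix whose rows are real classes and whose columns are predicted classes."""
    k = len(cm)
    n = sum(sum(row) for row in cm)
    p_o = sum(cm[i][i] for i in range(k)) / n  # observed agreement = accuracy
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    # Agreement expected by chance from the row and column marginals.
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    if p_e == 1:
        return p_o, float("nan")  # kappa is undefined when chance agreement is perfect
    return p_o, (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    toy = [[20, 5],
           [10, 15]]
    acc, kappa = accuracy_and_kappa(toy)
    print(f"accuracy={acc:.3f} kappa={kappa:.3f}")  # accuracy=0.700 kappa=0.400
```

In the two confusion matrices that follow, every row sums to 86 (1204 test images over 14 classes), so p_e is exactly 1/14. Applied to the first matrix, the function gives an accuracy of 1098/1204 ≈ 0.912 and κ ≈ 0.905; applied to the second, 964/1204 ≈ 0.801 and κ ≈ 0.785. These values coincide with the ResNet V2 101 full-training Model and Interpreter columns above.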

| Real / Predicted | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 82 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0.87 | 0.95 | 0.91 |
| 2 | 3 | 74 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 3 | 0 | 0 | 1 | 2 | 0.73 | 0.86 | 0.79 |
| 3 | 0 | 2 | 76 | 2 | 1 | 4 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0.99 | 0.88 | 0.93 |
| 4 | 0 | 0 | 0 | 84 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0.95 | 0.98 | 0.97 |
| 5 | 0 | 0 | 0 | 0 | 86 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.93 | 1.00 | 0.97 |
| 6 | 0 | 1 | 0 | 0 | 0 | 80 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 2 | 0.90 | 0.93 | 0.91 |
| 7 | 8 | 19 | 0 | 0 | 0 | 0 | 54 | 0 | 0 | 0 | 1 | 0 | 0 | 4 | 0.90 | 0.63 | 0.74 |
| 8 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 83 | 0 | 1 | 0 | 1 | 0 | 0 | 0.94 | 0.97 | 0.95 |
| 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 86 | 0 | 0 | 0 | 0 | 0 | 0.91 | 1.00 | 0.96 |
| 10 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 2 | 75 | 0 | 0 | 1 | 5 | 0.94 | 0.87 | 0.90 |
| 11 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 85 | 0 | 0 | 0 | 0.99 | 0.99 | 0.99 |
| 12 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 84 | 0 | 0 | 0.95 | 0.98 | 0.97 |
| 13 | 0 | 3 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 80 | 1 | 0.98 | 0.93 | 0.95 |
| 14 | 1 | 1 | 0 | 1 | 4 | 4 | 3 | 0 | 1 | 1 | 0 | 1 | 0 | 69 | 0.82 | 0.80 | 0.81 |
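The Precision, Recall and F1-Score columns follow the standard per-class definitions for a matrix with real classes in rows and predictions in columns: precision = TP / (column total), recall = TP / (row total), and F1 is their harmonic mean. A minimal sketch, assuming that layout; the function name and the convention of returning 0.0 when a denominator is zero are illustrative choices, not taken from the paper.

```python
from typing import List, Sequence, Tuple


def per_class_metrics(cm: Sequence[Sequence[int]]) -> List[Tuple[float, float, float]]:
    """(precision, recall, F1) for each class of a square confusion matrix with
    real classes in rows and predicted classes in columns."""
    k = len(cm)
    metrics = []
    for c in range(k):
        tp = cm[c][c]
        predicted_as_c = sum(cm[r][c] for r in range(k))  # column total
        actually_c = sum(cm[c])                            # row total
        precision = tp / predicted_as_c if predicted_as_c else 0.0
        recall = tp / actually_c if actually_c else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics.append((precision, recall, f1))
    return metrics


if __name__ == "__main__":
    toy = [[8, 2, 0],
           [1, 9, 0],
           [0, 0, 10]]
    for label, (p, r, f1) in enumerate(per_class_metrics(toy), start=1):
        print(f"class {label}: precision={p:.2f} recall={r:.2f} F1={f1:.2f}")
```

As a check against the matrix above: row 7 has 54 of its 86 real images on the diagonal and column 7 holds 60 predictions in total, so precision = 54/60 = 0.90, recall = 54/86 ≈ 0.63 and F1 ≈ 0.74, matching the reported values.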

| Real / Predicted | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 84 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0.70 | 0.98 | 0.82 |
| 2 | 6 | 64 | 0 | 0 | 0 | 1 | 11 | 1 | 3 | 0 | 0 | 0 | 0 | 0 | 0.65 | 0.74 | 0.70 |
| 3 | 4 | 5 | 43 | 4 | 2 | 13 | 4 | 4 | 2 | 0 | 2 | 0 | 0 | 3 | 0.86 | 0.50 | 0.63 |
| 4 | 0 | 0 | 0 | 83 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0.93 | 0.97 | 0.95 |
| 5 | 1 | 0 | 0 | 0 | 85 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.88 | 0.99 | 0.93 |
| 6 | 2 | 1 | 0 | 0 | 0 | 77 | 1 | 0 | 5 | 0 | 0 | 0 | 0 | 0 | 0.63 | 0.90 | 0.74 |
| 7 | 8 | 17 | 1 | 0 | 2 | 1 | 52 | 0 | 0 | 0 | 1 | 0 | 1 | 3 | 0.57 | 0.60 | 0.59 |
| 8 | 2 | 0 | 0 | 0 | 0 | 1 | 1 | 78 | 0 | 0 | 0 | 1 | 0 | 3 | 0.89 | 0.91 | 0.90 |
| 9 | 5 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 80 | 0 | 0 | 0 | 0 | 0 | 0.79 | 0.93 | 0.86 |
| 10 | 2 | 2 | 0 | 0 | 1 | 11 | 3 | 1 | 9 | 49 | 1 | 0 | 0 | 7 | 1.00 | 0.57 | 0.73 |
| 11 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 85 | 0 | 0 | 0 | 0.96 | 0.99 | 0.97 |
| 12 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 79 | 0 | 4 | 0.98 | 0.92 | 0.95 |
| 13 | 2 | 7 | 1 | 0 | 0 | 7 | 1 | 1 | 0 | 0 | 0 | 0 | 66 | 1 | 0.97 | 0.77 | 0.86 |
| 14 | 4 | 1 | 5 | 1 | 6 | 10 | 18 | 0 | 2 | 0 | 0 | 0 | 0 | 39 | 0.64 | 0.45 | 0.53 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pacheco-Prado, D.; Bravo-López, E.; Ruiz, L.Á. Tree Species Identification in Urban Environments Using TensorFlow Lite and a Transfer Learning Approach. Forests 2023, 14, 1050. https://doi.org/10.3390/f14051050
