1. Introduction

Particleboard, also known as bagasse board, is currently one of the three main types of wood-based panel. It is made from wood or other lignocellulosic materials that are mixed with adhesive and bonded under heat and pressure. Its raw materials come from a wide range of sources, the board has high strength, and the market for particleboard products is broad [1].

At present, there are many kinds of automatic production equipment for particleboard, among which the continuous flat press is the leading technology. It has the advantages of high automation and outstanding production capacity. However, due to the particularity of the raw material source of particleboard, there are some problems in the production line of the continuous flat press. Due to factors such as uneven size and type of raw materials, machine errors in production equipment, and workshop environmental interference, individual products may have surface defects. These defects can impact the subsequent veneer process, the appearance and quality of the finished board, and make secondary processing more challenging. With the refinement and high-end development of the furniture manufacturing industry, the demand for high-quality particleboard is rising. Consequently, particleboard manufacturers pay more attention to product surface quality testing than ever before, making it one of the core measurement factors for board grading. Among the available quality testing methods, surface defect image classification is a critical component for improving the quality of particleboard products.

During the particleboard production process, surface quality inspection is a crucial step that greatly impacts the subsequent veneer and edge bonding processes. The influence of surface defects on the board quality can vary widely depending on factors such as the damage degree, the defect type, and the defect area [2]. Currently, the treatment schemes usually involve either leaving the defect untreated or directly cutting the defective parts of the board. The former approach often leads to problems such as blistering and layering of the board after veneering, thereby affecting the quality of the final product and subsequent processing. The latter approach can result in the wastage of raw materials and production resources, which is not conducive to the effective utilization of wood resources. Detecting and identifying surface defects on the board and implementing different treatment methods based on the detection and identification results can address the aforementioned issues.

With the development of industrial technology, non-destructive testing methods are emerging as a replacement for manual operations in detecting and identifying defects in industrial products. In the wood-based panel industry, researchers have been exploring defect detection technology, resulting in progress in non-destructive testing and identification methods. These methods include ultrasonic detection [3], X-ray detection [4], and machine vision detection [5]. Ultrasonic testing locates defects in the panel from the attenuation of ultrasonic waves as they propagate, but it has drawbacks such as long detection time, the need for coupling agents, and dependence on operator experience. X-ray testing targets internal defects in the panel, using ray beam attenuation imaging to display the defect size and position. However, protective equipment is required due to the harmful effects of radiation, which limits its applicability in open production environments.

Machine vision technology emerged in the 1970s and 1980s, owing to the progress of digital image processing and computer pattern recognition technologies. In product surface defect detection, this technology acquires images of the product surface and then applies algorithms to produce results such as defect measurement, classification, and quality evaluation. It is capable of multi-mode detection, such as quantitative, qualitative, and semi-quantitative. This method is highly suitable for industrial production fields associated with panel defect detection due to its fast detection speed, flexible processing, high accuracy, and easy equipment configuration. However, the environment of an industrial production line is complex, and the requirements for surface defect detection differ between boards. Thus, surface defect detection and recognition technology based on machine vision still presents extensive research prospects.

Surface defect detection and recognition based on machine vision requires extracting the defect features to form a sample set, building a defect-type classifier, training the classifier, and classifying the defects according to their feature values. Currently, the main classifiers are the Bayesian Classifier, K-Nearest Neighbor Classifier, Decision Tree Classifier, Random Forest Classifier, Support Vector Machine Classifier, and Neural Network Classifier. Scholars have conducted much in-depth research and innovation on these issues in recent years [6,7,8,9,10,11]. He, T and Liu, Y used the improved AP (Affinity Propagation) clustering algorithm and SOM (Self-Organizing Maps) neural network to classify wood defects. The research achieved recall rates of 85.5% and 83.6% and precision rates of 90.3% and 92.7% [6]. Zhang, H improved the classification speed by using compressed Random Forest to classify the defects on the surface of solid wood flooring, achieving a single-prediction time of 3.44 ms [7]. Liu, S classified wood defects using a BP (Back Propagation) Neural Network and Support Vector Machine [9]. The experimental results showed that the Support Vector Machine was the more accurate classifier, with a recognition accuracy of 92% for live knots, dead knots, and cracks. To address the problem of high labor cost and low efficiency in wood defect detection, Ding, F and Zhuang, Z used a color charge-coupled device (CCD) camera to collect surface images of red wood and Pinus sylvestris [11]. They applied the transfer learning method to a Single-Shot Multi-Box Detector (SSD) and proposed a target detection algorithm. The average accuracy of detecting three types of defects, including live knots and dead knots, reached 96.1%.

Based on recent research, it is evident that Neural Networks perform particularly well in related problems and yield satisfactory results for defect detection. Although many scholars have improved and innovated Neural Networks, current algorithms such as Convolutional Neural Networks and BP Neural Networks used in defect classification have their own limitations. In particular, trained network models may recognize targets as other objects when interference such as translation, rotation, or scale transformation occurs. Moreover, many Neural Network algorithms are greatly affected by the number of training samples, requiring a large number of training samples to achieve better recognition results, which involves significant computational cost and a long training time.

In this article, we address the challenge of identifying surface defects in particleboards, which is limited by the availability of defect samples. To overcome this challenge, we propose using the Capsule Network model, which has been shown to perform well in small sample classification problems. We improve the model to achieve a better recognition effect.

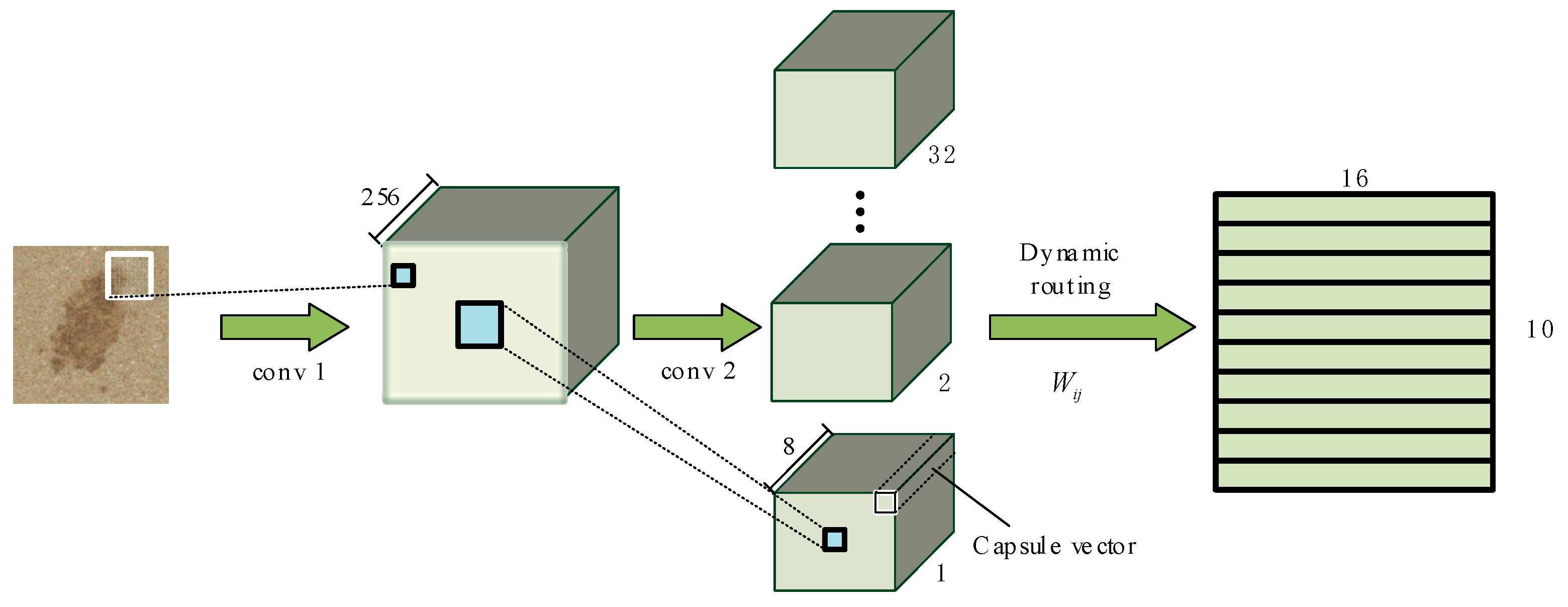

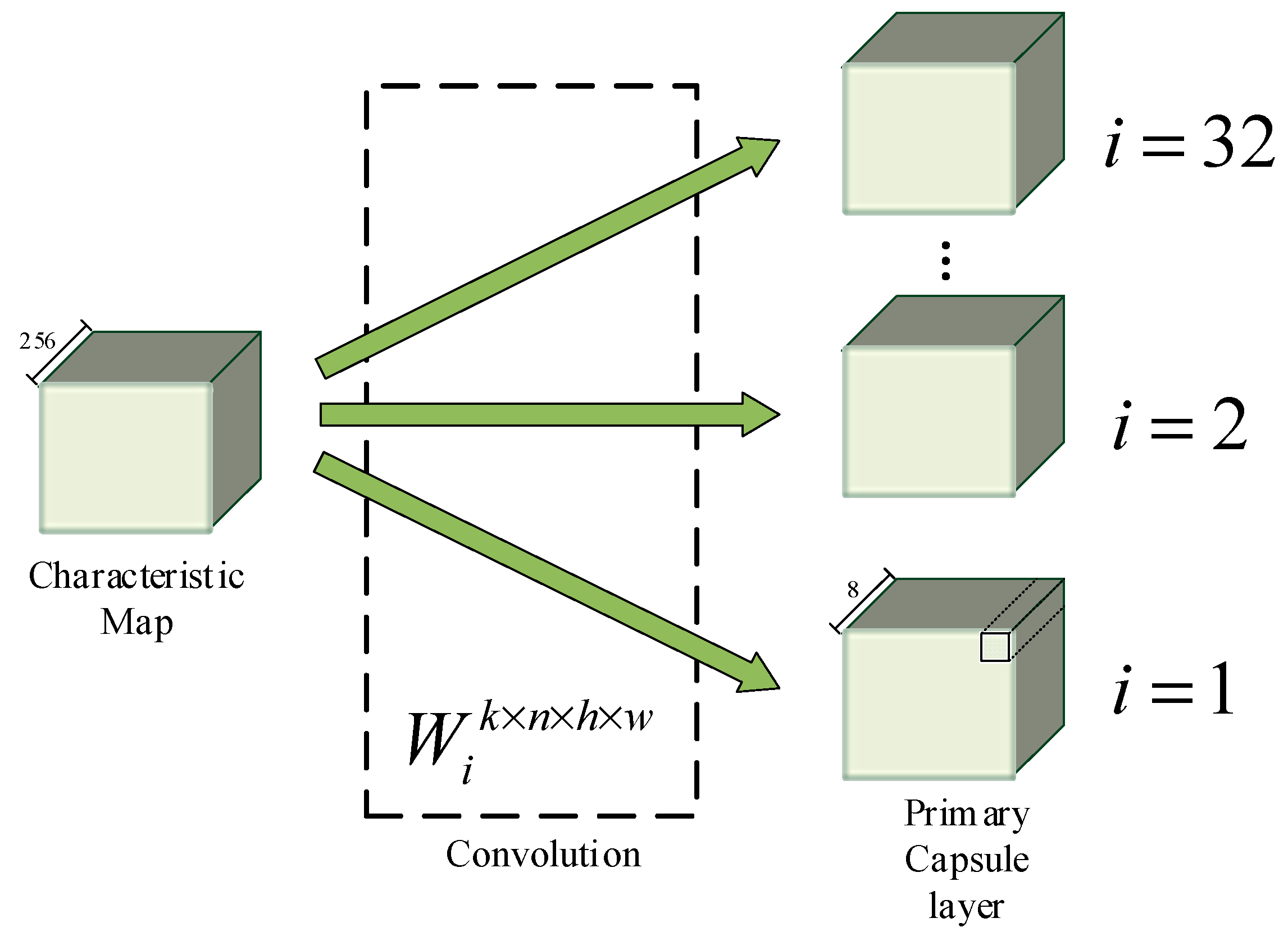

The Capsule Network (CapsNet) replaces the neurons of a Convolutional Neural Network with capsules to preserve detailed pose information and spatial hierarchical relationships between objects [12,13,14]. A capsule, in this network, is a small group of neurons that can identify a specific object in a defined region. The output of a capsule is a vector whose length represents the probability that the object exists and whose direction records the object’s pose parameters. If the object’s position changes slightly, for example through rotation or movement, the capsule outputs a vector with the same length but a slightly different direction.
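The length-as-probability property described above can be illustrated with the squashing nonlinearity commonly used in capsule networks. The following is a minimal NumPy sketch, not the implementation used in this study: the function names `squash` and `rotate2d`, the two-dimensional capsule, and the input values are illustrative assumptions.

```python
import numpy as np

def squash(s, axis=-1, eps=1e-9):
    """Rescale a vector so its length lies in [0, 1) while keeping its direction."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    norm = np.sqrt(sq_norm + eps)  # eps avoids division by zero for null vectors
    return (sq_norm / (1.0 + sq_norm)) * (s / norm)

def rotate2d(v, degrees):
    """Rotate a 2-D vector; stands in for a small pose change of the object."""
    theta = np.deg2rad(degrees)
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta),  np.cos(theta)]])
    return rot @ v

raw = np.array([3.0, 4.0])            # hypothetical pre-activation capsule output
v = squash(raw)                       # capsule output for the original pose
v_rot = squash(rotate2d(raw, 10.0))   # capsule output after a slight pose change

length = np.linalg.norm(v)            # interpreted as existence probability
length_rot = np.linalg.norm(v_rot)    # unchanged by the rotation
```

In this sketch the two output vectors have the same length, so the existence probability is unchanged, while their directions differ by the applied rotation.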

In recent years, Capsule Networks have been increasingly used in image classification and recognition and have shown promising results [15,16,17,18,19]. Shakhnoza, M applied a Capsule Network to fire smoke image recognition, which demonstrated high recognition accuracy and robustness for outdoor camera image classification in the presence of smoke and fire [15]. Wang, W combined a Convolutional Neural Network and Capsule Network to classify gastrointestinal endoscopic images with a classification accuracy of over 85% for multiple datasets [16]. Sreekala, K developed a deep transfer learning model for face recognition using the Capsule Network and utilized a Grey Wolf Optimization (GWO) and Stacked Autoencoder (SAE) model to classify faces in images [18].

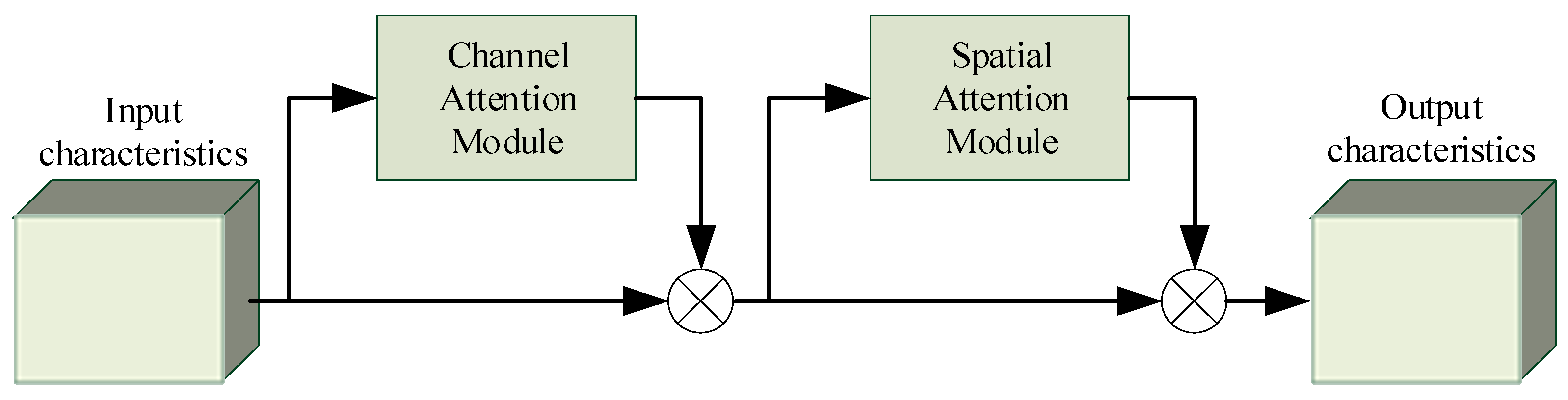

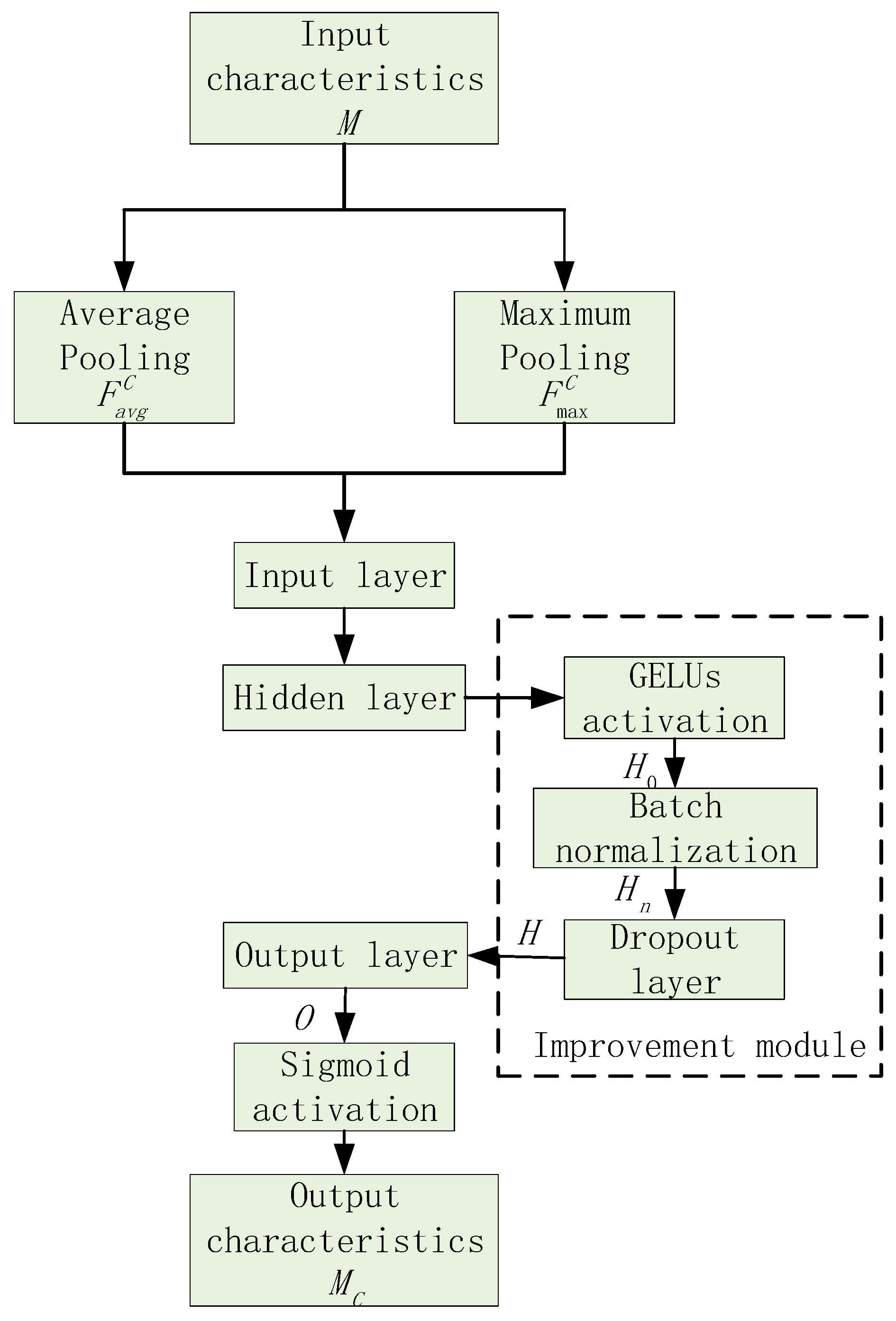

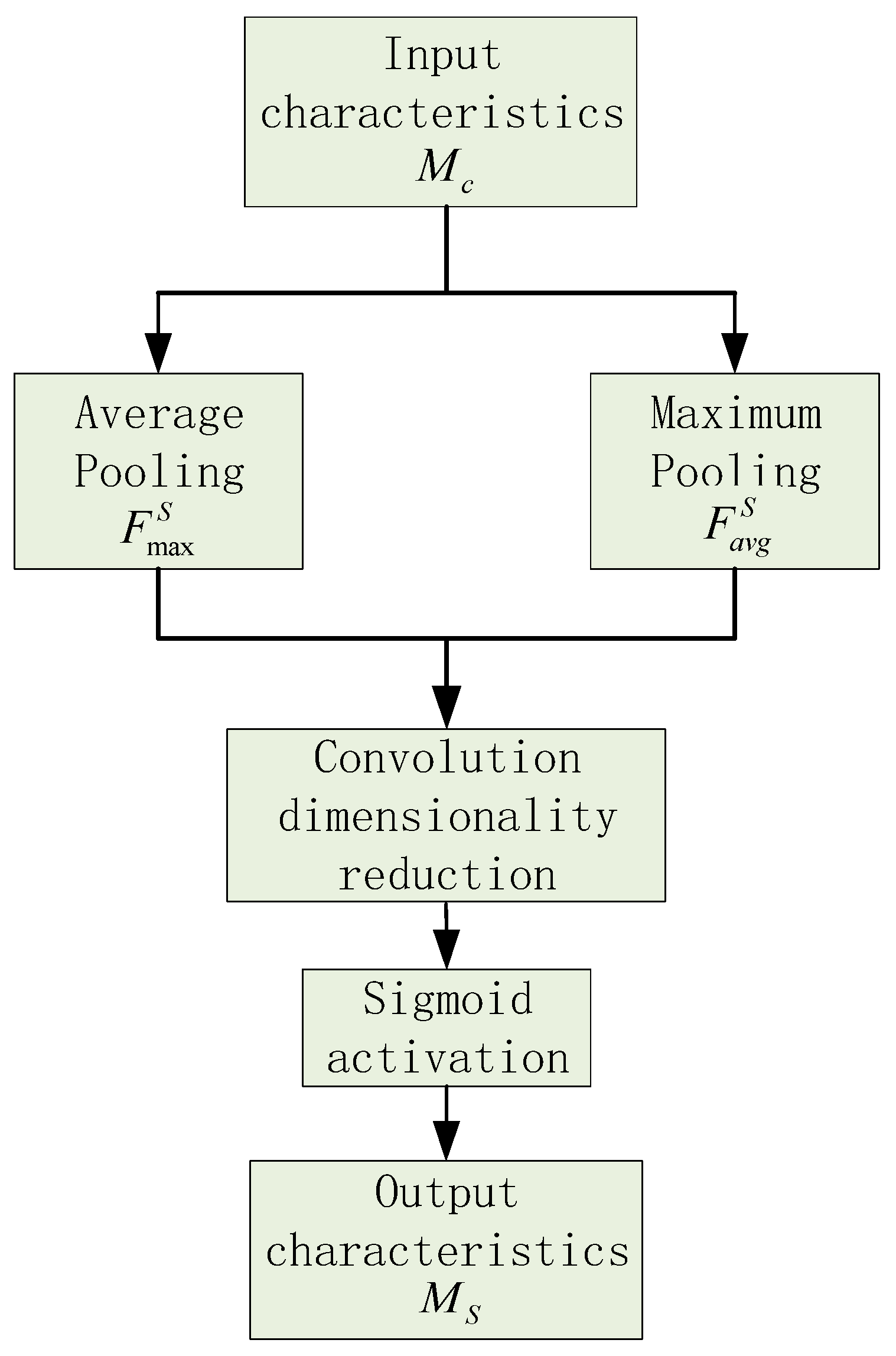

In our study of particleboard surface defect recognition, the surface texture of the board strongly interferes with recognition of the defect type. To address this, we introduce an improved CBAM attention model into the Capsule Network. The CBAM (Convolutional Block Attention Module) model is a mixed channel and spatial attention model [20,21,22]. It generates attention maps along both the channel and spatial dimensions and multiplies them with the original input feature map to perform adaptive feature correction and obtain an optimized feature map. Applied to particleboard surface defect classification and recognition, the CBAM attention model strengthens important feature information and suppresses unnecessary features, which improves recognition accuracy while reducing the number of parameters and the computing power required.
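To make the channel and spatial attention steps concrete, the following NumPy sketch follows the standard CBAM layout, with channel attention applied first and spatial attention second. It does not reproduce the improved CBAM used in this study. The random weights, the channel reduction to 2 hidden units, the 7 × 7 spatial kernel, and all function names are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(feat, w1, w2):
    """feat: (C, H, W). A shared two-layer MLP scores avg- and max-pooled descriptors."""
    avg = feat.mean(axis=(1, 2))                      # (C,)
    mx = feat.max(axis=(1, 2))                        # (C,)
    mlp = lambda d: w2 @ np.maximum(w1 @ d, 0.0)      # ReLU hidden layer
    return sigmoid(mlp(avg) + mlp(mx))                # (C,) weights in (0, 1)

def conv2d_same(x, kernel):
    """Zero-padded cross-correlation: x (C_in, H, W), kernel (C_in, k, k) -> (H, W)."""
    _, h, w = x.shape
    k = kernel.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(xp[:, i:i + k, j:j + k] * kernel)
    return out

def spatial_attention(feat, kernel):
    """Pool across channels, then convolve the 2-channel map into one (H, W) mask."""
    avg = feat.mean(axis=0, keepdims=True)
    mx = feat.max(axis=0, keepdims=True)
    pooled = np.concatenate([avg, mx], axis=0)        # (2, H, W)
    return sigmoid(conv2d_same(pooled, kernel))       # (H, W) weights in (0, 1)

def cbam(feat, w1, w2, kernel):
    ca = channel_attention(feat, w1, w2)
    feat_c = feat * ca[:, None, None]                 # re-weight channels
    sa = spatial_attention(feat_c, kernel)
    return feat_c * sa[None, :, :], ca, sa            # re-weight spatial positions

rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 16, 16))               # hypothetical feature map
w1 = 0.1 * rng.standard_normal((2, 8))                # untrained placeholder weights
w2 = 0.1 * rng.standard_normal((8, 2))
kernel = 0.1 * rng.standard_normal((2, 7, 7))
refined, ca, sa = cbam(feat, w1, w2, kernel)
```

Because both attention maps lie between 0 and 1, each element of the refined feature map is a scaled-down copy of the input element. In a trained network the learned weights decide which channels and positions are suppressed, such as background texture.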

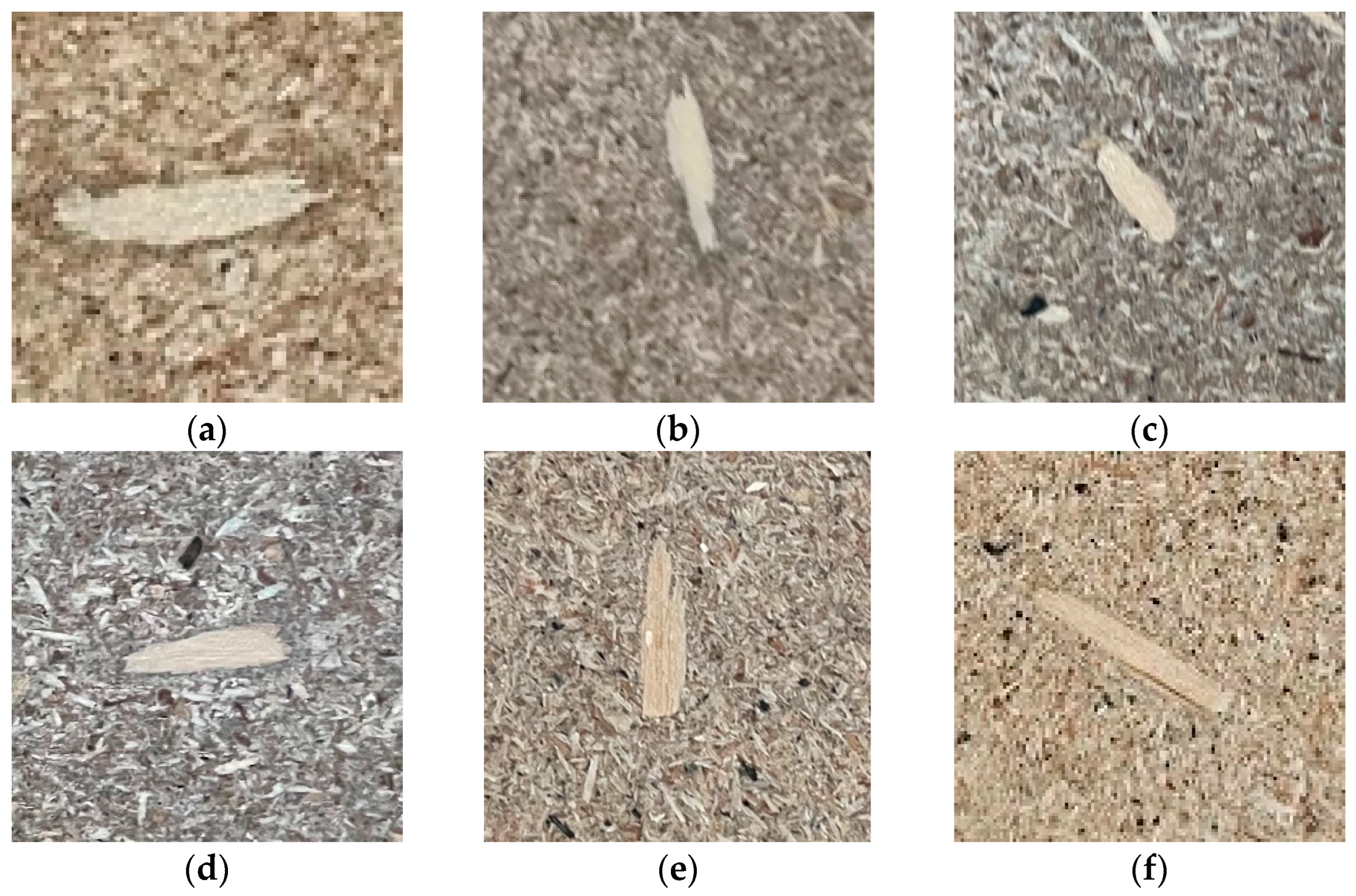

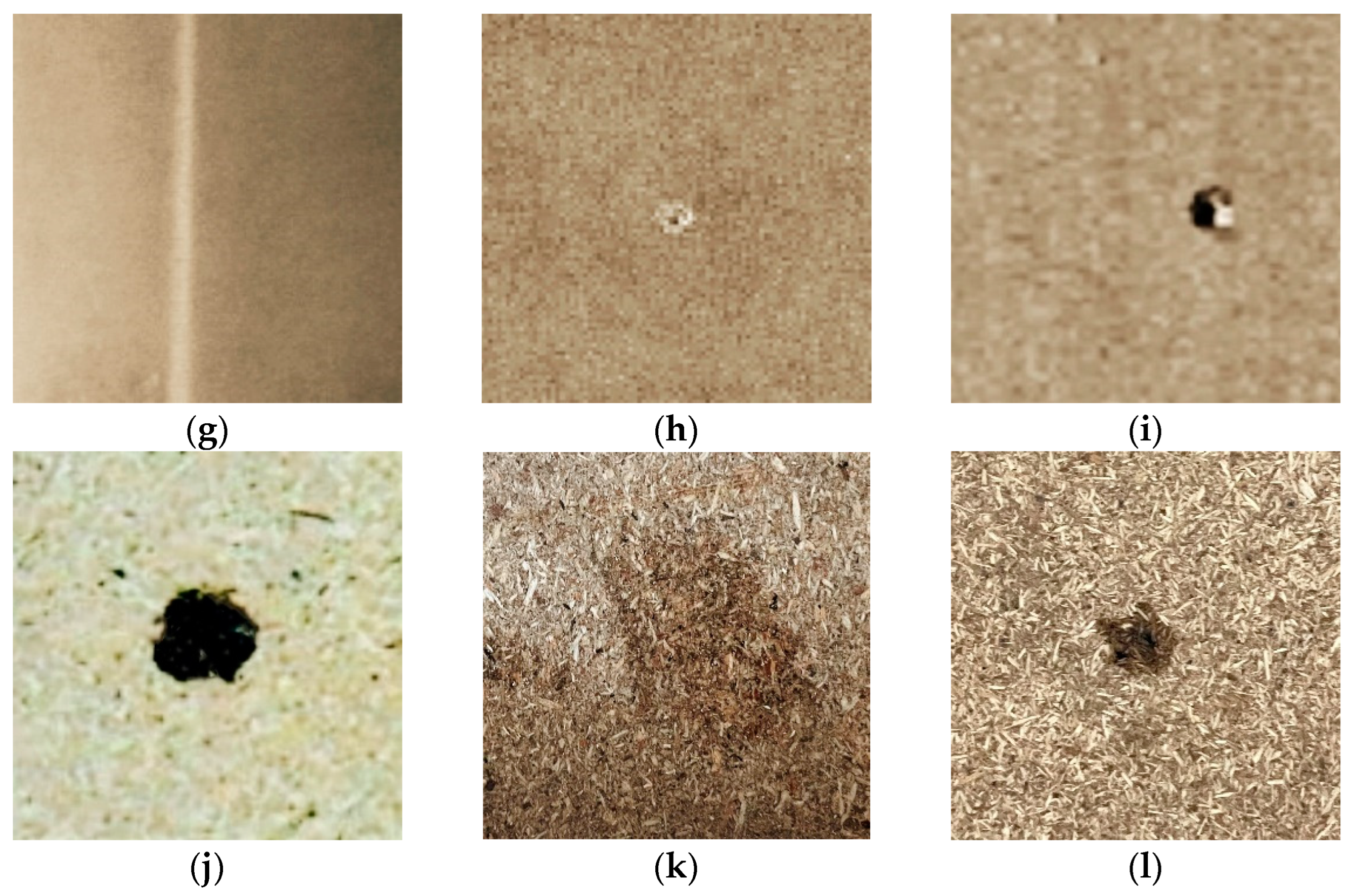

In the particleboard production line, surface defects must be detected, extracted, classified, and identified to assess the degree of damage to the board. Subsequently, the boards that do not meet surface quality requirements must be removed from production. To achieve this objective, we use the CBAM attention model to improve the Capsule Network and use the improved method to identify five common surface defects (bare shavings, oil spots, glue spots, sand lines, and holes) on the production line. The study has two main aspects. Firstly, we propose an algorithm for the Capsule Network based on the improved CBAM attention model, making it more suitable for identifying particleboard surface defect types. Secondly, we conduct recognition experiments using defect samples from the particleboard surface defect dataset and compare the effectiveness of our model with that of other common recognition methods.

3. Results

The sample set of defect features to be tested is divided into a training set and a test set in a 7:3 proportion. The training set comprises 1400 defect feature maps, while the test set contains 600 defect feature maps.
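As a small illustration of the 7:3 division, the sketch below splits 2000 sample indices (the total implied by 1400 + 600) into training and test subsets. The random shuffling, the seed, and the function name `split_indices` are assumptions; the text does not state whether the split was random or stratified by defect type.

```python
import numpy as np

def split_indices(n_samples, train_fraction, seed=0):
    """Shuffle sample indices and split them into training and test subsets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(n_samples * train_fraction))
    return order[:n_train], order[n_train:]

# 7:3 split of the 2000 defect feature maps (1400 training, 600 test).
train_idx, test_idx = split_indices(2000, 0.7)
```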

Common classification and recognition methods, namely the AP Clustering algorithm, SOM Neural Network, Capsule Network (CapsNet), Fast Capsule Network (Fast CapsNet), and the proposed CBAM-Capsule Network (CBAM-CN), were selected to identify the types of surface defects and compare the results. The size of the sliding pane in the AP clustering algorithm is

, and the reference threshold is

. The SOM Neural Network takes the number of competing layer nodes as 200. CapsNet, Fast CapsNet, and CBAM-CN employ the loss function shown in Formula (6), wherein the loss regulation parameter

, the capsule length threshold

,

, and the reconstruction loss as shown in Formula (7), where the reconstruction regulation parameter

.
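For orientation, the sketch below shows the margin loss and reconstruction-weighted total loss in the form commonly used for capsule networks. Formulas (6) and (7) are not reproduced in this section, so the exact form used in this study is assumed rather than confirmed. The function and parameter names are illustrative, and the numerical values in the example (0.9, 0.1, 0.5) are the defaults from the original capsule network literature, not necessarily the settings used here.

```python
import numpy as np

def margin_loss(lengths, labels, m_plus, m_minus, lam):
    """lengths: (N, K) output capsule lengths; labels: (N,) integer class ids."""
    n, k = lengths.shape
    t = np.zeros((n, k))
    t[np.arange(n), labels] = 1.0                                  # one-hot targets
    present = t * np.maximum(0.0, m_plus - lengths) ** 2           # true class too short
    absent = lam * (1.0 - t) * np.maximum(0.0, lengths - m_minus) ** 2  # wrong class too long
    return np.mean(np.sum(present + absent, axis=1))

def total_loss(margin, reconstruction_error, alpha):
    """Margin loss plus the reconstruction error scaled by a regulation parameter."""
    return margin + alpha * reconstruction_error

# Illustrative values only (assumed, not taken from this study).
lengths = np.array([[0.95, 0.05, 0.05, 0.05, 0.05],   # confident, correct
                    [0.50, 0.60, 0.00, 0.00, 0.00]])  # uncertain, partly wrong
labels = np.array([0, 0])
loss_good = margin_loss(lengths[:1], labels[:1], m_plus=0.9, m_minus=0.1, lam=0.5)
loss_bad = margin_loss(lengths[1:], labels[1:], m_plus=0.9, m_minus=0.1, lam=0.5)
```

The first sample incurs no penalty because the true-class capsule is longer than the upper threshold and all other capsules are shorter than the lower threshold. The second sample is penalized on both terms.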

Table 2 shows the other training parameter settings.

Each recognition algorithm is used to conduct recognition experiments on the five types of surface defect images. The accuracy and F1 score results obtained from the recognition experiments on the training set and test set are presented in Table 3 and Table 4.
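The two evaluation indices can be computed from a confusion matrix as in the sketch below. It assumes macro-averaging of the per-class F1 scores, which the text does not specify. The function names, the class indexing, and the ten example predictions are hypothetical and are not data from this study.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm

def accuracy_and_macro_f1(cm):
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)   # samples assigned to each class
    actual = cm.sum(axis=1)      # samples belonging to each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return tp.sum() / cm.sum(), f1.mean()

# Hypothetical labels: 0 bare shavings, 1 oil spots, 2 glue spots, 3 sand lines, 4 holes.
y_true = [0, 0, 1, 1, 2, 2, 3, 3, 4, 4]
y_pred = [0, 0, 1, 2, 2, 2, 3, 3, 4, 0]
cm = confusion_matrix(y_true, y_pred, n_classes=5)
acc, macro_f1 = accuracy_and_macro_f1(cm)
```

Reporting F1 alongside accuracy matters when defect classes are unevenly represented, since accuracy alone can hide poor performance on a rare class.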

The data show that the CBAM-CN achieves a higher classification accuracy and F1 score than other algorithms in both the training and test sets when recognizing particleboard surface defect feature images with small sample sizes. This indicates that the CBAM-CN proposed in this paper is effective in eliminating background texture interference and improving classification accuracy. Moreover, by comparing the recognition accuracy of the CBAM-CN at dropout probabilities of 0.5 and 1, it is observed that the introduction of a dropout layer into the improved CBAM attention model results in a relatively small gap between the test set and the training set. These results suggest that the CBAM-CN with a dropout layer can better alleviate overfitting problems caused by small sample sizes.

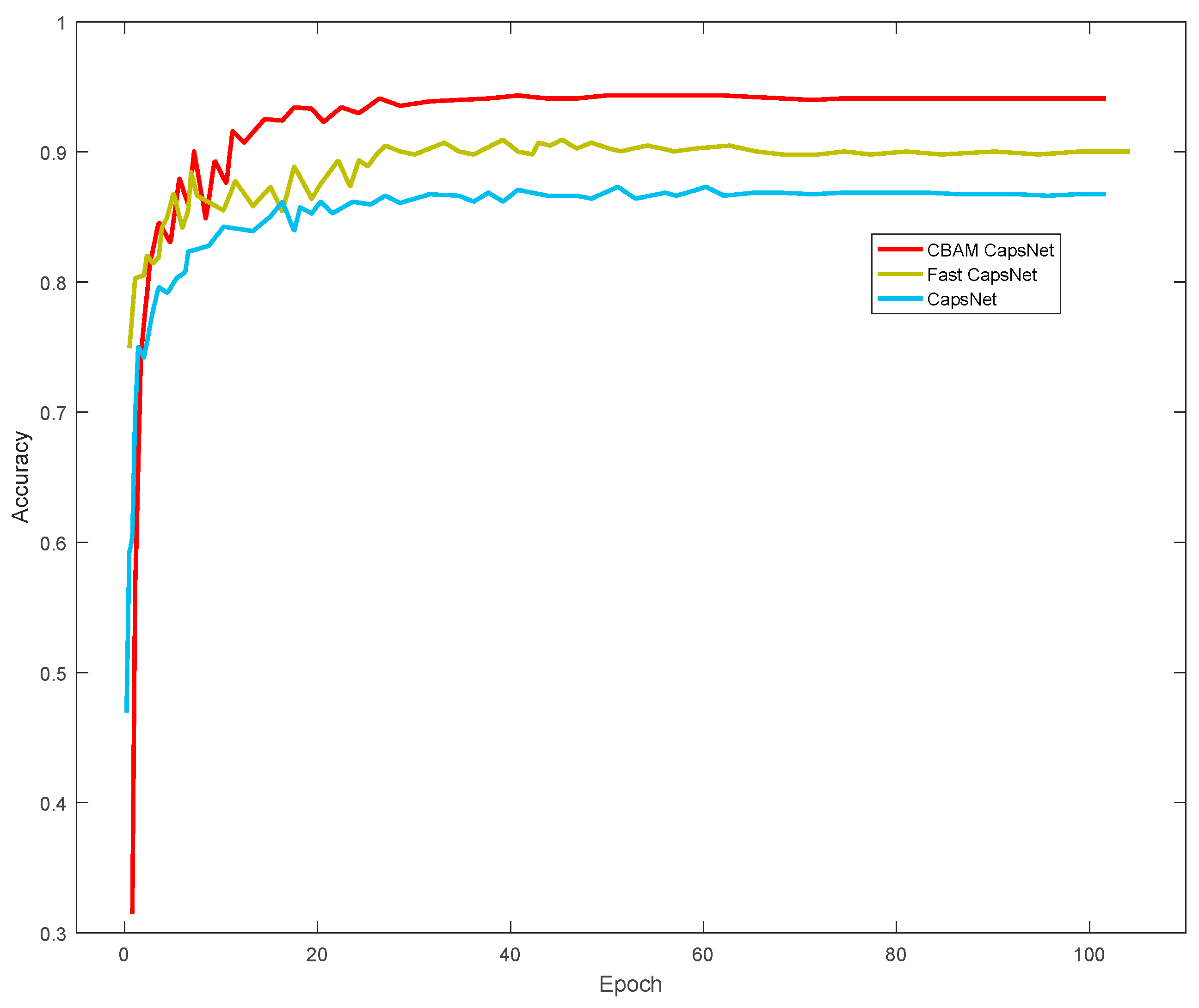

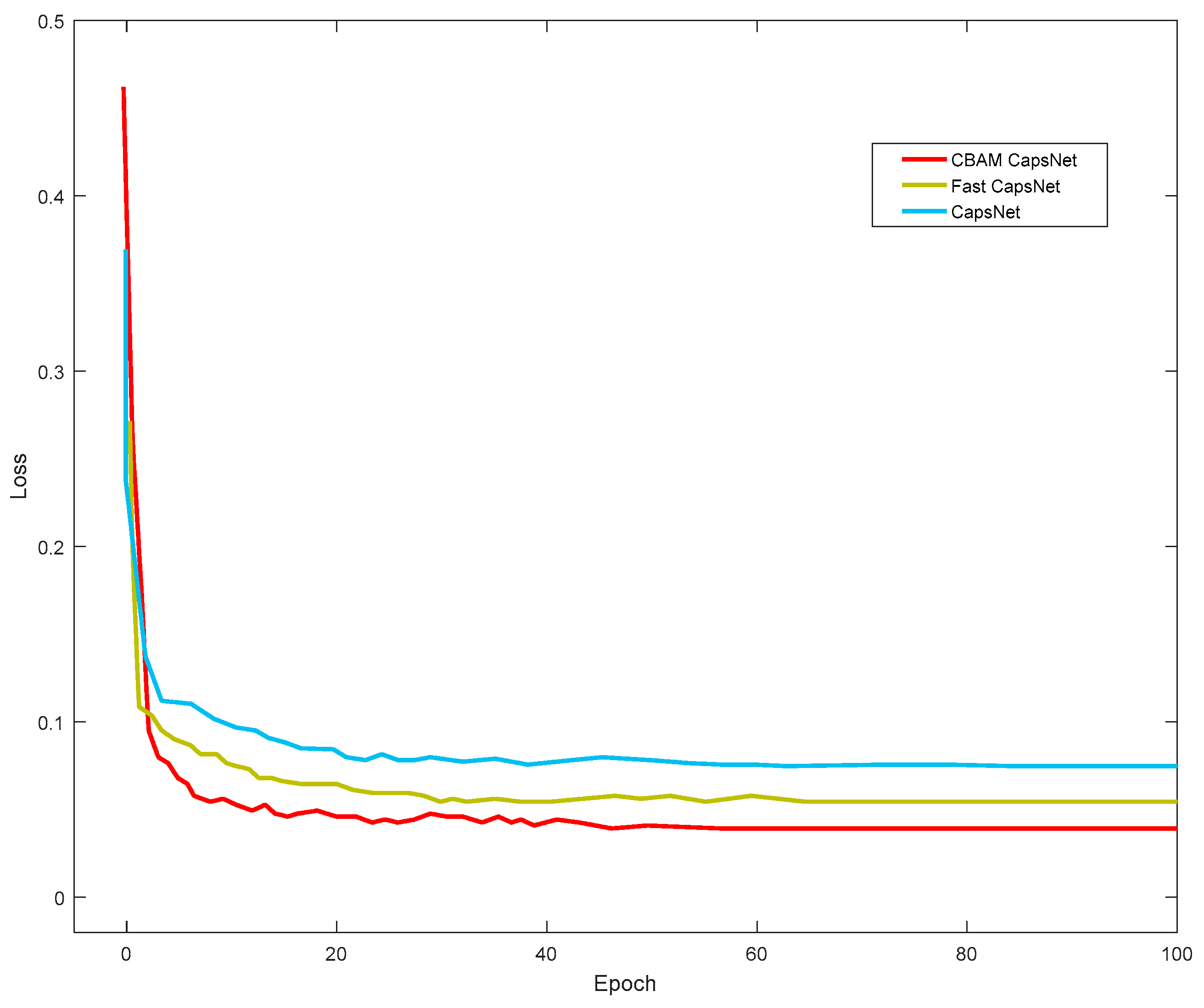

To verify the effectiveness of the CBAM-CN on the particleboard surface defect image sample set, we analyzed the relationship between recognition accuracy, loss rate, and epochs for CapsNet, Fast CapsNet, and the CBAM-CN. The corresponding curves are presented in Figure 9 and Figure 10.

The curves show that the CBAM-CN reaches a stable training effect at 40 epochs, while the curves of CapsNet and Fast CapsNet tend to stabilize at 60 epochs. This indicates that the CBAM-CN can optimize the feature map and improve the training efficiency of the capsule network model. Moreover, the CBAM-CN has a higher recognition accuracy and a lower loss rate once the training curves have stabilized, indicating that it achieves a more stable and accurate recognition effect in identifying particleboard surface defects under the same epoch conditions.

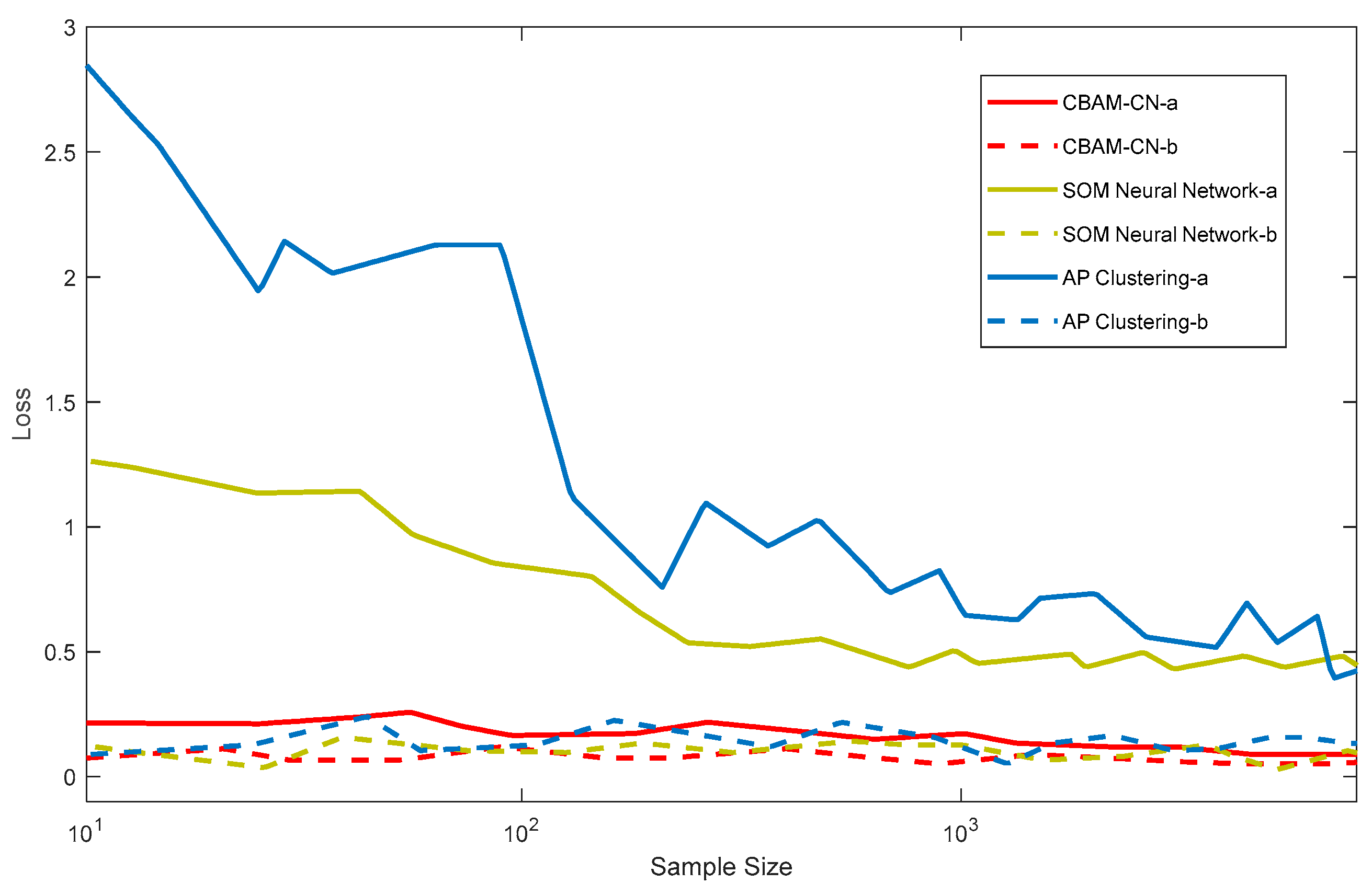

In general, image recognition problems require a large number of training samples for Convolutional Neural Networks to achieve optimal performance. However, in the case of particleboard surface defect recognition, the number of available samples is limited. Therefore, we compare and analyze the recognition accuracy and loss rate of the AP Clustering algorithm, SOM Neural Network, and the CBAM-CN, with varying sample sizes. The resulting curves are shown in Figure 11 and Figure 12. To compare the effects of these recognition algorithms on the problem of overfitting, we include the recognition results of the training set as a comparison in Figure 12. The solid line represents the experimental data of the test set, while the dotted line represents the experimental data of the training set.

The curves in Figure 11 and Figure 12 reveal that when the sample size is small, the training performance of the AP Clustering algorithm and SOM Neural Network is greatly affected, and a satisfactory recognition effect cannot be achieved. In contrast, the CBAM-CN can achieve an accuracy rate of nearly 85% and a loss rate of less than 15% with just 100 training set samples. When the training set contains more than 500 samples, the CBAM-CN can maintain an accuracy rate of more than 90% and a loss rate of less than 5%. The other neural network models, on the other hand, require over 1000 training samples to attain an accuracy rate of more than 90%. Moreover, for the same number of training samples, their loss rate is also higher than that of the CBAM-CN.

It is evident from Figure 12 that when the number of samples is small, there is a significant difference between the recognition loss rate of the test set and the training set for the AP Clustering algorithm and SOM Neural Network, indicating the presence of an overfitting problem. However, the two experimental data curves of the CBAM-CN are similar, and it can maintain a low loss rate for the test set even when the number of training samples is small. This result highlights that the CBAM-CN can effectively address the overfitting problem caused by the insufficient number of training samples.