Enhancing Forest Security through Advanced Surveillance Applications

Abstract

1. Introduction

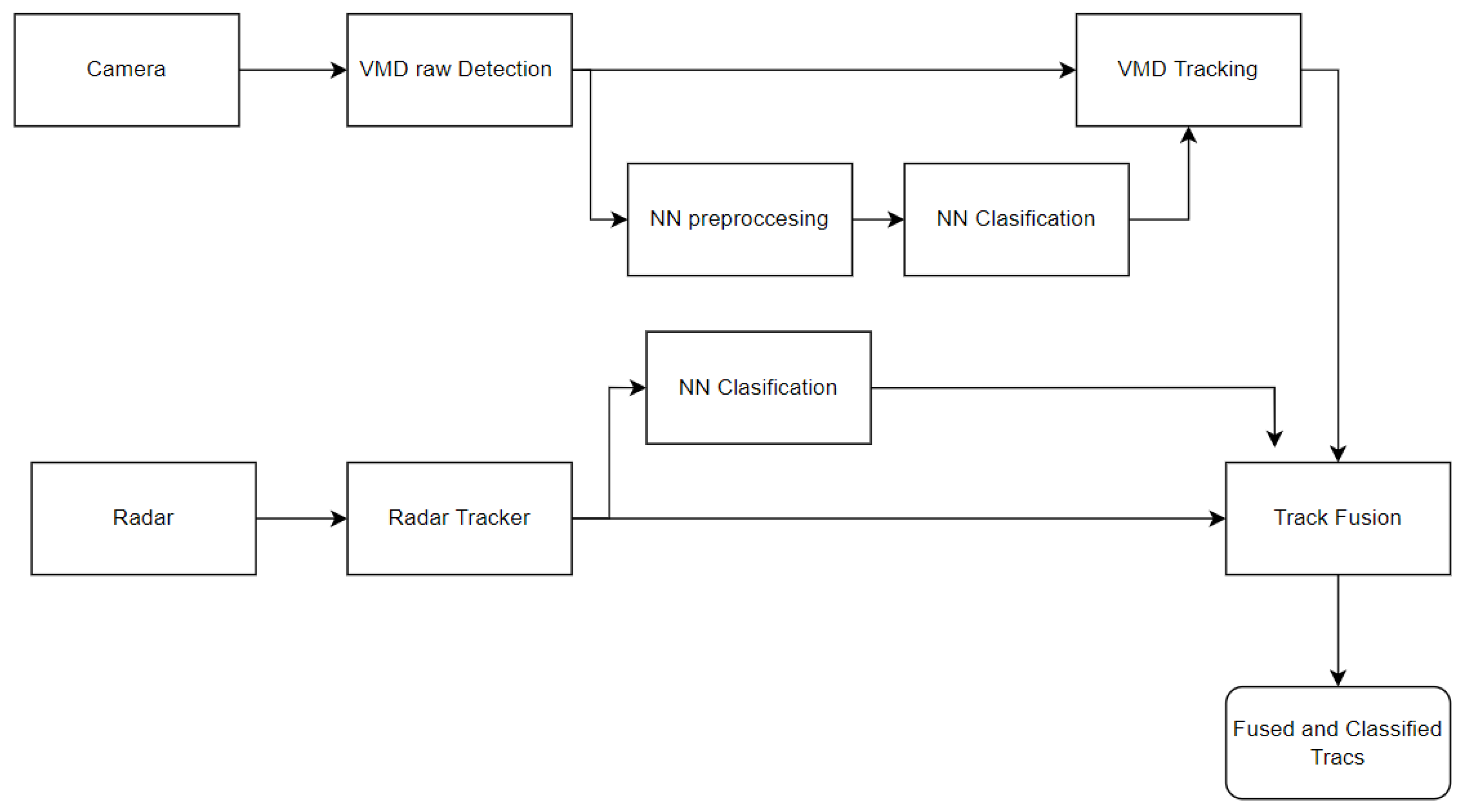

- Thermal camera methods for multi-target tracking are explored and adopted for object localization and tracking, combining CNN object classification with improved thermal motion detection and tracking.

- A novel thermal camera and MMW radar fusion model is proposed and tested. The proposed model can handle harsh environments with different sensor clutter conditions. The model used in the fusion approach lets each sensor track targets more accurately before fusion.

- The performance of the fusion and tracking strategy was evaluated in field tests in terms of false alarm rate, detection accuracy, and time.

2. Related Works

3. Methods

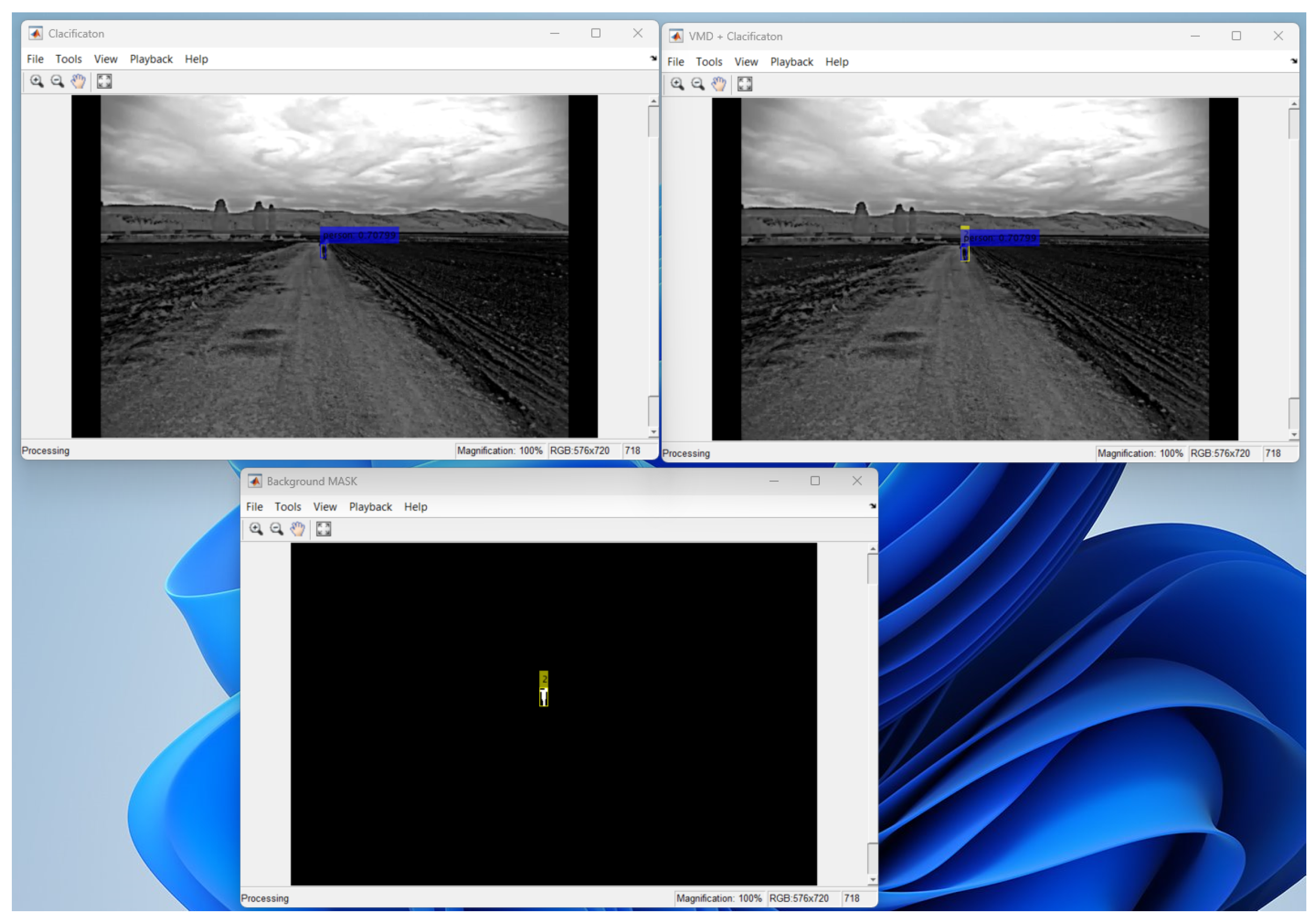

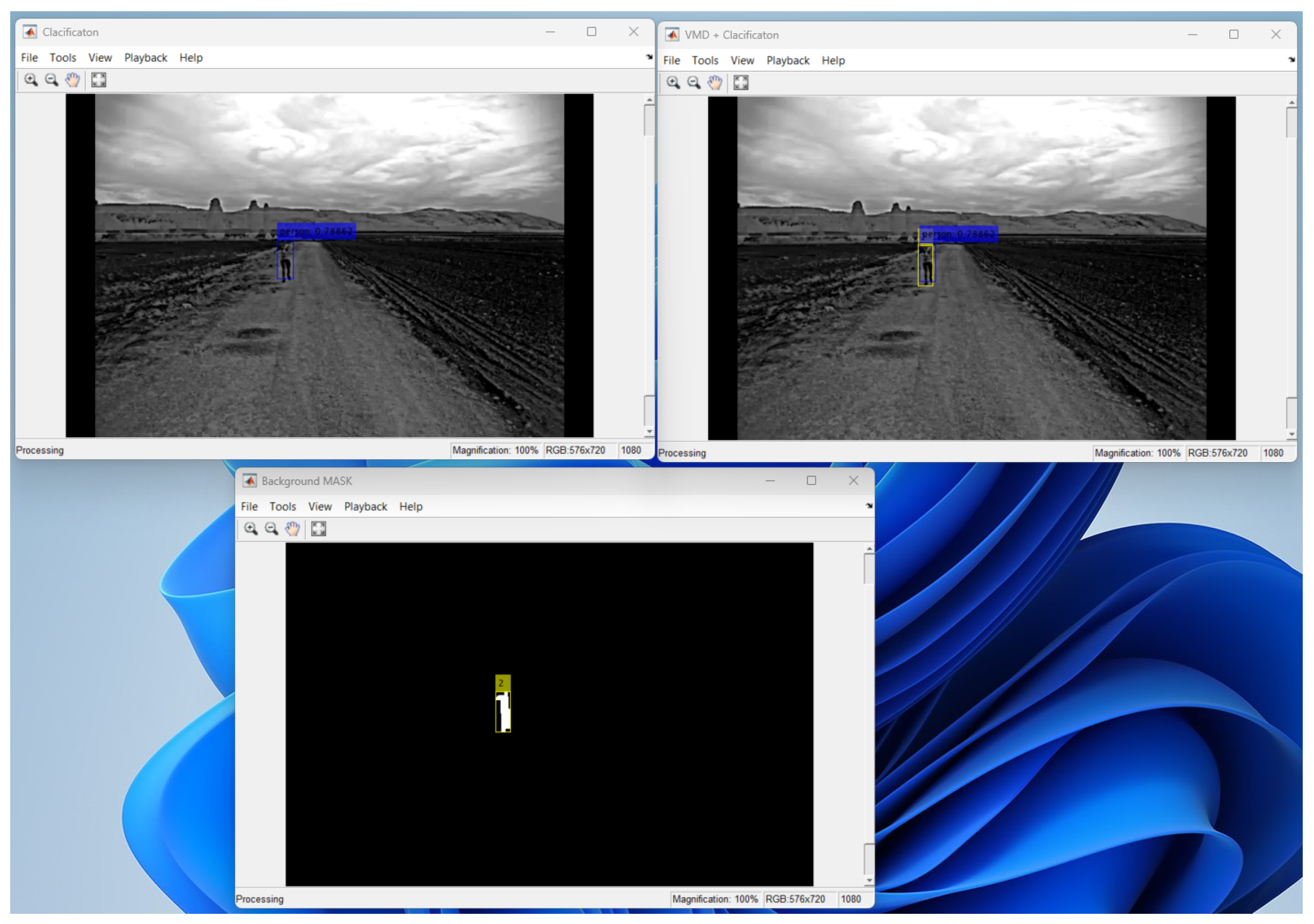

3.1. Thermal Imaging Tracking and Localization

- Probability of detection (PD) > 98%.

- False alarm rate (FAR) < 1 per week.
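The two requirements above can be checked from field-test counts. The following is a minimal sketch, not the authors' evaluation code: the `FieldTestCounts` record, its field names, and the way alarms are matched to ground-truth events are assumptions made for illustration.

```python
from dataclasses import dataclass

SECONDS_PER_WEEK = 7 * 24 * 3600


@dataclass
class FieldTestCounts:
    """Hypothetical summary of one field test (names are illustrative)."""
    true_events: int      # ground-truth intrusion events during the test
    detected_events: int  # ground-truth events that raised an alarm
    false_alarms: int     # alarms with no matching ground-truth event
    duration_s: float     # total observation time in seconds


def probability_of_detection(c: FieldTestCounts) -> float:
    # PD: fraction of real events that were detected.
    return c.detected_events / c.true_events


def false_alarms_per_week(c: FieldTestCounts) -> float:
    # FAR: false alarms normalized to a one-week observation window.
    return c.false_alarms * SECONDS_PER_WEEK / c.duration_s


def meets_requirements(c: FieldTestCounts,
                       min_pd: float = 0.98,
                       max_far_per_week: float = 1.0) -> bool:
    # Thresholds follow the two requirements listed above.
    return (probability_of_detection(c) > min_pd
            and false_alarms_per_week(c) < max_far_per_week)


# Example with made-up numbers: a two-week test.
counts = FieldTestCounts(true_events=200, detected_events=198,
                         false_alarms=1, duration_s=14 * 24 * 3600)
print(probability_of_detection(counts), false_alarms_per_week(counts))
```

Normalizing the false alarm count by observation time lets tests of different lengths be compared against the same weekly limit.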

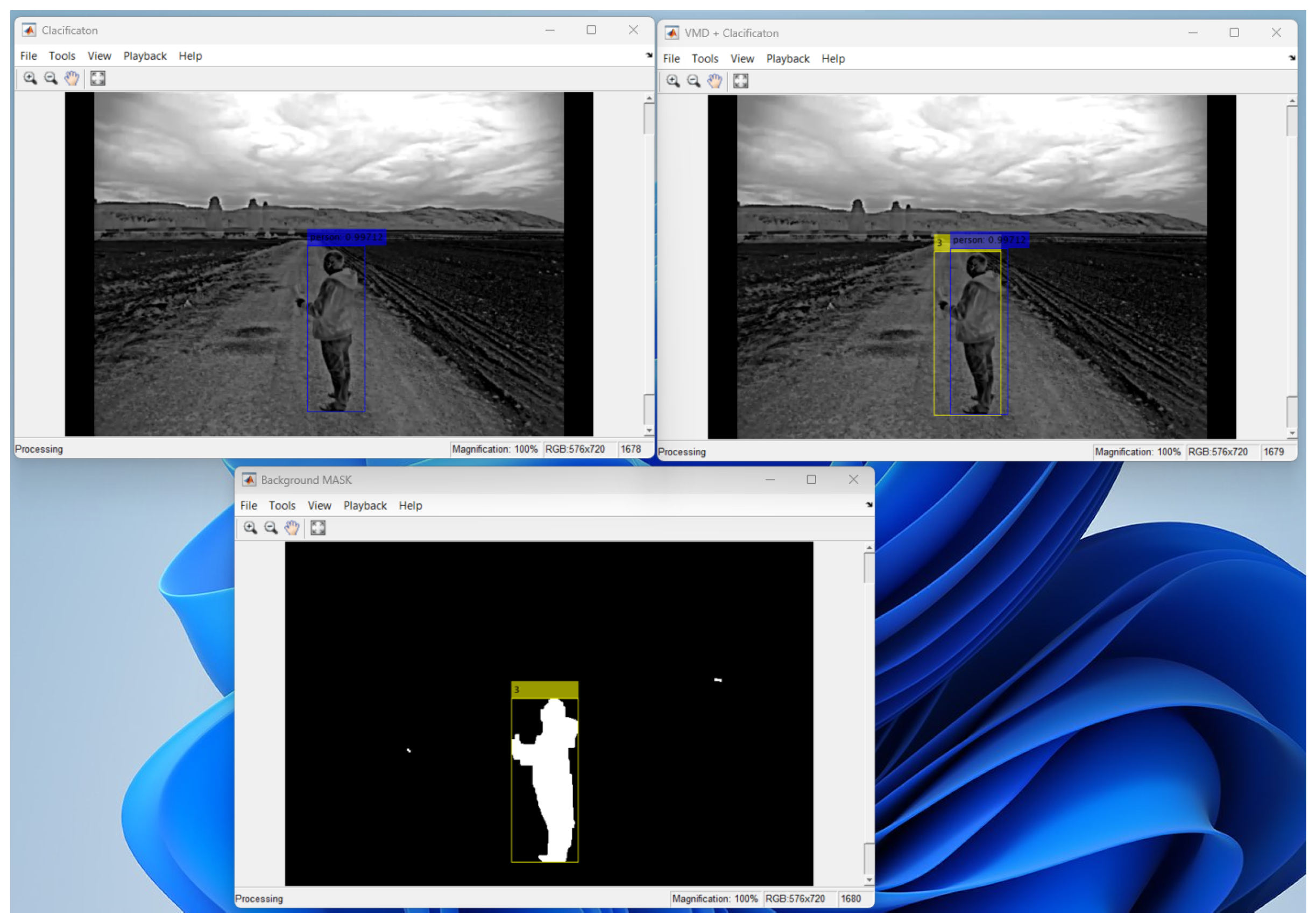

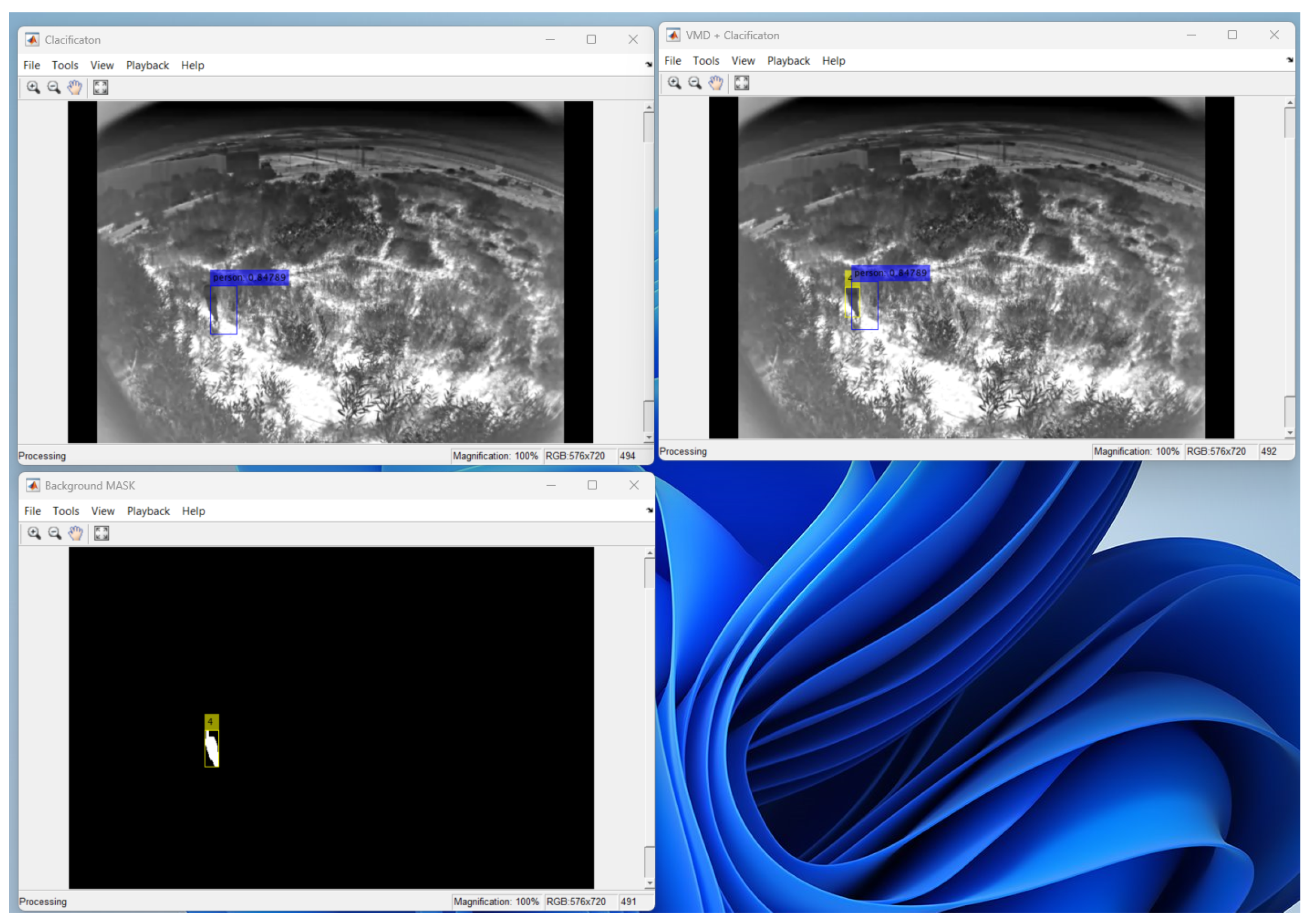

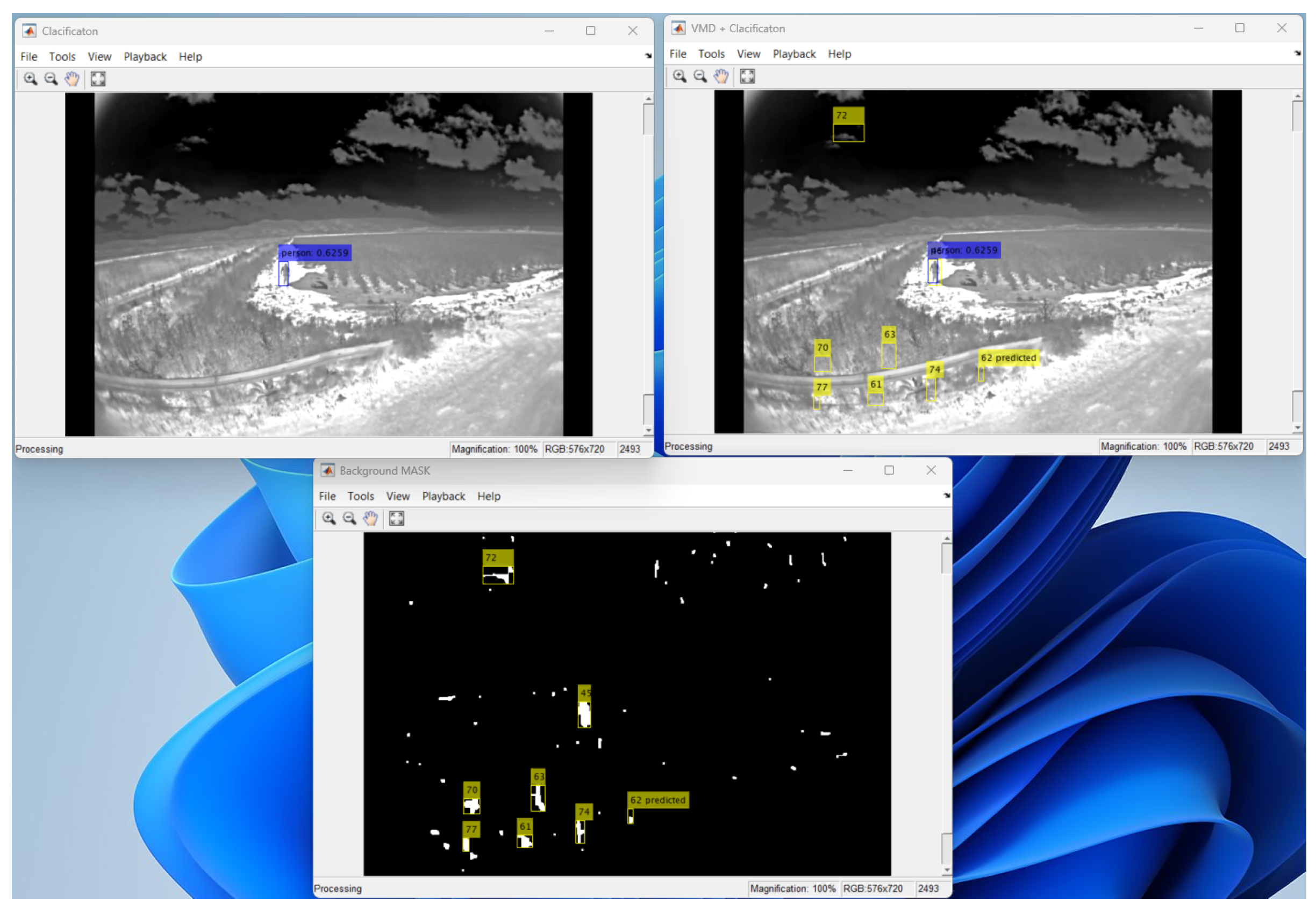

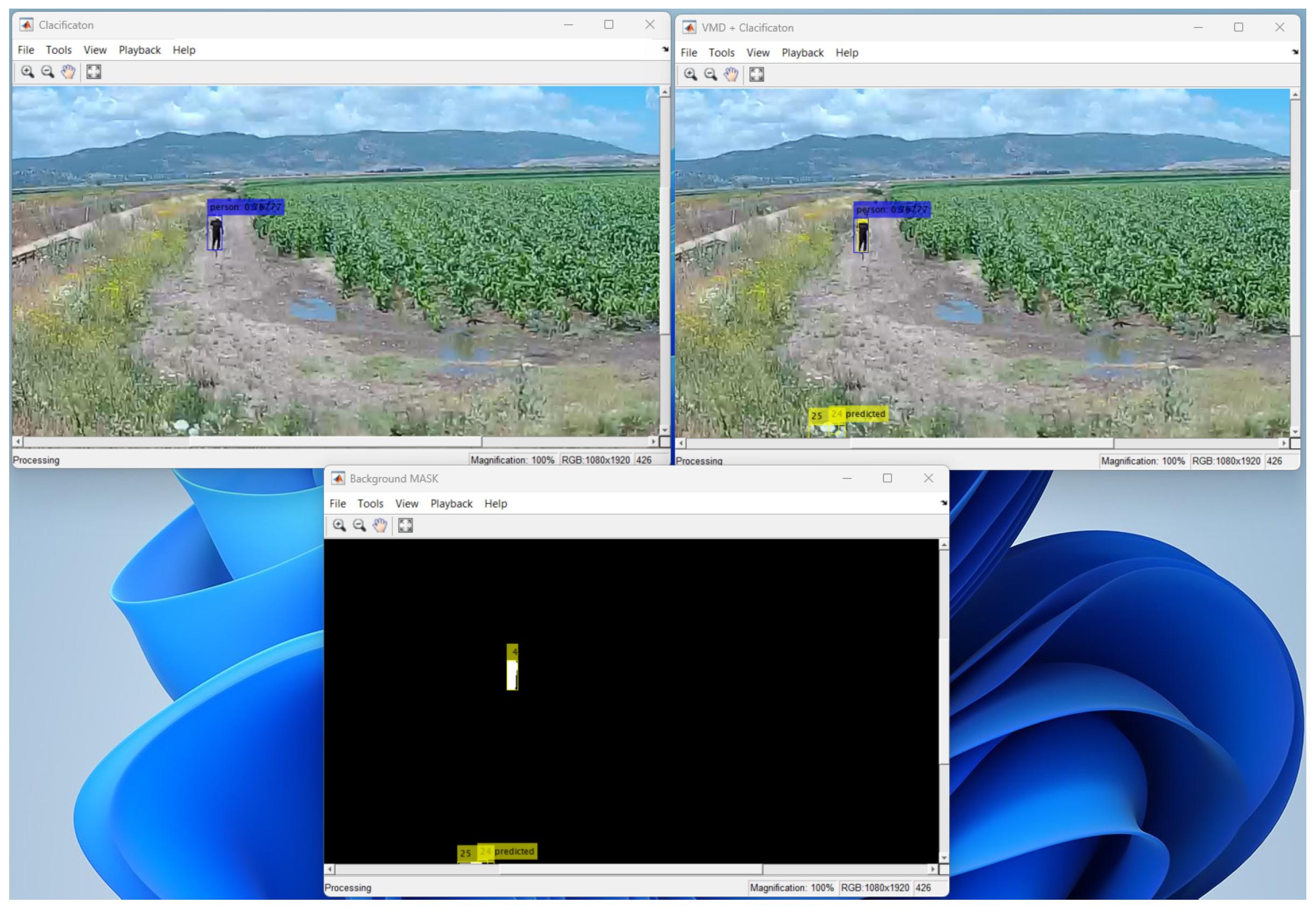

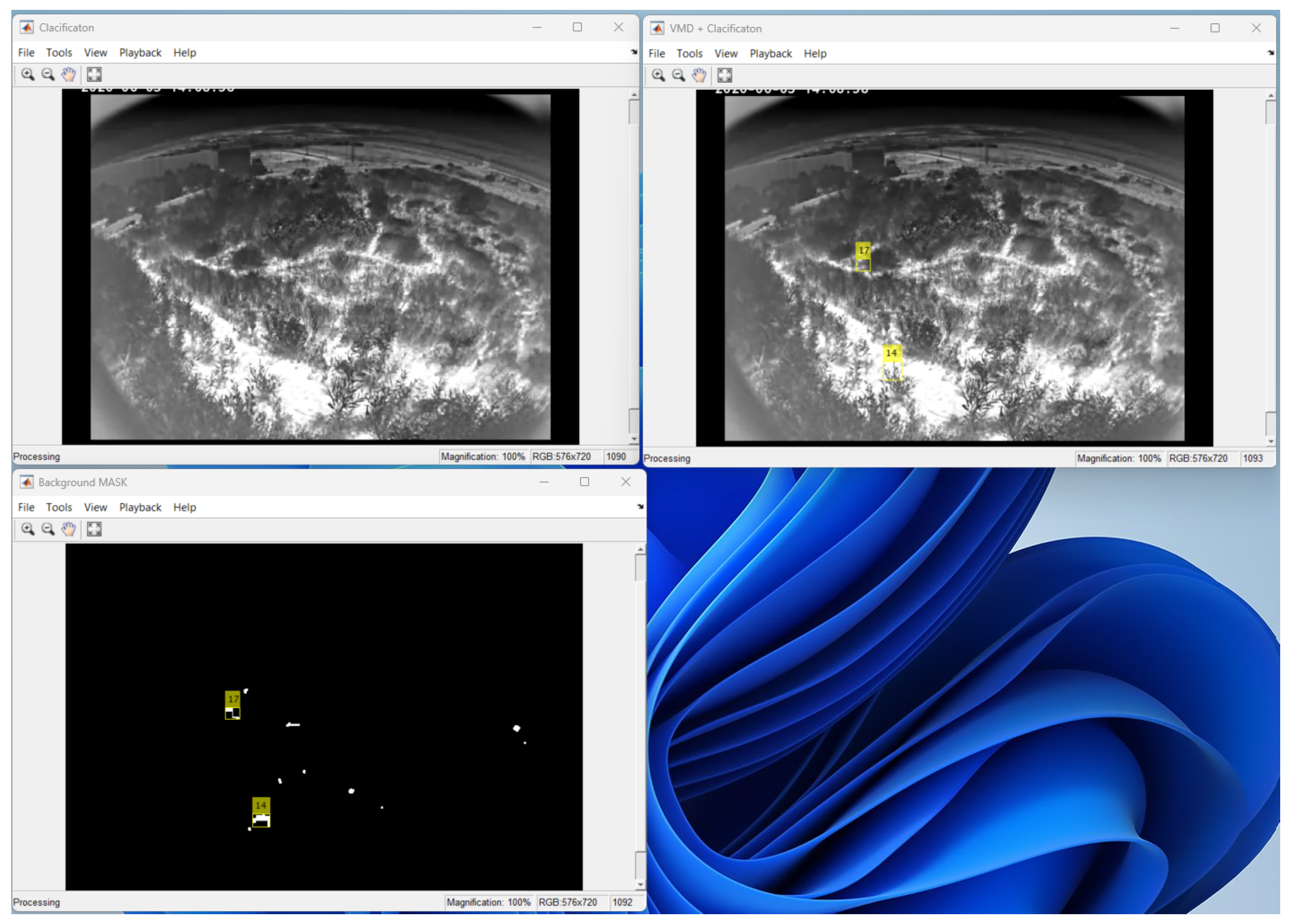

3.1.1. Background Subtraction Mask

3.1.2. Parameters Scaling Mask

3.1.3. Fusion with a Neural Network

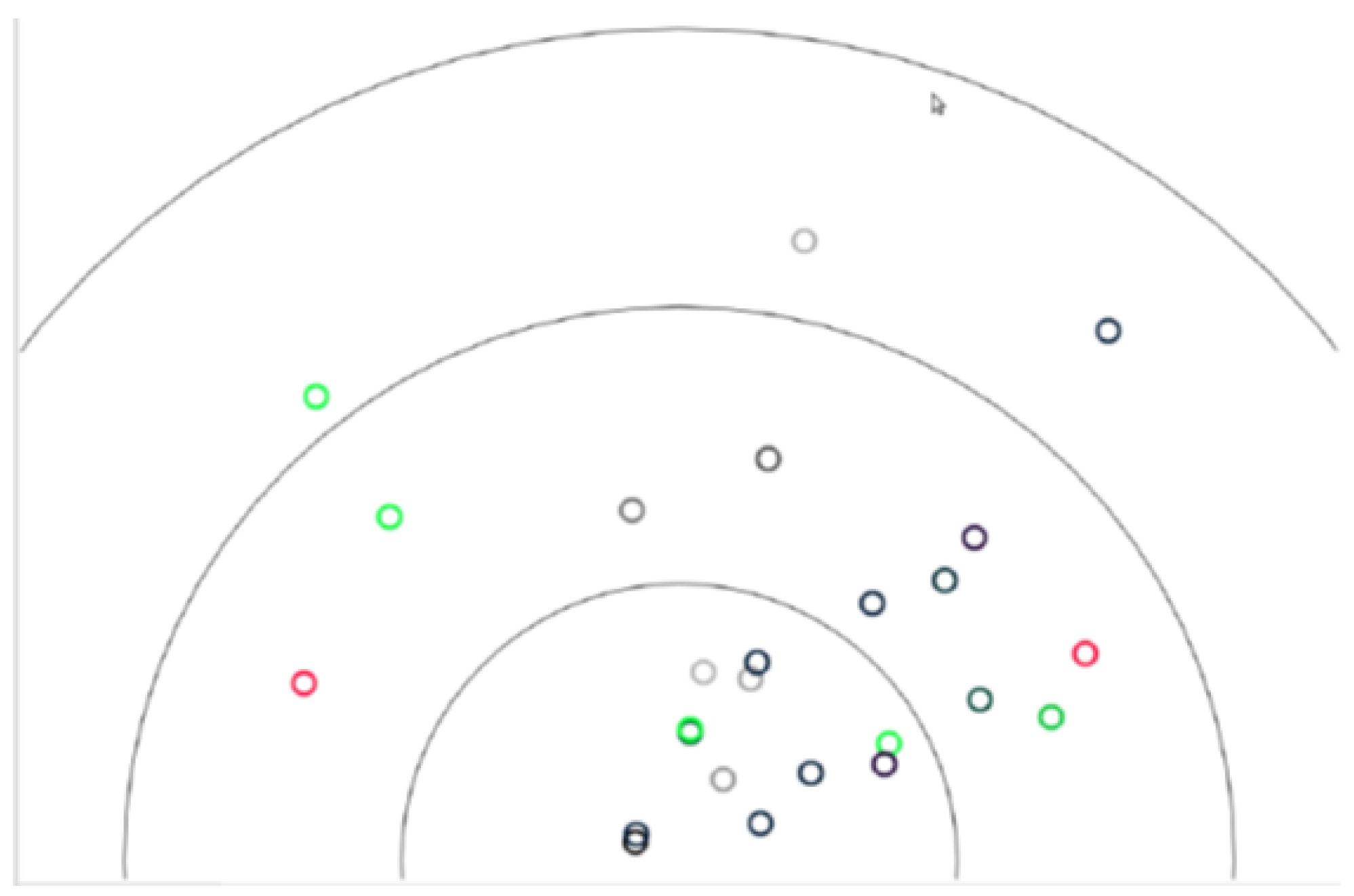

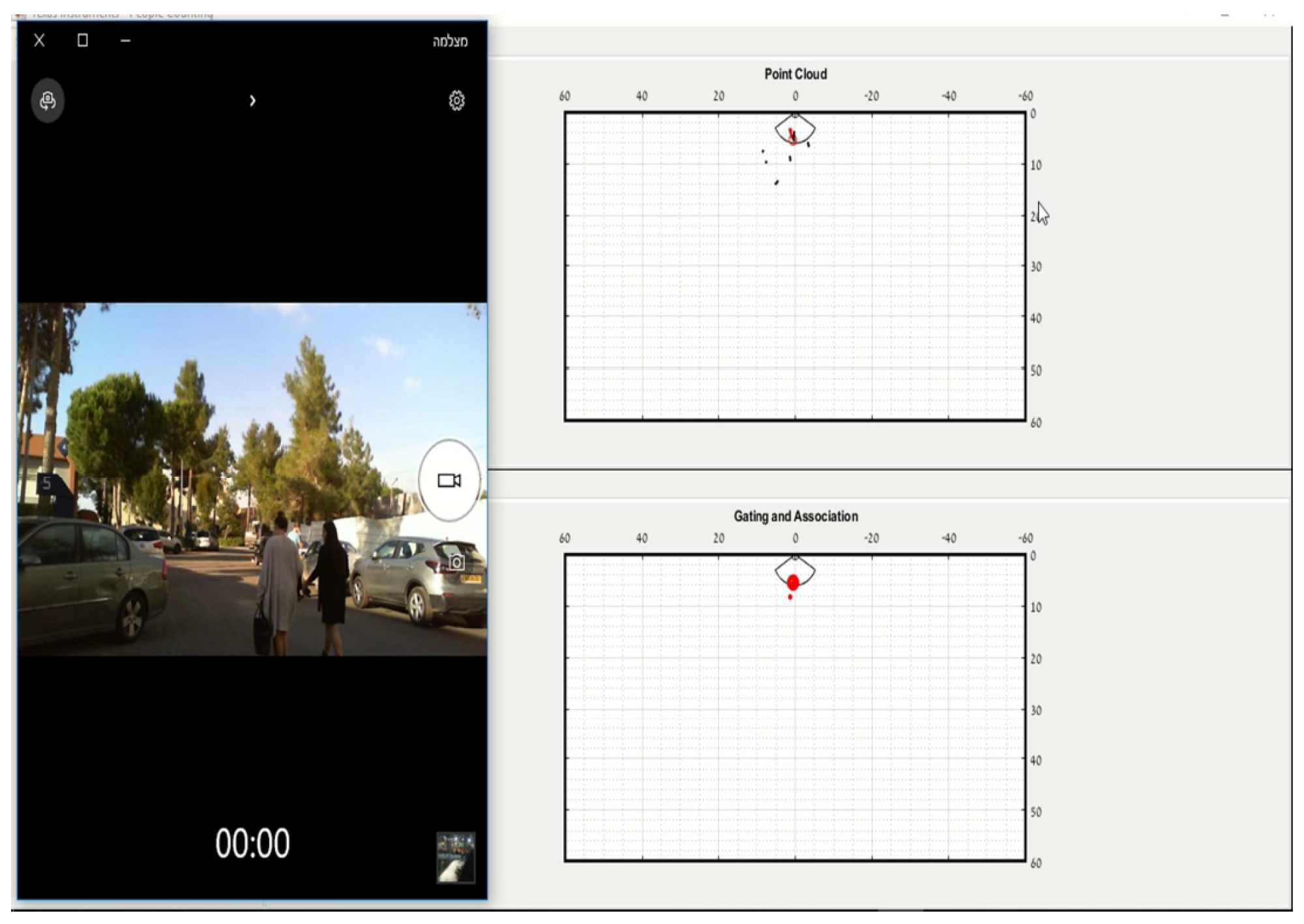

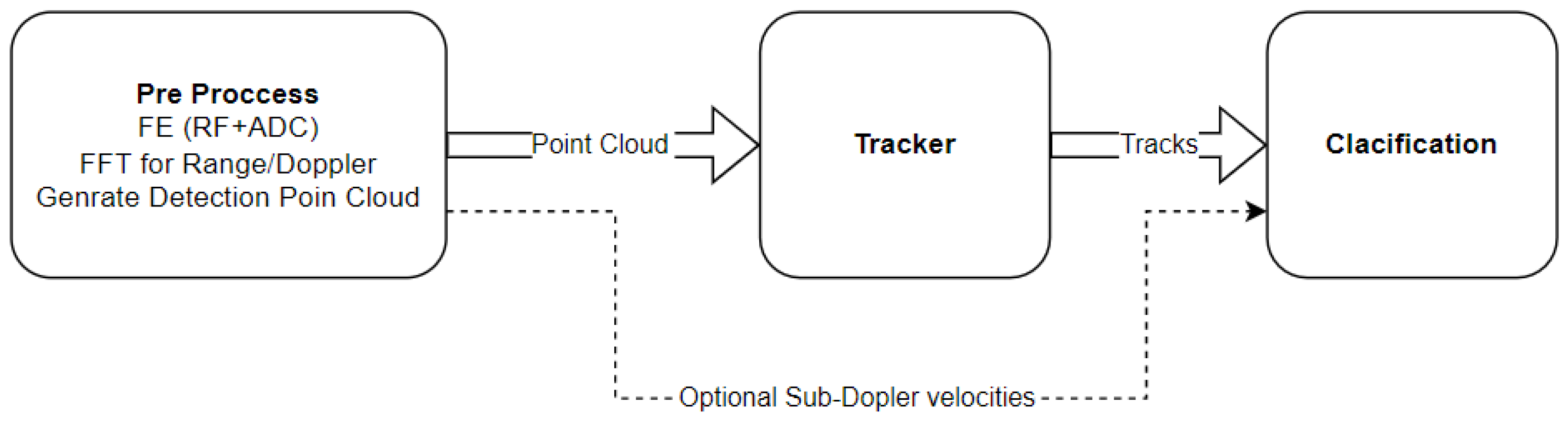

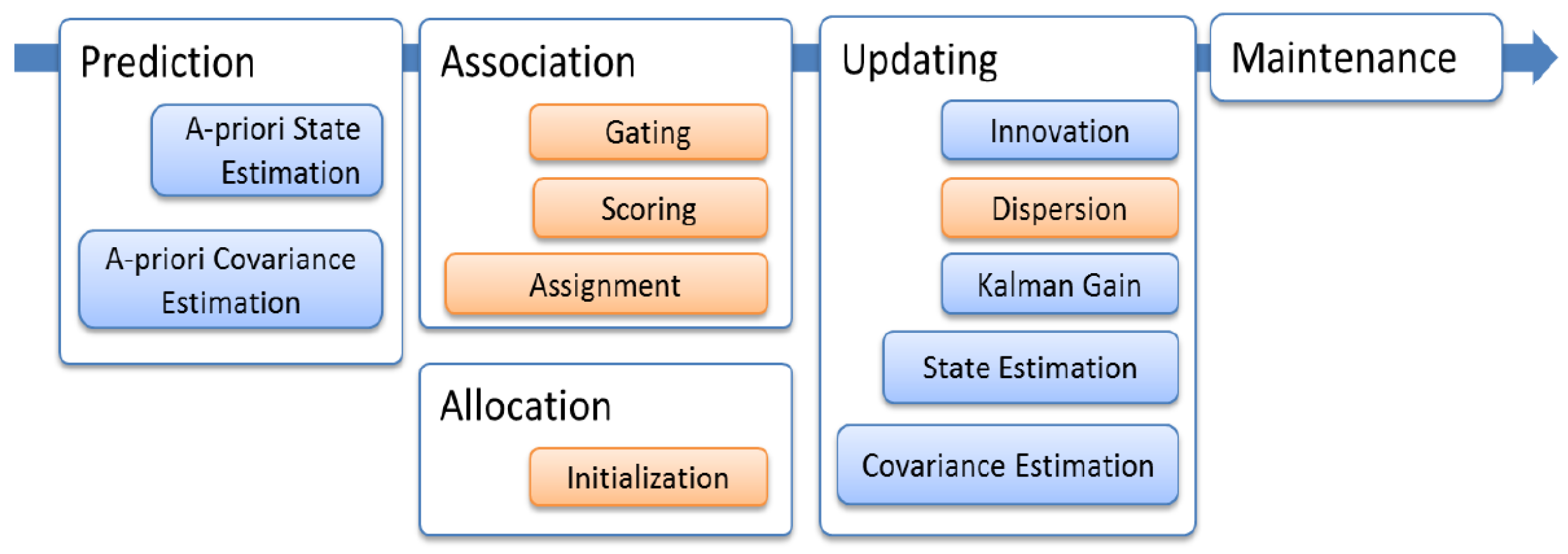

3.2. MMW Radar Object Tracking

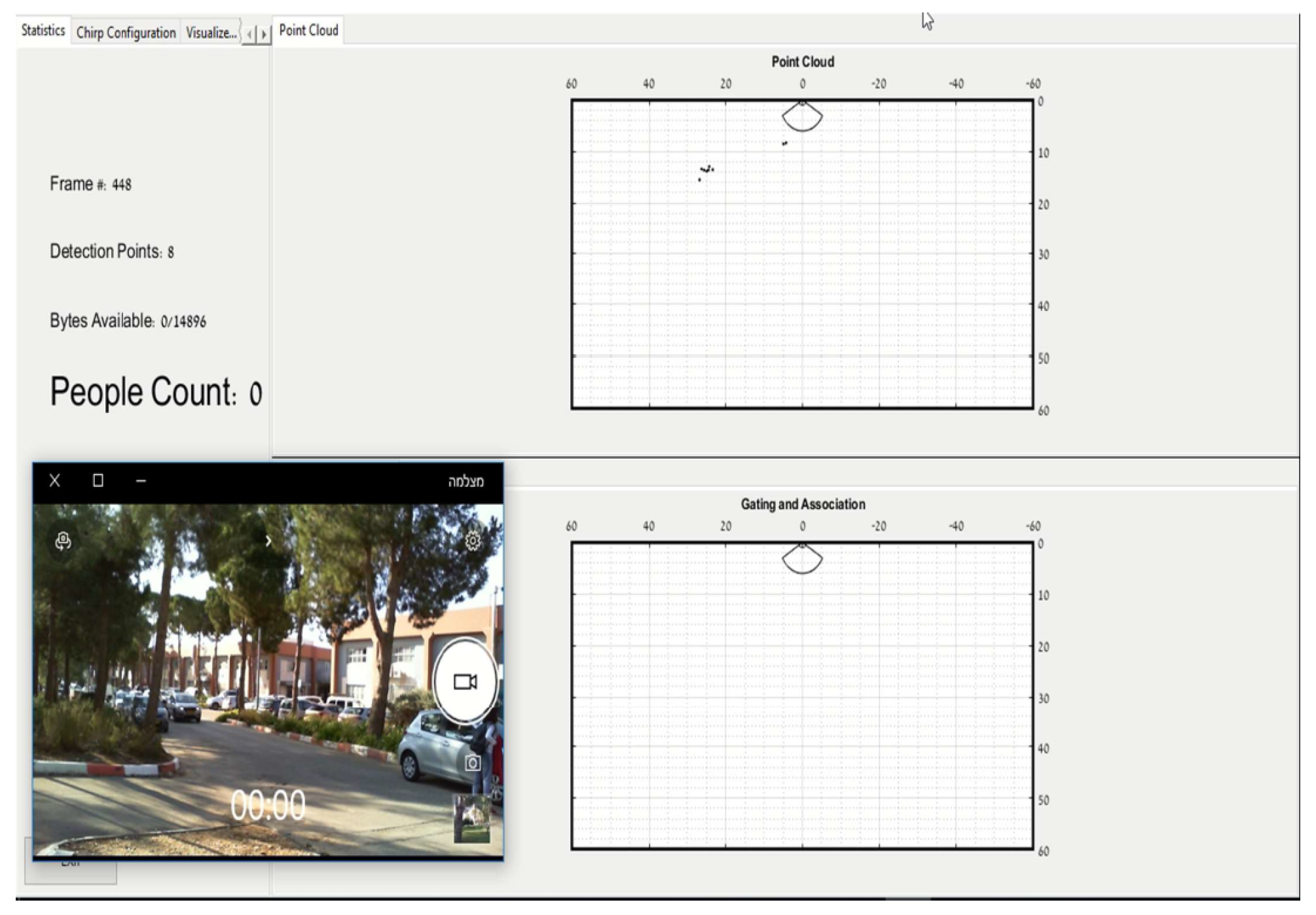

3.2.1. MMW Radar Object Tracking Used with IWR6843IS Radar

3.2.2. MMW Radar Object Tracking Used with iSYS-5020 Radar

- ID.

- State vector (range, range rate, range acceleration, azimuth, azimuth rate, azimuth acceleration).

- Timestamp (when last updated).

- Track probability.

- Age (increased only when a matching measurement is found; used as one of the properties for track validation).

- Update tracks by measurement.

- Merge existing tracks.

- Update clutter model.

- Create potential tracks.

- Validate tracks.
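The track properties and the five processing steps listed above can be sketched as a small tracker loop. This is a simplified illustration under stated assumptions, not the tracker used in the paper: the gate sizes, the probability increments, the confirmation thresholds, and the finite-difference state update (standing in for a proper Kalman update) are all hypothetical.

```python
from dataclasses import dataclass, field
from itertools import count

_next_id = count(1)


@dataclass
class Track:
    state: list           # [range, range rate, range accel, azimuth, azimuth rate, azimuth accel]
    timestamp: float      # time of the last measurement update
    probability: float = 0.5
    age: int = 0          # incremented only when a measurement matches
    confirmed: bool = False
    id: int = field(default_factory=lambda: next(_next_id))


def predict(track, t):
    """Constant-acceleration prediction of the track state to time t."""
    dt = t - track.timestamp
    r, rd, rdd, a, ad, add = track.state
    return [r + rd * dt + 0.5 * rdd * dt * dt, rd + rdd * dt, rdd,
            a + ad * dt + 0.5 * add * dt * dt, ad + add * dt, add]


class Tracker:
    def __init__(self, gate=(3.0, 5.0), merge=(1.0, 2.0), min_age=3):
        # gate/merge: (range, azimuth) tolerances; all values are assumptions.
        self.gate, self.merge, self.min_age = gate, merge, min_age
        self.tracks = []
        self.clutter_rate = 0.0  # smoothed count of unmatched measurements per scan

    def step(self, measurements, t):
        unmatched = list(measurements)  # each item: (range, azimuth)
        # 1. Update tracks by measurement (nearest neighbour inside the gate).
        for trk in self.tracks:
            pred = predict(trk, t)
            inside = [m for m in unmatched
                      if abs(m[0] - pred[0]) <= self.gate[0]
                      and abs(m[1] - pred[3]) <= self.gate[1]]
            if not inside:
                trk.probability -= 0.2  # missed detection lowers confidence
                continue
            m = min(inside, key=lambda c: abs(c[0] - pred[0]) / self.gate[0]
                    + abs(c[1] - pred[3]) / self.gate[1])
            unmatched.remove(m)
            dt = t - trk.timestamp
            rd = (m[0] - trk.state[0]) / dt if dt > 0 else trk.state[1]
            ad = (m[1] - trk.state[3]) / dt if dt > 0 else trk.state[4]
            trk.state = [m[0], rd, 0.0, m[1], ad, 0.0]
            trk.timestamp = t
            trk.probability = min(1.0, trk.probability + 0.2)
            trk.age += 1
        # 2. Merge existing tracks: keep the oldest of any near-duplicate pair.
        kept = []
        for trk in sorted(self.tracks, key=lambda k: -k.age):
            if all(abs(trk.state[0] - k.state[0]) > self.merge[0]
                   or abs(trk.state[3] - k.state[3]) > self.merge[1]
                   for k in kept):
                kept.append(trk)
        # 3. Update clutter model (exponential average of unmatched detections).
        self.clutter_rate = 0.9 * self.clutter_rate + 0.1 * len(unmatched)
        # 4. Create potential tracks from unmatched measurements.
        kept += [Track([m[0], 0.0, 0.0, m[1], 0.0, 0.0], t) for m in unmatched]
        # 5. Validate tracks: drop dead ones, confirm old and likely ones.
        self.tracks = [k for k in kept if k.probability > 0.0]
        for trk in self.tracks:
            trk.confirmed = trk.age >= self.min_age and trk.probability >= 0.6
        return [k for k in self.tracks if k.confirmed]
```

Because age grows only on matched measurements, a single clutter return creates a potential track that never reaches confirmation and is removed after a few missed scans.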

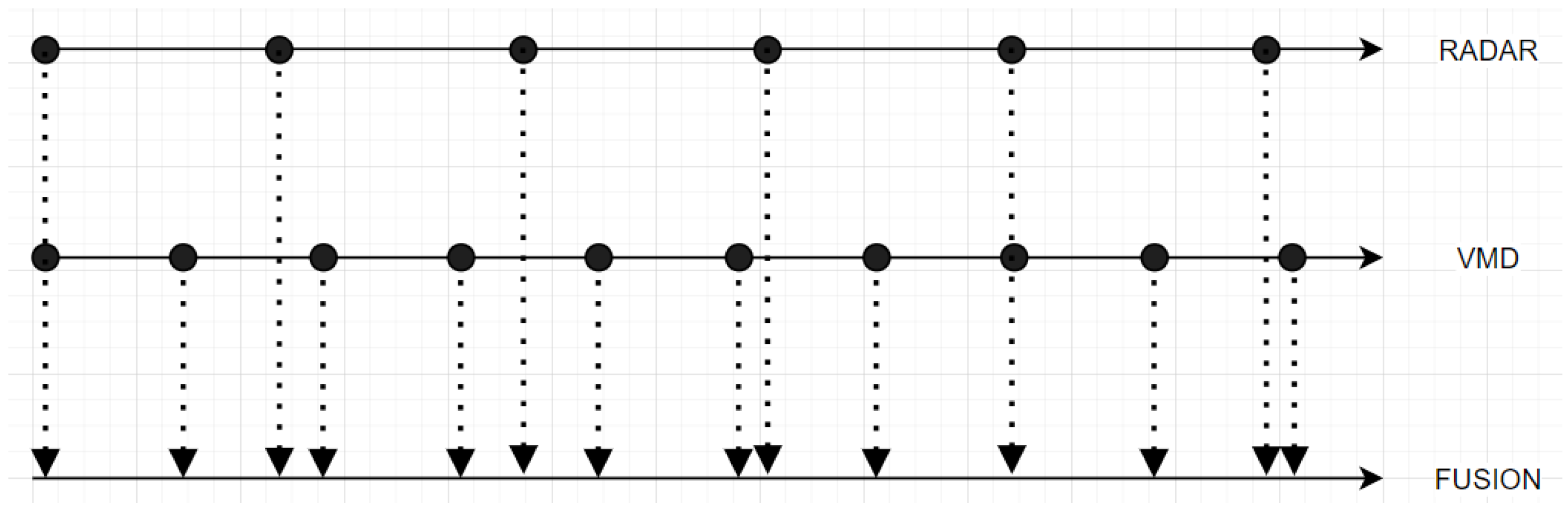

3.3. Fusion Strategy

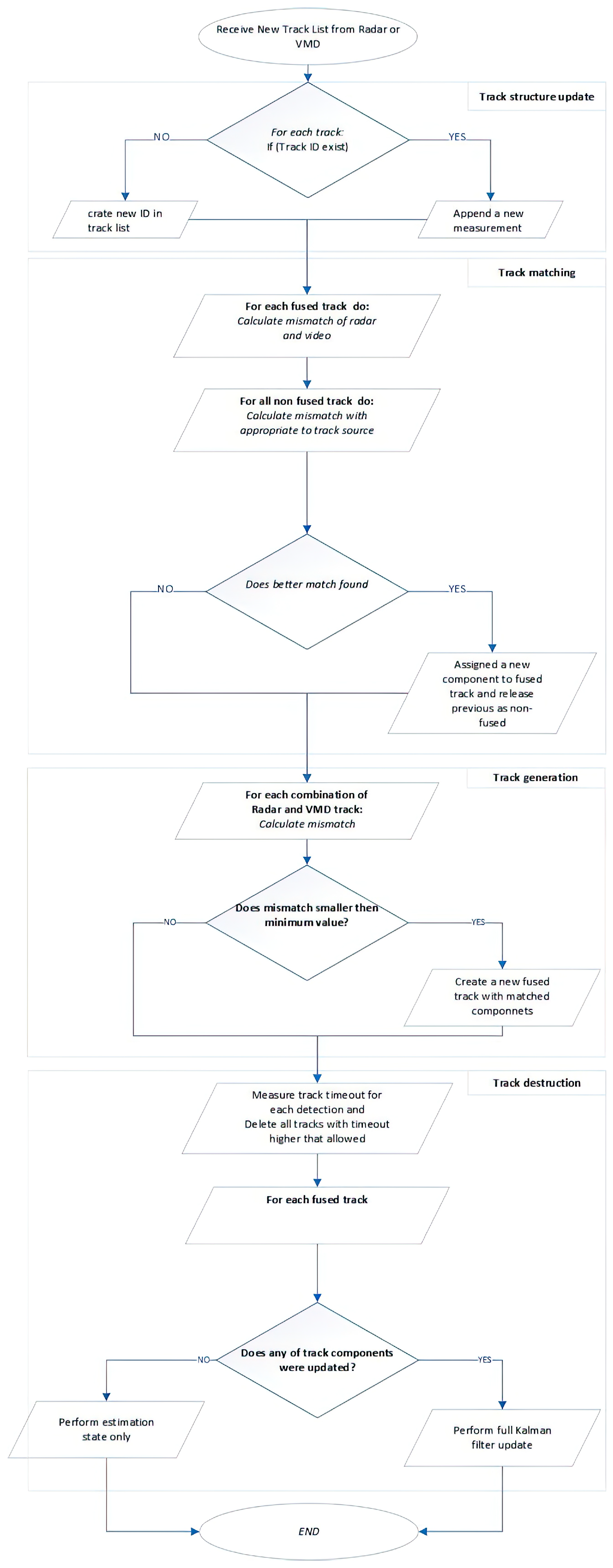

Track Fusion

- Both sources (the VMD system and the radar tracker) can create fusion tracks that are not yet complete and are waiting for a match to be found at a later stage. Such tracks may not yet contain all the necessary information about the tracked object, but they can still help estimate its location and other characteristics. Once a match is found, the fusion track is updated with information from the other source, and the complete track becomes more accurate and reliable.

- Data association (matching the tracks to the Kalman state estimate) has already been performed in the tracker, so the input tracks can be used directly in the fusion process without further verification.

- VMD and radar tracker tracks can be merged (combined) if certain requirements are met. Once the tracks are merged, the resulting fusion track will contain information from both the VMD and the radar tracker.

- The track can be split (divided) if it is determined that the visual and radar data are diverging too much. This process of track splitting and merging is performed at each step where new data is received and updates are made to the track. This allows the track to be refined and kept as accurate as possible on the basis of the most current data.
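The merge and split behaviour described in the list above can be sketched as follows. This is an illustrative sketch only: the source does not state the merge requirements or the divergence test, so the azimuth-gap criterion, both gate values, and the `SourceTrack` and `FusionTrack` names are assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SourceTrack:
    track_id: int
    azimuth_deg: float
    range_m: Optional[float] = None  # assumed: radar supplies range, VMD may not


@dataclass
class FusionTrack:
    vmd: Optional[SourceTrack] = None
    radar: Optional[SourceTrack] = None

    @property
    def complete(self) -> bool:
        # A fusion track is complete once both sources contribute to it.
        return self.vmd is not None and self.radar is not None


def azimuth_gap(a: SourceTrack, b: SourceTrack) -> float:
    return abs(a.azimuth_deg - b.azimuth_deg)


def fuse_step(tracks: List[FusionTrack],
              merge_gate_deg: float = 2.0,
              split_gate_deg: float = 5.0) -> List[FusionTrack]:
    """One fusion update: split diverging tracks, then merge matching ones."""
    vmd_only, radar_only, result = [], [], []
    for ft in tracks:
        if ft.complete and azimuth_gap(ft.vmd, ft.radar) > split_gate_deg:
            # Visual and radar data diverge too much: split into two tracks.
            vmd_only.append(FusionTrack(vmd=ft.vmd))
            radar_only.append(FusionTrack(radar=ft.radar))
        elif ft.complete:
            result.append(ft)
        elif ft.vmd is not None:
            vmd_only.append(ft)
        elif ft.radar is not None:
            radar_only.append(ft)
    for v in vmd_only:
        # Pair each incomplete VMD track with the closest incomplete radar track.
        best = min(radar_only, key=lambda r: azimuth_gap(v.vmd, r.radar),
                   default=None)
        if best is not None and azimuth_gap(v.vmd, best.radar) <= merge_gate_deg:
            radar_only.remove(best)
            result.append(FusionTrack(vmd=v.vmd, radar=best.radar))
        else:
            result.append(v)  # stays incomplete, waits for a later match
    result.extend(radar_only)
    return result
```

Choosing a merge gate smaller than the split gate gives some hysteresis, so a pair sitting near the boundary does not merge and split on alternate updates.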

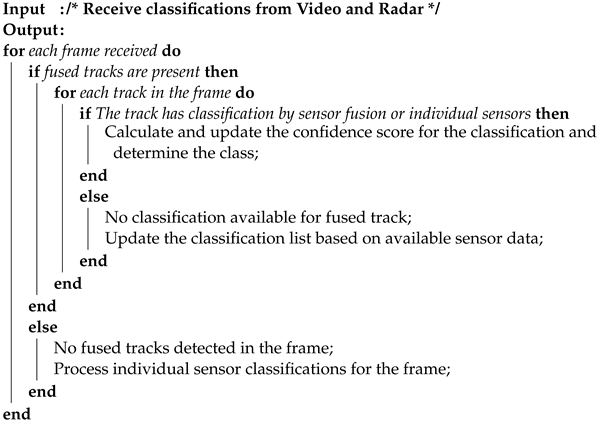

Algorithm 1: Classification Fusion Algorithm

4. Experimental Results

4.1. Dataset Used

4.2. Metrics

4.3. Test Setups

Camera Detection Test and Improvements

4.4. Achieved Results

- Tracks that approach each other and stay together will still be merged if a single blob is reported for many frames.

- During merging/splitting of three or more objects, it is very likely that the tracks will become unstable and will often switch between objects.

- The use of velocity-based or size-based filters still requires defining the geometry of the station relative to the observed area.

- The inclusion of shadow detection, when using the mixture of Gaussians (MOG) background subtractor [69], often causes the separation of objects into two or more detections. Currently, it is not clear how to join all such detections to a single track while still being able to resolve two separate tracks moving close to one another.

- Tuning the frame memory of the MOG background subtractor does not make it possible to cleanly remove periodic background movement (water, branches, etc.) while also avoiding false tracks from large-scale changes (changed lighting, slowly moving clouds).

- Left or added objects cannot be detected over long periods for the same reason: large-scale changes cause many false detections.
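The frame-memory trade-off in the last two items can be illustrated with a much simpler background model. The sketch below uses a single running Gaussian per pixel instead of a mixture of Gaussians, so it is a stand-in and not the MOG subtractor referred to above; the class name, the `history`, `k`, `init_var`, and `min_var` parameters, and the test frames are assumptions. In OpenCV, the corresponding knobs of `cv::BackgroundSubtractorMOG2` are its `history` and `detectShadows` settings.

```python
class RunningGaussianBackground:
    """Per-pixel running mean/variance background model (single Gaussian)."""

    def __init__(self, first_frame, history=50, k=2.5, init_var=16.0, min_var=4.0):
        self.alpha = 1.0 / history  # learning rate: shorter memory adapts faster
        self.k = k                  # foreground threshold in standard deviations
        self.min_var = min_var
        self.mean = [[float(v) for v in row] for row in first_frame]
        self.var = [[init_var for _ in row] for row in first_frame]

    def apply(self, frame):
        """Return a 0/1 foreground mask and update the model with the frame."""
        mask = []
        for y, row in enumerate(frame):
            mask_row = []
            for x, value in enumerate(row):
                d = value - self.mean[y][x]
                foreground = d * d > self.k ** 2 * self.var[y][x]
                mask_row.append(1 if foreground else 0)
                # Blind update: every pixel is absorbed into the background
                # over roughly `history` frames, whether foreground or not.
                self.mean[y][x] += self.alpha * d
                self.var[y][x] = max(
                    self.min_var,
                    (1.0 - self.alpha) * self.var[y][x] + self.alpha * d * d)
            mask.append(mask_row)
        return mask


def count_foreground_frames(frames, history):
    """Count frames (after the first) in which any pixel is foreground."""
    model = RunningGaussianBackground(frames[0], history=history)
    return sum(any(any(row) for row in model.apply(f)) for f in frames[1:])


# A one-pixel scene with a sudden, persistent brightness change (lighting).
frames = [[[100]]] * 5 + [[[130]]] * 40
print(count_foreground_frames(frames, history=5),
      count_foreground_frames(frames, history=100))
```

A short memory absorbs the lighting change within a frame or two, but by the same mechanism it would also absorb a slow or stationary intruder; a long memory keeps such an object visible while reporting the lighting change as foreground for longer.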

5. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MMW | Millimeter-wave |
| CNN | Convolutional neural network |
| GNN | Graph neural networks |
| MHT | Multiple hypothesis tracking |
| PHD | Probability hypothesis density |
| GM-PHD | Gaussian mixture PHD |
| CMOS | Complementary metal-oxide semiconductor |
| ROI | Region of interest |
| VMD | Visual motion detection |
| PD | Probability of detection |
| FAR | False alarm rate |
| MOG | Mixture of Gaussians |
| NN | Neural network |
| YOLO | You Only Look Once (deep learning object detection model) |
| PDA | Probabilistic data association |
| OSPA | Optimal subpattern assignment (metric penalizing localization and cardinality errors) |
| MOTP | Multiple object tracking precision |
| MOTA | Multiple object tracking accuracy |
| TIR | Thermal infrared |

Appendix A. Thermal Imaging Tracking and Localization

Appendix B. MMW Radar Tracking

- Millimeter-wave (MmWave) radar excels in providing precise measurements of location, velocity, and angle, all while maintaining interference-free performance.

- When compared to conventional radars operating at centimeter wavelengths, MmWave radar technology boasts superior antenna miniaturization capabilities.

- In civilian applications, MmWave radar systems often use frequencies at 24 GHz, 60 GHz, or 77 GHz for optimal performance.

Appendix C. Technical Details of the Implementation

- FrameID—Individual ID for each frame.

- Azimuth—Detected target azimuth from the sensor.

- Range—Detected target range from the sensor.

- Velocity—Detected target velocity.

- Signal—Detected target signal strength.
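The per-detection fields listed above map naturally onto a small record type. This is a sketch under assumptions: the units (degrees, meters, km/h), the convention that azimuth 0 points along the sensor boresight, and all names are illustrative and not taken from the implementation.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class RadarDetection:
    frame_id: int        # FrameID: individual ID of the frame
    azimuth_deg: float   # Azimuth of the target from the sensor (assumed degrees)
    range_m: float       # Range of the target from the sensor (assumed meters)
    velocity_kmh: float  # Velocity of the target (assumed km/h)
    signal: float        # Signal value reported for the target

    def to_cartesian(self) -> Tuple[float, float]:
        """Convert (range, azimuth) to sensor-centred x (right), y (forward)."""
        a = math.radians(self.azimuth_deg)
        return (self.range_m * math.sin(a), self.range_m * math.cos(a))


def group_by_frame(detections: List[RadarDetection]) -> Dict[int, List[RadarDetection]]:
    """Collect detections that belong to the same radar frame."""
    frames: Dict[int, List[RadarDetection]] = {}
    for det in detections:
        frames.setdefault(det.frame_id, []).append(det)
    return frames


detections = [
    RadarDetection(1, 0.0, 50.0, -4.0, 12.5),
    RadarDetection(1, 30.0, 20.0, 0.0, 3.1),
    RadarDetection(2, 0.5, 49.0, -4.0, 12.9),
]
for frame_id, dets in group_by_frame(detections).items():
    print(frame_id, [det.to_cartesian() for det in dets])
```

Grouping by frame keeps all detections of one scan together, which is the form a tracker update step typically consumes.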

Radar Calibration

References

- Roman, L.A.; Conway, T.M.; Eisenman, T.S.; Koeser, A.K.; Ordóñez Barona, C.; Locke, D.H.; Jenerette, G.D.; Östberg, J.; Vogt, J. Beyond ‘trees are good’: Disservices, management costs, and tradeoffs in urban forestry. Ambio 2021, 50, 615–630. [Google Scholar] [CrossRef] [PubMed]

- Esperon-Rodriguez, M.; Tjoelker, M.G.; Lenoir, J.; Baumgartner, J.B.; Beaumont, L.J.; Nipperess, D.A.; Power, S.A.; Richard, B.; Rymer, P.D.; Gallagher, R.V. Climate change increases global risk to urban forests. Nat. Clim. Chang. 2022, 12, 950–955. [Google Scholar] [CrossRef]

- Keefe, R.F.; Wempe, A.M.; Becker, R.M.; Zimbelman, E.G.; Nagler, E.S.; Gilbert, S.L.; Caudill, C.C. Positioning methods and the use of location and activity data in forests. Forests 2019, 10, 458. [Google Scholar] [CrossRef] [PubMed]

- Singh, R.; Gehlot, A.; Akram, S.V.; Thakur, A.K.; Buddhi, D.; Das, P.K. Forest 4.0: Digitalization of forest using the Internet of Things (IoT). J. King Saud. Univ. Comput. Inf. Sci. 2022, 34, 5587–5601. [Google Scholar] [CrossRef]

- Borges, P.; Peynot, T.; Liang, S.; Arain, B.; Wildie, M.; Minareci, M.; Lichman, S.; Samvedi, G.; Sa, I.; Hudson, N.; et al. A survey on terrain traversability analysis for autonomous ground vehicles: Methods, sensors, and challenges. Field Robot. 2022, 2, 1567–1627. [Google Scholar] [CrossRef]

- Blasch, E.; Pham, T.; Chong, C.Y.; Koch, W.; Leung, H.; Braines, D.; Abdelzaher, T. Machine learning/artificial intelligence for sensor data fusion–opportunities and challenges. IEEE Aerosp. Electron. Syst. Mag. 2021, 36, 80–93. [Google Scholar] [CrossRef]

- Fayyad, J.; Jaradat, M.A.; Gruyer, D.; Najjaran, H. Deep learning sensor fusion for autonomous vehicle perception and localization: A review. Sensors 2020, 20, 4220. [Google Scholar] [CrossRef]

- Ma, K.; Zhang, H.; Wang, R.; Zhang, Z. Target tracking system for multi-sensor data fusion. In Proceedings of the 2017 IEEE 2nd Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chengdu, China, 15–17 December 2017; pp. 1768–1772. [Google Scholar]

- Guimarães, N.; Pádua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry remote sensing from unmanned aerial vehicles: A review focusing on the data, processing and potentialities. Remote. Sens. 2020, 12, 1046. [Google Scholar] [CrossRef]

- Qiu, S.; Zhao, H.; Jiang, N.; Wang, Z.; Liu, L.; An, Y.; Zhao, H.; Miao, X.; Liu, R.; Fortino, G. Multi-sensor information fusion based on machine learning for real applications in human activity recognition: State-of-the-art and research challenges. Inf. Fusion 2022, 80, 241–265. [Google Scholar] [CrossRef]

- Rizi, M.H.P.; Seno, S.A.H. A systematic review of technologies and solutions to improve security and privacy protection of citizens in the smart city. Internet Things 2022, 20, 100584. [Google Scholar] [CrossRef]

- Elmustafa, S.A.A.; Mujtaba, E.Y. Internet of things in smart environment: Concept, applications, challenges, and future directions. World Sci. News 2019, 134, 1–51. [Google Scholar]

- Nobis, F.; Geisslinger, M.; Weber, M.; Betz, J.; Lienkamp, M. A deep learning-based radar and camera sensor fusion architecture for object detection. In 2019 Sensor Data Fusion: Trends, Solutions, Applications (SDF); IEEE: Piscataway, NJ, USA, 2019; pp. 1–7. [Google Scholar]

- Vo, B.N.; Mallick, M.; Bar-Shalom, Y.; Coraluppi, S.; Osborne, R.; Mahler, R.; Vo, B.T. Multitarget tracking. In Wiley Encyclopedia of Electrical and Electronics Engineering; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2015. [Google Scholar]

- Zhu, Y.; Wang, T.; Zhu, S. Adaptive Multi-Pedestrian Tracking by Multi-Sensor: Track-to-Track Fusion Using Monocular 3D Detection and MMW Radar. Remote Sens. 2022, 14, 1837. [Google Scholar] [CrossRef]

- Tan, M.; Chao, W.; Cheng, J.K.; Zhou, M.; Ma, Y.; Jiang, X.; Ge, J.; Yu, L.; Feng, L. Animal detection and classification from camera trap images using different mainstream object detection architectures. Animals 2022, 12, 1976. [Google Scholar] [CrossRef] [PubMed]

- Feichtenhofer, C.; Pinz, A.; Zisserman, A. Detect to Track and Track to Detect. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017. [Google Scholar] [CrossRef]

- Andriluka, M.; Roth, S.; Schiele, B. People-tracking-by-detection and people-detection-by-tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; Volume 14. [Google Scholar] [CrossRef]

- Zvonko, R. A study of a target tracking method using Global Nearest Neighbor algorithm. Vojnoteh. Glas. 2006, 54, 160–167. [Google Scholar]

- Thomas, F.; Bar-Shalom, Y.; Scheffe, M. Sonar tracking of multiple targets using joint probabilistic data association. IEEE J. Ocean. Eng. 1983, 8, 173–184. [Google Scholar] [CrossRef]

- Reid, D. An algorithm for tracking multiple targets. IEEE Trans. Autom. Control. 1979, 24, 843–854. [Google Scholar] [CrossRef]

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45. [Google Scholar] [CrossRef]

- Julier, S.J.; Uhlmann, J.K. New extension of the Kalman filter to nonlinear systems. Signal Process. Sens. Fusion Target Recognit. VI 1997, 3068, 182–193. [Google Scholar]

- Welch, G.; Bishop, G. An Introduction to the Kalman Filter; Department of Computer Science, University of North Carolina: Chapel Hill, NC, USA, 1999. [Google Scholar]

- Blackman, S.S.; Popoli, R. Design and Analysis of Modern Tracking Systems; Artech House: Norwood, MA, USA, 1999. [Google Scholar]

- Blackman, S. Multiple hypothesis tracking for multiple target tracking. IEEE Trans. Aerosp. Electron. Syst. 2004, 19, 5–18. [Google Scholar] [CrossRef]

- Mahler, R. Multitarget Bayes Filtering via First-Order Multitarget Moments. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 1152–1178. [Google Scholar] [CrossRef]

- Lahoz-Monfort, J.J.; Magrath, M.J. A comprehensive overview of technologies for species and habitat monitoring and conservation. BioScience 2021, 71, 1038–1062. [Google Scholar] [CrossRef] [PubMed]

- Yang, J.; Wang, C.; Jiang, B.; Song, H.; Meng, Q. Visual perception enabled industry intelligence: State of the art, challenges and prospects. IEEE Trans. Ind. Inform. 2020, 17, 2204–2219. [Google Scholar] [CrossRef]

- Adaval, R.; Saluja, G.; Jiang, Y. Seeing and thinking in pictures: A review of visual information processing. Consum. Psychol. Rev. 2019, 2, 50–69. [Google Scholar] [CrossRef]

- Kahmen, O.; Rofallski, R.; Luhmann, T. Impact of stereo camera calibration to object accuracy in multimedia photogrammetry. Remote. Sens. 2020, 12, 2057. [Google Scholar] [CrossRef]

- Garg, R.; Wadhwa, N.; Ansari, S.; Barron, J.T. Learning single camera depth estimation using dual-pixels. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 2 October–2 November 2019; pp. 7628–7637. [Google Scholar]

- Hu, J.; Zhang, Y.; Okatani, T. Visualization of convolutional neural networks for monocular depth estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 2 October–2 November 2019; pp. 3869–3878. [Google Scholar]

- Arnold, E.; Al-Jarrah, O.Y.; Dianati, M.; Fallah, S.; Oxtoby, D.; Mouzakitis, A. A Survey on 3D Object Detection Methods for Autonomous Driving Applications. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3782–3795. [Google Scholar] [CrossRef]

- Qian, R.; Lai, X.L.X. 3D Object Detection for Autonomous Driving. A Survey. Pattern Recognit. 2021, 39, 1152–1178. [Google Scholar] [CrossRef]

- Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Perception and sensing for autonomous vehicles under adverse weather conditions: A survey. ISPRS J. Photogramm. Remote. Sens. 2023, 196, 146–177. [Google Scholar] [CrossRef]

- Jha, U.S. The millimeter Wave (mmW) radar characterization, testing, verification challenges and opportunities. In Proceedings of the 2018 IEEE Autotestcon, National Harbor, MD, USA, 17–20 September 2018; pp. 1–5. [Google Scholar]

- Katkevičius, A.; Plonis, D.; Damaševičius, R.; Maskeliūnas, R. Trends of microwave devices design based on artificial neural networks: A review. Electronics 2022, 11, 2360. [Google Scholar] [CrossRef]

- Plonis, D.; Katkevičius, A.; Gurskas, A.; Urbanavičius, V.; Maskeliūnas, R.; Damaševičius, R. Prediction of meander delay system parameters for internet-of-things devices using pareto-optimal artificial neural network and multiple linear regression. IEEE Access 2020, 8, 39525–39535. [Google Scholar] [CrossRef]

- van Berlo, B.; Elkelany, A.; Ozcelebi, T.; Meratnia, N. Millimeter Wave Sensing: A Review of Application Pipelines and Building Blocks. IEEE Sens. J. 2021, 8, 10332–10368. [Google Scholar] [CrossRef]

- Hurl, B.; Czarnecki, K.; Waslander, S. Precise synthetic image and lidar (presil) dataset for autonomous vehicle perception. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 2522–2529. [Google Scholar]

- Ilci, V.; Toth, C. High definition 3D map creation using GNSS/IMU/LiDAR sensor integration to support autonomous vehicle navigation. Sensors 2020, 20, 899. [Google Scholar] [CrossRef] [PubMed]

- Raj, T.; Hanim Hashim, F.; Baseri Huddin, A.; Ibrahim, M.F.; Hussain, A. A survey on LiDAR scanning mechanisms. Electronics 2020, 9, 741. [Google Scholar] [CrossRef]

- Wang, Z.; Wu, Y.; Niu, Q. Multi-Sensor Fusion in Automated Driving: A Survey. IEEE Sens. J. 2019, 21, 2847–2868. [Google Scholar] [CrossRef]

- Buchman, D.; Drozdov, M.; Mackute-Varoneckiene, A.; Krilavicius, T. Visual and Radar Sensor Fusion for Perimeter Protection and Homeland Security on Edge. In Proceedings of the IVUS 2020: Information Society and University Studies, Kaunas, Lithuania, 23 April 2020; Volume 21. [Google Scholar]

- Zhao, X.; Sun, P.; Xu, Z.; Min, H.; Yu, H. Fusion of 3D LIDAR and Camera Data for Object Detection in Autonomous Vehicle Applications. IEEE Sens. J. 2020, 20, 4901–4913. [Google Scholar] [CrossRef]

- Samal, K.; Kumawat, H.; Saha, P.; Wolf, M.; Mukhopadhyay, S. Task-Driven RGB-Lidar Fusion for Object Tracking in Resource-Efficient Autonomous System. In Proceedings of the IVUS 2020: Information Society and University Studies, Kaunas, Lithuania, 23 April 2020; Volume 7, pp. 102–112. [Google Scholar]

- Varone, G.; Boulila, W.; Driss, M.; Kumari, S.; Khan, M.K.; Gadekallu, T.R.; Hussain, A. Finger pinching and imagination classification: A fusion of CNN architectures for IoMT-enabled BCI applications. Inf. Fusion 2024, 101, 102006. [Google Scholar] [CrossRef]

- Lee, K.H.; Kanzawa, Y.; Derry, M.; James, M.R. Multi-Target Track-to-Track Fusion Based on Permutation Matrix Track Association. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018. [Google Scholar]

- Kong, L.; Peng, X.; Chen, Y.; Wang, P.; Xu, M. Multi-sensor measurement and data fusion technology for manufacturing process monitoring: A literature review. Int. J. Extrem. Manuf. 2020, 2, 022001. [Google Scholar] [CrossRef]

- Nweke, H.F.; Teh, Y.W.; Mujtaba, G.; Al-Garadi, M.A. Data fusion and multiple classifier systems for human activity detection and health monitoring: Review and open research directions. Inf. Fusion 2019, 46, 147–170. [Google Scholar] [CrossRef]

- El Madawi, K.; Rashed, H.; El Sallab, A.; Nasr, O.; Kamel, H.; Yogamani, S. Rgb and lidar fusion based 3d semantic segmentation for autonomous driving. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 7–12. [Google Scholar]

- Nabati, R.; Qi, H. CenterFusion: Center-based Radar and Camera Fusion for 3D Object Detection. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Virtual, 5–9 January 2021. [Google Scholar]

- Wang, X.; Xu, L.; Sun, H.; Xin, J.; Zheng, N. On-Road Vehicle Detection and Tracking Using MMW Radar and Monovision Fusion. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2075–2084. [Google Scholar] [CrossRef]

- Chang, S.; Zhang, Y.; Zhang, F.; Zhao, X.; Huang, S.; Feng, Z.; Wei, Z. Spatial Attention Fusion for Obstacle Detection Using MmWave Radar and Vision Sensor. Sensors 2020, 20, 956. [Google Scholar] [CrossRef]

- Zhang, W.; Zhou, H.; Sun, S.; Wang, Z.; Shi, J.; Loy, C.C. Robust Multi-Modality Multi-Object Tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2020. [Google Scholar]

- Wang, Z.; Miao, X.; Huang, Z.; Luo, H. Research of Target Detection and Classification Techniques Using Millimeter-Wave Radar and Vision Sensors. Remote Sens. 2021, 13, 1064. [Google Scholar] [CrossRef]

- Cao, R.Z.S. Extending Reliability of mmWave Radar Tracking and Detection via Fusion With Camera. IEEE Access 2021, 7, 137065–137079. [Google Scholar]

- Kim, H.S.W.C.H. Robust Vision-Based Relative-Localization Approach Using an RGB-Depth Camera and LiDAR Sensor Fusion. IEEE Trans. Ind. Electron. 2016, 63, 3725–3736. [Google Scholar]

- Texas Instruments. Tracking Radar Targets with Multiple Reflection Points; Texas Instruments: Dallas, TX, USA, 2018; Available online: https://dev.ti.com/tirex/explore/content/mmwave_industrial_toolbox_3_2_0/labs/lab0013_traffic_monitoring_16xx/src/mss/gtrack/docs/Tracking_radar_targets_with_multiple_reflection_points.pdf (accessed on 2 October 2023).

- Kirubarajan, T.; Bar-Shalom, Y.; Blair, W.D.; Watson, G.A. IMMPDA solution to benchmark for radar resource allocation and tracking in the presence of ECM. IEEE Trans. Aerosp. Electron. Syst. 1998, 34, 1023–1036. [Google Scholar] [CrossRef]

- Otto, C.; Gerber, W.; León, F.P.; Wirnitzer, J. A Joint Integrated Probabilistic Data Association Filter for pedestrian tracking across blind regions using monocular camera and radar. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium, Alcala de Henares, Spain, 3–7 June 2012. [Google Scholar]

- Svensson, L.; Granström, K. Multiple Object Tracking. Available online: https://www.youtube.com/channel/UCa2-fpj6AV8T6JK1uTRuFpw (accessed on 15 October 2019).

- Shi, X.; Yang, F.; Tong, F.; Lian, H. A comprehensive performance metric for evaluation of multi-target tracking algorithms. In Proceedings of the 2017 3rd International Conference on Information Management (ICIM), Chengdu, China, 21–23 April 2017; pp. 373–377. [Google Scholar]

- Weng, X.; Wang, J.; Held, D.; Kitani, K. 3d multi-object tracking: A baseline and new evaluation metrics. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10359–10366. [Google Scholar]

- Texas Instruments. IWR6843ISK. Available online: https://www.ti.com.cn/tool/IWR6843ISK (accessed on 2 October 2023).

- Opgal. 2023. Available online: https://www.opgal.com/products/sii-uc-uncooled-thermal-core/ (accessed on 2 October 2023).

- iSYS-5020 Radarsystem for Security Applications. Available online: https://www.innosent.de/en/radar-systems/isys-5020-radar-system/ (accessed on 2 October 2023).

- Open Source Computer Vision. cv::BackgroundSubtractorMOG2 Class Reference. Available online: https://docs.opencv.org/4.1.0/d7/d7b/classcv_1_1BackgroundSubtractorMOG2.html (accessed on 2 October 2023).

- Buchman, D.; Drozdov, M.; Krilavičius, T.; Maskeliūnas, R.; Damaševičius, R. Pedestrian and Animal Recognition Using Doppler Radar Signature and Deep Learning. Sensors 2022, 22, 3456. [Google Scholar] [CrossRef]

- Liu, Q.; Li, X.; He, Z.; Li, C.; Li, J.; Zhou, Z.; Yuan, D.; Li, J.; Yang, K.; Fan, N.; et al. LSOTB-TIR: A Large-Scale High-Diversity Thermal Infrared Object Tracking Benchmark. In Proceedings of the 28th ACM International Conference on Multimedia (MM ’20), Seattle, WA, USA, 12–16 October 2020. [Google Scholar] [CrossRef]

- Banuls, A.; Mandow, A.; Vázquez-Martín, R.; Morales, J.; García-Cerezo, A. Object Detection from Thermal Infrared and Visible Light Cameras in Search and Rescue Scenes. In Proceedings of the 2020 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR) 2020, Abu Dhabi, United Arab Emirates, 4–6 November 2020. [Google Scholar] [CrossRef]

- Haseeb, M.A.; Ristić-Durrant, D.; Gräser, A. Long-range obstacle detection from a monocular camera. In Proceedings of the 9th International Conference on Circuits, Systems, Control, Signals (CSCS18), Sliema, Malta, 22–24 June 2018. [Google Scholar]

- Huang, K.C.; Wu, T.H.; Su, H.T.; Hsu, W.H. MonoDTR: Monocular 3D Object Detection with Depth-Aware Transformer. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022. [Google Scholar]

- Texas Instruments. Tracking Radar Targets with Multiple Reflection Points; Texas Instruments: Dallas, TX, USA, 2021; Available online: https://dev.ti.com/tirex/explore/node?node=A__AAylBLCUuYnsMFERA.sL8g__com.ti.mmwave_industrial_toolbox__VLyFKFf__LATEST (accessed on 2 October 2023).

| | Daylight Camera | Thermal Camera | Radar | Lidar |
|---|---|---|---|---|
| Angular Resolution | | | | |
| Depth Resolution | | | | |
| Velocity | | | | |
| Depth Range | | | | |
| Traffic Signs | | | | |
| Object Edge Precision | | | | |
| Lane Detection | | | | |
| Color Recognition | | | | |
| Adverse Weather | | | | |
| Low-Light Performance | | | | |
| Cost | | | | |

| Property | Value (IWR6843ISK) | Value (iSYS-5020) |
|---|---|---|
| Operating frequency | 62 GHz | 24 GHz |
| Bandwidth | 1768.66 MHz | 250 MHz |
| Chirp time | 32.0 µs | |
| Range resolution | 0.15 m | 2 m |
| Speed resolution | 0.4 km/h | 0.5 km/h |
| Detection range | up to 100 m | up to 200 m |
| Detection speed | −50–50 km/h | −100–100 km/h |
| Detection angle | 48 degrees (azimuth) | 75 degrees (azimuth) |

| Property | Value |
|---|---|
| Sensor resolution | 640 × 480 |
| Sensor type | Uncooled bolometer |
| FOV at 35 mm focal length | Horizontal: 17 degrees, Vertical: 13 degrees, Diagonal: 20.9 degrees |
| FOV at 8.5 mm focal length | Horizontal: 73 degrees, Vertical: 54 degrees, Diagonal: 93 degrees |

| | Approaching | Meeting/Crossing | Complex Environment |
|---|---|---|---|
| COTS tracker | | | |
| Track detection distance | 77.9092 | 58.8766 | 95.847 |
| Average object count accuracy | 0.817647 | 0.880142 | 0.511081 |
| False alarm rate | 0 | 0.0247525 | 0.878553 |
| Average OSPA, (c = 1) | 0.388855 | 0.495837 | 2.18644 |
| Average OSPA, (c = 10) | 1.91344 | 2.20029 | 10.6034 |
| Average OSPA, (c = 50) | 8.6894 | 9.69494 | 51.6263 |
| Own tracker | | | |
| Track detection distance | 92.5097 | 75.8336 | 126.063 |
| Average object count accuracy | 0.905882 | 0.916934 | 0.900298 |
| False alarm rate | 0 | 0.00495049 | 0.108527 |
| Average OSPA, (c = 1) | 0.325851 | 0.363873 | 0.484733 |
| Average OSPA, (c = 10) | 1.1516 | 1.59612 | 2.14556 |
| Average OSPA, (c = 50) | 4.64887 | 6.77777 | 9.27821 |
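For readers unfamiliar with the OSPA rows above, the following sketch computes the standard optimal subpattern assignment distance between a set of true positions and a set of estimated positions for a given cutoff c. It is not the evaluation code behind the table: the order p = 1, the Euclidean base distance, and the brute-force assignment are assumptions. Some reported values exceed their cutoff, which the standard per-frame definition does not allow, so the table evidently uses a normalization or aggregation that this sketch does not reproduce.

```python
import math
from itertools import permutations


def ospa(truth, estimate, c=10.0, p=1.0):
    """Standard OSPA distance between two finite sets of points.

    truth, estimate: lists of coordinate tuples of equal dimension.
    c: cutoff, the penalty assigned to each unmatched (missed or false) point.
    p: order of the metric.
    The assignment is found by brute force, so use this only for small sets.
    """
    if not truth and not estimate:
        return 0.0
    small, large = ((truth, estimate) if len(truth) <= len(estimate)
                    else (estimate, truth))
    m, n = len(small), len(large)
    # Best assignment of every point in the smaller set to a distinct point
    # in the larger set, with each distance clipped at the cutoff c.
    best = min(
        sum(min(math.dist(s, large[j]), c) ** p for s, j in zip(small, perm))
        for perm in permutations(range(n), m)
    )
    # Each of the n - m unassigned points costs the full cutoff.
    return ((best + (c ** p) * (n - m)) / n) ** (1.0 / p)


truth = [(0.0, 0.0), (10.0, 0.0)]
estimate = [(0.0, 1.0), (10.0, 0.0)]
print(ospa(truth, estimate, c=10.0))        # localization error only
print(ospa(truth, estimate[:1], c=10.0))    # one target missed
```

A small cutoff makes the metric mostly sensitive to localization error, while a large cutoff makes missed and false tracks dominate, which is one reason to report several values of c side by side.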

| Testing Scenario | Event Duration, s | Detection Duration, s | Detection Duration by Competition, s | Event Type |
|---|---|---|---|---|
| 1 | 14 | 6 | 11 (7) | Person approaching |
| 2 | 17 | 0 | 4 (0) | Person approaching, Was tracked from previous event |
| 3 | 9 | 2 | not detected | Person approaching and hiding |
| 4 | 18 | 5 | 7 (6) | Person approaching |
| 5 | 22 | 2.5 | 2 (2) | Person receding and hiding |
| 6 | 9 | 2 | not detected | Person receding from behind obstacle, Lost and retracked |
| 7 | 19 | 2 | 2 (2) | Person receding and hiding |
| 8 | 17 | 2 | not detected | Car sideways, Very far |
| 9 | 9 | 3 | not detected | Person receding from behind obstacle |
| 10 | 6 | 4 | not detected | Car sideways, Was part of the background |
| 11 | 18 | 4 | not detected | Car sideways, Was part of the background |
| 12 | 10 | 7 | not detected (5) | Group approaching, Event end after the group split |
| 13 | 60 | 2 | not detected | Car, Sideways |
| 14 | 33 | 3 | 5 (5) | Bus, Sideways |
| 15 | 41 | 6 | 6 (6) | Person, Along perimeter, partially covered |
| 16 | 32 | 8 | 8 (8) | Person, Along perimeter, partially covered |
| 17 | 71 | 3.5 | 3 (2) | Person, Receding |
| 18 | 8 | 2 | not detected | Car, Sideways, very far |
| 19 | 16 | 5 | 7 (5) | Person, Receding from previously stationary position |
| 20 | 75 | 2 | 2.5 (1.5) | Person, Receding, was tracked while stationary |
| 21 | 35 | 2.5 | 5 (3) | Car, Sideways, very far |
| 22 | 20 | 0 | 0 (0) | Person, Was tracked from previous event |

| | Radar | VMD | |
|---|---|---|---|
| Average object count accuracy | 90% | 96% | 98.8% |
| False alarm rate (per day) | 15 | 30 | 0.5 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Buchman, D.; Krilavičius, T.; Maskeliūnas, R. Enhancing Forest Security through Advanced Surveillance Applications. Forests 2023, 14, 2335. https://doi.org/10.3390/f14122335
