1. Introduction

As of 2022, China has a forest area of 231 million hectares, with a forest cover of 24.02% [1]. In the past five years, there have been 7301 forest fires in China. Forests, known as the lungs of the earth, are of vital importance to the earth’s ecology. They maintain ecological stability by purifying harmful gases and releasing the oxygen necessary for the survival of plants and animals. In addition, forests regulate temperature, keep soil erosion at bay, and maintain the diversity of biological species. Forest fire is a worldwide forestry disaster and the first of the three major forest natural disasters. Once a forest fire occurs, it causes huge environmental and economic losses. In China, forest fires are the main forestry disaster, causing loss of forest resources, damage to forest ecosystems, and personal injury, and forest fire prevention is an important part of the country’s disaster prevention and mitigation work [2]. It is therefore of great importance to guarantee the safety of forest resources, ecological safety, and people’s lives and property.

China has formed a four-level, three-dimensional forest fire monitoring system that includes ground patrol, near-ground monitoring, aerial patrol, and satellite monitoring. Ground patrol relies mainly on forest rangers and forest fire prevention professionals inspecting around the clock; such manual inspection suffers from heavy workloads, poor objectivity, and low efficiency. Near-ground monitoring includes watchtower detection and remote video detection, which require watchmen and monitoring personnel to use observation equipment for monitoring and analysis [3]. It demands a high technical level from monitoring personnel and easily produces monitoring blind spots. Aerial patrol mainly uses airplanes and drones to carry out high-altitude patrols and is mostly used in sparsely populated primitive forests. However, patrolling is costly, and large areas cannot be monitored continuously for long periods [4]. Satellite early warning monitors heat sources on the ground through artificial satellites, but it is disturbed by factors such as high temperature, bare ground, and strong reflectors, resulting in misjudged and missed fire points [5].

Zheng et al. improved the dynamic convolutional neural network and designed a forest fire prediction model that can accurately identify and classify forest fire risk under natural light [6]. Yang et al. used support vector machines for forest fire detection [7]. S. Sudhakar proposed a UAV-based forest fire detection method with improved recognition algorithms suited to UAVs to reduce false alarms [8]. Yoojin Kang et al. presented a forest fire detection model using geosynchronous satellite data that effectively reduces detection delays and false alarms [9]. Ding proposed a new color space for flame recognition that reduces the false alarm rate [10]. Zhao et al. proposed a method for detecting small forest fire targets that improves the accuracy of recognizing such fires [11]. Noureddine Moussa et al. proposed a novel routing protocol that makes wireless sensor networks for forest fire detection more energy-efficient and reliable [12]. Zhang et al. developed a wireless sensor network for forest fire detection that combines satellite monitoring, aerial patrol, and ground monitoring [13].

With the rapid development and wide adoption of computer vision, image processing, and artificial intelligence, forest fire identification based on video images has attracted a lot of attention. Its development follows two main directions. The first segments the background of the image, manually extracts features with a hand-designed algorithm, and sends them to a classifier for recognition [14]. The second is image recognition based on deep learning, which feeds the image into a convolutional neural network that automatically extracts features to recognize the image [15,16,17,18,19]. In recent years, many researchers have applied deep learning to forest fire detection. Sathishkumar et al. proposed a deep learning method that detects forest fires using learning without forgetting [20]. Chen et al. used an adaptive copy–paste data augmentation method and a dice loss to improve the semantic segmentation of forest flames [21]. Compared with traditional fire monitoring methods, forest fire monitoring based on image recognition has a wide range of applications, large monitoring areas, lower cost, and higher accuracy. Although these studies have made good progress, there is still room for improvement in model performance. At present, forest fire identification often uses only a single model, which has a high misidentification rate in complex environments, so the accuracy still needs to be improved.

High-precision detection of small-target forest fires remains a major challenge in forest fire detection. Many scholars have worked to reduce the missed and false detections of such fires, and a number of models have been designed specifically for small targets. However, small-target and large-target forest fires differ markedly in both spatial extent and characteristics [22]: large-target forest fires tend to have a large flame area, and their features are relatively easy to capture in images or remote sensing data, whereas small-target forest fires usually have a small flame area and weak features. Detection models designed for small-target forest fires may capture the details and features of small targets well but degrade on large targets, because the model may focus too much on the details of small targets and ignore the overall characteristics of large targets. Conversely, models designed for large-target forest fires are prone to missing small-target forest fires. In summary, current forest fire detection still lacks a model that comprehensively considers targets of different scales, and it is difficult to detect both large and small targets with high precision at the same time. In addition, current forest fire identification often uses a single model, which tends to misclassify fires in complex forest environments, so the accuracy needs to be improved. Therefore, this paper designs a forest fire detection model based on integrated learning that adaptively learns and extracts forest fire features. The primary contributions of this paper are outlined as follows:

(1) This paper designs the WSS model, which is good at large-target forest fire detection, and the WSB model, which is good at small-target forest fire detection, and integrates the two with the Weighted Boxes Fusion (WBF) method to obtain a more efficient forest fire detection model, WSB_WSS. The integrated model gives full play to the advantages of both models and is more robust and generalizes better than a single model.

(2) A multi-scale forest fire detection method is designed to handle the obvious difference in scale between large and small targets. Bidirectional connections and cross-layer feature fusion enhance the information transfer and feature expression ability of the network. The introduction of SPPFCSPC allows the model to acquire spatial information at different scales, thus improving the perception of targets of different sizes.

(3) To improve the model’s robustness to noise, occlusion, and scale changes, a new bounding box loss function, WFIoU Loss, is designed in this paper, which improves both the training speed of the model and the accuracy of forest fire detection.

(4) To strengthen the model’s focus on forest fires, this study introduces both the SimAM attention mechanism and the SE attention mechanism, which suppress unimportant features and help the model capture features related to forest fires more accurately.

3. Methods

3.1. The Models Proposed in This Paper—WSB and WSS

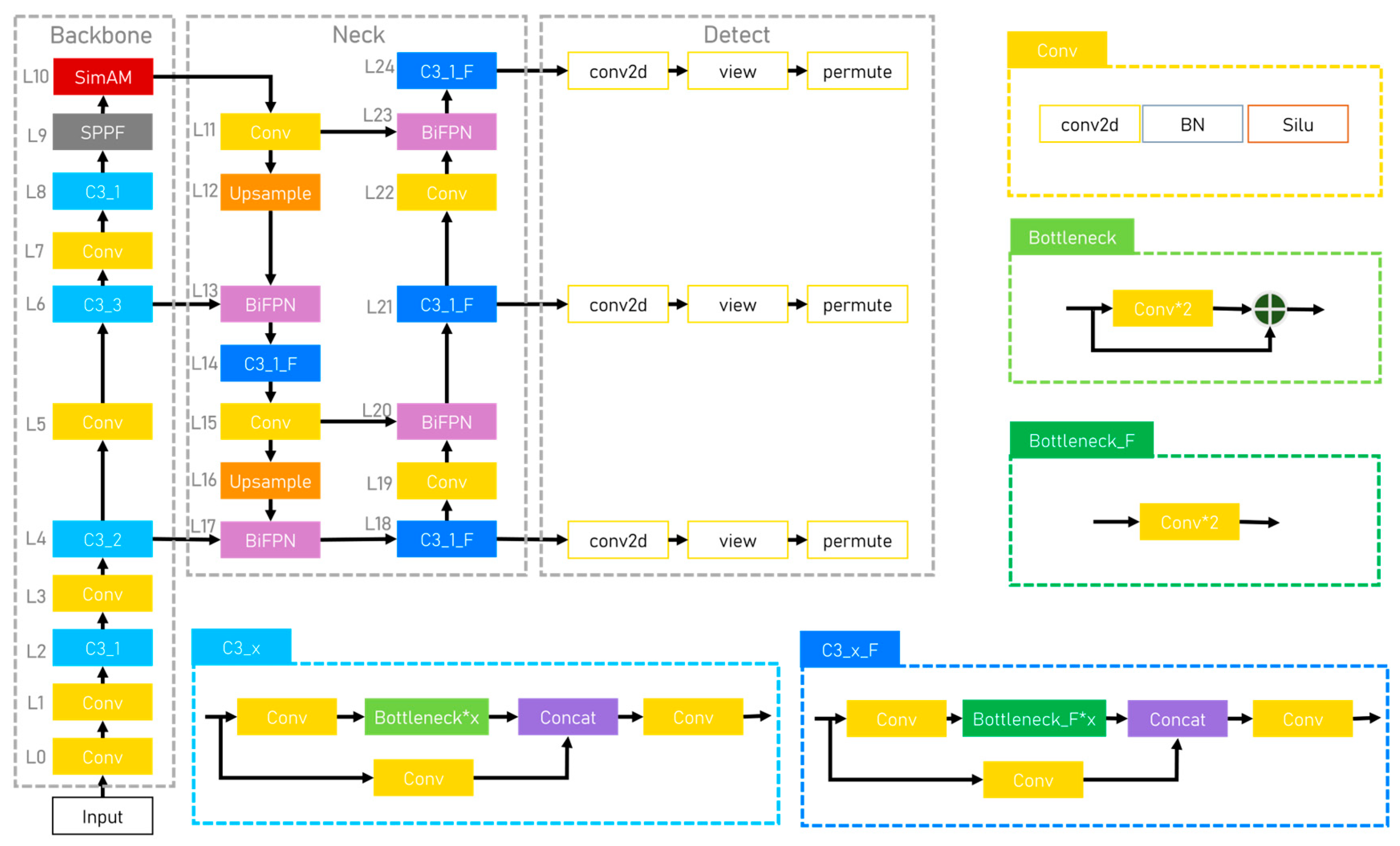

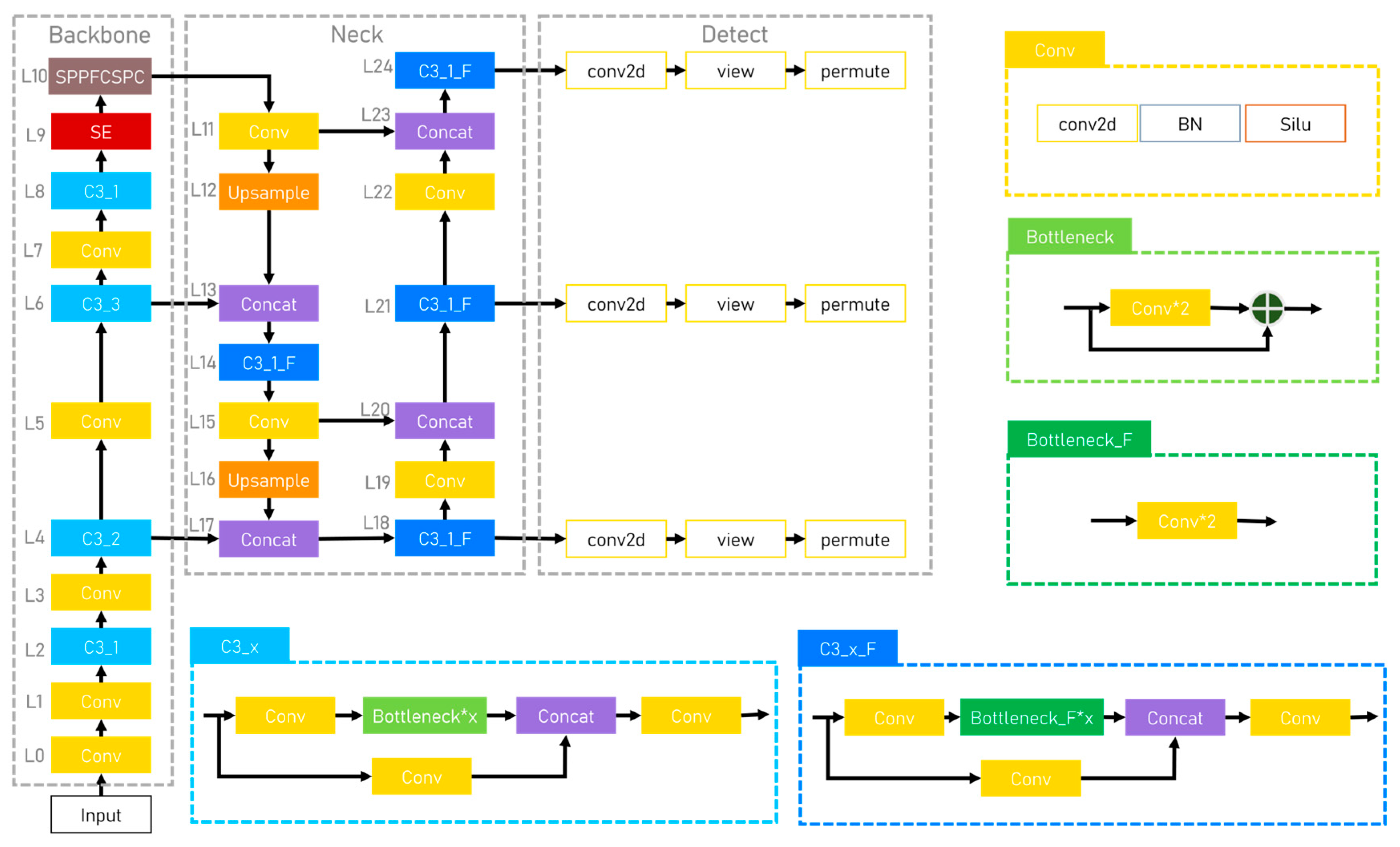

In this paper, two forest fire detection models are designed: WSB and WSS.

Both models are composed of four parts: Input, Backbone, Neck, and Detect. The Input and Detect parts of WSB and WSS are exactly the same, and there are slight differences in the Backbone and Neck. The network structure diagrams of the two models are shown in Figure 3 and Figure 4.

The Input module mainly applies adaptive image scaling, Mosaic data augmentation, and adaptive anchor box calculation to the images.

(1) Adaptive image scaling

In traditional target detection methods, images are usually scaled to a fixed size, which may compress or stretch the target and reduce detection accuracy. To solve this problem, this paper performs adaptive scaling based on the actual size of the target. Specifically, for each training sample, the Input module scales the image to an appropriate size based on the maximum edge length of the target in it. This keeps the shape of forest fire targets unchanged and improves the accuracy of forest fire target detection.
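As a rough illustration of aspect-preserving scaling, the sketch below computes the resized shape and the padding for one image, and maps a label box into the scaled image. It follows the letterbox convention common in YOLO-style detectors rather than the exact rule used in this paper; the function names `letterbox_shape` and `scale_box`, the 640-pixel target size, and the stride of 32 are assumptions made for the example.

```python
def letterbox_shape(width, height, target=640, stride=32):
    """Return (new_w, new_h, pad_w, pad_h) for an aspect-preserving resize.

    Assumed convention: the longer image side is scaled to `target`, and the
    shorter side is padded only up to the next multiple of `stride`.
    """
    scale = min(target / width, target / height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_w = (target - new_w) % stride
    pad_h = (target - new_h) % stride
    return new_w, new_h, pad_w, pad_h


def scale_box(box, width, height, target=640, stride=32):
    """Map an (x1, y1, x2, y2) box from the original image to the padded one."""
    new_w, new_h, pad_w, pad_h = letterbox_shape(width, height, target, stride)
    sx, sy = new_w / width, new_h / height
    dx, dy = pad_w / 2, pad_h / 2  # padding is split evenly on both sides
    x1, y1, x2, y2 = box
    return (x1 * sx + dx, y1 * sy + dy, x2 * sx + dx, y2 * sy + dy)
```

Because width and height share one scale factor, a flame region keeps its aspect ratio, and only a thin border of padding is added instead of stretching the image to a square.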

(2) Mosaic data augmentation

After adaptive image scaling, for each training sample, Mosaic data augmentation randomly selects four images and splices them together according to certain rules to form one large image. At the same time, the corresponding labels are adjusted according to the position of each image in the splice.
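The label adjustment in this step can be sketched as follows. This is a deliberately simplified version that assumes each selected image has already been resized to a square tile and is placed in a fixed quadrant of a 2 × 2 canvas; practical Mosaic implementations usually pick a random splice center and crop the tiles. The function name `mosaic_labels` and the `(name, boxes)` sample layout are hypothetical.

```python
import random


def mosaic_labels(samples, tile=320, seed=None):
    """Pick four samples and shift their boxes into a 2x2 mosaic canvas.

    samples: list of (name, boxes), boxes as (x1, y1, x2, y2) in tile pixels.
    Returns the chosen names and the merged boxes in canvas coordinates.
    """
    rng = random.Random(seed)
    chosen = rng.sample(samples, 4)
    # Top-left corner of each quadrant on the (2 * tile) x (2 * tile) canvas.
    offsets = [(0, 0), (tile, 0), (0, tile), (tile, tile)]
    merged = []
    for (_, boxes), (dx, dy) in zip(chosen, offsets):
        for x1, y1, x2, y2 in boxes:
            merged.append((x1 + dx, y1 + dy, x2 + dx, y2 + dy))
    return [name for name, _ in chosen], merged
```

Each box is translated by the offset of the quadrant its image lands in, which is the label adjustment described above.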

(3) Adaptive anchor box calculation

In the target detection task, anchor boxes are used to define the position and size of the target. Traditional target detection methods usually need a set of anchor boxes with fixed sizes and scales to be specified in advance. This paper adopts an adaptive anchor box calculation that clusters the target boxes in the training set to obtain a set of optimal anchor box sizes. This makes the anchor boxes match the true size distribution of the targets more closely, thus improving the accuracy of target detection.
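A minimal sketch of anchor clustering is given below. The paper does not name the clustering algorithm, so the example assumes k-means over box widths and heights with IoU as the similarity measure, a common choice for YOLO-style detectors; the names `wh_iou` and `kmeans_anchors` and the deterministic initialization are illustrative only.

```python
def wh_iou(a, b):
    """IoU of two boxes given as (width, height) and aligned at one corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(boxes, k, iters=50):
    """Cluster (width, height) pairs into k anchor sizes, sorted by area."""
    boxes = sorted(boxes, key=lambda wh: wh[0] * wh[1])
    # Deterministic start: centers spread evenly over the area-sorted boxes.
    step = len(boxes) / k
    centers = [boxes[int(i * step + step / 2)] for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            best = max(range(k), key=lambda j: wh_iou(box, centers[j]))
            clusters[best].append(box)
        new_centers = []
        for j, members in enumerate(clusters):
            if members:
                new_centers.append((sum(w for w, _ in members) / len(members),
                                    sum(h for _, h in members) / len(members)))
            else:
                new_centers.append(centers[j])  # keep an empty cluster's center
        if new_centers == centers:
            break
        centers = new_centers
    return sorted(centers, key=lambda wh: wh[0] * wh[1])
```

Using IoU instead of Euclidean distance keeps large boxes from dominating the clustering, so small flame targets still receive an anchor of their own size.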

The Backbone is the most important part of the network and is responsible for extracting high-level semantic features from the input image. In both the WSB and WSS models, the first eight layers of the Backbone are a series of C3_X modules and convolutional modules; only the last two layers differ. In WSB, the penultimate layer is Spatial Pyramid Pooling-Fast (SPPF), and the SimAM attention mechanism (Section 3.2) is added as the last layer of the Backbone, which makes the extraction of image features more accurate. In WSS, SE (Section 3.3) is added as the penultimate layer of the Backbone, which allows the model to pay more attention to the most informative forest fire features and suppress unimportant ones, and SPPFCSPC (Section 3.4) is used as the last layer, which enables the network to extract forest fire features more efficiently.

The Neck of the WSS model consists of a series of convolution, up-sampling, C3_X_F, and Concat layers. First, through up-sampling, the feature information extracted at the higher levels of the network is fused with the lower-level features in a top–down manner. Then, the lower-level features are fused with the higher-level feature information in a bottom–up manner, which makes full use of both the semantic information at the higher levels and the detailed information at the lower levels for better feature fusion of the forest fire target. The Neck of the WSB model builds on that of the WSS model and replaces Concat with a weighted Bi-directional Feature Pyramid Network (BiFPN) (Section 3.5), which assigns a different weight to each input according to the importance of its features to achieve more effective feature fusion.

The WSS and WSB models have the same Detect module. Its input comes from three feature maps extracted from layers 18, 21, and 24, which have different resolutions and semantic information: the lower-layer feature maps have higher spatial resolution, and the higher-layer feature maps have richer semantic information. For each feature map, the model uses anchor boxes to generate a set of candidate boxes. For each anchor box, the model calculates the confidence that it contains the target based on the predictions on the feature map. It also predicts the bounding box offsets and the class probability of the target. These predictions are computed and adjusted by a set of convolutional and fully connected layers. To remove redundant detections, this paper uses the Non-Maximum Suppression (NMS) algorithm, which traverses the candidate boxes and eliminates a candidate when its overlap with an already retained box exceeds a set threshold. Finally, for the retained candidate boxes, the model selects the most likely category based on the class probability and adjusts the position and size of the boxes using the bounding box regression trained with the WFIoU Loss (Section 3.6) designed in this paper, to localize forest fire targets more accurately.
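The NMS step described above can be sketched in a few lines. This is a generic greedy NMS for a single class, not the exact routine used in the WSB and WSS implementations; the function names and the 0.5 threshold are assumptions for the example.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    inter = max(0.0, iw) * max(0.0, ih)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thr=0.5):
    """Return indices of the boxes kept by greedy non-maximum suppression."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap a retained box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_thr for j in keep):
            keep.append(i)
    return keep
```

For example, with two heavily overlapping boxes and one distant box, only the higher-scoring box of the overlapping pair and the distant box are kept.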

3.2. SimAM Attention Mechanism

SimAM is a simple, parameter-free attention module [25]. Unlike the many existing methods that extract attention along the spatial dimension or the channel dimension, SimAM defines an energy function from which three-dimensional attention weights for the feature map are derived without additional parameters, which makes it lighter and more efficient.

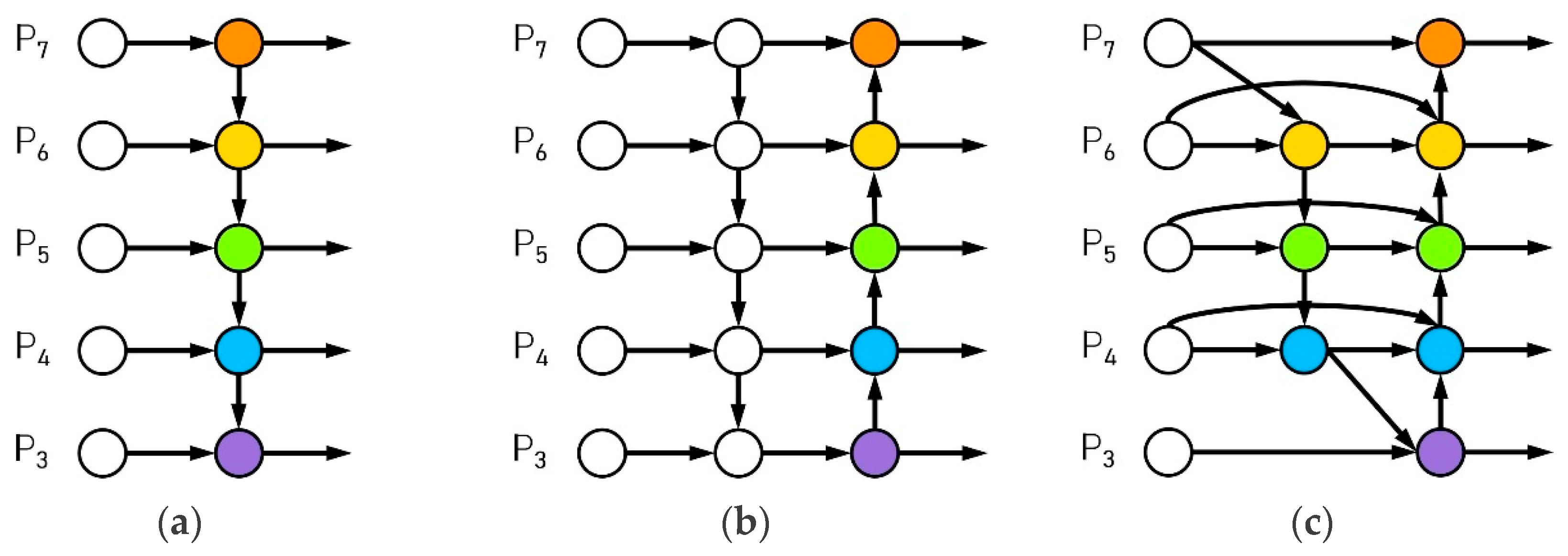

Figure 5 compares the different attention modules step by step. (a) Channel attention: the module generates 1-D weights from feature X, which are then expanded over the feature map; it treats channels differently while treating all spatial positions equally. (b) Spatial attention: the module generates 2-D weights from feature X, which are then expanded over the feature map; it treats spatial positions differently while treating all channels equally. (c) SimAM generates 3-D weights directly.

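SimAM finds important neurons by measuring the linear separability between neurons and assigns higher priority to these neurons. The energy function of each neuron is defined in Equation (1).

$$e_t(w_t, b_t, \mathbf{y}, x_i) = (y_t - \hat{t})^2 + \frac{1}{M-1}\sum_{i=1}^{M-1}(y_o - \hat{x}_i)^2 \quad (1)$$

Here $t$ is the target neuron in the channel, $x_i$ are the other neurons in the channel, $\hat{t} = w_t t + b_t$ and $\hat{x}_i = w_t x_i + b_t$ are their linear transforms, $i$ is the index over the spatial dimension, and $M$ is the number of neurons in that channel. By using binary labels and adding a regularization term, the final form of the energy function is obtained, as shown in Equation (5).

$$e_t(w_t, b_t, \mathbf{y}, x_i) = \frac{1}{M-1}\sum_{i=1}^{M-1}\left(-1 - (w_t x_i + b_t)\right)^2 + \left(1 - (w_t t + b_t)\right)^2 + \lambda w_t^2 \quad (5)$$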

By operating on individual neurons and integrating this linear separability into an end-to-end framework, a better enhancement to neural networks is achieved.
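To make the weighting concrete, the sketch below computes SimAM-style attention weights for a single channel. It assumes the closed-form minimal energy reported for SimAM, under which the inverse energy of a neuron $t$ is $(t - \mu)^2 / (4(\sigma^2 + \lambda)) + 0.5$, followed by a Sigmoid; the function name `simam_weights` and the default $\lambda = 10^{-4}$ are assumptions for the example, and a real implementation would operate on batched tensors.

```python
import math


def simam_weights(channel, lam=1e-4):
    """Attention weights for one channel given as a list of rows."""
    values = [v for row in channel for v in row]
    n = len(values) - 1  # M - 1 "other" neurons (needs at least 2 values)
    mean = sum(values) / len(values)
    sq_dev = [(v - mean) ** 2 for v in values]
    var = sum(sq_dev) / n
    # Inverse of the minimal energy: larger means a more distinctive neuron.
    inv_energy = [d / (4.0 * (var + lam)) + 0.5 for d in sq_dev]
    weights = [1.0 / (1.0 + math.exp(-e)) for e in inv_energy]
    width = len(channel[0])
    return [weights[i:i + width] for i in range(0, len(weights), width)]
```

A neuron that stands out from the rest of its channel, such as a bright flame pixel on a dark background, receives a larger weight than its neighbors, and the refined feature is the input multiplied element-wise by these weights.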

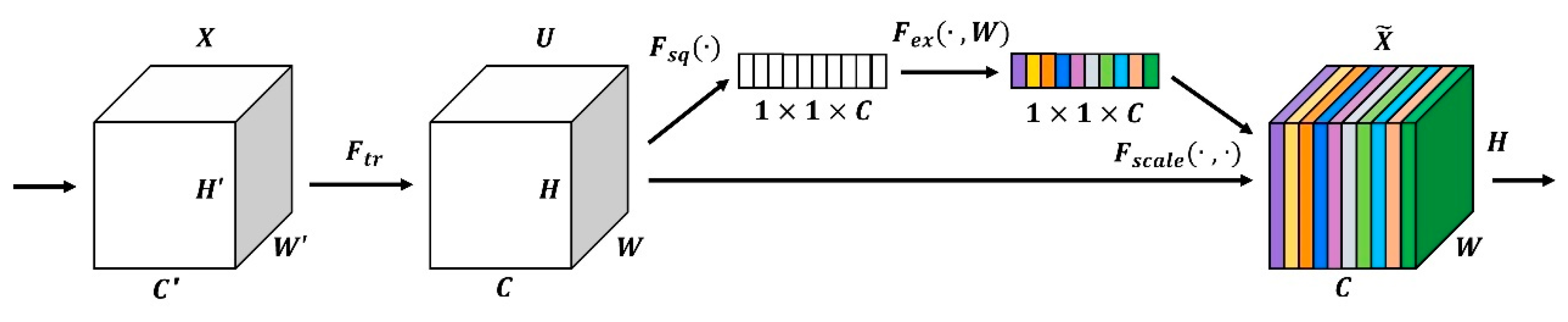

3.3. SE Attention Mechanism

The core of a convolutional neural network is the convolution operation, which fuses features in the spatial and channel dimensions through local receptive fields. While feature extraction along the spatial dimension had already been studied by many scholars, SE is a channel attention mechanism that was introduced to convolutional neural networks early on [26]. The structure of the SE building block is depicted in Figure 6. SE is composed of two steps, Squeeze (global information embedding) and Excitation (adaptive recalibration).

So that each unit of the transformed output U can use contextual information outside its local region, “Squeeze” employs global average pooling, the simplest aggregation technique, to compress the spatial dimensions H and W of U to 1 × 1. That is, the global spatial information is compressed into a channel descriptor, and one value represents each channel, giving a low-dimensional embedding. The compressed feature is essentially a vector with only a channel dimension and no spatial dimension.

Squeeze is calculated as shown in Equation (6):

$$z_c = F_{sq}(u_c) = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} u_c(i, j) \quad (6)$$

A second operation then uses the information aggregated by the squeeze step to capture the dependencies among channels. The function used must meet two criteria. First, it must be flexible, in particular able to learn nonlinear interactions between channels. Second, it must learn a non-mutually exclusive relationship, because the goal is to allow multiple channels to be emphasized rather than to enforce the activation of a single channel.

The Excitation component is realized by two fully connected layers. The first fully connected layer reduces the C channels to C/r channels, which lowers the computational cost, and is followed by a ReLU activation. The second fully connected layer restores the channel count to C and is followed by a Sigmoid activation. Here r is the compression ratio, also known as the dimensionality reduction rate. To fully capture the channel dependencies, a gating mechanism is designed as shown in Equation (7):

$$s = F_{ex}(z, W) = \sigma(W_2\,\delta(W_1 z)) \quad (7)$$

where $\delta$ is the ReLU function, $\sigma$ is the Sigmoid function, $W_1 \in \mathbb{R}^{\frac{C}{r} \times C}$, and $W_2 \in \mathbb{R}^{C \times \frac{C}{r}}$.

This function is able to learn nonlinear interactions between channels flexibly, as well as non-mutually exclusive relationships, which ensures that multiple channels are emphasized.
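The Squeeze and Excitation steps can be sketched together on plain Python lists. The example assumes the usual SE formulation (global average pooling, then two fully connected layers without bias, with ReLU and Sigmoid); the function name `se_block` and the weight matrices passed to it are made up for illustration.

```python
import math


def se_block(u, w1, w2):
    """Apply squeeze-and-excitation to u, a list of C channels of H x W rows.

    w1 has shape (C/r, C) and w2 has shape (C, C/r).
    Returns the channel weights s and the rescaled feature map.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in u]
    # Excitation: FC -> ReLU -> FC -> Sigmoid.
    hidden = [max(0.0, sum(w * zc for w, zc in zip(row, z))) for row in w1]
    s = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
         for row in w2]
    # Scale: every channel is multiplied by its own weight.
    out = [[[v * sc for v in row] for row in ch] for ch, sc in zip(u, s)]
    return s, out
```

Because each weight comes from its own Sigmoid rather than a softmax across channels, several channels can be emphasized at once, which is the non-mutually exclusive behavior described above.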

3.4. SPPFCSPC

The SPP structure, also known as spatial pyramid pooling, can convert arbitrarily sized feature maps into fixed-size feature vectors to achieve an adaptive size output [27]. In YOLOv5-6.0, the SPPF module replaces the conventional SPP module. SPPF uses a sequence of cascaded small pooling kernels instead of the single large pooling kernels of the traditional SPP module. It retains the original function and integrates feature maps of different receptive fields, so it enriches the expressive ability of the feature map while running faster. The SPPCSPC structure used in YOLOv7 performs better than SPPF, but its parameter count and computation are much higher, and it runs more slowly than SPPF.

Figure 7 and Figure 8 show the network structure diagrams of SPPF and SPPCSPC, respectively.

In this paper, SPPCSPC is optimized by borrowing the idea of SPPF to obtain SPPFCSPC, which improves the running speed of the model while keeping the receptive field unchanged.

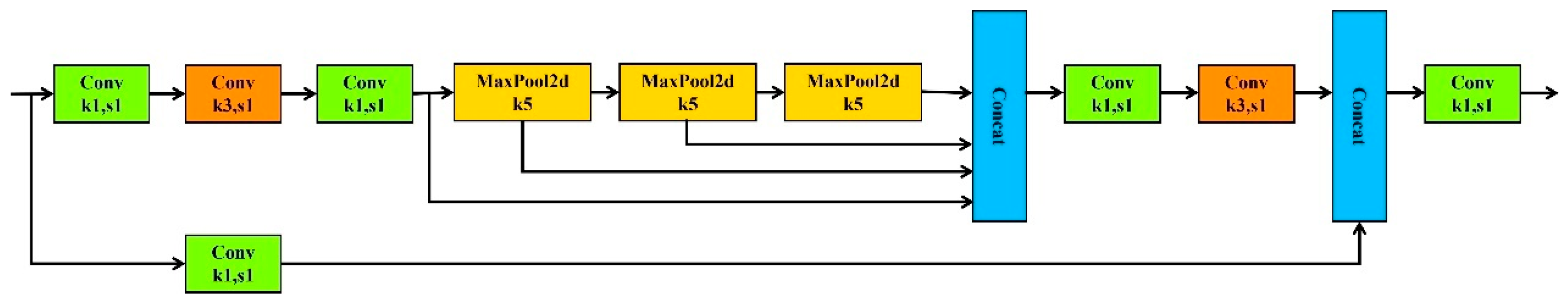

Figure 9 shows the network structure of SPPFCSPC.
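The reason cascaded pooling can keep the receptive field unchanged is easy to check in one dimension. The sketch below assumes stride-1 max pooling with a kernel of 5 applied up to three times, and compares the results with single kernels of 9 and 13 (the sizes used by the parallel SPP branches in YOLOv5); the helper names are illustrative.

```python
def max_pool_1d(xs, k):
    """Stride-1 max pooling with 'same' output length (edges see fewer values)."""
    r = k // 2
    return [max(xs[max(0, i - r): i + r + 1]) for i in range(len(xs))]


def sppf_branches(xs, k=5):
    """Three cascaded poolings, as in SPPF; each reuses the previous output."""
    p1 = max_pool_1d(xs, k)
    p2 = max_pool_1d(p1, k)
    p3 = max_pool_1d(p2, k)
    return p1, p2, p3
```

Pooling twice with a kernel of 5 gives the same output as pooling once with a kernel of 9, and three times matches a kernel of 13, so the cascade reproduces the large-kernel branches while each step only scans a small window.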

3.5. BiFPN

BiFPN, the Bidirectional Feature Pyramid Network, combines top–down and bottom–up feature pyramid networks. Most models use the Feature Pyramid Network (FPN) plus Path Aggregation Network (PAN) structure in the Neck to fuse shallow and deep features. Compared with PAN+FPN, BiFPN fuses features more effectively: it increases the coupling of features at each scale and performs better on the shallow features used for small target detection [28]. To address the imprecise recognition caused by overlapping detection targets, BiFPN uses cross-scale connections. Cross-scale weights modulate the feature representation of different detection features, so that feature expression can be both suppressed and enhanced. The structure diagrams of the FPN, PAFPN, and BiFPN networks are shown in Figure 10.

The BiFPN structure is based on PAN: it removes nodes that perform no feature fusion and contribute little, and adds a new connection between the original input node and the output node, which saves resources while fusing more feature information. BiFPN performs fast normalized fusion, dividing each weight by the sum of all weights so that the normalized weights fall in [0, 1], which improves the perception of targets in different situations. At the same time, the information of feature maps at different levels can be fused at the prediction side, which effectively reduces the interference caused by noisy images and other factors.
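Fast normalized fusion can be written out directly. The sketch assumes the usual form, in which each learnable weight is passed through ReLU and divided by the sum of all weights plus a small epsilon; the function name, the flat list representation of features, and the default epsilon are assumptions for the example.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse equally sized feature vectors with normalized non-negative weights.

    Returns the normalized weights and the fused feature vector.
    """
    w = [max(0.0, x) for x in weights]  # ReLU keeps every weight >= 0
    total = sum(w) + eps                # eps avoids division by zero
    norm = [x / total for x in w]       # each normalized weight lies in [0, 1]
    fused = [sum(n * f[i] for n, f in zip(norm, features))
             for i in range(len(features[0]))]
    return norm, fused
```

Unlike a softmax, this normalization needs no exponentials, which is why it is described as fast while still bounding each weight between 0 and 1.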

3.6. Loss Function—WFIoU Loss

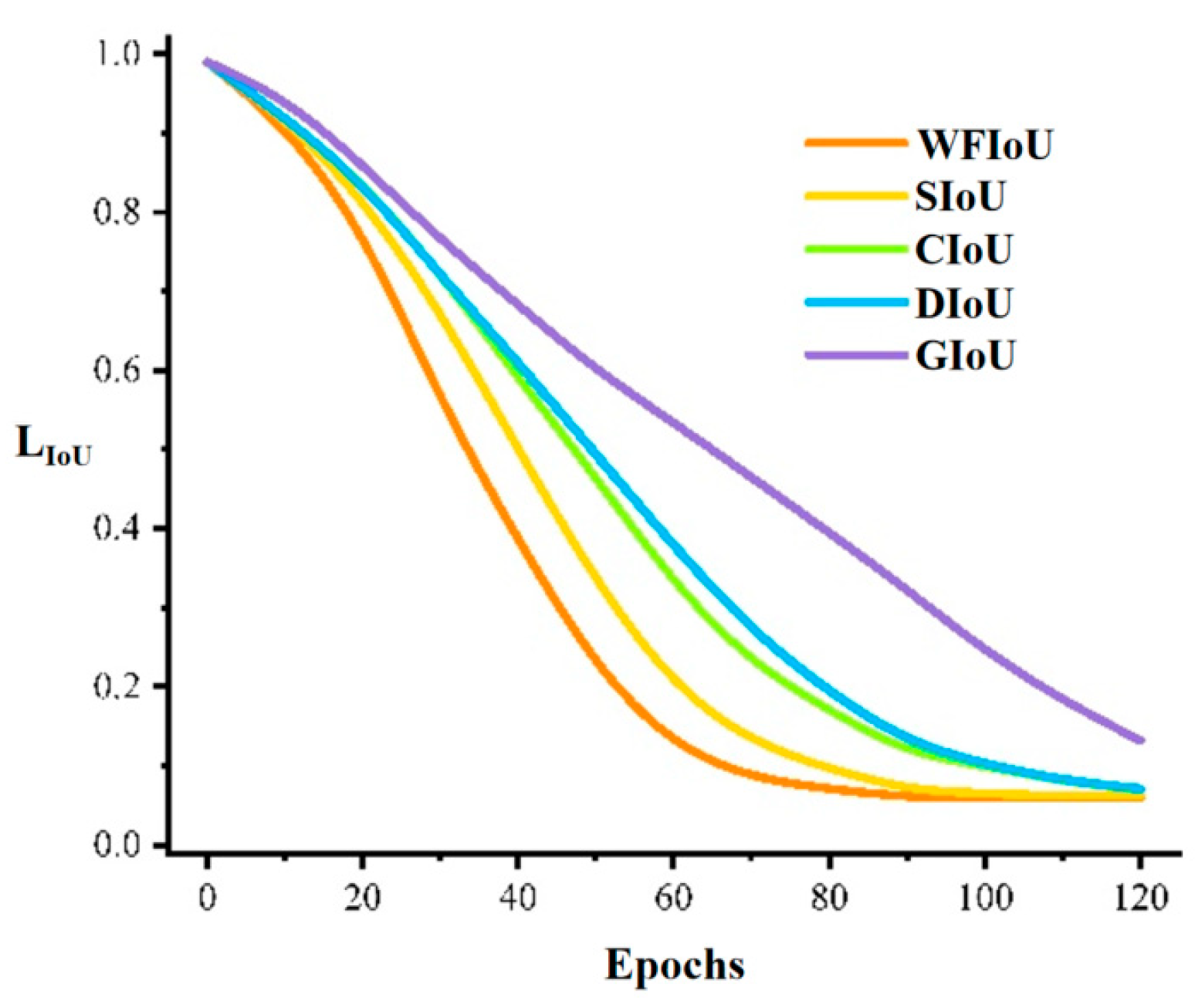

The loss function plays a crucial role in deep learning-based target detection. Minimizing the loss function drives the model toward convergence and reduces the error of its predictions, so a better loss function improves detection performance. This paper designs the loss function WFIoU based on Wise-IoU, which has a dynamic non-monotonic focusing mechanism. This mechanism allocates gradient gains more wisely and can reduce the harmful gradients generated by low-quality examples. The performance of bounding box regression (BBR) losses without focusing mechanisms (FMs) is compared experimentally. Figure 11 shows that WFIoU converges the fastest among the compared losses.

WFIoUv1 constructs an attention-based bounding box loss, while v2 and v3 build on this by constructing a gradient gain (focus factor) calculation to attach a focus mechanism. WFIoUv1 is defined as shown in Equations (8) and (9):

$W_g$ and $H_g$ represent the width and height of the minimum enclosing box, respectively. The $\mathcal{L}_{IoU}$ of an ordinary-quality anchor box is amplified by $\mathcal{R}_{WFIoU}$, while the $\mathcal{R}_{WFIoU}$ of a high-quality anchor box is significantly reduced by $\mathcal{L}_{IoU}$. $W_g$ and $H_g$ are separated from the computational graph (the operation marked with * in the formula), which prevents $\mathcal{R}_{WFIoU}$ from producing gradients that hinder convergence.

In WFIoUv2, a monotonic focusing coefficient $\mathcal{L}_{IoU}^{\gamma*}$ is constructed for $\mathcal{L}_{WFIoUv1}$, as defined in Equation (10).

To prevent the convergence speed from dropping late in training, the mean value of $\mathcal{L}_{IoU}$ is introduced as a normalization factor, as shown in Equation (11).

To prevent low-quality examples from producing large harmful gradients, WFIoUv3 assigns a smaller gradient gain to anchor boxes with a larger outlier degree. As shown in Equation (12), a non-monotonic focusing factor based on the outlier degree β is constructed on the basis of v1.

Because the mean of $\mathcal{L}_{IoU}$ is dynamic, the criterion for dividing anchor boxes by quality is dynamic as well, so WFIoUv3 can adjust its gradient gain allocation strategy in a timely manner to fit the current training state.
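Since Equations (8) to (12) are not reproduced in this text, the sketch below follows the published Wise-IoU formulation on which WFIoU is based, and it is only an assumption that WFIoU shares these forms. It computes the v1 loss as the IoU loss multiplied by a distance-based factor, and the v3 gradient gain as $r = \beta / (\delta \alpha^{\beta - \delta})$ with $\beta$ the ratio of the IoU loss to its running mean. The function names and the hyperparameter values $\alpha = 1.9$ and $\delta = 3$ are illustrative.

```python
import math


def wiou_v1(pred, gt):
    """Return (loss_v1, l_iou) for boxes given as (x1, y1, x2, y2)."""
    iw = min(pred[2], gt[2]) - max(pred[0], gt[0])
    ih = min(pred[3], gt[3]) - max(pred[1], gt[1])
    inter = max(0.0, iw) * max(0.0, ih)
    union = ((pred[2] - pred[0]) * (pred[3] - pred[1])
             + (gt[2] - gt[0]) * (gt[3] - gt[1]) - inter)
    l_iou = 1.0 - inter / union
    # Width and height of the smallest box enclosing both boxes (Wg, Hg).
    # In training these would be detached from the gradient computation.
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    dx = (pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    r = math.exp((dx * dx + dy * dy) / (wg * wg + hg * hg))
    return r * l_iou, l_iou


def focusing_gain(l_iou, mean_l_iou, alpha=1.9, delta=3.0):
    """Non-monotonic gradient gain as a function of the outlier degree beta."""
    beta = l_iou / mean_l_iou
    return beta / (delta * alpha ** (beta - delta))
```

The gain is small for both very good boxes (small β) and very poor boxes (large β) and peaks in between, which is how the focusing mechanism reduces the influence of low-quality examples without concentrating only on the easiest ones.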

3.7. Forest Fire Detection Model WSB_WSS Built on Integrated Learning

Integrated learning, also known as ensemble learning, is a commonly used method for improving the accuracy of model recognition [29]. The basic idea is to combine multiple base learners in some way to obtain a strong learner. Machine learning usually tries to obtain the most robust model possible by selecting a suitable single-model algorithm, but what a single model can achieve is limited. Integrated learning therefore fuses the results of multiple weak learners according to certain rules to further improve recognition accuracy.

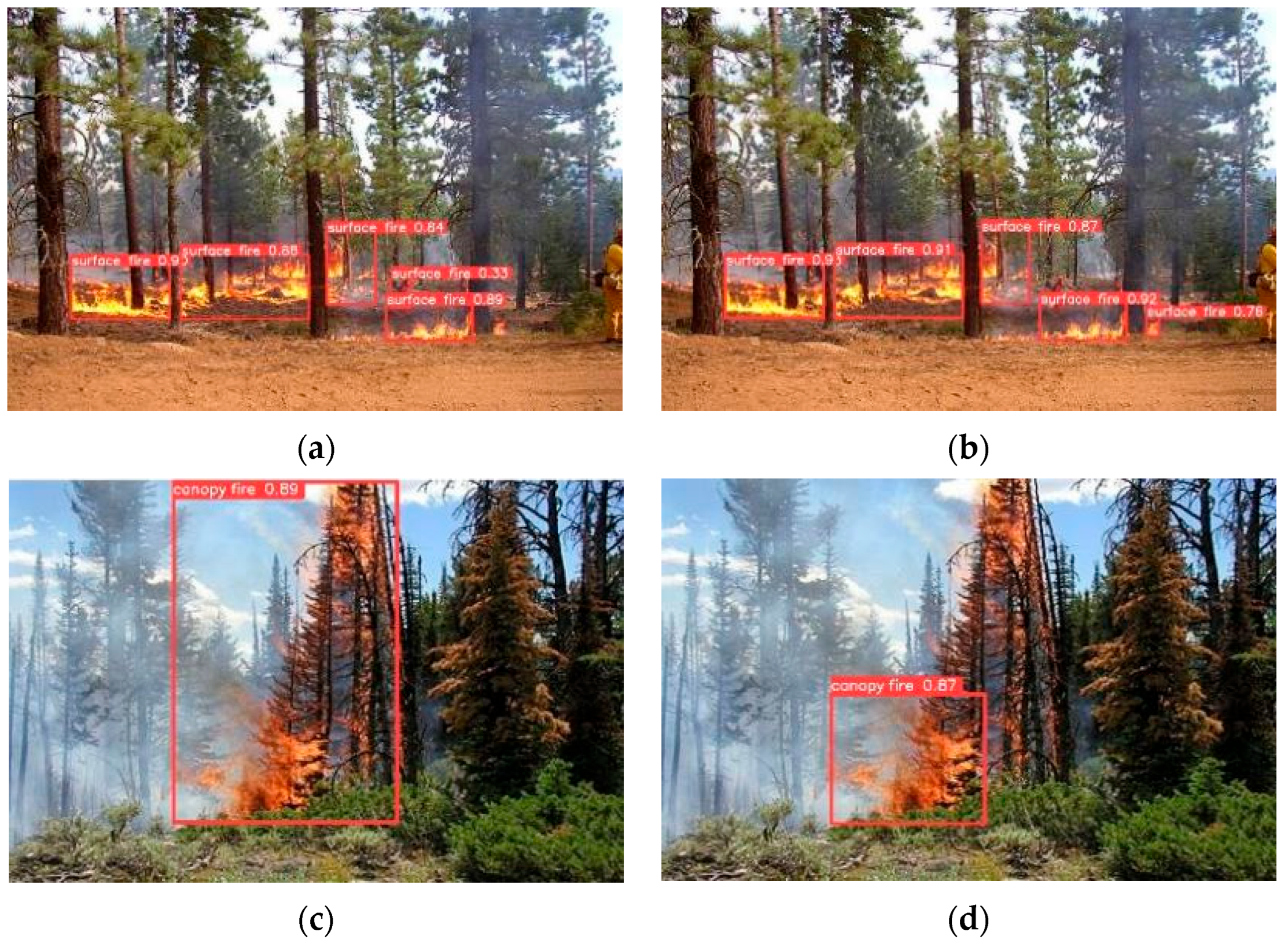

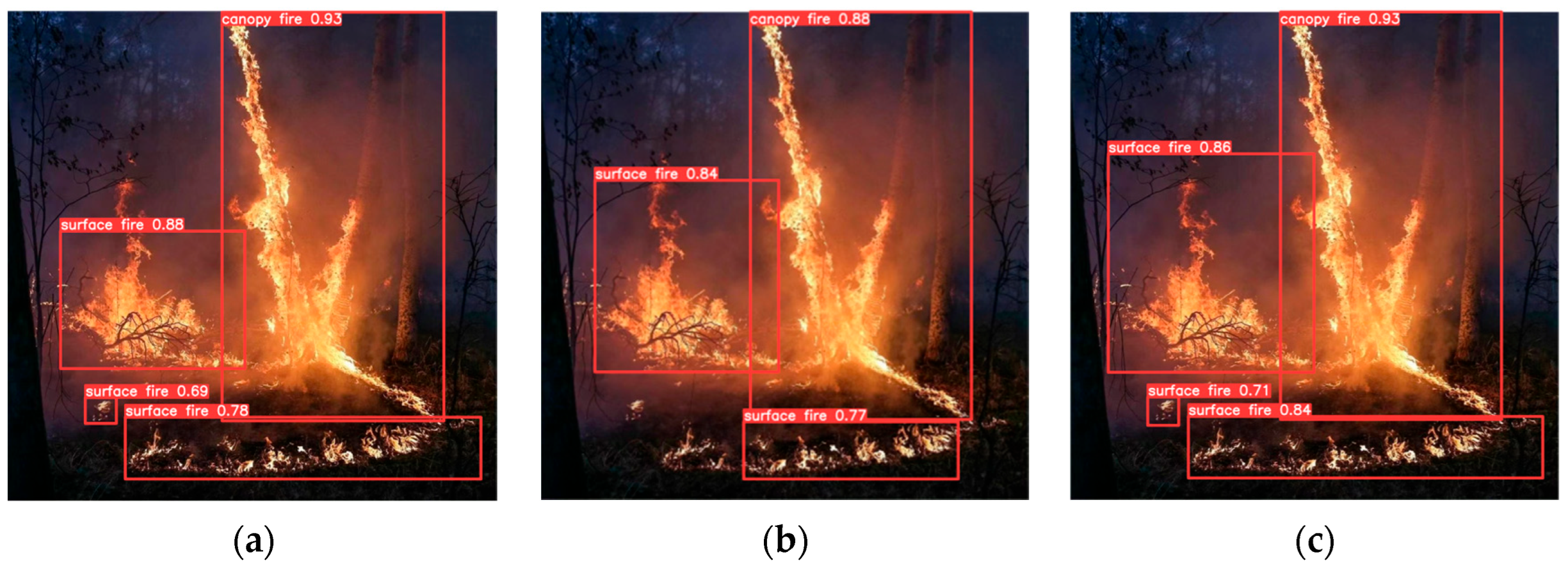

In this paper, two base learners, WSB and WSS, are designed. Experiments show that WSB is better at identifying small-target forest fires, but its identification of large-target forest fires is incomplete. WSS can identify large-target forest fires completely, but its detection accuracy for small-target forest fires is lower than that of WSB. Therefore, this paper integrates WSB and WSS and proposes a more efficient forest fire detection model, WSB_WSS. The integrated model gives full play to the advantages of the two models: it can both identify large forest fires completely and identify small-target forest fires accurately. This offsets the error between a single hypothesis and the target hypothesis, and the result is more robust and generalizes better than the recognition results of a single model.

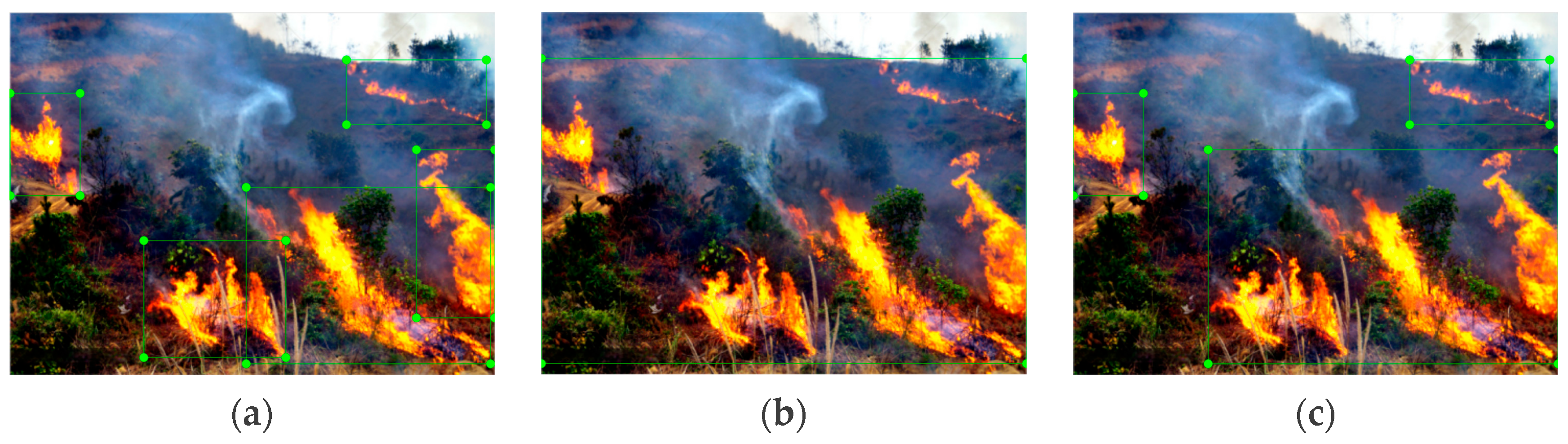

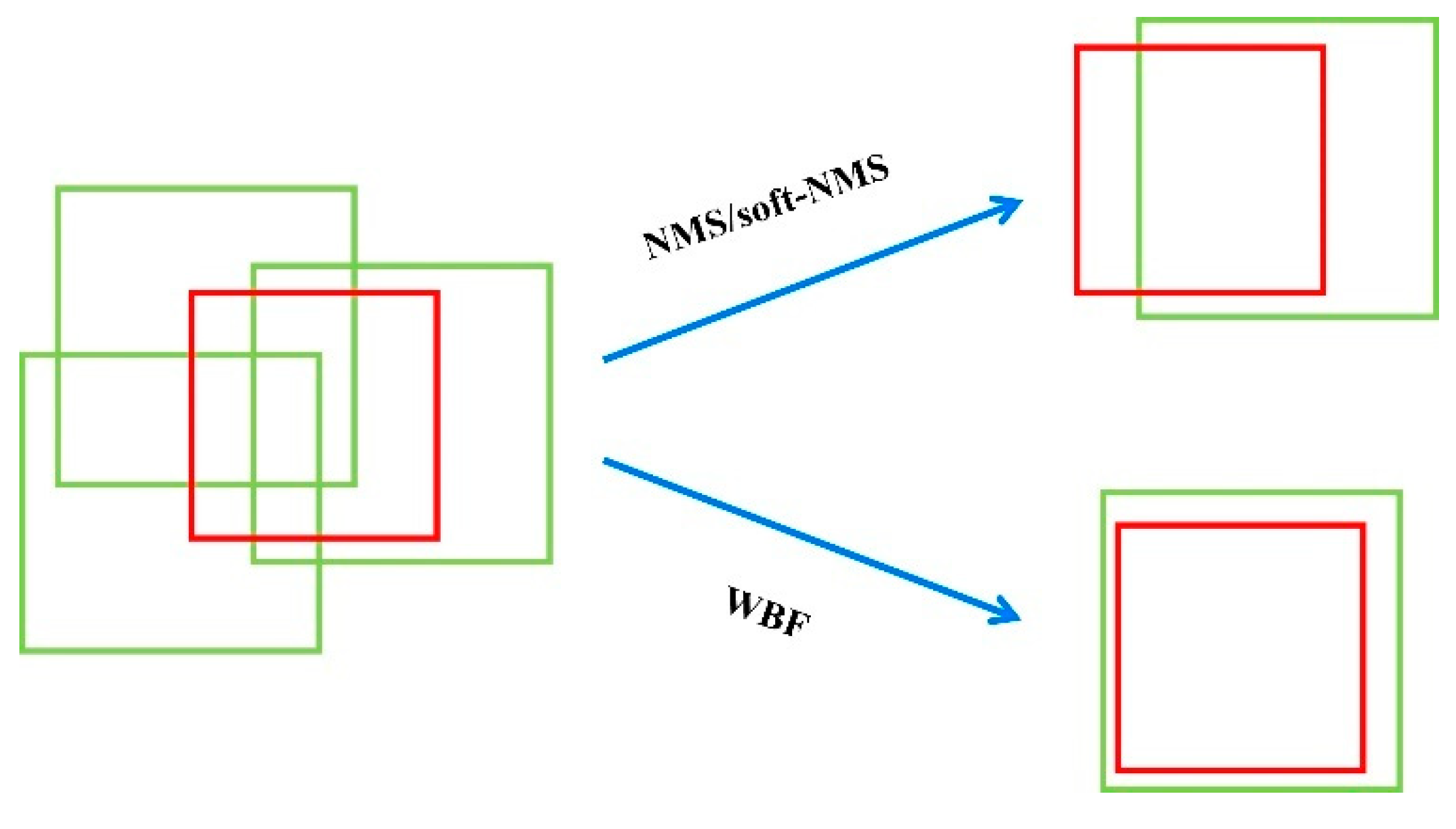

The integrated model in this paper needs to fuse two different models. During fusion, a large number of overlapping boxes must be de-duplicated to obtain the optimal detection boxes. The commonly used de-duplication method is non-maximum suppression (NMS), but NMS directly eliminates detection boxes with high overlap, so some useful detection boxes are deleted. Therefore, this paper uses the Weighted Boxes Fusion (WBF) method, which fuses the detection boxes by weighting them and produces a new detection box, improving the accuracy of the final prediction box. In Figure 12, the red box is the ground truth, and the green boxes are the predictions. NMS keeps only the box with the highest confidence, which is not accurate, whereas WBF uses the confidences of the three prediction boxes to compute a new box, which is more accurate than the NMS result.
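A simplified single-class version of WBF is sketched below to show how the fused box is computed. It assumes the commonly described procedure: detections from all models are sorted by confidence, each is matched to the fused box it overlaps most, the cluster’s coordinates are averaged with confidences as weights, and the fused confidence is reduced when fewer models agree. The function names, the tuple layout `(x1, y1, x2, y2, score)`, and the 0.55 threshold are assumptions, and this is not the exact routine used for WSB_WSS.

```python
def iou(a, b):
    """IoU of two boxes whose first four entries are (x1, y1, x2, y2)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    inter = max(0.0, iw) * max(0.0, ih)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def fuse(members):
    """Confidence-weighted average of box coordinates, mean confidence."""
    total = sum(m[4] for m in members)
    coords = tuple(sum(m[4] * m[i] for m in members) / total for i in range(4))
    return coords + (total / len(members),)


def weighted_boxes_fusion(predictions, iou_thr=0.55):
    """predictions: one list of (x1, y1, x2, y2, score) tuples per model."""
    n_models = len(predictions)
    detections = sorted((d for model in predictions for d in model),
                        key=lambda d: d[4], reverse=True)
    clusters, fused = [], []
    for det in detections:
        best = max(range(len(fused)), key=lambda i: iou(det, fused[i]),
                   default=None)
        if best is not None and iou(det, fused[best]) > iou_thr:
            clusters[best].append(det)
            fused[best] = fuse(clusters[best])
        else:
            clusters.append([det])
            fused.append(tuple(det))
    results = []
    for members, box in zip(clusters, fused):
        # Lower the confidence of boxes that only some models predicted.
        conf = box[4] * min(len(members), n_models) / n_models
        results.append(box[:4] + (conf,))
    return results
```

With one list of detections from WSB and one from WSS, overlapping predictions of the same fire are merged into a single box that leans toward the more confident model, while a box found by only one model is kept with a reduced confidence instead of being discarded.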

5. Conclusions

At present, building a high-precision model that can detect both large- and small-target forest fires is still a major difficulty in forest fire detection. Large- and small-target fires differ obviously in scale and characteristics, and existing forest fire detection models still cannot comprehensively consider targets at different scales. Detection models designed for small-target forest fires often pay too much attention to the details of small targets and ignore the overall characteristics of large-target forest fires; models designed for large-target forest fires are prone to missing small-target forest fires. In addition, most current forest fire detection uses a single model, which cannot adequately handle the impact of target size change, occlusion, and other factors, so the robustness of the model is poor.

To address the obvious difference in scale between large- and small-target forest fires, this paper designs a multi-scale forest fire detection method that enhances the feature expression ability of the network through bidirectional connections and cross-layer feature fusion. SPPFCSPC is introduced to improve the model’s ability to perceive targets at different scales. A new bounding box loss function, WFIoU Loss, is proposed to improve the model’s robustness to noise, occlusion, and scale changes and to improve the accuracy of forest fire detection. The SimAM and SE attention mechanisms are introduced to increase the model’s attention to forest fires. Based on these techniques, this paper proposes two forest fire detection models, WSB and WSS. Experiments show that the WSB model can accurately identify small-target surface fires, but its identification of large-target forest fires is sometimes incomplete, while the WSS model can completely identify large surface fires and trunk fires but misses some of the small-target surface fires. Therefore, this paper integrates the two models with the WBF method and designs a more efficient forest fire detection model, WSB_WSS, which achieves high-accuracy detection of both large- and small-target forest fires. The WSB_WSS model has an accuracy of 83.3% for small-target forest fire detection and 93.5% for large-target forest fire detection, which is significantly better than the existing mainstream models.

Smoke frequently interferes with forest fire recognition: it affects how forest fires appear in images, hinders the recognition and extraction of forest fire image features, and lowers recognition accuracy. Future work will therefore analyze and test how to exclude the influence of smoke features on forest fire images in order to improve the generalizability of the model [30,31].