1. Introduction

Information and communication technologies have advanced significantly in recent decades and have disrupted science and other knowledge sectors. Many mathematical problems that remained unsolved for centuries have been solved with more efficient algorithms and greater computing power. Artificial intelligence has become commercially viable, and standard machine learning tools have been developed for space, technology, and medical research. Many tools and techniques for analyzing unstructured data have been developed and commercialized at scale. However, systematic review methodologies and tools in the international development and social impact sectors have changed much more slowly, and many opportunities to increase the effectiveness, efficiency, and societal relevance of systematic reviews and maps in these sectors have been missed.

In the last decade, systematic reviews and maps have gained momentum in the broader forest use and management literature, including subjects such as land use management, ecosystem services, and zoology. The Scopus and Web of Science databases contain about 140 systematic reviews and maps related to forest use and management. After a period of slow growth between 2003 and 2013, the number of forest use and management related reviews rose from 2 per year in 2013 to 27 per year in 2020. The diversity of review types has also been increasing. In the early years, systematic reviews were the only type. Systematic review protocols have been part of forest use and management systematic review work since 2012, and systematic maps and map protocols have been published since 2015. By June 2021, there were more than 106 systematic reviews, 15 systematic review protocols, 12 systematic maps, and seven systematic map protocols in broader forest use and management related subjects. However, the use of machine-centered approaches, such as machine learning, artificial intelligence, text mining, automated semantic analysis, and translation bots, in carrying out these reviews and maps was minimal. None of the 140 reviews and maps used artificial intelligence, algorithmic procedures, or text mining approaches in a structured manner, and only a few used analysis software with text and content analysis functionalities.

One of the root causes of the slow change in the evidence sector in general, and in systematic reviews in particular, is the polarization of opinion about the role of machine-centered systems. On the one hand, several scientists argue that machine-centered evidence systems, such as classifications based on artificial intelligence, are black boxes whose findings cannot be sufficiently validated [1,2]. Others raise ethical concerns and claim that evidence generated by machine-centered systems will lead to ethically blind interventions [3,4]. On the other hand, multiple scientists argue that human judgment on evidence will always be incomplete at best [5,6] and, in some cases, partial [6]. Some proponents of machine-centered systems also argue that human-centered evidence approaches reflect the preferences of a professional community rather than the needs of society [7,8], since building sufficient capacity to generate, disseminate, and use evidence effectively requires a considerable investment that only a minority of people in society can make [9,10].

In this article, we propose a hybrid approach that combines machine-centered and human-centered elements. We think that our hybrid approach to conducting systematic reviews and maps can address most concerns about evidence management and improve the efficiency, effectiveness, and societal relevance of systematic reviews and maps. We propose the hybrid approach especially for the evidence sectors in international development, social impact, and broader forest use and management, the sectors that shaped our perspective and led to the design and development of the approach. We think that the broader forest use and management sectors, including land management, are among the fields that can benefit from the approach in addressing the key challenges of contemporary forestry.

2. Current Challenges in the Systematic Review and Map Sector

We classify the current challenges that inhibit the effectiveness, efficiency, and societal relevance of the systematic review and map sector into three major groups. The first group of challenges relates to evidence sources. In the last decade, the number of publications in the international development and social impact sectors has increased exponentially [11,12]. However, none of the major databases hosting publications has near-full coverage [13,14]. The metadata of the publications registered in these databases are not standardized, and it is common to find multiple versions of the same publication [15,16]. In addition, authors may inadvertently “obscure” their articles: to increase the likelihood that an article is indexed and prioritized by query engines, they use loosely related popular terms in titles and keywords, which decreases the article’s specificity. Titles play an essential role in making publications accessible to search engines and attractive to users [17].

The second group of challenges relates to the process of conducting systematic reviews and maps, which can be a ‘time consuming and sometimes boring task’ [18]. In health research, a systematic review has been estimated to take more than 12 months [19,20]. Although these estimates were made for health reviews, and the evidence sources in the international development and social impact sectors differ, it is realistic to assume that reviews and maps in international development take a comparable amount of time. Our own experience, although anecdotal in that it is not yet fully documented, also indicates that systematic reviews in international development are time-consuming [21].

Current approaches to systematic reviews and maps rely heavily on intensive manual effort in compiling, screening, analyzing, and synthesizing literature sources, which requires significant time [22,23] and usually leaves limited time for evaluation and synthesis activities [24]. Analysis and synthesis require advanced skills, which demand comprehensive education and training coupled with significant financial investment [9]. Because of time limitations, low investment in evidence by the international development and social impact sectors, and a lack of capabilities in review teams, the evidence generation process is not sufficiently documented, which makes replicating review results hardly feasible. For the same reasons, review teams cannot spend sufficient effort on documenting what they learn about the content and the evidence, although this learning could be an essential source of information for designing international development and social impact interventions. Notwithstanding the considerable potential of systematic reviews for knowledge generation, these process-related challenges highlight the lack of timeliness of systematic reviews and their limited uptake, which significantly limits the use of the evidence in different contexts and makes use at scale unlikely.

The third group of challenges relates to acceptance and applicability in the social sciences. Although systematic reviews originated in the health and medical field to assess the effectiveness of specific health interventions, they are less able to deal with qualitative evidence, multidisciplinary studies, and differing contexts, all of which are common in international development research [25]. Systematic reviews also privilege scientific knowledge over other forms of knowledge, such as local, technical, and experiential knowledge, which makes them less applicable to multidisciplinary research.

Despite these challenges, bilateral and multilateral donors see systematic reviews as an essential tool for evidence-informed policymaking precisely because they provide a unique opportunity to synthesize large bodies of evidence. For example, the UK Department for International Development (DFID), the Australian Agency for International Development (AusAID), and the International Initiative for Impact Evaluation (3ie) had commissioned up to 100 systematic reviews [24]. Given this emphasis on systematic reviews among funders, it is essential to improve current approaches and address some of these challenges, specifically timeliness.

3. A Hybrid Approach to Address the Challenges

Our hybrid approach builds on the relative advantages of human-centered and machine-centered approaches. In conducting systematic reviews and maps, machines have a comparative advantage over humans in any process that can be standardized and structured into simple components. Humans, although this is changing rapidly, have the advantage in unstandardized processes and semantics. For instance, machine-centered approaches are not yet advanced enough to have common sense, and machines cannot yet establish semantic relations as effectively and efficiently as human-centered approaches [26,27]. Based on this picture, our approach uses three critical operations.

3.1. Combining Lexical Databases with Dictionaries from Crowdsourced Literature for Queries

Building a search query is one of the critical steps of any systematic review and map process [

22,

28]. In conventional reviews and maps, the queries are manually identified by experts in the author team of the review or the advisory committee [

28,

29] (

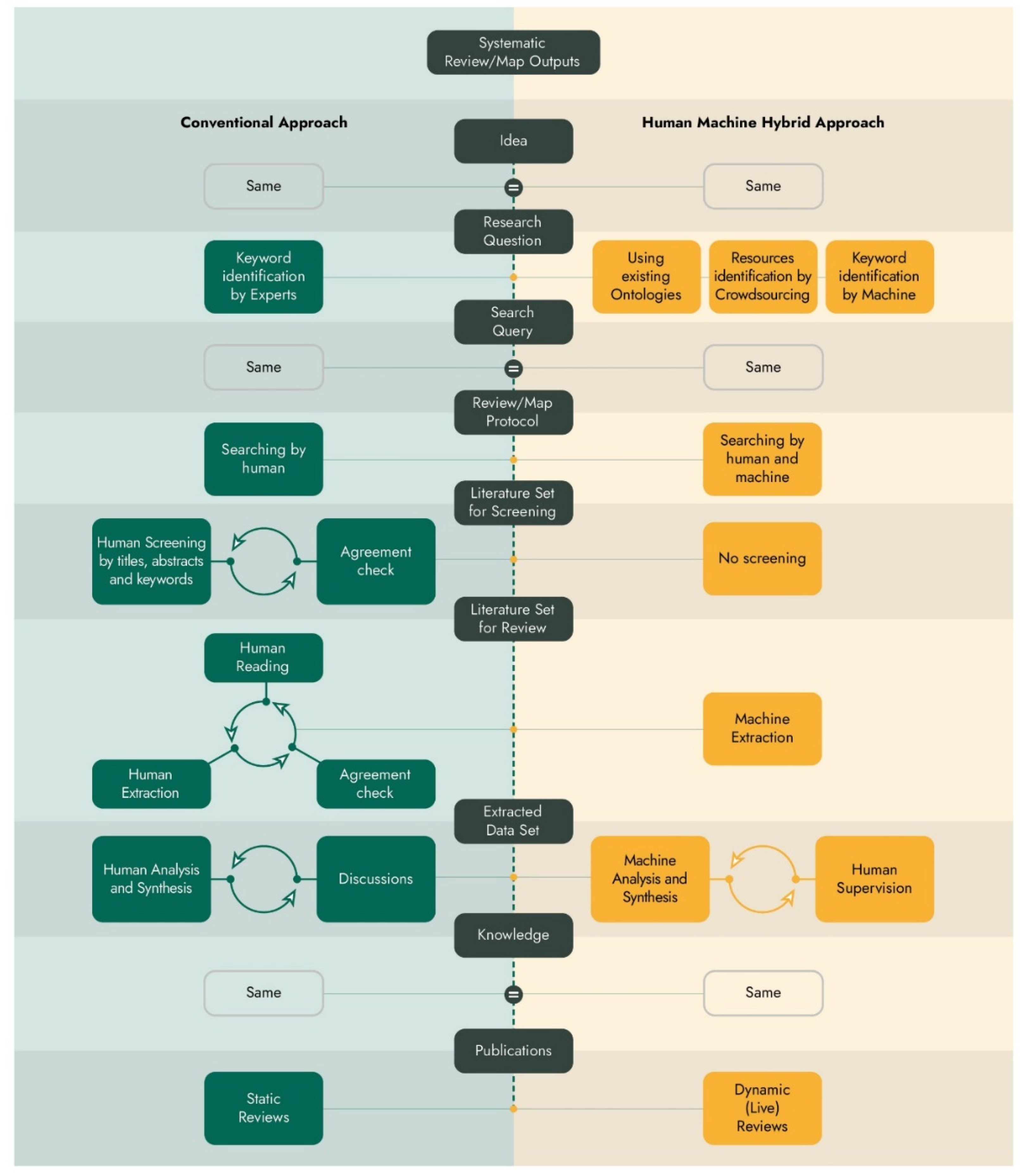

Figure 1). In some cases, the queries are tested against a reference set of resources and updated until it retrieves the whole reference set or a significant part of the reference set. Expert opinion is a quick way to develop a query with a high chance of retrieving relevant resources. However, since the expert identification process is not random and the number of experts who can be consulted is limited, there is always a high risk of omitting relevant resources and expert bias, especially when experts are selected based on convenience. Organizing the advisory committee is cost and effort-intensive for reviews and maps in international development and social impact. Since international development and social impact sectors are international, and advisory committees require diverse members from different countries and continents who need to travel significant distances to attend the committee meetings. Each trip requires significant resources and time for various arrangements.
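Testing a query against a reference set, as described above, amounts to a recall check. The following Python sketch is our illustration rather than a prescribed procedure: it assumes that retrieved and reference resources are identified by comparable strings (for example, normalized DOIs), and the function names and the 0.9 target are hypothetical choices.

```python
def query_recall(retrieved_ids, reference_ids):
    """Return the share of the reference set a query retrieves and the missed items."""
    reference = set(reference_ids)
    if not reference:
        raise ValueError("the reference set must not be empty")
    found = reference & set(retrieved_ids)
    missing = sorted(reference - found)
    return len(found) / len(reference), missing


def first_adequate_query(versions, reference_ids, target=0.9):
    """Return (name, recall) of the first query version reaching the target, else None.

    `versions` maps a query label to the identifiers it retrieved; the 0.9
    target is a hypothetical threshold for "a significant part" of the set.
    """
    for name, retrieved in versions.items():
        recall, _ = query_recall(retrieved, reference_ids)
        if recall >= target:
            return name, recall
    return None


if __name__ == "__main__":
    reference = ["doi:a", "doi:b", "doi:c", "doi:d"]
    versions = {
        "v1": ["doi:a", "doi:b", "doi:x"],
        "v2": ["doi:a", "doi:b", "doi:c", "doi:d"],
    }
    print(query_recall(versions["v1"], reference))  # (0.5, ['doi:c', 'doi:d'])
    print(first_adequate_query(versions, reference))  # ('v2', 1.0)
```

The list of missed reference items is returned alongside the recall value because it is what a team would inspect when deciding which terms to add in the next query version.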

In our hybrid approach, we reduce the risk of bias caused by omitting relevant resources and evidence unknown to the author team and advisory committee by building queries from standardized ontologies in lexical databases and from expert-pooled evidence dictionaries. Standardized agricultural and forestry ontologies such as AGROVOC, AIMS, and the Crop Ontology for Agricultural Data have been built with the participation of many experts and peer-reviewed by large communities of researchers. Together they combine more than 40,000 concepts and 700,000 terms in more than 20 languages, providing opportunities to analyze literature published in those languages. WordNet, a large lexical database of English, and the wordnets developed for many other languages organize words and terms into concepts with a clear structure of semantic relations. These relations go beyond synonyms and antonyms and include relations such as hyponymy and meronymy. By selecting words and terms from these ontologies based on the research question, we capitalize on the input of hundreds of experts to identify the search query in a standardized way. The queries formulated with the hybrid approach identify a higher number of relevant resources from a broader base of literature.
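Selecting terms from ontologies typically ends in a Boolean search string in which the terms for one concept are joined with OR and the concept blocks are joined with AND. The Python sketch below shows that step under explicit assumptions: the small `THESAURUS` dictionary is a hypothetical stand-in for terms looked up in AGROVOC or WordNet, not an extract from them, and database-specific syntax (field tags, truncation) is not modeled.

```python
# Hypothetical stand-in for ontology look-ups: each preferred term maps to
# related terms (synonyms, narrower terms). Not an actual AGROVOC extract.
THESAURUS = {
    "agroforestry": ["agrosilviculture", "silvopasture"],
    "smallholder": ["small-scale farmer", "family farm"],
}


def quote(term):
    """Phrase-quote multi-word terms so databases match them as phrases."""
    return f'"{term}"' if " " in term else term


def concept_block(concept, thesaurus):
    """OR together a concept and its related terms, dropping duplicates in order."""
    terms = list(dict.fromkeys([concept, *thesaurus.get(concept, [])]))
    return "(" + " OR ".join(quote(t) for t in terms) + ")"


def build_query(concepts, thesaurus):
    """AND together one block per concept of the research question."""
    return " AND ".join(concept_block(c, thesaurus) for c in concepts)


if __name__ == "__main__":
    print(build_query(["agroforestry", "smallholder"], THESAURUS))
    # (agroforestry OR agrosilviculture OR silvopasture) AND (smallholder OR "small-scale farmer" OR "family farm")
```

Keeping the ontology look-up separate from string assembly means the same concept list can be re-rendered for each database once its exact query syntax is known.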

When the research question of the review or map is not focused enough, the ontologies suggest too many words and terms for practical use. In this case, we use dictionaries generated from expert-pooled literature to prioritize the words and terms suggested by the ontologies. To ensure that the experts cover all possible points of view, we use a crowdsourcing approach. Instead of relying on publications or university positions to identify experts, we use professional social media platforms, mostly ResearchGate, Academia.edu, and LinkedIn. We share the research question, through these platforms and by email, with all the experts found by searching them, and we ask the experts to provide literature resources that inform the question. Since these platforms include expertise and contact information even for experts who do not have personal profiles, this approach identifies, and makes it possible to contact, digitally disengaged experts. Afterward, we use the most frequent words that emerge from the analysis of the literature shared by the experts.
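The prioritization step can be approximated by counting how often each ontology-suggested term appears in the literature shared by the crowdsourced experts and keeping the most frequent ones. This Python sketch assumes the shared resources are already available as plain text; whole-word regular-expression matching and the `keep` cut-off are our simplifying assumptions, not part of the method as published.

```python
import re
from collections import Counter


def term_frequencies(texts, terms):
    """Count whole-word occurrences of each candidate term across the texts."""
    counts = Counter()
    for text in texts:
        lowered = text.lower()
        for term in terms:
            pattern = r"\b" + re.escape(term.lower()) + r"\b"
            counts[term] += len(re.findall(pattern, lowered))
    return counts


def prioritize(terms, texts, keep=10):
    """Keep the `keep` most frequent terms that occur at least once.

    Ties keep the original (ontology) order because sorted() is stable,
    including when reverse=True.
    """
    counts = term_frequencies(texts, terms)
    occurring = [t for t in terms if counts[t] > 0]
    return sorted(occurring, key=lambda t: counts[t], reverse=True)[:keep]


if __name__ == "__main__":
    shared = [
        "Silvopasture systems on family farm plots.",
        "Silvopasture and agroforestry adoption.",
        "Agroforestry, silvopasture, and tenure.",
    ]
    candidates = ["agroforestry", "agrosilviculture", "silvopasture", "family farm"]
    print(prioritize(candidates, shared, keep=2))  # ['silvopasture', 'agroforestry']
```

Terms that never occur in the expert literature are dropped rather than ranked last, which is one way to shrink an over-broad ontology suggestion list.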

3.2. Using Full Texts Instead of Title, Abstract, and Keywords for Screening

Most conventional systematic review and mapping methods include a screening protocol whereby titles, abstracts, and keywords are screened against specific inclusion criteria to identify literature potentially relevant to the research question [30,31]. Since identification depends on each screener’s interpretation of the inclusion criteria, more than one person does the screening, and relevance decisions are compared [28,32]. When inclusion decisions differ, the screeners interact, agree on common criteria, and reassess [33,34]. This process requires significant time, as reaching overall consensus is time-intensive, and it is blind to the inadvertent obscuring described in Section 2. Most graduate programs have a research writing course that teaches the strategic use of scientifically or societally popular words in titles, abstracts, and keywords. Although the objective of such courses is to increase the chances of articles being prioritized among the many hits generated by a query, systematic use of this strategy creates a massive discrepancy between the content of the full papers and their titles, abstracts, and keywords. In addition, a significant portion of the evidence in the international development and social impact literature is funded by international research for development projects.
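Comparing the relevance decisions of two screeners is commonly summarized with an agreement statistic. The text above does not prescribe one; as an assumption, the Python sketch below uses Cohen’s kappa, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected by chance, and it lists the records the screeners would need to reassess.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two screeners' include/exclude decisions on the same records."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both screeners must rate the same, non-empty set of records")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    labels = set(rater_a) | set(rater_b)
    # Chance agreement: product of each screener's marginal share per label.
    expected = sum(
        (rater_a.count(label) / n) * (rater_b.count(label) / n) for label in labels
    )
    if expected == 1:
        return 1.0  # both screeners used one identical label throughout
    return (observed - expected) / (1 - expected)


def disagreements(record_ids, rater_a, rater_b):
    """Records on which the screeners differ and must reconcile."""
    return [rid for rid, a, b in zip(record_ids, rater_a, rater_b) if a != b]


if __name__ == "__main__":
    a = ["in", "in", "out", "out"]
    b = ["in", "out", "out", "out"]
    print(cohens_kappa(a, b))  # 0.5
    print(disagreements(["r1", "r2", "r3", "r4"], a, b))  # ['r2']
```

Every disagreement triggers a round of discussion, which is where the time cost of consensus-based screening accumulates as the number of records grows.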

Access to international research for development funding depends on the quality of the research and on the promise of positive, large-scale development impact. These expectations create an incentive for authors to use catchy titles and maximalist impact claims in abstracts. In our hybrid approach, we go a step further and do not screen resources using only their titles, abstracts, and keywords. We retrieve the full texts of all accessible resources from the major general databases, i.e., Scopus, Web of Science, and PubMed, and from specialty databases selected based on the research question. Then, we use algorithmic procedures to analyze them and extract the relevant parts using text mining methods such as word combinations, interactive word trees, and word-resource maps. This enables us not only to significantly increase the number of publications we can use for synthesis and mapping (while reducing the time needed to gather them, in ways that would not be possible manually) but also to identify evidence patterns that would not be visible in conventional human-centered approaches. For research questions that include concepts with fuzzy boundaries, which are common in international development and social impact reviews and maps, a machine-only system might extract a large set of text, making a coherent synthesis impractical. In this case, we use human selection and auditing of the extracted text and identify a subset of it based on key principles identified by the author team. Our early attempts showed that enforcing a narrower focus and rerunning the updated query might also reduce the information relevant to that focus. Going back to the beginning and updating the research question would require a new round of ontology identification and crowdsourcing. We think that human-based selection is a more viable approach for concepts with fuzzy boundaries until validated ontologies for them are published.
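Two of the text mining operations named above can be sketched in a few lines of Python: extracting the sentences in which a word combination co-occurs, and building a word-resource map that records which full texts mention each word. This is a minimal sketch under stated assumptions: full texts are already converted to plain text, sentences are split with a naive regular expression, and tokens are lowercase ASCII letters; interactive word trees are not shown, and the function names are hypothetical.

```python
import re
from collections import defaultdict


def sentences(text):
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokens(text):
    """Lowercase alphabetic tokens (ASCII letters only, a simplifying assumption)."""
    return set(re.findall(r"[a-z]+", text.lower()))


def extract_passages(full_texts, combination):
    """Per resource, the sentences in which all words of the combination co-occur."""
    words = {w.lower() for w in combination}
    hits = defaultdict(list)
    for doc_id, text in full_texts.items():
        for sentence in sentences(text):
            if words <= tokens(sentence):
                hits[doc_id].append(sentence)
    return dict(hits)


def word_resource_map(full_texts, vocabulary):
    """Map each vocabulary word to the set of resources whose full text mentions it."""
    doc_tokens = {doc_id: tokens(text) for doc_id, text in full_texts.items()}
    return {
        word: {doc_id for doc_id, toks in doc_tokens.items() if word.lower() in toks}
        for word in vocabulary
    }


if __name__ == "__main__":
    docs = {
        "p1": "Tree planting raised incomes. Yields were unchanged.",
        "p2": "Farmer incomes fell after drought. Tree cover grew.",
    }
    print(extract_passages(docs, ["tree", "incomes"]))
    # {'p1': ['Tree planting raised incomes.']}
```

Because matching runs on full texts rather than on titles and abstracts, a resource whose title promises a broad impact but whose body never links the relevant words does not produce a passage, while a modestly titled one can.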

3.3. Using Metadata Sets for Mapping and Synthesizing the Evidence

Publication metadata have not been used in the conventional production of systematic reviews and maps. Although the location, the period in which the evidence was generated, and the type of publication play essential roles in inclusion and exclusion criteria, they are treated as external variables that define the scope of the review rather than as internal variables that can be used to compare the evidence available across different locations and times [35,36]. A significant part of international development and social impact research is, in fact, multi-location and context-dependent, and it spans multiple periods, since development and social impact are long-term processes [24,37]. Including and excluding resources based on time and location can therefore lead to a loss of relevant evidence, and the learning that could be generated by comparing times and locations is lost.
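Treating location and time as internal variables means tabulating the evidence across them instead of filtering on them. The following Python sketch illustrates this under assumptions: each record carries hypothetical `countries` and `period` fields (first and last year of data collection), and a multi-location, multi-period study is counted once in every country-decade cell it covers.

```python
from collections import Counter


def decades(start, end):
    """Decade labels spanned by a study period, e.g. (2008, 2014) -> ['2000s', '2010s']."""
    return [f"{d}s" for d in range(start // 10 * 10, end // 10 * 10 + 1, 10)]


def evidence_grid(records):
    """Count resources per (country, decade) cell instead of excluding them by scope.

    Each record is assumed to have hypothetical 'countries' and 'period' fields;
    a multi-country, multi-decade study adds one to every cell it covers.
    """
    grid = Counter()
    for rec in records:
        start, end = rec["period"]
        for country in rec["countries"]:
            for decade in decades(start, end):
                grid[(country, decade)] += 1
    return grid


if __name__ == "__main__":
    records = [
        {"countries": ["Kenya", "Uganda"], "period": (2008, 2014)},
        {"countries": ["Kenya"], "period": (2015, 2019)},
        {"countries": ["Peru"], "period": (2003, 2003)},
    ]
    for cell, count in sorted(evidence_grid(records).items()):
        print(cell, count)
```

Sparse or empty cells in such a grid point to locations and periods with little evidence, a comparison that is not possible when the same variables are used only to set the review scope.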

Other publication metadata, such as author profiles, the organizational affiliations associated with publications, and the funding agencies of the research, are hardly used. Since author profiles, organizational affiliations, and funding agencies indicate potential conflicts of interest in a broad sense and give information about the inclusivity and diversity of the agencies that generate the evidence, excluding them from syntheses and maps risks reinforcing an illusion that the evidence is impartial.

In our hybrid approach, we use metadata as internal variables to present the evidence in a more granular way. Using a combination of academic reference management software and word-publication text analysis techniques, we create high-quality, comparable metadata and make authors, organizations, and multiple contextual variables part of the synthesis. This enables us to present specific configurations of time, space, individual, and organizational factors that can lead to specific international development and social impact outcomes. We also combine the metadata from academic databases with other data sources, such as national statistics and datasets of international organizations such as United Nations organizations, to enrich the evidence that can inform the research question of the systematic review or map.
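Creating comparable metadata usually starts with merging duplicate versions of the same publication, one of the evidence-source problems noted in Section 2, before attaching external data. The Python sketch below is a hypothetical illustration, not the reference-management workflow used by the authors: it treats records as matching when they share a DOI or a normalized title, and `enrich` attaches values from a country-keyed indicator table that stands in for national statistics or United Nations datasets.

```python
import re
import unicodedata


def normalize_title(title):
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch)).lower()
    text = re.sub(r"[^a-z0-9 ]", " ", text)
    return " ".join(text.split())


def deduplicate(records):
    """Keep the first record of each group sharing a DOI or a normalized title."""
    seen, unique = set(), []
    for rec in records:
        keys = {"title:" + normalize_title(rec["title"])}
        if rec.get("doi"):
            keys.add("doi:" + rec["doi"].lower())
        if keys & seen:
            continue  # another version of a publication that is already kept
        seen |= keys
        unique.append(rec)
    return unique


def enrich(records, indicator, name):
    """Attach a country-level indicator (hypothetical table) to each record under `name`."""
    return [
        {**rec, name: {c: indicator.get(c) for c in rec["countries"]}}
        for rec in records
    ]
```

Matching on either key catches a preprint without a DOI whose title differs from the journal version only in punctuation or case; fuzzier matching (for example, on author lists) would need further, study-specific assumptions.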

4. Towards More Effective, Efficient and Societally Relevant Systematic Reviews and Maps in International Development and Social Impact Sectors

Our Human Machine Hybrid Approach proposes changes in how systematic reviews and maps in the international development and social impact sectors are conducted. We justify these changes as follows: they increase effectiveness by capitalizing on standardized ontologies and revealing strategic behavior in the formulation of titles, abstracts, and keywords; they increase efficiency by removing the screening step and combining text mining with human auditing; and they increase societal relevance by crowdsourcing literature identification and using metadata more granularly. We argue that, by combining the strengths of human-centered and machine-centered approaches in multiple steps, from building queries to synthesis, our approach significantly improves the way systematic reviews and maps in the international development and social impact sectors are carried out. Given the nature of this perspective paper, the specific details of our approach are not described here; we intend to provide them in a follow-up methods article.

We are aware that our approach might require review teams to have higher digital and technical literacy and greater access to computing and processing power, which might be hard to achieve for teams working in low-income countries and in settings where infrastructure capacity is low. We also recognize that, when review questions include fuzzy concepts, the gains from implementing our hybrid approach might not be high enough to justify changing the way reviews and maps are done. Nevertheless, we believe that, over time, current trends of increasing digital literacy at a global scale, the exponential increase in open-access academic literature, and advances in ontologies will reduce the resource demands of our hybrid approach and make it even more beneficial. Given the existing constraints, the value of this approach will be particularly high for subjects such as forestry and sustainable development, which rely heavily on a wide range of research from many disciplines and on grey literature to provide evidence for decision-making.

Author Contributions

Conceptualization, All; methodology, M.S. and A.G.; software, M.S.; validation, S.C. and A.A.; resources, M.S. and A.A.; data curation, M.S. and A.A.; writing—All; writing—review and editing, All; project administration, M.S. and A.A.; funding acquisition, A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the International Fund for Agricultural Development (IFAD), grant number 2000001661, as part of the project “Strengthening Knowledge Management for Greater Development Effectiveness in the Near East, North Africa, Central Asia and Europe (SKiM)”.

Acknowledgments

We would like to acknowledge Isabelle Stordeur and Enrico Bonaiuti for the effective management and process support that enabled this work and for their contributions to developing our ideas. We thank Valerio Graziano for the ideas he provided.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study, in the collection, analyses, or interpretation of data, in the writing of the manuscript, or in the decision to publish the results.

References

1. Bathaee, Y. The Artificial Intelligence Black Box and the Failure of Intent and Causation. Harv. J. Law Technol. 2017, 31, 889–938.

2. London, A.J. Artificial Intelligence and Black-Box Medical Decisions: Accuracy versus Explainability. Hastings Cent. Rep. 2019, 49, 15–21.

3. Safdar, N.M.; Banja, J.D.; Meltzer, C.C. Ethical considerations in artificial intelligence. Eur. J. Radiol. 2020, 122, 108768.

4. Murphy, K.; Di Ruggiero, E.; Upshur, R.; Willison, D.J.; Malhotra, N.; Cai, J.C.; Malhotra, N.; Lui, V.; Gibson, J. Artificial intelligence for good health: A scoping review of the ethics literature. BMC Med. Ethics 2021, 22, 14.

5. Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

6. Verghese, A.; Shah, N.H.; Harrington, R.A. What This Computer Needs Is A Physician: Humanism and Artificial Intelligence. JAMA 2018, 319, 19–20.

7. Collins, A.M.; Coughlin, D.; Randall, N. Engaging environmental policy-makers with systematic reviews: Challenges, solutions and lessons learned. Environ. Evid. 2019, 8, 2.

8. Laupacis, A.; Straus, S. Systematic reviews: Time to address clinical and policy relevance as well as methodological rigor. Ann. Intern. Med. 2007, 147, 273–274.

9. Oliver, S.; Bangpan, M.; Stansfield, C.; Stewart, R. Capacity for conducting systematic reviews in low- and middle-income countries: A rapid appraisal. Health Res. Policy Syst. 2015, 13, 23.

10. Stern, C.; Munn, Z.; Porritt, K.; Lockwood, C.; Peters, M.D.J.; Bellman, S.; Stephenson, M.; Jordan, Z. An International Educational Training Course for Conducting Systematic Reviews in Health Care: The Joanna Briggs Institute’s Comprehensive Systematic Review Training Program. Worldviews Evid. Based Nurs. 2018, 15, 401–408.

11. Aleixandre-Benavent, R.; Aleixandre-Tudó, J.L.; Castelló-Cogollos, L.; Aleixandre, J.L. Trends in scientific research on climate change in agriculture and forestry subject areas (2005–2014). J. Clean. Prod. 2017, 147, 406–418.

12. Zhang, C.; Fang, Y.; Chen, X.; Congshan, T. Bibliometric Analysis of Trends in Global Sustainable Livelihood Research. Sustainability 2019, 11, 1150.

13. De Filippo, D.; Sanz-Casado, E. Bibliometric and Altmetric Analysis of Three Social Science Disciplines. Front. Res. Metr. Anal. 2018, 3, 34.

14. Martín-Martín, A.; Orduna-Malea, E.; Thelwall, M.; Delgado López-Cózar, E. Google Scholar, Web of Science, and Scopus: A systematic comparison of citations in 252 subject categories. J. Informetr. 2018, 12, 1160–1177.

15. Bates, J.; Best, P.; McQuilkin, J.; Taylor, B. Will Web Search Engines Replace Bibliographic Databases in the Systematic Identification of Research? J. Acad. Libr. 2017, 43, 8–17.

16. Visser, M.; van Eck, N.J.; Waltman, L. Large-scale comparison of bibliographic data sources: Scopus, Web of Science, Dimensions, Crossref, and Microsoft Academic. Quant. Sci. Stud. 2021, 2, 20–41.

17. Dunleavy, P. Why do academics choose useless titles for articles and chapters? Four steps to getting a better title. Impact Soc. Sci. Blog 2014. Available online: https://blogs.lse.ac.uk/impactofsocialsciences/2014/02/05/academics-choose-useless-titles/ (accessed on 28 May 2021).

18. Van de Schoot, R. Symposium Systematic Reviewing 3.0. Rens van de Schoot [Internet]. 20 September 2018. Available online: https://www.rensvandeschoot.com/sysrev30/ (accessed on 27 May 2021).

19. Smith, V.; Devane, D.; Begley, C.M.; Clarke, M. Methodology in conducting a systematic review of systematic reviews of healthcare interventions. BMC Med. Res. Methodol. 2011, 11, 15.

20. Borah, R.; Brown, A.W.; Capers, P.L.; Kaiser, K.A. Analysis of the time and workers needed to conduct systematic reviews of medical interventions using data from the PROSPERO registry. BMJ Open 2017, 7, e012545.

21. Kiwanuka, S.; Cummings, S.; Regeer, B. The private sector as the ‘unusual suspect’ in knowledge brokering for international sustainable development: A critical review. Knowl. Manag. Dev. J. 2020, 15, 70–97.

22. Bullers, K.; Howard, A.M.; Hanson, A.; Kearns, W.D.; Orriola, J.J.; Polo, R.L.; Sakmar, K.A. It takes longer than you think: Librarian time spent on systematic review tasks. J. Med. Libr. Assoc. 2018, 106, 198–207.

23. Kitchenham, B.; Brereton, P. A systematic review of systematic review process research in software engineering. Inf. Softw. Technol. 2013, 55, 2049–2075.

24. Waddington, H.; White, H.; Snilstveit, B.; Hombrados, J.G.; Vojtkova, M.; Davies, P.; Bhavsar, A.; Eyers, J.; Koehlmoos, T.P.; Petticrew, M.; et al. How to do a good systematic review of effects in international development: A tool kit. J. Dev. Eff. 2012, 4, 359–387.

25. Mallett, R.; Hagen-Zanker, J.; Slater, R.; Duvendack, M. The benefits and challenges of using systematic reviews in international development research. J. Dev. Eff. 2012, 4, 445–455.

26. Kim, T.S.; Sohn, S.Y. Machine-learning-based deep semantic analysis approach for forecasting new technology convergence. Technol. Forecast. Soc. Chang. 2020, 157, 120095.

27. Salloum, S.A.; Khan, R.; Shaalan, K. A Survey of Semantic Analysis Approaches. In Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2020; pp. 61–70.

28. Higgins, J.P.T.; Thomas, J.; Chandler, J.; Cumpston, M.; Li, T.; Page, M.J.; Welch, V.A. Cochrane Handbook for Systematic Reviews of Interventions; John Wiley & Sons: Hoboken, NJ, USA, 2019.

29. Petticrew, M.; Roberts, H. Systematic Reviews in the Social Sciences: A Practical Guide; John Wiley & Sons: Hoboken, NJ, USA, 2008.

30. Mengist, W.; Soromessa, T.; Legese, G. Method for conducting systematic literature review and meta-analysis for environmental science research. MethodsX 2020, 7, 100777.

31. Koutsos, T.M.; Menexes, G.C.; Dordas, C.A. An efficient framework for conducting systematic literature reviews in agricultural sciences. Sci. Total Environ. 2019, 682, 106–117.

32. Gough, D.; Oliver, S.; Thomas, J. An Introduction to Systematic Reviews; SAGE: Thousand Oaks, CA, USA, 2017.

33. Mateen, F.J.; Oh, J.; Tergas, A.I.; Bhayani, N.H.; Kamdar, B.B. Titles versus titles and abstracts for initial screening of articles for systematic reviews. Clin. Epidemiol. 2013, 5, 89.

34. Kohl, C.; McIntosh, E.J.; Unger, S.; Haddaway, N.R.; Kecke, S.; Schiemann, J.; Wilhelm, R. Online tools supporting the conduct and reporting of systematic reviews and systematic maps: A case study on CADIMA and review of existing tools. Environ. Evid. 2018, 7, 8.

35. Greenhalgh, T.; Thorne, S.; Malterud, K. Time to challenge the spurious hierarchy of systematic over narrative reviews? Eur. J. Clin. Investig. 2018, 48, e12931.

36. Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ 2021, 372, n160.

37. Sartas, M.; Schut, M.; Proietti, C.; Thiele, G.; Leeuwis, C. Scaling Readiness: Science and practice of an approach to enhance impact of research for development. Agric. Syst. 2020, 183, 102874.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).