Abstract

Sawmilling operations are typically one of the most important links in the wood supply chain, as they take log assortments as inputs and add value to them by processing them into lumber and other semi-finished products. For this kind of operation, and especially for those carried out at a small scale, long-term monitoring data is a prerequisite for making decisions, increasing operational efficiency and improving the precision of operations. In many cases, however, the collection and handling of such data is limited to a few options, which may come at high costs. In this study, a low-cost solution integrating offline object tracking, signal processing and artificial intelligence was tested to evaluate its capability to correctly classify, in the time domain, the events specific to the monitoring of wood sawmilling operations. Discrete scalar signals produced from media files by the tracking functionalities of the Kinovea® software (13,000 frames) were used to derive a differential signal, and a filtering-to-the-root procedure was then applied to the signals. Both the raw and the filtered signals were used as inputs to train an artificial neural network at two levels of operational detail: fully and essentially documented data. While adding the derived signal was worthwhile because it improved the classification outcomes (recall of 92–97%), the filtered signals contributed less to classification accuracy. The use of essentially documented data substantially improved the classification outcomes, and it could be an excellent solution in monitoring applications requiring a basic level of detail. The tested system could represent a good and cheap solution for monitoring sawmilling facilities, with the aim of improving our understanding of their technical efficiency.

Keywords:

big data; automation; efficiency; monitoring; wood sawmilling; operations; low-cost; improvement; integration

1. Introduction

Reliable production monitoring data collected over the long term is of crucial importance in many industries because it provides an informed basis for resource allocation and saving, optimization and operational improvement [1]. In many ways, increasing productivity is nowadays seen as one of the foundations of competitiveness, and monitoring data is commonly obtained through different types of surveys, ranging from less advanced, generalist ones to those able to produce accurate and detailed quantitative data in real time. The wood processing industry is no exception; yet it is frequently seen as having a limited capability to achieve efficient production, which may be the effect of low technical and allocative efficiencies [2], as well as of missing monitoring data, with the latter preventing researchers from finding solutions to the problem. The situation is even more constrained in the case of small-scale sawmills, which rely on simple machines that do not integrate production monitoring systems, operate at low production rates [3,4,5], and lack the financial means to procure sophisticated monitoring systems. In such cases at least, production monitoring solutions are few and are limited by the amount of resources required by the regular or advanced approaches to the problem.

In this regard, long-term assessment of efficiency and productivity requires data on production (i.e., the amount of manufactured products) and on time consumption (i.e., the time spent manufacturing the products) [6]. Moreover, to build a clearer picture of the factors that should be (re)engineered for better performance, productivity studies are often carried out at the elemental level [7]. Also of particular importance in production monitoring is the ability to identify and delimit different kinds of delays, which provides the computational basis for the net and gross productive performance metrics [7]; for the delay-free time, studies are often framed around the main functions that a machine or tool performs, with these functions also interpreted in a spatial context.

While production data is often easy to obtain because it forms the basis of market transactions, monitoring of productive performance relies on time-and-motion studies that can be done at different resolutions and by different means [7]. Regarding the means, and assuming the absence of integrated monitoring systems, the current options include traditional chronometry studies [3,8], video surveillance and other kinds of external sensors [9]. For long-term monitoring, however, video surveillance and sensor-based approaches hold the most promising potential due to their ability to collect and store events of interest over long time windows. In particular, video surveillance has the important capability of capturing and storing the real sequence of events [1]; however, the office effort needed to analyze the data by human interpretation may be challenging, especially when the observed processes or study designs are complex [10].

To overcome this situation, solutions are needed that learn and classify the events of interest and their associated time consumption directly from the video files. Such an option could be enabled by combining a series of properties and methods associated with video surveillance, signal processing and artificial intelligence. One of them is producing a useful signal from the collected video files, which is enabled by recent developments in algorithms [11] and software [12] for video tracking applications; the latter are based on the supervised definition of an object in a given frame, followed by its detection and tracking in the subsequent frames [13]. By doing so, the frame count, at a known frame rate, can be used as a counter to compute the time consumption of events. Object tracking may be implemented online or offline, and it may refer to short-term or long-term tracking of single or multiple objects [14]; it works well on 2D scenes to detect the successive locations taken by the tracked objects. The motion identified by tracking a given object across successive frames can then be used to produce a discrete signal in the scalar domain, a capability provided by offline video-tracking software such as Kinovea®, which has typically been used in research on human performance monitoring and on the kinematics of human body segments [12,15,16]. The individual scalar signals may then be mapped to the time domain and used, either directly or after the application of different filters, as inputs for supervised learning algorithms such as artificial neural networks (ANNs). In this regard, ANNs [17] and other artificial intelligence (AI) techniques are able to solve multivariate non-linear problems, which are quite common when nonlinear signals characterizing multi-class problems are used as classification inputs [9,18,19].
Similar to object tracking software, implementations of ANNs, as well as of many other types of classification algorithms, have recently become affordable through the development of free, open-source software.

The goal of this study was to test the performance of a system implemented for data collection, signal processing and supervised classification, with application to the long-term performance monitoring of small-scale wood processing facilities. One of the basic assumptions and requirements in developing and testing the system was to rely as much as possible on freely available tools and software to produce accurate classifications of the operational events in the time domain. The system consisted of an affordable video camera to collect the data and the freely available Kinovea® (version 0.8.27, https://www.kinovea.org/) and Orange Visual Programming Software® (version 3.2.4.1, Ljubljana, Slovenia) software tools to produce the needed discrete scalar signals and to check the accuracy of the classification. Therefore, the objectives of this study were (i) to check the classification performance of the operational events in the time domain using the original signals, as a baseline, less resource-intensive alternative; (ii) to check the classification performance enhancements, if any, brought by adding a derived, simple-to-compute signal based on the original signals, as an additional, more discriminative solution for the implementation of the ANN algorithm; and (iii) to check the classification performance enhancements, if any, brought by filtering the signals to their roots with median filters, as a fine-tuned, discriminative solution for the implementation of the ANN algorithm. While acknowledging that classification performance may differ in the testing phase, the ANN workflow was restricted to the training phase for achieving most of this study's objectives.
Further, the ANN models were trained at two levels of detail: one using data documented at the finest possible level (elemental study), and one using data aggregated into the two classes that are most important for production monitoring, namely machine working and machine not working.

2. Materials and Methods

2.1. Video Recording and Media Input

The media files used to test the system were captured in a sawmilling facility with a cheap, small camera mounted near the steel frame of the sawmilling machine, with its field of view oriented perpendicularly to the active frame (Figure 1). The surveyed machine (Mebor, model HTZ 1200) operates similarly to that described in [9], the main differences being its drive system, which was electric, and the maximum allowable size of the input logs, which was larger. During the field study, the machine processed Norway spruce logs with diameters ranging from 26 to 69 cm (average of 45 cm), with the operator free to choose the cutting settings and sequences, at an air temperature of ca. 25 °C, and without any interference with the camera’s view caused by sawing dust.

Figure 1.

A frame extracted from the Kinovea® software showing the camera’s field of view, scalar coordinate system (red) and the tracker placement (yellow).

At the time of the field study (2017), the camera was placed and used to collect the data needed to estimate the productivity of operations by a rather traditional approach, which involved manual measurement of the wood inputs and outputs and a chronometry method based on regular video surveillance. This is why the camera was used to continuously record a full day of operation, and why no dedicated, highly reflective markers were placed on the moving parts of the machine. Nevertheless, given its placement, the camera recorded the operations at distances of ca. 1 to 5 m; it produced a set of video files 20 min in length each (as set in the camera settings), at a resolution of 1280 × 720 pixels and a sampling rate of 21 frames per second (fps). During recording, the light conditions were good, ensuring good visibility in the collected files, owing to the natural and artificial light available in the hangar of the facility. For the purpose of this study, all the video files were played back in detail in the office phase to check them against two selection criteria. The first was that the recording contained all of the work elements typical of the surveyed machine, including the different delays that characterized the non-working events of the machine. The second was that the field of view was not obstructed by moving features such as workers or other machines. Based on this analysis, one media file was selected for further processing, and the parts of it that failed to meet the above criteria were removed.
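Because frames were sampled at a fixed rate of 21 fps, the frame index can serve as a clock, each frame standing for 1/21 s. The minimal Python sketch below illustrates this idea under that assumption; it is not the authors' procedure, and the function names and example codes are hypothetical. It collapses a per-frame sequence of event codes into consecutive events and converts their frame counts into seconds.

```python
from itertools import groupby

FPS = 21  # sampling rate reported for the recordings (frames per second)


def frames_to_seconds(n_frames: int, fps: int = FPS) -> float:
    """Convert a frame count into seconds at a fixed frame rate."""
    return n_frames / fps


def event_durations(codes, fps: int = FPS):
    """Collapse per-frame event codes into (code, n_frames, seconds) runs.

    Consecutive frames carrying the same code are treated as one event.
    """
    runs = []
    for code, group in groupby(codes):
        n = sum(1 for _ in group)
        runs.append((code, n, frames_to_seconds(n, fps)))
    return runs


# Hypothetical per-frame codes covering part of one cutting cycle.
codes = ["MD"] * 21 + ["MF"] * 63 + ["MU"] * 21 + ["MB"] * 42
for code, n, sec in event_durations(codes):
    print(code, n, round(sec, 2))
```

At 21 fps, a 20-min file holds 25,200 frames, and the 13,116 frames of the reference dataset correspond to about 624.6 s.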

2.2. Signal Extraction, Processing, and Event Documentation

The discrete scalar signals were extracted from the media file by means of the free Kinovea® software. An example of the settings used is given in Figure 1. To provide a reference for measurement by tracking, a convenient coordinate system was set with the y-axis close to the middle of the field of view captured in the media file; the origin of the coordinate system was placed at about one seventh of the field of view’s height (Figure 1). Based on these preliminary settings, the trajectory configuration tools were used to set up the tracking point. This was made possible by the presence on the machine’s frame of some distinguishable geometric features resembling typical markers (Figure 1), one of which was selected as a reference in the first analyzed frame. Following the selection, the machine’s movements were tracked automatically at 50% of the real playback speed of the media file, and the resulting data was exported as a Microsoft Excel (Microsoft, Redmond, WA, USA) XML file. For each frame, the file contained the coordinates (x, y), in pixels, and the time of each coordinate pair. This output formed the reference dataset of this study, and it accounted for a total of 13,116 frames.

Based on the extracted data, and for convenience in graphically reporting some of the results, the original data collected on the two axes was downscaled by a factor of 1/100. The resulting datasets (XREF, YREF) were then used as direct inputs for two purposes: to compute a new, derived signal (ΔXYREF) as the restricted positive difference between XREF and YREF (i.e., YREF was subtracted from XREF and negative results were taken as positive values), and to be filtered with a median filter implemented over a 3-observation window, iterated until the root signals were reached. These measures were taken to improve the separability of the data, under the assumption that the signals’ patterns would carry less noise.
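The restricted positive difference amounts to the absolute difference ΔXYREF(t) = |XREF(t) − YREF(t)|, computed frame by frame on the downscaled coordinates. A short Python sketch under that reading follows; the function names and the pixel values are hypothetical.

```python
def downscale(values, factor=100):
    """Downscale pixel coordinates by dividing them by `factor` (i.e., by 1/100)."""
    return [v / factor for v in values]


def derived_signal(x, y):
    """Restricted positive difference: |x - y| at each observation."""
    if len(x) != len(y):
        raise ValueError("signals must have the same number of observations")
    return [abs(a - b) for a, b in zip(x, y)]


# Hypothetical tracked pixel coordinates for three frames.
x_px = [300, 250, 180]
y_px = [100, 400, 180]
x_ref, y_ref = downscale(x_px), downscale(y_px)
print(derived_signal(x_ref, y_ref))  # [2.0, 1.5, 0.0]
```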

The benefits of using median-filtered data are explained, for instance, in [20]; in short, filters from this class may improve the signal-to-noise ratio while yielding a dataset that is not altered by truncation, for instance, in the time domain. This property is important for preserving the distribution of time consumption across categories, and the median filtering approach was similar to that detailed in [9]. Regarding the use of root signals, the approach was based on the theoretical background given in [21], and it was carried out to achieve more uniform signals and to remove the noise caused in the original data by the inter-pixel movement of the tracker. It is worth mentioning that it was anticipated from the outset, based on an initial plot of XREF and YREF in the time domain, that the computational effort would be limited, and that the approach may be suitable only for applications such as the one described in this study, since the computational effort needed to obtain root signals may, by definition, be extensive [21]. Median filtering to the root required five iterations for XREF and six iterations for YREF. Based on the filtering results, a new set of signals (XROOT, YROOT and ΔXYROOT) was developed, taking as a reference the number and order of observations specific to YROOT. This approach led to a minor data loss at the extremities of the datasets (12 observations in total). Table 1 shows the six signals used in the training phase of the ANN.
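The iterated filtering can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it assumes that each pass of the 3-observation running median drops the first and last observations (no padding), which would be consistent with the 12 observations reported as lost after six passes on YREF, although the end-point handling is not described. A signal is treated as a root once one more pass leaves its interior unchanged. The helper `align_to` is hypothetical; it trims a longer root signal symmetrically to the length of a shorter one.

```python
def median3(x):
    """One pass of a 3-observation running median; both end points are dropped."""
    return [sorted(x[i - 1:i + 2])[1] for i in range(1, len(x) - 1)]


def root_signal(x):
    """Iterate the 3-point median filter until the signal stops changing.

    Returns the root signal and the number of passes that changed it.
    Under the no-padding assumption, each changing pass removes one
    observation at each end.
    """
    x = list(x)
    passes = 0
    while len(x) >= 3:
        y = median3(x)
        if y == x[1:-1]:  # interior unchanged: x is a root of the filter
            break
        x = y
        passes += 1
    return x, passes


def align_to(shorter, longer):
    """Trim `longer` symmetrically so it matches the length of `shorter`."""
    extra = len(longer) - len(shorter)
    if extra < 0 or extra % 2:
        raise ValueError("expected a non-negative, even length difference")
    k = extra // 2
    return longer[k:len(longer) - k]


signal = [1, 1, 9, 1, 1, 1]  # hypothetical signal with a one-frame spike
root, n_passes = root_signal(signal)
print(root, n_passes)  # [1, 1, 1, 1] 1
```

Under this assumption, five passes on XREF and six on YREF would shorten the signals by 10 and 12 observations, respectively, so XROOT would lose one more observation at each end to match YROOT before ΔXYROOT is computed.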

Table 1.

Signals extracted and processed and their purpose in this study.

Data coding was done by analyzing the media file in the greatest possible detail and by considering the kinematics of the machine. This step used the work sequences and codes shown in Table 2. In general, machines of this class operate by adjusting the height of the cutting frame through upward or downward movements before cutting and before returning the frame to start a new work sequence; in addition, they enable the forward movement of the cutting frame to carry out the active cut and its backward movement, on the empty return, to reach the starting point of a new operational sequence.

Table 2.

Codes used to document the events.

Accordingly, to detach a wood piece from the log, the typical sequence was to move the frame downward, move it forward while cutting, move it upward and move it backward. This sequence was adapted, by convention, to the order of log processing. Using the codes assigned by analyzing the video files, four datasets were developed and used in the ANN training. The first set contained the XREF, YREF, and ΔXYREF data coded in full detail, as shown in Table 2, and the second set contained the same signals with the data coded as working (W) and non-working (S) events. The same data organization procedure was used for the last two datasets, which contained the XROOT, YROOT, and ΔXYROOT signals documented in full and essential detail, respectively. Therefore, the analysis of the two groups of signals was extended to include two alternatives: fully detailed data (FULL), which included the events MD, MF, MU, MB, and S, and essentially detailed data (ESSEN), which included the events S and W.
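Since the ESSEN resolution aggregates the FULL one, the essential labels can be derived mechanically from the detailed codes, assuming that every movement event (MD, MF, MU, MB) counts as working and S as non-working. A minimal Python sketch (the names are hypothetical):

```python
# FULL-resolution codes (Table 2) mapped to the ESSEN resolution.
FULL_TO_ESSEN = {"MD": "W", "MF": "W", "MU": "W", "MB": "W", "S": "S"}


def to_essen(full_codes):
    """Aggregate fully detailed event codes into working (W) / non-working (S)."""
    return [FULL_TO_ESSEN[c] for c in full_codes]


print(to_essen(["MD", "MF", "S", "MU", "MB", "S"]))
# ['W', 'W', 'S', 'W', 'W', 'S']
```

An unknown code raises a `KeyError`, which flags coding mistakes instead of silently assigning them to a class.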

2.3. Setup and Training of the ANN

The ANN was set up for training using the freely available Orange Visual Programming Software (version 3.2.4.1) [22]. The main parameters of the ANN were configured similarly to those explained in detail in [9], for the same reasons regarding computational cost and classification performance. The setup used the rectified linear unit (ReLU) activation function, the Adam solver and an L2 penalty regularization term of 0.0001; in addition, three hidden layers of 100 neurons each were used, and the number of iterations for training a given ANN model was set at 1,000,000. For all models, training and scoring were done by stratified cross-validation with 20 folds. The training procedure used two signal sets (REF and ROOT) with seven possible combinations each, as shown in Table 3. These combinations were used to cover the designed analysis resolutions and to identify, by training, which one produced the best results. Using this approach, a total of 28 ANN models were trained. For example, REF1 refers to training the ANN with the fully detailed XREF signal and then with the essentially detailed XREF signal.
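The seven combinations per signal class are the non-empty subsets of the three signals, and 2 signal classes × 7 combinations × 2 resolutions give the 28 trained models. The Python sketch below enumerates them and records the reported ANN settings as keyword arguments for scikit-learn's `MLPClassifier`; we assume, without the text confirming it, that Orange's neural network learner is built on that class, so this is only an approximate equivalent. The order of the two-signal combinations involving ΔXY may not match the numbering of REF5 and REF6 in Table 3.

```python
from itertools import combinations

SIGNALS = ("X", "Y", "dXY")  # X, Y and the derived |X - Y| signal
CLASSES = ("REF", "ROOT")
RESOLUTIONS = ("FULL", "ESSEN")

# Settings reported in the text, written as scikit-learn MLPClassifier
# keyword arguments (an assumed, approximate equivalent of the Orange setup).
MLP_PARAMS = {
    "hidden_layer_sizes": (100, 100, 100),
    "activation": "relu",
    "solver": "adam",
    "alpha": 0.0001,  # L2 penalty
    "max_iter": 1_000_000,
}
N_FOLDS = 20  # stratified cross-validation folds


def signal_combinations(signals=SIGNALS):
    """All non-empty subsets of the signals: 2**3 - 1 = 7 combinations."""
    return [c for r in range(1, len(signals) + 1) for c in combinations(signals, r)]


def model_grid():
    """Label every trained model, e.g. ('REF', 7, 'FULL', ('X', 'Y', 'dXY'))."""
    grid = []
    for cls in CLASSES:
        for i, combo in enumerate(signal_combinations(), start=1):
            for res in RESOLUTIONS:
                grid.append((cls, i, res, combo))
    return grid


print(len(signal_combinations()), len(model_grid()))  # 7 28
```

If scikit-learn is installed, `MLPClassifier(**MLP_PARAMS)` could be evaluated with `cross_val_predict(model, X, y, cv=StratifiedKFold(n_splits=N_FOLDS))`. The iteration setting acts as an upper bound, since the Adam solver may stop earlier once its convergence tolerance is met.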

Table 3.

Combinations, signals and analysis resolutions used in the training phase of the ANN.

Following the training procedure, the most commonly used performance metrics, namely classification accuracy (CA), precision (PREC), recall (REC), and F1 (the harmonic mean of PREC and REC), were calculated for each of the trained ANN models. Definitions, meaning, and interpretation of these classification performance indicators may be found, for instance, in [23,24]; as a supplementary check of classification performance, the area under the curve (AUC) was computed for each model. While all of the computed metrics are important in characterizing classification performance, this study focused on the REC metric, for the reasons given in [25], which apply to time-and-motion studies. The computer used to train the ANN models had the configuration given in [9]. To differentiate between the training costs incurred by the potentially different complexities of the signals, the training time of each model was recorded, in seconds.
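For readers who want to reproduce the global metrics outside Orange, the Python sketch below computes CA and per-class PREC, REC and F1 from paired true and predicted labels, then averages them weighted by class support. Whether Orange reports weighted or unweighted averages is an assumption here; AUC is omitted because it needs class probabilities rather than labels. The function names and example labels are hypothetical.

```python
from collections import Counter


def per_class_metrics(y_true, y_pred):
    """Precision, recall and F1 for each class from paired label lists."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = Counter(zip(y_true, y_pred))
    metrics = {}
    for c in labels:
        tp = pairs[(c, c)]
        fp = sum(n for (t, p), n in pairs.items() if p == c and t != c)
        fn = sum(n for (t, p), n in pairs.items() if t == c and p != c)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        metrics[c] = {"PREC": prec, "REC": rec, "F1": f1, "support": tp + fn}
    return metrics


def global_metrics(y_true, y_pred):
    """CA plus support-weighted averages of PREC, REC and F1."""
    per_class = per_class_metrics(y_true, y_pred)
    n = len(y_true)
    ca = sum(t == p for t, p in zip(y_true, y_pred)) / n
    avg = {k: sum(m[k] * m["support"] for m in per_class.values()) / n
           for k in ("PREC", "REC", "F1")}
    return {"CA": ca, **avg}


y_true = ["W", "W", "S", "S", "W"]
y_pred = ["W", "S", "S", "S", "W"]
print(global_metrics(y_true, y_pred))
```

With support weighting, the averaged REC equals CA by construction, because weighting each class recall by its support simply sums the true positives over all observations.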

2.4. Data Processing and Analysis

Data processing and analysis was done mainly in Microsoft Excel® (Microsoft, Redmond, WA, USA, 2016 version) and relied on visual comparisons of the data. Statistical comparisons of the classification performance metrics were therefore assumed to be of little relevance, because the training outcomes were quite different and because even a small difference in a given pair of metrics could have significant effects if the results were scaled to larger datasets. As a first step of data analysis, the XREF, YREF, and ΔXYREF signals were plotted in the time domain against the codes assigned to the events identified and delimited at the FULL and ESSEN resolutions. While this helped in understanding the kinematics of the machine, only a representative portion of the data is shown in the results section due to limited graphical space. For each resolution (FULL, ESSEN) and signal class (REF, ROOT), the main descriptive statistics, namely the absolute and relative frequencies of the encoded events, were computed and reported. Then, the training time was reported for each signal, by signal class and resolution.
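Because every frame is one observation, the relative frequency of an event code also approximates its share of the observed time. A minimal Python sketch of the absolute and relative frequencies reported per resolution (the function name and example codes are hypothetical):

```python
from collections import Counter


def event_frequencies(codes):
    """Absolute and relative (%) frequency of each event code."""
    counts = Counter(codes)
    n = len(codes)
    return {c: (k, 100 * k / n) for c, k in sorted(counts.items())}


codes = ["S"] * 3 + ["MF"] * 12 + ["MB"] * 5
for code, (abs_n, rel) in event_frequencies(codes).items():
    print(f"{code}: {abs_n} ({rel:.1f}%)")
```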

Classification performance was reported at the same levels of detail, by graphical comparison. At this stage, however, only the global classification performance of each model was considered for reporting. Based on these outcomes, the best models were selected for both the FULL and ESSEN resolutions, and their classification performance metrics were reported in detail, at the event level. The last task used a more detailed analytical approach to identify and characterize the misclassified events. For this step, the data of the two models with the best global classification performance was exported from the ANN training software into Microsoft Excel® (Microsoft, Redmond, WA, USA, 2016 version), where sorting procedures were used to count the number and share of misclassifications at the event level; this step was complemented by a graphical representation of two relevant examples of misclassifications extracted from the analyzed models.
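The sorting step that counts misclassifications at the event level can be sketched in Python as follows: only off-diagonal (true, predicted) pairs are kept, and each true event's share of all errors is computed. The function name and labels are hypothetical.

```python
from collections import Counter


def misclassifications(y_true, y_pred):
    """Count off-diagonal (true, predicted) pairs and each true class's error share."""
    errors = Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)
    total = sum(errors.values())
    by_true = Counter()
    for (t, _), n in errors.items():
        by_true[t] += n
    shares = {t: n / total for t, n in by_true.items()} if total else {}
    return errors, shares


y_true = ["MB", "MB", "MD", "MF", "S"]
y_pred = ["MF", "MF", "MF", "MF", "S"]
errors, shares = misclassifications(y_true, y_pred)
print(dict(errors))
print(shares)
```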

3. Results

3.1. Description of Data and Training Time

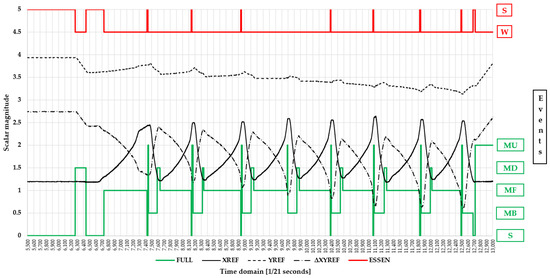

Figure 2 gives an example, in the time domain, of the event codes plotted against the signals extracted from the media files by tracking. The kinematics of the active frame were clearly distinguishable in the signal patterns shown.

Figure 2.

A partition of the original data extracted by tracking plotted against the coded events in the time domain. Legend: meaning of S, W, MU, MD, MF, and MB is given in Table 2, FULL—fully documented data, including the events S, MU, MD, MF and MB, ESSEN—essentially documented data, including the events S and W, meaning of XREF, YREF, and ΔXYREF is given in Table 1.

For instance, a full cutting cycle consisting of a succession of MD, MF, S, MU, and MB events can be identified quite easily (e.g., Figure 2, time domain from ca. 7400 to 8150, expressed in frames of 1/21 s, where the events are given by the pattern of the green line). Moreover, at the ESSEN resolution, the working (W) and non-working (S) events may be easily identified in the data patterns shown (Figure 2, events shown by the pattern of the red line). Given the setup and placement of the camera, the amplitude variation of XREF was wider than that of YREF, and it was largely carried over into the amplitude variation of ΔXYREF.

Table 4 shows the absolute and relative frequencies of the events in their corresponding datasets for the two resolutions studied. In both cases, the observations in which the machine was working accounted for ca. 85% of the datasets. At the FULL resolution, moving up (MU) and moving down (MD) occurred at relatively similar speeds; therefore, the numbers of observations for the two were close. However, this was not the case for moving forward (cut, MF) and backward (MB), because the active cut was done at a considerably lower speed. Therefore, the effective cutting of wood predominated in the observations (ca. 58%); this event is also important because it can be used to infer the number of cuts made or pieces detached from the logs.

Table 4.

Absolute and relative frequency of the events in the signals used.

Table 5, on the other hand, shows the variation of the ANN training time for the signal(s) used in the training process. The general trend was that training the ANN on signals extracted from the X axis (XREF, XROOT) systematically took less time, while any combination that used data from the Y axis took considerably more time to train. From this point of view, it seems that it was easier for the ANN to detect patterns in the data coming from the X axis than in those coming from the Y axis, which is supported by the data shown in Figure 2. Training costs in terms of time for the derived signal (ΔXY) were lower, probably due to the contribution of the X-axis data to its computation. In general, the combination of X, Y, and ΔXY signals took considerably more training time than any other alternative. Further, the signals filtered to the root (ROOT) did not considerably reduce the training time, as the ratios between the time needed to train the ROOT and the REF signals were in the range of 0.76–1.17 for the FULL resolution and 0.80–1.21 for the ESSEN resolution. On the other hand, the largest differences in training time were caused by classification complexity (FULL, ESSEN); with one exception, the ratio of the time needed to train on essential data to that needed to train on fully described data was in the range of 0.20–0.73. From this point of view, and assuming that the objectives of a given application are met, less detailed data could be a better approach for saving resources in the case of extensive datasets.

Table 5.

Training time of each model.

3.2. Overall Classification Performance

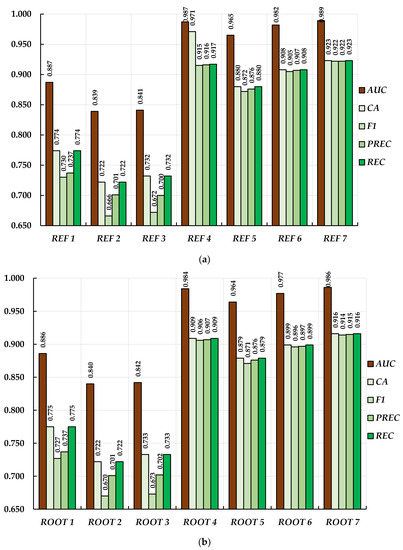

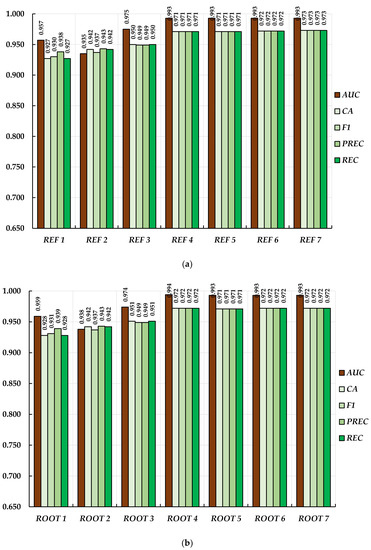

The general results of the classification performance are given in Figure 3 and Figure 4, respectively. A comparison of the two shows that the signals characterizing the ESSEN resolution returned better classification performance irrespective of the signal(s) used in the training phase. Within the same level of detail in data coding, however, the results varied considerably. At the FULL resolution, both the REF and ROOT classes of signals returned poorer classification results when a single signal was used as the training input. For instance, REF1, REF2, and REF3 corresponded to the individual use of XREF, YREF and ΔXYREF, respectively, in the training phase. Accordingly, ROOT1, ROOT2, and ROOT3 corresponded to the individual use of XROOT, YROOT, and ΔXYROOT for training. In these circumstances, classification accuracy, precision, and recall (CA, PREC, REC) were rather average, with values ranging from ca. 0.7 to 0.8. Thus, in these cases, and considering the REC metric, 20–30% of the data could well be misclassified. Nevertheless, the X signals seemed to carry more information for classifying the events, as their REC values were the highest.

Figure 3.

General classification performance metrics computed for the signals characterizing the FULL resolution: (a) classification performance metrics for the REF signals, (b) classification performance metrics for the ROOT signals. Legend: REF1—REF7 and ROOT1—ROOT7 have the meanings given in Table 3, AUC—area under curve, CA—classification accuracy, F1—harmonic mean of PREC and REC, PREC—precision, REC—recall.

Figure 4.

General classification performance metrics computed for the signals characterizing the ESSEN resolution: (a) classification performance metrics for the REF signals, (b) classification performance metrics for the ROOT signals. Legend: REF1—REF7 and ROOT1—ROOT7 have the meanings given in Table 3, AUC—area under curve, CA—classification accuracy, F1—harmonic mean of PREC and REC, PREC—precision, REC—recall.

At the same resolution and irrespective of the signal class, classification performance improved substantially when two or more signals were used in a given classification attempt. For instance, when both XREF and YREF were used (REF4), accuracy, precision and recall had values of 0.916 to 0.971, compared with 0.907 to 0.909 for the ROOT signal class. For both classes of signals, the best classification performance was found when all three signals were used (XREF, YREF, and ΔXYREF on the one hand, and XROOT, YROOT, and ΔXYROOT on the other). In these cases, the main classification performance indicators exceeded 0.915, and performance was better when training on the REF class of signals.

Given these results, for the REF class of signals it would be advisable to compute and use a derived signal (ΔXYREF), as defined in this study. This could be important because using it improved classification performance by up to 0.6 percentage points in terms of the REC metric. While this may seem a low improvement, scaling should be accounted for when interpreting such data. For instance, this study used ca. 13,000 observations for the REF class of signals, which correspond to ca. 620 s of detailed analysis. For this dataset, an improvement of the REC metric from 0.917 (using only the signals extracted directly by tracking) to 0.923 (which requires an additional, yet simple, calculation of ΔXYREF) would account for an additional correct classification of ca. 4 s. Scaled to at least one operational day, this would mean an additional correct classification of ca. 192 s. The same could apply to the ROOT signal class but, given its classification performance outcomes and the additional effort needed to filter the signals to the root, the attempt does not seem worthwhile. On the other hand, if less detailed data (in terms of the types of events surveyed and coded) could meet the needs of a study, the results shown in Figure 4 are encouraging. In this case, keeping the same interpretations regarding the feasibility of the signal filtering procedure and the type of signals used to train the ANN models, the use of all three signals from the REF class led to classification performance metrics of 0.973, which could be interpreted as excellent event (time) classification results. In this case (i.e., the ESSEN resolution), classification performance seemed to be less affected by the number of signals used to train the ANN.
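The scaling argument above reduces to multiplying the recall gain by the analyzed time, that is, the number of observations divided by the frame rate. The Python sketch below reproduces the dataset-level figure under the assumption that the REF dataset holds the 13,116 frames reported in Section 2.2; the function name is hypothetical.

```python
FPS = 21  # frames per second of the recordings


def extra_correct_seconds(rec_gain, n_observations, fps=FPS):
    """Extra correctly classified time (s) implied by a recall gain."""
    return rec_gain * n_observations / fps


gain = 0.923 - 0.917  # REC with the derived signal added minus REC without it
print(round(extra_correct_seconds(gain, 13_116), 1))  # 3.7
```

The result scales linearly with the number of observations, so the same function applies to any recording length.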
In fact, the values of the classification performance metrics ranged from 0.927 to 0.973, and they were also less affected by the class of signals used (REF, ROOT). However, given the results shown in Figure 3 and Figure 4, the REF7 combinations for both the FULL and ESSEN resolutions were retained for a more detailed analysis.

3.3. Characteristics of the Best Classification Models

The detailed classification performance results for the two retained models are given in Table 6. At the FULL resolution, classification accuracy exceeded 0.95 (95%) for every event. However, the REC values ranged from ca. 70% (MD) to ca. 98% (MF); the latter high value is also very important because it largely characterizes the active cutting of the machine. Therefore, except for the downward movement, all the values shown indicate very good classification results. It is also important to consider the amount of data to which a given classification performance metric applies. For instance, the downward and upward movements accounted for less than 15% of the data.

Table 6.

Detailed description of the classification performance for the best models.

Similar results were found for the classification performance of REF7 at the ESSEN resolution. Machine working, which is essential in productivity studies to delimit and account for productive time [6], yielded a REC value of 98.5%, while machine non-working yielded a REC value of 90.6%. The misclassifications of the best models are detailed in Table 7 using a matrix comparison approach.

Table 7.

Misclassifications in the best models.

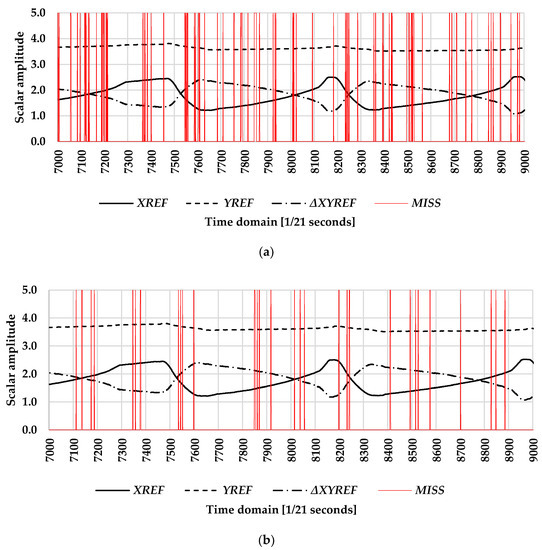

In total, there were 1007 misclassified events for REF7 at the FULL resolution, and these were distributed fairly evenly among the movement events, with the backward movement accounting for the largest share. In this case, most of them were misclassified as forward movements, which was also the case for the downward movement misclassifications. At the ESSEN resolution, on the other hand, the number of misclassified events was much lower (358), and the misclassifications were distributed quite evenly between the two classes (W and S). Given the tools used to collect the data and the approach used to extract the signals, it was assumed that some of the misclassifications could be specific to particular geometries or regions in the signals' patterns. To check visually for such behaviors, Figure 5 shows a representative partition of the data. Figure 5 suggests that the misclassifications did not follow a specific pattern in relation to the geometry of the input signals, nor did they form specific groupings in the data. Figure 5 also shows that, over approximately the same data range, the ESSEN resolution produced considerably fewer misclassifications, as already indicated by the data in Figure 3 and Figure 4.
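The misclassification counts above and the MISS series plotted in Figure 5 can both be derived from the time-ordered true and predicted labels. The Python sketch below assumes the labels are available as two equally long sequences (the sequences and function names are hypothetical). It keeps the off-diagonal (true, predicted) pairs of the confusion matrix and builds a per-observation flag that is 0 when an observation is correctly classified and 5 when it is misclassified, following the legend of Figure 5.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def off_diagonal(true: Sequence[str], pred: Sequence[str]) -> Dict[Tuple[str, str], int]:
    """Misclassified (true, predicted) pairs and their counts."""
    if len(true) != len(pred):
        raise ValueError("label sequences must have the same length")
    pairs = Counter(zip(true, pred))
    return {pair: n for pair, n in pairs.items() if pair[0] != pair[1]}


def miss_flags(true: Sequence[str], pred: Sequence[str], present: int = 5) -> List[int]:
    """Per-observation flag: 0 if correctly classified, `present` otherwise."""
    return [0 if t == p else present for t, p in zip(true, pred)]


# Hypothetical labels using the two ESSEN classes (W and S)
true = ["W", "W", "W", "S", "S", "W", "W", "S"]
pred = ["W", "S", "W", "S", "W", "W", "W", "S"]

errors = off_diagonal(true, pred)
print(sum(errors.values()))          # 2 misclassified observations
print(max(errors, key=errors.get))   # most frequent confusion (ties: first seen)
print(miss_flags(true, pred))        # [0, 5, 0, 0, 5, 0, 0, 0]
```

Plotting the flag series against the input signals over the same time axis gives a view like Figure 5, where clustering of nonzero flags around particular signal shapes would point to geometry-specific errors.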

Figure 5.

A partition of the original data plotted against misclassifications in the time domain: (a) for the FULL resolution and (b) for the ESSEN resolution. Legend: XREF, YREF, and ΔXYREF have the meaning given in Table 1, MISS—misclassifications (0—absent, 5—present).

4. Discussion

The classification outcomes of the system studied were encouraging, a fact that may be discussed from at least two points of view. First, classification precision is generally considered very high when values of 90% or more are achieved for a given application [23]. However, the utility of the metrics, their magnitude and the role of a given class in a given application should also be considered. From this point of view, classification recall (REC) would be the best choice for evaluating the performance of the models. Since REC reached 92.3% and 97.3% for the FULL and ESSEN resolutions, respectively, one could expect ca. 1.5 (ESSEN) to 4.5 (FULL) minutes of misclassified time per hour of monitoring. However, this assumes that the models are used as given herein, without any other checks on geometry or other features; moreover, the data on active cutting (FULL) and machine working (ESSEN) would involve far fewer misclassifications. On the other hand, it has been shown that classification ability may be improved by scaling the data to standardize the inputs [26] to a mean of zero and a standard deviation of one. While the potential improvements of this approach still need to be checked for the application described herein, it is already clear that it would require additional computational effort.
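Standardization as referred to above [26] rescales each input signal to zero mean and unit standard deviation. The Python sketch below rests on two assumptions: standardization is applied per signal (for instance, to XREF separately from YREF), and the mean and standard deviation are estimated on the training data only and then reused unchanged for new data, which keeps information from unseen data out of the model. The function names and values are hypothetical, not tracked data. The extra computation amounts to one pass to estimate the two parameters plus one subtraction and one division per value.

```python
import statistics
from typing import List, Sequence, Tuple


def fit_standardizer(train_column: Sequence[float]) -> Tuple[float, float]:
    """Mean and population standard deviation estimated on training data only."""
    mu = statistics.fmean(train_column)
    sigma = statistics.pstdev(train_column, mu)
    if sigma == 0:
        raise ValueError("a constant signal cannot be standardized")
    return mu, sigma


def standardize(column: Sequence[float], mu: float, sigma: float) -> List[float]:
    """Apply z = (x - mu) / sigma with parameters fitted beforehand."""
    return [(x - mu) / sigma for x in column]


# Illustrative values for one input signal (e.g. XREF); not tracked data
x_train = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
mu, sigma = fit_standardizer(x_train)        # 5.0 and 2.0
z_train = standardize(x_train, mu, sigma)
z_new = standardize([6.0, 3.0], mu, sigma)   # new data reuse the training parameters
print(z_train)  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
print(z_new)    # [0.5, -1.0]
```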

Since the performance of ANNs depends on the data (signals, patterns) used as inputs, a good approach to improving the classification outcomes is to obtain very good signals, including by augmenting them [23] where appropriate. Converting an analog signal into a good digital one and augmenting the inputs provided by a digital signal can be done in several ways, bearing in mind both the acquired signal and the underlying process [27]. From this point of view, both deriving a new signal and filtering the input signals to their roots could be seen as forms of data augmentation. As the first approach led to an increase in the general classification accuracy, one may conclude that it can bring improvements in applications such as the one described herein. However, its usefulness would also depend on the acceptability of the classification outcomes, since the improvement was 0.6% compared to using the raw signals in the multiclass (FULL) resolution problem and 0.2% in the binary (ESSEN) resolution problem. It is worth mentioning that the raw signals (REF) were produced at half of the original media speed; therefore, the trade-off between accuracy and time savings in the office phase should be explored further.
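Filtering to the root, as referred to above, can be read as median filtering [20,21] repeated until the output no longer changes; such an invariant signal is called a root of the filter. The Python sketch below assumes a running median with a window of 3 samples and end values repeated as padding. The window length and padding used in the study are not restated in this section, so both are assumptions, as are the function names. With this padding, repeated passes converge to a root for a finite signal, but the loop keeps a pass limit as a safeguard.

```python
from statistics import median
from typing import List, Sequence, Tuple


def median_pass(signal: Sequence[float], window: int = 3) -> List[float]:
    """One running-median pass; the ends are padded by repeating the end values."""
    if window < 3 or window % 2 == 0:
        raise ValueError("window must be an odd integer >= 3")
    if not signal:
        return []
    half = window // 2
    padded = [signal[0]] * half + list(signal) + [signal[-1]] * half
    return [median(padded[i:i + window]) for i in range(len(signal))]


def filter_to_root(signal: Sequence[float], window: int = 3,
                   max_passes: int = 1000) -> Tuple[List[float], int]:
    """Repeat median passes until the output stops changing (a root signal).

    Returns the root and the number of passes that changed the signal.
    """
    current = list(signal)
    for changed in range(max_passes):
        nxt = median_pass(current, window)
        if nxt == current:
            return current, changed
        current = nxt
    raise RuntimeError("no root reached within max_passes")


# Illustrative signal: one isolated spike (9) followed by a step from 1 to 5
root, passes = filter_to_root([1, 1, 9, 1, 1, 5, 5, 5, 5])
print(root, passes)  # [1, 1, 1, 1, 1, 5, 5, 5, 5] 1
```

In the example, the isolated spike is removed after one pass while the step from 1 to 5 is preserved, which is the edge-preserving behavior that distinguishes median filtering from moving-average smoothing.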

The above naturally leads to the data collector, its capabilities, setup, and settings, which could hold the key to producing better signals. For a data collector such as the one used herein, and considering the classification recall metric, the lowest performing events were the upward, downward and backward movements. This is not surprising given the camera capabilities and location, the background data used to produce the raw signals, the distance to the surveyed events, and the speed at which the events occurred. The backward movement was done at considerably higher speeds than the forward movement, which could have affected the output signal, at least through the sampling frequency. This was also the case for the upward and downward movements, the latter occurring in the background of the field of view; therefore, one may assume that the speed of the events and their distance from the camera affected the classification performance. The general classification outcomes may also be closely related to the camera resolution and the sampling frequency. For instance, Zhu et al. [28] indicated that movement prediction errors on construction sites may be affected by the sampling frequency (i.e., the number of frames per second). On the other hand, higher sampling rates have produced excellent results even for faster events [16]. Hence, a camera with a higher resolution, a higher sampling rate and a faster shutter speed might have improved the outcomes, given that the software used to produce the signals works frame by frame and pixel by pixel. Since the camera is not the only component of the data collection system, one may also ask whether well-designed markers placed on the machine's frame could have produced better signals. Indeed, many applications of the Kinovea® software have produced excellent results using markers [12,15], and the software has been used in many types of applications involving motion analysis [12,15,16] or the mutual validation of different methods [16,29].

This study addressed only the events related to machine use. Long-term applications could therefore also include external events such as log feeding and log rotation on the machine's platform (partly included in this study). It remains to be checked to what extent a multi-tracking approach could improve event and time consumption classification for a setup such as the one used herein, as well as for a setup designed to monitor other active parts of the machine, such as the devices used to hold and rotate the logs. Likewise, for finer tuning of the system, it remains to be checked to what extent camera calibration could address other problems, such as measuring variables related to the size of the logs and of the processed wood products. However, for a system such as the one described herein, what may matter most in long-term data collection sessions is finding and maintaining an unobstructed and interference-free position for the camera. Moreover, the financial performance and sustainability of the system should be compared with those of systems based on cheap external sensors [9].

Last but not least, this study used an ANN architecture, which is just one of several AI techniques. Our choice of this technique was based on its performance and popularity [23] and mainly on its ability to solve multivariate problems [19] and to extract meaningful information from complex patterns [25]. Nevertheless, future studies should check whether other techniques, such as support vector machines (SVM), Bayes classifiers (BC), or random forests (RF), could produce better results. Furthermore, this study only aimed to check the ability of ANN architectures to classify the data. This was done at the training stage only and yielded excellent results. However, extending the system to collect long-term data would help to build more robust models and to keep separate subsets for testing and validation, as these are typical steps in ANN development and deployment [17]; it would also extend our understanding of the use of algorithms, electronics and computer software in assessing operational performance in the wood supply chain by adding new approaches to the known ones [30,31,32].
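Keeping separate subsets for validation and testing [17] needs some care with time-ordered frame data: consecutive frames are nearly identical, so a random split would place almost the same observations in both the training and test subsets and could inflate the scores. One common precaution, sketched below in Python, is to split the recording into contiguous blocks. The 70/15/15 proportions and the function name are assumptions for illustration, not choices made in this study.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def chronological_split(observations: Sequence[T], train: float = 0.70,
                        val: float = 0.15) -> Tuple[List[T], List[T], List[T]]:
    """Split time-ordered observations into contiguous train/validation/test blocks.

    Whatever is left after the train and validation shares becomes the test block.
    """
    if not (0.0 < train < 1.0 and 0.0 <= val < 1.0 and train + val < 1.0):
        raise ValueError("fractions must leave a non-empty test share")
    n = len(observations)
    end_train = round(n * train)
    end_val = round(n * (train + val))
    return (list(observations[:end_train]),
            list(observations[end_train:end_val]),
            list(observations[end_val:]))


# Hypothetical: 20 frame indices standing in for time-ordered tracked frames
frames = list(range(20))
tr, va, te = chronological_split(frames)
print(len(tr), len(va), len(te))  # 14 3 3
```

Leaving a short gap of frames between blocks could further reduce the overlap caused by autocorrelation at the block boundaries; whether this matters for the tracked signals would need to be checked.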

5. Conclusions

The main conclusion of this study is that the described and tested system holds considerable potential for automating data collection, processing, and analysis in wood sawmilling time-and-motion applications. This was enabled by the use of more than one scalar signal as well as by the use of derived signals, whereas filtering the signals to their roots did not contribute to performance improvements. Less detailed data produced better outcomes in terms of the classification accuracy metrics; therefore, this approach could be more suitable for long-term applications, provided that such outcomes are acceptable. Even without the fine-tuning described in the Discussion section, the system may still achieve high performance in data collection, analysis, and classification problems related to the efficiency of wood sawmilling operations. Provided that a sufficiently large dataset is available to build a robust ANN model, the system could be implemented to collect long-term data for in-depth analysis. In this configuration, it would enable better decisions supported by an informed background, thereby facilitating the search for improvements in the small-scale sawmilling industry.

Author Contributions

Conceptualization, S.A.B.; data curation, S.A.B.; formal analysis, S.A.B. and M.P.; funding acquisition, S.A.B.; investigation, S.A.B.; methodology, S.A.B. and M.P.; project administration, S.A.B.; resources, S.A.B. and M.P.; validation, S.A.B. and M.P.; visualization, S.A.B. and M.P.; writing—original draft, S.A.B. and M.P.; writing—review and editing, S.A.B. and M.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors acknowledge the technical support of the Department of Forest Engineering, Forest Management Planning and Terrestrial Measurements, Faculty of Silviculture and Forest Engineering, Transilvania University of Brasov, in designing and conducting this study. The authors would like to thank the manager of the sawmilling facility, who wished to remain anonymous, for his support in the field data collection phase.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Borz, S.A. Evaluarea Eficienţei Echipamentelor şi Sistemelor Tehnice în Operaţii Forestiere; Lux Libris Publishing House: Braşov, Romania, 2014; 252p.

- Hyytiäinen, A.; Viitanen, J.; Mutanen, A. Production efficiency of independent Finnish sawmills in the 2000's. Baltic For. 2011, 17, 280–287.

- Gligoraş, D.; Borz, S.A. Factors affecting the effective time consumption, wood recovery rate and feeding speed when manufacturing lumber using a FBO-02 CUT mobile bandsaw. Wood Res. 2015, 60, 329–338.

- Cedamon, E.D.; Harrison, S.; Herbohn, J. Comparative analysis of on-site free-hand chainsaw milling and fixed site mini-bandsaw milling of smallholder timber. Small-Scale For. 2013, 12, 389–401.

- De Lasaux, M.J.; Spinelli, R.; Hartsough, B.R.; Magagnotti, N. Using a small-log mobile sawmill system to contain fuel reduction treatment cost on small parcels. Small-Scale For. 2009, 8, 367–379.

- Björheden, R.; Apel, K.; Shiba, M.; Thompson, M. IUFRO Forest Work Study Nomenclature; The Swedish University of Agricultural Science: Garpenberg, Sweden, 1995.

- Acuna, M.; Bigot, M.; Guerra, S.; Hartsough, B.; Kanzian, C.; Kärhä, K.; Lindroos, O.; Magagnotti, N.; Roux, S.; Spinelli, R.; et al. Good Practice Guidelines for Biomass Production Studies; CNR IVALSA Sesto Fiorentino (National Research Council of Italy—Trees and Timber Institute): Sesto Fiorentino, Italy, 2012; pp. 1–51. ISBN 978-88-901660-4-4.

- Ištvanić, J.; Lučić, R.B.; Jug, M.; Karan, R. Analysis of factors affecting log band saw capacity. Croat. J. For. Eng. 2009, 30, 27–35.

- Cheţa, M.; Marcu, M.V.; Iordache, E.; Borz, S.A. Testing the capability of low-cost tools and artificial intelligence techniques to automatically detect operations done by a small-sized manually driven bandsaw. Forests 2020, 11, 739.

- Muşat, E.C.; Apăfăian, A.I.; Ignea, G.; Ciobanu, V.D.; Iordache, E.; Derczeni, R.A.; Spârchez, G.; Vasilescu, M.M.; Borz, S.A. Time expenditure in computer aided time studies implemented for highly mechanized forest equipment. Ann. For. Res. 2016, 59, 129–144.

- Xu, H.; Zhu, Y. Real-time object tracking based on improved fully-convolutional siamese network. Comput. Electr. Eng. 2020, 86, 106755.

- Fernández-González, P.; Koutsou, A.; Cuesta-Gómez, A.; Carratalá-Tejada, M.; Miangolarra-Page, J.C.; Molina-Rueda, F. Reliability of Kinovea® software and agreement with a three-dimensional motion system for gait analysis in healthy subjects. Sensors 2020, 20, 3154.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer: London, UK, 2011; 812p.

- Makhura, O.J.; Woods, J.C. Learn-select-track: An approach to multi-object tracking. Signal Process. Image Commun. 2019, 74, 153–161.

- Damsted, C.; Nielsen, O.R.; Larsen, L.H. Reliability of video-based quantification of the knee- and hip angle at foot strike during running. Int. J. Sports Phys. Ther. 2015, 10, 147–154.

- Balsalobre-Fernández, C.; Tejero-González, C.M.; Del Campo-Vecino, J.; Bavaresco, N. The concurrent validity and reliability of a low-cost, high-speed camera-based method for measuring the flight time of vertical jumps. J. Strength Cond. Res. 2014, 28, 528–533.

- Haykin, S. Neural Networks and Learning Machines, 3rd ed.; Pearson: Upper Saddle River, NJ, USA, 2009; 906p.

- Keefe, R.F.; Zimbelman, E.G.; Wempe, A.M. Use of smartphone sensors to quantify the productive cycle elements of hand fallers on industrial cable logging operations. Int. J. For. Eng. 2019, 30, 132–143.

- Proto, A.R.; Sperandio, G.; Costa, C.; Maesano, M.; Antonucci, F.; Macri, G.; Scarascia Mugnozza, G.; Zimbalatti, G. A three-step neural network artificial intelligence modeling approach for time, productivity and costs prediction: A case study in Italian forestry. Croat. J. For. Eng. 2020, 41, 35–47.

- Leeb, S.B.; Shaw, S.R. Applications of real-time median filtering with fast digital and analog sorters. IEEE/ASME Trans. Mechatron. 1997, 2, 136–143.

- Gallagher, N.C., Jr.; Wise, G.L. A theoretical analysis of the properties of median filters. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1136–1141.

- Demsar, J.; Curk, T.; Erjavec, A.; Gorup, C.; Hocevar, T.; Milutinovic, M.; Mozina, M.; Polajnar, M.; Toplak, M.; Staric, A.; et al. Orange: Data Mining Toolbox in Python. J. Mach. Learn. Res. 2013, 14, 2349–2353.

- Kamilaris, A.; Prenafeta-Boldu, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Fawcett, T. An introduction to ROC analysis. Pattern Recogn. Lett. 2006, 27, 861–874.

- Cheța, M.; Marcu, M.V.; Borz, S.A. Effect of Training Parameters on the Ability of Artificial Neural Networks to Learn: A Simulation on Accelerometer Data for Task Recognition in Motor-Manual Felling and Processing. Bulletin of the Transilvania University of Brasov. Forestry, Wood Industry, Agricultural Food Engineering. Series II; Braşov, Romania, 2020; Volume 13, pp. 19–36. Available online: http://webbut.unitbv.ro/Bulletin/Series%20II/2020/BULETIN%20I%20PDF/SV-02_Cheta%20et%20al.pdf (accessed on 1 December 2020).

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning. Data Mining, Inference and Prediction, 2nd ed.; Springer: New York, NY, USA, 2009; 745p.

- Smith, S.W. The Scientist and Engineer's Guide to Digital Signal Processing, 2nd ed.; California Technical Publishing: San Diego, CA, USA; 650p.

- Zhu, Z.; Park, M.-W.; Koch, C.; Soltani, M.; Hammad, A.; Davari, K. Predicting movements of onsite workers and mobile equipment for enhancing construction safety. Autom. Constr. 2016, 68, 95–101.

- Micheletti Cremasco, M.; Giustetto, A.; Caffaro, F.; Colantoni, A.; Cavallo, E.; Grigolato, S. Risk assessment for musculoskeletal disorders in forestry: A comparison between RULA and REBA in the manual feeding of a wood-chipper. Int. J. Environ. Res. Public Health 2019, 16, 793.

- Picchio, R.; Proto, A.R.; Civitarese, V.; Di Marzio, N.; Latterini, F. Recent contributions of some fields of the electronics in development of forest operations technologies. Electronics 2019, 8, 1465.

- Marinello, F.; Proto, A.R.; Zimbalatti, G.; Pezzuolo, A.; Cavalli, R.; Grigolato, S. Determination of forest road surface roughness by Kinect depth imaging. Ann. For. Res. 2017, 62, 217–226.

- Costa, C.; Figorilli, S.; Proto, A.R.; Colle, G.; Sperandio, G.; Gallo, P.; Antonucci, F.; Pallottino, F.; Menesatti, P. Digital stereovision system for dendrometry, georeferencing and data management. Biosyst. Eng. 2018, 174, 126–133.

Publisher's Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).