Characterizing Seedling Stands Using Leaf-Off and Leaf-On Photogrammetric Point Clouds and Hyperspectral Imagery Acquired from Unmanned Aerial Vehicle

Abstract

1. Introduction

2. Materials and Methods

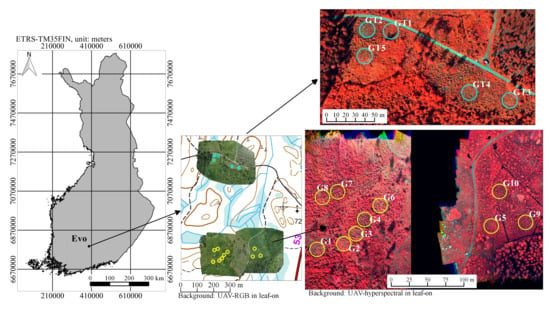

2.1. Study Area and Establishment of the Sample Plots

2.2. Remote Sensing Data

2.3. Creating Dense Point Clouds and Image Mosaics

2.4. Delineation of Tree Crowns and Extracting 3D Metrics

2.5. Selection of Training Segments

2.6. Vegetation Indices and Finding Optimal Bands

2.7. Segments Classification

2.8. Accuracy Evaluation for Tree Density and Height

3. Results

3.1. Analysing Spectral Features and Optimal Bands for Vegetation Indices

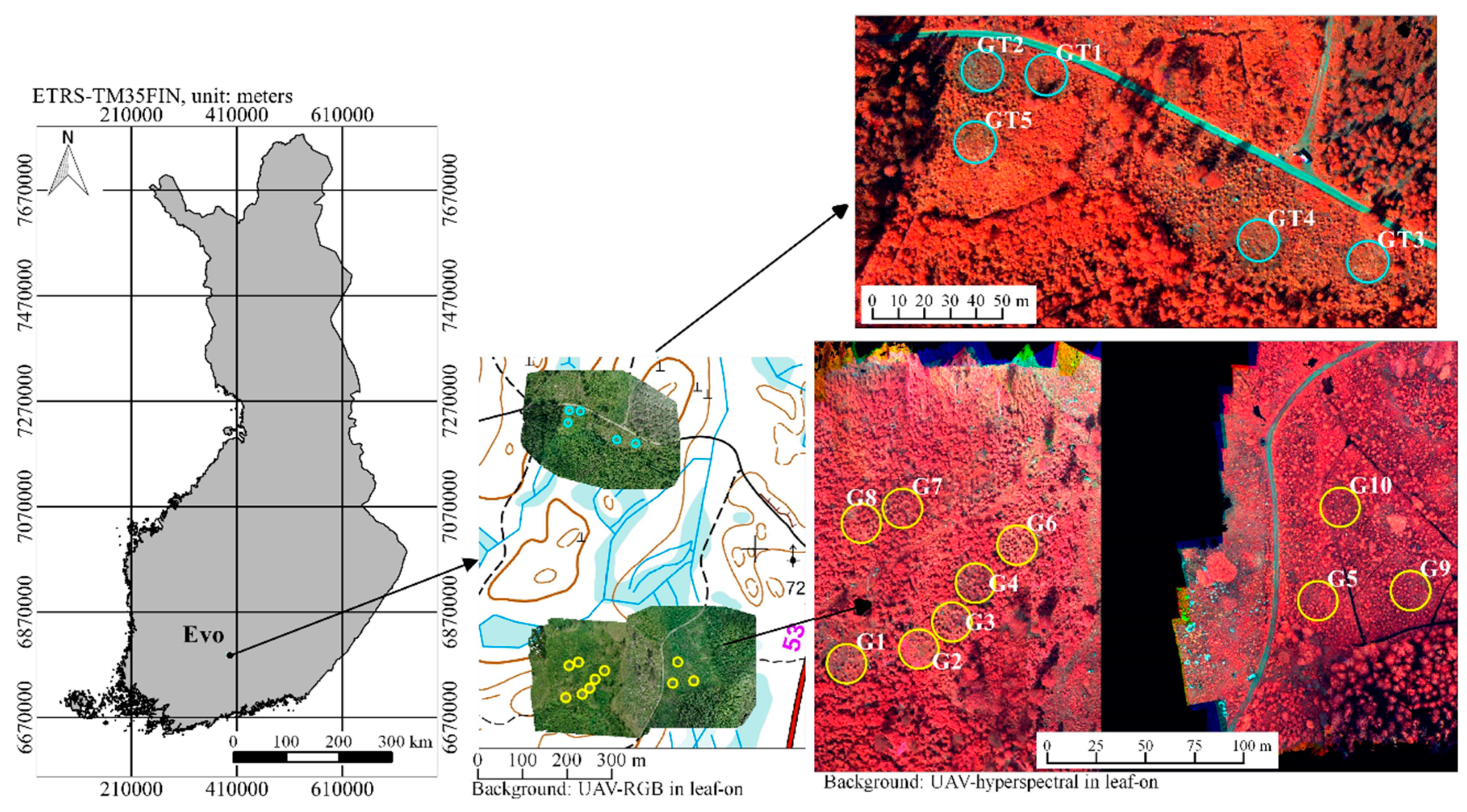

3.2. Tree Density Estimation

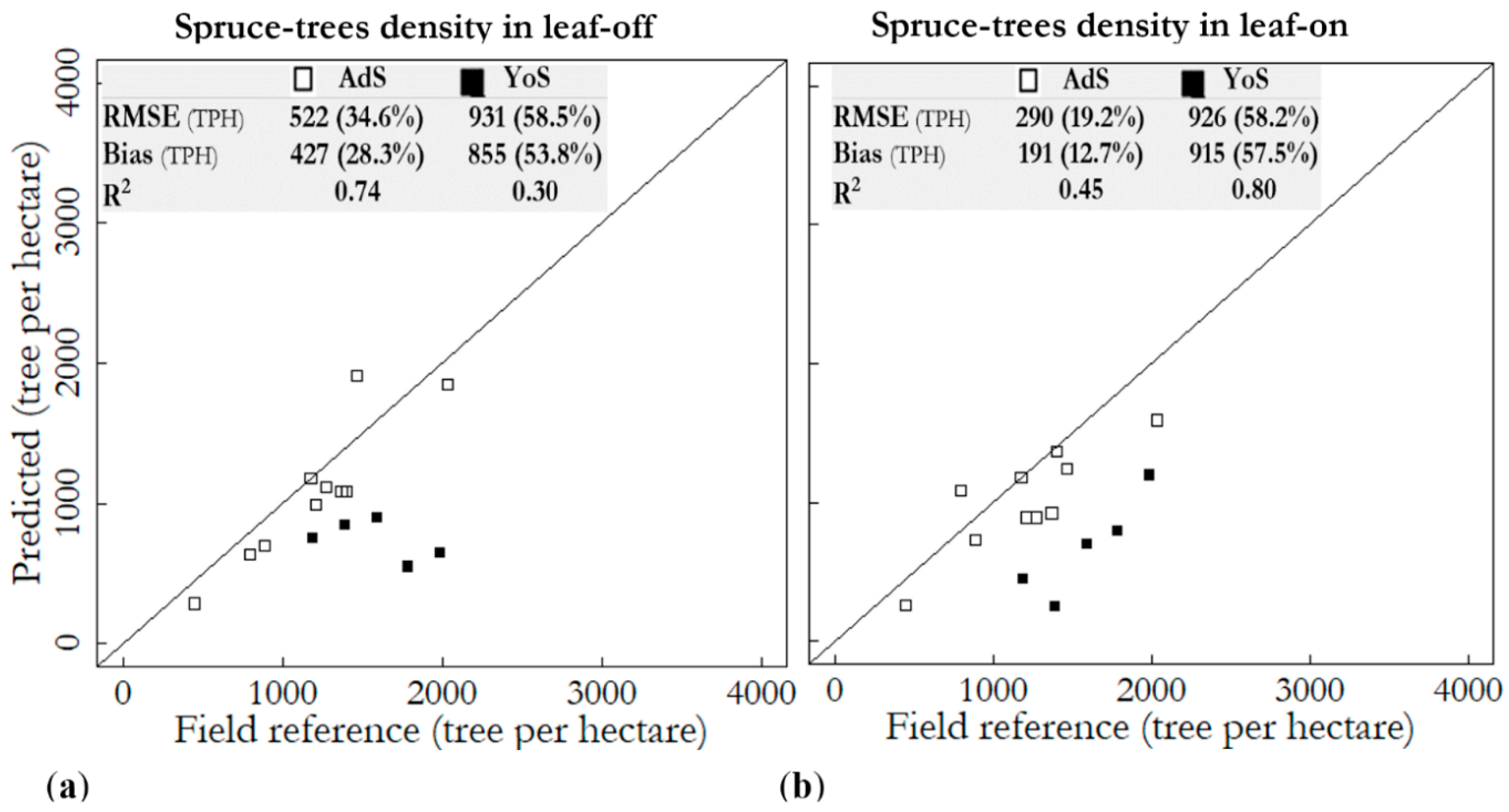

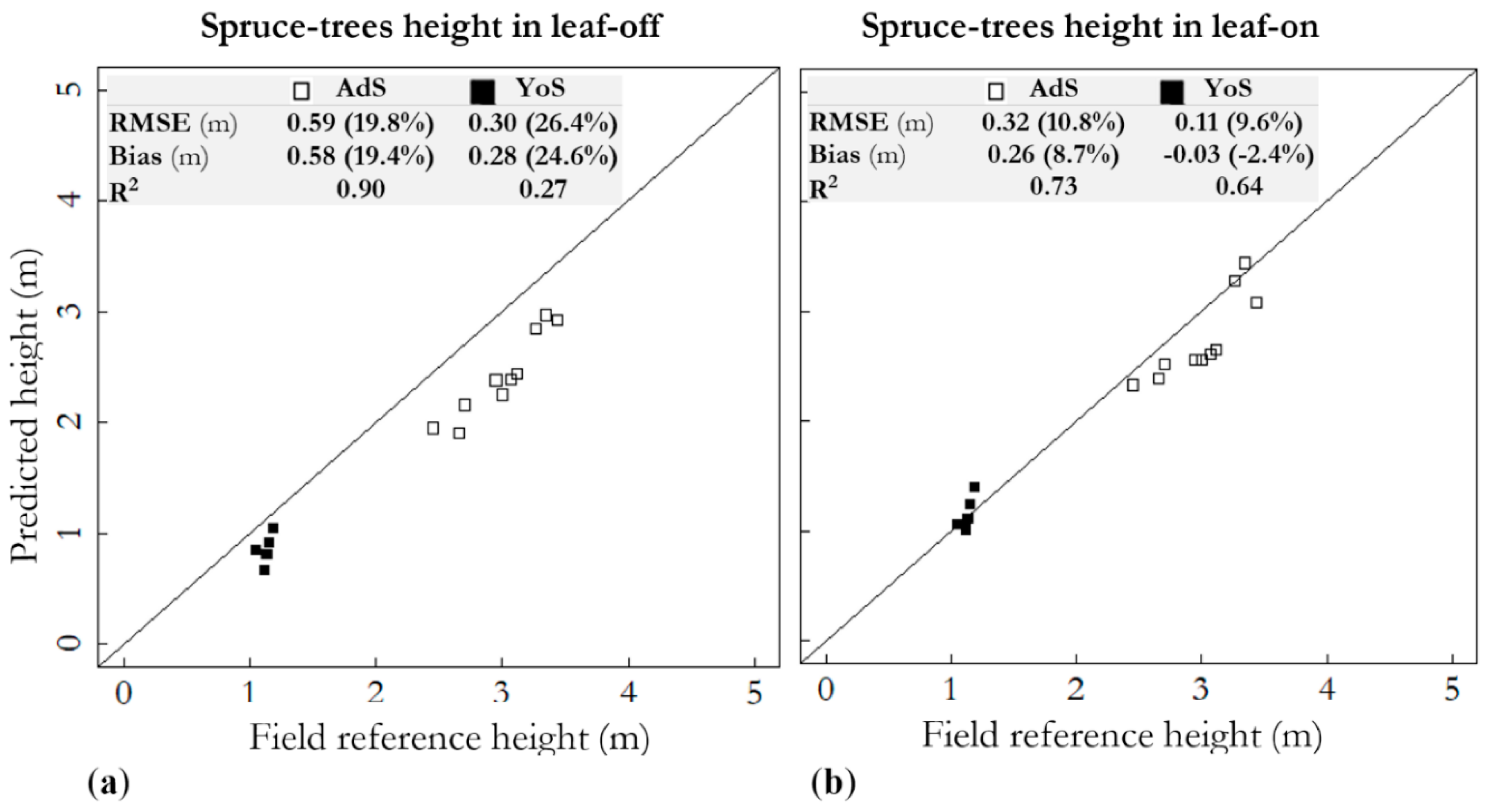

3.3. Height Attribute Extraction

4. Discussion

4.1. Tree Density Estimation

4.2. Tree Height Estimation

4.3. Comparing Leaf-Off and Leaf-On Data

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Stand Development Class | Plot Name | Total Stem Number (TPH) | Total Hmean (m) | Spruce Stem Number (TPH) | Spruce Hmin (m) | Spruce Hmax (m) | Spruce Hmean (m) | Spruce Hstd (m) | Birch Stem Number (TPH) | Birch Hmin (m) | Birch Hmax (m) | Birch Hmean (m) | Birch Hstd (m) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YoS (n = 5) | GT1 | 1989 | 1.19 | 1989 | 0.73 | 1.87 | 1.19 | 0.33 | 0 | | | | |
| YoS (n = 5) | GT2 | 1790 | 1.16 | 1790 | 0.77 | 1.78 | 1.16 | 0.24 | 0 | | | | |
| YoS (n = 5) | GT3 | 1194 | 1.12 | 1194 | 0.77 | 1.61 | 1.12 | 0.19 | 0 | | | | |
| YoS (n = 5) | GT4 | 1393 | 1.05 | 1393 | 0.82 | 1.48 | 1.05 | 0.20 | 0 | | | | |
| YoS (n = 5) | GT5 | 1592 | 1.14 | 1592 | 0.86 | 1.56 | 1.14 | 0.19 | 0 | | | | |
| AdS (n = 10) | G1 | 986 | 2.78 | 891 | 1.62 | 3.92 | 2.66 | 0.55 | 95 | 3.66 | 4.33 | 3.90 | 0.4 |
| AdS (n = 10) | G2 | 605 | 3.23 | 446 | 1.87 | 3.83 | 3.00 | 0.57 | 159 | 3.36 | 4.27 | 3.88 | 0.4 |
| AdS (n = 10) | G3 | 1592 | 3.20 | 1369 | 1.71 | 4.00 | 3.12 | 0.58 | 223 | 3.28 | 4.33 | 3.67 | 0.3 |
| AdS (n = 10) | G4 | 1814 | 3.66 | 1273 | 1.57 | 4.40 | 3.44 | 0.63 | 541 | 3.36 | 5.01 | 4.17 | 0.5 |
| AdS (n = 10) | G5 | 1401 | 2.70 | 1401 | 1.62 | 3.87 | 2.70 | 0.54 | 0 | | | | |
| AdS (n = 10) | G6 | 2069 | 3.08 | 2037 | 1.71 | 4.54 | 3.07 | 0.66 | 32 | 3.44 | 3.44 | 3.44 | |
| AdS (n = 10) | G7 | 2228 | 3.72 | 1464 | 2.09 | 4.21 | 3.27 | 0.52 | 764 | 3.36 | 5.58 | 4.58 | 0.6 |
| AdS (n = 10) | G8 | 2388 | 3.74 | 1178 | 1.62 | 4.28 | 3.35 | 0.62 | 1210 | 2.74 | 5.17 | 4.12 | 0.6 |
| AdS (n = 10) | G9 | 1210 | 2.45 | 1210 | 1.71 | 3.56 | 2.45 | 0.50 | 0 | | | | |
| AdS (n = 10) | G10 | 796 | 2.95 | 796 | 2.03 | 3.96 | 2.95 | 0.58 | 0 | | | | |
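The plot-level field values in the table above can be cross-checked with a few lines of Python. The sketch below is not part of the study's workflow; it re-enters the tabulated stem numbers and total mean heights (the names `PLOTS`, `species_sum_mismatches`, and `mean` are illustrative), confirms that the spruce and birch stem numbers add up to the total on every plot, and derives the unweighted mean stem number and mean height over the 15 plots.

```python
# Field values re-entered from the table above:
# (plot name, total stems in TPH, total Hmean in m, spruce stems in TPH, birch stems in TPH)
PLOTS = [
    ("GT1", 1989, 1.19, 1989, 0),
    ("GT2", 1790, 1.16, 1790, 0),
    ("GT3", 1194, 1.12, 1194, 0),
    ("GT4", 1393, 1.05, 1393, 0),
    ("GT5", 1592, 1.14, 1592, 0),
    ("G1", 986, 2.78, 891, 95),
    ("G2", 605, 3.23, 446, 159),
    ("G3", 1592, 3.20, 1369, 223),
    ("G4", 1814, 3.66, 1273, 541),
    ("G5", 1401, 2.70, 1401, 0),
    ("G6", 2069, 3.08, 2037, 32),
    ("G7", 2228, 3.72, 1464, 764),
    ("G8", 2388, 3.74, 1178, 1210),
    ("G9", 1210, 2.45, 1210, 0),
    ("G10", 796, 2.95, 796, 0),
]


def species_sum_mismatches(plots):
    """Return the names of plots where spruce + birch stems differ from the total."""
    return [name for name, total, _, spruce, birch in plots if spruce + birch != total]


def mean(values):
    """Unweighted arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


mismatches = species_sum_mismatches(PLOTS)
mean_density = mean(plot[1] for plot in PLOTS)
mean_height = mean(plot[2] for plot in PLOTS)

print("Plots with inconsistent stem numbers:", mismatches)
print(f"Mean stem number over all plots: {mean_density:.0f} TPH")
print(f"Mean of plot-level mean heights: {mean_height:.2f} m")
```

With the tabulated values, no plot is flagged, and the means come out at roughly 1536 TPH and 2.48 m. These are plain plot averages, not area-weighted estimates.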

| Spectral Settings of the Hyperspectral Spectral Camera | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| L0 (nm) | 507.24 | 509.08 | 513.48 | 520.44 | 537.16 | 545.62 | 554.2 | 562.85 | 572.27 | 584.43 | 591.92 | 599.24 |
| | 605.39 | 616.18 | 628.6 | 643.2 | 656.34 | 668.97 | 675.75 | 687.44 | 694.17 | 702.28 | 709.41 | 715.4 |
| | 726.91 | 734.62 | 748.81 | 761.23 | 790.85 | 804.14 | 816.73 | 831.08 | 844.45 | 857.46 | 871.31 | 885.86 |
| FWHM (nm) | 7.79 | 10.57 | 15.86 | 19.82 | 20.11 | 19.23 | 20.53 | 20.69 | 22.75 | 16.64 | 15.35 | 19.82 |
| | 26.55 | 26.72 | 30.81 | 28.61 | 27.9 | 28.98 | 27.85 | 30.01 | 30.59 | 28.29 | 25.45 | 26.13 |
| | 29.94 | 31.34 | 28 | 29.6 | 27.65 | 25.13 | 27.97 | 28.6 | 28.41 | 30.68 | 32.75 | 29.52 |

| Spot | YoS | YoS | AdS West | AdS West | AdS East | AdS East |
|---|---|---|---|---|---|---|
| Season | Leaf-Off | Leaf-On | Leaf-Off | Leaf-On | Leaf-Off | Leaf-On |
| Date | 11 May | 29 June | 9 May | 29 June | 9 May | 29 June |
| Time (UTC + 3) | 11:41 | 15:11 | 12:10 | 13:57 | 11:31 | 13:12 |
| SunZen | 46° | 42° | 45° | 38° | 47° | 38° |
| SunAz | 148° | 218° | 158° | 193° | 145° | 176° |
| Illumination Conditions | Bright | Bright | Bright | Variable | Bright | Overcast |
| Radiometric Model | BRDF | BRDF | BRDF | RELA | BRDF | RELA |

| | Birch | Spruce | Non-Trees | Total |
|---|---|---|---|---|
| Leaf-off | 30 | 67 | 47 | 144 |
| Leaf-on | 50 | 101 | 128 | 279 |

| | Wavelength Range (nm) | Number of Bands |
|---|---|---|
| Green | 507–562 | 8 |
| Red | 620–700 | 7 |
| Red Edge | 700–780 | 7 |
| NIR | 780–886 | 8 |
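The band counts in the table above can be reproduced from the central wavelengths (L0) listed in the spectral settings table. The following is a minimal sketch, not the authors' procedure: the names `CENTRAL_WAVELENGTHS`, `REGIONS`, and `bands_in_region` are illustrative, and the boundary rule is an assumption. Each range is treated as covering its upper bound's whole nanometre, so that the 562.85 nm band counts as green; this convention was chosen only because it reproduces the tabulated counts.

```python
# Central wavelengths (nm) re-entered from the spectral settings table.
CENTRAL_WAVELENGTHS = [
    507.24, 509.08, 513.48, 520.44, 537.16, 545.62, 554.2, 562.85, 572.27,
    584.43, 591.92, 599.24, 605.39, 616.18, 628.6, 643.2, 656.34, 668.97,
    675.75, 687.44, 694.17, 702.28, 709.41, 715.4, 726.91, 734.62, 748.81,
    761.23, 790.85, 804.14, 816.73, 831.08, 844.45, 857.46, 871.31, 885.86,
]

# Spectral regions from the table above: name -> (lower, upper) bound in nm.
REGIONS = {
    "Green": (507, 562),
    "Red": (620, 700),
    "Red Edge": (700, 780),
    "NIR": (780, 886),
}


def bands_in_region(wavelengths, lower, upper):
    """Select bands with lower <= wavelength < upper + 1.

    Assumption: the upper bound includes its whole nanometre, so a band at
    562.85 nm falls inside the 507-562 nm range.
    """
    return [w for w in wavelengths if lower <= w < upper + 1]


counts = {
    name: len(bands_in_region(CENTRAL_WAVELENGTHS, lower, upper))
    for name, (lower, upper) in REGIONS.items()
}

# Bands that fall into none of the four regions.
assigned = {
    w
    for lower, upper in REGIONS.values()
    for w in bands_in_region(CENTRAL_WAVELENGTHS, lower, upper)
}
unassigned = [w for w in CENTRAL_WAVELENGTHS if w not in assigned]

print(counts)
print("Bands outside all regions:", unassigned)
```

Under this convention the four regions hold 8, 7, 7, and 8 bands, and the six bands between 572.27 nm and 616.18 nm belong to none of them.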

| Vegetation Index | Leaf-Off Wavelengths (nm) | Leaf-On Wavelengths (nm) |
|---|---|---|
| Red and NIR | 694.16 and 857.46; 675.75 and 804.15 | 675.75 and 871.31 |
| Green and NIR | 520.44 and 857.46; 513.48 and 871.31 | 537.16 and 790.85 |
| Red Edge and NIR | 709.41 and 790.85; 702.28 and 844.45; 761.23 and 831.08 | 709.41 and 885.86; 715.40 and 871.31; 715.40 and 885.86; 748.81 and 844.45 |
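The table above lists band pairs only; the index formula itself is not given here. A common form for two-band vegetation indices is the normalized difference, and the sketch below assumes that form purely for illustration. The function name `normalized_difference` and the reflectance values in `segment` are hypothetical and are not taken from the study; only the wavelengths come from the leaf-on column of the table.

```python
def normalized_difference(nir, other):
    """Return (nir - other) / (nir + other) for two reflectance values.

    Assumed index form; the source table does not state the formula.
    """
    denominator = nir + other
    if denominator == 0:
        raise ValueError("The two reflectance values must not sum to zero.")
    return (nir - other) / denominator


# Hypothetical mean reflectance of one segment, keyed by central wavelength (nm).
segment = {
    537.16: 0.08,  # green band of the leaf-on green and NIR pair
    675.75: 0.04,  # red band of the leaf-on red and NIR pair
    790.85: 0.42,  # NIR band of the leaf-on green and NIR pair
    871.31: 0.45,  # NIR band of the leaf-on red and NIR pair
}

red_nir_index = normalized_difference(segment[871.31], segment[675.75])
green_nir_index = normalized_difference(segment[790.85], segment[537.16])

print(f"Red and NIR index:   {red_nir_index:.3f}")
print(f"Green and NIR index: {green_nir_index:.3f}")
```

Swapping in another band pair from the table only requires changing the two dictionary keys, which is how a search over candidate band combinations could be organized.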

| | Total Number of Trees | Total Number of Trees | Number of Spruce Trees | Number of Spruce Trees |
|---|---|---|---|---|
| | Leaf-Off | Leaf-On | Leaf-Off | Leaf-On |
| RMSE (TPH) | 514 | 411 | 686 | 585 |
| Relative RMSE (%) | 33.5 | 26.8 | 44.6 | 38.1 |
| Bias (TPH) | 269 | 311 | 570 | 432 |
| Bias (%) | 17.5 | 20.2 | 37.1 | 28.1 |
| R² | 0.57 | 0.73 | 0.46 | 0.35 |

| | Mean Height of all of the Trees | Mean Height of all of the Trees | Mean Height of Spruce Trees | Mean Height of Spruce Trees |
|---|---|---|---|---|
| | Leaf-Off | Leaf-On | Leaf-Off | Leaf-On |
| RMSE (m) | 0.57 | 0.29 | 0.52 | 0.27 |
| Relative RMSE (%) | 23.0 | 11.5 | 21.7 | 11.4 |
| Bias (m) | 0.52 | 0.18 | 0.48 | 0.16 |
| Bias (%) | 20.8 | 7.4 | 20.2 | 6.9 |
| R² | 0.95 | 0.96 | 0.97 | 0.95 |
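The accuracy measures in the two tables above follow a familiar pattern: an absolute RMSE and bias, plus the same quantities as a percentage. The sketch below shows one conventional way to compute them and is not taken from the study. The function name `accuracy`, the sign convention for bias (predicted minus observed), normalization by the observed mean, and the demo values are all assumptions. The last lines check that assumption against the total-tree density columns, using the mean field stem number of the 15 plots (23047 / 15, about 1536 TPH).

```python
import math


def accuracy(observed, predicted):
    """Return RMSE, bias, and both as percentages of the observed mean.

    Assumptions: bias is predicted minus observed, and relative values are
    normalized by the mean of the observed (field) values.
    """
    if len(observed) != len(predicted) or not observed:
        raise ValueError("observed and predicted must be non-empty and equal in length")
    n = len(observed)
    errors = [p - o for o, p in zip(observed, predicted)]
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    bias = sum(errors) / n
    observed_mean = sum(observed) / n
    return {
        "rmse": rmse,
        "bias": bias,
        "rmse_pct": 100 * rmse / observed_mean,
        "bias_pct": 100 * bias / observed_mean,
    }


# Hypothetical plot-level stem numbers (TPH), for illustration only.
demo = accuracy(observed=[1000, 2000, 3000], predicted=[1100, 1900, 3300])
print(demo)

# Cross-check against the density table: RMSE and bias for the total number
# of trees, divided by the mean field stem number of the 15 sample plots.
FIELD_MEAN_TPH = 23047 / 15
for label, value in [("RMSE leaf-off", 514), ("RMSE leaf-on", 411),
                     ("Bias leaf-off", 269), ("Bias leaf-on", 311)]:
    print(f"{label}: {100 * value / FIELD_MEAN_TPH:.1f} %")
```

For the total number of trees, the recomputed percentages agree with the tabulated ones to within about 0.1 percentage points, which is consistent with rounding of the absolute values.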

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Imangholiloo, M.; Saarinen, N.; Markelin, L.; Rosnell, T.; Näsi, R.; Hakala, T.; Honkavaara, E.; Holopainen, M.; Hyyppä, J.; Vastaranta, M. Characterizing Seedling Stands Using Leaf-Off and Leaf-On Photogrammetric Point Clouds and Hyperspectral Imagery Acquired from Unmanned Aerial Vehicle. Forests 2019, 10, 415. https://doi.org/10.3390/f10050415