Abstract

This paper discusses the parameter estimation problem for multi-input output-error autoregressive (OEAR) systems. By combining the auxiliary model identification idea with the data filtering technique, a data filtering based recursive generalized least squares (F-RGLS) identification algorithm and a data filtering based iterative least squares (F-LSI) identification algorithm are derived. Compared with the F-RGLS algorithm, the F-LSI algorithm uses all the measured data at each iteration and generates more accurate parameter estimates, as the simulation results confirm.

1. Introduction

System modeling and identification of single-variable processes have been well studied. However, most industrial processes are multivariable systems [1,2,3], including multiple-input multiple-output (MIMO) systems and multiple-input single-output (MISO) systems. For example, in the chemical and process industries, heat exchangers are MIMO systems: the state of a heat exchanger is often represented by four input variables (the cold inlet temperature, the hot inlet temperature, the cold mass flow and the hot mass flow), and the outputs are the cold outlet temperature and the hot outlet temperature [4]. In wireless communication systems, MIMO technology uses multiple antennas to increase wireless channel capacity and bandwidth without requiring additional power [5]. In computing systems, the power consumption of host servers can be identified with a MISO model whose inputs include the rate of change of the CPU frequency and the rate of change of the CPU time share, and whose output is the change in power consumption [6]. As industrial processes develop, the identification of multivariable processes is in great demand. Researchers in different fields have studied the identification of multichannel systems [7,8,9], and many methods have been proposed for the multivariable case [10,11].

Recursive algorithms and iterative algorithms are widely used in system modeling and system identification [12,13,14]. For example, Wang et al. derived a hierarchical least squares based iterative algorithm for Box–Jenkins systems [15], and Dehghan and Hajarian presented iterative methods for solving systems of linear matrix equations over reflexive and anti-reflexive matrices [16]. Unlike a recursive identification algorithm, which updates the estimate with the data available up to the current time, an iterative identification algorithm uses all the measured data at every iteration, which can greatly improve the parameter estimation accuracy; iterative identification methods have been successfully applied to many different models [17,18,19].

In the field of system identification, the filtering technique can improve computational efficiency [20,21,22], and it has been widely used in the parameter estimation of different models [23,24]. In particular, Basin et al. discussed parameter estimation for linear stochastic time-delay systems based on state filtering [25]; Scarpiniti et al. discussed the identification of Wiener-type nonlinear systems using adaptive filters [26]; and Wang et al. presented a gradient based iterative algorithm for identifying a class of nonlinear systems by filtering the input–output data [27].

This paper combines the filtering technique with the auxiliary model identification idea to estimate the parameters of multi-input output-error autoregressive (OEAR) systems. By filtering the input–output data with a linear filter, a multi-input OEAR system is transformed into two identification models, one for the system part and one for the noise part, and the covariance matrices of these two models have smaller dimensions than the covariance matrix of the original OEAR model. The contributions of this paper are as follows:

- By using the data filtering technique and the auxiliary model identification idea, a data filtering based recursive generalized least squares (F-RGLS) identification algorithm is derived for the multi-input OEAR system.

- A data filtering based iterative least squares (F-LSI) identification algorithm is developed for the multi-input OEAR system.

- The proposed F-LSI identification algorithm updates the parameter estimates using all the available data and produces more accurate parameter estimates than the F-RGLS identification algorithm.

The rest of this paper is organized as follows. Section 2 describes multi-input OEAR systems. Section 3 derives an F-RGLS algorithm for the multi-input OEAR system by using the data filtering technique. Section 4 derives an F-LSI algorithm by using the data filtering technique and the iterative identification method. Section 5 gives two examples that illustrate the effectiveness of the proposed algorithms. Finally, Section 6 offers some concluding remarks.

2. The System Description

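Consider the following multi-input OEAR system:

$$y(t) = \sum_{j=1}^{r}\frac{B_j(z)}{A(z)}u_j(t) + \frac{1}{C(z)}v(t), \tag{1}$$

which can be written as

$$x(t) := \sum_{j=1}^{r}\frac{B_j(z)}{A(z)}u_j(t), \tag{2}$$

$$w(t) := \frac{1}{C(z)}v(t), \tag{3}$$

$$y(t) = x(t) + w(t), \tag{4}$$

where $u_j(t)$, $j = 1, 2, \ldots, r$, are the inputs, $y(t)$ is the output, $x(t)$ represents the noise-free output, $v(t)$ is random white noise with zero mean, and $w(t)$ is random colored noise. $A(z)$, $B_j(z)$ and $C(z)$ are polynomials in the unit backward shift operator $z^{-1}$:

$$A(z) = 1 + a_1z^{-1} + \cdots + a_{n_a}z^{-n_a}, \quad B_j(z) = b_{j1}z^{-1} + \cdots + b_{jn_j}z^{-n_j}, \quad C(z) = 1 + c_1z^{-1} + \cdots + c_{n_c}z^{-n_c}.$$

Assume that the orders $n_a$, $n_j$ and $n_c$ are known, $n := n_a + \sum_{j=1}^{r}n_j$, and $y(t) = 0$, $u_j(t) = 0$ and $v(t) = 0$ for $t \leq 0$. The parameters $a_i$, $b_{ji}$ and $c_i$ are to be identified from the input–output data $\{u_j(t), y(t)\}$.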

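Define the parameter vectors

$$\theta_s := [a^{\mathrm{T}}, b_1^{\mathrm{T}}, \ldots, b_r^{\mathrm{T}}]^{\mathrm{T}} \in \mathbb{R}^{n}, \quad a := [a_1, \ldots, a_{n_a}]^{\mathrm{T}}, \quad b_j := [b_{j1}, \ldots, b_{jn_j}]^{\mathrm{T}},$$

$$c := [c_1, \ldots, c_{n_c}]^{\mathrm{T}} \in \mathbb{R}^{n_c}, \quad \theta := [\theta_s^{\mathrm{T}}, c^{\mathrm{T}}]^{\mathrm{T}} \in \mathbb{R}^{n+n_c},$$

and the information vectors as

$$\varphi_s(t) := [-x(t-1), \ldots, -x(t-n_a), u_1(t-1), \ldots, u_1(t-n_1), \ldots, u_r(t-1), \ldots, u_r(t-n_r)]^{\mathrm{T}} \in \mathbb{R}^{n},$$

$$\varphi_n(t) := [-w(t-1), \ldots, -w(t-n_c)]^{\mathrm{T}} \in \mathbb{R}^{n_c}, \quad \varphi(t) := [\varphi_s^{\mathrm{T}}(t), \varphi_n^{\mathrm{T}}(t)]^{\mathrm{T}} \in \mathbb{R}^{n+n_c}.$$

The information vector $\varphi(t)$ is unknown because it contains the unmeasured variables $x(t-i)$ and $w(t-i)$. By means of the above definitions, Equations (2)–(4) can be expressed as

$$x(t) = \varphi_s^{\mathrm{T}}(t)\theta_s, \tag{5}$$

$$w(t) = \varphi_n^{\mathrm{T}}(t)c + v(t), \tag{6}$$

$$y(t) = \varphi_s^{\mathrm{T}}(t)\theta_s + \varphi_n^{\mathrm{T}}(t)c + v(t) = \varphi^{\mathrm{T}}(t)\theta + v(t). \tag{7}$$

Equation (7) is the identification model of the multi-input OEAR system, and the parameter vector $\theta$ contains all the parameters to be estimated.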
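The identification model in Equation (7) can be checked numerically. The following Python sketch (NumPy assumed) simulates a two-input OEAR system with zero initial conditions and confirms that $y(t) = \varphi_s^{\mathrm{T}}(t)\theta_s + \varphi_n^{\mathrm{T}}(t)c + v(t)$ holds at every sample. The coefficient values are hypothetical, chosen only so that $A(z)$ and $C(z)$ are stable, and the helper names `lagged`, `simulate_oear` and `phi_s` are ours.

```python
import numpy as np

def lagged(seq, t, n):
    """Return [seq(t-1), ..., seq(t-n)], treating samples before t = 0 as zero."""
    return np.array([seq[t - i] if t - i >= 0 else 0.0 for i in range(1, n + 1)])

def simulate_oear(a, bs, c, us, sigma, rng):
    """Simulate A(z)x(t) = sum_j B_j(z)u_j(t), C(z)w(t) = v(t), y(t) = x(t) + w(t)."""
    L = us.shape[1]
    v = sigma * rng.standard_normal(L)          # white noise v(t)
    x, w = np.zeros(L), np.zeros(L)
    for t in range(L):
        # Difference-equation forms of Equations (2) and (3)
        x[t] = -lagged(x, t, len(a)) @ a + sum(lagged(u, t, len(b)) @ b for u, b in zip(us, bs))
        w[t] = -lagged(w, t, len(c)) @ c + v[t]
    return x + w, x, w, v

def phi_s(x, us, t, na, nbs):
    """phi_s(t) = [-x(t-1..t-na), u_1(t-1..t-n_1), ..., u_r(t-1..t-n_r)]^T."""
    return np.concatenate([-lagged(x, t, na)] + [lagged(u, t, n) for u, n in zip(us, nbs)])

# Hypothetical example: A(z) = 1 - 0.5z^-1 + 0.2z^-2, C(z) = 1 + 0.6z^-1
a = np.array([-0.5, 0.2])
bs = [np.array([1.0, 0.5]), np.array([0.8, -0.3])]
c = np.array([0.6])
rng = np.random.default_rng(0)
us = rng.standard_normal((2, 200))
y, x, w, v = simulate_oear(a, bs, c, us, 0.5, rng)

theta_s = np.concatenate([a, *bs])
# Residual of Equation (7); phi_n(t) = -[w(t-1)], so phi_n' c = -lagged(w) @ c
residuals = [y[t] - (phi_s(x, us, t, 2, [2, 2]) @ theta_s - lagged(w, t, 1) @ c + v[t])
             for t in range(200)]
print(max(abs(r) for r in residuals))  # round-off level only
```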

3. The Data Filtering Based Recursive Generalized Least Squares Algorithm

Define the unit backward shift operator $z^{-1}$ by $z^{-1}y(t) = y(t-1)$. In this section, we use the noise-model polynomial $C(z)$ as a linear filter, filter the input–output data with it, and derive an F-RGLS algorithm.

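For the multi-input OEAR system in Equations (1)–(4), define the filtered input–output data $u_{jf}(t)$ and $y_f(t)$ as

$$u_{jf}(t) := C(z)u_j(t), \tag{8}$$

$$y_f(t) := C(z)y(t). \tag{9}$$

Multiplying both sides of Equations (1) and (4) by $C(z)$, and noting that $C(z)w(t) = v(t)$, we obtain the following filtered output-error model:

$$y_f(t) = x_f(t) + v(t), \tag{10}$$

$$x_f(t) := C(z)x(t) = \sum_{j=1}^{r}\frac{B_j(z)}{A(z)}u_{jf}(t). \tag{11}$$

Define the filtered information vector $\varphi_f(t)$ as

$$\varphi_f(t) := [-x_f(t-1), \ldots, -x_f(t-n_a), u_{1f}(t-1), \ldots, u_{1f}(t-n_1), \ldots, u_{rf}(t-1), \ldots, u_{rf}(t-n_r)]^{\mathrm{T}} \in \mathbb{R}^{n}. \tag{12}$$

Equations (10) and (11) can then be rewritten as

$$x_f(t) = \varphi_f^{\mathrm{T}}(t)\theta_s, \qquad y_f(t) = \varphi_f^{\mathrm{T}}(t)\theta_s + v(t). \tag{13}$$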
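The filtering step above can be illustrated numerically. In this sketch (NumPy assumed; single input, hypothetical coefficients, and our own helper names `poly_filter` and `rational_filter`), filtering with $C(z)$ whitens the colored noise, $C(z)w(t) = v(t)$, and because linear time-invariant filters with zero initial conditions commute, $C(z)x(t)$ equals $[B(z)/A(z)]u_f(t)$, so that $y_f(t) = x_f(t) + v(t)$.

```python
import numpy as np

def poly_filter(coeffs, seq):
    """Apply 1 + coeffs[0] z^-1 + ... + coeffs[-1] z^-m to seq, with seq(t) = 0 for t < 0."""
    seq = np.asarray(seq, dtype=float)
    out = seq.copy()
    for i, ci in enumerate(coeffs, start=1):
        if i < len(seq):
            out[i:] += ci * seq[:len(seq) - i]
    return out

def rational_filter(den, num, seq):
    """out = [num(z)/den(z)] seq with num(z) = num[0] + num[1] z^-1 + ...,
    den(z) = 1 + den[0] z^-1 + ..., and zero initial conditions."""
    out = np.zeros(len(seq))
    for t in range(len(seq)):
        acc = sum(bi * seq[t - i] for i, bi in enumerate(num) if t - i >= 0)
        acc -= sum(ai * out[t - i] for i, ai in enumerate(den, start=1) if t - i >= 0)
        out[t] = acc
    return out

# Hypothetical single-input example: A(z) = 1 - 0.5z^-1 + 0.2z^-2,
# B(z) = z^-1 + 0.5z^-2, C(z) = 1 + 0.6z^-1 - 0.2z^-2
a, b, c = np.array([-0.5, 0.2]), np.array([0.0, 1.0, 0.5]), np.array([0.6, -0.2])
rng = np.random.default_rng(1)
u, v = rng.standard_normal(500), rng.standard_normal(500)
x = rational_filter(a, b, u)          # noise-free output x(t)
w = rational_filter(c, [1.0], v)      # colored noise w(t) = v(t)/C(z)
y = x + w

yf, uf = poly_filter(c, y), poly_filter(c, u)   # filtered data y_f = C(z)y, u_f = C(z)u
xf = rational_filter(a, b, uf)                  # x_f(t) = [B(z)/A(z)] u_f(t)
print(np.max(np.abs(poly_filter(c, w) - v)))    # C(z)w(t) = v(t), round-off only
print(np.max(np.abs(yf - (xf + v))))            # y_f(t) = x_f(t) + v(t), round-off only
```

The filtered model has white noise $v(t)$ instead of the colored $w(t)$, which is what lets plain least squares be used on it.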

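Following the idea in [28] and applying the least squares principle to the filtered identification model in Equation (13), we get

$$\hat\theta_s(t) = \left[\sum_{i=1}^{t}\varphi_f(i)\varphi_f^{\mathrm{T}}(i)\right]^{-1}\sum_{i=1}^{t}\varphi_f(i)y_f(i). \tag{14}$$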

Since the information vector $\varphi_f(t)$ contains the unknown variables $x_f(t-i)$ and $u_{jf}(t-i)$, the algorithm in Equation (14) cannot be applied to estimate $\theta_s$ directly. Following the idea in [29], the unknown variables $x_f(t-i)$ are replaced with the outputs $\hat x_f(t-i)$ of an auxiliary model, and the unmeasurable terms $u_{jf}(t-i)$ and $y_f(t)$ are replaced with their estimates $\hat u_{jf}(t-i)$ and $\hat y_f(t)$, respectively. The derivation is as follows.

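Let $\hat\theta_s(t)$ and $\hat c(t)$ be the estimates of $\theta_s$ and $c$ at time $t$, respectively, and let $\hat x_f(t)$ denote the output of the auxiliary model. Use the estimates $\hat x_f(t-i)$, $\hat u_{jf}(t-i)$ and $\hat\theta_s(t)$ to define the estimates of $\varphi_f(t)$ and $x_f(t)$ as

$$\hat\varphi_f(t) := [-\hat x_f(t-1), \ldots, -\hat x_f(t-n_a), \hat u_{1f}(t-1), \ldots, \hat u_{1f}(t-n_1), \ldots, \hat u_{rf}(t-1), \ldots, \hat u_{rf}(t-n_r)]^{\mathrm{T}}, \qquad \hat x_f(t) = \hat\varphi_f^{\mathrm{T}}(t)\hat\theta_s(t),$$

where the required estimates can be computed by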

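Define the covariance matrix

$$P_f(t) := \left[\sum_{i=1}^{t}\varphi_f(i)\varphi_f^{\mathrm{T}}(i)\right]^{-1} \in \mathbb{R}^{n \times n}$$

and the gain vector $L_f(t) := P_f(t)\varphi_f(t) \in \mathbb{R}^{n}$. Equation (14) can be written as

$$\hat\theta_s(t) = P_f(t)\sum_{i=1}^{t}\varphi_f(i)y_f(i), \qquad P_f^{-1}(t) = P_f^{-1}(t-1) + \varphi_f(t)\varphi_f^{\mathrm{T}}(t). \tag{15}$$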

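Applying the matrix inversion formula to Equation (15), we obtain the following recursive least squares algorithm for estimating $\theta_s$:

$$\hat\theta_s(t) = \hat\theta_s(t-1) + L_f(t)\left[y_f(t) - \varphi_f^{\mathrm{T}}(t)\hat\theta_s(t-1)\right], \tag{16}$$

$$L_f(t) = P_f(t-1)\varphi_f(t)\left[1 + \varphi_f^{\mathrm{T}}(t)P_f(t-1)\varphi_f(t)\right]^{-1}, \tag{17}$$

$$P_f(t) = \left[I_n - L_f(t)\varphi_f^{\mathrm{T}}(t)\right]P_f(t-1). \tag{18}$$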
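The recursion in Equations (16)–(18) can be sketched in a few lines of Python (NumPy assumed; `rls_step` is our name, and the regression data are synthetic). With a large initial covariance $P(0) = p_0 I$, the recursive estimate after all samples should essentially coincide with the batch least squares solution, which the example checks; the same update applies to the noise-model recursion for $c$.

```python
import numpy as np

def rls_step(theta, P, phi, y):
    """One RLS update in gain/covariance form (matrix inversion lemma)."""
    Pphi = P @ phi
    gain = Pphi / (1.0 + phi @ Pphi)                 # L(t) = P(t-1)phi / (1 + phi' P(t-1) phi)
    theta = theta + gain * (y - phi @ theta)         # theta(t) = theta(t-1) + L(t) * innovation
    P = P - np.outer(gain, Pphi)                     # P(t) = [I - L(t) phi'] P(t-1), P symmetric
    return theta, P

rng = np.random.default_rng(2)
Phi = rng.standard_normal((300, 4))                  # one regressor row per time t
Y = Phi @ np.array([1.0, -0.5, 0.3, 2.0]) + 0.1 * rng.standard_normal(300)

theta, P = np.zeros(4), 1e6 * np.eye(4)              # large initial covariance p0 * I
for phi, yt in zip(Phi, Y):
    theta, P = rls_step(theta, P, phi, yt)

theta_batch = np.linalg.lstsq(Phi, Y, rcond=None)[0]
print(np.max(np.abs(theta - theta_batch)))           # small: RLS reproduces batch LS
```

The only difference from the batch solution is the regularization $P^{-1}(0) = I/p_0$, which is negligible for large $p_0$.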

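Similarly, applying the least squares principle to the noise model in Equation (6), with $P_n(t) := [\sum_{i=1}^{t}\varphi_n(i)\varphi_n^{\mathrm{T}}(i)]^{-1}$ and $L_n(t) := P_n(t)\varphi_n(t)$, we obtain the following algorithm for estimating the parameter vector $c$:

$$\hat c(t) = \hat c(t-1) + L_n(t)\left[w(t) - \varphi_n^{\mathrm{T}}(t)\hat c(t-1)\right], \tag{19}$$

$$L_n(t) = P_n(t-1)\varphi_n(t)\left[1 + \varphi_n^{\mathrm{T}}(t)P_n(t-1)\varphi_n(t)\right]^{-1}, \tag{20}$$

$$P_n(t) = \left[I_{n_c} - L_n(t)\varphi_n^{\mathrm{T}}(t)\right]P_n(t-1). \tag{21}$$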

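The noise information vector $\varphi_n(t)$ involves the unknown terms $w(t-i)$. From Equations (6) and (7), $w(t) = y(t) - \varphi_s^{\mathrm{T}}(t)\theta_s$, so once the estimate $\hat\theta_s(t)$ is obtained, the estimate $\hat w(t)$ can be computed by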

Replace the unmeasurable noise terms $w(t-i)$ in $\varphi_n(t)$ with their estimates $\hat w(t-i)$, and define the estimate of $\varphi_n(t)$ as

Use the estimate $\hat c(t)$ to form the estimate of $C(z)$ as follows:

The estimates of the filtered inputs $u_{jf}(t)$ and the filtered output $y_f(t)$ can be computed by

Replacing $\varphi_f(t)$ and $y_f(t)$ in Equations (16)–(18) with their estimates $\hat\varphi_f(t)$ and $\hat y_f(t)$, and replacing $\varphi_n(t)$ and $w(t)$ in Equations (19)–(21) with their estimates $\hat\varphi_n(t)$ and $\hat w(t)$, we can summarize the data filtering based recursive generalized least squares (F-RGLS) algorithm for multi-input OEAR systems:

The F-RGLS algorithm involves two steps: the parameter identification of the system model (Equations (22)–(29)) and the parameter identification of the noise model (Equations (30)–(37)). The F-RGLS algorithm can estimate the parameters of the multi-input OEAR system; however, at time $t$ it uses only the data measured up to time $t$, not the data $\{u_j(t+i), y(t+i): i \geq 1\}$ measured afterwards. Next, we make full use of all the measured data to improve the parameter estimation accuracy by adopting the iterative identification approach.
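To make the structure of F-RGLS concrete, here is a minimal Python sketch (NumPy assumed). It is our own reading of the steps described above, not a transcription of Equations (22)–(37): at each time $t$ the newest samples are filtered with the most recent $\hat C(z)$, $\theta_s$ is updated by RLS on the filtered data with an auxiliary model supplying $\hat x_f(t)$, and $c$ is updated by RLS on $\hat w(t) = y(t) - \hat\varphi_s^{\mathrm{T}}(t)\hat\theta_s(t)$. For simplicity, past filtered samples are stored rather than refiltered when $\hat c(t)$ changes. The system, noise level, data length and $p_0 = 10^6$ are hypothetical choices, and δ is taken as $\|\hat\theta - \theta\|/\|\theta\|$.

```python
import numpy as np

def lagged(seq, t, n):
    """[seq(t-1), ..., seq(t-n)] with zeros before t = 0."""
    return np.array([seq[t - i] if t - i >= 0 else 0.0 for i in range(1, n + 1)])

def simulate_oear(a, bs, c, us, sigma, rng):
    """y(t) = x(t) + w(t) with A(z)x(t) = sum_j B_j(z)u_j(t) and C(z)w(t) = v(t)."""
    L = us.shape[1]
    v = sigma * rng.standard_normal(L)
    x, w = np.zeros(L), np.zeros(L)
    for t in range(L):
        x[t] = -lagged(x, t, len(a)) @ a + sum(lagged(u, t, len(b)) @ b for u, b in zip(us, bs))
        w[t] = -lagged(w, t, len(c)) @ c + v[t]
    return x + w

def rls_step(theta, P, phi, target):
    """Gain/covariance form of recursive least squares."""
    Pphi = P @ phi
    gain = Pphi / (1.0 + phi @ Pphi)
    return theta + gain * (target - phi @ theta), P - np.outer(gain, Pphi)

def f_rgls(us, y, na, nbs, nc, p0=1e6):
    """Sketch of F-RGLS; returns the stacked estimate [theta_s_hat; c_hat] at the last time."""
    r, L = us.shape
    n = na + sum(nbs)
    th, Pf = np.zeros(n), p0 * np.eye(n)      # system model: theta_s_hat(t), P_f(t)
    c, Pn = np.zeros(nc), p0 * np.eye(nc)     # noise model: c_hat(t), P_n(t)
    uf = np.zeros((r, L))                      # stored filtered inputs u_jf_hat(t)
    xf, xh, wh = np.zeros(L), np.zeros(L), np.zeros(L)
    for t in range(L):
        # Filter the newest samples with C_hat(z) = 1 + c_1 z^-1 + ... + c_nc z^-nc
        for j in range(r):
            uf[j, t] = us[j, t] + lagged(us[j], t, nc) @ c
        yf = y[t] + lagged(y, t, nc) @ c
        # System model: RLS on y_f_hat(t) = phi_f_hat(t)' theta_s + v(t)
        phif = np.concatenate([-lagged(xf, t, na)] + [lagged(uf[j], t, nbs[j]) for j in range(r)])
        th, Pf = rls_step(th, Pf, phif, yf)
        xf[t] = phif @ th                      # auxiliary-model output x_f_hat(t)
        # Unfiltered auxiliary model and noise estimate w_hat(t)
        phis = np.concatenate([-lagged(xh, t, na)] + [lagged(us[j], t, nbs[j]) for j in range(r)])
        xh[t] = phis @ th
        wh[t] = y[t] - xh[t]
        # Noise model: RLS on w_hat(t) = phi_n_hat(t)' c + v(t)
        c, Pn = rls_step(c, Pn, -lagged(wh, t, nc), wh[t])
    return np.concatenate([th, c])

# Hypothetical two-input system (not the paper's Example 1)
a, c = np.array([-0.5, 0.2]), np.array([0.6])
bs = [np.array([1.0, 0.5]), np.array([0.8, -0.3])]
rng = np.random.default_rng(0)
us = rng.standard_normal((2, 3000))
y = simulate_oear(a, bs, c, us, 0.3, rng)
theta = np.concatenate([a, *bs, c])
est = f_rgls(us, y, 2, [2, 2], 1)
delta = np.linalg.norm(est - theta) / np.linalg.norm(theta)
print(est.round(3), delta)
```

Keeping two separate recursions means the covariance matrices are $n \times n$ and $n_c \times n_c$ rather than one $(n + n_c) \times (n + n_c)$ matrix.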

4. The Data Filtering Based Iterative Least Squares Algorithm

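Suppose that the data length $L$ is sufficiently large, and define the stacked vectors and matrices

$$Y_f(L) := [y_f(1), \ldots, y_f(L)]^{\mathrm{T}}, \quad \Phi_f(L) := [\varphi_f(1), \ldots, \varphi_f(L)]^{\mathrm{T}}, \quad W(L) := [w(1), \ldots, w(L)]^{\mathrm{T}}, \quad \Phi_n(L) := [\varphi_n(1), \ldots, \varphi_n(L)]^{\mathrm{T}}.$$

Based on the identification models in Equations (6) and (13), we define two quadratic criterion functions

$$J_1(\theta_s) := \sum_{t=1}^{L}\left[y_f(t) - \varphi_f^{\mathrm{T}}(t)\theta_s\right]^2 = \|Y_f(L) - \Phi_f(L)\theta_s\|^2, \qquad J_2(c) := \sum_{t=1}^{L}\left[w(t) - \varphi_n^{\mathrm{T}}(t)c\right]^2 = \|W(L) - \Phi_n(L)c\|^2.$$

Minimizing these two quadratic criterion functions gives the least squares estimates of $\theta_s$ and $c$:

$$\hat\theta_s = \left[\Phi_f^{\mathrm{T}}(L)\Phi_f(L)\right]^{-1}\Phi_f^{\mathrm{T}}(L)Y_f(L), \tag{41}$$

$$\hat c = \left[\Phi_n^{\mathrm{T}}(L)\Phi_n(L)\right]^{-1}\Phi_n^{\mathrm{T}}(L)W(L). \tag{42}$$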
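The minimizers in Equations (41) and (42) are ordinary least squares solutions. A small Python check (NumPy assumed; `ls_estimate` is our name and the data are synthetic) solves the normal equations $\Phi^{\mathrm{T}}\Phi\,\vartheta = \Phi^{\mathrm{T}}Y$ and confirms that the gradient $-2\Phi^{\mathrm{T}}(Y - \Phi\hat\vartheta)$ of the quadratic criterion vanishes at the estimate.

```python
import numpy as np

def ls_estimate(Phi, Y):
    """Minimizer of J(theta) = ||Y - Phi theta||^2 via the normal equations."""
    return np.linalg.solve(Phi.T @ Phi, Phi.T @ Y)

rng = np.random.default_rng(3)
Phi = rng.standard_normal((500, 3))             # stacked regressors, one row per time t
Y = Phi @ np.array([0.4, -1.2, 0.7]) + 0.2 * rng.standard_normal(500)
theta_hat = ls_estimate(Phi, Y)
grad = -2 * Phi.T @ (Y - Phi @ theta_hat)       # gradient of J at the estimate
print(theta_hat, np.max(np.abs(grad)))          # gradient vanishes up to round-off
```

In practice, `np.linalg.lstsq` is numerically safer than forming $\Phi^{\mathrm{T}}\Phi$ explicitly, especially when the information matrix is ill-conditioned.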

Because the information vectors $\varphi_f(t)$ and $\varphi_n(t)$ are unknown, the estimates in Equations (41) and (42) cannot be computed directly. Here, we adopt the iterative estimation theory. Let $k = 1, 2, 3, \ldots$ be an iteration variable, and let $\hat\theta_{s,k}$ and $\hat c_k$ denote the iterative estimates of $\theta_s$ and $c$ at iteration $k$. Let $\hat x_k(t)$ and $\hat w_k(t)$ be the estimates of $x(t)$ and $w(t)$ at iteration $k$. Replacing the unknown quantities in Equations (5) and (6) with their estimates at iteration $k$ (or at iteration $k-1$ where the iteration-$k$ values are not yet available), the estimates $\hat x_k(t)$ and $\hat w_k(t)$ can be calculated by

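Replacing $x(t-i)$ in $\varphi_s(t)$ and $w(t-i)$ in $\varphi_n(t)$ with their iterative estimates, the estimates $\hat\varphi_{s,k}(t)$, $\hat\varphi_{n,k}(t)$ and $\hat\varphi_k(t)$ can be obtained by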

Use the parameter estimate $\hat c_k$ to form the estimate $\hat C_k(z)$ of $C(z)$ at iteration $k$:

Filtering the input–output data $u_j(t)$ and $y(t)$ with $\hat C_k(z)$, we obtain the estimates $\hat u_{jf,k}(t)$ and $\hat y_{f,k}(t)$ of $u_{jf}(t)$ and $y_f(t)$:

Let $\hat x_{f,k}(t)$ be the estimate of $x_f(t)$ at iteration $k$. Replacing $x_f(t-i)$ and $u_{jf}(t-i)$ in Equation (12) with their iterative estimates, the estimate $\hat\varphi_{f,k}(t)$ can be computed by

Replacing the remaining unknown quantities with their estimates at iteration $k-1$ and at iteration $k$, we obtain the estimates:

Replacing $\Phi_f(L)$ and $Y_f(L)$ in Equation (41) with their estimates $\hat\Phi_{f,k}(L)$ and $\hat Y_{f,k}(L)$, and replacing $\Phi_n(L)$ and $W(L)$ in Equation (42) with their estimates $\hat\Phi_{n,k}(L)$ and $\hat W_k(L)$, we obtain the data filtering based iterative least squares (F-LSI) algorithm for estimating the parameter vectors $\theta_s$ and $c$:

From Equations (43)–(54), we can summarize the F-LSI algorithm as follows:

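The steps for computing the estimates $\hat\theta_{s,k}$ and $\hat c_k$ as the iteration $k$ increases are as follows:

1. Initialize: let $k = 1$ and give the initial values $\hat\theta_{s,0}$, $\hat c_0$, $\hat x_0(t)$, $\hat x_{f,0}(t)$ and $\hat w_0(t)$.

2. Collect the input–output data $\{u_j(t), y(t): t = 1, 2, \ldots, L\}$.

3. Form the information vectors by Equations (64), (63) and (62).

4. Compute the noise estimate by Equation (66), and update the noise-model parameter estimate by Equation (61).

5. Form the filter estimate by Equation (69), and compute the filtered input and output estimates by Equations (58) and (59).

6. Form the filtered information quantities by Equations (56) and (57), and update the system-model parameter estimate by Equation (55).

7. Read the parameter estimate by Equation (68), and compute the auxiliary-model outputs by Equations (60) and (65).

8. Choose a small $\varepsilon > 0$ and compare $\hat\theta_k$ with $\hat\theta_{k-1}$: if $\|\hat\theta_k - \hat\theta_{k-1}\| < \varepsilon$, terminate the iteration and take $k$ and $\hat\theta_k$ as the result; otherwise, increase $k$ by 1 and go to Step 3.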

Remark:

The computational complexity of an algorithm is measured by the number of multiplications and additions it requires, which depends on the dimensions of the parameter vectors and on the data length.
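As a rough illustration of how the dimensions enter (our own count, not the paper's complexity figures): a covariance update of the form in Equation (18) for a $d$-dimensional parameter vector needs on the order of $d^2$ multiplications. Updating separate $n \times n$ and $n_c \times n_c$ covariance matrices, instead of a single $(n + n_c) \times (n + n_c)$ matrix for the combined model in Equation (7), therefore saves about

$$(n + n_c)^2 - (n^2 + n_c^2) = 2nn_c$$

matrix entries per step; for hypothetical orders $n = 6$ and $n_c = 2$, this is $64$ versus $40$ entries.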

5. Examples

Example 1:

Consider the following multi-input OEAR system:

The parameter vector to be estimated is

The inputs $\{u_1(t)\}$ and $\{u_2(t)\}$ are taken as two persistently exciting signal sequences with zero mean and unit variance, and $\{v(t)\}$ as a white noise sequence with zero mean and variance $\sigma^2$; two different values of $\sigma^2$ are used.

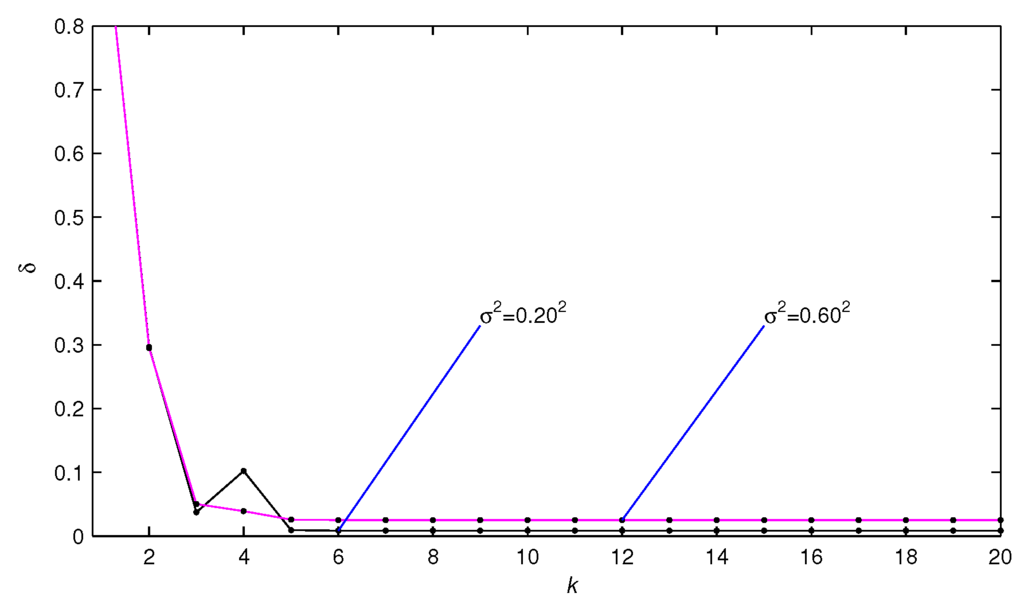

Applying the F-RGLS algorithm to this example system gives the parameter estimates and estimation errors shown in Table 1. Applying the F-LSI algorithm with different noise variances gives the parameter estimates and errors δ shown in Table 2 and Table 3 for two different data lengths $L$. Table 4 shows the F-LSI parameter estimates and errors δ at a fixed iteration $k$ under different noise variances and data lengths. Figure 1 shows the parameter estimation errors δ versus $k$ under different noise variances.
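The error δ reported in the tables is not defined in this section; assuming the relative error $\delta := \|\hat\theta - \theta\| / \|\theta\|$ that is common in this literature, it can be computed as follows (Python with NumPy; `relative_error` is our name).

```python
import numpy as np

def relative_error(theta_hat, theta):
    """delta = ||theta_hat - theta|| / ||theta|| with Euclidean norms."""
    theta_hat, theta = np.asarray(theta_hat, float), np.asarray(theta, float)
    return np.linalg.norm(theta_hat - theta) / np.linalg.norm(theta)

print(relative_error([3, 0], [3, 4]))  # 0.8, i.e. ||[0, -4]|| / ||[3, 4]|| = 4 / 5
```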

Table 1.

The F-RGLS estimates and their errors for Example 1.

Table 2.

The F-LSI parameter estimates and errors for Example 1 (first data length $L$).

Table 3.

The F-LSI parameter estimates and errors for Example 1 (second data length $L$).

Table 4.

The F-LSI parameter estimates and errors for Example 1 (fixed iteration $k$).

Figure 1.

The estimation errors δ versus $k$.

From Tables 1–4 and Figure 1, we can draw the following conclusions:

- Increasing the data length L improves the parameter estimation accuracy of both the F-RGLS and F-LSI algorithms, and the parameter estimates become more stable as L increases.

- Under the same data length, the estimation accuracy of both the F-RGLS and F-LSI algorithms increases as the noise variance decreases.

- Under the same data length and noise variance, the estimation errors of the F-LSI algorithm are smaller than those of the F-RGLS algorithm.

- The F-LSI algorithm converges quickly: the parameter estimates approach their true values within a few iterations.

Example 2:

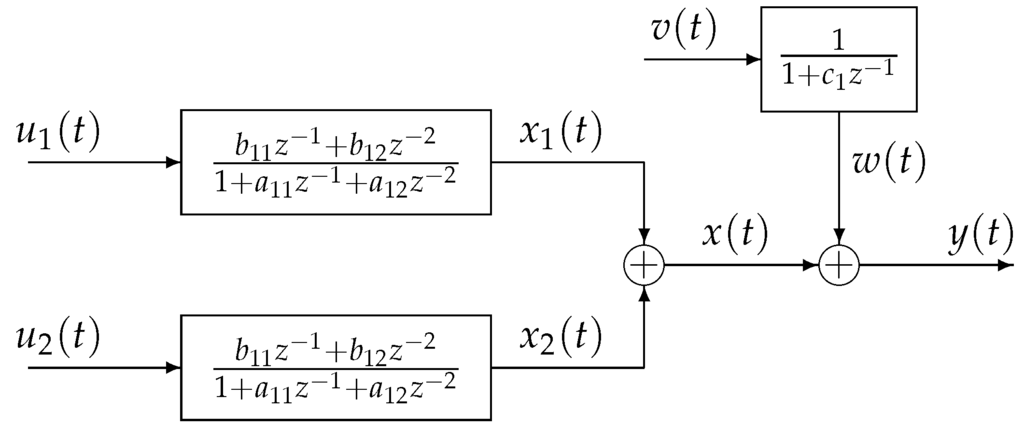

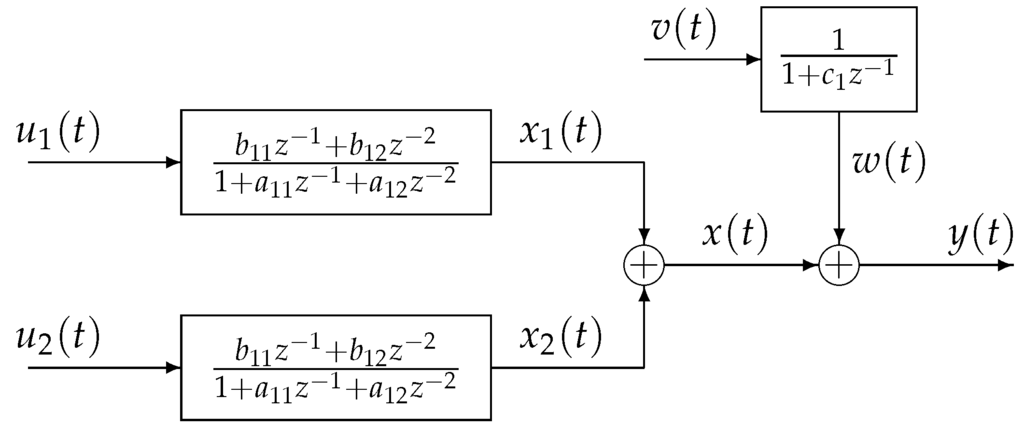

Consider an industrial process with colored noise that has two inputs and one output, as shown in Figure 2, described by

where , and .

Figure 2.

The diagram of a multi-input OEAR system.

The parameters to be estimated are

The simulation conditions are the same as those of Example 1, with a single fixed noise variance $\sigma^2$. The parameter estimates and errors obtained by the F-RGLS and F-LSI algorithms are presented in Table 5 and Table 6, respectively.

Table 5.

The F-RGLS parameter estimates and errors for Example 2.

Table 6.

The F-LSI parameter estimates and errors for Example 2.

From Table 5 and Table 6, we can see that the F-RGLS estimation errors become smaller as $t$ increases and that the F-LSI algorithm obtains accurate parameter estimates within a few iterations, which shows the effectiveness of the proposed algorithms.

The model in Example 2 can describe the power consumption of host servers. The two inputs are the changes in the CPU frequency of the host server and the changes in the guest server's time share of the host server's physical CPU, and the power consumption is the system output. The configuration and allocation of memory, storage and network bandwidth for the guest server act as random disturbances to the system.

6. Conclusions

This paper discusses the parameter estimation problem for multi-input OEAR systems. Based on the data filtering technique, an F-RGLS algorithm and an F-LSI algorithm are developed, and both are effective for estimating the parameters of multi-input OEAR systems. The simulation results indicate that the F-LSI algorithm achieves higher estimation accuracy than the F-RGLS algorithm, and that increasing the data length improves the convergence of both methods. The methods in this paper can be extended to the identification of other linear systems, nonlinear systems, state-space systems and time-delay systems.

Acknowledgments

This work was supported by the Visiting Scholar Project for Young Backbone Teachers of Shandong Province, China.

Conflicts of Interest

The author declares no conflict of interest.

References

- Mobayen, S. An LMI-based robust tracker for uncertain linear systems with multiple time-varying delays using optimal composite nonlinear feedback technique. Nonlinear Dyn. 2015, 80, 917–927.

- Saab, S.S.; Toukhtarian, R. A MIMO sampling-rate-dependent controller. IEEE Trans. Ind. Electron. 2015, 62, 3662–3671.

- Garnier, H.; Gilson, M.; Young, P.C.; Huselstein, E. An optimal IV technique for identifying continuous-time transfer function model of multiple input systems. Control Eng. Pract. 2007, 15, 471–486.

- Chouaba, S.E.; Chamroo, A.; Ouvrard, R.; Poinot, T. A counter flow water to oil heat exchanger: MISO quasi linear parameter varying modeling and identification. Simulat. Model. Pract. Theory 2012, 23, 87–98.

- Halaoui, M.E.; Kaabal, A.; Asselman, H.; Ahyoud, S.; Asselman, A. Dual band PIFA for WLAN and WiMAX MIMO systems for mobile handsets. Procedia Technol. 2016, 22, 878–883.

- Al-Hazemi, F.; Peng, Y.Y.; Youn, C.H. A MISO model for power consumption in virtualized servers. Cluster Comput. 2015, 18, 847–863.

- Yerramilli, S.; Tangirala, A.K. Detection and diagnosis of model-plant mismatch in MIMO systems using plant-model ratio. IFAC-Papers OnLine 2016, 49, 266–271.

- Sannuti, P.; Saberi, A.; Zhang, M.R. Squaring down of general MIMO systems to invertible uniform rank systems via pre- and/or post- compensators. Automatica 2014, 50, 2136–2141.

- Wang, Y.J.; Ding, F. Novel data filtering based parameter identification for multiple-input multiple-output systems using the auxiliary model. Automatica 2016, 71, 308–313.

- Wang, D.Q.; Ding, F. Parameter estimation algorithms for multivariable Hammerstein CARMA systems. Inf. Sci. 2016, 355, 237–248.

- Liu, Y.J.; Tao, T.Y. A CS recovery algorithm for model and time delay identification of MISO-FIR systems. Algorithms 2015, 8, 743–753.

- Wang, X.H.; Ding, F. Convergence of the recursive identification algorithms for multivariate pseudo-linear regressive systems. Int. J. Adapt. Control Signal Process. 2016, 30, 824–842.

- Ji, Y.; Liu, X.M. Unified synchronization criteria for hybrid switching-impulsive dynamical networks. Circuits Syst. Signal Process. 2015, 34, 1499–1517.

- Meng, D.D.; Ding, F. Model equivalence-based identification algorithm for equation-error systems with colored noise. Algorithms 2015, 8, 280–291.

- Wang, Y.J.; Ding, F. The filtering based iterative identification for multivariable systems. IET Control Theory Appl. 2016, 10, 894–902.

- Dehghan, M.; Hajarian, M. Finite iterative methods for solving systems of linear matrix equations over reflexive and anti-reflexive matrices. Bull. Iran. Math. Soc. 2014, 40, 295–323.

- Xu, L. The damping iterative parameter identification method for dynamical systems based on the sine signal measurement. Signal Process. 2016, 120, 660–667.

- Zhang, W.G. Decomposition based least squares iterative estimation for output error moving average systems. Eng. Comput. 2014, 31, 709–725.

- Zhou, L.C.; Li, X.L.; Xu, H.G.; Zhu, P.Y. Gradient-based iterative identification for Wiener nonlinear dynamic systems with moving average noises. Algorithms 2015, 8, 712–722.

- Shi, P.; Luan, X.L.; Liu, F. H-infinity filtering for discrete-time systems with stochastic incomplete measurement and mixed delays. IEEE Trans. Ind. Electron. 2012, 59, 2732–2739.

- Wang, Y.J.; Ding, F. The auxiliary model based hierarchical gradient algorithms and convergence analysis using the filtering technique. Signal Process. 2016, 128, 212–221.

- Li, X.Y.; Sun, S.L. H-infinity filtering for networked linear systems with multiple packet dropouts and random delays. Digital Signal Process. 2015, 46, 59–67.

- Ding, F.; Liu, X.M.; Gu, Y. An auxiliary model based least squares algorithm for a dual-rate state space system with time-delay using the data filtering. J. Franklin Inst. 2016, 353, 398–408.

- Zhang, L.; Wang, Z.P.; Sun, F.C.; Dorrell, D.G. Online parameter identification of ultracapacitor models using the extended kalman filter. Algorithms 2014, 7, 3204–3217.

- Basin, M.; Shi, P.; Calderon-Alvarez, D. Joint state filtering and parameter estimation for linear stochastic time-delay systems. Signal Process. 2011, 91, 782–792.

- Scarpiniti, M.; Comminiello, D.; Parisi, R.; Uncini, A. Nonlinear system identification using IIR Spline Adaptive filters. Signal Process. 2015, 108, 30–35.

- Wang, C.; Tang, T. Several gradient-based iterative estimation algorithms for a class of nonlinear systems using the filtering technique. Nonlinear Dyn. 2014, 77, 769–780.

- Ding, F.; Wang, Y.J.; Ding, J. Recursive least squares parameter identification for systems with colored noise using the filtering technique and the auxiliary model. Digital Signal Process. 2015, 37, 100–108.

- Wang, D.Q. Least squares-based recursive and iterative estimation for output error moving average systems using data filtering. IET Control Theory Appl. 2011, 5, 1648–1657.

© 2016 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).