Abstract

In this work, we have developed a fourth order Newton-like method based on the harmonic mean, and its multi-step version, for solving systems of nonlinear equations. The new fourth order method requires the evaluation of one function and two first order Fréchet derivatives per iteration. The multi-step version requires one more function evaluation per iteration. The proposed scheme does not require the evaluation of second or higher order Fréchet derivatives and still reaches fourth order convergence. The multi-step version converges with order 2r + 4, where r ≥ 1 is an integer. We prove that the root α is a point of attraction for a general iterative function, and that the proposed schemes satisfy this result. Numerical experiments, including an application to the 1-D Bratu problem, illustrate the efficiency of the new methods, which are also compared with some existing methods.

1. Introduction

An often discussed problem in many applications of science and technology is to find a real zero of a system of nonlinear equations $F(x) = 0$, where $F(x) = (f_1(x), f_2(x), \ldots, f_n(x))^T$, $x = (x_1, x_2, \ldots, x_n)^T$, $F: D \subseteq \mathbb{R}^n \to \mathbb{R}^n$ is a smooth map and D is an open convex set. We assume that $\alpha = (\alpha_1, \alpha_2, \ldots, \alpha_n)^T$ is a zero of the system and that $x^{(0)}$ is an initial guess sufficiently close to α. Problems of this type arise, for example, when solving boundary value problems for differential equations: the differential equations are reduced to systems of nonlinear equations, which are in turn solved by the familiar Newton’s method, which has convergence order two [1]. Newton’s method (2ndNM) is given by

$$x^{(k+1)} = x^{(k)} - F'(x^{(k)})^{-1} F(x^{(k)}), \quad k = 0, 1, 2, \ldots \tag{1}$$

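A minimal Python sketch of Newton’s method (1) follows. The test system $F(x) = (x_1^2 + x_2^2 - 1,\ x_1 - x_2)^T$, its Jacobian, the tolerance and the iteration cap are our own illustrative assumptions, not taken from this paper, and NumPy is assumed to be available. Each step solves the linear system $F'(x^{(k)})s = F(x^{(k)})$ instead of forming the inverse Jacobian explicitly.

```python
import numpy as np

def F(x):
    # Illustrative system (our choice): unit circle intersected with the line x1 = x2
    return np.array([x[0]**2 + x[1]**2 - 1.0, x[0] - x[1]])

def J(x):
    # Jacobian F'(x) of the illustrative system
    return np.array([[2.0 * x[0], 2.0 * x[1]],
                     [1.0, -1.0]])

def newton(F, J, x0, tol=1e-12, max_iter=50):
    """Newton's method x_{k+1} = x_k - F'(x_k)^{-1} F(x_k), using a linear solve."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        step = np.linalg.solve(J(x), F(x))  # s = F'(x)^{-1} F(x)
        x = x - step
        if np.linalg.norm(step) < tol:
            return x, k
    return x, max_iter

root, iters = newton(F, J, [1.0, 0.5])
print(root, iters)
```

Solving with `numpy.linalg.solve` rather than inverting the Jacobian is the usual choice for cost and numerical stability.
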
Homeier [2] proposed a third order iterative method, called the Harmonic Mean Newton’s method, for solving a single nonlinear equation. By analogy with [2], we consider the following extension for solving the system $F(x) = 0$, henceforth called the third order Harmonic Mean Newton’s method:
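
$$
\begin{aligned}
y^{(k)} &= x^{(k)} - F'(x^{(k)})^{-1} F(x^{(k)}),\\
x^{(k+1)} &= x^{(k)} - \tfrac{1}{2}\left[F'(x^{(k)})^{-1} + F'(y^{(k)})^{-1}\right] F(x^{(k)}).
\end{aligned} \tag{2}
$$
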
We note that $\frac{1}{2}\left[F'(x^{(k)})^{-1} + F'(y^{(k)})^{-1}\right]$ is the average of the inverses of two Jacobians. In general, third order methods free of second derivatives, such as Equation (2), can be used for solving systems of nonlinear equations. These methods require one function evaluation and two first order Fréchet derivative evaluations per iteration. The convergence analysis of a few such methods using point of attraction theory can be found in [3]. Equation (2) is more efficient than Halley’s method because it does not require the evaluation of the second Fréchet derivative, a third order tensor with $n^3$ entries.
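
The harmonic-mean step (2) can be sketched in Python as below, reusing the same illustrative 2×2 system (our choice, not from this paper). Instead of inverting the two Jacobians, the sketch solves $F'(x)a = F(x)$ and $F'(y)b = F(x)$ and averages $a$ and $b$, which equals $\frac{1}{2}[F'(x)^{-1} + F'(y)^{-1}]F(x)$. Each iteration evaluates F once and the Jacobian twice, matching the cost stated above.

```python
import numpy as np

def F(x):
    # Illustrative system (our choice): x1^2 + x2^2 = 1 and x1 = x2
    return np.array([x[0]**2 + x[1]**2 - 1.0, x[0] - x[1]])

def J(x):
    # Jacobian F'(x) of the illustrative system
    return np.array([[2.0 * x[0], 2.0 * x[1]],
                     [1.0, -1.0]])

def harmonic_mean_newton(F, J, x0, tol=1e-12, max_iter=50):
    """Harmonic-mean Newton iteration for F(x) = 0 (sketch of Equation (2))."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        Fx = F(x)                      # the only evaluation of F in this iteration
        a = np.linalg.solve(J(x), Fx)  # F'(x)^{-1} F(x)
        y = x - a                      # Newton predictor
        b = np.linalg.solve(J(y), Fx)  # F'(y)^{-1} F(x)
        step = 0.5 * (a + b)           # average of the two inverse-Jacobian actions
        x = x - step
        if np.linalg.norm(step) < tol:
            return x, k
    return x, max_iter

root, iters = harmonic_mean_newton(F, J, [1.0, 0.5])
print(root, iters)
```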

However, these third order methods are less efficient than the two-step fourth order Newton’s method
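
$$
\begin{aligned}
y^{(k)} &= x^{(k)} - F'(x^{(k)})^{-1} F(x^{(k)}),\\
x^{(k+1)} &= y^{(k)} - F'(y^{(k)})^{-1} F(y^{(k)}),
\end{aligned} \tag{3}
$$
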
which was recently rediscovered by Noor and Waseem [4] using the variational iteration technique. Sharma et al. [5] recently developed the following fourth order method:
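
$$
\begin{aligned}
y^{(k)} &= x^{(k)} - \tfrac{2}{3} F'(x^{(k)})^{-1} F(x^{(k)}),\\
x^{(k+1)} &= x^{(k)} - \tfrac{1}{2}\left[-I + \tfrac{9}{4} F'(y^{(k)})^{-1} F'(x^{(k)}) + \tfrac{3}{4} F'(x^{(k)})^{-1} F'(y^{(k)})\right] F'(x^{(k)})^{-1} F(x^{(k)}).
\end{aligned} \tag{4}
$$
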
Cordero et al. [6] presented a sixth order method, which is given by

Recently, a third order method for solving a single nonlinear equation was improved to fourth order in [7]. In the current paper, following [7], we propose a multivariate version with fourth order convergence. The rest of this paper is organized as follows. In Section 2, we present a new (optimal) fourth order algorithm that uses only three function evaluations per iteration, together with a multi-step version of order 2r + 4, where r ≥ 1 is an integer, for solving systems of nonlinear equations. In Section 3, we study the convergence of the new methods using point of attraction theory. Section 4 presents numerical examples and comparisons with some existing methods; there we also study an application, the 1-D Bratu problem [8]. A brief conclusion is given in Section 5.
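
For comparison, the fourth order method of Sharma et al. [5] is sketched below, assuming the commonly cited form of Equation (4): the substep $y = x - \frac{2}{3}F'(x)^{-1}F(x)$ followed by the weight $\frac{1}{2}[-I + \frac{9}{4}\tau^{-1} + \frac{3}{4}\tau]$ with $\tau = F'(x)^{-1}F'(y)$. Reference [5] remains the authoritative statement. The 2×2 test system is again our own illustrative choice, and the products $\tau u$ and $\tau^{-1}u$ are computed with linear solves rather than explicit inverses.

```python
import numpy as np

def F(x):
    # Illustrative system (our choice): x1^2 + x2^2 = 1 and x1 = x2
    return np.array([x[0]**2 + x[1]**2 - 1.0, x[0] - x[1]])

def J(x):
    # Jacobian F'(x) of the illustrative system
    return np.array([[2.0 * x[0], 2.0 * x[1]],
                     [1.0, -1.0]])

def weighted_newton_step(F, J, x):
    """One step of the assumed form of the fourth order weighted-Newton method."""
    Jx = J(x)
    u = np.linalg.solve(Jx, F(x))            # u = F'(x)^{-1} F(x)
    y = x - (2.0 / 3.0) * u                  # Jarratt-type substep
    Jy = J(y)
    tau_u = np.linalg.solve(Jx, Jy @ u)      # tau u, with tau = F'(x)^{-1} F'(y)
    tau_inv_u = np.linalg.solve(Jy, Jx @ u)  # tau^{-1} u = F'(y)^{-1} F'(x) u
    return x - 0.5 * (-u + 2.25 * tau_inv_u + 0.75 * tau_u)

x = np.array([1.0, 0.5])
for k in range(1, 11):
    x_new = weighted_newton_step(F, J, x)
    converged = np.linalg.norm(x_new - x) < 1e-12
    x = x_new
    if converged:
        break
print(x, k)
```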

2. Development of the Methods

Babajee [7] recently improved the third order Harmonic Mean Newton’s method to obtain a fourth order method for a single equation:

This method is one member of the family of higher order multi-point iterative methods based on the power mean, proposed by Babajee et al. [9] for solving a single nonlinear equation.
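
How a weight can lift a harmonic-mean step to fourth order can be made concrete in the scalar case. The derivation below is our own illustration under stated assumptions: it uses a Jarratt-type substep with factor 2/3 and a quadratic weight $W$, which are not necessarily the choices made in [7]. For two-point schemes $x_{n+1} = x_n - G(t_n)u_n$ of this kind, $G(1) = 1$, $G'(1) = -\frac{3}{4}$, $G''(1) = \frac{9}{4}$ are the standard fourth order conditions (Jarratt’s method satisfies them), and $c_q = f^{(q)}(\alpha)/(q!\,f'(\alpha))$.

$$
u_n = \frac{f(x_n)}{f'(x_n)},\qquad y_n = x_n - \tfrac{2}{3}u_n,\qquad t_n = \frac{f'(y_n)}{f'(x_n)},\qquad x_{n+1} = x_n - h(t_n)\,W(t_n)\,u_n,
$$
$$
h(t) = \tfrac{1}{2}\left(1 + t^{-1}\right) \;\Longrightarrow\; h(t_n)\,u_n = \frac{f(x_n)}{2}\left[\frac{1}{f'(x_n)} + \frac{1}{f'(y_n)}\right],\qquad h(1) = 1,\; h'(1) = -\tfrac{1}{2},\; h''(1) = 1,
$$
$$
G = hW:\quad W(1) = 1,\qquad W'(1) = -\tfrac{3}{4} - h'(1) = -\tfrac{1}{4},\qquad W''(1) = \tfrac{9}{4} - h''(1) - 2h'(1)W'(1) = 1,
$$
$$
W(t) = 1 - \tfrac{1}{4}(t - 1) + \tfrac{1}{2}(t - 1)^2 \;\Longrightarrow\; e_{n+1} = \left(\tfrac{79}{27}c_2^3 - c_2c_3 + \tfrac{1}{9}c_4\right)e_n^4 + O(e_n^5).
$$

Without the weight ($W \equiv 1$), this substep leaves the error term $\frac{1}{3}c_2e_n^2$, which is why the weight needs a linear term in $(t - 1)$.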

We next extend this idea to the multivariate case. Building on the method in Equation (2), we propose the following improved fourth order Harmonic Mean Newton’s method for solving systems of nonlinear equations:

where I is the identity matrix. We further improve this method, at the cost of additional function evaluations, to obtain a multi-step version, called the HM method, given by

Note that this multi-step version has order 2r + 4, where r ≥ 1 is an integer. The case r = 0 recovers the fourth order method in Equation (6).
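
A scalar weighted harmonic-mean step can be checked numerically. The sketch below is an illustration only: the substep $y = x - \frac{2}{3}f(x)/f'(x)$ and the weight $W(t) = 1 - \frac{1}{4}(t-1) + \frac{1}{2}(t-1)^2$ with $t = f'(y)/f'(x)$ are our assumptions, chosen to satisfy the standard scalar fourth order conditions, and are not necessarily the choices made in Equation (6). It applies the step to the hypothetical test function $f(x) = x^3 - 2$ in 500-digit decimal arithmetic and forms $e_{k+1}/e_k^4$. For a fourth order method this ratio settles to a constant; a Taylor expansion with $c_2 = 1/\alpha$, $c_3 = 1/(3\alpha^2)$, $c_4 = 0$ predicts $35/27 \approx 1.296$ for this f.

```python
from decimal import Decimal, getcontext

getcontext().prec = 500  # enough digits to observe four successive error reductions

def f(x):
    return x**3 - 2      # hypothetical test function with root 2^(1/3)

def df(x):
    return 3 * x**2

def weighted_hm_step(x):
    """Scalar weighted harmonic-mean Newton step (illustrative weight, see text)."""
    u = f(x) / df(x)                        # Newton correction f(x)/f'(x)
    y = x - Decimal(2) / 3 * u              # assumed Jarratt-type substep
    t = df(y) / df(x)                       # scalar analogue of F'(x)^{-1} F'(y)
    hm = (1 + 1 / t) / 2                    # hm * u = f(x)/2 * (1/f'(x) + 1/f'(y))
    w = 1 - (t - 1) / 4 + (t - 1) ** 2 / 2  # hypothetical weight W(t)
    return x - w * hm * u

alpha = Decimal(2) ** (Decimal(1) / 3)      # reference root, cube root of 2
xs = [Decimal("1.3")]
for _ in range(4):
    xs.append(weighted_hm_step(xs[-1]))

errors = [abs(x - alpha) for x in xs]
ratios = [errors[k + 1] / errors[k] ** 4 for k in range(len(errors) - 1)]
for k, r in enumerate(ratios):
    print(k, f"{float(r):.6f}")
```

High precision is needed because the error falls from about $10^{-2}$ to far below double precision within three steps.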

3. Convergence Analysis

The main theorem is proved by means of the n-dimensional Taylor expansion of the functions involved. In what follows, we use the notation and results of [10]:

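Let $F: D \subseteq \mathbb{R}^n \to \mathbb{R}^n$ be sufficiently Fréchet differentiable in D. Suppose the qth derivative of F at $u \in \mathbb{R}^n$, $q \geq 1$, is the q-linear function $F^{(q)}(u): \mathbb{R}^n \times \cdots \times \mathbb{R}^n \to \mathbb{R}^n$ such that $F^{(q)}(u)(v_1, \ldots, v_q) \in \mathbb{R}^n$. Given $\alpha + h \in \mathbb{R}^n$, which lies in a neighborhood of a solution α of the nonlinear system $F(x) = 0$, Taylor’s expansion can be applied (assuming the Jacobian matrix $F'(\alpha)$ is nonsingular) to obtain

$$F(\alpha + h) = F'(\alpha)\left[h + \sum_{q=2}^{p-1} C_q h^q\right] + O(h^p), \tag{8}$$

where $C_q = \frac{1}{q!}[F'(\alpha)]^{-1} F^{(q)}(\alpha)$, $q \geq 2$. Note that $C_q h^q \in \mathbb{R}^n$, since $F^{(q)}(\alpha) \in \mathcal{L}(\mathbb{R}^n \times \cdots \times \mathbb{R}^n, \mathbb{R}^n)$ and $[F'(\alpha)]^{-1} \in \mathcal{L}(\mathbb{R}^n)$. We can also expand $F'(\alpha + h)$ in a Taylor series:

$$F'(\alpha + h) = F'(\alpha)\left[I + \sum_{q=2}^{p-1} q\,C_q h^{q-1}\right] + O(h^{p-1}), \tag{9}$$

where I is the identity matrix. Note also that $q\,C_q h^{q-1} \in \mathcal{L}(\mathbb{R}^n)$. Denote $e^{(k)} = x^{(k)} - \alpha$. The equation for the error at the (k+1)th iteration, $e^{(k+1)} = L\,(e^{(k)})^p + O((e^{(k)})^{p+1})$, where L is a p-linear function $L \in \mathcal{L}(\mathbb{R}^n \times \cdots \times \mathbb{R}^n, \mathbb{R}^n)$, is called the error equation, and p is the order of convergence. Observe that $(e^{(k)})^p$ is $(e^{(k)}, e^{(k)}, \ldots, e^{(k)})$.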
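
As a worked special case (standard, and not specific to this paper), for n = 1 the operators $C_q$ reduce to numbers $c_q$ and Equations (8) and (9), written with $e = x - \alpha$, become the familiar scalar expansions. Substituting them into Newton’s method gives its error equation, with $L = c_2$ and $p = 2$:

$$
c_q = \frac{f^{(q)}(\alpha)}{q!\,f'(\alpha)},\qquad
f(x) = f'(\alpha)\left[e + c_2e^2 + c_3e^3 + \cdots\right],\qquad
f'(x) = f'(\alpha)\left[1 + 2c_2e + 3c_3e^2 + \cdots\right],
$$
$$
\frac{f(x)}{f'(x)} = e - c_2e^2 + O(e^3)
\;\Longrightarrow\;
e^{(k+1)} = e^{(k)} - \frac{f(x^{(k)})}{f'(x^{(k)})} = c_2\,(e^{(k)})^2 + O\big((e^{(k)})^3\big).
$$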

To prove the convergence order of Equation (6), we recall some important definitions and results from the theory of points of attraction.

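Definition (Point of Attraction). [11] Let $G: D \subseteq \mathbb{R}^n \to \mathbb{R}^n$. Then α is a point of attraction of the iteration

$$x^{(k+1)} = G(x^{(k)}), \quad k = 0, 1, 2, \ldots, \tag{10}$$

if there is an open neighborhood S of α, defined by

$$S(\alpha) = \{x \in \mathbb{R}^n : \|x - \alpha\| < \delta\}, \quad \delta > 0,$$

such that $S \subset D$ and, for any $x^{(0)} \in S$, the iterates $\{x^{(k)}\}$ defined by Equation (10) all lie in D and converge to α.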

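Theorem 1 (Ostrowski Theorem). [11] Assume that $G: D \subseteq \mathbb{R}^n \to \mathbb{R}^n$ has a fixed point $\alpha \in \operatorname{int}(D)$ and is Fréchet differentiable at α. If

$$\rho(G'(\alpha)) = \sigma < 1,$$

where ρ denotes the spectral radius, then α is a point of attraction for $x^{(k+1)} = G(x^{(k)})$.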
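
The hypothesis of Theorem 1 can be checked numerically for a concrete iteration map. The sketch below is our own illustration: it approximates $G'(\alpha)$ for Newton’s map $G(x) = x - F'(x)^{-1}F(x)$ on a small hypothetical test system by central differences, then computes the spectral radius. For Newton’s method $G'(\alpha) = 0$ at a simple root, so the estimate should be close to zero, well below the bound of 1 that Theorem 1 requires.

```python
import numpy as np

def F(x):
    # Illustrative system (our choice): x1^2 + x2^2 = 1 and x1 = x2
    return np.array([x[0]**2 + x[1]**2 - 1.0, x[0] - x[1]])

def J(x):
    return np.array([[2.0 * x[0], 2.0 * x[1]],
                     [1.0, -1.0]])

def G(x):
    # Newton iteration map G(x) = x - F'(x)^{-1} F(x)
    return x - np.linalg.solve(J(x), F(x))

def jacobian_fd(G, x, h=1e-5):
    # Central-difference approximation of G'(x), built column by column
    n = x.size
    D = np.zeros((n, n))
    for j in range(n):
        e = np.zeros(n)
        e[j] = h
        D[:, j] = (G(x + e) - G(x - e)) / (2 * h)
    return D

alpha = np.full(2, np.sqrt(0.5))  # a root of F, hence a fixed point of G
rho = float(np.max(np.abs(np.linalg.eigvals(jacobian_fd(G, alpha)))))
print(f"spectral radius of G'(alpha) ~ {rho:.1e}")
```

Central differences are used because the one-sided quotient would carry an $O(h)$ bias of the same size as the quantity being measured.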

We now prove a general result showing that α is a point of attraction of a general iteration function G.

Theorem 2. Let $F: D \subseteq \mathbb{R}^n \to \mathbb{R}^n$ be sufficiently Fréchet differentiable at each point of an open convex neighborhood D of $\alpha \in \mathbb{R}^n$, which is a solution of the system $F(x) = 0$. Suppose that P, Q and R are sufficiently Fréchet differentiable functionals (depending on F) at each point in D with , and . Then, there exists a ball

on which the mapping

is well-defined; moreover, G is Fréchet differentiable at α, thus

Proof: Clearly, .

Since is differentiable in α and , we can assume that δ was chosen sufficiently small such that

for all with depending on δ and in case .

Since P, Q and R are continuously differentiable functions, their derivatives $P'$, $Q'$ and $R'$ are bounded:

Now, by the mean value theorem for integrals,

and

so that

Combining, we have

which shows that is differentiable in α since δ and ϵ are arbitrary and , , and are constants. Thus .

Theorem 3. Let $F: D \subseteq \mathbb{R}^n \to \mathbb{R}^n$ be sufficiently Fréchet differentiable at each point of an open convex neighborhood D of $\alpha \in \mathbb{R}^n$ that is a solution of the system $F(x) = 0$. Suppose that $x \in S = S(\alpha, \delta)$, that $F'(x)$ is continuous and nonsingular at α, and that $x^{(0)} \in S$ is close enough to α. Then α is a point of attraction of the sequence $\{x^{(k)}\}$ obtained using the iterative expression Equation (6). Furthermore, the sequence converges to α with order 4, and the error equation is

Proof: We first show that α is a point of attraction using Theorem 2. In this case,

Now, since , we have

so that $G'(\alpha) = 0$, hence $\rho(G'(\alpha)) = 0 < 1$, and by Ostrowski’s theorem, α is a point of attraction of Equation (6).

We next establish the fourth order convergence of this method. From Equation (8) and Equation (9) we obtain

and

where .

We have

where , and .

Then

and the expression for is

The Taylor expansion of the Jacobian matrix is

Therefore,

and then

Also,

where

Finally, substituting Equation (13) and Equation (18) into Equation (6) and simplifying, we obtain the error equation

Thus from Equation (19), it can be concluded that the order of convergence of the method is four. ☐

For the multi-step version in Equation (7), we state and prove the following theorem.

Theorem 4. Let $F: D \subseteq \mathbb{R}^n \to \mathbb{R}^n$ be sufficiently Fréchet differentiable at each point of an open convex neighborhood D of $\alpha \in \mathbb{R}^n$ that is a solution of the system $F(x) = 0$. Suppose that $x \in S = S(\alpha, \delta)$, that $F'(x)$ is continuous and nonsingular at α, and that $x^{(0)} \in S$ is close enough to α. Then α is a point of attraction of the sequence $\{x^{(k)}\}$ obtained using the iterative expression Equation (7). Furthermore, the sequence converges to α with order 2r + 4, where r ≥ 1 is an integer.

Proof: In this case,

We can show by induction that

so that

So $G'(\alpha) = 0$, hence $\rho(G'(\alpha)) = 0 < 1$, and by Ostrowski’s theorem, α is a point of attraction of Equation (7). A Taylor expansion of about α yields

Also, let

4. Numerical Examples

In this section, we compare the performance of the proposed methods, Equation (6) and Equation (7), with the methods given in Equations (1)–(5). The numerical experiments were carried out in MATLAB 7.6 for the test problems given below. The approximate solutions are computed correct to 1000 digits using variable precision arithmetic. We use the following stopping criterion for the iterations:

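We use the approximated computational order of convergence (ACOC) given by (see [12])

$$\rho_k \approx \frac{\ln\left(\|x^{(k+1)} - x^{(k)}\| / \|x^{(k)} - x^{(k-1)}\|\right)}{\ln\left(\|x^{(k)} - x^{(k-1)}\| / \|x^{(k-1)} - x^{(k-2)}\|\right)}.$$

Let M be the number of iterations required to reach the minimum residual $\|F(x^{(M)})\|$.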
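
The ACOC can be computed directly from the stored iterates. The sketch below mirrors the paper’s use of high precision with Python’s `decimal` module (200 digits, our choice, in place of MATLAB’s variable precision arithmetic) and applies Newton’s method to a hypothetical 2×2 test system, solving each 2×2 linear system by Cramer’s rule. For Newton’s method the later ACOC values should approach 2.

```python
from decimal import Decimal, getcontext

getcontext().prec = 200  # high precision so several iterations stay above rounding level

def F(x1, x2):
    # Illustrative system (our choice): x1^2 + x2^2 = 1 and x1 = x2
    return x1 * x1 + x2 * x2 - 1, x1 - x2

def newton_step(x1, x2):
    f1, f2 = F(x1, x2)
    a, b, c, d = 2 * x1, 2 * x2, Decimal(1), Decimal(-1)  # Jacobian [[a, b], [c, d]]
    det = a * d - b * c
    s1 = (f1 * d - b * f2) / det                          # Cramer's rule for J s = F
    s2 = (a * f2 - c * f1) / det
    return x1 - s1, x2 - s2

def norm(v):
    return (v[0] * v[0] + v[1] * v[1]).sqrt()

def acoc(iterates):
    """rho_k = ln(||x_{k+1}-x_k|| / ||x_k-x_{k-1}||) / ln(||x_k-x_{k-1}|| / ||x_{k-1}-x_{k-2}||)."""
    d = [norm((p[0] - q[0], p[1] - q[1])) for q, p in zip(iterates, iterates[1:])]
    return [(d[k] / d[k - 1]).ln() / (d[k - 1] / d[k - 2]).ln() for k in range(2, len(d))]

xs = [(Decimal(1), Decimal("0.5"))]
for _ in range(6):
    xs.append(newton_step(*xs[-1]))
rhos = acoc(xs)
print([round(float(r), 3) for r in rhos])
```

Because the ACOC uses only differences of consecutive iterates, it does not require the exact solution α.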

4.1. Test Problems

Test Problem 1 (TP1) We consider the following system given in [13]:

, where and

The Jacobian matrix is given by The starting vector is and the exact solution is .

Test Problem 2 (TP2) We consider the following system given in [3]:

The solution is . We choose the starting vector . The Jacobian matrix has 7 non-zero elements and it is given by

Test Problem 3 (TP3) We consider the following system given in [3]:

We solve this system using the initial approximation . The solution of this system is The Jacobian matrix that has 12 non-zero elements is given by

Table 1.

Comparison of different methods for system of nonlinear equations.

| Methods | TP1: M | TP1: Residual | TP1: ACOC | TP2: M | TP2: Residual | TP2: ACOC | TP3: M | TP3: Residual | TP3: ACOC |
|---|---|---|---|---|---|---|---|---|---|
| Equation (1) | 7 | 4.6e−114 | 2.00 | 9 | 1.7e−107 | 2.00 | 8 | 3.9e−145 | 2.02 |
| Equation (2) | 5 | 1.4e−174 | 2.99 | 6 | 4.5e−139 | 3.00 | 5 | 2.9e−291 | 4.10 |
| Equation (3) | 4 | 4.6e−114 | 4.02 | 5 | 1.7e−107 | 4.00 | 5 | 2.9e−291 | 4.11 |
| Equation (4) | 4 | 7.1e−108 | 3.99 | 6 | 0 | 3.99 | 5 | 8.8e−257 | 4.03 |
| Equation (6) | 4 | 1.4e−105 | 3.99 | 6 | 0 | 4.00 | 5 | 5.5e−247 | 4.12 |
| Equation (5) | 4 | 0 | 5.91 | 5 | 0 | 5.98 | 4 | 4.6e−199 | 6.12 |
| Equation (7), order 6 | 4 | 0 | 5.90 | 5 | 0 | 5.98 | 4 | 6.1e−194 | 6.13 |
| Equation (7), order 8 | 4 | 0 | 7.90 | 4 | 1.9e−133 | 7.99 | 4 | 0 | 8.64 |
| Equation (7), order 10 | 3 | 1.1e−154 | 9.90 | 4 | 2.2e−248 | 9.99 | 4 | 0 | 10.76 |

Table 1 shows the results for the test problems (TP1, TP2, TP3), from which we conclude that the highest order member of Equation (7) (last row of Table 1) is the most efficient method, with the fewest iterations and the smallest residual errors.

Table 2.

Comparison of CPU time (s).

| Methods | TP1 | TP2 | TP3 |
|---|---|---|---|
|  | 1.161405 | 1.734549 | 1.758380 |
|  | 0.950678 | 2.445676 | 1.969176 |
|  | 0.808851 | 1.569021 | 1.452089 |
|  | 1.052950 | 2.649530 | 2.571427 |
|  | 1.001148 | 2.170088 | 2.456138 |
|  | 1.132364 | 2.117847 | 2.405149 |
|  | 0.944062 | 2.137319 | 2.528262 |
|  | 0.986300 | 2.328460 | 2.071641 |
|  | 1.029707 | 2.482167 | 2.213744 |

Table 2 gives the CPU times (in seconds) of the proposed methods and some existing methods.

Next, we use the HM family of methods in Equation (7) to find, for each test problem, the least value of r, and thus of the order p, that achieves the prescribed number of iterations and residual. To achieve this, TP1 requires (), TP2 requires () and TP3 requires (). We also observe that the required order p depends on the test problem and its starting vector.

4.2. 1-D Bratu Problem

The 1-D Bratu problem [8] is given by

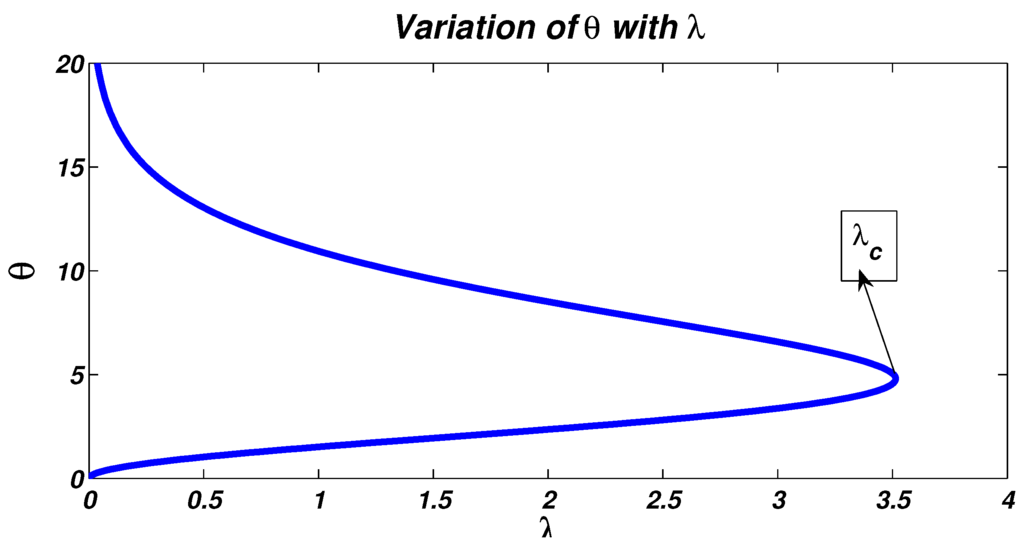

with the boundary conditions $u(0) = u(1) = 0$. The 1-D planar Bratu problem has two known, bifurcated, exact solutions for $\lambda < \lambda_c$, one solution for $\lambda = \lambda_c$ and no solution for $\lambda > \lambda_c$.

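The critical value $\lambda_c$ is simply $8(\eta^2 - 1)$, where η is the fixed point of the hyperbolic cotangent function $\coth(x)$. The exact solution to Equation (26) is known and can be written as

$$u(x) = -2\ln\left[\frac{\cosh\left(\left(x - \tfrac{1}{2}\right)\tfrac{\theta}{2}\right)}{\cosh\left(\tfrac{\theta}{4}\right)}\right], \tag{27}$$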
where θ is a constant to be determined. This function satisfies the boundary conditions and, for a suitable θ, solves the differential Equation (26). Using a procedure similar to that in [14], we now show how to obtain the critical value of λ. We substitute Equation (27) into Equation (26), simplify, and collocate at $x = 1/2$, the midpoint of the interval. Another point could be chosen, but low order approximations are likely to be better if the collocation points are distributed somewhat evenly throughout the region. We then obtain

$$\theta = \sqrt{2\lambda}\,\cosh\left(\frac{\theta}{4}\right). \tag{28}$$

At the critical value, λ is stationary as a function of θ ($d\lambda/d\theta = 0$), so differentiating Equation (28) with respect to θ gives

$$1 = \frac{\sqrt{2\lambda}}{4}\,\sinh\left(\frac{\theta}{4}\right). \tag{29}$$

By eliminating λ from Equation (28) and Equation (29), the value $\theta_c$ corresponding to the critical $\lambda_c$ satisfies

$$\theta_c = 4\coth\left(\frac{\theta_c}{4}\right), \tag{30}$$

which can be solved using an iterative method. We then obtain $\lambda_c$ from Equation (28). Figure 1 illustrates this critical value of λ.
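
The critical values can be computed in a few lines. The sketch below (our own illustration) applies Newton’s method to $g(\eta) = \eta - \coth\eta$, whose root is the fixed point η of the hyperbolic cotangent, then sets $\theta_c = 4\eta$ and recovers $\lambda_c$ from $\theta^2 = 2\lambda\cosh^2(\theta/4)$. The starting value η = 1 and the tolerance are assumptions. The closed form $\lambda_c = 8(\eta^2 - 1)$ serves as a cross-check.

```python
import math

def critical_bratu(eta=1.0, tol=1e-15, max_iter=50):
    """Solve eta = coth(eta) by Newton's method; return (theta_c, lambda_c)."""
    for _ in range(max_iter):
        g = eta - 1.0 / math.tanh(eta)        # g(eta) = eta - coth(eta)
        dg = 1.0 + 1.0 / math.sinh(eta) ** 2  # g'(eta) = 1 + csch^2(eta)
        step = g / dg
        eta -= step
        if abs(step) < tol:
            break
    theta_c = 4.0 * eta                       # theta_c = 4 coth(theta_c / 4)
    # lambda from theta^2 = 2 lambda cosh^2(theta / 4)
    lambda_c = theta_c**2 / (2.0 * math.cosh(theta_c / 4.0) ** 2)
    return theta_c, lambda_c

theta_c, lambda_c = critical_bratu()
print(theta_c, lambda_c, 8 * ((theta_c / 4) ** 2 - 1))
```

The two expressions for $\lambda_c$ agree because $\tanh\eta = 1/\eta$ at the fixed point, so $8\eta^2/\cosh^2\eta = 8\eta^2(1 - \tanh^2\eta) = 8(\eta^2 - 1)$.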

Figure 1.

Variation of θ for different values of λ.

The finite dimensional problem obtained with the standard finite difference scheme is

$$\frac{U_{j+1} - 2U_j + U_{j-1}}{h^2} + \lambda \exp(U_j) = 0, \quad j = 1, \ldots, N - 1,$$

with discrete boundary conditions $U_0 = U_N = 0$ and step size $h = 1/N$. There are $N - 1$ unknowns ($n = N - 1$). The Jacobian is a sparse tridiagonal matrix with at most three nonzeros per row. It is known that the finite difference scheme converges to the lower solution of the 1-D Bratu problem when using the starting vector $U^{(0)} = (0, 0, \ldots, 0)^T$.
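
The discretized Bratu system can be solved with Newton’s method as a minimal sketch. The grid size N = 100 and λ = 1 are our illustrative choices, not values from this paper. For brevity, the sketch stores the tridiagonal Jacobian as a dense NumPy array; a banded or sparse solver would be preferable for large N.

```python
import numpy as np

def bratu_fd(lam, N=100, tol=1e-12, max_iter=50):
    """Newton's method for the finite difference Bratu system with U_0 = U_N = 0."""
    h = 1.0 / N
    U = np.zeros(N - 1)  # interior unknowns U_1..U_{N-1}, zero starting vector
    # Second-difference matrix: -2 on the diagonal, 1 on the off-diagonals, scaled by 1/h^2
    A = (np.diag(-2.0 * np.ones(N - 1)) + np.diag(np.ones(N - 2), 1)
         + np.diag(np.ones(N - 2), -1)) / h**2
    for k in range(1, max_iter + 1):
        Fu = A @ U + lam * np.exp(U)        # residual of the discrete equations
        Jac = A + np.diag(lam * np.exp(U))  # tridiagonal Jacobian
        step = np.linalg.solve(Jac, Fu)
        U = U - step
        if np.linalg.norm(step, np.inf) < tol:
            return U, k
    return U, max_iter

U, iters = bratu_fd(1.0)
print(iters, U[49])  # U[49] approximates u(1/2) on the N = 100 grid
```

Starting from the zero vector, the iteration settles on the lower solution branch, consistent with the remark above.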

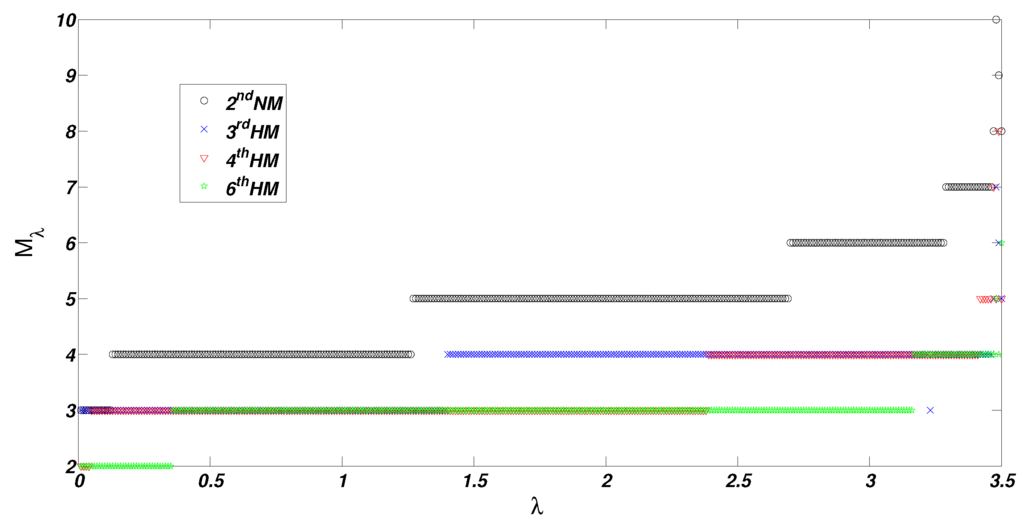

We use () and test 350 values of λ in the interval (0, 3.5] (spacing 0.01). For each λ, let $M_\lambda$ be the minimum number of iterations for which , where the approximation is calculated correct to 14 decimal places, and let $\bar{M}$ be the mean iteration number over the 350 values of λ.

Table 3.

Comparison of number of λ’s in different methods for 1-D Bratu problem.

| Method | M = 2 | M = 3 | M = 4 | M = 5 | M > 5 | Mean of M |
|---|---|---|---|---|---|---|
|  | 0 | 12 | 114 | 143 | 81 | 4.92 |
|  | 0 | 140 | 206 | 2 | 2 | 3.62 |
|  | 4 | 237 | 100 | 8 | 1 | 3.33 |
|  | 4 | 234 | 103 | 7 | 2 | 3.35 |
|  | 3 | 213 | 124 | 8 | 2 | 3.42 |
|  | 35 | 281 | 32 | 1 | 1 | 3.00 |

Figure 2 and Table 3 give the results for the 1-D Bratu problem, where M is the number of iterations required for convergence. For all six methods in Table 3, the number of iterations required for convergence increases as λ approaches its critical value. However, the mean iteration number decreases as the order of the method increases. The method in the last row of Table 3 is the most efficient of the six, because it has the lowest mean iteration number and the largest number of λ values converging in 2 iterations.

Figure 2.

Variation of the number of iterations with λ for four of the methods.

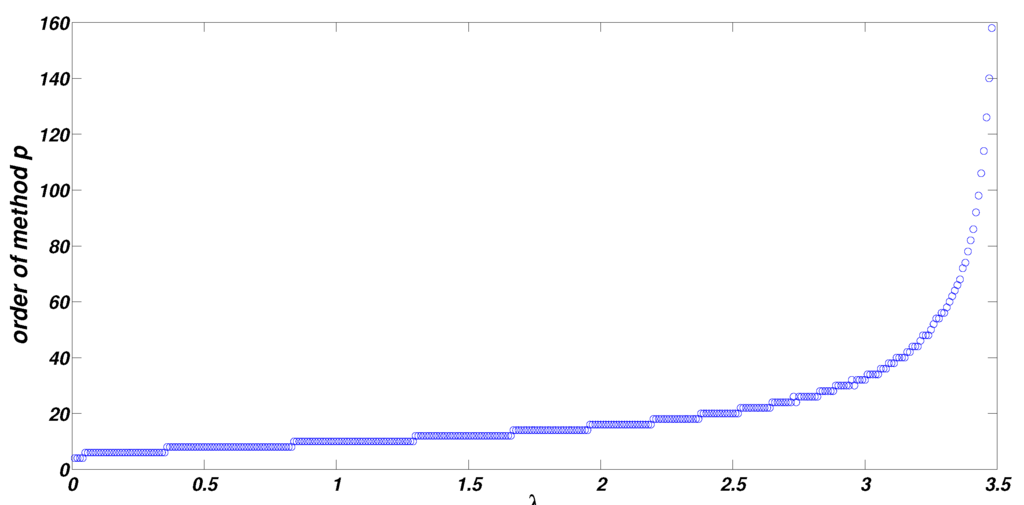

For each λ, we find the minimum order of the HM family for which convergence is reached in 2 iterations; the results are shown in Figure 3. As λ increases, the order p required for convergence in 2 iterations also increases, over successively narrower intervals of λ, and the required order becomes very high as λ tends to its critical value.

Figure 3.

Order of the HM family for each λ.

5. Conclusion

In this work, we have proposed a fourth order method and its higher order multi-step version, both using weight functions, for solving systems of nonlinear equations. The proposed schemes do not require the evaluation of second or higher order Fréchet derivatives to reach fourth or higher order convergence. We tested the proposed schemes on a few examples and compared them with some known schemes; the results illustrate the superiority of the new schemes. Finally, the proposed methods were applied to a practical problem, the 1-D Bratu problem, with interesting and encouraging results. Hence, the proposed methods can be considered competitive with some of the existing methods.

Acknowledgments

The authors are thankful to the anonymous reviewers for their valuable comments.

Author Contributions

The contributions of all of the authors have been similar. All of them have worked together to develop the present manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- [1] Ostrowski, A.M. Solution of Equations and Systems of Equations; Academic Press: New York, NY, USA, 1960.

- [2] Homeier, H.H.H. On Newton-type methods with cubic convergence. J. Comput. Appl. Math. 2005, 176, 425–432.

- [3] Babajee, D.K.R. Analysis of Higher Order Variants of Newton’s Method and Their Applications to Differential and Integral Equations and in Ocean Acidification. Ph.D. Thesis, University of Mauritius, Moka, Mauritius, October 2010.

- [4] Noor, M.A.; Waseem, M. Some iterative methods for solving a system of nonlinear equations. Comput. Math. Appl. 2009, 57, 101–106.

- [5] Sharma, J.R.; Guha, R.K.; Sharma, R. An efficient fourth order weighted-Newton method for systems of nonlinear equations. Numer. Algor. 2013, 62, 307–323.

- [6] Cordero, A.; Hueso, J.L.; Martinez, E.; Torregrosa, J.R. Increasing the convergence order of an iterative method for nonlinear systems. Appl. Math. Lett. 2012, 25, 2369–2374.

- [7] Babajee, D.K.R. On a two-parameter Chebyshev-Halley-like family of optimal two-point fourth order methods free from second derivatives. Afr. Mat. 2015, 26, 689–697.

- [8] Buckmire, R. Investigations of nonstandard Mickens-type finite-difference schemes for singular boundary value problems in cylindrical or spherical coordinates. Num. Meth. P. Diff. Eqns. 2003, 19, 380–398.

- [9] Babajee, D.K.R.; Kalyanasundaram, M.; Jayakumar, J. A family of higher order multi-point iterative methods based on power mean for solving nonlinear equations. Afr. Mat. 2015.

- [10] Cordero, A.; Hueso, J.L.; Martinez, E.; Torregrosa, J.R. A modified Newton-Jarratt’s composition. Numer. Algor. 2010, 55, 87–99.

- [11] Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970.

- [12] Cordero, A.; Torregrosa, J.R. Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comp. 2007, 190, 686–698.

- [13] Frontini, M.; Sormani, E. Third-order methods from Quadrature Formulae for solving systems of nonlinear equations. Appl. Math. Comp. 2004, 140, 771–782.

- [14] Odejide, S.A.; Aregbesola, Y.A.S. A note on two dimensional Bratu problem. Kragujevac J. Math. 2006, 29, 49–56.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).