Abstract

At the center of the macula, fovea plays an important role in computer-aided diagnosis. To locate the fovea, this paper proposes a vessel origin (VO)-based parabolic model, which takes the VO as the vertex of the parabola-like vasculature. Image processing steps are applied to accurately locate the fovea on retinal images. Firstly, morphological gradient and the circular Hough transform are used to find the optic disc. The structure of the vessel is then segmented with the line detector. Based on the characteristics of the VO, four features of VO are extracted, following the Bayesian classification procedure. Once the VO is identified, the VO-based parabolic model will locate the fovea. To find the fittest parabola and the symmetry axis of the retinal vessel, an Shift and Rotation (SR)-Hough transform that combines the Hough transform with the shift and rotation of coordinates is presented. Two public databases of retinal images, DRIVE and STARE, are used to evaluate the proposed method. The experiment results show that the average Euclidean distances between the located fovea and the fovea marked by experts in two databases are 9.8 pixels and 30.7 pixels, respectively. The results are stronger than other methods and thus provide a better macular detection for further disease discovery.

1. Introduction

The fovea is a small depression (about 1 mm in diameter) located in the center of the macula area of the retina [1]. Fovea detection is necessary because the grade of diabetic retinopathy depends on their distance to the fovea. Early detection and diagnosis of retinopathies decrease the risk of retinal lesion and effectively control the illness. However, due to problems of retinal image quality, such as poor contrast and physicians’ subjective observation, the diagnoses can be unstable and uncertain. Computer analysis systems for retinal images can offer an efficient and stable assistance for diagnosis.

The performance of the computer analysis system is generally influenced by retinal anatomy detection. The fovea is difficult to observe, but it usually is where the diabetic retinopathy occurs. The consequence of this obvious visual structure in retinal images is that fovea localization becomes very difficult. Although a great deal of research has studied this issue, there are still many restrictions to their methods.

The fovea region is always darker than the surrounding retinal tissue. Previous studies searched for it directly on retinal images. Gagnon et al. [2] located dark pigment regions to identify the potential fovea. Zhang and Karray [3] defined the fovea as the area with the lowest intensity. The foveae were identified by using matching correlation together with characteristics typical of a fovea, e.g., the darkest area near the optic disc, by Sinthanayothin et al. [4]. However, these methods face problems when the region is covered by lesions or hemorrhages. Chin et al. [5] located the fovea as the region of minimum vessel density within a search region which is defined by the anatomical priors. This method does not require parameter adjustments for different image resolution, but it relies on the quality of vessel segmentation.

In the meantime, some studies utilized the location of the optic disc (OD) and vasculature features to detect the fovea. Li and Chutatape [6] depicted the major routes of the retinal vessels with 30 extracted landmark points, using them to fit a parabola for the fovea localization. Foracchia et al. [7] proposed a method for fovea localization based on the geometrical directional pattern of the retinal vascular system, which can be geometrically modeled as two parabolas with the same vertex. Tobin et al. [8] also applied this model to find the horizontal raphe of the retina, which is a line passing approximately through the OD and the fovea. Fleming et al. [9] described a method whereby an elliptic form of the major retinal blood vessels is used to obtain approximate locations, which are refined by the circular edge of the optic disc and the local darkness at the fovea. Niemeijer et al. [10] used the local vessel geometry alongside the intensity information to detect the fovea.

Geometrical models are usually applied for locating fovea. However, most studies based on a parabolic model often took the center of the OD as the vertex of the parabola-like vasculature. According to observation, the ideal vertex is the entry point of the main blood vessels (i.e., vessel origin (VO)), which is beside the OD center. Therefore, a VO-based parabolic model for fovea localization is proposed in this paper. This model takes the VO as the vertex of the parabola-like vasculature and finds the fittest parabola with the Hough transform. The fovea is located on the symmetry axis of the parabola with a certain distance from the VO.

Some studies concerning VO detection have been presented. In order to detect vessels within OD, Goatman, et al. [11] roughly estimated VO by selecting the darkest 20% of pixels of the OD and taking the mean of these pixels as the VO. This paper modifies the algorithm presented in [12] and presents a novel algorithm of VO detection. This can be used to improve the detection of blood vessels in OD and the localization of fovea.

In order to accurately extract VO from retinal images, four features based on the characteristic of VO are extracted. Then Bayesian classifier is employed to locate the VO. Meanwhile, an Shift and Rotation (SR)-Hough transform, which combines Hough transform with the shift and rotation of coordinates, is presented to search for the fittest parabola. The fovea region is eventually located with the fittest parabola which is gained by SR-Hough transform.

2. Materials and Methods

A VO-based parabolic model for fovea localization is presented in this paper. To evaluate the performance of the proposed scheme, two available retinal databases, DRIVE [13] and STARE [14], are used. The DRIVE database contains 40 images, with 565 × 584 pixels and 8 bits per color channel. Seven retinopathy images are in this database. The STARE database consists of 81 images, with 700 × 605ȵ pixels and 8 bits per color channel. There are 50 retinopathy images in this database, including hard exudates, soft exudates, hemorrhages and neo-vascularization images. This study excludes three images from the DRIVE database and 32 images from the STARE database, because the foveae in these images were not examined by experts. A total of 17 images from DRIVE database are chosen for training. The surplus 20 images in the DRIVE database and 25 images randomly selected from the STARE database are the tested images.

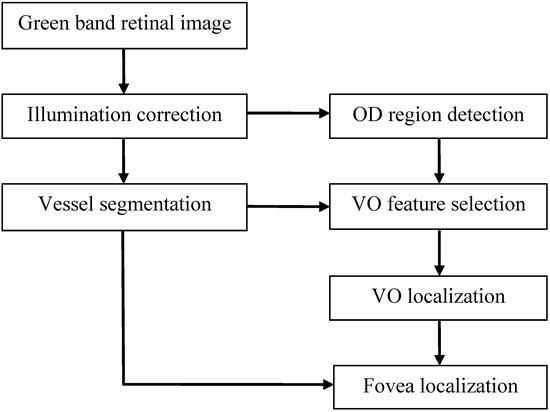

The illumination correction is performed on the green band of color retinal image, followed by the OD detection and blood vasculature segmentation. Then the VO features are extracted and used for VO localization. Once the location of the VO and the blood vasculature are acquired, the VO-based parabolic model works to find the most suitable parabola, which matches the vasculature. The flow chart of this method is shown in Figure 1 and the details are described in the following subsections.

2.1. Illumination Correction

Since non-uniform illumination has been a common phenomenon of images, illumination correction becomes an indispensable pre-processing of object localization. Some studies performed more thorough research of this issue [15,16], properly increasing the local contrast of the major structures in the image, which could ultimately raise the accuracy of structure identification. In color retinal images, a green channel highlights the blood vessels and the OD region. Hence, a gray level image is obtained by extracting the green channel from the color retinal image.

Figure 1.

The flow chart of the fovea localization.

Non-uniform illumination is always one of the main influences of feature selection, subsequently making OD not the brightest region and the vessels unclear. The intensity of vessels would even be higher than OD. In order to decrease uneven background intensity, an illumination-correction process described in [17] is employed. It is defined as:

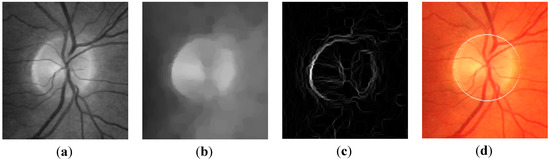

Where I' denotes the illumination-corrected image, and I is the original gray image. μN(x,y) and σN(x,y) are respectively the mean and standard deviation of pixels within a N × N window centered at the pixel (x,y). Here, N is set as 35. The image after illumination correction is shown in Figure 2a.

Figure 2.

Images in gray level morphology processing, (a) the illumination-corrected image I' (b) the vessel-removed image Ivr; (c) the image Ig after morphological gradient; (d) the circular border of the optic disc.

2.2. Optic Disc (OD) Localization

Because the blood vessels are darker in retinal images, the gray-scale morphological closing operator is utilized on the illumination-corrected image to delete vessels and leave the OD and background complete. The coverage of the structure element b of the closing operator cannot be too big, or the retinal image will lose its original feature. On the other hand, if it is too small, vessels cannot be completely removed. Here, b is defined as a circle with 11 pixels as diameter (the largest vessel width). The image after closing is the vessel-removed image, Ivr, as shown in Figure 2b, in which the vessels were removed.

Dilation operation expands objects in an image, while erosion operation makes it shrink. Their difference, referred to as the morphological gradient, emphasizes the boundaries of regions. The gradient image, Ig, can be obtained by applying morphological gradient on Ivr, as shown in Figure 2c. In order to detect the range of the OD from the gradient image, the circular Hough transform is used. Then, the circular contour of the OD is obtained. The OD diameter, which provides significant data for fovea localization in the later subsection, can be determined with this circular border. Figure 2d shows the circular border of the OD superimposing on the color retinal image.

2.3. Retinal Vessel Segmentation

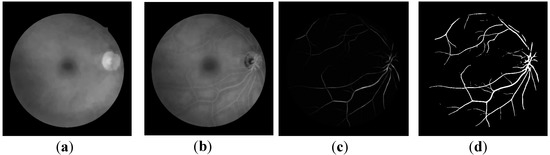

To get the blood vasculature, the OD and fovea (or some other background structures) should be removed. The difference between the vessel-removed image Ivr (Figure 3a) and the illumination-corrected image I' gets the crude vessel structure image Icv. In the image Icv, background and OD are no longer obvious. See Figure 3b.

Figure 3.

Images in retinal vessel segmentation: (a) the vessel-removed image Ivr; (b) the crude vessel image Icv; (c) the image after line detector processing; (d) the Otsu binary image Io of (d).

Based on the fact that the vessels are line-like and smooth, a line detector [16,18] is applied to the image Icv for further vessel enhancement and detection. The line detector consists of 12 oriented line segments passing through the center pixel p(x,y) of a square window. Each line is defined as the following formula,

where (i,j) denotes a neighbor of p, and θ = 0°, 15°, 30°,…, 165°, is the slope angle of each line.

The average gray level is computed along each line in a fixed length l. The line strength of the pixel p(x,y), denoted as S(x,y), is given by the difference between L(x,y) and N(x,y). Here, L(x,y) is the largest average gray level among those of 12 lines, and N(x,y) is the mean intensity of the l × l square neighborhood. The best performance would be with l =15 pixels [16]. Since vessels are darker than the background, the line strength can be use to identify whether a pixel is a vessel pixel or not. After the line detector, the Otsu algorithm [19] is used to get the binary vasculature image Io. Note that the vessel structures in images (b)–(d) of Figure 3 are highlighted and given to illuminate the effect of each step of vessel segmentation.

2.4. Vessel Origin (VO) Feature Selection

According to the VO-based parabolic model, the retinal vessel origin and major blood vessels are employed to find the fittest parabola. Because main vessels stem from the VO, using the VO as the parabolic vertex can provide a better vessel structure description and fovea localization than using the center of OD as the parabolic vertex. In the meantime, the OD does not coincide with the geometric center of OD. Before VO detection, four features of VO—vessel thickness rate, vessel density, vessel radiation, and vessel average intensity described in [12]—are selected and presented in the following subsections.

2.4.1. Vessel Thickness Rate

The closer the vessel is to the VO, the thicker it will be. The thickness rate of a vessel pixel p(x,y) is defined by the ratio between the numbers of vessel pixels, within a rectangle mask centered at p(x,y), on the binary vascular image Io and on the binary vascular thinned image It. The binary vascular thinned image is obtained by the common morphological thinning operator. For details, one is referred to [19]. The feature map of thickness rate FVTR is represented as the following,

where wvtr is an elongated rectangular mask. On account that main vessels radiate from vessel origin to the above and the below, the size of wvtr is set as r × 2r, r denoting the radius of the optic disc. Symbol “ * ” represents the convolution product. Figure 4b is the feature map of thickness rate corresponding to Figure 4a.

2.4.2. Vessel Density

The vessel density of a pixel p(x,y) is defined as the number of vessel pixels within a rectangle mask centered at p(x,y). The feature map of vessel density is represented as the following,

where the size of the mask wvd is the same as wvtr in the Equation (3). Since vessels grow out from the vessel origin, vessels are concentrated around the vessel origin, and the value of vessel density in this region is larger than that in others. A vessel density map is shown in Figure 4c.

Figure 4.

An example of feature maps, (a) the original image; (b) the thickness rate feature map FVTR; (c) the density feature map FVD; (d) the radiation feature map FVR; (e) the average intensity feature map FVAI.

2.4.3. Vessel Radiation

The vessel radiation of a pixel p(x, y) is defined as:

Where n denotes the number of neighbors in the mask region centered at p(x, y). denotes the vessel flux through the ith neighbor, which is tangent to the vessel segment. is the vector from the ith neighbor to the center of mask region.

ai is the magnitude of vector (i.e., ). The dot product of and means the component of in direction. In Equation (5), size of the mask is r × 2r, r denotes the radius of optic disc, and n equals 2r2. The radiation is calculated to get the radiation image FVR, as shown in Figure 4d. The gray level amount in the image FVR is the value of the radiation feature. The vessel radiation of a pixel p(x,y) represents the radiating level of blood vessels which emit from p(x,y). As retinal vessels grow out from vessel origin, vessel radiation around vessel origin is always higher.

2.4.4. Vessel Average Intensity

The vessel average intensity of a pixel p(x,y) is the average gray level in a 5 × 5 window centered at p(x,y). To get this mean feature map FVAI, as shown in Figure 4e, the average value is calculated with the illumination-corrected image I'. The intensity around vessel origin would be darker because of the vascular structure around it.

2.5. VO Localization

Since the Bayesian classifier is robust against retinal lesion in the images of the training set, it is chosen for retinal vessel origin detection. Pixels are classified into two classes, vessel origin (VO) and non-vessel origin (NVO). The pixel with the maximal average likelihood ratio of a posteriori probabilities is chosen as the vessel origin. This is described in detail in the following.

Based on the four features mentioned in the previous subsections, each pixel is associated with a multivariate feature vector x. The multivariate Gaussian density function is used to model the conditional densities [20], which are given as:

Where ΣVO and ΣNVO are covariance matrices, and μVO and μNVO are the population mean vectors generated from the training data set for classes VO and NVO, respectively.

On the basis of the Bayes rule, the well-known likelihood ratio of a posteriori probabilities is defined as follows [8]:

Where p(CVO) and p(CNVO) denote the a priori probabilities of VO and of NVO, respectively. Accordingly, the likelihood ratio function Rllh builds a confidence map, denoted as FC. The VO location (h,k) is defined as the point (x,y) having the maximal average confidence value. This is presented as the following:

Where w is an r × r mean filter, and symbol “ * ” represents the convolution product. Here, r is the radius of optic disc.

2.6. Fovea Localization

The main retinal vessels distribute as a parabolic curve, and the symmetry axis of retina vasculature passes through the VO. The fovea lies on the symmetry axis in a distance of 2.5 OD diameters away from the VO [6,21]. Here, a Hough transform is employed to search for the fittest parabola and symmetry axis of the retinal vessel. In order to reduce the dimension of parameter space of Hough transform, the origin of the coordinate system is shifted to the VO, and then the coordinate axes are rotated about the VO. Therefore, an SR-Hough transform is proposed, which combines the Hough transform with coordinate transformation. In the meantime, to speed up the process of the Hough transform, the small vessels of vasculature on the binary vessel image Io are pruned by the morphological opening processing before applying the SR-Hough transform.

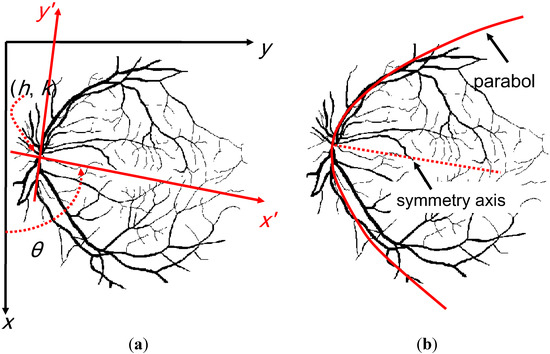

A coordinate transformation integrating the shift and rotation of coordinates is performed. As shown in Figure 5, a Cartesian coordinate system, denoted by x-y and originating at the upper-left corner in the image, is given. A second Cartesian coordinate system, denoted by x'-y', is obtained by shifting the origin of the x-y plane to the VO location (i.e., taking it as the origin of the x'-y' coordinate system) and rotating it in a counter-clockwise direction through an angle θ around the new origin.

Figure 5.

(a) The proposed parabolic model; (b) the fittest parabola superimposing on the retinal vessel structure.

The coordinate transform is defined as:

Where (h,k) denotes the origin of the x'-y' plane. The coordinate (x,y) is an arbitrary point relative to the x-y coordinate system, while the coordinates (x',y') are relative to the x'-y' coordinate system. After rotating through an angle α, the symmetry axis of the fittest parabola is exactly closed with the x' axis of the x'-y' coordinate system. Therefore, the fittest parabola can be expressed as the following,

Where c is the focal length of the parabola. Substituting x' and y' in Equation (10) into Equation (11) leads to the Equation (12),

This parabola is utilized to estimate the parameters (α,c) with the Hough transform. In principle, the Hough transform [19] maps the points in the x-y plane to the α-c plane. All the points on this parabola have the same values of α and c in the α-c parameter space. For every vessel point in the x-y plane, let α equal to each of the subdivision value on the α-axis (here, 0≤α<359° for 1° step) and solve for the corresponding c that satisfies Equation (12). Then, the c value is rounded off to the nearest integer. The cell at coordinates (i,j), with accumulator value A(i,j), corresponds to the square associated with parameter space coordinates (αi,cj). While a choice of αl yields solution cm, then let A(l, m) = A(l, m) + 1. A value of n in A(i, j) means that n points in the x-y plane lie on a parabola, of which α = αi, and c = cj .

Once all the vessel points have been processed, the fittest parabola can be determined by finding the (α, c) fittest of the parabola according to the following equations.

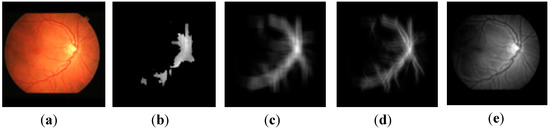

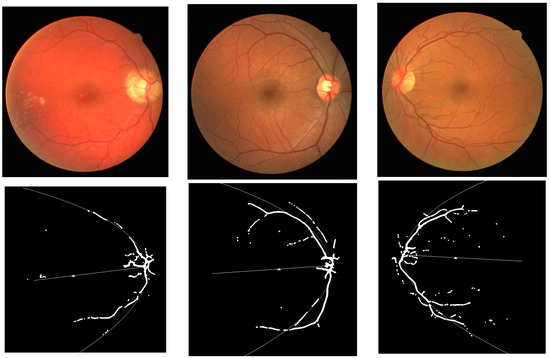

The rough location of fovea, denoted as (u,v), lies on the symmetry axis of the parabola in a distance of 2.5 OD diameters from the VO. Figure 6 shows an example of the location of fovea obtained by the VO-based parabolic model. In order to refine the fovea location, a square region centered at (u,v) with a length of 1.6 times the OD diameters is cropped from the vessel-removed image Ivr. Then, the centroid of the 6% lower intensity pixels within the square region is regarded as the fovea location.

Figure 6.

The upper row shows the original images, and the lower row illustrates the rough position of the fovea, marked with the white small triangle.

3. Results and Discussions

3.1. Results

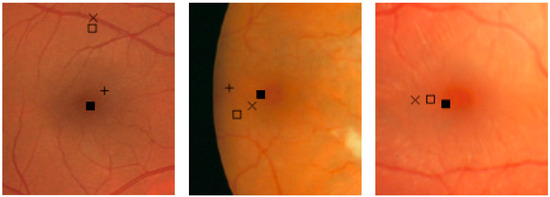

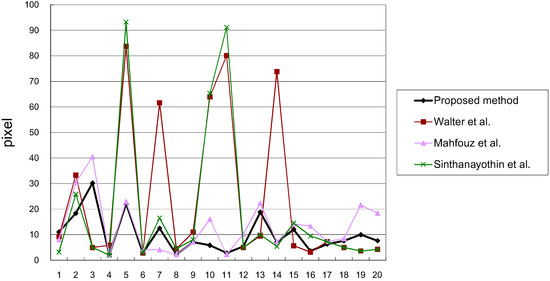

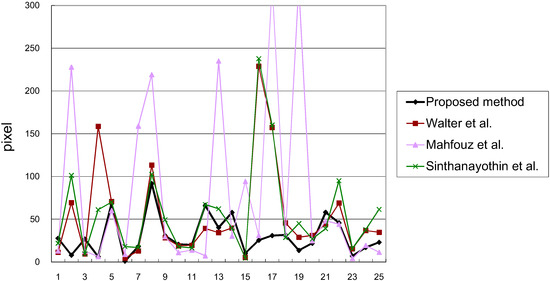

Most of the work on locating the fovea on retinal images is evaluated by measuring the distance between the located fovea center and the ground truth [1]. In this work, the ground truth of each retinal image is the average of coordinates manually marked by two experts. Figure 7 shows some examples of the fovea localization obtained by each method. Figure 8 and Figure 9 show the comparison of the Euclidean distance between the fovea obtained by each method and that given by experts. Essentially, Walter et al. [22] and Mahfouz et al. [23] only reveal the OD detection algorithms. Their fovea data are both obtained by the traditional parabolic model, which takes the OD center as the vertex of the parabolic-like vasculature. The results reflect the superiority of taking the VO as the vertex of the parabolic model. As one can see, the Euclidean distance of the proposed method is small and stable. In contrast, the Euclidean distance of other methods show the stark rises and falls.

Figure 7.

Examples of the fovea location. The fovea given by experts is at the center of the image. Each note denotes the fovea obtained by the related method, “ + ”: Mahfouz et al. method, “ □ ”: Walter et al. method, “ × ”: Sinthanayothin et al. method, and “ ■ ”: the proposed method.

Figure 8.

The comparison of the Euclidean distance between the fovea location in each method and expert location (DRIVE).

Figure 9.

The comparison of Euclidean distance between the fovea location in each method and expert location (STARE).

Table 1 lists the average Euclidean distance between the fovea obtained by each method and that given by experts. The results gained by the proposed scheme are 9.8 pixels in DRIVE database, 30.7 pixels in STARE database, which are much better than the other methods. It shows that replacing the OD center with the VO as the vertex of parabolic model locates the fovea more accurately.

Table 1.

The average of the Euclidean distance (in pixels) between the fovea obtained by each method and that given by experts.

| Method | DRIVE | STARE |

|---|---|---|

| Proposed method | 9.8 | 30.7 |

| Mahfouz et al. [23] | 13.1 | 79.7 |

| Walter et al. [22] | 23.9 | 53.1 |

| Kovacs et al. [24] | 16.2 | - |

| Sinthanayothin et al. [4] | 19.1 | 56.3 |

3.2. Discussions

The proposed algorithm provides a better performance and works well even with the existence of exudative lesions or bright artifactual features. However, it tends to produce false fovea detection if there are large hemorrhages around the fovea, especially in the STARE dataset. Another factor decreasing the precision of the method is raised by the vessel segmentation due to the presence of heavy pathologies. A novel vessel segmentation algorithm [25,26,27] may be taken into account to improve the vessel segmentation in the future.

The retinopathy analysis depends on the fovea localization. The detection of hard exudates is not enough to grade the diabetic macular edema, because the distribution of exudates around the fovea also needs to be considered. Therefore, the proposed approach for locating fovea may provide a better macular detection for further disease detection.

In this paper, we supposed that the fovea is considered as a point (as most of the published works did), and evaluated the performance by measuring the distance between the located fovea center and the ground truth point. The measurement of the fovea area is challenging work that necessitates future development.

4. Conclusions

This paper presents a fovea-detection method based on the VO parabolic model, which takes VO as the vertex of a parabola-like vasculature. During the fovea-detecting process, the vessel structure map is a prerequisite obtained by using mathematic morphology and a line detector. Four VO features are subsequently selected for the VO localization. Concurrently, an SR-Hough transform reduces the parameter dimensions of the parabolic Hough transform and speeds up the fovea-locating procedure. The proposed algorithm performs better than some other methods, and it works automatically. However, if there are large hemorrhages around the fovea, this method sometimes results in false fovea detection. An extension of this study could entail the following: first, improving the vessel segmentation algorithm; second, detecting exudates; and third, grading diabetic macular edema.

Acknowledgment

This research was supported by the National Science Council R.O.C. under Grant NSC 102-2221-E-005-082. The authors thank A. Hoover for making his database publicly available.

Author Contributions

All authors carried out the work collaboratively. Chun-Yuan Yu and Shyr-Shen Yu conceived of and implemented the algorithm. Chen-Chung Liu helped interpret the results. All authors drafted the manuscript together.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gegundez-Arias, M.E.; Marin, D.; Bravo, J.M.; Suero, A. Locating the Fovea Center Position in Digital Fundus Images Using Thresholding and Feature Extraction Techniques. Comput. Med. Imaging Graph. 2013, 37, 386–393. [Google Scholar] [CrossRef] [PubMed]

- Gagnon, L.; Lalonde, M.; Beaulieu, M.; Boucher, M.C. Procedure to Detect Anatomical Structures in Optical Fundus Images. Proc. SPIE 2001. [Google Scholar] [CrossRef]

- Zhang, B.; Karray, F. Optic disc and fovea detection via multi-scale matched filters and a vessels’ directional matched filter. In Proceedings of the 2010 International Conference on Autonomous and Intelligent Systems (AIS), Povoa de Varzim, Portugal, 21–23 June 2010; pp. 1–5.

- Sinthanayothin, C.; Boyce, J.; Cook, H.; Williamson, T. Automated Localization of the Optic Disc, Fovea, and Retinal Blood Vessels from Digital Colour Fundus Images. Br. J. Ophthalmol. 1999, 83, 902–910. [Google Scholar] [CrossRef] [PubMed]

- Chin, K.S.; Trucco, E.; Tan, L.; Wilson, P.J. Automatic Fovea Location in Retinal Images Using Anatomical Priors and Vessel Density. Pattern Recognit. Lett. 2013, 34, 1152–1158. [Google Scholar] [CrossRef]

- Li, H.; Chutatape, O. Automated Feature Extraction in Color Retinal Images by a Model Based Approach. IEEE Trans. Biomedical Eng. 2004, 51, 246–254. [Google Scholar] [CrossRef] [PubMed]

- Foracchia, M.; Grisan, E.; Ruggeri, A. Detection of Optic Disc in Retinal Images by Means of a Geometrical Model of Vessel Structure. IEEE Trans. Med. Imaging 2004, 23, 1189–1195. [Google Scholar] [CrossRef] [PubMed]

- Tobin, K.W.; Chaum, E.; Govindasamy, V.P. Detection of Anatomic Structures in Human Retinal Imagery. IEEE Trans. Med. Imaging 2007, 26, 1729–1739. [Google Scholar] [CrossRef] [PubMed]

- Fleming, A.D.; Goatman, K.A.; Philip, S.; Olson, J.A.; Sharp, P.F. Automatic Detection of Retinal Anatomy to Assist Diabetic Retinopathy Screening. Phys. Med. Biol. 2007, 52, 331–345. [Google Scholar] [CrossRef] [PubMed]

- Niemeijer, M.; Abràmoff, M.D.; van Ginneken, B. Automated Localization of the Optic Disc and the Fovea. In Proceedings of the IEEE EMBS Conference on 30th Annual International, Vancouver, BC, Canada, 2008; pp. 3538–3541.

- Goatman, K.A.; Fleming, A.D.; Philip, S.; Williams, G.J.; Olson, J.A.; Sharp, P.F. Detection of New Vessels on the Optic Disc Using Retinal Photographs. IEEE Trans. Med. Imaging 2011, 30, 972–979. [Google Scholar] [CrossRef] [PubMed]

- Yu, C.Y.; Liu, C.C.; Wu, J.L.; Yu, S.S.; Huang, J.Y. A Study of Vessel Origin Detection on Retinal Images. In Proceedings of IEA/AIE 2013 Conference, Amsterdam, Netherlands, 17–21 June 2013.

- University Medical Center Utrecht, Image Sciences Institute. Available online: http://www.isi.uu.nl/Research/ Databases/DRIVE (accessed on 20 October 2011).

- Hoover, A. Structured Analysis of the Retina Project Website. Available online: http://www.ces.clemson.edu/~ahoover/stare (accessed on 10 September 2009).

- Foracchia, M.; Grisan, E.; Ruggeri, A. Luminosity and Contrast Normalization in Retinal Images. Med. Image Anal. 2005, 9, 179–190. [Google Scholar] [CrossRef] [PubMed]

- Ricci, E.; Perfetti, R. Retinal Blood Vessel Segmentation Using Line Operators and Support Vector Classification. IEEE Trans. Med. Imaging 2007, 26, 1357–1365. [Google Scholar] [CrossRef] [PubMed]

- Hsiao, H.K.; Liu, C.C.; Yu, C.Y.; Kuo, S.W.; Yu, S.S. A Novel Optic Disc Detection Scheme on Retinal Images. Expert Syst. Appl. 2012, 39, 10600–10606. [Google Scholar] [CrossRef]

- Zwiggelaar, R.; Astley, S.M.; Boggis, C.R.M.; Taylor, C.J. Linear Structures in Mammographic Images: Detection and Classification. IEEE Trans. Med. Imaging 2004, 23, 1077–1086. [Google Scholar] [CrossRef] [PubMed]

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Pearson: New Jersey, NJ, USA, 2010. [Google Scholar]

- Duda, R.O.; Hart, P.E.; Stork, D.G. Pattern Classification, 2nd ed.; Wiley: New York, NY, USA, 2001. [Google Scholar]

- Niemeijer, M.; Abràmoff, M.D. Segmentation of the Optic Disc, Macula and Vascular Arch in Fundus Photographs. IEEE Trans. Med. Imaging 2007, 26, 116–127. [Google Scholar] [CrossRef] [PubMed]

- Walter, T.; Klein, J.C.; Massin, P.; Erginay, A. A Contribution of Image Processing to the Diagnosis of Diabetic Retinopathy—Detection of Exudates in Color Fundus Images of the Human Retina. IEEE Trans. Med. Imaging 2002, 21, 1236–1243. [Google Scholar] [CrossRef] [PubMed]

- Mahfouz, A.E.; Fahmy, A.S. Fast Localization of the Optic Disc Using Projection of Image Features. IEEE Trans. Image Process. 2010, 19, 3285–3289. [Google Scholar] [CrossRef] [PubMed]

- Kovacs, L.; Qureshi, R.J.; Nagy, B.; Harangi, B.; Hajdu, A. Graph based detection of optic disc and fovea in retinal images. In Proceedings of the 2010 4th International Workshop on Soft Computing Applications (SOFA), Arad, Romania, 15–17 July 2010; pp. 143–148.

- Conversano, F.; Franchini, R.; Demitri, C.; Massoptier, L.; Montagna, F.; Maffezzoli, A.; Malvasi, A.; Casciaro, S. Hepatic vessel segmentation for 3D planning of liver surgery: Experimental evaluation of a new fully automatic algorithm. Acad. Radiol. 2011, 18, 461–470. [Google Scholar] [CrossRef] [PubMed]

- Nguyen, U.T.V.; Bhuiyan, A.; Park, L.A.F.; Ramamohanarao, K. An Effective Retinal Blood Vessel Segmentation Method using Multi-scale Line Detection. Pattern Recognit. 2013, 46, 703–715. [Google Scholar] [CrossRef]

- Bhuiyan, A.; Kawasaki, R.; Lamoureux, E.; Ramamohanarao, K.; Wong, T.Y. Retinal Artery-Vein Caliber Grading Using Color Fundus Imaging. Comput. Methods Programs Biomed. 2013, 111, 104–114. [Google Scholar] [CrossRef] [PubMed]

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).