An Overview of the Euler-Type Universal Numerical Integrator (E-TUNI): Applications in Non-Linear Dynamics and Predictive Control

Abstract

1. Introduction

2. Related Work

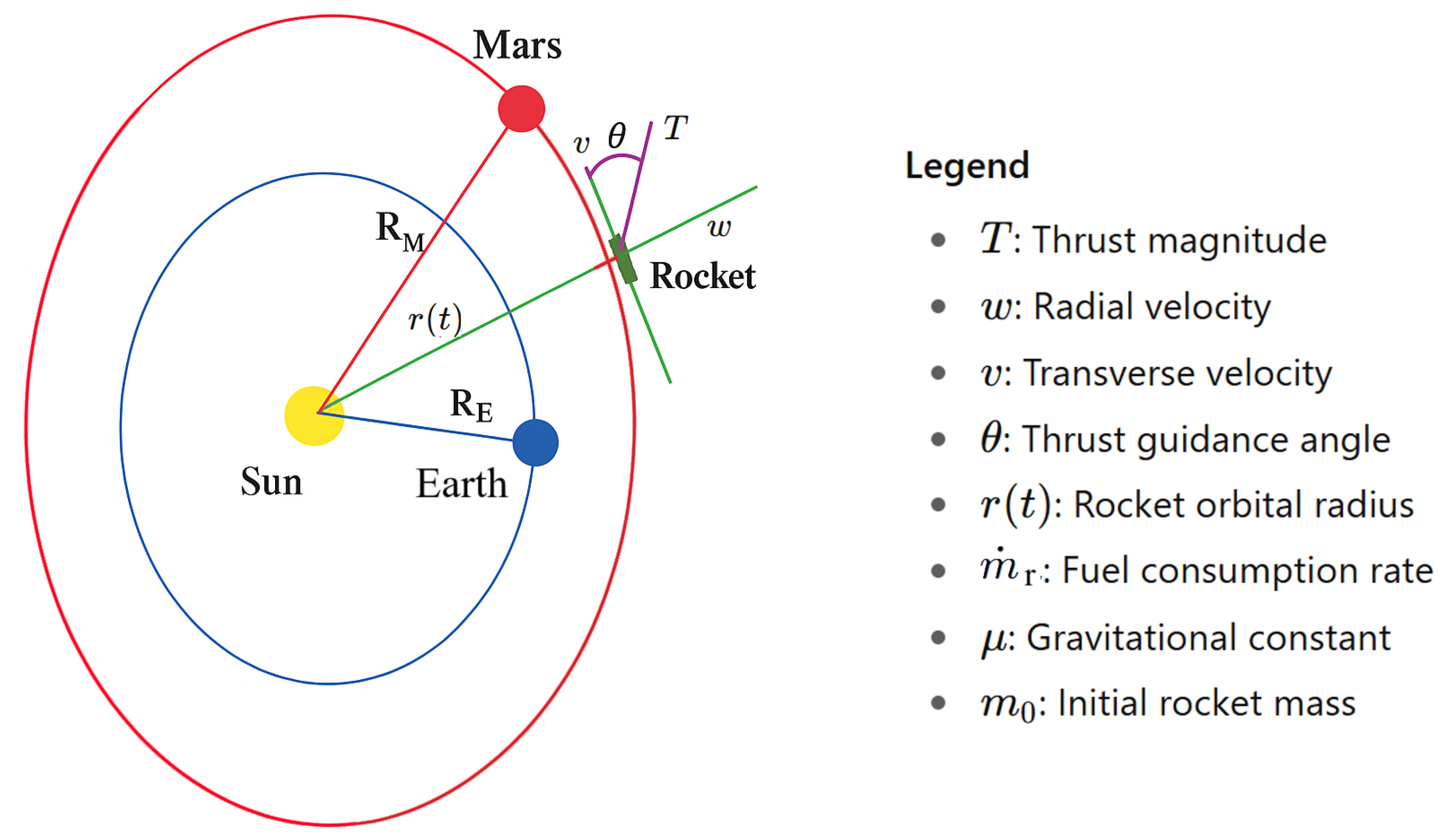

3. Neural Predictive Control Problem Formulation

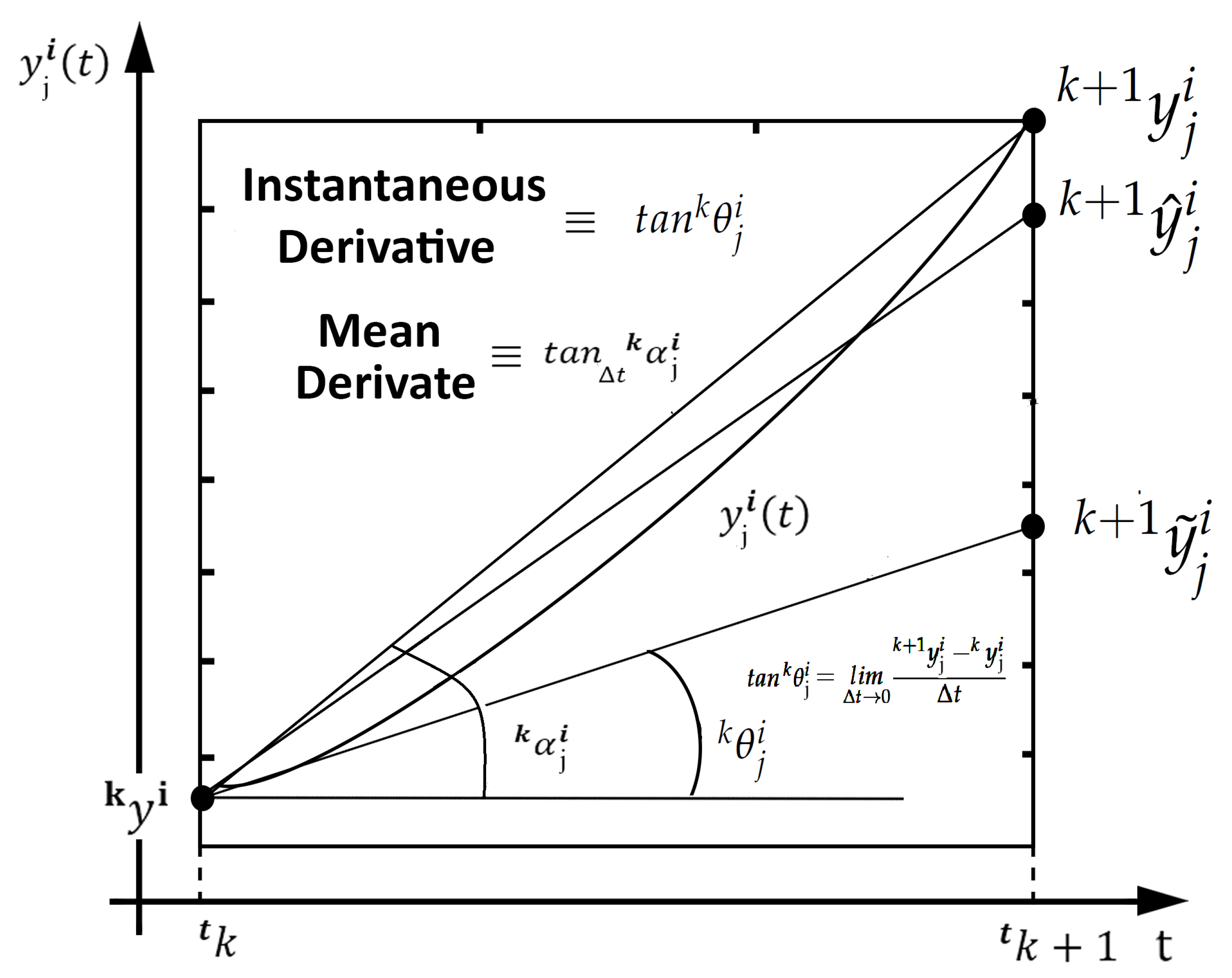

4. Preliminaries and Symbols Used

- ⋯ System of continuous differential equations.

- ⋯ State variables.

- ⋯ Instantaneous derivative functions.

- ⋯ Particular continuous and differentiable curve of a family of solution curves of the dynamical system .

- ⋯ First derivative of .

- ⋯ Vector of state variables at time .

- ⋯ Scalar state variable for at time . It is a generic discretization point of the state variables generated by the integers i, j, and k.

- ⋯ Total number of state variables.

- ⋯ Vector of control variables at time .

- ⋯ Scalar control variable for at time .

- ⋯ Total number of control variables.

- ⋯ Exact vector of state variables at time .

- ⋯ Exact scalar state variable for at time .

- ⋯ Vector of state variables estimated by UNI or E-TUNI at time .

- ⋯ Scalar state variable estimated by UNI or E-TUNI for at time .

- ⋯ Vector of state variables estimated using only the integrator, without the neural network, at time .

- ⋯ Exact vector of positive mean derivative functions at time .

- ⋯ Scalar positive mean derivative functions for at time .

- ⋯ Estimated vector of positive mean derivative functions by the E-TUNI at time .

- ⋯ Vector of positive instantaneous derivatives at time .

- ⋯ Scalar positive instantaneous derivative for at instant .

- ⋯ Time instant .

- ⋯ Time instant .

- ⋯ Integration step.

- i⋯ Over-index that enumerates a particular curve from the family of curves of the dynamical system to be modeled ().

- j⋯ Under-index that enumerates the state and control variables.

- k⋯ Over-index that enumerates the discrete time instants ().

- r⋯ Total number of horizons of the time variable.

- q⋯ Total number of curves from the family of curves of the dynamical system to be modeled.

- ⋯ Instant of time within the interval as a result of the Differential Mean Value Theorem (see Theorem 1).

- ⋯ Instant of time within the interval as a result of the Integral Mean Value Theorem (see Theorem 2).

- m⋯ Total number of horizons in a predictive control structure.
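
The relationships among the quantities listed above can be summarized in a short worked form. The notation below ($x_j^k$ for a scalar state at instant $t_k$, $\Delta t$ for the integration step, $\tan_{\Delta t}\alpha_j^k$ for the mean derivative, and $t_k^{*}$, $t_k^{x}$ for the two mean-value instants) is assumed for illustration and may differ from the symbols used in the paper's equations.

```latex
\begin{align}
\tan_{\Delta t}\alpha_j^{k} &= \frac{x_j^{k+1} - x_j^{k}}{\Delta t}
  && \text{(mean derivative over } [t_k, t_{k+1}]\text{)} \\
\dot{x}_j(t_k^{*}) &= \frac{x_j(t_{k+1}) - x_j(t_k)}{\Delta t},
  \quad t_k^{*} \in [t_k, t_{k+1}]
  && \text{(Differential Mean Value Theorem)} \\
\int_{t_k}^{t_{k+1}} \dot{x}_j(t)\,dt &= \dot{x}_j(t_k^{x})\,\Delta t,
  \quad t_k^{x} \in [t_k, t_{k+1}]
  && \text{(Integral Mean Value Theorem)} \\
x_j^{k+1} &= x_j^{k} + \Delta t \,\tan_{\Delta t}\alpha_j^{k}
  && \text{(Euler-type update with the mean derivative)}
\end{align}
```

Read together, the first and last lines are the same identity rearranged: if the mean derivative is known exactly, the Euler-type update reproduces the next state without truncation error, whatever the step size.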

5. Mathematical Development

5.1. Basic Mathematical Development of E-TUNI

5.2. Predictive Control Designed with E-TUNI

5.3. Correct Mathematical Demonstration of the E-TUNI General Expression

5.4. Mathematical Relationship Between Mean and Instantaneous Derivatives

6. Results and Analysis

- Step 1. Generate the E-TUNI input/output training patterns with the Runge–Kutta 4-5 integrator applied to the non-linear simple pendulum equation. Because this dynamical system is non-linear, its trajectories in the phase plane are not perfect circles.

- Step 2. Use the Levenberg–Marquardt algorithm, in the direct approach, to train two distinct neural networks: (i) one that learns the instantaneous derivative functions and (ii) one that learns the mean derivative functions.

- Step 5. Compare the results obtained for different integration steps. Also, compare the solution of the interpolating parabolas with the mean derivative equations.
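
The pattern-generation step and the difference between the two training targets can be illustrated with a minimal sketch. It rests on several assumptions that are not taken from the paper: a fixed-step classical RK4 with sub-stepping stands in for the Runge–Kutta 4-5 reference integrator, the pendulum constant g/l is set to 1, all function names are hypothetical, and the exact mean derivative computed from the reference trajectory stands in for what a trained network would output. No neural network training is performed here.

```python
import math

G_OVER_L = 1.0  # assumed pendulum constant g/l (hypothetical value)


def pendulum(state):
    """Instantaneous derivatives of the non-linear simple pendulum."""
    theta, omega = state
    return (omega, -G_OVER_L * math.sin(theta))


def rk4_step(f, state, h):
    """One classical RK4 step (stand-in for the RK 4-5 reference integrator)."""
    k1 = f(state)
    k2 = f(tuple(s + 0.5 * h * k for s, k in zip(state, k1)))
    k3 = f(tuple(s + 0.5 * h * k for s, k in zip(state, k2)))
    k4 = f(tuple(s + h * k for s, k in zip(state, k3)))
    return tuple(
        s + h / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def reference_step(state, dt, substeps=100):
    """Propagate the reference solution across one integration step dt."""
    h = dt / substeps
    for _ in range(substeps):
        state = rk4_step(pendulum, state, h)
    return state


def make_patterns(initial_state, dt, n_steps):
    """Return a list of (state, mean_derivative, instantaneous_derivative).

    The mean derivative is the target for the mean-derivative network;
    the instantaneous derivative is the target for the other network.
    """
    patterns = []
    state = initial_state
    for _ in range(n_steps):
        nxt = reference_step(state, dt)
        mean_deriv = tuple((b - a) / dt for a, b in zip(state, nxt))
        patterns.append((state, mean_deriv, pendulum(state)))
        state = nxt
    return patterns


def euler_type_step(state, derivative, dt):
    """Euler-type update: next state = state + dt * derivative."""
    return tuple(s + dt * d for s, d in zip(state, derivative))


if __name__ == "__main__":
    dt = 0.5
    pats = make_patterns((1.0, 0.0), dt, 5)
    state, mean_d, inst_d = pats[0]
    print("with mean derivative:         ", euler_type_step(state, mean_d, dt))
    print("with instantaneous derivative:", euler_type_step(state, inst_d, dt))
    print("reference next state:         ", pats[1][0])
```

With the exact mean derivative, the Euler-type update lands on the reference state up to rounding, while the same update with the instantaneous derivative is ordinary forward Euler and drifts visibly at a coarse step. In practice the mean derivative would come from the trained network, so the achievable accuracy depends on how well that network fits the patterns.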

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ABNN | Adams–Bashforth Neural Network |
| CNN | Convolutional Neural Network |
| DNN | Deep Neural Network |
| E-TUNI | Euler-Type Universal Numerical Integrator |
| MLP | Multi-Layer Perceptron |
| MSE | Mean Squared Error |
| NARMAX | Non-linear Auto Regressive Moving Average with eXogenous input |
| PCNN | Predictor-Corrector Neural Network |
| RBF | Radial Basis Function |
| RKNN | Runge–Kutta Neural Network |
| SVM | Support Vector Machine |
| UNI | Universal Numerical Integrator |


| n | Time | and | Determining Form |
|---|---|---|---|
| 1 | Initial Instant | | |
| 2 | Given by E-TUNI | | |
| 3 | Output of net | | |
| 4 | From Equations (33) or (34) | | |

| MSE of Validation Patterns | Direct Approach | |
|---|---|---|
| - | Instantaneous Derivatives | |
| Mean Derivatives | | |
| Mean Derivatives | | |
| Mean Derivatives | | |
| Mean Derivatives | | |

| | NARMAX | E-TUNI | RKNN |
|---|---|---|---|
| (Training) | | | |
| (Validation) | | | |
| (Testing) | | | |
| (Training) [s] | 0.01 | 0.01 | Not applicable |
| (Simulation) [s] | 0.01 | 0.01 | 0.01 |
| Propagation Interval [s] | [0, 25] | [0, 25] | [0, 25] |
| Training Patterns | 1600 | 1600 | 1600 |
| Testing Patterns | 400 | 400 | 400 |
| Training Algorithm | LM | LM | LM |
| Hidden Layers (HLs) | 1 | 1 | 1 |
| Neurons | 30 | 30 | 30 |
| Activation Function | tansig | tansig | tansig |
| Learning Rate | 0.2 | 0.2 | 0.2 |
| Training Domain | , , | same | same |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tasinaffo, P.M.; Gonçalves, G.S.; Marques, J.C.; Dias, L.A.V.; da Cunha, A.M. An Overview of the Euler-Type Universal Numerical Integrator (E-TUNI): Applications in Non-Linear Dynamics and Predictive Control. Algorithms 2025, 18, 562. https://doi.org/10.3390/a18090562
