Data-Driven Prior Construction in Hilbert Spaces for Bayesian Optimization

Abstract

1. Introduction

2. Background

- Generate a surrogate model of the objective function f, usually in the form of a stochastic process.

- Select a new point to evaluate the true objective function.

- Update the surrogate model through a Bayesian update, using the new point and the value of the objective function at that point.
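The three steps above can be illustrated with a minimal one-dimensional sketch. It assumes a zero-mean Gaussian-process surrogate with a fixed squared-exponential kernel (length scale set to 10% of the domain), expected improvement as the acquisition function, and a fixed grid of candidate points. These are illustrative choices, not the settings used in this work, and all function names are hypothetical.

```python
import numpy as np
from math import erf, sqrt, pi

# Standard normal CDF, vectorized over NumPy arrays.
_norm_cdf = np.vectorize(lambda z: 0.5 * (1.0 + erf(z / sqrt(2.0))))


def rbf_kernel(a, b, length_scale):
    """Squared-exponential kernel between two 1D arrays of points."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)


def gp_posterior(x_train, y_train, x_query, length_scale, jitter=1e-6):
    """Posterior mean and std of a zero-mean, unit-variance GP (the Bayesian update)."""
    K = rbf_kernel(x_train, x_train, length_scale) + jitter * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_query, length_scale)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mu = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return mu, np.sqrt(var)


def expected_improvement(mu, sigma, y_best):
    """Expected improvement over the incumbent y_best, for minimization."""
    z = (y_best - mu) / sigma
    pdf = np.exp(-0.5 * z ** 2) / sqrt(2.0 * pi)
    return (y_best - mu) * _norm_cdf(z) + sigma * pdf


def bayesian_optimization(f, lower, upper, n_init=3, n_iter=15, seed=0):
    rng = np.random.default_rng(seed)
    length_scale = 0.1 * (upper - lower)
    grid = np.linspace(lower, upper, 501)   # candidate points
    x = rng.uniform(lower, upper, n_init)   # initial design of experiments
    y = np.array([f(xi) for xi in x])
    for _ in range(n_iter):
        # Step 1: surrogate model (outputs standardized for the unit-variance GP).
        y_mean, y_std = y.mean(), y.std() + 1e-12
        mu, sigma = gp_posterior(x, (y - y_mean) / y_std, grid, length_scale)
        # Step 2: select the next point by maximizing the acquisition function.
        ei = expected_improvement(mu, sigma, (y.min() - y_mean) / y_std)
        x_next = grid[np.argmax(ei)]
        # Step 3: evaluate f and add the new pair to the data used in the next update.
        x = np.append(x, x_next)
        y = np.append(y, f(x_next))
    i_best = int(np.argmin(y))
    return x[i_best], y[i_best]
```

For example, `bayesian_optimization(lambda x: (x - 0.3) ** 2, 0.0, 1.0)` returns the best point found and its objective value. In practice the kernel hyperparameters would be fitted to the data rather than fixed.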

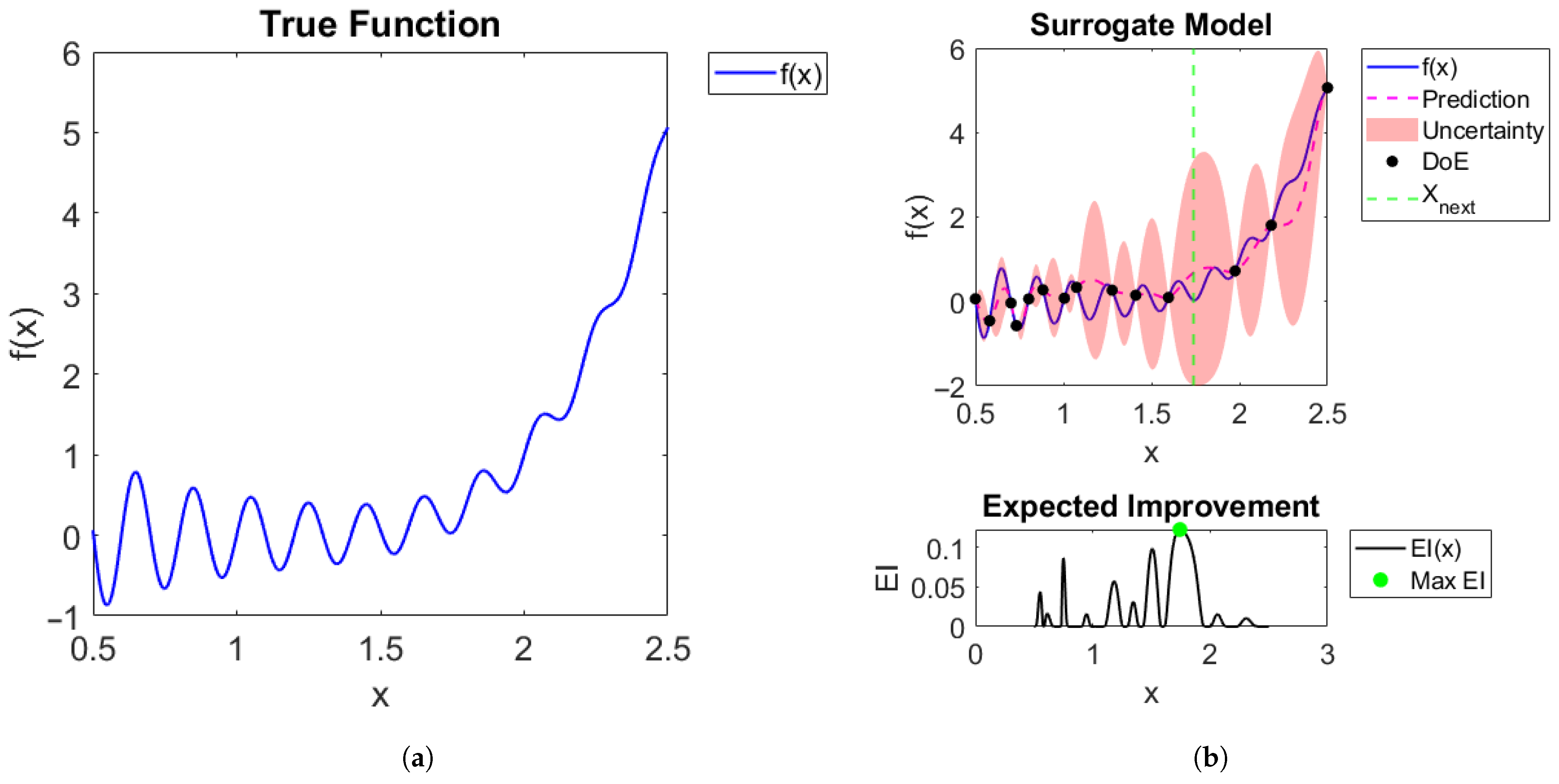

2.1. Standard Bayesian Optimization (SBO)

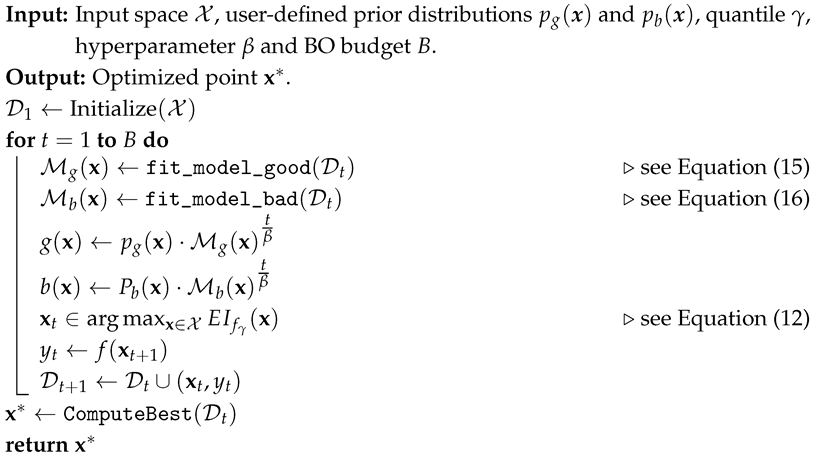

2.2. BOPrO

| Algorithm 1: BOPrO algorithm [23]. The algorithm keeps track of all function evaluations made so far. |
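As we read [23], BOPrO weights a prior term against a surrogate-based probability that a point is "good", and the surrogate's weight grows with the iteration count. The sketch below illustrates that idea under simplifying assumptions: a Gaussian surrogate, a fixed threshold `f_gamma` between good and bad values, and hypothetical parameter names `beta` and `gamma`. It is not a reproduction of Algorithm 1; the exact definitions and normalizations should be taken from [23].

```python
import numpy as np
from math import erf, sqrt

# Standard normal CDF, vectorized over NumPy arrays.
_norm_cdf = np.vectorize(lambda z: 0.5 * (1.0 + erf(z / sqrt(2.0))))


def prior_weighted_score(prior_good, mu, sigma, f_gamma, t, beta=10.0, gamma=0.05, eps=1e-12):
    """Score candidates by combining a prior with a Gaussian surrogate (minimization).

    prior_good : prior probability, in [0, 1], that each candidate lies in the good region
    mu, sigma  : surrogate posterior mean and standard deviation at the candidates
    f_gamma    : threshold separating good from bad objective values
    t          : current iteration; larger t gives the surrogate more weight
    """
    # Surrogate-based probability of being good or bad at each candidate.
    m_good = _norm_cdf((f_gamma - mu) / sigma)
    m_bad = 1.0 - m_good
    # Unnormalized pseudo-posteriors: prior term times surrogate term raised to t / beta.
    power = t / beta
    g = prior_good * m_good ** power
    b = (1.0 - prior_good) * m_bad ** power
    # The score is monotone in g / b, so only the ranking of candidates is meaningful.
    return 1.0 / (gamma + (b / (g + eps)) * (1.0 - gamma))
```

With a strong prior (0.9) on a candidate that the surrogate considers poor, and a weak prior (0.1) on one it considers good, the first candidate ranks higher at t = 1 and the second at t = 100 (with beta = 10): the prior dominates early and the data dominate later.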

3. Data-Driven Prior Construction in Hilbert Spaces for Bayesian Optimization

3.1. Preliminaries

- Describes the belief that some areas of the search space are more (or less) promising;

- Can be combined with the available information, both from model evaluations and from surrogate model predictions.

This formulation has significant limitations in other application domains, such as structural design or general engineering design optimization. In such applications, expert knowledge may be unavailable or insufficiently reliable, or the expert may provide input at a different stage of the process.

3.2. Construction of Priors

- The uncertainties can be modeled by a random vector $U$, and the variable of interest is $X = X(U)$;

- The random variable $U$ takes its values on an interval of real numbers (or, more generally, on a bounded subset of $\mathbb{R}^n$);

- $X$ belongs to the space of square-summable random variables, $X \in L^2(\Omega, P)$; that is, $X$ has a finite moment of order 2;

- Some statistical information about the couple $(U, X)$ is available.
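Under these assumptions, $X$ can be approximated in the Hilbert space by a truncated expansion $X \approx \sum_k x_k \varphi_k(U)$ whose coefficients are estimated from the available samples of $(U, X)$; propagating fresh samples of $U$ through the expansion then yields an empirical distribution of $X$ that can serve as a data-driven prior. The sketch below assumes $U$ is uniform on a bounded interval $[a, b]$, a Legendre polynomial basis, ordinary least squares for the coefficients, and a histogram as density estimator. The basis, fitting method, and density estimator used in Algorithm 2 may differ, and all function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre


def _to_reference(u, a, b):
    """Affinely map samples of U from [a, b] onto [-1, 1], where Legendre polynomials live."""
    return 2.0 * (np.asarray(u, dtype=float) - a) / (b - a) - 1.0


def fit_hilbert_expansion(u, x, degree, a=-1.0, b=1.0):
    """Least-squares coefficients x_k in X ~ sum_k x_k P_k(U), from samples of the couple (U, X)."""
    phi = legendre.legvander(_to_reference(u, a, b), degree)  # columns P_0(U), ..., P_degree(U)
    coeffs, *_ = np.linalg.lstsq(phi, np.asarray(x, dtype=float), rcond=None)
    return coeffs


def evaluate_expansion(coeffs, u, a=-1.0, b=1.0):
    """Evaluate the truncated expansion at new samples of U."""
    return legendre.legval(_to_reference(u, a, b), coeffs)


def empirical_prior(coeffs, sample_u, n=20000, bins=50, a=-1.0, b=1.0):
    """Propagate samples of U through the expansion; return a histogram estimate of the PDF of X."""
    x_samples = evaluate_expansion(coeffs, sample_u(n), a, b)
    density, edges = np.histogram(x_samples, bins=bins, density=True)
    return density, edges
```

As a sanity check, fitting $X = U^2$ with degree 2 recovers the exact coefficients $(1/3, 0, 2/3)$, since $U^2 = \tfrac{1}{3}P_0(U) + \tfrac{2}{3}P_2(U)$.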

| Algorithm 2: Data-driven prior construction in Hilbert spaces for Bayesian optimization |


4. Results and Discussion

4.1. Test Design and Technical Aspects

4.2. Benchmark Problems

- Minimum and maximum of the function values at the last iteration over the 10 runs. These capture the best and worst performance of the algorithm.

- Mean and standard deviation of the function values at the last iteration over the 10 runs.

- Success rate, defined as the number of runs (out of 10) in which the function reached a value below the target.
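The statistics listed above can be computed from the final values of the runs as in the following sketch. Whether the standard deviation uses the sample ($n-1$) or population ($n$) normalization is not stated, so the sample version is an assumption; so is the simple-regret definition used here (final value minus the known minimum $f^*$, assuming each run's final value is its best observed value). The function name is hypothetical.

```python
import numpy as np


def summarize_runs(final_values, target, f_star=None):
    """Summary statistics of the last-iteration values over independent runs (minimization)."""
    v = np.asarray(final_values, dtype=float)
    summary = {
        "min": float(v.min()),         # best run
        "max": float(v.max()),         # worst run
        "mean": float(v.mean()),
        "std": float(v.std(ddof=1)),   # assumption: sample standard deviation
        "success": f"{int(np.sum(v < target))}/{v.size}",  # runs strictly below the target
    }
    if f_star is not None:
        # Assumption: simple regret of a run = its final value minus the known minimum f*.
        summary["mean_simple_regret"] = float(np.mean(v - f_star))
    return summary
```

For F1, for instance, one would call `summarize_runs(values, target=-0.8256, f_star=-0.86901)`, where `values` holds the 10 final values.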

4.3. Application: Shape Optimization of a Solid in Linear Elasticity Under Uniaxial Loading

- is the total volume of the generated structure;

- is the average horizontal displacement on the right boundary ;

- =0.0013 is the target displacement;

- is a regularization coefficient.

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |

|---|---|

| BO | Bayesian Optimization |

| GP | Gaussian Process |

| BOPrO | Bayesian Optimization with a Prior for the Optimum |

| BOA | Bayesian Optimization Algorithm |

| SBO | Standard Bayesian Optimization |

| TPE | Tree-structured Parzen Estimator |

| CDF | Cumulative Distribution Function |

| PDF | Probability Density Function |

| HSBO | Data-Driven Prior Construction in Hilbert Spaces for Bayesian Optimization |

| DoE | Initial Design of Experiments |

| SR | Simple Regret |

| NURBS | Non-Uniform Rational B-Splines |

Appendix A. Description of Test Functions

| Function | Dimension | Design Space | Minimum | Target |

|---|---|---|---|---|

| (F1) Gramacy and Lee | 1 | | −0.86901 | −0.8256 |

| (F2) Cross-in-Tray | 2 | | −2.06261 | −1.9595 |

| (F3) Branin | 2 | | 0.397887 | 0.4178 |

| (F4) Hartmann 3-Dimensional | 3 | | −3.86278 | −3.6696 |

| (F5) Sum of Different Powers Function | 4 | | 0 | 0.001 |

| (F6) Hartmann 6-Dimensional | 6 | | −3.32237 | −3.1563 |
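For reference, four of the test functions above can be written in a few lines. This sketch assumes the standard definitions from the Virtual Library of Simulation Experiments [22] and checks them at their standard minimizers against the tabulated minima; the Hartmann functions are omitted because they require tabulated parameter matrices.

```python
import math


def gramacy_lee(x):
    """(F1) Gramacy and Lee, one-dimensional."""
    return math.sin(10 * math.pi * x) / (2 * x) + (x - 1) ** 4


def cross_in_tray(x1, x2):
    """(F2) Cross-in-Tray, two-dimensional."""
    inner = abs(math.sin(x1) * math.sin(x2) * math.exp(abs(100 - math.hypot(x1, x2) / math.pi)))
    return -0.0001 * (inner + 1) ** 0.1


def branin(x1, x2):
    """(F3) Branin, with its usual constants."""
    a, b, c = 1.0, 5.1 / (4 * math.pi ** 2), 5 / math.pi
    r, s, t = 6.0, 10.0, 1 / (8 * math.pi)
    return a * (x2 - b * x1 ** 2 + c * x1 - r) ** 2 + s * (1 - t) * math.cos(x1) + s


def sum_of_different_powers(x):
    """(F5) Sum of |x_i|^(i+1) for i = 1..d (0-based index i gives exponent i + 2)."""
    return sum(abs(xi) ** (i + 2) for i, xi in enumerate(x))
```

At `branin(math.pi, 2.275)` the squared term vanishes and the value reduces to 10/(8π) ≈ 0.397887, matching the tabulated minimum of F3.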

Appendix B. Mean of Simple Regret

References

- Jones, D.R.; Schonlau, M.; Welch, W.J. Efficient global optimization of expensive black-box functions. J. Glob. Optim. 1998, 13, 455–492.

- Regis, R.G. Stochastic radial basis function algorithms for large-scale optimization involving expensive black-box objective and constraint functions. Comput. Oper. Res. 2011, 38, 837–853.

- Kumagai, W.; Yasuda, K. Black-box optimization and its applications. In Innovative Systems Approach for Facilitating Smarter World; Springer: Singapore, 2023; pp. 81–100.

- Ghanbari, H.; Scheinberg, K. Black-box optimization in machine learning with trust region based derivative free algorithm. arXiv 2017, arXiv:1703.06925.

- Pardalos, P.M.; Rasskazova, V.; Vrahatis, M.N. Black Box Optimization, Machine Learning, and No-Free Lunch Theorems; Springer: Berlin/Heidelberg, Germany, 2021; Volume 170.

- Abreu de Souza, F.; Crispim Romão, M.; Castro, N.F.; Nikjoo, M.; Porod, W. Exploring parameter spaces with artificial intelligence and machine learning black-box optimization algorithms. Phys. Rev. D 2023, 107, 035004.

- Calvel, S.; Mongeau, M. Black-box structural optimization of a mechanical component. Comput. Ind. Eng. 2007, 53, 514–530.

- Lainé, J.; Piollet, E.; Nyssen, F.; Batailly, A. Blackbox optimization for aircraft engine blades with contact interfaces. J. Eng. Gas Turbines Power 2019, 141, 061016.

- Thalhamer, A.; Fleisch, M.; Schuecker, C.; Fuchs, P.F.; Schlögl, S.; Berer, M. A black-box optimization strategy for customizable global elastic deformation behavior of unit cell-based tri-anti-chiral metamaterials. Adv. Eng. Softw. 2023, 186, 103553.

- Wang, X.; Jin, Y.; Schmitt, S.; Olhofer, M. Recent advances in Bayesian optimization. ACM Comput. Surv. 2023, 55, 1–36.

- Wu, J.; Chen, X.Y.; Zhang, H.; Xiong, L.D.; Lei, H.; Deng, S.H. Hyperparameter optimization for machine learning models based on Bayesian optimization. J. Electron. Sci. Technol. 2019, 17, 26–40.

- Cho, H.; Kim, Y.; Lee, E.; Choi, D.; Lee, Y.; Rhee, W. Basic enhancement strategies when using Bayesian optimization for hyperparameter tuning of deep neural networks. IEEE Access 2020, 8, 52588–52608.

- Do, B.; Zhang, R. Multi-fidelity Bayesian optimization in engineering design. arXiv 2023, arXiv:2311.13050.

- Frazier, P.I.; Wang, J. Bayesian optimization for materials design. In Information Science for Materials Discovery and Design; Springer: Cham, Switzerland, 2016; pp. 45–75.

- Humphrey, L.; Dubas, A.; Fletcher, L.; Davis, A. Machine learning techniques for sequential learning engineering design optimisation. Plasma Phys. Control. Fusion 2023, 66, 025002.

- de Cursi, E.S. Uncertainty Quantification with R; Springer: Berlin/Heidelberg, Germany, 2024.

- Mockus, J. Application of Bayesian approach to numerical methods of global and stochastic optimization. J. Glob. Optim. 1994, 4, 347–365.

- Frazier, P.I. A tutorial on Bayesian optimization. arXiv 2018, arXiv:1807.02811.

- Brochu, E.; Cora, V.M.; De Freitas, N. A tutorial on Bayesian optimization of expensive cost functions, with application to active user modeling and hierarchical reinforcement learning. arXiv 2010, arXiv:1012.2599.

- Shahriari, B.; Swersky, K.; Wang, Z.; Adams, R.P.; De Freitas, N. Taking the human out of the loop: A review of Bayesian optimization. Proc. IEEE 2015, 104, 148–175.

- Ben Yahya, A.; Ramos Garces, S.; Van Oosterwyck, N.; De Boi, I.; Cuyt, A.; Derammelaere, S. Mechanism design optimization through CAD-based Bayesian optimization and quantified constraints. Discov. Mech. Eng. 2024, 3, 21.

- Surjanovic, S.; Bingham, D. Virtual Library of Simulation Experiments: Test Functions and Datasets. 2013. Available online: https://www.sfu.ca/~ssurjano/optimization.html (accessed on 24 January 2025).

- Souza, A.; Nardi, L.; Oliveira, L.B.; Olukotun, K.; Lindauer, M.; Hutter, F. Bayesian optimization with a prior for the optimum. In Proceedings of the Machine Learning and Knowledge Discovery in Databases. Research Track: European Conference, ECML PKDD 2021, Bilbao, Spain, 13–17 September 2021; Proceedings, Part III 21. Springer: Berlin/Heidelberg, Germany, 2021; pp. 265–296.

- Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for hyper-parameter optimization. Adv. Neural Inf. Process. Syst. 2011, 24, 2546–2554.

- de Cursi, E.S.; Fabro, A. On the Collaboration Between Bayesian and Hilbertian Approaches. In Proceedings of the International Symposium on Uncertainty Quantification and Stochastic Modeling, Fortaleza, Brazil, 30 July–4 August 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 178–189.

- Bassi, M.; Souza de Cursi, J.E.; Ellaia, R. Generalized Fourier Series for Representing Random Variables and Application for Quantifying Uncertainties in Optimization. In Proceedings of the 3rd International Symposium on Uncertainty Quantification and Stochastic Modeling, Maresias, Brazil, 15–19 February 2016.

- De Cursi, E.S.; Sampaio, R. Uncertainty Quantification and Stochastic Modeling with Matlab; Elsevier: Amsterdam, The Netherlands, 2015.

- Eldred, M.; Burkardt, J. Comparison of non-intrusive polynomial chaos and stochastic collocation methods for uncertainty quantification. In Proceedings of the 47th AIAA Aerospace Sciences Meeting Including the New Horizons Forum and Aerospace Exposition, Orlando, FL, USA, 5–8 January 2009; p. 976.

- AV, A.K.; Rana, S.; Shilton, A.; Venkatesh, S. Human-AI collaborative Bayesian optimisation. Adv. Neural Inf. Process. Syst. 2022, 35, 16233–16245.

- Vakili, S.; Bouziani, N.; Jalali, S.; Bernacchia, A.; Shiu, D.s. Optimal order simple regret for Gaussian process bandits. Adv. Neural Inf. Process. Syst. 2021, 34, 21202–21215.

- Nguyen, V.P.; Anitescu, C.; Bordas, S.P.; Rabczuk, T. Isogeometric analysis: An overview and computer implementation aspects. Math. Comput. Simul. 2015, 117, 89–116.

| Method | Min | Max | Mean | Std | Success Rate (f(x) < Target) |

|---|---|---|---|---|---|

| SBO (baseline) | −0.8690 | −0.8492 | −0.8648 | | 10/10 |

| HSBO with normal prior | −0.8690 | −0.6622 | −0.8407 | | 9/10 |

| HSBO with log-normal prior | −0.8690 | −0.6619 | −0.8271 | | 8/10 |

| HSBO with exponential prior | −0.8690 | −0.5185 | −0.8099 | | 8/10 |

| HSBO with Rayleigh prior | −0.8690 | −0.5185 | −0.7922 | | 7/10 |

| HSBO with Pearson prior | −0.8690 | −0.8492 | −0.8648 | | 10/10 |

| Method | Min | Max | Mean | Std | Success Rate (f(x) < Target) |

|---|---|---|---|---|---|

| SBO (baseline) | −2.0626 | −1.8894 | −2.0447 | | 9/10 |

| HSBO with normal prior | −2.0626 | −2.0620 | −2.0625 | | 10/10 |

| HSBO with log-normal prior | −2.0626 | −2.0426 | −2.0564 | | 10/10 |

| HSBO with exponential prior | −2.0456 | −1.8891 | −1.9740 | | 7/10 |

| HSBO with Rayleigh prior | −2.0456 | −1.8327 | −1.9601 | | 6/10 |

| HSBO with Pearson prior | −2.0626 | −1.8833 | −2.0270 | | 8/10 |

| Method | Min | Max | Mean | Std | Success Rate (f(x) < Target) |

|---|---|---|---|---|---|

| SBO (baseline) | 0.3980 | 0.4303 | 0.4114 | | 6/10 |

| HSBO with normal prior | 0.3980 | 0.5495 | 0.4322 | | 3/10 |

| HSBO with log-normal prior | 0.3980 | 0.5495 | 0.4322 | | 3/10 |

| HSBO with exponential prior | 0.3980 | 0.4449 | 0.4171 | | 4/10 |

| HSBO with Rayleigh prior | 0.3980 | 0.5495 | 0.4322 | | 3/10 |

| HSBO with Pearson prior | 0.3980 | 0.4801 | 0.4252 | | 3/10 |

| Method | Min | Max | Mean | Std | Success Rate (f(x) < Target) |

|---|---|---|---|---|---|

| SBO (baseline) | −3.8609 | −3.8428 | −3.8539 | | 10/10 |

| HSBO with normal prior | −3.8609 | −3.8428 | −3.8539 | | 10/10 |

| HSBO with log-normal prior | −3.8609 | −3.8428 | −3.8539 | | 10/10 |

| HSBO with exponential prior | −3.8609 | −3.8428 | −3.8539 | | 10/10 |

| HSBO with Rayleigh prior | −3.8609 | −3.8428 | −3.8539 | | 10/10 |

| HSBO with Pearson prior | −3.8609 | −3.8428 | −3.8539 | | 10/10 |

| Method | Min | Max | Mean | Std | Success Rate (f(x) < Target) |

|---|---|---|---|---|---|

| SBO (baseline) | | | | | 10/10 |

| HSBO with normal prior | | | | | 10/10 |

| HSBO with log-normal prior | | | | | 5/10 |

| HSBO with exponential prior | | | | | - |

| HSBO with Rayleigh prior | | | | | - |

| HSBO with Pearson prior | | | | | 10/10 |

| Method | Min | Max | Mean | Std | Success Rate (f(x) < Target) |

|---|---|---|---|---|---|

| SBO (baseline) | −3.0166 | −2.9428 | −2.9742 | | - |

| HSBO with normal prior | −3.0166 | −2.9428 | −2.9740 | | - |

| HSBO with log-normal prior | −3.0166 | −2.9583 | −2.9864 | | - |

| HSBO with exponential prior | −3.0166 | −2.9428 | −2.9742 | | - |

| HSBO with Rayleigh prior | −3.0166 | −2.9428 | −2.9742 | | - |

| HSBO with Pearson prior | −2.9871 | −2.9184 | −2.9632 | | - |

| Patterns | Key Points |

|---|---|

| Normal and log-normal priors | |

| Pearson prior | |

| Exponential and Rayleigh priors | |

| Dimensionality effect | |

| Problem structure dependency | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Santos Almonte, C.; Sanchez Jimenez, O.; Souza de Cursi, E.; Pagnacco, E. Data-Driven Prior Construction in Hilbert Spaces for Bayesian Optimization. Algorithms 2025, 18, 557. https://doi.org/10.3390/a18090557

Santos Almonte C, Sanchez Jimenez O, Souza de Cursi E, Pagnacco E. Data-Driven Prior Construction in Hilbert Spaces for Bayesian Optimization. Algorithms. 2025; 18(9):557. https://doi.org/10.3390/a18090557

Chicago/Turabian Style: Santos Almonte, Carol, Oscar Sanchez Jimenez, Eduardo Souza de Cursi, and Emmanuel Pagnacco. 2025. "Data-Driven Prior Construction in Hilbert Spaces for Bayesian Optimization" Algorithms 18, no. 9: 557. https://doi.org/10.3390/a18090557

APA Style: Santos Almonte, C., Sanchez Jimenez, O., Souza de Cursi, E., & Pagnacco, E. (2025). Data-Driven Prior Construction in Hilbert Spaces for Bayesian Optimization. Algorithms, 18(9), 557. https://doi.org/10.3390/a18090557