A Review of Explainable Artificial Intelligence from the Perspectives of Challenges and Opportunities

Abstract

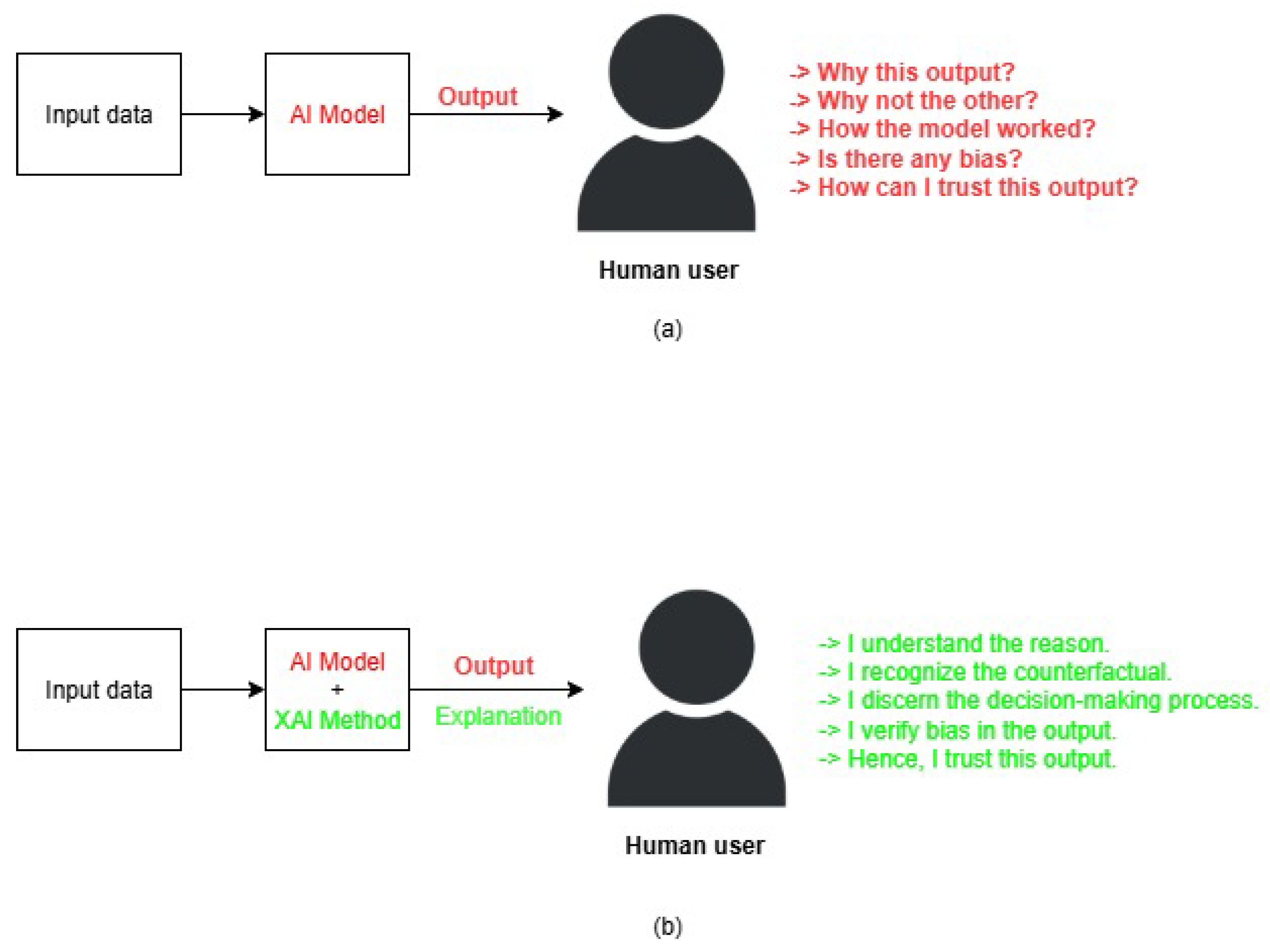

1. Introduction

2. Background and Definition of XAI

2.1. Background

2.2. Definition

2.3. Terminology

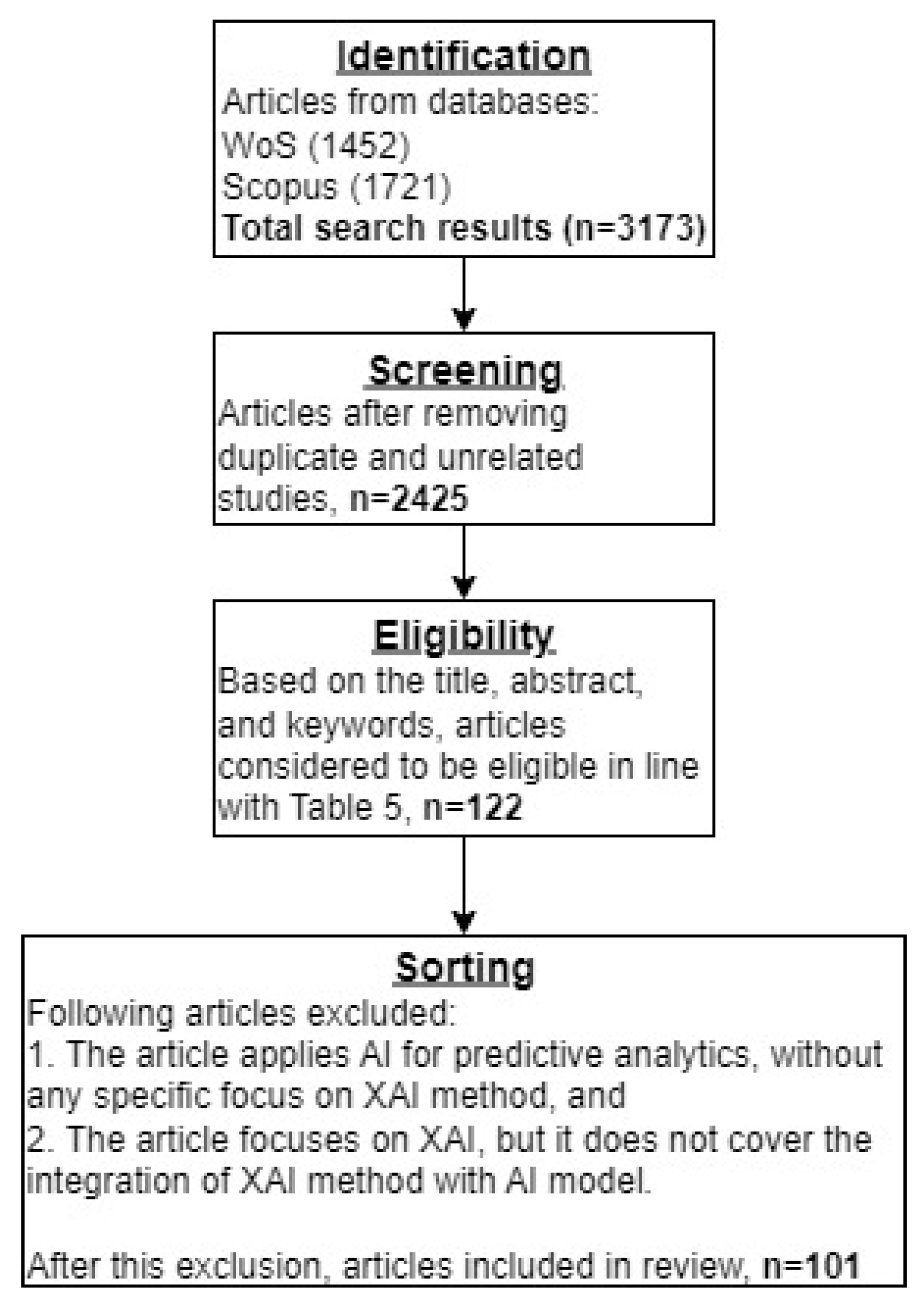

3. Research Methodology

3.1. Planning the Review

3.2. Conducting the Review

3.3. Reporting

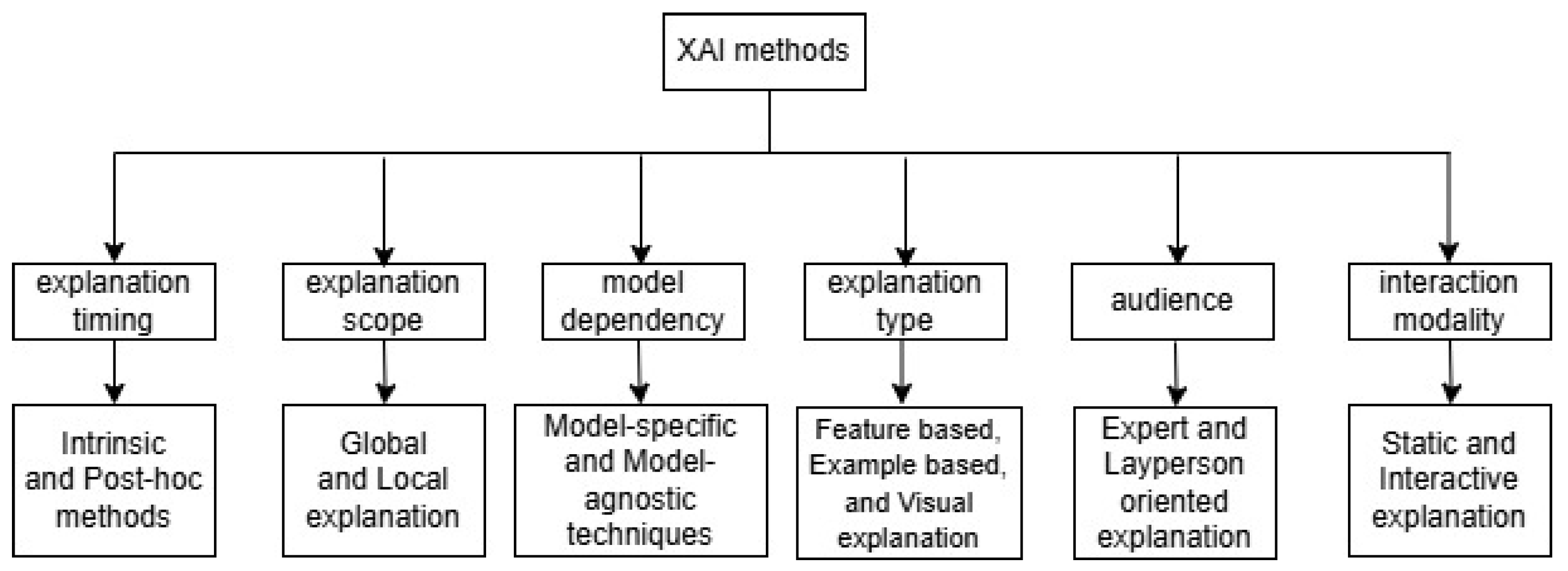

4. Taxonomy of XAI

- Intrinsic (ante hoc) methods are inherently interpretable models, such as decision trees, rule-based models, and linear regression. These models offer transparency by exposing the internal logic directly to the user [54]. For example, the Belief Rule-Based Expert System (BRBES), a rule-based model with intrinsic explainability, was employed by [25] to predict and explain the energy consumption of buildings.

- Post hoc methods, by contrast, apply interpretability techniques after a model has produced its prediction. These techniques extract explanations from complex “black-box” models, such as DNNs and ensemble models. Examples of post hoc methods include LIME [4], SHAP [15], Partial Dependence Plots (PDPs) [55], Individual Conditional Expectation (ICE) plots, counterfactual explanations [56], and anchors [57]. PDPs and ICE plots are useful for understanding the relationship between a feature and the predicted outcome, particularly for models that have complex feature interactions. PDPs show how the predicted output changes, on average, as a single feature varies while the other features keep their observed values. ICE plots show the effect of a feature on individual instances [58]. Anchors are if-then rules that “anchor” a prediction: if the conditions in a rule hold, the AI model will, with high probability, make the same prediction even when other features change. These post hoc tools provide valuable insights into an opaque model’s decision-making process. For instance, a Gradient Boosting Machine (GBM) was used to predict the sepsis risk of Intensive Care Unit (ICU) patients [59]. The post hoc tool SHAP explained this prediction by showing that features such as serum lactate level, respiratory rate, and Sequential Organ Failure Assessment (SOFA) score were significant contributors to the model’s prediction [59]. Such explanations provide clinicians with actionable insights into individual patients’ risks.
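
The relationship between ICE curves and a PDP can be made concrete with a small sketch. The model, feature values, and grid below are hypothetical and chosen only for illustration; they are not taken from [58] or [59]. Each ICE curve varies one feature for a single instance, and the PDP is the pointwise average of those curves.

```python
from statistics import mean

def black_box(row):
    """Hypothetical opaque model: a score with a feature interaction."""
    x0, x1 = row
    return 0.5 * x0 + 0.1 * x1 + 0.2 * x0 * (x1 > 20)

def ice_curves(model, data, feature, grid):
    """One curve per instance: vary `feature` over `grid`, keep the rest fixed."""
    curves = []
    for row in data:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature] = value
            curve.append(model(modified))
        curves.append(curve)
    return curves

def partial_dependence(model, data, feature, grid):
    """PDP: average the ICE curves at each grid value."""
    curves = ice_curves(model, data, feature, grid)
    return [mean(column) for column in zip(*curves)]

# Toy data set (assumed values) and a grid for feature 0.
data = [(1.0, 16), (2.0, 24), (4.0, 30), (1.5, 18)]
grid = [1.0, 2.0, 3.0]

ice = ice_curves(black_box, data, 0, grid)
pdp = partial_dependence(black_box, data, 0, grid)
# pdp is approximately [2.8, 3.4, 4.0]; the ICE curves have slope 0.5 or 0.7
# depending on x1, which is the interaction that the averaged PDP hides.
```

In this toy setting the ICE curves separate into two groups with different slopes, whereas the PDP reports only their average, which illustrates why ICE plots are recommended when feature interactions are suspected.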

- Global explanation describes the overall behavior of a model across the entire input space, allowing insights into feature importance and model structure [53]. For example, SHAP can be used to produce global explanations by aggregating local (per-instance) Shapley values across many predictions, typically using the mean (or mean absolute) Shapley value to estimate each feature’s overall importance [61]. This global use of SHAP was applied to a random forest model trained with electronic health record data [62]. After predicting unscheduled hospital readmission with the trained random forest model, the authors used SHAP values to produce a global explanation by ranking features according to their overall influence across the entire cohort. The global SHAP summary identified days of stay and age as the two most influential features. Such a global explanation reveals risk factors for the whole system, rather than for individual patients [62].

- Local explanation focuses on the reasoning behind an individual prediction. For example, LIME explains individual predictions of a complex model by fitting a local surrogate model (e.g., linear regression or decision tree) that mimics the behavior of the complex model in the neighborhood of the input instance [4,15]. Anchors are applied locally around an instance to capture the conditions of an if-then rule [57]. To provide local explanations for individual test cases in breast mass classification, LIME was applied to a DNN by [63]. For a particular patient, LIME highlighted high values of texture, smoothness, and concave points as determining factors for a malignant prediction [63]. Such case-level local explanations helped clinicians inspect the specific reasoning behind a single prediction and increased trust in the model-assisted diagnosis.
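
The step from local to global explanation can be sketched as follows. This is a minimal illustration, not the procedure of [61] or [62]: the three-feature model is hypothetical, Shapley values are computed exactly by enumerating coalitions (feasible only for a handful of features), and absent features are replaced by a fixed baseline, which is one of several possible conventions.

```python
from itertools import combinations
from math import factorial

def model(x):
    """Hypothetical black-box model over three features."""
    return 2.0 * x[0] + x[1] * x[2]

def shapley_values(f, x, baseline):
    """Exact local Shapley values of f at x (absent features take baseline values)."""
    n = len(x)

    def value(subset):
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight of a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

def global_importance(f, rows, baseline):
    """Global view: mean absolute local Shapley value per feature."""
    local = [shapley_values(f, row, baseline) for row in rows]
    return [sum(abs(p[j]) for p in local) / len(local) for j in range(len(baseline))]

baseline = [0.0, 0.0, 0.0]                       # assumed reference input
rows = [[1.0, 2.0, 3.0], [2.0, 1.0, 1.0], [0.0, 4.0, 0.5]]

local = shapley_values(model, rows[0], baseline)   # approximately [2, 3, 3]
importance = global_importance(model, rows, baseline)  # approximately [2.0, 1.5, 1.5]
```

The local values for the first row sum to the difference between the model output at that row and at the baseline, and the interaction term is split equally between the two interacting features. Averaging the absolute local values then yields a feature ranking for the whole data set.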

- Model-specific approaches are tailored to specific types of models. For instance, attention maps are suitable for deep learning in computer vision and Natural Language Processing (NLP) tasks [65], whereas feature importance scores are applicable to tree-based models. TreeSHAP, a model-specific XAI technique for tree-ensemble models, leverages the internal structure of decision-tree ensembles to compute exact Shapley feature attributions efficiently. In a real-world study, TreeSHAP was employed by [66] to explain a random forest model trained on a clinical metabolomics dataset. TreeSHAP produced both local and global feature importance explanations and identified testosterone metabolites as a key discriminator in a urine dataset [66].

- Model-agnostic approaches are applied universally to any AI model to extract explanations. Examples of these approaches include LIME, SHAP, and partial dependence plots [67]. KernelSHAP is a model-agnostic estimator of Shapley values [68]. It treats a trained predictor as a black-box model and approximates each feature’s contribution by querying the model on perturbed inputs. In a clinical application to intelligent fetal monitoring, ref. [68] applied KernelSHAP post hoc to a stacked-ensemble classifier to locally explain each instance of cardiotocography prediction. To facilitate clinical interpretation, the authors also aggregated local importance scores to produce global explanations.
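
The idea of treating a predictor as a black box and querying it on perturbed inputs can be sketched with a LIME-style local linear surrogate. The sketch below is a simplified illustration under stated assumptions, not the LIME or KernelSHAP implementation: the model, the Gaussian perturbation scale, the exponential kernel, and all parameter names are hypothetical, and the weighted least-squares problem is solved with a small hand-written solver.

```python
import math
import random

def black_box(x):
    """Hypothetical opaque model with a nonlinear response."""
    return x[0] ** 2 + 3.0 * x[1]

def solve(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (m[r][n] - tail) / m[r][r]
    return w

def local_surrogate(f, x, n_samples=500, sigma=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model to f around the instance x."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the weighted normal equations (X^T W X) w = X^T W y.
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, sigma) for xi in x]        # perturbed input
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)       # closer = heavier
        feats = [1.0] + [zi - xi for zi, xi in zip(z, x)]   # centred on x
        y = f(z)                                            # query the black box
        for i in range(d + 1):
            xty[i] += weight * feats[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * feats[i] * feats[j]
    w = solve(xtx, xty)
    return w[0], w[1:]   # intercept, per-feature local coefficients

intercept, coefs = local_surrogate(black_box, [1.0, 2.0])
# coefs should be close to the local slopes of the model at (1, 2), i.e. near [2, 3].
```

Because the surrogate only calls `f`, the same procedure applies to any predictor, which is the defining property of model-agnostic methods. The coefficients are valid only near the chosen instance and depend on the perturbation scale and kernel width.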

- Feature-based Explanation: This provides explanations by highlighting the contribution of each input feature to the prediction [70]. In a real-world study, ref. [71] applied a local feature-based explainer (LIME in Study 1, and SHAP in Study 2) to a real-estate price prediction task. The authors showed that the feature-level explanations systematically changed users’ situational information processing and mental models.

- Example-based Explanation: This uses training instances to explain a prediction by highlighting its decision boundaries. Moreover, counterfactuals are generated to inform the user of preconditions to obtain an alternative outcome [56]. Thus, it offers intuitive “what-if” information at the instance level. In a real-world study, ref. [72] introduced GANterfactual, an adversarial image-to-image translation method, which generates realistic counterfactual chest X-rays to flip a pneumonia classifier’s decision. The authors showed that these counterfactuals improved non-expert users’ mental models, satisfaction, and trust compared with saliency map explanation.

- Visual Explanation: This technique (saliency/pixel-attribution maps) produces heatmaps over images to highlight the regions the model used to form a prediction. For example, Gradient-weighted Class Activation Mapping (Grad-CAM), a saliency map technique, produces a heatmap to show which regions of the image contributed the most to the predicted class [73]. If a Convolutional Neural Network (CNN) predicts “dog” for an image, Grad-CAM may highlight the dog’s face and tail, indicating that these regions were the most influential to the classification. In a real-world study, ref. [74] systematically evaluated seven saliency methods (Grad-CAM, Grad-CAM++, Integrated Gradients, Eigen-CAM, Deep Learning Important FeaTures (DeepLIFT), Layer-wise Relevance Propagation (LRP), and occlusion) on chest X-rays. The study found that Grad-CAM localized pathologies better than the other saliency methods. However, all methods still performed substantially worse than a human radiologist benchmark. This demonstrates that saliency heatmaps are useful but not fully reliable as a standalone clinical explanation [74].
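
Of the saliency methods listed above, occlusion is the simplest to sketch without a deep learning framework. The example below is a toy illustration, not Grad-CAM and not the evaluation protocol of [74]: the 4x4 "image" and the scoring function are hypothetical. Each patch is masked in turn, and the drop in the class score is recorded as that patch's relevance.

```python
def classifier_score(image):
    """Hypothetical model: class score driven by the central 2x2 region."""
    return sum(image[r][c] for r in (1, 2) for c in (1, 2)) / 4.0

def occlusion_saliency(score_fn, image, patch=1, fill=0.0):
    """Heatmap of score drops obtained by masking one patch at a time."""
    base = score_fn(image)
    height, width = len(image), len(image[0])
    heatmap = [[0.0] * width for _ in range(height)]
    for r in range(0, height, patch):
        for c in range(0, width, patch):
            rows = range(r, min(r + patch, height))
            cols = range(c, min(c + patch, width))
            occluded = [row[:] for row in image]      # copy, then mask the patch
            for rr in rows:
                for cc in cols:
                    occluded[rr][cc] = fill
            drop = base - score_fn(occluded)
            for rr in rows:
                for cc in cols:
                    heatmap[rr][cc] = drop
    return heatmap

# Toy image (assumed values): bright centre, dim border.
image = [[0.1, 0.1, 0.1, 0.1],
         [0.1, 0.8, 0.8, 0.1],
         [0.1, 0.8, 0.8, 0.1],
         [0.1, 0.1, 0.1, 0.1]]

heatmap = occlusion_saliency(classifier_score, image)
# Central pixels receive a positive drop (about 0.2 each); border pixels receive 0.
```

The resulting heatmap is high exactly where the toy model looks, which is the behavior a saliency map is meant to expose. With a real CNN, `score_fn` would be replaced by a forward pass returning the score of the predicted class.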

- Expert-oriented explanation provides detailed technical insights tailored to the developers, data scientists, or domain experts [25,26]. For example, ref. [76] developed a modified Progressive Concept Bottleneck Model, which returns both anatomical segmentations and clinically meaningful property concepts (symmetry, caliper-placement feasibility, image quality) as real-time feedback for fetal growth scans. The system was validated across hospitals, where 75% of clinicians rated the explanation as useful. Moreover, the model achieved 96.3% classification accuracy on standard-plane assessment.

- Layperson-oriented explanation is provided in non-technical language using analogies, narratives, or visuals to enhance public understanding and trust [8]. Recent advancements in XAI have increasingly focused on designing explanations that align with human cognitive processes. To present explanations to a layperson in an intelligible manner, principles for explanatory debugging were proposed by [28]. In a real-world study, ref. [71] tested plain, feature-level explanations with lay participants on a real-estate price prediction task. The study demonstrated that such explanations change lay users’ decision patterns and mental models, highlighting both the usefulness and the risks of exposing non-experts to model rationales. Interactive natural-language explanatory interfaces, such as TalkToModel [77], also increase non-expert users’ performance and comprehension in applied settings.

- Static explanation provides fixed reports or visualizations without user input [79]. For example, PDPs are static, one-shot visual explanations to show the average effect of a feature on model predictions. PDPs are produced once and inspected as non-interactive figures. In a real-world study, ref. [80] applied one-way and multi-way PDPs to a gradient-boosted model for satellite-based prediction. PDPs visualized how meteorological and spatiotemporal predictors influenced predicted pollution levels across regions and seasons. Thus, the authors communicated the model behavior to domain scientists using the static PDP figures.

- Interactive explanation allows a user to interact with the model. Such explanation enables a user to investigate model behavior dynamically by changing inputs and exploring “what-if” scenarios, resulting in deeper comprehension of the model’s decision-making process [81]. For example, an AI-powered recommender system can allow users to adjust preferences and immediately assess how these changes influence the recommendations [30]. This interactivity increases user trust and improves the overall understanding of AI behavior. A prominent example of interactive explanation in practice is the InteraCtive expLainable plAtform for gRaph neUral networkS (CLARUS) [82]. This is an explainability platform for graph neural networks in clinical decision support systems. CLARUS visualizes patient-specific biological networks and model relevance scores. It also allows domain experts to manually edit graphs (nodes/edges) to ask “what-if” counterfactuals, immediately re-predict outcomes, and retrain models to observe the consequences of those edits. This interactive loop moves beyond static saliency or post hoc attributions by allowing users to probe causal hypotheses and refine model behavior. This interactive pattern is increasingly emphasized in the human-centered XAI literature [83].
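
The “what-if” loop described above can be sketched in a few lines. The scoring rule, feature names, and candidate values below are hypothetical and serve only to illustrate the interaction pattern; they do not reproduce CLARUS [82] or any cited system. A user edits one input, the model re-predicts immediately, and a simple search reports the smallest single-feature edit that flips the outcome.

```python
def predict(instance):
    """Hypothetical scoring model: returns 1 (accept) or 0 (reject)."""
    score = 4 * instance["income"] - 50 * instance["open_debts"]
    return 1 if score >= 100 else 0

def what_if(model, instance, **changes):
    """Apply user edits to a copy of the instance and re-predict."""
    edited = {**instance, **changes}
    return edited, model(edited)

def nearest_counterfactual(model, instance, feature, candidates):
    """Smallest change to one feature (from `candidates`) that flips the outcome."""
    original = model(instance)
    flips = [v for v in candidates
             if what_if(model, instance, **{feature: v})[1] != original]
    if not flips:
        return None
    return min(flips, key=lambda v: abs(v - instance[feature]))

applicant = {"income": 30, "open_debts": 2}       # assumed toy instance
current = predict(applicant)                      # 0: score 20 is below 100

_, after_edit = what_if(predict, applicant, income=60)   # user tries a higher income
income_needed = nearest_counterfactual(predict, applicant, "income", range(30, 81, 5))
debts_needed = nearest_counterfactual(predict, applicant, "open_debts", [0, 1, 2])
```

In an interactive interface, `what_if` would be bound to sliders or form fields, and the counterfactual search would answer the question of what minimal change leads to a different outcome.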

5. Application Domains of XAI

5.1. Healthcare

- Key AI models and XAI methods

- Domain features

- Highly sensitive and heterogeneous data, such as imaging, Electronic Health Record (EHR) time-series data, and genomics [92].

- Insufficient quantity of labeled data [93].

- Erroneous predictions and explanations have severe consequences. Hence, the healthcare domain needs AI models that are both highly accurate and transparent [94].

- Problem types

- Advantages of using XAI

- Disadvantages of using XAI

- Explanations offered by various post hoc XAI techniques may be misleading due to local approximation of a decision boundary instead of capturing the full model logic or global behavior [97].

5.2. Finance

- Key AI models and XAI methods

- Domain features

- High compliance requirements: Financial AI models must be fully auditable and traceable to meet standards such as GDPR, Basel-III, and the Fair Credit Reporting Act [101].

- Fairness and Non-Discrimination: Bias in financial data, such as in relation to gender, race, and location, poses legal and ethical risks [103].

- Real-time requirements: Applications such as fraud detection and high-frequency trading need sub-second predictions and lightweight explanation methods (e.g., SHAP, surrogate decision trees) to keep pace with streaming data [103].

- Problem types

- Advantages of using XAI

- Disadvantages of using XAI

- When applied to the large, high-frequency datasets of trading and fraud-detection pipelines, traditional post hoc XAI techniques, such as LIME and SHAP, may produce overly generalized or even misleading explanations [109].

- Explanations may leak the sensitive proprietary logic of a financial AI model, which adversaries can then exploit to fool the model [109].

5.3. Criminal Justice

- Key AI models and XAI methods

- Recidivism prediction: Gradient-boosted decision trees (XGBoost) are combined with SHAP dependence plots to reveal how factors such as age at first offense, incarceration length, and in-prison behavior drive re-offense risk scores [112].

- Spatial crime forecasting: Random forest predicts burglary or street-crime risk patterns, which are explained by SHAP values [113].

- Domain features

- Fairness-critical decisions: Decisions such as bail release, sentencing, and parole directly affect individual liberties. Hence, judicial AI models must be both accurate and transparent [114].

- Severe class imbalance: Criminal events such as violent re-offense and homicide cases are rare. Hence, skewed class distributions have to be addressed properly by judicial AI models [115].

- Multi-modal inputs: Criminal data can be in the format of tabular records (demographics, prior convictions), text (legal documents), biometrics (face, fingerprint), and imagery. Hence, judicial AI models have to deal with heterogeneous multi-modal input data [116].
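
One common way to address the severe class imbalance noted above is to reweight classes inversely to their frequency during training. The sketch below is an illustrative assumption rather than the approach of [115]: it uses the "balanced" heuristic, weight = n_samples / (n_classes × class_count), on a hypothetical label vector in which 5% of cases are positive.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

# Hypothetical labels: 95 negative cases, 5 positive cases (rare event).
labels = [0] * 95 + [1] * 5
weights = balanced_class_weights(labels)
# The rare class gets weight 10.0; the majority class gets about 0.526.

# Each class then contributes equally to a weighted loss or metric.
weighted_total = {cls: weights[cls] * labels.count(cls) for cls in weights}
```

Reweighting changes how errors on rare events are penalized, so explanations and fairness audits should be computed on the model trained with the same weights.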

- Problem types

- Advantages of using XAI

- Post hoc XAI techniques such as SHAP and LIME reveal which features drive risk scores or case clearance predictions, enabling practitioners to detect and correct biases embedded in historical data or AI models [120].

- Providing judges, attorneys, and defendants with comprehensible explanations for AI-driven decisions fosters public confidence in the criminal-justice process [120].

- Disadvantages of using XAI

- Through explanations provided by XAI techniques, exploitable triggers of a judicial AI model may be revealed. Such triggers may be manipulated by malicious actors to reverse engineer the AI model and evade detection [121].

- Many post hoc XAI techniques produce simplified explanations, such as single-feature attribution, which may omit critical model complexities. Such simplification may cause judges to interpret the AI model incorrectly [122].

5.4. Autonomous Systems

- Key AI models and XAI methods

- Image segmentation: CNN performs pixel-wise semantic segmentation of road scenes. Critical image regions such as pedestrians and lane markings can be highlighted by saliency maps. Such highlighted regions facilitate design-time debugging and runtime safety monitoring of self-driving cars [126].

- Anomaly detection: Random forest is applied on multi-sensor streams such as cameras, Inertial Measurement Units (IMUs), and the Global Positioning System (GPS) to detect anomalies or faults in a drone. Post hoc XAI techniques such as SHAP and LIME can be applied to fuse and rank the most salient sensor-level features [127].

- Domain features

- Real-time closed-loop operation: Autonomous agents deal with continuous streams of heterogeneous sensor data. AI models have to act on these sensor data within milliseconds. This “sense–think–act” loop requires an AI model to have ultra-low latency for safety-critical actions [128]. Moreover, XAI techniques such as saliency maps and confidence scores have to be generated without breaking real-time constraints [128].

- Safety and reliability: In self-driving cars or industrial robots, failures can lead to injury or loss of life. Hence, such systems must conform to functional safety standards, such as ISO 26262, and provide stable explanations. Such explanations enable engineers and regulators to inspect an autonomous system before and after an incident [129]. Otherwise, without robust explanations of XAI methods, loopholes in autonomous systems will remain undetected.

- Dynamic environments: Autonomous systems operate in non-stationary settings, such as changing weather and novel obstacles. Hence, to account for this distribution shift, XAI methods have to convey uncertainty and adaptation over time [130].

- Problem types

- Classification: Objects or events in the environment are classified by AI models [131]. For example, an AI model can classify obstacles as pedestrians, cyclists, or vehicles. Similarly, an element of a scene can be classified as a road sign or a lane marking.

- Regression: AI models can regress continuous quantities for an autonomous system, such as future positions, velocities, and risk scores [132].

- Advantages of using XAI

- Natural language explanations for driving commands produced by transformer-based architectures enhance the trustworthiness of AI models [129].

- Post hoc XAI tools such as attention-map visualizations, surrogate models, and feature-importance scores highlight which inputs triggered a particular decision. This fine-grained insight enables developers to identify the architectural weakness of an autonomous system [129].

- Disadvantages of using XAI

- Many post hoc XAI methods, such as LIME, SHAP, and saliency maps, entail high computational costs to process high-dimensional sensor inputs. Such extensive computation can cause delays in perception and planning pipelines of a real-time autonomous system [128].

- XAI explanations may become unstable or misleading when the sensor quality degrades due to various reasons, such as low light, fog, rain, and motion blur. Without properly quantifying such uncertainties, XAI techniques may provide incorrect explanations in critical situations [133].

5.5. Customer Service and Human Resources

- Key AI models and XAI methods

- Chatbots and virtual assistants: In customer service, various Large Language Models (LLMs), such as Generative Pre-trained Transformer (GPT) and Bidirectional Encoder Representations from Transformers (BERT), are used to develop chatbots and virtual assistants [136]. As an XAI method, attention visualization is used to explain chatbot responses [136].

- Domain features

- Real-time high-volume engagement: To serve customers efficiently, chatbots must handle large streams of customer requests (e.g., chat, voice, and email). Hence, XAI methods have to provide on-the-fly explanations to sustain user engagement [138].

- Multi-function coverage: In human resources, AI models are employed for multiple functions, such as talent acquisition, performance appraisal, and workforce planning. Hence, based on the use case, the algorithmic requirements of an AI model can be of various types, such as descriptive, predictive, and prescriptive [139].

- Problem types

- Regression: A customer’s lifetime value can be predicted using a meta-learning-based stacked regression approach [142].

- Clustering: By using AI models, human resources can cluster employees into groups based on multi-modal features, such as skills, engagement, and performance. Such clustering enables management to identify cohorts for tailored training programs [143].

- Advantages of using XAI

- Disadvantages of using XAI

- Generating post hoc explanations for high-volume, real-time interactions can introduce computational bottlenecks, slowing response time and degrading customer experience [128].

5.6. Large Language Models

- Key AI models and XAI methods

- BERT is used as an LLM. As an XAI method, attention visualization highlights which input tokens BERT attends to while making a prediction [147]. For example, the attention visualization technique highlights keywords driving sentiment classification.

- Another LLM is GPT-3. To explain the outcome of GPT-3, the XAI technique Chain-of-Thought (CoT) is used [153]. CoT makes GPT-3’s logic transparent by embedding intermediate reasoning steps directly with prompts.
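
The quantity that attention visualization displays can be sketched with a single scaled dot-product attention step. The token list and the two-dimensional query and key vectors below are made up for illustration and are not extracted from BERT, which uses many layers and heads with learned, high-dimensional projections. The weights are the softmax of the scaled query-key scores, and a visualization simply colors each token by its weight.

```python
import math

def attention_weights(query, keys):
    """Softmax over scaled dot-product scores between one query and all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    top = max(scores)                               # subtract max for stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical tokens and key vectors for a sentiment example.
tokens = ["the", "film", "was", "wonderful"]
keys = [[0.1, 0.0], [0.5, 0.2], [0.0, 0.1], [2.0, 1.0]]
query = [1.0, 1.0]                                  # assumed query vector

weights = attention_weights(query, keys)
ranked = sorted(zip(tokens, weights), key=lambda pair: pair[1], reverse=True)
top_token = ranked[0][0]                            # "wonderful" in this toy setup
```

In this toy setup the sentiment-bearing token receives the largest weight, which mirrors the keyword-highlighting example given above for sentiment classification.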

- Domain features

- Model scale and architecture: LLMs are built on a transformer architecture with a very large number of parameters, combining self-attention, positional embeddings, and multi-head attention layers [154]. This architecture enables LLMs to model long-range context more effectively than RNNs or Long Short-Term Memory (LSTM) networks [154].

- Adaptation and human feedback: LLMs can be instruction-tuned to follow diverse task descriptions. Moreover, LLMs can be further enhanced through Reinforcement Learning from Human Feedback (RLHF) to refine responses and align with human preferences [147].

- Problem types

- Classification: LLMs are fine-tuned for various classification tasks, such as token-level classification, topic classification, and sentence classification [155].

- Generation: Decoder-only LLMs are trained for next-token prediction and used for open-ended text generation, story generation, code generation, and other creative tasks [155].

- Structured output: LLMs support tasks requiring structured outputs, such as relation extraction outputs in JSON format, particularly in medical and legal information extraction settings [155].

- Advantages of using XAI

- By providing human-understandable explanations, XAI techniques enable stakeholders to audit and sanity-check outputs of LLMs [156].

- LLMs can be prompted to generate their own explanations by approximating traditional feature-attribution methods. Such explanations can be looped back to fine-tune the factuality of LLM output [157].

- Disadvantages of using XAI

- Post hoc XAI techniques may miss complex interactions of transformer layers, resulting in incomplete rationales. Hence, the end-user cannot understand the true decision process of LLMs [128].

- Applying XAI techniques to LLMs is computationally expensive and adds latency, which is a serious bottleneck for providing real-time explanations of LLM output [128].

6. Trade-Offs and Challenges of XAI

6.1. Accuracy Versus Interpretability Trade-Off

6.2. Evaluation Metrics

6.3. Scalability

6.4. Fairness and Privacy

6.5. Human-Centric Interpretability

7. Future Scope of XAI

7.1. Formalization of Definition and Terminology

7.2. Advancement in Explainability Techniques

7.3. Human-Centered AI and Cognitive Alignment

7.4. Interactive Explanation

7.5. Ethical XAI

7.6. Regulatory Compliance

7.7. Explainability for New AI Paradigms

7.8. Standardization of Evaluation Metrics

7.9. Economic and Sustainability Perspective

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Walmsley, J. Artificial Intelligence and the Value of Transparency. AI Soc. 2021, 36, 585–595. [Google Scholar] [CrossRef]

- Wiggerthale, J.; Reich, C. Explainable Machine Learning in Critical Decision Systems: Ensuring Safe Application and Correctness. AI 2024, 5, 2864–2896. [Google Scholar] [CrossRef]

- Emmert-Streib, F.; Yang, Z.; Feng, H.; Tripathi, S.; Dehmer, M. An Introductory Review of Deep Learning for Prediction Models with Big Data. Front. Artif. Intell. 2020, 3, 4. [Google Scholar] [CrossRef]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. Why Should I Trust You? Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144. [Google Scholar] [CrossRef]

- Gunning, D. Explainable Artificial Intelligence (XAI); DARPA: Arlington, VA, USA, 2017; Available online: https://www.darpa.mil/program/explainable-artificial-intelligence (accessed on 6 June 2025).

- Gunning, D.; Aha, D.W. DARPA’s Explainable Artificial Intelligence (XAI) Program. AI Mag. 2019, 40, 44–58. [Google Scholar]

- Mahto, M.K. Explainable Artificial Intelligence: Fundamentals, Approaches, Challenges, XAI Evaluation, and Validation. In Explainable Artificial Intelligence for Autonomous Vehicles; CRC Press: Boca Raton, FL, USA, 2025; pp. 25–49. [Google Scholar]

- Doshi-Velez, F.; Kim, B. Towards a rigorous science of interpretable machine learning. arXiv 2017, arXiv:1702.08608. [Google Scholar] [CrossRef]

- Gilpin, L.H.; Bau, D.; Zoran, D.; Pezzotti, N.; Bach, S.; Wexler, J. Explaining Explanations: An Overview of Interpretability of Machine Learning. In Proceedings of the 2018 ICML Workshop on Human Interpretability in Machine Learning (WHI 2018), Stockholm, Sweden, 15 July 2018. [Google Scholar] [CrossRef]

- Li, X.; Xiong, H.; Li, X.; Wu, X.; Zhang, X.; Liu, J.; Dou, D. Interpretable Deep Learning: Interpretation, Interpretability, Trustworthiness, and Beyond. Knowl. Inf. Syst. 2022, 64, 3197–3234. [Google Scholar] [CrossRef]

- Albahri, A.S.; Duhaim, A.M.; Fadhel, M.A.; Alnoor, A.; Baqer, N.S.; Alzubaidi, L.; Deveci, M. A Systematic Review of Trustworthy and Explainable Artificial Intelligence in Healthcare: Assessment of Quality, Bias Risk, and Data Fusion. Inf. Fusion 2023, 96, 156–191. [Google Scholar] [CrossRef]

- Caruana, R.; Gehrke, J.; Koch, C.; Sturm, M. Intelligible Models for Healthcare: Predicting Pneumonia Risk and Hospital 30-Day Readmission. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Sydney, NSW, Australia, 10–13 August 2015; Volume 21, pp. 1721–1730. [Google Scholar] [CrossRef]

- Zhang, Q.; Wu, Y.N.; Zhu, S.C. Interpretable Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 8827–8836. [Google Scholar] [CrossRef]

- Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the Game of Go without Human Knowledge. Nature 2017, 550, 354–359. [Google Scholar] [CrossRef] [PubMed]

- Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 4765–4774. Available online: https://arxiv.org/abs/1705.07874 (accessed on 28 August 2025).

- Khan, A.; Ali, A.; Khan, J.; Ullah, F.; Faheem, M. Exploring Consistent Feature Selection for Software Fault Prediction: An XAI-based model-agnostic Approach. IEEE Access 2025, 13, 75493–75524. [Google Scholar] [CrossRef]

- Assis, A.; Dantas, J.; Andrade, E. The Performance-Interpretability Trade-Off: A Comparative Study of Machine Learning Models. J. Reliab. Intell. Environ. 2025, 11, 1. [Google Scholar] [CrossRef]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Serradilla, O.; Zugasti, E.; Rodriguez, J.; Zurutuza, U. Deep Learning Models for Predictive Maintenance: A Survey, Comparison, Challenges and Prospects. Appl. Intell. 2022, 52, 10934–10964. [Google Scholar] [CrossRef]

- Lipton, Z.C. The Mythos of Model Interpretability. Commun. ACM 2016, 59, 36–43. [Google Scholar] [CrossRef]

- Lopes, P.; Silva, E.; Braga, C.; Oliveira, T.; Rosado, L. XAI Systems Evaluation: A Review of Human and Computer-Centred Methods. Appl. Sci. 2022, 12, 9423. [Google Scholar] [CrossRef]

- Kadir, M.A.; Mosavi, A.; Sonntag, D. Evaluation Metrics for XAI: A Review, Taxonomy, and Practical Applications. In Proceedings of the 2023 IEEE 27th International Conference on Intelligent Engineering Systems (INES), Tihany, Hungary, 19–21 July 2023; pp. 000111–000124. [Google Scholar]

- Miller, T. Explanation in Artificial Intelligence: Insights from the Social Sciences. Artif. Intell. 2019, 267, 1–38. [Google Scholar] [CrossRef]

- Abdul, A.; Vermeulen, J.; Wang, D.; Lim, B.Y.; Kankanhalli, M. Trends and Trajectories for Explainable, Accountable and Intelligible Systems: An HCI Research Agenda. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–18. [Google Scholar] [CrossRef]

- Kabir, S.; Hossain, M.S.; Andersson, K. An Advanced Explainable Belief Rule-Based Framework to Predict the Energy Consumption of Buildings. Energies 2024, 17, 1797. [Google Scholar] [CrossRef]

- Kabir, S.; Hossain, M.S.; Andersson, K. A Semi-Supervised-Learning-Aided Explainable Belief Rule-Based Approach to Predict the Energy Consumption of Buildings. Algorithms 2025, 18, 305. [Google Scholar] [CrossRef]

- Barocas, S.; Hardt, M.; Narayanan, A. Fairness and Machine Learning; 2019. Available online: https://fairmlbook.org/ (accessed on 9 June 2025).

- Kulesza, T.; Stumpf, S.; Burnett, M.; Wong, W.K.; Striegel, A. Principles of Explanatory Debugging to Assist Machine Learning. In Proceedings of the 2013 CHI Conference on Human Factors in Computing Systems (CHI ’13), Paris, France, 27 April–2 May 2013; pp. 1519–1528. [Google Scholar] [CrossRef]

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929. [Google Scholar] [CrossRef]

- Stumpf, S.; Rajaram, V.; Li, L.; Burnett, M.; Dietterich, T.G.; Sullivan, E.; Drummond, R. Interacting with Machine Learning Systems: The Case for Explanation and Beyond. In Proceedings of the 14th International Conference on Intelligent User Interfaces (IUI ’09), Sanibel Island, FL, USA, 8–11 February 2009; pp. 109–118. [Google Scholar]

- Calders, T.; Verwer, S. Three Naive Bayes Approaches for Discrimination-Free Classification. Data Min. Knowl. Discov. 2010, 21, 277–292. [Google Scholar] [CrossRef]

- Goodman, B.; Flaxman, S. European Union Regulations on Algorithmic Decision-Making and a “Right to Explanation”. Proc. Mach. Learn. Res. 2017, 81, 1–10. [Google Scholar] [CrossRef]

- Moral, A.; Castiello, C.; Magdalena, L.; Mencar, C. Explainable Fuzzy Systems; Springer International Publishing: New York, NY, USA, 2021. [Google Scholar] [CrossRef]

- Shortliffe, E. (Ed.) Computer-Based Medical Consultations: MYCIN; Elsevier: Amsterdam, The Netherlands, 2012; Volume 2. [Google Scholar]

- Shortliffe, E.H.; Buchanan, B.G. A Model of Inexact Reasoning in Medicine. Math. Biosci. 1975, 23, 351–379. [Google Scholar] [CrossRef]

- Wang, W.; Yang, Y.; Wu, F. Towards Data- and Knowledge-Driven AI: A Survey on Neuro-Symbolic Computing. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 878–899. [Google Scholar] [CrossRef]

- Liang, B.; Wang, Y.; Tong, C. AI Reasoning in Deep Learning Era: From Symbolic AI to Neural–Symbolic AI. Mathematics 2025, 13, 1707. [Google Scholar] [CrossRef]

- Fernandez-Quilez, A. Deep Learning in Radiology: Ethics of Data and on the Value of Algorithm Transparency, Interpretability and Explainability. AI Ethics 2023, 3, 257–265. [Google Scholar] [CrossRef]

- Barocas, S.; Friedler, S.; Hardt, M.; Kroll, J.; Venkatasubramanian, S.; Wallach, H. The FAT-ML Workshop Series on Fairness, Accountability, and Transparency in Machine Learning. Available online: http://www.fatml.org/ (accessed on 9 June 2025).

- Longo, L.; Brcic, M.; Cabitza, F.; Choi, J.; Confalonieri, R.; Del Ser, J.; Stumpf, S. Explainable Artificial Intelligence (XAI) 2.0: A Manifesto of Open Challenges and Interdisciplinary Research Directions. Inf. Fusion 2024, 106, 102301. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105. [Google Scholar] [CrossRef]

- Souza, V.F.; Cicalese, F.; Laber, E.; Molinaro, M. Decision Trees with Short Explainable Rules. Adv. Neural Inf. Process. Syst. 2022, 35, 12365–12379. [Google Scholar] [CrossRef]

- Agarwal, C.; Krishna, S.; Saxena, E.; Pawelczyk, M.; Johnson, N.; Puri, I.; Lakkaraju, H. OpenXAI: Towards a Transparent Evaluation of Model Explanations. Adv. Neural Inf. Process. Syst. 2022, 35, 15784–15799. [Google Scholar]

- Basti, G.; Vitiello, G. Deep Learning Opacity, and the Ethical Accountability of AI Systems: A New Perspective. In The Logic of Social Practices II; Springer Nature Switzerland: Cham, Switzerland, 2023; pp. 21–73. [Google Scholar] [CrossRef]

- Alvarez-Melis, D.; Jaakkola, T.S. On the Robustness of Interpretability Methods. arXiv 2018, arXiv:1806.08049. [Google Scholar] [CrossRef]

- Binns, R. Fairness in Machine Learning: Lessons from Political Philosophy. In Proceedings of the 2018 Conference on Fairness, Accountability and Transparency (FAT), New York, NY, USA, 23–24 February 2018; pp. 149–159. [Google Scholar]

- Retzlaff, C.O.; Angerschmid, A.; Saranti, A.; Schneeberger, D.; Roettger, R.; Mueller, H.; Holzinger, A. Post-Hoc vs Ante-Hoc Explanations: XAI Design Guidelines for Data Scientists. Cogn. Syst. Res. 2024, 86, 101243. [Google Scholar] [CrossRef]

- Rudin, C.; Chen, C.; Chen, Z.; Huang, H.; Semenova, L.; Zhong, C. Interpretable Machine Learning: Fundamental Principles and 10 Grand Challenges. Stat. Surv. 2022, 16, 1–85. [Google Scholar] [CrossRef]

- Necula, S.-C.; Dumitriu, F.; Greavu-Șerban, V. A Systematic Literature Review on Using Natural Language Processing in Software Requirements Engineering. Electronics 2024, 13, 2055. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Moher, D. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. Int. J. Surg. 2021, 88, 105906. [Google Scholar] [CrossRef]

- Singh, V.K.; Singh, P.; Karmakar, M.; Leta, J.; Mayr, P. The Journal Coverage of Web of Science, Scopus and Dimensions: A Comparative Analysis. Scientometrics 2021, 126, 5113–5142. [Google Scholar] [CrossRef]

- Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160. [Google Scholar] [CrossRef]

- Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F.; Giannotti, F.; Pedreschi, D. A Survey of Methods for Explaining Black Box Models. ACM Comput. Surv. 2018, 51, 1–42. [Google Scholar] [CrossRef]

- Rudin, C. Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead. Nat. Mach. Intell. 2019, 1, 206–215. [Google Scholar] [CrossRef]

- Szepannek, G.; Lübke, K. How Much Do We See? On the Explainability of Partial Dependence Plots for Credit Risk Scoring. Argum. Oeconomica 2023, 1, 137–150. [Google Scholar] [CrossRef]

- Wachter, S.; Mittelstadt, B.; Russell, C. Counterfactual Explanations without Opening the Black Box: Automated Decisions and the GDPR. Harv. J. Law Technol. 2017, 31, 841. [Google Scholar] [CrossRef]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. Anchors: High-Precision Model-Agnostic Explanations. Proc. AAAI Conf. Artif. Intell. 2018, 32. [Google Scholar] [CrossRef]

- Goldstein, A.; Kapelner, A.; Bleich, J.; Pitkin, E. Predicting Consumer Choices from Complex Behavioral Data. In Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Sydney, Australia, 10–13 August 2015; pp. 1315–1324. [Google Scholar]

- Liu, Z.; Zhang, J.; Wang, Y. Interpretable Machine Learning for Predicting Sepsis Risk in ICU Patients. Sci. Rep. 2025, 15, 12345. [Google Scholar] [CrossRef]

- Hariharan, S.; Rejimol Robinson, R.R.; Prasad, R.R.; Thomas, C.; Balakrishnan, N. XAI for Intrusion Detection System: Comparing Explanations Based on Global and Local Scope. J. Comput. Virol. Hack. Tech. 2023, 19, 217–239. [Google Scholar] [CrossRef]

- Ponce-Bobadilla, A.V.; Schmitt, V.; Maier, C.S.; Mensing, S.; Stodtmann, S. Practical Guide to SHAP Analysis: Explaining Supervised Machine Learning Model Predictions in Drug Development. Clin. Transl. Sci. 2024, 17, e70056. [Google Scholar] [CrossRef]

- Ruiz de San Martín, R.; Morales-Hernández, C.; Barberá, C.; Martínez-Cortés, C.; Banegas-Luna, A.J.; Segura-Méndez, F.J.; Pérez-Sánchez, H.; Morales-Moreno, I.; Hernández-Morante, J.J. Global and Local Interpretable Machine Learning Allow Early Prediction of Unscheduled Hospital Readmission. Mach. Learn. Knowl. Extr. 2024, 6, 1653–1666. [Google Scholar] [CrossRef]

- Sathyan, A.; Weinberg, A.I.; Cohen, K. Interpretable AI for Bio-Medical Applications. Complex Eng. Syst. 2022, 2, 18. [Google Scholar] [CrossRef]

- Wang, Y. A Comparative Analysis of Model Agnostic Techniques for Explainable Artificial Intelligence. Res. Rep. Comput. Sci. 2024, 3, 25–33. [Google Scholar] [CrossRef]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar] [CrossRef]

- Bifarin, O.O. Interpretable Machine Learning with Tree-Based Shapley Additive Explanations: Application to Metabolomics Datasets for Binary Classification. PLoS ONE 2023, 18, e0284315. [Google Scholar] [CrossRef]

- Molnar, C. Interpretable Machine Learning: A Guide for Making Black Box Models Explainable. 2022. Available online: https://christophm.github.io/interpretable-ml-book/ (accessed on 10 June 2025).

- Feng, J.; Liang, J.; Qiang, Z.; Hao, Y.; Li, X.; Li, L.; Wei, H. A Hybrid Stacked Ensemble and Kernel SHAP-Based Model for Intelligent Cardiotocography Classification and Interpretability. BMC Med. Inform. Decis. Mak. 2023, 23, 273. [Google Scholar] [CrossRef]

- Vilone, G.; Longo, L. Classification of Explainable Artificial Intelligence Methods through Their Output Formats. Mach. Learn. Knowl. Extr. 2021, 3, 615–661. [Google Scholar] [CrossRef]

- Cremades, A.; Hoyas, S.; Vinuesa, R. Additive-Feature-Attribution Methods: A Review on Explainable Artificial Intelligence for Fluid Dynamics and Heat Transfer. Int. J. Heat Fluid Flow 2025, 112, 109662. [Google Scholar] [CrossRef]

- Bauer, K.; von Zahn, M.; Hinz, O. Expl(AI)ned: The Impact of Explainable Artificial Intelligence on Users’ Information Processing. Inf. Syst. Res. 2023, 34, 1582–1602. [Google Scholar] [CrossRef]

- Mertes, S.; Huber, T.; Weitz, K.; Heimerl, A.; André, E. GANterfactual—Counterfactual Explanations for Medical Non-Experts Using Generative Adversarial Learning. Front. Artif. Intell. 2022, 5, 825565. [Google Scholar] [CrossRef] [PubMed]

- Simonyan, K.; Vedaldi, A.; Zisserman, A. Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps. arXiv 2014, arXiv:1312.6034. [Google Scholar] [CrossRef]

- Saporta, A.; Gui, X.; Agrawal, A.; Pareek, A.; Truong, S.Q.H.; Nguyen, C.D.T.; Ngo, V.-D.; Seekins, J.; Blankenberg, F.G.; Ng, A.Y.; et al. Benchmarking Saliency Methods for Chest X-ray Interpretation. Nat. Mach. Intell. 2022, 4, 867–878. [Google Scholar] [CrossRef]

- Ford, C.; Keane, M.T. Explaining Classifications to Non-Experts: An XAI User Study of Post-Hoc Explanations for a Classifier When People Lack Expertise. In Proceedings of the International Conference on Pattern Recognition, Montreal, QC, Canada, 21–25 August 2022; Springer Nature Switzerland: Cham, Switzerland, 2022; pp. 246–260. [Google Scholar] [CrossRef]

- Bashir, Z.; Lin, M.; Feragen, A.; Mikolaj, K.; Taksøe-Vester, C.; Christensen, A.N.; Svendsen, M.B.S.; Fabricius, M.H.; Andreasen, L.; Nielsen, M.; et al. Clinical Validation of Explainable AI for Fetal Growth Scans through Multi-Level, Cross-Institutional Prospective End-User Evaluation. Sci. Rep. 2025, 15, 2074. [Google Scholar] [CrossRef]

- Slack, D.; Krishna, S.; Lakkaraju, H.; Singh, S. Explaining Machine Learning Models with Interactive Natural Language Conversations Using TalkToModel. Nat. Mach. Intell. 2023, 5, 873–883. [Google Scholar] [CrossRef]

- Bertrand, A.; Viard, T.; Belloum, R.; Eagan, J.R.; Maxwell, W. On Selective, Mutable and Dialogic XAI: A Review of What Users Say about Different Types of Interactive Explanations. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–21. [Google Scholar] [CrossRef]

- Nachtigall, M.; Do, L.N.Q.; Bodden, E. Explaining Static Analysis—A Perspective. In Proceedings of the 2019 34th IEEE/ACM International Conference on Automated Software Engineering Workshop (ASEW), San Diego, CA, USA, 11–15 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 29–32. [Google Scholar] [CrossRef]

- Shi, H.; Yang, N.; Yang, X.; Tang, H. Clarifying Relationship between PM2.5 Concentrations and Spatiotemporal Predictors Using Multi-Way Partial Dependence Plots. Remote Sens. 2023, 15, 358. [Google Scholar] [CrossRef]

- Amershi, S.; Chickering, M.; Drucker, S.M.; Lee, B.; Simard, P.; Suh, J. ModelTracker: Redesigning Performance Analysis Tools for Machine Learning. In Proceedings of the CHI ’14: SIGCHI Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; pp. 337–346. [Google Scholar] [CrossRef]

- Metsch, J.M.; Saranti, A.; Angerschmid, A.; Pfeifer, B.; Klemt, V.; Holzinger, A.; Hauschild, A.-C. CLARUS: An Interactive Explainable AI Platform for Manual Counterfactuals in Graph Neural Networks. J. Biomed. Inform. 2024, 150, 104600. [Google Scholar] [CrossRef] [PubMed]

- Delaney, E.; Pakrashi, A.; Greene, D.; Keane, M.T. Counterfactual Explanations for Misclassified Images: How Human and Machine Explanations Differ. Artif. Intell. 2023, 324, 103995. [Google Scholar] [CrossRef]

- Hassan, S.U.; Abdulkadir, S.J.; Zahid, M.S.M.; Al-Selwi, S.M. Local Interpretable Model-Agnostic Explanation Approach for Medical Imaging Analysis: A Systematic Literature Review. Comput. Biol. Med. 2025, 185, 109569. [Google Scholar] [CrossRef]

- Hwang, H.; Bell, A.; Fonseca, J.; Pliatsika, V.; Stoyanovich, J.; Whang, S.E. SHAP-based Explanations are Sensitive to Feature Representation. In Proceedings of the 2025 ACM Conference on Fairness, Accountability, and Transparency, Athens, Greece, 23–26 June 2025; pp. 1588–1601. [Google Scholar] [CrossRef]

- Sarti, G.; Feldhus, N.; Qi, J.; Nissim, M.; Bisazza, A. Democratizing Advanced Attribution Analyses of Generative Language Models with the Inseq Toolkit. In Joint Proceedings of the 2nd World Conference on eXplainable Artificial Intelligence Late-Breaking Work, Demos and Doctoral Consortium, xAI-2024: LB/D/DC, Valletta, Malta, 17–19 July 2024; pp. 289–296. [Google Scholar]

- Miglani, V.; Yang, A.; Markosyan, A.H.; Garcia-Olano, D.; Kokhlikyan, N. Using Captum to Explain Generative Language Models. In Proceedings of the 5th Workshop for Natural Language Processing Open Source Software (NLP-OSS), Singapore, 6 December 2023; Association for Computational Linguistics: Singapore, 2023; pp. 165–173. [Google Scholar] [CrossRef]

- Simuni, G. Explainable AI in ML: The Path to Transparency and Accountability. Int. J. Recent Adv. Multidiscip. Res. 2024, 11, 10531–10536. [Google Scholar]

- Ganapavarapu, G.; Mukherjee, S.; Martinez Gil, N.; Sarpatwar, K.; Rajasekharan, A.; Dhurandhar, A.; Arya, V.; Vaculin, R. AI Explainability 360 Toolkit for Time-Series and Industrial Use Cases. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023; pp. 5777–5778. [Google Scholar] [CrossRef]

- Rajkomar, A.; Hardt, M.; Howell, M.D.; Corrado, G.; Chin, M.H. Ensuring Fairness in Machine Learning for Healthcare. Lancet 2019, 393, 110–111. [Google Scholar]

- Wang, H.; Wang, Z.; Du, M.; Yang, F.; Zhang, Z.; Ding, S.; Mardziel, P.; Hu, X. Score-CAM: Score-Weighted Visual Explanations for Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 111–119. [Google Scholar] [CrossRef]

- Musthafa, M.M.; Mahesh, T.R.; Kumar, V.V.; Guluwadi, S. Enhancing Brain Tumor Detection in MRI Images through Explainable AI Using Grad-CAM with ResNet 50. BMC Med. Imaging 2024, 24, 107. [Google Scholar] [CrossRef]

- Alkhanbouli, R.; Matar Abdulla Almadhaani, H.; Alhosani, F.; Simsekler, M.C.E. The Role of Explainable Artificial Intelligence in Disease Prediction: A Systematic Literature Review and Future Research Directions. BMC Med. Inform. Decis. Mak. 2025, 25, 110. [Google Scholar] [CrossRef]

- Chaddad, A.; Peng, J.; Xu, J.; Bouridane, A. Survey of Explainable AI Techniques in Healthcare. Sensors 2023, 23, 634. [Google Scholar] [CrossRef] [PubMed]

- Vanitha, K.; Mahesh, T.R.; Kumar, V.V.; Guluwadi, S. Enhanced Tuberculosis Detection Using Vision Transformers and Explainable AI with a Grad-CAM Approach on Chest X-rays. BMC Med. Imaging 2025, 25, 96. [Google Scholar] [CrossRef]

- Lu, S.; Chen, R.; Wei, W.; Belovsky, M.; Lu, X. Understanding Heart Failure Patients EHR Clinical Features via SHAP Interpretation of Tree-Based Machine Learning Model Predictions. In AMIA Annual Symposium Proceedings; AMIA: San Francisco, CA, USA, 2022; Volume 2021, p. 813. [Google Scholar]

- Goethals, S.; Martens, D.; Evgeniou, T. Manipulation Risks in Explainable AI: The Implications of the Disagreement Problem. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases; Springer Nature Switzerland: Cham, Switzerland, 2023; pp. 185–200. [Google Scholar] [CrossRef]

- Mishra, A.K.; Tyagi, A.K.; Richa; Patra, S.R. Introduction to Machine Learning and Artificial Intelligence in Banking and Finance. In Applications of Block Chain Technology and Artificial Intelligence: Lead-ins in Banking, Finance, and Capital Market; Springer International Publishing: Cham, Switzerland, 2024; pp. 239–290. [Google Scholar] [CrossRef]

- Cao, L. AI in Finance: Challenges, Techniques, and Opportunities. ACM Comput. Surv. 2022, 55, 1–38. [Google Scholar] [CrossRef]

- Akkalkot, A.I.; Kulshrestha, N.; Sharma, G.; Sidhu, K.S.; Palimkar, S.S. Challenges and Opportunities in Deploying Explainable AI for Financial Risk Assessment. In Proceedings of the 2025 International Conference on Pervasive Computational Technologies (ICPCT), Pune, India, 8–9 February 2025; pp. 382–386. [Google Scholar] [CrossRef]

- De Lange, P.E.; Melsom, B.; Vennerød, C.B.; Westgaard, S. Explainable AI for Credit Assessment in Banks. J. Risk Financ. Manag. 2022, 15, 556. [Google Scholar] [CrossRef]

- Freeborough, W.; van Zyl, T. Investigating Explainability Methods in Recurrent Neural Network Architectures for Financial Time Series Data. Appl. Sci. 2022, 12, 1427. [Google Scholar] [CrossRef]

- Černevičienė, J.; Kabašinskas, A. Explainable Artificial Intelligence (XAI) in Finance: A Systematic Literature Review. Artif. Intell. Rev. 2024, 57, 216. [Google Scholar] [CrossRef]

- Zhao, Z.; Bai, T. Financial Fraud Detection and Prediction in Listed Companies Using SMOTE and Machine Learning Algorithms. Entropy 2022, 24, 1157. [Google Scholar] [CrossRef] [PubMed]

- Sonkavde, G.; Dharrao, D.S.; Bongale, A.M.; Deokate, S.T.; Doreswamy, D.; Bhat, S.K. Forecasting Stock Market Prices Using Machine Learning and Deep Learning Models: A Systematic Review, Performance Analysis and Discussion of Implications. Int. J. Financ. Stud. 2023, 11, 94. [Google Scholar] [CrossRef]

- Li, Y.; Stasinakis, C.; Yeo, W.M. A Hybrid XGBoost-MLP Model for Credit Risk Assessment on Digital Supply Chain Finance. Forecasting 2022, 4, 184–207. [Google Scholar] [CrossRef]

- Choudhary, H.; Orra, A.; Sahoo, K.; Thakur, M. Risk-Adjusted Deep Reinforcement Learning for Portfolio Optimization: A Multi-Reward Approach. Int. J. Comput. Intell. Syst. 2025, 18, 126. [Google Scholar] [CrossRef]

- Zhou, Y.; Li, H.; Xiao, Z.; Qiu, J. A User-Centered Explainable Artificial Intelligence Approach for Financial Fraud Detection. Financ. Res. Lett. 2023, 58, 104309. [Google Scholar] [CrossRef]

- Khan, F.S.; Mazhar, S.S.; Mazhar, K.; AlSaleh, D.A.; Mazhar, A. Model-Agnostic Explainable Artificial Intelligence Methods in Finance: A Systematic Review, Recent Developments, Limitations, Challenges and Future Directions. Artif. Intell. Rev. 2025, 58, 232. [Google Scholar] [CrossRef]

- Christin, A. Predictive Algorithms and Criminal Sentencing. In The Decisionist Imagination: Sovereignty, Social Science and Democracy in the 20th Century; Steinmetz, G., Berkowitz, R., Eds.; Berghahn Books: New York, NY, USA, 2018; pp. 272–294. [Google Scholar] [CrossRef]

- Raji, I.D.; Buolamwini, J. Actionable Auditing: Investigating the Impact of Publicly Naming Biased Performance Results of Commercial AI Products. In Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society (AIES ’19), Honolulu, HI, USA, 27–28 January 2019; ACM: New York, NY, USA, 2019; pp. 429–435. [Google Scholar] [CrossRef]

- Mu, D.; Zhang, S.; Zhu, T.; Zhou, Y.; Zhang, W. Prediction of Recidivism and Detection of Risk Factors under Different Time Windows Using Machine Learning Techniques. Soc. Sci. Comput. Rev. 2024, 42, 1379–1402. [Google Scholar] [CrossRef]

- Zhang, X.; Liu, L.; Lan, M.; Song, G.; Xiao, L.; Chen, J. Interpretable Machine Learning Models for Crime Prediction. Comput. Environ. Urban Syst. 2022, 94, 101789. [Google Scholar] [CrossRef]

- Arayola, M.M.; Tal, I.; Connolly, R.; Saber, T.; Bendechache, M. Ethics and Trustworthiness of AI for Predicting the Risk of Recidivism: A Systematic Literature Review. Information 2023, 14, 426. [Google Scholar] [CrossRef]

- Eren, O.; Owens, E. Economic Booms and Recidivism. J. Quant. Criminol. 2024, 40, 343–372. [Google Scholar] [CrossRef]

- Leung, C.; Mehrnia, B. A Multi-Model Approach to Legal Judgment Prediction Using Advanced Knowledge Integration Techniques. J. Comput. Sci. Artif. Intell. 2024, 1, 9–16. [Google Scholar] [CrossRef]

- Lee, Y.; O, S.; Eck, J.E. Improving Recidivism Forecasting with a Relaxed Naïve Bayes Classifier. Crime Delinq. 2023, 71, 89–117 (Original work published 2025). [Google Scholar] [CrossRef]

- Cesario, E.; Lindia, P.; Vinci, A. Multi-Density Crime Predictor: An Approach to Forecast Criminal Activities in Multi-Density Crime Hotspots. J. Big Data 2024, 11, 75. [Google Scholar] [CrossRef]

- Pires, R.; de Souza, F.C.; Rosa, G.; Lotufo, R.; Nogueira, R. Sequence-to-Sequence Models for Extracting Information from Registration and Legal Documents. In Document Analysis Systems. DAS 2022; Uchida, S., Barney, E., Eglin, V., Eds.; Lecture Notes in Computer Science, Vol. 13237; Springer: Cham, Switzerland, 2022; pp. 73–88. [Google Scholar] [CrossRef]

- Shamoo, Y. The Role of Explainable AI (XAI) in Forensic Investigations. In Digital Forensics in the Age of AI; IGI Global Scientific Publishing: Hershey, PA, USA, 2024; pp. 31–62. [Google Scholar] [CrossRef]

- Fine, A.; Marsh, S. Judicial Leadership Matters (Yet Again): The Association between Judge and Public Trust for Artificial Intelligence in Courts. Discov. Artif. Intell. 2024, 4, 44. [Google Scholar] [CrossRef]

- Erdoğanyılmaz, C. A New Explainable AI Approach to Legal Judgement Prediction: Detecting Model Uncertainty and Analyzing the Alignment Between Judges and Models. In Proceedings of the 2024 Innovations in Intelligent Systems and Applications Conference (ASYU), Ankara, Turkey, 16–18 October 2024; pp. 1–6. [Google Scholar] [CrossRef]

- Zafar, A. Artificial Intelligence in Autonomous Systems: Challenges and Opportunities. Res. Corrid. J. Eng. Sci. 2024, 1, 182–193. [Google Scholar]

- Goodall, N.J. Machine Ethics and Automated Vehicles. In Road Vehicle Automation; Meyer, G., Beiker, S., Eds.; Lecture Notes in Mobility; Springer International Publishing: Cham, Switzerland, 2014; pp. 93–102. [Google Scholar] [CrossRef]

- Gartner, J. Autonomy and Accountability in Autonomous Systems. IEEE Intell. Syst. 2017, 32, 8–12. [Google Scholar]

- Kuznietsov, A.; Gyevnar, B.; Wang, C.; Peters, S.; Albrecht, S.V. Explainable AI for Safe and Trustworthy Autonomous Driving: A Systematic Review. IEEE Trans. Intell. Transp. Syst. 2024, 25, 19342–19364. [Google Scholar] [CrossRef]

- Nazat, S.; Li, L.; Abdallah, M. XAI-ADS: An Explainable Artificial Intelligence Framework for Enhancing Anomaly Detection in Autonomous Driving Systems. IEEE Access 2024, 12, 48583–48607. [Google Scholar] [CrossRef]

- Yang, W.; Wei, Y.; Wei, H.; Chen, Y.; Huang, G.; Li, X.; Li, R.; Yao, N.; Wang, X.; Gu, X.; et al. Survey on Explainable AI: From Approaches, Limitations and Applications Aspects. Hum.-Cent. Intell. Syst. 2023, 3, 161–188. [Google Scholar] [CrossRef]

- Dong, J.; Chen, S.; Miralinaghi, M.; Chen, T.; Li, P.; Labi, S. Why Did the AI Make That Decision? Towards an Explainable Artificial Intelligence (XAI) for Autonomous Driving Systems. Transp. Res. Part C Emerg. Technol. 2023, 156, 104358. [Google Scholar] [CrossRef]

- Hauptman, A.I.; Schelble, B.G.; Duan, W.; Flathmann, C.; McNeese, N.J. Understanding the Influence of AI Autonomy on AI Explainability Levels in Human-AI Teams Using a Mixed Methods Approach. Cogn. Technol. Work 2024, 26, 435–455. [Google Scholar] [CrossRef]

- Xu, Y.; Wu, D.; Zhou, M.; Yang, J. Deep Learning-Based Point Cloud Classification of Obstacles for Intelligent Vehicles. World Electr. Veh. J. 2025, 16, 80. [Google Scholar] [CrossRef]

- Wang, T.; Fu, Y.; Cheng, X.; Li, L.; He, Z.; Xiao, Y. Vehicle Trajectory Prediction Algorithm Based on Hybrid Prediction Model with Multiple Influencing Factors. Sensors 2025, 25, 1024. [Google Scholar] [CrossRef]

- Javaid, S.; Khan, M.A.; Fahim, H.; He, B.; Saeed, N. Explainable AI and Monocular Vision for Enhanced UAV Navigation in Smart Cities: Prospects and Challenges. Front. Sustain. Cities 2025, 7, 1561404. [Google Scholar] [CrossRef]

- Rahevar, M.; Darji, S. The Adoption of AI-Driven Chatbots into a Recommendation for E-Commerce Systems to Targeted Customer in the Selection of Product. Int. J. Manag. Econ. Commer. 2024, 1, 128–137. [Google Scholar] [CrossRef]

- Dastin, J. Amazon Scraps Secret AI Recruiting Tool That Showed Bias Against Women. Reuters, 10 October 2018. Available online: https://www.taylorfrancis.com/chapters/edit/10.1201/9781003278290-44/amazon-scraps-secret-ai-recruiting-tool-showed-bias-women-jeffrey-dastin (accessed on 28 August 2025).

- Kovari, A. Explainable AI Chatbots towards XAI ChatGPT: A Review. Heliyon 2025, 11, e42077. [Google Scholar] [CrossRef]

- Hofeditz, L.; Clausen, S.; Rieß, A.; Mirbabaie, M.; Stieglitz, S. Applying XAI to an AI-Based System for Candidate Management to Mitigate Bias and Discrimination in Hiring. Electron. Markets 2022, 32, 2207–2233. [Google Scholar] [CrossRef]

- Veeramachaneni, V. Large Language Models: A Comprehensive Survey on Architectures, Applications, and Challenges. Adv. Innov. Comput. Program. Lang. 2025, 7, 20–39. [Google Scholar] [CrossRef]

- Bujold, A.; Roberge-Maltais, I.; Parent-Rocheleau, X.; Boasen, J.; Sénécal, S.; Léger, P.-M. Responsible Artificial Intelligence in Human Resources Management: A Review of the Empirical Literature. AI Ethics 2024, 4, 1185–1200. [Google Scholar] [CrossRef]

- Peng, K.; Peng, Y.; Li, W. Research on Customer Churn Prediction and Model Interpretability Analysis. PLoS ONE 2023, 18, e0289724. [Google Scholar] [CrossRef] [PubMed]

- Guerranti, F.; Dimitri, G.M. A Comparison of Machine Learning Approaches for Predicting Employee Attrition. Appl. Sci. 2023, 13, 267. [Google Scholar] [CrossRef]

- Gadgil, K.; Gill, S.S.; Abdelmoniem, A.M. A Meta-Learning Based Stacked Regression Approach for Customer Lifetime Value Prediction. J. Economy Technol. 2023, 1, 197–207. [Google Scholar] [CrossRef]

- Nawaz, N.; Arunachalam, H.; Pathi, B.K.; Gajenderan, V. The Adoption of Artificial Intelligence in Human Resources Management Practices. Int. J. Inf. Manag. Data Insights 2024, 4, 100208. [Google Scholar] [CrossRef]

- Inavolu, S.M. Exploring AI-Driven Customer Service: Evolution, Architectures, Opportunities, Challenges and Future Directions. Int. J. Eng. Adv. Technol. 2024, 13, 156–163. [Google Scholar] [CrossRef]

- Polignano, M.; Musto, C.; Pellungrini, R.; Purificato, E.; Semeraro, G.; Setzu, M. XAI.it 2024: An Overview on the Future of AI in the Era of Large Language Models. XAI.it 2024, 3839, 1–10. [Google Scholar]

- Fantozzi, P.; Naldi, M. The Explainability of Transformers: Current Status and Directions. Computers 2024, 13, 92. [Google Scholar] [CrossRef]

- Zhao, H.; Chen, H.; Yang, F.; Liu, N.; Deng, H.; Cai, H.; Wang, S.; Yin, D.; Du, M. Explainability for Large Language Models: A Survey. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–38. [Google Scholar] [CrossRef]

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain of Thought Prompting Elicits Reasoning in Large Language Models. arXiv 2022, arXiv:2201.11903. [Google Scholar] [CrossRef]

- Lampinen, A.K.; Dasgupta, I.; Micheli, G.; Chan, S.; Bowman, H.; Hernandez, M.; Liu, J.Z.; Nematzadeh, A.; Schwettmann, S.; Blundell, C.; et al. Can Language Models Learn from Explanations in Context? arXiv 2022, arXiv:2204.02329. [Google Scholar] [CrossRef]

- Wiegreffe, S.; Pinter, Y. Attention Is Not Not Explanation. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; Volume D19-1002, pp. 11–20. [Google Scholar] [CrossRef]

- Geva, M.; Schuster, T.; Berant, J.; Levy, O. Transformer Feed-Forward Layers Are Key-Value Memories. arXiv 2021, arXiv:2012.14913. [Google Scholar] [CrossRef]

- Jacovi, A.; Goldberg, Y. Towards Faithfully Interpretable NLP Systems: How Should We Define and Evaluate Faithfulness? In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL 2020), Online, 5–10 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 4198–4205. [Google Scholar] [CrossRef]

- Zhang, Y.; Wang, X.; Wu, L.; Wang, J. Enhancing Chain of Thought Prompting in Large Language Models via Reasoning Patterns. Proc. AAAI Conf. Artif. Intell. 2025, 39, 25985–25993. [Google Scholar] [CrossRef]

- Saleh, Y.; Abu Talib, M.; Nasir, Q.; Dakalbab, F. Evaluating Large Language Models: A Systematic Review of Efficiency, Applications, and Future Directions. Front. Comput. Sci. 2025, 7, 1523699. [Google Scholar] [CrossRef]

- Patil, R.; Gudivada, V. A Review of Current Trends, Techniques, and Challenges in Large Language Models (LLMs). Appl. Sci. 2024, 14, 2074. [Google Scholar] [CrossRef]

- Krause, S.; Stolzenburg, F. Commonsense Reasoning and Explainable Artificial Intelligence Using Large Language Models. In Proceedings of the European Conference on Artificial Intelligence; Springer Nature Switzerland: Cham, Switzerland, 2023; pp. 302–319. [Google Scholar] [CrossRef]

- Randl, K.; Pavlopoulos, J.; Henriksson, A.; Lindgren, T. Evaluating the Reliability of Self-Explanations in Large Language Models. In Proceedings of the International Conference on Discovery Science; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 36–51. [Google Scholar] [CrossRef]

- Chen, Y.; Liu, H.; Wen, Z.; Lin, W. How Explainable Machine Learning Enhances Intelligence in Explaining Consumer Purchase Behavior: A Random Forest Model with Anchoring Effects. Systems 2023, 11, 312. [Google Scholar] [CrossRef]

- Jain, N.; Jana, P.K. Xrrf: An Explainable Reasonably Randomised Forest Algorithm for Classification and Regression Problems. Inf. Sci. 2022, 613, 139–160. [Google Scholar] [CrossRef]

- Sayeed, M.A.; Rahman, A.; Rahman, A.; Rois, R. On the Interpretability of the SVM Model for Predicting Infant Mortality in Bangladesh. J. Health Popul. Nutr. 2024, 43, 170. [Google Scholar] [CrossRef] [PubMed]

- Loor, M.; Tapia-Rosero, A.; De Tré, G. Contextual Boosting to Explainable SVM Classification. In Fuzzy Logic and Technology, and Aggregation Operators. EUSFLAT AGOP 2023; Massanet, S., Montes, S., Ruiz-Aguilera, D., González-Hidalgo, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2023; Volume 14069. [Google Scholar] [CrossRef]

- de Waal, A.; Joubert, J.W. Explainable Bayesian Networks Applied to Transport Vulnerability. Expert Syst. Appl. 2022, 209, 118348. [Google Scholar] [CrossRef]

- Butz, R.; Schulz, R.; Hommersom, A.; van Eekelen, M. Investigating the Understandability of XAI Methods for Enhanced User Experience: When Bayesian Network Users Became Detectives. Artif. Intell. Med. 2022, 134, 102438. [Google Scholar] [CrossRef]

- Saleem, R.; Yuan, B.; Kurugollu, F.; Anjum, A.; Liu, L. Explaining Deep Neural Networks: A Survey on the Global Interpretation Methods. Neurocomputing 2022, 513, 165–180. [Google Scholar] [CrossRef]

- Dai, E.; Zhao, T.; Zhu, H.; Xu, J.; Guo, Z.; Liu, H.; Tang, J.; Wang, S. A Comprehensive Survey on Trustworthy Graph Neural Networks: Privacy, Robustness, Fairness, and Explainability. Mach. Intell. Res. 2024, 21, 1011–1061. [Google Scholar] [CrossRef]

- Otto, A.R.; Devine, S.; Schulz, E.; Bornstein, A.M.; Louie, K. Context-Dependent Choice and Evaluation in Real-World Consumer Behavior. Sci. Rep. 2022, 12, 17744. [Google Scholar] [CrossRef]

- Miró-Nicolau, M.; Jaume-i-Capó, A.; Moyà-Alcover, G. A Comprehensive Study on Fidelity Metrics for XAI. Inf. Process. Manag. 2025, 62, 103900. [Google Scholar] [CrossRef]

- Chen, J.; Song, L.; Yoon, J. Explaining Explanations: Axiomatic Feature Interactions for Deep Networks. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montreal, QC, Canada, 3–8 December 2018. [Google Scholar] [CrossRef]

- Coroama, L.; Groza, A. Evaluation Metrics in Explainable Artificial Intelligence (XAI). In Proceedings of the International Conference on Advanced Research in Technologies, Information, Innovation and Sustainability, Santiago de Compostela, Spain, 12–15 September 2022; Springer Nature Switzerland: Cham, Switzerland, 2022; pp. 401–413. [Google Scholar] [CrossRef]

- Pearl, J. Causality: Models, Reasoning, and Inference, 2nd ed.; Cambridge University Press: Cambridge, UK, 2009. [Google Scholar]

- Karimi, A.H.; Schölkopf, B.; Valera, I. Algorithmic Recourse: From Counterfactual Explanations to Interventions. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT 2021), Virtual Event, 3–10 March 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 353–362. [Google Scholar] [CrossRef]

- Perotti, A.; Borile, C.; Miola, A.; Nerini, F.P.; Baracco, P.; Panisson, A. Explainability, Quantified: Benchmarking XAI Techniques. In Proceedings of the World Conference on Explainable Artificial Intelligence, Valletta, Malta, 17–19 July 2024; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 421–444. Available online: https://link.springer.com/chapter/10.1007/978-3-031-63787-2_22 (accessed on 28 August 2025).

- Bhattacharya, A. Applied Machine Learning Explainability Techniques: Make ML Models Explainable and Trustworthy for Practical Applications Using LIME, SHAP, and More; Packt Publishing Ltd.: Birmingham, UK, 2022.

- Merrick, L.; Taly, A. The Explanation Game: Explaining Machine Learning Models Using Shapley Values. In Machine Learning and Knowledge Extraction, Proceedings of the 4th IFIP TC 5, TC 12, WG 8.4, WG 8.9, WG 12.9 International Cross-Domain Conference, CD-MAKE 2020, Dublin, Ireland, 25–28 August 2020; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12279, pp. 17–38.

- Molnar, C. Interpretable Machine Learning, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2020.

- Drosou, M.; Jagadish, H.V.; Pitoura, E.; Stoyanovich, J. Diversity in Big Data: A Review. Big Data 2017, 5, 73–84.

- Agrawal, R.; Gollapudi, S.; Halverson, A.; Ieong, S. Diversifying Search Results. In Proceedings of the 2nd ACM International Conference on Web Search and Data Mining (WSDM 2009), Barcelona, Spain, 9–11 February 2009; Association for Computing Machinery: New York, NY, USA, 2009; pp. 5–14.

- O’Neil, C. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy; Crown Publishing Group: New York, NY, USA, 2016.

- Chamola, V.; Hassija, V.; Sulthana, A.R.; Ghosh, D.; Dhingra, D.; Sikdar, B. A Review of Trustworthy and Explainable Artificial Intelligence (XAI). IEEE Access 2023, 11, 78994–79015.

- High Level Expert Group on Artificial Intelligence, European Commission. Ethics Guidelines for Trustworthy AI; Technical Report; European Commission: Brussels, Belgium, 2019. Available online: https://ec.europa.eu/newsroom/dae/document.cfm?doc_id=60419 (accessed on 25 August 2025).

- Oh, S.J.; Benenson, R.; Fritz, M.; Schiele, B. Faceless Person Recognition: Privacy Implications in Social Media. In Proceedings of the European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 19–35.

- Orekondy, T.; Schiele, B.; Fritz, M. Knockoff Nets: Stealing Functionality of Black-Box Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4954–4963.

- Oh, S.J.; Schiele, B.; Fritz, M. Towards Reverse-Engineering Black-Box Neural Networks. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Samek, W., Montavon, G., Vedaldi, A., Hansen, L.K., Müller, K.-R., Eds.; Springer: Cham, Switzerland, 2019; pp. 121–144.

- Aditya, P.; Sen, R.; Druschel, P.; Oh, S.J.; Benenson, R.; Fritz, M.; Wu, T.T. I-PIC: A Platform for Privacy-Compliant Image Capture. In Proceedings of the 14th Annual International Conference on Mobile Systems, Applications, and Services (MobiSys 2016), Singapore, 25–30 June 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 235–248.

- Sun, Q.; Tewari, A.; Xu, W.; Fritz, M.; Theobalt, C.; Schiele, B. A Hybrid Model for Identity Obfuscation by Face Replacement. In Proceedings of the European Conference on Computer Vision (ECCV 2018), Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 553–569.

- Ziethmann, P.; Stieler, F.; Pfrommer, R.; Schlögl-Flierl, K.; Bauer, B. Towards a Framework for Interdisciplinary Studies in Explainable Artificial Intelligence. In Proceedings of the International Conference on Human-Computer Interaction, Washington, DC, USA, 29 June–4 July 2024; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 316–333.

- Vilone, G.; Longo, L. Notions of Explainability and Evaluation Approaches for Explainable Artificial Intelligence. Inf. Fusion 2021, 76, 89–106.

- Graziani, M.; Dutkiewicz, L.; Calvaresi, D.; Pereira Amorim, J.; Yordanova, K.; Vered, M.; Nair, R.; Henriques Abreu, P.; Blanke, T.; Pulignano, V.; et al. A Global Taxonomy of Interpretable AI: Unifying the Terminology for the Technical and Social Sciences. Artif. Intell. Rev. 2023, 56, 3473–3504.

- Saeed, W.; Omlin, C. Explainable AI (XAI): A Systematic Meta-Survey of Current Challenges and Future Opportunities. Knowl.-Based Syst. 2023, 263, 110273.

- Raees, M.; Meijerink, I.; Lykourentzou, I.; Khan, V.J.; Papangelis, K. From Explainable to Interactive AI: A Literature Review on Current Trends in Human-AI Interaction. Int. J. Hum.-Comput. Stud. 2024, 180, 103301.

- Picard, R.W. Perceptual User Interfaces: Affective Perception. Commun. ACM 2000, 43, 50–51.

- Venkatasubbu, S.; Krishnamoorthy, G. Ethical Considerations in AI: Addressing Bias and Fairness in Machine Learning Models. J. Knowl. Learn. Sci. Technol. 2022, 1, 130–138.

- Islam, M.U.; Mottalib, M.M.; Hassan, M.; Alam, Z.I.; Zobaed, S.M.; Rabby, M.F. The Past, Present, and Prospective Future of XAI: A Comprehensive Review. In Explainable Artificial Intelligence for Cyber Security: Next Generation Artificial Intelligence; Springer: Cham, Switzerland, 2022; pp. 1–29.

- Banabilah, S.; Aloqaily, M.; Alsayed, E.; Malik, N.; Jararweh, Y. Federated Learning Review: Fundamentals, Enabling Technologies, and Future Applications. Inf. Process. Manag. 2022, 59, 103061.

- Hougen, D.F.; Shah, S.N.H. The Evolution of Reinforcement Learning. In Proceedings of the 2019 IEEE Symposium Series on Computational Intelligence (SSCI), Xiamen, China, 6–9 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1457–1464.

- Shokri, R.; Stronati, M.; Song, C.; Shmatikov, V. Privacy-Preserving Deep Learning. In Proceedings of the 2015 ACM SIGSAC Conference on Computer and Communications Security (CCS 2015), Denver, CO, USA, 12–16 October 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 1310–1321.

- López-Blanco, R.; Alonso, R.S.; González-Arrieta, A.; Chamoso, P.; Prieto, J. Federated Learning of Explainable Artificial Intelligence (FED-XAI): A Review. In Proceedings of the International Symposium on Distributed Computing and Artificial Intelligence, Salamanca, Spain, 5–7 July 2023; Springer Nature Switzerland: Cham, Switzerland, 2023; pp. 318–326.

- Miró-Nicolau, M.; Jaume-i-Capó, A.; Moyà-Alcover, G. Assessing Fidelity in XAI Post-Hoc Techniques: A Comparative Study with Ground Truth Explanations Datasets. Artif. Intell. 2024, 335, 104179.

- Lei, Y.; Li, Z.; Li, Y.; Zhang, J.; Shan, H. LICO: Explainable Models with Language-Image Consistency. In Proceedings of the Thirty-Seventh Conference on Neural Information Processing Systems (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023. Available online: https://arxiv.org/abs/2310.09821 (accessed on 28 August 2025).

- Mhasawade, V.; Rahman, S.; Haskell-Craig, Z.; Chunara, R. Understanding Disparities in Post Hoc Machine Learning Explanation. In Proceedings of the 2024 ACM Conference on Fairness, Accountability, and Transparency, Rio de Janeiro, Brazil, 3–6 June 2024; pp. 2374–2388.

- Guidotti, R. Counterfactual Explanations and How to Find Them: Literature Review and Benchmarking. Data Min. Knowl. Discov. 2024, 38, 2770–2824.

- Pawelczyk, M.; Bielawski, S.; Heuvel, J.v.d.; Richter, T.; Kasneci, G. CARLA: A Python Library to Benchmark Algorithmic Recourse and Counterfactual Explanation Algorithms. arXiv 2021, arXiv:2108.00783.

- Serrurier, M.; Mamalet, F.; Fel, T.; Béthune, L.; Boissin, T. On the Explainable Properties of 1-Lipschitz Neural Networks: An Optimal Transport Perspective. Adv. Neural Inf. Process. Syst. 2023, 36, 54645–54682. Available online: https://arxiv.org/abs/2206.06854 (accessed on 28 August 2025).

- DeYoung, J.; Jain, S.; Rajani, N.F.; Lehman, E.; Xiong, C.; Socher, R.; Wallace, B.C. ERASER: A Benchmark to Evaluate Rationalized NLP Models. arXiv 2019, arXiv:1911.03429.

- Wollek, A.; Graf, R.; Čečatka, S.; Fink, N.; Willem, T.; Sabel, B.O.; Lasser, T. Attention-Based Saliency Maps Improve Interpretability of Pneumothorax Classification. Radiol. Artif. Intell. 2023, 5, e220187.

- Sadeghi, Z.; Alizadehsani, R.; Cifci, M.A.; Kausar, S.; Rehman, R.; Mahanta, P.; Bora, P.K.; Almasri, A.; Alkhawaldeh, R.; Hussain, S.; et al. A Review of Explainable Artificial Intelligence in Healthcare. Comput. Electr. Eng. 2024, 118, 109370.

- Burger, C.; Walter, C.; Le, T. The Effect of Similarity Measures on Accurate Stability Estimates for Local Surrogate Models in Text-Based Explainable AI. arXiv 2024, arXiv:2406.15839.

- Igami, M. Artificial Intelligence as Structural Estimation: Economic Interpretations of Deep Blue, Bonanza, and AlphaGo. arXiv 2017, arXiv:1710.10967. Available online: https://arxiv.org/abs/1710.10967 (accessed on 13 June 2025).

- United Nations General Assembly. Transforming Our World: The 2030 Agenda for Sustainable Development; United Nations: New York, NY, USA, 2015. Available online: https://www.un.org/en/development/desa/population/migration/generalassembly/docs/globalcompact/A_RES_70_1_E.pdf (accessed on 25 August 2025).

| Year | Milestone | Description |
|---|---|---|
| 1975 | MYCIN expert system [35] | One of the first AI systems to provide rule-based, human-readable explanations in medicine. |
| 1980s | Growth of symbolic AI and rule-based models [34] | Explainability was an inherent part of early symbolic AI, which relied on logical inference and transparent rules. |
| 1990s | Transition to statistical machine learning [37] | Rise of machine learning models (e.g., random forests, SVMs) with reduced inherent interpretability. |
| 2012 | Deep Learning [41] | Despite their high predictive accuracy, DNNs are opaque black-box models. |
| 2016 | DARPA XAI Program [5] | U.S. government initiative to develop interpretable machine learning models without compromising accuracy. |
| 2016 | LIME [4] | A post hoc explanation tool that uses a local surrogate model to explain individual predictions. |
| 2017 | SHAP [15] | A post hoc tool that uses Shapley values to quantify feature importance. |
| 2017 | “Right to Explanation” in GDPR [32] | The EU established legal grounds for demanding explanations of automated decisions. |
| 2019 | Human-centered XAI [23] | Highlights the importance of social science and human-centered evaluation in explanation design. |
| 2020s | Interdisciplinary research [40] | XAI intersects with machine learning, HCI, philosophy, and ethics. |

| Domain | Interpretation | Primary Goals |
|---|---|---|
| Military and Government [5] | XAI is a framework that produces more explainable models while maintaining performance and enhancing user trust. | Trust, control, and operational reliability. |
| Healthcare [12] | Explanations should provide clinically sound reasoning that physicians and patients can understand. | Transparency, clinical trust, informed decision-making. |
| Finance [13,32] | Explanations should emphasize regulatory compliance and bias detection in decisions such as credit scoring. | Fairness, accountability, legal conformance. |
| Legal and Regulatory [32] | The focus is on the right to explanation for algorithmic decisions that affect individuals. | Interpretability for audits. |
| Developers/Engineers [4,15] | Explanations assist in model debugging and feature importance analysis. | Debugging, model improvement. |
| End-users (Non-experts) [23,28] | Explanations must be intuitive and simple, and must support trust and usability. | Understandability, user trust, adoption. |
| Academic Researchers [8,20] | XAI should systematize interpretability through formal definitions and evaluation metrics. | Scientific rigor, reproducibility, generalization. |

| Research Question | Description |
|---|---|
| RQ1 | What are the key XAI methods for making the decisions of AI models transparent? |
| RQ2 | How does the relevance of XAI methods vary based on the application domains? |
| RQ3 | What are the major limitations and challenges of XAI methods for enhancing the transparency of AI models? |

| Keywords Related to Explainability | Keywords Related to AI |
|---|---|
| (“explainable” OR “interpretable” OR “transparent” OR “trustworthy” OR “xai” OR “fair” OR “ethical” OR “robust” OR “accountable” OR “bias free” OR “discrimination free” OR “safe” OR “post-hoc explanation” OR “post-hoc interpretability”) AND | (“artificial intelligence” OR “AI” OR “machine learning” OR “ML” OR “deep learning” OR “neural networks” OR “automated decision making” OR “predictive analytics” OR “algorithm”) |

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| Articles proposing XAI methods to enhance the transparency of AI models; | Technical reports and book chapters; |
| Articles in English; | Editorials, viewpoints, and opinions; |
| Novel contributions published in peer-reviewed journals or conference proceedings. | Duplicated articles. |

| Technique | Type | Explanation Format | Model Compatibility | Advantages | Limitations |
|---|---|---|---|---|---|
| LIME | Post hoc, Local | Feature Importance (weights) | Model-agnostic | Interprets any model; good local fidelity. | Unstable explanations; lacks global consistency. |
| SHAP | Post hoc, Local and Global | Shapley Values | Model-agnostic | Solid theoretical foundation; consistent. | Computationally intensive for complex models. |
| Saliency Maps | Post hoc, Local | Visual (Heatmap) | Deep Learning (CNNs) | Intuitive for image data; visual feedback. | Sensitive to noise; lacks standardization. |
| PDP | Post hoc, Global | Graphical (Feature vs. Output) | Model-agnostic | Simple visualization of feature impact. | Assumes feature independence. |