Optimization of Object Detection Network Architecture for High-Resolution Remote Sensing

Abstract

1. Introduction

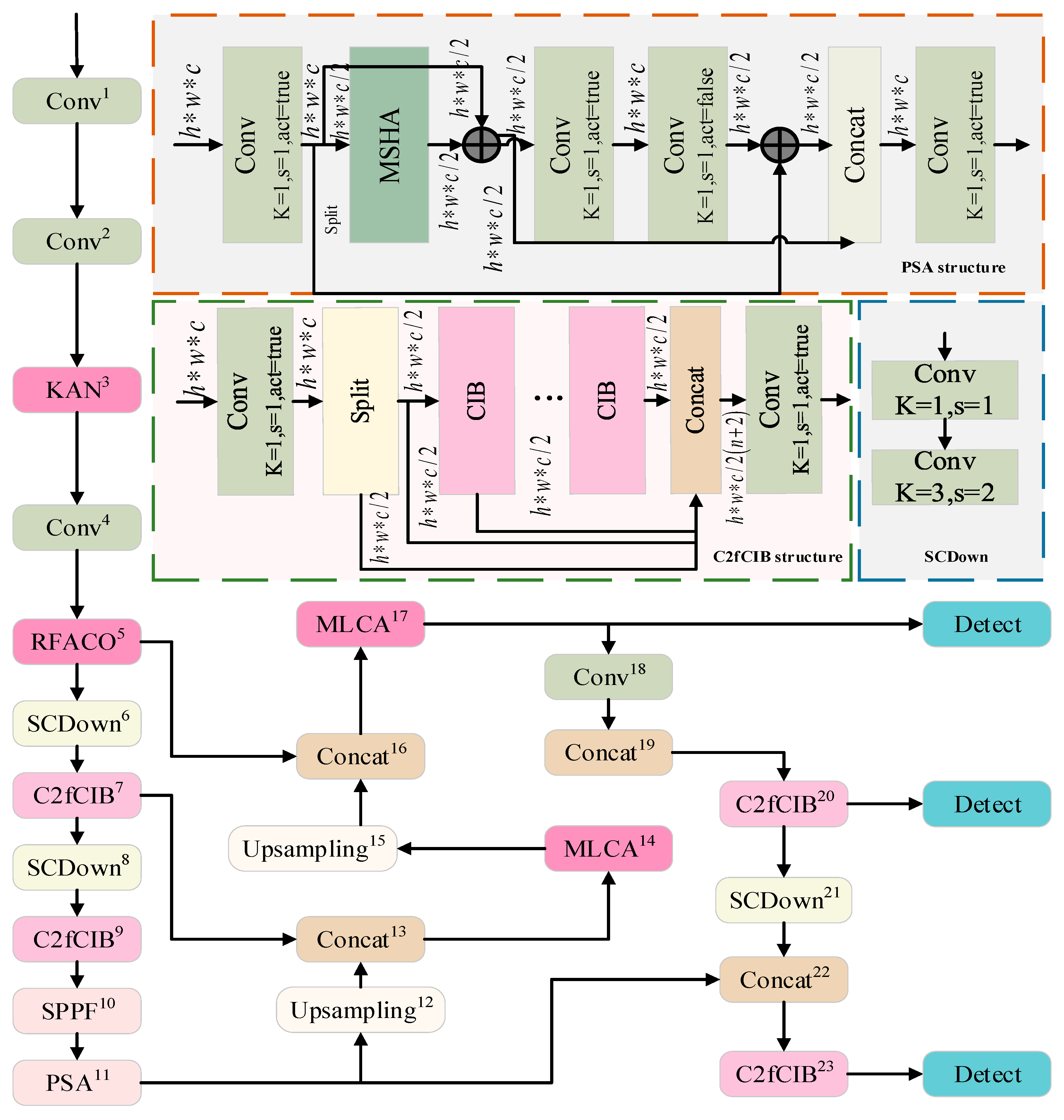

- A new backbone is constructed: the third-layer C2f module of the original backbone is replaced with a Kolmogorov–Arnold Network (KAN) layer, and the fifth-layer C2f module is replaced with a Receptive-Field Attention (RFA) convolution. Together these changes improve both the accuracy and the efficiency of feature extraction for small objects.

- The feature pyramid is optimized: the C2f and C2fCIB modules in the upsampling path are replaced with the Mixed Local Channel Attention (MLCA) module. Its combined channel and spatial attention processes multi-scale features more effectively and makes the model more robust to complex backgrounds.

- Experiments on two public remote-sensing datasets, RSOD and NWPU VHR-10, verify the effectiveness of the optimized YOLOv10x model (YOLO-KRM) for remote-sensing object detection.

2. Related Work

3. YOLO-KRM Model

3.1. Backbone Improvement

3.1.1. Kolmogorov–Arnold Network

- Nonlinear activation: KAN applies a learnable nonlinear activation function at each pixel, which increases the expressive power of the model.

- Parameter efficiency: Although the parameter count increases, the parameters are used more efficiently owing to the learnable-activation design.

- Ease of use: A simple, easy-to-import KAN convolutional layer class is provided, which facilitates integration into existing projects.
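The idea of a learnable activation applied at each pixel can be illustrated with a minimal pure-Python sketch. It assumes the common convolutional-KAN formulation in which every kernel position holds its own learnable univariate function and the output is the sum of those functions over the window. It is not the KAN convolutional layer class mentioned above: the names `LearnableActivation`, `hat_basis`, and `kan_conv2d` are hypothetical, and a piecewise-linear basis stands in for the higher-order B-splines usually used in KANs.

```python
import math


def silu(x):
    """Smooth base activation used alongside the learnable spline term."""
    return x / (1.0 + math.exp(-x))


def hat_basis(x, grid):
    """Piecewise-linear (order-1 B-spline) basis values of x on a uniform grid."""
    step = grid[1] - grid[0]
    return [max(0.0, 1.0 - abs(x - g) / step) for g in grid]


class LearnableActivation:
    """phi(x) = w_base * silu(x) + sum_i coef[i] * B_i(x).

    `coef` and `w_base` are the trainable parameters (fixed here for illustration).
    """

    def __init__(self, grid, coef, w_base=1.0):
        self.grid = grid
        self.coef = coef
        self.w_base = w_base

    def __call__(self, x):
        basis = hat_basis(x, self.grid)
        spline = sum(c * b for c, b in zip(self.coef, basis))
        return self.w_base * silu(x) + spline


def kan_conv2d(image, phis):
    """'Valid' 2D convolution where kernel entry (i, j) is a function phis[i][j].

    A standard convolution computes sum(w_ij * x_ij); here each weight is
    replaced by a learnable univariate function, giving sum(phi_ij(x_ij)).
    """
    kh, kw = len(phis), len(phis[0])
    h, w = len(image), len(image[0])
    out = []
    for r in range(h - kh + 1):
        row = []
        for c in range(w - kw + 1):
            row.append(sum(phis[i][j](image[r + i][c + j])
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out


# Toy usage: a 2x2 kernel whose four activations share one grid.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
phi = LearnableActivation(grid, coef=[0.2, -0.1, 0.0, 0.3, 0.5])
feature_map = kan_conv2d([[0.0, 0.5, 1.0],
                          [-0.5, 0.25, 0.0],
                          [1.0, -1.0, 0.5]],
                         [[phi, phi], [phi, phi]])
```

With `w_base = 0` and `coef` set equal to the grid points, each activation reduces to the identity on the grid range and the layer behaves like a plain box filter, which is a convenient sanity check for the sketch.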

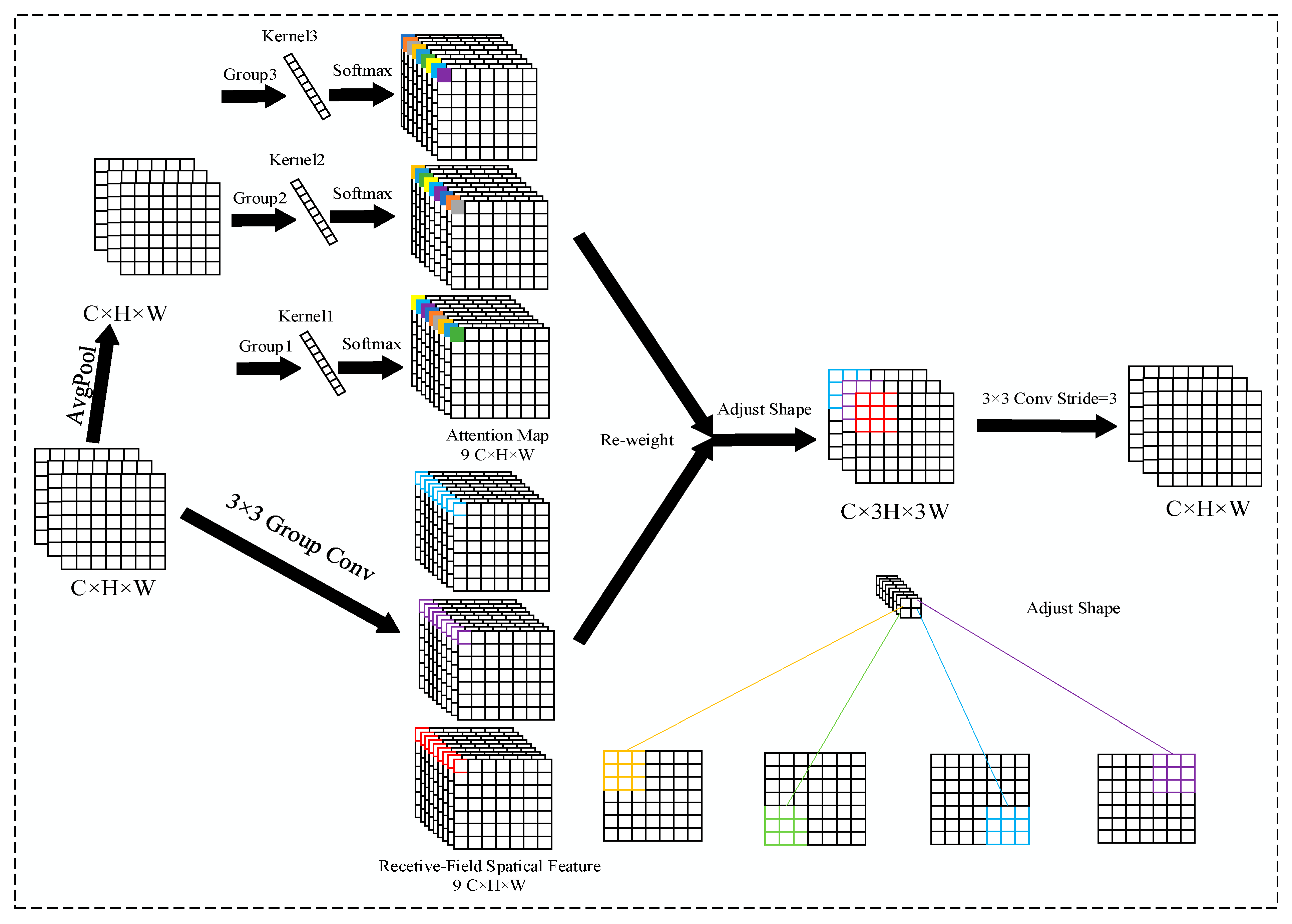

3.1.2. Receptive Field Attention Convolutional Operations

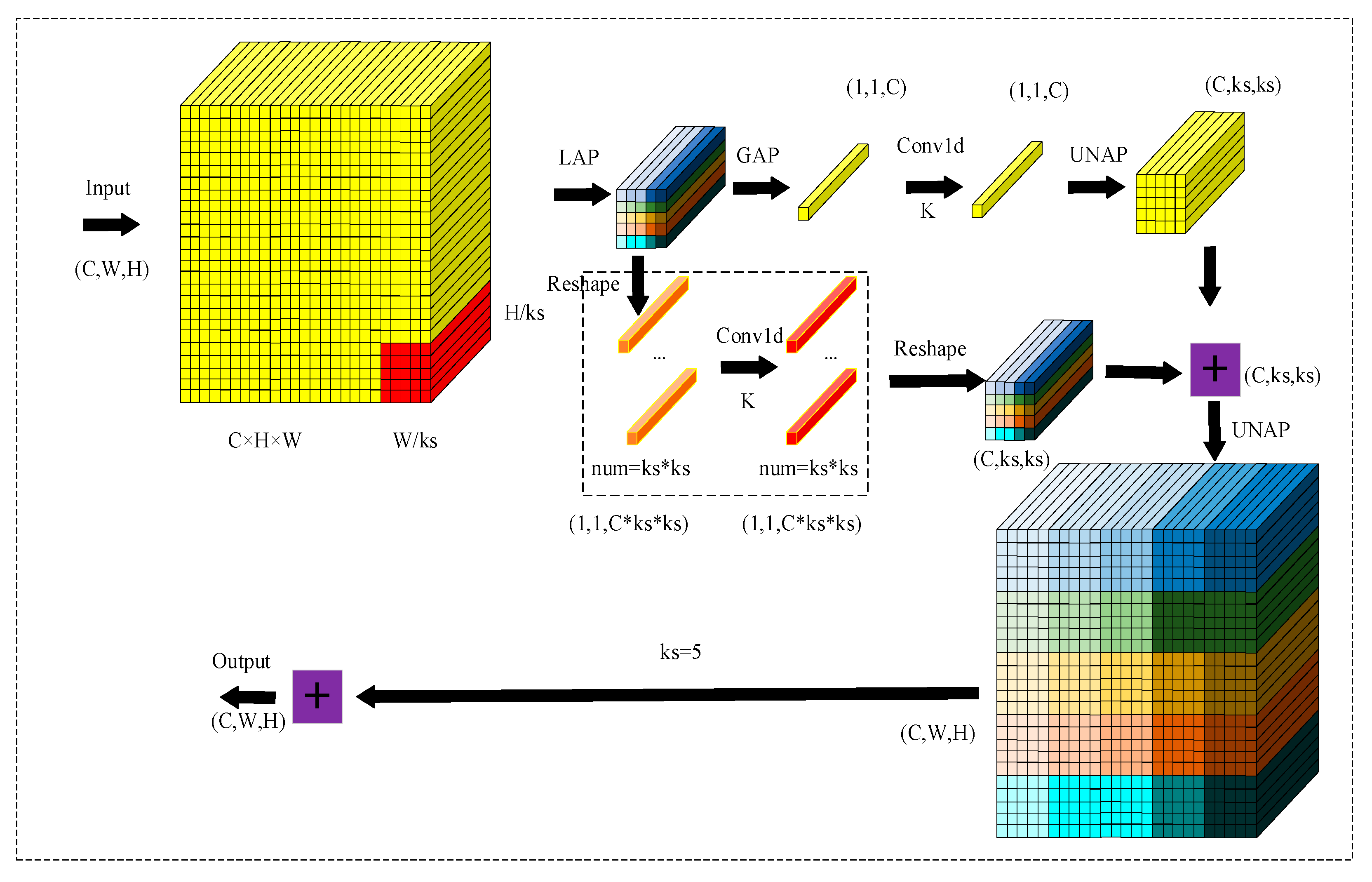

3.2. FPN Improvement

4. Experiment

4.1. Experimental Configuration

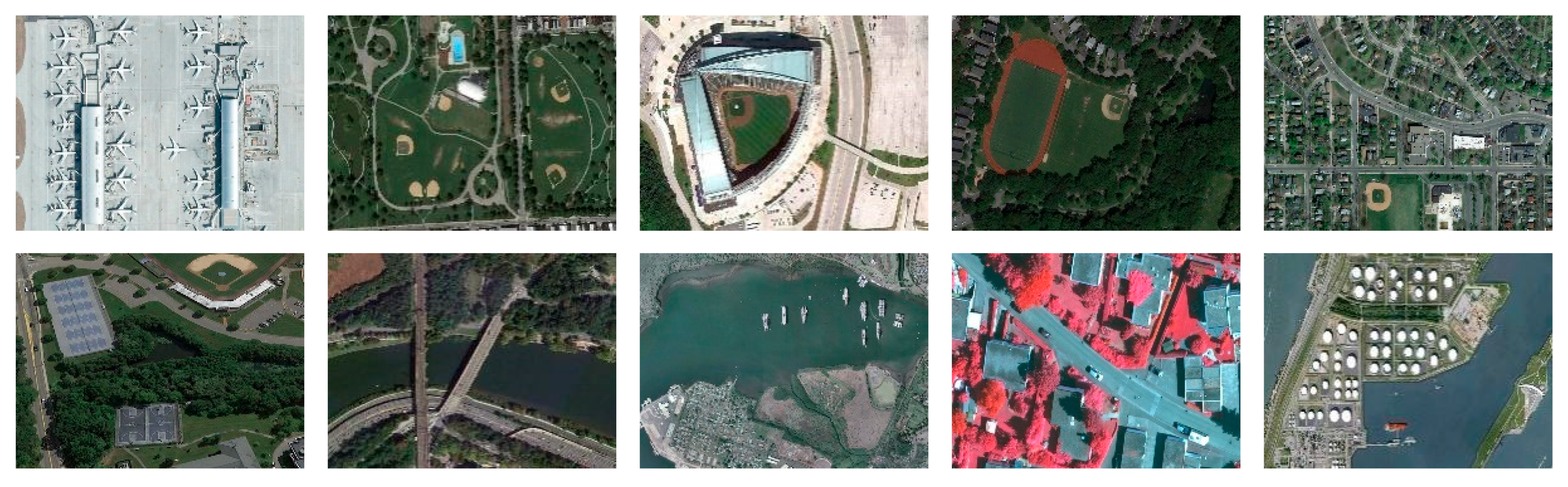

4.2. Dataset

4.3. Evaluation Metrics
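The result tables in this paper report precision (P), recall (R), mAP@50, and mAP@50:95. Assuming these follow the standard detection conventions (a prediction counts as a true positive when its IoU with an unmatched ground-truth box reaches the threshold; mAP@50 uses a threshold of 0.5 and mAP@50:95 averages over thresholds from 0.5 to 0.95 in steps of 0.05), the underlying quantities can be sketched as follows. The function names and the greedy matching rule are illustrative assumptions, not the authors' evaluation code.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds, gts, iou_thr=0.5):
    """Greedy matching in descending confidence order.

    preds: list of (score, box); gts: list of boxes.
    Each ground-truth box can be matched at most once.
    Returns (true positives, false positives, false negatives).
    """
    matched = set()
    tp = fp = 0
    for _score, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(gts):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_thr:
            matched.add(best_idx)
            tp += 1
        else:
            fp += 1
    fn = len(gts) - len(matched)
    return tp, fp, fn


def precision_recall(tp, fp, fn):
    """P = TP / (TP + FP), R = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Average precision for one class is then the area under the precision-recall curve obtained by sweeping the confidence threshold, and mAP is the mean of that value over classes.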

4.4. Comparison Experiments

4.5. Ablation Experiments

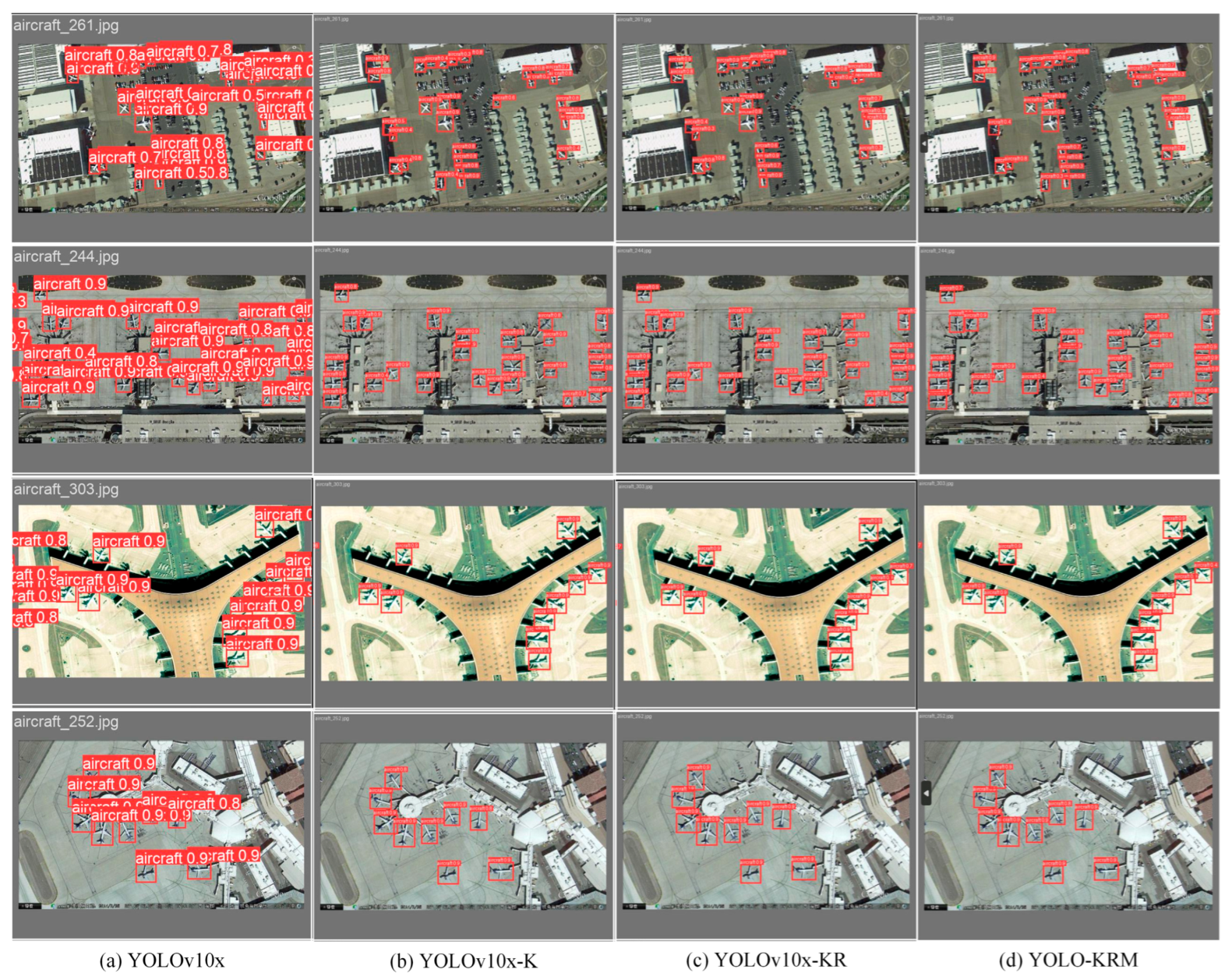

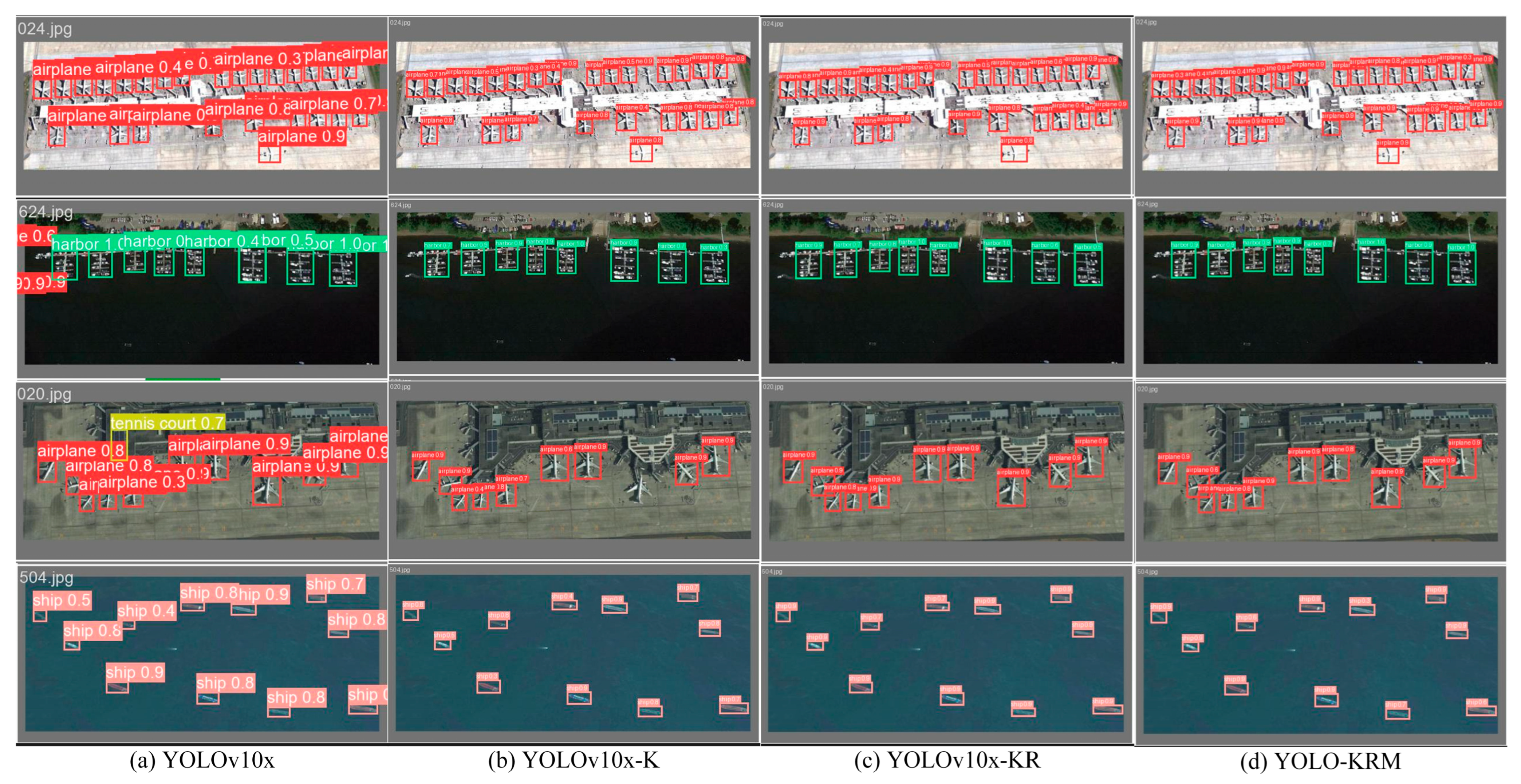

4.6. Experimental Results

5. Conclusions and Outlook

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Configuration | Setting |
|---|---|
| Input size | 640 × 640 |
| Learning rate | 0.01 |
| Optimizer | AdamW |
| Batch size | 4 |
| Epochs | 100 |
| Data augmentation | HSV (H: 0.015, S: 0.7, V: 0.4), Translation (0.1), Scaling (0.5), Horizontal Flip (0.5), Mosaic (1.0) |
| Plotting and analysis libraries | Matplotlib 3.7.4, Seaborn 0.13.2, TensorBoard 2.13.0 |
| Tools | Torch 1.12.0, TorchVision 0.13, CUDA 11.3 |
| Operating system | Windows 11 Home Edition |
| Disk | 512 GB SSD |
| GPU | Server V100 and Windows RTX 3070Ti |
| Memory | DDR5 32 GB |
| CPU | 2 × 718532C |
| Video memory | 16 GB |
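The training settings in the configuration table can be written down as a hyperparameter dictionary. The sketch below assumes an Ultralytics-style training interface; the source does not name the training framework, so the key names (`imgsz`, `lr0`, `hsv_h`, and so on) are an assumption, while the values are taken from the table.

```python
# Hyperparameters from the configuration table.
# Key names follow Ultralytics conventions (an assumption, see above).
train_cfg = {
    "imgsz": 640,          # input size 640 x 640
    "lr0": 0.01,           # initial learning rate
    "optimizer": "AdamW",
    "batch": 4,
    "epochs": 100,
    # Data augmentation
    "hsv_h": 0.015,        # hue jitter
    "hsv_s": 0.7,          # saturation jitter
    "hsv_v": 0.4,          # value (brightness) jitter
    "translate": 0.1,
    "scale": 0.5,
    "fliplr": 0.5,         # horizontal flip probability
    "mosaic": 1.0,         # mosaic probability
}

# In an Ultralytics-style API these would be passed as keyword arguments,
# e.g. model.train(data=<dataset yaml>, **train_cfg); the dataset path is
# not given in the source.
```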

Comparison results on the RSOD dataset:

| Model | P (%)↑ | R (%)↑ | mAP@50 (%)↑ | mAP@50:95 (%)↑ | FPS↑ |
|---|---|---|---|---|---|
| YOLOv6 | 87.61 | 91.19 | 91.38 | 64.29 | 117.2 |
| YOLOv5x | 89.04 | 88.63 | 91.51 | 63.29 | 119.1 |
| YOLOv8 | 91.20 | 87.75 | 90.64 | 64.29 | 121.5 |
| YOLOv10x | 92.37 | 92.13 | 95.91 | 75.95 | 27.4 |
| YOLOv10C | 88.03 | 91.23 | 93.06 | 74.03 | 23.8 |
| YOLOv10D | 93.90 | 93.26 | 96.35 | 75.47 | 32.9 |
| YOLOv10G | 92.88 | 91.23 | 95.98 | 75.49 | 31.8 |
| YOLOv10GD | 93.70 | 92.05 | 95.82 | 76.73 | 32.1 |
| YOLOv10GM | 93.04 | 92.88 | 96.71 | 76.32 | 30.1 |
| YOLOv10GS | 92.52 | 92.05 | 95.81 | 74.76 | 31.4 |
| YOLOv10M | 92.27 | 92.66 | 96.21 | 75.04 | 31.3 |
| YOLO-KRM | 95.48 | 94.50 | 97.68 | 79.77 | 21.8 |

Comparison results on the NWPU VHR-10 dataset:

| Model | P (%)↑ | R (%)↑ | mAP@50 (%)↑ | mAP@50:95 (%)↑ | FPS↑ |
|---|---|---|---|---|---|
| YOLOv6 | 88.42 | 84.44 | 89.12 | 58.62 | 40.5 |
| YOLOv5x | 86.28 | 82.53 | 87.23 | 55.55 | 42.4 |
| YOLOv5s | 91.51 | 88.54 | 91.45 | 59.71 | - |
| YOLOv5n | 92.01 | 84.63 | 90.86 | 59.37 | - |
| YOLOv7-tiny | 90.93 | 86.70 | 91.22 | 58.73 | - |
| YOLOv8 | 90.64 | 86.03 | 91.99 | 61.10 | 123.8 |
| YOLOv10x | 89.92 | 89.53 | 94.84 | 71.77 | 33.3 |
| YOLOv10n | 87.58 | 85.14 | 91.90 | 59.55 | - |
| YOLOv10C | 92.38 | 91.66 | 96.79 | 73.56 | 23.7 |
| YOLOv10D | 90.08 | 89.53 | 94.84 | 71.82 | 33.3 |
| YOLOv10G | 91.13 | 90.94 | 96.22 | 71.77 | 24.9 |
| YOLOv10GD | 91.67 | 90.86 | 95.95 | 71.95 | 32.8 |
| YOLOv10GM | 90.66 | 90.34 | 95.69 | 71.65 | 31.0 |
| YOLOv10GS | 91.06 | 90.16 | 94.68 | 70.36 | 31.5 |
| YOLOv10M | 88.19 | 87.54 | 93.70 | 69.21 | 31.2 |
| YOLOv11n | 90.10 | 86.80 | 92.45 | 60.76 | - |
| YOLO-KRM | 92.99 | 94.34 | 97.59 | 77.00 | 22.1 |

| KAN | RFACO | MLCA | RSOD P (%)↑ | RSOD R (%)↑ | RSOD mAP@50 (%)↑ | RSOD mAP@50:95 (%)↑ | NWPU VHR-10 P (%)↑ | NWPU VHR-10 R (%)↑ | NWPU VHR-10 mAP@50 (%)↑ | NWPU VHR-10 mAP@50:95 (%)↑ |
|---|---|---|---|---|---|---|---|---|---|---|
| × | × | × | 92.37 | 92.13 | 95.91 | 75.95 | 89.92 | 89.53 | 94.84 | 71.77 |
| √ | × | × | 94.44 | 93.39 | 96.99 | 77.54 | 90.63 | 89.63 | 95.76 | 72.16 |
| √ | √ | × | 95.19 | 94.42 | 97.35 | 79.77 | 91.52 | 91.37 | 97.19 | 75.91 |
| √ | √ | √ | 95.48 | 94.50 | 97.68 | 80.07 | 92.99 | 94.34 | 97.59 | 77.00 |
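The contribution of each module in the ablation table can be read off as the difference between consecutive rows. The short sketch below does this arithmetic for the NWPU VHR-10 columns, in percentage points; the variable and function names are illustrative, and the numbers are copied from the table.

```python
METRICS = ("P", "R", "mAP@50", "mAP@50:95")

# NWPU VHR-10 columns of the ablation table, one entry per row.
NWPU_ROWS = [
    ("baseline (YOLOv10x)", (89.92, 89.53, 94.84, 71.77)),
    ("+ KAN", (90.63, 89.63, 95.76, 72.16)),
    ("+ KAN + RFACO", (91.52, 91.37, 97.19, 75.91)),
    ("+ KAN + RFACO + MLCA", (92.99, 94.34, 97.59, 77.00)),
]


def deltas(before, after):
    """Per-metric difference in percentage points, rounded to two decimals."""
    return tuple(round(a - b, 2) for b, a in zip(before, after))


# Gain of each configuration over the previous row.
step_gains = [
    (NWPU_ROWS[i][0], deltas(NWPU_ROWS[i - 1][1], NWPU_ROWS[i][1]))
    for i in range(1, len(NWPU_ROWS))
]

# Gain of the full model over the baseline.
total_gain = deltas(NWPU_ROWS[0][1], NWPU_ROWS[-1][1])

for name, gain in step_gains:
    print(name, dict(zip(METRICS, gain)))
print("total", dict(zip(METRICS, total_gain)))
```

On these figures the full model gains 3.07 points in P, 4.81 in R, 2.75 in mAP@50, and 5.23 in mAP@50:95 over the baseline, with the largest single step in mAP@50:95 (3.75 points) coming from adding RFACO. The comparison results for the same baseline report FPS falling from 33.3 to 22.1, so the accuracy gain comes at a cost in speed.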


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, H.; Bai, X.; Bai, C. Optimization of Object Detection Network Architecture for High-Resolution Remote Sensing. Algorithms 2025, 18, 537. https://doi.org/10.3390/a18090537