Modeling Local Search Metaheuristics Using Markov Decision Processes

Abstract

1. Introduction

2. Previous Work

3. Markov Decision Processes for Local Search Metaheuristics

3.1. Definitions

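- $S$ is a set of states.

- $A(i)$ is a set of actions available in state $i \in S$.

- $P(a)$ is the state transition matrix, given action $a$, where $P(a)_{ij} = p_{ij}(a)$ is the probability of moving from state $i$ to state $j$ when action $a$ is taken.

- $r$ is a reward function, where $r_i(a)$ is the reward obtained in state $i$ when action $a$ is taken.

- $\gamma \in [0, 1)$ is a discount factor.

- Time is discrete and the time horizon is infinite.

- The utility function is the total expected discounted reward.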
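To make the definitions above concrete, the following minimal Python sketch evaluates the total expected discounted reward of a fixed (deterministic, stationary) policy on a small finite MDP. The data layout (`P[a][i][j]` for the transition probabilities, `r[i][a]` for the rewards), the function name `evaluate_policy`, and the two-state example are assumptions made for illustration only. The value of the policy is approximated by iterating the Bellman equation $v(i) = r_i(\pi(i)) + \gamma \sum_j p_{ij}(\pi(i))\, v(j)$ until successive iterates agree.

```python
from typing import Dict, List

# Assumed data layout for a finite MDP (names are illustrative):
#   P[a][i][j] -> probability p_ij(a) of moving from state i to state j under action a
#   r[i][a]    -> reward r_i(a) for taking action a in state i
#   gamma      -> discount factor, 0 <= gamma < 1
Matrix = List[List[float]]


def evaluate_policy(
    P: Dict[str, Matrix],
    r: List[Dict[str, float]],
    policy: List[str],
    gamma: float,
    tol: float = 1e-10,
) -> List[float]:
    """Total expected discounted reward v[i] of a deterministic stationary
    policy (policy[i] is the action taken in state i), obtained by iterating
    v(i) = r_i(pi(i)) + gamma * sum_j p_ij(pi(i)) * v(j) to a fixed point."""
    n = len(policy)
    v = [0.0] * n
    while True:
        new_v = [
            r[i][policy[i]]
            + gamma * sum(P[policy[i]][i][j] * v[j] for j in range(n))
            for i in range(n)
        ]
        # Stop once successive iterates agree to within tol.
        if max(abs(x - y) for x, y in zip(new_v, v)) < tol:
            return new_v
        v = new_v


# Two states; "stay" keeps the current state, "move" swaps it.
P = {"stay": [[1.0, 0.0], [0.0, 1.0]], "move": [[0.0, 1.0], [1.0, 0.0]]}
r = [{"stay": 0.0, "move": 1.0}, {"stay": 2.0, "move": 0.0}]
v = evaluate_policy(P, r, policy=["move", "stay"], gamma=0.5)
print([round(x, 6) for x in v])  # [3.0, 4.0]
```

Because $\gamma < 1$, the iteration is a contraction and converges from any starting vector; for larger state spaces one would typically solve the corresponding linear system directly instead.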

3.2. Optimal Policies

3.3. Local Search Metaheuristics as Policies

- The states are integers $i$: any state $i$ can trivially be converted into a candidate solution, namely the binary representation of the integer $i$.

- The actions available in the current state $i$ are all possible transformations, in all neighborhoods, that are applicable to $i$.

- The transition probabilities, for all states $i, j$ and all actions $a$ applicable in state $i$, are:

- The reward for state $j$ given action $a$ is defined through the objective function $f$ (note the slight abuse of notation here, since $f$ is defined on binary candidate solutions rather than on the integer states, but the conversion is trivial).

- The actions are as defined before (we flip one bit), and

- Let , then
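The components listed above can be sketched for binary candidate solutions as follows. This is a hypothetical encoding, not necessarily the authors' construction: it assumes a fixed bit length `n`, takes the actions to be single bit flips, assumes that each transformation moves state $i$ deterministically to a single successor, and uses the value of a hypothetical objective `f` at the reached state as the reward (maximization). The greedy `hill_climbing_policy` (best improvement, stopping at a local optimum) is likewise an illustrative assumption.

```python
from typing import Callable, List, Optional

Objective = Callable[[List[int]], float]


def to_bits(i: int, n: int) -> List[int]:
    """Candidate solution encoded by state i: its n-bit binary representation,
    most significant bit first."""
    return [(i >> (n - 1 - k)) & 1 for k in range(n)]


def actions(i: int, n: int) -> List[int]:
    """Assumed neighborhood: action k flips the bit of weight 2**k, and every
    flip is applicable to every state."""
    return list(range(n))


def next_state(i: int, k: int) -> int:
    """Assumed deterministic transition: flip bit k of state i."""
    return i ^ (1 << k)


def transition_prob(i: int, j: int, k: int) -> float:
    """p_ij(k): 1 if action k takes state i to state j, otherwise 0."""
    return 1.0 if next_state(i, k) == j else 0.0


def reward(j: int, n: int, f: Objective) -> float:
    """Assumed reward for state j: the objective value of its bit string."""
    return f(to_bits(j, n))


def hill_climbing_policy(i: int, n: int, f: Objective) -> Optional[int]:
    """Best-improvement hill climbing as a policy: return the action whose
    successor has the highest reward, or None if no action improves on i."""
    best_k = max(actions(i, n), key=lambda k: reward(next_state(i, k), n, f))
    if reward(next_state(i, best_k), n, f) > reward(i, n, f):
        return best_k
    return None


def onemax(bits: List[int]) -> float:
    """Hypothetical example objective: number of ones in the bit string."""
    return float(sum(bits))


# Follow the policy from state 0 until it stops at a local optimum.
n, i = 4, 0
trajectory = [i]
while (k := hill_climbing_policy(i, n, onemax)) is not None:
    i = next_state(i, k)
    trajectory.append(i)
print(trajectory)  # [0, 1, 3, 7, 15]
```

With OneMax every flip of a zero bit improves the objective, so the greedy policy reaches the all-ones state $2^n - 1$ after $n$ steps; on multimodal objectives the same policy stops at the first local optimum it reaches.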

3.4. Summary

4. Simulated Annealing

- Let and be defined as in Theorem 1.

- Consider an action $a$ and a state $i$. Using Theorem 1, we obtain
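Simulated annealing can be viewed in the same setting as a stochastic rule for choosing the next state. The sketch below uses one common formulation, which is an assumption here rather than the expression derived from Theorem 1: at a fixed temperature `T`, a single bit flip is proposed uniformly at random and accepted with the Metropolis probability $\min\{1, \exp((f(j) - f(i))/T)\}$, written for maximization of $f$; otherwise the process stays in state $i$. The function `sa_transition_row` returns the induced next-state distribution and `sa_step` samples from it; both names and the OneMax objective are hypothetical.

```python
import math
import random
from typing import Callable, Dict, List

Objective = Callable[[List[int]], float]


def to_bits(i: int, n: int) -> List[int]:
    """n-bit binary representation of state i (most significant bit first)."""
    return [(i >> (n - 1 - k)) & 1 for k in range(n)]


def acceptance(delta: float, T: float) -> float:
    """Metropolis acceptance probability for a change delta = f(j) - f(i)
    when maximizing f at temperature T > 0 (avoids overflow for delta >= 0)."""
    return 1.0 if delta >= 0 else math.exp(delta / T)


def sa_transition_row(i: int, n: int, f: Objective, T: float) -> Dict[int, float]:
    """Next-state distribution of one SA step from state i: each of the n
    single-bit flips is proposed with probability 1/n and then accepted or
    rejected; rejected proposals leave the process in state i."""
    fi = f(to_bits(i, n))
    row: Dict[int, float] = {}
    for k in range(n):
        j = i ^ (1 << k)
        row[j] = acceptance(f(to_bits(j, n)) - fi, T) / n
    # Remaining probability mass: stay in state i (clamped against rounding).
    row[i] = max(0.0, 1.0 - sum(row.values()))
    return row


def sa_step(i: int, n: int, f: Objective, T: float, rng: random.Random) -> int:
    """Sample one SA step: propose a uniform random bit flip, accept it with
    the Metropolis probability, otherwise stay in state i."""
    j = i ^ (1 << rng.randrange(n))
    if rng.random() < acceptance(f(to_bits(j, n)) - f(to_bits(i, n)), T):
        return j
    return i


def onemax(bits: List[int]) -> float:
    """Hypothetical example objective: number of ones."""
    return float(sum(bits))


# From the optimum 111 every flip loses one unit of objective value, so at
# T = 1 each flip is taken with probability exp(-1) / 3 and the process
# stays put with probability 1 - exp(-1).
row = sa_transition_row(7, 3, onemax, T=1.0)
print(row)

# A short seeded run at a fixed temperature.
rng = random.Random(0)
state = 0
for _ in range(50):
    state = sa_step(state, 3, onemax, T=0.5, rng=rng)
```

As the temperature decreases, the probability of accepting a worsening flip vanishes and the rule approaches one that accepts only non-worsening flips; an annealing schedule would lower `T` between steps, which this fixed-temperature sketch does not model.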

5. Computational Experiments

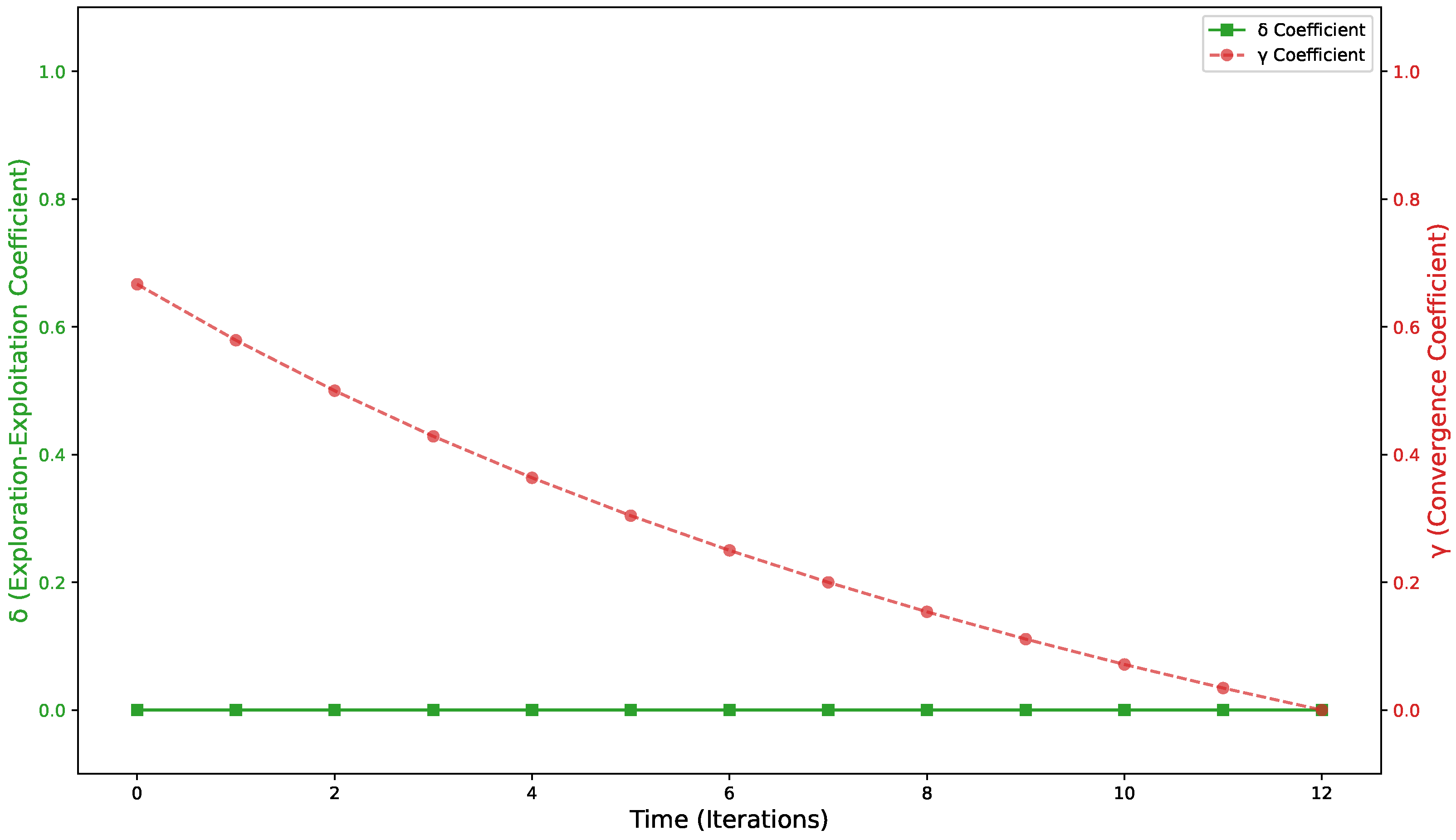

5.1. Hill Climbing

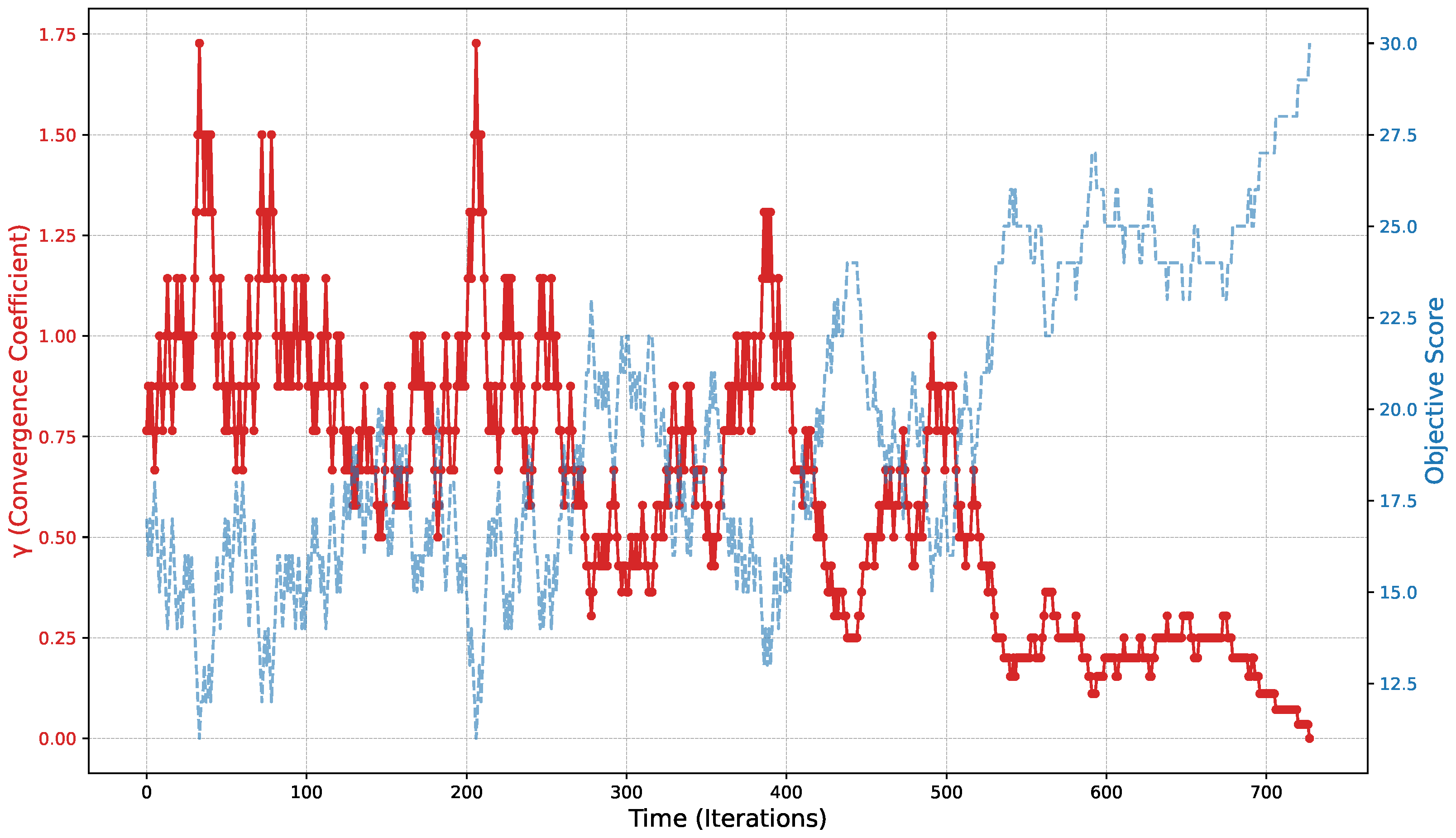

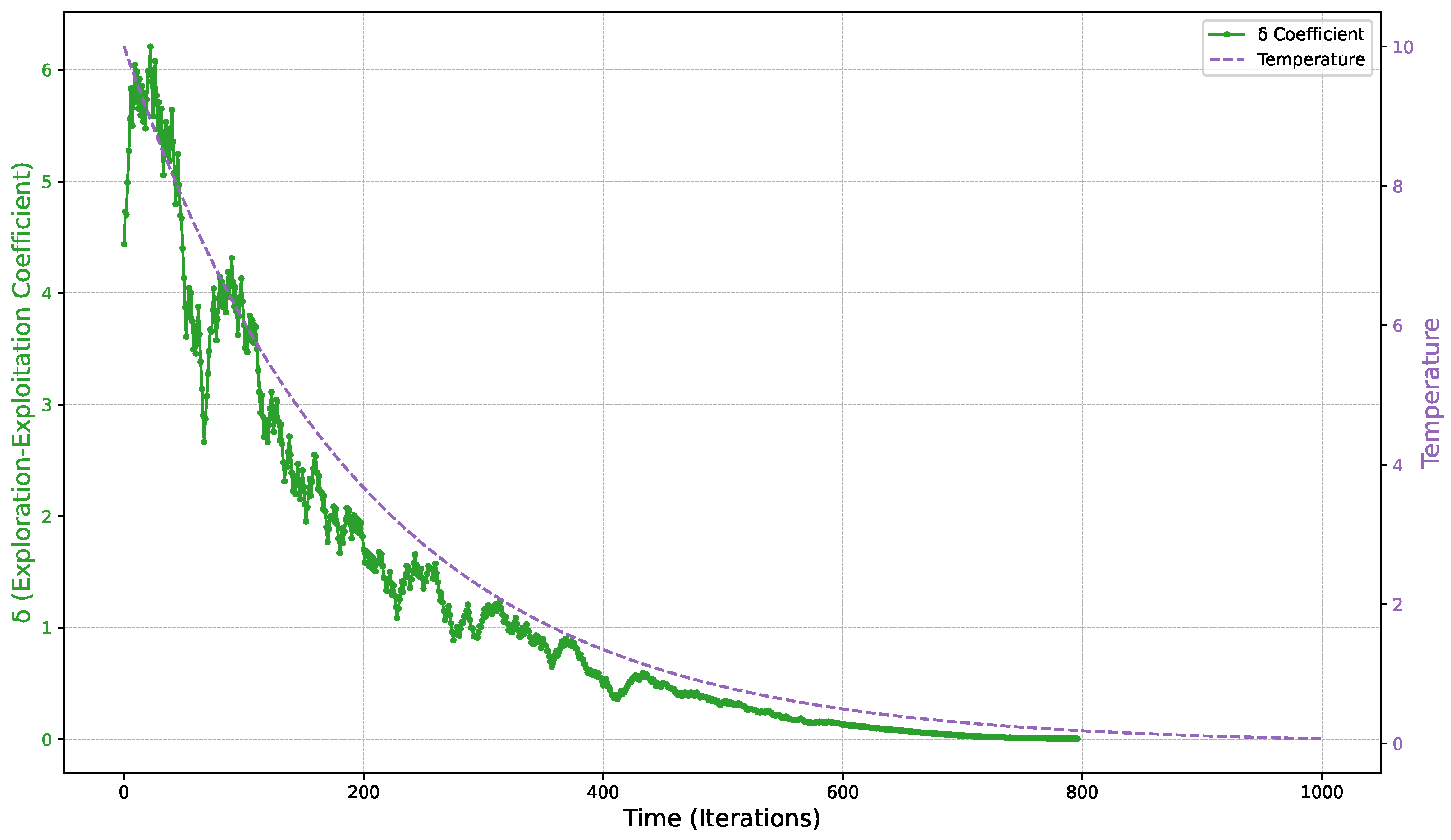

5.2. Simulated Annealing

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ruiz-Torrubiano, R.; Dhungana, D.; Paudel, S.; Buckchash, H. Modeling Local Search Metaheuristics Using Markov Decision Processes. Algorithms 2025, 18, 512. https://doi.org/10.3390/a18080512
