Unveiling the Shadows—A Framework for APT’s Defense AI and Game Theory Strategy

Abstract

1. Introduction

2. Defining Advanced Persistent Threats

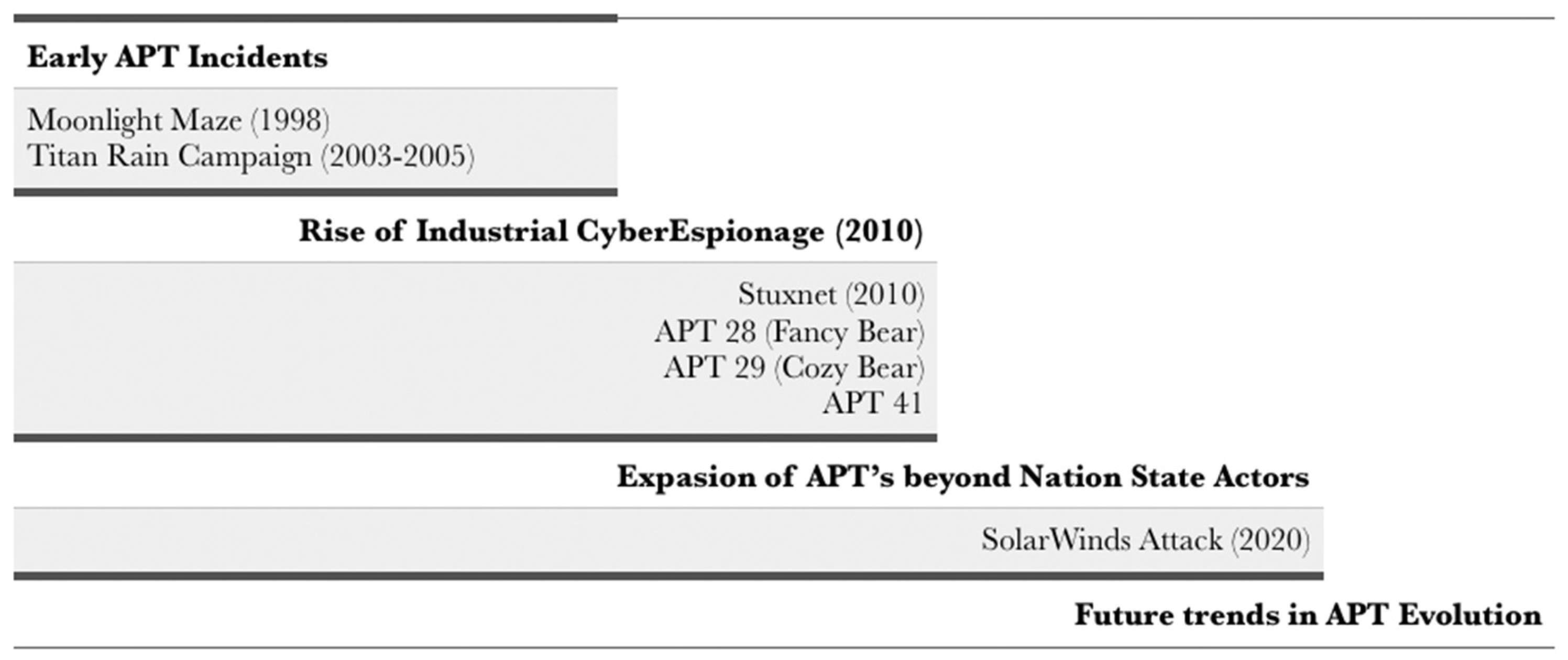

3. Historical Development of APTs

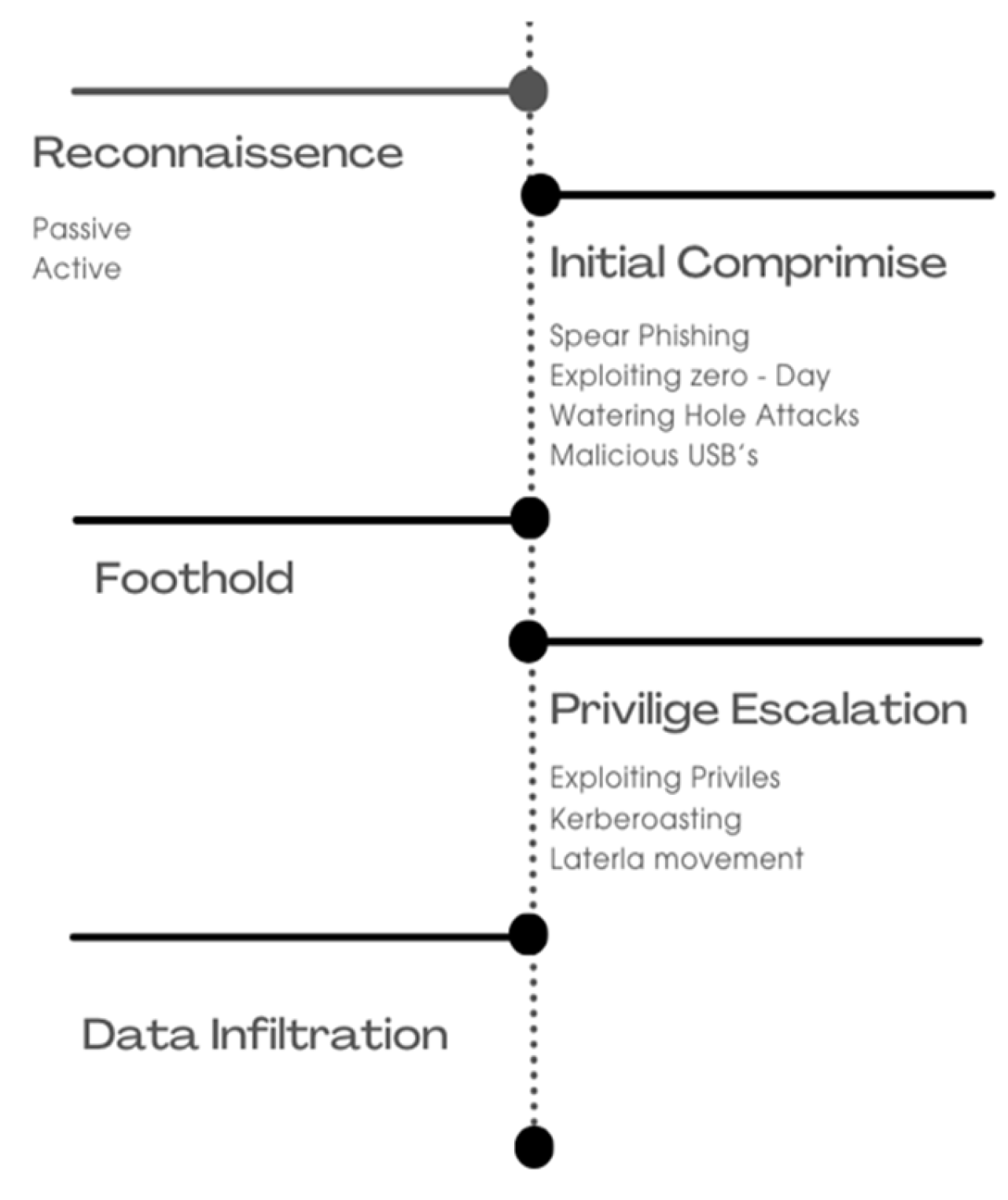

4. APT Attack Processes and Defense Implications

5. Conceptual Framework

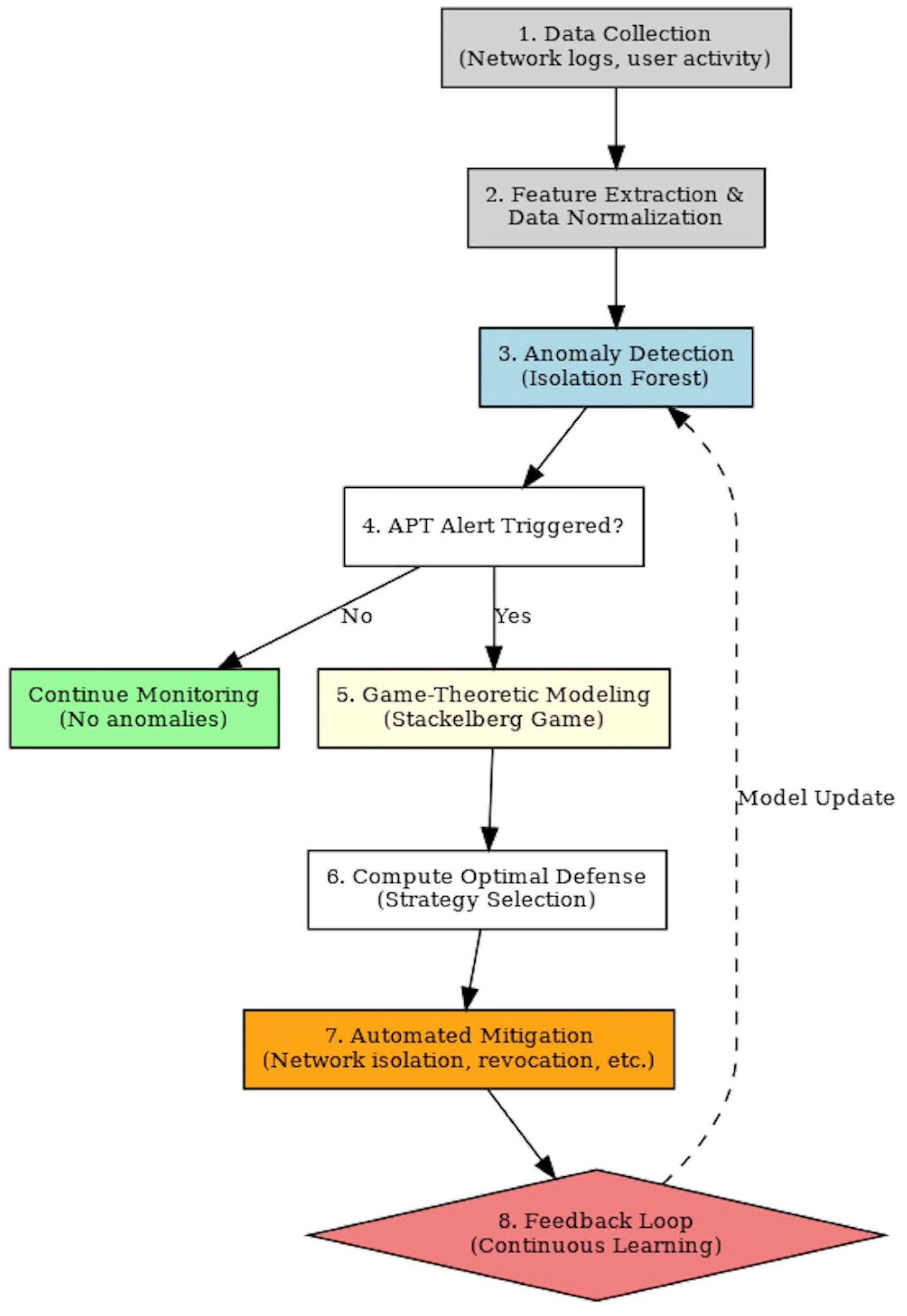

5.1. APT Defense Methodology

5.2. Data Simulation and Preprocessing with Synthetic Data Generation

5.3. Game Strategy (Stackelberg Game Model for Cyber Defense)


- Phase 1 (detection): the isolation forest computes the anomaly score s(x, n).


- Phase 2 (threat prediction): the attacker’s next step is modeled using game theory, and the optimal defensive strategy d* is computed.


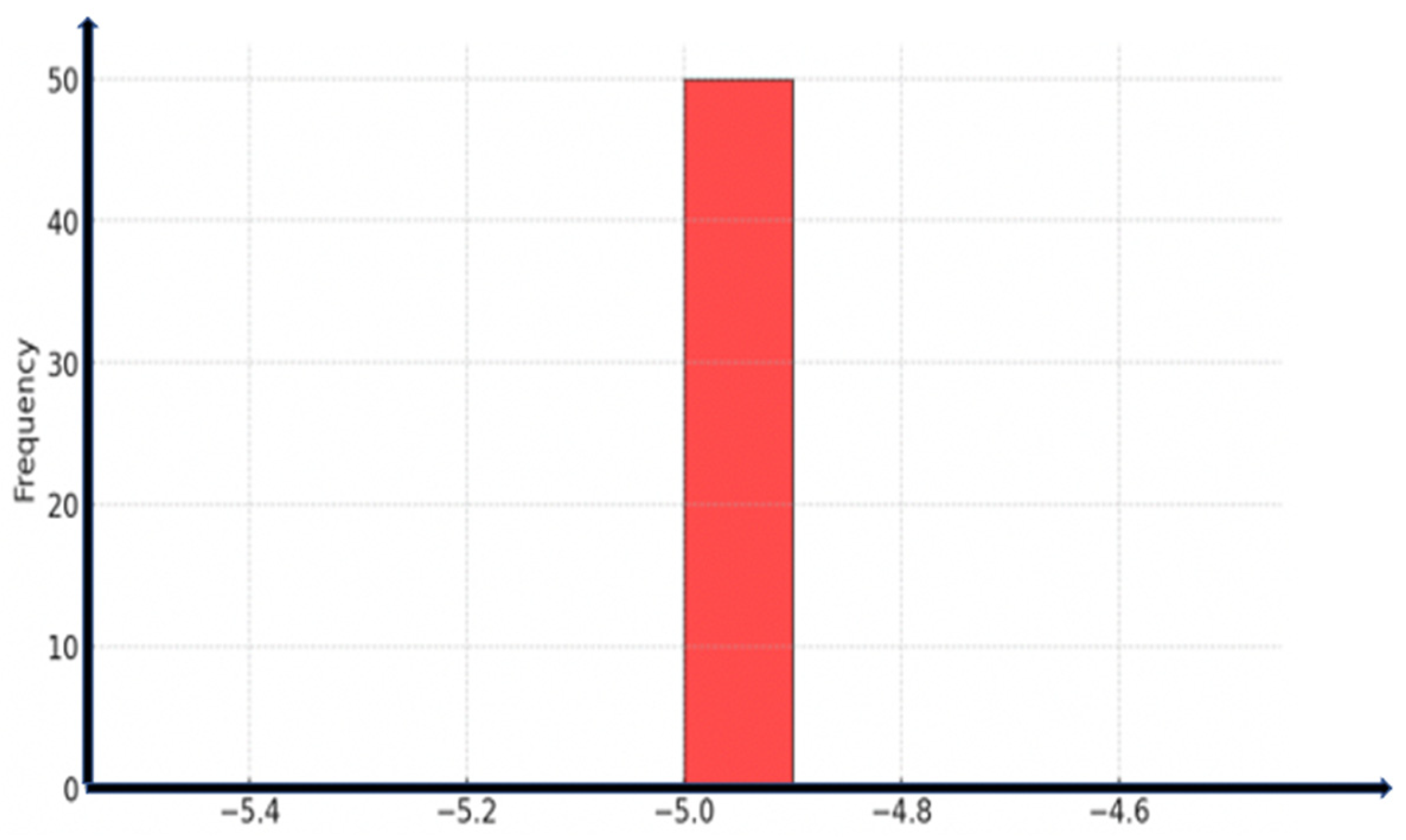

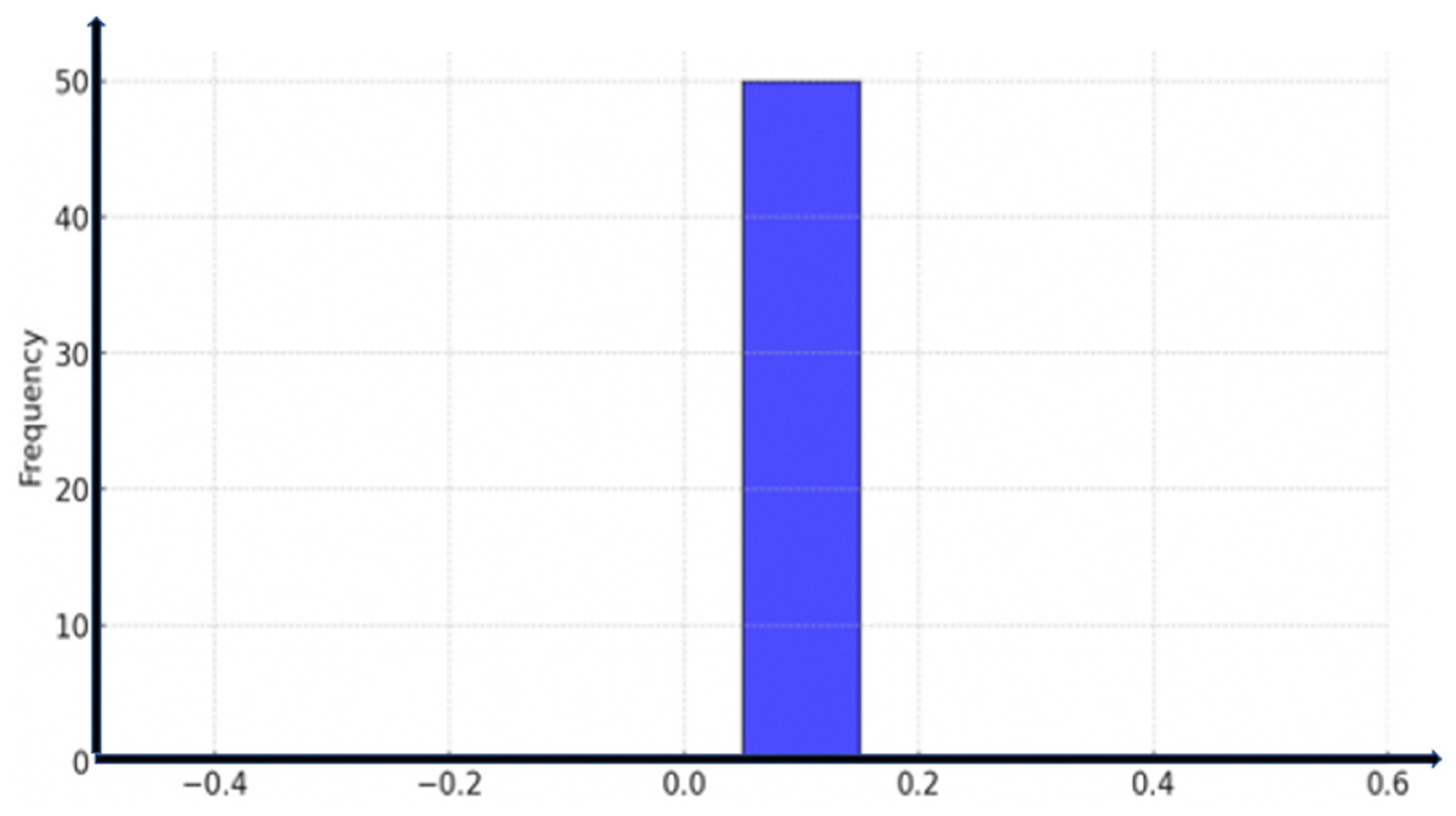

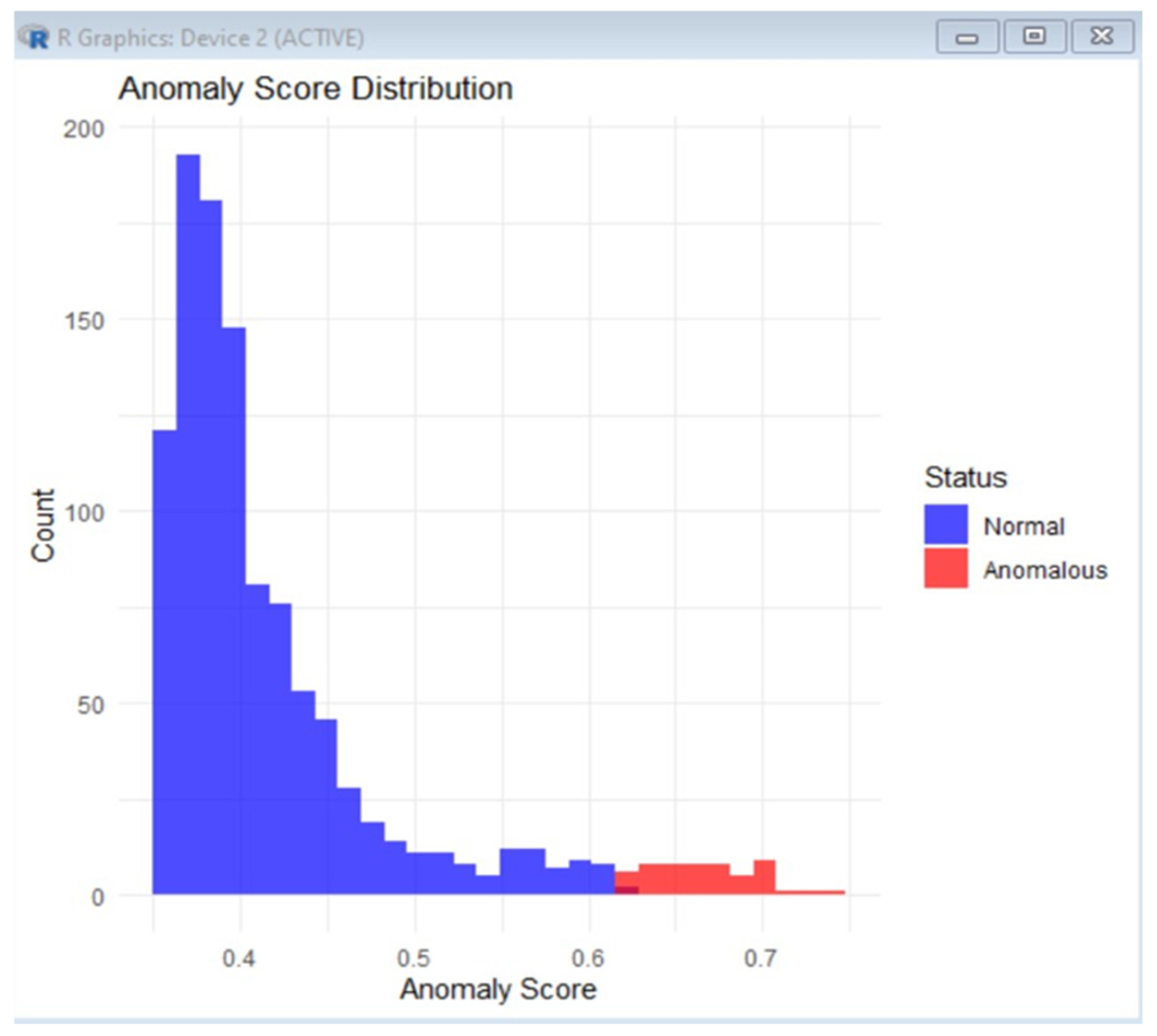

- Phase 3 (automated response): the optimal strategy d* is applied (e.g., network segmentation or access revocation).

With the isolation forest algorithm, the model assumes that 5% of the data are anomalous; the expected value of expect_anomaly_score (Isolation_Algorithm_python) should therefore be approximately 0.05 (5%) if the data are balanced.
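In the original isolation forest formulation, the Phase 1 score s(x, n) is a normalized function of E[h(x)], the average number of splits (path length) needed to isolate a point x across the trees, where n is the number of points sampled to build each tree. The base-R sketch below assumes that standard definition, s(x, n) = 2^(-E[h(x)] / c(n)), where c(n) = 2H(n - 1) - 2(n - 1)/n is the average path length of an unsuccessful binary-search-tree search and the harmonic number H(i) is approximated by ln(i) + 0.5772156649. The helper names `c_n` and `anomaly_score` are hypothetical, and packaged implementations may standardize scores differently.

```r
# Minimal sketch of the isolation forest anomaly score s(x, n), assuming the
# standard definition; c_n() and anomaly_score() are hypothetical helper names.

# c(n): average path length of an unsuccessful search in a binary search tree
# built on n points, used to normalise the observed path lengths.
c_n <- function(n) {
  if (n <= 1) return(0)
  if (n == 2) return(1)
  harmonic <- log(n - 1) + 0.5772156649  # approximation of H(n - 1)
  2 * harmonic - 2 * (n - 1) / n
}

# s(x, n) = 2^(-E[h(x)] / c(n)), where mean_path_length is E[h(x)]
anomaly_score <- function(mean_path_length, n) {
  2^(-mean_path_length / c_n(n))
}

n <- 256                       # subsample size per tree
anomaly_score(2, n)            # short path, isolated quickly: about 0.87
anomaly_score(c_n(n), n)       # path equal to c(n): exactly 0.5
anomaly_score(3 * c_n(n), n)   # long path, hard to isolate: 0.125
```

Scores close to 1 mark points that are isolated after very few splits, while scores well below 0.5 mark points that sit inside dense regions; this is why a high-score threshold is used to flag anomalies.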

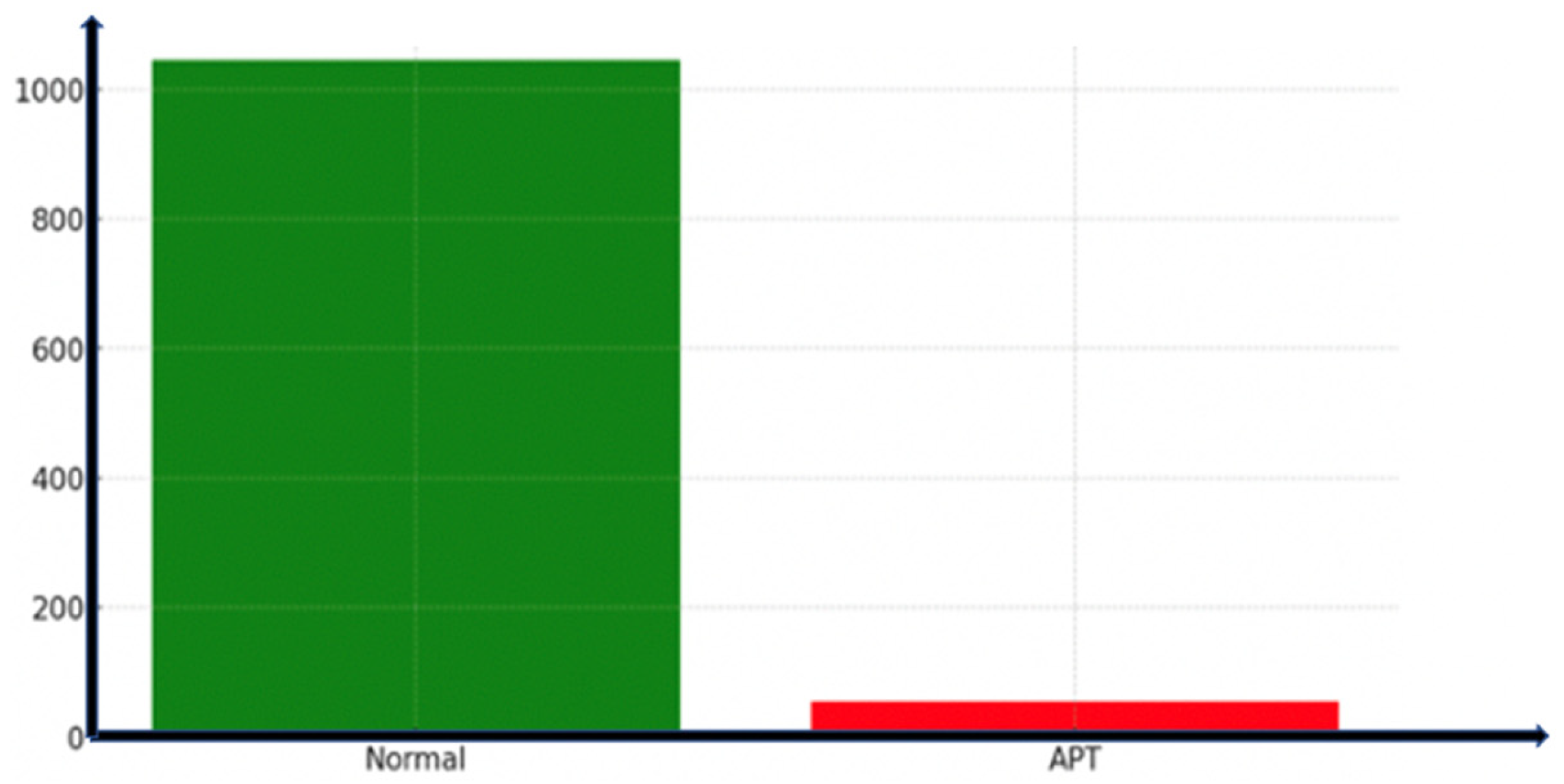

6. Results and Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Symantec Threat Report. The Evolution of Advanced Persistent Threats in 2022. 2022. Available online: https://www.symantec.com/security-center (accessed on 1 December 2024).

2. Wang, Y.; Wang, Y.; Liu, J.; Huang, Z. A network gene-based framework for detecting advanced persistent threats. In Proceedings of the 2014 Ninth International Conference on P2P, Parallel, Grid, Cloud and Internet Computing, Guangzhou, China, 8–10 November 2014; pp. 97–102.

3. IBM Corporation. IBM Security X-Force Threat Intelligence Index 2023; IBM Security; IBM Corporation: Armonk, NY, USA, 2023. Available online: https://www.ibm.com/reports/threat-intelligence (accessed on 1 January 2025).

4. Panahnejad, M.; Mirabi, M. APT-Dt-KC: Advanced persistent threat detection based on kill-chain model. J. Supercomput. 2022, 4, 8644–8677.

5. Zarras, A.; Xu, P.; Xiao, H.; Kolosnjaji, B. Artificial Intelligence for Cybersecurity; Packt Publishing Ltd.: Birmingham, UK, 2024.

6. Araujo, T.; Helberger, N.; Kruikemeier, S.; de Vreese, C.H. In AI we trust? Perceptions about automated decision-making by artificial intelligence. AI Soc. 2020, 35, 611–623.

7. National Institute of Standards and Technology (NIST). Cybersecurity Framework and APT Mitigation Guidelines; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2023. Available online: https://www.nist.gov/cyberframework (accessed on 1 January 2025).

8. FireEye Intelligence. APT Group Activity Analysis: 2023 Review. Available online: https://www.fireeye.com (accessed on 1 January 2025).

9. Xuan, C.D. Detecting APT attacks based on network traffic using machine learning. J. Web Eng. 2021, 20, 171–190.

10. Wagh, N.; Jadhav, Y.; Tambe, M.; Dargad, S. Eclipsing security: An in-depth analysis of advanced persistent threats. Int. J. Sci. Res. Eng. Manag. (IJSREM) 2023, 7, 1–8.

11. Khalid, M.N.A.; Al-Kadhimi, A.A.; Singh, M.M. Recent developments in game-theory approaches for the detection and defense against advanced persistent threats: A systematic review. Mathematics 2023, 11, 1353.

12. Challa, N. Unveiling the shadows: A comprehensive exploration of advanced persistent threats and silent intrusions in cybersecurity. J. Artif. Intell. Cloud Comput. 2022, 190, 2–5.

13. AL-Aamri, A.S.; Abdulghafor, R.; Turaev, S. Machine learning for APT detection. Sustainability 2023, 15, 13820.

14. Perumal, S.; Kola Sujatha, P. Stacking Ensemble-based XSS Attack Detection Strategy Using Classification Algorithms. In Proceedings of the 2021 6th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 8–10 July 2021; pp. 897–901.

15. Abdel-Basset, M.; Chang, V.; Mohamed, R. HSMA_WOA: A hybrid novel Slime mould algorithm with whale optimization algorithm for tackling the image segmentation problem of chest X-ray images. Appl. Soft Comput. J. 2020, 95, 106642.

16. Kaspersky Security Bulletin. State-Sponsored Cyber-Espionage and APT Trends. 2023. Available online: https://academy.kaspersky.com/ (accessed on 1 January 2025).

17. IBM X-Force Threat Intelligence Index. Tracking Advanced Cyber Threats and APT Evolution. 2023. Available online: https://secure-iss.com/wp-content/uploads/2023/02/IBM-Security-X-Force-Threat-Intelligence-Index-2023.pdf (accessed on 1 February 2025).

18. CrowdStrike Global Threat Report. APT Operations and Cyber Threat Landscape. 2023. Available online: https://www.crowdstrike.com (accessed on 1 December 2024).

19. Abu Talib, M.; Nasir, Q.; Bou Nassif, A.; Mokhamed, T.; Ahmed, N.; Mahfood, B. APT beaconing detection: A systematic review. Comput. Secur. 2022, 122, 102875.

20. Sengupta, S.; Chowdhary, A.; Huang, D.; Kambhampati, S. General Sum Markov Games for Strategic Detection of Advanced Persistent Threats Using Moving Target Defense in Cloud Networks. In Decision and Game Theory for Security; Springer: Cham, Switzerland, 2019; pp. 492–512.

21. Google Threat Analysis Group (TAG). Threat Intelligence Insights on APT Groups. 2023. Available online: https://blog.google/threat-analysis-group (accessed on 1 January 2025).

22. SolarWinds Incident Analysis. Technical Breakdown of the SolarWinds Supply Chain Attack. 2021. Available online: https://www.fortinet.com/resources/cyberglossary/solarwinds-cyber-attack (accessed on 1 January 2025).

23. Alshamrani, A.; Myneni, S.; Chowdhary, A.; Huang, D. A Survey on Advanced Persistent Threats: Techniques, Solutions, Challenges, and Research Opportunities. IEEE Commun. Surv. Tutor. 2019, 21, 1851–1877.

24. Andersen, J. Understanding and interpreting algorithms: Toward a hermeneutics of algorithms. Media Cult. Soc. 2020, 42, 1479–1494.

25. Cisco Talos Intelligence. Understanding Advanced Persistent Threats: Case Studies and Defenses. 2023. Available online: https://blog.talosintelligence.com (accessed on 1 November 2024).

26. Al-Kadhimi, A.A.; Singh, M.M.; Khalid, M.N.A. A systematic literature review and a conceptual framework proposition for advanced persistent threats detection for mobile devices using artificial intelligence techniques. Appl. Sci. 2023, 13, 8056.

27. Daimi, K. Computer and Network Security Essentials; Springer International Publishing AG: London, UK, 2018; pp. 1–618.

28. Alsahli, M.S.; Almasri, M.M.; Al-Akhras, M.; Al-Issa, A.I.; Alawairdhi, M. Evaluation of Machine Learning Algorithms for Intrusion Detection System in WSN. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 617–626.

29. Mandiant Threat Intelligence. Advanced Persistent Threats: Trends and Case Studies, Mandiant Reports. 2023. Available online: https://www.mandiant.com/resources/reports (accessed on 1 January 2025).

30. Xiong, C.; Zhu, T.; Dong, W.; Ruan, L.; Yang, R.; Cheng, Y.; Chen, Y.; Cheng, S.; Chen, X. Conan: A Practical Real-Time APT Detection System with High Accuracy and Efficiency. IEEE Trans. Dependable Secur. Comput. 2020, 19, 551–565.

31. Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The Arithmetic Optimization Algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

32. Alam, K.M.R.; Siddique, N.; Adeli, H. A dynamic ensemble learning algorithm for neural networks. Neural Comput. Appl. 2020, 32, 8675–8690.

33. Alsanad, A.; Altuwaijri, S. Advanced Persistent Threat Attack Detection using Clustering Algorithms. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 640–649.

34. Do, X.C.; Huong, D.T.; Nguyen, T. A novel intelligent cognitive computing-based APT malware detection for Endpoint systems. J. Intell. Fuzzy Syst. 2022, 43, 3527–3547.

35. Hadlington, L. Human factors in cybersecurity; examining the link between Internet addiction, impulsivity, attitudes towards cybersecurity, and risky cybersecurity behaviours. Heliyon 2017, 3, e00346.

36. Zimba, A.; Chen, H.; Wang, Z.; Chishimba, M. Modeling and detection of the multi-stages of Advanced Persistent Threats attacks based on semi-supervised learning and complex networks characteristics. Future Gener. Comput. Syst. 2020, 106, 501–517.

| Characteristic | Advanced Persistent Threats (APTs) | Traditional Cyber Threats |
|---|---|---|
| Motivation | Espionage, strategic sabotage, and geopolitical influence | Financial gain; opportunistic attacks |
| Tactics | Multi-stage infiltration, stealth, and persistence | Quick exploitation; immediate impact |
| Duration | Long-term; often months to years | Short-term; typically minutes to days |
| Attack Vectors | Phishing, zero-days, and supply chain attacks | Ransomware, brute force, and malware |
| Defensive Evasion | Adaptive, AI-powered evasion techniques | Basic obfuscation and encryption |

| Year | APT Name | Attribution | Target Sector | Tactic |
|---|---|---|---|---|
| 1998 | Moonlight Maze | Unknown (suspected Russia) | Gov. and Research | Espionage, data exfiltration |
| 2010 | Stuxnet | USA/Israel (alleged) | Nuclear (Iran) | ICS sabotage |
| 2016 | APT28/29 | Russia | Political (USA) | Phishing, info warfare |
| 2020 | SolarWinds | Russia | Supply Chain (Global) | Backdoor in software update |
| 2023 | APT41 | China | Health, Telecom, Gaming | Credential theft, living off the land (LotL) |

Isolation forest algorithm for anomaly detection

```r
# Applying Isolation Forest for Anomaly Detection
library(isotree)  # assumed package: provides isolation.forest() and its predict() method

# `data` is assumed to be a data frame whose first three columns are numeric features
iso_forest <- isolation.forest(data[, 1:3], ntrees = 100, sample_size = 256)

# Predict anomaly scores
data$score <- predict(iso_forest, newdata = data[, 1:3], type = "score")

# Flag anomalies based on threshold (scores at or above the 95th percentile)
threshold <- quantile(data$score, 0.95)
data$anomaly <- ifelse(data$score >= threshold, 1, 0)

# Game Theory: Stackelberg Optimization
payoff_matrix <- matrix(c(0, -10, 5, -5), nrow = 2, byrow = TRUE)
costs <- c(-1, -1)                        # Objective function (minimization)
constraints <- matrix(c(1, 1), nrow = 1)  # One equality constraint on x1 + x2
bounds <- c(1)                            # Right-hand side: x1 + x2 = 1
```
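Because the threshold in the listing above is the 95th percentile of the scores, about 5% of the observations are flagged regardless of how many true anomalies the data contain, which matches the 5% assumption stated for Phase 3. The base-R sketch below illustrates this on a hypothetical synthetic dataset with 5% injected anomalies. To stay runnable without the isotree package, it substitutes a simple distance-from-median score for the isolation forest score; the 950/50 split, the mean shift of 6, and the stand-in score are assumptions for illustration only.

```r
# Sketch: 95th-percentile thresholding on hypothetical synthetic data with 5% anomalies.
# The distance-from-median score is a stand-in for the isolation forest score.
set.seed(42)
n_normal <- 950
n_anomalous <- 50
normal <- matrix(rnorm(n_normal * 3), ncol = 3)                     # baseline behaviour
anomalous <- matrix(rnorm(n_anomalous * 3, mean = 6), ncol = 3)     # injected outliers
data <- as.data.frame(rbind(normal, anomalous))
data$is_anomaly <- rep(c(0, 1), times = c(n_normal, n_anomalous))  # ground truth

# Stand-in score: Euclidean distance of each row from the column-wise median
center <- apply(data[, 1:3], 2, median)
data$score <- sqrt(rowSums(sweep(as.matrix(data[, 1:3]), 2, center)^2))

# Same thresholding rule as the listing: flag the top 5% of scores
threshold <- quantile(data$score, 0.95)
data$anomaly <- ifelse(data$score >= threshold, 1, 0)

flagged_share <- mean(data$anomaly)                 # share of rows flagged
recall <- mean(data$anomaly[data$is_anomaly == 1])  # share of injected anomalies caught
flagged_share
recall
```

The flagged share is fixed by the chosen quantile; whether the flagged rows are the true anomalies depends entirely on the quality of the score, which is the role the isolation forest plays in the framework.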

Algorithm for cyber defense in Stackelberg model

```r
# Solve the Stackelberg game using linear programming
library(lpSolve)  # provides lp()

# Uses costs, constraints and bounds defined in the previous listing
optimal_defense <- lp(direction = "min", objective.in = costs,
                      const.mat = constraints, const.dir = "=",
                      const.rhs = bounds, all.int = FALSE)

# Extract defense strategy (lp() keeps all decision variables non-negative)
defense_strategy <- optimal_defense$solution

# Compute Expected Impact
impact <- sum(defense_strategy * c(-10, -5))
```
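In the listing above, both objective coefficients equal -1 and the only constraint is that the two defense weights sum to 1, so every feasible split has the same objective value and payoff_matrix does not enter the optimization. As an illustration of how a leader-follower commitment can be derived from the payoffs themselves, the base-R sketch below computes a strong Stackelberg equilibrium for a defender with two actions: the defender commits to a mixed strategy, the attacker best-responds (ties broken in the defender's favor), and candidate commitments are limited to the two pure strategies and the points where the attacker is indifferent between two attacks. Between those points the attacker's best response is fixed and the defender's payoff is linear in the commitment, so the optimum must lie at one of them. The sketch reads payoff_matrix, hypothetically, as the defender's payoffs in a zero-sum game (rows are defensive actions, columns are attacks); `solve_stackelberg_2xn` and the row and column labels are hypothetical names.

```r
# Sketch: strong Stackelberg equilibrium when the defender (leader) has two actions.
# U_d[i, j] / U_a[i, j]: defender / attacker payoff when the defender plays row i
# and the attacker plays column j. solve_stackelberg_2xn() is a hypothetical helper.
solve_stackelberg_2xn <- function(U_d, U_a, tol = 1e-9) {
  stopifnot(nrow(U_d) == 2, all(dim(U_d) == dim(U_a)))
  n <- ncol(U_a)
  # Candidate commitments p = P(row 1): both pure strategies plus every point
  # in (0, 1) where the attacker is indifferent between columns j and k.
  cand <- c(0, 1)
  for (j in seq_len(n)) {
    for (k in seq_len(n)) {
      if (j >= k) next
      slope <- (U_a[1, j] - U_a[2, j]) - (U_a[1, k] - U_a[2, k])
      if (abs(slope) > tol) {
        p <- (U_a[2, k] - U_a[2, j]) / slope
        if (p > 0 && p < 1) cand <- c(cand, p)
      }
    }
  }
  best <- list(value = -Inf)
  for (p in cand) {
    x <- c(p, 1 - p)                    # defender mixed strategy
    att <- as.vector(x %*% U_a)         # attacker expected payoff per column
    def <- as.vector(x %*% U_d)         # defender expected payoff per column
    br <- which(att >= max(att) - tol)  # attacker best responses
    j <- br[which.max(def[br])]         # tie-break in the defender's favour
    if (def[j] > best$value) {
      best <- list(defense = setNames(x, rownames(U_d)), attack = j, value = def[j])
    }
  }
  best
}

# Hypothetical zero-sum reading of the listing's payoff_matrix (defender payoffs)
payoff_matrix <- matrix(c(0, -10, 5, -5), nrow = 2, byrow = TRUE,
                        dimnames = list(c("network_segmentation", "access_revocation"),
                                        c("attack_1", "attack_2")))
res <- solve_stackelberg_2xn(payoff_matrix, -payoff_matrix)
res$defense  # weight on each defensive action: 0 and 1
res$value    # defender's expected payoff: -5
```

Under this zero-sum reading the second row dominates the first, so the sketch returns a pure commitment to it. With general-sum payoffs where commitment pays off, for example defender payoffs `matrix(c(1, 3, 0, 2), nrow = 2, byrow = TRUE)` against attacker payoffs `diag(2)`, the same function returns an even mix with expected payoff 2.5, compared with a payoff of 1 in the simultaneous-move equilibrium.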

| Study | Detection Method | Mitigation Strategy | Strategic Modeling | Evaluation Dataset | Key Limitation |
|---|---|---|---|---|---|
| Khalid [11] | SVM + ensemble classification | Rule-based isolation | Not included | Public dataset (CICIDS 2017) | No response modeling |
| Al-Aamri [13] | Random forest, AI classifier | Partial; manual | Not included | Synthetic + enterprise log data | Detection-focused only |
| Challa [12] | Deep learning (LSTM) | Logging and alerting only | Not included | Simulated APT events | No real-time mitigation |
| Xiong [30] | AI-based + anomaly detection | Semi-automated with feedback loop | Implicit only | Large-scale lab tests | No formalized defense strategy |
| Our Approach | Isolation forest (unsupervised) | Automated real-time mitigation | Stackelberg game model | 50 simulations (synthetic dataset) | Requires real-world validation |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Brandão, P.; Silva, C. Unveiling the Shadows—A Framework for APT’s Defense AI and Game Theory Strategy. Algorithms 2025, 18, 404. https://doi.org/10.3390/a18070404
