Artificial Intelligence and the Human–Computer Interaction in Occupational Therapy: A Scoping Review

Abstract

1. Introduction

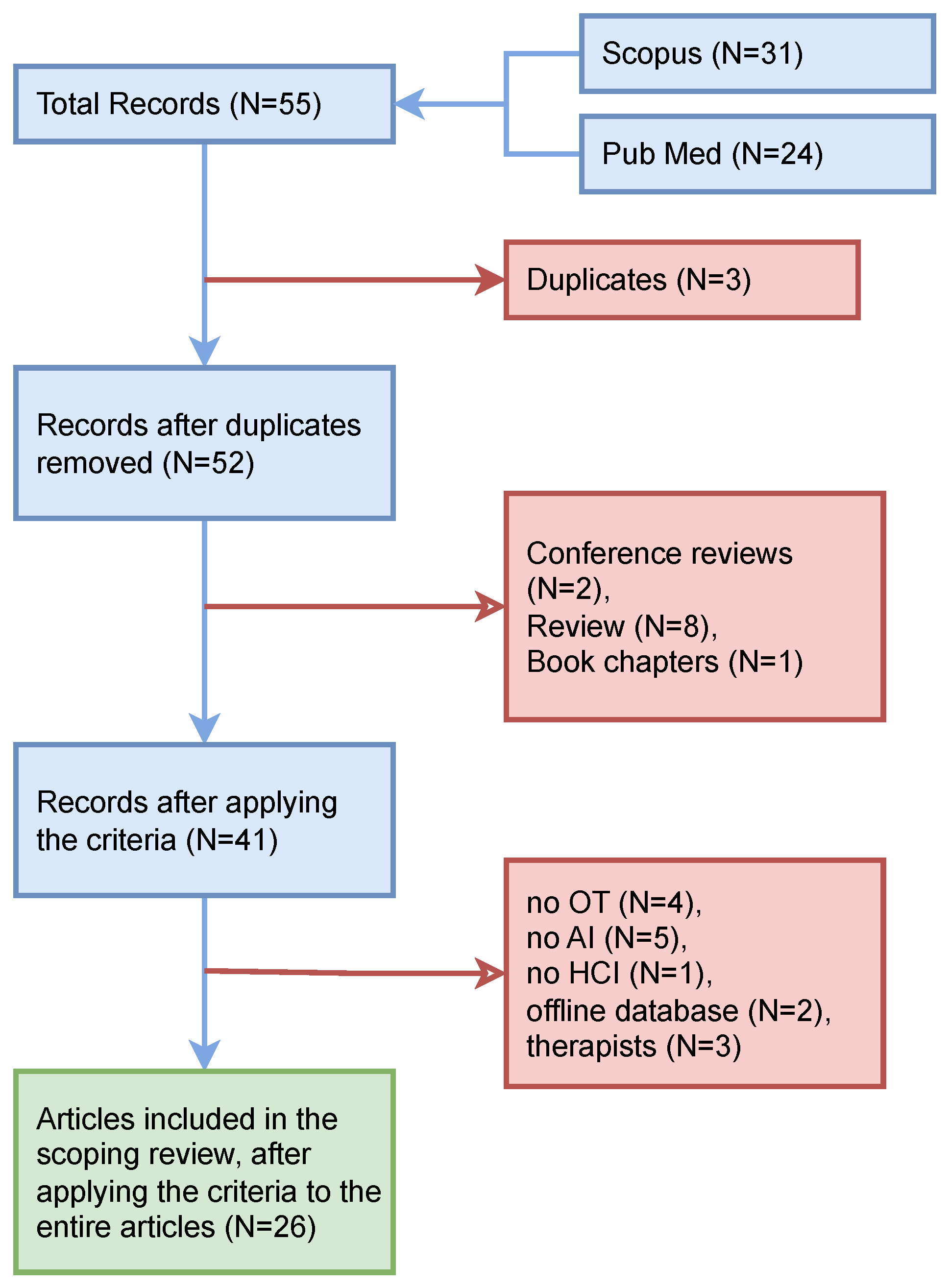

2. Materials and Methods

2.1. Literature Searches

2.2. Eligibility Criteria

2.2.1. Inclusion Criteria

2.2.2. Exclusion Criteria

2.3. Data Extraction

2.4. Assessed Outcomes

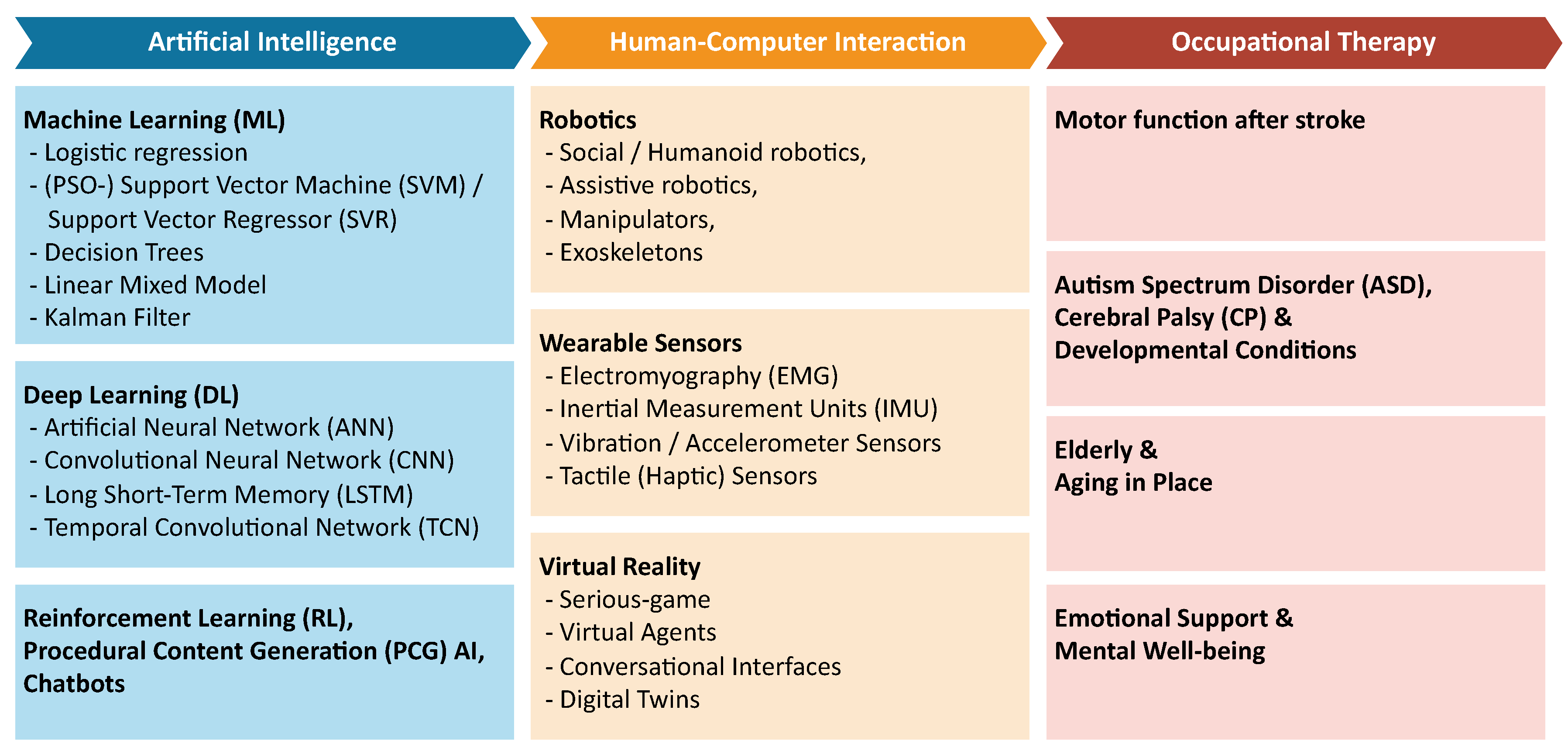

3. Results

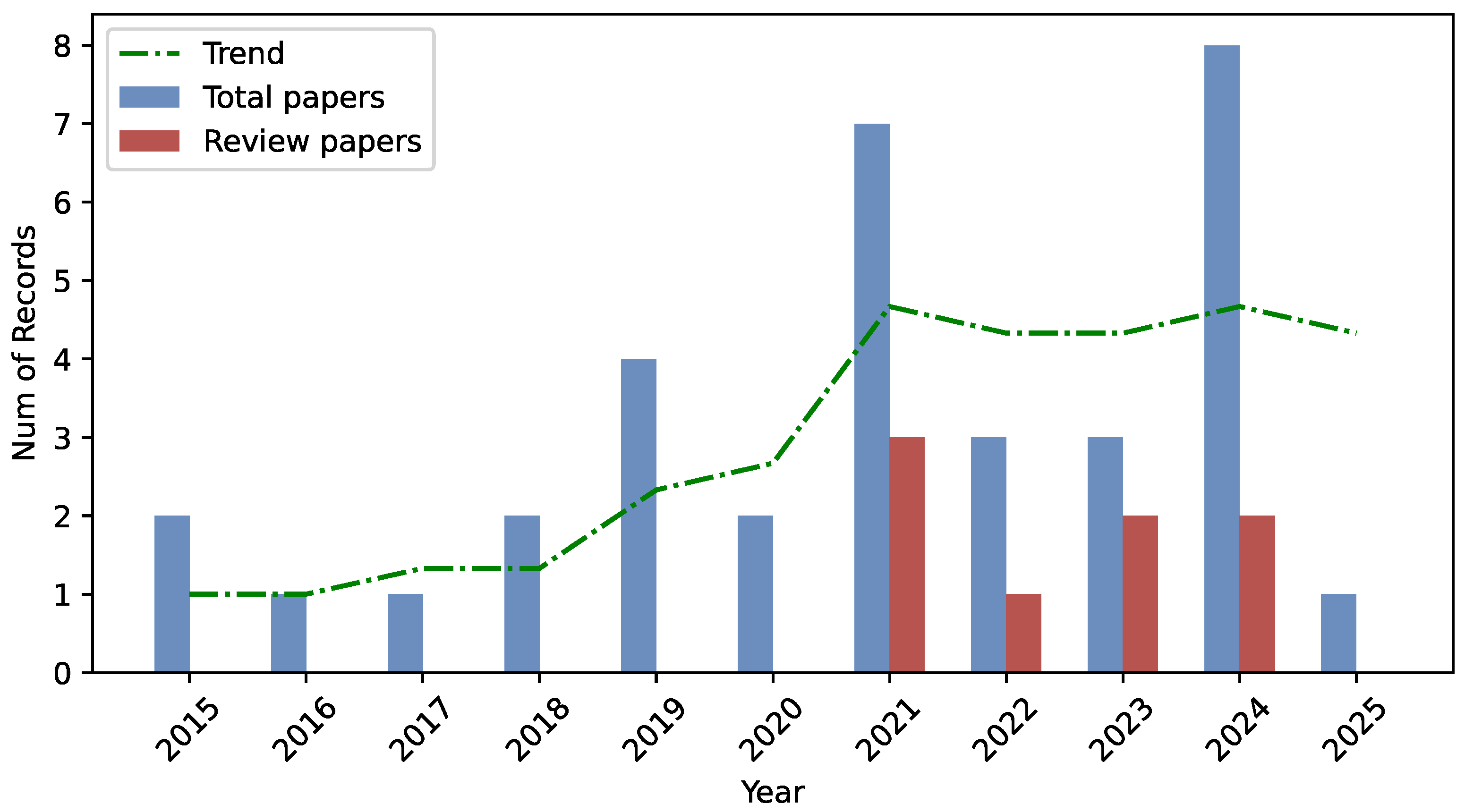

3.1. Search Results

3.1.1. Motor Function After Stroke

| Authors | Application Domain | Technology | Input Data | Validation Method | Employed Subjects | Results |
|---|---|---|---|---|---|---|
| Lauer et al., 2024 [31] | Exoskeleton and serious game-based stroke rehabilitation therapy configuration | Digital twins, exoskeleton, serious-game VR, logistic regression, and CNN | sEMG, RGB, and motion tracking | Real test on young adults | 8 patients and 6 therapists | Detection of unnatural supportive movements |
| Li et al., 2023 [32] | Patient upper-limb motor ability recognition and space reshaping | 7-DoF robotic arm, particle swarm optimization (PSO)–SVM, and LSTM | EMG and kinematic data | Real test on young adults | 10 healthy | 73.47, 61.61, and 68.07% recognition of three training stages, and 0.3890 MAE on torque estimation |
| Connan et al., 2021 [33] | Teleoperation of a bimanual humanoid robot for daily task execution | Humanoid robot TORO and interactive ML | EMG and IMU-based motion tracking | Real test on young adults | 2 patients and 7 healthy | Perceived difficulty decrease between first and last repetition of daily tasks |
| Adams et al., 2017 [35] | VR exercises for upper extremity recovery after stroke | VR (SaeboVR), Kalman filter-based kinematic pose estimation, and linear mixed model | Kinect sensory data | Real test on adults | 15 patients | FMUE test and WMFT–FAS |
| Adams et al., 2014 [34] | VR exercises for upper extremity assessment after stroke | VR (VOTA) and unscented Kalman filter | Kinect sensory data | Real test on adults | 14 patients | Spearman correlation between VOTA-duration and WMFT-TIME |

3.1.2. ASD and Developmental Conditions

3.1.3. Elderly Support and AL

| Authors | Application Domain | Technology | Input Data | Validation Method | Employed Subjects | Results |

|---|---|---|---|---|---|---|

| Tsui et al., 2025 [51] | Factors of robotic assistants for elderly | AI-enabled humanoid robots | Focus groups and questionnaires | Real test on older adults | 82 caregivers and care receivers | Robots performing household and communicating |

| Fei et al., 2024 [55] | Visually impaired assistive system for grasping | Dobot magician manipulator and YOLOv5 CNN | RGB-D images, speech data, and vibration data | Real test on healthy subjects | n/a | 99.3% mAP object detection, 37 s assisted grasping, and 24 s active grasping |

| Shahria et al., 2024 [56] | Object detection for AAL assistive robotic arms | Dataset | RGB images | No | − | 112,000 images from COCO, Open Images, LVIS, and Roboflow Universe |

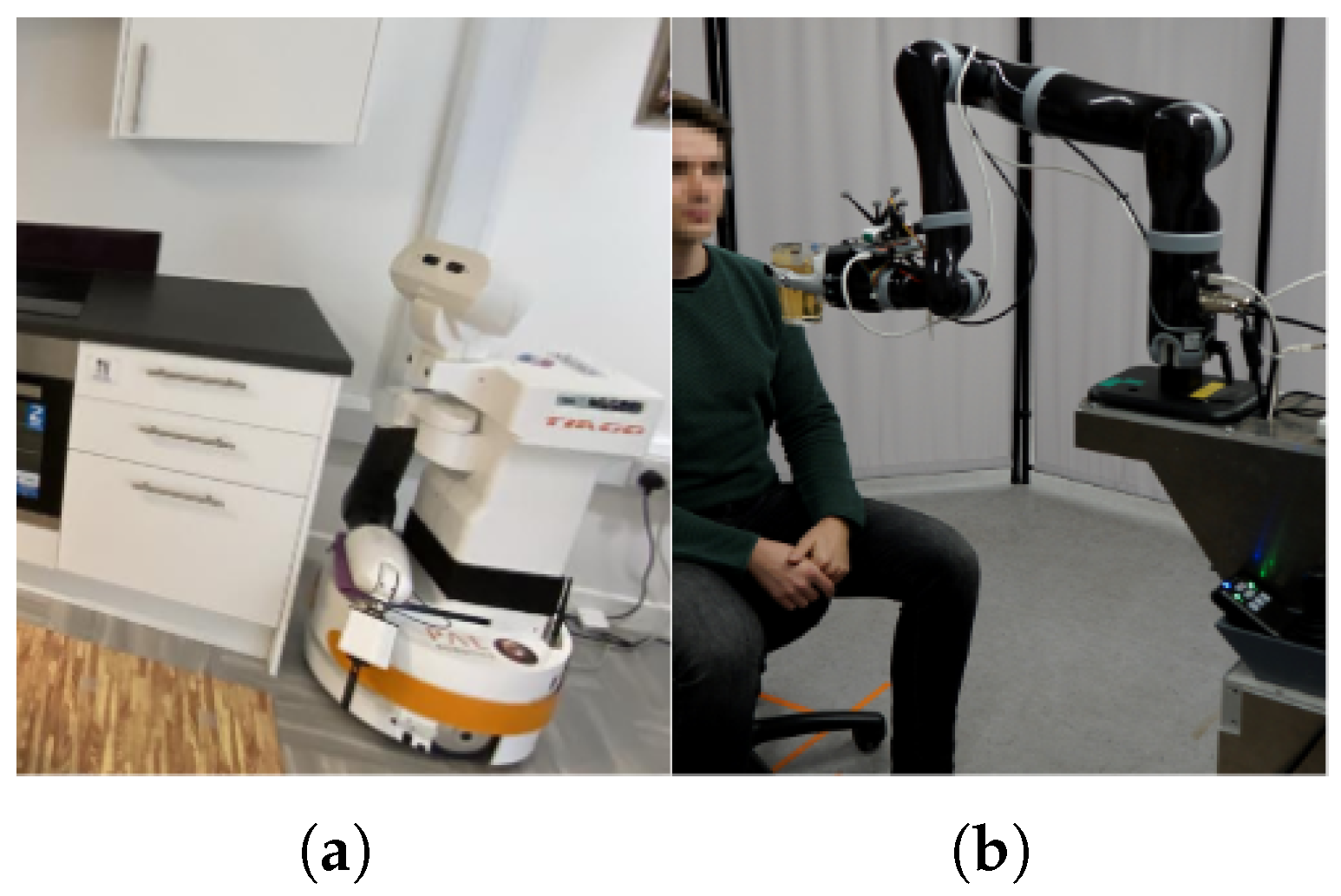

| Ranieri et al., 2021 [53] | Multi-modal ADL recognition | TIAGo robot, RALT living-lab, and CNN-LSTM/TCN | RGB images and IMU data | Real test on adults | 16 healthy | 98.61% action recognition on real data |

| Try et al., 2021 [57] | Robotic manipulation for assisted drinking | Kinova Jaco robotic arm, face, and dlib landmark detection | RGB images and distance sensory data | Real test on adults | 9 healthy | cup delivery success rate |

| Tiersen et al., 2021 [52] | Smart home systems for people with dementia and caregivers | Tablet-based puzzles, chatbots, smartwatches, ambient sensors, and physiological measurement devices | Semi-structured interviews, focus groups, and workshops | Real test on older adults | 35 caregivers and 35 care receivers | Participatory design processes foster more effective, inclusive, and rapid innovation in public health sectors |

| Erickson et al., 2019 [58] | Robotic manipulation for assisted dressing and bathing | PR2 robot, 7-DoF robotic arm, amd ANN | Capacitive sensory data | Real test on adults | 4 healthy | <2.6 cm distance and <6 N applied force |

| Coviello et al., 2019 [54] | Walking support for elderly | ASTRO robot and decision trees | Laser scan data, FSR sensory data, and questionnaire | Real test on adults | 7 healthy | driving path accuracy and positive user experience |

3.1.4. Emotional Expression and Virtual Companionship

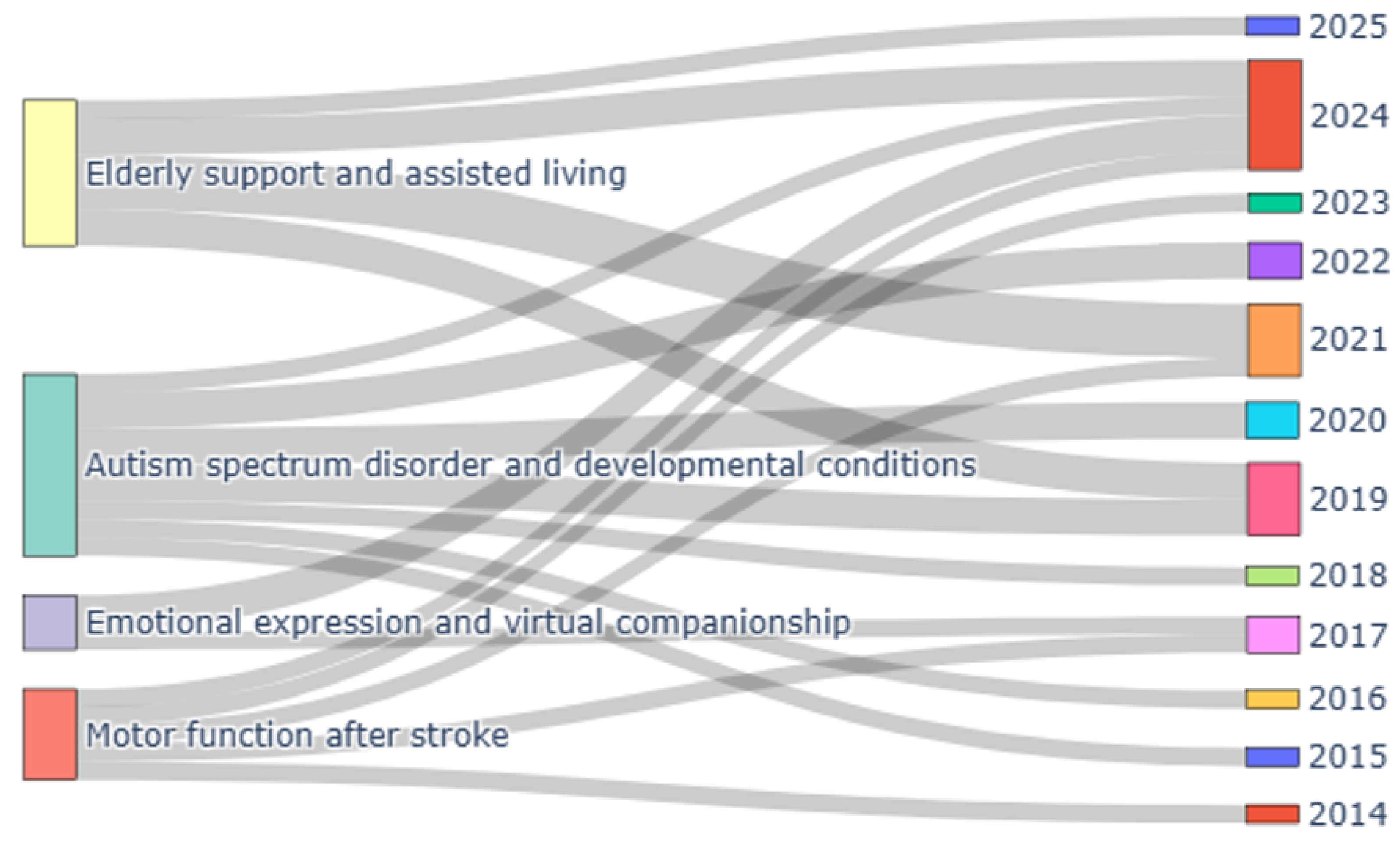

3.2. Bibliometric Analysis

4. Discussion

4.1. Principal Findings

4.2. Open Issues and Future Perspectives

4.3. Review Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADL | Activity of Daily Living |
| AI | Artificial Intelligence |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| HCI | Human–Computer Interaction |
| OT | Occupational Therapy |

References

- Cowen, K.; Collins, T.; Carr, S.; Wilson Menzfeld, G. The role of Occupational Therapy in community development to combat social isolation and loneliness. Br. J. Occup. Ther. 2024, 87, 434–442.

- World Federation of Occupational Therapists. Definitions of Occupational Therapy from Member Organisations; World Health Organization: Geneva, Switzerland, 2013.

- Pancholi, S.; Wachs, J.P.; Duerstock, B.S. Use of artificial intelligence techniques to assist individuals with physical disabilities. Annu. Rev. Biomed. Eng. 2024, 26, 1–24.

- Kaelin, V.C.; Valizadeh, M.; Salgado, Z.; Parde, N.; Khetani, M.A. Artificial intelligence in rehabilitation targeting the participation of children and youth with disabilities: Scoping review. J. Med. Internet Res. 2021, 23, e25745.

- Oikonomou, K.M.; Papapetros, I.T.; Kansizoglou, I.; Sirakoulis, G.C.; Gasteratos, A. Navigation with Care: The ASPiDA Assistive Robot. In Proceedings of the 2023 18th International Workshop on Cellular Nanoscale Networks and their Applications (CNNA), Xanthi, Greece, 28–30 September 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–4.

- Kansizoglou, I.; Tsintotas, K.A.; Bratanov, D.; Gasteratos, A. Drawing-aware Parkinson’s disease detection through hierarchical deep learning models. IEEE Access 2025, 13, 21880–21890.

- Fanciullacci, C.; McKinney, Z.; Monaco, V.; Milandri, G.; Davalli, A.; Sacchetti, R.; Laffranchi, M.; De Michieli, L.; Baldoni, A.; Mazzoni, A.; et al. Survey of transfemoral amputee experience and priorities for the user-centered design of powered robotic transfemoral prostheses. J. Neuroeng. Rehabil. 2021, 18, 168.

- Keroglou, C.; Kansizoglou, I.; Michailidis, P.; Oikonomou, K.M.; Papapetros, I.T.; Dragkola, P.; Michailidis, I.T.; Gasteratos, A.; Kosmatopoulos, E.B.; Sirakoulis, G.C. A survey on technical challenges of assistive robotics for elder people in domestic environments: The ASPiDA concept. IEEE Trans. Med. Robot. Bionics 2023, 5, 196–205.

- Sawik, B.; Tobis, S.; Baum, E.; Suwalska, A.; Kropińska, S.; Stachnik, K.; Pérez-Bernabeu, E.; Cildoz, M.; Agustin, A.; Wieczorowska-Tobis, K. Robots for elderly care: Review, multi-criteria optimization model and qualitative case study. Healthcare 2023, 11, 1286.

- Berrezueta-Guzman, J.; Robles-Bykbaev, V.E.; Pau, I.; Pesántez-Avilés, F.; Martín-Ruiz, M.L. Robotic technologies in ADHD care: Literature review. IEEE Access 2021, 10, 608–625.

- Zhang, Y.; D’Haeseleer, I.; Coelho, J.; Vanden Abeele, V.; Vanrumste, B. Recognition of bathroom activities in older adults using wearable sensors: A systematic review and recommendations. Sensors 2021, 21, 2176.

- Piszcz, A.; Rojek, I.; Mikołajewski, D. Impact of Virtual Reality on Brain–Computer Interface Performance in IoT Control—Review of Current State of Knowledge. Appl. Sci. 2024, 14, 10541.

- Ku, J.; Kang, Y.J. Novel virtual reality application in field of neurorehabilitation. Brain Neurorehabilit. 2018, 11, e5.

- Piszcz, A. BCI in VR: An immersive way to make the brain-computer interface more efficient. Stud. I Mater. Inform. Stosow. 2021, 13, 11–16.

- Shen, J.; Zhang, C.J.; Jiang, B.; Chen, J.; Song, J.; Liu, Z.; He, Z.; Wong, S.Y.; Fang, P.H.; Ming, W.K.; et al. Artificial intelligence versus clinicians in disease diagnosis: Systematic review. JMIR Med. Inform. 2019, 7, e10010.

- Ochella, S.; Shafiee, M.; Dinmohammadi, F. Artificial intelligence in prognostics and health management of engineering systems. Eng. Appl. Artif. Intell. 2022, 108, 104552.

- Wang, C.; Zhu, X.; Hong, J.C.; Zheng, D. Artificial intelligence in radiotherapy treatment planning: Present and future. Technol. Cancer Res. Treat. 2019, 18, 1533033819873922.

- Zhang, Y.; Weng, Y.; Lund, J. Applications of explainable artificial intelligence in diagnosis and surgery. Diagnostics 2022, 12, 237.

- Shaik, T.; Tao, X.; Higgins, N.; Li, L.; Gururajan, R.; Zhou, X.; Acharya, U.R. Remote patient monitoring using artificial intelligence: Current state, applications, and challenges. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2023, 13, e1485.

- Pinto-Coelho, L. How artificial intelligence is shaping medical imaging technology: A survey of innovations and applications. Bioengineering 2023, 10, 1435.

- Tang, X. The role of artificial intelligence in medical imaging research. BJR|Open 2019, 2, 20190031.

- Bhinder, B.; Gilvary, C.; Madhukar, N.S.; Elemento, O. Artificial intelligence in cancer research and precision medicine. Cancer Discov. 2021, 11, 900–915.

- Carini, C.; Seyhan, A.A. Tribulations and future opportunities for artificial intelligence in precision medicine. J. Transl. Med. 2024, 22, 411.

- Badawy, M.; Ramadan, N.; Hefny, H.A. Healthcare predictive analytics using machine learning and deep learning techniques: A survey. J. Electr. Syst. Inf. Technol. 2023, 10, 40.

- Khan, Z.F.; Alotaibi, S.R. Applications of artificial intelligence and big data analytics in m-health: A healthcare system perspective. J. Healthc. Eng. 2020, 2020, 8894694.

- Moglia, A.; Georgiou, K.; Georgiou, E.; Satava, R.M.; Cuschieri, A. A systematic review on artificial intelligence in robot-assisted surgery. Int. J. Surg. 2021, 95, 106151.

- Khalifa, M.; Albadawy, M.; Iqbal, U. Advancing clinical decision support: The role of artificial intelligence across six domains. Comput. Methods Programs Biomed. Update 2024, 5, 100142.

- Peters, M.D.; Marnie, C.; Tricco, A.C.; Pollock, D.; Munn, Z.; Alexander, L.; McInerney, P.; Godfrey, C.M.; Khalil, H. Updated methodological guidance for the conduct of scoping reviews. JBI Evid. Implement. 2021, 19, 3–10.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.; Horsley, T.; Weeks, L.; et al. PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Ann. Intern. Med. 2018, 169, 467–473.

- Ding, Z.; Ji, Y.; Gan, Y.; Wang, Y.; Xia, Y. Current status and trends of technology, methods, and applications of Human–Computer Intelligent Interaction (HCII): A bibliometric research. Multimed. Tools Appl. 2024, 83, 69111–69144.

- Lauer-Schmaltz, M.W.; Cash, P.; Hansen, J.P.; Das, N. Human digital twins in rehabilitation: A case study on exoskeleton and serious-game-based stroke rehabilitation using the ETHICA methodology. IEEE Access 2024, 12, 180968–180991.

- Li, X.; Lu, Q.; Chen, P.; Gong, S.; Yu, X.; He, H.; Li, K. Assistance level quantification-based human-robot interaction space reshaping for rehabilitation training. Front. Neurorobot. 2023, 17, 1161007.

- Connan, M.; Sierotowicz, M.; Henze, B.; Porges, O.; Albu-Schäffer, A.; Roa, M.A.; Castellini, C. Learning to teleoperate an upper-limb assistive humanoid robot for bimanual daily-living tasks. Biomed. Phys. Eng. Express 2021, 8, 015022.

- Adams, R.J.; Lichter, M.D.; Krepkovich, E.T.; Ellington, A.; White, M.; Diamond, P.T. Assessing upper extremity motor function in practice of virtual activities of daily living. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 23, 287–296.

- Adams, R.J.; Lichter, M.D.; Ellington, A.; White, M.; Armstead, K.; Patrie, J.T.; Diamond, P.T. Virtual activities of daily living for recovery of upper extremity motor function. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 26, 252–260.

- Senadheera, I.; Hettiarachchi, P.; Haslam, B.; Nawaratne, R.; Sheehan, J.; Lockwood, K.J.; Alahakoon, D.; Carey, L.M. AI applications in adult stroke recovery and rehabilitation: A scoping review using AI. Sensors 2024, 24, 6585.

- Lagos, M.; Pousada, T.; Fernández, A.; Carneiro, R.; Martínez, A.; Groba, B.; Nieto-Riveiro, L.; Pereira, J. Outcome measures applied to robotic assistive technology for people with cerebral palsy: A pilot study. Disabil. Rehabil. Assist. Technol. 2024, 19, 3015–3022.

- Chandrashekhar, R.; Wang, H.; Rippetoe, J.; James, S.A.; Fagg, A.H.; Kolobe, T.H. The impact of cognition on motor learning and skill acquisition using a robot intervention in infants with cerebral palsy. Front. Robot. AI 2022, 9, 805258.

- Horstmann, A.C.; Mühl, L.; Köppen, L.; Lindhaus, M.; Storch, D.; Bühren, M.; Röttgers, H.R.; Krajewski, J. Important preliminary insights for designing successful communication between a robotic learning assistant and children with autism spectrum disorder in Germany. Robotics 2022, 11, 141.

- Jain, S.; Thiagarajan, B.; Shi, Z.; Clabaugh, C.; Matarić, M.J. Modeling engagement in long-term, in-home socially assistive robot interventions for children with autism spectrum disorders. Sci. Robot. 2020, 5, eaaz3791.

- AlSadoun, W.; Alwahaibi, N.; Altwayan, L. Towards intelligent technology in art therapy contexts. In Proceedings of the HCI International 2020-Late Breaking Papers: Multimodality and Intelligence: 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, 19–24 July 2020; Proceedings 22. Springer: Cham, Switzerland, 2020; pp. 397–405.

- Fang, Z.; Paliyawan, P.; Thawonmas, R.; Harada, T. Towards an angry-birds-like game system for promoting mental well-being of players using art-therapy-embedded procedural content generation. In Proceedings of the 2019 IEEE 8th Global Conference on Consumer Electronics (GCCE), Osaka, Japan, 15–18 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 947–948.

- Li, M.; Li, X.; Xie, L.; Liu, J.; Wang, F.; Wang, Z. Assisted therapeutic system based on reinforcement learning for children with autism. Comput. Assist. Surg. 2019, 24, 94–104.

- Irani, A.; Moradi, H.; Vahid, L.K. Autism screening using a video game based on emotions. In Proceedings of the 2018 2nd National and 1st International Digital Games Research Conference: Trends, Technologies, and Applications (DGRC), Tehran, Iran, 29–30 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 40–45.

- Bonillo, C.; Cerezo, E.; Marco, J.; Baldassarri, S. Designing therapeutic activities based on tangible interaction for children with developmental delay. In Proceedings of the Universal Access in Human-Computer Interaction. Users and Context Diversity: 10th International Conference, UAHCI 2016, Held as Part of HCI International 2016, Toronto, ON, Canada, 17–22 July 2016; Proceedings, Part III 10. Springer: Cham, Switzerland, 2016; pp. 183–192.

- Zidianakis, E.; Zidianaki, I.; Ioannidi, D.; Partarakis, N.; Antona, M.; Paparoulis, G.; Stephanidis, C. Employing ambient intelligence technologies to adapt games to children’s playing maturity. In Proceedings of the Universal Access in Human-Computer Interaction, Access to Learning, Health and Well-Being: 9th International Conference, UAHCI 2015, Held as Part of HCI International 2015, Los Angeles, CA, USA, 2–7 August 2015; Proceedings, Part III 9. Springer: Cham, Switzerland, 2015; pp. 577–589.

- Baltrusaitis, T.; Zadeh, A.; Lim, Y.C.; Morency, L.P. OpenFace 2.0: Facial Behavior Analysis Toolkit. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition, Xi’an, China, 15–19 May 2018; pp. 59–66.

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime multi-person 2D pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.

- Boersma, P. Praat, a system for doing phonetics by computer. Glot. Int. 2001, 5, 341–345.

- Mahmoudi Asl, A.; Molinari Ulate, M.; Franco Martin, M.; van der Roest, H. Methodologies used to study the feasibility, usability, efficacy, and effectiveness of social robots for elderly adults: Scoping review. J. Med. Internet Res. 2022, 24, e37434.

- Tsui, K.M.; Baggett, R.; Chiang, C. Exploring Embodiment Form Factors of a Home-Helper Robot: Perspectives from Care Receivers and Caregivers. Appl. Sci. 2025, 15, 891.

- Tiersen, F.; Batey, P.; Harrison, M.J.; Naar, L.; Serban, A.I.; Daniels, S.J.; Calvo, R.A. Smart home sensing and monitoring in households with dementia: User-centered design approach. JMIR Aging 2021, 4, e27047.

- Ranieri, C.M.; MacLeod, S.; Dragone, M.; Vargas, P.A.; Romero, R.A.F. Activity recognition for ambient assisted living with videos, inertial units and ambient sensors. Sensors 2021, 21, 768.

- Coviello, L.; Cavallo, F.; Limosani, R.; Rovini, E.; Fiorini, L. Machine learning based physical human-robot interaction for walking support of frail people. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3404–3407.

- Fei, F.; Xian, S.; Yang, R.; Wu, C.; Lu, X. A Wearable Visually Impaired Assistive System Based on Semantic Vision SLAM for Grasping Operation. Sensors 2024, 24, 3593.

- Shahria, M.T.; Rahman, M.H. Activities of Daily Living Object Dataset: Advancing Assistive Robotic Manipulation with a Tailored Dataset. Sensors 2024, 24, 7566.

- Try, P.; Schöllmann, S.; Wöhle, L.; Gebhard, M. Visual sensor fusion based autonomous robotic system for assistive drinking. Sensors 2021, 21, 5419.

- Erickson, Z.; Clever, H.M.; Gangaram, V.; Turk, G.; Liu, C.K.; Kemp, C.C. Multidimensional capacitive sensing for robot-assisted dressing and bathing. In Proceedings of the 2019 IEEE 16th International Conference on Rehabilitation Robotics (ICORR), Toronto, ON, Canada, 24–28 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 224–231.

- Søraa, R.A.; Tøndel, G.; Kharas, M.W.; Serrano, J.A. What do older adults want from social robots? A qualitative research approach to human-robot interaction (HRI) studies. Int. J. Soc. Robot. 2023, 15, 411–424.

- Winkle, K. Social robots for motivation and engagement in therapy. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017; pp. 614–617.

- Zentner, S.; Barradas Chacon, A.; Wriessnegger, S.C. The Impact of Light Conditions on Neural Affect Classification: A Deep Learning Approach. Mach. Learn. Knowl. Extr. 2024, 6, 199–214.

- Xie, Z.; Wang, Z. Longitudinal examination of the relationship between virtual companionship and social anxiety: Emotional expression as a mediator and mindfulness as a moderator. Psychol. Res. Behav. Manag. 2024, 17, 765–782.

- Kansizoglou, I.; Misirlis, E.; Tsintotas, K.; Gasteratos, A. Continuous emotion recognition for long-term behavior modeling through recurrent neural networks. Technologies 2022, 10, 59.

- Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A survey on evaluation of large language models. ACM Trans. Intell. Syst. Technol. 2024, 15, 39.

- Aliferis, C.; Simon, G. Lessons Learned from Historical Failures, Limitations and Successes of AI/ML in Healthcare and the Health Sciences. Enduring Problems, and the Role of Best Practices. In Artificial Intelligence and Machine Learning in Health Care and Medical Sciences: Best Practices and Pitfalls; Springer: Cham, Switzerland, 2024; pp. 543–606.

- Naik, N.; Hameed, B.; Shetty, D.K.; Swain, D.; Shah, M.; Paul, R.; Aggarwal, K.; Ibrahim, S.; Patil, V.; Smriti, K.; et al. Legal and ethical consideration in artificial intelligence in healthcare: Who takes responsibility? Front. Surg. 2022, 9, 862322.

- Yadav, N.; Pandey, S.; Gupta, A.; Dudani, P.; Gupta, S.; Rangarajan, K. Data privacy in healthcare: In the era of artificial intelligence. Indian Dermatol. Online J. 2023, 14, 788–792.

- Voigt, P.; Von dem Bussche, A. The EU General Data Protection Regulation (GDPR). In A Practical Guide, 1st ed.; Springer International Publication: Cham, Switzerland, 2017; Volume 10, pp. 10–5555.

- Chen, R.J.; Wang, J.J.; Williamson, D.F.; Chen, T.Y.; Lipkova, J.; Lu, M.Y.; Sahai, S.; Mahmood, F. Algorithmic fairness in artificial intelligence for medicine and healthcare. Nat. Biomed. Eng. 2023, 7, 719–742.

- Nazer, L.H.; Zatarah, R.; Waldrip, S.; Ke, J.X.C.; Moukheiber, M.; Khanna, A.K.; Hicklen, R.S.; Moukheiber, L.; Moukheiber, D.; Ma, H.; et al. Bias in artificial intelligence algorithms and recommendations for mitigation. PLoS Digit. Health 2023, 2, e0000278.

- Chemnad, K.; Othman, A. Digital accessibility in the era of artificial intelligence—Bibliometric analysis and systematic review. Front. Artif. Intell. 2024, 7, 1349668.

- Guo, J.; Li, B. The application of medical artificial intelligence technology in rural areas of developing countries. Health Equity 2018, 2, 174–181.

- Hazra, R.; Chatterjee, P.; Singh, Y.; Podder, G.; Das, T. Data Encryption and Secure Communication Protocols. In Strategies for E-Commerce Data Security: Cloud, Blockchain, AI, and Machine Learning; IGI Global: Hershey, PA, USA, 2024; pp. 546–570.

- Longpre, S.; Mahari, R.; Chen, A.; Obeng-Marnu, N.; Sileo, D.; Brannon, W.; Muennighoff, N.; Khazam, N.; Kabbara, J.; Perisetla, K.; et al. A large-scale audit of dataset licensing and attribution in AI. Nat. Mach. Intell. 2024, 6, 975–987.

- Ruh, D.M. Patient perspectives on digital health. In Digital Health; Elsevier: Amsterdam, The Netherlands, 2025; pp. 481–501.

- Siala, H.; Wang, Y. SHIFTing artificial intelligence to be responsible in healthcare: A systematic review. Soc. Sci. Med. 2022, 296, 114782.

| Authors | Application Domain | Technology | Input Data | Validation Method | Employed Subjects | Results |
|---|---|---|---|---|---|---|
| Lagos et al., 2024 [37] | Robotic assistants for people with CP | LOLA2 robotic platform and CNN | RGB images | Real test on adults | 9 patients | Independence, competence, adaptability, and self-esteem |
| Chandrashekhar et al., 2022 [38] | Cognition on motor learning in infants with CP | SIPPC robot | MDI and MOCS | Real test on infants | 63 patients and healthy | Relationship between cognitive ability and delayed motor skills improvement |
| Horstmann et al., 2022 [39] | Design of successful interaction between robots and children with ASD | Robots and speech recognition | Speech and interviews | Real test on children | 7 patients and 6 therapists | Need for AI-based engagement detection, features, appearance, and functions |
| Jain et al., 2020 [40] | Engagement with socially assistive robotics for children with ASD | Socially assistive robotics, CNN, and online RL | RGB images, speech, and game scores | Real test on children | 7 patients | Engagement in HRI and AUROC in engagement detection |
| AlSadoun et al., 2020 [41] | Art therapy for users with impaired communication skills | Virtual agent, ANN, and facial landmark extraction | RGB images | No | − | Framework of the smart art therapy system |
| Fang et al., 2019 [42] | Art therapy game for mental well-being promotion | VR-serious game, PCG AI, and CNN | Game scores and game images | No | − | Design and implementation of the AI-enabled game |
| Li et al., 2019 [43] | Assisted game for engaging children with ASD | RL, CNN, SVR, and robotic agent | RGB images | Real test on children | 11 patients | Minor decrease in SRS scores |
| Irani et al., 2018 [44] | Assisted game for screening children with ASD | Serious game and Gaussian SVM | RGB images and game scores | Real test on children | 23 patients and 22 healthy | ASD detection accuracy |
| Bonillo et al., 2016 [45] | Activities for children with developmental delay | Serious game, tangible interfaces, and tabletops | RGB images, videos, and game scores | Real test on children | 10 patients | 4 definite tangible games |
| Zidianakis et al., 2015 [46] | Ambient intelligent games adapting to children's maturity | Serious game, augmented artifacts, and VR | Force pressure, accelerometer, tactile sensor, and IR camera | No | − | Design and implementation of games |

| Authors | Application Domain | Technology | Input Data | Validation Method | Employed Subjects | Results |
|---|---|---|---|---|---|---|
| Zentner et al., 2024 [61] | Emotion recognition during HCI under lighting variations | VR and CNN | EEG | Real test on adults | 30 healthy | Emotion recognition |
| Xie et al., 2024 [62] | Virtual interactions and emotional expression alleviating social anxiety | Chatbots and textual and vocal interaction | Frequency, mindfulness, social anxiety, emotional expression patterns, and questionnaires | Real test on undergraduate students | 618 participants | Frequency of HCI decreases online social anxiety, and emotion expression reduces social anxiety |
| Winkle et al., 2017 [60] | Socially assistive robotics in engagement and therapy | Socially assistive robotics | Focus groups, interviews, and observations | No | − | Design of AI, social behaviors, and interaction modalities |

| Author | Citations | Documents |
|---|---|---|
| Adams, R.J. | 162 | 2 |
| Lichter, M.D. | 162 | 2 |
| Ellington, A. | 162 | 2 |
| White, M. | 162 | 2 |
| Diamond, P.T. | 162 | 2 |
| Jain, S. | 115 | 1 |
| Thiagarajan, B. | 115 | 1 |
| Shi, Z. | 115 | 1 |
| Clabaugh, C. | 115 | 1 |
| Matarić, M.J. | 115 | 1 |
| Krepkovich, E.T. | 88 | 1 |
| Ranieri, C.M. | 85 | 1 |
| MacLeod, S. | 85 | 1 |
| Dragone, M. | 85 | 1 |
| Vargas, P.A. | 85 | 1 |
| Romero, R.A.F. | 85 | 1 |
| Armstead, K. | 74 | 1 |
| Patrie, J.T. | 74 | 1 |
| Tiersen, F. | 67 | 1 |
| Batey, P. | 67 | 1 |
| Harrison, M.J.C. | 67 | 1 |
| Naar, L. | 67 | 1 |
| Serban, A.I. | 67 | 1 |
| Daniels, S.J.C. | 67 | 1 |
| Calvo, R.A. | 67 | 1 |

| Source | Documents | Citations |
|---|---|---|
| Sensors | 4 | 100 |
| Lecture Notes in Computer Science | 3 | 12 |
| IEEE Transactions on Neural Systems and Rehabilitation Engineering | 2 | 162 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kansizoglou, I.; Kokkotis, C.; Stampoulis, T.; Giannakou, E.; Siaperas, P.; Kallidis, S.; Koutra, M.; Malliou, P.; Michalopoulou, M.; Gasteratos, A. Artificial Intelligence and the Human–Computer Interaction in Occupational Therapy: A Scoping Review. Algorithms 2025, 18, 276. https://doi.org/10.3390/a18050276