Abstract

Estimating the Probability Density Function (PDF) of observed data is crucial as a problem in its own right, and also for diverse engineering applications. This paper utilizes two powerful mathematical tools, the concept of moments and the relatively little-known Padé approximation, to achieve this. On the one hand, moments encapsulate crucial information that is central to both the “time-” and “frequency-”domain representations of the data. On the other hand, the Padé approximation provides an effective means of obtaining a convergent series from the data. In this paper, we invoke these established tools to estimate the PDF. As far as we know, the theoretical results that we have proven, and the experimental results that confirm them, are novel. The method we propose is nonparametric. It uses the moments of the sample data (drawn from the unknown PDF that we aim to estimate) to reconstruct the original PDF through the application of the Padé approximation. Apart from the theoretical analysis, we have also experimentally evaluated the validity and efficiency of our scheme. The Padé approximation is asymmetric. The most distinctive facet of our work is that we have utilized this asymmetry to our advantage by working with two mirrored versions of the data to obtain two different versions of the PDF. We have then effectively “superimposed” them to yield the final composite PDF. We are not aware of any other research that utilizes such a composite strategy in any signal processing domain. To evaluate the performance of the proposed method, we have employed synthetic samples obtained from various well-known distributions, including mixture densities. The accuracy of the proposed method has also been compared with that of several State-Of-The-Art (SOTA) approaches. 
The results that we have obtained underscore the robustness and effectiveness of our method, particularly in scenarios where the sample sizes are considerably reduced. Thus, this research confirms how the SOTA of estimating nonparametric PDFs can be enhanced by the Padé approximation, offering notable advantages over existing methods in terms of accuracy when faced with limited data.

1. Introduction

Most natural events are stochastic [1] because they involve uncertain interactions of random internal “environments”. To study these events, researchers have applied probability theory, the branch of mathematics that aids in the analysis of the behavior of stochastic events. Probability theory, in particular, and stochastic processes, in general, are among the most fundamental fields of mathematics. They are used in signal processing, pattern recognition, classification, forecasting, medical diagnosis, and many other areas. In practice, probability distributions find application in diverse fields such as electron emission, radar detection, weather prediction, remote sensing, economics, and noise modelling [2,3,4,5]. Once the probabilistic model is well-defined, subsequent analysis often involves the theory of “estimation” (synonymously, learning, or training, depending on the application domain). Such estimation is always valuable, but it is nonetheless challenging [6], especially when the available data are limited [7,8].

In this context, the PDF of a random variable is central to various aspects of the analysis and characterization of the data and the model. Many studies have emphasized the need for estimating the PDF [9], which is challenging due to the absence of efficient construction methods.

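Suppose that $X = \{x_1, x_2, \ldots, x_N\}$ is a set of observed data with N samples drawn at random from an unknown PDF. The given data are characterized by the PDF, $f(x)$, of the underlying random variable, which satisfies the fundamental properties of a PDF:

$$f(x) \ge 0 \;\; \text{for all } x, \qquad \int_{-\infty}^{\infty} f(x)\,dx = 1. \tag{1}$$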

Once the PDF has been estimated based on a well-defined criterion, one also needs an assessment method to compare it with the set of observed data and thereby quantify the accuracy of the estimation. The estimated PDF can be used to study the statistical properties of the dataset, estimate future outcomes, generate more samples, and so on. Numerous methods have been proposed for estimating the PDF, and these can be classified into two approaches, namely, those using parametric models and those using nonparametric models.

Unlike parametric models, which make assumptions about the parametric form of the PDF, nonparametric density estimation does not assume the form of the distribution from which the samples are drawn [10]. In parametric models, one develops a model to fit a probability distribution for the observed data, followed by the determination of the parameters of this distribution. The procedure is summarized into four steps:

1. Select some known candidate probability distribution models based on the physical characteristics of the process and known characteristics of the distribution of the available samples.
2. Determine the parameters of the candidate models.
3. Assess the goodness-of-fit of the fitted generative model using established statistical tests and graphical analysis.
4. Choose the model that yields the best results [2,11,12].

However, these methods cannot cover all data because some datasets do not follow specific distributional shapes. As a result, these methods may fail to accurately approximate the true but unknown PDF [3]. Nevertheless, they remain useful in many applications.

In nonparametric density estimation, we resort to techniques that fit a model to the idealized probability distribution of the observed data [13]. For example, the histogram approximation, introduced by Karl Pearson [14], is one of the earliest density estimation methods. Here, the entire range of values is divided into a series of intervals, referred to as “bins”, and the number of samples falling into each bin is counted. Although the bin width is, in principle, arbitrary, extensive research has been done to find a robust way to choose an effective bin width [15,16]. Moreover, some bins will be empty, while others may have few occurrences. Thus, the estimated PDF will vary between adjacent bins because of fluctuations in the number of occurrences. By increasing the bin size, each bin tends to contain more samples [17]; however, many important details will be filtered out. In addition, the inferred PDF is discontinuous, and so the histogram cannot be viewed as an accurate method, especially if derivatives of the density are required.

These drawbacks led to the development of more advanced methods, such as Kernel Density Estimation (KDE), Adaptive KDE, Gaussian Mixture Model (GMM), Quantized Histogram-Based Smoothing (QHBS), and the one-point Padé approximation model [18].

KDE [19] is a common density estimator resulting in smooth and continuous densities. It estimates the PDF by deploying a kernel function at each data point and then computing the sum of these kernels to construct a nonparametric estimate of the PDF. The kernel is usually a symmetric, differentiable function, such as the Gaussian. The bandwidth hyperparameter, which defines the width of the kernel, is crucial in determining the smoothness of the resulting estimate.

Adaptive KDE [20] is a form of KDE in which the bandwidth is not constant but instead changes according to the local density of the data. This approach allows for a more accurate depiction of the features of the distribution, especially in regions with dense data, while producing a smoother estimate where there are fewer data points.

GMM [21,22] is a parametric density estimation technique that assumes that the data have been produced by a weighted sum of Gaussian distributions. GMM models the mixture density by estimating the parameters of the (assumed) source Gaussian distributions. Each Gaussian component of the mixture has its own mean and covariance, while the overall density function is the weighted sum of these individual Gaussian densities. GMM can capture complex and multi-modal distributions. It is, however, a parametric approach whose accuracy depends on the degree to which its underlying assumptions are met.

QHBS [23] is an approach to address the challenges posed by conventional histograms. It approximates the PDF by smoothing a histogram in a manner that reduces the discontinuities and enhances the smoothness of the resulting density estimate. This approach splits the data into sets of bins and then employs a quantization procedure to soften the estimates and thus produce a smoother, continuous, and differentiable representation of the PDF.

Each of these methods has its strengths and challenges. For instance, while KDE and Adaptive KDE offer smooth and continuous density estimates, selecting the appropriate kernel function and bandwidth can be difficult. On the other hand, methods like GMM and QHBS can address multimodal and discontinuous distributions effectively, but GMM requires knowledge of the number of component distributions, while QHBS is sensitive to the number of bins used in the underlying histogram. Despite these challenges, these methods are widely used in practice due to their ability to provide accurate and flexible density estimates in various applications.

Although we have presented only a rather brief review of these schemes, it can serve as a backdrop to our current work. In this paper, we shall argue that, unlike the previous methods, the use of the moments of the data can serve to yield an even more effective method to estimate the PDF, which also quite naturally leads us to the Padé transform, explained below. Note that we will synonymously refer to this phenomenon as the Padé approximation and the Padé Transform. Amindavar and Ritcey first proposed the use of the Padé approximation for estimating PDFs [24]. We include Amindavar’s original method in our quantitative evaluations below, here referred to as the “one-point Padé approximation model”. As originally formulated, this approach suffers from several challenges, which are mentioned in [24]. First, the Padé approximation method is only applicable to strictly positive data. Second, this method exhibits substantial distortion on the left side of the approximated PDF. Third, the original method did not demonstrate accurate PDF modelling when moments must be estimated directly from sample data. As detailed below, our proposed approach overcomes these challenges to arrive at a novel density estimation technique.

1.1. Organization of the Paper

In the next section, we briefly recall the basic concepts of the Padé approximation and moment estimation, both of which are used frequently in this research and are necessary for developing our proposed method. Section 2.3 then illustrates the one-point Padé approximation method, which is essential for understanding the concepts of our work. After that, the proposed method is introduced in reasonable depth. To demonstrate its efficiency, we evaluate it using several criteria. Thereafter, the comparison between the proposed method and some of the methods mentioned above is presented. As shown in Section 5, the proposed method is a robust, accurate, and automatic way to estimate the PDF. It would be an efficient way to find an unknown PDF for many applications, especially if the sample size is small.

1.2. On the Experimental Results

A few remarks about the datasets, distributions, and experimental results presented here are in order. It is, of course, infeasible to survey and test all the techniques for estimating PDFs, and to further test them for all possible distributions. Rather, we have compared our new scheme against a competitive representative method in the time domain. With regard to the frequency domain, we have compared our scheme against the Padé approximation, whose advantages are discussed in a subsequent section. Further, all the algorithms have been tested for four distributions with distinct properties and characteristics, namely Gaussian mixtures, the beta, the Gamma, and the exponential distributions.

2. Mathematical Background

In this section, we briefly present the mathematical foundations of the tools used here, namely moments and the Padé Transform, in Section 2.1 and Section 2.2, respectively.

2.1. Moments

Moments are a set of statistical parameters used to describe a distribution [25]. They provide information about the statistical characteristics of a distribution, which is used in many applications. Let $f(x)$ be the PDF of the random variable x. Then, for the continuous function $f(x)$, the $n$-th moment (i.e., the $n$-th derivative of the MGF evaluated at zero) is defined as:

$$m_n = E[x^n] = \int_{-\infty}^{\infty} x^n f(x)\,dx. \tag{2}$$

In practical scenarios, we encounter two main challenges. Firstly, the PDF of the random variable x is often unknown. Secondly, access to samples is typically limited. In many cases, when estimating moments, we resort to the discrete form. Assuming we have N samples, $x_1, \ldots, x_N$, the $n$-th moment can be estimated as follows:

$$\hat{m}_n = \frac{1}{N}\sum_{i=1}^{N} x_i^{\,n}. \tag{3}$$

While the number of moments is infinite, we will focus on utilizing a subset of these moments. Later, we will use these moments to describe the PDF and, thereafter, as the input to the Padé Transform.
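As a minimal sketch of the sample-moment estimate described above, the following Python function averages the powers of the samples. The names `sample_moments` and `max_order` are illustrative assumptions and do not come from the paper or its MATLAB code.

```python
def sample_moments(samples, max_order):
    """Return [m_0, ..., m_max_order], with m_n = (1/N) * sum(x_i ** n)."""
    n_samples = len(samples)
    if n_samples == 0:
        raise ValueError("need at least one sample")
    return [
        sum(x ** n for x in samples) / n_samples
        for n in range(max_order + 1)
    ]


# Three samples, moments of order 0, 1 and 2.
moments = sample_moments([1.0, 2.0, 3.0], max_order=2)
print(moments)
```

High-order sample moments grow quickly and become noisy for small N, which is one reason only a subset of the moments is used in practice.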

2.2. The Theoretical Aspects of the Padé Transform

The Padé approximation (or Transform) is a significant mathematical tool developed by Henri Padé around 1890 [26]. It finds numerous applications in engineering [27], but in the interest of brevity these are not detailed here. The concept of the Padé approximation involves representing a function in a rational form of the corresponding Taylor series expansion. There are several compelling reasons to use the Padé approximation. Firstly, it can be applied to accelerate the convergence of series. More interestingly, even in cases where a series does not converge, the Padé approximation can be employed and yields a good approximation that does converge. Secondly, the Padé approximation represents a rational form of a function, simplifying the problem in many instances. In this regard, the Padé approximation is a valuable tool for approximating functions [28], which is precisely the arena in which we are operating. It is fascinating that the existence of this rational function is derived from Cauchy’s representation of analytic functions [24], and there is an equivalence of the Cauchy integral with the Riemann integral.

$$f(z) = \frac{1}{2\pi i}\oint_C \frac{f(\zeta)}{\zeta - z}\,d\zeta. \tag{4}$$

In this equation, C represents a contour in the complex plane, over which the integration is performed. This equation holds true for all analytic functions. When the integral is written as a Riemann sum, this equation transforms into a rational function, as shown below:

$$f(z) \approx \frac{1}{2\pi i}\sum_{n} \frac{f(\zeta_n)\,\Delta\zeta_n}{\zeta_n - z}, \tag{5}$$

where n is an index that runs over a set of discrete points, $\zeta_n$, in the complex plane. Each point, $\zeta_n$, corresponds to a specific value in this set, and the sum represents the contribution of all these points to the value of the function $f(z)$. We can thus see that all analytic functions can be transformed into rational functions. Later, we will observe that this conversion is essential for the convergence of the series and for finding the inverse Laplace transformation. But for the present, we assume that we are given a power series representing an analytic function $f(z)$:

$$f(z) = \sum_{n=0}^{\infty} c_n z^n. \tag{6}$$

Our goal is to find a rational function, $[L/M]$, to represent the series in the form of the Padé approximation function:

$$[L/M] = \frac{P_L(z)}{Q_M(z)} = \frac{a_0 + a_1 z + \cdots + a_L z^L}{1 + b_1 z + \cdots + b_M z^M}, \tag{7}$$

where L and M are the maximum degrees of the numerator and denominator, respectively. Now, to find $P_L(z)$ and $Q_M(z)$, we equate the RHSs of Equations (6) and (7) as:

$$\sum_{n=0}^{\infty} c_n z^n = \frac{a_0 + a_1 z + \cdots + a_L z^L}{1 + b_1 z + \cdots + b_M z^M} + O\!\left(z^{L+M+1}\right). \tag{8}$$

For this equation, we need $L + M + 1$ coefficients [22]. Since we are dealing with practical problems, we assume that we have a finite number of available coefficients, and ignore the others. More specifically, $O(z^{L+M+1})$ represents the remaining terms that we have ignored in Equation (8). To obtain the coefficients, we equate the two sides as:

$$\left(1 + b_1 z + \cdots + b_M z^M\right)\left(c_0 + c_1 z + c_2 z^2 + \cdots\right) = a_0 + a_1 z + \cdots + a_L z^L + O\!\left(z^{L+M+1}\right). \tag{9}$$

By matching the coefficients of $z^{L+1}, \ldots, z^{L+M}$, we obtain a system of linear equations that can be solved easily using the so-called Hankel matrix (with $c_n = 0$ for $n < 0$):

$$\begin{bmatrix} c_{L-M+1} & c_{L-M+2} & \cdots & c_{L} \\ c_{L-M+2} & c_{L-M+3} & \cdots & c_{L+1} \\ \vdots & \vdots & \ddots & \vdots \\ c_{L} & c_{L+1} & \cdots & c_{L+M-1} \end{bmatrix} \begin{bmatrix} b_M \\ b_{M-1} \\ \vdots \\ b_1 \end{bmatrix} = -\begin{bmatrix} c_{L+1} \\ c_{L+2} \\ \vdots \\ c_{L+M} \end{bmatrix}. \tag{10}$$

These equations calculate the coefficients of the denominator of Equation (8). To find the coefficients of the numerator of Equation (8), we apply Equation (10) to Equation (9) to yield:

$$a_k = \sum_{j=0}^{\min(k,\,M)} b_j\, c_{k-j}, \qquad k = 0, 1, \ldots, L, \quad b_0 = 1. \tag{11}$$

Therefore, by finding the coefficients, we can see how a Taylor series can be transformed into a rational function. In the next section, we demonstrate how we can leverage the advantages of the rational function for our work, namely to obtain the PDF of the samples by using the one-point Padé approximation and moments estimation.
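The coefficient-matching procedure of this section can be sketched in a few lines of Python. This is a textbook construction under stated assumptions, not the authors' MATLAB implementation: the series coefficients are written `c[0], c[1], ...`, the denominator is normalized so that its constant term is 1, and the names `solve_linear` and `pade_coefficients` are hypothetical. Exact rational arithmetic (`fractions.Fraction`) is used so that the small example can be checked by hand.

```python
from fractions import Fraction


def solve_linear(matrix, rhs):
    """Solve matrix @ x = rhs exactly by Gauss-Jordan elimination."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(matrix, rhs)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("singular system: this approximant does not exist")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        lead = aug[col][col]
        aug[col] = [v / lead for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[r][n] for r in range(n)]


def pade_coefficients(c, L, M):
    """Return (a, b): numerator a[0..L] and denominator b[0..M], with b[0] = 1."""
    c = [Fraction(v) for v in c]
    if len(c) < L + M + 1:
        raise ValueError("need at least L + M + 1 series coefficients")

    def coef(k):
        return c[k] if k >= 0 else Fraction(0)

    # Coefficients of z^(L+1) .. z^(L+M) must vanish after cross-multiplying.
    matrix = [[coef(L + 1 + i - j) for j in range(1, M + 1)] for i in range(M)]
    rhs = [-coef(L + 1 + i) for i in range(M)]
    b = [Fraction(1)] + (solve_linear(matrix, rhs) if M else [])
    # Coefficients of z^0 .. z^L give the numerator.
    a = [sum(b[j] * coef(k - j) for j in range(min(k, M) + 1)) for k in range(L + 1)]
    return a, b


# exp(z) = 1 + z + z^2/2 + ...; its [1/1] approximant is (1 + z/2) / (1 - z/2).
a, b = pade_coefficients([1, 1, Fraction(1, 2)], L=1, M=1)
print(a, b)
```

With floating-point moments estimated from data, the same linear system is often ill-conditioned, so a numerical solver with some care over the chosen degrees would be needed instead of exact fractions.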

2.3. Finding the PDF of Samples Using Moments Estimation Function

The one-point Padé approximation [24] was proposed for estimating the distribution of continuous functions, by using the MGF. However, it has some drawbacks. This method fails to provide accurate estimates for all the training samples. Specifically, it only estimates the PDF for positive random variables and is not applicable to negative ones, limiting its applicability to many PDFs. Additionally, the final estimation exhibits substantial distortion near zero (on the left side of the approximation). In this paper, our goal is to address these problems.

In this section, we explain how we can estimate the PDF with the aid of the biased approximation of the moments and the one-point Padé approximation. Indeed, through method refinement, the estimation noise can be reduced, leading to improved performance. To simplify the problem, we initially focus on positive random variables with a finite number of samples. Later, we explore how we can estimate the PDF for datasets comprising both positive and negative values. For the purpose of notation, let $X$, with N elements, be a set of samples for which we aim to determine the PDF. Let:

$$X = \{x_1, x_2, \ldots, x_N\}, \qquad x_i > 0. \tag{12}$$

Let $m_n$ denote the $n$-th moment of $X$. Further, let $f(x)$ be the continuous PDF of the random variable X, and $F(s)$ be its Laplace transformation, defined as:

$$F(s) = \int_{0}^{\infty} f(x)\, e^{-sx}\,dx. \tag{13}$$

We now expand the exponential function by using its Taylor series. By doing this, and simplifying the equation, we get:

$$F(s) = \sum_{n=0}^{\infty} \frac{(-1)^n\, m_n}{n!}\, s^n. \tag{14}$$

As discussed in Section 2.1, where we briefly introduced the concept of moment estimation, we can use this approach to estimate $m_n$. In the next section, we will demonstrate how we can apply the Padé approximation. The primary reason for using the Padé approximation is that it transforms the infinite series of $F(s)$ into a rational function, which provides a good approximation and also converges more effectively. The rational function is represented as $[L/D]$, where L and D denote the degrees of the numerator and denominator, respectively. Additionally, converting $F(s)$ to a rational function enables us to apply the inverse Laplace transform to find $f(x)$.
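To make the link between moments and the series fed to the Padé approximation concrete, the sketch below converts a list of moments into power-series coefficients of the Laplace transform. It assumes the one-sided convention in which the transform uses the kernel exp(-s x), so that the coefficient of s^n is (-1)^n m_n / n!; the function name `laplace_series_coefficients` is hypothetical.

```python
from math import factorial


def laplace_series_coefficients(moments):
    """Map moments [m_0, m_1, ...] to coefficients c_n = (-1)^n * m_n / n!."""
    return [(-1) ** n * m / factorial(n) for n, m in enumerate(moments)]


# Exact moments of an exponential density with rate 2: m_n = n! / 2^n.
# The resulting series 1 - s/2 + s^2/4 - ... sums to 2 / (s + 2).
coeffs = laplace_series_coefficients([1.0, 0.5, 0.5, 0.75])
print(coeffs)
```

In practice the exact moments would be replaced by the sample estimates of Section 2.1.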

2.4. Implementation Considerations of the Padé Transform

The first question that we must resolve is that of determining how many poles and zeros (i.e., the values of L and D) are adequate to yield an accurate approximation. For tackling this issue, we follow the arguments proposed by [29]. For uniqueness and convergence, we limit ourselves to Padé approximations where the degree of the numerator is less than the degree of the denominator. Thereafter, we invoke the residue theorem to find the inverse Laplace transformation as:

$$F(s) \approx \sum_{i=1}^{D} \frac{r_i}{s - p_i} \quad\Longrightarrow\quad f(x) \approx \sum_{i=1}^{D} r_i\, e^{p_i x}, \qquad x \ge 0. \tag{15}$$

In Equation (15), $r_i$ represents the residue associated with each term in the decomposition and $p_i$ denotes the poles of the rational function, where each $p_i$ corresponds to a specific root of the denominator. One major issue with this method is the distortion incurred in estimating the PDF near zero. As previously discussed, the accuracy of the one-point Padé approximation method is compromised in the left tail (near zero); hence, the right tail of the approximation is more reliable and accurate. To illustrate this issue, consider the example of a Gaussian PDF:

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\!\left(-\frac{(x - \mu)^2}{2\sigma^2}\right), \tag{17}$$

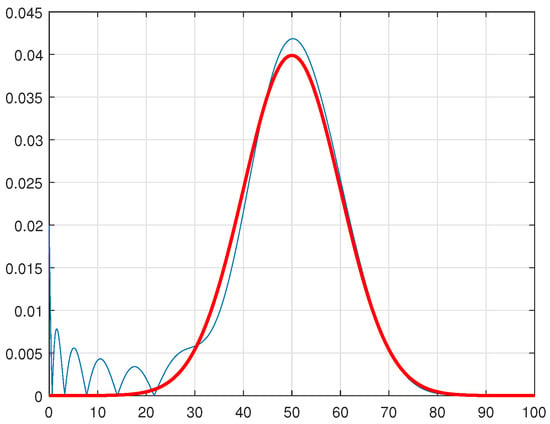

where $\sigma$ and $\mu$ are the standard deviation and mean, respectively. Figure 1 illustrates this for fixed values of $\mu$ and $\sigma$; the figure was drawn using 300 samples generated from this distribution, and it compares the ideal (true) PDF with the estimated one.

Figure 1.

Comparison between the Gaussian function and its estimation using the Padé approximation. Here, the degree of the numerator and denominator are and , respectively. The blue series represents the ideal PDF, while the red series represents the estimated PDF.

As seen in Figure 1, the left part of the estimated PDF does not resemble the shape of the original PDF. Therefore, we require a method to estimate the left part of the PDF accurately. In the next section, we will propose a solution to address this issue. Subsequently, we will compare the proposed method with other methods to assess its effectiveness.
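The residue-based inversion used in this section can be sketched as follows, assuming NumPy is available, that the rational function has only simple poles, and that the numerator degree is strictly smaller than the denominator degree. The function name `inverse_laplace_rational` and the ascending-order coefficient convention are assumptions made for illustration.

```python
import numpy as np


def inverse_laplace_rational(num_asc, den_asc):
    """Invert F(s) = P(s) / Q(s) as f(x) = sum_i r_i * exp(p_i * x).

    Coefficients are given in ascending powers of s. Only simple poles
    are handled; r_i = P(p_i) / Q'(p_i).
    """
    # np.poly1d expects the highest power first, hence the reversal.
    P = np.poly1d(np.array(num_asc, dtype=float)[::-1])
    Q = np.poly1d(np.array(den_asc, dtype=float)[::-1])
    if P.order >= Q.order:
        raise ValueError("numerator degree must be below denominator degree")
    poles = Q.roots
    residues = P(poles) / Q.deriv()(poles)

    def f(x):
        x = np.atleast_1d(np.asarray(x, dtype=float))
        terms = residues[:, None] * np.exp(poles[:, None] * x[None, :])
        # Complex-conjugate pole pairs cancel their imaginary parts.
        return np.real(terms.sum(axis=0))

    return f, poles, residues


# F(s) = 1 / (1 + s/2) = 2 / (s + 2), whose inverse is 2 * exp(-2 x).
f, poles, residues = inverse_laplace_rational([1.0], [1.0, 0.5])
print(poles, residues, f([0.0, 1.0]))
```

Poles with a positive real part would make the reconstructed density grow without bound, so an implementation would normally check for them before using the result.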

3. The Padé Approximation Using Artificial Samples

Many branches of science have invoked the Padé approximation as a tool to convert a series into a rational function. Similarly, in our study, we have applied it indirectly to samples to approximate an unknown PDF. The previously discussed method estimated the PDF using the Padé approximation. However, its accuracy decreases significantly as $x \to 0$, causing distortion in that portion of the PDF. Moreover, all the samples in the previous method must be positive. To resolve this, we propose to utilize two datasets. The first is the given dataset. Thereafter, we create a new dataset from the original one, and the two of them are utilized together to solve the problem. Suppose a given sample set is randomly distributed according to an unknown distribution (Equation (18)). We then generate N samples from it by mirroring across the y-axis, creating a mirrored series:

As illustrated in Equation (19), all elements of the series will be mirrored values of . Consequently, the estimated PDF using the dataset, denoted as , is precisely the reflection of our PDF function, , across the negative Y-axis. To address the weakness of the one-point Padé approximation, in the following we will estimate the left part of using the right part . Consequently, we estimate the right part of with the help of , leading to a more accurate estimation of the function. Now, to calculate from , we follow the same procedure. To implement the one-sided Laplace transform, firstly, we need non-zero integer samples. Therefore, we shift all elements of by the maximum amount of the series + 1, which is :

Proceeding now from Equation (3):

is the moment generating function of our new training samples. So we continue:

It is easy to see that is the mirrored form of our desired PDF. However, due to the use of the Padé approximation, this estimation suffers from the same issue we discussed before: it is only reliable when is sufficiently large. Now, our objective is to utilize the right side of to replace the distorted part of . To achieve this, we need to roll back the entire shifting process. Considering Equation (19) and then combining Equation (21) and Equation (15), we have:

The estimated PDF is given by:

is the estimation of the series . An acceptable point C is required to be considered as the “centroid” for combining these two functions. The final result is equal to:

The denominators of the sub-equations are necessary to ensure that the integral of the combined estimate is equal to unity. Selecting the best value for C is one of the challenges that should be taken into account. In this paper, we select the mean of the samples because it yields good experimental results. With this approach, one can approximate the function; however, a discontinuity may arise: the function is not smooth at the point C, and there is a jump at that point. For illustrative purposes, we first apply the method in one example, and then we highlight the issue. Finally, we propose a method by which we can render the function continuous at this point. The ideal Gaussian PDF given in Equation (17) was used to generate normally (Gaussian) distributed samples. Thereafter, we randomly selected 200 samples with the help of an inverse transform sampling method [30].

These two sets, namely and , are samples that are generated to calculate the right and left parts of the PDF, respectively. As shown in Figure 2, after applying the approximation method, the left and right parts of the PDF are calculated separately. For example, is the dataset utilized to draw the left part of the PDF.
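The construction of the second (mirrored) dataset can be sketched as below. This is one reading of the description in this section, namely that each sample x is reflected to -x and then shifted by the maximum of the original series plus one, so that every mirrored sample is at least 1. The function names and the exact shift are assumptions and may differ from the authors' code.

```python
def mirror_and_shift(samples):
    """Reflect samples about zero and shift them so that all values are >= 1.

    Returns the mirrored samples and the shift that was applied, so the
    estimate built on the mirrored data can later be mapped back.
    """
    shift = max(samples) + 1
    mirrored = [shift - x for x in samples]
    return mirrored, shift


def undo_mirror(value, shift):
    """Map a point on the mirrored axis back to the original axis."""
    return shift - value


original = [1.0, 2.5, 4.0]
mirrored, shift = mirror_and_shift(original)
print(mirrored, shift)
```

Because the mapping is its own inverse up to the stored shift, a density estimated on the mirrored axis can be evaluated at an original point x by querying it at `shift - x`.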

In the same vein, and are functions that draw the left and right sides of the learned PDF, respectively. After performing this operation, we obtain:

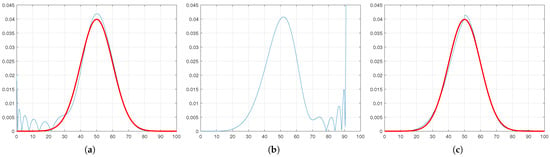

In Figure 2, we display the left and right parts of the PDF. As stated in the caption, the blue line represents the estimated PDF, while the red line represents the true PDF. Subsequently, these PDFs are combined to create a less noisy PDF; see Figure 2c, which is highly similar to the ideal PDF. However, as we discussed earlier, there is a discontinuity at the midpoint (50). To address this, in the next section (Section 4) we introduce a sigmoid-based smoothing function to eliminate this discontinuity.

Figure 2.

The results of estimating the Gaussian density function are presented. The blue line represents the estimated PDF, while the red line depicts the true source density function. As observed, the left tail of the estimated PDF (a) and the right tail of the estimated PDF (b) exhibit inaccuracies. The final result, shown in (c), is the fused estimate with a discontinuity where the left and right estimated PDFs join.

4. Using a Sigmoid-Based Transition

When dealing with piecewise functions, abrupt transitions between segments can introduce discontinuities or undesirable behaviour. To achieve a smooth transition between two functions, we can use a sigmoid-based blending approach [31]. This method is particularly useful in scenarios where continuity and differentiability are important, such as in working with PDFs or control systems. The sigmoid function is defined as:

$$S(t) = \frac{1}{1 + e^{-k(t - c)}}.$$

The sigmoid is a smooth, continuous curve that transitions from 0 to 1. Here, t is the independent variable, c is the transition point, and k controls the steepness of the transition. For small values of k, the transition is gradual, blending the functions smoothly over a wider range. For larger values of k, the transition becomes sharper, closely approximating a step function at $t = c$.

To smoothly blend two functions, $f_1(t)$ and $f_2(t)$, around the transition point, c, we define the blended function by Equation (33) as:

$$f_{\text{blend}}(t) = \bigl(1 - S(t)\bigr)\, f_1(t) + S(t)\, f_2(t). \tag{33}$$

This formulation ensures that as $t \to -\infty$, $S(t) \to 0$, and the blended function approaches $f_1(t)$. Similarly, as $t \to +\infty$, $S(t) \to 1$, and the blended function approaches $f_2(t)$. Around $t = c$, the two functions are smoothly combined based on the sigmoid weighting. The sigmoid-based transition is commonly used in various applications, such as in control systems, where it facilitates gradual shifts between control regimes, and signal processing, where it is employed for blending signals or applying gradual filters. This approach provides a robust mechanism for creating smooth, continuous transitions between functions, enhancing both stability and interpretability in mathematical models. In our work, we used the sigmoid function for PDF reconstruction. This function ensures smooth transitions between the mirrored and original PDFs, eliminating the discontinuity at the point where they join.
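A minimal sketch of the sigmoid-weighted blend described above is given here. It assumes a logistic sigmoid with transition point `c` and steepness `k > 0`, and it takes the two estimates as plain Python callables; the names `sigmoid`, `blend`, `f_left` and `f_right` are illustrative and not taken from the paper.

```python
import math


def sigmoid(t, c, k):
    """Logistic weight rising from 0 (t far below c) to 1 (t far above c)."""
    z = k * (t - c)
    # Two branches avoid overflow in exp() for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def blend(f_left, f_right, c, k):
    """Return a function that follows f_left below c and f_right above c."""
    def blended(t):
        w = sigmoid(t, c, k)
        return (1.0 - w) * f_left(t) + w * f_right(t)
    return blended


# Two constant "estimates" make the transition easy to inspect.
g = blend(lambda t: 1.0, lambda t: 3.0, c=0.0, k=5.0)
print(g(-10.0), g(0.0), g(10.0))
```

The steepness `k` trades off how closely each side follows its own estimate against how abrupt the hand-over at `c` is.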

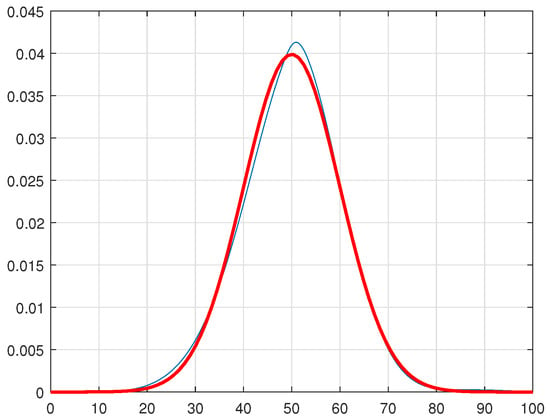

Figure 3.

The Padé approximation of the Gaussian distribution function (blue line) compared with the true density function (red line) after applying the sigmoid smoothing algorithm.

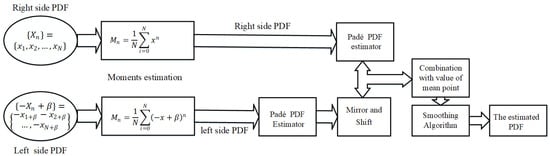

To illustrate the proposed method within the broader context, Figure 4 presents a schematic diagram of the Padé approximation (PA) procedure. This figure outlines the sequential steps required to achieve an accurate approximation. Specifically, using Equation (33), we depict the final estimation obtained by combining the two estimations.

Figure 4.

Schematic diagram of the PA procedure. After invoking the “merging” phase, one obtains the final estimation.

To address the challenge of estimating the PDF of random variables that take negative values, we horizontally shifted all samples to create a new dataset comprising only positive values. This positive dataset was then used to approximate the PDF. The process was initiated by aggregating all samples with a maximum absolute value, and subsequently, employing the same procedure outlined in Section 3 to estimate the PDF. In the final step, the samples were reverted to their original positions. To illustrate this procedure, consider the example in which $X$ is a series of 500 samples randomly generated from a Gaussian distribution with a mean of zero and a variance of 20, i.e., $\mathcal{N}(0, 20)$.

In the first step, all the samples were transformed into positive samples by shifting them by Min($X$), such that they were strictly positive.

The transformed samples, denoted as , were obtained using the following equation:

The proposed method was then applied to this set which results in an estimate of the PDF (see Figure 5).

Figure 5.

Estimation of Gaussian density function by considering the negative values. The blue and red lines show the estimated and ideal density functions, respectively.
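The shift-estimate-unshift procedure for data with negative values can be sketched as follows. The exact offset used in the paper is not recoverable from the text, so the `margin` parameter (which keeps the smallest shifted sample strictly positive) is an assumption, as are the function names.

```python
def shift_to_positive(samples, margin=1.0):
    """Shift samples so the smallest one equals `margin` (> 0)."""
    if margin <= 0:
        raise ValueError("margin must be positive")
    offset = margin - min(samples)
    return [x + offset for x in samples], offset


def unshift_density(density_on_shifted, offset):
    """Turn a density estimated on shifted data into one on the original axis."""
    return lambda x: density_on_shifted(x + offset)


samples = [-3.0, 0.0, 2.0]
shifted, offset = shift_to_positive(samples)
print(shifted, offset)
```

A pure translation does not change the shape of the density, so no rescaling is needed when mapping the estimate back.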

5. Quantitative and Comparative Assessment

In this section, we report the results obtained by conducting several experiments to assess the performance of the proposed method. The experiments can be summarized as follows. Firstly, the proposed method was applied to datasets generated from well-known probability distributions. The results were then compared with the ideal PDF. Subsequently, the proposed method was compared with other state-of-the-art methods. We aimed to show that our new approach could produce favourable outcomes when working with fewer samples, i.e., in scenarios where data are limited, which is a common occurrence in real-world situations. In addition, we have made the MATLAB code, implementing all the proposed methods, publicly available in the following GitHub repository: https://github.com/hamidddds/Twosided_PadeAproximation (accessed on 23 January 2025).

In our research project, we conducted a series of tests to thoroughly investigate the problem using three datasets of varying sizes. The first dataset consisted of 300 samples, which we categorized as a large dataset, providing a robust representation for comprehensive analysis. The second dataset contained 100 samples, classified as a medium-sized dataset, offering a sufficient number of samples for a balanced evaluation. Lastly, the third dataset comprised only 50 samples, representing a very small dataset specifically designed to simulate challenging scenarios in which access to extensive data is limited. This enabled us to assess how the performance varied across the sample sizes. In real-world applications, sample sizes of 50 are often insufficient to accurately calculate all data properties in specific ranges. In many cases, scientists address this limitation by making assumptions about the underlying structure of the density and then using parametric methods to estimate the parameters, although the overall accuracy of the results tends to decrease, particularly when incorrect assumptions are made regarding the density. Despite these challenges, we included these small sample sizes to demonstrate that, even in harsh conditions, our method can capture some characteristics of the data and perform reasonably well. However, it is important to note that such small sample sizes are highly sensitive to data sparsity, which can significantly impact performance. As expected, the accuracy of all estimations improved with increasing numbers of samples.

In this article, we employ five techniques for comparison with our proposed approach: Kernel Density Estimation (KDE), Adaptive KDE, Gaussian Mixture Model (GMM), Quantized Histogram-Based Smoothing (QHBS), and the one-point Padé approximation model. Since our method is based on the one-point Padé approximation model, we have included it as a reference point for evaluating our approach.

The KDE is a nonparametric technique that smooths data points to estimate the underlying PDF without assuming a specific distribution. It employs a kernel function, such as the Gaussian, and a bandwidth parameter to control the level of smoothing. In this study, a Gaussian kernel was used, with the bandwidth calculated using Silverman’s rule of thumb [32].

The Adaptive KDE enhances the standard KDE by dynamically adjusting the bandwidth based on the density of data points. This adaptability enables it to handle varying data densities efficiently, capturing intricate details in densely populated regions while producing smoother estimates in sparser areas. The Adaptive KDE utilized the same Gaussian kernel but varied the bandwidth locally to reflect data density.

The GMM is a parametric technique that represents the PDF as a combination of weighted Gaussian components. It estimates the parameters using methods such as expectation–maximization, providing an effective approach for clustering and density estimation. While the GMM performs well when data adhere to Gaussian assumptions, it may face challenges with non-Gaussian distributions. In this study, a three-component Gaussian model was employed, a configuration commonly observed in many applications [33,34,35].

The QHBS combines histogram-based density estimation with smoothing techniques. It divides the data into segments, or bins, estimates the density within each bin, and then applies smoothing to reduce abrupt changes in the data distribution. For this study, the number of bins was set to 20 to balance detail and smoothness [36].
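For reference, a Gaussian KDE baseline with a rule-of-thumb bandwidth can be sketched in pure Python as below. The bandwidth uses one common form of Silverman's rule, h = 0.9 · min(σ, IQR/1.34) · N^(-1/5); whether the experiments used this exact variant is not stated in the text, so this choice, like the function names, is an assumption.

```python
import math
import statistics


def silverman_bandwidth(samples):
    """Rule-of-thumb bandwidth: 0.9 * min(std, IQR / 1.34) * N ** (-1/5)."""
    n = len(samples)
    std = statistics.stdev(samples)
    q1, _, q3 = statistics.quantiles(samples, n=4)
    iqr_scaled = (q3 - q1) / 1.34
    # Fall back to the standard deviation if the IQR collapses to zero.
    spread = min(std, iqr_scaled) if iqr_scaled > 0 else std
    return 0.9 * spread * n ** (-0.2)


def gaussian_kde_estimate(samples, bandwidth=None):
    """Return a callable density: the average of Gaussian bumps on the samples."""
    h = silverman_bandwidth(samples) if bandwidth is None else bandwidth
    norm = 1.0 / (len(samples) * h * math.sqrt(2.0 * math.pi))

    def density(x):
        return norm * sum(math.exp(-0.5 * ((x - xi) / h) ** 2) for xi in samples)

    return density


data = [0.0, 1.0, 2.0, 3.0, 4.0]
kde = gaussian_kde_estimate(data)
print(silverman_bandwidth(data), kde(2.0))
```

An adaptive variant would replace the single bandwidth `h` by a per-sample value that shrinks where the data are dense.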

To evaluate the performance of our proposed approach, we utilized six metrics: the Wasserstein Distance, the Bhattacharyya Distance, the Correlation Coefficient, the Kullback–Leibler (KL) Divergence, the L1 Distance, and the L2 Distance. The Wasserstein Distance evaluates the cost of transforming one probability distribution into another, making it responsive to the distribution’s shape and useful for assessing alignment. A Wasserstein Distance of zero signifies identical distributions. The Bhattacharyya Distance measures the overlap between two distributions, directly reflecting their probabilistic similarity and thus how well a method approximates the density. A smaller Bhattacharyya Distance suggests a better approximation quality, with zero indicating a perfect overlap. The Correlation Coefficient assesses the linear relationship between distributions, providing a clear interpretation of their alignment. A Correlation Coefficient of 1 signifies perfect linear correlation, whereas a value of 0 indicates no linear relationship between them. The KL Divergence measures the difference between two distributions, quantifying the information lost when one is used to approximate the other. It is useful for identifying subtle variations in the distribution of probability mass. A lower KL Divergence is preferred, as it signifies greater similarity between distributions, with zero indicating identical distributions. The L1 Distance (also known as Manhattan or Taxicab Distance) calculates the sum of absolute differences between corresponding elements in two vectors or distributions. It is particularly effective in high-dimensional spaces and provides an intuitive measure of dissimilarity. A smaller L1 Distance indicates greater similarity between distributions, with zero representing identical distributions. The L2 Distance (commonly referred to as Euclidean Distance) measures the straight-line distance between two points in a multidimensional space using the Pythagorean theorem. It is widely used for numerical data and provides a geometric interpretation of similarity. Like L1, an L2 Distance of zero indicates identical distributions, while larger values signify greater dissimilarity. These measures collectively provide a comprehensive foundation for assessing the accuracy, reliability, and alignment of density estimation methods across various dimensions and scenarios.
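The six metrics can be sketched numerically for two densities sampled on a common grid. The code below is one possible discretization and not necessarily the one used to produce the tables: the function name `density_metrics`, the uniform grid, the renormalization step, the small constant `eps`, and the choice of scaling the L1 and L2 sums by the grid spacing are all assumptions of this example.

```python
import numpy as np

def density_metrics(p, q, grid, eps=1e-12):
    """Compare two densities sampled on the same uniform grid (illustrative discretization)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    dx = grid[1] - grid[0]  # assumes a uniformly spaced grid
    # Renormalize so that both sampled curves integrate to one on the grid.
    p = p / (p.sum() * dx)
    q = q / (q.sum() * dx)
    # 1-D Wasserstein distance: integral of the absolute difference of the CDFs.
    cdf_p, cdf_q = np.cumsum(p) * dx, np.cumsum(q) * dx
    wasserstein = np.sum(np.abs(cdf_p - cdf_q)) * dx
    # Bhattacharyya distance: minus the log of the overlap coefficient.
    overlap = np.clip(np.sum(np.sqrt(p * q)) * dx, eps, 1.0)
    bhattacharyya = -np.log(overlap)
    # Pearson correlation between the two sampled curves.
    correlation = np.corrcoef(p, q)[0, 1]
    # KL divergence of q from p; eps guards against log(0).
    kl = np.sum(p * np.log((p + eps) / (q + eps))) * dx
    l1 = np.sum(np.abs(p - q)) * dx
    l2 = np.sqrt(np.sum((p - q) ** 2) * dx)
    return {"wasserstein": wasserstein, "bhattacharyya": bhattacharyya,
            "correlation": correlation, "kl": kl, "l1": l1, "l2": l2}

def normal_pdf(x, mean, std):
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))

# Hypothetical check: two unit-variance Gaussians whose means differ by 0.5.
grid = np.linspace(-8.0, 8.0, 3201)
reference = normal_pdf(grid, 0.0, 1.0)
shifted = normal_pdf(grid, 0.5, 1.0)
same = density_metrics(reference, reference, grid)
diff = density_metrics(reference, shifted, grid)
```

For this pair, the Wasserstein Distance should be close to the shift of 0.5 and the KL Divergence close to the closed-form value of 0.125, which gives a quick sanity check on the discretization.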

5.1. Example 1: Gaussian Mixture

For the first experiment, we chose a Gaussian mixture distribution model, because many real-world problems are based on Gaussian distributions or their combinations. The multi-modal distribution was constructed by summing three Gaussian distributions:

The value of 0.75 ensured that the properties of a probability distribution were preserved, with its integral being equal to unity. In this equation, $N(\mu, \sigma)$ represents a Gaussian distribution with mean $\mu$ and standard deviation $\sigma$, as defined in Equation (37). The coefficients before the Gaussian distributions were chosen arbitrarily.

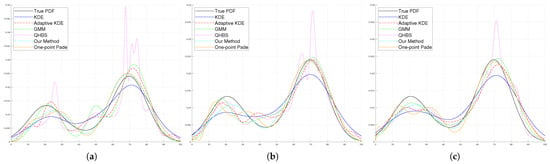
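To make the experimental setup concrete, the sketch below draws datasets of 50, 100, and 300 samples from a three-component Gaussian mixture. The weights, means, and standard deviations are hypothetical placeholders chosen only for illustration; they are not the values used in this experiment, and the names `WEIGHTS`, `MEANS`, `STDS`, `mixture_pdf`, and `sample_mixture` are likewise assumptions.

```python
import numpy as np

# Hypothetical mixture parameters (NOT the paper's values); the weights sum to one.
WEIGHTS = np.array([0.3, 0.5, 0.2])
MEANS = np.array([-2.0, 0.0, 3.0])
STDS = np.array([0.5, 1.0, 0.8])

def mixture_pdf(x):
    """Evaluate the weighted sum of the three Gaussian components at the points x."""
    x = np.atleast_1d(np.asarray(x, dtype=float))[:, None]
    components = np.exp(-0.5 * ((x - MEANS) / STDS) ** 2) / (STDS * np.sqrt(2.0 * np.pi))
    return components @ WEIGHTS

def sample_mixture(n, rng):
    """Draw n samples: pick a component by weight, then sample from that Gaussian."""
    labels = rng.choice(len(WEIGHTS), size=n, p=WEIGHTS)
    return rng.normal(MEANS[labels], STDS[labels])

rng = np.random.default_rng(42)
datasets = {n: sample_mixture(n, rng) for n in (50, 100, 300)}

# Riemann-sum check that the mixture integrates to roughly one.
grid = np.linspace(-10.0, 10.0, 4001)
area = np.sum(mixture_pdf(grid)) * (grid[1] - grid[0])
```

Because the weights sum to one, the mixture is a valid density without any further scaling; a mixture written with unnormalized coefficients would need a global factor to bring its integral back to unity.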

The results of the experiments are displayed in Figure 6.

Figure 6.

Visual assessment of different density estimation methods. The true Gaussian mixture PDF (black), KDE (blue), Adaptive KDE (red), GMM approximation (green), QHBS (pink), One-point approximation (orange), and our method (cyan). The results for different sample sizes are shown from left to right: 50 samples (a), 100 samples (b), and 300 samples (c).

Table 1, Table 2 and Table 3 detail the outcomes for varying sample sizes. We observed that, for small and medium sample sizes, our method outperformed the strategies proposed in prior studies. As the sample size increased, the GMM technique surpassed our method in performance. This is expected, since the GMM relies on the assumption of an underlying Gaussian structure and is therefore effective in estimating Gaussian density functions. However, when handling datasets where the histogram shape diverges significantly from a Gaussian distribution, its accuracy tends to decline, because parameter estimation becomes challenging for methods like the GMM. Our method excels in such scenarios, as evidenced by Examples 2, 3, and 4 below. Notably, it produced results without visible distortions at the beginning of the graph, highlighting its robustness under these conditions.

Table 1.

Performance metrics for different methods for the Gaussian mixture using 300 samples. Here and elsewhere, bold is used to indicate the best results.

Table 2.

Performance metrics for different methods for the Gaussian mixture using 100 samples.

Table 3.

Performance metrics for different methods for the Gaussian mixture using 50 samples.

5.2. Example 2: The Beta Distribution

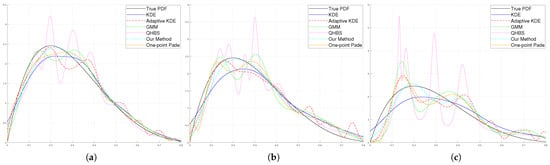

The next distribution model that we consider is the Beta distribution. The Beta distribution is a continuous probability distribution defined on the interval [0, 1], making it suitable for modelling probabilities and proportions. It is governed by two shape parameters, $\alpha$ and $\beta$, which influence the distribution’s shape, allowing it to be symmetric, skewed, or U-shaped. The Beta distribution is extensively utilized in Bayesian statistics as a conjugate prior for binomial and Bernoulli processes, enabling efficient updates of beliefs based on observed data. In our experiment, the parameters $\alpha$ and $\beta$ were fixed to evaluate the performance of our method in comparison to other methods. The formula for the PDF of the Beta distribution is shown in Equation (38). The results of the simulations are given in Figure 7.

Figure 7.

Visual assessment of different density estimation methods. The true Beta distribution (black), KDE (blue), Adaptive KDE (red), GMM approximation (green), QHBS (pink), One-point approximation (orange), and our method (cyan). The results for different sample sizes are shown from left to right: 300 samples (a), 100 samples (b), and 50 samples (c).

The results (see Table 4, Table 5 and Table 6) demonstrate that our method outperformed the competing approaches overall. For a sample size of 50, KDE achieved superior performance in terms of the Correlation Coefficient. However, across the other evaluation metrics, our method consistently delivered better performance.

Table 4.

Performance metrics for different methods for the Beta distribution using 300 samples.

Table 5.

Performance metrics for different methods for the Beta distribution using 100 samples.

Table 6.

Performance metrics for different methods for the Beta distribution using 50 samples.

5.3. Example 3: The Gamma Distribution

In our study, we also examined the Gamma distribution, which is recognized for its versatility in effectively representing both symmetrical and skewed datasets. This particular distribution provides valuable perspectives when estimating PDFs. It finds applications in fields such as finance (for predicting waiting durations), engineering (for analyzing failures), and various scientific phenomena that involve the duration until a specific event occurs. Noteworthy is the fact that the Exponential distribution, Erlang distribution, and chi-squared distribution are special cases of the Gamma distribution. The formula for the Gamma distribution is given in Equation (39).

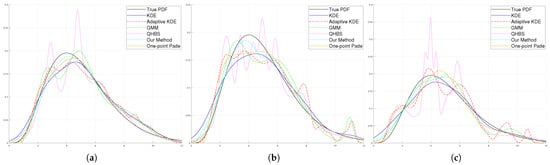

In our experiment, we assumed that was equal to 5 and was equal to 1. The results, as shown in Figure 8 and Table 7, Table 8 and Table 9, demonstrate the effectiveness of the proposed method, where it outperforms all other methods on all performance metrics and for all sample sizes.

Figure 8.

Visual assessment of different density estimation methods. The true Gamma PDF (black), KDE (blue), Adaptive KDE (red), GMM approximation (green), QHBS (pink), One-point approximation (orange), and our method (cyan). The results for different sample sizes are shown from left to right: 300 samples (a), 100 samples (b), and 50 samples (c).

Table 7.

Comparison of PDF approximation methods for the Gamma distribution using 300 samples.

Table 8.

Performance metrics for different methods for the Gamma distribution using 100 samples.

Table 9.

Performance metrics for different methods for the Gamma distribution using 50 samples.

5.4. Example 4: The Exponential Distribution

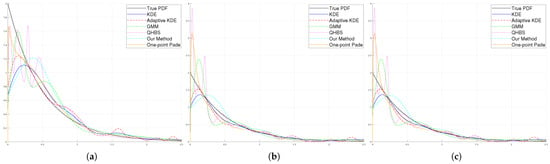

The above example illustrates the nature of moment-based methods, which are flexible with a limited number of samples. The next distribution model that we consider is the Exponential distribution. The Exponential distribution is commonly used to model the time between events in a Poisson process and is defined by a rate parameter, $\lambda$. The formula for the PDF of the Exponential distribution is shown in Equation (40). In our experiments, $\lambda$ was set to 1. The results of the simulations are given in Figure 9.

Figure 9.

Visual assessment of different density estimation methods. The true exponential PDF (black), KDE (blue), Adaptive KDE (red), GMM approximation (green), QHBS (pink), One-point approximation (orange), and our method (cyan). The results for different sample sizes are shown from left to right: 300 samples (a), 100 samples (b), and 50 samples (c).

Upon reviewing the data shown in Figure 9 and Table 10, Table 11 and Table 12, it is noticeable that the effectiveness of all techniques has decreased. The main cause of this decline is that the Exponential distribution is difficult to capture near zero, which makes it challenging for the techniques to accurately depict its traits in this region. In some instances, the One-Point Padé approximation appears to perform better. The reason for this could be that the One-Point Padé approximation introduces a distortion near zero, which in this scenario appears advantageous at first glance, because the distortion happens to match the behaviour of the Exponential function in that region. Nevertheless, it is crucial to realize that this can give a false impression of enhanced performance, as the distortion does not accurately represent the true nature of the Exponential distribution.

Table 10.

Performance metrics for different methods for the Exponential distribution using 300 samples.

Table 11.

Performance metrics for different methods for the Exponential distribution using 100 samples.

Table 12.

Performance metrics for different methods for the Exponential distribution using 50 samples.

6. Discussion and Conclusions

In this paper, we have considered the problem of estimating the PDF of observed data. Our novel scheme uses two powerful mathematical tools: the concept of moments and the relatively little-known Padé approximation. While moments incorporate crucial information which is central to both the time and frequency domains, the Padé approximation provides an effective means of obtaining convergent series from the data. Both of these tools are used in our new method, in an intertwined manner, to estimate the PDF.

The method we propose is nonparametric. It invokes the concept of matching the moments of the original function and its learned PDF, and this, in turn, is achieved by using the Padé approximation. Apart from the theoretical analysis, we have also experimentally evaluated the validity and efficiency of our scheme.

Although the Padé approximation is asymmetric, we have used this asymmetry to our advantage. We have done this by working with two “mirrored” versions of the data so as to obtain two different versions of the PDF. We have then effectively “superimposed” (or coalesced) them to yield the final composite PDF. We are not aware of any other research that utilizes such a composite strategy, in any signal processing domain.

To evaluate the performance of the proposed method, we have employed synthetic samples obtained from various well-known distributions, including mixture densities. The accuracy of the proposed method has also been compared with that obtained by other approaches representative of the families of time- and frequency-domain methods available in the literature.

Our method has shown promising results across the distributions tested. However, we recognize the potential for further exploration. While our chosen examples demonstrate the method’s efficacy, future work could involve testing on a broader range of distributions, including heavy-tailed and multimodal distributions. This extended testing could provide additional insights into the method’s performance under diverse scenarios and potentially uncover new areas for refinement or application.

Moreover, in future research, we intend to explore methods that could enhance the robustness of the proposed approach, particularly in handling outliers. The current method, like other nonparametric density estimation techniques, may be sensitive to extreme values, as it relies on sample moments, which can be influenced by outliers. Although this issue can be mitigated through outlier detection and censoring prior to density estimation, we anticipate a potential for improvement. One promising direction would be to investigate the integration of more robust estimators for sample moments into the density estimation method. This could potentially increase the method’s resilience to outliers, without compromising its performance on “clean” data, thereby expanding its applicability to datasets possessing more challenging characteristics.
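The sensitivity of sample moments to extreme values can be illustrated with a small numerical sketch. The data here are hypothetical: 50 draws from an Exponential distribution with unit rate, to which a single artificial outlier of 15 is appended. The function name `raw_moments` and the choice of the first four raw moments are assumptions made only for this illustration.

```python
import numpy as np

def raw_moments(samples, max_order):
    """Return the raw sample moments E[x^k] for k = 1..max_order."""
    samples = np.asarray(samples, dtype=float)
    return np.array([np.mean(samples ** k) for k in range(1, max_order + 1)])

rng = np.random.default_rng(1)
clean = rng.exponential(scale=1.0, size=50)
# Append one artificial extreme value to mimic an outlier.
contaminated = np.append(clean, 15.0)

m_clean = raw_moments(clean, 4)
m_contaminated = raw_moments(contaminated, 4)
# Factor by which each moment changes because of the single outlier.
inflation = m_contaminated / m_clean

for order, factor in enumerate(inflation, start=1):
    print(f"moment {order}: inflated by a factor of {factor:.2f}")
```

The inflation factor grows with the order of the moment, which suggests why a moment-matching estimator can be affected by even one extreme observation and why robust moment estimators are a natural direction to investigate.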

The results underscore the robustness and effectiveness of our method, particularly in scenarios in which the sample sizes are considerably reduced. Thus, this research confirms how the state of the art in estimating nonparametric PDFs can be enhanced by the Padé approximation, offering notable advantages over existing methods in terms of accuracy. As far as we know, the theoretical results that we have proven, and the experimental results that confirm them, are novel and rather pioneering.

Author Contributions

Conceptualization, S.A.H. and B.J.O.; methodology, all authors; software, H.R.A.; validation, all authors; writing—original draft preparation, H.R.A.; writing—review and editing, S.A.H., J.R.G. and B.J.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data used to generate the results in this study can be provided upon request to the authors.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Pinsky, M.; Karlin, S. An Introduction to Stochastic Modeling; Academic Press: Cambridge, MA, USA, 2010.

- Zilz, D.P.; Bell, M.R. Statistical Results on the Performance of an Adaptive-Threshold Radar Detector in the Presence of Wireless Communications Interference Revision 1; Technical Report 482; Purdue University School of Electrical and Computer Engineering: West Lafayette, IN, USA, 2017.

- Tateo, A.; Miglietta, M.; Fedele, F.; Menegotto, M.; Pollice, A.; Bellotti, R. A statistical method based on the ensemble probability density function for the prediction of “Wind Days”. Atmos. Res. 2019, 216, 106–116.

- Beitollahi, M.; Hosseini, S.A. Using curve fitting for spectral reflectance curves intervals in order to hyperspectral data compression. In Proceedings of the 2016 10th International Symposium on Communication Systems, Networks and Digital Signal Processing (CSNDSP), Prague, Czech Republic, 20–22 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–5.

- Beitollahi, M.; Hosseini, S.A. Using Savitsky-Golay filter and interval curve fitting in order to hyperspectral data compression. In Proceedings of the 2017 Iranian Conference on Electrical Engineering (ICEE), Tehran, Iran, 2–4 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1967–1972.

- Silverman, B.W. Density Estimation for Statistics and Data Analysis; Routledge: London, UK, 2018.

- Chen, W.C.; Tareen, A.; Kinney, J.B. Density estimation on small datasets. Phys. Rev. Lett. 2018, 121, 160605.

- Kendall, M.G. The Advanced Theory of Statistics, 2nd ed.; Charles Griffin and Co., Ltd.: London, UK, 1946.

- Hwang, J.N.; Lay, S.R.; Lippman, A. Nonparametric multivariate density estimation: A comparative study. IEEE Trans. Signal Process. 1994, 42, 2795–2810.

- Archambeau, C.; Verleysen, M. Fully nonparametric probability density function estimation with finite gaussian mixture models. In Proceedings of the 5th International Conference on Advances in Pattern Recognition, Calcutta, India, 10–13 December 2003; pp. 81–84.

- Clerc, S.; Dennison, J.R.; Hoffmann, R.; Abbott, J. On the computation of secondary electron emission models. IEEE Trans. Plasma Sci. 2006, 34, 2219–2225.

- Gentle, J.E. Estimation of Probability Density Functions Using Parametric Models. In Computational Statistics; Springer: New York, NY, USA, 2009; pp. 475–485.

- April, S. Density Estimation for Statistics and Data Analysis Chapter 1 and 2; Chapman and Hall: London, UK, 2003.

- Hsu, C.Y.; Shao, L.J.; Tseng, K.K.; Huang, W.T. Moon image segmentation with a new mixture histogram model. Enterp. Inf. Syst. 2021, 15, 1046–1069.

- Reyes, M.; Francisco-Fernández, M.; Cao, R. Bandwidth selection in kernel density estimation for interval-grouped data. Test 2017, 26, 527–545.

- Knuth, K.H. Optimal data-based binning for histograms. arXiv 2006, arXiv:physics/0605197.

- Papkov, G.I.; Scott, D.W. Local-moment nonparametric density estimation of pre-binned data. Comput. Stat. Data Anal. 2010, 54, 3421–3429.

- Tsuruta, Y.; Sagae, M. Properties for circular nonparametric regressions by von Mises and wrapped Cauchy kernels. Bull. Inform. Cybern. 2018, 50, 1–13.

- Zambom, A.Z.; Ronaldo, D. A review of kernel density estimation with applications to econometrics. Int. Econom. Rev. 2013, 5, 20–42.

- Demir, S. Adaptive kernel density estimation with generalized least square cross-validation. Hacet. J. Math. Stat. 2019, 48, 616–625.

- Hamsa, K. Some Contributions to Renewal Density Estimation. Ph.D. Thesis, University of Calicut, Kozhikode, India, 2009.

- Jarosz, W. Efficient Monte Carlo Methods for Light Transport in Scattering Media; University of California: San Diego, CA, USA, 2008.

- Lagha, K.; Adjabi, S. Non parametric sequential estimation of the probability density function by orthogonal series. Commun. Stat.-Theory Methods 2017, 46, 5941–5955.

- Amindavar, H.; Ritcey, J.A. Padé approximations of probability density functions. IEEE Trans. Aerosp. Electron. Syst. 1994, 30, 416–424.

- Davies, K.; Dembińska, A. Computing moments of discrete order statistics from non-identical distributions. J. Comput. Appl. Math. 2018, 328, 340–354.

- Gamsha, A.M.; Hameed, M.A.; Roslan, R. Psolutions of poiseuille flow using homotopy perturbation method linked with Padé approximation. ARPN J. Eng. Appl. Sci. 2017, 12, 2545–2551.

- Hosseini, S.A.; Ghassemian, H. Rational function approximation for feature reduction in hyperspectral data. Remote Sens. Lett. 2016, 7, 101–110.

- Singh, V.P.; Chandra, D. Reduction of discrete interval systems based on pole clustering and improved Padé approximation: A computer-aided approach. Adv. Model. Optim. 2012, 14, 45–56.

- Gilewicz, J. Numerical detection of the best Padé approximant and determination of the Fourier coefficients of insufficiently sampled functions. In Padé Approximants and Their Applications; Springer: Berlin/Heidelberg, Germany, 1973; pp. 99–103.

- Olver, S.; Townsend, A. Fast inverse transform sampling in one and two dimensions. arXiv 2013, arXiv:1307.1223.

- Jeffrey, M.R. Smoothing tautologies, hidden dynamics, and sigmoid asymptotics for piecewise smooth systems. Chaos Interdiscip. J. Nonlinear Sci. 2015, 25, 103125.

- Scott, D.W. Scott’s rule. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 497–502.

- Upcroft, B.; Kumar, S.; Ridley, M.; Ong, L.L.; Durrant-Whyte, H. Fast re-parameterisation of Gaussian mixture models for robotics applications. In Proceedings of the Australasian Conference on Robotics and Automation, Canberra, Australia, 6–8 December 2004.

- Kasa, S.R.; Rajan, V. Avoiding inferior clusterings with misspecified Gaussian mixture models. Sci. Rep. 2023, 13, 19164.

- Mas’ud, A.A.; Sundaram, A.; Ardila-Rey, J.A.; Schurch, R.; Muhammad-Sukki, F.; Bani, N.A. Application of the Gaussian Mixture Model to classify stages of electrical tree growth in epoxy resin. Sensors 2021, 21, 2562.

- Hosseini, S.A.; Ghassemian, H. Hyperspectral data feature extraction using rational function curve fitting. Int. J. Pattern Recognit. Artif. Intell. 2016, 30, 1650001.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).