Abstract

Unmanned Aerial Vehicles (UAVs) have transformed data collection and analysis across many research disciplines, offering considerable flexibility and efficiency. This paper presents a thorough examination of UAV datasets, emphasizing their wide range of applications and recent progress. UAV datasets comprise various types of data, such as satellite imagery, drone-captured images, and videos. They can be categorized as unimodal or multimodal, the latter combining several data types to provide richer and more comprehensive information. These datasets play a crucial role in disaster damage assessment, aerial surveillance, object recognition, and tracking, and they support the development of models for tasks such as semantic segmentation, pose estimation, vehicle re-identification, and gesture recognition. By leveraging UAV datasets, researchers can substantially improve computer vision models, advancing technology and our understanding of complex, dynamic environments from an aerial perspective. This review summarizes the multifaceted utility of UAV datasets and their pivotal role in driving innovation and practical applications across multiple domains.

1. Introduction

Unmanned Aerial Vehicles (UAVs) [1], commonly referred to as drones, have revolutionized the way we collect and analyze data from above, offering unparalleled versatility and efficiency across various research fields. This review examines the wide-ranging applications of UAV datasets, highlighting their significant advancements and potential across multiple domains. UAV datasets include various data types, such as satellite imagery, drone-captured images, and videos. Datasets may be classified as unimodal, focusing on a single data type, or multimodal, which incorporates multiple data types to provide deeper and more comprehensive insights.

UAV datasets support disaster damage assessment by enabling the classification of damage from natural disasters through semantic segmentation and detailed annotation. Training computer vision models on these datasets automates aerial scene classification for disaster events, which can speed up response and recovery efforts. Extracting information and detecting objects in UAV-captured data is also essential for tasks such as action recognition, which analyzes human behavior from aerial imagery (including aerial gesture recognition), and disaster event classification.

A significant application of UAV datasets is aerial surveillance, where they support research at the convergence of computer vision, robotics, and surveillance. These datasets enable event recognition in aerial videos, contributing to the monitoring of urban environments and traffic systems. Pre-trained models and transfer learning further increase their value, allowing advanced models for event recognition and tracking to be deployed quickly.

UAV datasets improve object recognition in urban surveillance by offering extensive top-down and side views. This enables tasks including categorization, verification, object detection, and tracking of individuals and vehicles. UAV datasets play a critical role in the understanding and management of forest ecosystems by facilitating the segmentation of individual trees, an essential aspect of sustainable forest management.

The versatility of UAV datasets extends to other domains, such as speech recognition systems for UAV control and object tracking in low-light conditions, which is crucial for nighttime surveillance. Novel UAV designs, including bionic drones with flapping wings, have also produced specialized video datasets for single object tracking (SOT) [2]. These examples illustrate the broad scope of UAV datasets and their potential for improving real-time object tracking across diverse lighting conditions.

UAV datasets serve as a fundamental resource for advanced research and practical applications in various fields. This review examines the specific applications and advantages of these datasets, emphasizing their contribution to technological advancement and enhancing our comprehension of complex, dynamic environments from an aerial viewpoint.

The contributions of this study are as follows:

- Our study is motivated by the growing importance of UAV datasets in research domains such as object detection, traffic monitoring, action recognition, low-light surveillance, single object tracking, and forest segmentation using LiDAR point clouds. By analyzing current datasets, their uses, and their prospects in depth, this paper aims to provide insights that help researchers use these resources to develop creative solutions. Readers will also learn about existing limitations and future opportunities that can inform their research.

- We conduct an in-depth analysis of 15 UAV datasets, showcasing their diverse research applications.

- We highlight the applications and advancements of several novel methods developed on these UAV datasets.

- We also discuss future research directions and the practical feasibility of using these UAV datasets.

2. Methodology

UAVs have a diverse range of applications, which calls for a thorough investigation of how UAV datasets are used. We aimed to understand how these datasets can be employed in different research and project scenarios. To this end, we conducted an extensive search for UAV datasets, initially using the keyword “satellite or drone image datasets”. This initial search led us to “UAV datasets”. Recognizing their potential, we investigated the field further and identified applications in object detection, object tracking (including single object tracking), event detection, and semantic segmentation.

To gather relevant UAV datasets, we conducted systematic Internet searches using a range of keywords and search terms related to UAVs and their applications. We specifically looked for datasets that demonstrated the adaptability of UAVs, choosing those that researchers had proposed and used in other research contexts. This approach ensured that the included datasets were novel and covered diverse UAV applications.

We identified and collected 15 UAV image datasets for inclusion in our study. Our selection criteria focused on datasets that showcased a variety of use cases, including traffic systems (car identification, person identification, and surveillance systems), damage classification from disasters, and other object detection and segmentation tasks. Each dataset was thoroughly reviewed and analyzed to understand its characteristics, intended use, and underlying methodologies.

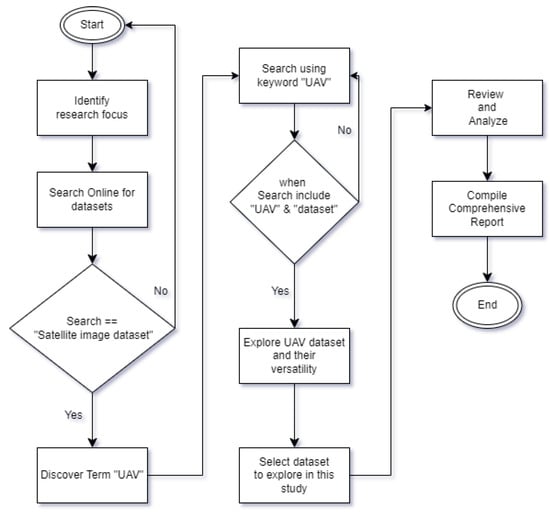

Our analysis involved a detailed examination of each dataset, resulting in the report presented in this paper. The report outlines the characteristics, objectives, and applications of each dataset, providing insight into its respective field of use. With these findings, we aim to highlight the versatility of UAV datasets and their potential to advance various research domains. Figure 1 depicts the sequential workflow of our study.

Figure 1.

Workflow of this study.

Search Terms

Most of the datasets surveyed in this paper were obtained from https://paperswithcode.com/ (accessed on 12 November 2024). We found this website while searching for UAV datasets with various search terms.

Example search strings:

- (“unmanned aerial vehicle” OR UAV OR drone OR Satellite) AND (“dataset” OR “image dataset” OR “dataset papers”)

- (UAV OR “unmanned aerial vehicle”) AND (“disaster dataset” OR “traffic surveillance”)

These search strings and keywords facilitated a broad yet focused search, enabling us to gather a diverse set of UAV datasets that demonstrate their wide-ranging applications and research potential.

3. Literature Review

UAVs operate without an onboard human pilot and can be remotely controlled or managed autonomously by onboard computers. They are used in diverse fields such as surveillance, aerial photography, agriculture, environmental monitoring, and military operations. As a source of datasets, UAVs greatly benefit computer vision tasks by collecting many forms of image and video data that complement satellite imagery.

In addition to the datasets examined in this work, recent research has explored novel UAV applications beyond conventional purposes. These include real-time 3D air quality index (AQI) mapping for air quality assessment [3], wildfire detection systems that integrate UAVs with satellite and ground sensors [4], federated learning frameworks for energy-efficient UAV swarm operations [5], and extensive surveys addressing the challenges of air pollution monitoring. These efforts underscore the adaptability of UAVs in addressing urgent environmental and computational problems, and they reveal opportunities for further research in fields such as disaster management and machine learning integration.

Table 1.

Summary of research and findings of UAV datasets discussed.

Table 2.

Summary of research and findings of UAV datasets discussed.

3.1. RescueNet

Maryam Rahnemoonfar, Tashnim Chowdhury, and Robin Murphy presented the RescueNet [6] dataset, which focuses on post-disaster scene understanding using UAV imagery. The dataset consists of high-resolution images collected by UAVs after Hurricane Michael, with detailed pixel-level annotations for ten classes, including buildings, roads, pools, and trees. The authors evaluated state-of-the-art segmentation models such as Attention UNet [21], PSPNet [22], and DeepLabv3 [23], with attention-based and transformer-based methods achieving the best performance. The findings demonstrated RescueNet’s effectiveness for improving damage assessment and response strategies, and in transfer learning experiments it outperformed other datasets such as FloodNet [24]. Reported limitations include limited generalization to other domains and a time-consuming annotation process, despite the detail of the annotations.
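Segmentation results on datasets such as RescueNet are commonly reported as per-class Intersection over Union (IoU) and its mean (mIoU). The following is a minimal NumPy sketch of that metric computed from a confusion matrix; the function names are hypothetical, and the authors' evaluation code may differ, for example in how it handles ignored labels.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Accumulate a num_classes x num_classes confusion matrix (rows = true, cols = predicted)."""
    t = np.asarray(y_true).ravel()
    p = np.asarray(y_pred).ravel()
    # Each (true, pred) pair maps to a unique bin index t * C + p.
    idx = t * num_classes + p
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)

def per_class_iou(cm):
    """IoU_c = TP / (TP + FP + FN); classes absent from both maps get NaN."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    denom = tp + fp + fn
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom > 0, tp / denom, np.nan)

def mean_iou(y_true, y_pred, num_classes):
    """Mean IoU over the classes that actually occur (NaNs are skipped)."""
    return float(np.nanmean(per_class_iou(confusion_matrix(y_true, y_pred, num_classes))))
```

For a 2 × 2 label map with true labels `[[0, 0], [1, 1]]` and predictions `[[0, 1], [1, 1]]`, class 0 has IoU 1/2 and class 1 has IoU 2/3, giving an mIoU of 7/12.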

3.2. UAV-Human

Tianjiao Li et al. developed the UAV-Human [7] dataset, a comprehensive benchmark for understanding human behavior from UAVs. The dataset contains 67,428 multimodal video sequences of 119 subjects for action recognition, 22,476 frames for pose estimation, 41,290 frames with 1144 identities for person re-identification, and 22,263 frames for attribute recognition, all captured over three months in various urban and rural locations under varying conditions. The data include RGB videos, depth maps, infrared sequences, and skeleton data. The authors evaluated methods such as HigherHRNet [25] and AlphaPose [26] for pose estimation and used a Guided Transformer I3D framework for action recognition that addresses fisheye video distortion [27,28] and leverages multiple data modalities. The results showed significant performance improvements in action recognition, pose estimation, and re-identification, making UAV-Human a reliable benchmark that encourages the development of more effective UAV-based human behavior analysis algorithms.
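UAV-Human includes fisheye video, whose radial distortion the Guided Transformer I3D framework handles inside the network. As a point of comparison only, the sketch below shows classical geometric undistortion, assuming an equidistant fisheye model (image radius r = f·θ) and a principal point at the image centre; the focal length `f` and the function name are hypothetical, and this is not the authors' method.

```python
import numpy as np

def undistort_equidistant(fisheye, f):
    """Remap an equidistant fisheye image (r = f * theta) to a pinhole view (r = f * tan(theta)).

    Uses nearest-neighbour sampling; input and output share size and the image-centre
    principal point. Works for H x W and H x W x C arrays.
    """
    h, w = fisheye.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    dx, dy = xs - cx, ys - cy
    r_pinhole = np.hypot(dx, dy)
    theta = np.arctan(r_pinhole / f)   # viewing angle of each output (pinhole) pixel
    r_fish = f * theta                 # radius where that ray lands in the fisheye image
    # Ratio of source radius to output radius (1 at the exact centre).
    scale = np.ones_like(r_pinhole)
    nz = r_pinhole > 0
    scale[nz] = r_fish[nz] / r_pinhole[nz]
    src_x = np.rint(cx + dx * scale).astype(int)
    src_y = np.rint(cy + dy * scale).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(fisheye)
    out[valid] = fisheye[src_y[valid], src_x[valid]]
    return out
```

Because θ ≤ tan θ, every output pixel samples a point no farther from the centre than itself, so the periphery of the fisheye image is stretched outward, which is the characteristic effect of undistortion.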

3.3. AIDER

Christos Kyrkou and Theocharis Theocharides introduced the AIDER [8] dataset for disaster event classification from UAV aerial images. The dataset contains 2565 images of Fire/Smoke, Flood, Collapsed Building/Rubble, Traffic Accidents, and Normal cases, manually collected from various sources, mainly UAVs. To increase variability and combat overfitting, the images were randomly augmented with rotations, translations, and color shifts. The paper presents ERNet, a lightweight CNN designed for efficient classification on embedded UAV platforms. ERNet draws on components of architectures such as VGG16 [29], ResNet [30], and MobileNet [31] and uses early downsampling to reduce computational cost. When tested on both embedded platforms attached to UAVs and desktop CPUs, ERNet achieved high accuracy (about 90%) while running three times faster on embedded platforms, making it well suited to real-time applications with limited memory. The study also emphasizes the benefits of combining ERNet with other detection algorithms to improve situational awareness in emergency response.
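The random rotation, translation, and color-shift augmentation described for AIDER can be sketched as follows. The exact parameters are not stated here, so the values used (90-degree rotations, shifts of up to 8 pixels, per-channel offsets of up to 20 intensity levels) are hypothetical, and the translation is simplified to a circular shift with `np.roll`; this illustrates the idea rather than the authors' pipeline.

```python
import numpy as np

def augment(image, rng, max_shift=8, max_color=20):
    """Randomly rotate, translate, and colour-shift an H x W x 3 uint8 image.

    Hypothetical parameters: rotations are multiples of 90 degrees, translation is a
    circular shift of up to max_shift pixels, colour shift is a per-channel offset.
    """
    out = np.rot90(image, k=int(rng.integers(0, 4)), axes=(0, 1))
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    out = np.roll(out, shift=(int(dy), int(dx)), axis=(0, 1))  # simple wrap-around translation
    offset = rng.integers(-max_color, max_color + 1, size=3)   # one offset per colour channel
    out = np.clip(out.astype(np.int16) + offset, 0, 255).astype(np.uint8)
    return out
```

A real pipeline would more likely pad or crop rather than wrap pixels around the border; the circular shift simply keeps the sketch short.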

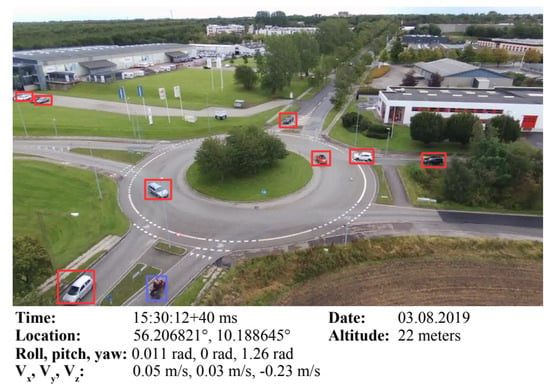

3.4. AU-AIR

Ilker Bozcan and Erdal Kayacan presented the AU-AIR [9] dataset, a comprehensive UAV dataset designed for traffic surveillance. It comprises 32,823 labeled video frames annotated with eight traffic-related object categories, together with multimodal data including GPS coordinates, altitude, IMU data [32], and velocity. To establish a baseline for real-time UAV applications, the authors trained and evaluated two mobile object detectors on the dataset: YOLOv3-Tiny [33] and MobileNetv2-SSDLite [34]. The results highlight the difficulty of object detection in aerial images and the importance of datasets tailored to mobile detectors. The study points to the dataset’s potential for research in computer vision, robotics, and aerial surveillance, while acknowledging its limitations and suggesting improvements for broader applicability.
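Object detectors such as those benchmarked on AU-AIR are usually evaluated by matching predicted boxes to ground-truth boxes at an IoU threshold before computing average precision. The sketch below shows box IoU and a simplified greedy matching step; the 0.5 threshold and the function names are assumptions, and official evaluation toolkits differ in details such as tie handling.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2) with x2 > x1 and y2 > y1."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def match_detections(dets, gts, thr=0.5):
    """Simplified greedy matching of detections to ground truth.

    dets: list of (score, box); gts: list of boxes. Detections are processed in
    descending score order; each takes the best still-unmatched ground-truth box with
    IoU >= thr. Returns a list of (score, is_true_positive).
    """
    used = [False] * len(gts)
    results = []
    for score, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best, best_iou = -1, thr
        for j, g in enumerate(gts):
            iou = box_iou(box, g)
            if not used[j] and iou >= best_iou:
                best, best_iou = j, iou
        if best >= 0:
            used[best] = True
        results.append((score, best >= 0))
    return results
```

The resulting true/false-positive flags, sorted by score, are what a precision-recall curve and its average precision are computed from; a duplicate detection of an already-matched object counts as a false positive.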

3.5. ERA

Lichao Mou et al. introduced the ERA [10] dataset for event recognition in UAV videos, a collection of 2864 labeled video snippets covering twenty-four event classes and one normal class. The videos, sourced from YouTube, are 5 s long, 640 × 640 pixels, and recorded at 24 fps, and they include both high-quality footage and footage captured under extreme conditions. The paper benchmarks event recognition with various deep learning models, including VGG-16, ResNet-50, DenseNet-201 [35], and video classification models such as I3D-Inception-v1. DenseNet-201 achieved the highest performance, with an overall accuracy of 62.3% in single-frame classification. The findings highlight the difficulty of recognizing events across varied environments and scales: while models can identify events such as traffic congestion and smoke, they struggle with night and snow scenes, which indicates a need for better attribute recognition and for exploiting temporal cues in future research.
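Each ERA snippet lasts 5 s at 24 fps, that is, 5 × 24 = 120 frames. A common way to apply an image classifier to such clips is to sample a few frames evenly and average their class probabilities. The sketch below assumes that scheme; the sampling rule, the number of frames `k`, and the averaging step are illustrative choices, not the protocol of the ERA benchmark.

```python
import numpy as np

FPS, DURATION_S = 24, 5
NUM_FRAMES = FPS * DURATION_S  # 120 frames per ERA snippet

def uniform_frame_indices(num_frames, k):
    """Pick k frame indices spread evenly over the clip (the centre of k equal segments)."""
    seg = num_frames / k
    return [int(seg * i + seg / 2) for i in range(k)]

def clip_prediction(frame_probs):
    """Average per-frame class probabilities (shape k x C) and return the top class index."""
    return int(np.argmax(np.mean(frame_probs, axis=0)))
```

For example, `uniform_frame_indices(NUM_FRAMES, 4)` returns `[15, 45, 75, 105]`. Averaging over frames adds only a crude form of temporal context, which is consistent with the observation above that exploiting temporal cues remains an open problem.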

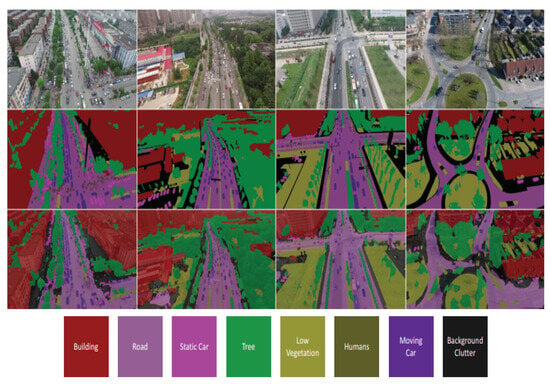

3.6. UAVid

Ye Lyu et al. introduced the UAVid [11] dataset to address the need for semantic segmentation of urban scenes from a UAV perspective. UAVid consists of 30 video sequences of 4K high-resolution images that capture both top-down and side views for improved object recognition, labeled with eight classes. The paper highlights the challenges of large scale variation, moving object recognition, and temporal consistency. The authors evaluated deep learning baselines, including their proposed Multi-Scale-Dilation net, which achieved a mean Intersection over Union [36] (IoU) of approximately 50%. Spatial–temporal regularization methods such as FSO [37] and 3D CRF [38] yielded further improvements. The dataset is applicable to traffic monitoring, population density analysis, and urban greenery monitoring, showcasing its potential for diverse urban surveillance applications. The paper also discusses the dataset’s class imbalance and suggests future expansions and optimizations to improve its utility for semantic segmentation and other UAV-based tasks.
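One standard remedy for the kind of class imbalance noted for UAVid is to weight the segmentation loss per class. The sketch below computes a simplified form of median frequency balancing, w_c = median(f) / f_c, where f_c is the pixel frequency of class c; this technique is a generic example and not necessarily what the UAVid authors used.

```python
import numpy as np

def median_frequency_weights(label_maps, num_classes):
    """Class weights w_c = median(f) / f_c, with f_c the pixel frequency of class c.

    Simplified median frequency balancing: frequencies are computed over all pixels of
    all label maps. Classes that never occur get weight 0.
    """
    counts = np.zeros(num_classes, dtype=np.int64)
    for lm in label_maps:
        counts += np.bincount(np.asarray(lm).ravel(), minlength=num_classes)
    freq = counts / counts.sum()
    present = freq > 0
    weights = np.zeros(num_classes)
    weights[present] = np.median(freq[present]) / freq[present]
    return weights
```

Rare classes (for example, small moving objects) receive weights above 1 and dominant classes (for example, background or road) weights below 1, so that errors on rare classes contribute more to the loss.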

3.7. VRAI

Peng Wang et al. introduced the VRAI [12] dataset, the largest UAV-based vehicle re-identification (ReID) dataset at the time of its release, containing 137,613 images of 13,022 vehicles. The dataset includes annotations for unique IDs, color, vehicle type, attributes, and distinguishing parts, and it covers a wide range of view angles and poses captured from UAVs flying between 15 m and 80 m. The authors devised a vehicle ReID algorithm that uses weight matrices, weighted pooling, and the detailed annotations to emphasize distinctive vehicle parts; it surpassed both the baseline and the state-of-the-art methods of the time. Ablation results show that the Multi-task + DP model, which combines attribute classification with an additional triplet loss on weighted features, outperforms simpler variants. The method also outperforms approaches designed for ground-level imagery, such as MGN [39], RNN-HA [40], and RAM [41], because it better handles the varied view angles of UAV images. The gains from weighted feature aggregation are reflected in the mean average precision (mAP) and cumulative match characteristic (CMC) metrics. A human performance evaluation highlights the algorithm’s strength in fine-grained recognition, although humans still excel at detailed tasks. The study suggests further research to improve the algorithm’s flexibility, scalability, and real-world applicability.
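The two ingredients named for VRAI, weighted pooling of a feature map and a triplet loss on the pooled features, can be sketched in NumPy. In VRAI the weight map is learned with help from the discriminative-part annotations; here it is simply passed in, and the margin of 0.3 is a hypothetical value.

```python
import numpy as np

def weighted_pool(feature_map, weights):
    """Pool a C x H x W feature map into a C-vector using a non-negative H x W weight map.

    Weights are normalised to sum to 1, so uniform weights reduce to global average pooling.
    """
    w = weights / (weights.sum() + 1e-12)
    return np.tensordot(feature_map, w, axes=([1, 2], [0, 1]))

def triplet_loss(anchor, positive, negative, margin=0.3):
    """Hinge triplet loss on Euclidean distances: max(0, d(a, p) - d(a, n) + margin)."""
    d_ap = np.linalg.norm(anchor - positive)
    d_an = np.linalg.norm(anchor - negative)
    return max(0.0, d_ap - d_an + margin)
```

With a weight map concentrated on one location, the pooled vector is just the feature at that location; the triplet loss is zero once the negative is at least `margin` farther from the anchor than the positive.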

3.8. FOR-Instance

Stefano Puliti et al. presented the FOR-Instance [13] dataset for semantic and instance segmentation of individual trees in their paper “FOR-Instance: a UAV laser scanning benchmark dataset for semantic and instance segmentation of individual trees”. The dataset addresses the scarcity of machine learning-ready datasets and standardized benchmarking infrastructure by providing publicly accessible, annotated forest data for point cloud segmentation tasks. Its main objective is to use UAV laser scanning data to accurately detect and delineate individual trees. The dataset consists of five carefully selected collections from different forest types around the world, with detailed annotations for training and evaluation, and it is partitioned into training and validation subsets for deep learning. Tree segmentation approaches work either on rasterized canopy height models, which are two-dimensional projections of the point cloud, or directly on raw point clouds. FOR-Instance proved useful for examining and evaluating advanced segmentation techniques, underscoring its value for understanding forest ecosystems and developing sustainable management strategies. By standardizing benchmarking in 3D forest scene segmentation, the dataset mitigates existing methodological shortcomings, including overfitting and insufficient comparability between studies.
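A rasterized canopy height model (CHM), the 2D representation mentioned above, can be built from a point cloud by keeping the highest point in each grid cell. The sketch below assumes the z values are already heights above ground (a normalized point cloud) and uses a hypothetical function name; production tools also interpolate empty cells and filter outliers.

```python
import numpy as np

def canopy_height_model(points, cell_size):
    """Rasterise an N x 3 array of (x, y, z) points into a grid of max z per cell.

    Assumes z is already height above ground. Rows follow y, columns follow x,
    and cells without points are NaN.
    """
    xy_min = points[:, :2].min(axis=0)
    ij = np.floor((points[:, :2] - xy_min) / cell_size).astype(int)  # (col, row) per point
    n_cols, n_rows = ij.max(axis=0) + 1
    chm = np.full((n_rows, n_cols), -np.inf)
    # np.maximum.at is unbuffered, so several points in the same cell are all considered.
    np.maximum.at(chm, (ij[:, 1], ij[:, 0]), points[:, 2])
    chm[np.isneginf(chm)] = np.nan
    return chm
```

Working on such a raster makes standard 2D segmentation methods applicable but discards the vertical structure below the canopy surface, which is one motivation for methods that operate on the raw point cloud.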

3.9. VERI-Wild

Yihang Lou et al. presented the VERI-Wild [14] dataset, the largest vehicle ReID dataset at the time of its release. It contains over 400,000 images of 40,000 vehicle IDs, collected over one month in an urban district using 174 CCTV cameras. Its diverse conditions, including varying backgrounds, lighting, occlusions, viewpoints, weather, and vehicle types, make it a formidable challenge for ReID algorithms. The authors also introduced FDA-Net, a vehicle ReID method that improves the model’s ability to distinguish between vehicles by combining a feature distance adversary network with a hard negative generator and an embedding discriminator. Tested on VERI-Wild and other established datasets, FDA-Net surpassed various standard methods in Rank-1 and Rank-5 accuracy. Its ability to generate hard negatives significantly improved model performance, highlighting its potential for advancing vehicle ReID in real-world scenarios.
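Rank-1 and Rank-5 accuracy are points on the cumulative match characteristic (CMC) curve: the fraction of queries whose correct identity appears among the k nearest gallery images. The sketch below computes them with Euclidean distances; it omits protocol details such as removing same-camera gallery entries, which official ReID evaluations apply, and the function name is hypothetical.

```python
import numpy as np

def cmc_rank_k(query_feats, query_ids, gallery_feats, gallery_ids, ks=(1, 5)):
    """Fraction of queries whose identity appears among the k nearest gallery items.

    Uses Euclidean distance on feature vectors. Returns {k: accuracy}.
    """
    dists = np.linalg.norm(query_feats[:, None, :] - gallery_feats[None, :, :], axis=2)
    order = np.argsort(dists, axis=1)                    # gallery indices, nearest first
    matches = gallery_ids[order] == query_ids[:, None]
    # Rank position of the first correct match for each query (inf if there is none).
    first_hit = np.where(matches.any(axis=1), matches.argmax(axis=1), np.inf)
    return {k: float(np.mean(first_hit < k)) for k in ks}
```

A query whose correct vehicle is the second-closest gallery image counts as a miss for Rank-1 but a hit for Rank-5.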

3.10. UAV-Assistant

G. Albanis, N. Zioulis, et al. introduced the UAV-Assistant [15] (UAVA) dataset. The dataset was created with a data synthesis pipeline that generates realistic multimodal data, including exocentric and egocentric UAV views. It is used to train a model that estimates the 3D pose of a UAV by combining a novel smooth silhouette loss with a direct regression objective, and differentiable rendering helps the model learn from both real and synthetic data. The study highlights the critical role of tuning the kernel size of the smoothing filter to optimize model performance. The proposed smooth silhouette loss outperforms conventional silhouette losses, reducing discrepancies and improving the accuracy of 3D pose estimation. The approach specifically addresses the lack of data for estimating the three-dimensional position and orientation of UAVs in non-hostile environments, unlike existing datasets that focus primarily on remote sensing or on drones with malicious intent. The paper underscores the need for further research on rendering techniques, parameter optimization, and real-world validation to improve the model’s generalizability and robustness.
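The exact formulation of the UAVA smooth silhouette loss is not given here. The sketch below illustrates the general idea under stated assumptions: both the rendered and the target silhouettes are blurred with a Gaussian filter whose kernel size is the tunable parameter, and their mean squared difference is taken. The Gaussian filter, the squared-error distance, and the default `size`/`sigma` values are all hypothetical.

```python
import numpy as np

def gaussian_kernel1d(size, sigma):
    """Normalised 1D Gaussian kernel of the given length."""
    x = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur(mask, size, sigma):
    """Separable Gaussian blur with zero padding; assumes both mask sides are >= size."""
    k = gaussian_kernel1d(size, sigma)
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, mask)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, rows)

def smooth_silhouette_loss(pred_mask, target_mask, size=9, sigma=2.0):
    """Hypothetical smooth silhouette loss: MSE between blurred silhouettes."""
    diff = blur(pred_mask, size, sigma) - blur(target_mask, size, sigma)
    return float(np.mean(diff ** 2))
```

Blurring turns a hard, nearly binary silhouette mismatch into a graded one, so a slightly misaligned prediction receives a smaller penalty than under a plain pixel-wise loss, and the kernel size controls how far that tolerance extends.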

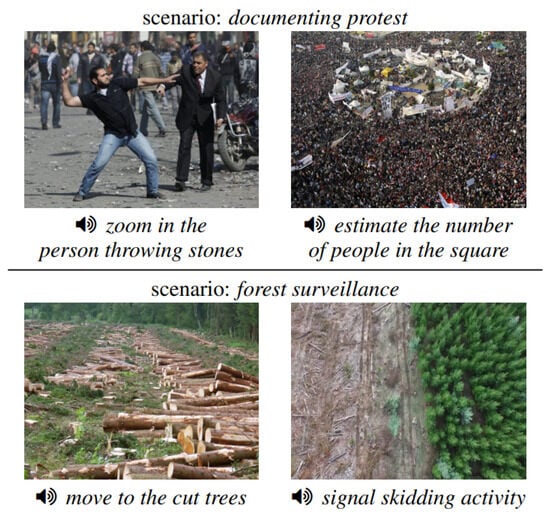

3.11. KITE

Dan Oneata and Horia Cucu presented the KITE [16] dataset, created to improve speech recognition systems for UAV control. The KITE eval dataset is a comprehensive collection of 2880 spoken commands, recorded as audio and paired with corresponding images. It is specifically designed for UAV operations and covers commands related to movement, camera usage, and specific scenarios. The authors used time-delay neural networks [42] implemented in Kaldi [43] together with recurrent neural networks for language modeling, initializing the models on out-of-domain datasets and then fine-tuning them for UAV tasks. The study shows that tailoring language models to UAV-specific commands substantially improves speech recognition accuracy through domain adaptation. Future directions include grounding spoken commands in images for better context understanding and making the acoustic model more robust to outdoor noise.
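Speech recognition accuracy is conventionally measured by word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the recognized text into the reference, divided by the number of reference words. A minimal sketch using word-level edit distance follows; the example commands are hypothetical and not taken from KITE.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words,
    computed with a word-level Levenshtein distance (one DP row kept at a time)."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))  # distance from an empty reference prefix
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(prev[j] + 1,              # deletion of reference word r
                         cur[j - 1] + 1,           # insertion of hypothesis word h
                         prev[j - 1] + (r != h))   # substitution (or match if equal)
        prev = cur
    return prev[-1] / max(len(ref), 1)
```

For instance, recognizing the hypothetical command "take off now" as "take of now" gives one substitution over three reference words, a WER of 1/3. Domain-adapted language models lower WER by making in-domain command phrasings more probable.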

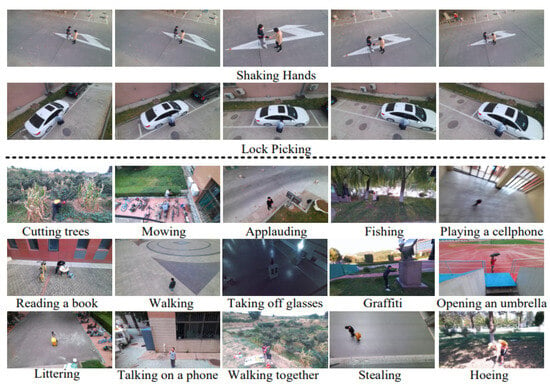

3.12. UAV-Gesture

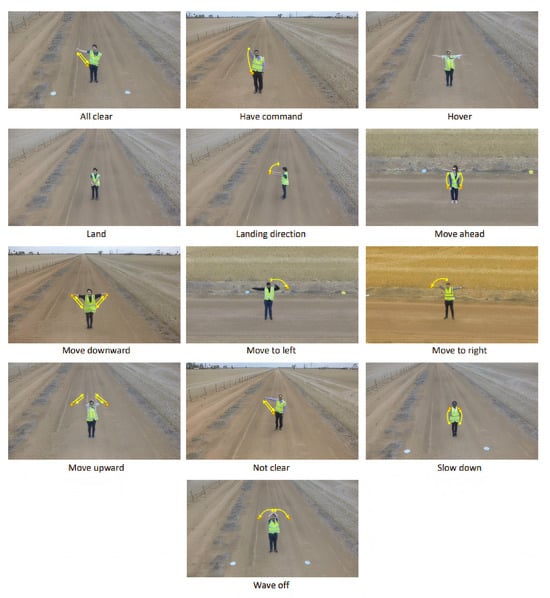

A. Perera et al. introduced the UAV-Gesture [17] dataset to address the lack of research on gesture-based UAV control in outdoor settings, since most studies in this field focus on indoor environments. The dataset consists of 119 high-definition video clips totaling 37,151 frames, captured outdoors with a 3DR Solo UAV and a GoPro Hero 4 Black camera. All frames are annotated with 13 body joints and gesture classes, covering gestures suited to UAV navigation and command. To increase realism, the data were captured with variations in phase, orientation, and camera movement. The authors used an extended version of the VATIC [44] tool for annotation and a Pose-based Convolutional Neural Network [45] (P-CNN) for gesture recognition, obtaining a baseline accuracy of 91.9%. The dataset supports research in gesture recognition, action recognition, human pose recognition, and UAV control, and shows potential for real-world applications.

3.13. UAVDark135

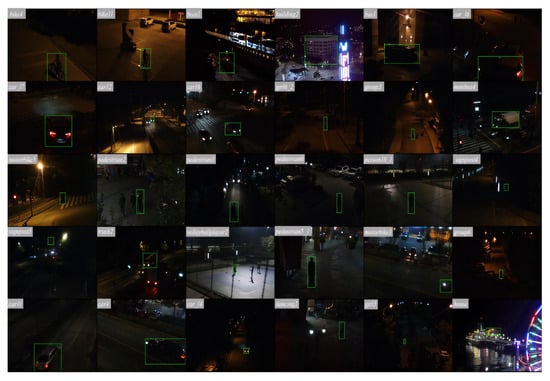

Bowen Li et al. presented the UAVDark135 [18] dataset and the ADTrack algorithm, aiming at reliable object tracking from UAVs under different lighting conditions. UAVDark135 is the first benchmark developed specifically for nighttime UAV tracking, with more than 125,000 manually annotated frames, addressing a gap in existing benchmarks. ADTrack is a discriminative correlation filter-based tracker with illumination-adaptive and anti-dark capabilities: it uses image illuminance information and an image enhancer to enable real-time, all-day tracking. Extensive tests on benchmarks such as UAV123@10fps [46], DTB70 [47], and UAVDark135 show that ADTrack performs better in both bright and dark environments while running at over 30 FPS on a single CPU. While effective, the paper recommends broader comparisons with other state-of-the-art trackers and future research on image enhancement, multi-sensor fusion, and UAV hardware optimization.
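To illustrate what a discriminative correlation filter does, the sketch below implements a single-sample, MOSSE-style filter in the Fourier domain: it learns a filter whose correlation with the target patch produces a Gaussian peak, then locates the target in a new patch from the response peak. This is a textbook simplification (no cosine window, online update, or scale estimation) and does not include ADTrack's illumination adaptation or image enhancement; `sigma` and `lam` are hypothetical defaults.

```python
import numpy as np

def gaussian_response(shape, sigma=2.0):
    """Desired correlation output: a Gaussian peak at the patch centre."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    return np.exp(-((ys - h // 2) ** 2 + (xs - w // 2) ** 2) / (2 * sigma ** 2))

def train_filter(patch, sigma=2.0, lam=1e-2):
    """Closed-form filter (conjugate form) H* = G F* / (F F* + lambda)."""
    F = np.fft.fft2(patch)
    G = np.fft.fft2(gaussian_response(patch.shape, sigma))
    return G * np.conj(F) / (F * np.conj(F) + lam)

def detect(H_conj, patch):
    """Correlate a new patch with the filter and return the (dy, dx) offset of the
    response peak from the centre, i.e. the estimated (circular) target displacement."""
    response = np.real(np.fft.ifft2(H_conj * np.fft.fft2(patch)))
    py, px = np.unravel_index(np.argmax(response), response.shape)
    h, w = patch.shape
    return int(py - h // 2), int(px - w // 2)
```

Training and detection each cost a few FFTs, which is why correlation filter trackers such as ADTrack can reach real-time speeds on a CPU. In low light, the patch carries little texture, so the learned filter becomes unreliable; this is the problem that illumination-adaptive designs target.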

3.14. DarkTrack2021

Junjie Ye et al. presented the DarkTrack2021 [19] dataset to address the difficulty of UAV object tracking in low-light conditions. The dataset consists of 110 annotated sequences with more than 100,000 frames, providing a varied evaluation platform for nighttime UAV tracking. The researchers also developed an effective low-light enhancer, the Spatial-Channel Transformer (SCT), which combines a spatial-channel Transformer with a robust non-linear curve projection model. SCT fuses global and local information, improving image quality by reducing noise and enhancing illumination in nighttime scenes. In the related ADTrack study (Section 3.13), ADTrack was evaluated together with 16 state-of-the-art handcrafted correlation filter (CF)-based trackers on the UAV123@10fps, DTB70, and UAVDark135 benchmarks to demonstrate its robustness in all-day UAV tracking. Evaluations on the public UAVDark135 benchmark and the new DarkTrack2021 benchmark showed that SCT outperforms existing methods for nighttime UAV tracking, and real-world tests confirmed the practicality of the approach. The DarkTrack2021 dataset and the SCT code are openly available on GitHub for further research and experimentation.
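SCT is described as pairing a Transformer with a non-linear curve projection model. We assume here a quadratic light-enhancement curve of the kind popularized by Zero-DCE, LE(x) = x + α·x·(1 − x), applied iteratively; in SCT the curve parameters would be predicted by the network, whereas in this sketch `alpha` is supplied by hand. The exact curve used by SCT may differ.

```python
import numpy as np

def curve_enhance(image, alpha, iterations=4):
    """Iteratively apply the quadratic curve LE(x) = x + alpha * x * (1 - x).

    image: float array with values in [0, 1]; alpha: scalar or per-pixel array in [-1, 1].
    Positive alpha brightens, negative alpha darkens; 0 and 1 are fixed points.
    Assumed curve form (Zero-DCE style), not necessarily SCT's exact model.
    """
    x = np.clip(image, 0.0, 1.0)
    for _ in range(iterations):
        x = x + alpha * x * (1.0 - x)
    return x
```

For α in [−1, 1] the curve is monotonic and maps [0, 1] onto itself, so repeated application brightens dark regions strongly while leaving already bright pixels nearly unchanged.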

3.15. BioDrone

Xin Zhao et al. presented the BioDrone [20] dataset, a pioneering visual benchmark for single object tracking (SOT) built with bionic drones. It specifically addresses the difficulty of tracking small targets that undergo significant appearance changes, which are common in footage from flapping-wing UAVs. The dataset consists of 600 videos with 304,209 manually labeled frames, plus automatically generated frame-level labels for ten challenge attributes. The study presents a new baseline method, UAV-KT, optimized from KeepTrack [48], and evaluates 20 SOT models, ranging from traditional approaches such as KCF [49] to sophisticated models combining CNNs and SNNs. Comprehensive experiments show that UAV-KT handles these challenging vision tasks more robustly than the other methods. The paper emphasizes BioDrone’s potential for advancing SOT algorithms and encourages future research on remaining challenges such as camera shake and dynamic visual environments.
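SOT benchmarks are typically scored with a success plot (the fraction of frames whose predicted box overlaps the ground truth above each IoU threshold) and a precision score (the fraction of frames whose predicted centre lies within a pixel radius of the true centre). The sketch below follows the common OTB-style convention (21 thresholds from 0 to 1, a 20-pixel radius); BioDrone's own toolkit may use different settings, and the function names are hypothetical.

```python
import numpy as np

def iou_xywh(a, b):
    """Row-wise IoU between two N x 4 arrays of boxes in (x, y, w, h) format."""
    x1 = np.maximum(a[:, 0], b[:, 0])
    y1 = np.maximum(a[:, 1], b[:, 1])
    x2 = np.minimum(a[:, 0] + a[:, 2], b[:, 0] + b[:, 2])
    y2 = np.minimum(a[:, 1] + a[:, 3], b[:, 1] + b[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = a[:, 2] * a[:, 3] + b[:, 2] * b[:, 3] - inter
    return inter / union

def success_auc(pred, gt, thresholds=np.linspace(0, 1, 21)):
    """Area under the success curve: mean over thresholds of the fraction of frames
    with IoU strictly above the threshold (a perfect tracker scores 20/21 here)."""
    ious = iou_xywh(pred, gt)
    return float(np.mean([np.mean(ious > t) for t in thresholds]))

def precision_at(pred, gt, px=20.0):
    """Fraction of frames whose predicted box centre is within px pixels of the true centre."""
    pc = pred[:, :2] + pred[:, 2:] / 2
    gc = gt[:, :2] + gt[:, 2:] / 2
    return float(np.mean(np.linalg.norm(pc - gc, axis=1) <= px))
```

For the small targets emphasized by BioDrone, a fixed 20-pixel radius is lenient relative to the target size, which is one reason overlap-based success scores are usually reported alongside precision.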

Appendix A provides a visual example from each of the UAV datasets described above for further clarification.

4. Data Diversity of UAV

The advent of UAVs has opened new frontiers in data collection and analysis, transforming numerous fields with their versatile applications. The datasets generated by UAVs are diverse, encompassing various data types and serving multiple purposes. This section provides an overview of the various uses of UAV datasets, examines their diversity, and explores the methods applied to utilize these datasets in different studies.

4.1. Overview of UAV Dataset Uses

UAV datasets are pivotal in numerous domains, including disaster management, surveillance, agriculture, environmental monitoring, and human behavior analysis. The unique aerial perspectives provided by UAVs enable the collection of high-resolution imagery and videos, which can be used for mapping, monitoring, and analyzing different environments and activities.

4.1.1. Disaster Management

UAV datasets are often used to assess the extent of damage caused by hurricanes, earthquakes, and floods. High-resolution images and videos captured by UAVs allow precise mapping of affected areas and identification of damaged infrastructure.

4.1.2. Surveillance

In urban and rural settings, UAV datasets support advanced surveillance activities. By providing real-time, high-resolution aerial views, they facilitate traffic monitoring, the detection of illegal activities, and urban planning.

4.1.3. Agriculture

UAV datasets help in monitoring crop health, assessing irrigation needs, and detecting pest infestations. Multispectral and hyperspectral imaging from UAVs enables detailed analysis of vegetation indices and soil properties.
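The most widely used vegetation index derived from multispectral imagery is the Normalized Difference Vegetation Index, NDVI = (NIR − Red) / (NIR + Red), computed per pixel from the near-infrared and red bands. A minimal sketch follows; it assumes the two bands are co-registered and radiometrically calibrated to reflectance, and the small `eps` term is only there to avoid division by zero.

```python
import numpy as np

def ndvi(nir, red, eps=1e-9):
    """Normalised Difference Vegetation Index, (NIR - Red) / (NIR + Red), per pixel.

    Values lie in [-1, 1]; dense healthy vegetation typically gives high positive values.
    """
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    return (nir - red) / (nir + red + eps)
```

Healthy vegetation reflects strongly in the near-infrared and absorbs red light, so a drop in NDVI across a field can flag crop stress worth inspecting on the ground.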

4.1.4. Environmental Monitoring

UAVs are used to monitor forest health, wildlife, and water bodies. They provide data for studying ecological changes, tracking animal movements, and assessing the impacts of climate change.

4.1.5. Human Behavior Analysis

UAV datasets contribute to analyzing human activities and behaviors in public spaces. They are used for action recognition, pose estimation, and crowd monitoring, offering valuable insights for security and urban planning.

4.2. Variability of UAV Datasets

The diversity of UAV datasets lies in their varied data types, capture conditions, and application contexts. This diversity ensures that UAVs can address a wide range of tasks, each requiring specific data characteristics.

4.2.1. Data Types

UAV datasets include RGB images, infrared images, depth maps, and multispectral and hyperspectral images [50]. To capture complex scenarios for human behavior analysis, the UAV-Human dataset, for example, combines RGB videos, depth maps, infrared sequences, and skeleton data.

4.2.2. Capture Conditions

UAV datasets are gathered under a variety of conditions, including different times of day, weather, lighting (low light or varied illumination), and flight altitudes. This variety helps models trained on these datasets remain robust across diverse settings.

4.2.3. Application Contexts

UAV datasets are tailored to specific applications. For example, datasets for aerial traffic monitoring combine visual data, object annotations, and flight data to address problems specific to that task. Similarly, high-resolution images captured after a disaster enable accurate damage assessment.

4.3. Methods Applied to UAV Datasets

Various methods, including machine learning, computer vision techniques, and advanced data processing algorithms, are applied to UAV datasets to extract valuable insights and solve specific problems. Table 3 and Table 4 summarize the methods used and the results obtained.

Table 3.

Summary of experimented methods and results on different datasets.

Table 4.

Summary of experimented methods and results on different datasets.

4.3.1. Machine Learning and Deep Learning

Deep learning models, such as convolutional neural networks (CNNs) [64], are widely used for tasks like object detection, segmentation, and classification. Some examples are as follows:

- The RescueNet dataset employs models like PSPNet, DeepLabv3+, and Attention UNet for semantic segmentation to assess disaster damage.

- The UAVid dataset provides deep learning baselines such as the Multi-Scale-Dilation net.

- The ERA dataset establishes a benchmark for event recognition in aerial videos using pre-existing deep learning models: the VGG models (VGG-16, VGG-19) [29], Inception-v3 [65], the ResNet models (ResNet-50, ResNet-101, and ResNet-152) [30], MobileNet, the DenseNet models (DenseNet-121, DenseNet-169, and DenseNet-201) [35], and NASNet-L [66].

Alongside machine learning and deep learning methods, traditional computer vision algorithms have also proven effective in UAV applications. For instance, ORB (Oriented FAST and Rotated BRIEF) and FLANN (Fast Library for Approximate Nearest Neighbors) have been employed for efficient object recognition and feature matching in UAV rescue systems, enabling real-time processing under hardware constraints [67]. Similarly, the SIFT (Scale-Invariant Feature Transform) algorithm has proven highly effective in image mosaicking, offering high accuracy and computational efficiency, particularly in agricultural monitoring [68]. These algorithms are efficient alternatives in resource-constrained environments for tasks such as object detection, feature matching, and image alignment. ORB, FLANN, and SIFT, currently used for object detection and mosaicking, could be further refined and benchmarked on future UAV datasets to tackle emerging challenges.
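To make the matching step concrete, the following Python sketch matches binary descriptors (such as those ORB produces) by Hamming distance and keeps only matches that pass Lowe's ratio test. It is an illustrative assumption, not the pipeline of [67]: FLANN replaces the exhaustive search below with approximate nearest-neighbour indexing, and descriptors are stored here as plain Python integers for simplicity.

```python
from typing import List, Tuple

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")

def ratio_test_matches(query: List[int], train: List[int],
                       ratio: float = 0.75) -> List[Tuple[int, int]]:
    """Brute-force 2-nearest-neighbour matching with Lowe's ratio test.

    Returns (query_index, train_index) pairs whose best match is clearly
    better than the second-best candidate.
    """
    matches = []
    for qi, q in enumerate(query):
        # Rank every candidate descriptor by Hamming distance to the query.
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        if len(ranked) < 2:
            continue
        (d1, best), (d2, _) = ranked[0], ranked[1]
        if d1 < ratio * d2:  # reject ambiguous matches
            matches.append((qi, best))
    return matches

query = [0b10110000, 0b00001111]
train = [0b10110001, 0b11110000, 0b00001110]
print(ratio_test_matches(query, train))  # [(1, 2)]
```

Here the first query descriptor is equally close to two candidates and is rejected as ambiguous, while the second has a clear best match.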

In deep learning, ensemble and multi-loss strategies, which combine several models or training objectives, can further improve accuracy. For example:

- In the VRAI dataset, the authors compared several training objectives, namely triplet loss, contrastive loss, ID classification loss, and combined triplet + ID loss, and introduced a multi-task model with and without discriminative parts. According to the authors, these combined approaches outperformed state-of-the-art methods.
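The loss functions compared on VRAI can be written down compactly. The sketch below computes a hinge-style triplet loss and an ID classification (softmax cross-entropy) loss on toy embeddings; the margin of 0.3, the Euclidean distance, and the equal weighting in the combined loss are illustrative assumptions, not values taken from the VRAI paper.

```python
import math
from typing import Sequence

def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def triplet_loss(anchor, positive, negative, margin: float = 0.3) -> float:
    """Zero once the negative is at least `margin` farther from the anchor than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

def id_loss(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy over vehicle identities (numerically stable form)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

def triplet_plus_id(anchor, positive, negative, logits, label,
                    margin: float = 0.3, weight: float = 1.0) -> float:
    """Hypothetical combined objective: triplet loss plus weighted ID loss."""
    return triplet_loss(anchor, positive, negative, margin) + weight * id_loss(logits, label)

# Toy 2-D embeddings: the negative is already far enough away, so only the ID term remains.
print(triplet_loss([0, 0], [0, 1], [3, 4]))                               # 0.0
print(round(triplet_loss([0, 0], [0, 1], [0, 1.2]), 3))                   # 0.1
print(round(triplet_plus_id([0, 0], [0, 1], [3, 4], [0.0, 0.0], 0), 4))  # 0.6931
```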

4.3.2. Transfer Learning

Transfer learning adapts models pre-trained on large datasets to UAV datasets, allowing faster and more efficient training.

- Pre-trained YOLOv3-Tiny and MobileNetv2-SSDLite models, for example, are used for real-time object detection in the AU-AIR [9] dataset.

4.3.3. Event Recognition

UAV datasets have become popular benchmarks for event recognition, and models trained on them have performed well in this domain. Some examples are given below:

- The ERA dataset has been subjected to various methods for event recognition in aerial videos, including DenseNet-201 and Inception-v3. These methods have demonstrated notable accuracy in identifying dynamic events from UAV footage.

- BioDrone, a benchmark for robust vision captured by flapping-wing unmanned aerial vehicles [20], is used to evaluate single object tracking (SOT) models and to investigate new optimization approaches for the state-of-the-art KeepTrack method.

4.3.4. Multimodal Analysis

Combining data from multiple sensors enhances the analysis capabilities of UAV datasets. The multimodal approach of the UAV-Human dataset, which combines RGB, infrared, and depth data, makes a thorough analysis of human behavior possible.

4.3.5. Novel Algorithms

New algorithms are created to tackle particular problems in the analysis of data from unmanned aerial vehicles. Some examples are as follows:

- The UAV-Gesture [17] dataset employs advanced gesture recognition algorithms to enable UAV navigation and control based on human gestures.

- UAVDark135 [18] uses ADTrack, a tracker built on discriminative correlation filters that adapts to varying lighting conditions and includes anti-dark capabilities.

- To address the issue of fisheye video distortions, the authors of the UAV-Human [7] dataset suggest a fisheye-based action recognition method that uses flat RGB videos as guidance.

- To classify disaster events from UAV imagery, the authors of the AIDER [8] dataset created a lightweight CNN architecture named ERNet.

- VERI-Wild [14] introduces FDA-Net, a novel method for vehicle re-identification. It combines an embedding discriminator with a feature distance adversarial network to improve the model's ability to distinguish between different vehicles.

4.3.6. Managing Diverse Conditions

Varying environmental conditions, such as lighting, weather, and occlusion, pose challenges that dedicated methods are designed to address. For example, DarkTrack2021 uses SCT, a low-light enhancer-based method, to maintain tracking performance in low-light conditions.

The diversity of UAV datasets is a cornerstone of their utility, enabling a wide array of applications across different fields. From disaster management to human behavior analysis, the rich variety of data types, capture conditions, and application contexts ensures that UAV datasets can meet the specific needs of each task. The application of advanced methods, including deep learning, transfer learning, and multimodal analysis, further enhances the value derived from these datasets, pushing the boundaries of what UAVs can achieve in research and practical applications.

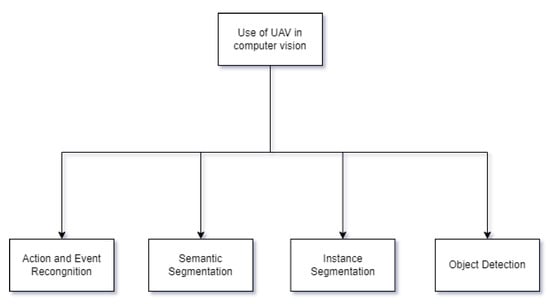

5. The Potential of Computer Vision Research in UAV Datasets

UAVs have greatly expanded the scope of computer vision research. UAV datasets offer unique and flexible data for a range of computer vision tasks. This section explores how these datasets are advancing research on tasks from action recognition to object detection, as illustrated in Figure 2, which highlights their diverse applications and the new methods developed around them. Table 5 summarizes the methods applied to UAV datasets that we identified during our survey.

Figure 2.

Diverse applications of UAV datasets in computer vision research.

Table 5.

Summary of methods employed on UAV datasets and their benefits.

5.1. Leveraging UAV Datasets for Computer Vision Applications

UAV datasets support a range of computer vision applications, including human behavior analysis, emergency response, nighttime tracking, and surveillance. The following subsections describe these areas, illustrating each with datasets covered in this review.

5.1.1. Human Behavior Understanding and Gesture Recognition

The UAV-Human dataset is essential for studying human behavior from UAVs, covering a range of conditions and viewpoints for pose estimation and action recognition. It contains multimodal information, including skeleton, RGB, infrared, and night-vision modalities. UAV-Gesture, which is essential for UAV control and gesture identification, contains 119 high-definition video clips covering 13 command and navigation gestures, annotated with body joints and gesture classes. Because it was captured outdoors and includes variations in phase, orientation, and body shape, the dataset is well suited to practical UAV control applications.

5.1.2. Emergency Response and Disaster Management

RescueNet provides detailed pixel-level annotations and high-resolution images for 10 classes, including buildings, roads, pools, and trees. It is designed for post-disaster damage assessment using UAV imagery. It supports semantic segmentation using state-of-the-art models, enhancing natural disaster response and recovery strategies. AIDER focuses on classifying disaster events, utilizing images of traffic accidents, building collapses, fires, and floods to support real-time disaster management applications by training convolutional neural networks.
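Pixel-level segmentation benchmarks of this kind are commonly scored with per-class intersection over union (IoU), averaged over classes (mIoU). The sketch below assumes ground truth and predictions have already been flattened to lists of integer class IDs, one per pixel; it is a generic illustration of the metric, not the evaluation code released with RescueNet.

```python
from typing import List, Sequence

def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     num_classes: int) -> List[List[int]]:
    """Rows index the ground-truth class, columns the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm

def mean_iou(cm: List[List[int]]) -> float:
    """Average IoU over classes present in the ground truth or the prediction."""
    ious = []
    for c in range(len(cm)):
        tp = cm[c][c]
        fp = sum(row[c] for row in cm) - tp  # predicted c, actually another class
        fn = sum(cm[c]) - tp                 # actually c, predicted another class
        if tp + fp + fn > 0:
            ious.append(tp / (tp + fp + fn))
    return sum(ious) / len(ious)

cm = confusion_matrix([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
print(cm)            # [[1, 1], [0, 2]]
print(mean_iou(cm))  # (1/2 + 2/3) / 2, about 0.583
```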

5.1.3. Traffic Surveillance and Vehicle Re-Identification

In traffic surveillance, AU-AIR prioritizes real-time performance and offers annotations for a variety of object categories, including cars, buses, and pedestrians. It bridges the gap between computer vision and robotics by offering multimodal sensor data for advanced research on data fusion. VRAI, described by its authors as the largest UAV-based vehicle re-identification dataset, contains 137,613 images of 13,022 vehicles annotated with unique IDs, color, vehicle type, attributes, and discriminative parts, and supports vehicle ReID across diverse scenarios. VERI-Wild, which contains over 400,000 images of 40,000 vehicles captured by 174 CCTV cameras in various urban settings, is essential for vehicle re-identification research. Its authors propose FDA-Net to improve ReID accuracy under variations in background, illumination, occlusion, and viewpoint.
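Re-identification results on datasets like VRAI and VERI-Wild are usually reported as rank-k (CMC) accuracy: a query counts as correct if an image of the same vehicle appears among its k nearest gallery images. The sketch below assumes a precomputed query-by-gallery distance matrix; many ReID protocols also filter out gallery images from the same camera as the query and report mAP, both of which are omitted here for brevity.

```python
from typing import Sequence

def rank_k_accuracy(dist: Sequence[Sequence[float]], query_ids: Sequence[str],
                    gallery_ids: Sequence[str], k: int = 1) -> float:
    """Fraction of queries whose k closest gallery images include the true identity.

    dist[q][g] is the feature distance between query q and gallery image g.
    """
    hits = 0
    for q, row in enumerate(dist):
        # Rank gallery images from most to least similar (smallest distance first).
        ranked = sorted(range(len(row)), key=lambda g: row[g])
        if any(gallery_ids[g] == query_ids[q] for g in ranked[:k]):
            hits += 1
    return hits / len(dist)

dist = [[0.2, 0.9, 0.5],
        [0.4, 0.3, 0.1]]
print(rank_k_accuracy(dist, ["a", "b"], ["a", "b", "c"], k=1))  # 0.5
print(rank_k_accuracy(dist, ["a", "b"], ["a", "b", "c"], k=2))  # 1.0
```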

5.1.4. Event Recognition and Video Understanding

For training event recognition models on UAV videos, ERA contains 2,864 labeled video snippets, gathered from YouTube, covering 24 event classes and one normal class. This dataset captures dynamic events in various conditions, supporting temporal event localization and video retrieval tasks.

5.1.5. Nighttime Tracking and Low-Light Conditions

With 110 annotated sequences and over 100,000 frames, DarkTrack2021 is crucial for improving UAV tracking at night. By employing the Spatial-Channel Transformer (SCT) and a non-linear curve projection model, its accompanying method improves the quality of low-light images, and the benchmark offers a thorough evaluation framework. The UAVDark135 dataset and the ADTrack algorithm are designed for all-day aerial tracking. Built on discriminative correlation filters, ADTrack adapts to varying lighting conditions and performs well in low light. UAVDark135 includes more than 125,000 frames annotated specifically for low-light tracking scenarios.
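Curve-projection enhancers brighten dark images by repeatedly applying a simple monotonic intensity curve. The sketch below uses the quadratic curve popularized by Zero-DCE, x + a * x * (1 - x), purely as a stand-in; the exact projection used with SCT may differ, and here the curve parameters are fixed constants chosen for illustration rather than values learned from the image.

```python
from typing import List, Sequence

def curve_enhance(pixels: Sequence[float], alphas: Sequence[float]) -> List[float]:
    """Brighten normalized intensities by iterating x <- x + a * x * (1 - x).

    Pixels are assumed to lie in [0, 1]. For each a in [-1, 1] the curve keeps
    0 and 1 fixed and is monotonic, so the ordering of pixel intensities is
    preserved; a > 0 brightens mid-tones.
    """
    out = list(pixels)
    for a in alphas:  # one pass of the curve per iteration
        out = [x + a * x * (1.0 - x) for x in out]
    return out

dark = [0.0, 0.1, 0.2, 1.0]
print([round(x, 4) for x in curve_enhance(dark, [1.0, 1.0])])  # [0.0, 0.3439, 0.5904, 1.0]
```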

5.1.6. Object Tracking and Robust Vision

With 600 videos and 304,209 manually labeled frames, BioDrone is a benchmark for single object tracking with bionic drones. It captures challenges such as camera shake and drastic appearance changes, supporting robust vision analyses and evaluations of various single object tracking algorithms.

5.1.7. Urban Scene Segmentation and Forestry Analysis

UAVid provides annotations for eight classes and 30 high-resolution video sequences in 4K resolution to address segmentation challenges in urban scenes. It uses models such as Multi-Scale-Dilation net to support tasks like population density analysis and traffic monitoring. FOR-instance provides UAV-based laser scanning data for tree instance segmentation and is intended for use in point cloud segmentation in forestry. It facilitates benchmarking and method development by supporting both instance and semantic segmentation.

5.1.8. Multimodal Data Synthesis and UAV Control

UAV-Assistant facilitates monocular pose estimation by introducing a multimodal dataset featuring exocentric and egocentric views. It enhances 3D pose estimation tasks with a novel smooth silhouette loss function and differentiable rendering techniques. KITE incorporates spoken commands, audio, and images to enhance UAV control systems. It includes commands recorded by 16 speakers, supporting movement, camera-related, and scenario-specific commands with multi-modal approaches.

Together, these datasets improve a wide range of computer vision applications, including robust vision in difficult conditions, real-time traffic surveillance, emergency response, and human behavior analysis.

5.2. Development of Novel Methods Using UAV Datasets

UAV datasets have spurred the development of innovative methods in computer vision. As an example, the Guided Transformer I3D framework, which addresses distortions through unbounded transformations guided by flat RGB videos, was developed using the UAV-Human dataset. This framework enhances action recognition performance in fisheye videos. This approach is a prime example of how UAV datasets drive the creation of specialized algorithms to address particular difficulties brought about by aerial viewpoints.

The DarkTrack2021 benchmark introduces a Spatial-Channel Transformer (SCT) for enhancing low-light images in nighttime UAV tracking. Meanwhile, Bowen Li et al. present the UAVDark135 dataset and the ADTrack algorithm for all-day aerial object tracking. ADTrack, equipped with adaptive illumination and anti-dark capabilities, outperforms other trackers in both well-lit and dark conditions. It processes over 30 frames per second on a single CPU, ensuring efficient tracking under various lighting conditions. The study emphasizes how crucial image illuminance data are and suggests a useful image enhancer to improve tracking performance in all-day situations.

For emergency response applications, the AIDER dataset has facilitated the development of ERNet, a lightweight CNN architecture optimized for embedded platforms. ERNet’s architecture, which incorporates downsampling at an early stage and efficient convolutional layers, allows for real-time classification of aerial images on low-power devices. This showcases the practical use of UAV datasets in disaster management.
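The benefit of downsampling early can be checked with simple arithmetic: the multiply-accumulate (MAC) count of a convolution scales with its output resolution, so a stride-2 layer at the start of a network cuts that layer's cost by roughly a factor of four, and every later layer then runs on the smaller feature map. The layer sizes below are hypothetical and are not ERNet's actual configuration.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a 2-D convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

def conv_macs(h: int, w: int, c_in: int, c_out: int,
              kernel: int, stride: int, padding: int) -> int:
    """Multiply-accumulate operations of one standard (dense) convolution layer."""
    h_out = conv_output_size(h, kernel, stride, padding)
    w_out = conv_output_size(w, kernel, stride, padding)
    return h_out * w_out * c_out * c_in * kernel * kernel

# Hypothetical first layer: 240x240 RGB input, 16 filters of size 3x3, padding 1.
full = conv_macs(240, 240, 3, 16, kernel=3, stride=1, padding=1)
early = conv_macs(240, 240, 3, 16, kernel=3, stride=2, padding=1)
print(full, early, full / early)  # 24883200 6220800 4.0
```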

The VERI-Wild dataset introduces FDA-Net, a novel approach to vehicle re-identification that uses an adversarial network to generate hard negative examples in the feature space. The VRAI authors, in turn, developed a specialized vehicle ReID algorithm that leverages detailed annotations to explicitly detect discriminative parts for each vehicle instance.

Ultimately, UAV datasets are essential in the field of computer vision research, providing distinct data that are invaluable for a diverse array of applications. They allow for the development of novel methods tailored to the specific challenges and opportunities presented by UAV technology, accelerating progress in areas such as human behavior analysis, emergency response, and nighttime tracking.

6. Limitations of UAVs

While Unmanned Aerial Vehicles have significantly advanced data collection and analysis in numerous fields, they are not without limitations, particularly concerning the datasets they generate. This section delves into the primary constraints associated with UAV datasets, emphasizing their impact on the field and suggesting areas for improvement.

6.1. Data Quality and Consistency

One of the most pressing limitations of UAV datasets is inconsistent data quality. Weather, time of day, and UAV stability are just a few of the variables that affect the quality of UAV-collected data. For example, data collected in poor weather or at night may suffer from reduced visibility and increased noise, complicating subsequent analysis and model training. Even with advances such as low-light image enhancers and specialized nighttime tracking algorithms, these solutions still require further refinement to match the reliability of daytime data.

6.2. Limited Scope and Diversity

UAV datasets often lack diversity in geographic location, environmental conditions, and the variety of captured objects. Many existing datasets, such as AU-AIR and ERA, focus heavily on specific scenarios like urban traffic surveillance or disaster response, which limits their generalizability to other contexts. Additionally, datasets such as UAV-Human and UAVDark135 tend to feature limited subject diversity and controlled environments, which may not accurately represent real-world conditions. This lack of diversity can lead to models that perform well in specific conditions but struggle in untested environments.

6.3. Annotation Challenges

The process of annotating UAV datasets is often time-consuming and labor-intensive. High-resolution images and videos captured by UAVs require detailed, pixel-level annotations, which are essential for tasks like semantic segmentation and object detection. This is clearly seen in datasets such as RescueNet and FOR-Instance, where the annotation process is recognized as a major bottleneck. The intensive labor required for comprehensive annotation limits the availability of large, well-labeled datasets, which are crucial for training robust machine learning models.

6.4. Computational and Storage Demands

The high resolution and large volume of data generated by UAVs pose significant computational and storage challenges. Processing and analyzing large-scale UAV datasets demands substantial computational resources and advanced hardware, which not all researchers can readily access. For example, the dense, high-resolution images in datasets like UAVid and BioDrone require extensive processing power for effective use. Additionally, storing such vast amounts of data can be impractical for some institutions, hindering widespread access and collaboration.

6.5. Integration with Other Data Sources

Another limitation is the integration of UAV datasets with other data sources. While multimodal datasets that combine UAV data with other sensor inputs (such as satellite imagery, GPS data, and environmental sensors) provide richer insights, they also introduce complexity in data alignment and fusion. The AU-AIR dataset, which includes visual data along with GPS coordinates and IMU data, exemplifies the potential and challenges of such integration. Ensuring the synchronized and accurate fusion of data from multiple sources remains a technical hurdle that needs addressing.
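A basic part of such fusion is temporal alignment: sensor streams such as GPS or IMU are usually sampled at different rates from the video, so their values must be resampled at each frame's timestamp. The sketch below does this with linear interpolation; the 1 Hz altitude stream and the clamping of out-of-range timestamps are illustrative assumptions, not properties of AU-AIR.

```python
from bisect import bisect_left
from typing import List, Sequence

def interpolate_at(sample_times: Sequence[float], values: Sequence[float],
                   query_times: Sequence[float]) -> List[float]:
    """Linearly interpolate a sensor stream (e.g. GPS altitude) at video-frame timestamps.

    sample_times must be sorted in ascending order. Queries before the first or
    after the last sample are clamped to the nearest available value.
    """
    out = []
    for t in query_times:
        i = bisect_left(sample_times, t)  # first sample with time >= t
        if i == 0:
            out.append(values[0])
        elif i == len(sample_times):
            out.append(values[-1])
        else:
            t0, t1 = sample_times[i - 1], sample_times[i]
            w = (t - t0) / (t1 - t0)
            out.append(values[i - 1] + w * (values[i] - values[i - 1]))
    return out

# Hypothetical 1 Hz altitude samples aligned to three frame timestamps.
print(interpolate_at([0.0, 1.0, 2.0], [10.0, 12.0, 11.0], [0.5, 1.5, 3.0]))
# [11.0, 11.5, 11.0]
```

Linear interpolation is adequate for slowly varying quantities such as altitude; rapidly changing IMU signals may call for higher-rate sampling or more careful filtering.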

6.6. Real-Time Data Processing

The ability to process and analyze UAV data in real time is critical for applications like disaster response and surveillance. However, achieving real-time processing with high accuracy is challenging due to the computational demands described above. Models such as those evaluated on the DarkTrack2021 and UAVDark135 datasets show promise but often require optimization to balance speed and accuracy effectively. Real-time processing also requires robust algorithms that can handle dynamic environments and changing conditions without significant delays.

6.7. Ethical and Legal Considerations

Finally, the use of UAVs and their datasets is subject to various ethical and legal considerations. Issues such as privacy, data security, and regulatory compliance must be addressed to ensure responsible and lawful use of UAV technology. These considerations can limit the scope of data collection and usage, particularly in populated areas or sensitive environments, thereby constraining the availability and applicability of UAV datasets.

Despite the transformative potential of UAV datasets across various disciplines, their limitations must be acknowledged and addressed to maximize their utility. Improving data quality, enhancing dataset diversity, streamlining annotation processes, and overcoming computational and storage challenges are essential steps. Additionally, integrating UAV data with other sources, advancing real-time processing capabilities, and adhering to ethical and legal standards will ensure that UAV datasets can be effectively leveraged for future research and applications. By tackling these limitations, the field can fully harness the power of UAV technology to drive innovation and deepen our understanding of complex, dynamic environments from an aerial perspective.

7. Prospects for Future UAV Research

As the field grows, future studies on UAV datasets should focus on several key areas to improve their usefulness and cross-domain applicability. The following suggestions outline directions for creating UAV datasets and maximizing their potential for future innovation.

7.1. Enhancing Dataset Diversity and Representativeness

Further investigations ought to concentrate on generating more varied and representative UAV datasets. This involves capturing data in a wider range of environments, weather conditions, and geographic locations to ensure models trained on these datasets are robust and generalizable. To obtain comprehensive data for tasks like environmental monitoring, urban planning, and disaster response, datasets can be expanded to include a variety of urban, rural, and natural settings.

7.2. Incorporating Multimodal Data Integration

Integrating multiple data modalities, such as thermal, infrared, LiDAR [66], and hyperspectral [50] imagery, can significantly enrich UAV datasets. In the future, these data types should be combined to create multimodal datasets that provide a more comprehensive view of the scenes that were recorded. This integration can improve the accuracy of applications such as vegetation analysis, search and rescue operations, and wildlife monitoring.

7.3. Advancing Real-Time Data Processing and Transmission

For applications like emergency response and traffic monitoring that demand quick analysis and decision-making, developing techniques for real-time data processing and transmission is essential. Future research should focus on optimizing data compression, transmission protocols, and edge computing techniques to enable swift and efficient data handling directly on UAVs.

7.4. Improving Annotation Quality and Efficiency

High-quality annotations are vital for the effectiveness of UAV datasets in training machine learning models. Future studies should investigate automated and semi-automated annotation tools that leverage AI to reduce manual labor and improve annotation accuracy. Additionally, crowdsourcing and collaborative platforms can be utilized to gather diverse annotations, further enhancing dataset quality.

7.5. Addressing Ethical and Privacy Concerns

As UAVs become more prevalent, addressing ethical and privacy issues becomes increasingly important. Guidelines and frameworks for the ethical use of UAV data should be established by future research, especially for applications involving surveillance and monitoring. It is important to focus on creating methods that protect privacy and collect data in a way that respects regulations and earns the trust of the public.

7.6. Expanding Application-Specific Datasets

Customized datasets for specific uses can accelerate innovation in targeted areas. For instance, datasets focused on agricultural monitoring, wildlife tracking, or infrastructure inspection can provide domain-specific insights and improve the precision of related models. To address the specific needs of various industries, future research should prioritize developing such targeted datasets.

7.7. Enhancing Interoperability and Standardization

Standardizing data formats and annotation protocols across UAV datasets can make the datasets easier for researchers and developers to use and more interoperable. Future efforts should aim to establish common standards and benchmarks, enabling the seamless integration of datasets from various sources and promoting collaborative research.

7.8. Utilizing Advanced Machine Learning Techniques

The application of cutting-edge machine learning techniques, such as deep learning and reinforcement learning, to UAV datasets holds immense potential for advancing UAV capabilities. Future research should explore innovative algorithms and models that can leverage the rich data provided by UAVs to achieve breakthroughs in areas like autonomous navigation, object detection, and environmental monitoring.

7.9. Conducting Longitudinal Studies

Longitudinal studies that collect UAV data over long periods of time can provide useful information about how things change over time in different settings. Future research should emphasize continuous data collection efforts to monitor changes in ecosystems, urban developments, and disaster-prone areas, enabling more informed and proactive decision making.

7.10. Fostering Collaborative Research and Open Data Initiatives

Encouraging collaboration among researchers, institutions, and industries can accelerate advancements in UAV datasets. Open data initiatives that make UAV datasets public should be supported by future research. These initiatives will encourage innovation and allow a wider range of researchers to contribute to and use these resources.

By addressing these future research directions, the field of UAV datasets can continue to evolve, offering increasingly sophisticated tools and insights that drive progress across multiple domains. Continually improving and expanding UAV datasets is essential for realizing the full potential of UAV technology and enabling new discoveries and applications.

8. Results and Discussion of Reviewed Papers

The datasets discussed in this section are those used in the papers reviewed in this survey. Our analysis revealed that KITE, RescueNet, and BioDrone are relatively new and have not yet been thoroughly investigated in the literature. ERA, although not very recent, has also received too little study to fully demonstrate its potential. Datasets were selected for this review based on the number of citations their associated papers have received, with priority given to those with higher citation counts. We examined several papers that make compelling use of these datasets, carefully reviewing the experiments and results reported by researchers who used them as benchmarks for various methods. The best results for the methods applied to the reviewed datasets are presented in this section and in Table 6, Table 7 and Table 8.

Table 6.

Performance metrics and results for different datasets and methods.

Table 7.

Performance metrics and results for different datasets and methods.

Table 8.

Performance metrics and results for different datasets and methods.

8.1. AU-AIR

Jiahui et al. [69] used AU-AIR as a benchmark dataset to develop RSSD-TA-LATM-GID, a real-time object detection model designed specifically for small-scale objects. Their model outperformed YOLOv4 [111] and YOLOv3 [33]. Among the approaches compared, MobileNetv2-SSDLite yielded the lowest mean average precision (mAP).

Walambe et al. [71] used baseline models on the AU-AIR dataset as one of their evaluation benchmarks. The study aimed to demonstrate the feasibility of individual and ensemble techniques for detecting objects at varying scales. The baseline technique performed best, with an mAP of 6.63%, achieved by applying color augmentation to the dataset. The ensemble methods YOLO+RetinaNet and RetinaNet+SSD reached 3.69% and 4.03%, respectively. Saeed et al. [70] modified the CenterNet architecture by replacing its backbone with other convolutional neural networks (CNNs), such as ResNet-18, Hourglass-104, ResNet-101, and Res2Net-101. The findings are presented in Table 6.
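One common way to combine the outputs of two detectors, as in ensembles such as YOLO+RetinaNet, is to pool their boxes and apply greedy non-maximum suppression (NMS). The sketch below illustrates that idea under the assumption that boxes are (x1, y1, x2, y2) tuples with confidence scores and that an IoU threshold of 0.5 defines duplicates; Walambe et al. may have used a different fusion rule.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float]            # (box, confidence score)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def ensemble_nms(*detector_outputs: List[Detection],
                 iou_threshold: float = 0.5) -> List[Detection]:
    """Pool detections from several detectors and keep the highest-scoring box
    in each group of overlapping boxes (greedy non-maximum suppression)."""
    pooled = [d for output in detector_outputs for d in output]
    kept: List[Detection] = []
    for box, score in sorted(pooled, key=lambda d: d[1], reverse=True):
        if all(iou(box, k) < iou_threshold for k, _ in kept):
            kept.append((box, score))
    return kept

yolo = [((0, 0, 10, 10), 0.9)]
retinanet = [((1, 1, 11, 11), 0.8), ((20, 20, 30, 30), 0.7)]
print(ensemble_nms(yolo, retinanet))
# [((0, 0, 10, 10), 0.9), ((20, 20, 30, 30), 0.7)]
```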

In their paper [72], Gupta and Verma used the AU-AIR dataset as a benchmark, applying a range of advanced models for precise, automated detection and classification of road traffic. YOLOv4 achieved the highest mean average precision (mAP) on AU-AIR, at 25.94%, followed by Faster R-CNN (13.77%) and YOLOv3 (13.33%).
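Because these comparisons hinge on mAP, the sketch below shows how average precision (AP) can be computed for a single class from ranked detections using all-point interpolation; mAP is then the mean of AP over classes. It assumes each detection has already been marked as a true or false positive (for example, by matching it to an unused ground-truth box at an IoU of at least 0.5) and that the class has at least one ground-truth box. Exact protocols, such as the IoU threshold or interpolation scheme, vary between papers.

```python
from typing import Sequence

def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class (num_gt must be positive)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # sweep detections from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Interpolate: precision at each rank is the best precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p  # area under the precision-recall curve
        prev_recall = r
    return ap

print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))  # about 0.8333
```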

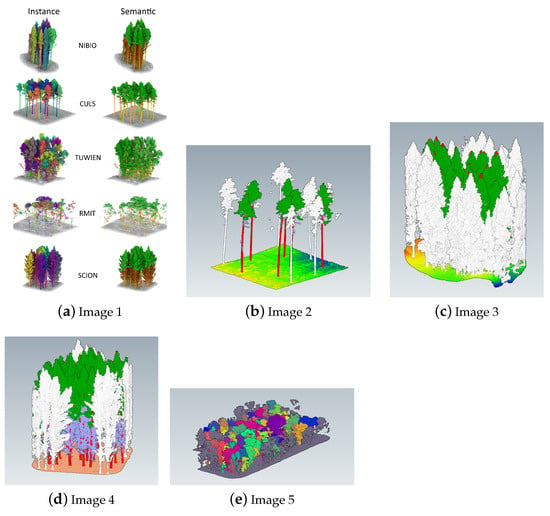

8.2. FOR-Instance

Bountos et al. [73] made extensive use of the FOR-Instance dataset when introducing their approach, FoMo-Net. They used the dataset's LiDAR point clouds to better understand tree geometry, applying existing baselines such as PointNet, PointNet++, and Point Transformer to the aerial modality. The corresponding findings are presented in Table 7. In a separate paper, Zhang et al. [74] used FOR-Instance to train their proposed HFC algorithm and compare it with established approaches. They evaluated several techniques and combinations (Xing2023, HFC+Xing2023, HFC+Mean Shift, and HFC) on the forest types included in FOR-Instance (CULS, NIBIO, NIBIO2, SCION, RMIT, and TUWIEN). Among all the methods, HFC performed best; its optimal results on each forest type are presented in Table 7.

8.3. UAV-Assistant

Albanis et al. [75] used the UAV-Assistant dataset as their benchmark, comparing 6DoF object pose estimation with BPnP [76] and HigherHRNet [25] across several criteria. Their analysis showed that the choice of loss function plays a crucial role in pose estimation: the l_p loss outperformed the l_h loss, particularly for the M2ED drone, yielding improved accuracy. HigherHRNet outperformed HRNet [77] on smaller objects such as the Tello drone but not on the M2ED drone, suggesting an advantage for smaller objects. Qualitative heatmaps further showed that the l_p loss located keypoints more accurately than the Gaussian-distributed l_h loss. Table 7 lists the accuracy metrics (ACC2 and ACC5) reported by Albanis et al. For BPnP, we include the accuracy for both the M2ED and Tello drones, as both achieved the highest accuracy; for HRNet and HigherHRNet, we report the best accuracy, which was obtained on M2ED.

8.4. AIDER

The AIDER dataset was used as a benchmark both by Alrayes et al. and by the authors of AIDER in developing their method, EmergencyNet. In [78], various pre-trained models were applied to AIDER, with the best F1 scores achieved by VGG16 (96.4%) and ResNet50 (96.1%). However, their memory consumption was high, at 59.39 MB and 96.4 MB, respectively, whereas EmergencyNet achieved an F1 score of 95.7% using only 0.368 MB of RAM. ResNet50 has nearly 24 million parameters, while VGG16 has 14.8 million. Alrayes et al. benchmarked their AISCC-DE2MS model on AIDER and found that it outperformed the genetic, cat swarm, and artificial bee colony algorithms. The model was evaluated using mean squared error (MSE) and peak signal-to-noise ratio (PSNR) on five images, and the best result among them is shown in Table 6.
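For reference, PSNR is derived directly from MSE as PSNR = 10 log10(MAX^2 / MSE), where MAX is the largest possible pixel value. The sketch below assumes 8-bit images (MAX = 255) flattened to lists of intensities; it illustrates the standard definitions, not the exact evaluation script of Alrayes et al.

```python
import math
from typing import Sequence

def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equally sized images flattened to lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def psnr(a: Sequence[float], b: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in decibels; higher means closer to the reference."""
    err = mse(a, b)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / err)

reference = [0, 0, 0, 0]
restored = [0, 0, 0, 2]
print(mse(reference, restored))             # 1.0
print(round(psnr(reference, restored), 2))  # 48.13
```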

8.5. DarkTrack2021

Changhong Fu and colleagues used the DarkTrack2021 benchmark as a foundation for SAM-DA, a framework powered by the Segment Anything Model (SAM). Their research [80] focused on addressing illumination variation and low ambient intensity. Compared with the baseline tracker UDAT [112], their approach improved performance by 7.1% under illumination variation and 7.8% under low ambient intensity. Among the 15 state-of-the-art trackers the authors evaluated, SAM-DA produced the most promising results. In another study [81], Fu et al. examined Siamese object tracking, highlighting the importance of UAVs in visual object tracking, and again used DarkTrack2021 as a benchmark for performance in low-illumination conditions; the applied models and detailed results are presented in Table 6.

8.6. UAV-Human

Azmat et al. [82] addressed the challenges of human action recognition (HAR) from UAV-captured data using the UAV-Human dataset. They evaluated their HAR system on 67,428 video sequences of 119 subjects captured in varied contexts. The approach achieved a mean accuracy of 48.60% across eight action classes, indicating that complex backgrounds, occlusions, and camera motion hinder human action recognition on this dataset. Lin et al. [83] studied text bag filtering techniques for model training, emphasizing data quality. Their ablation study indicated that the text bag filtering ratio influences CLIP matching accuracy and zero-shot transfer performance, and that filtering the training data improved model generalization, especially in unsupervised learning. Huang et al. [86] evaluated the 4s-MS&TA-HGCN-FC skeleton-based action recognition model on UAV-Human, achieving 45.72% accuracy on the CSv1 benchmark and 71.84% on CSv2, surpassing previous state-of-the-art techniques. They found that their technique can cope with viewpoint variation, motion blur, and resolution changes in UAV-captured data.
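Skeleton-based recognizers such as the graph convolutional models evaluated on UAV-Human treat body joints as graph nodes and bones as edges. As a rough illustration of that building block only, the sketch below implements one standard graph convolution layer with symmetric normalization, H' = ReLU(D^(-1/2) (A + I) D^(-1/2) H W), on a hypothetical three-joint chain with made-up features and weights. It is not the 4s-MS&TA-HGCN-FC architecture of Huang et al.

```python
import math


def normalized_adjacency(num_joints, edges):
    """Return D^(-1/2) (A + I) D^(-1/2) for an undirected skeleton graph."""
    a = [[1.0 if i == j else 0.0 for j in range(num_joints)] for i in range(num_joints)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0  # bones connect joints in both directions
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_joints)] for i in range(num_joints)]


def matmul(x, y):
    """Plain matrix product of two lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))] for i in range(len(x))]


def gcn_layer(features, a_hat, weight):
    """Aggregate neighbouring joint features, project them with `weight`, then apply ReLU."""
    h = matmul(matmul(a_hat, features), weight)
    return [[max(0.0, v) for v in row] for row in h]


# Hypothetical chain skeleton: joint 0 - joint 1 - joint 2, with 2-D features per joint.
a_hat = normalized_adjacency(3, [(0, 1), (1, 2)])
out = gcn_layer([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], a_hat, [[1.0], [-1.0]])
print(out)
```

Stacking such layers, typically interleaved with temporal convolutions over frames, lets each joint's representation incorporate information from progressively larger body neighbourhoods.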

8.7. UAVDark135

Zhu et al. [88] and Ye et al. [90] used the UAVDark135 dataset to evaluate strategies for improving low-light tracking performance. The Darkness Clue-Prompted Tracking (DCPT) approach of Zhu et al. achieved a success rate of 57.51% on UAVDark135, a 1.95% improvement over the base tracker, which demonstrates the value of incorporating darkness clues. In addition, DCPT's gated feature aggregation increased the success score by 2.67%, making it a reliable nighttime UAV tracking system. The DarkLighter (DL) approach of Ye et al. also improved tracking performance on UAVDark135: DL raised the AUC of the SiamAPN [91,113] tracker by over 29% and its precision by 21%. It also worked well across tracking backbones, improving precision and success rates under illumination variation, fast motion, and low resolution. DL outperformed established low-light enhancers such as LIME by 1.68% in success rate and 1.45% in precision.
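Alongside the success score, nighttime tracking benchmarks typically report precision: the fraction of frames whose predicted box centre lies within a fixed distance of the ground-truth centre. The sketch below assumes (x, y, w, h) boxes and the conventional 20-pixel threshold; it is a generic illustration, not the evaluation code used by Zhu et al. or Ye et al.

```python
import math


def center(box):
    """Centre (cx, cy) of an (x, y, w, h) box."""
    x, y, w, h = box
    return x + w / 2.0, y + h / 2.0


def precision_at(pred_boxes, gt_boxes, threshold=20.0):
    """Fraction of frames whose centre location error is at most `threshold` pixels."""
    hits = 0
    for p, g in zip(pred_boxes, gt_boxes):
        (px, py), (gx, gy) = center(p), center(g)
        if math.hypot(px - gx, py - gy) <= threshold:
            hits += 1
    return hits / len(gt_boxes)


gt = [(0, 0, 10, 10)] * 4
pred = [(0, 0, 10, 10), (15, 0, 10, 10), (30, 0, 10, 10), (0, 25, 10, 10)]
print(precision_at(pred, gt))  # 0.5: centre errors are 0, 15, 30 and 25 pixels
```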

8.8. VRAI

VRAI has been used to establish vehicle re-identification (ReID) baselines. Syeda Nyma Ferdous, Xin Li, and Siwei Lyu [92] tested their uncertainty-aware multitask learning framework on this dataset, achieving 84.47% Rank-1 accuracy and 82.86% mean average precision (mAP). Multiscale feature representation and a Pyramid Vision Transformer (PVT) architecture greatly improved the model's ability to handle variations in object size and position in aerial images. Shuoyi Chen, Mang Ye, and Bo Du [93] also addressed vehicle ReID on VRAI. Their rotation-invariant vision transformer, RotTrans, surpassed previous state-of-the-art approaches by 3.5% in Rank-1 accuracy and 6.2% in mAP, addressing challenges of UAV-based vehicle ReID that typical pedestrian ReID methods struggle with. Evaluation was further complicated by the need to present results in a specific format.
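The mAP figures reported for ReID summarize, for each query, how highly all correct gallery matches are ranked. The minimal sketch below computes mAP from a query-to-gallery distance matrix with hypothetical identity labels. It deliberately omits protocol details such as excluding same-camera gallery images, which official ReID evaluation scripts usually apply.

```python
def average_precision(ranked_gallery_ids, query_id):
    """AP for one query, given gallery identity labels sorted by ascending distance."""
    hits, precision_sum = 0, 0.0
    for rank, gallery_id in enumerate(ranked_gallery_ids, start=1):
        if gallery_id == query_id:
            hits += 1
            precision_sum += hits / rank  # precision at the rank of each correct match
    return precision_sum / hits if hits else 0.0


def mean_average_precision(distances, query_ids, gallery_ids):
    """mAP over all queries; distances[i][j] is the distance from query i to gallery item j."""
    aps = []
    for row, qid in zip(distances, query_ids):
        order = sorted(range(len(gallery_ids)), key=lambda j: row[j])
        aps.append(average_precision([gallery_ids[j] for j in order], qid))
    return sum(aps) / len(aps)


print(average_precision(["a", "b", "a"], "a"))  # (1/1 + 2/3) / 2
```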

8.9. UAV-Gesture

Usman Azmat et al. [94] and Papaioannidis et al. [95] used the UAV-Gesture dataset to evaluate their approaches to human action and gesture recognition. UAV-Gesture comprises 119 high-definition RGB videos covering 13 distinct gestures used to control UAVs; its diversity of viewpoints and the similarity between movements make it well suited to testing recognition systems. The method of Azmat et al. achieved an action recognition accuracy of 0.95 on UAV-Gesture, with mean precision, recall, and F1-score of 0.96, 0.95, and 0.94, respectively, and confusion-matrix analyses confirmed the system's ability to distinguish between gestures. Papaioannidis et al. found that their gesture recognition method outperformed DD-Net [96] and P-CNN [45] by 3.5% in accuracy. The authors emphasized the value of 2D skeletal data extracted from videos for boosting recognition accuracy, and the real-time performance of their method makes it suitable for embedded AI hardware in dynamic UAV scenarios.
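Mean precision, recall, and F1-score of the kind reported above are usually macro-averaged from a confusion matrix. The sketch below shows one such computation on a hypothetical two-class matrix; it assumes macro averaging, which the cited works may or may not use. Because the macro F1-score averages per-class F1 values, it need not equal the harmonic mean of the averaged precision and recall.

```python
def macro_scores(confusion):
    """Macro-averaged precision, recall and F1 from a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    precisions, recalls, f1s = [], [], []
    for c in range(n):
        tp = confusion[c][c]
        predicted = sum(confusion[r][c] for r in range(n))  # column sum
        actual = sum(confusion[c])  # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


# Hypothetical confusion matrix for two gesture classes.
print(macro_scores([[8, 2], [1, 9]]))
```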

8.10. UAVid

The UAVid dataset has been widely used as a benchmark for developing semantic segmentation methods for urban environments. Wang et al. [97] introduced the Bilateral Awareness Network (BANet) and applied it to UAVid, achieving a mean Intersection-over-Union (mIoU) of 64.6%. Both quantitative metrics and qualitative analysis demonstrated BANet's ability to accurately segment the various classes in high-resolution urban scenes, and it outperformed other state-of-the-art models such as the MSD baseline.
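The mIoU metric used throughout the UAVid results averages, over classes, the ratio of correctly labelled pixels to the union of predicted and ground-truth pixels for that class. The minimal sketch below computes it from a hypothetical pixel-level confusion matrix; skipping classes absent from both prediction and ground truth is an assumption, since benchmark scripts differ on this point.

```python
def mean_iou(confusion):
    """Mean Intersection-over-Union from a pixel-level confusion matrix.

    confusion[i][j] counts pixels of true class i predicted as class j;
    classes absent from both ground truth and prediction are skipped.
    """
    n = len(confusion)
    ious = []
    for c in range(n):
        tp = confusion[c][c]
        fp = sum(confusion[r][c] for r in range(n)) - tp  # predicted as c but not c
        fn = sum(confusion[c]) - tp  # truly c but predicted otherwise
        denom = tp + fp + fn
        if denom:
            ious.append(tp / denom)
    return sum(ious) / len(ious)


print(mean_iou([[50, 10], [5, 35]]))  # mean of 50/65 and 35/50
```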

Similarly, Rui Li et al. [98] proposed the Attention Aggregation Feature Pyramid Network (A²-FPN) and reported significant improvements on UAVid. A²-FPN achieved the highest per-class IoU in five of the eight classes and surpassed BANet by 1% in overall performance. Its effectiveness was particularly evident in correctly identifying moving vehicles, a challenging task for many segmentation models.

Libo Wang et al. [99] introduced UNetFormer, which further advanced semantic segmentation on UAVid. With an mIoU of 67.8%, UNetFormer outperformed several advanced networks, including ABCNet [114] and hybrid Transformer-based models such as BANet and BoTNet [115]. It handled complex segmentation tasks well, particularly the accurate identification of small objects such as humans.

Lastly, Michael Ying Yang et al. [100] applied the Context Aggregation Network (CAN) to UAVid, achieving an mIoU of 63.5% while maintaining a processing speed of 15 frames per second (FPS). The model was noted for maintaining consistency in both local and global scene semantics, making it a competitive choice for real-time applications in urban environments.

8.11. VERI-Wild

The VERI-Wild dataset has been widely used as a benchmark for developing vehicle re-identification (ReID) methods for real-world scenarios. Meng et al. [102] introduced the Parsing-based View-aware Embedding Network (PVEN) and applied it to VERI-Wild, achieving mean average precision (mAP) gains of 47.4, 47.2, and 46.9 percentage points on the small, medium, and large test sets, respectively. View-aware feature alignment allowed PVEN to consistently outperform state-of-the-art models, particularly on cumulative match characteristic (CMC) metrics, where it improved on FDA-Net by 32.7 percentage points at rank 1.
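The CMC curve referred to above records, for each rank k, the fraction of queries whose first correct gallery match appears within the top k results; its value at k = 1 is the Rank-1 accuracy. The sketch below computes it from a hypothetical query-to-gallery distance matrix and, as a simplifying assumption, ignores the camera-based filtering that official VERI-Wild evaluation applies.

```python
def cmc(distances, query_ids, gallery_ids, max_rank=5):
    """Fraction of queries whose first correct match appears within rank k, for k = 1..max_rank."""
    counts = [0] * max_rank
    for row, qid in zip(distances, query_ids):
        order = sorted(range(len(gallery_ids)), key=lambda j: row[j])
        for rank, j in enumerate(order[:max_rank]):
            if gallery_ids[j] == qid:
                # A match at this rank also counts for every larger rank.
                for k in range(rank, max_rank):
                    counts[k] += 1
                break
    return [c / len(query_ids) for c in counts]


dist = [[0.1, 0.5, 0.3], [0.2, 0.9, 0.4]]
curve = cmc(dist, ["a", "b"], ["a", "b", "c"], max_rank=3)
print(curve)  # [0.5, 0.5, 1.0]; curve[0] is the Rank-1 accuracy
```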

Similarly, Lingxiao He et al. [103] evaluated the FastReID toolbox on VERI-Wild, demonstrating its effectiveness in identifying vehicles under varied conditions. FastReID achieved state-of-the-art performance, particularly in Rank-1 accuracy (R1-Accuracy) and mAP, showing its robustness to the complexities of vehicle ReID in surveillance and traffic monitoring environments.

Fei Shen et al. [105] applied the GiT method to the VERI-Wild dataset and achieved top performance on all test subsets: Test3000 (T3000), Test5000 (T5000), and Test10000 (T10000). On the Test10000 subset, GiT outperformed the second-best method, PCRNet, by 0.41% in Rank-1 identification rate and 0.45% in mAP. The study emphasized the importance of leveraging both global and local features, as GiT generalized better across different datasets and conditions. In a separate study, Fei Shen et al. [107] developed the Hybrid Pyramidal Graph Network (HPGN), which achieved the highest Rank-1 identification rate among the evaluated methods on VERI-Wild. The findings highlighted the resilience of HPGN in difficult conditions, such as varying day and night illumination, where alternative approaches became less effective.
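One simple and common way to combine global and local cues in ReID is to L2-normalize a whole-vehicle descriptor and several part descriptors separately and concatenate them before computing distances. The sketch below illustrates only that generic fusion strategy, using hypothetical feature vectors; it is not the GiT or HPGN architecture of Shen et al., which learn the interaction between global and local features.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else list(vec)


def fuse_global_local(global_feat, part_feats):
    """Concatenate an L2-normalized global descriptor with L2-normalized part descriptors."""
    fused = l2_normalize(global_feat)
    for part in part_feats:
        fused.extend(l2_normalize(part))
    return fused


def euclidean(a, b):
    """Euclidean distance used to rank gallery vehicles for a query."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


query = fuse_global_local([3.0, 4.0], [[0.0, 2.0], [1.0, 0.0]])
print(query)  # [0.6, 0.8, 0.0, 1.0, 1.0, 0.0]
```

Normalizing each part separately keeps any single descriptor from dominating the distance simply because of its magnitude.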

Lastly, Khorramshahi et al. [109] presented a residual generation model that improved mAP by 2.0% and CMC1 by 1.0% compared with baseline models. The model's reliance on residual information, indicated by a high weighting factor (α = 0.94), proved crucial for extracting robust features from the dataset. Its results on VERI-Wild further demonstrate the adaptability and usefulness of this self-supervised method for vehicle ReID.

9. Conclusions

In this survey paper, we reviewed the current state of UAV datasets, highlighting their various applications, inherent challenges, and future directions. UAV datasets are essential in areas such as disaster management, surveillance, agriculture, environmental monitoring, and human behavior analysis. Advanced machine learning techniques have extended UAV capabilities, enabling more precise data collection and analysis. Despite their potential, UAV datasets face several challenges, including data quality, consistency, and the lack of standardized annotation protocols. Ethical and privacy concerns call for strong frameworks to ensure responsible use. Future research should increase dataset diversity, integrate multimodal data, and improve real-time data processing. Improving annotation quality and promoting collaborative research and open data initiatives will further increase dataset utility. In summary, UAV datasets are at a critical stage of development, with significant opportunities for technological advancement. Addressing current challenges and pursuing these research directions can lead to new discoveries and keep UAV technology both innovative and practical.

Author Contributions

Conceptualization, K.D.G. and M.M.R.; methodology, M.M.R.; writing—original draft preparation, M.M.R. and S.S.; writing—review and editing, M.K. and R.H.R.; supervision, K.D.G.; project administration, M.M.R., S.S., M.K., R.H.R., and K.D.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Georgia Tech HBCU Research Collaboration Forum (RCF) funding initiative. The funding provided by Georgia Tech has been instrumental in fostering collaborative research between Clark Atlanta University (CAU) and Georgia Tech. The findings, interpretations, and opinions expressed in this work are those of the authors and do not necessarily reflect the views or policies of Georgia Tech.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Visual Representation of Reviewed Datasets

The following images are taken from the papers that introduced each dataset or from the public dataset repositories referenced in those papers.

Appendix A.1. AIDER

Figure A1.

Aerial Image Dataset for Applications in Emergency Response (AIDER): A selection of pictures from the augmented database.

Appendix A.2. BioDrone

Figure A2.

Illustrations of the flapping-wing UAV used for data collection and the representative data of BioDrone. Different flight attitudes for various scenes under three lighting conditions are included in the data acquisition process, ensuring that BioDrone can fully reflect the robust visual challenges of the flapping-wing UAVs.

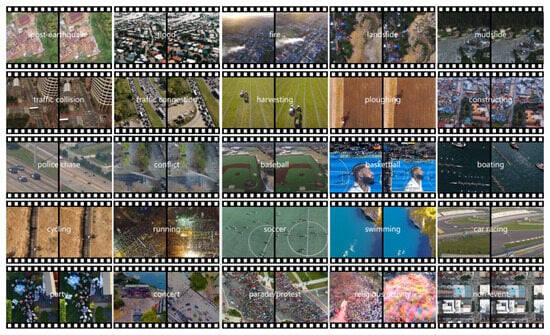

Appendix A.3. ERA

Figure A3.

Overview of the ERA dataset. In total, 2864 labeled video snippets were collected for 24 event classes and 1 normal class: post-earthquake, flood, fire, landslide, mudslide, traffic collision, traffic congestion, harvesting, ploughing, constructing, police chase, conflict, baseball, basketball, boating, cycling, running, soccer, swimming, car racing, party, concert, parade/protest, religious activity, and non-event. For each class, the first (left) and last (right) frames of a video are shown. Best viewed in color and zoomed in.

Appendix A.4. FOR-Instance

Figure A4.

Sample instance and semantic annotations from the various FOR-instance data collections.

Appendix A.5. UAVDark135

Figure A5.

First frames of representative scenes in the newly constructed UAVDark135 dataset. Target ground truths are marked by green boxes, and sequence names are shown in the top-left corner of each image. Challenges specific to darkness, such as unreliable object color features and objects merging into the dark background, are clearly visible.

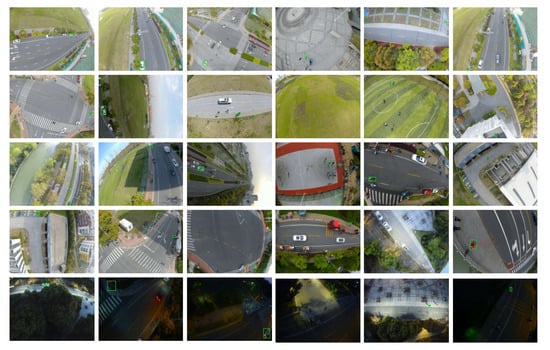

Appendix A.6. UAV-Human

Figure A6.

Examples of action videos in the UAV-Human dataset. The first and second rows show two video sequences with significant camera motion and view variation caused by continuously varying flight attitudes, speeds, and heights. The last three rows display action samples from the dataset, showing its diversity, e.g., distinct views, various capture sites, weather conditions, scales, and motion blur.

Appendix A.7. UAVid

Figure A7.

Example images and labels from the UAVid dataset. The first row shows the images captured by the UAV, the second row shows the corresponding ground-truth labels, and the third row shows the prediction results of the MS-Dilation net+PRT+FSO model. The last row shows the color legend for the class labels.

Appendix A.8. DarkTrack2021

Figure A8.

Initial frames of selected sequences from the DarkTrack2021 dataset. Tracked objects are indicated by green boxes, and sequence names are shown in the top-left corner of the images.

Appendix A.9. VRAI

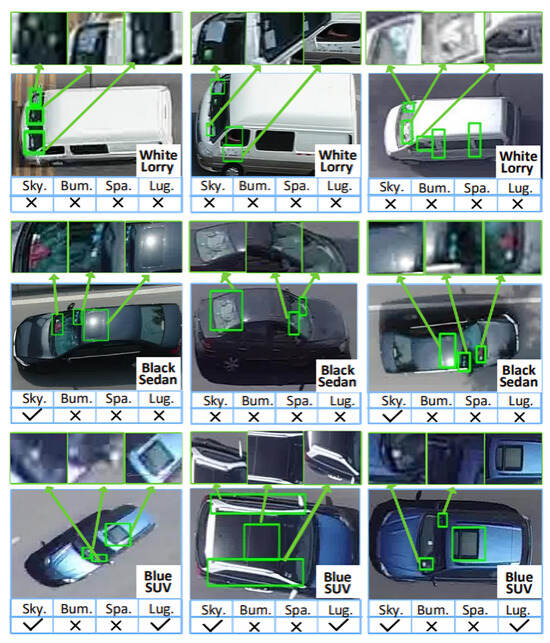

Figure A9.

Illustration of a UAV-based vehicle Re-Identification (ReID) dataset displaying annotated distinguishing elements, such as skylights, bumpers, spare tires, and baggage racks, across diverse vehicle categories (e.g., white truck, black sedan, blue SUV). The green bounding boxes and arrows delineate particular vehicle components essential for identification, while variations in perspective and resolution underscore the difficulties of ReID from UAV imagery. The tabular annotations beneath each vehicle indicate the presence or absence of key elements for ReID.

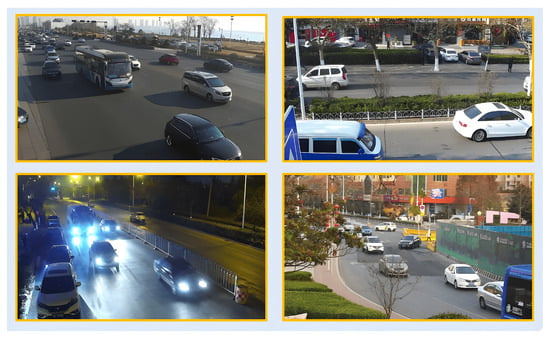

Appendix A.10. VERI-Wild

Figure A10.

Example images from the VERI-Wild dataset. The dataset was obtained from a large real-world video surveillance system comprising 174 cameras distributed across an urban area of more than 200 square kilometers.

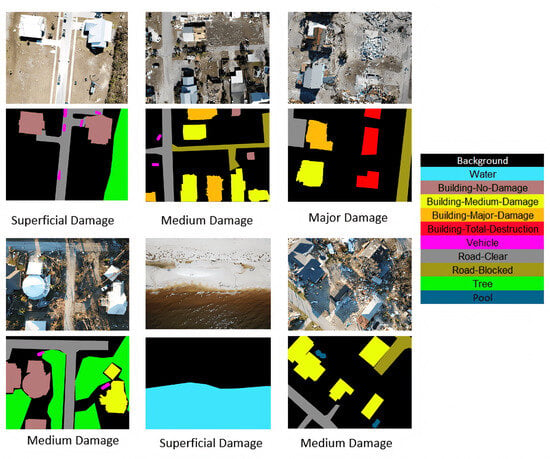

Appendix A.11. RescueNet

Figure A11.

Complex scenes from the RescueNet dataset. The first and third rows display the original images, while the row beneath each provides the associated annotations for semantic segmentation and image classification. The 10 classes are listed on the right, each shown with its segmentation color.

Appendix A.12. UAV-Assistant

Figure A12.