1. Introduction

By leveraging Earth observation image data captured by sensors aboard satellite platforms, the rapid and automatic positioning and identification of targets such as aircraft and ships carry significant application value in domains like reconnaissance, early warning, and the protection of maritime rights and interests. At present, remote sensing (RS) satellite technology is continuously advancing toward maturity, and both the quantity and quality of acquired remote sensing images are steadily improving. Owing to the wide field of view, bird’s-eye perspective, and complex, rich ground feature information contained in remote sensing images, data processing and analysis of such images have garnered substantial attention in recent years.

Traditional RS detection algorithms [1,2] typically generate candidate regions based on features such as edges and gray levels. They then extract key features such as corners, gradients, and orientations using manually designed feature operators, and finally employ classifiers and regressors for refined recognition and localization. Although each step of traditional remote sensing object detection has been carefully designed and achieves favorable results in specific application scenarios, the approach still faces numerous problems and limitations [3,4]. First, candidate region extraction can only roughly extract horizontal rectangular regions, leading to poor localization accuracy. Second, manually designed shallow and mid-level features mostly rely on low-level visual information and therefore have weak representation capability: they fail to convey the complex high-level semantic information in images and generalize poorly across categories. In summary, the performance of traditional remote sensing image detection methods falls far short of the practical requirements of on-orbit platforms.

Neural network-based RS detection integrates candidate region extraction, feature learning, and classification–regression of suspected object regions [5,6], avoiding the cumbersome design and weak generalization of manually crafted features. RS detection networks fall into two main categories: two-stage [7,8,9,10] and one-stage detectors [3,6,11]. Among two-stage detectors, Oriented R-CNN [12] directly generates high-quality rotated candidate boxes with a specialized oriented RPN; these boxes are then refined by the oriented R-CNN head, achieving accurate detection of tilted targets through two-stage cascaded optimization. Among one-stage detectors, R3Det [13], a refined single-stage detector, gradually adjusts initial horizontal candidate boxes using a Feature Refinement Module, progressing from coarse localization to precise rotated boxes. To achieve faster detection, RetinaNet-R [14], built on a rotation-aware feature pyramid network, directly predicts rotated bounding boxes in a single forward pass and employs focal loss to address sample imbalance, enabling fast and accurate detection of rotated targets. R-DFPN [15] designs a dense feature pyramid network that performs rotated box regression directly on multi-scale feature maps, maintaining real-time performance while precisely detecting rotated targets of varying scales.

Research on detection architectures has recently shifted its focus from accuracy enhancement to speed optimization [16,17,18]. Yang's Resolution Adaptive Network (RANet) [19] consists of multiple deep subnetworks operating at different input resolutions. Each sample is first processed by the lowest-resolution subnetwork; if the prediction meets a specified criterion, inference terminates, and otherwise the sample is passed to a higher-resolution subnetwork. This balances accuracy against computational load while reducing spatial redundancy in images. BranchyNet [20] adds branch classifiers that allow most test samples to exit the network early based on their prediction results. SkipNet [21] builds a residual network with gating units that selectively skip unnecessary convolutional layers during inference, significantly reducing inference time. Dynamic-parameter approaches, in turn, adjust network parameters conditioned on the input, improving feature extraction at only a small additional computational cost.
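The early-exit idea behind RANet and BranchyNet can be illustrated with a minimal sketch. It assumes a list of classifiers ordered from cheapest to most expensive and uses the maximum softmax probability as the exit criterion. This criterion and all names (`early_exit_predict`, `threshold`, the toy stages) are illustrative assumptions; BranchyNet itself uses an entropy-based criterion.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max logit for numerical stability.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_predict(
    x: object,
    stages: List[Callable[[object], Sequence[float]]],
    threshold: float = 0.9,
) -> Tuple[int, int]:
    """Run stages from cheapest to most expensive and stop at the first confident one.

    Returns (predicted class, index of the stage that produced the prediction).
    The last stage always answers, whatever its confidence.
    """
    for i, stage in enumerate(stages):
        probs = softmax(stage(x))
        confidence = max(probs)
        if confidence >= threshold or i == len(stages) - 1:
            return probs.index(confidence), i
    raise ValueError("at least one stage is required")


# Hypothetical stages returning fixed logits, standing in for real subnetworks.
def shallow_stage(x: object) -> List[float]:
    return [2.0, 1.8, 0.1]  # ambiguous prediction: low confidence


def deep_stage(x: object) -> List[float]:
    return [0.1, 5.0, 0.2]  # confident prediction for class 1


print(early_exit_predict(None, [shallow_stage, deep_stage]))  # (1, 1)
```

Here the shallow stage is not confident enough (about 0.51), so the sample falls through to the deep stage, which exits with class 1.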

In lightweight and efficient remote sensing object detection, existing studies [6,10] have explored the orientation, rotation, and multi-scale characteristics of remote sensing objects, as well as the efficiency requirements of detection. LO-Det [22] targets lightweight oriented object detection in remote sensing images: a lightweight backbone and a parameter-efficient oriented prediction branch reduce computational complexity and parameter count while handling common oriented objects such as aircraft and ships, balancing detection accuracy and efficiency. To address the added complexity caused by coupling spatial transformation with detection in oriented object detection, STD-Net [9] proposes a spatial transformation decoupling strategy that separates object pose adjustment from feature extraction and classification–regression, simplifying the oriented detection pipeline, reducing redundant computation, and offering a new approach to efficient oriented detection. Focusing on generalization and rotational robustness, ReDet introduces rotation-equivariant properties, allowing the model to handle arbitrary rotation angles of remote sensing objects without extensive rotated data augmentation; this improves the accuracy on rotated objects while reducing data preprocessing and training costs, indirectly improving efficiency. To handle the large scale variation of remote sensing objects, LSK-Net [23] constructs a large selective kernel network that adaptively selects receptive fields matching object scale, accurately capturing objects of different sizes (e.g., small vehicles and large buildings). Its dynamic kernel selection also avoids the computational redundancy of fixed large kernels, improving efficiency while preserving multi-scale detection capability. Other studies have addressed lightweight SAR image segmentation. Ma et al. [24] proposed a fast task-specific region merging method that integrates superpixel generation and merging into an end-to-end network, introduced a statistical dissimilarity measure and k-order connectivity, and improved both the accuracy and efficiency of SAR image segmentation. Soft-GCN [25] combines deep task-specific superpixel sampling with soft graph convolution. Both works improve the efficiency of SAR image segmentation and offer insights for efficient processing in lightweight remote sensing analysis. Together, these studies provide diverse technical pathways toward efficient remote sensing object detection from the perspectives of task decoupling, feature robustness, and scale adaptability, advancing the field toward jointly high accuracy and high efficiency.

In conclusion, existing mainstream lightweight network structures place excessive emphasis on parameter reduction, leading to various adverse effects such as low computational efficiency. They also fail to fully consider the impact of feature redundancy on remote sensing object detectors [

4,

12,

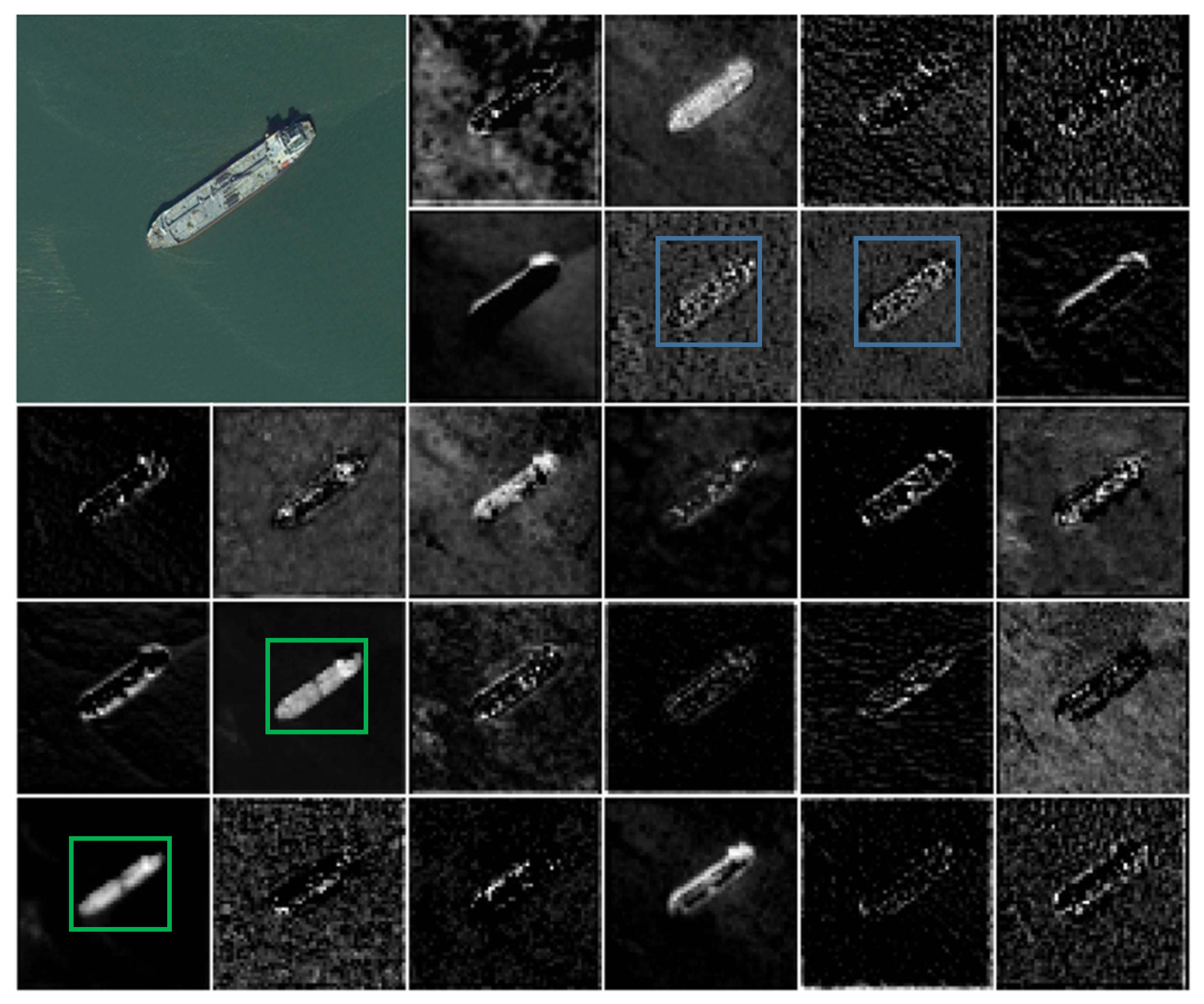

26]. Specifically, there is significant redundancy in the feature maps of deep neural networks. As shown in

Figure 1, the 26 subfigures correspond to feature maps from different channels in the convolutional layer. The highlighted blue and green squares indicate regions of feature maps with high similarity, intuitively demonstrating the redundancy in deep neural network feature maps—these similar feature maps mainly contain fine-grained information such as target edges and textures. Feature maps across different channels exhibit high similarity. We believe that such redundancy in feature maps may be crucial for accurately locating densely arranged small targets in remote sensing images, as these seemingly similar redundant feature maps mostly contain fine-grained information like target edge textures. Unlike previous studies, we do not discard redundant feature maps; instead, we utilize them in a simpler and more efficient manner to reduce memory access and convolutional computation. Additionally, detectors share features from the backbone, which are redundant and imprecise for the key features required by specific tasks. For example, classifiers need directionally invariant features to correctly classify the same target with different orientations, while regressors require directionally sensitive features to accurately locate targets. Indiscriminately using redundant backbone features as inputs for remote sensing object classification and regression tasks not only introduces excessive computational load but also causes mutual interference between classification and regression features, which is detrimental to the accurate detection of rotating objects.

To address these issues, this paper proposes a dynamic mixed convolution (DM-Conv) mechanism within the convolutional layers. It replaces standard convolution with linear mapping to rapidly generate redundant feature maps and reorganize convolution operations. A feature aggregation strategy between convolutional layers fuses features from different intermediate layers by weighting to generate deep-level features, thereby reducing the number of channels in each convolutional layer. In addition, a spatial orthogonal attention (SOA) mechanism captures dependencies between distant pixels in both the horizontal and vertical directions, enhancing the feature representation ability of the convolutional layers. Depthwise separable convolution reduces computation by splitting spatial and pointwise convolution, but it does not exploit feature redundancy and easily loses fine-grained information. DM-Conv instead generates redundant feature maps through linear mapping combined with weighted fusion across intermediate layers, reducing computation while retaining key fine-grained information such as target edges and textures.
The design motivation is as follows. Existing mainstream lightweight networks overemphasize parameter reduction, which leads to low computational efficiency, and they overlook the impact of feature redundancy on remote sensing object detectors. DM-Conv therefore generates redundant feature maps efficiently through linear mapping and reduces the number of channels through weighted fusion across intermediate layers, avoiding the computational redundancy of standard convolution. SOA addresses the high computational cost of capturing long-range pixel dependencies with conventional attention mechanisms by decomposing the computation into the horizontal and vertical directions. Experimental results show that, compared with the baseline model, the proposed method reduces the computational complexity of the remote sensing detection network while leaving detection accuracy almost unaffected.

3. Method

This section explores optimization methods for deploying remote sensing detection models, focusing on the design of convolutional structures in remote sensing detection networks. The aim is to improve inference efficiency on real hardware while maintaining high detection accuracy. The proposed structure is illustrated in Figure 2. Within the convolutional layers, dynamic mixed convolution (DM-Conv) divides the convolutional layers of depthwise separable convolution into two parts. The first part employs regular convolution, but the number of such convolutions is strictly limited. A series of simple linear operations is then applied to the feature maps generated by the first part to produce the remaining feature maps. Finally, the feature maps from both parts are concatenated along the channel dimension, so that redundant feature maps are generated cheaply and memory access frequency is reduced. To prevent the rapidly generated feature maps from weakening the network's expressive power, a spatial orthogonal attention mechanism is built on top of this structure. It aggregates local and long-range information along the horizontal and vertical directions, allowing the convolutional structure to capture dependencies between distant pixels with simplified computation. Widely used deployment tools such as TF-Lite and ONNX support these operations, enabling fast inference on various embedded devices.

3.1. Dynamic Mixed Convolution

In most remote sensing detection frameworks, using existing complex convolutional structures as feature extractors generates a large number of redundant feature channels. Pruning these channels may discard key information such as edges and textures and thereby reduce detection accuracy; keeping them, however, increases memory access costs and limits effective deployment of the model on embedded or edge computing platforms. To address these issues, this paper designs a lightweight dynamic mixed convolution that replaces standard convolution with a linear filter bank. With nearly lossless accuracy, it significantly speeds up feature map generation, effectively reducing computational redundancy and memory access during inference of remote sensing detection networks. The method is described in detail below.

A standard convolutional layer that generates n feature maps can be defined as

$$Y = X \ast W + b$$

where ∗ represents the convolution operation, b is the bias term, X is the input feature map, Y is the output feature map, and W is the convolution filter. The number of parameters to be optimized in the convolution kernel is determined by the dimensions of the input and output feature maps. As Figure 1 shows, the targets in the blue and green boxes are highly similar. Furthermore, the minimum mean squared error (MSE) between these feature maps, listed in Table 1, is very small in all cases. This indicates a strong correlation between feature maps in deep neural networks: the redundant feature maps can be derived efficiently from a few intrinsic feature maps.
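The redundancy measurement can be sketched as follows: compute the MSE between every pair of channels of one layer and take the most similar distinct pair. The text does not state whether feature maps are normalized first, so this sketch (an assumption) compares raw values; `pairwise_mse` and `most_similar_pair` are hypothetical names, and the input is synthetic.

```python
import numpy as np


def pairwise_mse(feats: np.ndarray) -> np.ndarray:
    """Mean squared error between every pair of channels.

    feats: array of shape (C, H, W) holding C feature maps from one layer.
    Returns a symmetric (C, C) matrix whose (i, j) entry is the MSE between maps i and j.
    """
    flat = feats.reshape(feats.shape[0], -1).astype(np.float64)
    diff = flat[:, None, :] - flat[None, :, :]  # (C, C, H*W) via broadcasting
    return (diff ** 2).mean(axis=2)


def most_similar_pair(feats: np.ndarray):
    """Return (i, j, mse) for the distinct channel pair with the minimum MSE."""
    mse = pairwise_mse(feats)
    np.fill_diagonal(mse, np.inf)  # ignore each map's comparison with itself
    i, j = np.unravel_index(np.argmin(mse), mse.shape)
    return int(i), int(j), float(mse[i, j])


# Synthetic example: channel 3 is a slightly perturbed copy of channel 1.
rng = np.random.default_rng(0)
feats = rng.random((4, 8, 8))
feats[3] = feats[1] + 0.01 * rng.standard_normal((8, 8))
i, j, err = most_similar_pair(feats)
print(i, j, err)  # the near-duplicate pair (1, 3) has a tiny MSE
```

The pairwise tensor has C × C × H × W entries, which is fine for a handful of channels; for full layers one would process channels in chunks.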

Therefore, there is no need to generate these redundant feature maps one by one through a large number of convolution operations. Instead, the output feature maps can be regarded as derived maps obtained from a small number of intrinsic feature maps through simple transformations (Figure 3):

$$y_{ij} = \Phi_{i,j}\left(y'_i\right), \quad i = 1, \ldots, m, \quad j = 1, \ldots, s$$

where $y'_i$ is the $i$-th of the $m$ intrinsic feature maps in the intermediate output $Y'$, and $\Phi_{i,j}$ is the $j$-th linear operation used to generate a derived feature map from it. The intrinsic feature $Y'$ usually has fewer channels than the original output feature. Cheap operations then generate additional similar features, and the two parts are concatenated along the channel dimension to form a complete convolutional layer:

$$Y = \mathrm{Concat}\left(\left[Y', \Phi\left(Y'\right)\right]\right)$$

where $Y$ is the final output feature map, $Y'$ is the original intrinsic feature map, and $\Phi$ represents the pointwise convolution used for linear mapping. Concatenating the derived feature maps with the intrinsic ones expands the dimension of the feature representation without significantly increasing the computational burden, so the network retains the richness and diversity of the input data while using fewer parameters and less computation. Because the linear transformation processes each derived feature map independently, the maps can be computed in parallel. At the same time, reducing the number of convolution kernels limits the growth of memory usage and FLOPs (floating point operations), improving overall computational efficiency.
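The intrinsic-plus-derived construction can be sketched in NumPy. Section 3.1 describes the linear mapping as a pointwise convolution, while the ablation in Section 4.2 describes the linear operations as d × d depthwise filters; this sketch assumes a GhostNet-style arrangement in which the intrinsic maps come from a 1 × 1 convolution and each intrinsic map feeds s − 1 cheap d × d depthwise filters. The function names are hypothetical, and the sketch omits the cross-layer weighted fusion and the attention branch.

```python
import numpy as np


def pointwise_conv(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """1 x 1 convolution. x: (C_in, H, W), w: (C_out, C_in) -> (C_out, H, W)."""
    return np.einsum("oc,chw->ohw", w, x)


def depthwise_conv(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Per-channel d x d filtering (cross-correlation, as in DL frameworks), zero 'same' padding.

    x: (C, H, W), k: (C, d, d) with odd d -> (C, H, W).
    """
    _, h, w = x.shape
    d = k.shape[-1]
    p = d // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))  # zero padding keeps H and W unchanged
    out = np.zeros(x.shape, dtype=np.float64)
    for i in range(d):
        for j in range(d):
            out += k[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out


def dm_conv_sketch(x, w_intrinsic, k_cheap, s=2):
    """m intrinsic maps from a 1 x 1 conv, then (s - 1) cheap derived maps per intrinsic map.

    w_intrinsic: (m, C_in); k_cheap: (m * (s - 1), d, d). Output: (m * s, H, W).
    """
    intrinsic = pointwise_conv(x, w_intrinsic)           # Y'
    source = np.repeat(intrinsic, s - 1, axis=0)         # each y'_i feeds s - 1 linear ops
    derived = depthwise_conv(source, k_cheap)            # Phi(Y')
    return np.concatenate([intrinsic, derived], axis=0)  # Y = Concat([Y', Phi(Y')])


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 6, 6))         # C_in = 4 input channels
w_intrinsic = rng.standard_normal((3, 4))  # m = 3 intrinsic maps
k_cheap = rng.standard_normal((3, 3, 3))   # s = 2, d = 3
y = dm_conv_sketch(x, w_intrinsic, k_cheap, s=2)
print(y.shape)  # (6, 6, 6)
```

Only m of the m · s output channels require a full convolution over the input channels; the rest cost d × d multiplications per pixel each.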

Theoretical analysis of computational complexity.

Figure 3 illustrates the basic working principle of the proposed dynamic mixed convolution. Standard convolution is applied to only a portion of the input channels, $c_p$ of the $c$ channels, for spatial feature extraction, leaving the remaining channels unaffected. For contiguous (regular) memory access, the first or last $c_p$ consecutive channels are taken as representatives of the whole feature map. When the input and output feature maps have the same number of channels, the FLOPs of DM-Conv on an $h \times w$ feature map with a $k \times k$ kernel are only

$$h \times w \times k^2 \times c_p^2$$

With a compression ratio $r = c_p / c = 1/4$, the FLOPs of DM-Conv are only 1/16 of those of standard convolution ($h \times w \times k^2 \times c^2$). In addition, the memory access of DM-Conv is

$$h \times w \times 2c_p + k^2 \times c_p^2 \approx h \times w \times 2c_p$$

so DM-Conv requires only 1/4 of the memory access of standard convolution ($h \times w \times 2c$). The remaining $c - c_p$ channels are left unchanged. To make full and effective use of the information from all channels, a pointwise convolution (PWConv) is further appended to DM-Conv. Compared with standard convolution, this combination places more emphasis on the central position of the receptive field. The FLOPs of DM-Conv followed by PWConv are

$$h \times w \times \left(k^2 \times c_p^2 + c^2\right)$$
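The 1/16 and 1/4 ratios stated above can be checked numerically. This sketch assumes the partial-convolution accounting in which spatial convolution touches only c_p of c channels (FLOPs h·w·k²·c_p², memory access h·w·2c_p + k²·c_p²) and that the appended PWConv costs h·w·c². The symbol names, function names, and the 112 × 112 feature-map size are illustrative assumptions.

```python
from fractions import Fraction


def conv_flops(h, w, k, c):
    """Standard k x k convolution with c input and c output channels."""
    return h * w * k * k * c * c


def dm_conv_flops(h, w, k, c_p):
    """Spatial convolution applied to only c_p of the channels."""
    return h * w * k * k * c_p * c_p


def conv_memory(h, w, k, c):
    """Memory access: input and output feature maps plus the weights."""
    return h * w * 2 * c + k * k * c * c


def dm_conv_memory(h, w, k, c_p):
    return h * w * 2 * c_p + k * k * c_p * c_p


def dm_conv_pw_flops(h, w, k, c, c_p):
    """DM-Conv followed by a 1 x 1 PWConv over all c channels."""
    return h * w * (k * k * c_p * c_p + c * c)


h = w = 112
k, c = 3, 64
c_p = c // 4  # compression ratio r = c_p / c = 1/4

flops_ratio = Fraction(dm_conv_flops(h, w, k, c_p), conv_flops(h, w, k, c))
mem_ratio = dm_conv_memory(h, w, k, c_p) / conv_memory(h, w, k, c)
print(flops_ratio)          # 1/16
print(round(mem_ratio, 3))  # close to 1/4 because the h*w*2c term dominates
```

The FLOPs ratio is exactly r² = 1/16, whereas the memory ratio only approaches r = 1/4 as the weight term k²·c² becomes negligible relative to the feature maps.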

Although this feature mapping significantly reduces computational cost, it inevitably weakens spatial representation ability, and the relationships between spatial pixels are crucial for accurate recognition. Half of the features capture spatial information only through inexpensive operations, and the remaining features are generated solely by 1 × 1 pointwise convolution without interacting with other pixels. This weak ability to capture spatial information may limit further performance gains. To address this, we introduce a self-attention mechanism on top of this lightweight structure to model long-range spatial correlations effectively.

3.2. Spatial Orthogonal Attention Mechanism

Existing attention mechanisms generally take an input feature $Z \in \mathbb{R}^{H \times W \times C}$ and employ a fully connected layer to generate a set of attention maps:

$$a_{hw} = \sum_{h', w'} F_{hw, h'w'} \odot z_{h'w'}$$

where ⊙ denotes element-wise multiplication, $F$ is the learnable weight matrix of the layer, $z_{h'w'}$ is the feature value at spatial position $(h', w')$ in the input feature map $Z$, and $a_{hw}$ is the attention weight at position $(h, w)$. Feature maps in CNNs are usually low rank, so there is no need to densely connect all inputs and outputs at different spatial positions. This paper therefore decomposes the fully connected layer into two orthogonal layers that aggregate features along the horizontal and vertical directions, respectively:

$$a'_{hw} = \sum_{h'=1}^{H} F^{H}_{h, h'w} \odot z_{h'w}, \quad h = 1, \ldots, H, \; w = 1, \ldots, W$$

$$a_{hw} = \sum_{w'=1}^{W} F^{W}_{w, hw'} \odot a'_{hw'}, \quad h = 1, \ldots, H, \; w = 1, \ldots, W$$

where $F^{H}$ and $F^{W}$ are the learnable horizontal and vertical attention weights, respectively, and $a'_{hw}$ is the intermediate attention weight after horizontal aggregation. These equations capture long-range dependencies in both directions; we refer to this operation as decoupled fully connected attention. Because the horizontal and vertical transformations are decoupled, the computational complexity of the attention module is reduced from $O(H^2W^2)$ to $O(H^2W + HW^2)$. The process is shown in Figure 4.
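The decoupled attention described above can be sketched in NumPy. This assumes a GhostNetV2-style formulation in which the first weight tensor mixes positions along the height axis, the second along the width axis, and both are shared across channels; practical implementations often realize these as 1 × K and K × 1 depthwise convolutions instead of dense weights. The function names and array layouts are hypothetical.

```python
import numpy as np


def decoupled_fc_attention(z, f_h, f_w):
    """Two-step aggregation replacing a dense spatial fully connected layer.

    z:   (H, W, C) input features
    f_h: (H, H, W) weights F^H[h, h', w], summed over h' within each column w
    f_w: (W, H, W) weights F^W[w, h, w'], summed over w' within each row h
    Weights are shared across channels, so the product with z is element-wise over C.
    """
    a_mid = np.einsum("hkw,kwc->hwc", f_h, z)     # a'_{hw} = sum_{h'} F^H_{h,h'w} z_{h'w}
    return np.einsum("whk,hkc->hwc", f_w, a_mid)  # a_{hw} = sum_{w'} F^W_{w,hw'} a'_{hw'}


def reference_loops(z, f_h, f_w):
    """Direct loop implementation of the same two equations, for checking."""
    H, W, C = z.shape
    a_mid = np.zeros((H, W, C))
    for h in range(H):
        for w in range(W):
            for hp in range(H):
                a_mid[h, w] += f_h[h, hp, w] * z[hp, w]
    a = np.zeros((H, W, C))
    for h in range(H):
        for w in range(W):
            for wp in range(W):
                a[h, w] += f_w[w, h, wp] * a_mid[h, wp]
    return a


def mult_counts(H, W, C):
    """Multiplications: dense FC over all positions vs. the decoupled version."""
    full = (H * W) ** 2 * C
    decoupled = H * W * (H + W) * C
    return full, decoupled


rng = np.random.default_rng(0)
H, W, C = 5, 7, 3
z = rng.standard_normal((H, W, C))
f_h = rng.standard_normal((H, H, W))
f_w = rng.standard_normal((W, H, W))
a = decoupled_fc_attention(z, f_h, f_w)
print(a.shape)                  # (5, 7, 3)
print(mult_counts(56, 56, 64))  # dense vs. decoupled multiplication counts
```

The ratio between the two counts is HW / (H + W), which for a 56 × 56 map is 28; after both steps every output position still depends on every input position.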

The input feature map of the convolutional layer is sent to two branches. One branch generates the intrinsic and redundant feature maps, while the other applies the orthogonal self-attention mechanism to produce an attention map $A$. The final output of the convolutional layer is the element-wise product of the two branch outputs:

$$O = \sigma(A) \odot Y$$

where $\sigma$ is the sigmoid function, which scales the attention map to the range $(0, 1)$, $Y$ is the feature map output by the convolution module, and $O$ is the final output feature map after fusing the attention weights. The output therefore combines the features of the original convolution module with the spatial information of the orthogonal attention module. Because each attention value aggregates pixels along both directions, the output features incorporate information from a wide range of pixels.

Floating point operations (FLOPs) are an important metric for evaluating the computational complexity of object detection networks. For a standard convolutional layer with $C_{\mathrm{in}}$ input channels, $C_{\mathrm{out}}$ output channels, a $K \times K$ kernel, and an output feature map of width $W$ and height $H$, the FLOPs are

$$\mathrm{FLOPs} = \left[C_{\mathrm{in}} K^2 + \left(C_{\mathrm{in}} K^2 - 1\right) + 1\right] \times H \times W \times C_{\mathrm{out}}$$

where $C_{\mathrm{in}} K^2$ is the number of multiplications, $C_{\mathrm{in}} K^2 - 1$ is the number of additions, $W$ and $H$ denote the width and height of the feature map, and the final 1 accounts for the bias term. Running the orthogonal attention in parallel with the other modules at full resolution would add computational cost. This paper therefore downsamples the features horizontally and vertically so that all operations within the orthogonal attention are performed on smaller features. Typically, the width and height are halved, which reduces the FLOPs by 75%. The resulting feature map is then upsampled to its original size to match the feature map from the other branch. Average pooling and bilinear interpolation are used for downsampling and upsampling, respectively. The sigmoid activation is applied to the downsampled features to accelerate actual inference.
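The downsample, attend, upsample, and gate sequence described above can be sketched in NumPy, together with the standard-convolution FLOPs count that shows where the 75% figure comes from. The attention function is a hypothetical placeholder (any map from pooled features to logits of the same shape, such as the decoupled attention); 2 × 2 average pooling assumes even H and W, and applying the sigmoid before upsampling follows the text's description. All function names are illustrative.

```python
import numpy as np


def conv_flops(c_in, c_out, k, h, w):
    """[C_in*K^2 multiplications + (C_in*K^2 - 1) additions + 1 bias] * H * W * C_out."""
    per_output = c_in * k * k + (c_in * k * k - 1) + 1
    return per_output * h * w * c_out


def avg_pool2x2(x):
    """x: (C, H, W) with even H and W -> (C, H/2, W/2)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def _linear_resize_axis(a, out_len, axis):
    # Half-pixel-centre sampling (align_corners=False); np.interp clamps at the borders.
    in_len = a.shape[axis]
    src = (np.arange(out_len) + 0.5) * in_len / out_len - 0.5
    return np.apply_along_axis(lambda v: np.interp(src, np.arange(in_len), v), axis, a)


def bilinear_resize(x, out_h, out_w):
    """Bilinear interpolation of (C, H, W), done as two separable 1-D linear passes."""
    return _linear_resize_axis(_linear_resize_axis(x, out_h, 1), out_w, 2)


def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))


def soa_gate(x, y, attention_fn):
    """O = sigmoid(A) * Y, with the attention branch run at half resolution.

    x: layer input (C, H, W); y: convolution-branch output (C, H, W);
    attention_fn: maps pooled features to attention logits of the same shape.
    """
    low = sigmoid(attention_fn(avg_pool2x2(x)))          # attention at (H/2, W/2), in (0, 1)
    attn = bilinear_resize(low, x.shape[1], x.shape[2])  # back to (H, W)
    return attn * y


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 16))
y = rng.standard_normal((8, 16, 16))
out = soa_gate(x, y, attention_fn=lambda t: t)  # identity: hypothetical stand-in for the attention

full = conv_flops(16, 16, 3, 32, 32)
half = conv_flops(16, 16, 3, 16, 16)
print(1 - half / full)  # 0.75
```

Halving both spatial dimensions divides every per-pixel cost by four, which is the 75% reduction quoted in the text for operations whose cost scales linearly with H × W.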

4. Experiment

4.1. Parameter Settings

To validate the proposed method, experiments are conducted on three public remote sensing datasets. (1) DOTA-v1.0 comprises a large volume of high-resolution remote sensing images spanning diverse scenes: 2806 images with resolutions ranging from 800 × 800 to 4000 × 4000 pixels, annotated with rotated bounding boxes for 15 object categories. (2) HRSC2016, a public remote sensing ship dataset released by Northwestern Polytechnical University in 2016, is collected from six major ports in Google Earth. It covers rich geographical environments and ship activity patterns, with spatial resolutions from 0.4 to 2 m, fine enough to capture ship details and support fine-grained detection. The dataset contains 1680 images with 2976 ship targets, and image sizes range from 300 × 300 to 1500 × 900 pixels, which suits diverse research needs and adaptability tests of different algorithms. (3) UCMerced_LandUse, released by the University of California, Merced (UC Merced), is mainly used for remote sensing image analysis, scene classification, and land use research in computer vision. It contains 2100 high-resolution images in 21 land use categories (100 images per category), covering common types such as agriculture, forests, water bodies, and urban buildings. The relatively high spatial resolution allows the detailed features of each land use type to be identified clearly, which facilitates recognition of small targets and complex structures.

In this research, ResNet serves as the baseline model. All networks are trained with the Adam optimizer. For the DOTA-v1.0, UCAS-AOD, and UCMerced-LandUse datasets, training runs for 300 epochs with a batch size of 16 and a momentum of 0.9. All images are resized to 800 × 800 pixels. The initial learning rate is 0.001 and is divided by 10 at 50% and 75% of the total training epochs. In all experiments, the scale factor of every channel is initialized to 0.5. Data augmentation consists of random horizontal flipping (probability 0.5), random vertical flipping (probability 0.3), random cropping (cropping ratio 0.7–1.0), and color jitter (brightness ±10%, contrast ±10%). Experiments are run on two NVIDIA RTX 3090 GPUs (16 GB of memory each) and an Intel Core i7-12700K CPU.
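The training configuration can be summarized as a small Python sketch. The dictionary keys and the `learning_rate` helper are hypothetical names; the schedule assumes 0-indexed epochs, and the stated "momentum of 0.9" is interpreted as Adam's β1 (an assumption).

```python
# Hyperparameters restated from the text; "momentum 0.9" is read as Adam's beta1 (assumption).
CONFIG = {
    "optimizer": "Adam",
    "adam_beta1": 0.9,
    "epochs": 300,
    "batch_size": 16,
    "input_size": (800, 800),
    "base_lr": 1e-3,
    "lr_decay_factor": 0.1,
    "lr_milestones": (0.5, 0.75),  # fractions of the total number of epochs
    "channel_scale_init": 0.5,
    "augment": {
        "hflip_p": 0.5,
        "vflip_p": 0.3,
        "crop_ratio": (0.7, 1.0),
        "brightness": 0.1,
        "contrast": 0.1,
    },
}


def learning_rate(epoch: int, cfg: dict = CONFIG) -> float:
    """Step schedule: divide the base rate by 10 at 50% and again at 75% of training."""
    lr = cfg["base_lr"]
    for frac in cfg["lr_milestones"]:
        if epoch >= int(frac * cfg["epochs"]):
            lr *= cfg["lr_decay_factor"]
    return lr


for e in (0, 149, 150, 224, 225, 299):
    print(e, learning_rate(e))
```

With 300 epochs the milestones fall at epochs 150 and 225, so the rate steps from 1e-3 to 1e-4 and then to 1e-5.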

4.2. Analysis of Ablation Experiments

The proposed dynamic mixed convolution has two hyperparameters: s, which controls the generation of derived feature maps, and the kernel size d × d (i.e., the size of the depthwise convolution filter) used for the linear operations. This section tests the impact of both. First, with s = 2 fixed, d is varied over {1, 3, 5, 7}, and the results on the UCMerced-LandUse dataset are listed in Table 2. The module with d = 3 outperforms both smaller and larger kernels: a 1 × 1 kernel cannot introduce spatial information into the feature map, while larger kernels (e.g., d = 5 or d = 7) lead to overfitting and higher computational cost. Therefore, d = 3 is used in the following experiments for a better balance between detection accuracy and speed.

Next, d is fixed at 3 and s is varied; the results on the dataset are shown in Table 3. As discussed in Section 3.1, s is directly related to the consumption of computational resources. As Equations (4) and (5) show, a larger s yields greater compression of computational resources and a higher speed-up ratio. As s increases, the computational cost drops significantly while the accuracy gradually decreases. For a suitable value of s (see Table 3), the model performs well in terms of accuracy, computational cost, and time consumption.
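The trend described above (a larger s compresses computation more) can be illustrated under an assumption: the formula below follows the GhostNet formulation and may differ from this paper's Equations (4) and (5). A layer with n output maps computes n/s intrinsic maps with a k × k convolution over c input channels and derives the remaining (s − 1)·n/s maps with d × d linear operations; the common factor n·h'·w' cancels. The function name is hypothetical.

```python
def ghost_speedup(c: int, k: int, d: int, s: int) -> float:
    """Theoretical speed-up of a Ghost-style layer over a standard k x k convolution.

    standard cost per output map: c * k^2
    ghost cost per output map:    (1/s) * c * k^2 + ((s - 1)/s) * d^2
    """
    standard = c * k * k
    ghost = (c * k * k) / s + (s - 1) / s * d * d
    return standard / ghost


for s in (2, 3, 4, 5):
    print(s, round(ghost_speedup(c=256, k=3, d=3, s=s), 3))
```

When d = k the ratio simplifies to s·c / (c + s − 1), which stays slightly below s and grows with s; in the GhostNet formulation the parameter compression ratio has the same form.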

2. Visualization of intermediate features

This section first visualizes the intermediate features of the residual network ResNet18 to examine the similarity between the original feature maps. In Figure 5, features are extracted with the first residual block of ResNet18: the input is the original image and the output is the feature map produced by the residual block. The feature data in Figure 1 come from the output of the third convolutional layer of ResNet18. The inputs are 50 randomly selected 800 × 800 remote sensing images from the DOTA dataset, resized to 224 × 224 before being fed into the network. The feature maps are visualized with Matplotlib 3.10.0 as heatmaps of feature intensity. The blue and green highlighted areas are high-similarity feature regions selected by minimum mean squared error (MSE), computed on the pixel-value matrices of the feature maps (the calculation corresponds to the MSE data for k = 3 in Table 1). Feature maps in boxes of the same color are highly similar. For example, in the feature map of the airplane in the upper left corner, the target features in the red box have similar contours, textures, and brightness. For complex backgrounds such as the tennis courts in urban scenes (lower left corner of Figure 5), many similar feature maps can also be found, such as those in the red, blue, and orange boxes. Docks with high aspect ratios and oil tanks with circular shapes likewise produce similar output feature maps. In addition, Table 4 lists the MSE values for each pair of feature maps in the differently colored boxes; all of them are extremely small. This indicates a strong correlation between feature maps in deep neural networks, so these redundant feature maps can be generated from a few intrinsic feature maps. Similar phenomena are observed in most images.

This section also visualizes the intermediate feature maps generated by the proposed dynamic mixed convolution, as shown in Figure 6. The left side shows feature maps generated by standard convolution, and the right side shows those generated by linear mapping. Although the maps on the right are mapped from the convolutional features of the previous layer, they differ significantly from one another. This implies that dynamic mixed convolution produces features flexible enough to provide a rich and diverse set (Figure 7) for the specific classification and regression tasks while reducing computational complexity.

To compare the proposed method intuitively with classic image classification and recognition methods, this section visualizes the results with confusion matrices (Figure 8). Each row of the confusion matrix corresponds to the samples predicted to belong to that class, and the diagonal gives the number of correctly predicted samples: the more concentrated the predictions are on the diagonal, the better the model performs. The confusion matrix also reveals which categories the model tends to confuse. As Figure 8 shows, the confusion matrix of the proposed method is densely concentrated on the diagonal, indicating good classification performance. Table 5 also presents the results of applying dynamic mixed convolution to classic backbones such as ResNet and VGG: with nearly lossless recognition accuracy, the computational complexity is reduced by approximately half.
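A confusion matrix with the orientation described above (rows indexed by predicted class, columns by true class) can be built as in this pure-Python sketch. The function names and toy labels are hypothetical; note that many libraries use the transposed convention, with rows indexed by the true class.

```python
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], num_classes: int) -> List[List[int]]:
    """m[p][t] counts samples of true class t that were predicted as class p."""
    m = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        m[p][t] += 1
    return m


def per_class_recall(m: List[List[int]]) -> List[float]:
    """Correct predictions divided by the number of samples of each true class (column sum)."""
    n = len(m)
    recalls = []
    for c in range(n):
        col_total = sum(m[r][c] for r in range(n))
        recalls.append(m[c][c] / col_total if col_total else float("nan"))
    return recalls


def overall_accuracy(m: List[List[int]]) -> float:
    total = sum(map(sum, m))
    return sum(m[i][i] for i in range(len(m))) / total


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
m = confusion_matrix(y_true, y_pred, num_classes=3)
print(m)                    # [[1, 0, 1], [1, 2, 0], [0, 0, 1]]
print(per_class_recall(m))  # [0.5, 1.0, 0.5]
print(overall_accuracy(m))  # diagonal sum / total = 4/6
```

The diagonal holds the correct predictions, and the off-diagonal entries show which classes are confused with each other (here, one class-0 sample predicted as class 1 and one class-2 sample predicted as class 0).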

4.3. Comparative Experiment

To verify the effective application of the proposed lightweight backbone structure in rotational object detection methods, this section compares the method proposed in this chapter with classic remote sensing object detection methods in recent years on the oriented bounding box (OBB) detection task of the DOTA dataset. The results are shown in

Table 6. These comparison methods include two major categories: two-stage detectors and one-stage detectors. As can be seen from the table, the method proposed in this chapter achieves a detection performance of 79.37% on the mAP metric, achieving the best detection results among all compared algorithms. For small objects, such as small vehicles (SV) and storage tanks (ST), the method achieves mAP values of 81.54% and 87.31%, respectively. For large-scale objects, such as swimming pools (SP), baseball fields (BD), and ports (HA), the AP values of the method are all greater than 75%. In addition, the method achieves good detection performance (as shown in

Figure 9) for two types of objects with variable orientations, sparse wide-neighborhoods, and multi-neighborhood aggregations: airplanes (PL) and ships (SH). The above results demonstrate that the method proposed in this chapter has certain adaptability to scale and rotational orientation.

Compared with two-stage remote sensing detectors, the proposed method shows advantages in both computational complexity and detection accuracy. RRPN, SCR-Det, and similar methods use Faster R-CNN as the baseline and add rotated bounding boxes to detect rotated targets. RRPN does not use a feature pyramid network, which makes it difficult to handle remote sensing targets with large scale differences and results in relatively low detection performance. SCR-Det achieves an mAP of 72.61% on DOTA, the best among the two-stage algorithms; the proposed method requires only about half of its computational complexity while achieving an mAP 6.76 percentage points higher. Among one-stage detectors, the proposed method also compares favorably. Methods such as R3Det, GWD, RSDet, CSL, and SASM improve rotated feature extraction, rotated box representation, positive and negative sample assignment, and related components, enabling them to detect remote sensing targets with large scale differences and arbitrary orientations. The most advanced of these, SASM, achieves an mAP of 79.17% on DOTA. The proposed method attains slightly higher accuracy (79.37%) with only 53.6% of SASM's computational complexity. These results show that the proposed method achieves an effective balance between detection accuracy and computational cost.
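Since the comparison above hinges on how detectors represent rotated boxes, a minimal sketch of the common five-parameter representation (center, width, height, angle) may help. It converts a box to its four corner points and checks that rotation preserves area. The angle convention used here (radians, standard rotation matrix) and the function names are assumptions for illustration; RRPN, R3Det, CSL, GWD and other methods each define their own angle ranges and conventions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def obb_to_corners(cx: float, cy: float, w: float, h: float, theta: float) -> List[Point]:
    """Corners of a rotated box (cx, cy, w, h, theta), theta in radians.

    Uses the standard rotation matrix, i.e. counter-clockwise in a y-up frame
    (which appears clockwise on screen when the image y-axis points down).
    """
    c, s = math.cos(theta), math.sin(theta)
    half_w, half_h = w / 2.0, h / 2.0
    local = [(-half_w, -half_h), (half_w, -half_h), (half_w, half_h), (-half_w, half_h)]
    return [(cx + u * c - v * s, cy + u * s + v * c) for u, v in local]


def polygon_area(pts: List[Point]) -> float:
    """Shoelace formula; the absolute value makes it independent of vertex order."""
    acc = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        acc += x1 * y2 - x2 * y1
    return abs(acc) / 2.0


if __name__ == "__main__":
    axis_aligned = obb_to_corners(10.0, 5.0, 4.0, 2.0, 0.0)
    print(axis_aligned)  # [(8.0, 4.0), (12.0, 4.0), (12.0, 6.0), (8.0, 6.0)]
    rotated = obb_to_corners(10.0, 5.0, 4.0, 2.0, math.radians(30))
    print(polygon_area(rotated))  # approximately 8.0 (= w * h)
```

The same box can be described by several (w, h, theta) tuples (for example, swapping w and h and shifting theta by 90 degrees), which is the kind of ambiguity that the box-representation improvements mentioned above try to address.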

2. Comparison experiment on the HRSC-2016 dataset

HRSC-2016 contains a large number of ship targets with arbitrary orientations and significant scale differences. To evaluate the proposed method on this dataset, this section compares it with classic remote sensing object detectors from recent years; the quantitative results are shown in Table 7. With dynamic mixed convolution as the backbone, the proposed method achieves a mean average precision (mAP) of 90.3%, comparable to advanced remote sensing detectors such as ReDet and O-RCNN, while requiring only about 50% of their computational complexity. In addition, modifying the backbone of HAA-Net from the previous chapter with the proposed dynamic mixed convolution reduces its computational complexity by 27% while maintaining similar detection accuracy. As the visualization results in Figure 10 show, the proposed method still detects ship targets well under pose changes and scale variations.

3. Comparison with well-known lightweight detectors

To further compare the proposed method with well-known lightweight detectors, Table 8 reports mAP, parameter count (Params), and FLOPs on DOTA. The proposed method achieves an mAP of 79.37%, higher than YOLOv4-tiny (68.23%), EfficientDet-D0 (72.5%), and the GhostNet-based detector (75.1%). Meanwhile, its FLOPs (84 G) are lower than those of YOLOv4-tiny (116 G), EfficientDet-D0 (107 G), and the GhostNet-based detector (112 G), and its Params (23.0 M) are significantly lower than those of the three detectors (28.5 M, 31.2 M, and 29.8 M, respectively).
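The Table 8 comparison can be read as a multi-objective check: one detector dominates another if it is at least as good on every metric (higher mAP, lower FLOPs, lower Params) and strictly better on at least one. The sketch below encodes the DOTA figures quoted above; the class and helper names are hypothetical, and FPS is left out because it depends on the hardware.

```python
from typing import Dict, NamedTuple


class Result(NamedTuple):
    map_pct: float   # mAP on DOTA (%), higher is better
    gflops: float    # FLOPs (G), lower is better
    params_m: float  # parameters (M), lower is better


# Figures as reported in the text for Table 8.
RESULTS: Dict[str, Result] = {
    "proposed": Result(79.37, 84.0, 23.0),
    "YOLOv4-tiny": Result(68.23, 116.0, 28.5),
    "EfficientDet-D0": Result(72.5, 107.0, 31.2),
    "GhostNet-based": Result(75.1, 112.0, 29.8),
}


def dominates(a: Result, b: Result) -> bool:
    """True if a is no worse than b on every metric and strictly better on at least one."""
    no_worse = a.map_pct >= b.map_pct and a.gflops <= b.gflops and a.params_m <= b.params_m
    better = a.map_pct > b.map_pct or a.gflops < b.gflops or a.params_m < b.params_m
    return no_worse and better


if __name__ == "__main__":
    ours = RESULTS["proposed"]
    for name, other in RESULTS.items():
        if name == "proposed":
            continue
        saving = 1.0 - ours.gflops / other.gflops
        print(f"{name}: dominated={dominates(ours, other)}, FLOPs saving={saving:.1%}")
```

With the reported figures, the proposed model dominates all three baselines and needs roughly 21% to 28% fewer FLOPs.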

On a CPU (Intel Core i7-12700K) with an input size of 800 × 800 on DOTA, the proposed method runs at 18.2 FPS (Table 8), faster than YOLOv4-tiny (15.6), EfficientDet-D0 (12.3), and the GhostNet-based detector (14.8). On an edge device (NVIDIA Jetson Xavier NX), as shown in Table 9, the proposed method runs at 10.5 FPS, again faster than the three detectors (8.9, 7.2, and 9.1 FPS, respectively). These results indicate that the proposed method offers favorable real-time performance.
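FPS figures such as those in Tables 8 and 9 depend strongly on how timing is done, and the text does not state the protocol. The following is only a hedged sketch of a common approach: run a few untimed warm-up iterations, then time a fixed set of frames with a monotonic high-resolution clock. The `infer` callable is a hypothetical stand-in for whatever is measured (the forward pass and, if included, post-processing such as NMS). On GPUs and edge devices such as the Jetson, kernels run asynchronously, so `infer` must block until results are ready (in PyTorch, for example, by calling `torch.cuda.synchronize()`); otherwise the measured FPS will be inflated.

```python
import time
from typing import Any, Callable, Sequence


def measure_fps(infer: Callable[[Any], Any], frames: Sequence[Any], warmup: int = 5) -> float:
    """Frames per second of `infer` over `frames`, after `warmup` untimed calls.

    `infer` is a hypothetical stand-in for the detector; it must not return before
    the work is actually finished (synchronize first on asynchronous devices).
    """
    if not frames:
        raise ValueError("frames must be non-empty")
    for i in range(warmup):
        infer(frames[i % len(frames)])  # warm up caches, allocators, lazy initialization
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Dummy "model" that sleeps at least 10 ms per frame, so FPS is at most about 100.
    fps = measure_fps(lambda _: time.sleep(0.01), frames=list(range(20)), warmup=2)
    print(f"{fps:.1f} FPS")
```

Reporting the batch size, input resolution, numeric precision, and whether pre- and post-processing are included makes such numbers comparable across papers.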

5. Conclusions

The large volume of wide-field remote sensing imagery imposes a high computational burden on deep neural networks, making it difficult to meet the demand for accurate and fast detection in on-orbit applications. Therefore, this paper proposes a convolutional-structure lightweighting method that simplifies the convolutional network with diverse hybrid operators while preserving a high-quality, compact representation, significantly reducing computational complexity with nearly lossless accuracy. Specifically, dynamic mixed convolution (DM-Conv) is designed within each convolutional layer, where linear mappings replace standard convolution in generating redundant feature maps and the convolution operation is reorganized; a feature aggregation method is designed between convolutional layers to generate deep features by weighted mixing of features from different intermediate layers; in addition, a spatial orthogonal attention (SOA) mechanism captures dependencies between distant pixels in both the horizontal and vertical directions, enhancing the feature expression ability of the convolutional layers.
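The complexity saving from generating redundant feature maps with linear mappings can be estimated with simple multiply-accumulate (MAC) counts. The sketch below assumes a GhostNet-style split, used only as a stand-in because DM-Conv's exact design is defined elsewhere in the paper: a primary k×k convolution produces C_out/s channels, and d×d depthwise operations generate the remaining (s - 1)·C_out/s channels. With s = 2 the estimated cost is roughly halved, which is of the same order as the reduction reported for the modified ResNet and VGG backbones. All function names and layer sizes are hypothetical.

```python
def conv_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs of a standard k x k convolution producing an h x w x c_out output."""
    return h * w * c_out * c_in * k * k


def cheap_mapping_macs(h: int, w: int, c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """MACs of a GhostNet-style layer (an assumed stand-in for DM-Conv).

    A primary k x k conv yields c_out // s intrinsic maps; d x d depthwise
    "linear mappings" generate the other (s - 1) * c_out // s maps from them.
    """
    if c_out % s:
        raise ValueError("c_out must be divisible by s")
    intrinsic = c_out // s
    primary = conv_macs(h, w, c_in, intrinsic, k)
    cheap = h * w * (s - 1) * intrinsic * d * d  # one d x d depthwise filter per generated map
    return primary + cheap


if __name__ == "__main__":
    std = conv_macs(56, 56, 64, 64, 3)
    mixed = cheap_mapping_macs(56, 56, 64, 64, 3, s=2, d=3)
    print(std, mixed, round(std / mixed, 3))  # 115605504 58705920 1.969
```

The ratio approaches s whenever C_in·k² is much larger than d², which is why the cheap mappings add little overhead.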

However, the proposed method still has shortcomings in the following scenarios. First, in extremely small object detection, the linear mapping operation of DM-Conv may lose some fine-grained features, which can reduce the detection accuracy for such objects. Second, when processing ultra-high-resolution remote sensing images, the horizontal and vertical downsampling operations in SOA cause a small loss of spatial information and a moderate increase in computational overhead. Finally, the robustness of the proposed method in complex rain and cloud scenes has not been fully verified; future work will address this by incorporating meteorological correction data.