1. Introduction

In graph theory and network optimization, the concept of a minimum cut plays a fundamental role in quantifying network reliability [1], communication bottlenecks [2], and resource allocation constraints [3]. Given a network represented as a capacitated undirected graph, the classic minimum cut problem seeks a set of edges of minimum total capacity whose removal disconnects a designated pair of nodes, typically a source and a sink. This problem has been extensively studied and forms the basis of many important results, including the celebrated Max-Flow Min-Cut Theorem [3].

While traditional algorithms have concentrated on computing the minimum cut between a single source–sink pair [4,5,6,7], many emerging applications require a global view of network capacity. In particular, it is often important to identify the source node that can sustain the highest possible minimum cut to a fixed sink [8,9]. This leads to the Global Maximum Minimum Cut with Fixed Sink (GMMC-FS) problem: given a fixed sink node in a capacitated graph, find the source node whose minimum s–t cut is the largest among all possible sources.

Applications of this problem arise in a variety of real-world network scenarios. For example, consider a communication network in which multiple base stations transmit data to a central controller. Selecting the base station whose connection to the controller has the largest minimum cut maximizes the total link capacity that must fail before that station is cut off, which yields the most robust data delivery path under link failures. This idea naturally extends to other domains. In server provisioning and data center selection [10], the formulation helps determine which server can sustain the highest aggregate throughput toward a fixed sink, thereby improving overall network utilization. In resilient data dissemination [11], the approach helps identify a source node that maintains service continuity under multiple link disruptions. Finally, in load-aware routing, the model facilitates balanced traffic distribution by selecting sources with the strongest connectivity margins to the sink.

The GMMC-FS problem can be naturally expressed as a nested max–min optimization [12], which makes it both conceptually and computationally more demanding than classical minimum cut computations. A naive approach solves a separate minimum cut problem from every other node to the sink. For a graph with n nodes, this amounts to n − 1 max-flow computations, which becomes costly in large or dense graphs. Furthermore, unlike the global minimum cut [13], the GMMC-FS problem lacks known dedicated algorithms that avoid this exhaustive enumeration while still guaranteeing optimality.

In this paper, we propose a recursive reduction (RR) algorithm for solving the GMMC-FS problem with provable correctness and practical efficiency. The key idea is to iteratively select a pivot source, compute its minimum cut with respect to the fixed sink, and then prune all vertices that cannot possibly exceed the pivot in cut value. By applying this elimination process recursively, the algorithm shrinks the candidate set of sources until it is empty. At that point, the best pivot recorded is a maximizer. This design replaces exhaustive enumeration of all source nodes with a streamlined recursive search. Under the structural conditions analyzed in Section 4, it requires only $O(\log n)$ max-flow computations in expectation instead of n − 1.

Our main contributions are summarized as follows:

We formally define the Global Maximum Minimum Cut with Fixed Sink (GMMC-FS) problem, capturing a natural max–min connectivity formulation relevant to diverse applications.

We design RR, a recursive reduction algorithm that leverages pivot-based elimination to efficiently solve the GMMC-FS problem. RR guarantees correctness while drastically reducing the number of max-flow computations compared to naive enumeration.

We provide a rigorous theoretical analysis of RR, proving that it always identifies the optimal source and establishing both worst-case and expected complexity bounds.

We conduct extensive empirical evaluations across diverse graph topologies. Results demonstrate that RR consistently achieves optimal solutions with substantially reduced runtime relative to baseline methods, particularly on large and dense graphs.

The remainder of the paper is organized as follows. Section 2 formally defines the GMMC-FS problem and presents the necessary preliminaries. Section 3 introduces our RR algorithm. Section 4 provides a theoretical analysis, including correctness and complexity bounds. Section 5 presents experimental results against baselines under varying scenarios. Section 6 reviews related work, and Section 7 concludes the paper.

2. Problem Definition and Preliminaries

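We consider a capacitated undirected graph $G = (V, E)$, where V is the vertex set and E is the edge set, with $n = |V|$ nodes and $m = |E|$ edges. Each edge $e \in E$ has a non-negative capacity $c(e) \ge 0$. Let $t \in V$ be a fixed terminal node, referred to as the sink. For any node $s \in V \setminus \{t\}$, define

$$\lambda(s,t) = \min_{S \subseteq V:\; s \in S,\; t \notin S} c(\delta(S)),$$

where for any edge set $F \subseteq E$, $c(F) = \sum_{e \in F} c(e)$, and for any vertex subset $S \subseteq V$, $\delta(S) = \{\{u,v\} \in E : u \in S,\ v \notin S\}$. That is, $\lambda(s,t)$ is the minimum capacity of any cut that separates node s from the fixed sink t. The objective of the problem is to identify the source node

$$s^\star \in \arg\max_{s \in V \setminus \{t\}} \lambda(s,t), \qquad \lambda^\star = \lambda(s^\star, t).$$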
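To make the definition concrete, the following minimal Python sketch evaluates the minimum s–t cut value $\lambda(s,t)$ literally. It enumerates every vertex subset S with $s \in S$ and $t \notin S$, then takes the arg max over sources. The toy graph `EDGES`/`NODES` and all function names are hypothetical illustrations, not part of the paper. The enumeration is exponential in n, so it is only useful for checking tiny instances.

```python
from itertools import combinations

# Hypothetical toy instance: undirected edges (u, v, capacity); node 0 is the sink t.
EDGES = [(1, 0, 3), (2, 0, 2), (1, 2, 4), (3, 1, 1), (3, 2, 1), (4, 3, 5)]
NODES = [0, 1, 2, 3, 4]


def cut_capacity(edges, S):
    """c(delta(S)): total capacity of edges with exactly one endpoint in S."""
    return sum(c for u, v, c in edges if (u in S) != (v in S))


def min_cut_value(nodes, edges, s, t):
    """lambda(s, t) from the definition: min c(delta(S)) over S with s in S, t not in S."""
    others = [v for v in nodes if v not in (s, t)]
    best = float("inf")
    for k in range(len(others) + 1):
        for extra in combinations(others, k):
            best = min(best, cut_capacity(edges, {s, *extra}))
    return best


def gmmc_fs_bruteforce(nodes, edges, t):
    """Return (s_star, lambda_star); ties are broken by the order of `nodes`."""
    values = {s: min_cut_value(nodes, edges, s, t) for s in nodes if s != t}
    s_star = max(values, key=values.get)
    return s_star, values[s_star]


print(gmmc_fs_bruteforce(NODES, EDGES, t=0))  # (1, 5): nodes 1 and 2 tie at 5
```

In this toy graph, sources 3 and 4 only reach the rest of the graph through two unit-capacity edges, so their value is 2. Sources 1 and 2 are limited by the capacity around the sink itself.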

2.1. Relationship to Classical Min–Cut and Max–Flow

The GMMC-FS problem differs from the classical minimum cut problem, which seeks the smallest cut separating a single pair of nodes. In contrast, GMMC-FS is a max–min problem: it aims to identify the source node that has the highest possible minimum cut to a fixed sink. This makes the problem inherently more complex, as it involves evaluating multiple source candidates rather than a single cut.

By the max–flow min–cut theorem, $\lambda(s,t)$ equals the value of a maximum flow from s to t. Therefore, solving the GMMC-FS problem can also be interpreted as identifying the node s that achieves the maximum value of the minimum capacity s–t cut (or, equivalently, of the maximum flow).
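Because $\lambda(s,t)$ equals a maximum-flow value, any max-flow routine can serve as the oracle. The sketch below uses the Edmonds–Karp method (shortest augmenting paths found by BFS), one of the classical algorithms cited in this paper, on an undirected edge list. It treats each undirected edge as two opposite arcs of capacity c. It also returns the vertices reachable from s in the final residual graph, which form the source side of a minimum s–t cut. The function name and the toy graph are hypothetical.

```python
from collections import defaultdict, deque

# Hypothetical toy instance: undirected edges (u, v, capacity); node 0 is the sink t.
EDGES = [(1, 0, 3), (2, 0, 2), (1, 2, 4), (3, 1, 1), (3, 2, 1), (4, 3, 5)]


def max_flow_min_cut(edges, s, t):
    """Edmonds-Karp on an undirected graph.

    Returns (flow_value, S), where S is the set of vertices reachable from s in the
    final residual graph, i.e. the source side of a minimum s-t cut."""
    res = defaultdict(lambda: defaultdict(float))
    for u, v, c in edges:
        res[u][v] += c  # an undirected edge acts as two opposite arcs of capacity c
        res[v][u] += c
    flow = 0.0
    while True:
        # BFS for a shortest augmenting path in the residual graph.
        parent, queue = {s: None}, deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, r in res[u].items():
                if r > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            # No augmenting path left: the BFS-discovered vertices form S.
            return flow, set(parent)
        path, v = [], t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        bottleneck = min(res[u][v] for u, v in path)
        for u, v in path:
            res[u][v] -= bottleneck
            res[v][u] += bottleneck
        flow += bottleneck


value, side = max_flow_min_cut(EDGES, 1, 0)
print(value, sorted(side))  # 5.0 [1, 2, 3, 4]
value, side = max_flow_min_cut(EDGES, 3, 0)
print(value, sorted(side))  # 2.0 [3, 4]
```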

2.2. Computational Challenge

A naive approach to solving the GMMC-FS problem would involve computing the minimum s–t cut (or maximum flow) for every $s \in V \setminus \{t\}$. Each such computation requires invoking a flow oracle, whose running time we denote by $T_{\mathrm{flow}}(n, m)$. This running time depends on the algorithm used (e.g., Edmonds–Karp [4], Dinic [5], Push–Relabel [6], or Orlin's [7] algorithm), and the total cost of the naive approach is $(n-1)\,T_{\mathrm{flow}}(n,m)$. In dense graphs, where $m = \Theta(n^2)$, such computations for all nodes become very expensive.

Hence, a more efficient strategy is required to avoid exhaustively evaluating all possible source nodes, particularly for large-scale or dense networks. Our proposed approach addresses this challenge through recursive reduction: the cut computed for one source is used to discard other candidates. This narrows the search space while maintaining correctness guarantees.

2.3. Illustrative Example

To better illustrate the structure and objective of the GMMC-FS problem, we provide a concrete example.

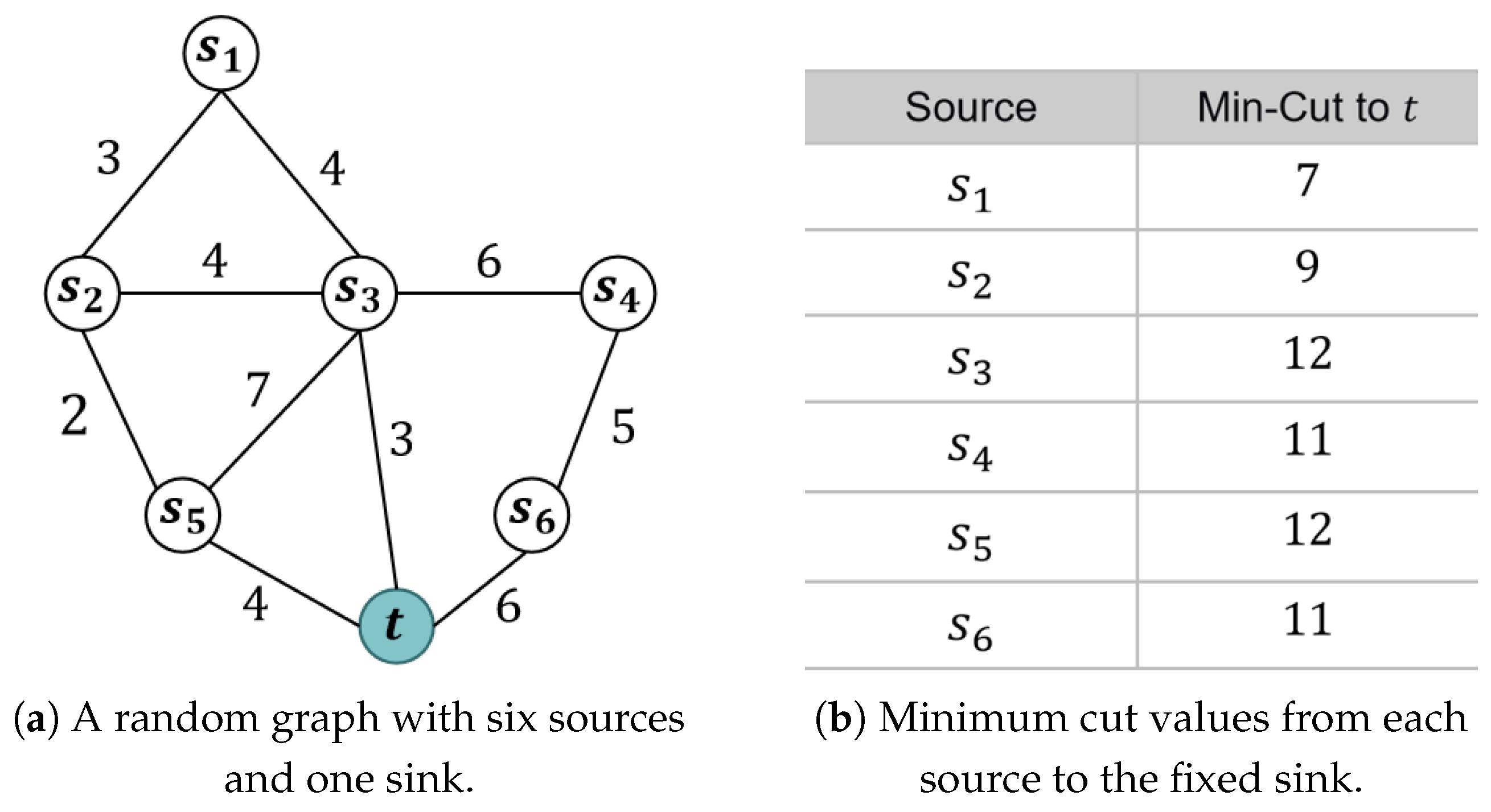

Figure 1a shows a small undirected graph consisting of six source nodes and one fixed sink node t. Each edge is labeled with its corresponding capacity, and all capacities are assumed to be non-negative integers. For each source node s, we compute the minimum s–t cut value, denoted $\lambda(s,t)$. These values represent the smallest total capacity of edges that must be removed to disconnect s from t. The results are summarized in Figure 1b.

This example highlights that different source nodes yield different minimum cut values to the sink. For instance, two of the source nodes attain the highest value, whereas another attains the smallest. The two nodes attaining the highest value are therefore both optimal source nodes in this instance. The example demonstrates two key aspects of the GMMC-FS problem. First, it illustrates the variability of connectivity strength between sources and the sink. Second, it shows that exhaustive evaluation already requires one minimum cut computation per source (six here), a cost that grows quickly with graph size and motivates more efficient, scalable algorithms.

3. Recursive Reduction Algorithm

We now present the proposed recursive reduction (RR) algorithm, designed to efficiently identify the source node with the largest minimum s–t cut value in a capacitated undirected graph $G = (V, E)$. A straightforward solution requires computing $n - 1$ max-flow problems against a fixed sink t. RR instead leverages the structural properties of s–t cuts to eliminate groups of nodes at once, substantially reducing the number of required computations.

3.1. Algorithm Overview

The recursive reduction algorithm builds on the simple yet powerful observation that once the minimum cut between a chosen pivot node r and the sink t is computed, all other nodes lying on the same side of the cut as r cannot have a strictly larger cut value than r itself. These nodes can therefore be safely discarded from further consideration. By iteratively selecting pivots, evaluating their minimum cuts, and pruning entire subsets of vertices at each step, the algorithm progressively narrows the candidate set of sources. The search thus shifts from evaluating all possible sources to a recursive elimination process, which substantially reduces the number of max-flow computations required in practice while still guaranteeing that the true maximizer is preserved.

3.2. Algorithm Description

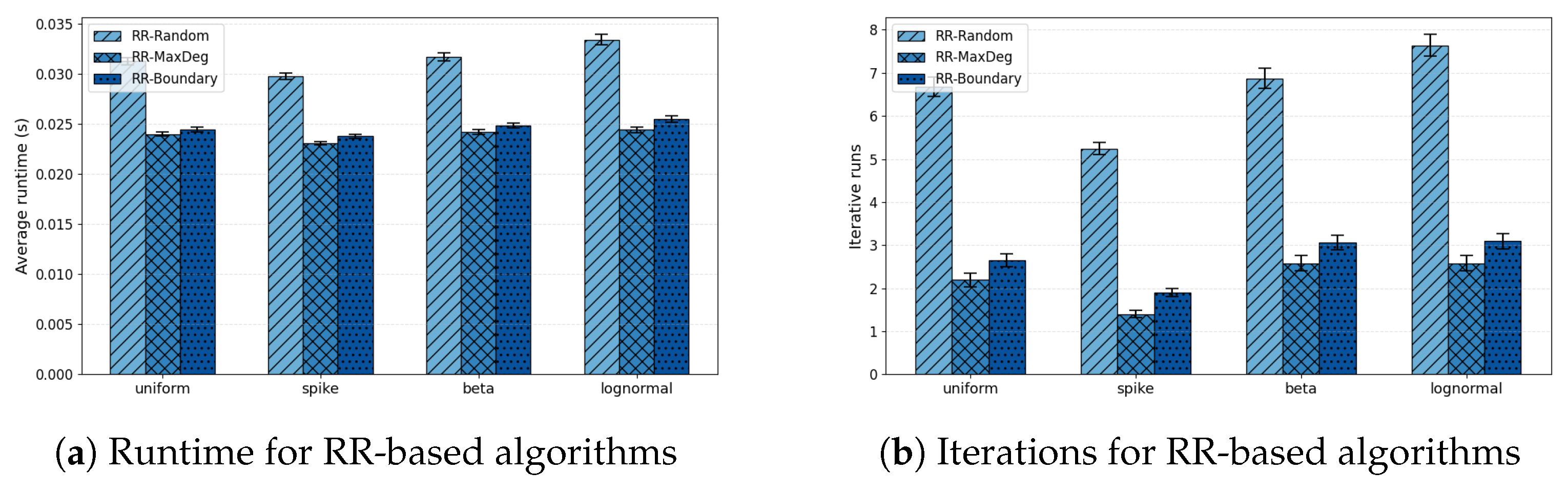

Let C denote the current candidate set of sources. The algorithm begins with all non-sink vertices, $C = V \setminus \{t\}$. It then iteratively selects one pivot $r \in C$ according to a prescribed strategy, such as:

- choosing the node of highest weighted degree (RR-MaxDeg),
- selecting randomly (RR-Random), or
- prioritizing boundary vertices revealed by previous cuts (RR-Boundary).

For the chosen pivot, the algorithm computes a minimum r–t cut $(S, V \setminus S)$ with $r \in S$ and $t \notin S$. The resulting cut value $\lambda(r,t) = c(\delta(S))$ is compared with the best solution found so far. If it improves upon the current record, both the maximum value and the corresponding source are updated. Next, the algorithm eliminates from C all nodes that lie on the same side of the cut as the pivot, i.e., $C \leftarrow C \setminus S$, since their cut values are upper bounded by the pivot's. The process then continues with the reduced candidate set. Once C becomes empty, the algorithm returns the source achieving the largest recorded cut value. The full procedure is summarized in Algorithm 1.

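| Algorithm 1 Recursive reduction (RR) algorithm |
| --- |
| Input: An undirected graph $G = (V, E)$ with capacities $c$ and a fixed sink t |
| Output: A source $s_{\mathrm{best}}$ maximizing the minimum s–t cut value |
| 1: Initialize candidate set $C \leftarrow V \setminus \{t\}$, best value $\lambda_{\mathrm{best}} \leftarrow -\infty$, and best source $s_{\mathrm{best}} \leftarrow \text{null}$. |
| 2: while $C \neq \varnothing$ do |
| 3: Select a pivot $r \in C$ according to a chosen strategy (e.g., random, maximum weighted degree, or boundary proximity). |
| 4: Compute the minimum r–t cut $(S, V \setminus S)$ with $r \in S$, $t \notin S$, and cut value $\lambda(r,t) = c(\delta(S))$. |
| 5: if $\lambda(r,t) > \lambda_{\mathrm{best}}$ then |
| 6: Update $\lambda_{\mathrm{best}} \leftarrow \lambda(r,t)$ and $s_{\mathrm{best}} \leftarrow r$. |
| 7: if … then |
| 8: … |
| 9: else |
| 10: … |
| 11: return $s_{\mathrm{best}}$ |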
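Algorithm 1 can be sketched in Python as follows. The sketch rests on two assumptions that the paper does not pin down. First, the minimum cut is obtained with Edmonds–Karp. Second, the pivot side S is taken to be the set of vertices reachable from r in the final residual graph, which is the smallest valid source side. The sketch does not attempt to reproduce the conditional in lines 7–10 of Algorithm 1. Instead, it directly removes every vertex on the pivot's side of the cut, as described in Section 3.2. Function names, the `strategy` parameter, and the toy graph are hypothetical. A call counter shows the saving over exhaustive enumeration.

```python
import random
from collections import defaultdict, deque

# Hypothetical toy instance: undirected edges (u, v, capacity); node 0 is the sink t.
EDGES = [(1, 0, 3), (2, 0, 2), (1, 2, 4), (3, 1, 1), (3, 2, 1), (4, 3, 5)]


def max_flow_min_cut(edges, s, t):
    """Edmonds-Karp; returns (value, S) with S the residual-reachable side of s."""
    res = defaultdict(lambda: defaultdict(float))
    for u, v, c in edges:
        res[u][v] += c  # undirected edge = two opposite arcs of capacity c
        res[v][u] += c
    flow = 0.0
    while True:
        parent, queue = {s: None}, deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, r in res[u].items():
                if r > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            return flow, set(parent)
        path, v = [], t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        bottleneck = min(res[u][v] for u, v in path)
        for u, v in path:
            res[u][v] -= bottleneck
            res[v][u] += bottleneck
        flow += bottleneck


def recursive_reduction(edges, t, strategy="maxdeg", seed=0):
    """Sketch of RR; returns (best_source, best_value, max_flow_calls)."""
    deg = defaultdict(float)
    for u, v, c in edges:
        deg[u] += c
        deg[v] += c
    candidates = set(deg) - {t}  # non-sink vertices that appear in the edge list
    rng = random.Random(seed)
    best_source, best_value, calls = None, float("-inf"), 0
    while candidates:
        pool = sorted(candidates)
        if strategy == "maxdeg":
            r = max(pool, key=lambda v: deg[v])  # RR-MaxDeg
        else:
            r = rng.choice(pool)                 # RR-Random
        value, side = max_flow_min_cut(edges, r, t)
        calls += 1
        if value > best_value:
            best_source, best_value = r, value
        # Safe elimination: every u in `side` has lambda(u, t) <= value, and r is
        # itself in `side`, so the candidate set strictly shrinks each round.
        candidates -= side
    return best_source, best_value, calls


def exhaustive(edges, t):
    """Baseline: one max-flow call per non-sink vertex."""
    nodes = {u for e in edges for u in e[:2]} - {t}
    values = {s: max_flow_min_cut(edges, s, t)[0] for s in sorted(nodes)}
    best = max(values, key=values.get)
    return best, values[best], len(values)


print(recursive_reduction(EDGES, t=0))  # (1, 5.0, 1)
print(exhaustive(EDGES, t=0))           # (1, 5.0, 4)
```

On this toy graph, the max-degree pivot's minimum cut happens to be the star cut around the sink. A single max-flow call therefore empties the candidate set, whereas enumeration needs four. Taking the largest valid source side instead (all vertices that cannot reach t in the residual graph) is also a minimum cut and could prune more per round.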

3.3. Illustrative Example

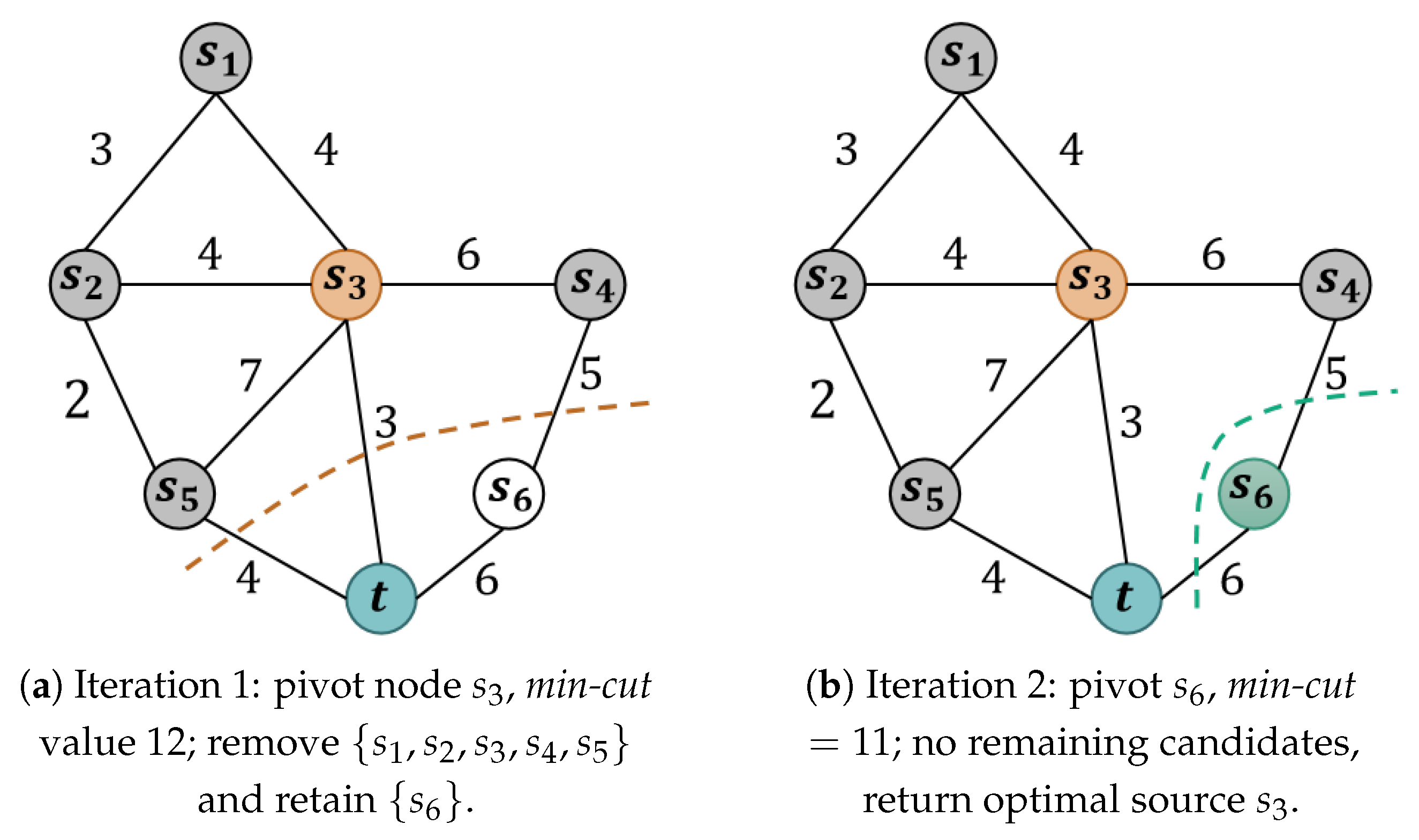

Figure 2, which adopts the same base graph as Figure 1, illustrates the recursive reduction process step by step. In the first iteration, the pivot node (colored orange) is selected according to the maximum weighted degree strategy. The corresponding minimum cut between the pivot and t is shown as orange dashed lines, with cut value 12. All vertices on the same side of the cut as the pivot are removed from the candidate set, since their cut values cannot exceed 12. A single candidate remains (colored green).

In the second iteration, this remaining node is the only candidate. Its minimum cut with t, shown as green dashed lines, has value 11, which is strictly smaller than the current best value 12. Therefore, no update is made to the best solution. As the candidate set is now empty, the recursion terminates and the algorithm returns the first pivot as the optimal source, achieving the global maximum minimum cut value of 12. In total, only two max-flow computations are performed, compared with six under exhaustive enumeration.

This example highlights the essence of the recursive reduction mechanism: by successively choosing pivots, evaluating their minimum cuts, and discarding dominated subsets, the candidate set shrinks rapidly while preserving correctness. In practice, this leads to substantial reductions in the number of max-flow computations compared to exhaustive enumeration.

3.4. Summary and Design Rationale

The recursive reduction (RR) algorithm is designed with two complementary goals: correctness and efficiency. Its correctness lies in the fact that each elimination step preserves the optimal source, a property formally established in Section 4.1. Its efficiency comes from recursive pruning: instead of exhaustively evaluating all $n - 1$ possible sources, the algorithm progressively discards large subsets of candidates and quickly narrows the search space. As shown in Section 4.2, under star-cut dominance or the balanced-cut condition the expected number of iterations is only $O(\log n)$. This yields a total expected running time of $O(T_{\mathrm{flow}}(n,m) \log n)$, where $T_{\mathrm{flow}}(n,m)$ is the running time of one max-flow computation. That is a significant improvement over the $(n-1)\,T_{\mathrm{flow}}(n,m)$ cost of enumeration. This combination of provable optimality and practical efficiency constitutes the central design rationale of RR, making it a robust and scalable alternative to exhaustive max-flow computations.

4. Theoretical Analysis

We now establish the theoretical foundations of the recursive reduction (RR) algorithm. Our analysis is divided into two parts: first, we prove the correctness of the algorithm, showing that it always identifies a source node attaining the maximum s–t minimum cut value. Second, we analyze its computational complexity, contrasting the worst-case behavior with the typical performance observed in random graph models.

4.1. Correctness Guarantee

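We now establish that Algorithm 1 always identifies a source node achieving the maximum s–t cut value. Recall that for any $s \in V \setminus \{t\}$, $\lambda(s,t) = \min_{S \subseteq V:\, s \in S,\, t \notin S} c(\delta(S))$. Let $\lambda^\star = \max_{s \in V \setminus \{t\}} \lambda(s,t)$ be attained by $s^\star$.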

4.1.1. Safe Elimination via Pivot Cuts

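At each iteration, the algorithm selects a pivot r and computes a minimum r–t cut $(S, V \setminus S)$ with $r \in S$, $t \notin S$, and value $\lambda(r,t) = c(\delta(S))$. Since every $u \in S$ is separated from t by the same cut, $\lambda(u,t) \le c(\delta(S)) = \lambda(r,t)$. There are two cases:

- If the true maximizer $s^\star$ lies in S, then $\lambda^\star = \lambda(s^\star,t) \le \lambda(r,t) \le \lambda^\star$. So $\lambda(r,t) = \lambda^\star$, and the optimum has already been attained by the pivot.
- Otherwise, $s^\star \notin S$, and $s^\star$ remains in the candidate set.

Hence discarding S is always safe.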
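The safe-elimination argument can also be checked mechanically on small instances. The hypothetical Python sketch below draws random graphs and computes exact values $\lambda(u,t)$ by brute-force enumeration of source sides. It then picks one minimum r–t cut for every possible pivot r and confirms that no vertex on the pivot side has a larger value than $\lambda(r,t)$. This is an empirical sanity check of the argument above, not a substitute for the proof. The graph size, edge probability, and capacity range are arbitrary choices.

```python
import random
from itertools import combinations


def cut_capacity(edges, S):
    """c(delta(S)) for an undirected edge list of (u, v, capacity)."""
    return sum(c for u, v, c in edges if (u in S) != (v in S))


def min_cut(nodes, edges, s, t):
    """Brute force: (lambda(s, t), S) for one minimizing source side S."""
    others = [v for v in nodes if v not in (s, t)]
    best_value, best_side = float("inf"), None
    for k in range(len(others) + 1):
        for extra in combinations(others, k):
            side = frozenset((s, *extra))
            value = cut_capacity(edges, side)
            if value < best_value:
                best_value, best_side = value, side
    return best_value, best_side


def safe_elimination_holds(n=6, trials=200, seed=1):
    """True if, in every sampled graph, lambda(u, t) <= lambda(r, t) for all u in S."""
    rng = random.Random(seed)
    nodes, t = list(range(n)), 0
    for _ in range(trials):
        edges = [(u, v, rng.randint(1, 9))
                 for u, v in combinations(nodes, 2) if rng.random() < 0.5]
        cuts = {s: min_cut(nodes, edges, s, t) for s in nodes if s != t}
        for r, (value, side) in cuts.items():
            # side never contains t, so every u in side has an entry in cuts
            if any(cuts[u][0] > value for u in side):
                return False
    return True


print(safe_elimination_holds())  # True
```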

4.1.2. Invariant and Termination

This argument yields a simple invariant: after every iteration, either the optimum value $\lambda^\star$ has already been recorded, or the true maximizer $s^\star$ remains in the candidate set. Since the pivot always lies on its own side S, the candidate set shrinks strictly in each round, and the algorithm halts in at most $n - 1$ iterations.

4.1.3. Main Theorem

Theorem 1 (Correctness of RR).

Upon termination, Algorithm 1 returns a source s with $\lambda(s,t) = \lambda^\star = \max_{v \in V \setminus \{t\}} \lambda(v,t)$.

Proof sketch. By the invariant, the optimum is never lost. When the candidate set becomes empty, the maximizer can no longer be among the candidates, so the recorded best value must equal $\lambda^\star$, and the corresponding source is returned. Formal details are deferred to Appendix A. □

4.2. Complexity Analysis

We analyze the iteration complexity of the RR framework. In each round of RR, a pivot vertex r is selected from the current candidate set $C_i$, and the minimum r–t cut is computed to eliminate vertices. Since every iteration removes at least one candidate, RR halts after at most $n - 1$ iterations in the worst case. This bound is tight, but it arises only in highly adversarial constructions and is rarely encountered in practice. We therefore focus on two more structured regimes where substantially faster convergence can be proved: (i) graphs where star cuts dominate the minimum cut structure, and (ii) graphs where every minimum cut is balanced. Finally, we present a unified upper bound that combines the two cases.

4.2.1. Star-Cut-Dominated Graphs

We first consider the regime where the minimum r–t cut typically isolates only the pivot vertex. Formally, for a vertex $v \in V$, we call the cut $\delta(\{v\})$ a star cut. In sparse random graphs, such star cuts dominate the cut structure with high probability.
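The following hypothetical Python sketch illustrates the definition on a toy graph. Because both $\delta(\{r\})$ and $\delta(\{t\})$ are themselves r–t cuts, $\lambda(r,t) \le \min\{c(\delta(\{r\})), c(\delta(\{t\}))\}$ always holds. The helper reports which kind of cut attains a minimum r–t cut: the pivot's star cut, the sink's star cut, or only a non-star cut. Values are computed by brute-force enumeration, so the sketch suits only tiny graphs. The graph and function names are illustrative assumptions.

```python
from itertools import combinations

# Hypothetical toy instance: undirected edges (u, v, capacity); node 0 is the sink t.
EDGES = [(1, 0, 3), (2, 0, 2), (1, 2, 4), (3, 1, 1), (3, 2, 1), (4, 3, 5)]
NODES = [0, 1, 2, 3, 4]


def cut_capacity(edges, S):
    """c(delta(S)): total capacity of edges with exactly one endpoint in S."""
    return sum(c for u, v, c in edges if (u in S) != (v in S))


def min_cut_value(nodes, edges, s, t):
    """lambda(s, t) by enumerating every source side S (s in S, t not in S)."""
    others = [v for v in nodes if v not in (s, t)]
    return min(cut_capacity(edges, {s, *extra})
               for k in range(len(others) + 1)
               for extra in combinations(others, k))


def classify_min_cut(nodes, edges, r, t):
    """Report which kind of cut attains lambda(r, t)."""
    lam = min_cut_value(nodes, edges, r, t)
    star_r = cut_capacity(edges, {r})  # pivot star cut delta({r})
    star_t = cut_capacity(edges, {t})  # sink star cut delta({t}) = delta(V minus {t})
    assert lam <= min(star_r, star_t)  # sanity check: both star cuts are r-t cuts
    if lam == star_r:
        return "pivot star"
    if lam == star_t:
        return "sink star"
    return "non-star"


for r in NODES[1:]:
    print(r, classify_min_cut(NODES, EDGES, r, t=0))
# 1 sink star / 2 sink star / 3 non-star / 4 non-star
```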

Assumption 1 (Erdős–Rényi random graph with i.i.d. capacities).

Let $G \sim \mathcal{G}(n, p)$ with $p \ge c \log n / n$ for some absolute constant $c > 0$. Each potential edge e independently exists with probability p. Edge capacities are i.i.d. and independent of the topology, with mean $\mu > 0$, variance $\sigma^2$, and finite third absolute moment.

Theorem 2 (Logarithmic iterations under star-cut dominance).

Under the above assumption, with high probability the minimum r–t cut is a star cut for every pivot r. Consequently, the RR algorithm terminates in $O(\log n)$ iterations in expectation.

Proof sketch. For a vertex r, write $d(r) = c(\delta(\{r\}))$ for the capacity of its star cut $\delta(\{r\})$. Under i.i.d. edge capacities with mean μ and light tails (e.g., bounded or sub-Gaussian), $d(r)$ concentrates around its expectation $(n-1)p\mu$ with high probability. Moreover, with high probability any non-star r–t cut has a capacity larger than the star cut by a constant factor; hence, star cuts dominate. Because pivots are symmetric and chosen uniformly at random from the candidate set, each iteration removes a uniformly random candidate. The candidate set therefore shrinks geometrically in expectation, which yields an $O(\log n)$ expected number of iterations. □

4.2.2. Non-Star Balanced-Cut Graphs

We next consider graphs where star cuts are not dominant, but balanced cuts occur with non-negligible probability. Intuitively, as long as each iteration has a constant probability of producing a cut that eliminates a constant fraction of the candidate set, the algorithm still converges in logarithmically many rounds in expectation.

Assumption 2 (Balanced-cut condition).

There exist constants $\alpha, \beta \in (0, 1]$ such that for any iteration i and candidate set $C_i$, the pivot cut eliminates at least an α-fraction of $C_i$ with probability at least β.

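Lemma 1 (Geometric shrinkage in expectation).

Under Assumption 2, for every iteration i, $\mathbb{E}\big[|C_{i+1}| \,\big|\, C_i\big] \le (1 - \alpha\beta)\,|C_i|$.

Proof. If the balanced-cut event occurs (probability $p_i \ge \beta$), then at least $\alpha |C_i|$ candidates are eliminated, yielding $|C_{i+1}| \le (1-\alpha)|C_i|$. Otherwise, fewer may be eliminated, but still $|C_{i+1}| \le |C_i|$. Taking expectation over the two cases gives

$$\mathbb{E}\big[|C_{i+1}| \,\big|\, C_i\big] \le p_i (1-\alpha)|C_i| + (1-p_i)|C_i| = (1 - \alpha p_i)|C_i| \le (1-\alpha\beta)|C_i|. \qquad \square$$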

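Theorem 3 (Expected $O(\log n)$ iterations).

Under Assumption 2, RR terminates in $O(\log n)$ iterations in expectation.

Proof. By iterating Lemma 1, $\mathbb{E}[|C_i|] \le (1-\alpha\beta)^i (n-1)$. Since $R > i$ exactly when $|C_i| \ge 1$, Markov's inequality gives $\Pr[R > i] \le \min\{1, (1-\alpha\beta)^i (n-1)\}$. Hence the expected number of iterations R until termination satisfies

$$\mathbb{E}[R] = \sum_{i \ge 0} \Pr[R > i] \le \sum_{i \ge 0} \min\{1, (1-\alpha\beta)^i (n-1)\} \le \frac{\ln(n-1)}{\alpha\beta} + \frac{1}{\alpha\beta} + 1.$$

Thus, under the balanced-cut condition, RR terminates in expected $O(\log n)$ iterations. The overall expected running time is therefore

$$O\big(T_{\mathrm{flow}}(n,m) \cdot \log n\big),$$

where $T_{\mathrm{flow}}(n,m)$ denotes the complexity of a maximum flow computation.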
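The bound can be sanity-checked with a small Monte Carlo simulation of an abstract candidate-set process that satisfies Assumption 2. With probability β, a round removes $\lceil \alpha |C_i| \rceil$ candidates; otherwise, it removes only the pivot, which is the minimum any RR round removes. This hypothetical model ignores the graph entirely. It only illustrates that the average number of rounds stays below $\ln(n-1)/(\alpha\beta) + 1/(\alpha\beta) + 1$, where `n_candidates` plays the role of $n - 1$.

```python
import math
import random


def simulate_iterations(n_candidates, alpha, beta, trials=2000, seed=0):
    """Mean number of rounds until the candidate set is empty, in an abstract
    model of Assumption 2: with probability beta a round removes
    ceil(alpha * |C|) candidates; otherwise it removes only the pivot."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        size, rounds = n_candidates, 0
        while size > 0:
            if rng.random() < beta:
                size -= math.ceil(alpha * size)  # balanced round
            else:
                size -= 1                        # pivot-only round
            rounds += 1
        total += rounds
    return total / trials


def iteration_bound(n_candidates, alpha, beta):
    """ln(n - 1)/(alpha*beta) + 1/(alpha*beta) + 1, with n_candidates = n - 1."""
    ab = alpha * beta
    return math.log(n_candidates) / ab + 1 / ab + 1


print(simulate_iterations(1000, 0.5, 0.5), iteration_bound(1000, 0.5, 0.5))
```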

4.2.3. Complementary Upper Bound

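Theorem 4 (Unified pathwise bound).

Let $R_\star$ denote the realized number of iterations in which the minimum cut is a star cut. Assume the remaining iterations satisfy the α-balanced condition, i.e., each eliminates at least an α-fraction of the current candidate set. Then for every run, the total number of iterations R satisfies

$$R \le R_\star + 1 + \frac{\ln(n-1)}{\alpha}.$$

Proof sketch. Each star-cut iteration eliminates one candidate, while each α-balanced iteration shrinks the candidate set by a factor of at most $1-\alpha$. A balanced iteration can occur only while $(1-\alpha)^{k}(n-1) \ge 1$, where k is the number of earlier balanced iterations. Hence at most $1 + \ln(n-1)/\alpha$ balanced iterations occur, and adding the $R_\star$ star-cut iterations yields the bound. □

In addition, if one introduces a probabilistic model of star-cut occurrence, taking expectations gives

$$\mathbb{E}[R] \le \mathbb{E}[R_\star] + 1 + \frac{\ln(n-1)}{\alpha}.$$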

If further each iteration produces a star cut with probability at least

, then

so that

This shows that frequent star cuts (

constant) keep the expected number of iterations logarithmic, while rare star cuts (

) lead to near-linear behavior.
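To make the pathwise bound tangible, the hypothetical sketch below generates runs that mix two kinds of rounds in arbitrary proportions:

- star-cut rounds, which remove exactly the pivot, and
- α-balanced rounds, which remove $\lceil \alpha |C| \rceil$ candidates.

Every run is checked against $R \le R_\star + 1 + \ln(n-1)/\alpha$. This explicit form follows from counting how many balanced rounds can occur before the candidate set empties. The per-run mixing probability `star_prob` is an arbitrary simulation knob, not a quantity from the analysis.

```python
import math
import random


def run_once(n_candidates, alpha, star_prob, rng):
    """One run of an abstract RR process; returns (R, R_star)."""
    size, rounds, star_rounds = n_candidates, 0, 0
    while size > 0:
        if rng.random() < star_prob:
            size -= 1                        # star-cut round: only the pivot leaves
            star_rounds += 1
        else:
            size -= math.ceil(alpha * size)  # alpha-balanced round
        rounds += 1
    return rounds, star_rounds


def pathwise_bound_holds(n_candidates, alpha, runs=300, seed=0):
    """Check R <= R_star + 1 + ln(n - 1)/alpha on every simulated run."""
    rng = random.Random(seed)
    for _ in range(runs):
        star_prob = rng.random()             # a different star/balanced mix per run
        rounds, star_rounds = run_once(n_candidates, alpha, star_prob, rng)
        if rounds > star_rounds + 1 + math.log(n_candidates) / alpha:
            return False
    return True


print(pathwise_bound_holds(2000, alpha=0.25))  # True
```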

4.2.4. Summary

The iteration complexity of RR depends on the structural properties of the underlying graph; detailed proofs are provided in Appendix B. Table 1 summarizes the main regimes. In worst-case adversarial constructions, graphs may require $n - 1$ iterations, while star-cut-dominated or α-balanced graphs admit $O(\log n)$ convergence in expectation. Classical random graph families naturally align with these categories:

- Erdős–Rényi (ER) graphs typically fall in the star-cut-dominated regime due to homogeneous degrees and weak clustering.
- Random regular graphs (RRG) and random geometric graphs (RGG) exhibit balanced partitions thanks to uniform connectivity and symmetry.
- Watts–Strogatz (WS), Barabási–Albert (BA), and stochastic block model (SBM) graphs display mixed structures combining hubs, clustering, or community boundaries.

4.2.5. Discussion

The iteration complexity of RR is highly sensitive to the cut structure of the graph. Star-cut dominance and balanced cuts both lead to logarithmic performance, while the absence of such structure results in linear behavior.

A key algorithmic factor is the rule for selecting the pivot vertex r. Three natural strategies illustrate how pivoting interacts with graph structure:

Uniform random selection. In symmetric settings such as Erdős–Rényi graphs, uniformly random pivots exploit star-cut dominance, leading to the $O(\log n)$ expected bound.

Boundary-based selection. In graphs with balanced cuts, choosing pivots near the cut boundary increases the likelihood of large reductions in the candidate set, consistent with the α-balanced cut guarantee.

Maximum-degree selection. Choosing the vertex with the highest weighted degree leverages structural heterogeneity, particularly in hub-dominated or scale-free graphs. This strategy significantly raises the chance of isolating a t-star cut, i.e., the star cut $\delta(\{t\})$ around the sink. The pivot side of that cut is $V \setminus \{t\}$, so it eliminates every remaining candidate at once. Both the theoretical analysis and the empirical results show this to yield the fastest convergence.

While poor pivot choices can in principle realize the pessimistic $n - 1$ bound, such cases are rare in practice unless pivoting is adversarial. Overall, the pivot selection rule serves as the practical bridge between the structural regimes identified in theory and the observed efficiency of RR across diverse graph families.
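The three pivot rules can be sketched as interchangeable selection functions. Random and maximum-weighted-degree selection follow the descriptions above directly. The boundary rule is not specified in detail, so `pick_boundary` encodes one hypothetical reading: prefer remaining candidates adjacent to the pivot side S of the previous cut, and fall back to maximum degree if there are none. All names and the toy graph are illustrative.

```python
import random
from collections import defaultdict

# Hypothetical toy instance: undirected edges (u, v, capacity); node 0 is the sink t.
EDGES = [(1, 0, 3), (2, 0, 2), (1, 2, 4), (3, 1, 1), (3, 2, 1), (4, 3, 5)]


def weighted_degree(edges):
    """Weighted degree d(v) = c(delta({v})) for every vertex in the edge list."""
    deg = defaultdict(float)
    for u, v, c in edges:
        deg[u] += c
        deg[v] += c
    return deg


def pick_random(candidates, rng):
    """RR-Random: uniform choice (sorted first so a fixed seed is reproducible)."""
    return rng.choice(sorted(candidates))


def pick_max_degree(candidates, deg):
    """RR-MaxDeg: highest weighted degree, ties broken by the smaller label."""
    return min(candidates, key=lambda v: (-deg[v], v))


def pick_boundary(candidates, edges, last_side, deg):
    """Hypothetical RR-Boundary: prefer candidates adjacent to the previous pivot side."""
    frontier = set()
    for u, v, _ in edges:
        if u in last_side and v in candidates:
            frontier.add(v)
        if v in last_side and u in candidates:
            frontier.add(u)
    return pick_max_degree(frontier or candidates, deg)


deg = weighted_degree(EDGES)
print(pick_max_degree({1, 2, 3, 4}, deg))         # 1 (weighted degree 8)
print(pick_boundary({1, 2}, EDGES, {3, 4}, deg))  # 1
print(pick_random({1, 2, 3, 4}, random.Random(0)) in {1, 2, 3, 4})  # True
```

Any of these functions can replace the pivot choice inside an RR loop. The candidate-elimination step is unchanged, so correctness does not depend on which rule is used; only the number of iterations does.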

6. Related Work

The study of network connectivity optimization has its roots in the classical maximum flow and minimum cut problems. Foundational deterministic algorithms such as Edmonds–Karp [4] and Dinic [5], together with later improvements like Push–Relabel [6] and Orlin's algorithm [7], provide efficient polynomial-time solutions for computing a single source–sink minimum cut. However, applying these algorithms to all possible source nodes relative to a fixed sink, as required in the GMMC-FS problem, incurs $n - 1$ flow computations. For dense graphs with $m = \Theta(n^2)$, this approach quickly becomes prohibitively expensive.

Randomized contraction algorithms, pioneered by Karger [13] and refined in the Karger–Stein algorithm [8], shifted attention to the global minimum cut problem. These methods achieve near-optimal runtimes in unweighted graphs with high probability and have been extended through sparsification [14], dynamic contraction thresholds [15], and parallel implementations [16]. While highly successful for global connectivity, contraction-based methods do not directly address the fixed-sink max–min formulation, where the objective is to maximize the minimum cut over all sources to a designated sink.

For multi-terminal and all-pairs cut problems, the Gomory–Hu tree [2] offers a compact representation of all pairwise minimum cuts using only $n - 1$ flow computations. Subsequent work has developed faster variants [9,17], as well as extensions to hypergraphs [18] and dynamic settings [19]. Despite their generality, Gomory–Hu-based approaches still require $n - 1$ flow computations, which are unnecessarily expensive when only cuts relative to a fixed sink are needed, as in GMMC-FS.

Beyond theory, practical algorithm engineering has produced solvers optimized for large-scale graphs. For example, VieCut [20] integrates inexact preprocessing heuristics with exact cut routines to achieve significant speedups in practice. In distributed and parallel computation models, particularly the Congested Clique and MPC settings, researchers have developed near-optimal min-cut algorithms with sublinear round complexity [15,19]. Streaming algorithms have also been proposed for processing massive graphs under memory constraints [21,22]. These paradigms demonstrate strong scalability for general min-cut computations, but their overhead remains substantial for dense networks and fixed-sink formulations.

In networking applications, min-cut-based metrics have been widely adopted in traffic engineering [11], resilient server placement, and fault-tolerant multicast design. The max–min connectivity objective studied in robust network design [12,23] is conceptually related to GMMC-FS, as both focus on maximizing bottleneck connectivity to a sink or terminal. Existing approaches, however, either perform a sequence of full max-flow computations or rely on linear programming relaxations, both of which struggle to scale in high-density topologies.

In contrast to these prior approaches, the GMMC-FS problem, which asks for the source node with the largest minimum cut to a fixed sink, has remained underexplored. Existing deterministic and randomized algorithms either solve the problem indirectly via exhaustive enumeration or rely on structures designed for global or all-pairs settings. Our work fills this gap by introducing the recursive reduction (RR) algorithm, which leverages pivot-based elimination and recursive pruning to reduce the candidate set aggressively. Rather than computing all $n - 1$ flows, RR guarantees correctness while requiring only $O(\log n)$ iterations in expectation under the star-cut and balanced-cut conditions, as established in Section 4.1 and Section 4.2. This combination of provable optimality and practical scalability distinguishes RR from contraction-based min-cut algorithms and Gomory–Hu tree methods, offering the first exact and efficient solution tailored to the fixed-sink max–min cut problem.

7. Conclusions

This paper addressed the Global Maximum Minimum Cut with Fixed Sink (GMMC-FS) problem, which seeks the source node with the largest minimum cut to a designated sink in a capacitated undirected graph. To solve this problem efficiently, we proposed the recursive reduction (RR) algorithm, which applies a pivot-based elimination strategy to recursively prune dominated candidates while ensuring exact correctness. We provided a rigorous theoretical analysis establishing correctness and a logarithmic expected number of iterations under star-cut or balanced-cut structure. We also demonstrated through extensive experiments on synthetic and real-world graphs that RR consistently achieves the optimal solution with substantially lower runtime than classical enumeration. The results confirm that recursive pruning is highly effective in reducing the search space and that RR scales robustly across graph sizes, densities, and structures.

The proposed RR framework establishes a foundation for efficient max–min source selection in undirected static networks. A promising direction for future work is to extend RR to more general settings, particularly to directed or weighted dynamic graphs, where edge capacities or directions evolve over time. These extensions would enable the framework to handle asymmetric communication networks and time-varying topologies. Another promising direction is to integrate RR with approximation or learning-based strategies to further reduce computational costs on large-scale systems. Such developments could broaden the applicability of RR in dynamic and data-driven network optimization scenarios.