Contextual Object Grouping (COG): A Specialized Framework for Dynamic Symbol Interpretation in Technical Security Diagrams

Abstract

1. Introduction

1.1. Problem Motivation: The Challenge of Symbol Standardization in Security Diagrams

1.2. Limitations of Traditional Approaches and the Nuance of Modern Contextual Models

1. First, detecting individual objects (symbols, labels, geometric elements);

2. Then, applying post-processing heuristics to infer relationships and establish meaning.
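For illustration, step 2 of such a pipeline is often a hand-tuned proximity rule. The sketch below is our own example, not a method from this paper or from any cited system. It pairs each detected symbol with the nearest label box to its right on roughly the same baseline. The `Box` type, `pair_nearest_right`, and the pixel tolerances `max_dy` and `max_dx` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Box:
    """Axis-aligned box: (x1, y1) top-left, (x2, y2) bottom-right, in pixels."""
    name: str
    x1: float
    y1: float
    x2: float
    y2: float

    @property
    def cy(self) -> float:
        return (self.y1 + self.y2) / 2


def pair_nearest_right(symbols: List[Box], labels: List[Box],
                       max_dy: float = 10.0, max_dx: float = 200.0) -> Dict[str, Optional[str]]:
    """Hand-tuned heuristic: pair each symbol with the closest label that starts to its
    right (within max_dx px) and whose vertical center is within max_dy px."""
    pairs: Dict[str, Optional[str]] = {}
    for s in symbols:
        best: Optional[Box] = None
        best_dx = float("inf")
        for lab in labels:
            dx = lab.x1 - s.x2  # horizontal gap from symbol's right edge to label's left edge
            if 0 <= dx <= max_dx and abs(lab.cy - s.cy) <= max_dy and dx < best_dx:
                best, best_dx = lab, dx
        pairs[s.name] = best.name if best else None
    return pairs
```

A legend that places labels to the left of symbols, or wraps a label over two lines, silently breaks such a rule; this is the kind of brittleness listed below.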

- Symbol Ambiguity: The same visual symbol may represent completely different security devices across different design standards and individual preferences.

- Brittle Post-Processing: Rule-based heuristics for connecting symbols to their meanings are domain-specific, difficult to generalize, and prone to failure when encountering new design styles.

- Context Separation: Traditional pipelines separate perception (object detection) from interpretation (relationship inference), creating a semantic gap that is difficult to bridge reliably.

- Scalability Issues: Each new design style or symbol convention requires manual rule engineering, making the system difficult to scale across diverse security diagram formats.

- Reliance on Pre-defined Vocabularies/Large-Scale Knowledge: YOLO-World [8] and Florence-2 [13], while “open-vocabulary,” infer meaning from broad pre-trained knowledge. They are adept at recognizing objects that are well represented in their training data or can be inferred from an existing vocabulary. In contrast, technical diagrams often use highly abstract or non-standard symbols whose meaning is defined exclusively by the diagram’s accompanying legend. A “red circle” can mean anything, and its meaning cannot be guessed from general world knowledge.

- Post Hoc Interpretation of Specific Symbols: For symbols unique to a specific diagram, these advanced models would still typically detect the visual primitive (e.g., “circle,” “arrow”) and then require a subsequent, separate process to link this primitive to its specific, dynamic meaning as defined in the legend. This reintroduces the semantic gap that COG aims to eliminate. The interpretation of the legend itself—identifying symbol–label pairs as functional units—appears not to be a first-class objective for these general-purpose models.

- Lack of Explicit “Legend-as-Grounding” Mechanism: While vision–language models can process text, they do not inherently possess a mechanism to treat a specific section of an image (the legend) as the definitive, dynamic ground truth for interpreting other visual elements within that same document. COG, by contrast, elevates the legend’s symbol–label pairing into a first-class detectable contextual object (Row_Leg). This allows the model to learn the compositional grammar of the diagram directly from the legend, making the interpretation process intrinsically linked to the diagram’s self-defining context.

1.3. The COG Solution: Context as Perception

1.4. Contributions

- Specific Ontological Framework: We introduce the concept of contextual COG classes as a new intermediate ontological level between atomic object detection and high-level semantic interpretation, supported by comprehensive evaluation using established metrics [17].

- Dynamic Symbol Interpretation: We demonstrate how COG enables automatic adaptation to different symbol conventions through legend-based learning, eliminating the need for symbol standardization in security diagram analysis.

- Hierarchical Semantic Structure: We show how COG can construct multi-level semantic hierarchies (Legend → COG(Row_Leg) → Symbol + Label) that capture the compositional nature of technical diagrams.

- Practical Application Framework: We demonstrate the application of COG to real-world security assessment tasks, showing how detected elements can be integrated with technical databases for comprehensive asset analysis in intelligent sensing environments [19].

2. Related Work

2.1. Object Detection Evolution: From Classical to Modern Approaches

2.2. Semantic Segmentation and Structured Understanding

2.3. Visual Relationship Detection (VRD)

2.4. Scene Graph Generation (SGG)

2.5. Document Layout Analysis (DLA) and Technical Drawing Understanding

2.6. Cyber-Physical Security Systems (CPPS) and Intelligent Sensing

2.7. Key Distinctions: COG vs. Existing Contextual Approaches

2.8. Contextual Understanding in Technical Diagram Analysis

2.9. Comparative Analysis: COG vs. Existing Methods

3. The COG Framework

3.1. Philosophical Foundations: COG as Visual Language Compositionality

3.2. Formal Definition and Notation Conventions

3.3. COG vs. Traditional Ontological Hierarchies

- Perceptual vs. Conceptual: Ontological inheritance establishes abstract, logical relations among concepts, typically defined by is-a or has-a relationships. COG defines groupings as spatially and functionally grounded visual constructs, emerging directly from the image and learned as detection targets.

- Dynamic vs. Static: While class inheritance imposes a static structural taxonomy, COG encodes structure dynamically through detection patterns, enabling models to generalize beyond fixed conceptual trees.

- Data-Driven vs. Manual: Classical ontologies are manually curated by experts following formal ontology principles as described by Guarino [40] and Smith [41]. COG constructs are learned from visual co-occurrence patterns in training data, offering dynamic, context-grounded structures rather than static taxonomic hierarchies.

4. Implementation and Methodology

4.1. Dataset and Annotation Strategy

- Symbol: Individual graphical elements representing security devices;

- Label: Text descriptions corresponding to symbols;

- Row_Leg: Composite units encompassing symbol–label pairs within legend rows;

- L_title: Legend titles and headers;

- Column_S: Column of Symbols;

- Column_L: Column of Labels;

- Legend: Complete legend structures containing multiple rows.
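Assuming the annotations are trained with Ultralytics YOLOv8 (Section 4.2), the class list above could be encoded as a dataset file and as per-box label lines in the standard YOLO text format (class index followed by normalized center x, center y, width, height). The class-index order, the folder layout, and the helper names `dataset_yaml` and `to_yolo_line` are our assumptions; the paper does not publish its annotation files.

```python
# Seven COG annotation classes from Section 4.1.
# ASSUMPTION: the index order below is illustrative; the paper does not state it.
COG_CLASSES = ["Symbol", "Label", "Row_Leg", "L_title", "Column_S", "Column_L", "Legend"]


def dataset_yaml(root: str) -> str:
    """Return an Ultralytics-style dataset YAML (path/train/val/names) as text."""
    lines = [
        f"path: {root}",
        "train: images/train",  # hypothetical split folders
        "val: images/val",
        "names:",
    ]
    lines += [f"  {idx}: {name}" for idx, name in enumerate(COG_CLASSES)]
    return "\n".join(lines) + "\n"


def to_yolo_line(cls_name: str, x1: float, y1: float, x2: float, y2: float,
                 img_w: int, img_h: int) -> str:
    """Convert a pixel-space box (top-left, bottom-right) to one YOLO label line.

    A Row_Leg box encompasses its Symbol and Label boxes; all three are written
    as separate lines of the same label file.
    """
    cid = COG_CLASSES.index(cls_name)
    xc = (x1 + x2) / 2 / img_w
    yc = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{cid} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"
```

For example, a Row_Leg box spanning the top-left quarter-width, quarter-height region of an 832 × 832 image, `to_yolo_line("Row_Leg", 0, 0, 416, 208, 832, 832)`, yields `"2 0.250000 0.125000 0.500000 0.250000"` under the assumed class order.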

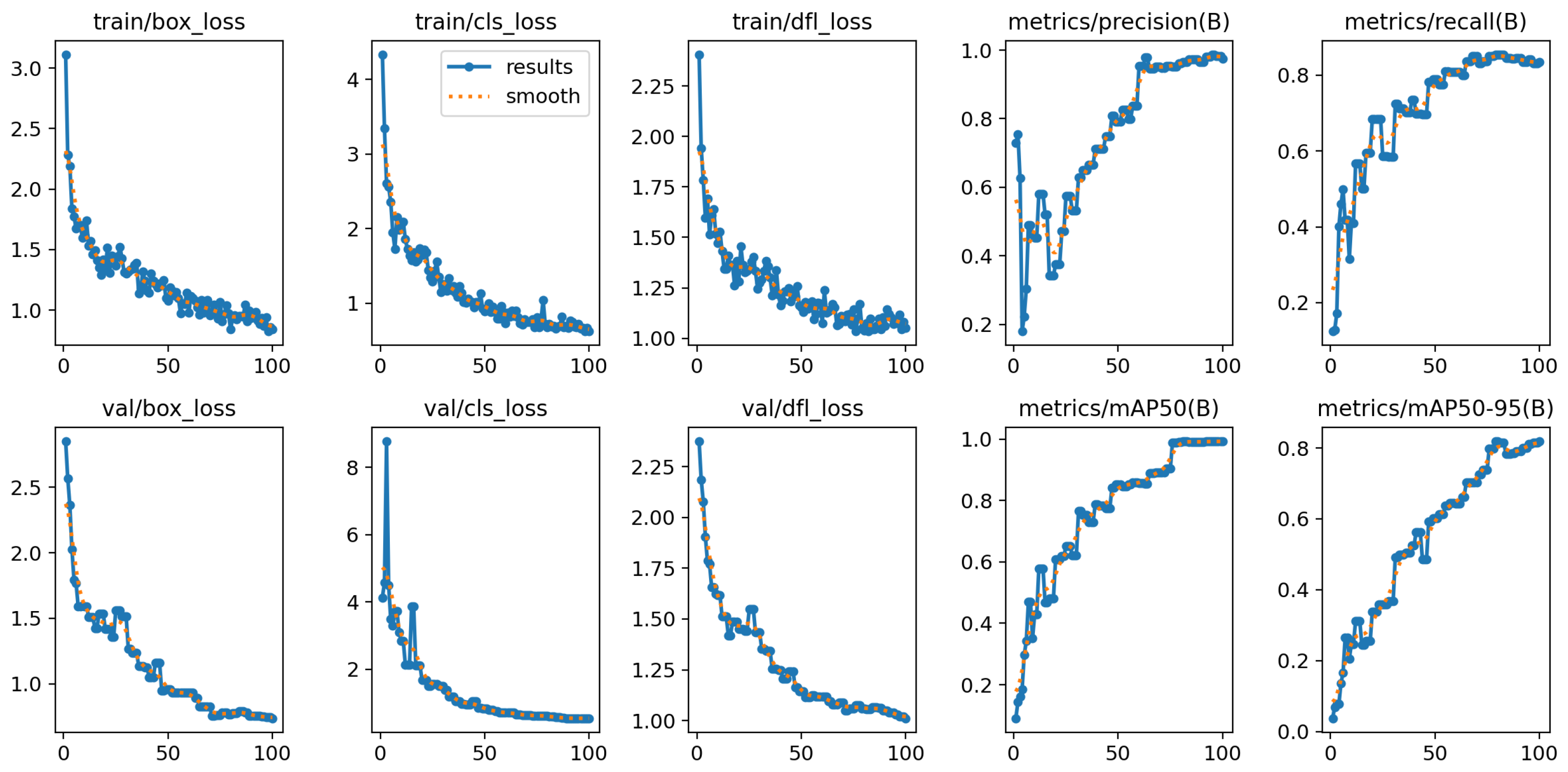

4.2. Model Architecture and Training

- Base model: YOLOv8m pretrained on COCO;

- Input resolution: 832 × 832 pixels;

- Batch size: 16;

- Training epochs: 100;

- Optimizer: AdamW [43] with learning rate ;

- Hardware: NVIDIA GeForce RTX 3080 (10 GB).
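With the Ultralytics package [18], the configuration above maps onto a short training call. This is a sketch under assumptions: the dataset file name is hypothetical, all unlisted hyperparameters stay at library defaults, and the initial learning rate `lr0` is deliberately not set here, so it should be configured explicitly to match the value used in the study.

```python
# Hyperparameters reported in Section 4.2; everything else is left at Ultralytics defaults.
TRAIN_ARGS = {
    "imgsz": 832,          # input resolution 832 x 832
    "batch": 16,
    "epochs": 100,
    "optimizer": "AdamW",
    # "lr0": ...,          # set to the learning rate used in the study (not filled in here)
}


def train_cog(data_yaml: str, weights: str = "yolov8m.pt"):
    """Fine-tune a COCO-pretrained YOLOv8m checkpoint on a COG-annotated dataset."""
    # Imported lazily so the configuration can be inspected without the package installed.
    from ultralytics import YOLO

    model = YOLO(weights)  # Ultralytics fetches the pretrained weights on first use
    return model.train(data=data_yaml, **TRAIN_ARGS)
```

A call such as `train_cog("cog_legend.yaml")` (hypothetical file) would then start training on the available GPU.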

4.3. Footprint Description (Document Setting)

4.4. Pipeline Architecture

- YOLO-based detection of all object classes;

- Export of detection results to CSV format;

- Generation of annotated images with class-specific color coding;

- Comprehensive logging of detection statistics.
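The CSV export and statistics logging of this first stage might look as follows. The column set, the `Detection` record, and both helper names are assumptions for illustration. When using Ultralytics, the fields can be filled from a result's `boxes.xyxy`, `boxes.cls`, and `boxes.conf` tensors together with `model.names`.

```python
import csv
import io
from collections import Counter, defaultdict
from dataclasses import astuple, dataclass, fields


@dataclass
class Detection:
    """One detected box in pixel coordinates (hypothetical record layout)."""
    image: str
    cls: str
    conf: float
    x1: float
    y1: float
    x2: float
    y2: float


def detections_to_csv(dets) -> str:
    """Serialize detections to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow([f.name for f in fields(Detection)])
    for d in dets:
        writer.writerow(astuple(d))
    return buf.getvalue()


def detection_stats(dets) -> dict:
    """Per-class (count, mean confidence), suitable for a run log."""
    counts = Counter(d.cls for d in dets)
    conf_sum = defaultdict(float)
    for d in dets:
        conf_sum[d.cls] += d.conf
    return {c: (n, conf_sum[c] / n) for c, n in counts.items()}
```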

- Construction of hierarchical JSON structure from detection results;

- Spatial relationship analysis to assign symbols and labels to legend rows;

- OCR integration [44] for text extraction from label regions;

- Export of complete legend structure with embedded metadata.
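The spatial-assignment and export steps of the second stage can be sketched as follows. We assume a simple rule: a Symbol or Label belongs to the Row_Leg box that contains its center, and rows are ordered top to bottom; the paper does not specify its exact rule. The JSON keys, the `ROW_`/`SYM_` numbering, and the optional `ocr` callback (which could wrap an engine such as Tesseract [44]) are hypothetical.

```python
import json
from typing import Callable, List, Optional, Tuple

BBox = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def center(b: BBox) -> Tuple[float, float]:
    return ((b[0] + b[2]) / 2, (b[1] + b[3]) / 2)


def contains(outer: BBox, pt: Tuple[float, float]) -> bool:
    x, y = pt
    return outer[0] <= x <= outer[2] and outer[1] <= y <= outer[3]


def build_legend_json(legend: BBox, rows: List[BBox], symbols: List[BBox],
                      labels: List[BBox],
                      ocr: Optional[Callable[[BBox], str]] = None) -> str:
    """Build Legend -> Row_Leg -> Symbol + Label as JSON text.

    Each Symbol/Label is assigned to the Row_Leg box containing its center; if a row
    holds several candidates, the leftmost one is kept.
    """
    out_rows = []
    for i, row in enumerate(sorted(rows, key=lambda r: r[1]), start=1):  # top to bottom
        syms = [s for s in symbols if contains(row, center(s))]
        labs = [lb for lb in labels if contains(row, center(lb))]
        sym = min(syms, key=lambda b: b[0]) if syms else None
        lab = min(labs, key=lambda b: b[0]) if labs else None
        out_rows.append({
            "row_id": f"ROW_{i:03d}",
            "bbox": list(row),
            "symbol": {"symbol_id": f"SYM_{i:03d}", "bbox": list(sym)} if sym else None,
            "label": {"bbox": list(lab), "text": ocr(lab) if ocr else None} if lab else None,
        })
    return json.dumps({"legend": {"bbox": list(legend), "rows": out_rows}}, indent=2)
```

Assigning by containment in a detected Row_Leg box, rather than by distance between atomic boxes, is what lets the grouping itself carry the pairing.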

4.5. Hierarchical Structure Construction

5. Experimental Results

5.1. Quantitative Performance

- mAP50: ≈0.99 across all classes, indicating robust localization and classification;

- mAP50–95: ≈0.81, demonstrating consistent performance under stricter IoU thresholds;

- Symbol–Label Pairing Accuracy: about 98% correct pairings in test cases.
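For reference, these metrics can be reproduced in miniature. The sketch below computes IoU, average precision for a single class on a single image (greedy matching, all-point interpolation), and mAP50–95 as the mean AP over IoU thresholds 0.50 to 0.95 in steps of 0.05 [17]; mAP then averages over classes. Evaluators such as the COCO toolkit and Ultralytics use a 101-point interpolated variant, so their values can differ slightly. The pairing-accuracy definition (the fraction of ground-truth symbol–label pairs reproduced exactly) is our assumption, since the paper does not define it formally.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: Sequence[Tuple[float, Box]], gts: Sequence[Box], thr: float) -> float:
    """AP for one class on one image: greedy matching by descending confidence,
    all-point interpolation of the precision-recall curve."""
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    hits: List[bool] = []
    for _, box in sorted(preds, key=lambda p: -p[0]):
        best_j, best_iou = -1, thr
        for j, g in enumerate(gts):
            o = iou(box, g)
            if not matched[j] and o >= best_iou:
                best_j, best_iou = j, o
        if best_j >= 0:
            matched[best_j] = True
        hits.append(best_j >= 0)
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Make precision monotonically non-increasing from right to left.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def map50_95(preds, gts) -> float:
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [(50 + 5 * i) / 100 for i in range(10)]
    return sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)


def pairing_accuracy(predicted: Dict[str, str], truth: Dict[str, str]) -> float:
    """Fraction of ground-truth symbol -> label pairs reproduced exactly (assumed definition)."""
    correct = sum(1 for sym, lab in truth.items() if predicted.get(sym) == lab)
    return correct / len(truth) if truth else 0.0
```

The gap between mAP50 and mAP50–95 comes from boxes that overlap the ground truth well but not tightly: a box with IoU 0.83 counts at the 0.50 to 0.80 thresholds and fails at 0.85 and above.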

5.2. Contextual Detection vs. Atomic Object Detection

- Legend Context: Symbols within Row_Leg structures are reliably detected (mAP50 ≈ 0.99);

- Isolated Symbols: The same geometric shapes in the main diagram are not detected by the YOLO model;

- Contextual Dependency: Symbol detection appears intrinsically linked to each symbol’s spatial and semantic relationship with its label text;

- Structured Understanding: The model has learned the “grammar” of legend composition rather than just visual “vocabulary”.

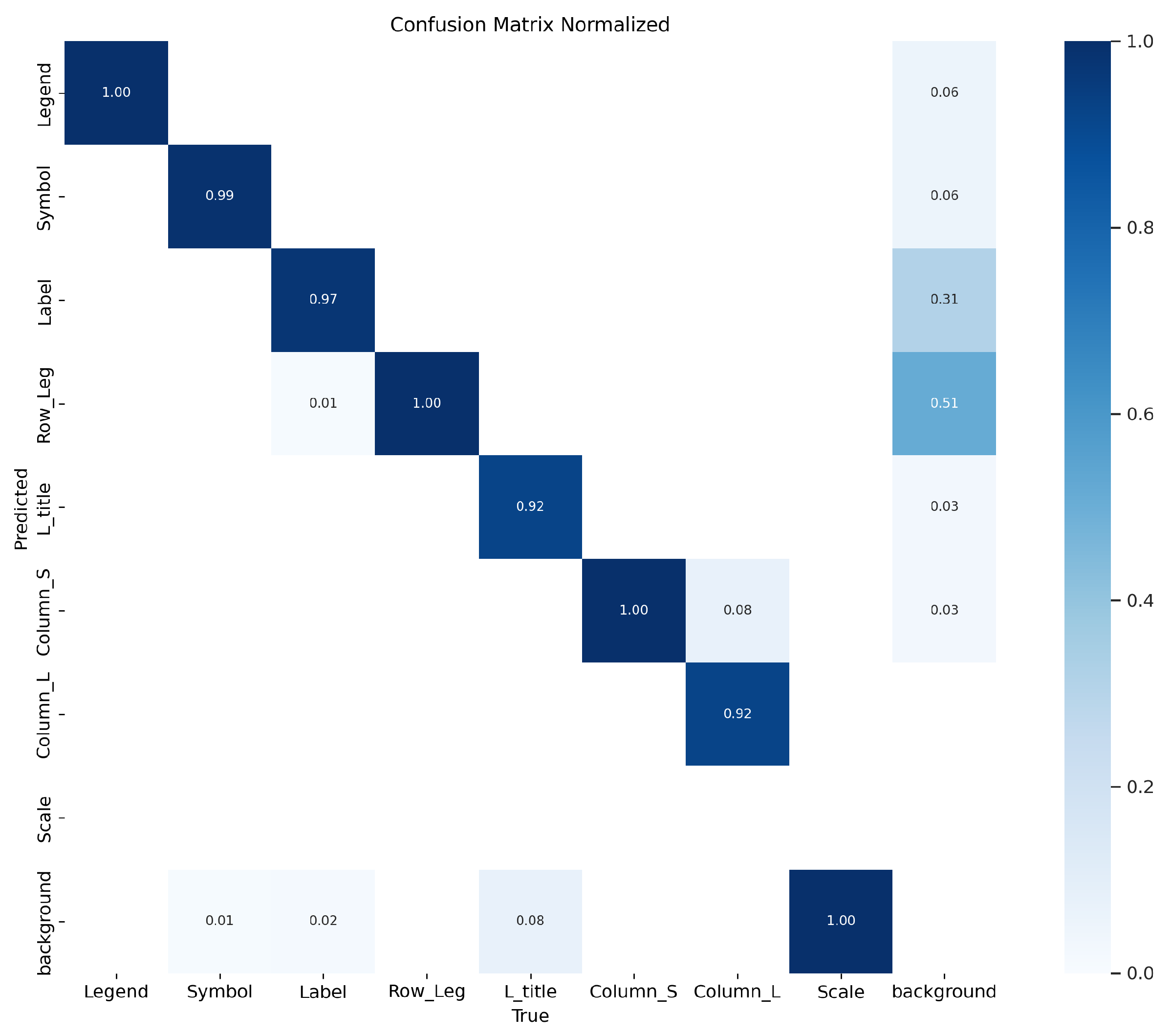

5.3. Detailed Class-Wise Performance Analysis

- Perfect Class Discrimination: The Legend, Symbol, Row_Leg, Column_S, and Scale classes achieve perfect classification accuracy (1.00 on the diagonal of the normalized confusion matrix);

- Minimal Cross-Class Confusion: The largest confusion occurs between Label and Row_Leg classes, which is expected given their spatial overlap;

- Robust Contextual Detection: The model successfully distinguishes between atomic elements (Symbol, Label) and their contextual groupings (Row_Leg).
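A diagonal value of 1.00 means every instance of that true class was predicted as that class. A minimal sketch of this per-true-class normalization follows, assuming detections have already been matched to ground truth; detection confusion matrices (e.g., in Ultralytics) also carry a background row and column for missed and spurious boxes, which we omit here.

```python
from typing import Dict, List, Sequence


def normalized_confusion(true: Sequence[str], pred: Sequence[str],
                         classes: List[str]) -> Dict[str, Dict[str, float]]:
    """Confusion matrix normalized per true class: out[t][p] is the fraction of
    true-class-t instances that were predicted as class p."""
    counts = {t: {p: 0 for p in classes} for t in classes}
    for t, p in zip(true, pred):
        counts[t][p] += 1
    out: Dict[str, Dict[str, float]] = {}
    for t, row in counts.items():
        n = sum(row.values())
        out[t] = {p: (c / n if n else 0.0) for p, c in row.items()}
    return out
```

In the test data below, half of the Label instances are predicted as Row_Leg, the kind of overlap-driven confusion described above.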

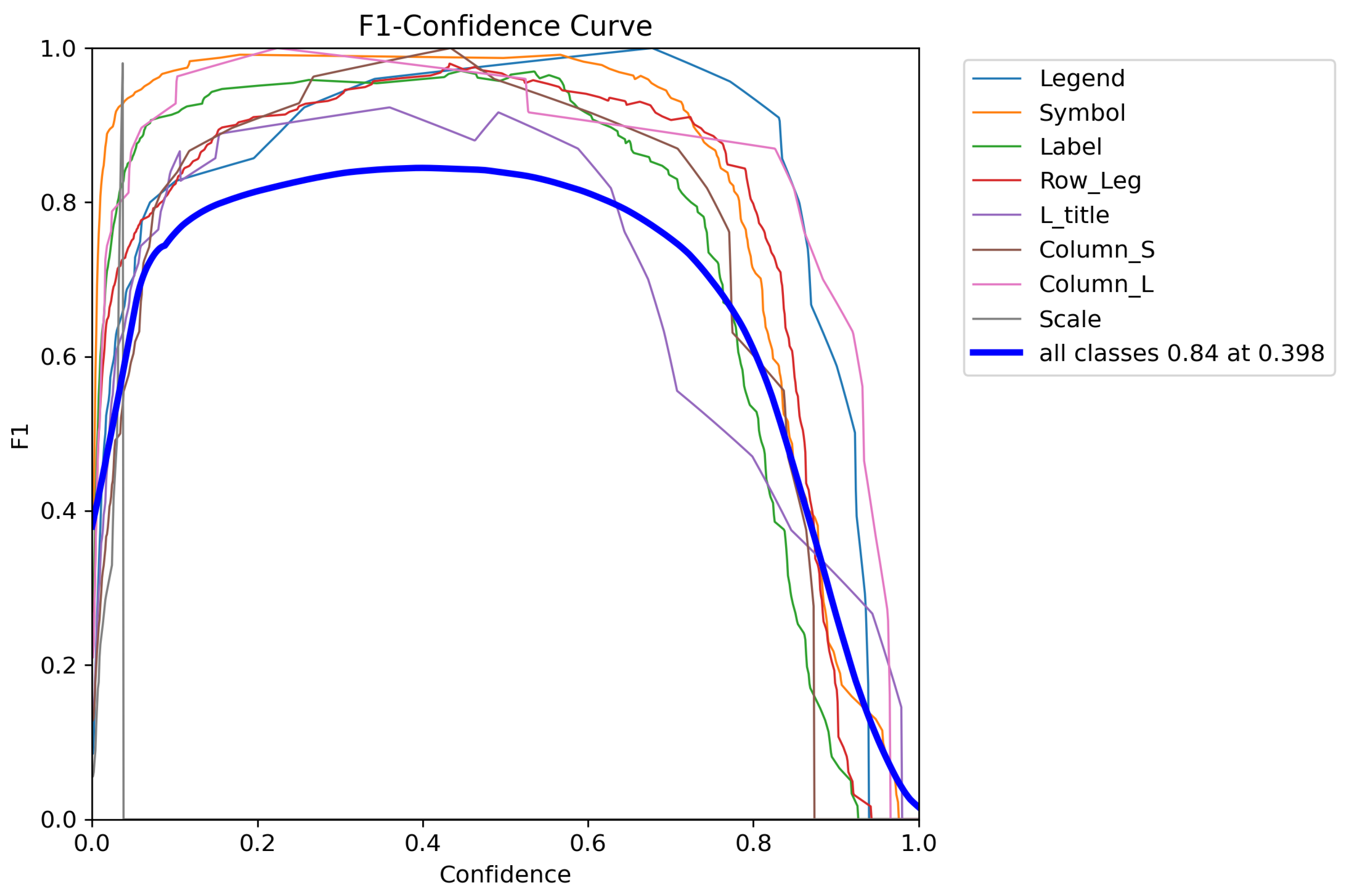

5.4. Model Confidence and Reliability Assessment

- Optimal Confidence Threshold: The overall F1 score peaks at a confidence threshold of 0.398, balancing precision and recall;

- Robust Performance Range: Most classes maintain F1 scores above 0.8 for confidence thresholds between 0.2 and 0.6;

- Class-Specific Behaviors: Different classes exhibit different confidence patterns, with the Symbol and Legend classes showing particularly stable performance.
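An optimal threshold such as 0.398 is typically read off an F1–confidence curve. The sketch below assumes each prediction has already been labeled as a true or false positive at a fixed IoU, computes F1 = 2TP / (2TP + FP + FN) per class at each threshold, and picks the threshold that maximizes the mean over classes. The input format and the 0.001 grid step are our assumptions.

```python
from typing import Dict, Sequence, Tuple

Scored = Sequence[Tuple[float, bool]]  # (confidence, is_true_positive) per prediction


def f1_at(threshold: float, scored: Scored, n_gt: int) -> float:
    """F1 = 2TP / (2TP + FP + FN) for predictions with confidence >= threshold."""
    kept = [is_tp for conf, is_tp in scored if conf >= threshold]
    tp = sum(kept)
    fp = len(kept) - tp
    fn = n_gt - tp
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_threshold(per_class: Dict[str, Tuple[Scored, int]],
                   step: float = 0.001) -> Tuple[float, float]:
    """Sweep confidence thresholds; return (threshold, mean F1 over classes) at the peak."""
    n_steps = round(1 / step)
    grid = [i / n_steps for i in range(n_steps + 1)]

    def mean_f1(t: float) -> float:
        return sum(f1_at(t, s, n) for s, n in per_class.values()) / len(per_class)

    t_best = max(grid, key=mean_f1)  # first (lowest) threshold reaching the maximum
    return t_best, mean_f1(t_best)
```

Raising the threshold removes false positives but eventually discards true positives too, so the peak sits where these two effects balance.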

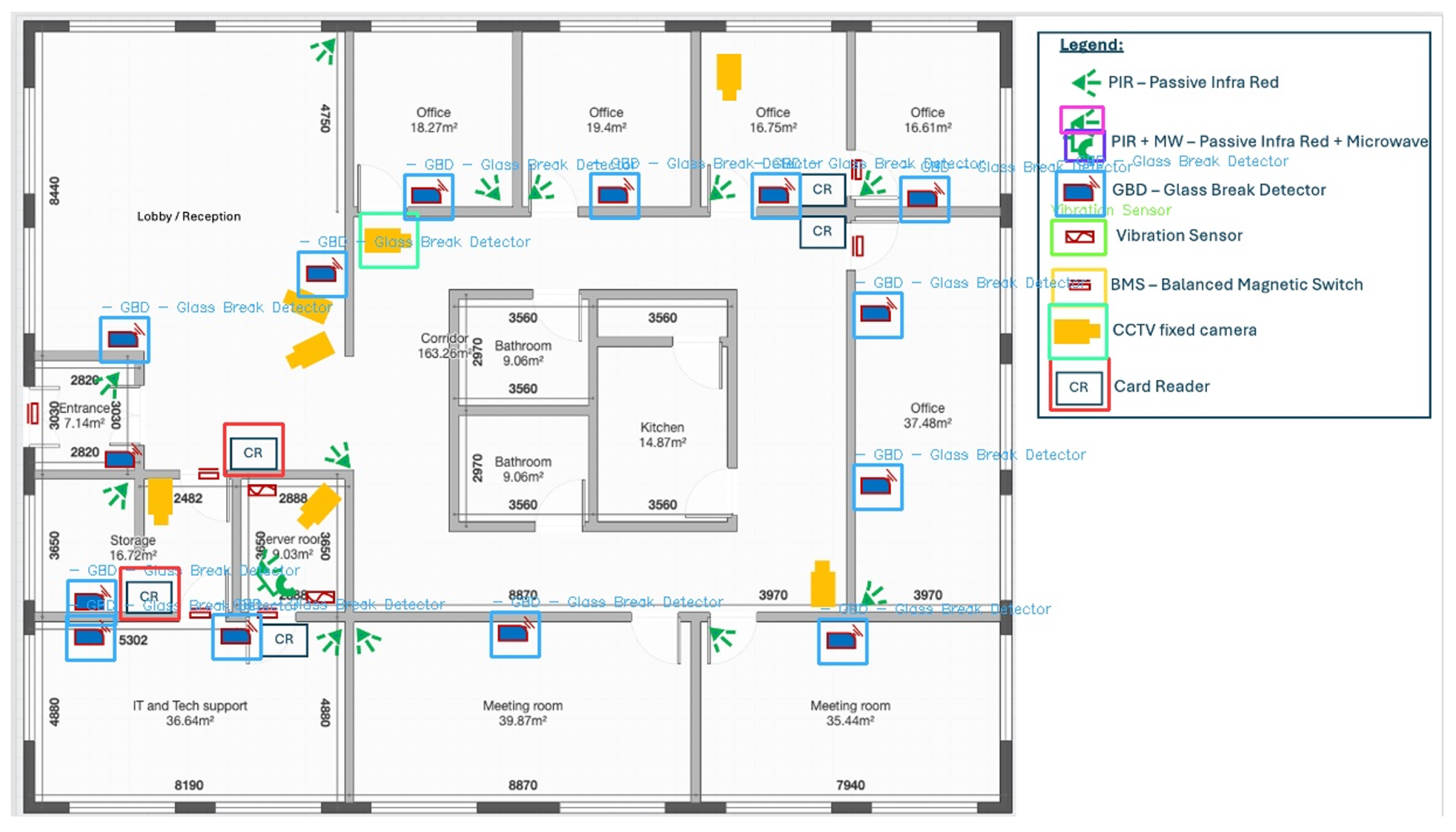

5.5. Qualitative Analysis: Hierarchical Structure Generation

5.6. Dynamic Symbol Interpretation and Real-World Performance

- Blue rectangle (SYM_005) → “GBD—Glass Break Detector”;

- Red/pink rectangle (SYM_003) → “Vibration Sensor”;

- Green arrow symbols → “PIR—Passive Infra Red sensors”;

- Yellow rectangles → “CCTV fixed camera”.

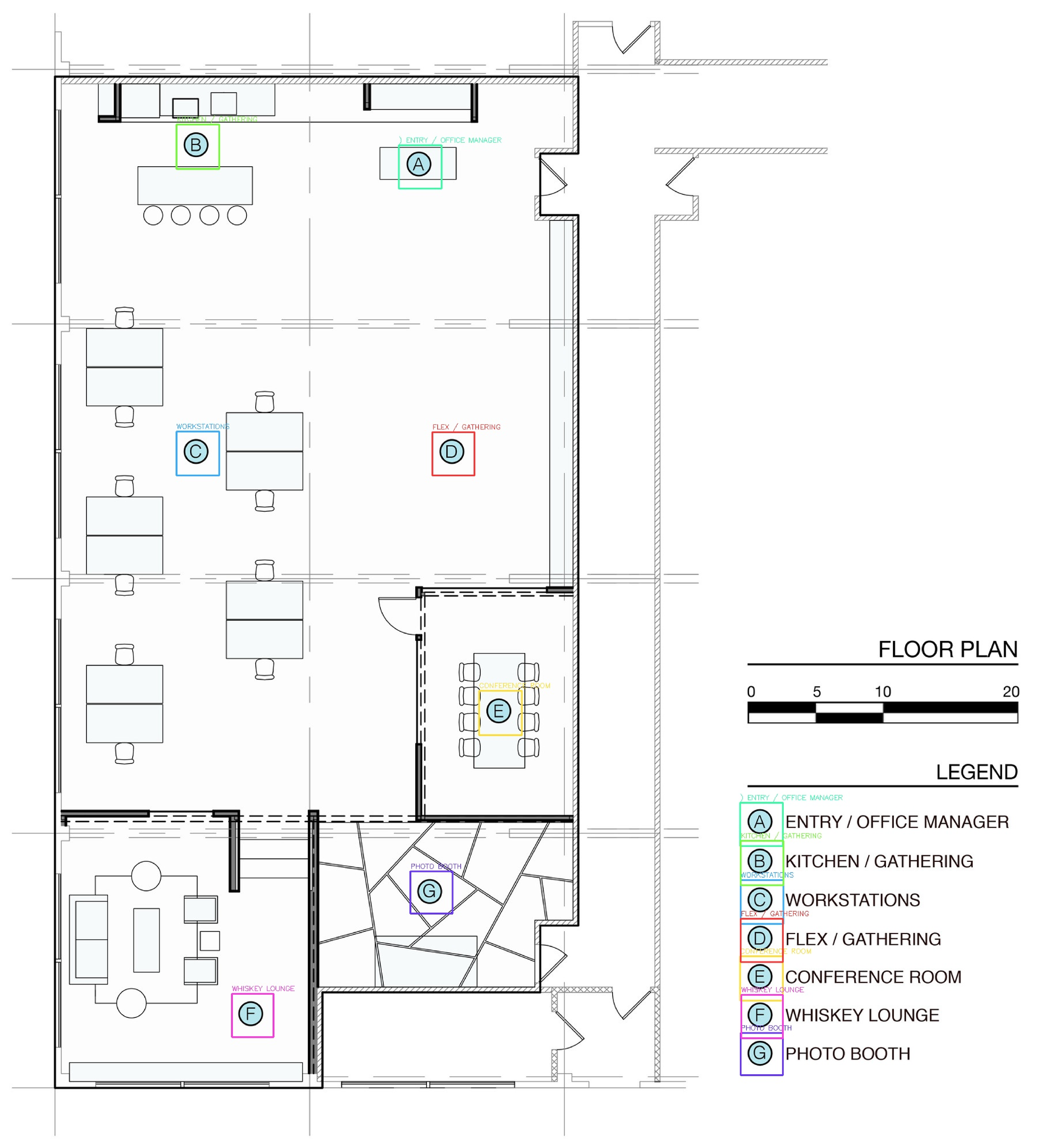

- Symbol A (SYM_004) → “ENTRY/OFFICE MANAGER”;

- Symbol B (SYM_003) → “KITCHEN/GATHERING”;

- Symbol C (SYM_005) → “WORKSTATIONS”;

- Symbol D (SYM_001) → “FLEX/GATHERING”;

- Symbol E (SYM_002) → “CONFERENCE ROOM”;

- Symbol F (SYM_007) → “WHISKEY LOUNGE”;

- Symbol G (SYM_006) → “PHOTO BOOTH”.
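The two examples show that the same identifier can carry different meanings in different documents: SYM_003 is a vibration sensor in one legend and a kitchen/gathering area in the other. Meanings must therefore be looked up per document. A minimal sketch of this per-document lookup follows, assuming legend rows have already been parsed into `symbol_id`/`label` pairs and that main-diagram instances have been matched to legend symbol IDs by a separate step; the helper names and the `UNMAPPED:` marker are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List


def symbol_lexicon(legend_rows: List[Dict[str, str]]) -> Dict[str, str]:
    """Map each legend symbol ID to its label text for ONE document."""
    return {row["symbol_id"]: row["label"] for row in legend_rows}


def inventory(matched_ids: Iterable[str], lexicon: Dict[str, str]) -> Counter:
    """Count main-diagram instances per legend meaning; unknown IDs stay visible."""
    return Counter(lexicon.get(sid, f"UNMAPPED:{sid}") for sid in matched_ids)
```

Keeping unmapped IDs in the count, instead of dropping them, makes gaps between legend and drawing visible during asset analysis.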

6. Discussion

6.1. When COG Provides Value vs. Traditional Approaches

- COG achieves mAP50 ≈ 0.99 for comprehensive legend component detection;

- Traditional object detection would require separate detection of symbols and labels, and complex post-processing, to establish relationships;

- Our approach achieves about 98% accuracy in symbol–label pairing.

- The model demonstrates contextual understanding by detecting symbols only within legend contexts, not in isolation within main diagrams;

- This behavior supports the hypothesis that semantic groupings have become first-class perceptual entities rather than post hoc reasoning constructs;

- Symbol detection confidence ranges from 0.82 to 0.99 when properly contextualized within legend structures.

- Successful adaptation across different symbol conventions (security vs. architectural diagrams) without retraining;

- Automatic establishment of symbol–meaning mappings through legend learning eliminates manual rule engineering;

- Cross-domain applicability, with consistent performance across the security and architectural diagram types evaluated.

- Context is complex and semantically critical: In technical diagrams, where the same visual symbol can represent completely different devices depending on design conventions;

- Post-processing would be brittle: When rule-based heuristics for establishing relationships are domain-specific, difficult to generalize, and prone to failure;

- Semantic meaning emerges from structure: Where individual elements lack meaning without their contextual relationships (e.g., symbols without legend context);

- Domain standardization is impossible: When different designers, organizations, or standards use varying symbol conventions;

- In intelligent sensing applications: Where contextual interpretation is needed for building automation or security assessment [19].

- Objects are semantically complete in isolation: Individual entities (cars, people, standard traffic signs) carry inherent meaning regardless of context;

- Relationships are simple or optional: Basic spatial proximity or containment relationships can be reliably inferred through simple heuristics;

- Context provides enhancement rather than essential meaning: Where context improves interpretation but is not fundamental to object identity;

- Computational efficiency is paramount: For real-time applications where the additional complexity of contextual detection may not justify the benefits.

6.2. Proof-of-Concept Achievement vs. Future Development Roadmap

- Contextual Learning Validation: The model seems to demonstrate contextual awareness by detecting symbols only within legend contexts, not in isolation—suggesting that semantic groupings may become first-class perceptual entities rather than post hoc reasoning constructs.

- Dynamic Symbol Interpretation: Apparent successful adaptation to different symbol conventions through legend-based learning, potentially eliminating reliance on fixed symbol standards across diverse security diagram formats.

- Hierarchical Structure Construction: Demonstrated ability to construct what appear to be meaningful semantic hierarchies (Legend → COG(Row_Leg) → Symbol + Label) that seem to capture the compositional nature of technical diagrams.

- Cross-Domain Applicability: Initial validation across both security and architectural floor plans, suggesting the framework’s potential for broader intelligent sensing applications.

- Algorithmic Enhancement Track: Potential development of rotation-invariant detection mechanisms, multi-scale symbol matching, and advanced OCR integration for robust real-world deployment in intelligent sensing systems.

- Domain Expansion Track: Possible extension to P&ID diagrams, electrical schematics, and network topologies, potentially establishing COG as a general framework for structured visual understanding in industrial sensing applications.

- System Integration Track: Anticipated edge deployment optimization, digital twin connectivity, and multi-modal sensing integration for comprehensive cyber-physical system modeling.

6.3. Limitations and Challenges

1. COG-based legend detection and interpretation;

2. Separate symbol matching for main diagram elements.
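The second step is not specified in detail. One plausible baseline, assumed here rather than taken from the paper, is to crop each legend symbol and search for it in the main diagram by zero-mean normalized cross-correlation. The NumPy sketch below is deliberately naive; OpenCV's `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED` computes the same score far faster. Like any plain template matcher, it is neither rotation- nor scale-invariant, which is why those capabilities appear on the algorithmic enhancement track.

```python
import numpy as np


def ncc_match(image: np.ndarray, template: np.ndarray, threshold: float = 0.9):
    """Return (x, y, score) for every top-left position where the zero-mean normalized
    cross-correlation between the template and the image window is >= threshold.
    Both inputs are 2-D grayscale arrays."""
    ih, iw = image.shape
    th, tw = template.shape
    t = template.astype(float) - template.mean()
    t_norm = np.sqrt((t * t).sum())
    hits = []
    for y in range(ih - th + 1):
        for x in range(iw - tw + 1):
            w = image[y:y + th, x:x + tw].astype(float)
            w = w - w.mean()
            denom = t_norm * np.sqrt((w * w).sum())
            if denom == 0:  # flat window or flat template: correlation undefined
                continue
            score = float((w * t).sum() / denom)
            if score >= threshold:
                hits.append((x, y, score))
    return hits
```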

6.4. Future Research Directions

6.5. Research Impact Statement

7. Conclusions

- Paradigm Innovation: Introduction of what seems to be a “perceive context directly” paradigm, potentially shifting from traditional “detect then reason” approaches and possibly enabling more sophisticated intelligent sensing capabilities.

- Ontological Framework: Development of contextual COG classes as what appears to be an intermediate level between atomic perception and semantic reasoning, potentially creating new possibilities for structured visual understanding in cyber-physical systems.

- Dynamic Learning Validation: Evidence suggesting that models can learn to detect contextual groupings as unified entities, potentially opening new research directions for adaptive intelligent sensing systems that may learn visual languages on the fly.

- Cross-Domain Applicability: Demonstrated effectiveness across security and architectural diagrams, suggesting the framework’s potential for diverse intelligent sensing applications including building automation and industrial monitoring.

- Systematic Research Foundation: Clear identification of development tracks (algorithmic enhancement, domain expansion, system integration) that appear to provide a roadmap for advancing context-aware intelligent sensing systems.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| COG | Contextual Object Grouping |
| CPPS | Cyber-Physical Security Systems |
| CNN | Convolutional Neural Network |
| DLA | Document Layout Analysis |
| FCN | Fully Convolutional Network |
| IoT | Internet of Things |
| mAP | mean Average Precision |
| OCR | Optical Character Recognition |
| PIR | Passive Infrared |
| SGG | Scene Graph Generation |
| VRD | Visual Relationship Detection |
| YOLO | You Only Look Once |

References

1. Zhang, Y.; Wang, L.; Sun, W.; Green, R.C.; Alam, M. Cyber-physical power system (CPPS): A review on modeling, simulation, and analysis with cyber security applications. IEEE Access 2021, 9, 151171–151203.

2. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

3. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; Association for Computing Machinery: New York, NY, USA, 2012; Volume 25, pp. 1097–1105.

4. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

5. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

6. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

7. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

8. Cheng, T.; Song, L.; Ge, Y.; Liu, W.; Wang, X.; Shan, Y. YOLO-World: Real-time open-vocabulary object detection. arXiv 2024, arXiv:2401.17270.

9. Tan, J.; Jin, H.; Zhang, H.; Zhang, Y.; Chang, D.; Liu, X.; Zhang, H. A survey: When moving target defense meets game theory. Comput. Sci. Rev. 2023, 48, 100550.

10. Wang, X.; Shi, L.; Cao, C.; Wu, W.; Zhao, Z.; Wang, Y.; Wang, K. Game analysis and decision making optimization of evolutionary dynamic honeypot. Comput. Electr. Eng. 2024, 118, 109447.

11. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

12. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

13. Xiao, B.; Wu, H.; Wei, Y. Florence-2: Advancing a unified representation for a variety of vision tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 4478–4488.

14. Tang, Y.; Li, B.; Chen, H.; Wang, G.; Li, H. Panoptic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 9404–9413.

15. Huang, Y.; Lv, T.; Cui, L.; Lu, Y.; Wei, F. LayoutLMv3: Pre-training for document AI with unified text and image masking. arXiv 2022, arXiv:2204.08387.

16. Wang, D.; Zhao, Z.; Jain, A.; Chae, J.; Ravi, S.; Liskiewicz, M.; Sadeghi Min, M.A.; Tar, S.; Xiong, C. DocLLM: A layout-aware generative language model for multimodal document understanding. arXiv 2024, arXiv:2401.00908.

17. Padilla, R.; Netto, S.L.; Da Silva, E.A. A comparative analysis of object detection metrics with a companion open-source toolkit. Electronics 2020, 9, 279.

18. Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. Available online: https://github.com/ultralytics/ultralytics (accessed on 1 June 2025).

19. Yohanandhan, R.V.; Elavarasan, R.M.; Manoharan, P.; Mihet-Popa, L. Cyber-physical systems and smart cities in India: Opportunities, issues, and challenges. Sensors 2021, 21, 7714.

20. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

21. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In European Conference on Computer Vision; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

22. Yao, L.; Han, J.; Wen, Y.; Luo, X.; Xu, D.; Zhang, W.; Zhang, Z.; Xu, H.; Qiao, Y. DetCLIPv3: Towards versatile generative open-vocabulary object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 9691–9701.

23. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

24. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

25. Jin, J.; Hou, W.; Liu, Z. 4D panoptic scene graph generation. arXiv 2023, arXiv:2405.10305.

26. Yang, J.; Ang, Y.Z.; Guo, Z.; Zhou, K.; Zhang, W.; Liu, Z. Panoptic scene graph generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 425–434.

27. Lu, C.; Krishna, R.; Bernstein, M.; Fei-Fei, L. Visual relationship detection with language priors. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 852–869.

28. Johnson, J.; Krishna, R.; Stark, M.; Li, L.J.; Shamma, D.A.; Bernstein, M.; Fei-Fei, L. Image retrieval using scene graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3668–3678.

29. Zellers, R.; Yatskar, M.; Thomson, S.; Choi, Y. Neural motifs: Scene graph parsing with global context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5831–5840.

30. Chen, T.; Yu, W.; Shuai, B.; Liu, Z.; Metaxas, D.N. Structure-aware transformer for scene graph generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1999–2008.

31. Zuo, N.; Ranasinghe Delmas, K.; Harandi, M.; Petersson, L. Adaptive visual scene understanding: Incremental scene graph generation. arXiv 2024, arXiv:2310.01636.

32. Xu, Y.; Li, M.; Cui, L.; Huang, S.; Wei, F.; Zhou, M. LayoutLM: Pre-training of text and layout for document image understanding. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, San Diego, CA, USA, 6–10 July 2020; pp. 1192–1200.

33. Xu, Y.; Xu, Y.; Lv, T.; Cui, L.; Wei, F.; Wang, G.; Lu, Y.; Florencio, D.; Zhang, C.; Che, W.; et al. LayoutLMv2: Multi-modal pre-training for visually-rich document understanding. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics, Virtual, 1–6 August 2021; pp. 2579–2591.

34. Kalkan, A.; Dogan, G.; Oral, M. A computer vision framework for structural analysis of hand-drawn engineering sketches. Sensors 2024, 24, 2923.

35. Lin, F.; Zhang, W.; Wang, Q.; Li, J. A comprehensive end-to-end computer vision framework for restoration and recognition of low-quality engineering drawings. Eng. Appl. Artif. Intell. 2024, 133, 108200.

36. Zhang, K.; Li, Y.; Wang, Z.; Zhao, X. Cyber-physical systems security: A systematic review. Comput. Ind. Eng. 2022, 165, 107914.

37. Li, H.; Zhang, W.; Chen, M. Smart sensing in building and construction: Current status and future perspectives. Sensors 2024, 24, 2156.

38. Wang, J.; Liu, X.; Chen, H. Emerging IoT technologies for smart environments: Applications and challenges. Sensors 2024, 24, 1892.

39. Frege, G. Über Sinn und Bedeutung. Z. Philos. Philos. Krit. 1892, 100, 25–50.

40. Guarino, N. Formal ontology and information systems. In Proceedings of the First International Conference on Formal Ontology in Information Systems, Trento, Italy, 6–8 June 1998; pp. 3–15.

41. Smith, B. Ontology and information systems. In Handbook on Ontologies; Staab, S., Studer, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 15–34.

42. Hussain, M.; Al-Aqrabi, H.; Hill, R. The YOLO framework: A comprehensive review of evolution, applications, and benchmarks in object detection. Computers 2024, 13, 336.

43. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2019, arXiv:1711.05101.

44. Smith, R. An overview of the Tesseract OCR engine. In Proceedings of the Ninth International Conference on Document Analysis and Recognition, Curitiba, Brazil, 23–26 September 2007; Volume 2, pp. 629–633.

45. Li, Y.; Fan, Q.; Huang, H.; Han, Z.; Gu, Q. UAV-YOLOv8: A small-object-detection model based on improved YOLOv8 for UAV aerial photography scenarios. Sensors 2023, 23, 7190.

46. Peng, H.; Zheng, X.; Chen, Q.; Li, J.; Xu, C. SOD-YOLOv8—Enhancing YOLOv8 for small object detection in aerial imagery and traffic scenes. Sensors 2024, 24, 6209.

47. Rodriguez, M.; Kim, S.J.; Patel, R. Integrating cloud computing, sensing technologies, and digital twins in IoT applications. Sensors 2024, 24, 3421.

| Aspect | YOLO-World | Florence-2 | Traditional OD | COG |
|---|---|---|---|---|
| Symbol Learning | Pre-trained vocabulary | Multi-task general knowledge | Fixed training classes | Dynamic legend-based learning |
| Context Source | External world knowledge | Large-scale training data | Template matching | Document-specific legends |
| Detection Target | Individual objects + text | Multi-modal unified tasks | Atomic objects only | Structured entity groupings |
| Adaptation Method | Static vocabulary | Fine-tuning required | Retraining needed | Dynamic legend-based learning |
| Legend Processing | Secondary consideration | Generic text understanding | Not supported | First-class contextual objects |
| Domain Transfer | Broad but generic | Versatile but pre-defined | Domain-specific | Specialized but adaptive |

| Aspect | OD | VRD | SGG | DLA | COG |
|---|---|---|---|---|---|
| Output | Atomic bounding boxes | 〈subj, pred, obj〉 triplet labels | Object graph representations | Layout element structures | Atomic + composite bounding boxes |
| Detection | ✓ | ✓ | ✓ | ✓ | ✓ |
| Relation inference | × | Post hoc | Post hoc | Post hoc | Inline |
| First-class groups | × | × | × | × | ✓ |
| End-to-end detection | ✓ | × | × | × | ✓ |
| Context embedding | Implicit | External | External | External | Direct |

| Performance Aspect | COG Results |
|---|---|
| Legend component detection | mAP50 ≈ 0.99, mAP50–95 ≈ 0.81 |
| Symbol–label pairing accuracy | About 98% |
| Contextual awareness validation | Symbols detected only in legend context, not in isolation |
| Cross-domain adaptation | Successful on security and architectural diagrams |
| Processing efficiency | Single-stage detection without post-processing |
| Symbol detection confidence | 0.82–0.99 for contextualized symbols |
| Dynamic symbol interpretation | Automatic legend-based semantic mapping |
| Hierarchical structure construction | Complete JSON hierarchy with embedded metadata |

| Aspect | Ontological Inheritance | Contextual Object Grouping (COG) |
|---|---|---|
| Type of relationship | Conceptual abstraction (is-a, has-a) | Perceptual composition via spatial or functional co-occurrence |
| Definition level | Symbolic, model-level | Visual, instance-level |
| Construction method | Manually defined or logic-based | Learned through detection |
| Role in pipeline | Defines reasoning structure | Part of perception output |
| Visual grounding | Typically absent | Explicit and spatially grounded |
| Flexibility | Static taxonomy | Dynamic, data-driven groupings |
| Semantic function | Classification and inheritance | Semantic emergence through grouping |
| Example | Row_Leg is-a Legend entry | COG(Row_Leg) = Symbol + Label |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kapusta, J.; Bauer, W.; Baranowski, J. Contextual Object Grouping (COG): A Specialized Framework for Dynamic Symbol Interpretation in Technical Security Diagrams. Algorithms 2025, 18, 642. https://doi.org/10.3390/a18100642
