Backward Signal Propagation: A Symmetry-Based Training Method for Neural Networks

Abstract

1. Introduction

1.1. Backpropagation Algorithm

1.2. The Core Contradiction of the Backpropagation Algorithm

- Differentiation in biological neurons: It remains unclear whether biological neurons are capable of performing differentiation. Modern artificial neural networks employ a wide variety of activation functions, each chosen to be differentiable (or, like ReLU, differentiable almost everywhere). Yet such mathematical operations, and the diversity of function types itself, appear to have no biological counterpart [12].

- Global error propagation: The chain rule in BP assumes that error signals can be propagated globally across the network. Each neuron must then possess a form of global awareness: to compute its gradient, it needs the error signals and connection weights of the neurons that lie between it and the output. This assumption is biologically unrealistic, because real neurons operate primarily on local information and lack such global processing capability [13].
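To make the two objections concrete, here is a minimal sketch (pure Python, arbitrary illustrative sizes and values) of how BP computes the error term of a hidden neuron in a two-layer network with sigmoid hidden units and linear outputs. Both ingredients questioned above appear explicitly: the activation derivative σ'(z) and the weights and errors of every output neuron the hidden unit projects to. A central finite-difference check confirms that the chain-rule result is the true gradient. The function names are illustrative and do not come from the paper.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, W2):
    """Sigmoid hidden layer followed by a linear output layer."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    y = [sum(w * hj for w, hj in zip(row, h)) for row in W2]
    return h, y


def loss(x, t, W1, W2):
    """Squared error 0.5 * sum_k (y_k - t_k)^2."""
    _, y = forward(x, W1, W2)
    return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, t))


def backprop_grad_W1(x, t, W1, W2):
    """Gradient of the loss w.r.t. the first-layer weights via the chain rule."""
    h, y = forward(x, W1, W2)
    delta_out = [yk - tk for yk, tk in zip(y, t)]  # dL/dy_k at the outputs
    grad = []
    for j in range(len(W1)):
        # Non-local information: hidden unit j needs the weight W2[k][j]
        # and the error of every output unit k it projects to.
        back = sum(W2[k][j] * delta_out[k] for k in range(len(W2)))
        # Activation derivative: sigma'(z) = sigma(z) * (1 - sigma(z)).
        delta_j = back * h[j] * (1.0 - h[j])
        grad.append([delta_j * xi for xi in x])
    return grad


x, t = [0.5, -1.0], [1.0, 0.0]
W1 = [[0.1, 0.2], [-0.3, 0.4], [0.5, -0.6]]
W2 = [[0.7, -0.8, 0.9], [0.2, 0.1, -0.4]]
grad = backprop_grad_W1(x, t, W1, W2)

# Central finite-difference check of a single weight, W1[1][0].
eps = 1e-6
Wp = [row[:] for row in W1]
Wp[1][0] += eps
Wm = [row[:] for row in W1]
Wm[1][0] -= eps
numeric = (loss(x, t, Wp, W2) - loss(x, t, Wm, W2)) / (2 * eps)
print(numeric, grad[1][0])
```

Every hidden-layer update thus depends on quantities (downstream weights, output errors, σ') that a biological neuron would not obviously have locally.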

1.3. Inspiration from Differential Geometry

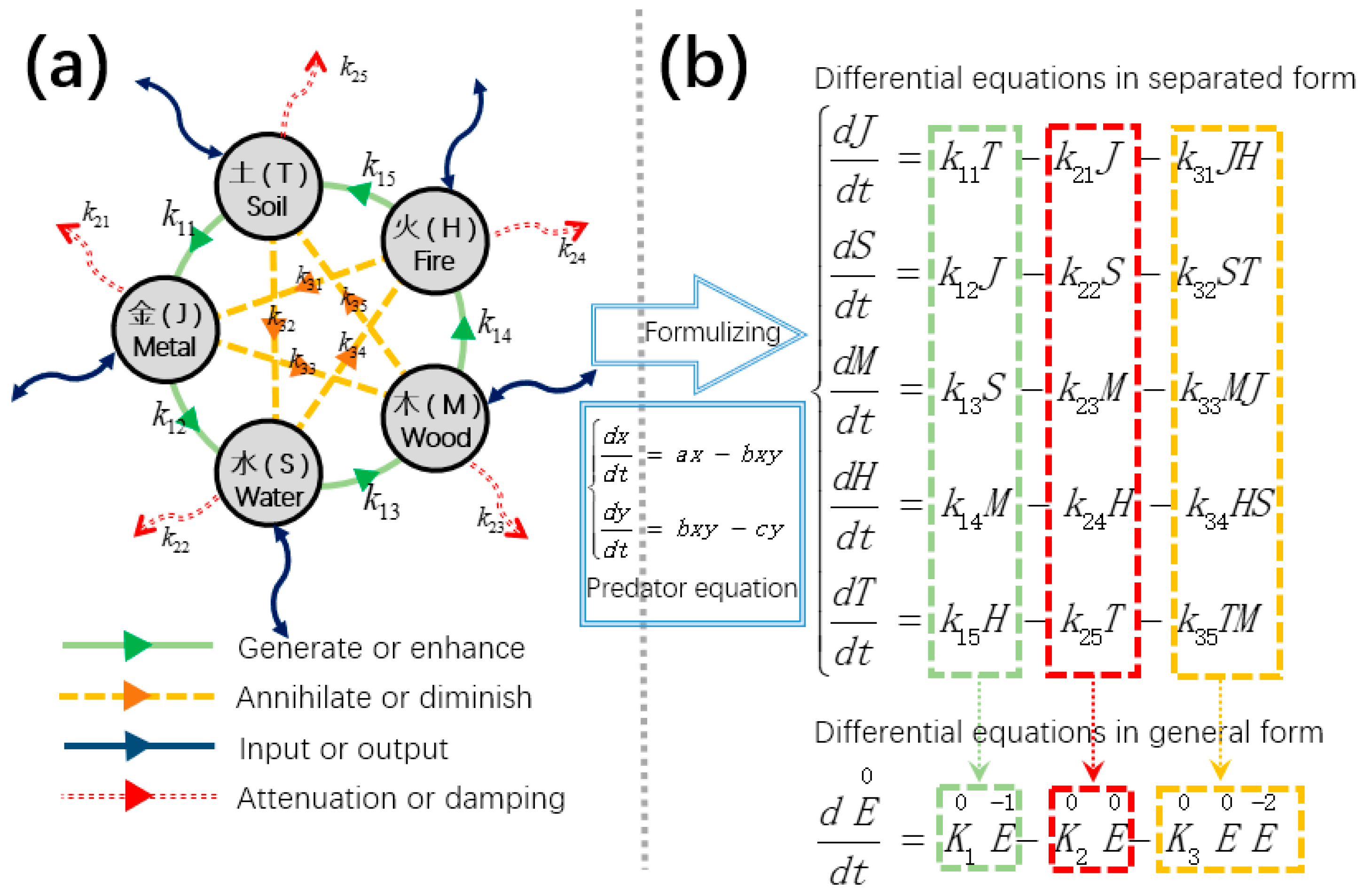

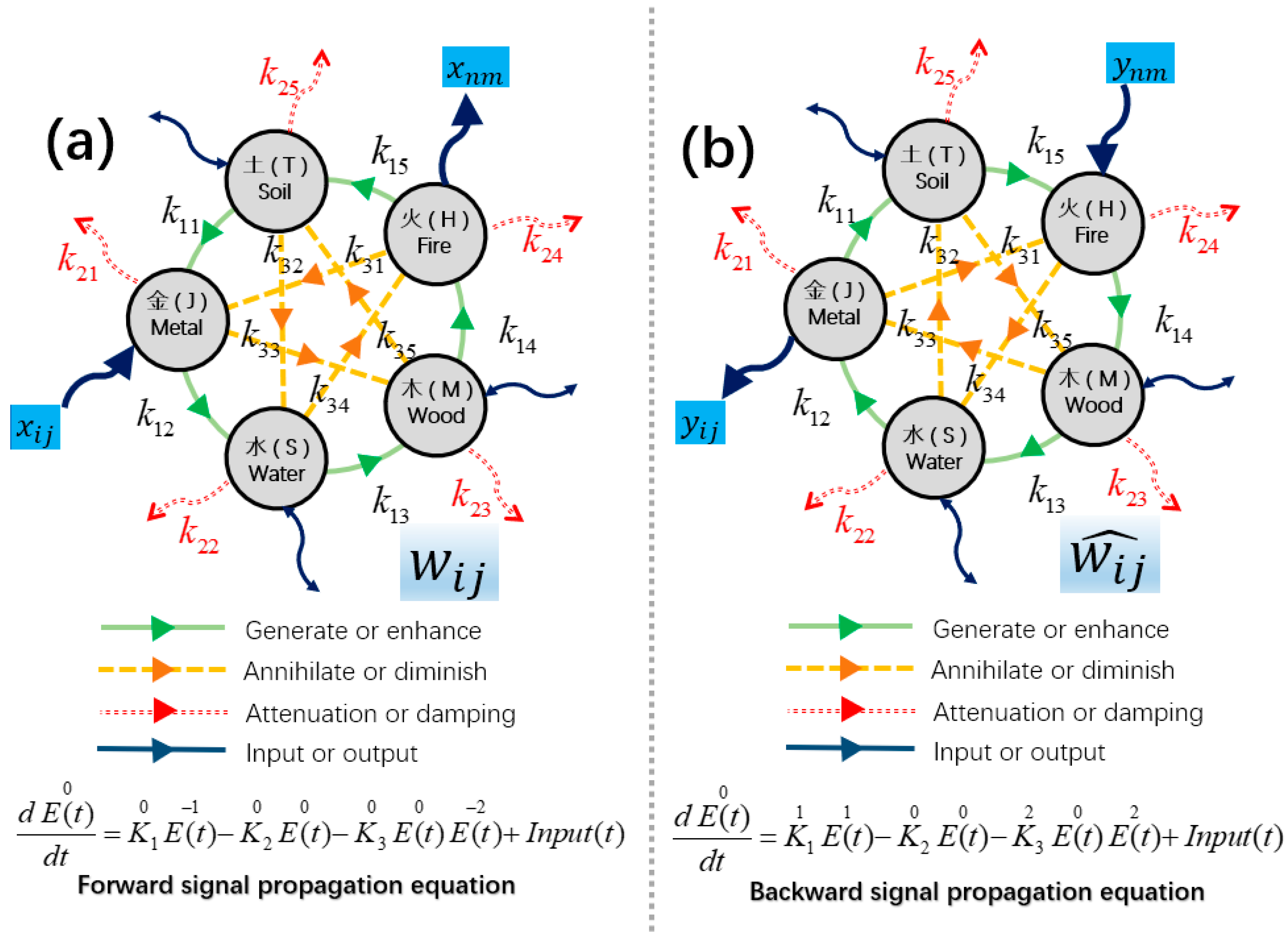

1.4. Solutions Based on Symmetric Differential Equations

2. Introduction to the Backpropagation Algorithm

2.1. Derivative Operation

2.2. Chain Rule

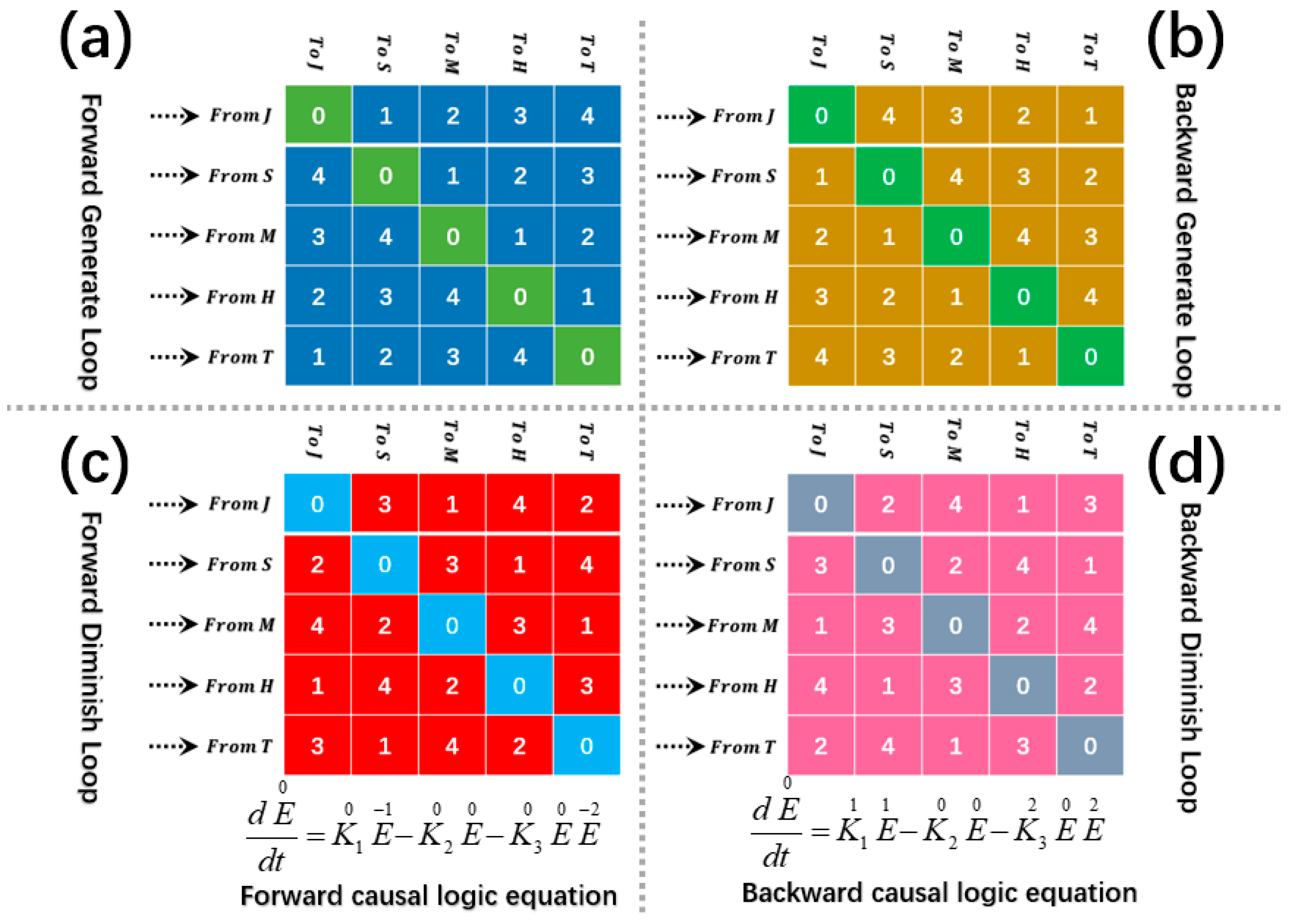

3. Symmetric Differential Equations and Causal Distance

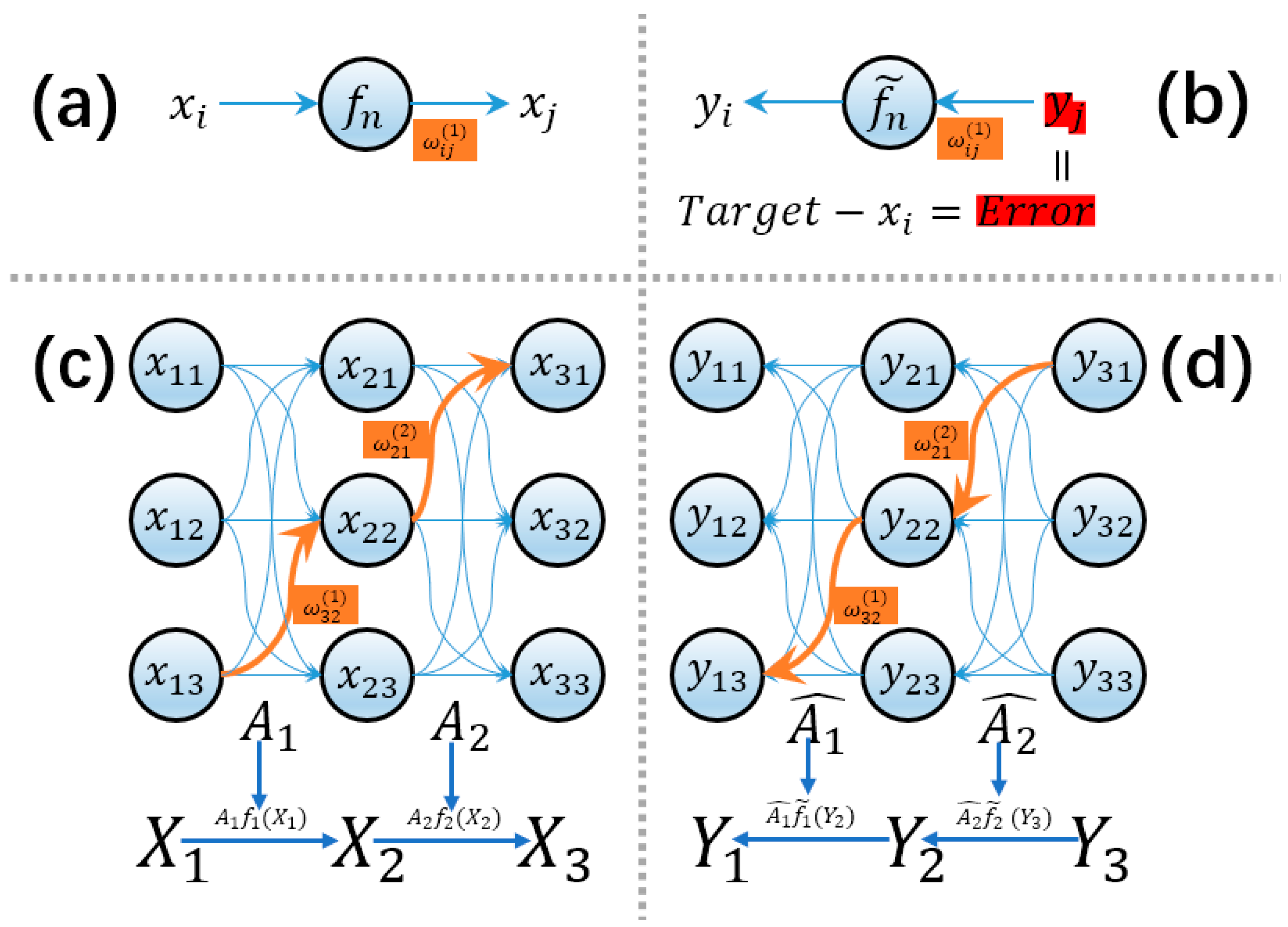

3.1. Neural Network Based on Symmetric Differential Equations

3.2. Causal Distance

- Reduction in analytical complexity: By assigning a causal distance to each dependency relationship, system analysis can adopt a strategy analogous to Principal Component Analysis (PCA) [18], ranking and filtering the contributing factors. Variables or parameters with short causal distances often constitute the core drivers of the system, while those with long distances and marginal influence can usually be neglected during preliminary modeling, which simplifies the analysis considerably.

- Quantification of influence strength: Causal distance provides not only a qualitative description of transmission paths but also a means for quantitative assessment when combined with coupling coefficients. In the aforementioned example, the direct dependency of J on k11 results in a strong and rapid effect, while the impact of k15 is attenuated due to intermediate transmission. This approach introduces a quantitative scale for dynamical systems, facilitating accurate identification of key control parameters in sensitivity analysis or parameter tuning tasks.

- Unified structural representation: Extending causal distance to cover parameter-variable dependencies enables a unified analytical tool that can describe both the internal causal feedback chains among variables and the regulatory effects of system parameters. This unification deepens our understanding of the “structural lag” phenomenon in complex systems and provides a theoretical foundation for the design of control strategies.
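The surviving text does not give the formal definition of causal distance, so the sketch below rests on two labeled assumptions: the causal distance from a parameter to a variable is the number of links on the shortest dependency chain between them, and influence strength is approximated by the product of coupling-coefficient magnitudes along a chain, so each intermediate link attenuates the effect. The graph is hypothetical: the names J, k11 and k15 echo the example the text refers to, while the intermediate variables x1, x2 and all coefficient values are invented for illustration. `core_drivers` mimics the PCA-like ranking and filtering step.

```python
from collections import deque

# Hypothetical dependency graph (illustrative values only).
# EDGES[a] lists (b, c): "a directly drives b with coupling coefficient c".
EDGES = {
    "k11": [("J", 0.9)],
    "k15": [("x1", 0.6)],
    "x1": [("x2", 0.5)],
    "x2": [("J", 0.8)],
}


def causal_distance(source, target, edges=EDGES):
    """Assumed definition: links on the shortest dependency chain (BFS)."""
    queue = deque([(source, 0)])
    seen = {source}
    while queue:
        node, dist = queue.popleft()
        if node == target:
            return dist
        for nxt, _ in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None  # target is not causally reachable from source


def chain_strength(source, target, edges=EDGES, visited=frozenset()):
    """Assumed measure: largest product of |coupling| over simple chains."""
    if source == target:
        return 1.0
    visited = visited | {source}
    best = 0.0
    for nxt, c in edges.get(source, []):
        if nxt not in visited:
            best = max(best, abs(c) * chain_strength(nxt, target, edges, visited))
    return best


def core_drivers(params, target, max_distance, edges=EDGES):
    """Rank parameters by causal distance and keep those within max_distance."""
    reachable = [p for p in params if causal_distance(p, target, edges) is not None]
    ranked = sorted(reachable, key=lambda p: causal_distance(p, target, edges))
    return [p for p in ranked if causal_distance(p, target, edges) <= max_distance]


for p in ("k11", "k15"):
    print(p, causal_distance(p, "J"), round(chain_strength(p, "J"), 3))
print(core_drivers(["k15", "k11"], "J", max_distance=1))  # ['k11']
```

Under these assumptions k11 acts on J directly (distance 1, strength 0.9), while k15 reaches J only through two intermediate variables (distance 3, strength 0.6 × 0.5 × 0.8), matching the qualitative picture of a strong direct effect versus an attenuated indirect one.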

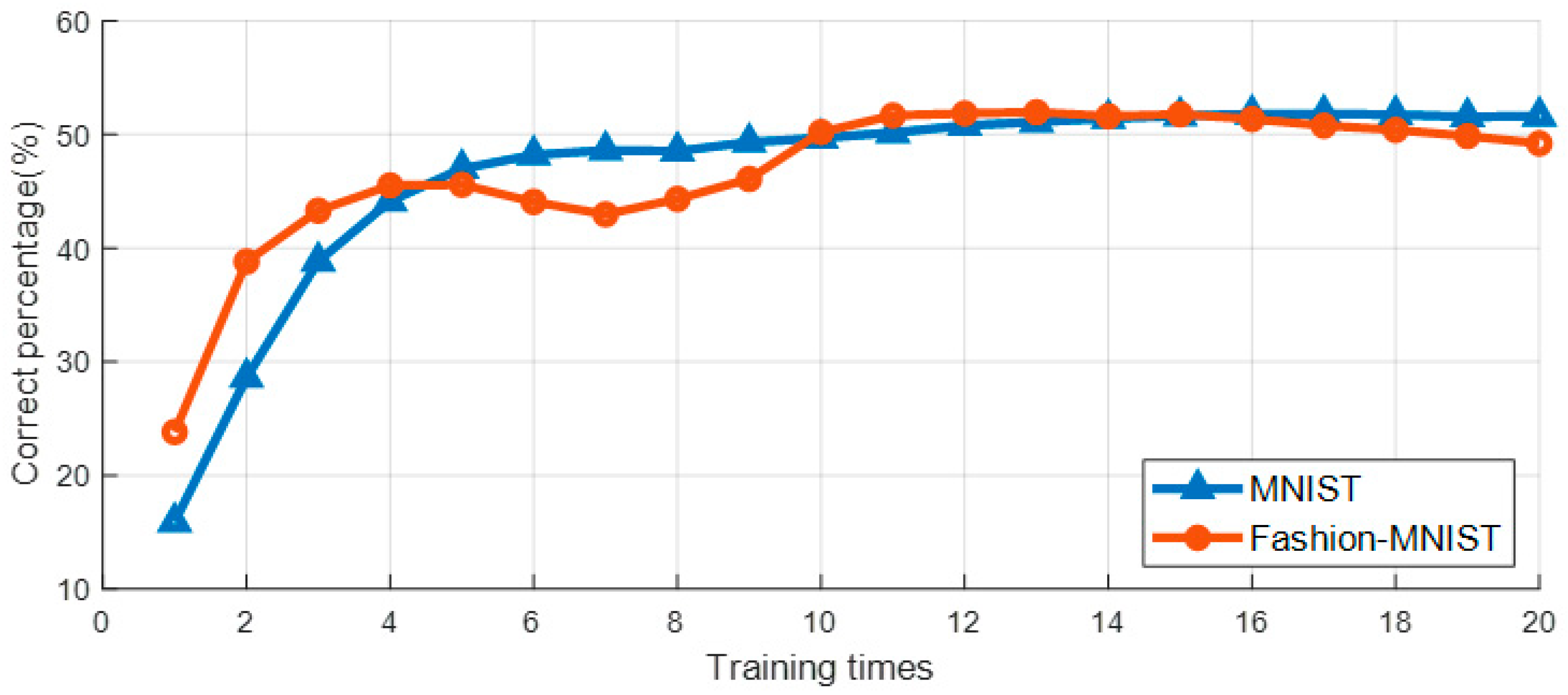

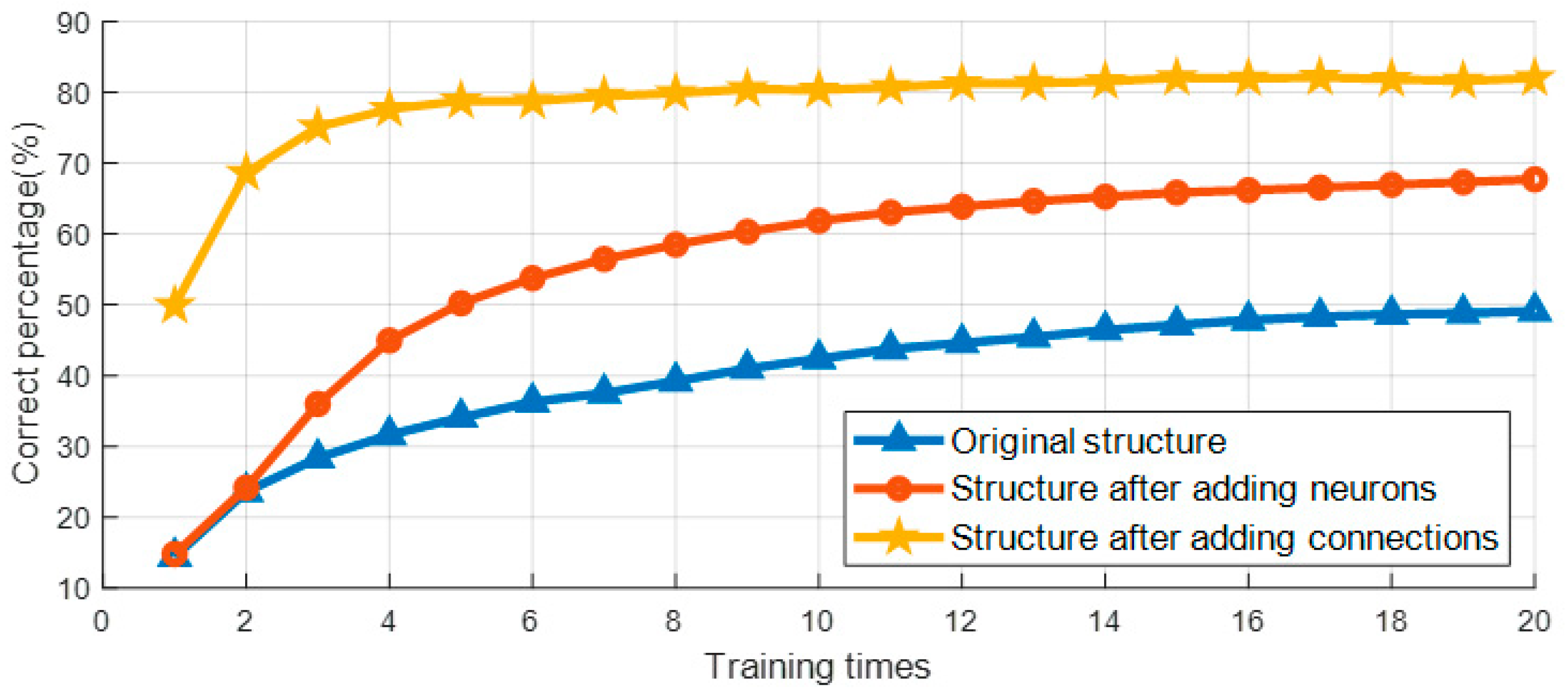

4. Experiment

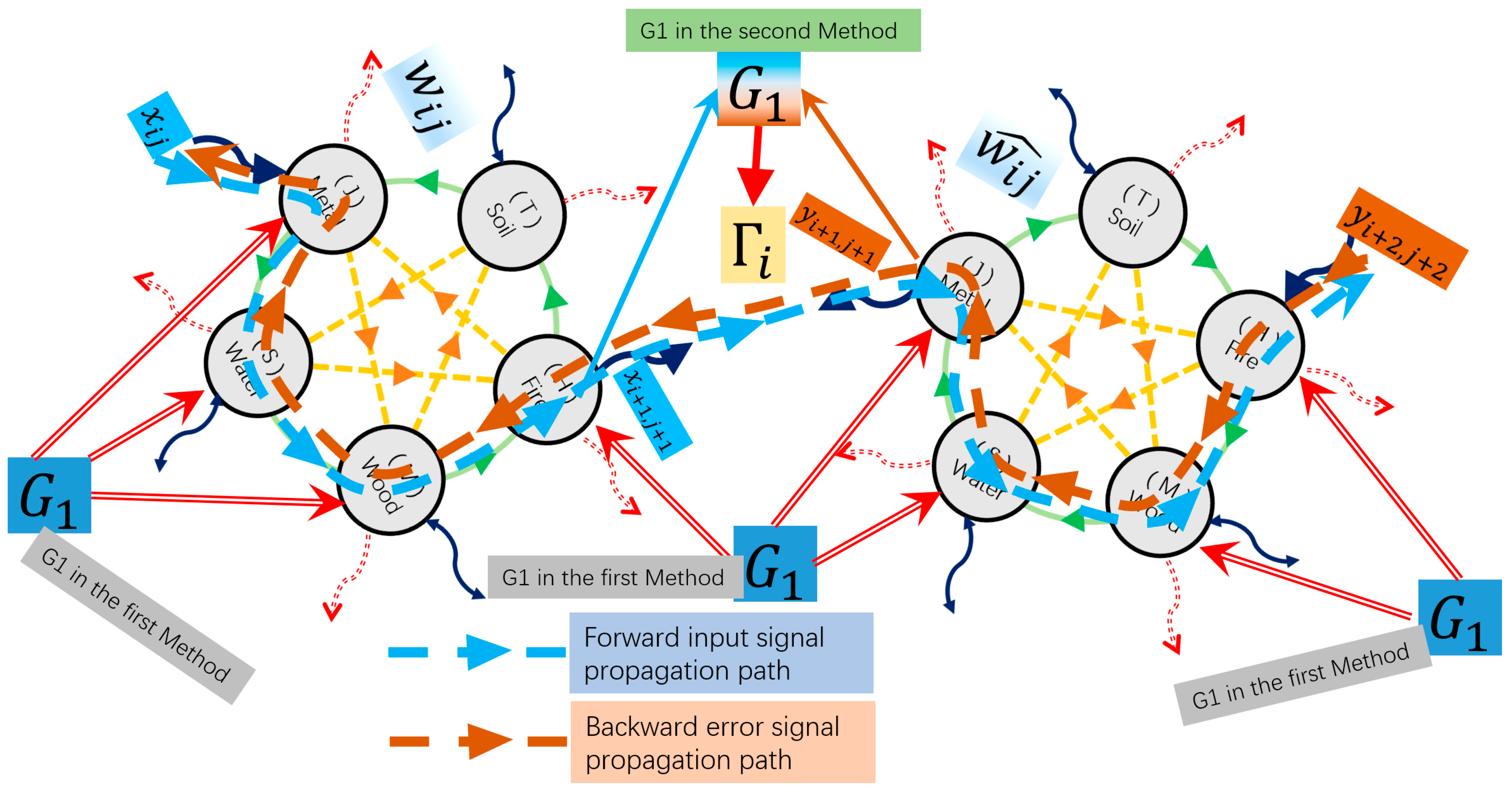

4.1. Forward and Backward Signal Design

4.2. Two Different Learning Methods

4.2.1. First Learning Method: Adjusting the System’s Fixed Points

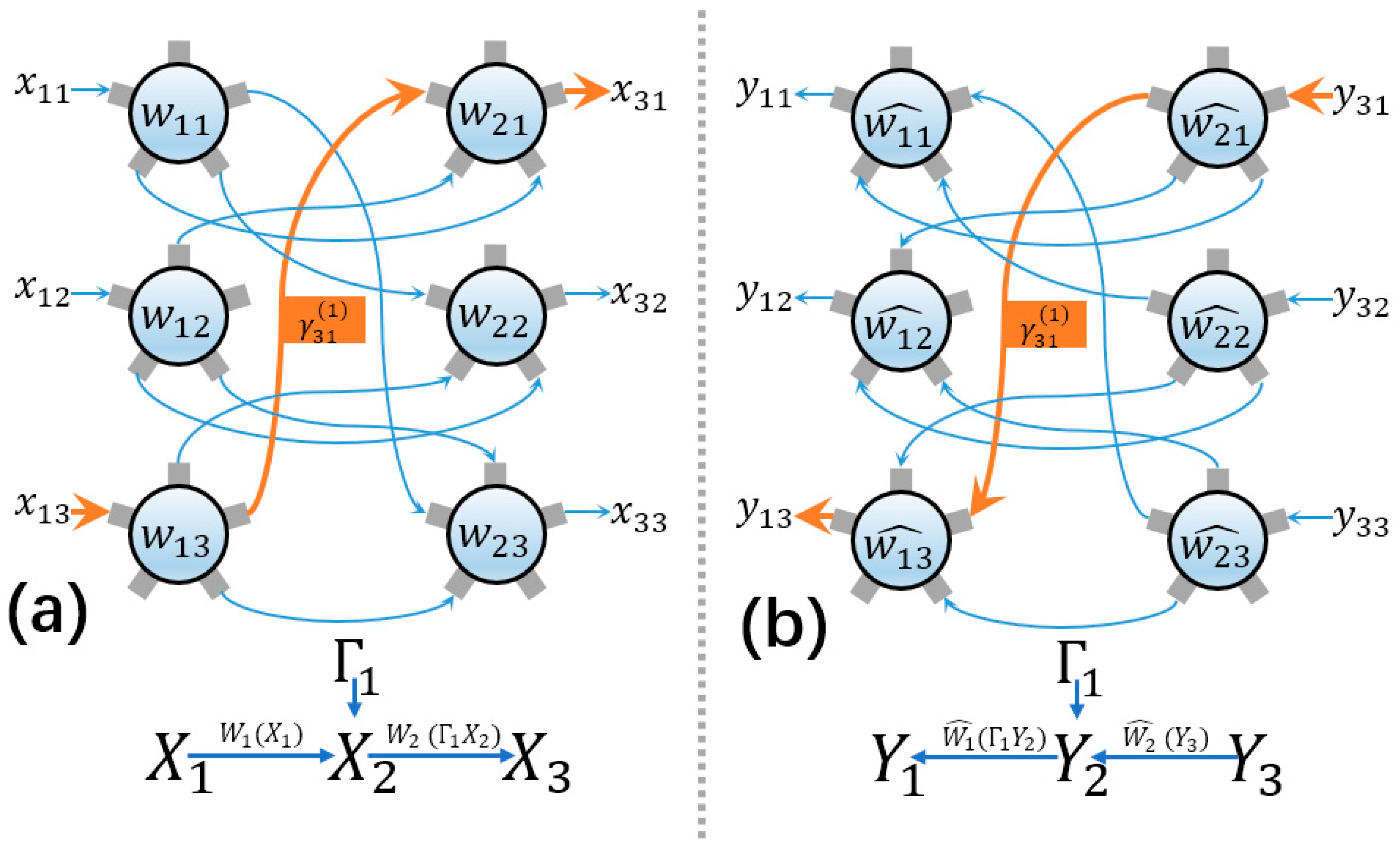

4.2.2. Second Learning Method: Adjusting Neuronal Connections

1. Feed the input signal into the network and compute its forward propagation through all layers.

2. At the output layer, compute the error signal as the difference between the output and the target.

3. Propagate the error signal backward through the network.

4. At each node, combine the forward signal and the backward error signal to construct the modulation variable G1.

5. Update the system parameters according to the computed modulation variable G1.

6. Repeat steps 1–5 until a convergence criterion is met (e.g., the error falls below a threshold or a maximum number of epochs is reached).
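The six steps can be sketched as a small training loop. This section does not specify how the backward signal travels or how G1 is formed, so the sketch makes two hypothetical assumptions: the error is sent back through the same connection coefficients without any activation derivative, and G1 for a connection is the product of its forward (presynaptic) signal and its backward (postsynaptic) signal. The network size, learning rate, toy regression target and all names (`train`, `forward`, `tol`) are illustrative; this is not the authors' implementation.

```python
import math
import random


def forward(x, W1, W2):
    """Step 1: forward pass through a tanh hidden layer and a linear output."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    y = sum(w * hj for w, hj in zip(W2, h))
    return h, y


def train(data, n_hidden=3, lr=0.05, epochs=200, tol=1e-4, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    W2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    losses = []
    for _ in range(epochs):  # Step 6: repeat until a stopping criterion
        total = 0.0
        for x, target in data:
            h, y = forward(x, W1, W2)  # Step 1
            e = target - y  # Step 2: output error
            total += e * e
            # Step 3 (assumption): send the error back through the same
            # connection coefficients, without any activation derivative.
            b = [W2[j] * e for j in range(n_hidden)]
            # Steps 4-5 (assumption): G1 = forward signal * backward signal,
            # and each connection changes in proportion to its G1.
            for j in range(n_hidden):
                W2[j] += lr * h[j] * e
                for i in range(n_in):
                    W1[j][i] += lr * x[i] * b[j]
        losses.append(total / len(data))
        if losses[-1] < tol:  # error-based convergence criterion
            break
    return W1, W2, losses


# Toy task: fit y = 0.5*x1 - 0.3*x2 on a 3x3 grid of inputs.
data = [((a, c), 0.5 * a - 0.3 * c) for a in (-1, 0, 1) for c in (-1, 0, 1)]
W1, W2, losses = train(data)
print(len(losses), losses[0], losses[-1])
```

Dropping the derivative leaves each hidden-unit update scaled by a positive factor relative to the exact BP update, which is why this particular toy loop still reduces the error; whether the paper's G1 behaves the same way cannot be inferred from this section.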

5. Summary

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BSP | Backward signal propagation |
| BP | Backpropagation |
| MLP | Multilayer perceptron |
| MNIST | Modified National Institute of Standards and Technology database |

References

1. Rosenblatt, F. The perceptron: A probabilistic model for information storage and organization in the brain. Psychol. Rev. 1958, 65, 386.

2. Minsky, M.; Papert, S.A. Perceptrons; MIT Press: Cambridge, MA, USA, 1969; Volume 6, p. 7.

3. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

4. Hochreiter, S. The vanishing gradient problem during learning recurrent neural nets and problem solutions. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 1998, 6, 107–116.

5. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009.

6. Raina, R.; Madhavan, A.; Ng, A.Y. Large-scale deep unsupervised learning using graphics processors. In Proceedings of the 26th Annual International Conference on Machine Learning (ICML '09), Montreal, QC, Canada, 14–18 June 2009.

7. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

8. Bottou, L. Large-scale machine learning with stochastic gradient descent. In Proceedings of COMPSTAT'2010: 19th International Conference on Computational Statistics, Paris, France, 22–27 August 2010; Keynote, Invited and Contributed Papers; Springer: Berlin/Heidelberg, Germany, 2010.

9. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

10. Hinton, G. The forward-forward algorithm: Some preliminary investigations. arXiv 2022, arXiv:2212.13345.

11. Lillicrap, T.P.; Santoro, A.; Marris, L.; Akerman, C.J.; Hinton, G. Backpropagation and the brain. Nat. Rev. Neurosci. 2020, 21, 335–346.

12. Crick, F. The recent excitement about neural networks. Nature 1989, 337, 129–132.

13. Stork, D.G. Is backpropagation biologically plausible? In Proceedings of the 1989 International Joint Conference on Neural Networks, Washington, DC, USA, 18–22 June 1989; IEEE: Piscataway, NJ, USA, 1989.

14. Jiang, K. A Neural Network Framework Based on Symmetric Differential Equations. ChinaXiv 2024, ChinaXiv:202410.00055.

15. Jiang, K. From Propagator to Oscillator: The Dual Role of Symmetric Differential Equations in Neural Systems. arXiv 2025, arXiv:2507.22916.

16. Izhikevich, E.M. Dynamical Systems in Neuroscience; MIT Press: Cambridge, MA, USA, 2007.

17. Olver, P.J. Equivalence, Invariants and Symmetry; Cambridge University Press: Cambridge, UK, 1995.

18. Abdi, H.; Williams, L.J. Principal Component Analysis. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 433–459.

19. Jiang, K. A Neural Network Training Method Based on Distributed PID Control. Symmetry 2025, 17, 1129.

20. Jiang, K. A Neural Network Training Method Based on Neuron Connection Coefficient Adjustments. arXiv 2025, arXiv:2502.10414.

| Aspect | Multilayer Perceptron | Symmetric Differential Equation Neural Network |
|---|---|---|
| Signal source | Defined by the input signal and the nonlinear function | Perturbation signal generated from the input signal |
| Nonlinear property | Activation function | Natural property of the system |
| Causal tracing method | Derivative operation | Differentiability of the equations |
| Traversing the topology | Chain rule | Invariance of causal distance |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, K.; Fu, Z. Backward Signal Propagation: A Symmetry-Based Training Method for Neural Networks. Algorithms 2025, 18, 594. https://doi.org/10.3390/a18100594


Chicago/Turabian style: Jiang, Kun, and Zhihong Fu. 2025. "Backward Signal Propagation: A Symmetry-Based Training Method for Neural Networks." Algorithms 18, no. 10: 594. https://doi.org/10.3390/a18100594
