Abstract

Neurodegenerative diseases, such as Parkinson’s and Alzheimer’s, present considerable challenges in their early detection, monitoring, and management. The paper presents NeuroPredict, a healthcare platform that integrates a series of Internet of Medical Things (IoMT) devices and artificial intelligence (AI) algorithms to address these challenges and proactively improve the lives of patients with or at risk of neurodegenerative diseases. Sensor data and data obtained through standardized and non-standardized forms are used to construct detailed models of monitored patients’ lifestyles and mental and physical health status. The platform offers personalized healthcare management by integrating AI-driven predictive models that detect early symptoms and track disease progression. The paper focuses on the NeuroPredict platform and the integrated emotion detection algorithm based on voice features. The rationale for integrating emotion detection is based on two fundamental observations: (a) there is a strong correlation between physical and mental health, and (b) frequent negative mental states affect quality of life and signal potential future health declines, necessitating timely interventions. Voice was selected as the primary signal for mood detection due to its ease of acquisition without requiring complex or dedicated hardware. Additionally, voice features have proven valuable in further mental health assessments, including the diagnosis of Alzheimer’s and Parkinson’s diseases.

1. Introduction

The paper presents NeuroPredict, a complex health monitoring platform focused on managing neurodegenerative diseases by monitoring both physical and mental health, together with the emotion recognition algorithm integrated into the platform. NeuroPredict has been designed and is under development in our laboratory as part of a four-year research project, currently in its second year of implementation. The platform aims to monitor and manage patients suffering from one of three neurodegenerative conditions: Alzheimer’s disease (AD), Parkinson’s disease (PD), or multiple sclerosis (MS). In the context of integrating IoMT (Internet of Medical Things) and AI (artificial intelligence) for proactive healthcare, our focus is on predictive models for managing the three targeted neurodegenerative diseases. Emotion detection from voice is integrated into the platform both to highlight the connection between mental and physical health and as a starting point for further analysis of voice signals, enabling the development of speech-based predictive models tailored to the targeted diseases.

The world population is aging, as shown in the latest Ageing Report of the European Commission [1], which analyzes the aging of the population within the European Union and the financial impact of demographic changes on the economy of each member state. The 2024 Ageing Report predicts a clear upward shift in the age distribution across all member states of the European Union (EU), with consistent and continuous growth of the old-age population (aged 65 and over). This shift will be reflected in the old-age dependency ratio (the old-age population divided by the working-age population, aged 20 to 64), leading to an increased need for caregivers for the elderly who cannot care for themselves.

Among age-related problems, cognitive decline is one of the most common. For AD and PD, old age is the most significant risk factor [2,3]. Other neurodegenerative diseases, such as MS, have become more prevalent in recent years but are not necessarily age-related. Key risk factors and reasons for the increase in prevalence of neurodegenerative diseases are related to lifestyle factors like poor dietary habits, physical inactivity, smoking and alcohol use, certain medications, and pre-existing medical conditions like cardiovascular diseases and chronic inflammation [4]. Continuously monitoring these factors over extended periods will clarify the importance of each of them and, in the long run, make the targeted medical conditions easier to diagnose accurately.

The aim of this paper is to present the NeuroPredict platform and the development of an emotion detection algorithm based on voice features specifically aimed at patients with neurodegenerative diseases, integrated into the NeuroPredict platform. Voice has been chosen as an easy-to-acquire signal that reflects information about the emotional state and may also be analyzed for neurological disorder recognition. The work emphasizes the use of advanced AI techniques for the monitoring and management of PD, AD, and MS. The paper explores how the NeuroPredict platform integrates IoMT devices with AI-driven data collection and real-time analysis to enable early detection and support personalized long-term care. By examining the platform’s architecture and predictive modeling capabilities, the paper aims to show how AI enhances proactive healthcare, improving patient outcomes and quality of life amidst the challenges of an aging population.

This research contributes to the field of emotional state monitoring, particularly in the realm of neurodegenerative diseases, by designing and integrating an effective voice-based emotion recognition algorithm into the NeuroPredict platform. The approach assesses each spoken-word recording individually with a neural network that classifies the expressed emotion into one of three categories: positive, neutral, or negative. Unlike conventional speech emotion recognition (SER) algorithms used in human–computer interaction systems, which typically handle a wider, more detailed set of emotions (such as happiness, disgust, and fear), this study opts for the three broader categories, which are more suitable for mental health assessment.
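As an illustration of how fine-grained SER labels can be collapsed into the three broader categories, the sketch below uses a lookup table. The specific assignment of each emotion to a category, and the names `VALENCE_BY_EMOTION` and `to_valence`, are assumptions made for this example; they are not the mapping used in the NeuroPredict platform.

```python
# Hypothetical mapping from fine-grained emotion labels to three broad
# categories. The assignment of each label is an illustrative assumption.
VALENCE_BY_EMOTION = {
    "happiness": "positive",
    "neutral": "neutral",
    "sadness": "negative",
    "anger": "negative",
    "fear": "negative",
    "disgust": "negative",
}


def to_valence(emotion):
    """Map a fine-grained emotion label to positive, neutral, or negative."""
    key = emotion.strip().lower()
    if key not in VALENCE_BY_EMOTION:
        # Fail loudly instead of guessing a category for unknown labels.
        raise KeyError(f"no valence mapping defined for emotion {emotion!r}")
    return VALENCE_BY_EMOTION[key]


if __name__ == "__main__":
    for label in ("Happiness", "neutral", "fear"):
        print(label, "->", to_valence(label))
```

Raising an error for unmapped labels keeps the relabeling explicit when corpora with different label sets are merged.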

To ensure the model’s robustness and generalizability, a diverse and comprehensive dataset was created using speech samples from four publicly available datasets focused on voice-based emotion detection. This comprehensive dataset supports model training and testing, minimizing the risk of overfitting and enhancing the algorithm’s performance on new, previously unseen data collected under different recording conditions. This way, the algorithm’s adaptability and flexibility are sustained. To further boost the model’s adaptability to various audio environments and settings, the research employs a range of data augmentation techniques, including noise addition, time stretching, and pitch shifting. The research addresses privacy concerns regarding voice recordings by not storing the raw voice recordings. Instead, the NeuroPredict platform only stores the final classification decision.
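A minimal sketch of waveform-level augmentation is given below, using only the Python standard library. It shows noise addition at a chosen signal-to-noise ratio and a simple speed perturbation by linear-interpolation resampling. The speed perturbation changes duration and pitch together, so it is a simplified stand-in and not true time stretching or pitch shifting, which are usually implemented with a phase vocoder (for example, the `time_stretch` and `pitch_shift` functions in librosa). The function names, the SNR value, and the test tone are assumptions made for illustration and are not taken from the platform.

```python
import math
import random


def add_noise(signal, snr_db, rng=None):
    """Return a copy of `signal` with white Gaussian noise at `snr_db` dB SNR."""
    if not signal:
        raise ValueError("signal must not be empty")
    rng = rng or random.Random(0)
    signal_power = sum(x * x for x in signal) / len(signal)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, sigma) for x in signal]


def change_speed(signal, rate):
    """Resample by linear interpolation; rate > 1 shortens the signal.

    This alters duration and pitch together (simple speed perturbation).
    """
    if not signal or rate <= 0:
        raise ValueError("signal must be non-empty and rate must be positive")
    n_out = max(1, int(round(len(signal) / rate)))
    last = len(signal) - 1
    out = []
    for i in range(n_out):
        pos = i * rate
        lo = min(int(pos), last)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append((1 - frac) * signal[lo] + frac * signal[hi])
    return out


if __name__ == "__main__":
    # Hypothetical input: one second of a 220 Hz tone sampled at 8 kHz.
    tone = [math.sin(2 * math.pi * 220 * n / 8000) for n in range(8000)]
    noisy = add_noise(tone, snr_db=10)
    faster = change_speed(tone, rate=1.1)
    print(len(noisy), len(faster))
```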

The integration of this algorithm within the NeuroPredict platform improves the health monitoring process. The platform combines data from IoMT devices with self-reported information to provide regular and thorough emotional state monitoring for patients. This integration enables preventative measures and prompt updates to treatment procedures, ultimately improving patient care.

The structure of this paper is as follows: Section 2 presents a literature review covering the targeted neurodegenerative diseases, predictive modeling, and voice analysis for emotion detection. Section 3 presents the NeuroPredict platform, with the IoMT and deep learning algorithms based on data fusion from multiple modalities, and the proposed emotion detection algorithm based on voice features. Section 4, Results, presents the performance of the mental state detection algorithm based on voice features and the integration of the algorithm into the platform. The final section discusses the results and future work.

2. Literature Review

This section provides the basis for screening and monitoring PD, AD, and MS. It outlines the common symptoms that can be detected and tracked for disease detection and progression monitoring. Additionally, it discusses the foundation of predictive modeling specifically for these targeted diseases. The third subsection details the state-of-the-art methods for emotion detection using voice features, serving as a basis for comparison between the current approach and other works in the literature.

2.1. Monitoring Neurodegenerative Diseases

Depending on the monitored condition, the following aspects have been found in the literature as relevant for monitoring neurodegenerative diseases:

- PD: (1) Motor symptoms: PD can cause a variety of motor symptoms, the most common being akinesia (paucity of movements and delayed initiation of movement), bradykinesia (slowness of movement), hypokinesia (reduced amplitude of movement), postural instability (impaired ability to recover balance), rigidity (increased resistance to passive joint movement), stooped posture (a forward-hunched posture), and tremor at rest (involuntary shaking, typically starting in the hands or fingers when the muscles are relaxed) [2,5]. Postural instability, bradykinesia, stiffness, and tremors are the main symptoms. Wearables containing accelerometers are examples of technologies that can continuously track these motor signs [6]. Other movement disorders appear in patients with PD during the night [7], including nocturnal hypokinesia or akinesia, restless legs syndrome, and periodic limb movement in sleep, among other abnormal movements during sleep; (2) Gait analysis: Patients with PD frequently have irregular gaits, which can be tracked by sensors worn on the body or placed in shoes [8]; (3) Speech analysis: Using microphones and voice analysis algorithms, voice alterations, such as reduced volume and pitch and rushed and slurred speech, can be observed [9]; (4) Non-motor symptoms: Sleep trackers, cognitive tests, and mood assessment apps can be used to monitor sleep disorders, cognitive deterioration, and mood swings [10]. Sleep disorders (SDs) are some of the most common non-motor symptoms of PD [7]. SDs may be caused or worsened by PD-related motor issues, since abnormal movement events related to PD, such as akinesia, rigidity, and dystonia, occur during sleep as well. PD primarily affects motor functions; mental decline generally appears in later stages, and the cognitive issues are more related to slowed thinking, with memory loss appearing later in the progression of the disease [8].

- AD: (1) Cognitive function: Cognitive decline is usually the primary symptom of AD, with memory loss being one of the first noticeable symptoms [8]. Frequent cognitive assessments using digital platforms can estimate the degree of cognitive impairment and its evolution over time, serving as screening assessments [11]; (2) Daily activity monitoring: Wearable technology and smart home devices can monitor daily routines to spot irregularities that may point to cognitive deterioration [12]; (3) Sleep patterns: Sleep-related health data (such as body movements, cardiac rhythm, respiration rate, snoring, and interruptions in breathing) are necessary for an overview of sleep quality, and sleep patterns can be tracked using wearables to detect sleep disturbances common in AD [11]. Insufficient sleep is a risk factor for AD, and AD in turn leads to sleep deprivation and disruptions [13]; (4) Movement disorders: Although AD manifestations are typically related to cognitive functions, rigidity, slowness, and other movement disorders are common [14].

- MS: (1) Neurological function: Regular and comprehensive assessment of cognitive and motor functions using digital platforms can help manage symptoms [15]; (2) Mobility and balance: Mobility issues are common in the majority of MS patients, for whom decreased motor control, muscle weakness, balance problems, spasticity, fatigue, and ataxia may lead to an inability to walk long distances, an impaired gait pattern, and an inability to support body weight [16,17]. These problems may lead to complete inability to walk, either permanently or during relapses. Monitoring balance, coordination, and gait aids in determining MS progression [18]; (3) Muscle strength and spasticity: Assessing the strength and tone of the muscles [18]; (4) Sensory changes: Monitoring for numbness, tingling, and pain [19]; (5) Fatigue levels: Assessing the degree and influence of fatigue [20]; (6) Speech and swallowing: Evaluating speech and swallowing difficulties [21]; and (7) Sleep analysis: Poor sleep may affect daytime activities, as it leads to daytime fatigue. Fatigue (both mental and physical) is a known problem directly linked to the condition [17].

Continuous and precise monitoring aids in diagnosis, tracking the progression of the disease, managing symptoms, and modifying treatment regimens as necessary. Medical technology and the Internet of Things (IoT) converge in the Internet of Medical Things (IoMT), which facilitates the remote transmission of device and application data to medical servers for analysis. IoMT enables healthcare professionals to access patients’ data in real-time via mobile apps or web platforms, integrating information processing with medical equipment and external activities. Connected technology, supported by software and smart devices, allows individuals to monitor their health and adhere to doctors’ treatment recommendations more effectively. Additionally, it simplifies the process for doctors to access patients’ medical records [22].

IoMT has several advantages for both patients and healthcare practitioners, including (1) Accessibility: real-time access to a variety of patient data is made possible by IoMT-based devices; (2) Enhanced efficiency: IoMT devices quickly gather extremely precise information, enabling well-informed decision-making. This data can also be analyzed by AI-driven algorithms to produce precise decisions or judgments; and (3) Reduced costs: patients may be able to leave the hospital sooner and have quicker access to medical care.

Modern technology is needed for IoMT in order to collect data, communicate quickly, and guarantee security and compliance [23]. The deployment of IoMT-based medical technology to older people has been the focus of extensive prior research [24]. Both “reasons for” and “reasons against” IoMT wearable adoption exist. The senior population’s routine of going to doctors for checkups and concerns about data privacy and security of their medical records kept remotely (in the cloud) are the primary “reasons against” arguments. It can be difficult for older people to use wearables based on IoMT. On the other hand, the relative advantages, compatibility with current healthcare procedures, and simplicity of use for health monitoring are the “reasons for” adopting IoMT wearables [25].

The integration of IoMT and AI for proactive healthcare aims to predict, track, and treat neurodegenerative disorders. This process consists of the following steps: (1) Monitoring: using IoMT devices, continuously gather data on a range of physical and cognitive parameters; (2) Predicting: analyzing data, finding patterns, and predicting disease progression or exacerbations; and (3) Managing: using predictive analytics to recommend tailored interventions, managing symptoms, or delaying the progression of the disease. By enabling prompt and personalized responses, this integration enhances patient outcomes and quality of life for both patients and healthcare professionals.

Recent developments in this sector include: wearable technology advancements (wearables with high-precision sensors and clothing-integrated sensors for continuous, discreet monitoring), enhanced data analytics (complex datasets are analyzed by machine learning—ML algorithms to develop predictive models for disease progression), Big Data integration (combining genetic data, wearable data, and medical records to improve the accuracy of predictive models), personalized healthcare (customizing interventions and treatment based on data insights), remote monitoring (integrating monitoring information with telemedicine systems to enable ongoing care and remote consultations), and home-based diagnostics (development of portable diagnostic tools that can be used at home for regular health assessments).

Limiting factors include the high cost of cutting-edge wearables and AI-powered systems, the need for clinical validation of the efficacy and safety of new technologies, privacy and security concerns regarding personal health data, interoperability issues in integrating data from diverse sources into a unified system, the difficulty of ensuring the accuracy and reliability of IoMT devices and AI models, and patient acceptability of wearable devices.

2.2. Predictive Modeling for Neurodegenerative Diseases

Predictive modeling is an area of statistics and ML, based on data analytics techniques, that aims to model a system in order to predict states of interest that the system may reach. When applied in healthcare, predictive modeling may serve multiple purposes: developing early diagnosis models that allow early intervention and potentially slow disease progression; identifying risk factors; personalizing treatment plans and screening programs; predicting disease evolution and outbreaks; and optimizing patient care in general.

Most of the ML techniques may be applied for healthcare predictions, starting from simple logistic regression techniques to random forests, support vector machines, complex neural networks with multiple hidden layers and different activation functions, and complex combined architectures. Depending on the required task, different models have been proven to be more effective than others. Recent advancements in ML algorithms applied in other fields may lead to improvements in applications for healthcare. For example, advancements in the area of computer vision may be applied to medical image processing for disease diagnosis based on retinal images, CT or MRI data, or creating digital twins for different simulations [26,27,28]; advancements in natural language processing (NLP) may be applied in healthcare for different predictive analytics for electronic health records, for sentiment analysis, or even medical diagnosis [29,30]. Different computational improvements for time series forecasting may come from different fields and find applications in healthcare. Some specific examples are: the techniques presented in [31] for analyzing motion patterns of animals in the wild based on non-Brownian stochastic processes may be applied in analyzing and predicting motion-related symptoms of people with neurodegenerative diseases; pattern recognition in financial fraud detection presented in [32] may be applied in identifying fraud and unusual patterns in healthcare systems; predictive maintenance in industrial equipment presented in [33] may be applied for predictive health monitoring; and environmental monitoring using satellite data presented in [34] may be applied for epidemiology.

When building predictive models, the quality of the data used is of the essence and directly affects the performance of the built models. There are two major types of ML algorithms: supervised algorithms that learn from labeled data and unsupervised learning algorithms that make decision rules based on unlabeled data by finding patterns, structures, and relationships in the learning data. In both cases, the quality and quantity of data provided for learning are fundamental to the performance of the model. For predictive models for healthcare, data must be obtained in clinically validated conditions, rigorously checked and curated, and, often, obtained during long periods of time by periodically or continuously monitoring the same subjects. High-quality healthcare data must include comprehensive and accurate clinical records, demographic information, and relevant biomarkers, all collected following standardized protocols to ensure consistency and reproducibility. This meticulous approach to data collection and management is essential for developing robust predictive models that can support early diagnosis, personalized treatment plans, and improved patient outcomes.

Modern trends demonstrate advances in multimodal data integration (combining data from medical records, wearables, genetic analysis, and lifestyle factors to create comprehensive models), real-time monitoring (using IoMT devices to gather real-time data on physical and cognitive parameters, analyzing them in real-time, and providing instant alerts and recommendations based on the obtained results), and ML techniques (deep learning and combining multiple models to improve predictive performance and robustness), which enhance the accuracy and relevance of predictive models.

To fully realize the benefits of these technologies in proactive healthcare, however, issues like data quality (related to inconsistent or incomplete data and differences in data formats and collection methods across sources), model interpretability (for complex models that healthcare providers must comprehend and trust), ethical considerations, and clinical validation and approval of predictive models must be addressed.

Predictive models can identify patterns that may be imperceptible to human caregivers or medical professionals and that may be indicative of disease onset and progression. Despite their potential, predictive models that have not yet been clinically validated are deployed and used only at small scales, and they are not integrated into standardized protocols for diagnosis and disease tracking. For the moment, predictive models are deployed locally to assist healthcare professionals, but the human professional remains responsible for the final decisions. However, as different models undergo clinical validation and refinement, their integration into the broader healthcare system is expected to grow, leading to more widespread and standardized use. Example clinical trials for predictive models built on actual patient data are numerous and have various applications in healthcare, such as predicting the risk of coronary heart disease based on a specific health questionnaire using fuzzy logic and logistic regression [35], prediction tools for patients and physicians for different types of cancer risk assessment and outcome predictions [36], predicting the risk of hospital readmission [37], predicting outbreaks of diseases (an application that was used in real life during the COVID-19 pandemic), AI-assisted dermoscopy integrated into smart devices [38], and even AI-assisted surgery integrated into Da Vinci robots [39].

Predictive modeling for neurodegenerative diseases is a rapidly evolving field with the potential to significantly improve patient outcomes through early detection and personalized interventions. Predictive modeling for AD, PD, and MS involves the use of advanced computational techniques to improve early diagnosis, monitor disease progression, and tailor personalized treatment strategies. These models integrate various types of data, including genetic, clinical, and imaging data, to identify patterns and biomarkers indicative of disease onset and progression.

In the case of AD, predictive models may be used for early diagnosis and for analyzing disease progression. AI models may use genetic data comprising information about the APOE gene, associated with an increased risk of developing AD [2], and imaging data from magnetic resonance imaging (MRI) or positron emission tomography (PET) scans to detect brain changes or protein deposits that are characteristic of AD [40]. When integrated into a home health monitoring system, predictive modeling can be used for cognitive evaluation, sleep quality assessment, activity monitoring, or analyzing speech to detect changes in language usage or speech production.

In the case of PD, predictive models serve critical roles in early detection and disease progression analysis. These AI-driven models utilize diverse data sources, such as genetic information, including mutations in genes associated with PD risk, and imaging data from technologies like MRI or dopamine transporter (DAT) scans, to identify structural and functional changes in the brain related to PD symptoms [40,41]. In the area of home health monitoring systems, predictive modeling enables continuous assessment of motor symptoms such as abnormal movements, posture, and balance. It can also aid in evaluating cognitive function, tracking physical activities, and analyzing speech patterns to detect changes indicative of PD progression or medication response.

In the case of MS, predictive modeling may be of use in monitoring disease progression and improving patient management. Utilizing a range of data sources, predictive models integrate genetic markers linked to MS susceptibility, along with clinical assessments, like the expanded disability status scale (EDSS), to track motor and cognitive impairments over time [17]. Imaging techniques, such as MRIs, provide insights into lesion formation and progression within the central nervous system [21]. In the context of home-health monitoring systems, predictive modeling facilitates continuous evaluation of mobility changes, physical activity levels, respiratory function, speech abilities and cognitive functions [15], all important for optimizing treatment plans and enhancing quality of life for individuals living with MS [21].

Being able to predict the onset, progression, and exacerbations of conditions like PD, AD, and MS involves the use of statistical and ML techniques:

- Time series analysis: These models analyze time series data from IoMT devices to forecast future health states [42];

- ML models: Supervised and unsupervised learning techniques are used to develop models that can predict the onset or progression of neurodegenerative diseases based on historical data and real-time monitoring. Deep learning techniques and neural networks are used to examine intricate patterns in large datasets, increasing prediction accuracy. Convolutional neural networks (CNNs), including VGG16 models, have been used for predicting AD from MRI images and PD from spiral drawings with high accuracy [43]. Recurrent neural networks (RNNs), CNNs, and transformer-based models have also been used for building different predictive models. These techniques exhibit excellent accuracy, show potential for early diagnosis, and can improve patient outcomes [43,44];

- Multimodal data integration: Prediction accuracy is increased by combining data from multiple sources such as genetic information, medical records, and wearable technology. To forecast the course of a disease and comprehend biological trends in neurodegenerative disorders, data-driven models of disease progression incorporate multiple sources, for example imaging and cognitive testing. These models allow for accurate patient staging and offer fine-grained longitudinal patterns [41].
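To make the time series item above concrete, the sketch below forecasts the next value of a daily measurement with simple exponential smoothing. This technique is chosen here only for brevity; the source does not state which forecasting method is used in [42] or in the platform. The function name, the smoothing factor, and the step counts are hypothetical.

```python
def exponential_smoothing_forecast(series, alpha=0.3):
    """One-step-ahead forecast using simple exponential smoothing.

    `alpha` in (0, 1] controls how strongly recent values are weighted.
    """
    if not series:
        raise ValueError("series must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for value in series[1:]:
        # Blend the newest observation with the running smoothed level.
        level = alpha * value + (1 - alpha) * level
    return level


if __name__ == "__main__":
    # Hypothetical daily step counts from a wearable device.
    daily_steps = [4200, 3900, 4100, 3500, 3300, 3000, 2800]
    print(round(exponential_smoothing_forecast(daily_steps)))
```

A forecast that keeps drifting away from a subject's usual range could be one input to an alerting rule, although any such threshold would need clinical validation.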

The NeuroPredict platform integrates predictive modeling algorithms tailored for each specific disease (AD, PD, and MS) using the data provided by the IoMT devices integrated into the home environment and/or worn by the monitored subjects in order to provide personalized healthcare.

2.3. Voice Analysis for Emotion Detection in Neurodegenerative Diseases

In voice analysis for emotion detection, speech patterns and acoustic properties are employed to identify emotional states. This approach is becoming more widely used in healthcare, especially in the treatment of neurodegenerative illnesses like PD, AD, and MS, where mental wellness is essential to general health [10,45]. Emotion detection modeling is the process of creating voice analysis-based models that can differentiate between various emotional states.

Voice analysis for emotion detection in neurodegenerative diseases is specifically used for the following:

- Monitoring emotional health: AD, PD, and MS patients should have their emotional health regularly assessed because it has a significant impact on their overall quality of life [46];

- Early detection of emotional changes: Recognizing the early warning indicators of emotional disorders, such as anxiety and depression, which are widespread in neurodegenerative illnesses;

- Personalized interventions: Delivering prompt, individualized emotional support in response to discerned shifts in emotional states.

Emotional states, whether positive or negative, significantly influence various physiological aspects of the human body, such as muscle tension, blood pressure, heart rate, respiration, and vocal tone [47]. These physiological changes are reflected in vocal characteristics, independent of the lexical content of speech. Recent research in vocal analysis [48,49] has demonstrated that different emotional states can be differentiated based on specific acoustic features, including temporal and spectral features. Speech rate, pitch, tone, and rhythm analysis are used to discern emotional states. Natural language processing (NLP) and ML methods are utilized to extract emotional indicators from speech data [50].

The methods used for performing voice analysis for emotion detection are based on ML and deep learning methods. Various ML algorithms, including support vector machines, K-nearest neighbors, and decision trees, have been implemented to detect emotions from audio data. Features, such as Mel frequency cepstral coefficients (MFCCs), are used to achieve high accuracy in emotion classification [51]. Deep neural networks using convolutional and pooling layers have shown results with good accuracy. Emotions like melancholy, happiness, and rage are categorized by processing raw audio data and extracting attributes [52].
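As a rough illustration of one of the classical classifiers mentioned above, the sketch below applies K-nearest neighbors to feature vectors that are assumed to have been extracted beforehand (for instance, per-recording MFCC means). Feature extraction itself is not shown. The toy two-dimensional vectors, the labels, and the function name `knn_predict` are hypothetical and do not reproduce any of the cited methods.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Predict a label by majority vote among the k nearest training vectors."""
    if len(train_features) != len(train_labels) or not train_features:
        raise ValueError("training features and labels must be non-empty and aligned")
    # Euclidean distance from the query to every training vector.
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical two-dimensional feature vectors standing in for MFCC statistics.
    features = [[0.0, 0.0], [0.1, 0.2], [5.0, 5.0], [5.2, 4.9]]
    labels = ["neutral", "neutral", "negative", "negative"]
    print(knn_predict(features, labels, [0.05, 0.1], k=3))
```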

Voice analysis for emotion detection is a promising field with significant potential for improving the management of neurodegenerative diseases, such as PD and AD. Recent trends include advancements in acoustic feature extraction for capturing nuances in voice signals, ML techniques (CNNs and RNNs), multimodal emotion detection (combining voice analysis with facial expression recognition, physiological signals, and body language analysis), and real-time processing. These developments enable continuous and accurate monitoring of emotional states, which is crucial for personalized healthcare interventions.

However, challenges such as data quality (ambient noise, speech variability due to accent, dialect, etc.), model generalizability across different populations, privacy concerns about recorded voice data, technical limitations (low-latency and high-accuracy processing requirements), and the need for clinical validation by trials and studies must be addressed to fully realize the benefits of this technology in proactive healthcare.

The authors of [53] compare different machine learning techniques on the same datasets using the same features. For example, they compare using only time domain features, only frequency domain features, or both, with multiple machine learning approaches: random forests (RFs), naïve Bayes, support vector machines (SVMs), and CNNs, among others. In their experiments, the CNN approach significantly outperformed the other models. The databases used for testing are Emo-DB, SAVEE [54], and RAVDESS [55], with a separate model built for each of the datasets. The reported average accuracies go as high as 96%, but the models are tested only on data from the same dataset. The best reported performances were obtained using a mix of frequency and time domain features. In [56], using approaches such as a deep CNN combined with other ML techniques, the authors achieve an average accuracy of 90.50% for identifying emotions from the Emo-DB dataset, 66.90% for the SAVEE dataset, 76.60% for IEMOCAP, and 73.50% for RAVDESS. Classifiers are built on only one dataset and then tested on data from the same dataset. In [57], different approaches are presented for classifying emotions in different datasets. For RAVDESS, the reported average accuracy is 71.61%, believed to be the highest achieved for the reference dataset at the time of writing (2020). The same paper reports achieving an accuracy of 86.1% for Emo-DB and 64.30% for IEMOCAP. It is worth mentioning that the studies that tested on multiple datasets did not obtain their best results on each dataset using the same feature selection and classifier building approach. The authors of [58] report a best average accuracy of 72.2% for classifying data from RAVDESS and 97.1% for data from TESS [59] using CNNs, outperforming their own models built using long short-term memory networks (LSTMs) or gated recurrent units (GRUs), both advanced variants of recurrent neural networks, as well as SVMs and RFs.
The work presented in [60] achieves high classification accuracy by using divided patches from images that contain coordinate information concatenation as part of a vision transformer-based classification model.

Table 1 summarizes the results reported in other works on SER algorithms.

Table 1.

Comparative presentation of other works for SER.

The approach selected for the presented emotion recognition algorithm is based on a CNN built using multiple datasets. For this reason, a direct comparison of performances may not be feasible; as seen from the work presented above, the same approaches performed differently on different datasets. Regarding emotion recognition, the interest of this work is to build a speaker-independent algorithm that performs well on new data and that can be integrated into the NeuroPredict platform in an easy-to-use manner for the intended users: medical personnel, formal and informal caregivers, and patients.

3. Methods

This section has two major parts: the description of the NeuroPredict platform and the approach chosen for building the emotion detection algorithm using voice features.

3.1. The NeuroPredict Platform

3.1.1. Overview

The project “Advanced Artificial Intelligence Techniques in Science and Applied Domains” [65] is currently in its second year of development, with an overall schedule of four years. The NeuroPredict platform is one of the project’s most important biomedical applications. The platform is dedicated to developing predictive models that are fundamental for the prevention, early detection, and long-term monitoring of people suffering from neurodegenerative diseases. The NeuroPredict platform draws on advanced AI-based algorithms to enhance healthcare delivery by forecasting disease evolution, optimizing therapeutic procedures, and improving patient outcomes through proactive, personalized care. By combining AI algorithms with comprehensive physiological information, the platform aims to deliver accurate and timely insights that can improve the management and understanding of neurodegenerative diseases. It intends to support customized healthcare delivery by focusing on both the physical and emotional aspects of patient care.

AD poses an array of challenges, mostly associated with cognitive decline and memory loss. Patients frequently report difficulty with everyday tasks, behavioral changes, and, finally, diminished autonomy. The NeuroPredict platform addresses these issues by incorporating cognitive evaluations and digital biomarkers gathered from the platform’s user-friendly interfaces to evaluate cognitive performance over time. The platform, which uses AI algorithms to analyze data on memory capacity, executive functioning, and behavioral patterns, allows for early identification of cognitive decline and prediction of disease development. This preventive approach promotes timely interventions and customized care plans, tailored to individual patient profiles, that aim to preserve cognitive function, improve quality of life, and potentially slow disease progression.

MS, a chronic autoimmune disorder affecting the central nervous system, presents a challenge due to its unpredictable course and wide range of clinical symptoms. MS may appear as recurrent episodes (relapses) alternating with phases of remission, or as steadily increasing impairment. The NeuroPredict platform tackles this unpredictability by using AI-driven analytics to monitor the patient’s activity and the disease’s progression. The platform combines real-time data from wearable devices and patient feedback to develop predictive models that foresee disease evolution. It enables customized management strategies by allowing continuous monitoring of disease activity indicators and treatment responses, aiming to reduce relapses while enhancing all aspects of patient care.

The NeuroPredict platform’s integration of IoMT devices is a key component of its functioning. These devices monitor vital health parameters; combined with ambient sensors that collect environmental data, the platform creates an in-depth understanding of every patient’s overall health status over extended periods. This comprehensive dataset is securely stored within the robust ICIPRO cloud infrastructure. The NeuroPredict platform’s strategy is centered on the integration of these non-intrusive smart monitoring devices with clinical data from patients, which is augmented by specific open data repositories dedicated to particular neurodegenerative disorders. This integrated approach enables the platform to collect a wide range of medical, lifestyle, and behavioral data, which is essential for the establishment of tailored predictive models. These models are constantly updated using advanced AI-driven analytics in the ICIPRO cloud, with the goal of detecting symptoms early, predicting disease progression pathways, and improving long-term care plans.

More importantly, PD, AD, and MS frequently require long-term monitoring and management [66], and this can be challenging for both patients and caretakers. The NeuroPredict platform provides an alternative by enabling remote monitoring and delivering tailored health insights generated from particular disease profiles. IoMT integration improves patient engagement by encouraging active involvement in healthcare. Patients receive immediate feedback on their health parameters, customized health insights, and alarms for abnormal values or possible health risks. This preventive approach promotes a collaborative medical setting in which patients and healthcare providers collaborate closely to achieve the most beneficial health outcomes.

3.1.2. The Architecture of the NeuroPredict Platform

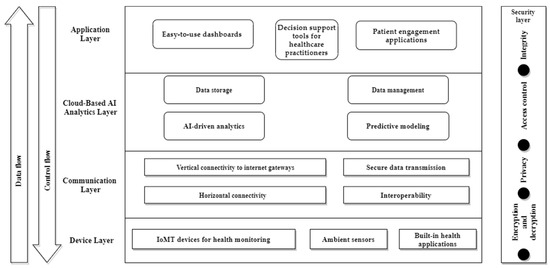

The NeuroPredict platform’s multi-level IoMT and AI-based architecture has been designed to support efficient PD, AD, and MS monitoring, analysis, and management. At its core, the platform comprises an ensemble of hardware and software components that optimize data collection, processing, and integration in clinical and residential environments (Figure 1).

Figure 1.

Architecture of NeuroPredict platform.

The main levels of the NeuroPredict architecture are presented as follows:

- The Device Layer

The NeuroPredict platform comprises a wide range of IoMT devices meant to monitor vital health parameters. These devices consist of the following:

- IoMT devices for health monitoring regularly track health parameters including heart rate, blood pressure, ECG data, and sleep patterns. They provide accurate information on aspects of health status, such as cardiovascular health and neurological markers, which are critical for the early identification and continuous management of neurodegenerative diseases;

- Ambient sensors monitor factors related to living conditions, such as temperature, humidity, air quality, and light levels. This information about the environment contributes to an improved awareness of how living conditions at home affect health outcomes, hence promoting proactive health management;

- Built-in health applications improve patient engagement by allowing self-reported input of information, medication adherence tracking, symptom reporting, and contact with healthcare providers. These applications enable increased involvement from patients and help to build an extensive set of data for AI-driven analytics.

IoMT devices in the NeuroPredict smart environment have complex connectivity features that allow for the real-time transfer of gathered data to the ICIPRO cloud infrastructure. This continuous data transmission guarantees that healthcare providers have rapid access to current patient data, allowing for prompt interventions and treatment plan revisions, if necessary, as well as the development of personalized healthcare plans tailored to particular patient requirements. The platform provides comprehensive patient profiles by merging data from many sources, including health records, IoMT device outputs, cognitive evaluations, and open data repositories.

- Communication layer

The NeuroPredict platform provides secure data transfer between IoMT devices and the tightly controlled ICIPRO cloud. Key features include the following:

- Secure data transmission uses strong encryption protocols to transmit data from IoMT devices to the cloud infrastructure. This preserves the confidentiality and integrity of patient data throughout transmission and storage;

- Interoperability follows established interoperability standards, allowing for effortless integration with current healthcare systems and electronic health records (EHRs). This facilitates data interchange across multiple infrastructures, improving coordinated care and consistency.

- Cloud-based AI analytics layer

The NeuroPredict platform’s design is centered on cloud-based infrastructure, notably the ICIPRO cloud, which acts as the repository for all collected information. The ICIPRO cloud is used for storing, processing, and analyzing data generated from IoMT devices and other sources in the NeuroPredict environment.

- Data storage and management manages various datasets, such as medical records, IoMT device outputs, cognitive assessments, and open databases. This centralized process facilitates longitudinal data analysis while ensuring a comprehensive patient profile;

- AI-driven analytics uses advanced algorithms to generate meaningful insights from multidimensional datasets. ML models analyze previous information to identify patterns, forecast disease progression, and enhance customized treatment approaches for neurodegenerative patients. By constantly acquiring knowledge from newly collected inputs, the NeuroPredict platform strengthens its predictive feature and reacts to changing requirements from patients;

- Predictive modeling creates tailored predictive models by combining real-time IoMT data with clinical insights and cognitive assessments. These models allow for early identification of illness worsening, proactive management alternatives, and ongoing monitoring of patient health status.

Cloud technology provides data scalability, security, and accessibility, enabling healthcare providers to make effortless changes and gain real-time insights.

- Application Layer

The NeuroPredict platform includes intuitive applications and interfaces designed for healthcare providers, patients, and caretakers, which boost accessibility and usability.

- Easy-to-use dashboards and decision-support tools for healthcare practitioners: these tools turn intricate data into meaningful recommendations, enabling more informed clinical decisions and tailored patient care.

- Patient engagement applications support patients in taking an active role in the healthcare process by providing customized health insights, real-time feedback on health measurements, and educational tools. These applications enhance patient empowerment, compliance with medical care, and lifestyle changes that are necessary for properly managing PD, AD, and MS.

The NeuroPredict platform is designed with scalability as a key feature, which allows easier incorporation of novel technologies and new medical requirements as they become pertinent. This versatility helps the platform stay current with healthcare technology and meet changing clinical requirements and patient care standards.

The NeuroPredict platform presents user interfaces that are intuitive for both healthcare providers and patients. These interfaces make it easier to navigate, visualize data, and interact with predictive models and analytics. Patient-centered design principles ensure that patients can actively engage in their healthcare management by receiving customized insights and alerts generated through the platform.

Considering the sensitivity of medical data, the NeuroPredict platform prioritizes extensive security measures to protect patient information. Encryption protocols, access controls, and compliance with healthcare data regulations ensure confidentiality and integrity for all data processing and storage in the ICIPRO cloud.

3.1.3. NeuroPredict Smart Environment and IoMT Devices

The data integration methodology used by NeuroPredict starts with the aggregation of heterogeneous data streams. Data gathering inside the NeuroPredict platform is made possible by the implementation of the NeuroPredict smart environment based on IoMT devices. These devices are carefully chosen to accurately measure numerous physiological parameters essential to assessing health problems as well as environmental factors. These real-time physiological and environmental data are regularly gathered and sent to the ICIPRO cloud infrastructure for storage and processing.

Data for each monitored patient are either manually input through a form, using the web interface, or acquired by an IoMT device that is either already available on the market or developed in-house.

- Commercially available devices

The commercially available devices integrated into the NeuroPredict platform are presented in the following list:

- The Withings Blood Pressure Monitor Core [67]

It measures blood pressure and heart rate, performs ECGs, and can be used as a digital stethoscope for detecting valvular heart diseases. It provides Bluetooth and Wi-Fi connectivity.

- The Withings Move ECG [68] smartwatch

It performs ECGs, providing access to the recorded signal and the user’s heart rate, and it detects arrhythmia events such as atrial fibrillation. It analyzes sleep, identifying sleep stages and assessing sleep quality. It keeps track of steps taken, the number of floors climbed, workout activities, walked distances, and burned calories. It provides Bluetooth and Wi-Fi connectivity.

- Withings ScanWatch [69]

Withings ScanWatch performs the same measurements as the Withings Move ECG. The pulse oximetry measurements, ECG measurements, and atrial fibrillation detection algorithm were validated in clinical studies [69].

- The Withings Body+ [70]

The Withings Body+ is a smart body scale that computes the following parameters: body weight, body fat percentage, muscle mass, bone mass, water percentage, and body mass index. It offers Bluetooth and Wi-Fi connectivity.

- The Withings Body Scan [71]

The Withings Body Scan is a smart body scale that measures body weight, body fat percentage, muscle mass, bone mass, and water percentage; computes body mass index; and analyzes detailed segmental body composition (arms, legs, and torso). It also provides a special handle that allows users to perform cardiovascular measurements, including a 6-lead ECG; assesses nerve activity, detecting signs of neuropathy; and analyzes electrodermal activity. It provides Bluetooth and Wi-Fi connectivity.

- The Withings Sleep Analyzer [72]

This is a special kind of device, similar to a blanket, that is positioned under the mattress. It monitors respiratory rate, heart rate, apnea episodes, respiratory events, and it analyzes sleep to identify sleep stages and quality, wake up moments, getting out of bed events, and other sleep-related data; it has a total of 36 parameters available through the provided SDK. It is clinically validated for the detection of moderate–severe sleep apnea syndrome [73]. The device offers Bluetooth and Wi-Fi connectivity.

- The Withings Thermo [74]

This is a smart contactless body thermometer that uses 16 infrared sensors for high accuracy. The device offers Bluetooth and Wi-Fi connectivity.

- Fitbit Charge 5 [75]

Fitbit Charge 5 is a wearable watch with capabilities very similar to those of the Withings Move ECG watch, with the key advantage of continuous heart rate and oxygen saturation monitoring, not just while performing an ECG. It provides heart rate, blood oxygen saturation, and activity-related data (number of steps and floors climbed, workout activities), and it assesses walked distances and burned calories. It analyzes sleep, identifying sleep stages and assessing sleep quality correlated with heart and breathing rate data. It also detects atrial fibrillation.

- Oura Smart Ring [76]

The Oura smart ring is a wearable device designed to be worn continuously on the finger. It integrates a temperature sensor and a photoplethysmography module and uses complex algorithms to provide heart rate information, analyze sleep quality, and assess stress states based on heart rate, HRV, and body temperature data. It provides access to the collected data through a dedicated API. The API allows access to heart rate data, body temperature (deviations from a user’s baseline body temperature, measured during sleep), activity data (number of steps, calories burned, distances, intensity of performed activities, activity sessions: workouts, walks, and other specific activities), and other information.

- EEG Muse Headband [77]

The EEG Muse Headband is a wearable device designed to be worn for several minutes a day or during sleep as a headband that acquires the EEG signal, heart rate, breathing rate, and body temperature. It estimates movements and body posture and assesses sleep quality based on brain activity during sleep (sleep stages, duration). It provides Bluetooth connectivity. Developers are given access through an SDK to the raw EEG signal, heart rate, and accelerometer data and to preprocessed data like alpha, beta, delta, gamma, and theta wave bands.

All the devices presented above use a dedicated mobile app made available by the device’s manufacturer to sync the acquired and locally processed data with a cloud service provided by the same manufacturer. An SDK/API is provided for interested third parties to retrieve data from the cloud, not directly from the device or from the mobile app. To access data, the third party must register an app with the device manufacturer, usually through a web form, and use OAuth 2.0 (Open Authorization) to gain access to the user’s data without requiring the user’s credentials.

Sensor data are sent via Bluetooth or Wi-Fi to a smartphone/tablet, forwarded to the manufacturer’s servers, automatically retrieved by our data gathering module, and pushed into our local database.

Other devices readily available on the market may be integrated into the platform, provided that the manufacturer of the device offers an SDK/API for data access.
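The first step of the OAuth 2.0 authorization code flow described above can be sketched as follows. This is a minimal illustration only: the endpoint URL, client identifier, redirect URI, and scope name are hypothetical placeholders, and each manufacturer documents its own endpoints and scopes. Only the query parameter names (`response_type`, `client_id`, `redirect_uri`, `scope`, `state`) and the bearer token header come from the OAuth 2.0 specifications.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical endpoint; a real integration would use the URL published
# in the manufacturer's developer documentation.
AUTHORIZE_URL = "https://vendor.example/oauth2/authorize"


def build_authorization_url(client_id, redirect_uri, scopes, state=None):
    """Build the URL the user visits to grant access to their data.

    Returns the URL and the `state` value, which must be checked again
    when the manufacturer redirects back with an authorization code.
    """
    state = state or secrets.token_urlsafe(16)
    query = urlencode({
        "response_type": "code",      # authorization code grant
        "client_id": client_id,       # issued when the app is registered
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),
        "state": state,               # protects against cross-site request forgery
    })
    return f"{AUTHORIZE_URL}?{query}", state


def bearer_headers(access_token):
    """HTTP headers for later API calls, once a token has been obtained."""
    return {"Authorization": f"Bearer {access_token}"}


url, state = build_authorization_url(
    client_id="neuropredict-demo",                        # hypothetical
    redirect_uri="https://neuropredict.example/callback",  # hypothetical
    scopes=["user.metrics"],                               # hypothetical
)
```

The user’s password never reaches the third-party application: the user authenticates with the manufacturer, and the application only receives a code that it exchanges for an access token.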

- In-house-built devices

The devices for remote monitoring built by our team are described in the following paragraphs.

- The Medical BlackBox:

The Medical BlackBox is a device developed by our team that integrates multiple sensors for physiological parameters, among them: (a) a body temperature sensor; (b) a photoplethysmography module for heart rate and blood oxygen saturation; (c) an alcohol sensor designed to detect alcohol vapors from breath; (d) an ECG acquisition module; (e) a urine test module based on color detection of dedicated testing strips; (f) a galvanic skin response module that estimates the electrodermal activity of the subject.

- The Ambiental BlackBox

The Ambiental BlackBox is a device developed by our team that acquires ambient data, such as temperature, humidity level, and luminosity; assesses air quality; detects gas leaks; and analyzes movements based on data from a PIR sensor.

- The GaitBand

The GaitBand is a specially designed belt that integrates an accelerometer, a GPS, and other electronic devices needed to process and transmit the data for further analysis. It is designed to be worn as a belt and it has multiple purposes, among them, fall detection and subject localization.

- Manually input health data forms

The platform also allows all types of users (medical personnel, formal and informal caretakers, and monitored subjects) to input data manually using the provided forms. The form contains fields for the input value, parameter type, measuring unit, and device used.
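A record produced by such a form could be represented as in the sketch below. The four core fields mirror the form fields listed above; the class name, the `entered_by_role` and `recorded_at` fields, and the validation rules are assumptions added for illustration and do not describe the platform’s actual data model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ManualMeasurement:
    """One manually entered health measurement (illustrative sketch)."""

    value: float           # input value
    parameter_type: str    # e.g. "body_temperature"
    unit: str              # measuring unit, e.g. "°C"
    device_used: str       # device the value was read from
    entered_by_role: str = "patient"  # assumption: not a documented form field
    recorded_at: datetime = field(    # assumption: not a documented form field
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def __post_init__(self):
        # Reject records that could not be interpreted later.
        if not self.parameter_type.strip():
            raise ValueError("parameter_type must not be empty")
        if not self.unit.strip():
            raise ValueError("unit must not be empty")


entry = ManualMeasurement(
    value=36.8,
    parameter_type="body_temperature",
    unit="°C",
    device_used="Withings Thermo",
)
```

Storing the unit and device next to each value keeps manually entered data comparable with the values acquired automatically from the IoMT devices.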

3.1.4. Medical Monitoring and Evaluation Functionalities in the NeuroPredict Platform

The NeuroPredict platform aims to monitor and manage patients suffering from one of the following three neurodegenerative conditions: AD, PD, and MS. Each of the 3 targeted conditions has its own specific symptomatology, necessitating customized monitoring for effective management of the disease, including symptoms tracking, evaluation of disease progression or effectiveness of treatment, and evaluation of general health status in order to provide the best treatment plan for the monitored subject. The monitoring and evaluation functionalities described in Table 2 have been identified as necessary and useful for each of the targeted conditions.

Table 2.

Medical monitoring and evaluation functionalities in the NeuroPredict platform for each of the three monitored diseases: AD, PD, and MS.

These have been further analyzed and grouped into categories depending on the targeted monitored ability or parameter. The obtained groups are presented in Table 3.

Table 3.

Medical monitoring and evaluation functionalities of the NeuroPredict platform.

The identified monitoring and evaluation functionalities are described in the following paragraphs.

- Cognitive function assessment

The platform integrates cognitive function evaluation through the following: (a) standardized tests for cognitive impairments; (b) reaction speed assessment tests; and (c) electrical brain activity monitoring. The standardized tests in the platform are (1) the Mini-Mental State Examination (MMSE) and (2) the Montreal Cognitive Assessment (MoCA). The platform also allows the creation of different tests, using forms for inputting new questions and their scoring. Tests may be given to patients and filled out by them directly through the platform, or forms may be downloaded as PDFs and filled out on paper by the subjects. Electrical brain activity is monitored using the Muse EEG Headband, integrated into the platform through the data acquisition module.

- Motor symptoms monitoring

The motor symptoms designed to be monitored in the NeuroPredict platform include the following: (a) abnormal movement detection; (b) posture analysis; (c) gait analysis; (d) fall detection; and (e) abnormal movement during sleep (integrated into the polysomnography module).

These motor symptoms may be tracked in a monitored environment using IoMT devices. Low-amplitude abnormal body movements like tremor and different types of small movement impairments may be detected and tracked using wearable devices that include accelerometers and gyroscopes, together with algorithms fine-tuned for detecting the specific movements. Fall detection is also needed when monitoring patients, considering that most PD and AD patients are elderly and that PD is closely related to motor function impairments. The in-house-developed GaitBand wearable device continuously records accelerometer and gyroscope data and sends them to a local processing node for further processing. This is where the AI-based algorithms for fall detection, abnormal movements, and gait and posture analysis are to be implemented and/or further improved.
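As a point of reference for the kind of processing performed on the accelerometer stream, the sketch below implements a simple threshold heuristic for fall candidates: a short free-fall phase (acceleration magnitude well below 1 g) followed shortly by an impact peak. This is a commonly used baseline and not the AI-based algorithm planned for the platform; the function names, the thresholds, and the window length are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (x, y, z) acceleration in units of g


def acceleration_magnitudes(samples: Sequence[Sample]) -> List[float]:
    """Euclidean norm of each 3-axis accelerometer sample."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]


def detect_fall_candidates(
    samples: Sequence[Sample],
    free_fall_g: float = 0.5,  # assumed threshold for the free-fall phase
    impact_g: float = 2.5,     # assumed threshold for the impact peak
    max_gap: int = 25,         # assumed max samples between the two phases
) -> List[int]:
    """Return sample indices where a free-fall phase is followed by an impact."""
    mags = acceleration_magnitudes(samples)
    candidates = []
    i = 0
    while i < len(mags):
        if mags[i] < free_fall_g:
            window_end = min(len(mags), i + 1 + max_gap)
            for j in range(i + 1, window_end):
                if mags[j] > impact_g:
                    candidates.append(j)
                    i = j  # resume the scan after the impact
                    break
        i += 1
    return candidates


# Synthetic trace: standing still, brief free fall, impact, lying still.
trace = [(0.0, 0.0, 1.0)] * 10 + [(0.0, 0.0, 0.2)] * 3 \
    + [(0.0, 0.0, 3.0)] + [(0.0, 0.0, 1.0)] * 10
fall_indices = detect_fall_candidates(trace)
```

A heuristic of this kind produces false alarms for activities such as sitting down abruptly, which is one reason learned models over accelerometer and gyroscope features are of interest.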

The platform is able to record and identify different movements while the patient is lying in bed by integrating data from the Withings Sleep Analyzer and correlating it with data from other wearable devices that also assess sleep quality. The polysomnography function included in the platform is presented in the following paragraph:

- Sleep monitoring

Getting enough quality sleep is essential for both physical and mental health, and poor sleep is linked to depression, anxiety, and rumination [13,78,79]. When SD is detected and assessed, customized intervention strategies may be defined, including meditation and relaxation techniques and possibly medication for improved sleep. Improving sleep quality positively impacts mental and physical health [79]. SD identification may also aid in tracking disease progression.

The NeuroPredict platform assesses sleep quality in two ways: (a) through polysomnography and (b) using standardized forms for sleep disorders.

NeuroPredict integrates data from the Withings Sleep Analyzer for obtaining polysomnography data. Polysomnography is used to monitor multiple parameters related to sleep. It used to be performed only in clinical or laboratory conditions, but thanks to the advances in IoMT and wearables, necessary devices are widely available at affordable prices. The Withings Sleep Analyzer has been tested against data obtained by polysomnography analysis, the gold standard for sleep diagnostics [72]. It provides the following relevant sleep parameters: time-related parameters (time to fall asleep, time spent in each phase (light/deep/REM), total time awake, total time slept, and total time spent in bed), snoring-related parameters (total snoring time, snoring episodes count), apnea and breathing disturbances (apnea hypopnea index), number of wake-ups, number of times of getting out of bed, heart rate (minimum, average, and maximum value), respiratory rate (minimum, average, and maximum value).

The platform is also designed to allow patients to report their own perceived quality of sleep through standardized tests. There are multiple forms for self-reporting SD severity; among them, the Pittsburgh Sleep Quality Index (PSQI) is widely used [80]. There are also forms customized for PD: the PD Sleep Scale (PDSS and PDSS2) and the Scales for Outcomes in PD Sleep (SCOPA-S) [7], which mainly complement the general sleep form questions with questions related to symptoms specific to PD. PSQI and PDSS2 were chosen to be integrated into the NeuroPredict platform. As in the case of cognitive function testing, new forms may be defined by users with the necessary roles and permissions. Tests may be filled out by patients directly on the platform or may be downloaded as PDFs and scored manually.
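As an illustration of how a standardized form can be scored automatically, the sketch below computes the PSQI global score from its seven component scores. In the published instrument, each component is scored from 0 to 3, the global score is their sum (0 to 21), and a global score above 5 is commonly taken to indicate poor sleep quality. The function and key names are illustrative assumptions, and deriving the component scores from the individual questionnaire items is not shown.

```python
# The seven PSQI components; the key names are illustrative.
PSQI_COMPONENTS = (
    "subjective_sleep_quality",
    "sleep_latency",
    "sleep_duration",
    "habitual_sleep_efficiency",
    "sleep_disturbances",
    "use_of_sleep_medication",
    "daytime_dysfunction",
)


def psqi_global_score(component_scores: dict) -> int:
    """Sum the seven component scores (each 0..3) into the global score (0..21)."""
    missing = [name for name in PSQI_COMPONENTS if name not in component_scores]
    if missing:
        raise ValueError(f"missing components: {missing}")
    for name in PSQI_COMPONENTS:
        if component_scores[name] not in (0, 1, 2, 3):
            raise ValueError(f"{name} must be an integer from 0 to 3")
    return sum(component_scores[name] for name in PSQI_COMPONENTS)


def indicates_poor_sleep(global_score: int, cutoff: int = 5) -> bool:
    """Apply the commonly used cutoff: scores above 5 suggest poor sleep quality."""
    return global_score > cutoff


example_scores = {name: 1 for name in PSQI_COMPONENTS}
example_total = psqi_global_score(example_scores)
```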

- Daily activities monitoring

Daily activities monitoring includes (a) physical activities monitoring and (b) location monitoring.

Daily activity monitoring is performed by integrating data from multiple sensors. Wearable devices with accelerometers provide the level of activity, including the detection of physical activities such as sports, while GPS data provide location monitoring, which is especially important for patients suffering from memory loss, for whom getting lost outside their homes is a major risk.
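Location monitoring for patients at risk of getting lost can be illustrated with a simple geofence check: the great-circle distance between the latest GPS fix and the home location is compared with a safe radius. This is only a sketch under stated assumptions; the function names, the coordinates, and the radius are hypothetical and do not describe the platform’s implementation.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (latitude, longitude) points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def is_outside_safe_zone(home, position, radius_m):
    """True when `position` is farther than `radius_m` from `home`."""
    return haversine_distance_m(*home, *position) > radius_m


# Hypothetical coordinates: a GPS fix about 1 km north of the home location.
home = (44.4268, 26.1025)
fix = (44.4358, 26.1025)
alert = is_outside_safe_zone(home, fix, radius_m=500)
```

A practical deployment would also need to handle GPS noise, for example by requiring several consecutive fixes outside the zone before raising an alert.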

- Emotional state monitoring

Emotional state monitoring is included in the platform as there is a known link between physical and mental health. Constant negative mental states may lead to mental health problems like anxiety and depression. Knowing about recurrent negative mental states may allow intervention and prevention of possible negative health effects. Predictive modeling based on emotional states can help forecast mental health problems and facilitate early intervention. Emotional state monitoring is performed in multiple ways: (a) based on the emotional state provided by the IoMT devices integrated into the platform (Oura Smart Ring, Muse EEG Headband), and (b) through our own algorithm based on voice processing. In the Results section, the current work presents an algorithm for emotion detection based on voice processing. Patients can also log their perceived emotional states in an emotional state log. These entries can be kept private or shared with authorized users who have access to the patient’s medical file. Currently, the self-reported emotional states are neither used nor correlated with the states detected by the emotion detection algorithm or those assessed by the IoMT devices.

- Speech and language abilities monitoring

Monitoring speech and language abilities can be valuable for detecting and tracking the progression of both motor and cognitive issues. Certain changes in voice may be specific to motor control problems of the vocal tract, and certain speech problems may reflect language processing difficulties due to cognitive decline. Speech and language ability monitoring includes monitoring the following: (a) changes in certain acoustic features: pitch variation, speech rate, and word articulation; (b) language processing: evaluation of vocabulary usage, sentence structure, and grammatical accuracy; evaluation of word-finding difficulty; comprehension testing; and narrative skills. Speech and language ability monitoring is a planned feature that will be implemented on top of the speech processing for emotional state monitoring, which already handles voice acquisition, preprocessing, and feature extraction. Different models for language processing will be created based on new features that may be extracted and that are relevant to the required task.

- General health status assessment

A monitoring functionality that is beneficial for any kind of monitored subject is general health status monitoring. Heart rate parameters, breathing rate, and body temperature are continuously/periodically acquired using the devices integrated into the platform. By default, the general health assessment module offers the following functionalities: (a) respiratory function evaluation; (b) muscle function evaluation; (c) bladder function evaluation. These are based on the health parameters offered by the IoMT devices described in the above sections. Additional devices that provide various health parameters can be integrated into the system as needed for the monitored subject. For instance, testing strips for different urine parameters may be added, allowing new measurements to be correlated with other symptoms and health conditions when necessary.

A symptoms log is implemented to track perceived symptoms, aiding patients in maintaining a journal of symptoms in an organized manner and sharing it with medical personnel and caregivers. The symptom log is designed to comprise the known symptoms associated with the 3 monitored diseases and to also allow manual input of custom-perceived symptoms. This is extremely useful for both memory-impaired individuals and subjects with no cognitive impairments, as accurately recalling symptoms, intensity levels, and timing is challenging. In the case of MS subjects, the log helps track a variety of symptoms that may indicate an exacerbation of the disease or a relapse and indicate the need for medical treatment.

- Environmental monitoring

In addition to the health monitoring and evaluation functionalities, the NeuroPredict platform integrates environmental monitoring using the in-house-developed Ambiental BlackBox. By integrating sensors to monitor environmental factors like temperature, humidity, and air quality, which may impact patients’ comfort and well-being, uncomfortable conditions or dangerous situations may be signaled so that the monitored subject or a caregiver may adjust the ambient parameters or mitigate associated risks. Third party smart home devices may also be integrated into the platform using the data acquisition module, provided that the manufacturer of the device allows data access through an SDK or API.

3.2. Emotion Detection Based on Voice Features

Speech emotion recognition (SER) is a research field that aims to identify the emotion of the speaker based on speech processing. SER can be either (a) text-dependent, where emotions are interpreted based on the content of the speech, or (b) text-independent, where the algorithm detects emotions solely based on the tone and manner of speech, disregarding the meaning of the actual words spoken. In the case of (a), some applications use the voice signal only to obtain a textual representation of it and completely disregard voice features. The current work implements a text-independent SER algorithm that aims to identify three categories of emotions: positive, neutral, and negative.
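Reducing dataset-specific emotion labels to the three target categories can be sketched as a simple lookup. The assignment below is a hypothetical example and not the mapping used for the NeuroPredict model; in particular, how labels with ambiguous valence, such as surprise, should be handled is a design decision that this sketch leaves open by rejecting unmapped labels.

```python
# Hypothetical mapping from fine-grained emotion labels to three categories.
# The actual grouping used for the NeuroPredict model may differ.
VALENCE_BY_EMOTION = {
    "happy": "positive",
    "neutral": "neutral",
    "calm": "neutral",
    "sad": "negative",
    "angry": "negative",
    "fearful": "negative",
    "disgusted": "negative",
}


def to_valence(emotion_label: str) -> str:
    """Map a dataset emotion label to 'positive', 'neutral', or 'negative'."""
    key = emotion_label.strip().lower()
    if key not in VALENCE_BY_EMOTION:
        # Ambiguous or unknown labels are rejected rather than guessed.
        raise KeyError(f"no valence category defined for {emotion_label!r}")
    return VALENCE_BY_EMOTION[key]


categories = [to_valence(label) for label in ("happy", "calm", "Angry")]
```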

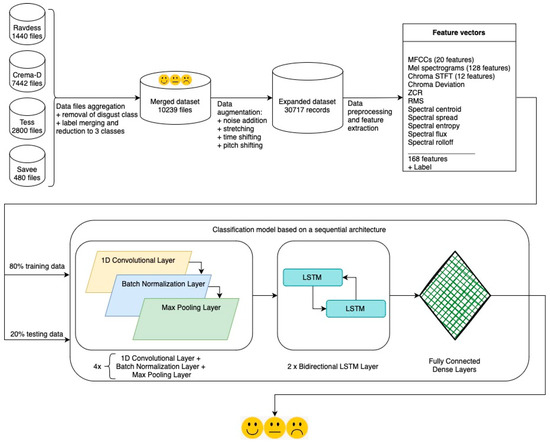

The flowchart for building the emotion recognition model integrated into the NeuroPredict platform is presented in Figure 2. The steps depicted in the figure will be detailed in the following sections.

Figure 2.

Flowchart for building the emotion classification model. The base for the architecture that led to the best results for the algorithm used in the NeuroPredict platform is presented.

3.2.1. Descriptions of Datasets

The SER classification model integrated into the NeuroPredict platform is constructed using speech data from 4 different speech emotion datasets: Ryerson Audio-Visual Database of Emotional Speech and Song (Ravdess) [55], Toronto emotional speech set (Tess) [59], Crowd-Sourced Emotional Multimodal Actors Dataset (Crema-D) [81], and Surrey Audio-Visual Expressed Emotion (Savee) [54]. The recordings from these datasets are aggregated into a single dataset with more diverse speakers and recording environments, so that the model is trained on more varied data, generalizes better, and performs better on new, unseen data recorded in different conditions. The initial and newly created datasets are presented in the following paragraphs.

Ravdess [55] is a multimodal dataset with voice and speech data for emotion processing. It contains recordings from 24 professional actors, who were asked to act out a set of predefined phrases mimicking 7 emotions: calm, happy, sad, angry, fearful, surprised, and disgusted, with two intensities. The database also contains songs sung with mimicked emotions, which were not used for the current work. The language of the recordings is English (North American).

Toronto emotional speech set (TESS) [59] is a database published in 2020 that contains a set of 200 target words spoken by two actresses portraying each of the seven emotions: anger, disgust, fear, happiness, pleasant surprise, sadness, and neutral. The language of the recordings is English (North American).

Crema-D [81] is another dataset containing recordings from 91 actors (48 male and 43 female actors, aged between 20 and 74) saying the same phrase while mimicking one of 6 emotions: anger, disgust, fear, happy, neutral, and sad, with four different intensities: low, medium, high, and unspecified. It contains a total of 7442 recordings. The language of the recordings is English (North American), although the speakers come from various ethnic backgrounds, including Asian, European, and Hispanic.

Surrey Audio–Visual Expressed Emotion (Savee) [54] is another dataset for emotion processing containing recordings of 4 male speakers (not professional actors) mimicking 7 emotions: anger, disgust, fear, happiness, sadness, surprise, and neutral. They recorded themselves saying the same phrases, 15 TIMIT sentences per emotion. It contains 480 recordings. Video recordings of the speakers’ faces are also available in the dataset but are not used in the current work. The language of the recordings is English (British).

The 4 original datasets include the following emotions: happy, surprise, disgust, calm, angry, fear, sad, and neutral. The datasets were merged in order to create a more comprehensive dataset to be used for creating a model for identifying the targeted emotions. Disgust was excluded because it is not relevant to this specific study. The original datasets and the merged dataset are presented in Table 4. In order to recognize the three main classes of emotion (positive, negative, and neutral) the emotions are distributed as shown in Table 5.
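The consolidation into three classes can be sketched as a simple lookup: calm and neutral map to neutral; angry, fear, and sad map to negative; happy and surprise map to positive; disgust is dropped. This is a minimal illustration, not the authors' code. The label spellings, the `LABEL_MAP` and `EXCLUDED` names, and the `(path, emotion)` record layout are assumptions, since each source dataset encodes emotions in its own way.

```python
# Mapping from original emotion labels to the three target classes.
# Label spellings are assumptions; each source dataset encodes emotions
# differently (file names, folder names, numeric codes).
LABEL_MAP = {
    "calm": "neutral",
    "neutral": "neutral",
    "angry": "negative",
    "fear": "negative",
    "sad": "negative",
    "happy": "positive",
    "surprise": "positive",
}
EXCLUDED = {"disgust"}


def relabel(records):
    """Drop excluded emotions and map the rest to positive/neutral/negative.

    `records` is an iterable of (path, emotion) pairs (hypothetical layout).
    """
    relabeled = []
    for path, emotion in records:
        key = emotion.strip().lower()
        if key in EXCLUDED:
            continue  # "disgust" recordings are removed from the merged set
        relabeled.append((path, LABEL_MAP[key]))
    return relabeled


samples = [
    ("a.wav", "Calm"),
    ("b.wav", "Disgust"),
    ("c.wav", "Fear"),
    ("d.wav", "Surprise"),
]
print(relabel(samples))
```

An unknown label raises a `KeyError` here, which is a deliberate choice in this sketch: silently dropping unmapped labels would hide inconsistencies between the four datasets.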

Table 4.

Main features of each integrated SER dataset and of the combined dataset that contains the recordings from each of the 4 initial datasets, aggregated in order to create a more comprehensive dataset.

Table 5.

Redistribution of emotion recordings in the three classes of interest for the study.

The process for creating the aggregated dataset involves the following steps:

- Data upload: All data from the different datasets is stored in a data frame with its specific label. A key differentiator from other work is that our algorithm utilizes data from four different emotion datasets to develop a model that generalizes well to new data.

- Data relabeling: The dataset is refined by removing entries labeled with the “Disgust” emotion. The remaining labels are then consolidated: “Calm”- and “Neutral”-labeled data are relabeled as “Neutral”; “Angry”, “Fear”, and “Sad” are relabeled as “Negative”; and “Happy” and “Surprise/Positive Surprise” are relabeled as “Positive”. The relabeling process is presented in Table 5.

- Data augmentation: To improve the diversity of the data the following augmentation techniques are used:

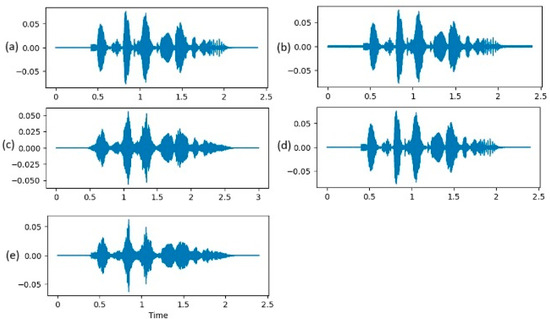

- Noise addition: A random noise is generated and added to the audio signal. This aids in making the model robust to background noises and other distortions (Figure 3b).

Figure 3. Representation of augmentation techniques. (a) original signal; (b) signal with random noise added; (c) stretched signal: duration changed and pitch kept the same; (d) signal shifted in time with a random number of samples; (e) signal with a shift in pitch of 0.7 musical steps.

- Stretching: The audio signal is stretched at a rate of 0.8 (Figure 3c). This changes the duration of the signal without altering features such as pitch. In librosa's convention, a rate below 1 slows the signal down, so at a rate of 0.8 the duration increases by 25%. This way, the model learns to handle signals with different speaking speeds.

- Shifting: The audio signal is randomly shifted in time with a shift range randomly calculated between -5000 and 5000 samples. The purpose of this step is to make the model robust to time-based distortions (Figure 3d).

- Pitch shifting: A pitch shift of 0.7 musical steps is applied on the initial signal. This alters the frequency content but preserves the overall structure of the signal and helps the model to generalize better to pitch variations (Figure 3e).
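The four augmentation techniques above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the noise scaling (`noise_factor`), the use of a circular shift via `np.roll`, and the reading of "0.7 musical steps" as 0.7 semitones are all assumptions. Stretching and pitch shifting rely on `librosa.effects.time_stretch` and `librosa.effects.pitch_shift`, which are imported lazily so that the NumPy-only helpers run without librosa installed.

```python
import numpy as np


def add_noise(signal, rng, noise_factor=0.005):
    """Add Gaussian noise scaled relative to the signal's peak amplitude."""
    noise = rng.normal(size=signal.shape)
    return signal + noise_factor * np.max(np.abs(signal)) * noise


def shift(signal, rng, max_shift=5000):
    """Circularly shift by a random offset in [-max_shift, max_shift] samples."""
    offset = int(rng.integers(-max_shift, max_shift + 1))
    return np.roll(signal, offset)


def stretch(signal, rate=0.8):
    """Time-stretch without changing pitch; rate < 1 lengthens the signal."""
    import librosa  # lazy import: only needed for this helper
    return librosa.effects.time_stretch(signal, rate=rate)


def pitch_shift(signal, sr, n_steps=0.7):
    """Shift pitch by n_steps semitones while keeping the duration."""
    import librosa  # lazy import: only needed for this helper
    return librosa.effects.pitch_shift(signal, sr=sr, n_steps=n_steps)


rng = np.random.default_rng(0)
sr = 44100
t = np.arange(sr) / sr
tone = 0.5 * np.sin(2 * np.pi * 220.0 * t)  # 1-second synthetic test tone

noisy = add_noise(tone, rng)
shifted = shift(tone, rng)
print(noisy.shape, shifted.shape)
```

Each helper returns a new array, so every original recording can yield several augmented copies that keep the original label.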

3.2.2. Data Processing and Feature Extraction

All speech data manipulation is performed using the librosa library (v0.10) for Python 3 [82]. All recordings are resampled to the same sampling frequency of 44.1 kHz before feature extraction. A window of approximately 25 milliseconds (around 1102 samples at the chosen frequency) is used. This is rounded to the nearest power of 2 for computational efficiency, resulting in 1024 samples per window. The hop size is set to half the window size, that is, 512 samples.
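The window and hop arithmetic can be checked with a few lines of Python. The helper name `nearest_power_of_two` and the constant names are illustrative only; the values come from the text.

```python
import math

SAMPLE_RATE = 44_100      # Hz, as stated in the text
WINDOW_SECONDS = 0.025    # approximately 25 ms


def nearest_power_of_two(n):
    """Return the power of two closest to n (ties go to the larger one)."""
    lower = 2 ** math.floor(math.log2(n))
    upper = lower * 2
    return lower if (n - lower) < (upper - n) else upper


raw_window = SAMPLE_RATE * WINDOW_SECONDS   # about 1102.5 samples
n_fft = nearest_power_of_two(raw_window)    # window length in samples
hop_length = n_fft // 2                     # 50% overlap between windows

print(n_fft, hop_length)
print(f"effective window: {1000 * n_fft / SAMPLE_RATE:.1f} ms")
```

After rounding, the effective window is slightly shorter than the nominal 25 ms (about 23.2 ms at 44.1 kHz).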

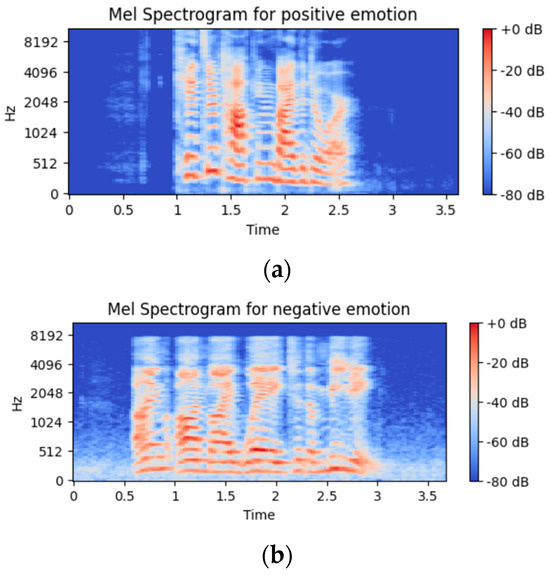

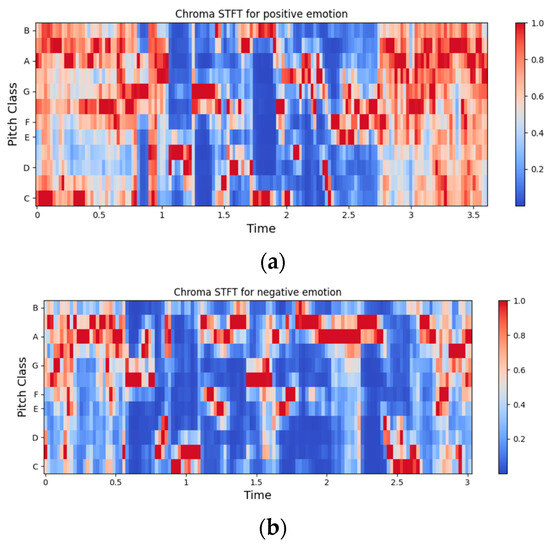

The following features are extracted and used for the presented algorithm: 20 MFCC coefficients, 128 values from Mel spectrograms (Figure 4), 12 values for chroma STFT (Figure 5), chroma deviation, zero crossing rate, root mean square, spectral centroid, spectral spread, spectral entropy, spectral flux, and spectral rolloff, for a total of 168 features that are combined to form each feature vector. The extracted features are detailed in the following paragraphs:

Figure 4.

Representation of Mel spectrogram for an audio signal of (a) positive class and (b) negative class.

Figure 5.

Representation of chroma STFT for an audio signal of (a) positive class and (b) negative class.

- Mel frequency cepstral coefficients (MFCCs): MFCCs are a set of coefficients that describe the shape of the power spectrum of a sound signal. The raw audio signal is transformed into the frequency domain using a discrete Fourier transform (DFT) and then the Mel scale is applied to approximate the human auditory perception of sound frequency. Cepstral coefficients are computed from the Mel-scaled spectrum using a discrete cosine transform. The first 20 MFCCs are used in the current work and included in the feature vector.

- Mel spectrograms extracted features: A Mel spectrogram represents the short-term power spectrum of a sound, with the frequencies converted to the Mel scale. The intensity of various frequency components in the audio signal can be extracted from this spectrogram. 128 features are extracted from each computed Mel spectrogram and included in the feature vector.

- Chroma-STFT (short-time Fourier transform): There are 12 Chroma-STFT features, representing the 12 different musical pitch classes of the signal. They represent spectral energy and are useful in capturing harmonic and melodic characteristics of the signal. They can also be employed in differentiating the pitch class profiles between audio signals [83].

- Chroma deviation: Chroma deviation measures the standard deviation of the Chroma features over time, indicating the variability of the pitch.

- Zero crossing rate (ZCR): ZCR is defined as the rate at which a signal changes from positive to zero to negative, or vice versa. It serves as a crucial feature in identifying short and sharp sounds, effectively pinpointing minor fluctuations in signal amplitude. The count of zero crossings serves as an indicator of the frequency at which energy is concentrated within the signal spectrum [84].

- Root mean square (RMS): RMS is a measure of the average loudness of the signal that takes into account the energy of the wave. To compute the RMS of an audio frame, each sample of the signal is squared, the mean of the squared values is calculated, and finally the square root of that mean is taken.

- Spectral centroid: Spectral centroid indicates the “center of mass” of the spectrum and measures where the energy of the spectrum is concentrated. It is used for timbre analysis. It is computed as the weighted mean of the frequencies present in the signal.

- Spectral spread: Spectral spread indicates the dispersion of the spectrum around the spectral centroid, providing information about the bandwidth of the signal.

- Spectral entropy: Spectral entropy quantifies the complexity or randomness of the spectrum.

- Spectral flux: Spectral flux is used as a measurement of the rate of change of the power spectrum, indicating how quickly the spectrum is changing over time.

- Spectral rolloff: Spectral rolloff describes the frequency below which a certain percentage of the total spectral energy is contained. The percentage chosen for computations in current work is 90%.
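To make the scalar descriptors above concrete, the following NumPy sketch computes ZCR, RMS, spectral centroid, spread, entropy, flux, and rolloff for a single frame. It is a sketch under stated assumptions rather than the pipeline used in the paper: the Hann window, the use of the normalised power spectrum as weights, and the function name `frame_descriptors` are choices made here, and exact definitions differ between toolkits (librosa, for instance, weights the centroid by magnitude by default). The last lines only check that the feature counts listed in the text add up to 168.

```python
import numpy as np


def frame_descriptors(frame, prev_mag, sr, rolloff_pct=0.90):
    """Compute scalar descriptors for one audio frame.

    prev_mag is the magnitude spectrum of the previous frame (used for flux).
    Returns (dict of descriptors, magnitude spectrum of this frame).
    """
    eps = 1e-12
    # ZCR: fraction of adjacent sample pairs whose sign differs.
    zcr = float(np.mean(np.signbit(frame[1:]) != np.signbit(frame[:-1])))
    # RMS: square each sample, average, take the square root.
    rms = float(np.sqrt(np.mean(frame ** 2)))

    mag = np.abs(np.fft.rfft(frame * np.hanning(len(frame))))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sr)
    power = mag ** 2
    p = power / (power.sum() + eps)  # spectrum normalised to sum to ~1

    centroid = float(np.sum(freqs * p))  # weighted mean frequency
    spread = float(np.sqrt(np.sum((freqs - centroid) ** 2 * p)))
    entropy = float(-np.sum(p * np.log2(p + eps)))
    flux = float(np.sum((mag - prev_mag) ** 2))
    # Rolloff: lowest frequency below which rolloff_pct of the energy lies.
    idx = min(int(np.searchsorted(np.cumsum(p), rolloff_pct)), len(freqs) - 1)
    rolloff = float(freqs[idx])

    descriptors = {"zcr": zcr, "rms": rms, "centroid": centroid,
                   "spread": spread, "entropy": entropy,
                   "flux": flux, "rolloff": rolloff}
    return descriptors, mag


sr, n_fft = 44100, 1024
t = np.arange(n_fft) / sr
tone = np.sin(2 * np.pi * 1000.0 * t)                  # pure 1 kHz tone
noise = np.random.default_rng(0).normal(size=n_fft)    # white noise

silence = np.zeros(n_fft // 2 + 1)
tone_desc, tone_mag = frame_descriptors(tone, silence, sr)
noise_desc, _ = frame_descriptors(noise, tone_mag, sr)
repeat_desc, _ = frame_descriptors(tone, tone_mag, sr)  # identical frame

# Feature count from the text: MFCC + Mel + chroma + 8 scalar descriptors.
N_FEATURES = 20 + 128 + 12 + 8
print(tone_desc["centroid"], noise_desc["entropy"], N_FEATURES)
```

For the pure tone, the centroid and rolloff fall near 1 kHz and the entropy is low, whereas white noise spreads its energy across the spectrum and yields a much higher entropy. Feeding the same frame twice gives zero flux, since the spectrum has not changed.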

3.2.3. Model Architecture

The emotion identification model uses a sequential architecture, a multilayer neural network structure in which layers of different types are stacked one after another. It is commonly employed in natural language processing (NLP) and speech recognition, and its flexibility makes it suitable for many other scenarios.

The sequential model comprises multiple layers: an input layer, an output layer, and a variable number of hidden layers in between. Each layer has different weights that are learned during the training phase. Data are passed through the layers in sequence; the output of one layer serves as the input for the next layer, hence the name of the model.
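The chaining of layers can be illustrated with a toy NumPy implementation. This sketch only shows how data flow through a sequential stack; it is not the architecture used in the platform. The `Dense` and `Sequential` classes, the hidden size of 32, the `tanh` activation, and the random untrained weights are all hypothetical. Only the input size (168 features) and the output size (3 classes) follow the text.

```python
import numpy as np


class Dense:
    """Fully connected layer with randomly initialised (untrained) weights."""

    def __init__(self, n_in, n_out, rng):
        self.weights = rng.normal(scale=0.1, size=(n_in, n_out))
        self.bias = np.zeros(n_out)

    def __call__(self, x):
        return x @ self.weights + self.bias


class Sequential:
    """Apply layers in order; each output becomes the next layer's input."""

    def __init__(self, layers):
        self.layers = list(layers)

    def __call__(self, x):
        for layer in self.layers:
            x = layer(x)
        return x


def softmax(x):
    """Convert raw scores into probabilities along the last axis."""
    shifted = x - x.max(axis=-1, keepdims=True)  # for numerical stability
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)


rng = np.random.default_rng(0)
model = Sequential([
    Dense(168, 32, rng),   # input: one 168-value feature vector per recording
    np.tanh,               # hypothetical hidden activation
    Dense(32, 3, rng),     # output: scores for positive / neutral / negative
    softmax,
])
probs = model(rng.normal(size=(4, 168)))  # batch of 4 random feature vectors
print(probs.shape)
```

In a deep learning framework, the same idea is expressed by passing a list of layer objects to a sequential container, and the weights are then learned during training instead of being left at their random initial values.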

To determine the best neural network architecture, multiple designs with varying hidden layers and parameters were tested, comprising the following:

- Convolutional layers

Convolutional layers apply 1D filters moving along the time axis of the data. Each convolution layer detects local features from the audio signal.

- Long short-term memory (LSTM) layers/bidirectional LSTM layers

LSTM layers are a type of recurrent neural network (RNN) architecture designed to handle the vanishing gradient problem, common in traditional RNNs. LSTMs are considered effective for learning long-term dependencies in sequential data. Bidirectional LSTMs consist of two LSTM layers: one processes the input sequence from start to finish (forward direction), while the other processes it in reverse (backward direction). This allows the network to capture information from both past and future states simultaneously. Bidirectional LSTMs are used for enhanced context understanding and improved performance in time series forecasting and other sequential data tasks.

- Gated recurrent unit (GRU) layers

GRU layers use two gates to control the flow of information: a reset gate that determines how much of the past information to forget and an update gate that controls how much of the past information is passed along to future time steps.

- Batch normalization layers

Batch normalization normalizes the output of the previous layer by subtracting the batch mean and dividing by the batch standard deviation.

- Different activation functions: rectified linear unit (ReLU) and leaky ReLU.

ReLU outputs the input directly if it is positive; otherwise, it outputs zero. It is a popular activation function in neural networks, but because it zeroes all negative inputs, neurons whose inputs remain negative stop contributing to learning (“dead” neurons). Leaky ReLU addresses this issue and might therefore yield better results: instead of zeroing negative inputs, it multiplies them by a small positive constant, which prevents “dead” neurons.
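The two activation functions and the batch normalization step described above can be written in a few lines of NumPy. This is a minimal sketch: the slope `alpha=0.01` and the stabilising constant `eps` are common defaults assumed here, and the trainable scale and shift parameters that batch normalization layers normally include are omitted.

```python
import numpy as np


def relu(x):
    """Pass positive values through unchanged; output zero otherwise."""
    return np.maximum(x, 0.0)


def leaky_relu(x, alpha=0.01):
    """Like ReLU, but scale negative values by a small positive constant."""
    return np.where(x > 0, x, alpha * x)


def batch_norm(x, eps=1e-5):
    """Normalise each feature (column) over the batch dimension (rows)."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    return (x - mean) / np.sqrt(var + eps)


x = np.array([-2.0, -0.5, 0.0, 1.5])
print(relu(x))
print(leaky_relu(x))

batch = np.random.default_rng(0).normal(loc=3.0, scale=2.0, size=(64, 5))
normalised = batch_norm(batch)
print(normalised.mean(axis=0).round(3), normalised.std(axis=0).round(3))
```

With leaky ReLU, negative inputs keep a small nonzero output and gradient, whereas ReLU maps them all to zero.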

The steps for building the classification algorithms are as follows:

- Data preparation: The dataset is split into training (80%) and testing (20%) sets.

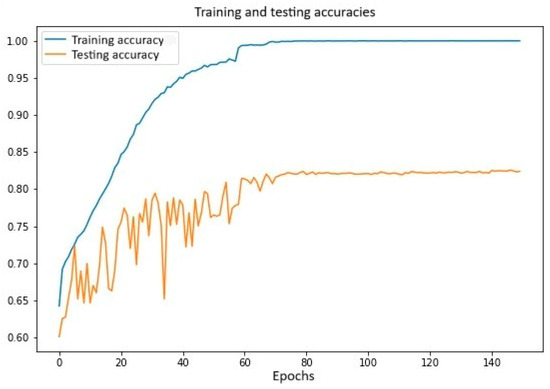

- Building the classification model: The architecture for the classification model is created.