Abstract

This article introduces Lester, a novel method to automatically synthesize retro-style 2D animations from videos. The method approaches the challenge mainly as an object segmentation and tracking problem. Video frames are processed with the Segment Anything Model (SAM) and the resulting masks are tracked through subsequent frames with DeAOT, a method of hierarchical propagation for semi-supervised video object segmentation. The geometry of the masks’ contours is simplified with the Douglas–Peucker algorithm. Finally, facial traits, pixelation and a basic rim light effect can be optionally added. The results show that the method exhibits excellent temporal consistency and can correctly process videos with different poses and appearances, dynamic shots, partial shots and diverse backgrounds. The proposed method provides a simpler and more deterministic approach than video-to-video translation pipelines based on diffusion models, which suffer from temporal consistency problems and do not cope well with pixelated and schematic outputs. The method is also more practical than techniques based on 3D human pose estimation, which require custom handcrafted 3D models and are very limited with respect to the type of scenes they can process.

1. Introduction

In industries such as cinema or video games, rotoscoping is employed to leverage the information from live-action footage for the creation of 2D animations. This technique contributed to the emergence of a unique visual aesthetic in certain iconic video games from the 80s and 90s, such as “Prince of Persia” (1989), “Another World” (1991) or “Flashback” (1992), and it continues to be employed in modern titles like “Lunark” (2023). However, as in the case of Lunark, this technique still requires manual work at the frame level, making it very expensive.

Recent advances in Artificial Intelligence (AI) have opened the possibility of automating this process to some extent. The latest progress in conditional generative models for video-to-video translation offers a promising approach to addressing this issue. However, these methods are not yet consistently able to deliver a polished, professional outcome with uniform style and spatial and temporal consistency. Their performance varies significantly depending on different aspects of the input video, such as scene and resolution. Additionally, they are slow and require enormous computational resources. Therefore, the need for an efficient and deterministic automated solution remains an open problem.

This article describes a novel method, called Lester, to automate this process. A user enters a video and a color palette and the system automatically generates a 2D retro-style animation in a format directly usable in a video game or in an animated movie (see Figure 1 for an example).

Figure 1.

Example synthesized animation frame (right) and its related original frame (left, face has been anonymized) from an example input video.

The method approaches the challenge mainly as an object segmentation problem. Given a frame depicting a person, a mask for each different visual trait (hair, skin, clothes, etc.) is generated with the Segment Anything Model (SAM) [1]. To univocally identify the masks in all the frames, a video object tracking algorithm is employed using the approach of [2]. The masks are purged to solve segmentation errors and their geometry is simplified with the Douglas–Peucker algorithm [3] to achieve the desired visual style. Finally, facial traits, pixelation and a basic rim light effect can be optionally added.

2. Related Work

Max Fleischer’s invention of the rotoscope in 1915 transformed the ability to create animated cartoons by using still or moving images. Variants of this technique have been used to this day in animated films (e.g., “A Scanner Darkly” (2006)), animated television series (e.g., “Undone” (2019)) and video games (e.g., “Prince of Persia” (1989), “Another World” (1991), “Flashback” (1992), “Lunark” (2023)). Contemporary productions integrate image processing algorithms to facilitate the task, but they still rely mainly on costly manual work. Achieving full automation with a polished professional outcome (uniform style, spatial and temporal consistency) remains unfeasible at present. However, recent advances in Artificial Intelligence (AI) open the possibility of moving towards full automation.

To the best of our knowledge, there are two main strategies to address this problem. On the one hand, the challenge can be addressed with conditional generative models, prevalent in image translation tasks nowadays. The first methods (e.g., CartoonGAN [4], AnimeGAN [5] or the work in [6]) used conditional generative adversarial networks (GAN) to transform photos of real-world scenes into cartoon-style images. These methods have recently been surpassed by those based on diffusion models such as Stable Diffusion [7,8]. Although most of these methods have been designed for text-to-image generation, they have been successfully adapted for image-to-image translation using techniques such as SDEdit [9] or ControlNet [10].

Pure image-to-image translation methods with diffusion models also exist (e.g., SR3 [11] and Palette [12]), but they rely on a supervised setup that can only be applied when a ground truth of image pairs (original, target) is available, as in super-resolution or colorization. In any case, even with a good conditional generative model, there is still the challenge of applying it to the frames of a video while preserving temporal consistency. The latest attempts (e.g., [13]) combine tricks (e.g., tiled inputs) to make the output of the generative model more uniform with video-guided style interpolation techniques (e.g., EbSynth [14]). The results of these methods have improved greatly, and for some styles they are good enough to be used already in independent productions. However, for most digital art styles these methods impose an undesirable trade-off between style fidelity and temporal consistency. Among the latest works of this type, OpenAI’s Sora [15] is particularly noteworthy. Analysis of the provided samples reveals that Sora excels in comprehending the physical world and possesses the foundational elements of a world model, holding the potential to evolve into a physics engine in the future [16].

On the other hand, a completely different approach is to apply a 3D human pose estimation algorithm to track the 3D pose through the different frames. The 3D pose is then applied to a 3D character model with the desired aspect (hair, clothes, etc.). Typically, this 3D character model is created by hand with specialized software. Finally, a proper shader and rendering configuration are set up to obtain the desired visual style. This is the approach taken in [17,18,19,20,21]. This approach has many drawbacks. First, it depends on the state of the art of 3D human pose estimation, which has advanced a lot but still fails in many situations (low resolution, truncations, uncommon poses), mainly due to the difficulty of obtaining enough ground-truth data. Second, it requires the design of a proper 3D model for each different character. Third, the 3D pose is based on a given model, typically SMPL. Applying it to a different model requires a pose retargeting step. The joint rotations can be easily retargeted but the SMPL blend shapes cannot, resulting in unrealistic body deformations. This is why demos of this kind of work are usually done with robots, as they do not have deformable parts. An advantage of this approach with respect to generative models is that it provides good temporal consistency, as inconsistencies from the 3D human pose estimation step can be properly solved with interpolation, as is done in [20].

In this work, we propose a third approach, mainly based on segmentation and tracking. Unlike the first mentioned strategy (generative models), the proposed approach is more predictable and provides much better temporal consistency. Unlike the second strategy mentioned (3D human pose estimation), the proposed approach is more robust and much more practical since it does not require having a custom 3D model for each new character. This is not the first time that this kind of problem has been addressed this way. In [22], segmentation was used to stylize a portrait video given an artistic example image, obtaining better results than neural style transfer [23], which was prevalent at that time.

3. Methodology

3.1. Overview

The main input to the method (the code is available at https://github.com/rtous/lester-code (accessed on 20 July 2024); the dataset and the results of the experiments are publicly available at https://github.com/rtous/lester (accessed on 20 July 2024)) is a target video sequence T of a human performance. There are no general constraints on the traits of the depicted person (gender, age, clothes, etc.), pose, orientation, camera movements, background, lighting, occlusions, truncations (partial body shots) or resolution. However, certain scenarios are known to potentially impact the results negatively (this will be addressed in Section 4). While the method is primarily designed for videos featuring a single person, its application to videos with multiple individuals will be discussed in Section 4. When operating without additional inputs, the method defaults to specific settings for segmentation, colors and other attributes. However, it is anticipated that the user might prefer specifying custom configurations, which require supplementary inputs such as segmentation prompts, color palettes, etc., as detailed in the corresponding subsections below. The output of the method is a set of PNG images showing the resulting animated character with a transparent background (the alpha channel of background pixels is set to zero).

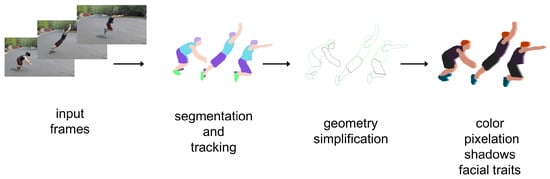

The method consists of three independent processing steps. The main one is the segmentation and tracking of the different visual traits (hair, skin, clothes, etc.) of the person featured in the video frames. The second step focuses on rectifying segmentation errors through mask purging and simplifying their polygonal contours. The third and final step involves applying customizable finishing details, including colors, faux rim light, facial features and pixelation. Figure 2 shows the overall workflow of the method. Each component is elaborated upon in the subsequent subsections.

Figure 2.

Overview of the system’s workflow (inputs on the left, output on the right).

3.2. Segmentation and Tracking

To segment the different visual traits of the character, the Segment Anything Model (SAM) [1] is used. SAM is a promptable segmentation model that is becoming a foundation model for a variety of tasks. Given an input image with three channels (height × width × 3) and a prompt, which can include spatial or text information, it generates a mask (height × width × 3), with each pixel assigned an RGB color. Each color represents an object label from the object label space, inferred from the prompt, corresponding to the predicted object type at that pixel location in the input image. The prompt can be any information indicating what to segment in an image (e.g., a set of foreground/background points, a rough box or mask, or free-form text). SAM consists of an image encoder (based on MAE [24], a pre-trained Vision Transformer [25]) that computes an image embedding, a prompt encoder (with free-form text handled through CLIP [26]) that embeds prompts, and a mask decoder (based on Transformer segmentation models [27]) that combines the two information sources and predicts segmentation masks.

Applying SAM at the frame level would result in different labels for the same object in different frames. SAM does not start from a closed list of object labels but rather creates and assigns labels during execution. Therefore, there is no guarantee that the same labels are assigned to the same object in each frame. To do it consistently, we use SAM-Track [2]. SAM results for the first frame are propagated to the second frame, with the same labels, with DeAOT [28], a method of hierarchical propagation for semi-supervised video object segmentation. The hierarchical propagation can gradually propagate information from past frames to the current frame and transfer the current frame feature from object-agnostic to object-specific. SAM is applied to subsequent frames to detect new objects, but the segmentation of already seen objects is carried out by DeAOT.
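The label-consistency problem described above can be illustrated with a small sketch. It is not DeAOT and not the SAM-Track API: it swaps in a much simpler stand-in, greedy matching by intersection over union (IoU), only to show what it means to carry labels from one frame to the next and to assign fresh labels to newly detected objects. The names `relabel`, `iou` and the `min_iou` threshold are hypothetical.

```python
from typing import Dict, Set, Tuple

Pixel = Tuple[int, int]
Masks = Dict[int, Set[Pixel]]  # label -> set of (row, col) pixels


def iou(a: Set[Pixel], b: Set[Pixel]) -> float:
    """Intersection over union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def relabel(previous: Masks, current: Masks, min_iou: float = 0.3) -> Masks:
    """Give each mask in `current` the label of its best-overlapping mask in
    `previous`. Masks without a good enough match are treated as new objects
    and receive a fresh label. (Toy stand-in for a real tracker.)"""
    result: Masks = {}
    used = set()
    next_label = max(previous, default=0) + 1
    for _, pixels in sorted(current.items()):
        best_label, best_score = None, min_iou
        for label, prev_pixels in previous.items():
            score = iou(pixels, prev_pixels)
            if label not in used and score >= best_score:
                best_label, best_score = label, score
        if best_label is None:  # unseen object: create a new label
            best_label = next_label
            next_label += 1
        used.add(best_label)
        result[best_label] = pixels
    return result


# Frame 1 labels, and frame 2 with arbitrary per-frame labels (7, 9, 4).
frame1 = {1: {(0, 0), (0, 1)}, 2: {(5, 5), (5, 6)}}
frame2 = {7: {(5, 5), (5, 6), (5, 7)}, 9: {(0, 1), (0, 2)}, 4: {(9, 9)}}
tracked = relabel(frame1, frame2)
```

In this toy example the masks labelled 7 and 9 in the second frame recover labels 2 and 1 from the first frame, while the mask labelled 4 has no counterpart and becomes a new object.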

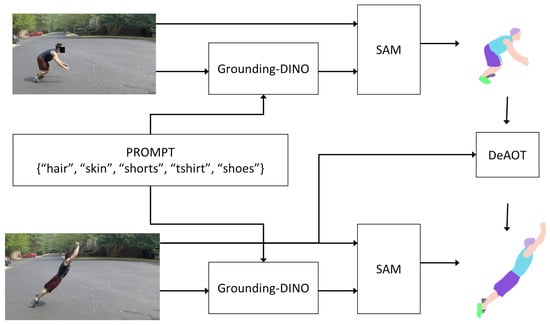

We have chosen keyword-based prompts to specify the desired segmentation. We provide some default prompts for common scenarios (e.g., ‘hair’, ‘skin’, ‘shoes’, ‘t-shirt’, ‘trousers’), but it is anticipated that in many scenarios the user will need to specify an alternative. Because of the limitations of SAM’s free-text capabilities, these prompts are not processed by SAM directly. Instead, they are processed with Grounding-DINO [29], an open-set object detector, which translates them into object boxes that are sent to SAM as box prompts. One problem we have identified is that certain object combinations lead to incorrect masks when they are specified in the same prompt. To solve this, we allow specifying multiple prompts that are processed independently. Figure 3 shows the workflow of the segmentation and tracking stage.

Figure 3.

Workflow of the segmentation and tracking stage for the first two frames (inputs on the left, output on the right).

3.3. Contours Simplification

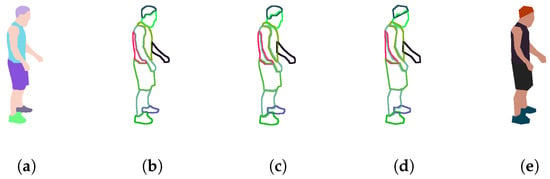

One of the desired options for the target visual style is the possibility of displaying very simple geometries, i.e., shape contours with short polygonal chains, in order to mimic the visual aspect of, e.g., “Another World”. To achieve this, a series of steps is carried out. For each frame, the result of the segmentation and tracking step is a mask with each pixel assigned an RGB color representing an object label. The mask is first divided into submasks, one for each color/label. Each submask is divided, in turn, into contours with the algorithm from [30]. Each individual contour is represented as a vector of the vertex coordinates of the boundary line segments. The number of line segments of each contour is reduced with the Douglas–Peucker algorithm [3]. The algorithm starts by joining the endpoints of the contour with a straight line and finding the vertex farthest from it. If that vertex is closer to the line than a given tolerance value t, all intermediate vertices are removed. Otherwise, the line is split at that vertex, creating two new lines, and the algorithm is applied recursively to each of them until all vertices within the tolerance are removed. Tolerance t is one of the optional parameters of the proposed method and lets the user control the level of simplification. As each contour is simplified independently, undesirable gaps can appear between the geometries of bordering contours that previously were perfectly aligned. To avoid this, before the simplification step, all contours are dilated by convolving the submask with a 4 × 4 kernel. It is worth mentioning that the simplification algorithm may fail for certain contour geometries and values of t. In those situations (usually very small contours) the original contour is kept. See Algorithm 1 and Figure 4.
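As an illustration of the simplification step, the following is a minimal pure-Python sketch of the Douglas–Peucker recursion on an open polygonal chain. It is not the authors' implementation: the function names are hypothetical, and closing the contour, dilation and the fallback to the original contour are left out.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def point_line_distance(p: Point, a: Point, b: Point) -> float:
    """Perpendicular distance from p to the line through a and b
    (plain distance to a when a and b coincide)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0.0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / length


def douglas_peucker(points: List[Point], t: float) -> List[Point]:
    """Simplify an open chain of points with tolerance t."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    # Find the interior vertex farthest from the reference line first-last.
    index, dmax = 0, 0.0
    for i in range(1, len(points) - 1):
        d = point_line_distance(points[i], first, last)
        if d > dmax:
            index, dmax = i, d
    # Every interior vertex is within tolerance: keep only the endpoints.
    if dmax <= t:
        return [first, last]
    # Otherwise split at the farthest vertex and simplify both halves.
    left = douglas_peucker(points[: index + 1], t)
    right = douglas_peucker(points[index:], t)
    return left[:-1] + right  # drop the duplicated split vertex


chain = [(0, 0), (1, 0.05), (2, 0), (3, 3), (4, 0)]
fine = douglas_peucker(chain, t=0.5)    # drops only the near-collinear vertex
coarse = douglas_peucker(chain, t=5.0)  # collapses to the two endpoints
```

A larger tolerance removes more vertices, which is how the parameter t controls the level of simplification.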

| Algorithm 1 Contour simplification algorithm |

| Require: M, a list containing submasks; a minimum contour area; t, a tolerance value for simplification |

| Ensure: R, a list of contours, each being the simplified contour j of submask i |

Figure 4.

Graphical overview of the contour simplification stage of one frame: (a) result of the segmentation and tracking step; (b) contours detection; (c) contours dilation; (d) contours simplification; (e) resulting colored frame.

3.4. Finishing Details

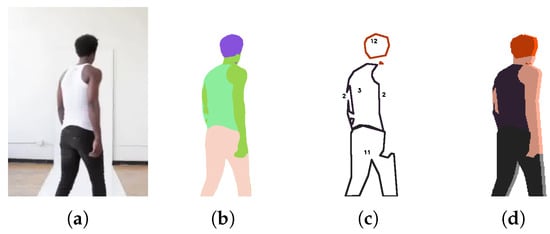

The third and final step of the method entails applying customizable finishing details, granting users flexibility in shaping the visual style of the outcome. The most important aspect is the color. The method generates a visual guide with unique identifiers for each color segment, as shown in Figure 5. One of the inputs to the method is a color palette, which indicates the RGB values that will be applied to each segment identifier.
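A minimal sketch of how a palette could be applied to a map of segment identifiers is shown below. The data layout (a 2D list of integer identifiers, with identifiers absent from the palette treated as transparent background) and the function name `colorize` are assumptions made for illustration, not the format used by the method.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def colorize(segment_ids: List[List[int]], palette: Dict[int, RGB]) -> List[List[RGBA]]:
    """Map every segment identifier to its palette color (fully opaque).
    Identifiers missing from the palette become transparent pixels."""
    transparent: RGBA = (0, 0, 0, 0)
    return [
        [(*palette[s], 255) if s in palette else transparent for s in row]
        for row in segment_ids
    ]


# Hypothetical identifiers: 0 = background, 1 = skin, 2 = t-shirt.
ids = [[0, 1],
       [2, 2]]
palette = {1: (240, 200, 160), 2: (40, 40, 120)}
frame = colorize(ids, palette)
```

Keeping the palette separate from the segmentation means that the same tracked identifiers can be recolored without rerunning the earlier steps.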

Figure 5.

Coloring process: (a) input frame; (b) segmentation; (c) visual guide with unique identifiers for each color segment; (d) final frame.

Another finishing detail, which is optional, is the possibility of adding some schematic-looking facial features. The features are generated from the 68 facial landmarks that can be obtained using the frontal face detector described in [31], which employs a Histogram of Oriented Gradients (HOG) [32] combined with a linear classifier, an image pyramid and a sliding window detection scheme. Another option is the possibility of adding a very simple rim light effect by superimposing the same image with different brightness and displaced horizontally. Finally, a pixelation effect is achieved by resizing the image down using bilinear interpolation and scaling it back up with nearest neighbor interpolation. Figure 6 shows how rim light, facial features and pixelation are progressively applied to an example frame.
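The pixelation effect described above (bilinear downscaling followed by nearest-neighbor upscaling) can be sketched in pure Python on a single-channel image stored as a list of rows. This is only an illustration under those assumptions: the function names and the `factor` parameter are hypothetical, and a real pipeline would normally rely on an image library.

```python
from typing import List

Image = List[List[float]]  # single-channel image as rows of intensities


def resize_bilinear(img: Image, new_h: int, new_w: int) -> Image:
    """Resize by sampling the input at pixel centres with bilinear weights."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(new_h):
        # Map the centre of the output pixel back into input coordinates.
        y = min(max((i + 0.5) * h / new_h - 0.5, 0.0), h - 1.0)
        y0 = int(y)
        y1 = min(y0 + 1, h - 1)
        wy = y - y0
        row = []
        for j in range(new_w):
            x = min(max((j + 0.5) * w / new_w - 0.5, 0.0), w - 1.0)
            x0 = int(x)
            x1 = min(x0 + 1, w - 1)
            wx = x - x0
            top = img[y0][x0] * (1 - wx) + img[y0][x1] * wx
            bottom = img[y1][x0] * (1 - wx) + img[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


def resize_nearest(img: Image, new_h: int, new_w: int) -> Image:
    """Resize by copying the nearest input pixel (produces hard blocks)."""
    h, w = len(img), len(img[0])
    return [[img[i * h // new_h][j * w // new_w] for j in range(new_w)]
            for i in range(new_h)]


def pixelate(img: Image, factor: int) -> Image:
    """Shrink by `factor` with bilinear interpolation, then scale back up."""
    h, w = len(img), len(img[0])
    small = resize_bilinear(img, max(1, h // factor), max(1, w // factor))
    return resize_nearest(small, h, w)


gradient = [[float(4 * r + c) for c in range(4)] for r in range(4)]
blocky = pixelate(gradient, 2)  # 4x4 output made of constant 2x2 blocks
```

Downscaling with bilinear interpolation averages neighboring pixels, while the nearest-neighbor upscaling keeps the block edges sharp, which gives the characteristic retro look.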

Figure 6.

Optional finishing details: (a) Flat method result (b) Rim light (c) Facial features (d) Pixelation.

4. Experiments and Results

To evaluate the performance of the method, we collected a set of 25 short videos ranging from 5 to 20 s in duration. The videos showcase a lone human subject displaying various clothing styles and engaging in diverse actions. The videos were obtained from the UCF101 Human Actions dataset [33], the Fashion Video Dataset [34], the Kinetics dataset [35] and YouTube. The assembled dataset includes both low- and high-resolution videos and both in-the-wild and controlled-setting videos. The dataset and the results of the experiments can be found in [36]. Figure 7 and Figure 8 show some example results.

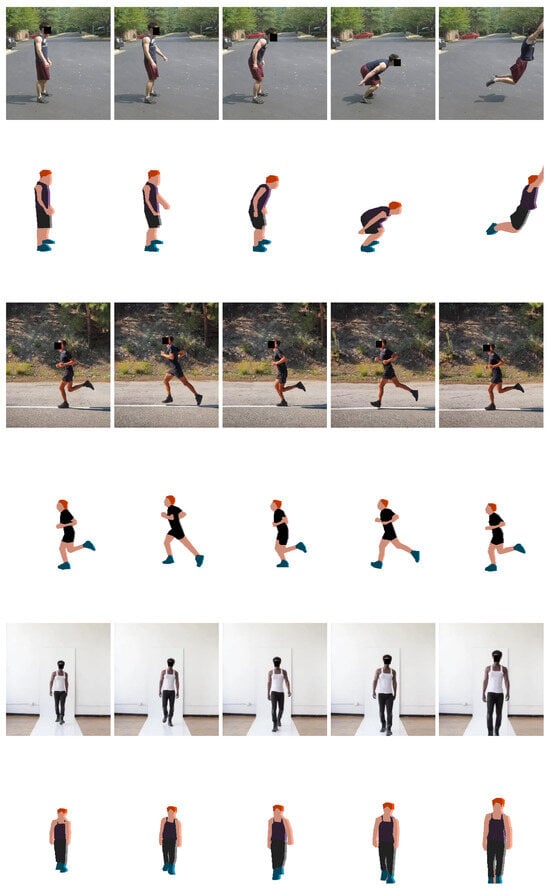

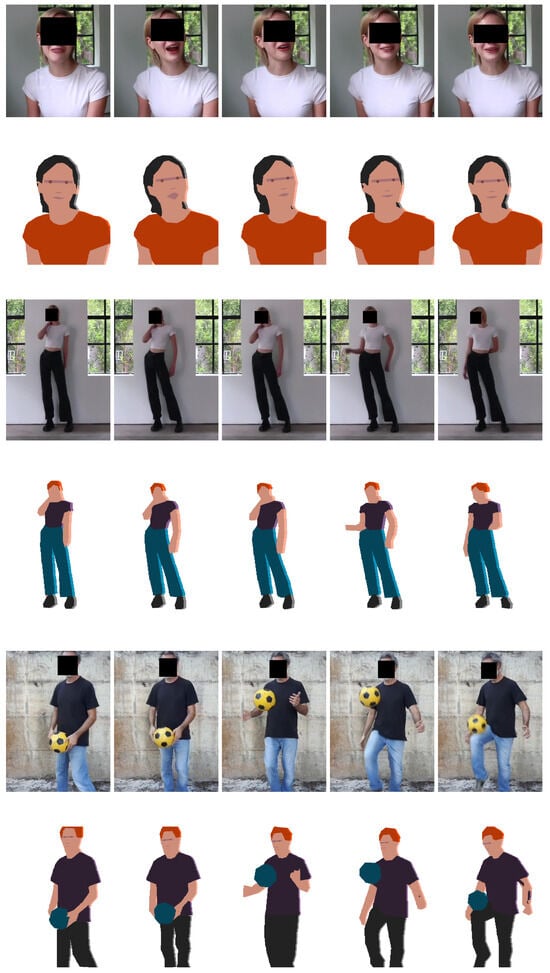

Figure 7.

Example results. Odd rows: input videos (faces have been anonymized). Even rows: method results.

Figure 8.

Example results. Odd rows: input videos (faces have been anonymized). Even rows: method results.

To assess the perceptual quality of the generated animations, a user study was conducted. A survey was designed comprising questions about three visual aspects (shape quality, color consistency and temporal consistency) plus an overall score. Scoring options were based on the Absolute Category Rating (ACR) scale described in the ITU-T P.910 recommendation [37] (from 1 = Bad to 5 = Excellent). The subjective quality assessment of the results is summarized in Table 1. We report the MOS (mean opinion score, described in ITU-T P.800.1 [38]). The videos are grouped into three categories: in-the-wild low resolution (ITW-LR), in-the-wild high resolution (ITW-HR) and controlled setting high resolution (C-HR).
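For reference, the MOS of an item is simply the arithmetic mean of the ACR ratings it received. The sketch below shows the computation. The function name is hypothetical and the ratings are made-up illustrative values, not data from the user study.

```python
from typing import List


def mean_opinion_score(ratings: List[int]) -> float:
    """Arithmetic mean of ACR ratings (integers from 1 = Bad to 5 = Excellent)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ACR ratings must be integers between 1 and 5")
    return sum(ratings) / len(ratings)


# Made-up ratings from four hypothetical participants for one video.
example_ratings = [4, 5, 3, 4]
example_mos = mean_opinion_score(example_ratings)
```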

Table 1.

Subjective quality assessment MOS values of the ACR (1 = Bad to 5 = Excellent) of shape quality, color consistency, temporal consistency and overall quality. a ITW-LR → in-the-wild low resolution; b ITW-HR → in-the-wild high resolution; c C-HR → controlled setting high resolution.

Strengths: The results show that the method is able to synthesize quality animations from diverse input videos (an average MOS of 4.02 out of 5). The performance improves significantly (4.67) on high-resolution videos taken in controlled conditions (videos 2, 11, 12, 13, 14 and 15). The method exhibits very good temporal consistency and can correctly process videos with different poses and appearances, dynamic shots (e.g., video 5), videos with partial shots (e.g., the medium close-up shot in video 7) and diverse backgrounds.

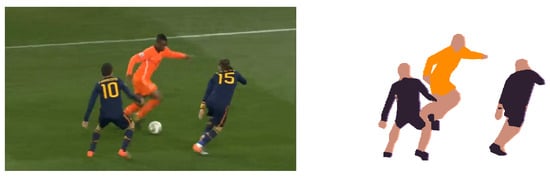

It is worth noting that, although it is not the primary focus of this work, the method can process videos containing multiple people. Objects associated with different individuals are tracked independently, each with a unique identifier, even if the objects are of the same type. This enables mapping these objects to different colors, allowing the entire pipeline to be applied to multi-person shots. Figure 9 shows an example result from a multi-person frame.

Figure 9.

Example synthesized animation frame (right) and its related original frame from an example input multi-person video.

Limitations: The segmentation and tracking performance decreases in videos with low resolution (all videos from the UCF101 Human Actions dataset), complex backgrounds (videos 17 and 20), low-contrast colors (videos 8, 10, 22 and 23), inappropriate lighting (videos 14 and 25) and occlusions (video 23). Figure 10 shows four example frames with errors from four different videos (videos 22, 23, 25 and 8 from the test dataset).

Figure 10.

Example failure cases. Top row: input videos (videos 22, 23, 25 and 8 from the test dataset, faces have been anonymized); bottom row: results.

Computational cost: The method has been implemented in Python. The experiments were run on a high-end server with a quad-core Intel i7-3820 at 3.6 GHz, 64 GB of DDR3 RAM and four NVIDIA Tesla K40 GPU cards with 12 GB of GDDR5 each. Peak RAM usage was 3.1 GB, recorded during the execution of the segmentation task, which also accounts for the peak GPU RAM usage of 2.1 GB. For a single frame, step 1 (segmentation and tracking) takes 0.56 s, step 2 (contours simplification) takes 0.43 s and step 3 (finishing details, mainly facial features) takes 1.32 s. Overall, the method operates at 0.43 FPS (1 FPS without facial features).
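The reported throughput follows directly from the per-frame timings, as the short check below shows. The dictionary keys are hypothetical names, and the second figure approximates "without facial features" by dropping step 3 entirely, which is an assumption since step 3 is only mainly (not exclusively) facial features.

```python
# Per-frame timings reported above, in seconds.
step_seconds = {
    "segmentation_and_tracking": 0.56,
    "contour_simplification": 0.43,
    "finishing_details": 1.32,
}

# Full pipeline: 0.56 + 0.43 + 1.32 = 2.31 s per frame.
total_seconds = sum(step_seconds.values())
fps_full = 1.0 / total_seconds

# Approximation without facial features: steps 1 and 2 only (0.99 s per frame).
seconds_without_step3 = total_seconds - step_seconds["finishing_details"]
fps_without_step3 = 1.0 / seconds_without_step3

print(f"{fps_full:.2f} FPS, {fps_without_step3:.2f} FPS without step 3")
```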

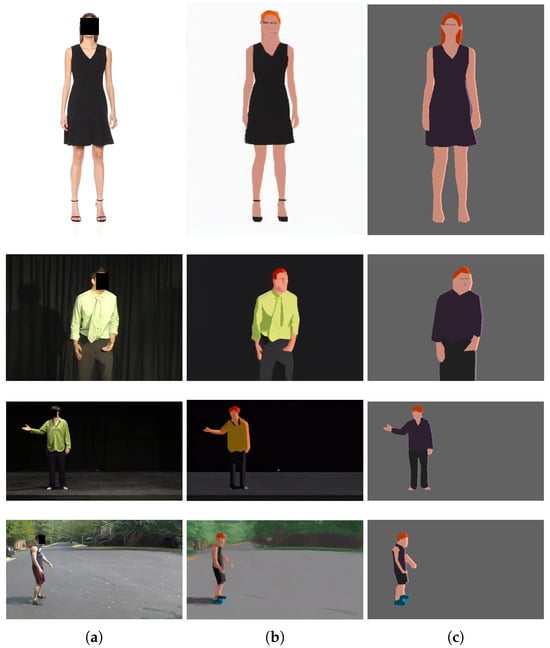

Comparison with image-to-image generative models: Although we are not aware of other methods that have attempted to solve the problem for this specific visual style, an alternative could involve using conditional generative models. One potential pipeline, described in [13], adapts Stable Diffusion [7] for image-to-image translation using ControlNet [10], and employs tiled inputs along with video-guided style interpolation (EbSynth [14]) to maintain visual consistency across frames. We tested this processing pipeline with some videos from the test dataset to evaluate the feasibility of this approach. To achieve the desired style, we fine-tuned Stable Diffusion with Dreambooth [39]. Figure 11 shows four example frames obtained with this approach; the full video results, which are needed to assess temporal consistency, can be found in our results repository [36]. The results reveal several drawbacks compared to the proposed method. Firstly, they exhibit poor temporal consistency across frames. Secondly, the generative pipeline requires specifying the level of fidelity to the desired style, where higher fidelity compromises the original frame structure and details, leading to an undesirable trade-off. Thirdly, the performance varies significantly depending on various aspects of the input video, such as scene and resolution. Lastly, the generative pipeline is slower than the proposed method, with a processing speed of only 0.3 FPS.

Figure 11.

Comparison with a generative pipeline: (a) input frame; (b) generative pipeline described in [13]; (c) Lester (proposed method).

Discussion: The results obtained are very satisfactory and confirm that the latest advances in segmentation and tracking make new applications possible, also in the field of image synthesis. The proposed method has proven to be an effective method for generating rotoscopic animations from videos. The limitations detected do not represent a handicap since it is expected that the method will be used in videos selected or created expressly. Nor does its computational cost since the method is expected to be used in editing tasks that do not require real-time execution. The method is primarily designed for videos featuring a single person, but could be adapted to videos with multiple people by using multiple parameterizations for segmentation prompts and color palettes.

5. Conclusions

In this work we present Lester, a method for automatically synthesizing 2D retro-style animated characters from videos. Rather than addressing the challenge with an image-to-image generative model, or with human pose estimation tools, it is addressed mainly as an object segmentation and tracking problem. Subjective quality assessment shows that the approach is feasible and that the method can generate quality animations from videos with different poses and appearances, dynamic shots, partial shots and diverse backgrounds. Although in-the-wild operation is not the objective, it is worth mentioning that the segmentation and tracking performance of the method is less consistent in the wild, especially on low-resolution videos. However, the method is expected to be used on selected or custom-made videos in semi-controlled environments.

Due to its capabilities, the method constitutes an effective tool that significantly reduces the cost of generating rotoscopic animations for video games or animated films. Due to its characteristics, it is simpler and more deterministic than pipelines based on generative models, and more practical and flexible than techniques based on human pose estimation.

Funding

This research is partially funded by the Spanish Ministry of Science and Innovation under contract PID2019-107255GB, and by the SGR programme 2021-SGR-00478 of the Catalan Government.

Data Availability Statement

The dataset and the results of the experiments are publicly available at https://github.com/rtous/lester (accessed on 20 July 2024). The code is available at https://github.com/rtous/lester-code (accessed on 20 July 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| ACR | Absolute Category Rating |
| GAN | Generative Adversarial Networks |
| HOG | Histogram of Oriented Gradients |
| MOS | Mean Opinion Score |
| SAM | Segment Anything Model |

References

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment anything. arXiv 2023, arXiv:2304.02643.

- Cheng, Y.; Li, L.; Xu, Y.; Li, X.; Yang, Z.; Wang, W.; Yang, Y. Segment and Track Anything. arXiv 2023, arXiv:2305.06558.

- Douglas, D.H.; Peucker, T.K. Algorithms for the Reduction of the Number of Points Required to Represent a Digitized Line or its Caricature. In Classics in Cartography: Reflections on Influential Articles from Cartographica; John Wiley & Sons: Hoboken, NJ, USA, 2011; pp. 15–28.

- Chen, Y.; Lai, Y.K.; Liu, Y.J. CartoonGAN: Generative Adversarial Networks for Photo Cartoonization. In Proceedings of the CVPR. IEEE Computer Society, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9465–9474.

- Chen, J.; Liu, G.; Chen, X. AnimeGAN: A Novel Lightweight GAN for Photo Animation. In Artificial Intelligence Algorithms and Applications, Proceedings of the 11th International Symposium, ISICA 2019, Guangzhou, China, 16–17 November 2019; Springer: Berlin/Heidelberg, Germany, 2020; pp. 242–256.

- Liu, Z.; Li, L.; Jiang, H.; Jin, X.; Tu, D.; Wang, S.; Zha, Z. Unsupervised Coherent Video Cartoonization with Perceptual Motion Consistency. In Proceedings of the Thirty-Sixth AAAI Conference on Artificial Intelligence (AAAI-22), Virtual, 22 February–1 March 2022.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis with Latent Diffusion Models. arXiv 2021, arXiv:2112.10752.

- Esser, P.; Kulal, S.; Blattmann, A.; Entezari, R.; Muller, J.; Saini, H.; Levi, Y.; Lorenz, D.; Sauer, A.; Boesel, F.; et al. Scaling Rectified Flow Transformers for High-Resolution Image Synthesis. arXiv 2024, arXiv:2403.03206.

- Meng, C.; He, Y.; Song, Y.; Song, J.; Wu, J.; Zhu, J.Y.; Ermon, S. SDEdit: Guided Image Synthesis and Editing with Stochastic Differential Equations. In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022.

- Zhang, L.; Rao, A.; Agrawala, M. Adding Conditional Control to Text-to-Image Diffusion Models. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023.

- Saharia, C.; Ho, J.; Chan, W.; Salimans, T.; Fleet, D.J.; Norouzi, M. Image super-resolution via iterative refinement. arXiv 2021, arXiv:2104.07636.

- Saharia, C.; Chan, W.; Chang, H.; Lee, C.; Ho, J.; Salimans, T.; Fleet, D.; Norouzi, M. Palette: Image-to-Image Diffusion Models. In Proceedings of the ACM SIGGRAPH 2022 Conference Proceedings, New York, NY, USA, 7–11 August 2022. SIGGRAPH ’22.

- Yang, S.; Zhou, Y.; Liu, Z.; Loy, C.C. Rerender A Video: Zero-Shot Text-Guided Video-to-Video Translation. In Proceedings of the ACM SIGGRAPH Asia Conference Proceedings, Sydney, NSW, Australia, 12–15 December 2023.

- Jamriska, O. ebsynth: Fast Example-Based Image Synthesis and Style Transfer. 2018. Available online: https://github.com/jamriska/ebsynth (accessed on 20 July 2024).

- Brooks, T.; Peebles, B.; Holmes, C.; DePue, W.; Guo, Y.; Jing, L.; Schnurr, D.; Taylor, J.; Luhman, T.; Luhman, E.; et al. Video Generation Models as World Simulators. 2024. Available online: https://openai.com/index/video-generation-models-as-world-simulators/ (accessed on 20 July 2024).

- Chen, Z.; Li, S.; Haque, M. An Overview of OpenAI’s Sora and Its Potential for Physics Engine Free Games and Virtual Reality. EAI Endorsed Trans. Robot. 2024, 3.

- Liang, D.; Liu, Y.; Huang, Q.; Zhu, G.; Jiang, S.; Zhang, Z.; Gao, W. Video2Cartoon: Generating 3D cartoon from broadcast soccer video. In Proceedings of the MULTIMEDIA ’05, Singapore, 11 November 2005.

- Ngo, V.; Cai, J. Converting 2D soccer video to 3D cartoon. In Proceedings of the 2008 10th International Conference on Control, Automation, Robotics and Vision, Hanoi, Vietnam, 17–20 December 2008; pp. 103–107.

- Weng, C.Y.; Curless, B.; Kemelmacher-Shlizerman, I. Photo Wake-Up: 3D Character Animation From a Single Photo. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Tous, R. Pictonaut: Movie cartoonization using 3D human pose estimation and GANs. Multimed. Tools Appl. 2023, 82, 1–15.

- Tous, R.; Nin, J.; Igual, L. Human pose completion in partial body camera shots. J. Exp. Theor. Artif. Intell. 2023, 1–11.

- Fišer, J.; Jamriška, O.; Simons, D.; Shechtman, E.; Lu, J.; Asente, P.; Lukáč, M.; Sýkora, D. Example-Based Synthesis of Stylized Facial Animations. ACM Trans. Graph. 2017, 36, 1–11.

- Gatys, L.A.; Ecker, A.S.; Bethge, M. A Neural Algorithm of Artistic Style. arXiv 2015, arXiv:1508.06576.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollar, P.; Girshick, R. Masked Autoencoders Are Scalable Vision Learners. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 15979–15988.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual, 3–7 May 2021; Available online: https://openreview.net/ (accessed on 20 July 2024).

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 213–229.

- Yang, Z.; Yang, Y. Decoupling Features in Hierarchical Propagation for Video Object Segmentation. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 28 November–9 December 2022.

- Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Li, C.; Yang, J.; Su, H.; Zhu, J.; et al. Grounding dino: Marrying dino with grounded pre-training for open-set object detection. arXiv 2023, arXiv:2303.05499.

- Suzuki, S.; Abe, K. Topological structural analysis of digitized binary images by border following. Comput. Vision Graph. Image Process. 1985, 30, 32–46.

- Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893.

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A Dataset of 101 Human Actions Classes From Videos in The Wild. arXiv 2012, arXiv:1212.0402.

- Zablotskaia, P.; Siarohin, A.; Zhao, B.; Sigal, L. DwNet: Dense warp-based network for pose-guided human video generation. In Proceedings of the 30th British Machine Vision Conference 2019, BMVC 2019, Cardiff, UK, 9–12 September 2019; p. 51.

- Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Viola, F.; Green, T.; Back, T.; Natsev, P.; et al. The Kinetics Human Action Video Dataset. arXiv 2017, arXiv:1705.06950.

- Tous, R. Lester Project Home Page. Available online: https://github.com/rtous/lester (accessed on 20 July 2024).

- ITU. Subjective Video Quality Assessment Methods for Multimedia Applications. ITU-T Recommendation P.910. 2008. Available online: https://www.itu.int/rec/T-REC-P.910 (accessed on 20 July 2024).

- ITU. Mean Opinion Score (MOS) Terminology. ITU-T Recommendation P.800.1 Methods for Objective and Subjective Assessment of Speech and Video Quality. 2016. Available online: https://www.itu.int/rec/T-REC-P.800.1 (accessed on 20 July 2024).

- Ruiz, N.; Li, Y.; Jampani, V.; Pritch, Y.; Rubinstein, M.; Aberman, K. DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation. arXiv 2023, arXiv:2208.12242.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).