1. Introduction

Generative AIs have increasingly become an integral part of our everyday lives [1]. In particular, generative AIs for text generation are engineered to replicate human-like dialogues and offer help, information, and even emotional support [2,3,4,5,6]. They are available 24/7 and provide instant responses, making them a valuable tool for customer service, personal assistants, and many other applications. Among them, ChatGPT (https://openai.com/chatgpt, accessed on 29 January 2024) stands out as one of the most frequently used for text generation [7]. Within just five days of its launch, more than one million users registered [7].

The transformative power of AI also permeates the field of coding, an area where technology has made significant strides: AI-powered chatbots are not only capable of conducting human-like conversations but can also generate program code [8,9]. At the same time, Integrated Development Environments (IDEs), unit tests, and benchmarking tools have simplified coding, making it more accessible to both seasoned developers and beginners [10]. In this sense, AI-powered chatbots and coding tools are two sides of the same coin, both contributing to the transformation of how we use technology. However, a critical challenge remains: the time and manual effort required for coding, especially for those new to the craft [11,12,13].

While AI tools promise to streamline the coding process and lower the barriers to entry for aspiring coders, it is important to note that they still have their limits. For example, they may not always produce correct, efficient, or maintainable program codes. Therefore, it is crucial to carefully consider these aspects when deciding whether or not to use such tools [14].

While substantial research has focused on evaluation metrics for correctness like pass@k [15,16,17], there is a noticeable gap: an extensive comparison of AI-generated and human-generated program codes based on various metrics has largely been missing. Consequently, our study embarks on a comprehensive and in-depth exploration of the coding capabilities of seven state-of-the-art generative AIs. Our goal is to evaluate the AI-generated program codes with respect to their correctness, efficiency and maintainability, based on the interplay of various metrics such as cyclomatic complexity and maintainability index. Moreover, we go one step further by comparing the AI-generated codes with corresponding human-generated codes written by professional programmers.

Our contributions are as follows:

We investigate and compare the correctness, efficiency and maintainability of AI-generated program codes using various evaluation metrics.

We are the first to extensively compare AI-generated program codes to human-generated program codes.

We analyze the program codes that address problems of three difficulty levels—easy, medium and hard.

In the next section, we will provide an overview of related work. In Section 3, we will present our experimental setup. Our experiments and results will be described in Section 4. In Section 5, we will conclude our work and indicate possible future steps.

2. Related Work

In this section, we will look at existing work regarding automatic program code generation and evaluation.

Codex (https://openai.com/blog/openai-codex, accessed on 29 January 2024) is an AI model for program code generation based on GPT-3.0 and fine-tuned on program code publicly available on GitHub [16]. Ref. [16] tested Codex's capabilities in generating Python code from natural language instructions given in in-code comments known as docstrings. They also created HumanEval, a dataset of 164 hand-written coding problems in natural language plus their unit tests to assess the functional correctness of program code. One discovery from their research was that if Codex is asked to generate code for the same problem several times, the probability that at least one generated code is correct increases. Consequently, they used pass@k as an evaluation metric, where k is the number of program codes generated per task, and pass@k is the fraction of tasks for which at least one of the k generated program codes passes all unit tests. To obtain an unbiased estimate of pass@k, ref. [16] generated n ≥ k program codes per task, counted the number c of correct ones, and computed the estimate from n, c and k instead of using the original calculation. Codex achieved a pass@1 of 28.8% in solving the provided problems. They compared the Codex-generated code with program code generated by GPT-3.0 and GPT-J [18]: GPT-3.0 achieved a pass@1 of 0% and GPT-J 11.4%. With pass@100, Codex even achieved 70.2%.
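The unbiased estimator used in [16] can be sketched as follows. Assuming n ≥ k program codes are generated for a task and c of them pass all unit tests, the per-task estimate is 1 − C(n−c, k)/C(n, k), i.e., one minus the probability that k codes drawn without replacement are all incorrect; the benchmark score is the mean over tasks. The function names are our own, and this is an illustrative sketch rather than the reference implementation.

```python
from math import comb
from statistics import mean


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased per-task estimate of pass@k.

    n: number of program codes generated for the task (n >= k)
    c: number of those codes that pass all unit tests
    k: number of attempts considered
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every subset of k codes contains at least one correct code.
        return 1.0
    # 1 - P(all k codes drawn without replacement are incorrect)
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average the per-task estimates; `results` holds one (n, c) pair per task."""
    return mean(pass_at_k(n, c, k) for n, c in results)
```

With a single generation per task (n = k = 1), pass@1 reduces to the fraction of solved tasks; for example, `benchmark_pass_at_k([(1, 1), (1, 0), (1, 0), (1, 1)], k=1)` yields 0.5.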

Ref. [19] evaluated the validity, correctness, and efficiency of program code generated by GitHub (GH) Copilot using HumanEval. GH Copilot is an IDE extension that uses Codex. Ref. [19] defined a code as valid if it complied with the syntax rules of a given programming language. The correctness was computed by dividing the number of passed unit tests of a programming task by the number of all unit tests for this specific task. The efficiency was measured by determining the time and space complexities of the generated codes. Their results demonstrate that Codex was able to generate valid code with a success rate of 91.5%. Regarding code correctness, 28.7% of the codes were generated correctly, 51.2% partially correctly, and 20.1% incorrectly.
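For Python, the validity and correctness measures described for [19] can be sketched as below. Using `ast.parse` as the syntax check and mapping the pass ratio to "correct", "partially correct" and "incorrect" (all tests, some tests, no tests passed) are our assumptions for illustration; [19] does not prescribe this code.

```python
import ast


def is_valid_python(source: str) -> bool:
    """Valid = compliant with the syntax rules of the language (here: Python)."""
    try:
        ast.parse(source)
    except SyntaxError:
        return False
    return True


def correctness(passed: int, total: int) -> float:
    """Share of a task's unit tests that the generated code passes."""
    if total <= 0 or not 0 <= passed <= total:
        raise ValueError("need total > 0 and 0 <= passed <= total")
    return passed / total


def correctness_category(passed: int, total: int) -> str:
    """Assumed mapping: all tests pass -> correct, none pass -> incorrect."""
    score = correctness(passed, total)
    if score == 1.0:
        return "correct"
    if score == 0.0:
        return "incorrect"
    return "partially correct"
```

For example, a code passing one of four unit tests scores 0.25 and is counted as partially correct.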

Ref. [17] used HumanEval to evaluate the validity, correctness, security, reliability, and maintainability of Python code generated by GH Copilot, Amazon CodeWhisperer (https://aws.amazon.com/de/codewhisperer, accessed on 29 January 2024), and ChatGPT. They defined valid code and correct code as in [19]. To determine security, reliability, and maintainability, they used SonarQube (https://www.sonarqube.org, accessed on 29 January 2024). Their calculation of a code's security is based on the potential cybersecurity vulnerabilities of this code. The reliability is based on the number of bugs. The maintainability is measured by counting the code smells present. ChatGPT generated 93.3% valid program codes, GH Copilot 91.5%, and CodeWhisperer 90.2%. ChatGPT generated the highest share of correct program codes (65.2%), followed by GH Copilot (46.3%) and CodeWhisperer (31.1%). However, when they evaluated newer versions of GH Copilot and CodeWhisperer, they measured 18% and 7% better values for correctness, respectively. Due to the small number of generated code fragments, the numbers for security were not usable. Concerning reliability, ChatGPT produced two bugs, GH Copilot three bugs and CodeWhisperer one bug. CodeWhisperer produced the most maintainable code, ChatGPT the second most, and GH Copilot the least maintainable.

Ref. [15] introduced a new framework for program code evaluation named EvalPlus. Furthermore, they created HumanEval+, an extension of HumanEval built with EvalPlus' automatic test input generator. HumanEval+ contains 80 times as many test cases as HumanEval, which enables more comprehensive testing and analysis of AI-generated code. With HumanEval+, ref. [15] evaluated the functional correctness of program code generated by 26 different AI models based on GPT-4 [20], Phind-CodeLlama [21], WizardCoder-CodeLlama [22], ChatGPT [23], Code Llama [24], StarCoder [25], CodeGen [26], CODET5+ [27], MISTRAL [28], CodeGen2 [29], VICUNA [30], SantaCoder [31], INCODER [32], GPT-J [33], GPT-NEO [34], PolyCoder [35], and StableLM [36] with pass@k. Looking at pass@1, the top five performers were GPT-4 (76.2%), Phind-CodeLlama (67.1%), WizardCoder-CodeLlama (64.6%), ChatGPT (63.4%), and Code Llama (42.7%).

DeepMind developed an AI model for code generation named AlphaCode [37]. On the coding competition website codeforces.com, the AI model achieved an average ranking in the top 54.3% of more than 5000 participants for Python and C++ tasks. The ranking takes runtime and memory usage into account.

Ref. [38] evaluated GH Copilot's ability to produce solutions for 33 LeetCode problems using Python, Java, JavaScript, and C. They calculated the correctness by dividing the number of passed test cases by the total number of test cases per problem. Understandability was measured as cyclomatic and cognitive complexity, both retrieved using SonarQube; due to configuration problems, however, they were not able to analyze the understandability of the codes generated in C. Concerning correctness, GH Copilot performed best in Java (57%) and worst in JavaScript (27%). In terms of understandability, GH Copilot's Python, Java and JavaScript program codes had a cognitive complexity of 6 and a cyclomatic complexity of 5 on average, with no statistically significant differences between the programming languages.

In comparison to the aforementioned related work, our focus is to evaluate the Java, Python and C++ code produced by GH Copilot (Codex, GPT-3.0), CodeWhisperer, Bing AI Chat (GPT-4.0), ChatGPT (GPT-3.5), Code Llama (Llama 2), StarCoder, and InstructCodeT5+. For comparison, we also assess human-generated program code. We obtain the correctness, efficiency and maintainability of our program codes by measuring time and space complexity, runtime and memory usage, lines of code, cyclomatic complexity, Halstead complexity and maintainability index. As a user usually does not generate code for the same problem several times, we evaluate pass@k with k = 1 (pass@1), which is our metric for correctness. Finally, we analyze which of the incorrect and the not executable program codes have the potential to be easily modified manually and then used quickly and without much effort to solve the corresponding coding problems.

4. Experiments and Results

To ensure the validity of our experiments, we had the generative AIs generate all program codes within a short time period, from August to September 2023, making sure that the same version of each generative AI was used throughout. In this section, we will first analyze which generated program codes are correct, i.e., solve the coding problems. Then we will compare the quality of the correct program codes using our evaluation criteria: lines of code, cyclomatic complexity, time complexity, space complexity, runtime, memory usage, and maintainability index. Finally, we will evaluate which of the incorrect program codes have the potential, due to their maintainability and proximity to the correct program code, to be easily modified manually and then used quickly and without much effort to solve the corresponding problems.

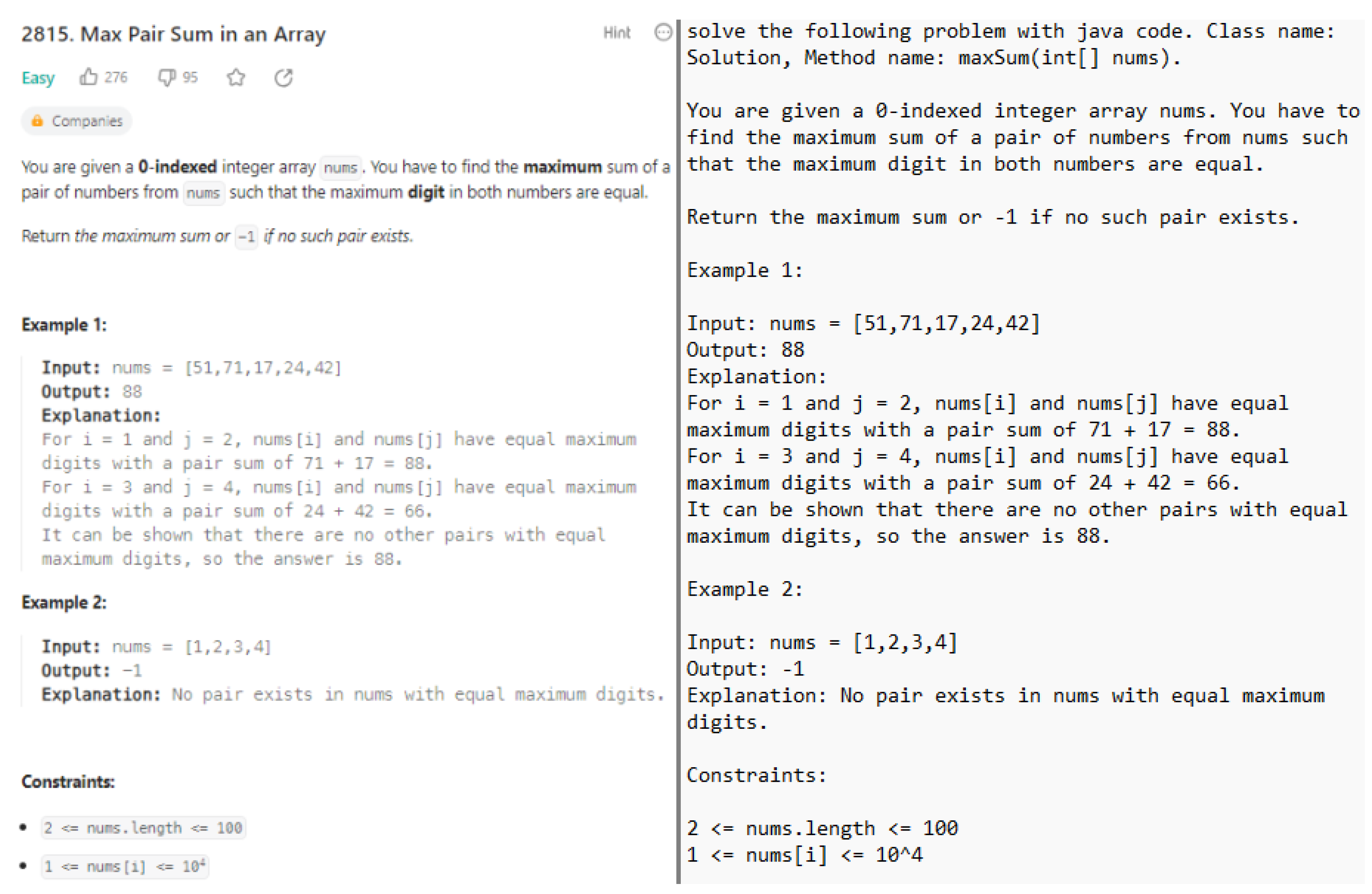

4.1. Correct Solutions

Table 3 shows which generated program codes for our six tasks in the three programming languages Java (J), Python (P) and C++ (C) were correct (indicated with ✓), i.e., solved the coding problem. The entry “—” indicates program code that was incorrect, i.e., did not solve the coding problem.

We observe that 122 of the 126 AI-generated program codes are executable. Four program codes result in a compilation error, which is marked separately in Table 3. Out of our 18 programming tasks (six coding problems in three programming languages), GH Copilot generated nine correct program codes (50%) in total (∑), followed by Bing AI with seven correct program codes (39%). ChatGPT and Code Llama produced only four correct program codes (22%) each, and StarCoder and InstructCodeT5+ one each (6%). CodeWhisperer did not produce correct program code in any of the three programming languages. This results in 26 correct program codes, which we will further analyze in Sections 4.2 to 4.8.

A look at the difficulty levels shows that the generative AIs mostly generated correct program code for easy and medium coding problems. Regarding the programming languages, most correct Java (J) and C++ (C) code was generated by GH Copilot, and most correct Python (P) code by Bing AI. The program code written by human programmers (human) was always correct, independent of the programming language and the difficulty of the coding problem.
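The totals in this subsection can be reproduced from the per-AI counts reported here and in Section 5: seven generative AIs, each facing 18 task/language combinations, give 126 program codes. The sketch below is only a bookkeeping aid; it rounds to one decimal as in Section 5, whereas the text above rounds to whole percentages.

```python
# Correct program codes per generative AI, as reported in Sections 4.1 and 5
correct = {
    "GH Copilot": 9,
    "Bing AI": 7,
    "ChatGPT": 4,
    "Code Llama": 4,
    "StarCoder": 1,
    "InstructCodeT5+": 1,
    "CodeWhisperer": 0,
}
TASKS_PER_AI = 6 * 3  # six LeetCode problems x three languages (J, P, C)


def share(count: int, total: int) -> float:
    """Percentage rounded to one decimal."""
    return round(100 * count / total, 1)


per_ai = {ai: share(n, TASKS_PER_AI) for ai, n in correct.items()}
total_correct = sum(correct.values())
total_generated = TASKS_PER_AI * len(correct)
overall = share(total_correct, total_generated)

if __name__ == "__main__":
    for ai, pct in per_ai.items():
        print(f"{ai}: {correct[ai]}/{TASKS_PER_AI} = {pct}%")
    print(f"all: {total_correct}/{total_generated} = {overall}%")
```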

4.2. Lines of Code

Table 4 shows the number of code lines in the 26 correct program codes that solve the corresponding coding problem, plus the number of code lines in our human-written reference program codes (human).

Out of the 26 correct program codes, in eight cases (31%) a generative AI was able to solve the coding problem with code that contains fewer lines of code than human. However, 13 AI-generated program codes (50%) were outperformed by human in this evaluation metric. For task#2, all five corresponding program codes (19%) contained the same number of lines of code as human. Bing AI and GH Copilot performed better than the other generative AIs, each generating three program codes with fewer lines of code than human.

In the hard task#6, where only ChatGPT produced a correct program code, ChatGPT was even able to solve the problem with 10 Python (P) lines of code, which is 60% fewer lines of code than human. Bing AI generated 86% fewer lines of code to solve task#3 in Python (P). Bing AI and GH Copilot produced 36% fewer lines of code to solve task#2 in Java (J), and Bing AI's program code for task#3 requires 22% fewer C++ (C) lines of code. Furthermore, GH Copilot's and Code Llama's program codes for task#3 require 10% fewer Java (J) lines of code.

4.3. Cyclomatic Complexity

Table 5 shows the cyclomatic complexity of the 26 correct program codes that solve the coding problem, plus the cyclomatic complexity of our human-written reference program codes (human). The cyclomatic complexity measures the number of linearly independent paths through a program code. As described in Section 3.3.2, a higher cyclomatic complexity indicates worse code quality.
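To illustrate how this count works, the sketch below computes a simplified McCabe cyclomatic complexity for a Python snippet: one plus the number of decision points. Which syntax nodes count as decision points (branches, loops, exception handlers, comprehension clauses, and extra boolean operands) is our assumption; dedicated tools may count slightly differently, and Section 3.3.2 defines the measure actually used.

```python
import ast

# Node types that each add one decision point (simplified McCabe count).
_BRANCH_NODES = (ast.If, ast.IfExp, ast.For, ast.AsyncFor, ast.While, ast.ExceptHandler)


def cyclomatic_complexity(source: str) -> int:
    """Return 1 + number of decision points in the given Python snippet."""
    decisions = 0
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            decisions += 1
        elif isinstance(node, ast.comprehension):
            # each 'for' clause and each 'if' filter inside a comprehension
            decisions += 1 + len(node.ifs)
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' adds two short-circuit branches
            decisions += len(node.values) - 1
    return decisions + 1
```

A straight-line function scores 1; adding an `if x > 0 and x < 10:` and a `for` loop raises the score to 4, since each creates an additional independent path.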

Out of the 26 correct program codes, in seven cases (27%) a generative AI was able to solve the coding problem with program code that has a lower cyclomatic complexity than human. Only four AI-generated program codes (15%) were outperformed by human in this evaluation metric. Fifteen program codes (58%) have the same cyclomatic complexity as human. GH Copilot performed better than the other generative AIs, generating three program codes with a lower cyclomatic complexity than human.

In the medium task#3, where only Bing AI produced correct program code in Python (P), Bing AI was even able to solve the problem with a cyclomatic complexity of 3, which is 67% less than human. ChatGPT generated code with 50% less cyclomatic complexity to solve task#6 in Python (P). GH Copilot and Code Llama produced code with 33% less cyclomatic complexity to solve task#3 in Java (J). Bing AI and GH Copilot also generated code with 33% lower cyclomatic complexity to solve task#3 in C++ (C). Moreover, the C++ (C) program code for task#4 has 25% less cyclomatic complexity than human.

4.4. Time Complexity

Table 6 illustrates the time complexity of the 26 correct program codes plus the time complexity of our human-written reference program codes (human). As described in Section 3.3.3, the time complexity quantifies the upper bound of the time needed by an algorithm as a function of the input size [56]. The lower the order of the function, the better the complexity. Entries spanning several columns indicate that the value is the same in all of these columns.
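To make the order comparison concrete, consider a hypothetical example that is not one of our six LeetCode tasks: two correct solutions for detecting a duplicate in a list. The nested-loop version needs O(n²) time, while the set-based version needs O(n) expected time, so the latter would be rated better under this criterion.

```python
def has_duplicate_quadratic(nums: list[int]) -> bool:
    """O(n^2) time: compares every pair; O(1) extra space."""
    for i in range(len(nums)):
        for j in range(i + 1, len(nums)):
            if nums[i] == nums[j]:
                return True
    return False


def has_duplicate_linear(nums: list[int]) -> bool:
    """O(n) expected time: one pass with a hash set; O(n) extra space."""
    seen: set[int] = set()
    for x in nums:
        if x in seen:
            return True
        seen.add(x)
    return False
```

Both functions return the same answers; the set-based version buys its lower time complexity with O(n) extra space, which is one reason why time and space complexity are reported separately.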

Out of the 26 correct program codes, in one case (4%) a generative AI was able to solve the coding problem with code that has a lower time complexity than human. Thirteen AI-generated program codes (50%) were outperformed by human in this evaluation metric. Twelve program codes (46%) have the same time complexity as human. GH Copilot performed better than the other generative AIs, generating one program code with a lower time complexity than human and four program codes with equal time complexity. ChatGPT, Bing AI, and Code Llama each produced two program codes with the same time complexity as human.

4.5. Space Complexity

Table 7 illustrates the space complexity of the 26 correct program codes plus the space complexity of our human-written reference program codes (human). Similar to the time complexity, the space complexity quantifies the upper bound of the space needed by an algorithm as a function of the input size [57], as described in Section 3.3.3. Analogous to the time complexity, the lower the order of the function, the better the complexity. Entries spanning several columns again indicate that the value is the same in all of these columns.
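Analogously, a hypothetical example for space complexity (again not one of our tasks): reversing a list by building a reversed copy needs O(n) extra space, whereas swapping elements in place with two indices needs only O(1) extra space. Both run in O(n) time, so only the space criterion distinguishes them.

```python
def reversed_copy(items: list[int]) -> list[int]:
    """O(n) extra space: allocates a new list of the same length."""
    return items[::-1]


def reverse_in_place(items: list[int]) -> list[int]:
    """O(1) extra space: swaps elements pairwise from both ends."""
    left, right = 0, len(items) - 1
    while left < right:
        items[left], items[right] = items[right], items[left]
        left += 1
        right -= 1
    return items
```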

Out of the 26 correct program codes, in seven cases (27%) a generative AI was able to solve the coding problem with code that has a lower space complexity than human. Only one AI-generated program code (4%) was outperformed by human in this evaluation metric. Eighteen program codes (69%) have the same space complexity as human. This shows that the generative AIs perform considerably better in terms of space complexity than in terms of time complexity. Again, GH Copilot outperformed the other generative AIs, generating three program codes with a lower space complexity than human and five program codes with equal space complexity. Bing AI generated five program codes with the same space complexity as human, ChatGPT and Code Llama three each, and StarCoder and InstructCodeT5+ one each.

4.6. Runtime

Table 8 shows the runtime of the 26 correct program codes plus the runtime of our human-written reference program codes (human) on LeetCode in milliseconds. The lower the runtime of a program code, the better. The runtime of the six correct program codes labeled with “*” could not be measured since their execution resulted in a Time Limit Exceeded error on LeetCode.

Out of the 26 correct program codes, in six cases (23%) a generative AI was able to solve the coding problem with code that has a lower runtime than human. Seventeen AI-generated program codes (65%) were outperformed by human in this evaluation metric. Three program codes (12%) had the same runtime as human. GH Copilot performed better than the other generative AIs, generating two program codes with a lower runtime than human and one program code with the same runtime.

In easy task#1, where only GH Copilot produced the correct program code in Java (J), GH Copilot was even able to solve the problem in a runtime of 4 milliseconds, while human's program code needed 125% more runtime. InstructCodeT5+ generated code that took 55% less runtime to solve task#2 in Python (P). GH Copilot produced code that took 12% less runtime to solve task#4 in C++ (C). Bing AI, GH Copilot and Code Llama generated code that took the same runtime as human to solve task#2 in Java (J).
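The runtimes above were measured by LeetCode on its own servers. For a rough local comparison of two Python solutions, one could use the standard library's `timeit` module as sketched below; the helper name and the repeat counts are our assumptions, and local numbers are not comparable to LeetCode's measurements.

```python
import timeit
from typing import Callable


def runtime_ms(func: Callable[[], object], repeat: int = 5, number: int = 100) -> float:
    """Best-of-`repeat` average runtime of one call to `func`, in milliseconds."""
    # Each entry is the total time for `number` calls.
    timings = timeit.repeat(func, repeat=repeat, number=number)
    # The minimum reduces noise from other processes; divide by calls per timing.
    return min(timings) / number * 1000.0
```

For instance, `runtime_ms(lambda: sorted(range(10_000), reverse=True))` times one sort call; taking the minimum over repetitions is a common way to suppress interference from other processes.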

4.7. Memory Usage

Table 9 lists the memory usage of the 26 correct program codes plus the memory usage of our human-written reference program codes (human) on LeetCode in megabytes. The lower the memory usage of a program code, the better. The memory usage of the six correct program codes labeled with “*” could not be measured since their execution resulted in a Time Limit Exceeded error on LeetCode.

Out of the 26 correct program codes, in five cases (19%) a generative AI was able to solve the coding problem with code that has a lower memory usage than human. Eleven AI-generated program codes (42%) were outperformed by human in this evaluation metric. Ten program codes (38%) required the same memory as human. GH Copilot performed better than the other generative AIs, generating one program code with a lower memory usage than human and five program codes with the same memory usage, closely followed by Bing AI, which generated one program code with a lower memory usage than human and three program codes with the same memory usage. However, where the AI-generated program codes use less memory, the reduction is below 1% relative to human and thus negligible.
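Likewise, LeetCode reports memory usage from its own environment. A local, Python-only approximation can use the standard library's `tracemalloc` to record the peak memory allocated while a solution runs. This counts only allocations made through Python's allocator, not the whole process, and is an illustrative assumption rather than LeetCode's method.

```python
import tracemalloc
from typing import Callable


def peak_memory_mb(func: Callable[[], object]) -> float:
    """Peak memory (in MB of 2**20 bytes) allocated by Python while `func` runs."""
    tracemalloc.start()
    try:
        func()
        _, peak = tracemalloc.get_traced_memory()  # (current, peak) in bytes
    finally:
        tracemalloc.stop()
    return peak / (1024 * 1024)
```

For example, `peak_memory_mb(lambda: [0] * 1_000_000)` reports roughly 7.6 MB on a 64-bit build, dominated by the list's pointer array.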

4.8. Maintainability Index

Table 10 shows the maintainability index of the 26 correct program codes plus the maintainability index of our human-written reference program codes (human). The higher the maintainability index of a program code, the better.

Out of the 26 correct program codes, in 13 cases (50%) a generative AI was able to produce code with a higher maintainability index than human. In the other 13 cases (50%), human achieved a higher maintainability index. GH Copilot and Bing AI performed better than the other generative AIs, each generating four program codes (15%) with a higher maintainability index than human. ChatGPT and Code Llama each produced two such program codes (8%).
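The exact MI formula used in this work is given in Section 3.3.5 and Table 2. For orientation, the sketch below implements one widely used variant, the classic formula based on Halstead volume V, cyclomatic complexity G and lines of code LOC, rescaled to 0 to 100 as popularized by Visual Studio. Treating it as identical to the formula in Table 2 would be an assumption.

```python
import math


def maintainability_index(volume: float, cyclomatic: int, loc: int) -> float:
    """MI rescaled to 0..100 (higher = more maintainable).

    Classic form 171 - 5.2 ln(V) - 0.23 G - 16.2 ln(LOC), multiplied by
    100/171 and clipped at 0. Assumed variant, for illustration only.
    """
    if volume <= 0 or loc <= 0:
        raise ValueError("volume and loc must be positive")
    raw = 171 - 5.2 * math.log(volume) - 0.23 * cyclomatic - 16.2 * math.log(loc)
    return max(0.0, raw * 100 / 171)
```

Every term enters with a negative sign, so larger volume, more decision points, or more lines all lower the index, which matches the "higher is better" reading of Table 10.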

4.9. Potential of Incorrect AI-Generated Program Code

After analyzing the 26 correct program codes, our goal was to evaluate which of the 96 incorrect and the four not executable program codes have the potential to be easily modified manually and then used quickly and without much effort to solve the corresponding coding problems. Consequently, we first had programmers determine which of these 100 program codes have the potential to be corrected manually due to their maintainability and proximity to the correct program code. This resulted in 24 potentially correct program codes, for which we further estimated the time to correct (TTC) the incorrect program code and obtain the correct program code.

To obtain a fair comparison between the program codes that does not depend on the experience of individual programmers, we report the TTC using Halstead's estimate of the implementation time, which depends only on the operators and operands in the program code, not on the expertise of a programmer. Consequently, to estimate the TTC in seconds, we developed a formula based on the absolute difference |T_correct − T_incorrect|, where T_correct is Halstead's implementation time ([58], pp. 57–59) of the correct program code in seconds and T_incorrect is Halstead's implementation time ([58], pp. 57–59) of the incorrect program code in seconds. The absolute difference is necessary because T_correct can be lower than T_incorrect if parts of the program code need to be removed in order to obtain the correct program code. As Halstead's implementation time only considers the effort of implementing and understanding the program based on its operators and operands, but not the time to maintain the code, which is necessary when correcting program code, we additionally computed the time to maintain the program with the help of the maintainability index MI. The MI is based on lines of code, cyclomatic complexity and Halstead's volume as described in Section 3.3.5 and shown in Table 2. The time to maintain in seconds is estimated from the MI, the maintainability index between 0 and 100, based on [60]. To obtain the MI in a range of 0 to 1, we divided it by 100. This way, the estimate is extended with a factor that is higher for less maintainable program code.
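Halstead's implementation time, the building block of the TTC estimate, can be sketched from four basic counts of a program: distinct operators η1, distinct operands η2, total operators N1 and total operands N2. We assume the textbook definitions V = N log2 η, D = (η1/2)(N2/η2), E = D·V and T = E/18 seconds (Stroud number 18); how tokens are classified as operators or operands in Java, Python or C++ is not shown. The second function returns only the absolute difference between the implementation times of the correct and the incorrect program code, not the complete TTC including the maintainability extension.

```python
import math
from dataclasses import dataclass

STROUD_NUMBER = 18  # Halstead's assumed elementary mental discriminations per second


@dataclass(frozen=True)
class HalsteadCounts:
    """Basic counts of a program (token classification not shown here)."""
    distinct_operators: int  # eta1
    distinct_operands: int   # eta2
    total_operators: int     # N1
    total_operands: int      # N2


def implementation_time(c: HalsteadCounts) -> float:
    """Halstead's implementation time T = E / 18, in seconds."""
    if c.distinct_operators < 1 or c.distinct_operands < 1:
        raise ValueError("need at least one distinct operator and operand")
    vocabulary = c.distinct_operators + c.distinct_operands  # eta
    length = c.total_operators + c.total_operands            # N
    volume = length * math.log2(vocabulary)                  # V = N log2(eta)
    difficulty = (c.distinct_operators / 2) * (
        c.total_operands / c.distinct_operands)              # D
    effort = difficulty * volume                             # E = D * V
    return effort / STROUD_NUMBER


def implementation_time_gap(correct: HalsteadCounts, incorrect: HalsteadCounts) -> float:
    """Absolute difference of implementation times (correcting may also remove code)."""
    return abs(implementation_time(correct) - implementation_time(incorrect))
```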

Table 11 shows the MI, Halstead's implementation times of the incorrect and the correct program codes, the TTC, as well as the relative difference (%) between the implementation time of the correct program code and the TTC for our 24 potentially correct program codes and their corresponding correct program codes. We observe that for 11 program codes the TTC is lower than the implementation time of the correct program code, i.e., correcting the incorrect program code takes less time than implementing the correct program code. With these 11 codes, between 8.85% and even 71.31% of time can be saved if the AI-generated program code is corrected rather than programmed from scratch.
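The reported saving can be read as the relative difference between the implementation time of the correct program code and the TTC. A small sketch of that arithmetic follows, assuming the difference is taken relative to the implementation time of the correct program code; the input values are hypothetical and not taken from Table 11.

```python
def time_saved_percent(t_correct: float, ttc: float) -> float:
    """Share of implementation time saved by correcting instead of writing from scratch.

    Positive only when ttc < t_correct; a negative value means correcting takes longer.
    """
    if t_correct <= 0:
        raise ValueError("t_correct must be positive")
    return (t_correct - ttc) / t_correct * 100


# Hypothetical values in seconds: writing from scratch 200 s, correcting 120 s
print(f"{time_saved_percent(200.0, 120.0):.2f}%")  # 40.00%
```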

5. Conclusions and Future Work

The fast development of AI raises questions about its impact on developers and development tools, since program code can be generated automatically with the help of generative AI. Consequently, our paper aimed to answer the following questions: How efficient is AI-generated program code in terms of computational resources? How understandable and maintainable is it for humans? To answer these questions, we analyzed the computational resources of AI- and human-generated program code using metrics such as time and space complexity as well as runtime and memory usage. Additionally, we evaluated the maintainability using metrics such as lines of code, cyclomatic complexity, Halstead complexity and maintainability index.

In our experiments, we used the generative AIs ChatGPT (GPT-3.5), Bing AI Chat (GPT-4.0), GH Copilot (GPT-3.0), StarCoder (StarCoderBase), Code Llama (Llama 2), CodeWhisperer, and InstructCodeT5+ (CodeT5+) to generate program code in Java, Python, and C++. The generated program code aimed to solve problems specified on the coding platform leetcode.com. We chose six LeetCode problems of varying difficulty, resulting in 18 program codes per generative AI (six problems in three programming languages). GH Copilot outperformed the others by solving 9 out of 18 coding problems (50.0%), while CodeWhisperer failed to solve any coding problem. Bing AI Chat provided correct program code for seven coding problems (38.9%), while ChatGPT and Code Llama were each successful in four coding problems (22.2%). StarCoder and InstructCodeT5+ each solved only one coding problem (5.6%). GH Copilot excelled in addressing our Java and C++ coding problems, while Bing AI Chat demonstrated superior performance in resolving Python coding problems. Surprisingly, although ChatGPT produced only four correct program codes, it was the only model that delivered a correct solution to a coding problem with difficulty level hard. This unexpected performance should be investigated further, for example by assessing pass@k or additional coding problems with difficulty level hard.

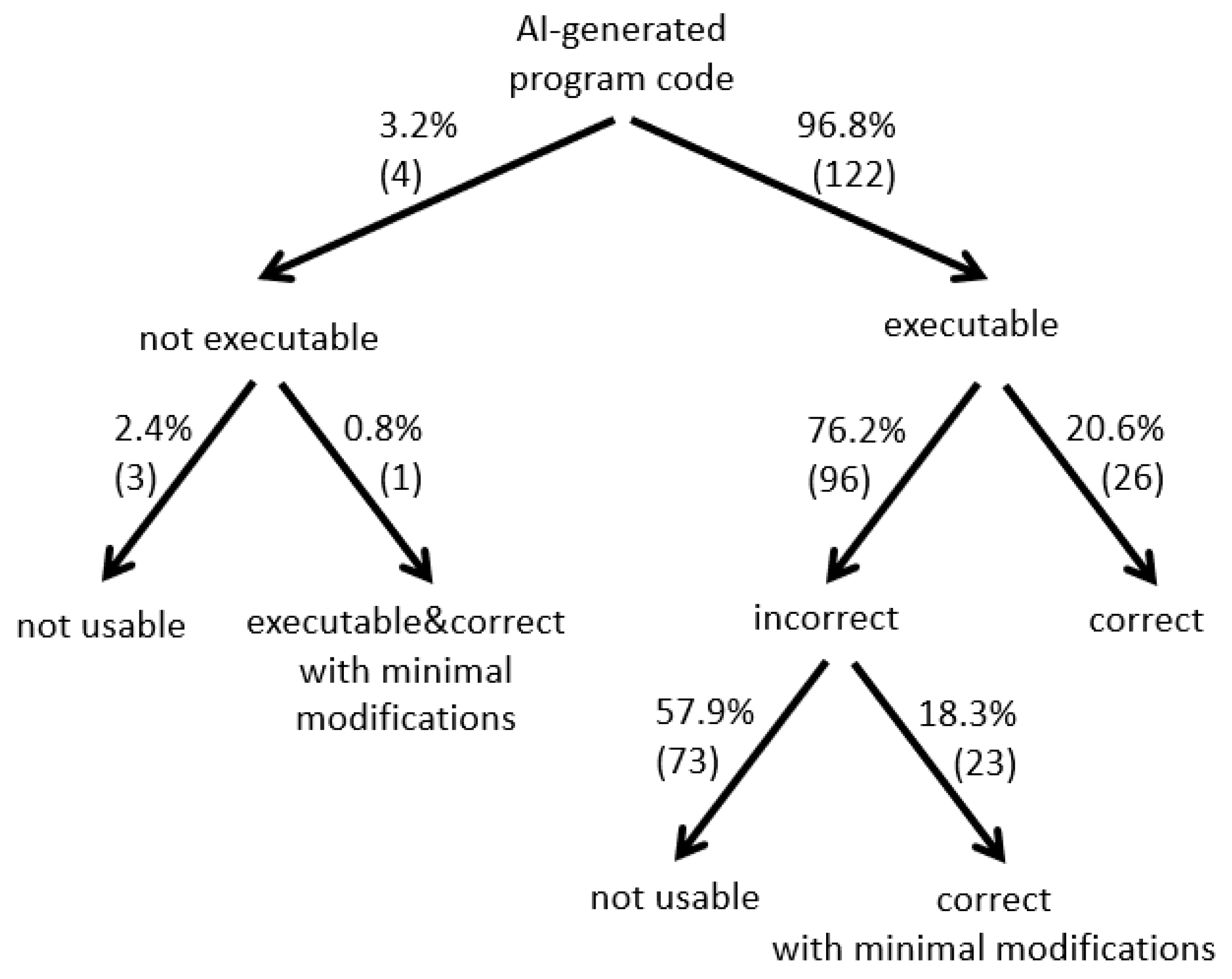

Figure 4 illustrates, in a tree structure, an overview of all 126 AI-generated program codes: 122 executable (96.8%), 4 not executable (3.2%), 26 correct (20.6%), 96 incorrect (76.2%) and 76 not usable (57.9% + 2.4%) program codes, as well as the 24 program codes that can be made correct with minimal modifications (18.3% + 0.8%).
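The percentages in Figure 4 are shares of all 126 AI-generated program codes. The sketch below checks that the tree's counts add up; the split of the not usable and fixable codes into 73 + 3 and 23 + 1 is derived by us from the reported percentages (e.g., 57.9% of 126 ≈ 73) rather than stated explicitly.

```python
TOTAL = 126  # 7 generative AIs x 6 tasks x 3 languages

# Node counts of the Figure 4 tree; the leaf split (73/3, 23/1) is derived
# from the reported percentages.
tree = {
    "executable": 122,
    "not executable": 4,
    "correct": 26,
    "incorrect": 96,
    "incorrect, not usable": 73,
    "incorrect, fixable": 23,
    "not executable, not usable": 3,
    "not executable, fixable": 1,
}


def pct(count: int) -> float:
    """Share of all AI-generated program codes, rounded to one decimal."""
    return round(100 * count / TOTAL, 1)


# Consistency checks for the tree structure
assert tree["executable"] + tree["not executable"] == TOTAL
assert tree["correct"] + tree["incorrect"] == tree["executable"]
assert tree["incorrect, not usable"] + tree["incorrect, fixable"] == tree["incorrect"]
assert tree["not executable, not usable"] + tree["not executable, fixable"] == tree["not executable"]

for node, count in tree.items():
    print(f"{node}: {count} ({pct(count)}%)")
```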

To summarize: We have shown that different state-of-the-art generative AIs perform differently in program code generation depending on the programming language and coding problem. Our experiments demonstrated that we still seem to be a long way from a generative AI that delivers correct, efficient and maintainable program code in every case. However, we have learned that AI-generated program code can have the potential to speed up programming even if it is incorrect, because often only minor modifications are needed to make it correct. For a quick and detailed evaluation of the generated program codes, we used different evaluation metrics and introduced TTC, an estimate of the time to correct incorrect program code.

In future work, we plan to analyze the quality of AI-generated program code in other programming languages. For that, we will expand our AI/Human-Generated Program Code Dataset to cover further programming languages and coding problems. To have a fair comparison among the generative AIs, we applied a prompt engineering strategy that is applicable to a wide range of generative AIs. However, in future work, we plan to investigate the optimal prompting approach for each generative AI individually. Furthermore, we are interested in investigating whether the interaction of different chatbots leveraging different generative AIs helps to improve the final program code quality. For example, as in a human programming team, the generative AIs could take on different roles, e.g., a chatbot that develops the software architecture, a chatbot that is responsible for testing, a chatbot that generates the code, or different all-rounders that interact with each other. Since many related works report pass@k, we could also have the program codes generated several times and report pass@k for comparability. Since generative AIs develop rapidly, it makes sense to repeat our experiments with new generative AIs soon. In this work, we estimated the time for writing and correcting program code based on Halstead metrics; a comparison with the actual time required by a representative set of programmers may also be part of future work. We have provided insights into how state-of-the-art generative AIs deal with specific coding problems. Moving forward, it would be beneficial to comprehensively investigate how generative AIs handle the generation of more complex programs or even complete software solutions. This could include an analysis of their ability not only to generate intricate algorithms, but also to manage large codebases, use frameworks and libraries in reasonable contexts, and take best practices of software engineering into account.
Additionally, it would be interesting to explore how generative AIs could be integrated into existing software development workflows, and whether they could contribute to increased efficiency and productivity.