Enhanced Curvature-Based Fabric Defect Detection: A Experimental Study with Gabor Transform and Deep Learning

Abstract

1. Introduction

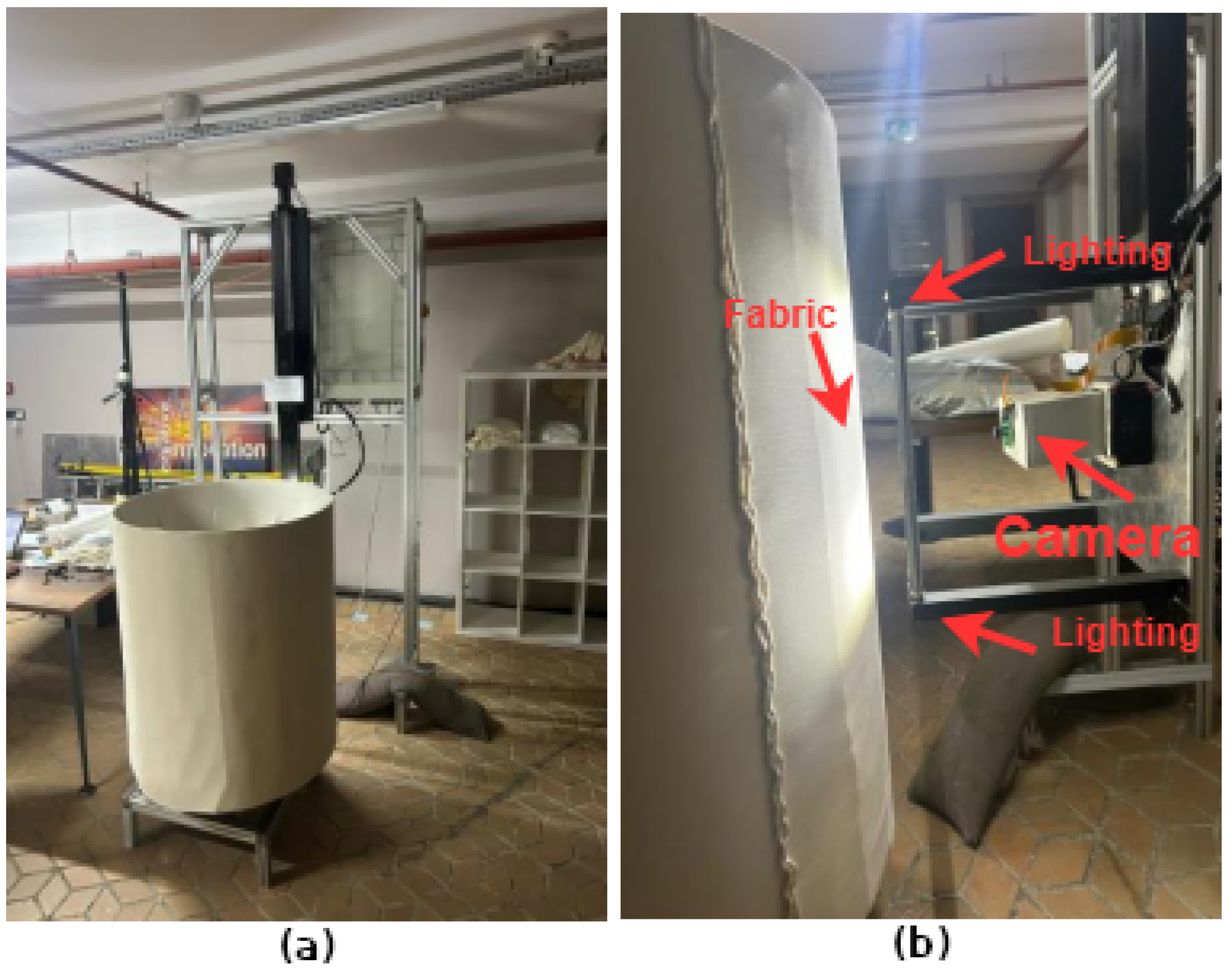

2. Hardware-in-the-Loop (HIL) Implementation

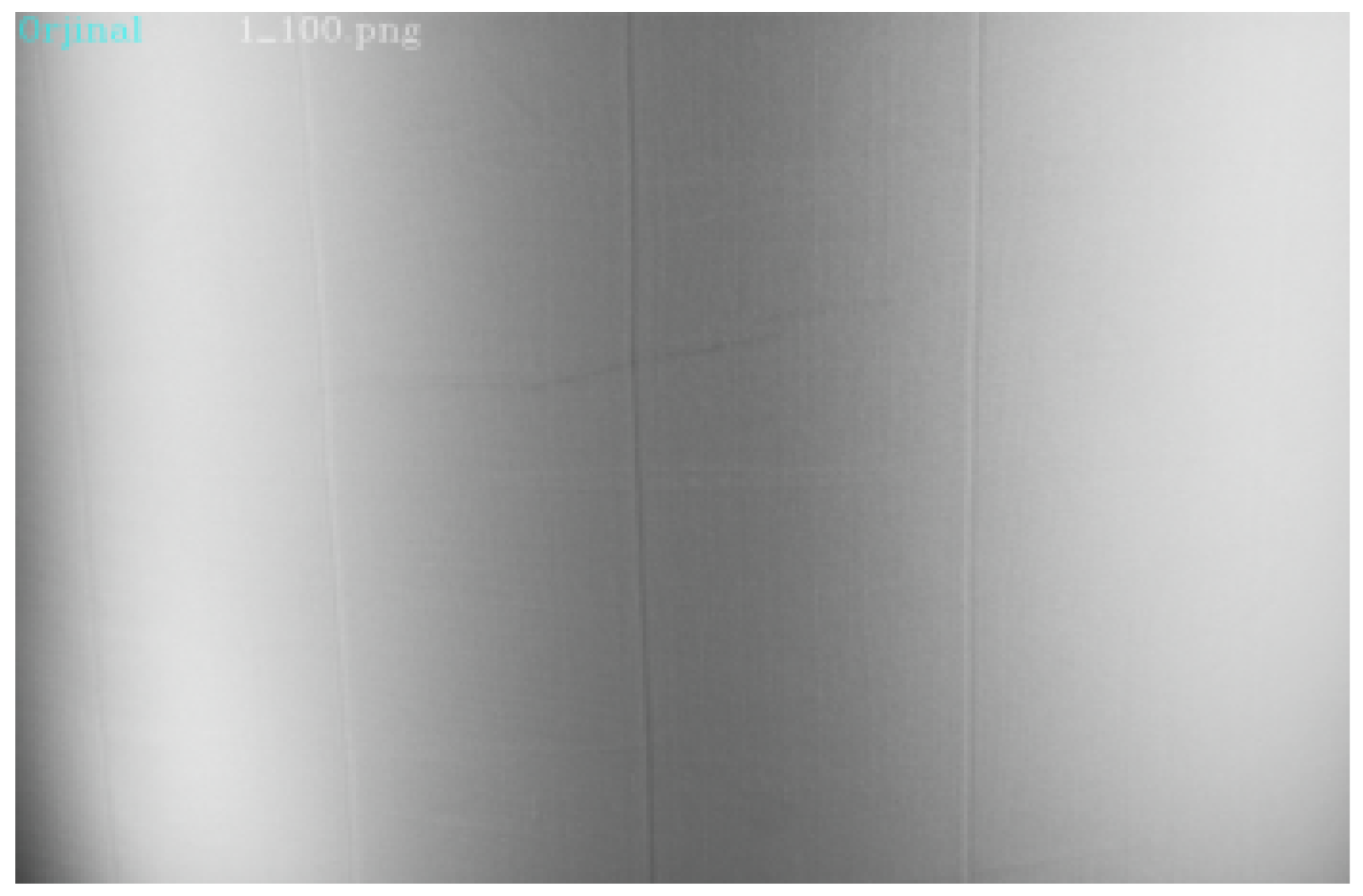

2.1. Data Acquisition

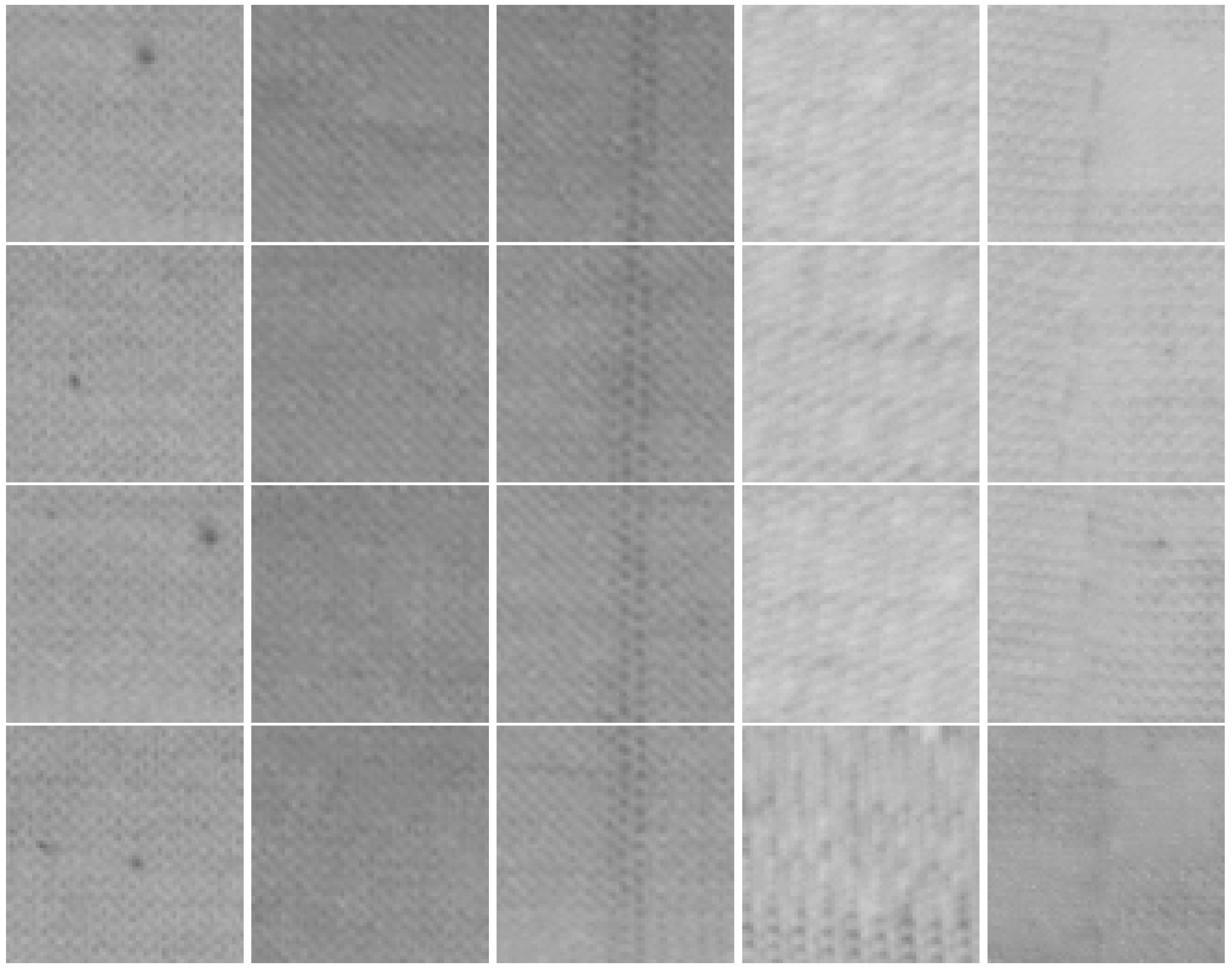

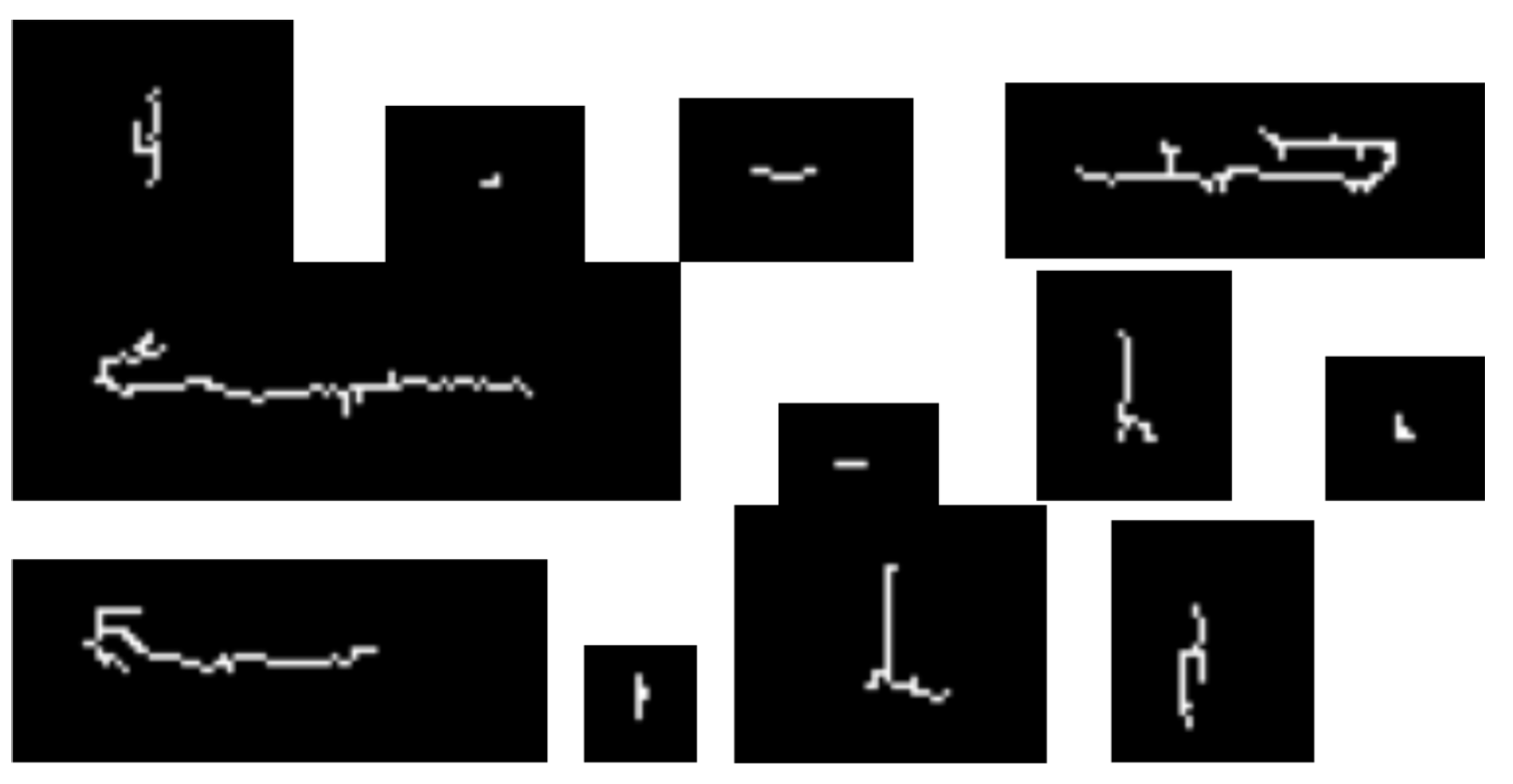

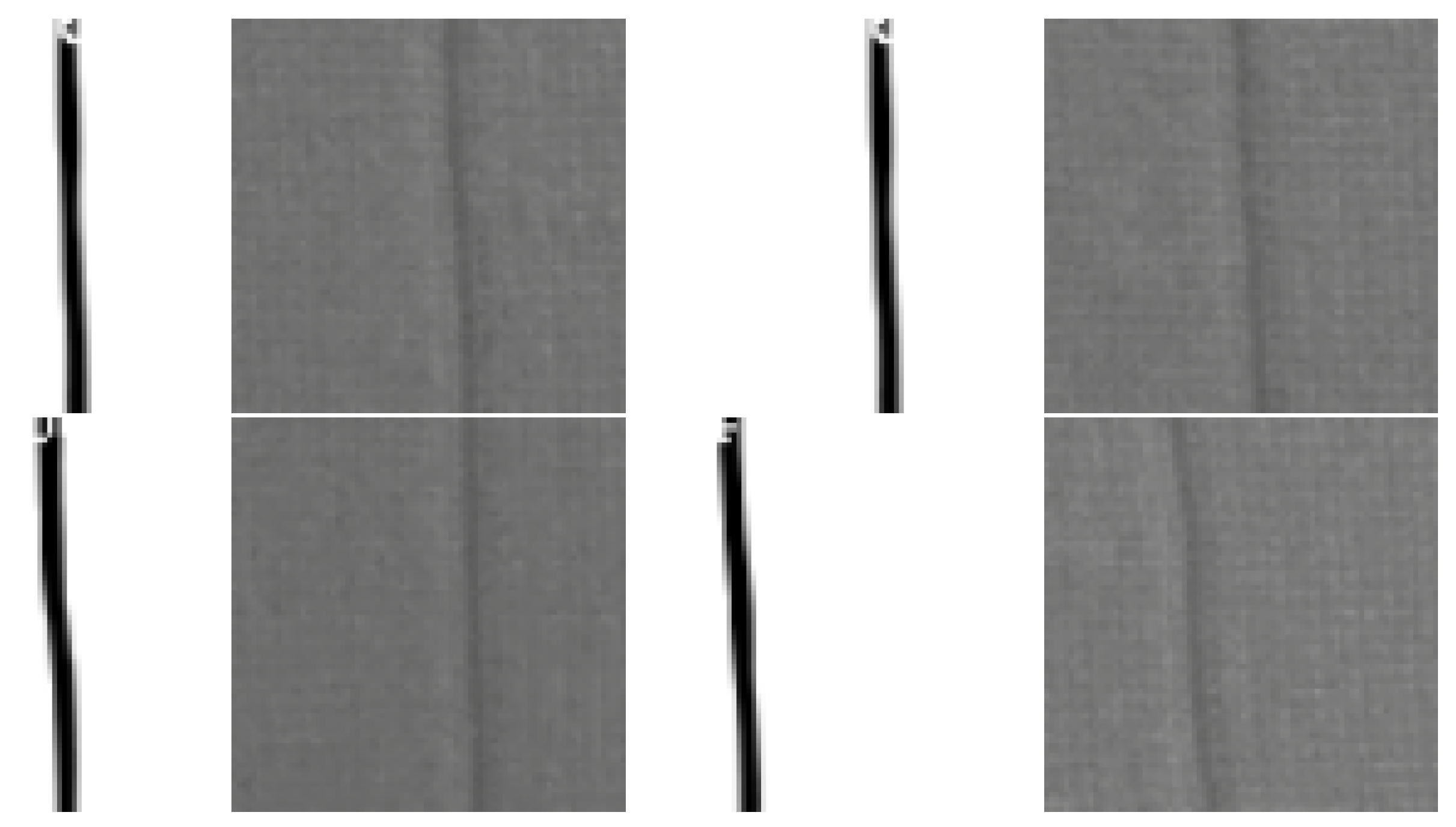

2.2. Dataset Preparation

3. Curvature Algorithm

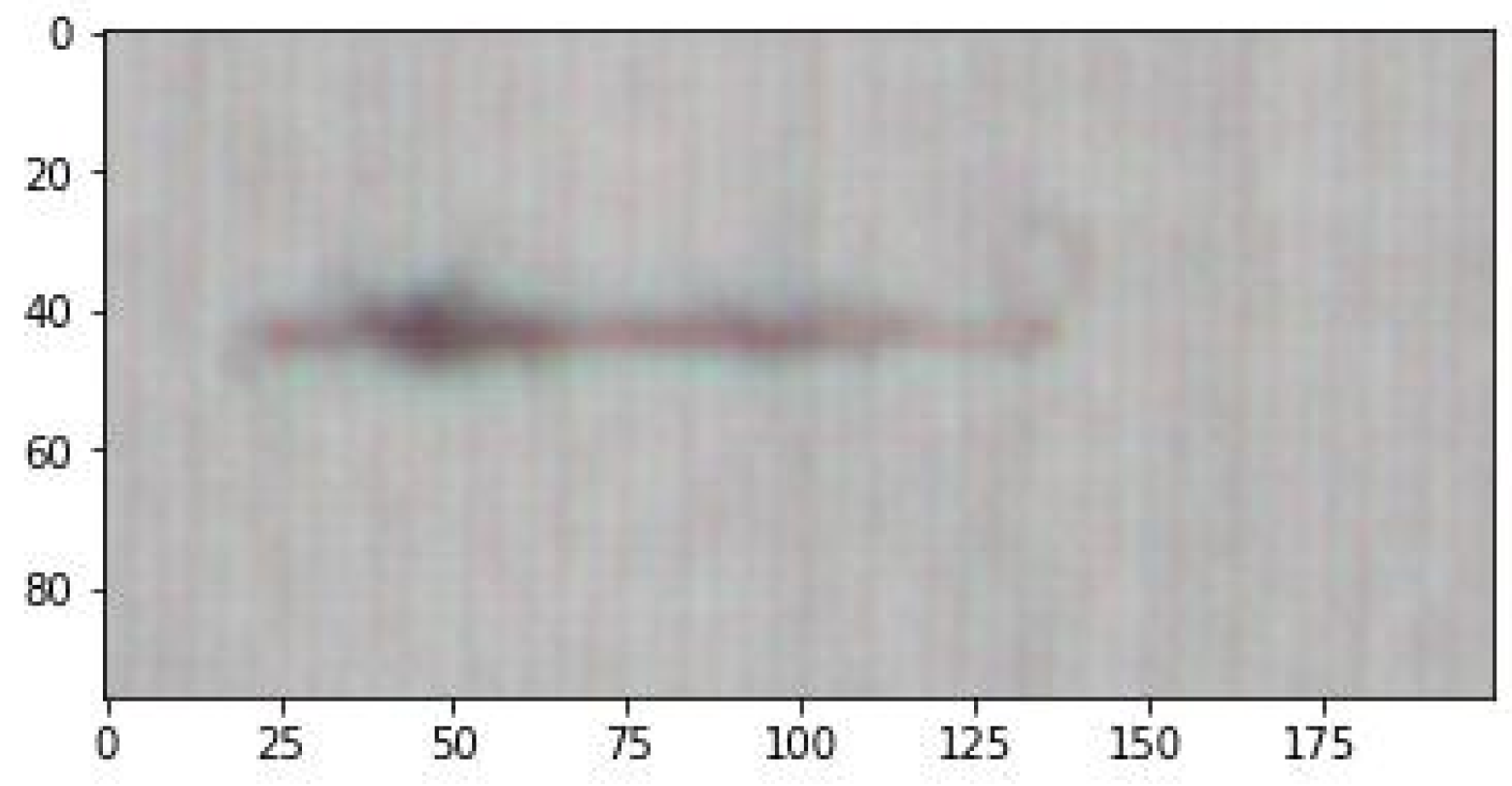

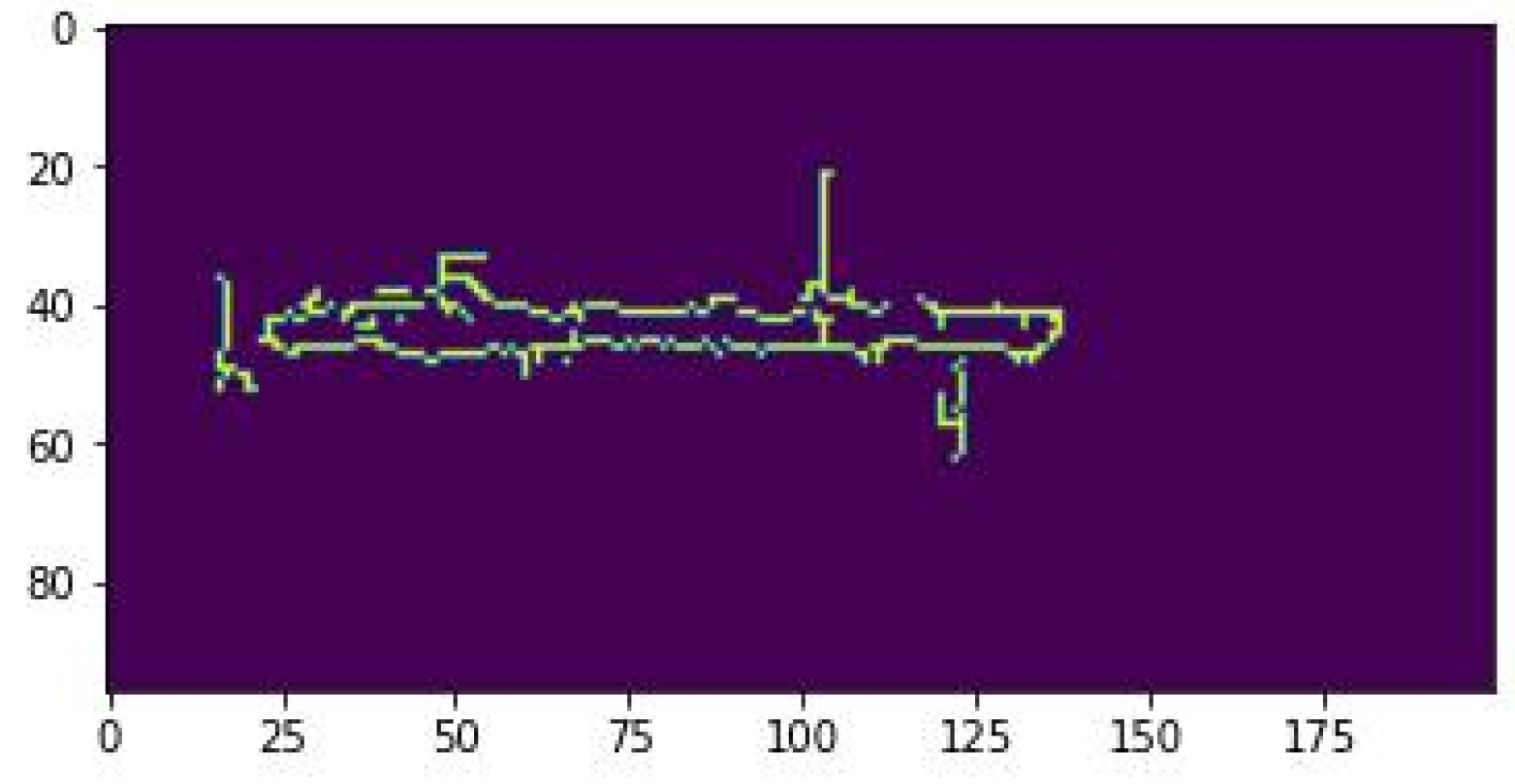

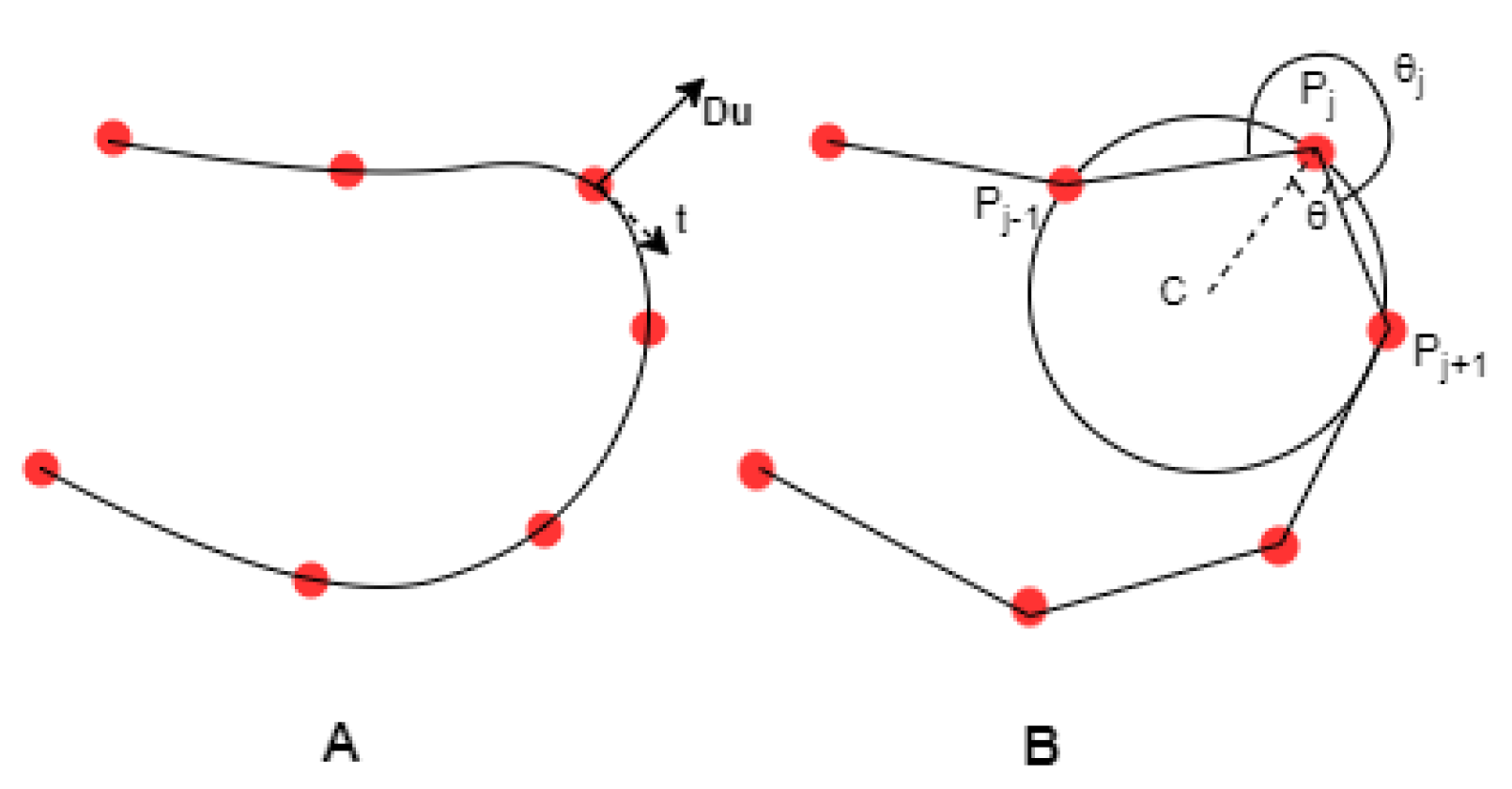

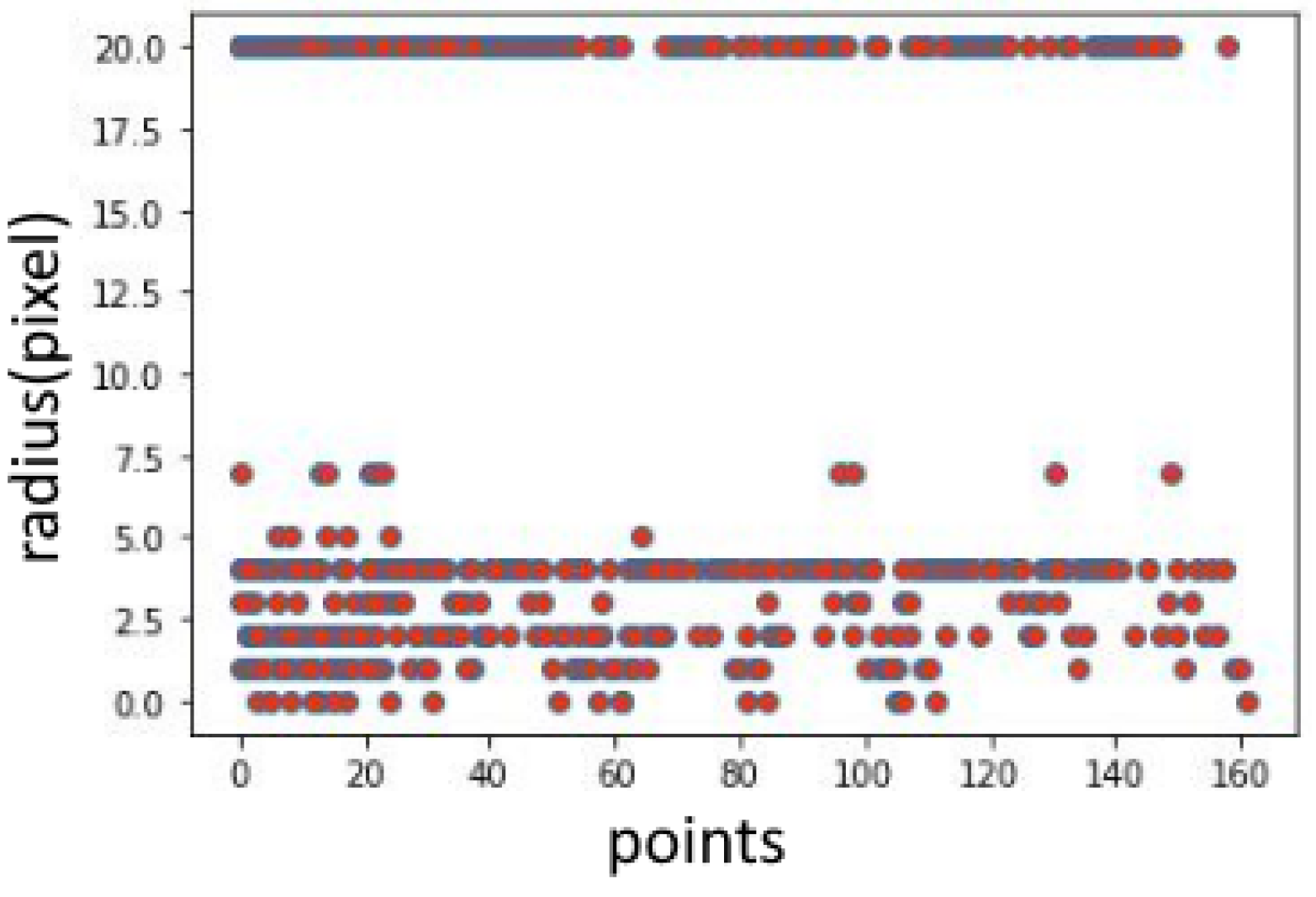

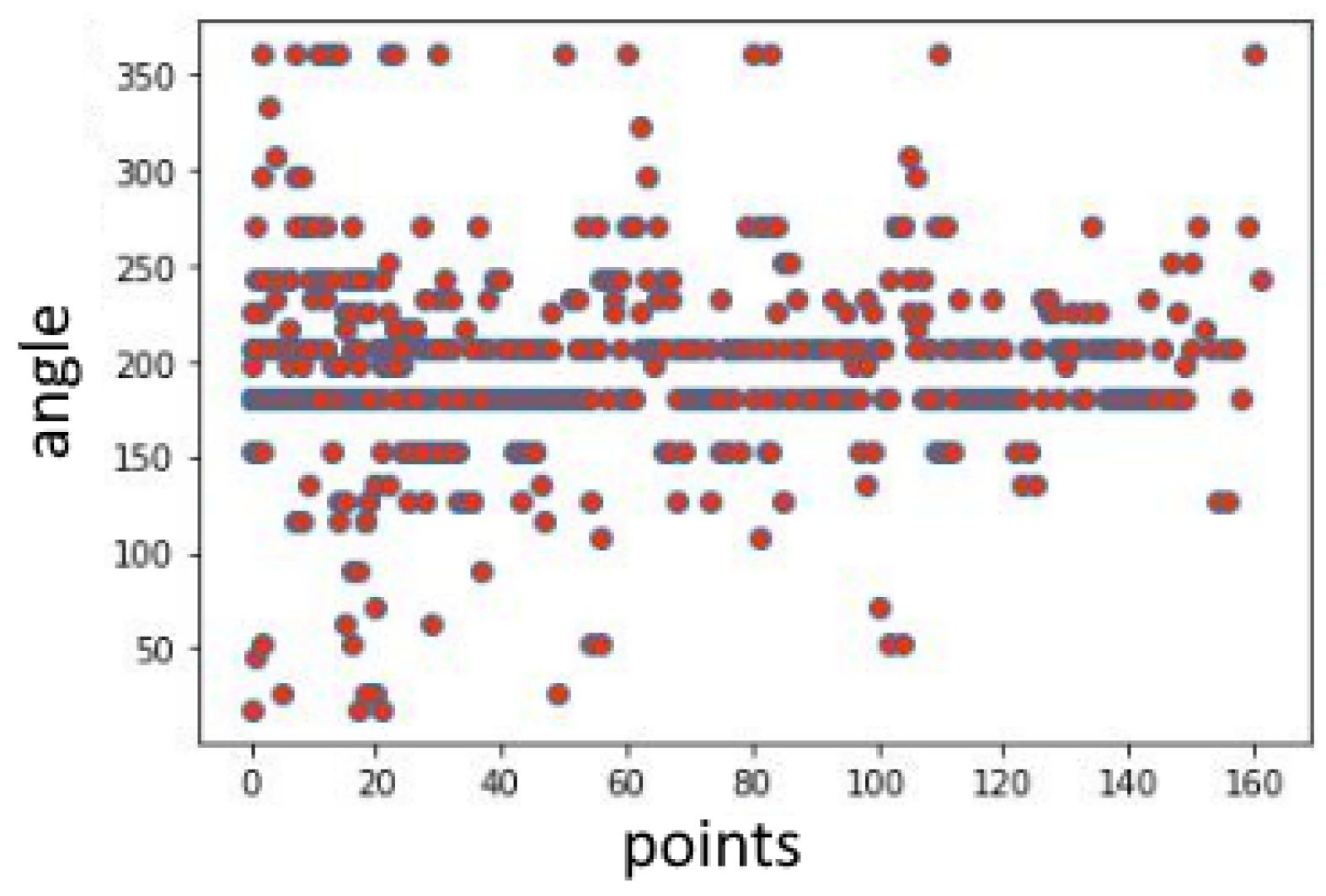

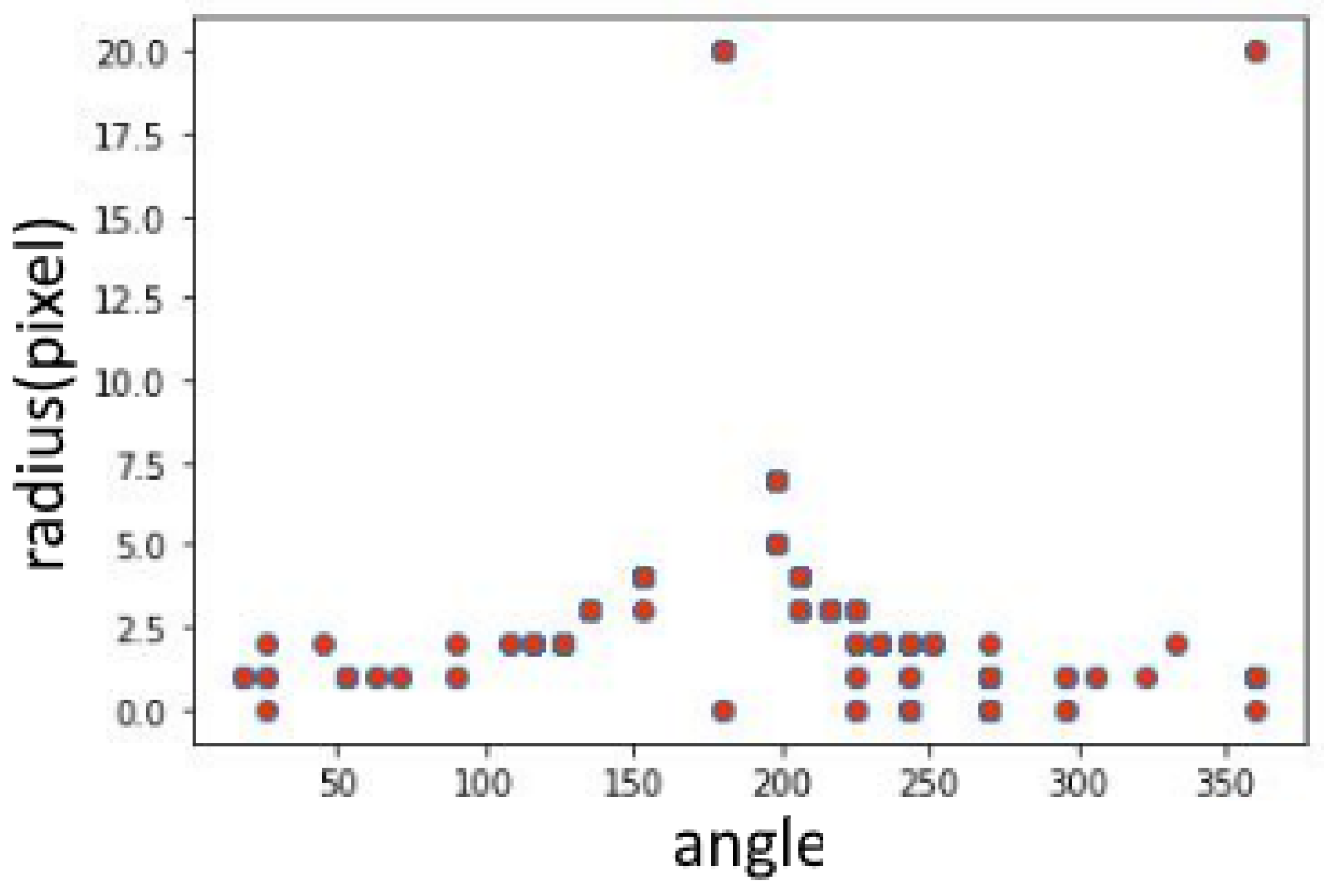

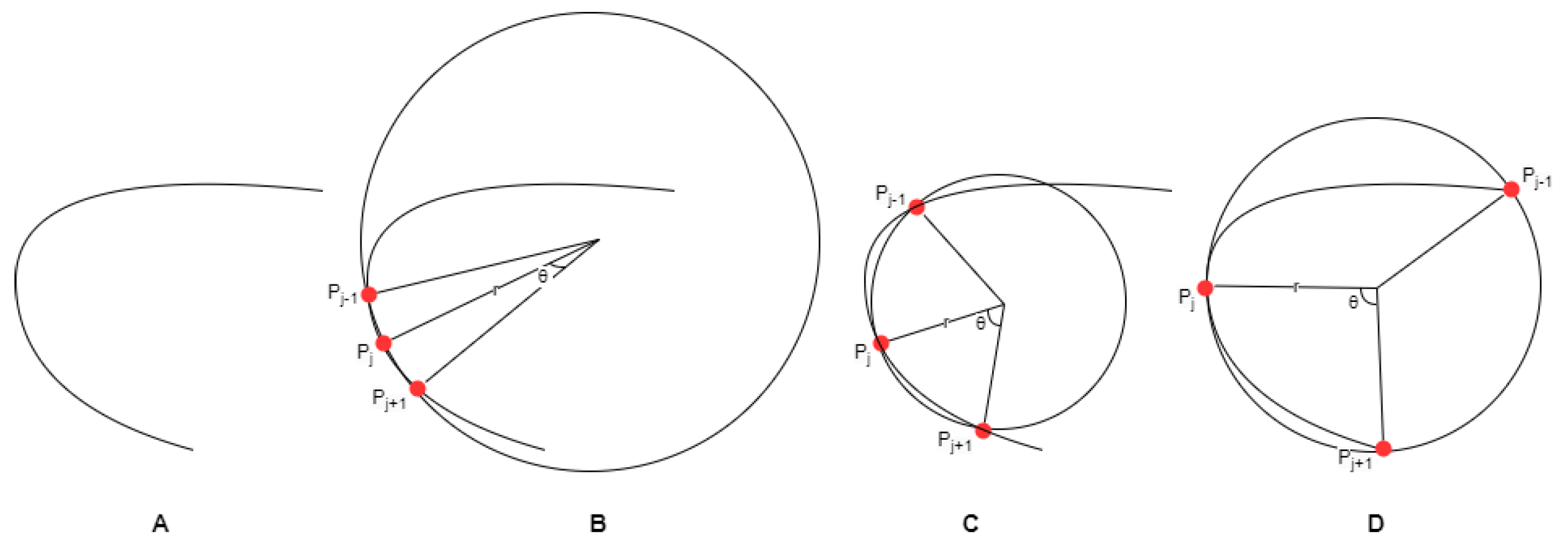

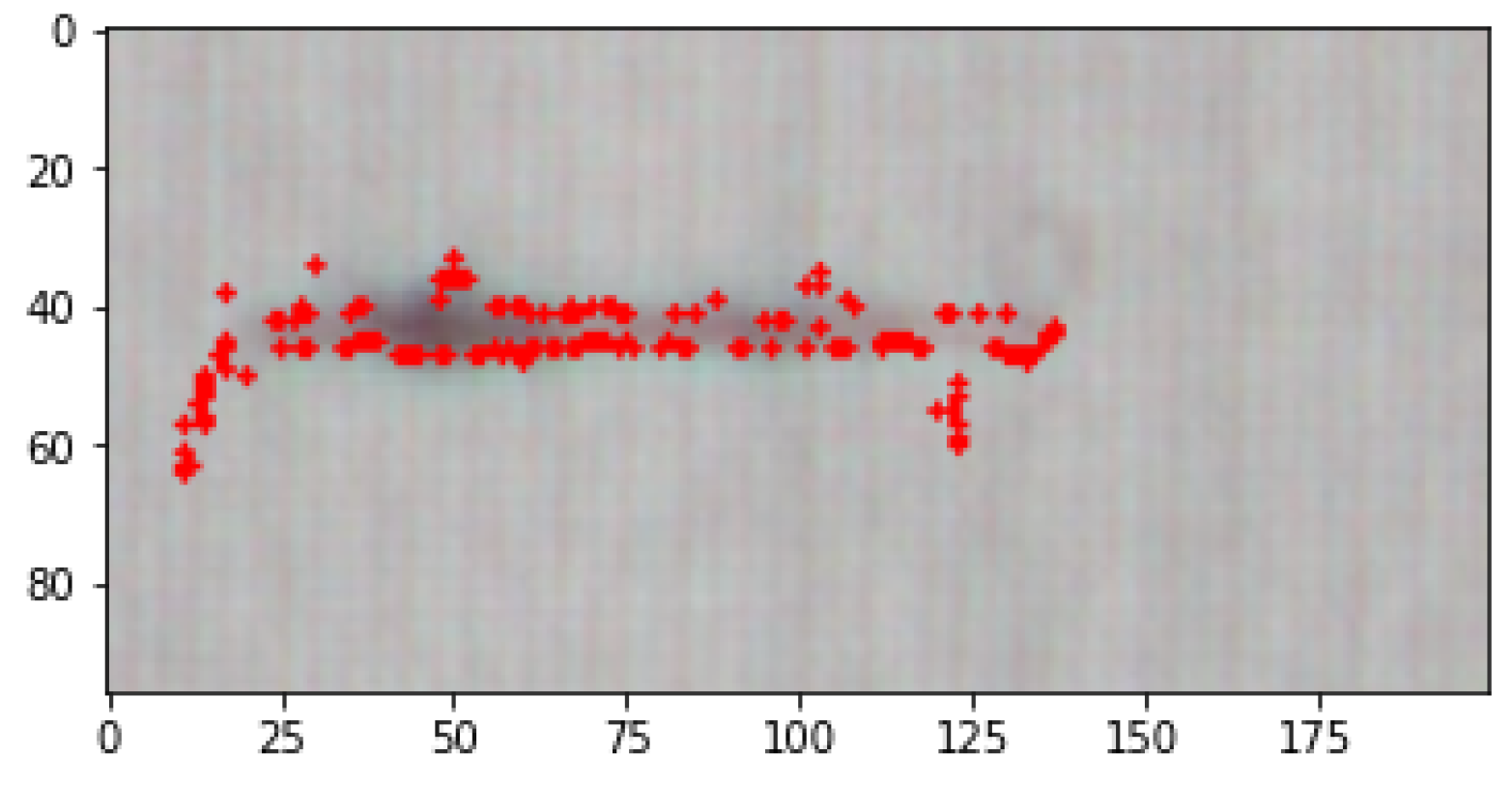

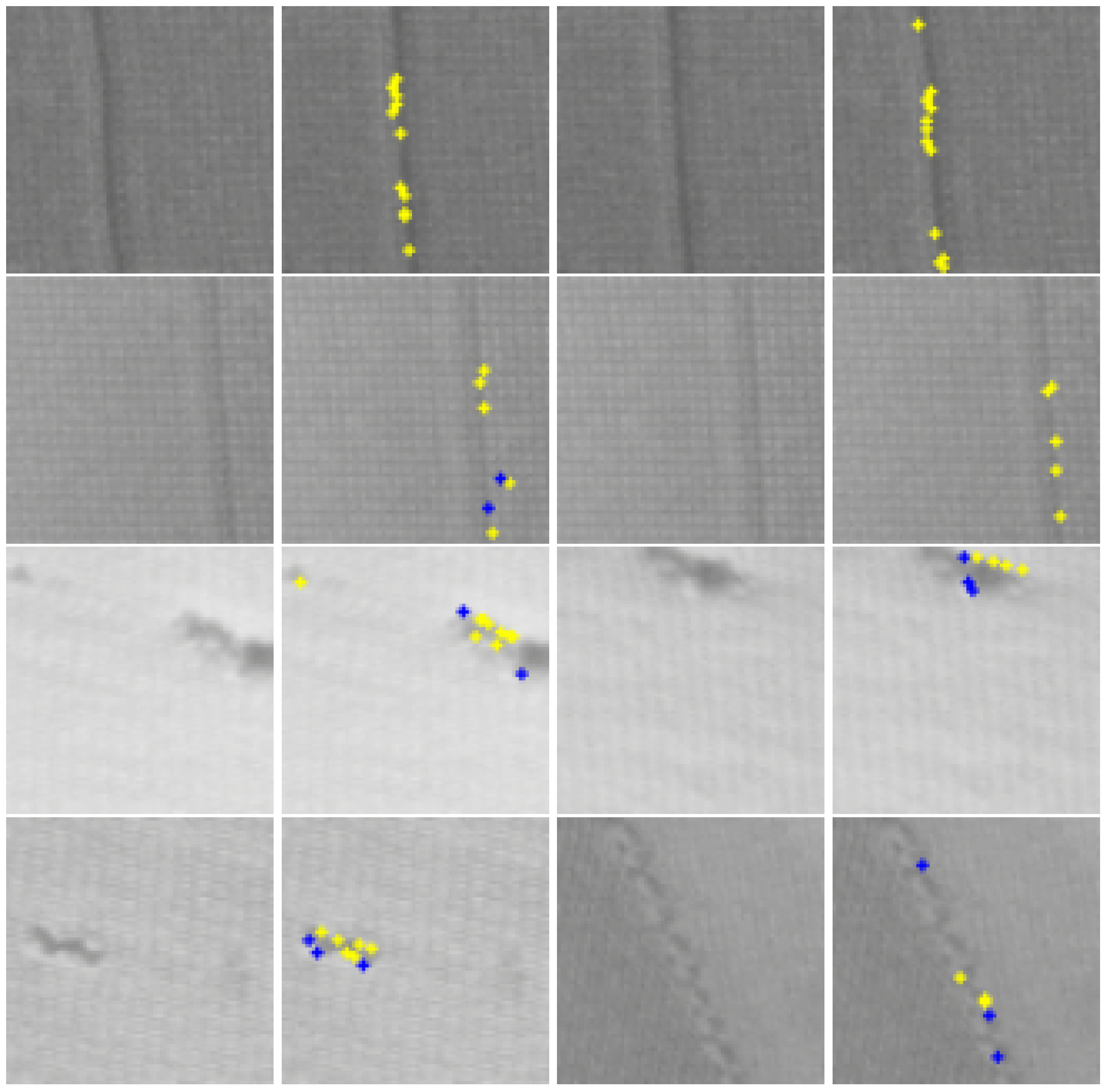

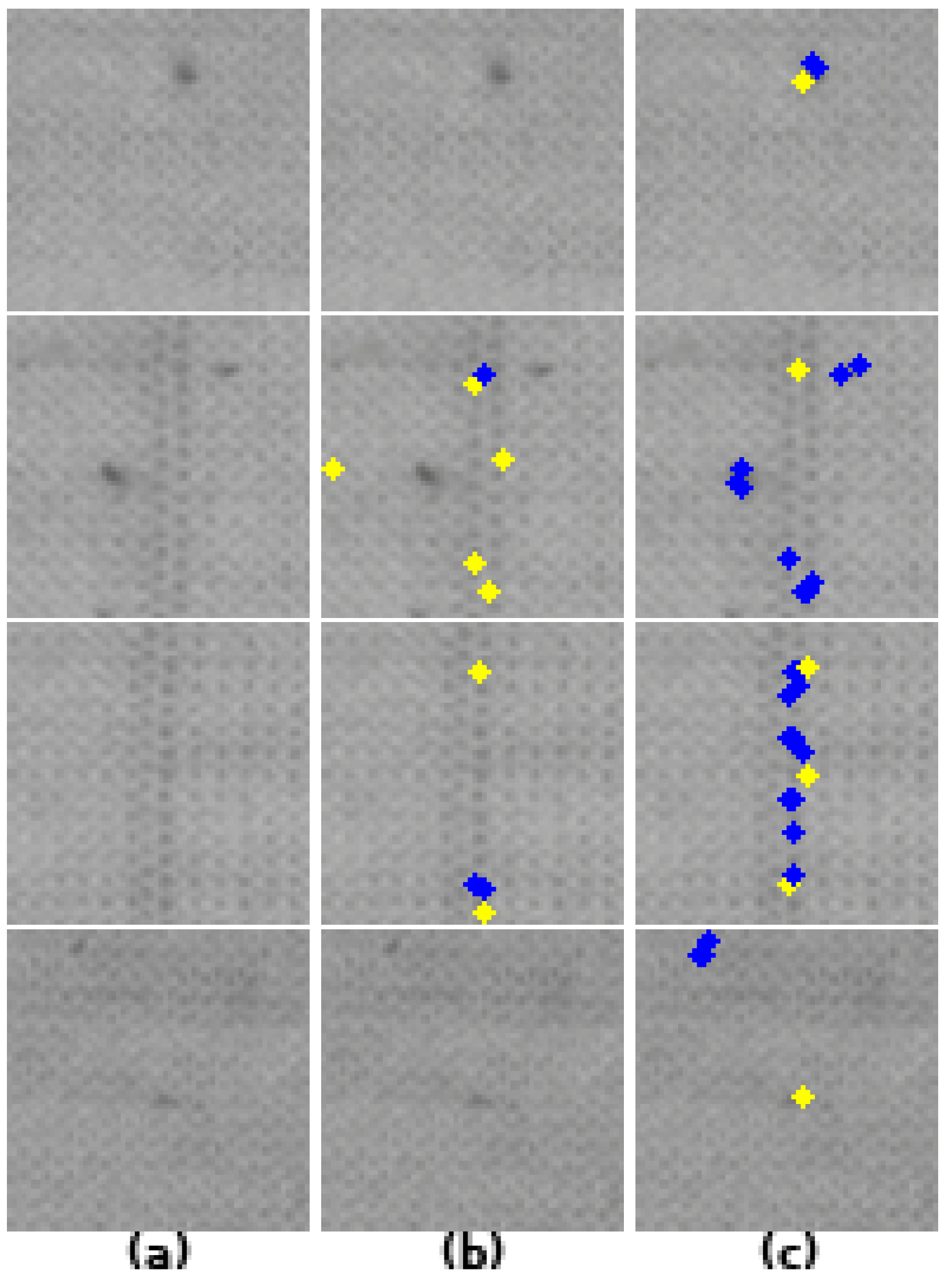

3.1. Discrete Curvature Algorithm
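For reference, the curvature of a smooth plane curve $(x(t), y(t))$ and a common discrete estimate of it on a sampled closed contour $p_0, \dots, p_{N-1}$ are given below. This is the textbook definition, shown under the assumption that a turning-angle estimator with neighbour offset $k$ is representative; it is not necessarily the exact estimator used in this work.

$$\kappa(t) = \frac{x'(t)\,y''(t) - y'(t)\,x''(t)}{\left(x'(t)^2 + y'(t)^2\right)^{3/2}}$$

$$u_i = p_i - p_{i-k}, \qquad v_i = p_{i+k} - p_i, \qquad \theta_i = \operatorname{atan2}\!\left(u_i \times v_i,\; u_i \cdot v_i\right), \qquad \kappa_i \approx \frac{2\,\theta_i}{\lVert u_i \rVert + \lVert v_i \rVert}$$

Here $u_i \times v_i = u_{i,x} v_{i,y} - u_{i,y} v_{i,x}$ is the scalar 2-D cross product and indices wrap around the closed contour. For a counter-clockwise contour, $\theta_i > 0$ marks a convex point and $\theta_i < 0$ a concave one. As a check, for a circle of radius $R$ sampled at $N$ points with $k = 1$, $\theta_i = 2\pi/N$ and $\lVert u_i \rVert = \lVert v_i \rVert = 2R\sin(\pi/N) \approx 2\pi R/N$, so $\kappa_i \approx 1/R$.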

3.2. Modified Discrete Curvature Algorithm

| Algorithm 1 Modified Discrete Curvature Algorithm | |
|---|---|
| Require: image | |
| if Filter selection type then | |
| end if | |
| ▹ finding contours | |
| while do | |
| ▹ return(,,,) | |
| end while | |
| while do | |
| if then | ▹ Checking convex or concave |
| else if then | |
| else if then | |
| end if | |
| dircurv is changed: append values to the | |
| end while | |
| while do | |
| if is between then | |
| defect type is hole or oil | |
| end if | |
| if is between then | |
| defect type is vertical or horizontal | |
| end if | |
| end while | |
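Algorithm 1 leaves its loop conditions and ranges unspecified. The sketch below illustrates one way its three loops could be realised with NumPy, assuming the contour has already been extracted as an ordered `(N, 2)` point array (for example with OpenCV's `cv2.findContours`, which implements the Suzuki and Abe border following cited in the references). The turning-angle curvature, the neighbour offset `k = 3`, the flat tolerance of 0.05 rad and the "curved share" range that separates hole/oil from line-type defects are all hypothetical stand-ins for the elided values, not the authors' parameters.

```python
import numpy as np

def discrete_curvature(contour: np.ndarray, k: int = 3) -> np.ndarray:
    """Signed turning angle (rad) at every point of a closed contour.

    contour: (N, 2) array of (x, y) points in traversal order.
    k: neighbour offset (assumed); a larger k suppresses pixel-staircase noise.
    """
    pts = contour.astype(float)
    v_in = pts - np.roll(pts, k, axis=0)     # p[i] - p[i-k]
    v_out = np.roll(pts, -k, axis=0) - pts   # p[i+k] - p[i]
    cross = v_in[:, 0] * v_out[:, 1] - v_in[:, 1] * v_out[:, 0]
    dot = np.sum(v_in * v_out, axis=1)
    return np.arctan2(cross, dot)            # > 0 left turn, < 0 right turn

def curvature_direction(curv: np.ndarray, flat_tol: float = 0.05) -> np.ndarray:
    """+1 convex, -1 concave, 0 flat (for a counter-clockwise contour).
    flat_tol is a hypothetical tolerance in radians."""
    return np.where(curv > flat_tol, 1, np.where(curv < -flat_tol, -1, 0))

def direction_changes(direction: np.ndarray) -> list:
    """Indices where the non-flat curvature direction ("dircurv") flips sign."""
    idx = np.flatnonzero(direction)
    return [int(j) for i, j in zip(idx[:-1], idx[1:]) if direction[i] != direction[j]]

def classify_defect(contour: np.ndarray, blob_range=(0.6, 1.0)) -> str:
    """Hypothetical rule: round blobs are curved almost everywhere,
    thin line defects only near their two ends."""
    direction = curvature_direction(discrete_curvature(contour))
    curved_share = float(np.mean(direction != 0))
    if blob_range[0] <= curved_share <= blob_range[1]:
        return "hole or oil"
    return "vertical or horizontal"
```

The curved-share rule encodes the intuition that a round blob bends at nearly every contour point, whereas a thin vertical or horizontal flaw is straight except at its ends; `direction_changes` records where convex and concave runs meet, which a fuller implementation could feed into further range checks.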

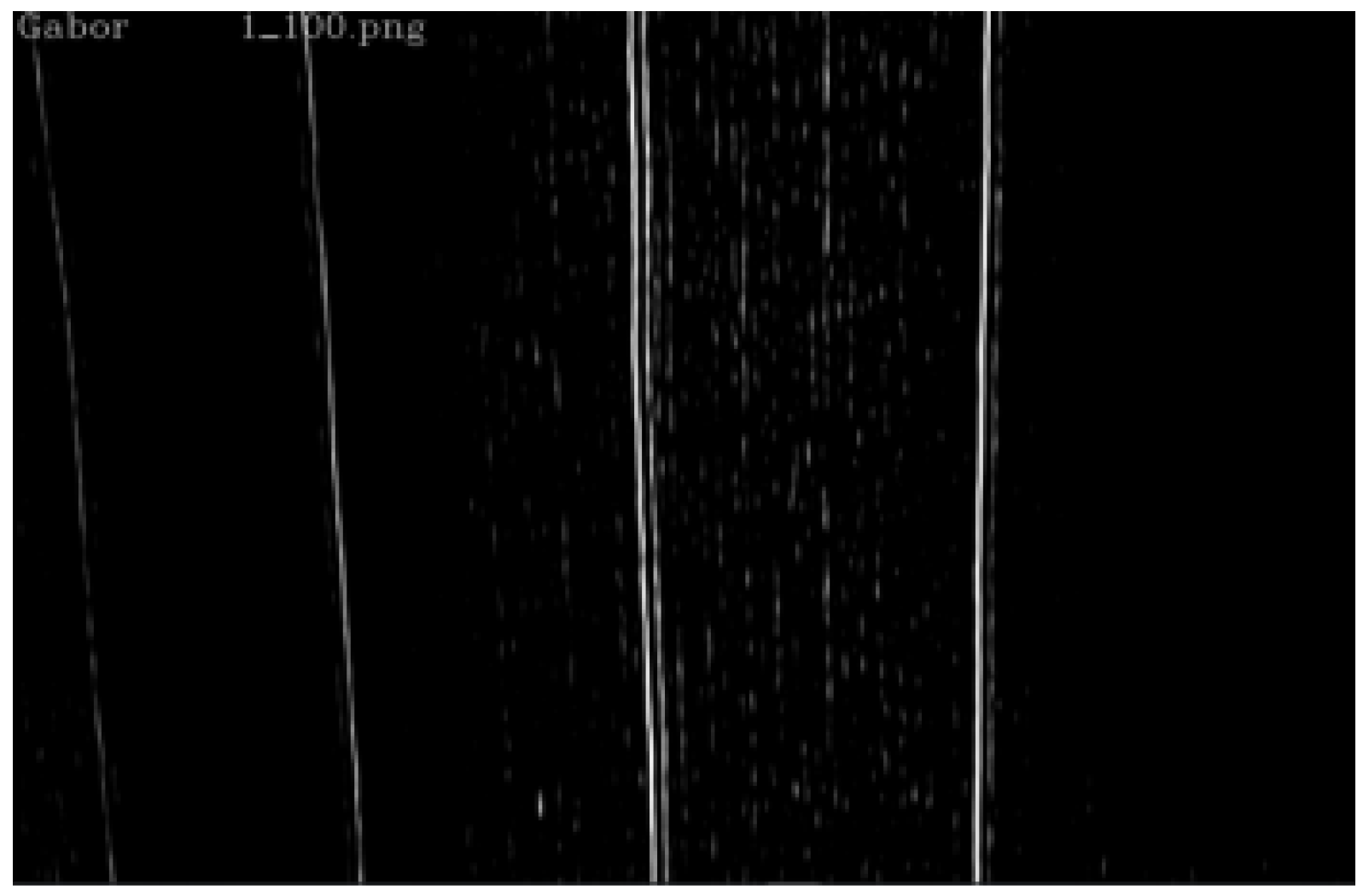

4. Gabor Transform-Based Approach

| Algorithm 2 Gabor Transform Algorithm | |
|---|---|
| Require: image | |
| if Filter selection type then | |
| end if | |
| ▹ Gabor Transform Image | |
| while do | |
| Sum the energy density of all pixels. | ▹ Calculate the energy density |
| end while | |
| if Energy density ≥ Threshold Energy then | |
| Image is defective | |
| end if | |
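Algorithm 2 does not state the Gabor parameters, the exact definition of energy density or the threshold. The NumPy sketch below assumes a single complex Gabor kernel, defines energy density as the mean squared magnitude of the filter response, and applies the "≥ threshold" test from the algorithm. The kernel parameters (`sigma`, `theta`, `wavelength`, `gamma`, `size`), the mean subtraction and any threshold value are hypothetical choices; a practical system could instead use a bank of orientations and scales, as in the Gabor texture literature cited.

```python
import numpy as np

def gabor_kernel(sigma=3.0, theta=0.0, wavelength=8.0, gamma=0.5, size=21):
    """Complex 2-D Gabor kernel: Gaussian envelope times a complex sinusoid.
    theta = 0 oscillates along x, so it responds to vertical structures.
    All default parameter values are assumptions for illustration."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_r = x * np.cos(theta) + y * np.sin(theta)    # rotated coordinates
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r**2 + (gamma * y_r)**2) / (2 * sigma**2))
    carrier = np.exp(1j * 2 * np.pi * x_r / wavelength)
    return envelope * carrier

def gabor_energy_density(image, kernel):
    """Mean squared magnitude of the full linear convolution, computed via FFT."""
    img = image.astype(float) - image.mean()       # remove the DC component
    shape = (img.shape[0] + kernel.shape[0] - 1, img.shape[1] + kernel.shape[1] - 1)
    response = np.fft.ifft2(np.fft.fft2(img, shape) * np.fft.fft2(kernel, shape))
    return float(np.mean(np.abs(response) ** 2))

def is_defective(image, threshold, kernel=None):
    """Final test of Algorithm 2: energy density >= threshold means defective."""
    k = gabor_kernel() if kernel is None else kernel
    return gabor_energy_density(image, k) >= threshold
```

Subtracting the image mean removes the DC term, so a perfectly uniform patch yields zero energy, while structure matching the kernel's orientation and wavelength raises it. The threshold would normally be calibrated on defect-free fabric samples.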

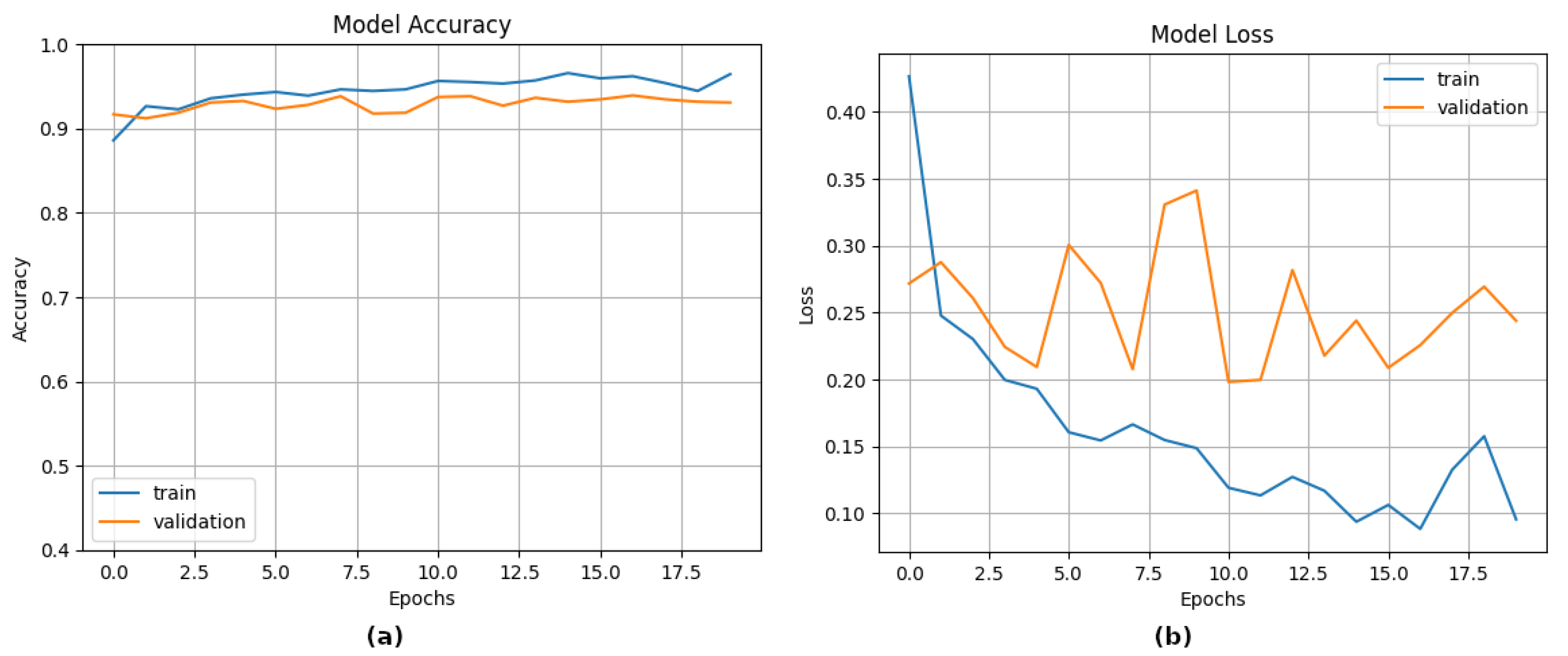

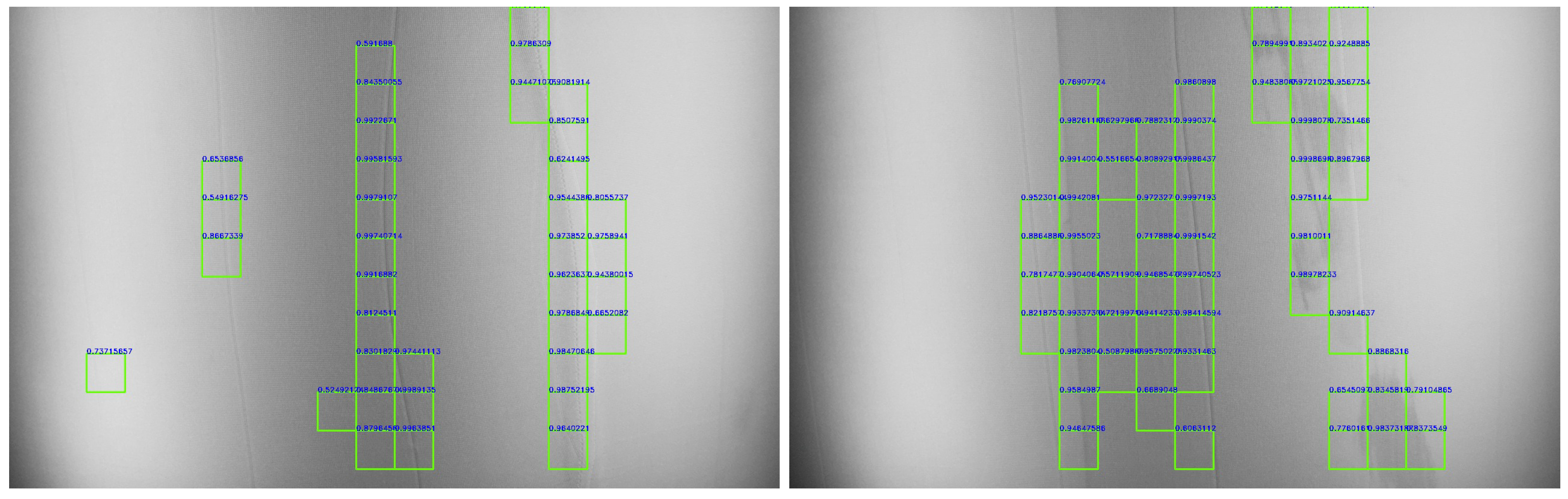

5. Ablation Study on Deep Learning-Based Defect Detection

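Algorithm 3 Deep Learning-Based Algorithm

Require: images of every defect type, sorted into one directory per class name, and an image to classify.

```python
import tensorflow as tf
from tensorflow.keras import Sequential
from tensorflow.keras.layers import Dense, Flatten

IMG_SIZE = (224, 224)  # assumed input resolution
preprocess = tf.keras.applications.resnet50.preprocess_input

# Preparing the data: one sub-directory per class (hypothetical path);
# integer labels are inferred from the sub-directory names.
train_ds = tf.keras.utils.image_dataset_from_directory(
    "dataset/train", image_size=IMG_SIZE, batch_size=32)
train_ds = train_ds.map(lambda x, y: (preprocess(x), y))

# Training the model: "X" is the pre-trained backbone under comparison;
# ResNet50 is only a stand-in here.
pretrained_model = tf.keras.applications.ResNet50(
    include_top=False, weights="imagenet", input_shape=IMG_SIZE + (3,))
pretrained_model.trainable = False  # freeze; unfreeze selected layers to fine-tune
model = Sequential()
model.add(pretrained_model)
model.add(Flatten())
model.add(Dense(512, activation="relu"))
model.add(Dense(5, activation="softmax"))  # one output per defect class
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])  # optimizer and loss are assumptions
history = model.fit(train_ds, epochs=10)  # epoch count is an assumption

# Making predictions on a single image (hypothetical file name)
img = tf.keras.utils.load_img("sample.png", target_size=IMG_SIZE)
image = preprocess(tf.expand_dims(tf.keras.utils.img_to_array(img), 0))
pred = model.predict(image)
print(pred)  # class probabilities, in alphanumeric order of the class sub-directories
```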

6. Results and Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| CA | Curvature Algorithm |
|---|---|
| MDCA | Modified Discrete Curvature Algorithm |
| GT | Gabor Transform |
| CNN | Convolutional Neural Network |

References

- Hanbay, K.; Talu, M.F.; Özgüven, Ö.F. Fabric defect detection systems and methods—A systematic literature review. Optik 2016, 127, 11960–11973.
- Kumar, A. 3-Computer vision-based fabric defect analysis and measurement. In Computer Technology for Textiles and Apparel; Hu, J., Ed.; Woodhead Publishing: Cambridge, UK, 2011; pp. 45–65.
- Harwood, J.; Davis, G.; Wolfe, P. Automated Fabric Inspection Systems: Evaluation of Commercial Products. Text. Res. J. 2003, 73, 702–709.
- Tsai, D.M.; Hsieh, C.Y. Automated Surface Inspection Using Gabor Filters. Int. J. Adv. Manuf. Technol. 1995, 10, 419–426.
- Garbowski, T.; Maier, G.; Novati, G. On calibration of orthotropic elastic-plastic constitutive models for paper foils by biaxial tests and inverse analyses. Struct. Multidiscip. Optim. 2012, 46, 111–128.
- Buljak, V.; Cocchetti, G.; Cornaggia, A.; Garbowski, T.; Maier, G.; Novati, G. Materials Mechanical Characterizations and Structural Diagnoses by Inverse Analyses. In Handbook of Damage Mechanics; Voyiadjis, G., Ed.; Springer: New York, NY, USA, 2015.
- Asada, H.; Brady, M. The Curvature Primal Sketch. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 2–14.
- Anandan, P.; Sabeenian, R.S. Fabric defect detection using Discrete Curvelet Transform. Procedia Comput. Sci. 2018, 133, 1056–1065.
- Cao, F.; Moisan, L. Geometric Computation of Curvature Driven Plane Curve Evolutions. SIAM J. Numer. Anal. 2001, 39, 624–646.
- Kimmel, R.; Malladi, R.; Sochen, N. Images as Embedded Maps and Minimal Surfaces: Movies, Color, and Volumetric Medical Images. Int. J. Comput. Vis. 1997, 39, 111–129.
- Zhu, Y.; Er, M.J. Moment-Based Analysis of Closed Contours for Texture and Shape Classification. IEEE Trans. Syst. Man Cybern. Part Syst. Hum. 2000, 30, 525–533.
- Li, H. Image Contour Extraction Method based on Computer Technology. In Proceedings of the 2015 4th National Conference on Electrical, Electronics and Computer Engineering, Xi’an, China, 12–13 December 2015; Atlantis Press: Paris, France, 2015; pp. 1185–1189.
- Koenderink, J.J. The Structure of Images. Biol. Cybern. 1984, 50, 363–370.
- Ciomaga, A.; Monasse, P.; Morel, J.-M. The Image Curvature Microscope: Accurate Curvature Computation at Subpixel Resolution. Image Process. Line 2017, 7, 197–217.
- Tu, L.W. Differential Geometry: Connections, Curvature, and Characteristic Classes. In Graduate Texts in Mathematics; Springer: Cham, Switzerland, 2017; ISBN 978-3-319-55082-4.
- Sonka, M.; Hlavac, V.; Boyle, R. Image Processing, Analysis, and Machine Vision, 3rd ed.; Cengage Learning: Boston, MA, USA, 2008; pp. 223–230.
- Subrahmonia, J.D.; Lakshmanan, S.; Goldberg, M. Edge and curvature extraction for real-time road boundary detection. IEEE Trans. Veh. Technol. 1996, 45, 774–784.
- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.
- Suzuki, S.; Abe, K. Topological structural analysis of digital binary images by border following. Comput. Vision Graph. Image Process. 1985, 30, 32–46.
- Papoulis, A. Probability, Random Variables, and Stochastic Processes, 4th ed.; McGraw-Hill: New York, NY, USA, 2000; pp. 315–318.
- Bovik, A.C.; Clark, M.; Geisler, W.S. Multichannel texture analysis using localized spatial filters. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 55–73.
- Dunn, D.; Higgins, W.; Wakeley, J. Texture segmentation using 2-D Gabor elementary functions. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 130–149.
- Jain, A.K.; Farrokhnia, F. Unsupervised texture segmentation using Gabor filters. Pattern Recognit. 1991, 24, 1167–1186.
- Manjunath, B.S.; Ma, W.Y. Texture features for browsing and retrieval of image data. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 837–842.
- Vautrot, P.; Bonnet, N.; Herbin, M. Comparative study of different spatial/spatial-frequency methods (Gabor filters, wavelets, wavelets packets) for texture segmentation/classification. In Proceedings of the 3rd IEEE International Conference on Image Processing, Lausanne, Switzerland, 19 September 1996; Volume 3, pp. 145–148.
- Bigun, J.; du Buf, J.M.H. N-folded symmetries by complex moments in Gabor space and their application to unsupervised texture segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 80–87.
- Teuner, A.; Pichler, O.; Hosticka, B.J. Unsupervised texture segmentation of images using tuned matched Gabor filters. IEEE Trans. Image Process. 1995, 4, 863–870.
- Bodnarova, A.; Bennamoun, M.; Latham, S. Optimal Gabor filters for textile flaw detection. Pattern Recognit. 2002, 35, 2973–2991.
- Clausi, D.A.; Jernigan, M.E. Designing Gabor filters for optimal texture separability. Pattern Recognit. 2000, 33, 1835–1849.
- Liu, G. Surface Defect Detection Methods Based on Deep Learning: A Brief Review. In Proceedings of the 2020 2nd International Conference on Information Technology and Computer Application (ITCA), Guangzhou, China, 18–20 December 2020; pp. 200–203.
- Zhang, Y.; Li, G.; Yang, J.; Wei, X. A review of machine learning-based defect detection methods. J. Manuf. Process. 2021, 66, 481–495.
- Abbineni, J.; Thalluri, O. Software Defect Detection Using Machine Learning Techniques. In Proceedings of the 2018 2nd International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 11–12 May 2018; pp. 471–475.
- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.
- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2012; pp. 1097–1105.
- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.
- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2014; pp. 3320–3328.
- Howard, J.; Ruder, S. Universal language model fine-tuning for text classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 328–339.
- Keshavan, M.S.; Janik, P. Transfer learning for improving performance of deep learning models in medical image analysis. J. Med. Imaging 2019, 6, 041201.
- Chen, Y.; Ding, Y.; Zhao, F.; Zhang, E.; Wu, Z.; Shao, L. Surface Defect Detection Methods for Industrial Products: A Review. Appl. Sci. 2021, 11, 7657.
- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.
- Chen, M.; Yu, L.; Zhi, C.; Sun, R.; Zhu, S.; Gao, Z.; Ke, Z.; Zhu, M.; Zhang, Y. Improved faster R-CNN for fabric defect detection based on Gabor filter with Genetic Algorithm optimization. Comput. Ind. 2022, 134, 103551.
- Yu, W.; Lai, D.; Liu, H.; Li, Z. Research on CNN Algorithm for Monochromatic Fabric Defect Detection. In Proceedings of the 2021 6th International Conference on Image, Vision and Computing (ICIVC), Qingdao, China, 23–25 July 2021; pp. 20–25.
- Liu, Q.; Wang, C.; Li, Y.; Gao, M.; Li, J. A Fabric Defect Detection Method Based on Deep Learning. IEEE Access 2022, 10, 4284–4296.
- Lee, Y.J.; Grauman, K. Learning the easy things first: Self-paced visual category discovery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1721–1728.
- Wei, Y.; Sun, Q.; Wang, L.; Liu, C. Few-shot learning for surface defect classification. IEEE Access 2020, 8, 32728–32736.
- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.
- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.
- Liu, S.; Jiang, X.; Yang, J.; Chen, B. A novel defect detection method based on feature extraction and recognition. Pattern Recognit. Lett. 2019, 119, 158–165.
- Zhang, Y.; Wallace, B. A Sensitivity Analysis of (and Practitioners’ Guide to) Convolutional Neural Networks for Sentence Classification. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 397–409.
- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.
- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.
- He, Z.; Shao, H.; Chen, X. A deep learning-based approach for defect detection in manufacturing. J. Intell. Manuf. 2020, 31, 399–411.
- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.
- Grandini, M.; Bagli, E.; Visani, G. Metrics for multi-class classification: An overview. arXiv 2020, arXiv:2008.05756.

| Actual (rows) / Predicted (columns) | non-faulty | faulty | ∑ |
|---|---|---|---|
| non-faulty | 50 | 102 | 152 |
| faulty | 52 | 22 | 74 |
| ∑ | 102 | 124 | 226 |

| Actual (rows) / Predicted (columns) | non-faulty | faulty | ∑ |
|---|---|---|---|
| non-faulty | 108 | 44 | 152 |
| faulty | 6 | 68 | 74 |
| ∑ | 114 | 112 | 226 |

| Actual (rows) / Predicted (columns) | non-faulty | faulty | ∑ |
|---|---|---|---|
| non-faulty | 132 | 20 | 152 |
| faulty | 1 | 73 | 74 |
| ∑ | 133 | 93 | 226 |

| Actual (rows) / Predicted (columns) | non-faulty | faulty | ∑ |
|---|---|---|---|
| non-faulty | 145 | 7 | 152 |
| faulty | 3 | 71 | 74 |
| ∑ | 148 | 78 | 226 |
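All four confusion matrices cover the same 226 test images (152 non-faulty, 74 faulty). Treating "faulty" as the positive class, the snippet below derives the usual binary metrics from each matrix. The accuracies it yields (0.32, 0.78, 0.91, 0.96) and the recalls (0.30, 0.92, 0.99, 0.96) agree, to within rounding, with the accuracy and recall columns reported for CA, MDCA, GT and CNN, which suggests the matrices are listed in that order. The precision and F1 values computed this way differ from the reported ones, so those were presumably obtained with a different averaging scheme, which this sketch does not attempt to reproduce.

```python
def binary_metrics(tn: int, fp: int, fn: int, tp: int) -> dict:
    """Accuracy, precision, recall and F1 with 'faulty' as the positive class."""
    total = tn + fp + fn + tp
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }

# (tn, fp, fn, tp): actual non-faulty row = (tn, fp), actual faulty row = (fn, tp),
# read from the four confusion matrices in the order they appear.
matrices = [(50, 102, 52, 22), (108, 44, 6, 68), (132, 20, 1, 73), (145, 7, 3, 71)]

for m in matrices:
    r = binary_metrics(*m)
    print(", ".join(f"{name}={value:.2f}" for name, value in r.items()))
```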

| Model / Defect Type | Hole | Needle Break | May | Lycra | Oil |
|---|---|---|---|---|---|
| CA | 0.42 | 0.29 | 0.27 | 0.32 | 0.40 |
| MDCA | 0.78 | 0.82 | 0.74 | 0.81 | 0.60 |
| GT | 0.81 | 0.99 | 0.99 | 0.85 | 0.78 |
| CNN | 0.96 | 0.98 | 0.98 | 0.85 | 0.95 |

| Model | Accuracy | F1 | Precision | Recall | Process Time |
|---|---|---|---|---|---|
| CA | 0.32 | 0.39 | 0.56 | 0.29 | 203 ms |
| MDCA | 0.78 | 0.82 | 0.74 | 0.92 | 295 ms |
| GT | 0.91 | 0.94 | 0.89 | 0.99 | 110 ms |
| CNN | 0.96 | 0.96 | 0.96 | 0.96 | 1800 ms |

| Model | YOLOv5 | YOLOv8n | YOLOv8 TensorRT |
|---|---|---|---|
| Inference time (min / max) | 172 ms / 180 ms | 81.4 ms / 81.9 ms | 65.6 ms / 66.2 ms |
| mAP50 | 0.846 | 0.8576 | 0.896 |
| mAP50-95 | 0.591 | 0.6 | 0.622 |
| Precision | 0.814 | 0.87 | 0.866 |
| Recall | 0.802 | 0.84 | 0.859 |
| GFLOPs | 15.8 | 8.207 | 8.2 |
| Parameters | 7,034,398 | 3,013,383 | 3,013,383 |

| Model | RPI4 CPU | Jetson Nano CPU | Jetson Nano GPU-Accelerated |
|---|---|---|---|
| CA | 4.9 fps | 5.5 fps | - |
| MDCA | 3.38 fps | 3.8 fps | - |
| GT | 9.1 fps | 10.2 fps | - |
| CNN | <1 fps | 1 fps | 8.33 fps |
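The Process Time column of the model comparison table and the RPI4 CPU column appear to describe the same measurements: converting a per-image time $t$ in milliseconds to throughput with fps = 1000 / $t$ reproduces the reported frame rates to within rounding (for example, 110 ms gives about 9.09 fps for GT). The short check below assumes that relationship; it is an inference from the numbers, not a statement from the text.

```python
# Per-image processing times (ms) and RPI4 CPU frame rates, copied from the tables.
process_time_ms = {"CA": 203, "MDCA": 295, "GT": 110, "CNN": 1800}
rpi4_fps_reported = {"CA": 4.9, "MDCA": 3.38, "GT": 9.1}  # CNN is reported as "<1 fps"

def fps_from_ms(t_ms: float) -> float:
    """Frames per second for a per-frame processing time given in milliseconds."""
    return 1000.0 / t_ms

for name, t_ms in process_time_ms.items():
    reported = rpi4_fps_reported.get(name, "<1")
    print(f"{name}: {fps_from_ms(t_ms):.2f} fps computed, {reported} fps reported")
```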

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cite

Erdogan, M.; Dogan, M. Enhanced Curvature-Based Fabric Defect Detection: A Experimental Study with Gabor Transform and Deep Learning. Algorithms 2024, 17, 506. https://doi.org/10.3390/a17110506