Abstract

Micro-expressions are very brief, involuntary facial expressions that reveal hidden emotions and last less than a second, whereas macro-expressions are more prolonged facial expressions that align with a person’s conscious emotions and typically last several seconds. Micro-expressions are difficult to detect in lengthy videos because they have tiny amplitudes, short durations, and frequently coexist with macro-expressions. Nevertheless, micro- and macro-expression analysis has attracted growing interest among researchers. Existing methods use optical flow features to capture temporal differences, but these features are computed from only two successive images. To address this limitation, this paper proposes LGNMNet-RF, which integrates a Lite General Network with MagFace CNN and a Random Forest classifier to predict micro-expression intervals. Our approach leverages Motion History Images (MHI) to capture temporal patterns across multiple frames, offering a more comprehensive representation of facial dynamics than optical flow. The novelty of our approach lies in the combination of MHI with MagFace CNN, which improves the discriminative power of facial micro-expression detection, and the use of a Random Forest classifier to enhance interval prediction accuracy. The evaluation results show that this method outperforms baseline techniques, achieving micro-expression F1-scores of 0.3019 on CAS(ME)2 and 0.3604 on SAMM-LV. These results indicate that MHI offers a viable alternative to optical flow-based methods for micro-expression detection.

1. Introduction

Amyotrophic Lateral Sclerosis (ALS) is a rapidly progressive neurodegenerative disorder characterised by the loss of lower motor neurons in the spinal cord and brainstem and of upper motor neurons in the motor cortex, causing the gradual loss of voluntary muscle control and eventually paralysis. Ultimately, this illness also impairs respiratory function and can cause respiratory failure three to five years after symptoms first appear [1]. Since there is no known cure for ALS, researchers have focused on treatment and rehabilitation to extend patients’ lives and enhance their quality of life. Therapies include supportive therapy, speech and swallowing therapy, mobility support, palliative care, counselling, symptomatic medical treatment, and ventilation [1]. For ALS patients, facial expressions are also vital for communication, especially when other forms of interaction become challenging; they serve as a form of non-verbal communication that can be detected and analysed. To analyse patients’ facial expressions, the first step is to detect the start and end of each expression in long recordings, followed by further analysis, such as Micro-Expression (ME) recognition, on the correctly detected segments. Many researchers focus on ME recognition, which classifies short videos into specific emotion classes, but neglect ME detection. Detection is equally important because, without correct detection of the subtle ME interval, ME classification will receive input from non-expression intervals, leading to significant misclassification of expressions.

The movements of several facial muscles constitute a facial expression, a form of nonverbal communication that can reveal someone’s emotional state. However, since people can purposefully display particular facial expressions to conceal their emotions (such as faking a smile), facial expressions are not always an accurate indicator of a person’s emotional state. Subtle facial movements that may occur during the suppression of emotions are referred to as facial MEs, and regular facial expressions as facial Macro-Expressions (MaEs). An MaE is a frequent and easily recognised facial expression with a stronger intensity and a longer duration of around 0.5 to 4.0 s [2]. An ME, on the other hand, is involuntary and more subdued, typically lasts less than 0.5 s, and manifests when an individual tries to conceal their true emotions [3]. Since ALS patients have limited muscle movement, our research focuses on facial MEs, which are analysed to improve communication with ALS patients.

The two primary steps in analysing expressions in ALS patients are detection and recognition. The detection task determines the expression’s interval within long recordings, whereas the recognition task classifies ME clips into emotion categories [4]. This study focuses on ME detection, which involves identifying the frames showing the precise onset (when the expression begins), apex (when the expression is at its peak intensity), and offset (when the expression ends) of an expression within a video sequence, so that these brief, subtle facial movements can be captured and analysed accurately.

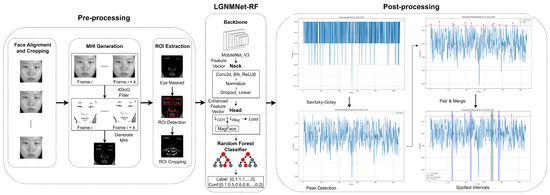

While optical flow images are frequently used to identify MEs by detecting variations in the colour and intensity of facial pixels between frames, temporal details are frequently missed by 2D deep learning algorithms. Therefore, this study proposes employing Motion History Images (MHI) [5] for ME and MaE detection. By condensing several motion sequences into a single image, MHI can effectively capture temporal information. The Lite General Network and MagFace CNN (LGNMNet) model is a general low-dimensional feature embedding loss model built by Gu et al. [6]. Inspired by [6], this paper proposes a MobileNet V3 CNN backbone combined with the MagFace loss function [7], which is commonly used in facial recognition tasks. The proposed model is further combined with a Random Forest machine learning classifier to improve ME detection accuracy, using MHI rather than optical flow as input features; this method is named LGNMNet-RF. To the best of our knowledge, this is the first application of a combined CNN and machine learning classifier technique to ME and MaE detection. Additionally, the feature learning process is enhanced by incorporating pseudo-labelling during training, and the apex frame in each video is predicted using peak detection following a smoothing process. The framework architecture is illustrated in Figure 1.

Figure 1.

Illustration of LGNMNet-RF Overall Architecture.

The combination of the Lite General Network (LGN), MagFace CNN, and a Random Forest (RF) classifier was chosen for its unique balance of efficiency, discriminative power, and interpretability, which makes it particularly well-suited to the task of spotting micro-expressions. LGN offers a lightweight, computationally efficient architecture, while MagFace enhances feature regularisation, improving intra-class compactness and inter-class separability, which is crucial for distinguishing subtle micro-expressions. The Random Forest adds robustness and interpretability to the classification, making the overall system not only accurate but also resilient to small, imbalanced datasets. This combination outperforms other techniques by addressing both the computational and task-specific demands effectively.

The main contributions of this study are as follows:

- This study proposes MHI for the ME and MaE detection using LGNMNet-RF architecture.

- This study implements pseudo-labelling with temporal extensions to generate more accurate labels for deep learning training according to the characteristics of the MHI features.

- This study introduces an LGNMNet-RF architecture that integrates MobileNet V3 with the MagFace loss function and utilises a Random Forest classifier to enhance interval detection accuracy and mitigate noise.

2. Related Works

Initial efforts [8,9] made noteworthy advancements in the main mechanism of the ME detection task. Specifically, a χ²-distance (Chi-Squared) feature difference analysis is employed between two frames within a preset time interval, using a local binary pattern (LBP) as the feature descriptor. An ME is detected if the frame’s feature difference exceeds the peak detection threshold. Similarly, using a block-by-block face division technique, Davison et al. [10] calculated each frame’s Histogram of Oriented Gradient (HOG). This method then detects MEs by calculating the difference between frames at a predetermined interval using the Chi-Squared distance. These traditional hand-crafted methods contributed to breakthroughs in ME detection at an early stage.

Apex detection is one of the early types of ME detection tasks, but it is often incorrectly grouped as ME and MaE detection in the literature. To overcome the shortcomings of LBP-TOP, which only employs features on three planes, Esmaeili and Shahdi [11] proposed Cubic-LBP. Six cube faces and nine jets that cross the central pixel make up Cubic-LBP. The sum of the squared differences is then used to compute the differences between frames. This method can also determine how much two histograms differ from one another.

Optical flow images remain the most frequently used input feature. For the detection task, Zhang et al. [12] used Region of Interest (ROI) segmentation to extract features and then obtained the optical flow for each ROI image. The Histogram of Oriented Optical Flow (HOOF) was then computed from the obtained optical flow. For ME or MaE detection, all observed features are compiled into a feature matrix.

Another attribute utilised as an input is the absolute frame difference. Only two absolute image differences were used by Borza et al. [13]; however, this technique needs the face to remain stationary and selects the frame using half the expression’s interval—something that is impossible in real life because we never know when a facial expression will conclude.

Later, subtle facial movements can be detected using motion-based approaches. For the first time, Shreve et al. [8] developed optical strain, a derivative of optical flow, to assess minute motion changes based on the elastic stretches of facial skin tissue. Consideration is given to the total of the strain magnitudes seen over time at various facial locations. Main Directional Maximal Difference (MDMD) [14] was the baseline method for the Micro-Expression Grand Challenge (MEGC) 2020. This encodes the maximal difference magnitude along the significant direction of motion to identify whether an ME or MaE is present.

At present, the majority of research on ME detection uses explicit visual motion representations, such as optical flow. Researchers have exploited deep learning algorithms to extract features and identify MEs. Yap et al. [2] were the first to use a deep learning model for the MEGC 2021 competition. They used masked background images to build Local Contrast Normalisation (LCN) images. Subsequently, distinct frame-skip lengths were implemented in two streams of identical 3D-CNNs: the MaE stream received larger frame skips, while the ME stream received shorter frame skips. The Residual Dense network model first uses global average pooling and then carries out extra classification. The weights of the two 3D-CNNs were shared to reduce overfitting.

Yin et al. [15] were the first to use human facial action units (AUs) in conjunction with an AU-based Graph Convolutional Network to learn finer spatial information and produce a probability map. RetinaFace was used to crop and align the faces and to mask their backgrounds in the facial images. A proposal generation algorithm then generated proposals based on the probability map. The Non-Maximum Suppression (NMS) technique was used in post-processing to reduce overlapping intervals by filtering out lower-scoring intervals that significantly overlap with high-scoring ones.

In Xie and Cheng’s work [16], a 3D-CNN and a Prelayer extract spatio-temporal information from RGB and optical flow images. The system uses three one-dimensional convolutional layers to convolve these features, resulting in feature sequences with various channel numbers. While anchor-free networks perform better for short or long intervals, anchor-based clip proposal networks are better for medium-length intervals. The spatial attention mechanism reviews interval proposals, while the channel attention mechanism adaptively suppresses less significant characteristics and enhances critical elements.

He et al. [17] computed optical flow vectors between images separated by k frames using the TV-L1 approach. They then clipped the ROI at the mouth and eye regions and masked the eyes in a three-channel image formed by combining the vertical and horizontal vectors. The features were extracted using a Swin-transformer, and the output sequence was post-processed with a specific formula to enable peak detection and thresholding. Gu et al. [6] took inspiration from this and used the hue and saturation of the vertical and horizontal optical flow features to generate an RGB image. Their feature extractor is MobileNet V2 with MagFace loss and a feature enhancer that helps to improve learning and reduce overfitting. For post-processing, they employed the Savitzky–Golay filter for smoothing, followed by peak detection, interval detection, and the merging of peaks to account for duplicate detections.

Optical flow is the most widely used method for representing motion data. Despite its extensive use and ease of use, our research suggests that optical flow may not be the best motion representation for two-dimensional ME detection using a deep learning network. Optical flow algorithms typically integrate over large spatial regions, operating under the presumption that the flow is smooth, that is, that values at nearby pixels are close to one another. This assumption is reasonable for rigid objects and helps to alleviate the aperture problem, since local measurements can only estimate displacements orthogonal to the prevailing texture direction. However, it is a weak assumption for spatially localised MEs and the flexible face.

3. Methodology

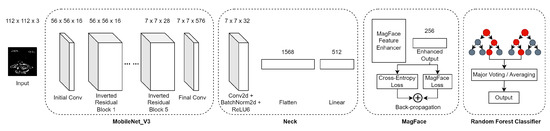

The LGNMNet-RF model uses the ROI of the MHI features acquired during the pre-processing step as input images, and labels produced by the Intersection Over Minimum (IML) labelling method as input labels. As illustrated in Figure 1, the network’s overall structure comprises a backbone, a neck, and a head network. In the LGNMNet-RF feature extractor, illustrated in Figure 2, the MobileNet V3 network without the Softmax activation function serves as the backbone, which comprises several convolutional layers, inverted residual blocks, and pooling layers. The first part of the neck network has three layers: batch normalisation (BN), ReLU6, and Conv2d convolutional layers with a kernel function. The remainder of the neck network uses a dropout layer to reduce overfitting and a linear layer to eliminate redundant information after normalising the output of the first part of the network. Finally, the head network handles classification and loss.

Figure 2.

Illustration of Detailed LGNMNet-RF Architecture.

To enhance the model’s capacity for feature learning and generalisability, Gu et al. [6] developed a loss function L that uses MagFace combined with MobileNet V2 to perform ME detection, and this work adopts it. Function (1) is a combination of the cross-entropy loss and the MagFace loss. Specifically, the MagFace model’s feature-embedding technique can alter the feature distribution of AUs with varying intensities within the same class during training, drawing AUs located near apex frames (the peak intensity frames) towards the class centres and AUs located near onset/offset frames towards the adaptive margin. Mapping the high-dimensional feature embedding results of MagFace to one-dimensional labels yields the predicted classification labels and confidence scores.

Classification tasks generally use fully connected layers and a Softmax classifier, and many researchers have neglected machine learning classifiers, which can improve classification accuracy. In this research, a Random Forest classifier is used for its advantages in improving generalisation, handling complex, non-linear relationships between features, and being robust to noise.

3.1. Pre-Processing

The faces of the subjects in the datasets are aligned with reference facial landmarks using RetinaFace [18] and cropped according to the facial landmarks. The cropped images are edge-detected using eXtended Difference-of-Gaussian edge detection (XDoG) [19]. Next, inspired by the pre-processing method from [6], the MHI is generated between the i-th frame and the (i + k)-th frame. Here, the value of k is set to half of the average length of the expressions, as shown in Equation (2). Setting k to half the average expression length allows the model to focus on small changes over a duration of k frames. Setting k too low introduces noise, and setting it too high causes the model to miss subtle but important expression information. The frame stamp parameter of the MHI is equal to k.

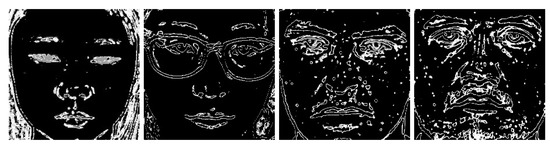

Examples of XDoG edge-detected images are displayed in Figure 3. Examples of MHI frames showing recent pixel changes, generated with a frame stamp of 6, are displayed for MEs in Figure 4. MHI images generated without any edge detection contain too much noise and showed lower accuracy in previous experiments. Therefore, the MHI was generated from the XDoG edge-detected images.

Figure 3.

Examples of facial edge features detected using XDoG filter.

Figure 4.

Examples of MHI generated from XDoG edge detected images.
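To illustrate how an MHI accumulates motion over several frames, the following is a minimal NumPy sketch of the standard MHI update rule. It is not the implementation used in this work: the function name `motion_history_image`, the difference threshold `diff_thresh`, and the use of plain absolute frame differences on greyscale (for example, edge-detected) frames are assumptions made for illustration, while `tau` plays the role of the frame stamp that the text sets equal to k.

```python
import numpy as np


def motion_history_image(frames, tau, diff_thresh=0.1):
    """Return a normalised MHI for a sequence of greyscale frames.

    frames: array of shape (T, H, W) with values in [0, 1].
    tau: frame stamp; pixels that moved in the latest step are set to tau,
         and older motion decays by 1 per step until it reaches 0.
    diff_thresh: hypothetical threshold on the absolute frame difference.
    """
    frames = np.asarray(frames, dtype=np.float32)
    mhi = np.zeros(frames.shape[1:], dtype=np.float32)
    for prev, curr in zip(frames[:-1], frames[1:]):
        moving = np.abs(curr - prev) > diff_thresh
        # Refresh moving pixels to tau; let everything else fade by one step.
        mhi = np.where(moving, float(tau), np.maximum(mhi - 1.0, 0.0))
    return mhi / float(tau)  # scale to [0, 1] so it can be used as an image


# Toy example: a single bright pixel moving down the diagonal.
toy_frames = np.zeros((4, 4, 4), dtype=np.float32)
for t in range(4):
    toy_frames[t, t, t] = 1.0
toy_mhi = motion_history_image(toy_frames, tau=6)
```

In the toy example the most recently visited pixels hold the largest values and earlier positions fade, which is the property that lets a single image summarise motion across more than two frames.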

3.2. Pseudo-Labelling

IML serves as the pseudo-labelling method. The motion amplitude of an AU decreases as the frame approaches the start or end time of the corresponding expression, so there might not be much difference between an expression AU and a non-expression AU close to the ME boundary. According to Formula (3), Yap et al. [2] computed the ratio of the intersection of the current interval of k frames with the duration of the current expression (corresponding to label = 1) to their union, that is, the Intersection over Union (IoU):

$$\mathrm{IoU} = \frac{|W_i \cap E|}{|W_i \cup E|} \tag{3}$$

where $W_i$ denotes the interval of k frames starting at the current frame and $E$ denotes the interval of the current expression.

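The label of the current frame is set to 1 when this ratio meets the labelling threshold; it is set to 0 otherwise. The average length of expressions in the target dataset of the same type as the current expression is represented by the symbol k. According to [6], there will be a significant error if the current expression’s length is significantly larger or smaller than k. Thus, Gu et al. [6] created an alternative indicator, computed by dividing the intersection of the current interval of k frames and the expression interval by the length of the shorter of the two, as the name Intersection over Minimum indicates. Similarly, the current frame’s label is 1 when the indicator meets the labelling threshold. With $W_i$ the current interval of k frames and $E$ the expression interval, the indicator is calculated using the following Formula (4):

$$\mathrm{IoM} = \frac{|W_i \cap E|}{\min(|W_i|, |E|)} \tag{4}$$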

Each frame of the scene is set as the current frame in turn: the first frame of the scene is the current frame initially, and the subsequent frame becomes the current frame once the computation is completed. This iteration continues until the current frame reaches frame position (total number of frames − k) of the scene.
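The frame-by-frame labelling loop can be sketched in plain Python as follows. This is an illustrative reading of the description above rather than the original code: the function names, the inclusive window [i, i + k], the default `threshold` of 0.5, and the interpretation of Intersection over Minimum as the overlap divided by the shorter interval length are all assumptions.

```python
def interval_overlap(a_start, a_end, b_start, b_end):
    """Number of frames shared by two inclusive frame intervals."""
    return max(0, min(a_end, b_end) - max(a_start, b_start) + 1)


def pseudo_labels(num_frames, k, expressions, threshold=0.5, mode="iom"):
    """Label frame i with 1 if its window overlaps an expression enough.

    expressions: list of (onset, offset) inclusive frame indices.
    mode: "iou" (intersection over union) or "iom" (intersection over the
          shorter interval, an assumed reading of the IoM indicator).
    threshold: hypothetical labelling threshold.
    """
    labels = [0] * num_frames
    # Iterate until the current frame reaches (total number of frames - k).
    for i in range(num_frames - k):
        w_start, w_end, w_len = i, i + k, k + 1
        for onset, offset in expressions:
            e_len = offset - onset + 1
            inter = interval_overlap(w_start, w_end, onset, offset)
            if mode == "iou":
                score = inter / (w_len + e_len - inter)
            else:
                score = inter / min(w_len, e_len)
            if score >= threshold:
                labels[i] = 1
                break
    return labels


labels_iom = pseudo_labels(20, k=4, expressions=[(8, 11)], mode="iom")
labels_iou = pseudo_labels(20, k=4, expressions=[(8, 11)], mode="iou")
```

With a four-frame expression at frames 8 to 11, the IoM variant marks frames 5 to 10 as positive while the IoU variant marks only frames 6 to 9, which shows how normalising by the shorter interval is less sensitive to a mismatch between the window length and the expression length.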

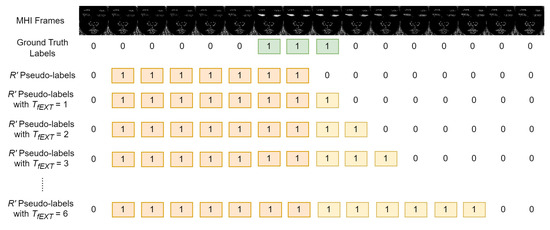

Moreover, in addition to the pseudo-labelling of [6], because an MHI carries the features of previous frames forward into the subsequent few frames according to the value of k, the pseudo-label generation in this work experiments with extending the offset point by several frames, where the extension is the number of frames added after the original offset. This extension of the pseudo-labelling for the MHI images does not affect the original Ground Truth (GT) during evaluation: the extended labels are used for training only, and the evaluation GT follows the original annotations, as shown in Figure 5. In Figure 5, the GT labels are highlighted in green. The values calculated for every frame using Equation (4) are highlighted in red. Then, according to the frame extension properties of MHI discussed earlier, the yellow highlighted frames after the red highlighted frames are the extended pseudo-labels. Together, these form the new sequence of pseudo-labels.

Figure 5.

Illustration of pseudo-labelling with temporal extension of k = 6.
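The temporal extension of the pseudo-labels can be illustrated with a short Python sketch. The function name `extend_labels` and its `extension` argument are hypothetical; the sketch simply appends extra positive labels after the last frame of every positive run and leaves the original labels untouched, so the ground truth used for evaluation is not modified.

```python
def extend_labels(labels, extension):
    """Return a copy of `labels` with each run of 1s extended by
    `extension` frames after its last positive frame (training only)."""
    extended = list(labels)
    n = len(labels)
    for i, value in enumerate(labels):
        # Frame i closes a positive run if the next label is 0 or missing.
        if value == 1 and (i + 1 == n or labels[i + 1] == 0):
            for j in range(i + 1, min(n, i + 1 + extension)):
                extended[j] = 1
    return extended


original = [0, 0, 1, 1, 1, 0, 0, 0, 0, 0]
training_labels = extend_labels(original, extension=2)
```

Here `training_labels` covers two additional frames after the original run, while `original` is kept unchanged for evaluation.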

3.3. Post-Processing

The post-processing methodology follows the approach detailed by Gu et al. [6], adapted to align with this research. After the apex frames are identified, non-maximal suppression is used in the apex frame-based expression detection task to combine neighbouring detected apex frames, and the one with the highest apex score is retained. Then, as indicated by Formula (5), the k frames that occur before and after the identified apex frame are regarded as its expression interval I. Because the features of a frame are extracted from the optical flow feature difference between that frame and a frame k frames away, a frame k frames away from a true apex frame can also be detected as an apex frame, whether by Liong’s [3] method or by this method. Therefore, one actual peak and one pseudo-peak might correspond to the same expression interval. This means that the conventional strategy of building a simple interval around each detected apex frame is insufficient.

Thus, a pair-merge strategy for expression interval construction is proposed for multiple peak frames, each selected by finding a local maximum. The strategy extends the interval around each maximum by k frames on each side and, to pair adjacent peaks, finally merges the overlapping intervals. In this way, the interval constructed for a true apex frame and the interval constructed for its corresponding pseudo-peak fall into the same merged expression interval E. The union set U of the expression intervals created from the set of peak frames can then be simplified using Formula (6).
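A simplified reading of the interval construction and merging step is sketched below in plain Python. It is not a reproduction of Formulas (5) and (6): the function name `peaks_to_intervals` and the clipping of intervals to the video length are assumptions, and the sketch only shows the general idea of extending each peak by k frames on each side and merging intervals that overlap.

```python
def peaks_to_intervals(peaks, k, num_frames):
    """Build [p - k, p + k] around every peak and merge overlapping intervals."""
    intervals = sorted(
        (max(0, p - k), min(num_frames - 1, p + k)) for p in peaks
    )
    merged = []
    for start, end in intervals:
        if merged and start <= merged[-1][1]:
            # Overlaps the previous interval: extend it instead of adding a new one.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


# A true peak at frame 16 and a pseudo-peak k = 6 frames earlier at frame 10,
# plus an unrelated peak at frame 40.
detected = peaks_to_intervals([10, 16, 40], k=6, num_frames=100)
```

In this example the true peak and its pseudo-peak collapse into one interval, (4, 22), while the unrelated peak keeps its own interval, (34, 46).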

4. Experiments

4.1. Datasets

Currently, no datasets provide long videos of MEs and MaEs with ALS patients as subjects. However, publicly available long-video datasets, such as CAS(ME)2 [20] and SAMM Long Videos [21], can be used to evaluate our proposed method; Table 1 provides an overview of each dataset. Instead of ALS patients, these datasets include healthy individuals who suppressed their true emotions. The long videos simulate real-life scenarios in which MEs and MaEs occur independently or overlap. These datasets were therefore used for the ME detection task because of the challenging data they provide.

Table 1.

MaE and ME datasets.

CAS(ME)2 [20] was the first publicly accessible dataset to include MEs in lengthy videos, and it is mainly used for ME detection. Nine emotional videos out of twenty were chosen as the emotion-eliciting materials, and the participants had a mean age of 22.59 years (standard deviation: 2.2 years). It is assumed that each emotion video primarily evokes a single emotion. During recording, participants sat in front of a [pixels] visible-light camera operating at 30 frames per second in a room lit by two LED lights. The participants were instructed to watch the nine videos in random order while maintaining a neutral expression. Rather than classifying expressions into the seven basic emotions, this dataset uses four broad categories (positive, negative, surprise, and others), with the “others” class lacking a clear definition. In addition, participants of comparable ages were chosen, and the footage was recorded at a relatively low frame rate.

SAMM Long Videos [21] is a collection of long videos created explicitly for ME detection. The lack of existing datasets for MaE detection and spontaneous ME recognition was the driving force behind its creation. It is an extension of SAMM [25], using the data gathered in those trials. The collection includes 159 MEs and 343 MaEs across 147 long videos recorded at 200 frames per second. Every video is FACS-coded with comprehensive AUs, and each expression’s onset, apex, and offset frames are annotated.

4.2. Evaluation Metrics

This work adheres to the evaluation protocol specified by the MEGC 2021 detection task so that the proposed approach can be compared with the approaches of other researchers. A detected interval $W_{\text{detected}}$ is compared with the ground-truth interval $W_{\text{GT}}$ and considered a True Positive (TP) when the following condition is met:

$$\frac{|W_{\text{detected}} \cap W_{\text{GT}}|}{|W_{\text{detected}} \cup W_{\text{GT}}|} \geq T_{\text{IoU}}$$

Here, $W_{\text{GT}}$ represents the ground truth of the ME or MaE from onset to offset, the ratio is the Intersection over Union (IoU), and, following the Micro-Expression Grand Challenge (MEGC) 2020, 2021 and 2022, the threshold $T_{\text{IoU}}$ is set to 0.5 as the standard value. The recall, precision, and F1-score are denoted by R, P, and F, respectively. The calculations are as follows:

$$R = \frac{a}{m}, \qquad P = \frac{a}{n}, \qquad F = \frac{2PR}{P + R}$$

where the numbers of TP, GT, and all predicted intervals are denoted by the variables a, m, and n, respectively.
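The interval-level evaluation can be sketched in plain Python as follows. The variables a, m, and n follow the definitions in the text; the function names and the one-to-one greedy matching of predictions to ground-truth intervals are assumptions made for illustration and may differ from the official MEGC evaluation script.

```python
def interval_iou(a, b):
    """IoU of two inclusive frame intervals given as (start, end)."""
    inter = max(0, min(a[1], b[1]) - max(a[0], b[0]) + 1)
    union = (a[1] - a[0] + 1) + (b[1] - b[0] + 1) - inter
    return inter / union


def evaluate(predicted, ground_truth, iou_threshold=0.5):
    """Return (precision, recall, f1) for lists of (start, end) intervals."""
    matched = set()
    a = 0  # number of true positives
    for pred in predicted:
        for idx, gt in enumerate(ground_truth):
            # Each ground-truth interval may be matched at most once.
            if idx not in matched and interval_iou(pred, gt) >= iou_threshold:
                matched.add(idx)
                a += 1
                break
    m, n = len(ground_truth), len(predicted)
    recall = a / m if m else 0.0
    precision = a / n if n else 0.0
    f1 = 2 * a / (m + n) if (m + n) else 0.0  # equals 2PR / (P + R)
    return precision, recall, f1


gt_intervals = [(10, 20), (50, 60)]
pred_intervals = [(12, 22), (30, 35), (48, 70)]
precision, recall, f1 = evaluate(pred_intervals, gt_intervals)
```

In this example only the first prediction reaches an IoU of 0.5, so a = 1, m = 2, and n = 3, giving a precision of 1/3, a recall of 1/2, and an F1-score of 0.4.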

4.3. Experiment Settings

The extension parameter represents the number of frames extended after the offset frame. The same holds for the value calculated from the frames per second of the datasets. In the experiment settings where the MobileNet V3 backbone is used, the classification layers are not dropped and are followed by a Random Forest classifier. The Adam optimiser is used without weight decay, and the initial learning rate is decayed by a factor of 0.5 at every epoch. Training used Leave-One-Subject-Out (LOSO) cross-validation with 20 epochs per fold, and early stopping was applied when validation accuracy did not improve for four epochs. The post-processing parameters, such as plot smoothing, the peak detection threshold, and the pair-and-merge parameters, were selected by grid search rather than set manually. Since LOSO cross-validation is used, the grid search was performed per subject instead of per scene to reduce bias toward each test set.
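The training protocol can be outlined with a few plain Python helpers. This is only a sketch of the bookkeeping around LOSO cross-validation, the per-epoch learning rate decay, and early stopping; the function names are hypothetical, `initial_lr` is left as a parameter because its value is not reproduced here, and the actual model training is omitted.

```python
def loso_splits(samples):
    """Yield (held_out_subject, train_items, test_items) for each subject.

    samples: list of (subject_id, item) pairs.
    """
    subjects = sorted({subject for subject, _ in samples})
    for held_out in subjects:
        train = [item for subject, item in samples if subject != held_out]
        test = [item for subject, item in samples if subject == held_out]
        yield held_out, train, test


def learning_rate(initial_lr, epoch, decay=0.5):
    """Learning rate after `epoch` epochs with a decay factor applied per epoch."""
    return initial_lr * decay ** epoch


def should_stop(val_accuracies, patience=4):
    """True if validation accuracy has not improved in the last `patience` epochs."""
    if len(val_accuracies) <= patience:
        return False
    best_before = max(val_accuracies[:-patience])
    return max(val_accuracies[-patience:]) <= best_before


toy_samples = [("s1", "a"), ("s1", "b"), ("s2", "c"), ("s3", "d")]
splits = list(loso_splits(toy_samples))
```

Each fold holds out every scene of one subject, which is why the post-processing parameters are tuned per subject rather than per scene.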

For hyperparameter tuning, a grid search was used to find the optimum hyperparameters for the post-processing stage. The search was run for each held-out subject, and because each subject had multiple scenes, the hyperparameters were tuned to obtain the best accuracy over all the scenes of that subject. The parameter P controls the Moilanen threshold, which defines which peaks are significant and is set based on the mean and maximum values of the filtered signal. The win and order parameters specify the window size and polynomial order of the Savitzky–Golay filter used to smooth the data. The tiny parameter adjusts the width of the region around each detected peak, while the sigma parameter is used in the Soft Non-Maximum Suppression (soft-NMS) step to regulate the penalty for overlapping peaks. Together, these parameters keep the peak detection process flexible across different signal properties and peak density conditions.
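The smoothing and thresholding steps can be sketched with SciPy as follows. This is a minimal illustration, not the pipeline used in the experiments: the function name `detect_peaks`, the default values, and the `min_distance` argument are assumptions, the threshold follows the commonly used form mean + p × (max − mean), and the tiny and sigma (soft-NMS) parameters are not covered.

```python
import numpy as np
from scipy.signal import find_peaks, savgol_filter


def detect_peaks(scores, p=0.5, win=7, order=2, min_distance=1):
    """Smooth a per-frame score signal and keep peaks above a Moilanen-style threshold."""
    scores = np.asarray(scores, dtype=float)
    # Savitzky-Golay smoothing; win must be odd and larger than order.
    smoothed = savgol_filter(scores, window_length=win, polyorder=order)
    # Threshold placed between the mean and the maximum of the filtered signal.
    threshold = smoothed.mean() + p * (smoothed.max() - smoothed.mean())
    peaks, _ = find_peaks(smoothed, height=threshold, distance=min_distance)
    return peaks, smoothed, threshold


# Toy signal: a strong bump at frame 20 and a weak bump at frame 45.
frame_idx = np.arange(60)
toy_scores = (np.exp(-((frame_idx - 20) ** 2) / 18.0)
              + 0.2 * np.exp(-((frame_idx - 45) ** 2) / 18.0))
peaks, smoothed, threshold = detect_peaks(toy_scores, p=0.5, win=7, order=2)
```

In the toy signal only the strong bump exceeds the threshold, so the weak bump at frame 45 is discarded; lowering p would admit weaker peaks, which is the trade-off the grid search explores.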

5. Results and Discussion

5.1. Experiment Results

The proposed method, LGNMNet-RF, shows competitive performance against baseline methods on the CAS(ME)2 and SAMM-LV datasets, as shown in Table 2. Detection performance is reported as F1-scores, where a higher F1-score indicates a more accurate model. The compared methods use input features such as Main Directional Maximal Difference Analysis (MDMD), Optical Flow (OF), Optical Strain (OS), and Local Contrast Normalization (LCN). LGNMNet-RF outperformed most of the state-of-the-art (SOTA) methods in terms of overall F1-scores, achieving 0.3594 on CAS(ME)2 and 0.4118 on SAMM-LV. Its performance in ME detection is particularly noteworthy, with F1-scores of 0.3019 on CAS(ME)2 and 0.3604 on SAMM-LV, which are the highest among the compared methods, especially those that used optical flow and optical strain input features. This highlights the effectiveness of the LGNMNet-RF approach in handling complex ME detection tasks. The MaE detection F1-score for CAS(ME)2 is slightly lower but remains competitive with SOTA methods such as [3], whereas the MaE F1-score for SAMM-LV was the highest. One possible reason for the lower MaE detection F1-score on CAS(ME)2 is that the k parameter used to produce the MHI for the MaE videos did not have the optimum value. Another is that MaEs last longer in CAS(ME)2 than in SAMM-LV, so a larger k value is required to generate the MHI, and the intensity accumulated over time includes more head movement noise, which can reduce the accuracy of MaE detection. In contrast, the shorter duration of MEs introduces less noise, and the temporal features of accumulated intensity are exploited well. As a result, our method shows stronger ME detection performance than MaE detection performance.

Table 2.

F1-scores ↑ comparison of the proposed method and baseline methods.

5.2. Ablation Study

An experiment that studies the labelling, machine learning classifier, and network architecture’s backbone was conducted to produce a comprehensive analysis of the proposed method. The objective was to analyse the contributions of various components to the proposed model and their influence.

5.2.1. Labelling

The ablation study compared the original IML-based pseudo-labelling with IML-based pseudo-labelling with frame extensions. The effects of the pseudo-labelling were investigated by increasing the frame-extension parameter linearly according to the k parameter, that is, the average number of frames for MEs or MaEs in the particular dataset. The main purpose was to analyse the effects of pseudo-labelling and the model’s ability to learn from the MHI data provided.

Table 3 and Table 4 show the results of the ablation study, comparing the Softmax classifier with the Random Forest classifier and the effect of frame-extension pseudo-labelling. Optical flow input features were also evaluated with our architecture for comparison with our MHI input features. Unlike MHI, optical flow images do not have temporal features that require pseudo-labelling with temporal extensions, so optical flow features were tested using the Softmax and Random Forest classifiers without any frame extension labelling.

Table 3.

LGNMNet-RF ME F1-scores ↑ with k = 6 and MaE F1-scores with k = 18 on CAS(ME)2.

Table 4.

LGNMNet-RF ME F1-scores ↑ of SAMM-LV with k = 37.

Table 3 shows the ME and MaE detection results for the CAS(ME)2 dataset. In the ME detection experiment with k = 6, the performance of the MHI method varied considerably with the temporal extension setting. Both classifiers performed poorly without extension labelling, but with temporal extensions the Random Forest classifier achieved the best F1-score of 0.3019, whereas the Softmax classifier achieved 0.1880. This suggests that combining MHI with temporal extensions and Random Forest classification is the most effective approach in this comparative study. Moreover, optical flow input features trained with the Softmax and Random Forest classifiers obtained F1-scores of 0.1529 and 0.2179, respectively, both lower than the 0.3019 obtained with the proposed MHI features.

In the MaE detection experiment with k = 18 on the CAS(ME)2 dataset, the LGNMNet-RF architecture with MHI input features obtained its highest F1-score of 0.3400 at s, which was still lower than the highest F1-score of 0.4242 at s obtained by LGNMNet without the Random Forest classifier. A possible reason is that the MHI features may not capture the larger facial intensity in MaEs. Optical flow input features obtained similar results with both classifiers, with F1-scores of 0.2249 for Softmax and 0.2215 for Random Forest, but both were still lower than the results of the MHI features.

Next, Table 4 shows the results of the ME and MaE detection experiments on the SAMM-LV dataset. In the ME detection experiment with k = 37, the best result for the MHI input feature was an F1-score of 0.3604 at s. MHI with the LGNMNet architecture and Softmax obtained lower F1-scores. The optical flow input features with the Random Forest classifier achieved a slightly higher F1-score of 0.3842, which is 0.0238 higher than the best MHI result. This may be because the k parameter used to generate the MHI was not the optimum value for the SAMM-LV dataset.

Lastly, in the MaE detection experiment with k = 174 on the SAMM-LV dataset, the MHI input feature with LGNMNet and with LGNMNet-RF obtained similar results, but LGNMNet-RF had the highest F1-score of 0.4444 at s. The optical flow input features with the Random Forest classifier again scored slightly higher than the MHI input features, with an F1-score of 0.4778.

Optical flow input features obtained slightly better results for ME and MaE detection on the SAMM-LV dataset but low F1-scores on the CAS(ME)2 dataset. Conversely, although the MHI input features obtained slightly lower F1-scores than the optical flow features on SAMM-LV, they obtained the higher F1-scores on CAS(ME)2.
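
Because the comparison above depends on the k parameter used to generate each MHI, a minimal sketch of a motion history image may help show what k controls. It follows the classic temporal-template update of Bobick and Davis [5] and assumes that k plays the role of the history duration. The frame-difference `threshold`, the function name, and the list-of-lists frame format are assumptions for illustration, and the preprocessing used in this study is not reproduced.

```python
def motion_history_image(frames, k, threshold=15):
    """Build an MHI from grayscale frames given as 2D lists of ints.

    A pixel whose intensity changes by at least `threshold` between two
    successive frames is set to k; otherwise its value decays by 1 per frame
    (never below 0). Recent motion is therefore bright, older motion fades,
    and motion older than k frames disappears.
    """
    height, width = len(frames[0]), len(frames[0][0])
    mhi = [[0] * width for _ in range(height)]
    for prev, curr in zip(frames, frames[1:]):
        for y in range(height):
            for x in range(width):
                if abs(curr[y][x] - prev[y][x]) >= threshold:
                    mhi[y][x] = k                      # fresh motion
                else:
                    mhi[y][x] = max(0, mhi[y][x] - 1)  # fade older motion
    return mhi


if __name__ == "__main__":
    # Three 1x3 frames: the left pixel moves first, the middle pixel later.
    frames = [[[0, 0, 0]], [[100, 0, 0]], [[100, 100, 0]]]
    print(motion_history_image(frames, k=3))  # [[2, 3, 0]]
```

With a small k, only the most recent motion survives in the image; with a large k, motion from many earlier frames is retained, which is one reason a single manually chosen value may not suit every dataset.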

5.2.2. Machine Learning Classifiers

A Random Forest classifier is used in the proposed method. For comparison, other classifiers were also evaluated with the proposed network architecture: basic fully connected layers with Softmax activation, a Support Vector Classifier (SVC) [30], Linear Regression [31], and k-Nearest Neighbors (k-NN) [32].

Table 5 presents the experimental findings for the different classifiers using the MHI input feature and the LGNMNet architecture with a MobileNet V3 backbone network. The Random Forest classifier obtained the highest F1-score of 0.3019, followed by SVC with an F1-score of 0.2542. The lowest F1-score, 0.1374, was obtained with the Softmax activation function as the classifier, and the second lowest with the Linear Regression classifier. Since every machine learning classifier outperformed Softmax, these results suggest that machine learning classifiers can improve detection accuracy compared to conventional fully connected layers with the Softmax activation function.

Table 5.

F1-scores ↑ of ME detection with different input features and classifiers of CAS(ME)2.

5.2.3. Network Architecture’s Backbone

Multiple network models were tested as the backbone of the proposed network, with the rest of the architecture unchanged, to evaluate the effectiveness of the proposed backbone. The tested models were lightweight networks with ImageNet pre-trained weights: ShuffleNet V2 [33], MobileNet V2 [34], ConvNeXt [35], MaxViT [36], and MobileNet V3 [37].

Table 6 shows the experimental results for the LGNMNet-RF architecture with different backbones when the Random Forest classifier is replaced with the Softmax activation function. The purpose of this experiment was to study the impact of different backbones on the detection results without the aid of machine learning classifiers. The MobileNet V3 network obtained the highest F1-score of 0.2333, compared to 0.1500 for MobileNet V2. Since MobileNet V3 builds on MobileNet V2 with more optimised convolution blocks, MobileNet V3 can extract MHI features better than MobileNet V2.

Table 6.

F1-scores ↑ of ME detection with different backbones for CAS(ME)2 with MHI as input feature.

6. Conclusions

This article proposed LGNMNet-RF, a deep learning approach based on MHI features, to detect MEs and MaEs in long videos. The best results of the proposed method were achieved using the temporal features of MHI. In addition, the experimental results showed that, in most of the ME-only and MaE-only detection settings, the Random Forest classifier improved accuracy over the conventional Softmax classifier. The detection F1-score for the CAS(ME)2 dataset was 0.3019 for ME and 0.3400 for MaE, with an overall F1-score of 0.3594. The detection F1-score for the SAMM-LV dataset was 0.3604 for ME and 0.4444 for MaE, with an overall F1-score of 0.4118. The F1-score for ME surpasses those of related works and shows that MHI features with pseudo-labelling significantly improve detection accuracy.

Because the k parameter was set manually, the fixed k length chosen for ME intervals may not have been the best choice for each dataset. In the future, a more optimal k parameter could be investigated in an in-depth study. The predicted true positive (TP) and false positive (FP) intervals could also be analysed to examine how reasonable the FP intervals are and how close they lie to the ground truth (GT) intervals. In particular, near-miss intervals can indicate whether a false positive is reasonable even when the IoU criterion is not fulfilled. This study uses three input features: MHI, optical flow, and optical flow with optical strain. The same backbone architecture could be used to analyse the TP intervals of each feature and determine whether they coincide or differ across input features. Following this analysis, a late fusion approach with a two- or three-stream architecture could be proposed. An early fusion method could also be tested to determine whether it increases the accuracy of the detection task.
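
The near-miss analysis outlined above could be sketched as follows. This is an illustrative sketch, not the evaluation code of this study: the IoU threshold of 0.5, the inclusive (onset, offset) frame convention, the function names, and the "near-miss FP" label are assumptions. Each prediction is scored against its best-overlapping GT interval only; a full evaluation would also enforce one-to-one matching between predictions and GT intervals.

```python
def interval_iou(a, b):
    """IoU of two inclusive frame intervals given as (onset, offset)."""
    inter = min(a[1], b[1]) - max(a[0], b[0]) + 1
    if inter <= 0:
        return 0.0
    union = (a[1] - a[0] + 1) + (b[1] - b[0] + 1) - inter
    return inter / union


def classify_predictions(predicted, ground_truth, iou_threshold=0.5):
    """Label each predicted interval as 'TP', 'near-miss FP', or 'FP'.

    A prediction whose best IoU reaches the threshold is a TP. One that
    overlaps a GT interval without reaching the threshold is flagged as a
    near miss; one with no overlap at all is a plain FP.
    """
    results = []
    for pred in predicted:
        best = max((interval_iou(pred, gt) for gt in ground_truth), default=0.0)
        if best >= iou_threshold:
            label = "TP"
        elif best > 0.0:
            label = "near-miss FP"
        else:
            label = "FP"
        results.append((pred, best, label))
    return results


if __name__ == "__main__":
    predicted = [(10, 20), (30, 45), (100, 110)]
    ground_truth = [(12, 22), (40, 60)]
    for pred, iou, label in classify_predictions(predicted, ground_truth):
        print(pred, f"IoU={iou:.3f}", label)
```

Running the same function on the predictions obtained from each input feature would give a simple way to compare which GT intervals each feature recovers and which it only nearly recovers.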

While computation time is an important factor in practical applications, the primary focus of this research was on improving the accuracy of micro-expression detection. Future studies will focus on optimising computation time to make the method more applicable in practical settings. Additionally, while our method performs well when faces are directly facing the camera, under conditions similar to those of the training dataset, its robustness in varied scenarios, such as different environments and facial orientations, remains an area for future study. One of the main challenges in extending this work lies in the difficulty of collecting micro-expression datasets under diverse conditions, given the subtle and brief nature of these expressions, which require precise and controlled environments for accurate labelling. To ensure a fair comparison with existing methods, this study focused on widely used benchmark datasets, a common practice in the research community. In future work, expanding datasets and testing the method under more diverse scenarios will be crucial to further enhancing the generalisability and applicability of the proposed approach.

Author Contributions

Conceptualization, M.K.K.T. and T.S.; methodology, M.K.K.T. and T.S.; software, M.K.K.T.; validation, M.K.K.T.; formal analysis, M.K.K.T. and T.S.; investigation, M.K.K.T. and T.S.; resources, M.K.K.T.; data curation, M.K.K.T.; writing—original draft preparation, M.K.K.T.; writing—review and editing, M.K.K.T., H.Z. and T.S.; visualization, M.K.K.T.; supervision, H.Z. and T.S.; project administration, T.S.; funding acquisition, T.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the JSPS KAKENHI Grant Number 23H03787.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this article come from [20,21].

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Tzeplaeff, L.; Wilfling, S.; Requardt, M.V.; Herdick, M. Current state and future directions in the therapy of ALS. Cells 2023, 12, 1523.

2. Yap, C.H.; Yap, M.H.; Davison, A.; Kendrick, C.; Li, J.; Wang, S.J.; Cunningham, R. 3D-CNN for facial micro-and macro-expression spotting on long video sequences using temporal oriented reference frame. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 7016–7020.

3. Liong, G.B.; Liong, S.T.; See, J.; Chan, C.S. MTSN: A Multi-Temporal Stream Network for Spotting Facial Macro- and Micro-Expression with Hard and Soft Pseudo-labels. In Proceedings of the 2nd Workshop on Facial Micro-Expression: Advanced Techniques for Multi-Modal Facial Expression Analysis, Lisboa, Portugal, 14 October 2022; pp. 3–10.

4. Leng, W.; Zhao, S.; Zhang, Y.; Liu, S.; Mao, X.; Wang, H.; Xu, T.; Chen, E. ABPN: Apex and Boundary Perception Network for Micro- and Macro-Expression Spotting. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 7160–7164.

5. Bobick, A.F.; Davis, J.W. The recognition of human movement using temporal templates. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 257–267.

6. Gu, Q.L.; Yang, S.; Yu, T. Lite general network and MagFace CNN for micro-expression spotting in long videos. Multimed. Syst. 2023, 29, 3521–3530.

7. Meng, Q.; Zhao, S.; Huang, Z.; Zhou, F. Magface: A universal representation for face recognition and quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14225–14234.

8. Shreve, M.; Brizzi, J.; Fefilatyev, S.; Luguev, T.; Goldgof, D.; Sarkar, S. Automatic expression spotting in videos. Image Vis. Comput. 2014, 32, 476–486.

9. Moilanen, A.; Zhao, G.; Pietikäinen, M. Spotting rapid facial movements from videos using appearance-based feature difference analysis. In Proceedings of the 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 1722–1727.

10. Davison, A.K.; Yap, M.H.; Lansley, C. Micro-Facial Movement Detection Using Individualised Baselines and Histogram-Based Descriptors. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015.

11. Esmaeili, V.; Shahdi, S.O. Automatic micro-expression apex spotting using Cubic-LBP. Multimed. Tools Appl. 2020, 79, 20221–20239.

12. Zhang, L.W.; Li, J.; Wang, S.J.; Duan, X.H.; Yan, W.J.; Xie, H.Y.; Huang, S.C. Spatio-temporal fusion for Macro- and Micro-expression Spotting in Long Video Sequences. In Proceedings of the 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 245–252.

13. Borza, D.; Itu, R.; Danescu, R. Real-time micro-expression detection from high speed cameras. In Proceedings of the 2017 13th IEEE International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 7–9 September 2017; pp. 357–361.

14. Wang, S.J.; Wu, S.; Qian, X.; Li, J.; Fu, X. A main directional maximal difference analysis for spotting facial movements from long-term videos. Neurocomputing 2017, 230, 382–389.

15. Yin, S.; Wu, S.; Xu, T.; Liu, S.; Zhao, S.; Chen, E. AU-aware graph convolutional network for Macro- and Micro-expression spotting. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; pp. 228–233.

16. Xie, Z.; Cheng, S. Micro-Expression Spotting Based on a Short-Duration Prior and Multi-Stage Feature Extraction. Electronics 2023, 12, 434.

17. He, E.; Chen, Q.; Zhong, Q. SL-Swin: A Transformer-Based Deep Learning Approach for Macro- and Micro-Expression Spotting on Small-Size Expression Datasets. Electronics 2023, 12, 2656.

18. Deng, J.; Guo, J.; Ververas, E.; Kotsia, I.; Zafeiriou, S. Retinaface: Single-shot multi-level face localisation in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5203–5212.

19. Winnemöller, H.; Kyprianidis, J.E.; Olsen, S.C. XDoG: An eXtended difference-of-Gaussians compendium including advanced image stylization. Comput. Graph. 2012, 36, 740–753.

20. Qu, F.; Wang, S.J.; Yan, W.J.; Fu, X. CAS(ME)2: A Database of Spontaneous Macro-expressions and Micro-expressions. In Human-Computer Interaction; Springer: Cham, Switzerland, 2016; pp. 48–59.

21. Yap, C.H.; Kendrick, C.; Yap, M.H. SAMM Long Videos: A Spontaneous Facial Micro- and Macro-Expressions Dataset. In Proceedings of the 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 48–59.

22. Yan, W.J.; Wu, Q.; Liu, Y.J.; Wang, S.J.; Fu, X. CASME database: A dataset of spontaneous micro-expressions collected from neutralised faces. In Proceedings of the IEEE International Conference on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013; pp. 1–7.

23. Yan, W.J.; Li, X.; Wang, S.J.; Zhao, G.; Liu, Y.J.; Chen, Y.H.; Fu, X. CASME II: An improved spontaneous micro-expression database and the baseline evaluation. PLoS ONE 2014, 9, e86041.

24. Tran, T.K.; Vo, Q.N.; Hong, X.; Li, X.; Zhao, G. Micro-expression spotting: A new benchmark. Neurocomputing 2021, 443, 356–368.

25. Davison, A.K.; Lansley, C.; Costen, N.; Tan, K.; Yap, M.H. SAMM: A spontaneous micro-facial movement dataset. IEEE Trans. Affect. Comput. 2016, 9, 116–129.

26. He, Y.; Wang, S.J.; Li, J.; Yap, M.H. Spotting Macro-and Micro-expression Intervals in Long Video Sequences. In Proceedings of the 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 742–748.

27. Jingting, L.; Wang, S.J.; Yap, M.H.; See, J.; Hong, X.; Li, X. MEGC2020-the third facial micro-expression grand challenge. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 777–780.

28. Pan, H.; Xie, L.; Wang, Z. Local Bilinear Convolutional Neural Network for Spotting Macro- and Micro-expression Intervals in Long Video Sequences. In Proceedings of the 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 343–347.

29. Liong, G.B.; See, J.; Wong, L.K. Shallow optical flow three-stream CNN for macro-and micro-expression spotting from long videos. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 2643–2647.

30. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

31. Tikhonov, A.N. On the solution of ill-posed problems and the method of regularization. Dokl. Akad. Nauk SSSR 1963, 151, 501–504.

32. Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

33. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European conference on computer vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

34. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

35. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

36. Tu, Z.; Talebi, H.; Zhang, H.; Yang, F.; Milanfar, P.; Bovik, A.; Li, Y. MaxViT: Multi-axis vision transformer. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2022; pp. 459–479.

37. Koonce, B. MobileNetV3. In Convolutional Neural Networks with Swift for Tensorflow: Image Recognition and Dataset Categorization; Apress: Berkeley, CA, USA, 2021; pp. 125–144.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).