1. Introduction

A significant issue in Numerical Functional Analysis is problem-solving in science and engineering using mathematical modeling techniques. A specific problem can be modeled, under certain conditions, as a nonlinear equation in a Banach space.

Let $X$ and $Y$ be Banach spaces, $D$ an open convex subset of $X$, and $F: D \subseteq X \to Y$ a nonlinear operator. The primary goal of an iterative method is to approximate, as closely as possible, a solution of the equation $F(x) = 0$. However, it is crucial to ensure that the selected iterative method does converge to this solution. To achieve both convergence and accurate results for the scheme, we study the conditions that must be satisfied by the solution $x^*$, the initial value $x_0$, or the operator $F$ within the framework of the iterative method.

In Banach spaces, a convergence study can be approached from two perspectives: considering the neighborhood of the solution (referred to as local convergence analysis) or focusing on the initial estimates and the domain (known as semilocal convergence analysis). When we analyze local convergence (see [1,2]), conditions are imposed on the operators and their respective derivatives at the solution $x^*$. As a consequence, we obtain the local convergence ball, centered at the solution and with radius $r$: $B(x^*, r)$. The elements contained within this ball are admissible initial estimates: starting from any of them, the sequence of iterates converges to the solution of the problem. Additionally, an error bound is established.

Local convergence analysis plays a significant role in understanding the behavior, efficiency, and reliability of an iterative method. This analysis provides detailed information about how fast an iterative method converges to a solution given an initial estimate. Moreover, through local convergence analysis we are able to identify the conditions under which an iterative method will converge to a solution, concerning initial estimate proximity and smoothness conditions. This analysis often provides error bounds, showing how the error decreases in each iteration, which helps in determining how accurate the current approximation is. In summary, local convergence analysis helps in choosing the right method, refining existing methods, and understanding their behavior under realistic conditions.

Local convergence analysis is essential in science and engineering for guaranteeing the accuracy, efficiency, stability, and adaptability of iterative methods used to solve complex real-world problems. Whether optimizing designs, conducting scientific simulations, solving nonlinear equations, or managing large-scale data, understanding the local convergence properties of an iterative scheme is necessary to achieve reliable and efficient solutions. For example, in Computational Fluid Dynamics (CFD), where simulations of airflow around objects may involve a large number of variables, fast local convergence can save considerable computational cost and make the process feasible. In molecular dynamics, iterative methods are used to study complex systems, and the efficiency gained through a local convergence analysis can allow more detailed and extensive simulations. In mechanical engineering, the analysis of material deformations often requires iterative methods, and local convergence analysis helps ensure that these methods can handle the complexities of the system. In physics and chemistry, where iterative methods are used to solve quantum mechanical equations, local convergence analysis helps ensure that accurate energy levels and wave functions are obtained. Computer-Assisted Diagnostic (CAD) systems rely heavily on computational models and algorithms. These models often use iterative methods to solve complex mathematical problems (such as nonlinear equations, optimization tasks, and machine learning model training), and local convergence analysis is essential in ensuring the accuracy, efficiency, robustness, and adaptability of the iterative methods used in such systems.

Several studies [2,3] have explored the local convergence of some iterative methods under various continuity conditions. For instance, Argyros in [4], for the modified Newton’s method for multiple zeros, provides an estimate for the convergence radius, assuming the function meets Hölder and center-Hölder continuity conditions. This result is further refined by Zhou et al. in [5]. Bi et al. in [6] studied third-order methods and established local convergence using divided differences and their properties. Behl et al. (see [7]) used Taylor expansions to establish a new set of bounds in order to ensure convergence. Zhou and Song (see [8]) also employed Taylor expansions to analyze the local convergence of Osada’s method, but assuming that the derivative satisfies the center-Hölder continuity condition. In addition, Singh et al. [9] examined, for a fifth-order iterative scheme, both the local and semilocal convergence under Hölder continuity conditions.

In the semilocal convergence analysis, conditions are imposed on the operators and their respective derivatives at $x_0$ (the initial estimate), and iterations are then carried out. This leads to results such as the existence of the solution, its uniqueness, a priori error bounds, the R-order of convergence of the scheme, and the determination of the convergence region where the operator $F$ is defined. In this work, we will focus on local convergence analysis. Some elements of this study are shown in [10].

This paper presents an iterative method along with its local convergence analysis for solving the nonlinear equation $F(x) = 0$, which has a solution $x^*$. Consider the (fourth-order) family of iterative schemes studied in [11], defined for $k = 0, 1, 2, \ldots$ by:

where the family depends on a parameter and $x_0$ is the initial estimate.

In order to approximate the solution of the nonlinear equation $F(x) = 0$, it is assumed that the derivative $F'$ exists in a neighborhood of the solution and that $F'(x^*)^{-1} \in \mathcal{L}(Y, X)$, where $\mathcal{L}(Y, X)$ is the set of bounded linear operators mapping from $Y$ to $X$.

In the following sections, this paper addresses these topics: a local convergence analysis is presented in Section 2. In Section 3, we undertake a computational efficiency study of the new method and compare it with other existing schemes. Section 4 includes several numerical examples in order to validate the theoretical results. Concluding observations are summarized in Section 5.

2. Local Convergence Analysis

In this section, we provide the local convergence analysis for scheme (1). Let $\bar{B}(x^*, \rho)$ and $B(x^*, \rho)$ represent the closed and open balls, respectively, in the Banach space $X$, centered at $x^*$ (the solution) with radius $\rho > 0$. In order to develop a local convergence study, certain conditions are imposed on the operators $F$ and $F'$ at $x^*$, which is presumed to exist. Hence, we consider, for real numbers

and for all

, the conditions listed below:

- (C1)

- (C2)

- (C3)

- (C4)

Cordero et al., in [11], examined the scheme (1), applying complex dynamics techniques. However, they did not provide a theoretical analysis of local convergence, which we consider essential for the reasons previously outlined. In Section 2.1, we perform this study.

2.1. Local Convergence Analysis Using Conditions (C1), (C2) and (C3)

In order to perform the study on local convergence analysis of the scheme, it is appropriate to define specific parameters and functions. Define function

on the interval

by:

Now, consider the function

, then

and

. Consequently,

has at least one zero in

. Let

be the smallest zero in

; it follows that:

Moreover, define function

in the interval

by:

Consider the function

. Following that:

From (3), we have that

and

, and conclude that

Consequently,

has at least one zero in

. Let

r be the smallest zero in

, we have:

Thus, for ,

Let us consider the following lemma.

Lemma 1. If operator satisfies (C2) and (C3), then the inequalities listed below are verified, for all and : The proof is obvious, considering the following remarks.

Proof of Lemma 1. Then, consequently:

and using (C2):

In addition,

and by using an adequate integral estimate, we have:

□

In Theorem 1, we present the local convergence result for scheme (1). Of the conditions (C1)–(C4), this theorem omits (C4), so the radius of the convergence ball is determined without using the constant $M$.
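The radius in this section is obtained as the smallest positive zero of an auxiliary function of the form $h(t) = g(t) - 1$. As a minimal sketch of how such a radius can be computed numerically, the code below applies bisection to the classical auxiliary function of Newton's method, $g(t) = Lt / (2(1 - L_0 t))$, whose zero is known in closed form, $r = 2/(2L_0 + L)$. This is not the auxiliary function of scheme (1). The test operator $F(x) = e^x - 1$ on $[-1, 1]$, with $x^* = 0$, $L_0 = e - 1$ and $L = e$, and all function names are assumptions made for illustration only.

```python
import math


def smallest_zero(h, a, b, tol=1e-14):
    """Bisection for the zero of h on [a, b].

    Assumes h(a) < 0 < h(b) and that h is increasing on [a, b],
    so the zero found is the smallest (and only) one in the interval.
    """
    assert h(a) < 0 < h(b)
    while b - a > tol:
        m = 0.5 * (a + b)
        if h(m) < 0:
            a = m
        else:
            b = m
    return 0.5 * (a + b)


# Illustrative data (assumption): F(x) = exp(x) - 1 on D = [-1, 1], x* = 0,
# center-Lipschitz constant L0 = e - 1 and Lipschitz constant L = e.
L0, L = math.e - 1.0, math.e


def g(t):
    # Auxiliary function of classical Newton's method, NOT of scheme (1).
    return L * t / (2.0 * (1.0 - L0 * t))


def h(t):
    return g(t) - 1.0


# h(0) = -1 < 0 and h(t) -> +infinity as t -> 1/L0, so a zero exists in (0, 1/L0).
r = smallest_zero(h, 0.0, (1.0 - 1e-9) / L0)


def newton(x, steps=8):
    """Classical Newton iteration for F(x) = exp(x) - 1."""
    for _ in range(steps):
        x = x - (math.exp(x) - 1.0) / math.exp(x)
    return x


# Start inside the ball of radius r centered at x* = 0.
x_final = newton(0.9 * r)
print(r, x_final)
```

Any initial point with $|x_0 - x^*| < r$ is covered by the classical Newton result in this illustration; the same bisection routine could be applied to the auxiliary functions of scheme (1) once they are written out.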

Theorem 1. Let $F: D \subseteq X \to Y$ be a Fréchet differentiable operator. Suppose that there exist and such that (C2) and (C3) are satisfied and , where r is the value obtained previously by using auxiliary functions and . Then the sequence generated by (1) for is well defined for , remains in and converges to . In addition, the following estimates hold : Furthermore, if there exists such that , then the limit point is the unique solution of equation in .

Proof of Theorem 1. Since

, taking (C2), for

we have:

Assuming that

. Thus:

Hence, by Banach Lemma on invertible operators,

exists and we have:

Therefore,

is well defined. In (1), for

, we have

:

Taking norms on both sides of the last expression and using (C3) and (6), we obtain:

where in the last inequality we have used Lemma 1 and the previously defined auxiliary function

. Therefore, taking (2) and (3):

Since

, using (C2) we get:

thus, by the Banach Lemma (see [12]) on invertible operators,

exists and:

Hence,

is well defined and we have:

Subtracting

on both sides of the equation:

By taking norms, from (5), (6), (8), and (9) we obtain:

Taking (7):

and using the auxiliary function

:

Then, using (10) and (4):

It follows that the theorem is verified for

. By using mathematical induction, we replace

with

and have:

where the functions

have been previously obtained. Using

, it is hereby concluded that

. Function

is increasing over its domain, and therefore:

By applying limits in (11), we get:

where

, and therefore,

. Subsequently, the method converges to the solution.

To prove uniqueness, suppose that there exists

with

. Define the operator

. Therefore, using (C2):

For the aforementioned reasons and Banach Lemma,

exists and:

Using the inverse operator

:

hence,

, and therefore,

. It is concluded that the root

is unique. □
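The proof above invokes the Banach Lemma on invertible operators several times: if a bounded linear operator $A$ satisfies $\|I - A\| < 1$, then $A^{-1}$ exists and $\|A^{-1}\| \le 1/(1 - \|I - A\|)$. The following sketch checks this bound numerically in the simplest finite-dimensional setting. The $2 \times 2$ matrix, the choice of the infinity norm, and the helper names are assumptions made for illustration; they are not taken from the paper.

```python
def mat_inf_norm(A):
    """Infinity norm of a matrix given as a list of rows (maximum absolute row sum)."""
    return max(sum(abs(v) for v in row) for row in A)


def inv2(A):
    """Inverse of a 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = A
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


# Hypothetical operator close to the identity.
A = [[1.0, 0.2], [-0.1, 0.9]]

# I - A, entry by entry.
I_minus_A = [[1.0 - A[0][0], -A[0][1]], [-A[1][0], 1.0 - A[1][1]]]

q = mat_inf_norm(I_minus_A)       # must be < 1 for the lemma to apply
bound = 1.0 / (1.0 - q)           # Banach Lemma bound on the norm of the inverse
inv_norm = mat_inf_norm(inv2(A))  # actual norm of the inverse

print(q, inv_norm, bound)
```

In the proof, the role of $A$ is played by operators such as the derivative at an iterate, premultiplied by the inverse of the derivative at the solution, and condition (C2) is what makes $\|I - A\| < 1$ inside the convergence ball.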

2.2. Local Convergence Analysis Using Condition (C4)

In the preceding section, we did not use condition (C4), which can be a drawback when dealing with practical problems. In this section, condition (C4) is used, which enables a comparison between the radii of local convergence obtained with the bound $M$ and those obtained without it. This completes the local convergence analysis.

Proceeding with the notation already established in (C1)–(C4), we now define new auxiliary functions that depend on $M$. For the study of local convergence of (1), the solution $x^*$ is assumed to exist and conditions are imposed on the operators $F$ and $F'$ at that solution. Therefore, we assume conditions (C1)–(C4) for all

and for real numbers

.

Let us define the following function on the interval

:

Now, consider the function

, then

for

and

. Consequently,

has at least one zero in

. Let

be the smallest zero in

, which implies that:

and

Moreover, define function

in the interval

by:

Consider the function

. Following that:

From (13), we have that

,

and

, and conclude that

Consequently,

has at least one zero in

. Let

be the smallest zero in

, we have:

We will now consider the following lemma.

Lemma 2. Provided that operator satisfies (C1) and (C4), then the inequalities listed below are verified, for all and : The proof of this lemma can be accomplished by using the definition of and C4.

Proof of Lemma 2. Notice that

. Then,

. Using (16) and (C4), we obtain

□

We will now present the local convergence result for scheme (1) under conditions (C1)–(C4).

Theorem 2. Let $F: D \subseteq X \to Y$ be a Fréchet differentiable operator, and . Let be such that conditions (C1)–(C4) are satisfied for all , and moreover, it is verified that and . Then, the sequence generated by (1) for is well defined for , remains in and converges to . In addition, the following estimates hold for :

where the functions are those defined before the statement of Theorem 2. Furthermore, if there exists such that , then the limit point is the unique solution of the equation in .

Proof of Theorem 2. The proof is omitted, as it is nearly identical to that of Theorem 1. In particular, uniqueness is proved as in Theorem 1. □

Next, we perform a comparative study of the Efficiency Index (EI) and the Computational Efficiency Index (CEI) between scheme (1) and the methods of Jaiswal [13], Choubey and Jaiswal [14], and Sharma et al. [15].

3. Efficiency Index (EI) and Computational Efficiency Index (CEI)

Sometimes, evaluating the nonlinear operator $F$ or its derivatives involves a high computational cost, rendering other operations carried out in the iterative process insignificant. An important measure of the efficiency of an algorithm is the Efficiency Index $EI$, introduced by Ostrowski in [16]. It is defined by:

$$EI = p^{1/d},$$

where $p$ stands for the order of convergence of the method and $d$ denotes the number of functional evaluations per iteration. It is important to remember that the number of functional evaluations of $F$ and $F'$ at each iteration is $N$ and $N^2$, respectively. Thus, for scheme (1), the number of functional evaluations per iteration is

. Given that the order of convergence of the method is $p = 4$, the efficiency index is:

However, the previous concept does not consider the cost of all operations involved in each iteration. The computational efficiency, which takes these costs into consideration, was introduced by Traub [17].
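Ostrowski's index, $EI = p^{1/d}$, can be evaluated directly once the number of scalar functional evaluations $d$ is known. The sketch below counts $N$ scalar evaluations for each evaluation of $F$ and $N^2$ for each evaluation of $F'$. The function names and the evaluation counts in the usage lines (two evaluations of $F$ and one of $F'$ per iteration) are hypothetical and are not claimed to be those of scheme (1).

```python
def functional_evaluations(N, n_F, n_dF):
    """Scalar functional evaluations per iteration.

    N    : size of the system
    n_F  : number of evaluations of F per iteration (N scalar evaluations each)
    n_dF : number of evaluations of F' per iteration (N^2 scalar evaluations each)
    """
    return n_F * N + n_dF * N**2


def efficiency_index(p, d):
    """Ostrowski's Efficiency Index EI = p^(1/d)."""
    return p ** (1.0 / d)


# Hypothetical fourth-order method with 2 evaluations of F and 1 of F' per iteration.
for N in (2, 5, 10):
    d = functional_evaluations(N, n_F=2, n_dF=1)
    print(N, d, efficiency_index(4, d))
```

Two methods with the same order and the same evaluation counts obtain the same $EI$, regardless of how many linear systems each one solves.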

In the N-dimensional case, several linear systems have to be solved in each iteration, and therefore it is important to consider the number of operations performed. Let us recall that the number of products/quotients (per iteration) in the direct solution of a linear system is:

$$\frac{1}{3}N^3 + N^2 - \frac{1}{3}N,$$

and the number of products/quotients (per iteration) in the direct solution of $M$ linear systems with the same coefficient matrix, using LU factorization, is:

$$\frac{1}{3}N^3 + M N^2 - \frac{1}{3}N,$$

where $N$ is the size of the linear systems. The cost increases by only $N^2$ operations for each additional linear system solved with the same coefficient matrix. Moreover, each matrix–vector product corresponds to $N^2$ operations.
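To illustrate why reusing a factorization matters, the following sketch compares the cost of solving $M$ systems independently with the cost of solving them with a shared LU factorization. It assumes the standard product/quotient counts $\frac{1}{3}N^3 + N^2 - \frac{1}{3}N$ for one system and $\frac{1}{3}N^3 + MN^2 - \frac{1}{3}N$ for $M$ systems with the same coefficient matrix; the function names and the sample values of $N$ and $M$ are illustrative assumptions.

```python
def products_one_system(N):
    """Products/quotients to solve one N x N linear system by a direct method
    (assumed count: N^3/3 + N^2 - N/3)."""
    return (N**3 - N) // 3 + N**2  # N^3 - N is always divisible by 3


def products_shared_lu(N, M):
    """Products/quotients to solve M systems that share one coefficient matrix,
    factorizing once with LU (assumed count: N^3/3 + M*N^2 - N/3)."""
    return (N**3 - N) // 3 + M * N**2


N, M = 50, 3
separate = M * products_one_system(N)  # factorize from scratch for every system
shared = products_shared_lu(N, M)      # factorize once, then M pairs of triangular solves
print(separate, shared, shared / separate)
```

Each extra right-hand side adds only $N^2$ products, so methods that reuse the same coefficient matrix within an iteration are cheaper than methods that need a new factorization for every system.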

Hence, Cordero et al. in [18] defined the Computational Efficiency Index $CEI$ as:

$$CEI = p^{1/(d + op)},$$

where $p$ denotes the order of convergence of the method, $d$ represents the number of functional evaluations per iteration, and $op$ stands for the number of products/quotients per iteration. However, functional evaluations and arithmetic operations are not directly comparable quantities, so adding $d$ to $op$ as it stands is not meaningful. For that reason, we apply to $d$ a correction factor, which transforms the number of evaluations into a number of operations, such that:

Therefore, for method (1), the number of products/quotients per iteration is:

and:

Consequently, the Computational Efficiency Index of the method is:

As previously mentioned, the efficiency indexes are also computed for other methods and compared with those of method (1) (hereinafter referred to as M1). The methods considered are that of Jaiswal (see [13]):

as well as the method proposed by Choubey and Jaiswal (see [14]), denoted as CHJ:

and that of Sharma et al. (see [15]):

The comparisons of the Computational Efficiency Indexes for the aforementioned methods are shown in Table 1. The notation

and

stands for the number of linear systems to be solved with the same coefficient matrix

as well as with other coefficient matrices, respectively. Moreover, each matrix–vector multiplication (M × V) requires $N^2$ products.

Since method (1) depends on parameter

, we consider

and

, values which eliminate terms of the iterative expression, reducing the cost of solving the linear systems and the added computational effort. Also, let us consider

. All schemes have the same number of functional evaluations of

and

per iteration, and hence

.

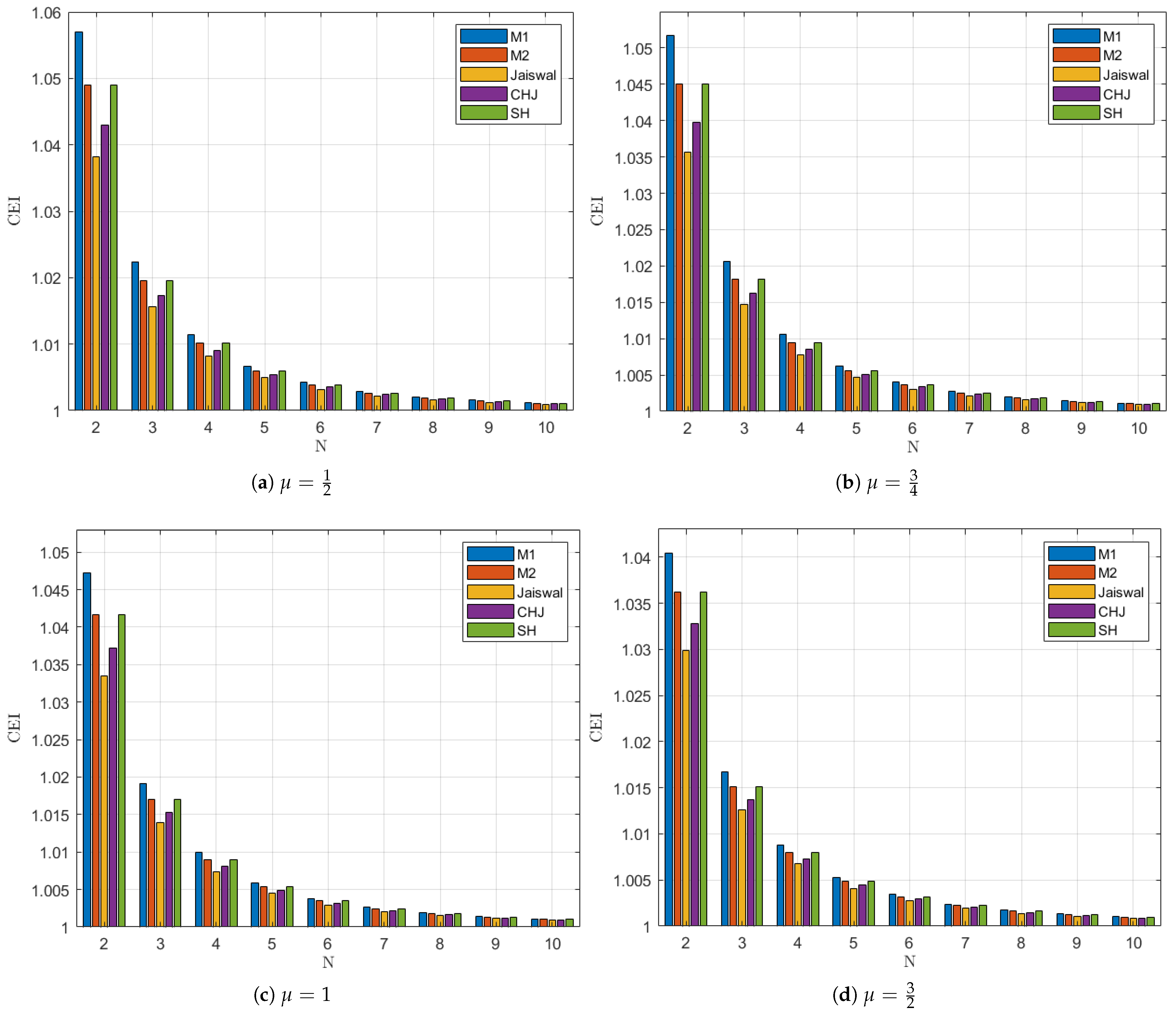

With the results obtained from Table 1, we represent in Figure 1 the performance of the efficiency index for the examined schemes.

Figure 1 shows that

for

has better behavior than all the other methods analyzed. Furthermore, Sharma and

for

are second in efficiency. It is also shown that, as the system size $N$ increases, the Computational Efficiency Index decreases for all schemes.
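The decrease of the index with the size of the system can be reproduced with a short computation. The sketch below assumes the form $CEI = p^{1/(\mu d + op)}$, where $\mu$ is used here as a hypothetical name for the correction factor applied to $d$, and the LU-based product count $\frac{1}{3}N^3 + MN^2 - \frac{1}{3}N$. The evaluation and linear-system counts in the usage lines are invented for illustration and do not correspond to any row of Table 1.

```python
def lu_products(N, n_sys):
    """Products/quotients for n_sys systems sharing one LU factorization (assumed count)."""
    return (N**3 - N) // 3 + n_sys * N**2


def cei(p, N, n_F, n_dF, n_sys, n_matvec=0, mu=1.0):
    """Computational Efficiency Index p^(1 / (mu*d + op)).

    mu       : correction factor converting evaluations into operations (hypothetical name)
    n_matvec : number of matrix-vector products per iteration (N^2 products each)
    """
    d = n_F * N + n_dF * N**2                           # scalar functional evaluations
    op = lu_products(N, n_sys) + n_matvec * N**2        # products/quotients
    return p ** (1.0 / (mu * d + op))


# Hypothetical fourth-order method: 2 evaluations of F, 1 of F',
# 2 linear systems with the same matrix, no matrix-vector products.
sizes = (2, 5, 10, 20)
values = [cei(4, N, n_F=2, n_dF=1, n_sys=2) for N in sizes]
for N, v in zip(sizes, values):
    print(N, v)
```

Since the exponent's denominator grows like $N^3/3$, the index tends to 1 as $N$ grows for any method of this type; what distinguishes the schemes is how fast it does so.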

In the next section, some examples are worked out in order to perform numerical tests.

4. Numerical Results

In this section, several numerical examples are provided to validate the efficiency of our analysis for local convergence (Examples 1–3).

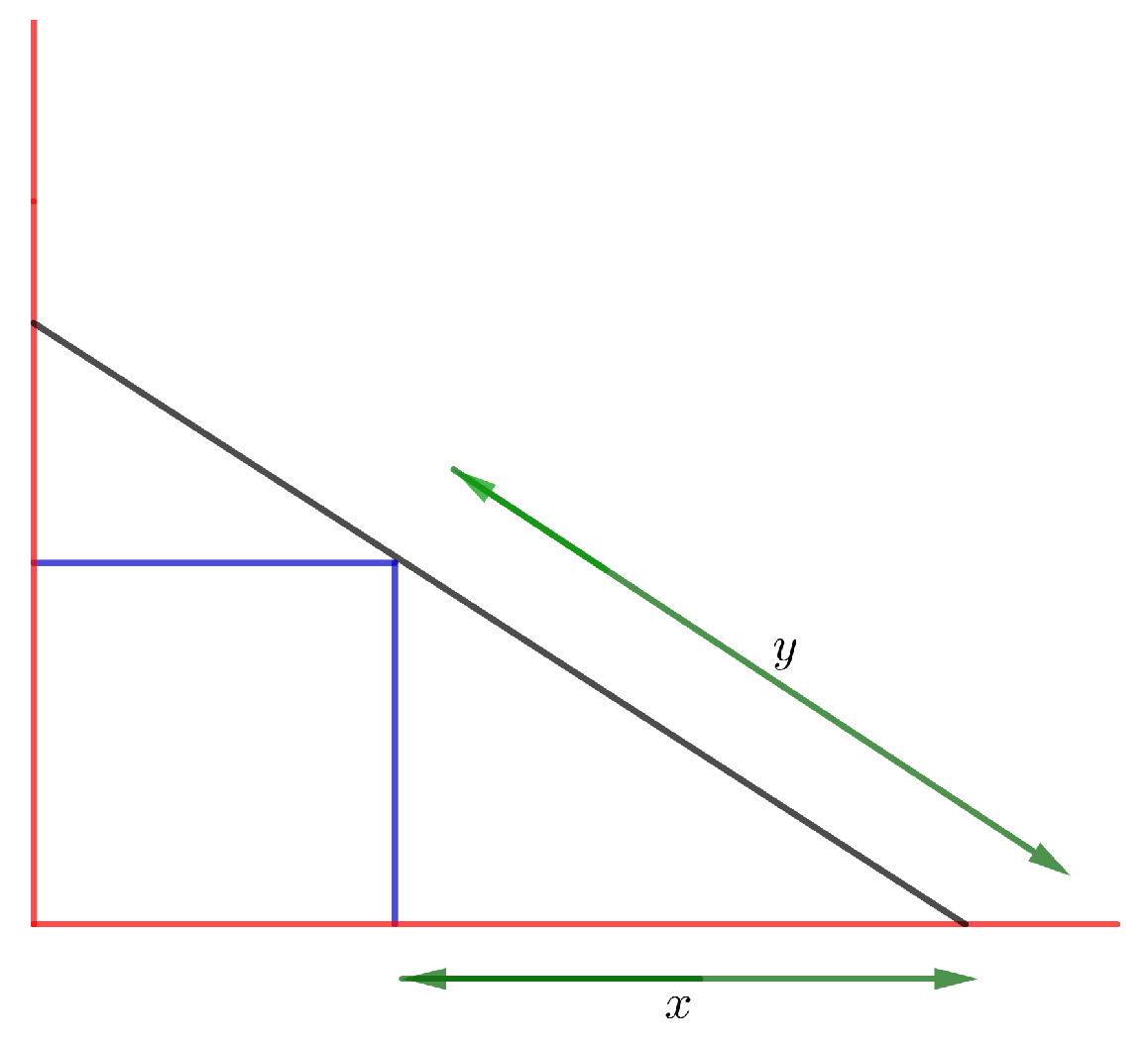

Example 1. The van der Waals equation of state (for a vapor) is (see [19,20]):

This equation can be rearranged to the form:

in V, where every constant possesses physical significance, with values that can be found in [19]. Let and . Choose kPa and K. Then, the solution V from the resulting equation is . Applying the conditions given in Theorems 1 and 2, we obtain , and . The radii are:

The uniqueness radius is:

Example 2 (Beam Design Problem). Here, we examine a beam positioning problem (see [21]), where a beam of length l units leans against the edge of a cubical box, with one end placed on the floor and the other end positioned against the wall. The box, with sides of 1 unit each, is positioned on the floor braced against the wall, as shown in Figure 2. The triangles formed by the floor, box and beam (lower) and by the box, wall, and beam (upper) are similar.

In this case, the measurement from the base of the wall to where the beam touches the floor is . Suppose y represents the length measured from the floor to the edge of the box along the length of the beam, and let x be the distance measured from the bottom of the box to the lower edge of the beam. Let and . Then, we have:

One positive solution of the equation is the zero , and the first derivative is . Applying the conditions given in Theorems 1 and 2, we obtain , and . The radii are:

The uniqueness radius is:

In Examples 3 and 4, we choose two members of the described iterative method and perform numerical tests to compare them with iterative schemes [13,14,15].

Numerical calculations were executed on a computer with 16 GB RAM and an Intel Core i7 processor using MATLAB R2024a, with variable-precision arithmetic and a 2000-digit mantissa. Since we are comparing schemes of high order of convergence, the error tolerance is set at , to reduce the risk of significant error accumulation and therefore ensure more accurate results.

For each method, the error estimate between two consecutive iterations is and the residual error is . The stopping criterion is set at . The number of iterations (iter) needed to converge to the solution is provided.

To validate the theoretical order of convergence p, the approximate computational order of convergence (ACOC) is computed. The execution time required to achieve convergence, denoted as e-time, is displayed (in seconds) as the average of 10 successive runs for each scheme.
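As an illustration of how the ACOC can be obtained from the iterates, the sketch below uses the usual definition, $\mathrm{ACOC} = \ln\left(\|x_{k+1} - x_k\| / \|x_k - x_{k-1}\|\right) / \ln\left(\|x_k - x_{k-1}\| / \|x_{k-1} - x_{k-2}\|\right)$, evaluated on the last four iterates. It is a scalar sketch in Python's `decimal` module with 200 significant digits, standing in for MATLAB's variable-precision arithmetic, and it uses Newton's method on the assumed test equation $x^2 - 2 = 0$ rather than any of the schemes compared in this section.

```python
from decimal import Decimal, getcontext

# Extended precision, so that differences of consecutive iterates remain meaningful.
getcontext().prec = 200


def acoc(xs):
    """Approximate computational order of convergence from the last four iterates."""
    d1 = abs(xs[-1] - xs[-2])
    d2 = abs(xs[-2] - xs[-3])
    d3 = abs(xs[-3] - xs[-4])
    return (d1 / d2).ln() / (d2 / d3).ln()


# Newton's method (order 2) on the assumed test equation x^2 - 2 = 0.
x = Decimal("1.5")
xs = [x]
for _ in range(6):
    x = x - (x * x - 2) / (2 * x)
    xs.append(x)

rho = acoc(xs)
print(float(rho))
```

In working precision the differences between consecutive iterates of a high-order method reach the rounding level after very few steps, which is why extended precision is used when the ACOC of such schemes is estimated.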

Example 3. Let us examine a system of differential equations that describe the motion of an object given by (see [22]):

with initial conditions . Let us assume . Let and . Define function on D for by:

The Fréchet derivative is given by:

Applying the conditions given in Theorems 1 and 2, we obtain and . The radii are:

The uniqueness radius is:

The results from Table 2 show that scheme

for

converges adequately to the solution, and so do CHJ and Jaiswal methods. Scheme

for

does not converge to the solution. Recall that, according to Theorem 1, the values of

for which we can guarantee convergence are those in

. The Sharma scheme does not converge to the solution either.

Example 4. Consider the system of equations of size , with the expression:

with . In a similar way to Example 3, the results from Table 3 show that schemes

for

, CHJ and Jaiswal converge to the solution. Schemes

for

and Sharma fail to converge. According to Theorem 1, the values of

for which we can guarantee convergence are those in

.

5. Conclusions

The current paper presents a local convergence study of a family of iterative schemes in Banach spaces, provided that the Fréchet derivative satisfies the Lipschitz continuity condition. The radii of the convergence balls have been obtained from the existence and uniqueness theorems. In the examples shown, scheme (1) gives smaller radii without using $M$.

All schemes have the same Ostrowski’s Efficiency Index, since this index focuses exclusively on the number of functional evaluations and the order of convergence. Scheme (1) has better behavior for

(in terms of Computational Efficiency) than all the other methods analyzed. Furthermore, Sharma and

for

are second in efficiency.

For the Computational Efficiency Index, a correction factor was introduced, which transforms the number of evaluations into a number of operations. The value of this parameter can be tested computationally to establish a relationship between evaluating a function and performing arithmetic operations. Nevertheless, the key is to use the same value as in other studies to ensure a proper comparison.

Future work in this line of research, based on these results, will focus on semilocal convergence analysis using majorizing sequences. Such a study extends the scope of local convergence: it not only establishes conditions under which an initial approximation leads to a solution, but also provides the domain of existence of that solution, and is therefore valid for proving the existence of a solution to a problem for which no prior information is known. This analysis is particularly valuable for demonstrating the existence of solutions and ensuring the reliability of iterative algorithms in functional analysis, optimization, and numerical applications.