Abstract

The Gromov-Wasserstein (GW) formalism can be seen as a generalization of the optimal transport (OT) formalism for comparing two distributions associated with different metric spaces. It is a quadratic optimization problem and solving it usually has computational costs that can rise sharply if the problem size exceeds a few hundred points. Recently fast techniques based on entropy regularization have being developed to solve an approximation of the GW problem quickly. There are issues, however, with the numerical convergence of those regularized approximations to the true GW solution. To circumvent those issues, we introduce a novel strategy to solve the discrete GW problem using methods taken from statistical physics. We build a temperature-dependent free energy function that reflects the GW problem’s constraints. To account for possible differences of scales between the two metric spaces, we introduce a scaling factor s in the definition of the energy. From the extremum of the free energy, we derive a mapping between the two probability measures that are being compared, as well as a distance between those measures. This distance is equal to the GW distance when the temperature goes to zero. The optimal scaling factor itself is obtained by minimizing the free energy with respect to s. We illustrate our approach on the problem of comparing shapes defined by unstructured triangulations of their surfaces. We use several synthetic and “real life” datasets. We demonstrate the accuracy and automaticity of our approach in non-rigid registration of shapes. We provide numerical evidence that there is a strong correlation between the GW distances computed from low-resolution, surface-based representations of proteins and the analogous distances computed from atomistic models of the same proteins.

1. Introduction

In 1776, Gaspard Monge presented an intriguing problem to the French Academy of Science [1]. Consider two domains and in the plane. (referred to as “déblai” by Monge) contains an excess of earth that needs to be transported to (“remblai” in Monge’s terminology). Assuming that the earth at a point in is transported to a position in , and that the masses associated with the point and image are equal, proportional to , the total “cost” C of the transportation is given by

where stands for the distance in the plane. Finding the minimum cost for moving the earth is then akin to finding this transport function F. Monge acknowledged in his presentation that he had not solved this problem on a practical level. This “allocation of ressources” problem became well known, however, reappearing in many disciplines and consequently has been the object of many studies. Erwin Schrödinger, for example, expressed it as the problem of finding how to evolve a probability distribution into another (see [2] for a review), the Schrödinger bridge problem. Kantorovich relaxed the Monge problem by allowing masses to split [3]. He also formulated a method, linear programming, for solving his relaxed version. All these problems are now referred to as the optimal transport problems, or OT. The OT problem is particularly intriguing since its solutions involve two crucial elements. It first specifies a distance between the measured spaces taken into account. This distance is known as the Monge-Kantorovich distance, the Wasserstein distance, or the earth mover’s distance, according to the field of applications. It also derives the optimal transport plan between the measured spaces, thereby defining a registration between the spaces. Consequently, applications of OT have exploded in the recent years (for in-depth reviews of OT and its uses, see [4,5]).

The Monge-Kantorovich OT problem can be formulated as follows. Let A and B be two subsets of a space M with a metric d, and let and be probability measures on A and B, respectively. Let C be a cost function . The objective is to find a coupling G on that minimizes a transportation cost U defined as

The minimum of is to be identified over the couplings G that satisfy the following constraints on their restrictions to the subsets A and B:

It was shown that this minimum exists and that it defines a distance between the two probability measures and that satisfies all metric properties [6].

One key condition for solving the optimal transport problem between two sets A and B is that those sets belong to the same metric space. This allows for the definition of a distance between any point of A and any point of B, and therefore of a cost function between the two sets. In practice, however, the two sets may not be in the same metric space, or, even if they do, the corresponding metric may not be practical. Consider for example two sets of points in ; while it is possible to compute the euclidean distance between any pairs of points belonging to the two sets, inter distances between the two sets depend on the relative position of the two sets, namely a rigid body transformation involving six real-valued parameters (three that define a rotation, three that define a translation). Distances within each set are independent of such transformation; such distances, however, define a different metric space, one for each set of points. Several methods have been developed to use this information within the framework of optimal transport [7,8,9]. Here we are concerned with the Gromov-Wasserstein formalism [8], which has become popular for shape matching [8], for word embedding [10], as well as in the machine learning community, solving learning tasks such as heterogenous domain adaptation [11], deep metric alignment [12], computing distances between graphs [13] and graph classification [14,15], clustering [16] or generative modeling [17], among others. The GW problem can be stated as follows. Let and be two metric spaces, and let and be probability measures on A and B, respectively. The goal is to find a coupling G on that minimizes the transport cost T defined as

with (the most common value for p is 2, as it will be discussed below). The minimum of is to be found over couplings that satisfy the constraints defined in Equation (3). As for the standard Monge-Kantorovich OT presented above, it was proved that a minimum to the transport cost defined in Equation (4) always exists [8]; we write this minimum as . Finding this minimum, however, is a quadratic optimization problem, as opposed to finding the coupling that minimizes the transport cost associated with the OT problem (Equation (2)), which is a linear optimization problem. This minimum of the transportation cost defines a distance over the space of metric measured spaces (i.e., the triplet ) modulo the measure-preserving isometries [8]. When the metrics and are the Euclidean distance over real numbers, i.e., when and (with n not necessarily equal to m), and and (where means the Euclidean norm), and when , an interesting property of is that it is invariant with respect to rigid body transformations (i.e., isometries). This is of significance for example if the GW framework is to be used to compare shapes in space, when this comparison has to be independent of the relative positions of the shapes..

As mentioned above, classical OT is a linear programming problem. It is intriguing, however, that the current successes of OT are not due to recent advances in solving LP problems. They were instead prompted by the idea of entropic regularization, namely minimizing a modified version of the transport cost defined in Equation (2):

where is the parameter that controls the amount of regularization and is an entropy on the coupling G. It is there to impose the positivity of its elements [18]. As the regularized problem tends to the traditional problem. Interestingly, the minimum of the regularized transport cost is a distance that satisfies all metric properties for all values of . This distance is called the Sinkhorn distance [18]. The main advantage of the entropic regularization is that it leads to a strictly convex problem that has a unique solution [18]. In addition, this solution can be found effectively using the Sinkhorn’s algorithm [19,20,21]. Sinkhorn’s algorithms have running times of order , while solving directly the OT problem as a linear program problem has a running time complexity of .

The same entropic regularization can be used the solve the GW problem, originally a quadratic optimization problem which is NP-hard in its general formulation. The idea is the same as for OT, namely add a regularized term to Equation (4) (see [22]):

Note that here it is not that is considered, but its p-th power. While this simplifies the optimization process, it sets to be a “discrepancy” (in the language of Peyré et al. [22]) and not a distance with metric properties. The addition of the entropic regularization led, however, to an iterative algorithm for finding the GW discrepancy, with each iteration amounting to solve a regularized OT problem [22].

While the regularization based on entropy significantly expanded the appeal of OT, there are issues with the numerical convergence of the regularized solution to the actual OT solution. Furthermore, the physical significance of this regularization is unclear, despite its reference to entropy. Using methods from statistical physics, we have recently designed a novel framework for solving the OT problem that alleviate those issues [23,24]. The main idea is to build a strongly concave temperature dependent effective free energy function that encapsulates the constraints of the OT problem. The maximum of this function is proved to define a metric distance in the space of measured sets of points of fixed cardinality for all temperatures. In addition, this distance is proved to decrease monotonically to the regular OT distance at zero temperature. This property enables a robust algorithm for finding the OT distance using temperature annealing. This approach has been adapted to solving the assignment, or Monge problem [25], as well as to the unbalanced optimal transport problem [26]. In this paper we adapt it to solving the GW problem.

The paper is structured as follows. In the next section, we introduce the GW problem and its regularized version for discrete metric measured spaces. The following section covers the specifics of the statistical physics method we propose. All proofs of important properties of this method are given in the appendices. The subsequent section is devoted to the algorithm that implements our method in a C++ program, FreeGW. Next, we present numerical applications to the problem of comparing and registering 3D shapes, using examples based on synthetic data as well as on real data. The conclusion highlights possible future developments.

2. The Discrete Gromov-Wasserstein Problem

This section briefly describes the discrete Gromov Wasserstein transport problem and its regularized version. More thorough descriptions can be found in Refs. [7,8,22].

The discrete version of the GW problem is an optimal transport problem between two discrete probability measures whose supports are metric spaces and with possibly different metrics. Let and be subsets of and with cardinality and , respectively. Each point k in (resp ) is characterized by a “mass” (resp ). We assume balance, namely that . In the following, these sums are set to 1, but the formalism could easily be adapted to handle a different values.

The discrete GW problem is defined as finding a coupling or transport plan G that minimizes the total transport cost U defined as

where p is a fixed integer greater or equal to 1, and the summations extend over all and . Note that G is a matrix of correspondence between points k in and points l in . The minimization is to be performed over those matrices G that satisfy the following constraints

The set of all matrices G for which those conditions (8) are satisfied defines a polytope, which we refer to as .

The minimization of the cost yields an optimal transport plan . We refer to the minimum of the cost as . Note that is not a distance. Its p-root, however, which we write as is a metric distance between and quotiented by measure-preserving isometries [8].

Solving for the transport plan that minimizes Equation (7) under the constraints defined in Equation (8) is a non-convex quadratic optimization problem [27,28] and therefore -hard in the general case (see for example [29]). To circumvent this large computing cost when N is large, following Cuturi’s idea proposed for the optimal transport problem [18], Peyré et al. proposed a regularized version of Equation (7) [22]:

where is a parameter that controls the level of regularization. This parameter scales an entropic term, with the entropy set to , the standard information theory entropy, which imposes the positivity of the terms [18]. This regularized GW problem can then be solved iteratively using a regularized linear optimal transport solver, as described in Algorithm 1.

Algorithm 1 is akin to a sequential quadratic programming method [30]. Briefly, given two sets of weighted points and the intra-set distances between those points, a “cost matrix” between the two sets is defined from the current transport plan G (initialized according to the masses of the points). This cost matrix is then used to solve a regularized linear optimal transport problem. The corresponding optimal transport plan is then used to update the cost matrix, and the procedure is then iterated until the transport plan does not change anymore (within a tolerance), i.e., when the transport plan and the cost matrix are consistent. There are many options to solve the regularized linear optimal transport problem in step 2, such as the Sinkhorn algorithm [20,21] initially proposed for solving the OT problem by Cuturi [18], or stabilized version of this algorithm [31,32,33,34,35], or using our own method based on statistical physics [23,24].

| Algorithm 1 An iterative solver for the regularized GW problem. |

|

Algorithm 1 can be seen as applying successive linear approximation to the quadratic GW problem and as such it is expected to be efficient in computing time. There remains difficulties, however, as:

- (i)

- Solving the regularized OT problem in step 2 is difficult when (a necessary condition to get to the real GW distance).

- (ii)

- Algorithm 1 is basically a fixed point method for which there is no guarantee of convergence. This is discussed in detail in Ref. [22].

- (iii)

- There is no easy option within Algorithm 1 to compute a scaling factor between distances within and distances within . Those distances may have different scales, however, which can significantly impact the numerical stability of the algorithm.

In the following section, we describe a different method for solving the GW problem that attempt to solve at least some of these concerns.

3. A Statistical Physics Approach to Solving the Gromov-Wasserstein Problem

Solving the GW problem amounts to finding the minimum of a function defined by Equation (7) over the space of possible couplings between the two discrete sets of points considered. If this function is reworded as an “energy”, statistical physics allows for a different perspective on how to solve this problem. Indeed, in statistical physics, finding the minimum of an energy function is equivalent to finding the most probable state of the system it characterizes. Here, this system corresponds to the different couplings between between the measured sets of points and . We refer to the space of such couplings (see above). Couplings G in this space satisfy multiple constraints. Their row sums and row columns correspond to the masses associated with and , respectively (see Equation (8)). In addition, their elements are positive, and in fact smaller than one, if we assume that the sums of the masses on and on are both equal to 1 (the fact that these sums are equal is referred to as the balance condition and setting them to 1 is arbitrary but useful, as illustrated below).

A state in this system is then characterized with a coupling G and its energy value as defined by Equation (7). To account for possible differences of scales between the metrics on and , we introduce a scaling factor s between the distances and :

where p is a constant and s is considered as a variable. The probability of finding the system in a state characterized by G and s is:

In this equation, is the inverse of the temperature, namely with the Boltzmann constant and T the temperature. is the partition function defined as

An interesting property of a partition function Z is that most thermodynamic variables of the system can be expressed as functions of Z, or as functions of its derivatives. This is the case for the free energy of the system:

as well as for the average energy by

In addition to finding the coupling matrix G, we want to find the scaling factor s that defines the best match between the two distributions. To reach this goal, we will minimize the free energy with respect to s. The minimized free energy is denoted by and similarly, the average energy computed with the minimal s is denoted as .

We start with an important property of the free energy and of the average energy:

Proposition 1.

For all , the free energy and the average energy are monotonically decreasing functions of β. Both functions converge to from which we can compute the GW distance as .

Proof.

How the functions and of behave as the parameter is increased is studied in Appendix A. □

This approach to solving the GW problem is appealing. It is based on a temperature-dependent free energy with a monotonic dependence on the inverse of the temperature, , and convergence to the actual GW distance at zero temperature. In practice, however, it is of limited interest because the partition function and thus the extrema of the free energy cannot be computed explicitly. We propose using the saddle point approximation to approximate these quantities. We will demonstrate that the corresponding mean field values have the same properties as the exact quantities defined above. These mean field values are easily calculated.

Following the method described in Ref. [23] to impose the constraints that define , the partition function can be rewritten as

To account for the quadratic term in the exponential, we introduce new variables that are constrained to mimic a cost function between and :

Using the Fourier representation of a delta function,

the partition function can be recast with integrals only. To do so, we introduce the Fourier variables , and , with . Omitting the normalization factors , the partition function can then be expressed as,

Note that we have introduced an explicit scaling factor for the variables , and . The corresponding terms are then consistent with the energy term. Note also that the terms within the integrals in Z are now complex functions, while the partition function Z itself is real. For sake of clarity, we include the i in , and , i.e., , and . Those variables are now complex.

After rearrangements,

Shifting ,

We can now perform the integration over the real variables to get

We rewrite this partition function as

where is the effective free energy defined by:

Let and be the expected values of and s with respect to the probability function given in Equation (11) (i.e., the values that lead to this probability to be maximum). It is unfortunately not possible to compute these expected values directly as even though we now have an expression for the partition function, this expression is not analytical. We use instead the concept of a saddle point approximation (SPA). The SPA is computed by searching for the effective free energy extrema with respect to the variables , , , , and s:

These equations define the following system of four equations:

where,

For all real values x, the function is defined and continuous (once we set . It is monotonically decreasing over , with the asymptotes and at and , respectively.

The free energy can then be minimized with respect to s, namely , leading to the equation:

which needs to be solved for s. Given , this equation is polynomial in s, with degree . We will see in the implementation section that in the special case , the solution is easy to obtain.

We have the following property that relates the solutions of the SPA system of equations to the expected values for the transport plan:

Proposition 2.

Let be the expected state of the system with respect to the probability given in Equation (11). is associated with an expected transport plan and optimal scaling factor . Let , , , and be the solutions of the system of Equations (25) and (27). Then the following identities hold,

Note that the solutions are mean field solutions, hence the superscript .

Proof.

See Appendix B. □

Equation (28) shows that each element of is built to be in the range of , namely , as expected by the constraints on G. This optimal coupling matrix is real, and therefore the variables , , and must be real. Otherwise stated, note that the integral defining the partition function (see Equation (22)) does not depend on the choice of the integration path. The saddle point Equation (25) shows that a path parallel to the real axis for each of the variables is preferred.

For a given value of , the expected values define a coupling between and that is an extremum of the free energy defined in Equation (23). This extremum is referred to as while the corresponding optimal internal energy is . Those two values are mean field approximations of the exact free energy and internal energy defined in Equations (13) and (14), respectively. They satisfy the following properties:

Proposition 3.

and are monotonic decreasing functions of the parameter β. They both converge to the GW quantity , with the GW distance being .

Proof.

See Appendix C. □

The benefits of the proposed framework that recasts the GW problem as a temperature dependent process are visible from Proposition 3. First, because of the exponential ratio in the function , the equations provide good numerical stability for computing the optimal coupling matrix G. Second, the energy associated with the solution of the modified problem approaches the traditional GW distance when . Finally, the temperature-dependent convergence is monotonic.

4. Implementation

The preceding section defines a framework for solving the GW optimal transport problem for any value of the parameter p. In practice, most applications consider the square loss with . This leads to two simplifications:

- (i)

- Faster computation of the “cost matrix” .Recall that in the SPA system of equations, the cost matrix C is defined as:with a total time complexity of to compute the whole matrix. In the special case , the absolute value is not necessary and the equation can be rewritten in matrix form aswhere is a vector of ones of dimension N and ⊙ is the Hadamard product. The time complexity of computing C using this equation of , a significant improvement compared to the general case when and are large. This property was already proposed as “Proposition 1” by Peyré et al. [22].

- (ii)

- Computing the scaling factor. In the general case, given the matrix D, solving Equation (27) for the scaling factor s amounts to finding the zeros of a polynomial function of degree , with possibly real roots (see Equation (27)). In the specific case , however, there is a unique solution to this problem, defined as

We have implemented the finite temperature GW framework for in a C++ program FreeGW that is succinctly described in Algorithm 2.

FreeGW is based on multiple iterative procedures. The outer loop performs a temperature annealing: the parameter (inverse of the temperature) is gradually increased. At each value of , the scaling factor and transport plan are computed iteratively. First, they are initialized at their values at the previous temperature (step 3). A cost matrix is then computed (step 4) and a non linear system of equations defined by equations 3 and 4 of the SPA system (25) is solved using an iterative Newton-Raphson method (step 5). This step is akin to solving the optimal transport problem at this temperature. Complete details on how to solve this system can be found in Ref. [23,24]. Once this system is solved for and , a new estimate of the transport plan is derived (step 6). This new transport plan is then used to compute new estimates of the cost matrix (step 4) and of the scaling factor (step 8). The procedure is then iterated over both estimates. When these new estimates do not change anymore (within a tolerance TOL generally set to ), the optimal coupling and the associated energy are calculated. The program stops when the inverse of the temperature has reached its maximum value that was provided as input (usually is set to .

| Algorithm 2 FreeGW: a temperature dependent framework for computing the Gromov Wasserstein Distance between two weighted set of points belonging to two different metric spaces. |

|

5. Computational Experiments

We present experimental results highlighting the advantages of using the GW framework to compare shapes defined by unstructured triangulations of their surfaces. We use synthetic (the TOSCA dataset) and “real life” (protein structures) datasets.

5.1. Shape Similarity: Synthetic Data from TOSCA

We use the Gromov-Wasserstein formalism to detect non-rigid shape similarity. The experiments were performed on meshes taken from the TOSCA non-rigid dataset [36,37]. Eleven classes of objects were considered (see Figure 1): 8 classes of animals, cats (9 poses), dogs (11 poses), gorilla (21 poses), horses (17 poses), seahorses (6 poses), shark (1 pose), wolves (3 poses), two male shapes, Michael (20 poses) and David (15 poses), one female shape, Victoria (24 poses), and one mythical shape, centaurs (6 poses), for a total of 133 shapes. Note that compared to the full TOSCA non rigid dataset, we removed all lions as their meshes had severe topological issues at the level of the mane. Each class consists of the same shape under different poses. These poses are the results of transformations that were designed to mimic non-rigid motions within objects (see [36,37], for details). Note that the different representatives within a class may be represented with different meshes (i.e., in addition to having different geometry those meshes may have different topologies), and may have different genera. Each shape is represented with a triangulated mesh with approximately 3400 vertices and 6600 faces, with the exception of the gorilla and seahorse meshes that include approximately 2100 vertices and 4200 faces. The Euclidean farthest point sampling procedure was used to select 1000 points from each shape’s set of vertices. In brief, one begins by selecting a point at random from the set of vertices. The second point is chosen among the remaining vertices as the one that is at the greatest distance from this first point. Subsequent points are always chosen to maximize the shortest distance to the previous points.

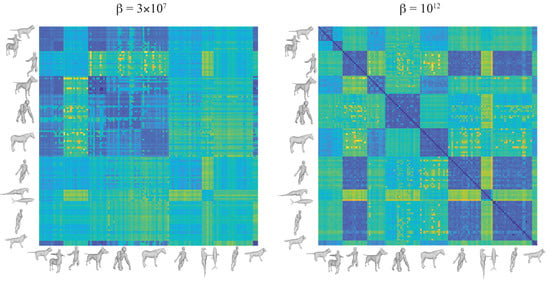

Figure 1.

Distance matrices for shape similarity within the TOSCA dataset using the Gromov-Wassertein framework at two different “temperatures”, (left) and (right). Blue colors represent small distances (high similarity), while yellow colors represent large distances (low similarity).

In each experiment, a pair of shapes i and j is represented with their sets of sampled vertices, and , and the geodesic distance matrices between those vertices, and . The geodesic distances are computed using the method proposed by Mitchell et al. [38] and implemented in the code “geodesic” by Danil Kirsanov, available at https://code.google.com/archive/p/geodesic/, and accessed on 1 June 2020. The masses associated to the vertices are set uniform, equal to , where Nv is the number of vertices. The GW problem is solved using FreeGW up to convergence. At each value of , (see above), defines , i.e., the -th element of the distance matrix over all shapes in our TOSCA dataset. Note that satisfies the properties of a metric distance only when is large (at convergence with respect to ). We generated a set of distance matrices for ranging from to . Figure 1 provides graphical representations of for two different temperatures, and . Note that the discrimination between the different shapes of TOSCA improves as increases.

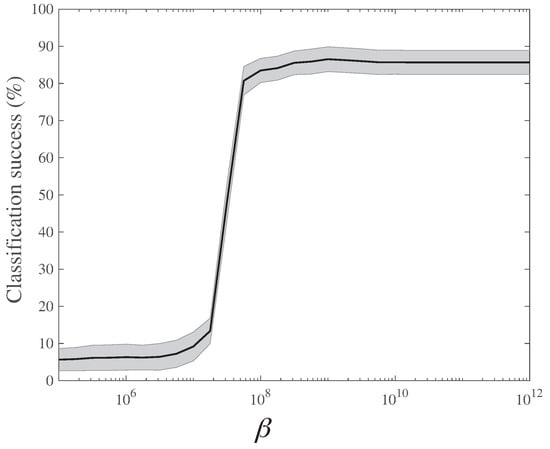

In order to assess quantitatively how well the different distance matrices classify correctly the shapes in TOSCA, we designed the following set of classification experiments. We first built a reference set: we selected randomly half the shapes from each of the 11 classes within TOSCA to form this reference set. Each remaining shape was then classified by considering its distances (derived from ) to all shapes in the reference set, and assigning it to the class of the shape with the shortest such distance (this is a 1-nearest neighbor classification experiment). By comparing this predicted class with the actual class to which the shape belongs we derived an estimate for the probability of correct classification based on . We repeated this procedure over 10,000 random selections of the reference set. In Figure 2, we plot the averaged computed over those 10,000 experiments as a function of the inverse temperature . The lower the temperature (or equivalently the higher the parameter ), the more discriminative the energy is. The highest level of correct classification is already observed for , i.e., significantly before convergence to the distance, which is usually reached for .

Figure 2.

Quality of 3D shape recognition based of the temperature-dependent GW distances as a function of temperature. The probability of classifying correctly a shape into its own class within the TOSCA dataset using the distance measure (see text for details) is plotted against , the inverse of the temperature. The curve is generated from the arithmetic means over 10,000 experiments (see text for details). Shaded areas represent standard deviations over those experiments.

5.2. Shape Correspondence: Synthetic Data from SHREC19

The second test case we consider is shape correspondence: identifying corresponding points between two (or more) 3D shapes. Note that this is different from shape registration, namely finding a transformation that brings one shape “close” to another. Indeed, correspondence can be derived from registration, while the reverse may not be true. The Gromov-Wassertein framework allows for finding correspondence, as the latter is embedded in the optimal transport plan it computes.

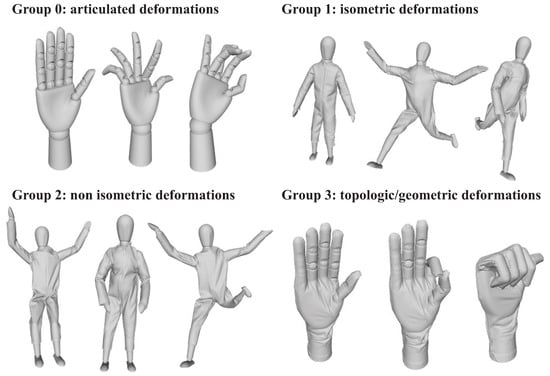

To assess how well GW can recover correspondence, we considered the SHREC19 benchmark [39]. This benchmark includes 3D shapes represented with a triangular mesh of their surfaces. These shapes are derived from 3D scans of real-world objects, with each object being present in multiple poses associated with one or more types of deformation. The deformations are classified into four different groups, referred to as test-sets. Those four groups correspond to articulated deformations (group 0), isometric deformations (group 1), non-isometric deformations (group 2), and topologic/geometric deformations (group 3). Example of shapes for each test-set are provided in Figure 3.

Figure 3.

Examples of shapes in each group of the SHREC19 benchmark [39].

The SHREC19 benchmark includes 76 shape pairs that are selected from the four different groups, and regrouped in four test sets (Table 1). Test-set 0 includes 14 pairs of articulating wooden hands from group 0. Test-set 1 includes 26 pairs of models corresponding to clothed humans as well as hands from group 1. Test-set 2 includes 19 pairs of models from the group 2. Each of those pairs includes a thin clothed mannequin and a larger mannequin, ensuring the the transformation is non-isometric. Finally test-set 3 includes 17 pairs of shapes from group 3 that contain challenging geometric and topological changes. We chose the low resolution version of this benchmark. In this version, each shape is represented by approximately 10,000 vertices and 20,000 triangles. For each pair of shapes, the ground-truth correspondence is known.

Table 1.

Quality of different methods for computing correspondence between 3D shapes.

The quality of shape correspondence is evaluated by measuring normalized geodesics between the ground-truth (available as part of the SHREC19 dataset) and the predicted correspondence that is derived from the GW optimal coupling. Specifically, let be a point on shape X, its predicted correspondence on shape Y and the ground truth position of on Y. Note that and are both on the surface of Y. The normalized geodesic error between the and is computed as:

where is the geodesic distance between and on the surface of Y. The geodesic distance is computed with the algorithm from Mitchell et al. [38], as described in the previous subsection.

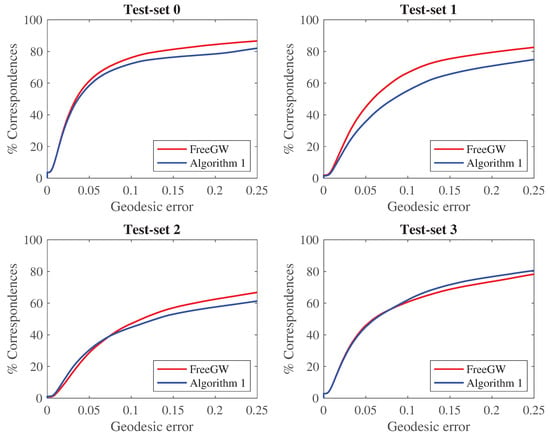

We compared two different implementations of the GW framework, based on two different algorithms, named the fixed point method described in Algorithm 1 and the physics-based algorithm described in Algorithm 2, both implemented in FreeGW. Algorithm 1 uses a fixed regularization parameter, . To make sure that the corresponding transport plan is close to the actual GW transport plan, we chose . Traditional regularized OT solver do not work well for such a small . We chose therefore our own OT solver [23,24] to solve step 2 in Algorithm 1. In contrast, Algorithm 2 is based on an annealing plan with respect to the parameter , such that when is large, the outputs of the program are guaranteed to match the actual GW results.

In each experiment, a pair of shapes i and j is represented with their complete sets of vertices, and , and the geodesic distance matrices between those vertices, and . The masses of the vertices are set uniform. The GW problem is solved using the two algorithms mentioned above. Both derive a transport plan G. For each vertex x on i, its correspondence y on j is set to the index of the maximum value on the row corresponding to x in G. Figure 4 shows the corresponding cumulative geodesic errors for the two algorithms for the four test sets in SHREC19. We observed that Algorithm 2 as implemented in FreeGW performs better for shape correspondence than the simpler Algorithm 1, on all test sets, and significantly better from shapes with isometric deformations (test set 1).

Figure 4.

Cumulative distribution functions for the geodesic errors for the correspondence computed with FreeGW (red), which implements an annealing procedure in the regularization parameter , and computed with Algorithm 1 (blue), that only considers one regularization value (see text for details). Results are shown for all four test sets in SHREC19, that consider articulated deformations (test set 0), isometric deformations (test set 1), non-isometric deformations (test set 2), and topological or geometric deformations (test set 3).

The SHREC19 dataset was originally used as a benchmark for a competition on comparing 3D shape registration that was part of the Workshop on 3D Object Retrieval that was held in Genova, Italy in may 2019. Several groups entered in this competition; results were published in Ref. [39]. Here we compare results based on the GW framework, as presented above, with results from the five top methods that were part of this competition. We briefly describe those five methods below.

In the first method, dubbed RTPS, the correspondence map between the template shape and the target shape is computed iteratively. Each iteration is built from two successive steps. In the first step, the vertices estimated to be in correspondence between the template and target shapes are derived from the registration result obtained from the previous iteration. These computed correspondences are used to derive a correspondence mapping. In the second step, the mapping is updated by using the closest points between template and target shapes identified by the mapping to find additional points that are in correspondence [40]. The second method, NRPA, is also based on registration. It consists of four key components, namely modeling of the deformation (assumed to be anisotropic and non-isometric), computing the correspondences, pruning those correspondences, and optimizing the deformation [41]. The third method, KM, is a kernel-based method, where the registration problem is formulated as matching between a set of pairwise and pointwise descriptors, imposing a continuity prior on the mapping [42]. The fourth method, GISC, uses a genetic algorithm to find the permutation matrix that encodes the correspondences between the vertices of the two shapes to compare [43]. Note that this is the most similar method to the GW formalism, as this permutation matrix is akin to a transport plan. Finally the fifth method, WRAP, is based on the commercial software WARP that includes a wrapping tool that non-rigidly fits one 3D shape to another, from which correspondence can be derived.

We report the results of the comparisons of the qualities of the different methods in Table 1 as the areas under the curve (AUC) for the cumulative distribution functions of the geodesic normalized errors. Results are divided according to the test sets of SHREC19, as well as summarized over all test sets.

There are a few observations we can make based on Table 1. First, the AUC values provides a quantification of the quality of a method for finding correspondence: the larger the value, the better the method. In particular, Table 1 confirms that the correspondences computed from the GW framework with temperature annealing (Algorithm 2) are better than those computed with the fixed point method described in Algorithm 1. Second the four methods based on registration, RTPS, NRP, WRAP, and KM, all perform better than the method that only compute correspondences. This is likely due to the fact the the deformations included in the SHREC19 dataset are all based on a mathematical morphing, and therefore are expected to be captured with a mapping function. Finally, the GW formalism performs better than a genetic algorithm (implemented in GISC). This genetic algorithm is the one closest to the GW formalism in its concept.

5.3. Shape Similarity: Morphodynamics of Protein Structure Surfaces

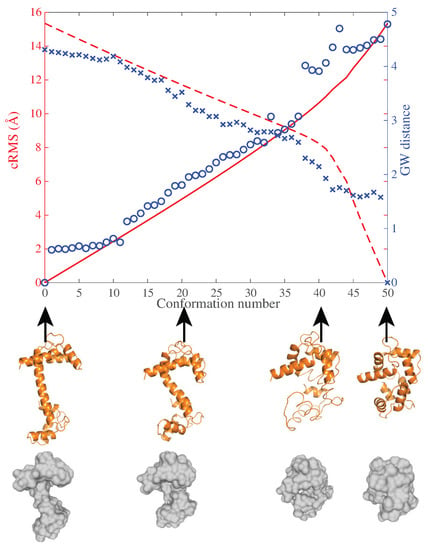

Different experimental techniques lead to different representations of protein structures. For example, high-resolution X-ray crystallography and NMR techniques derive models of proteins that include all their atoms and that are accurate at the Angstrom level. Recent progress on cryo electron microscopy (EM) can now often also reach atomic resolution. However, the difficulty of maintaining the integrity of stable complexes of interest on the various types of grids necessary to mount the sample in thin ice makes it often desirable to resort to negative staining techniques, in which case the resolution is much lower (typically 10–25 Angstroms). A low-resolution model derived from EM techniques is often represented as a density map, namely a shape characterized by its surface. Such a model is often available long before its high-resolution counterpart, as EM techniques are usually easier, faster, and cheaper to implement. It is therefore of interest to develop methods that can analyze the geometry of a protein directly from its EM density map. Such methods should generate information similar to those derived from methods that work directly on the high-resolution model of the protein structure. We consider here the problem of comparing the geometry of two protein structures using the GW distance between the surfaces of their density maps. To assess if this distance obtained from low resolution models of the protein structures mimic what could be found from high-resolution models, we compared it with the cRMS distance between atomistic models of the same proteins. We performed these tests on the protein calmodulin. Results are shown in Figure 5.

Figure 5.

Analyzing the dynamics of the conformational transition of calmodulin using coarse and high resolution models of the protein. We built a trajectory including 51 conformations between the apo (i.e., ligand-free) structure and an holo (i.e., with a ligand-bound) structure of the protein calmodulin using the program MinActionPath [44]. The transition between those two conformations leads to significant changes in the structure, as illustrated with the models of the structures shown below the horizontal axis. For all those 51 conformations, we computed their cRMS distances to the apo structure (structure number 0). These cRMS values are plotted versus the conformation number as a red solid line. In parallel, we plot the GW distance between the surfaces representing the same conformers and the surface of the apo protein as blue dots. The cRMS values and corresponding GW values exhibit a high correlation (0.985). The same observation can be made when comparing the 51 conformations with the holo structure based on cRMS (dashed red line) and based on the GW distance between surfaces (blue x’s). The cartoon representations of the high resolution structures and surface representations of the same structure are shown for a few conformations along the trajectory below the horizontal axis.

Calmodulin is a calcium binding protein that is found in all eukaryotic cells. Its structure looks like a dumbbell, with two small domains separated by a linker region. It is the flexibility of this linker that defines the ability of calmodulin to bind to a wide range of ligands [45].

We considered two conformations for calmodulin, a conformation in the absence of a ligand (referred to as the apo or ligand-free conformation) and a conformation in the presence of a substrate (referred to as holo ligand-bound conformation, where we use interchangeably the terms ligand and substrate to indicate a molecule that binds to calmodulin). Those conformations were found in the database of protein structure, the PDB [46], with codes 1CLL and 1A29, respectively. We built a trajectory between these two conformations. This trajectory is designed to mimic the structural transition that results from the binding of the ligand. We used the program MinActionPath who is designed to generate the most probable trajectory between the two conformers, namely the one with minimal action (for details, see [44]). The trajectory was sampled over 51 conformations, each represented with all the atoms of calmodulin. We then computed the distances between any two of these conformations in two different ways. First, we used the coordinate Root Mean Square (cRMS) distance computed over the atoms of the high-resolution structures (see Refs. [47,48] for details on how to compute the cRMS). Second, we compared the same structures using their skin surfaces [49]. To derive those skin surfaces, we started with the common convention in chemistry to represent a structure as a union of balls, with each ball corresponding to an atom. The coordinates of an atom define the center of a ball that is associated with it. The atom is also characterized with a van der Waals radius based on its chemical nature. The radius of the ball is then set to this vdW radius, plus a probe radius of Å, designed to mimic a water molecule in its proximity. The skin surface is then defined as the boundary of this union of balls. We generated a triangular mesh on the skin surface using the program smesh [50,51]. We found that those meshes have similar sizes for all 51 conformations we considered, with on average approximately 40,000 vertices and 70,000 triangles. 1000 points were selected from each mesh using the Euclidean farthest point sampling procedure described above for the TOSCA dataset. We compared these sampled meshes using FreeGW.

We compared all 51 conformations of calmodulin with both the apo and holo forms, using the cRMS and the GW distances. Results of these calculations are shown in Figure 5. We do observe that the GW distances measured based on the low resolution skin surfaces correlate well with the cRMS distances computed from the high resolution, atomistic representations of the proteins. The correlation have coefficients above 0.96.

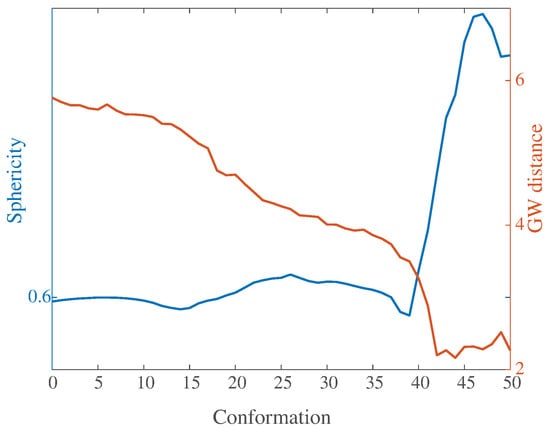

5.4. How Round Is Calmodulin?

A visual inspection of Figure 5 indicates that the ligand-bound conformation of calmodulin is more compact that its ligand-free conformation. To quantify this idea of “compactness” we use two independent measures of the surface of the proteins:

- (i)

- The sphericity S of a surface F quantifies how well it encloses volume. It is expressed as the surface area of an equivalent sphere (i.e., with the same volume V as the volume enclosed by F) divided by the surface area A of F:The sphericity is at most one, and equals one only for the round sphere,

- (ii)

- The GW distance between the surface of the protein and the surface of a round sphere.

We computed these two measures on all 51 conformations of the trajectory described in the previous subsection. Note that to compare the surfaces of the different conformations of calmodulin to the round sphere, we need a triangular mesh on the surface of that sphere. We generated this mesh by placing points uniformly on the sphere and generating a triangulation from these points. We used the Matlab package “Uniform sampling of the sphere” available from [52] to position the points and QHull [53] to generate the triangulation.

We compared the 51 sampled meshes representing the 51 conformations of calmodulin (see above for details) with the mesh representing the surface of the sphere using FreeGW. Results of these calculations are compared to the corresponding sphericity of the meshes computed using Equation (31) in Figure 6. The GW distances and the sphericity are (anti) correlated (correlation coefficient: −0.8): as the sphericity increases, the level of correspondence between the protein surface and the sphere increases, and the GW distance decreases. We observe an inflection point for the sphericity along the trajectory at the 40th conformation: the GW distance shows a similar inflection point at the same conformation. This indicates that the GW distance between a protein represented with its surface and the sphere has value as a tool to assess the compactness of that protein.

Figure 6.

The sphericity (left axis, blue) and the GW distance to the round sphere (right axis, red) of the 51 conformations of calmodulin in its trajectory from the ligand-free to the ligand-bound conformations.

6. Discussion

In the discrete Gromov Wasserstein problem, each set of points considered is characterized by a distance matrix that captures all pairwise distances between the points. When comparing two such sets of points, there is no guarantee that those distances have the same scale. For example, for the problem of comparing 3D shapes discussed in this paper, it is possible that those shapes were captured with different 3D scanners with different internal references. The shapes themselves may have different scales, for example when comparing animals of different sizes. One approach to circumvent this problem is to normalize the corresponding distance matrices, for example by setting the largest distance in each matrix to be 1. This approach is not optimal, especially in the presence of noise, as it is biased towards a single distance. While there are other ways to normalize a distance matrix, we have used a different approach to handle the scaling problem. Instead of arbitrarily scaling the distance matrices, we have added a free scaling parameter in our approach that is concurrently optimized with the transport plan between the two sets of points.

One of the current limitation of the algorithm we propose, Algorithm 2 implemented in FreeGW, is that it is demanding in computing power. It includes three nested loops: the outer loop controls the temperature annealing, the middle loop allows for an iterative update of the scaling factor, while the most inner loop is used to solve the SPA system at a given scaling factor by iterating over a cost matrix between the two sets of points. In addition, this SPA system is non linear and therefore it is also solved iteratively (see Refs. [23,24]). We have used a Newton-Raphson approach to solve this system. It should be noted that this approach requires that the Hessian of the free energy be computed (i.e., the Jacobian of the system of non linear equation). There are ways, however, to solve the system without the need to compute second derivatives (see for example Ref. [54]). We will try such alternate approaches in future work. For large problems, the overall computational cost can become large. For example, comparing two shapes of the SHREC19 benchmark, each with 10,000 points, require on average 12,500 s (i.e., approximately 3.5 h). There are several options to reduce this computing time. First, all calculations presented in this paper were run to convergence, i.e., up to an inverse temperature . As shown in Figure 2, if the problem is to classify shapes, there is no need to go to such a large value for . Second, the size of the problem itself can be reduced by sampling: this is the approach we used for comparing shapes in the TOSCA dataset for example. Comparing two shapes of the TOSCA dataset, each with 1000 points, require on average 70 s. However, none of those solutions are general. For instance, sampling cannot be applied if we are interested directly in the transport plan between the two sets of points, and not just in the optimized distance between the sets. We will work on the problem of optimizing the running time of our algorithm in future studies.

7. Conclusions

In this study, we developed a novel method based on statistical physics for solving the discrete Gromov Wasserstein (GW) problem. Given two sets of measured points and associated with two possibly different metric spaces, the GW problem amounts to finding a correspondence between those points, stored in a transport plan, which minimizes an energy based on comparisons of pairwise distances within each set. We build a free energy function that, at a finite temperature, reflects the GW problem’s constraints. While the extremum of this free energy cannot be computed exactly, it can be estimated using a saddle point approximation. At each temperature, the corresponding mean field solution defines an optimal coupling between the two discrete probability measures that are compared, and a distance between those measures. We proved that this distance approaches the traditional GW distance when in a monotonic way, thereby amenable to temperature annealing. We have illustrated the usefulness of our approach on the problem of comparing shapes defined by unstructured triangulations of their surfaces and revealed that it allows for accurate and automatic non-rigid registration of shapes. We have shown that the GW distances computed from low-resolution, surface-based representations of proteins correlate well with the corresponding distances computed from atomistic models for the same proteins.

It is important to realize that the method we have proposed to solve the GW problem only applies under the assumption of balance, namely to problems in which the sum of the masses on the two discrete set of points are equal. This is often too restrictive in many applications, such as those in which only a partial mapping is sought out. The unbalanced GW problem is an open problem [55], which we intend to work on.

Author Contributions

Conceptualization, P.K., M.D. and H.O.; methodology, P.K., M.D. and H.O.; software, P.K.; formal analysis, P.K., M.D. and H.O.; investigation, P.K., M.D. and H.O.; writing: original draft preparation, P.K., M.D. and H.O. All authors have read and agreed to the published version of the manuscript.

Funding

PK acknowledges support from the National Science Foundation (grant no.1760485).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The work discussed here originated from a visit by P.K. at the Institut de Physique Théorique, CEA Saclay, France, during the fall of 2019. He thanks them for their hospitality and financial support.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Property 1: Monotonicity of the Free Energy and Average Energy

Let us consider two sets of points and embedded in two metric spaces and and mass vectors and , respectively. We associate to this system a transport plan polytope and a scaling factor s between distances within and distances within . Recall that any matrix G in this polytope satisfies the three conditions in Equation (8). The free energy , internal energy , and entropy of this system are related through the general relation , where T is the temperature and .

We first prove that the volume of the polytope is smaller than 1. Indeed, considering the constraints that define this polytope, we have

As the take values between 0 and 1, and as the delta functions restrain the space of possible transport plans, we have indeed that

The internal energy is the thermodynamic average of the energy (see Equation (10)) and is given by

while the entropy is given by

An important implication of these relations is that

where the thermodynamics averages are computed over for s and over the polytope for G. The quantity on the right is minus the variance of the energy. It is therefore negative and this is true for all values of . This property is true for all s and therefore for , the optimal value of s. Therefore,

As a result, the internal energy of the system decreases as increases. As is positive, is positive: it has a limit when . This limit is the traditional GW quantity (see Section 2).

The entropy is negative. Indeed, as the total number of states at an energy is given by,

As the volume of the polytope is smaller than 1 (see above),

which implies that

Since , and all the properties above are valid for all s, they are valid in particular for the value which minimizes the free energy. Taking , we get for all (or equivalently for all T). The free energy is related to the entropy by

Consequently,

Therefore the free energy of the system decreases as increases. Its limit for is the same as the limit of , namely the GW quantity , with the GW distance being .

Appendix B. Proof of Proposition 2: Retrieving the Transport Plan from the SPA Solutions

Let us first recall the definition of the partition function (Equation (22))

and of the corresponding effective free energy (Equation (24))

is a function of variables, namely and for , for , for , and s. The values of these variables that solve the SPA conditions and minimize with respect to s are referred to as , , , and , respectively.

To find the expected values we need to introduce a vector field and modify the partition function:

Following the same procedure as described in the main text for evaluating this modified partition function, we find,

Then, the expected transport between point k in and point l in is given by

i.e.,

Appendix C. Proof of Proposition 3: Monotonicity and Limits of F MF (β) and U MF (β)

In Appendix A we have established that the exact free energy and internal energy defined in Equations (13) and 14, respectively, are monotonic functions of the parameter , and converge to when . Here we consider the approximation of those quantities obtained with the saddle point approximation, namely the mean field values and , and show that they satisfy the same properties.

Appendix C.1. Monotonicity of the Free Energy

The effective free energy defined in Equation (23) is a function of the distance matrices and and of the real unconstrained variables , , , , and s. For sake of simplicity, for any , we define:

The effective free energy is then

As written above, is a function of the variables , , , , , and s. However, under the saddle point approximation, with , namely its optimal value, the free energy takes the value , with the following constraints,

for all and all . In the following, we will use the notations and to differentiate between the total derivative and partial derivative of with respect to , respectively. Based on the chain rule,

Using the constraints defined in Equation (A14), we find that

namely that the total derivative with respect to is in this specific case equal to the corresponding partial derivative, which is easily computed to be

where , as defined in Equation (26). Let . As mentioned in the main text of the paper, is monotonically constrained in the interval and therefore correctly represent the possible values for the corresponding transport plan. The function is continuous and defined over all real values x (with the extension ) and is bounded above by 0, i.e., . As

we conclude that

namely that is a monotonically decreasing function of . In addition, we note that is the mean field approximation of the true free energy and that this approximation becomes exact when tends to ∞. Therefore,

where where is the traditional GW distance between the two sets of points and under the metric and , respectively.

Appendix C.2. Monotonicity of the Energy

Let be the transport plan at the temperature , and let

and the corresponding meanfield approximation of the internal energy at the saddle point and minimum of ,

At the saddle point, we have:

where and x are defined above.

Before computing , let us first notice that by replacing Equation (A13) into Equation (A18), and using the constraints above, we get:

Note that this equation can be rewritten as,

i.e., it extends the relationship shown in Equation (A3) known between the true free energy and the average energy to their mean field counterparts.

Based on the chain rule,

We compute the different partial derivatives of in this equation based on Equation (A26). For example,

where the zero is a consequence of the SPA constraints. Similarly, we can show that

As is always positive, and is always negative, we have

and the function is a monotonically decreasing function of . In addition, we note that is the mean field approximation of the true internal energy and that this approximation becomes exact when tends to ∞. Therefore,

where where is the traditional GW distance between the two sets of points and under the metric and , respectively.

References

- Monge, G. Mémoire sur la theorie des deblais et des remblais. Hist. l’Acad. R. Sci. Mem. Math. Phys. Tires Regist. Cette Acad. 1781, 1784, 666–704. [Google Scholar]

- Léonard, C. A survey of the Schrödinger problem and some of its connections with optimal transport. Discret. Contin. Dyn. Syst. Ser. A 2014, 34, 1533–1574. [Google Scholar] [CrossRef]

- Kantorovich, L. On the transfer of masses. Dokl. Acad. Nauk. USSR 1942, 37, 7–8. [Google Scholar]

- Villani, C. Optimal Transport: Old and New; Grundlehren der Mathematischen Wissenschaften; Springer: Berlin/Heidelberg, Germany, 2008. [Google Scholar]

- Peyré, G.; Cuturi, M. Computational Optimal Transport. arXiv 2018, arXiv:1803.00567. [Google Scholar]

- Villani, C. Topics in Optimal Transportation; Graduate Studies in Mathematics; American Mathematical Society: Providence, RI, USA, 2003. [Google Scholar]

- Mémoli, F. On the use of Gromov-Hausdorff Distances for Shape Comparison. In Proceedings of the Eurographics Symposium on Point-Based Graphics, Prague, Czech Republic, 2–3 September 2007; pp. 81–90. [Google Scholar]

- Mémoli, F. Gromov-Wasserstein distances and the metric approach to object matching. Found. Comput. Math. 2011, 11, 417–487. [Google Scholar] [CrossRef]

- Boyer, D.; Lipman, Y.; StClair, E.; Puente, J.; Patel, B.; Funkhouser, T.; Jernvall, J.; Daubechies, I. Algorithms to automatically quantify the geometric similarity of anatomical surface. Proc. Natl. Acad. Sci. USA 2011, 108, 18221–18226. [Google Scholar] [CrossRef] [PubMed]

- Alvarez-Melis, D.; Jaakkola, T.S. Gromov-Wasserstein alignment of word embedding spaces. arXiv 2018, arXiv:1809.00013. [Google Scholar]

- Yan, Y.; Li, W.; Wu, H.; Min, H.; Tan, M.; Wu, Q. Semi-Supervised Optimal Transport for Heterogeneous Domain Adaptation. In Proceedings of the IJCAI, Stockholm, Sweden, 13–19 July 2018; Volume 7, pp. 2969–2975. [Google Scholar]

- Ezuz, D.; Solomon, J.; Kim, V.G.; Ben-Chen, M. GWCNN: A metric alignment layer for deep shape analysis. In Proceedings of the Computer Graphics Forum, Lyon, France, 24–28 April; 2017; Volume 36, pp. 49–57. [Google Scholar]

- Nguyen, D.H.; Tsuda, K. On a linear fused Gromov-Wasserstein distance for graph structured data. Pattern Recognit. 2023, 138, 109351. [Google Scholar] [CrossRef]

- Titouan, V.; Courty, N.; Tavenard, R.; Flamary, R. Optimal transport for structured data with application on graphs. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6275–6284. [Google Scholar]

- Zheng, L.; Xiao, Y.; Niu, L. A brief survey on Computational Gromov-Wasserstein distance. Procedia Comput. Sci. 2022, 199, 697–702. [Google Scholar] [CrossRef]

- Chowdhury, S.; Needham, T. Generalized spectral clustering via Gromov-Wasserstein learning. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Virtual, 13–15 April 2021; pp. 712–720. [Google Scholar]

- Bunne, C.; Alvarez-Melis, D.; Krause, A.; Jegelka, S. Learning generative models across incomparable spaces. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 851–861. [Google Scholar]

- Cuturi, M. Sinkhorn Distances: Lightspeed Computation of Optimal Transport. In Advances in Neural Information Processing Systems 26; Burges, C.J.C., Bottou, L., Welling, M., Ghahramani, Z., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2013; pp. 2292–2300. [Google Scholar]

- Deming, W.E.; Stephan, F.F. On a least squares adjustment of a sampled frequency table when the expected marginal totals are known. Ann. Math. Stat. 1940, 11, 427–444. [Google Scholar] [CrossRef]

- Sinkhorn, R. A relationship between arbitrary positive matrices and doubly stochastic matrices. Ann. Math. Stat. 1964, 35, 876–879. [Google Scholar] [CrossRef]

- Sinkhorn, R.; Knopp, P. Concerning nonnegative matrices and doubly stochastic matrices. Pacific J. Math. 1967, 21, 343–348. [Google Scholar] [CrossRef]

- Peyré, G.; Cuturi, M.; Solomon, J. Gromov-Wasserstein Averaging of Kernel and Distance Matrices. In Proceedings of the Proceeding ICML’16, New York, NY, USA, 19–24 June 2016; pp. 2664–2672. [Google Scholar]

- Koehl, P.; Delarue, M.; Orland, H. A statistical physics formulation of the optimal transport problem. Phys. Rev. Lett. 2019, 123, 040603. [Google Scholar] [CrossRef] [PubMed]

- Koehl, P.; Delarue, M.; Orland, H. Finite temperature optimal transport. Phys. Rev. E 2019, 100, 013310. [Google Scholar] [CrossRef] [PubMed]

- Koehl, P.; Orland, H. Fast computation of exact solutions of generic and degenerate assignment problems. Phys. Rev. E 2021, 103, 042101. [Google Scholar] [CrossRef]

- Koehl, P.; Delarue, M.; Orland, H. Physics approach to the variable-mass optimal-transport problem. Phys. Rev. E 2021, 103, 012113. [Google Scholar] [CrossRef]

- Gould, N.I.; Toint, P.L. A quadratic programming bibliography. Numer. Anal. Group Intern. Rep. 2000, 1, 32. [Google Scholar]

- Wright, S. Continuous optimization (nonlinear and linear programming). In The Princeton Companion to Applied Mathematics; Higham, N., Dennis, M., Glendinning, P., Martin, P., Sentosa, F., Tanner, J., Eds.; Princeton University Press: Princeton, NJ, USA, 2015; pp. 281–293. [Google Scholar]

- Pardalos, P.; Vavasis, S. Quadratic programming with one negative eigenvalue is (strongly) NP-hard. J. Glob. Optim. 1991, 1, 15–22. [Google Scholar] [CrossRef]

- Nocedal, J.; Wright, S.J. Quadratic programming. Numer. Optim. 2006, 448–492. [Google Scholar]

- Benamou, J.; Carlier, G.; Cuturi, M.; Nenna, L.; Peyré, G. Iterative Bregman Projections for Regularized Transportation Problems. SIAM J. Sci. Comput. 2015, 37, A1111–A1138. [Google Scholar] [CrossRef]

- Genevay, A.; Cuturi, M.; Peyré, G.; Bach, F. Stochastic Optimization for Large-scale Optimal Transport. In Advances in Neural Information Processing Systems 29; Curran Associates, Inc.: Red Hook, NY, USA, 2016; pp. 3440–3448. [Google Scholar]

- Schmitzer, B. Stabilized Sparse Scaling Algorithms for Entropy Regularized Transport Problems. arXiv 2016, arXiv:1610.06519. [Google Scholar] [CrossRef]

- Dvurechensky, P.; Gasnikov, A.; Kroshnin, A. Computational Optimal Transport: Complexity by Accelerated Gradient Descent Is Better Than by Sinkhorn’s Algorithm. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 1367–1376. [Google Scholar]

- Chizat, L.; Peyré, G.; Schmitzer, B.; Vialard, F.X. Scaling Algorithms for Unbalanced Transport Problems. Math. Comp. 2018, 87, 2563–2609. [Google Scholar] [CrossRef]

- Bronstein, A.; Bronstein, M.; Kimmel, R. Efficient computation of isometry-invariant distances between surfaces. SIAM J. Sci. Comput. 2006, 28, 1812–1836. [Google Scholar] [CrossRef]

- Bronstein, A.; Bronstein, M.; Kimmel, R. Calculus of non-rigid surfaces for geometry and texture manipulation. IEEE Trans. Vis. Comput. Graph 2007, 13, 902–913. [Google Scholar] [CrossRef] [PubMed]

- Mitchell, J.; Mount, D.; Papadimitriou, C. The discrete geodesic problem. SIAM J. Comput. 1987, 16, 647–668. [Google Scholar] [CrossRef]

- Dyke, R.M.; Stride, C.; Lai, Y.K.; Rosin, P.L.; Aubry, M.; Boyarski, A.; Bronstein, A.M.; Bronstein, M.M.; Cremers, D.; Fisher, M.; et al. Shape Correspondence with Isometric and Non-Isometric Deformations. In Proceedings of the Eurographics Workshop on 3D Object Retrieval; Biasotti, S., Lavoué, G., Veltkamp, R., Eds.; The Eurographics Association: Eindhoven, The Netherlands, 2019. [Google Scholar]

- Li, K.; Yang, J.; Lai, Y.K.; Guo, D. Robust non-rigid registration with reweighted position and transformation sparsity. IEEE Trans. Visual. Comput. Graphics 2018, 25, 2255–2269. [Google Scholar] [CrossRef]

- Dyke, R.; Lai, Y.K.; Rosin, P.; Tam, G. Non-rigid registration under anisotropic deformations. Comput. Aided Geom. Des. 2019, 71, 142–156. [Google Scholar] [CrossRef]

- Vestner, M.; Lähner, Z.; Boyarski, A.; Litany, O.; Slossberg, R.; Remez, T.; Rodolà, E.; Bronstein, A.; Bronstein, M.; Kimmel, R.; et al. Efficient deformable shape correspondence via kernel matching. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 517–526. [Google Scholar]

- Sahillioğlu, Y. A genetic isometric shape correspondence algorithm with adaptive sampling. ACM Trans. Graph. (ToG) 2018, 37, 1–14. [Google Scholar] [CrossRef]

- Franklin, J.; Koehl, P.; Doniach, S.; Delarue, M. MinActionPath: Maximum likelihood trajectory for large-scale structural transitions in a coarse-grained locally harmonic energy landscape. Nucl. Acids. Res. 2007, 35, W477–W482. [Google Scholar] [CrossRef]

- Chou, J.; Li, S.; Klee, C.; Bax, A. Solution structure of Ca(2+)-calmodulin reveals flexible hand-like properties of its domains. Nat. Struct. Biol. 2001, 8, 990–997. [Google Scholar] [CrossRef]

- Berman, H.; Westbrook, J.; Feng, Z.; Gilliland, G.; Bhat, T.; Weissig, H.; Shindyalov, I.; Bourne, P. The Protein Data Bank. Nucleic Acids Res. 2000, 28, 235–242. [Google Scholar] [CrossRef] [PubMed]

- Kabsch, W. A solution for the best rotation to relate two sets of vectors. Acta Crystallogr. 1976, 32, 922–923. [Google Scholar] [CrossRef]

- Coutsias, E.; Seok, C.; Dill, K. Using quaternions to calculate RMSD. J. Comput. Sci. 2004, 25, 1849–1857. [Google Scholar] [CrossRef] [PubMed]

- Edelsbrunner, H. Deformable Smooth Surface Design. Discret. Comput. Geom. 1999, 21, 87–115. [Google Scholar] [CrossRef]

- Cheng, H.; Shi, X. Guaranteed Quality Triangulation of Molecular Skin Surfaces. In Proceedings of the IEEE Visualization, Austin, TX, USA, 10–15 October 2004; pp. 481–488. [Google Scholar]

- Cheng, H.; Shi, X. Quality Mesh Generation for Molecular Skin Surfaces Using Restricted Union of Balls. In Proceedings of the IEEE Visualization, Minneapolis, MN, USA, 23–28 October 2005; pp. 399–405. [Google Scholar]

- Semeshko, A. Suite of Functions to Perform Uniform Sampling of a Sphere. GitHub. Available online: https://github.com/AntonSemechko/S2-Sampling-Toolbox (accessed on 2 January 2023).

- Barber, C.B.; Dobkin, D.; Huhdanpaa, H. The Quickhull Algorithm for Convex Hulls. ACM Trans. Math. Softw. 1996, 22, 469–483. [Google Scholar] [CrossRef]

- Abdul-Hassan, N.Y.; Ali, A.H.; Park, C. A new fifth-order iterative method free from second derivative for solving nonlinear equations. J. Appl. Math. Comput. 2021, 68, 2877–2886. [Google Scholar] [CrossRef]

- Séjourné, T.; Peyré, G.; Vialard, F.X. Unbalanced Optimal Transport, from theory to numerics. arXiv 2022, arXiv:2211.08775. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).