Efficient Online Engagement Analytics Algorithm Toolkit That Can Run on Edge

Abstract

1. Introduction

1.1. The Future of Workplaces and Meetings

1.2. The Problems

1.3. Understanding Audience

1.4. Concerns

1.5. Existing Proctoring Solutions

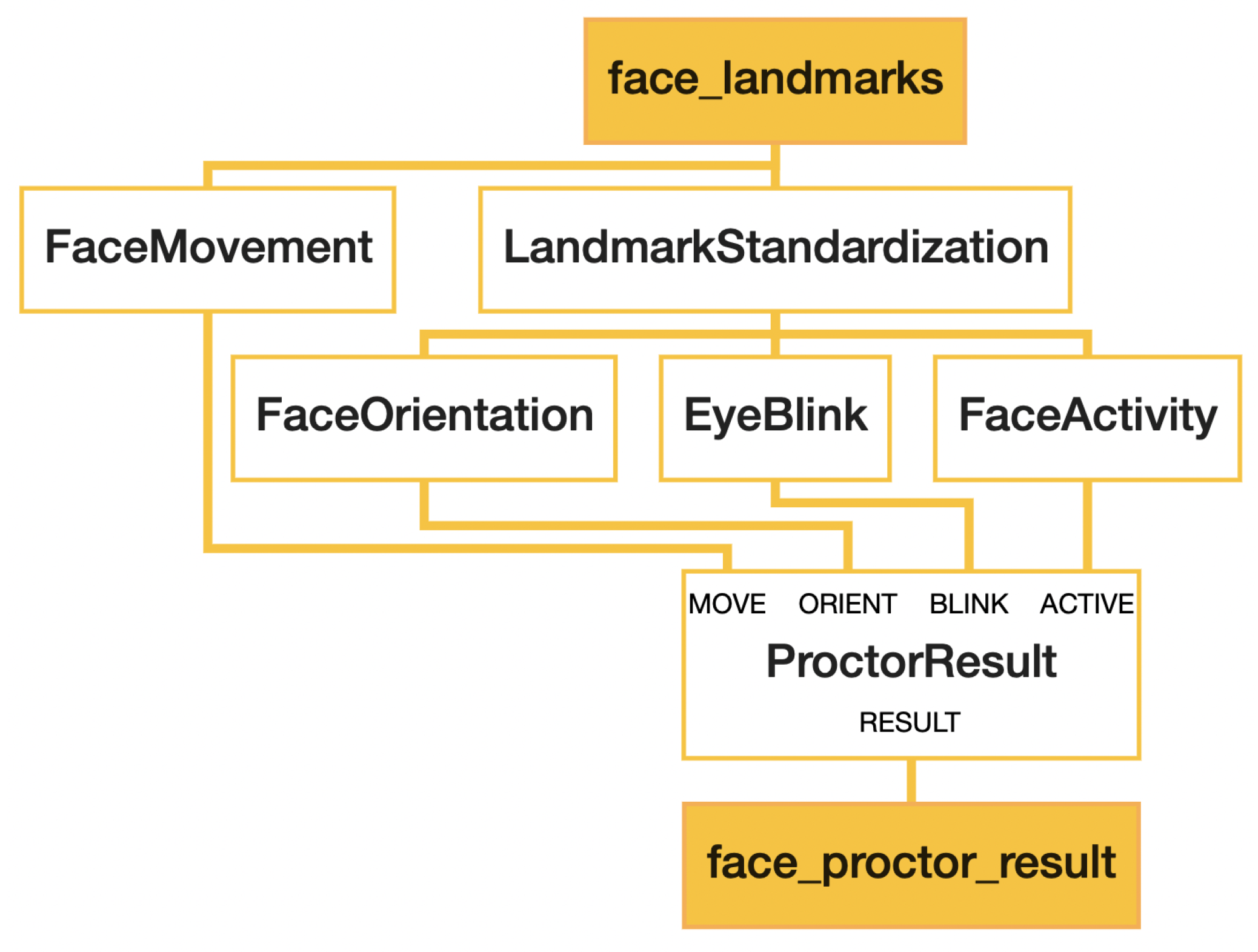

1.6. A Toolkit for Proctoring

1.7. MediaPipe

1.8. Contributions

2. Related Works

2.1. Landmark Standardization

2.2. Head Pose Estimation

2.3. Eye Blink Detection

3. Materials and Methods

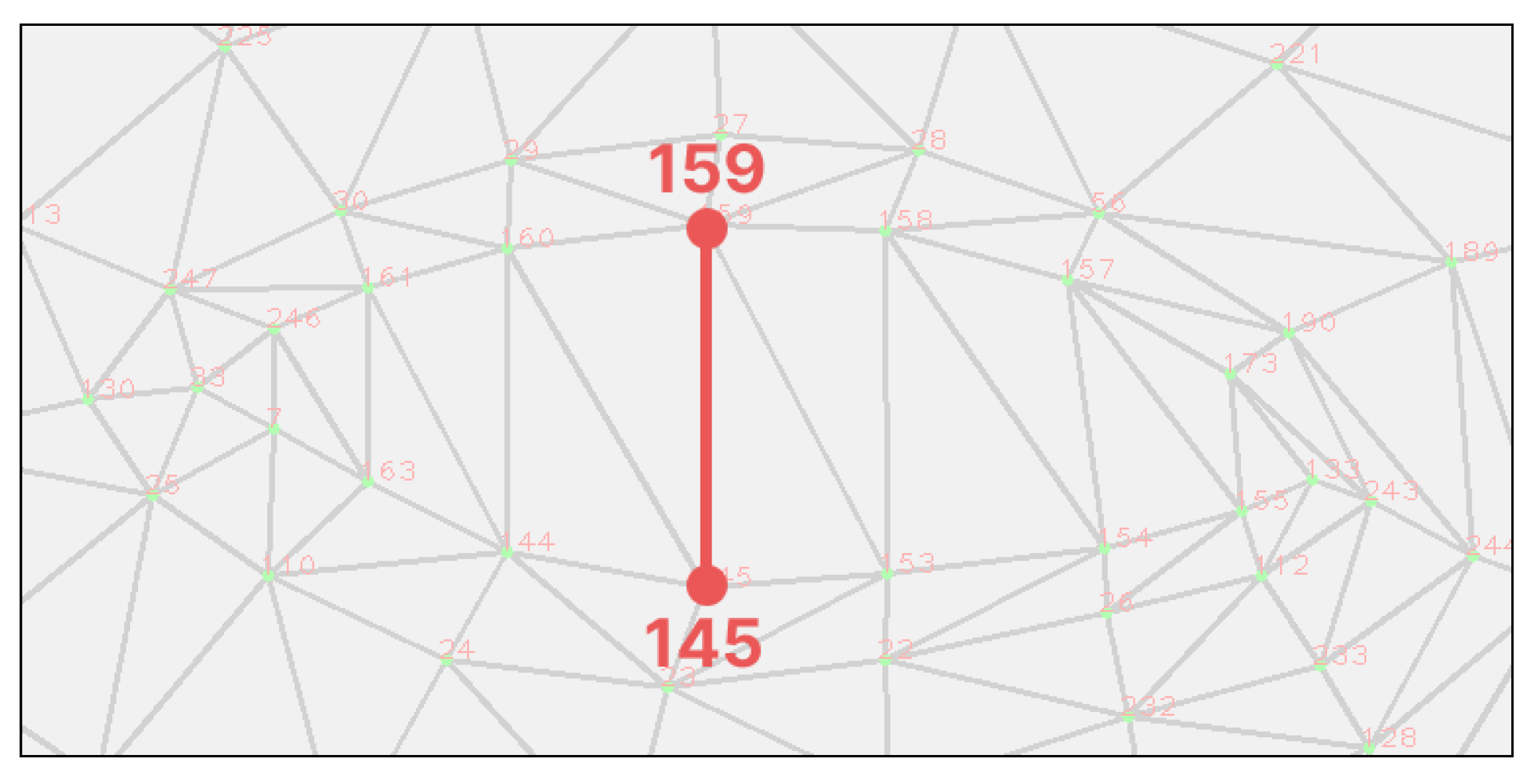

3.1. Landmark Standardization

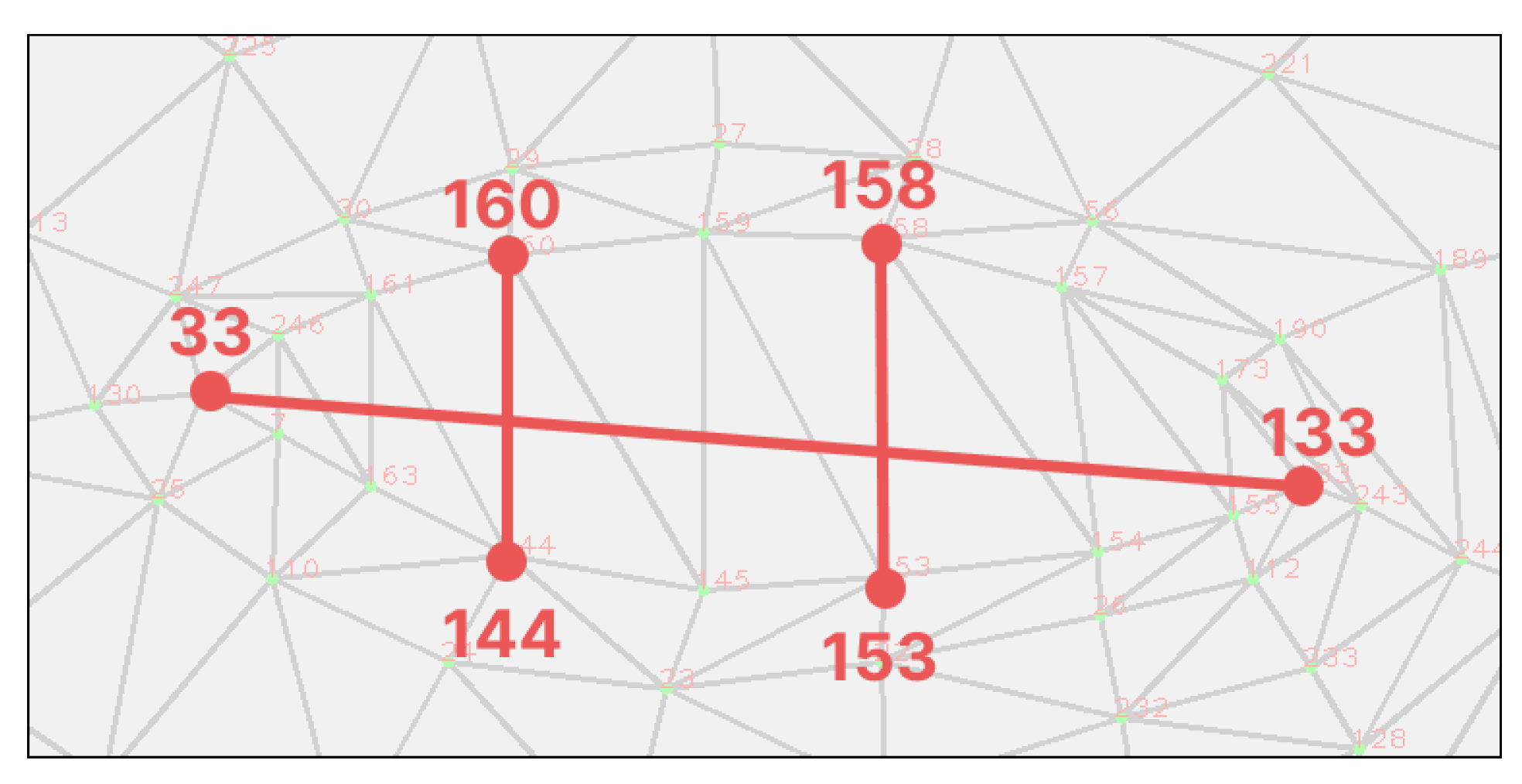

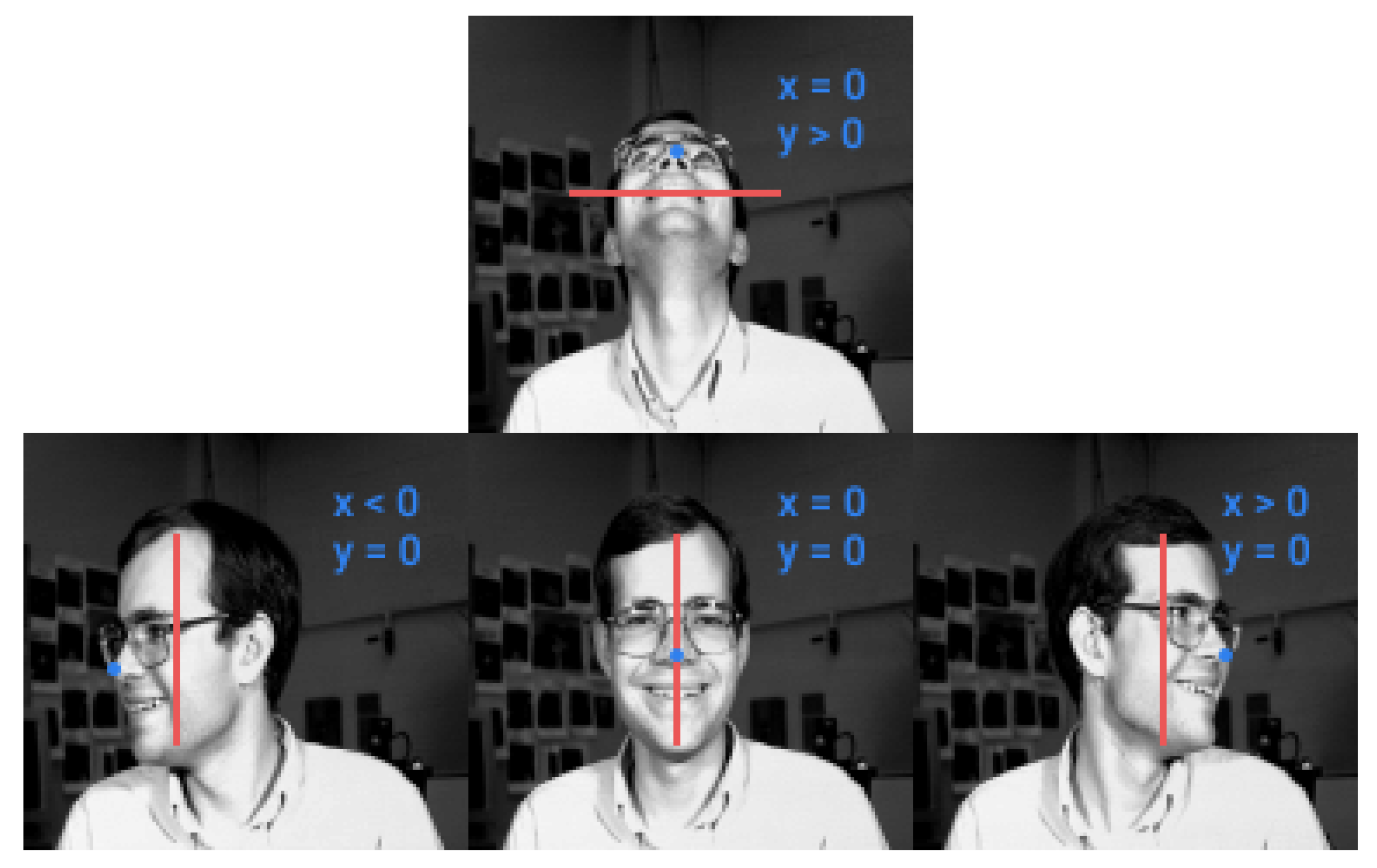
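The Conclusions credit z-score standardization with making facial landmarks comparable. Below is a minimal sketch of that transform. It assumes landmarks arrive as (x, y) pairs, for example MediaPipe's normalized image coordinates, and that each axis is standardized independently over one face. Each coordinate has the per-axis mean subtracted and is divided by the per-axis population standard deviation. The function name `standardize_landmarks` and the per-axis choice are illustrative assumptions, not the toolkit's API.

```python
from statistics import fmean, pstdev


def standardize_landmarks(points):
    """Z-score standardize a list of (x, y) landmark coordinates.

    Assumption: each axis is standardized on its own (per-axis mean and
    population standard deviation over all landmarks of one face), which
    removes translation and per-axis scale from the coordinates.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    mx, my = fmean(xs), fmean(ys)
    sx, sy = pstdev(xs), pstdev(ys)
    if sx == 0 or sy == 0:
        raise ValueError("landmarks are degenerate along one axis")
    return [((x - mx) / sx, (y - my) / sy) for x, y in points]


# Hypothetical example: the same four-point shape at two positions and scales,
# as if the user had moved away from the camera.
near = [(0.40, 0.40), (0.60, 0.40), (0.60, 0.70), (0.40, 0.70)]
far = [(0.10 + 0.5 * x, 0.20 + 0.5 * y) for x, y in near]

a = standardize_landmarks(near)
b = standardize_landmarks(far)
print(all(abs(u - v) < 1e-9 for p, q in zip(a, b) for u, v in zip(p, q)))
```

Because the shifted and shrunken copy maps to the same standardized coordinates, distances measured on standardized landmarks need not depend on where the face sits in the frame or how close the user is to the camera.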

3.2. Face Orientation

3.3. Blink Detection—Adaptive Threshold

3.3.1. EAR with Constant Threshold
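The constant-threshold baseline (CEAR) compares the Eye Aspect Ratio against a fixed cutoff. The EAR definition in the sketch below follows Soukupová and Cech (cited in the References): the two vertical eyelid distances are summed and divided by twice the horizontal eye width. The cutoff of 0.2, the two-frame minimum and the helper names are illustrative assumptions, not values or functions taken from this paper.

```python
from math import dist


def eye_aspect_ratio(p1, p2, p3, p4, p5, p6):
    """EAR from six eye landmarks (Soukupova and Cech ordering).

    p1 and p4 are the eye corners; p2 and p3 lie on the upper lid,
    p6 and p5 on the lower lid directly beneath them.
    """
    vertical = dist(p2, p6) + dist(p3, p5)
    horizontal = dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks_constant(ears, threshold=0.2, min_frames=2):
    """Count blinks as runs of at least `min_frames` consecutive frames with EAR < threshold.

    `threshold` and `min_frames` are hypothetical defaults for illustration.
    """
    blinks, run = 0, 0
    for value in ears:
        if value < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the sequence
        blinks += 1
    return blinks


open_eye = eye_aspect_ratio((0, 0), (1, 0.5), (2, 0.5), (3, 0), (2, -0.5), (1, -0.5))
closed_eye = eye_aspect_ratio((0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1))
print(round(open_eye, 3), round(closed_eye, 3))
print(count_blinks_constant([0.33, 0.31, 0.12, 0.10, 0.30, 0.15, 0.32]))
```

A single fixed cutoff treats every user, camera distance and head pose alike, which is the limitation the adaptive variants address.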

3.3.2. Eyelid Distance with Adaptive Threshold

3.3.3. EAR with Adaptive Threshold
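Both AELD and AEAR replace the fixed cutoff with an adaptive one. The paper's update rule is not reproduced in this section, so the sketch below assumes a simple scheme. The threshold is a fixed fraction (`ratio`) of the median signal over a sliding window of recent open-eye frames. Because the cutoff is relative to the user's own baseline, the same code applies to EAR (unitless) and to eyelid distance (in pixels or normalized units). The class name and the `window`, `ratio` and `min_history` parameters are hypothetical.

```python
from collections import deque
from statistics import median


class AdaptiveBlinkDetector:
    """Flag closed-eye frames with a threshold relative to a rolling baseline.

    Hypothetical rule: a frame is "closed" when the signal (EAR or eyelid
    distance) drops below `ratio` times the median of up to `window` recent
    open-eye frames. The baseline can follow gradual changes in eye shape,
    camera distance or head pose.
    """

    def __init__(self, window=30, ratio=0.7, min_history=5):
        self.history = deque(maxlen=window)
        self.ratio = ratio
        self.min_history = min_history

    def update(self, value):
        """Return True if `value` is below the current adaptive threshold."""
        closed = (
            len(self.history) >= self.min_history
            and value < self.ratio * median(self.history)
        )
        if not closed:
            # Only open-eye frames feed the baseline, so blinks
            # do not drag the threshold down.
            self.history.append(value)
        return closed


detector = AdaptiveBlinkDetector()
signal = [0.30, 0.31, 0.29, 0.30, 0.32, 0.30, 0.12, 0.10, 0.29, 0.31]
flags = [detector.update(v) for v in signal]
print(flags)
```

Excluding flagged frames from the baseline keeps a long blink from lowering the cutoff. The trade-off is that a persistent drop below the cutoff, such as prolonged squinting, is reported as closed rather than absorbed into the baseline.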

3.3.4. Datasets

4. Experimental Results

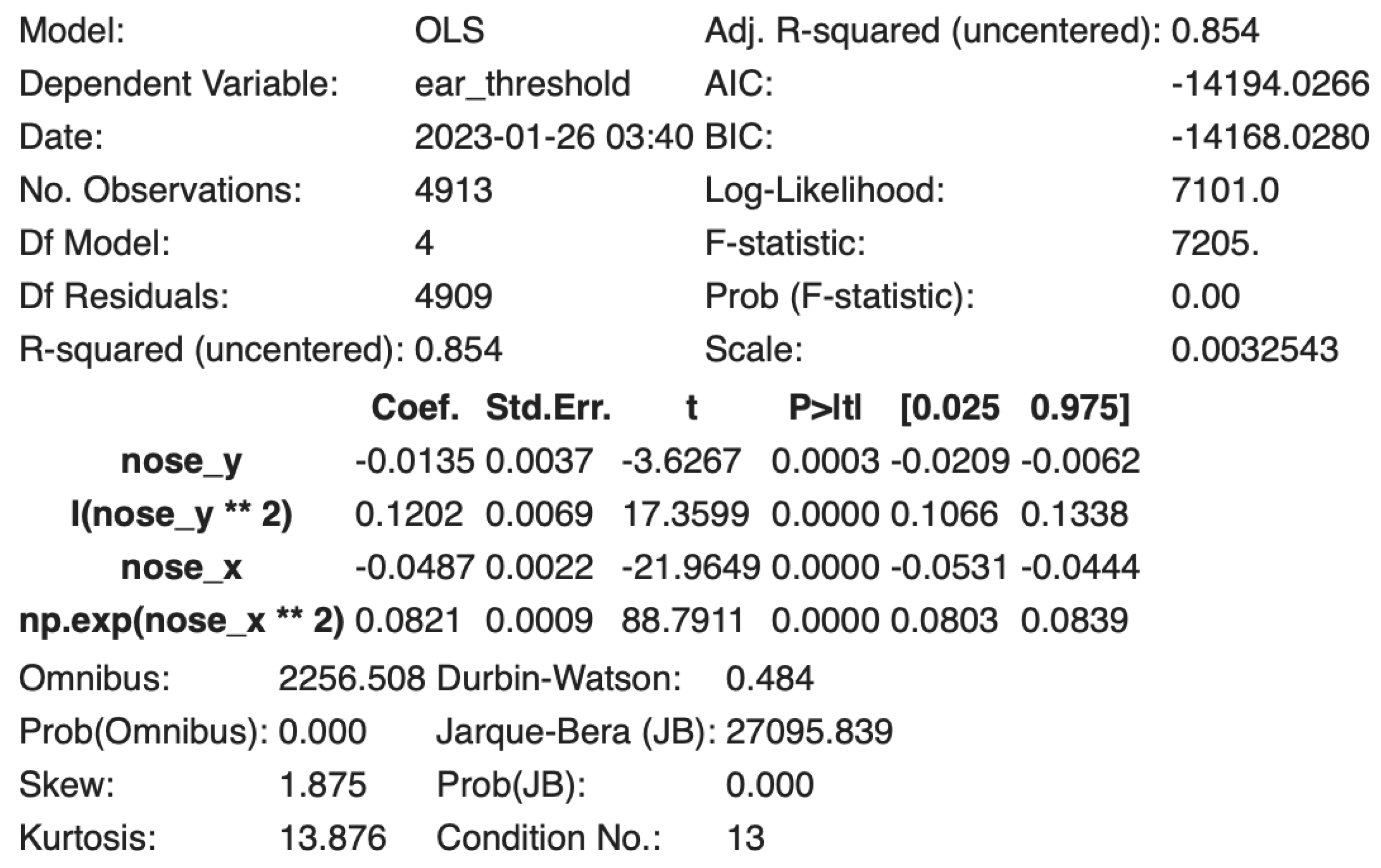

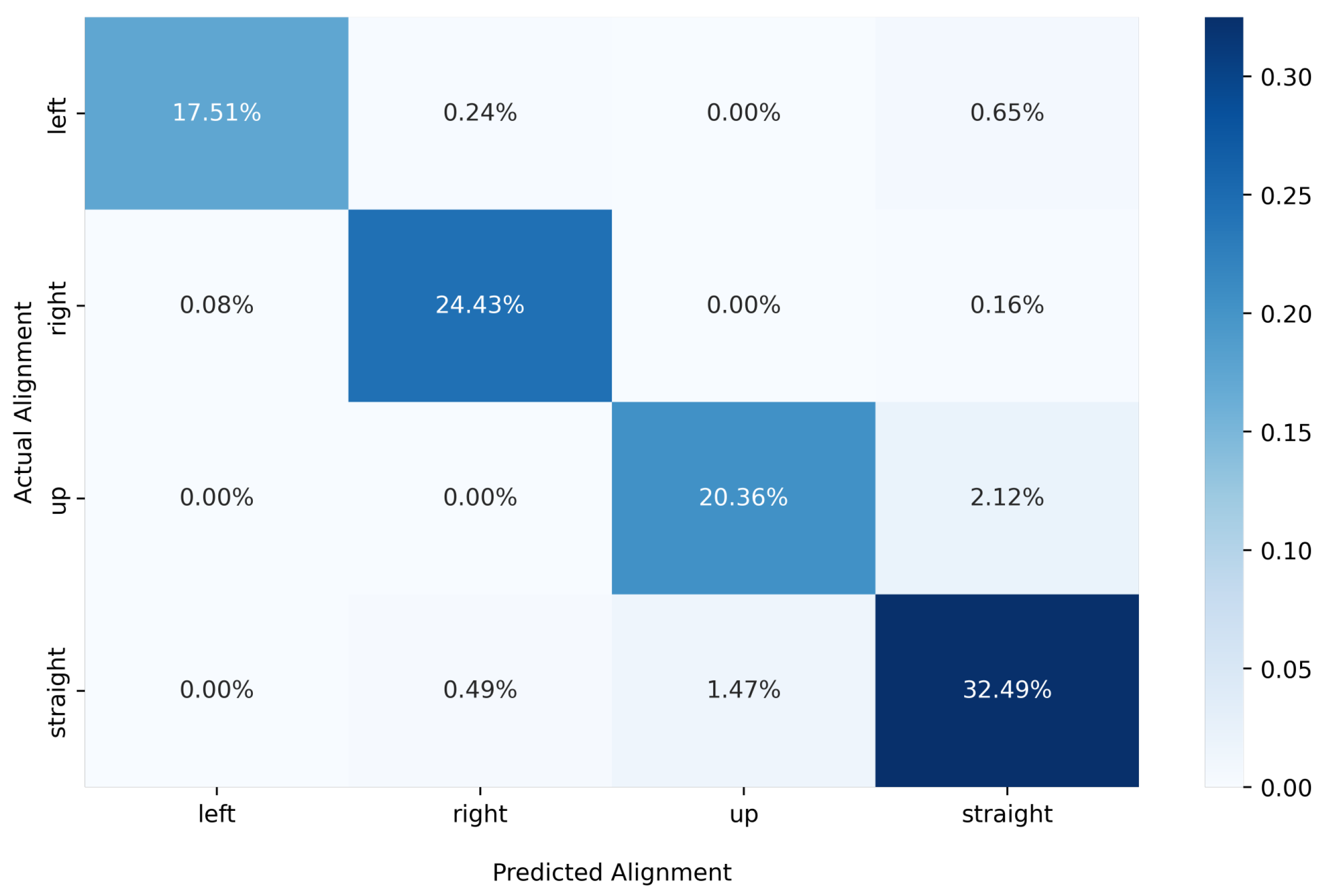

4.1. Face Orientation

4.2. Blink Detection

4.2.1. EAR with Constant Threshold

4.2.2. Eyelid Distance with Adaptive Threshold

4.2.3. EAR with Adaptive Threshold

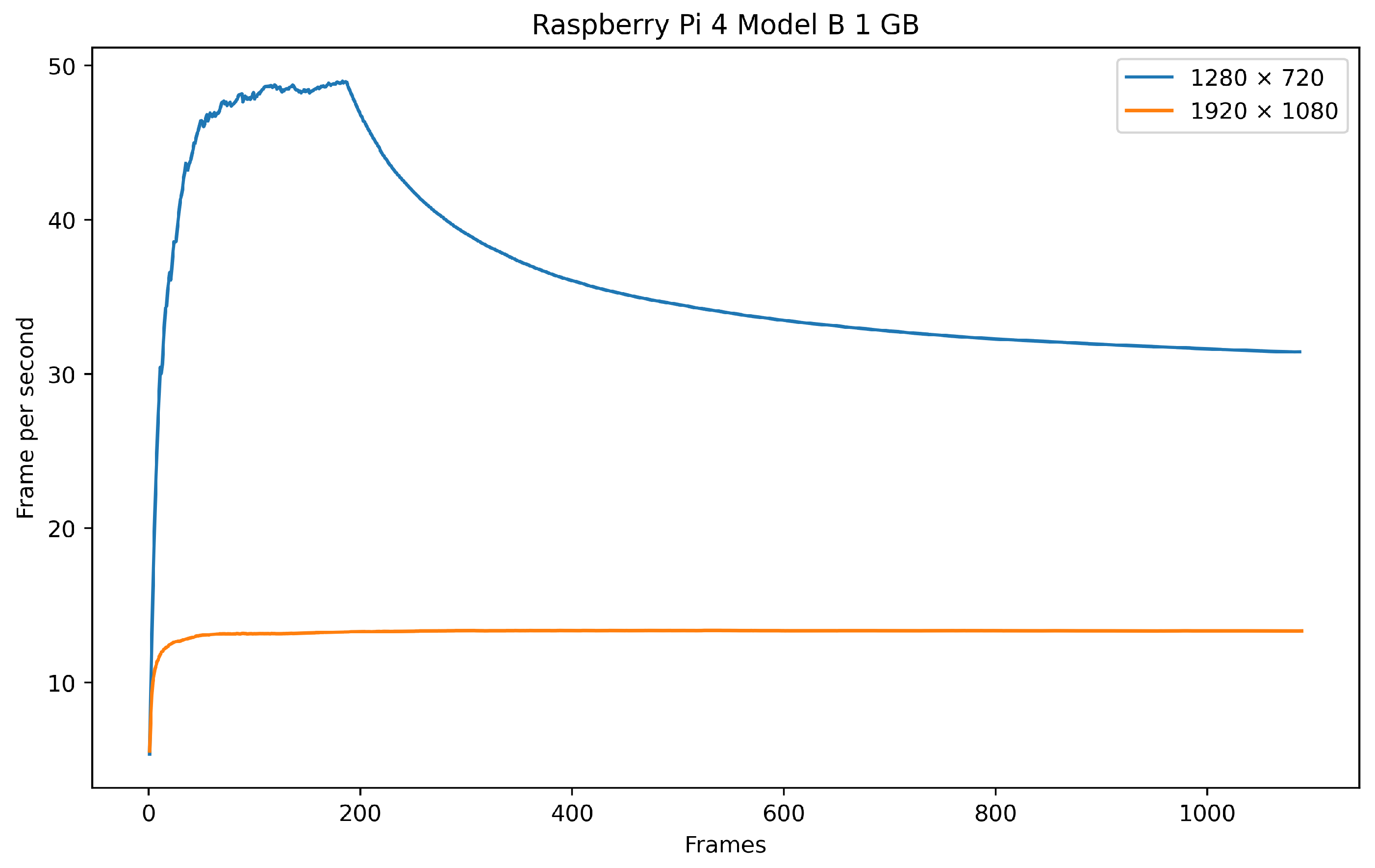

4.3. Runtime Performance

5. Conclusions

- Proposed an initial open-source proctoring toolkit [32] for online engagement analytics, with face orientation and eye blink detection algorithms that run on consumer or edge devices

- Demonstrated the effectiveness of z-score standardization for facial landmarks

- Statistically established the impact of face orientation on eye blink detection

- Improved the F1 score and accuracy of Eye Aspect Ratio (EAR)-based blink detection using an adaptive threshold

- Proposed a simple and efficient face orientation detection method

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Sample Availability

Abbreviations

| Abbreviation | Definition |
|---|---|
| CMU | Carnegie Mellon University (refers to a face dataset) |
| EAR | Eye Aspect Ratio |
| RT-BENE | A Dataset and Baselines for Real-Time Blink Estimation in Natural Environments |
| ANOVA | Analysis of Variance |
| OLS | Ordinary Least Squares |
| CEAR | EAR with Constant Threshold |
| AELD | Eyelid Distance with Adaptive Threshold |
| AEAR | EAR with Adaptive Threshold |
| PnP | Perspective-n-Point |
| POSIT | Pose from Orthography and Scaling with ITerations |

Appendix A. Statistics on Standardized Landmarks

Appendix B. Statistics on Face Orientation

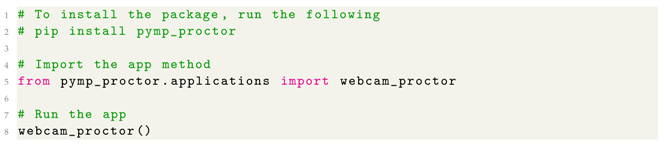
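The Abbreviations list includes ANOVA, and the Conclusions report that the effect of face orientation on eye blinks was established statistically. The exact test design is not given in this section. As a minimal sketch, assume open-eye EAR samples have been grouped by face orientation; the groups and values below are hypothetical. A one-way ANOVA F statistic then compares the spread between group means with the spread within groups. SciPy's `scipy.stats.f_oneway` returns the same statistic together with a p-value.

```python
from statistics import fmean


def one_way_anova_f(*groups):
    """Return the one-way ANOVA F statistic for two or more groups of samples."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    # Between-group sum of squares: distance of each group mean from the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of samples around their own group mean.
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical open-eye EAR samples grouped by face orientation.
frontal = [0.31, 0.30, 0.32, 0.31]
left = [0.27, 0.26, 0.28, 0.27]
down = [0.22, 0.21, 0.23, 0.22]
f_stat = one_way_anova_f(frontal, left, down)
print(round(f_stat, 1))
```

A large F relative to the F distribution with (k - 1, N - k) degrees of freedom indicates that mean EAR differs by orientation. That kind of effect undermines a single constant blink threshold.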

Appendix C. Example Application and Source Code

Listing A1. Example application in Python.

References

1. Iqbal, M. Zoom Revenue and Usage Statistics. 2022. Available online: https://www.businessofapps.com/data/Zoom-statistics/ (accessed on 3 January 2023).

2. Organisation for Economic Co-operation and Development. Teleworking in the COVID-19 Pandemic: Trends and Prospects; OECD Publishing: Paris, France, 2021.

3. KPMG. The Future of Work: A Playbook for the People, Technology and Legal Considerations for a Successful Hybrid Workforce. 2021. Available online: https://assets.kpmg/content/dam/kpmg/ie/pdf/2021/10/ie-kpmg-hybrid-working-playbook.pdf (accessed on 3 January 2023).

4. Hilberath, C.; Kilmann, J.; Lovich, D.; Tzanetti, T.; Bailey, A.; Beck, S.; Kaufman, E.; Khandelwal, B.; Schuler, F.; Woolsey, K. Hybrid work is the new remote work. Boston Consulting Group. 22 September 2020. Available online: https://www.bcg.com/publications/2020/managing-remote-work-and-optimizing-hybrid-working-models (accessed on 3 January 2023).

5. Barrero, J.M.; Bloom, N.; Davis, S.J. 60 Million Fewer Commuting Hours Per Day: How Americans Use Time Saved by Working from Home; University of Chicago, Becker Friedman Institute for Economics: Chicago, IL, USA, 2020.

6. Hussein, M.J.; Yusuf, J.; Deb, A.S.; Fong, L.; Naidu, S. An evaluation of online proctoring tools. Open Prax. 2020, 12, 509–525.

7. Barrero, J.M.; Bloom, N.; Davis, S.J. Why Working from Home Will Stick; Technical Report No. 28731; National Bureau of Economic Research: Cambridge, MA, USA, 2021.

8. Fana, M.; Milasi, S.; Napierala, J.; Fernández-Macías, E.; Vázquez, I.G. Telework, Work Organisation and Job Quality During the COVID-19 Crisis: A Qualitative Study; Technical Report No. 2020/11; JRC Working Papers Series on Labour, Education and Technology; European Commission, Joint Research Centre (JRC): Seville, Spain, 2020. Available online: http://hdl.handle.net/10419/231343 (accessed on 3 January 2023).

9. Russo, D.; Hanel, P.H.; Altnickel, S.; van Berkel, N. Predictors of well-being and productivity among software professionals during the COVID-19 pandemic—A longitudinal study. Empir. Softw. Eng. 2021, 26, 1–63.

10. Jeffery, K.A.; Bauer, C.F. Students’ Responses to Emergency Remote Online Teaching Reveal Critical Factors for All Teaching. J. Chem. Educ. 2020, 97, 2472–2485.

11. Bailenson, J.N. Nonverbal Overload: A Theoretical Argument for the Causes of Zoom Fatigue. Technol. Mind Behav. 2021, 2. Available online: https://tmb.apaopen.org/pub/nonverbal-overload (accessed on 3 January 2023).

12. Elbogen, E.B.; Lanier, M.; Griffin, S.C.; Blakey, S.M.; Gluff, J.A.; Wagner, H.R.; Tsai, J. A National Study of Zoom Fatigue and Mental Health During the COVID-19 Pandemic: Implications for Future Remote Work. Cyberpsychol. Behav. Soc. Netw. 2022, 25, 409–415.

13. Fauville, G.; Luo, M.; Queiroz, A.C.M.; Bailenson, J.N.; Hancock, J. Zoom Exhaustion & Fatigue Scale. 2021. Available online: https://ssrn.com/abstract=3786329 (accessed on 3 January 2023).

14. Fauville, G.; Luo, M.; Queiroz, A.C.; Bailenson, J.; Hancock, J. Nonverbal Mechanisms Predict Zoom Fatigue and Explain Why Women Experience Higher Levels than Men. SSRN. 14 April 2021. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3820035 (accessed on 9 December 2022).

15. Todd, R.W. Teachers’ perceptions of the shift from the classroom to online teaching. Int. J. TESOL Stud. 2020, 2, 4–16.

16. Perez-Mata, M.N.; Read, J.D.; Diges, M. Effects of divided attention and word concreteness on correct recall and false memory reports. Memory 2002, 10, 161–177.

17. Lin, C.H.; Wu, W.H.; Lee, T.N. Using an online learning platform to show students’ achievements and attention in the video lecture and online practice learning environments. Educ. Technol. Soc. 2022, 25, 155–165. Available online: https://www.jstor.org/stable/48647037 (accessed on 3 January 2023).

18. Lodge, J.M.; Harrison, W.J. Focus: Attention science: The role of attention in learning in the digital age. Yale J. Biol. Med. 2019, 92, 21. Available online: https://pubmed.ncbi.nlm.nih.gov/30923470 (accessed on 3 January 2023).

19. Tiong, L.C.O.; Lee, H.J. E-cheating Prevention Measures: Detection of Cheating at Online Examinations Using Deep Learning Approach–A Case Study. arXiv 2021, arXiv:2101.09841.

20. Coghlan, S.; Miller, T.; Paterson, J. Good proctor or “big brother”? Ethics of online exam supervision technologies. Philos. Technol. 2021, 34, 1581–1606.

21. Haga, S.; Rappeneker, J. Developing social presence in online classes: A Japanese higher education context. J. Foreign Lang. Educ. Res. 2021, 2, 174–183.

22. Arnò, S.; Galassi, A.; Tommasi, M.; Saggino, A.; Vittorini, P. State-of-the-art of commercial proctoring systems and their use in academic online exams. Int. J. Distance Educ. Technol. 2021, 19, 55–76.

23. Nigam, A.; Pasricha, R.; Singh, T.; Churi, P. A systematic review on AI-based proctoring systems: Past, present and future. Educ. Inf. Technol. 2021, 26, 6421–6445.

24. Atoum, Y.; Chen, L.; Liu, A.X.; Hsu, S.D.; Liu, X. Automated online exam proctoring. IEEE Trans. Multimed. 2017, 19, 1609–1624.

25. Jia, J.; He, Y. The design, implementation and pilot application of an intelligent online proctoring system for online exams. Interact. Technol. Smart Educ. 2021, 19, 112–120.

26. Agarwal, V. Proctoring-AI. 2020. Available online: https://github.com/vardanagarwal/Proctoring-AI.git (accessed on 3 January 2023).

27. Namaye, V.; Kanade, A.; Nankani, T. Aankh. 2022. Available online: https://github.com/tusharnankani/Aankh.git (accessed on 3 January 2023).

28. Fernandes, A.; Fernandes, A.; D’silva, C.; D’cunha, S. GodsEye: Smart Virtual Exam System. 2022. Available online: https://github.com/AgnellusX1/GodsEye.git (accessed on 3 January 2023).

29. Baltrušaitis, T.; Robinson, P.; Morency, L.P. OpenFace: An open source facial behavior analysis toolkit. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–10.

30. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. MediaPipe: A Framework for Building Perception Pipelines. arXiv 2019, arXiv:1906.08172.

31. Kartynnik, Y.; Ablavatski, A.; Grishchenko, I.; Grundmann, M. Real-time Facial Surface Geometry from Monocular Video on Mobile GPUs. arXiv 2019, arXiv:1907.06724.

32. Saw, T. MediaPipe Proctoring Toolkit; Zenodo: Genève, Switzerland, 2023.

33. Haga, A.; Takahashi, W.; Aoki, S.; Nawa, K.; Yamashita, H.; Abe, O.; Nakagawa, K. Standardization of imaging features for radiomics analysis. J. Med. Investig. 2019, 66, 35–37.

34. Verhulst, S. Improving comparability between qPCR-based telomere studies. Mol. Ecol. Resour. 2020, 20, 11–13.

35. Mohamad, I.B.; Usman, D. Standardization and its effects on K-means clustering algorithm. Res. J. Appl. Sci. Eng. Technol. 2013, 6, 3299–3303.

36. Milligan, G.W.; Cooper, M.C. A study of standardization of variables in cluster analysis. J. Classif. 1988, 5, 181–204.

37. Ye, M.; Zhang, W.; Cao, P.; Liu, K. Driver fatigue detection based on residual channel attention network and head pose estimation. Appl. Sci. 2021, 11, 9195.

38. Venturelli, M.; Borghi, G.; Vezzani, R.; Cucchiara, R. Deep head pose estimation from depth data for in-car automotive applications. In Understanding Human Activities Through 3D Sensors—Second International Workshop (UHA3DS 2016), Held in Conjunction with the 23rd International Conference on Pattern Recognition (ICPR 2016), Cancun, Mexico, 4 December 2016; Springer: Cham, Switzerland, 2016; pp. 74–85.

39. Murphy-Chutorian, E.; Trivedi, M.M. Head pose estimation and augmented reality tracking: An integrated system and evaluation for monitoring driver awareness. IEEE Trans. Intell. Transp. Syst. 2010, 11, 300–311.

40. Indi, C.S.; Pritham, K.; Acharya, V.; Prakasha, K. Detection of Malpractice in E-exams by Head Pose and Gaze Estimation. Int. J. Emerg. Technol. Learn. 2021, 16, 47–60.

41. Prathish, S.; Narayanan, A.S.; Bijlani, K. An intelligent system for online exam monitoring. In Proceedings of the 2016 International Conference on Information Science (ICIS), Kochi, India, 12–13 August 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 138–143.

42. Chuang, C.Y.; Craig, S.D.; Femiani, J. Detecting probable cheating during online assessments based on time delay and head pose. High. Educ. Res. Dev. 2017, 36, 1123–1137.

43. Yang, T.Y.; Chen, Y.T.; Lin, Y.Y.; Chuang, Y.Y. FSA-Net: Learning Fine-Grained Structure Aggregation for Head Pose Estimation From a Single Image. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1087–1096.

44. Li, H.; Xu, M.; Wang, Y.; Wei, H.; Qu, H. A Visual Analytics Approach to Facilitate the Proctoring of Online Exams. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI ’21), Yokohama, Japan, 8–13 May 2021; Association for Computing Machinery: New York, NY, USA, 2021.

45. Ruiz, N.; Chong, E.; Rehg, J.M. Fine-grained head pose estimation without keypoints. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 2074–2083.

46. Murphy-Chutorian, E.; Trivedi, M.M. Head Pose Estimation in Computer Vision: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 607–626.

47. Narayanan, A.; Kaimal, R.M.; Bijlani, K. Yaw estimation using cylindrical and ellipsoidal face models. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2308–2320.

48. Ju, K.; Shin, B.S.; Klette, R. Novel Backprojection Method for Monocular Head Pose Estimation. Int. J. Fuzzy Log. Intell. Syst. 2013, 13, 50–58.

49. Shao, M.; Sun, Z.; Ozay, M.; Okatani, T. Improving head pose estimation with a combined loss and bounding box margin adjustment. In Proceedings of the 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), Lille, France, 14–18 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–5.

50. Baluja, S.; Sahami, M.; Rowley, H.A. Efficient face orientation discrimination. In Proceedings of the 2004 International Conference on Image Processing (ICIP’04), Singapore, 24–27 October 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 1, pp. 589–592.

51. Królak, A.; Strumiłło, P. Eye-blink detection system for human–computer interaction. Univers. Access Inf. Soc. 2012, 11, 409–419.

52. Jung, T.; Kim, S.; Kim, K. DeepVision: Deepfakes Detection Using Human Eye Blinking Pattern. IEEE Access 2020, 8, 83144–83154.

53. Li, Y.; Chang, M.C.; Lyu, S. In ictu oculi: Exposing AI created fake videos by detecting eye blinking. In Proceedings of the 2018 IEEE International Workshop on Information Forensics and Security (WIFS), Hong Kong, China, 11–13 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–7.

54. Danisman, T.; Bilasco, I.M.; Djeraba, C.; Ihaddadene, N. Drowsy driver detection system using eye blink patterns. In Proceedings of the 2010 International Conference on Machine and Web Intelligence, Algiers, Algeria, 3–5 October 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 230–233.

55. Divjak, M.; Bischof, H. Eye Blink Based Fatigue Detection for Prevention of Computer Vision Syndrome. In Proceedings of the MVA, Yokohama, Japan, 20–22 May 2009; pp. 350–353.

56. Kim, K.W.; Hong, H.G.; Nam, G.P.; Park, K.R. A study of deep CNN-based classification of open and closed eyes using a visible light camera sensor. Sensors 2017, 17, 1534.

57. Sukno, F.M.; Pavani, S.K.; Butakoff, C.; Frangi, A.F. Automatic assessment of eye blinking patterns through statistical shape models. In Proceedings of the 7th International Conference on Computer Vision Systems (ICVS 2009), Liège, Belgium, 13–15 October 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 33–42.

58. Soukupová, T.; Cech, J. Real-Time Eye Blink Detection using Facial Landmarks. In Proceedings of the 21st Computer Vision Winter Workshop, Rimske Toplice, Slovenia, 3–5 February 2016.

59. Al-gawwam, S.; Benaissa, M. Robust Eye Blink Detection Based on Eye Landmarks and Savitzky–Golay Filtering. Information 2018, 9, 93.

60. Ibrahim, B.R.; Khalifa, F.M.; Zeebaree, S.R.M.; Othman, N.A.; Alkhayyat, A.; Zebari, R.R.; Sadeeq, M.A.M. Embedded System for Eye Blink Detection Using Machine Learning Technique. In Proceedings of the 2021 1st Babylon International Conference on Information Technology and Science (BICITS), Babil, Iraq, 28–29 April 2021; pp. 58–62.

61. Liu, J.; Li, D.; Wang, L.; Xiong, J. BlinkListener: “Listen” to Your Eye Blink Using Your Smartphone. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2021, 5, 1–27.

62. Wang, J.; Cao, J.; Hu, D.; Jiang, T.; Gao, F. Eye blink artifact detection with novel optimized multi-dimensional electroencephalogram features. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1494–1503.

63. Hoffmann, S.; Falkenstein, M. The correction of eye blink artefacts in the EEG: A comparison of two prominent methods. PLoS ONE 2008, 3, e3004.

64. Abo-Zahhad, M.; Ahmed, S.M.; Abbas, S.N. A new multi-level approach to EEG based human authentication using eye blinking. Pattern Recognit. Lett. 2016, 82, 216–225.

65. Bulling, A.; Ward, J.A.; Gellersen, H.; Tröster, G. Eye movement analysis for activity recognition using electrooculography. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 741–753.

66. Ishimaru, S.; Kunze, K.; Uema, Y.; Kise, K.; Inami, M.; Tanaka, K. Smarter eyewear: Using commercial EOG glasses for activity recognition. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication, Seattle, WA, USA, 13–17 September 2014; pp. 239–242.

67. Kosmyna, N.; Morris, C.; Nguyen, T.; Zepf, S.; Hernandez, J.; Maes, P. AttentivU: Designing EEG and EOG compatible glasses for physiological sensing and feedback in the car. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands, 21–25 September 2019; pp. 355–368.

68. Wilson, H.R.; Wilkinson, F.; Lin, L.M.; Castillo, M. Perception of head orientation. Vis. Res. 2000, 40, 459–472.

69. Cheng, Q.; Wang, W.; Jiang, X.; Hou, S.; Qin, Y. Assessment of Driver Mental Fatigue Using Facial Landmarks. IEEE Access 2019, 7, 150423–150434.

70. Cortacero, K.; Fischer, T.; Demiris, Y. RT-BENE: A Dataset and Baselines for Real-Time Blink Estimation in Natural Environments (Source). In Proceedings of the IEEE International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019. Available online: https://zenodo.org/record/3685316#.Y2nO9C0RpQI (accessed on 3 January 2023).

71. Fogelton, A.; Benesova, W. Eye blink detection based on motion vectors analysis. Comput. Vis. Image Underst. 2016, 148, 23–33.

72. Drutarovsky, T.; Fogelton, A. Eye Blink Detection Using Variance of Motion Vectors. In Proceedings of the Computer Vision—ECCV 2014 Workshops, Zurich, Switzerland, 6–7, 12 September 2014; Agapito, L., Bronstein, M.M., Rother, C., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 436–448.

73. Mitchell, T. CMU Face Images Data Set. Donated to UCI Machine Learning Repository. 1999. Available online: https://archive.ics.uci.edu/ml/datasets/cmu+face+images (accessed on 3 January 2023).

74. ROMAN, K. Selfies and Video Dataset (4000 People). 2022. Available online: https://www.kaggle.com/datasets/tapakah68/selfies-and-video-dataset-4-000-people (accessed on 3 January 2023).

75. King, D.E. Dlib-ml: A machine learning toolkit. J. Mach. Learn. Res. 2009, 10, 1755–1758.

76. Dua, D.; Graff, C. UCI Machine Learning Repository. 2017. Available online: http://archive.ics.uci.edu/ml (accessed on 3 January 2023).

77. Saw, T. Sample Application for MediaPipe Proctoring Solution. Available online: https://hakkaix.com/aiml/proctoring/sample (accessed on 3 January 2023).

| Method | Accuracy (%) | F1 (%) | Precision (%) | Recall (%) | AUC-ROC (%) |
|---|---|---|---|---|---|
| CEAR | 89.59 | 30.49 | 19.34 | 73.27 | 81.59 |
| AELD | 97.59 | 53.14 | 46.43 | 62.53 | 80.44 |
| AEAR | 97.53 | 51.65 | 45.18 | 60.94 | 79.63 |
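The results report accuracy, F1, precision, recall and AUC-ROC in percent. The sketch below computes the four threshold-based metrics, assuming frame-level binary labels (1 = eye closed). Whether the paper scores individual frames or whole blink events is not stated here. AUC-ROC is omitted because it needs continuous scores, such as raw EAR values, rather than hard labels; scikit-learn's `roc_auc_score` computes it. The function name and the label sequences are hypothetical.

```python
def blink_metrics(y_true, y_pred):
    """Frame-level accuracy, precision, recall and F1, in percent, for binary blink labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    tn = sum(1 for t, p in zip(y_true, y_pred) if not t and not p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    scores = [("accuracy", accuracy), ("precision", precision), ("recall", recall), ("f1", f1)]
    return {name: round(100 * value, 2) for name, value in scores}


# Hypothetical: 20 frames with only 3 true blink frames, since blinks are rare.
y_true = [0] * 8 + [1, 1, 1] + [0] * 9
y_pred = [0] * 6 + [1, 0, 1, 1, 0] + [0] * 5 + [1, 1] + [0] * 2
print(blink_metrics(y_true, y_pred))
```

Because blink frames are rare, accuracy is dominated by the many open-eye frames. Precision and F1 depend only on the few positive frames. This is why the two can diverge sharply, as in the reported results, where accuracy ranges from about 85% to 98% while F1 ranges from about 11% to 77%.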

| Dataset | Accuracy (%) | F1 (%) | Precision (%) | Recall (%) | AUC-ROC (%) |
|---|---|---|---|---|---|
| RT-BENE | 89.23 | 24.64 | 14.86 | 72.02 | 80.84 |
| Eyeblink8 | 84.80 | 10.58 | 5.94 | 47.8 | 66.65 |
| TalkingFace | 94.74 | 54.26 | 37.23 | 100 | 97.28 |

| Dataset | Accuracy (%) | F1 (%) | Precision (%) | Recall (%) | AUC-ROC (%) |
|---|---|---|---|---|---|
| RT-BENE | 97.63 | 56.78 | 51.26 | 63.63 | 81.06 |
| Eyeblink8 | 97 | 26.34 | 24.5 | 28.47 | 63.39 |
| TalkingFace | 98.15 | 76.31 | 63.53 | 95.51 | 96.87 |

| Dataset | Accuracy (%) | F1 (%) | Precision (%) | Recall (%) | AUC-ROC (%) |
|---|---|---|---|---|---|
| RT-BENE | 97.11 | 51.85 | 43.75 | 63.63 | 80.79 |
| Eyeblink8 | 97.22 | 26.03 | 26.13 | 25.94 | 62.26 |
| TalkingFace | 98.27 | 77.08 | 65.68 | 93.26 | 95.85 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Thiha, S.; Rajasekera, J. Efficient Online Engagement Analytics Algorithm Toolkit That Can Run on Edge. Algorithms 2023, 16, 86. https://doi.org/10.3390/a16020086

Thiha S, Rajasekera J. Efficient Online Engagement Analytics Algorithm Toolkit That Can Run on Edge. Algorithms. 2023; 16(2):86. https://doi.org/10.3390/a16020086

Chicago/Turabian Style: Thiha, Saw, and Jay Rajasekera. 2023. "Efficient Online Engagement Analytics Algorithm Toolkit That Can Run on Edge" Algorithms 16, no. 2: 86. https://doi.org/10.3390/a16020086

APA Style: Thiha, S., & Rajasekera, J. (2023). Efficient Online Engagement Analytics Algorithm Toolkit That Can Run on Edge. Algorithms, 16(2), 86. https://doi.org/10.3390/a16020086