1. Introduction

Portfolio optimization is a complex problem not only in the depth of the topics that it covers, but also in its breadth. It is the process of determining which assets to include in a portfolio while simultaneously maximizing profit and minimizing risk. To illustrate the investment process, consider the game of Monopoly. In the game, players purchase property. When a player lands on another player’s property, that player must then pay the owner a fee. The more expensive the property purchased is, the higher the fees will be. Players must, therefore, strategize about which properties to buy. In some cases, a player might take a risk and purchase an expensive property, but unfortunately, it is hardly ever visited by other players. In this scenario, the risk does not pay off and possibly jeopardizes the player’s position in the game. Players must determine what the best possible way to spend their money would be in order to maximize their profits without going bankrupt.

The real world is much more complex, with many moving parts, but it is similar to Monopoly in that investing can be a risky venture. However, it is possible that an asset’s value increases significantly, making it a worthwhile investment. Identifying which asset or collection of assets (known as a portfolio) would yield an optimal balance between risk and reward is not an easy task. Moreover, there may exist multiple, but equally good, portfolios that have different risk and return characteristics, which further complicates the task. Lastly, when constraints that introduce nonlinearity and non-convexity (such as boundary constraints and cardinality constraints) are added, the problem becomes NP-hard [1,2,3]. Thus, approaches such as quadratic programming cannot be efficiently utilized to obtain solutions.

Meta-heuristics are computationally efficient and effective approaches to obtaining good-quality solutions for a variety of portfolio models [3]. Typically, solutions are represented by fixed-length vectors of floats, where the elements in a vector correspond to asset weights. Unfortunately, the performance of fixed-length vector meta-heuristics deteriorates for larger portfolio optimization problems [4]. An alternative approach is to redefine the portfolio problem as a set-based problem where a subset of assets is selected and then the weights of these assets are optimized. For example, hybridization approaches that integrate quadratic programming with genetic algorithms (GAs) have been shown to increase performance for constrained portfolio optimization problems [5,6,7,8,9]. A new set-based approach, set-based particle swarm optimization (SBPSO) for portfolio optimization, uses particle swarm optimization (PSO) to optimize asset weights instead of quadratic programming and has demonstrated good performance for the portfolio optimization problem [10].

Single-objective portfolio optimization requires a trade-off coefficient to be specified in order to balance the two objectives, i.e., risk and return. A collection of equally good but different solutions can then be obtained by solving the single-objective optimization problem for various trade-off coefficient values. However, a more sophisticated and appropriate approach would be to use a multi-objective optimization (MOO) algorithm to identify an equally spread set of non-dominated solutions, e.g., multi-guide particle swarm optimization (MGPSO). MGPSO is a multi-swarm multi-objective PSO algorithm that uses a shared archive to store non-dominated solutions found by the swarms [11].
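The trade-off sweep described above can be illustrated with a minimal sketch. It assumes a weighted-sum mean-variance objective of the form `tradeoff * risk - (1 - tradeoff) * return`; the exact form of the paper's model is not shown in this section, so that form, the function names, and the two-asset data are all hypothetical.

```python
def mean_variance(weights, mean_returns, cov, tradeoff):
    """Assumed scalarized objective (minimized): tradeoff*risk - (1-tradeoff)*return."""
    n = len(weights)
    risk = sum(weights[i] * weights[j] * cov[i][j] for i in range(n) for j in range(n))
    ret = sum(w * r for w, r in zip(weights, mean_returns))
    return tradeoff * risk - (1.0 - tradeoff) * ret

# Hypothetical two-asset data, for illustration only.
mean_returns = [0.10, 0.04]
cov = [[0.09, 0.01], [0.01, 0.01]]

# Coarse grid of candidate portfolios whose weights sum to 1.
candidates = [[k / 10.0, 1.0 - k / 10.0] for k in range(11)]

def best_for(tradeoff):
    """Best candidate for one trade-off value (one single-objective solve)."""
    return min(candidates, key=lambda w: mean_variance(w, mean_returns, cov, tradeoff))

# One solve per trade-off value yields a collection of different portfolios.
front = [best_for(t / 4.0) for t in range(5)]
```

Each trade-off value needs its own solve, which is the cost a multi-objective algorithm avoids by searching for the whole set of non-dominated portfolios at once.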

This paper proposes a new approach to multi-objective portfolio optimization, multi-guide set-based particle swarm optimization (MGSBPSO), that combines elements of SBPSO with MGPSO. The novelty of the proposed approach is that it can identify multiple but equally good solutions to the portfolio optimization problem with the scaling benefits of a set-based approach. Furthermore, the proposed approach identifies subsets of assets to be included in the portfolio, leading to a reduction in the dimensionality of the problem. Lastly, MGSBPSO is the first MOO approach to SBPSO.

The performance of MGSBPSO for portfolio optimization is investigated and compared with that of other multi-objective algorithms, namely, MGPSO, non-dominated sorting genetic algorithm II (NSGA-II) [12], and strength Pareto evolutionary algorithm 2 (SPEA2) [13]. The single-objective SBPSO is also included in the performance comparisons as a baseline benchmark and to evaluate whether SBPSO is competitive amongst multi-objective algorithms. NSGA-II and SPEA2 were selected to compare with MGSBPSO, since these algorithms had been used extensively for portfolio optimization before [14,15,16,17,18,19,20]. It should also be noted that this paper is the first to apply MGPSO to the portfolio optimization problem.

The main findings of this paper are:

MGSBPSO is capable of identifying non-dominated solutions to several portfolio optimization problems of varying dimensionalities.

The single-objective SBPSO can obtain results (over a number of runs) that are just as good as those obtained by multi-objective algorithms.

NSGA-II and SPEA2 obtain good-quality solutions, although they are not as diverse as the solutions found by SBPSO, MGPSO, and MGSBPSO.

MGPSO using a tuning-free approach [21] performs similarly to NSGA-II and SPEA2 using tuned control parameter values.

MGSBPSO scales to larger portfolio problems better than MGPSO, NSGA-II, and SPEA2.

The remainder of this paper is organized as follows: The necessary background for portfolio optimization is given in Section 2. Section 3 details the algorithms used in this paper. Section 4 proposes MGSBPSO for portfolio optimization. The empirical process for determining the performance of the proposed approach is explained in Section 5, and the results are presented in Section 6. Section 7 concludes the paper. Ideas for future work are given in Section 8.

3. Optimization Algorithms for Portfolio Optimization

There have been many applications of optimization algorithms to both the single-objective and multi-objective portfolio optimization problems [3]. This section presents a subset of meta-heuristics that have been applied to portfolio optimization. Section 3.1 introduces PSO, a popular approach to single-objective portfolio optimization. Set-based particle swarm optimization, a recently proposed approach to single-objective optimization [10], is explained in Section 3.2. Multi-guide particle swarm optimization (this paper is the first to apply MGPSO to multi-objective portfolio optimization) is discussed in Section 3.3. Section 3.4 and Section 3.5 present NSGA-II and SPEA2, respectively, which have previously been applied to multi-objective portfolio optimization [14,15,16,17,18,19,20].

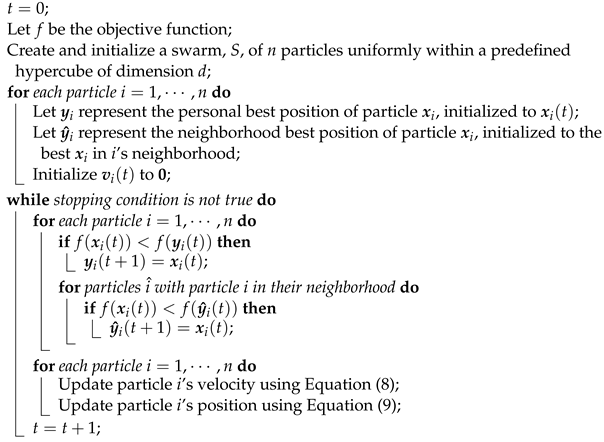

3.1. Particle Swarm Optimization

PSO, which was first proposed in 1995 by Eberhart and Kennedy, is a single-objective optimization algorithm [23]. The algorithm iteratively updates its collection of particles (referred to as a swarm) to find solutions to the optimization problem under consideration. The position of a particle, which is randomly initialized, is a candidate solution to the problem. In the case of portfolio optimization, the position of a particle represents the weights used in the calculation of Equation (3). Each particle also has a velocity (initially a vector of zeros) that guides the particle to more promising areas of the search space. The position of a particle is updated with its velocity at each time step t to produce a new candidate solution. The velocity of a particle is influenced by the previous velocity of the particle and by social and cognitive guides. The cognitive guide is a particle’s personal best-known solution that has been found thus far, and the social guide is the best-known solution found thus far within a neighborhood (network) of particles. A global network means that the social guide is the best-known solution found by the entire swarm thus far, which is what is used in this paper. This paper also uses an inertia-weighted velocity update to regulate the trade-off between exploitation and exploration [24]. The velocity update is defined as

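$$\mathbf{v}_i(t+1) = w\,\mathbf{v}_i(t) + c_1 \mathbf{r}_1(t) \odot \left(\mathbf{y}_i(t) - \mathbf{x}_i(t)\right) + c_2 \mathbf{r}_2(t) \odot \left(\hat{\mathbf{y}}_i(t) - \mathbf{x}_i(t)\right)$$

where $\mathbf{v}_i$ is the velocity of particle $i$; $w$ is the inertia weight; $c_1$ and $c_2$ are acceleration coefficients that control the influence of the cognitive and social guides, respectively; $\mathbf{r}_1$ and $\mathbf{r}_2$ are vectors of random values sampled from a standard uniform distribution in the range [0, 1]; $\mathbf{y}_i$ is the cognitive guide of particle $i$; $\hat{\mathbf{y}}_i$ is the social guide of particle $i$. A particle’s position, $\mathbf{x}_i$, is updated using

$$\mathbf{x}_i(t+1) = \mathbf{x}_i(t) + \mathbf{v}_i(t+1)$$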

Algorithm 1 contains pseudo-code for PSO.

| Algorithm 1: Particle Swarm Optimization |

![Algorithms 16 00062 i001 Algorithms 16 00062 i001]() |
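As a rough illustration of the inertia-weighted update described in this section, the following sketch implements a global-best PSO in plain Python. The control parameter values (`w=0.729`, `c1=c2=1.494`), the initialization range, and the function name `pso` are assumptions chosen for the example and are not taken from the paper.

```python
import random

def pso(f, dim, n_particles=20, iters=200, w=0.729, c1=1.494, c2=1.494, seed=0):
    """Minimize f with an inertia-weighted, global-best PSO (illustrative sketch)."""
    rng = random.Random(seed)
    # Positions are randomly initialized; velocities start as zero vectors.
    x = [[rng.uniform(0.0, 1.0) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    y = [p[:] for p in x]                      # cognitive guides (personal bests)
    fy = [f(p) for p in x]
    g = min(range(n_particles), key=lambda i: fy[i])
    y_hat, f_hat = y[g][:], fy[g]              # social guide (best of the swarm)
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (y[i][d] - x[i][d])
                           + c2 * r2 * (y_hat[d] - x[i][d]))
                x[i][d] += v[i][d]             # position update
            fx = f(x[i])
            if fx < fy[i]:                     # update the cognitive guide
                y[i], fy[i] = x[i][:], fx
                if fx < f_hat:                 # update the social guide
                    y_hat, f_hat = x[i][:], fx
    return y_hat, f_hat
```

For portfolio weights, a constraint-handling step (for example, normalizing the weights so that they sum to one) would also be needed; it is omitted here because the constraint equations are not part of this section.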

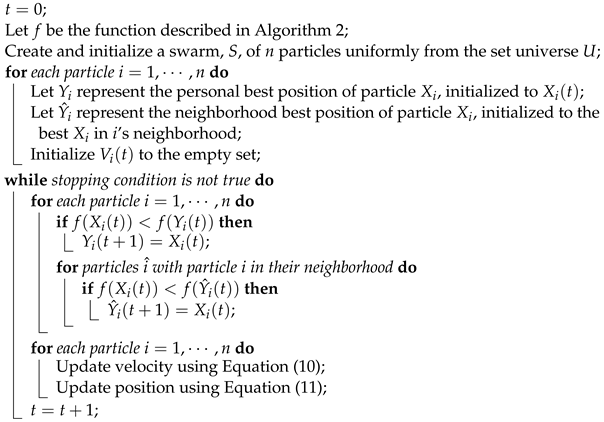

3.2. Set-Based Particle Swarm Optimization

PSO was designed to solve continuous-valued optimization problems. However, there are many real-world optimization problems that do not have continuous-valued decision variables, e.g., feature selection problems, assignment problems, and scheduling problems. The set-based PSO (SBPSO) algorithm adapts PSO to find solutions to combinatorial optimization problems where solutions can be represented as sets [25]. SBPSO uses sets to represent particle positions, which allows for positions (i.e., solutions) of varying sizes.

SBPSO was proposed, and later improved, for portfolio optimization [26]. SBPSO for portfolio optimization is a two-part search process, where (1) subsets of assets are selected and (2) the weights of these assets are optimized. The asset weights are optimized using PSO according to Equation (3). The PSO used for weight optimization runs until it converges, i.e., until there is no change in the objective function value over three iterations. The result of this weight optimization stage (summarized in Algorithm 2) is the best solution found by the PSO. The objective function value of the best solution is assigned to the corresponding set–particle. In addition, if there are any zero-weighted assets in the best solution, the corresponding assets in the set–particle are removed. There is a special case where a set–particle contains only one asset. In such a case, the objective function is immediately calculated, since the asset can only ever have a weight of 1.0 given Equation (6).

| Algorithm 2: Weight Optimization for Set-Based Portfolio Optimization |

| Let t represent the current iteration; |

| Let f be the objective function; |

| Let represent set–particle i; |

| Minimize f using Algorithm 1 for iterations with assets in ; |

| ; |

| Return the best objective function value and corresponding weights found by Algorithm 1;

|
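The bookkeeping around the weight optimization stage (the convergence test, the removal of zero-weighted assets, and the single-asset special case) can be sketched as follows. The helper names, the tolerance values, and the `objective` and `optimize_weights` callables are hypothetical; reading "no change over three iterations" as three consecutive unchanged transitions is also an interpretation.

```python
def has_converged(history, patience=3, tol=1e-12):
    """True when the best objective value is unchanged over `patience` iterations."""
    if len(history) <= patience:
        return False
    recent = history[-(patience + 1):]
    return max(recent) - min(recent) <= tol

def prune_zero_weights(assets, weights, eps=1e-9):
    """Drop assets whose optimized weight is (numerically) zero."""
    kept = [(a, w) for a, w in zip(assets, weights) if w > eps]
    return [a for a, _ in kept], [w for _, w in kept]

def evaluate_set_particle(assets, objective, optimize_weights):
    """Return (assets, weights, objective value) for one set-particle."""
    if len(assets) == 1:
        # A single asset can only have weight 1.0, so no inner search is needed.
        weights = [1.0]
        return assets, weights, objective(assets, weights)
    # `optimize_weights` stands in for the inner PSO; it returns (weights, value).
    weights, value = optimize_weights(assets)
    assets, weights = prune_zero_weights(assets, weights)
    return assets, weights, value
```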

Like PSO, SBPSO also has position and velocity updates. However, these are redefined for sets. A set–particle’s position, $X_i(t)$, is $X_i(t) \in \mathcal{P}(U)$, where $\mathcal{P}(U)$ is the power set of $U$, and $U$ is the universe of all elements in regard to a specific problem domain. For portfolio optimization, $U$ is the set of all assets. The velocity of a set–particle is a set of operations to add or remove elements to or from a set–particle’s position. These operations are denoted as $(+, e)$ if an operation is to add an element to the position or $(-, e)$ to remove an element from the position, where $e \in U$. Formally, the velocity update is

$$V_i(t+1) = \lambda_c r_1 \otimes \left(Y_i(t) \ominus X_i(t)\right) \oplus \lambda_c r_2 \otimes \left(\hat{Y}_i(t) \ominus X_i(t)\right) \oplus (1 - \lambda_c)\, r_3 \otimes A_i(t)$$

where $V_i(t)$ is the velocity of set–particle $i$; $\lambda_c$ is an exploration balance coefficient equal to $t / n_t$, where $n_t$ is the maximum number of iterations; $r_1$, $r_2$, and $r_3$ are random values, each sampled from a standard uniform distribution in the range [0, 1]; $X_i(t)$ is the position of set–particle $i$; $Y_i(t)$ is the cognitive guide of set–particle $i$; $\hat{Y}_i(t)$ is the social guide of set–particle $i$; $A_i(t)$ is shorthand for $U \setminus \left(X_i(t) \cup Y_i(t) \cup \hat{Y}_i(t)\right)$. The positions of set–particles are updated by using

$$X_i(t+1) = X_i(t) \boxplus V_i(t+1)$$

The operators ⊗, ⊖, ⊕, and ⊞ are defined in Appendix A, and the pseudo-code for SBPSO is given in Algorithm 3.
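Since Appendix A is not reproduced here, the following sketch uses commonly cited definitions of the set-based operators as assumptions: ⊖ yields the add/remove operations that turn one position into another, ⊗ keeps a random fraction of a set of operations, ⊕ is a union of velocities, and ⊞ applies the operations to a position. The function names and the placement of the exploration balance coefficient are illustrative, not the authors' definitions.

```python
import math
import random

def diff(a, b):
    """a (-) b: operations that would turn position b into position a."""
    return {("+", e) for e in a - b} | {("-", e) for e in b - a}

def scale(eta, ops, rng):
    """eta (x) ops: keep a random subset of floor(eta * |ops|) operations, eta in [0, 1]."""
    k = min(len(ops), math.floor(eta * len(ops)))
    return set(rng.sample(sorted(ops), k))

def additions(elements):
    """Turn a set of elements into 'add' operations."""
    return {("+", e) for e in elements}

def apply_velocity(position, velocity):
    """position [+] velocity: apply every add/remove operation."""
    added = {e for op, e in velocity if op == "+"}
    removed = {e for op, e in velocity if op == "-"}
    return (position | added) - removed

def sbpso_step(x, y, y_hat, universe, t, n_t, rng):
    """One set-based velocity and position update for a single set-particle."""
    lam = t / n_t                          # exploration balance coefficient
    unexplored = universe - (x | y | y_hat)
    r1, r2, r3 = rng.random(), rng.random(), rng.random()
    v = (scale(lam * r1, diff(y, x), rng)                          # cognitive term
         | scale(lam * r2, diff(y_hat, x), rng)                    # social term
         | scale((1.0 - lam) * r3, additions(unexplored), rng))    # exploration term
    return apply_velocity(x, v), v
```

Early in the search (`t` close to 0) the exploration term dominates and only unexplored assets are added; later the guides take over.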

To better understand SBPSO for portfolio optimization, consider the following example. There are 50 assets in the universe. Initially, a set–particle randomly selects a subset of assets, say

, from the universe. These assets are then used to create a continuous search space for the inner PSO. Each dimension in the search space of the PSO represents the weight of an asset. Then, the PSO optimizes the asset weights for a fixed duration.

Table 1 contains example results obtained by the weight optimizer.

The combination of the assets and weightings is a candidate portfolio. Continuing with the example,

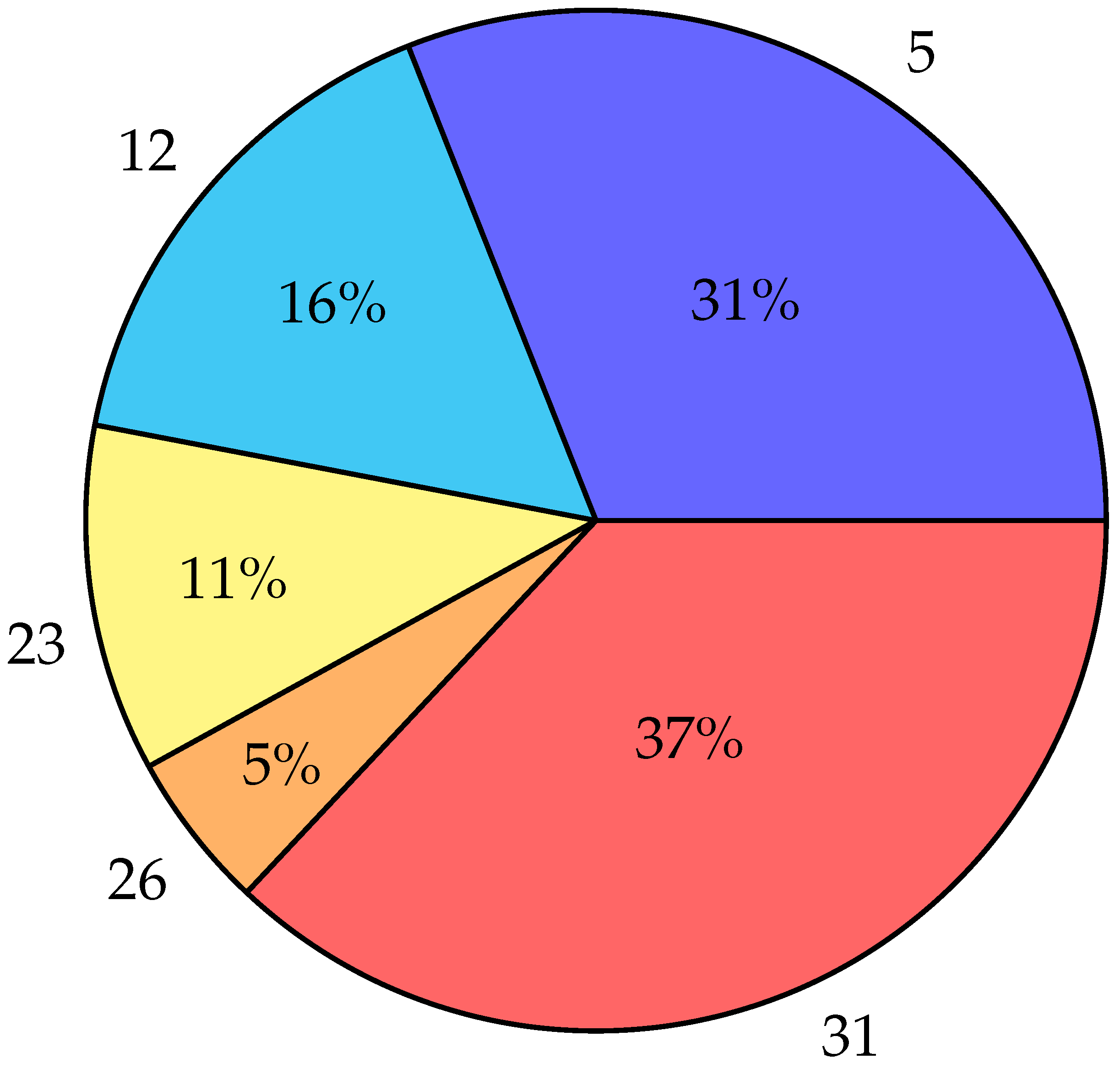

Figure 1 visualizes the portfolio.

| Algorithm 3: Set-Based Particle Swarm Optimization for Portfolio Optimization |

![Algorithms 16 00062 i002 Algorithms 16 00062 i002]() |

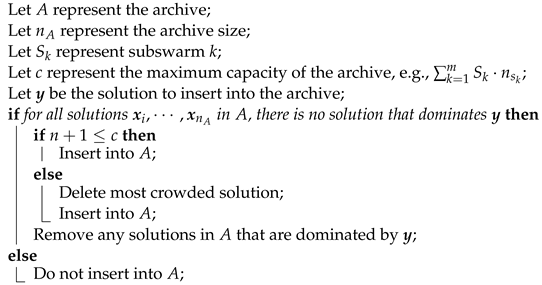

3.3. Multi-Guide Particle Swarm Optimization

MGPSO is a multi-objective multi-swarm implementation of PSO that uses an archive to share non-dominated solutions between the swarms [27]. Each of the swarms optimizes one of the $m$ objectives for an $m$-objective optimization problem. The archive, which can be bounded or unbounded, stores non-dominated solutions found by the swarms. MGPSO adds a third guide to the velocity update function, the archive guide, which attracts particles to previously found non-dominated solutions. The archive guide is the winner of a randomly created tournament of archive solutions. The winner is the least crowded solution in the tournament. Crowding distance is used to measure how close the solutions are to one another [12]. Alongside the introduction of the archive guide is the archive balance coefficient, i.e., $\lambda_i$. The archive balance coefficient is a value sampled from a uniform distribution in the range [0, 1] that remains fixed throughout the search. The archive balance coefficient controls the influence of the archive and social guides, where larger values favor the social guide and smaller values favor the archive guide.

The proposal of MGPSO also defines an archive management protocol (summarized in Algorithm 4) according to which a solution is only inserted into the archive if it is not dominated by any existing solution in the archive [27]. Any pre-existing solutions in the archive that are dominated by the newly added solution are removed. In the case that a bounded archive is used and the archive is full, the most crowded solution is removed.

| Algorithm 4: Archive Insert Policy |

![Algorithms 16 00062 i003 Algorithms 16 00062 i003]() |
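The archive insert policy described above can be sketched as follows. The sketch assumes that all objectives are minimized, so a (risk, return) pair would be stored as (risk, -return); rejecting exact duplicates and removing the most crowded solution after insertion (rather than before) are simplifying choices of this example, and the function names are hypothetical.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def crowding_distances(points):
    """Crowding distance per point; boundary points get infinity."""
    n = len(points)
    dist = [0.0] * n
    if n == 0:
        return dist
    for m in range(len(points[0])):
        order = sorted(range(n), key=lambda i: points[i][m])
        lo, hi = points[order[0]][m], points[order[-1]][m]
        dist[order[0]] = dist[order[-1]] = float("inf")
        if hi == lo:
            continue
        for k in range(1, n - 1):
            gap = points[order[k + 1]][m] - points[order[k - 1]][m]
            dist[order[k]] += gap / (hi - lo)
    return dist

def archive_insert(archive, candidate, max_size=None):
    """Insert candidate if it is non-dominated; prune dominated and crowded entries."""
    if any(dominates(a, candidate) or a == candidate for a in archive):
        return archive
    archive = [a for a in archive if not dominates(candidate, a)]
    archive.append(candidate)
    if max_size is not None and len(archive) > max_size:
        d = crowding_distances(archive)
        # The most crowded solution has the smallest crowding distance.
        archive.pop(min(range(len(archive)), key=lambda i: d[i]))
    return archive
```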

Formally, the velocity update is

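$$\mathbf{v}_i(t+1) = w\,\mathbf{v}_i(t) + c_1 \mathbf{r}_1(t) \odot \left(\mathbf{y}_i(t) - \mathbf{x}_i(t)\right) + \lambda_i c_2 \mathbf{r}_2(t) \odot \left(\hat{\mathbf{y}}_i(t) - \mathbf{x}_i(t)\right) + (1 - \lambda_i)\, c_3 \mathbf{r}_3(t) \odot \left(\hat{\mathbf{a}}_i(t) - \mathbf{x}_i(t)\right)$$

where $\lambda_i$ is the archive balance coefficient of particle $i$; $\mathbf{r}_3$ is a vector of random values sampled from a standard uniform distribution in [0, 1]; $c_3$ is the archive acceleration coefficient; $\hat{\mathbf{a}}_i$ is the archive guide for particle $i$. Erwin and Engelbrecht recently proposed an approach for the MGPSO that randomly samples control parameter values from theoretically derived stability conditions, yielding similar performance to that when using tuned parameters [21,27]. Algorithm 5 contains pseudo-code for MGPSO.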

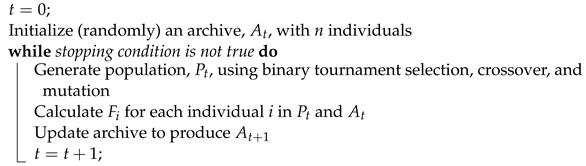

| Algorithm 5: Multi-Guide Particle Swarm Optimization |

![Algorithms 16 00062 i004 Algorithms 16 00062 i004]() |
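The archive guide selection and the three-guide velocity update can be sketched as below. The tournament size, the control parameter defaults, and the function names are assumptions for illustration; the crowding distances are taken as a precomputed input, where a larger distance means a less crowded solution.

```python
import random

def select_archive_guide(archive, crowding, rng, tournament_size=3):
    """Return the least crowded member of a randomly drawn tournament."""
    k = min(tournament_size, len(archive))
    contestants = rng.sample(range(len(archive)), k)
    return archive[max(contestants, key=lambda i: crowding[i])]

def mgpso_velocity(v, x, y, y_hat, a_hat, lam, rng, w=0.7, c1=1.4, c2=1.4, c3=1.4):
    """Velocity update with cognitive, social, and archive guides.

    lam is the archive balance coefficient: larger values favor the social
    guide y_hat, smaller values favor the archive guide a_hat.
    """
    new_v = []
    for d in range(len(x)):
        r1, r2, r3 = rng.random(), rng.random(), rng.random()
        new_v.append(w * v[d]
                     + c1 * r1 * (y[d] - x[d])
                     + lam * c2 * r2 * (y_hat[d] - x[d])
                     + (1.0 - lam) * c3 * r3 * (a_hat[d] - x[d]))
    return new_v
```

With `lam = 1.0` the archive term vanishes and the update reduces to the standard inertia-weighted PSO update.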

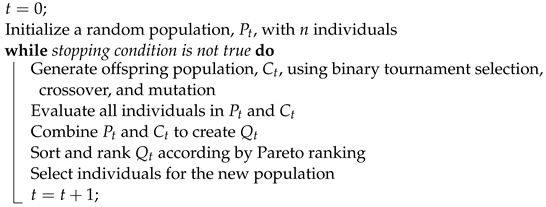

3.4. Non-Dominated Sorting Genetic Algorithm II

NSGA-II is a multi-objective GA that ranks and sorts each individual in the population according to its non-domination level [12]. Furthermore, the crowding distance is used to break ties between individuals with the same rank. The use of the crowding distance maintains a diverse population and helps the algorithm explore the search space.

The algorithm uses a single population, $P_t$, of a fixed size, $n$. At each iteration, a new candidate population, $Q_t$, is created by performing crossover (simulated binary crossover) and mutation (polynomial mutation) operations on $P_t$. The two populations are combined to create $R_t$. $R_t$ is then sorted by Pareto dominance. Non-dominated individuals are assigned a rank of one and are separated from the population. Individuals that are non-dominated in the remaining population are assigned a rank of two and are separated from the population. This process repeats until all individuals in the population have been assigned a rank. The result is a population separated into multiple fronts, where each front is closer to Pareto-optimality than the fronts ranked after it.

The population, $P_{t+1}$, for the next generation is created by selecting individuals from the sorted fronts. Elitism is preserved by transferring individuals that ranked first into the next generation. If the number of individuals in the first front is greater than $n$, then the least crowded $n$ individuals (determined by the crowding distance) are selected. If the number of individuals in the first front is less than $n$, then the least crowded individuals from the second front are selected, and then those from the third front, and so on, until there are $n$ individuals in $P_{t+1}$.

| Algorithm 6: Non-Dominated Sorting Genetic Algorithm II |

![Algorithms 16 00062 i005 Algorithms 16 00062 i005]() |
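The ranking and survivor selection described above can be sketched as follows. This is a simple quadratic-time version of non-dominated sorting rather than the bookkeeping-optimized procedure of the original algorithm, all objectives are assumed to be minimized, and `crowding_of_front` is a hypothetical callable that maps a front to a crowding distance per individual.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def non_dominated_sort(objs):
    """Split indices into fronts: rank one first, then rank two, and so on."""
    remaining = set(range(len(objs)))
    fronts = []
    while remaining:
        front = sorted(i for i in remaining
                       if not any(dominates(objs[j], objs[i])
                                  for j in remaining if j != i))
        fronts.append(front)
        remaining -= set(front)
    return fronts

def select_survivors(fronts, crowding_of_front, n):
    """Fill the next population front by front; truncate the last front by crowding."""
    survivors = []
    for front in fronts:
        slots = n - len(survivors)
        if slots <= 0:
            break
        if len(front) <= slots:
            survivors.extend(front)
        else:
            crowd = crowding_of_front(front)
            # Prefer the least crowded individuals (largest crowding distance).
            survivors.extend(sorted(front, key=lambda i: -crowd[i])[:slots])
    return survivors
```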

3.5. Strength Pareto Evolutionary Algorithm 2

SPEA2 uses an archive, $\bar{P}_t$, to ensure that elitism is maintained across generations. SPEA2 also uses a fine-grained fitness assignment. The fitness of an individual takes into account the number of individuals it dominates, the number of individuals it is dominated by, and its density in relation to other individuals.

Like NSGA-II, SPEA2 uses a single population, $P_t$, of a fixed size, $n$. However, $P_t$ is created by performing crossover (simulated binary crossover) and mutation (polynomial mutation) operations on $\bar{P}_t$. Individuals in $P_t$ and $\bar{P}_t$ are assigned strength values. The strength value $S(i)$ of individual $i$ is the number of individuals that $i$ dominates. Each individual also has what is referred to as a raw fitness value, $R(i)$. $R(i)$ is calculated as the summation of the strength values of the individuals that dominate $i$. Then, to account for the scenario where many, if not all, of the individuals are non-dominated, a density estimator is also added to the fitness calculation. The distance between individual $i$ and every other individual in $P_t \cup \bar{P}_t$ is calculated and sorted in increasing order. The distance to the $k$-th individual in the sorted list is referred to as $\sigma_i^k$. The density of individual $i$ is calculated as

$$D(i) = \frac{1}{\sigma_i^k + 2}$$

Finally, the fitness of individual $i$ is calculated using:

$$F(i) = R(i) + D(i)$$

All individuals with $F(i) < 1$ are copied over into the archive for the next generation. If the number of individuals in the archive is not enough, the remaining individuals in $P_t \cup \bar{P}_t$ are sorted based on $F(i)$ in increasing order. The best individuals are selected from the sorted list until the archive is full. When there are too many good-quality individuals, i.e., individuals with $F(i) < 1$, to be inserted into the archive, the individual that has the minimum distance to another individual is removed. This process is repeated until there are $n$ individuals. In the case where there are several individuals with the same minimum distance, then the distances of those individuals to the second, third, etc. closest individuals are considered until the tie is broken.

| Algorithm 7: Strength Pareto Evolutionary Algorithm 2 |

![Algorithms 16 00062 i006 Algorithms 16 00062 i006]() |
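The fitness assignment described above (strength, raw fitness, and density) can be sketched as follows. The sketch assumes minimized objectives, Euclidean distance in objective space, and a default of k equal to the square root of the number of individuals; these choices and the function names are assumptions for illustration.

```python
import math

def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def spea2_fitness(objs, k=None):
    """Fitness per individual: raw fitness plus density (lower is better)."""
    n = len(objs)
    if k is None:
        k = max(1, int(math.sqrt(n)))
    dom = [[dominates(objs[i], objs[j]) for j in range(n)] for i in range(n)]
    # Strength: number of individuals that i dominates.
    strength = [sum(dom[i]) for i in range(n)]
    # Raw fitness: sum of the strengths of the individuals that dominate i.
    raw = [sum(strength[j] for j in range(n) if dom[j][i]) for i in range(n)]
    fitness = []
    for i in range(n):
        dists = sorted(math.dist(objs[i], objs[j]) for j in range(n) if j != i)
        sigma_k = dists[min(k, len(dists)) - 1] if dists else 0.0
        density = 1.0 / (sigma_k + 2.0)     # always below 1
        fitness.append(raw[i] + density)
    return fitness
```

Because the density term is always below one, an individual is non-dominated exactly when its fitness is below one.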

4. Multi-Objective Set-Based Portfolio Optimization Algorithm

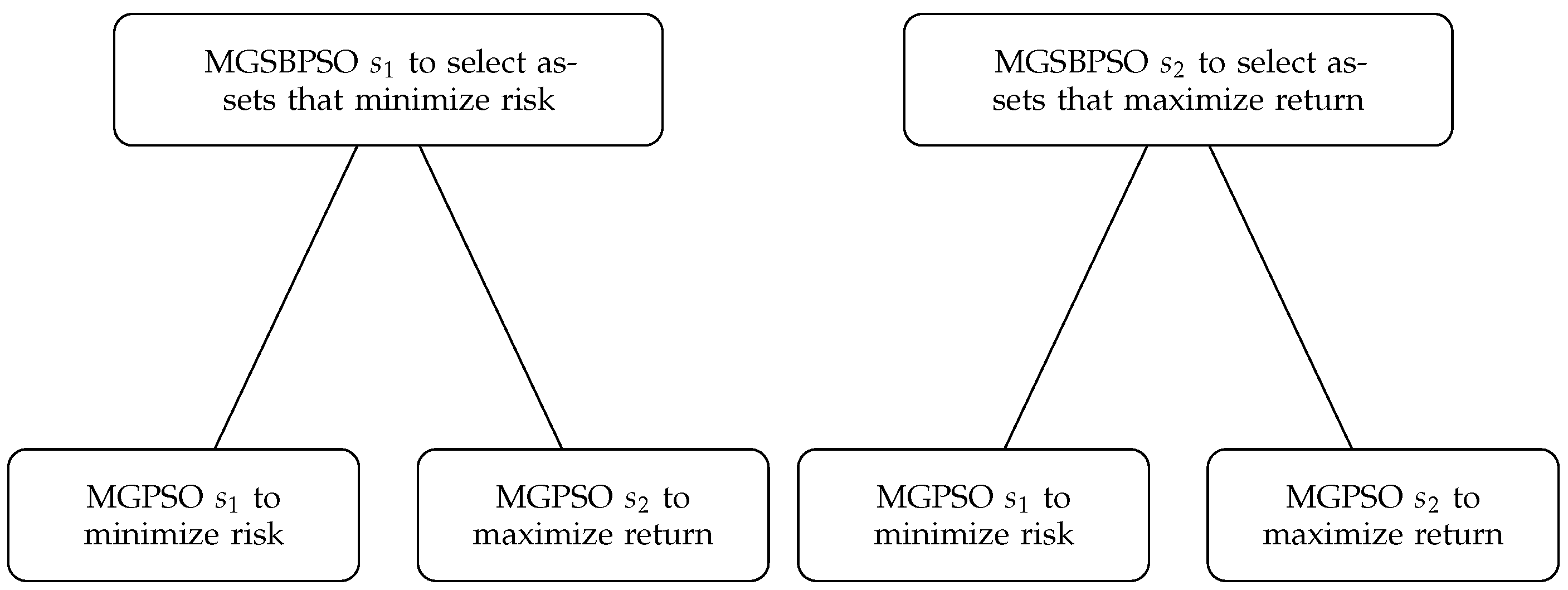

This section proposes a multi-objective set-based algorithm for multi-objective portfolio optimization. Elements from MGPSO are incorporated into SBPSO to enable SBPSO to solve multiple objectives simultaneously. The proposed approach, referred to as MGSBPSO, uses multiple swarms, where each swarm optimizes one of the objectives in the asset space. Thus, there is a swarm for selecting assets that minimize risk and a swarm for selecting assets that maximize profit. As for MGPSO, non-dominated solutions found by the swarms are stored in an archive (initially empty) of a fixed size. The archive management process described in Section 3.3 is also used in the MGSBPSO. However, the crowding distance of the non-dominated solutions in the archive is calculated with respect to their objective function values instead of their set-based positions because the set-based positions lack distance in the traditional sense. Non-dominated solutions are selected from the archive using tournament selection and are used to guide the particles to non-dominated regions of the search space. Like the archive management strategy, the crowding distance of the non-dominated solutions in the tournament is calculated with respect to their objective function values. Furthermore, the successful modifications identified by Erwin and Engelbrecht are also included in the MGSBPSO, namely, the removal of zero-weighted assets, the immediate calculation of the objective function for single-asset portfolios, the decision to allow the weight optimizer to execute until it converges, only allowing assets to be removed via the weight determination stage, and the exploration balance coefficient for improved convergence behavior. Taking these improvements, as well as the archive guide, into account, the velocity equation is

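$$V_i(t+1) = \lambda_c r_1 \otimes \left(Y_i(t) \ominus X_i(t)\right) \oplus \lambda_i \lambda_c r_2 \otimes \left(\hat{Y}_i(t) \ominus X_i(t)\right) \oplus (1 - \lambda_i) \lambda_c r_3 \otimes \left(\hat{A}_i(t) \ominus X_i(t)\right) \oplus (1 - \lambda_c)\, r_4 \otimes A_i(t)$$

where $r_1$, $r_2$, $r_3$, and $r_4$ are random values, each sampled from a standard uniform distribution in the range [0, 1]; $\lambda_c$ is the linearly increasing exploration balance coefficient; $X_i(t)$ is the position of particle $i$; $Y_i(t)$ is the best position found by particle $i$; $\hat{Y}_i(t)$ is the best position within particle $i$’s neighborhood; $\hat{A}_i(t)$ is the archive guide for particle $i$; the influence of the archive guide is controlled by the archive coefficient $\lambda_i$; $A_i(t)$ is shorthand for $U \setminus \left(X_i(t) \cup Y_i(t) \cup \hat{Y}_i(t) \cup \hat{A}_i(t)\right)$, where $U$ is the set universe, and ⊗ and ⊕ are the set-based operators defined in Section 3.2.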

For the purpose of weight determination, MGPSO with the newly proposed tuning-free approach is used. Hence, asset weight determination is also a multi-objective optimization task. For each set–particle, an MGPSO is instantiated to optimize the corresponding asset weights with regard to risk and return. Figure 2 illustrates the overall structure of the swarms in MGSBPSO and their objectives.

Each MGPSO in Figure 2 has its own archive; that is, the MGPSO instantiated for the first MGSBPSO swarm and the MGPSO instantiated for the second MGSBPSO swarm each maintain a separate archive. There is also a global archive, the MGSBPSO archive, which is used to store non-dominated solutions found by either MGPSO. An MGPSO terminates when no non-dominated solutions are added to its archive over three iterations. The non-dominated solutions in the archive of an MGPSO are then inserted into the global archive along with the corresponding set position. Lastly, the best objective function value of the non-dominated solutions in an MGPSO archive is assigned to the corresponding set–particle with regard to the objective of the swarm that the set–particle is in. For example, if the set–particle is in the swarm for minimizing risk, then the best risk value of the non-dominated solutions in the MGPSO archive is used. Algorithm 8 presents the pseudocode for the multi-objective weight optimization process and how the MGPSO archives interact with the global archive. The pseudocode for MGSBPSO is given in Algorithm 9.
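The interaction between an inner MGPSO archive and the global archive can be sketched as below. The sketch stores objectives as (risk, -return) so that both are minimized, keeps the global archive unbounded for brevity (the crowding-based truncation of a fixed-size archive is omitted), and uses hypothetical function names and data layout.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def insert_entry(archive, entry):
    """Insert (objectives, payload) unless an archived entry dominates or equals it."""
    objs = entry[0]
    if any(dominates(o, objs) or o == objs for o, _ in archive):
        return archive
    return [e for e in archive if not dominates(objs, e[0])] + [entry]

def merge_inner_archive(global_archive, inner_archive, assets, swarm_objective):
    """Push an inner archive into the global one and return the set-particle fitness.

    inner_archive: list of ((risk, neg_return), weights) pairs.
    swarm_objective: 0 for the risk swarm, 1 for the return swarm.
    """
    for objs, weights in inner_archive:
        # Each solution is stored together with its set position (the assets).
        payload = (frozenset(assets), tuple(weights))
        global_archive = insert_entry(global_archive, (objs, payload))
    # Best value of the swarm's own objective among the non-dominated solutions.
    fitness = min(objs[swarm_objective] for objs, _ in inner_archive)
    return global_archive, fitness
```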

The proposed MGSBPSO is expected to perform similarly to the MGPSO for multi-objective portfolio optimization, since MGSBPSO makes use of MGPSO for asset weight optimization. It is also expected that the reduction in dimensionality by MGSBPSO will result in higher-quality solutions than those of MGPSO for larger portfolio problems.

| Algorithm 8: Multi-Objective Weight Determination for Set-Based Portfolio Optimization |

![Algorithms 16 00062 i007 Algorithms 16 00062 i007]() |

| Algorithm 9: Multi-Guide Set-Based Particle Swarm Optimization |

![Algorithms 16 00062 i008 Algorithms 16 00062 i008]() |

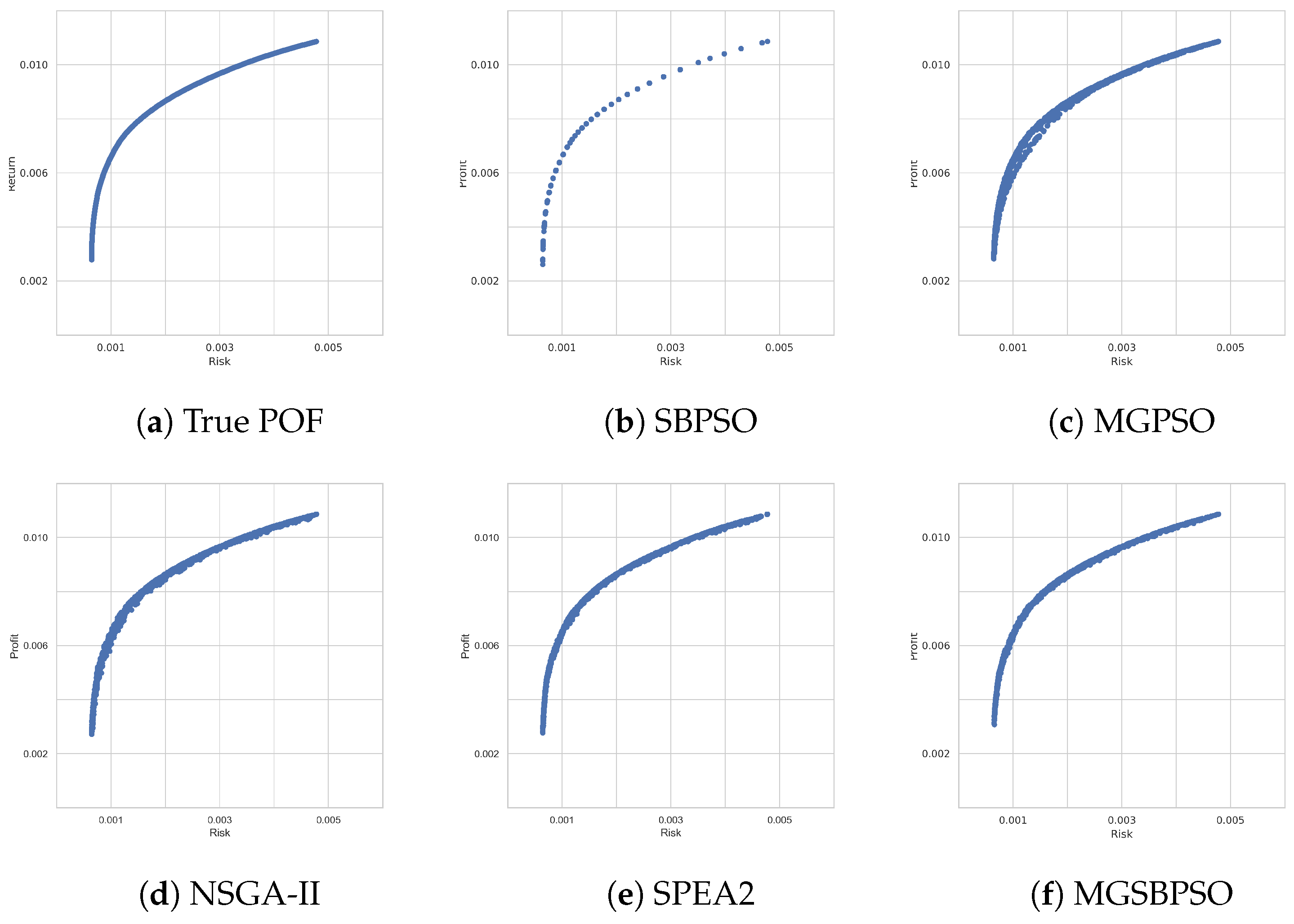

7. Conclusions

This paper proposed the multi-guide set-based particle swarm optimization (MGSBPSO) algorithm, a multi-objective adaptation of the set-based particle swarm optimization (SBPSO) algorithm that incorporates elements from multi-guide particle swarm optimization (MGPSO). MGSBPSO uses two set-based swarms, where the first selects assets that minimize risk, and the second swarm selects assets that maximize return. For the purpose of optimizing the asset weights, MGPSO, which samples control parameter values that satisfy theoretically derived stability criteria, was used. The performance of MGSBPSO was compared with that of MGPSO, NSGA-II, SPEA2, and the single-objective SBPSO across five portfolio optimization benchmark problems.

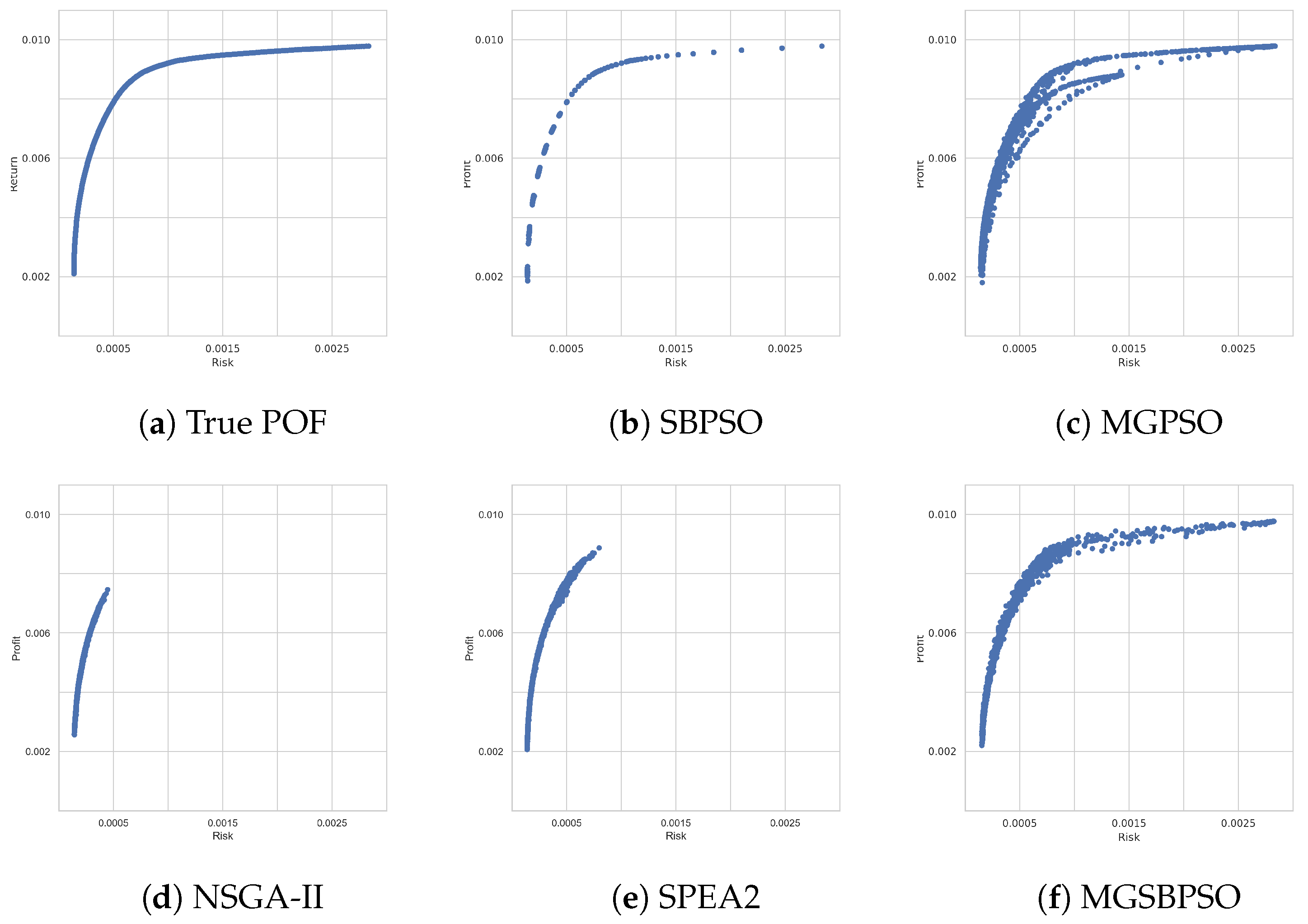

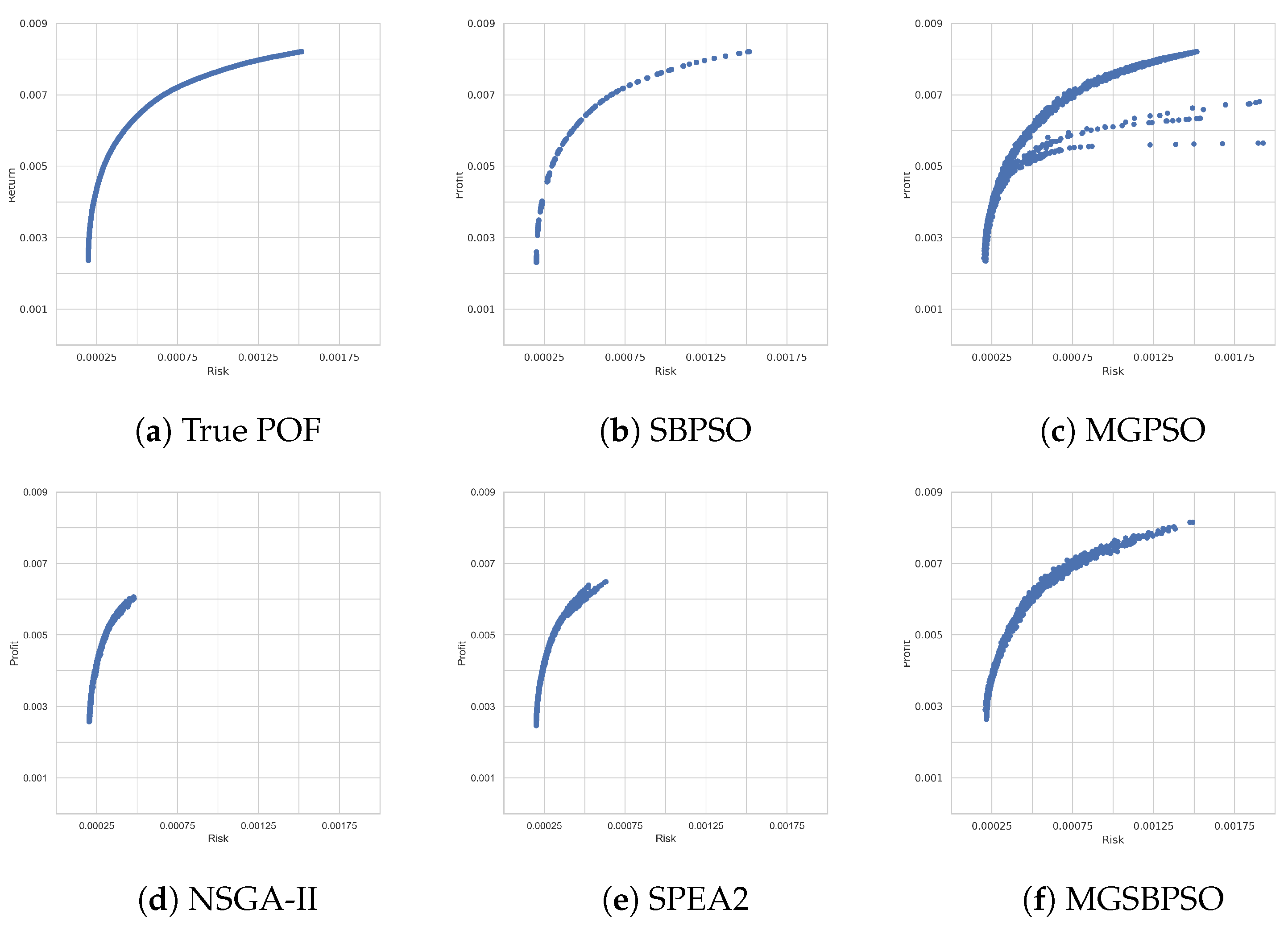

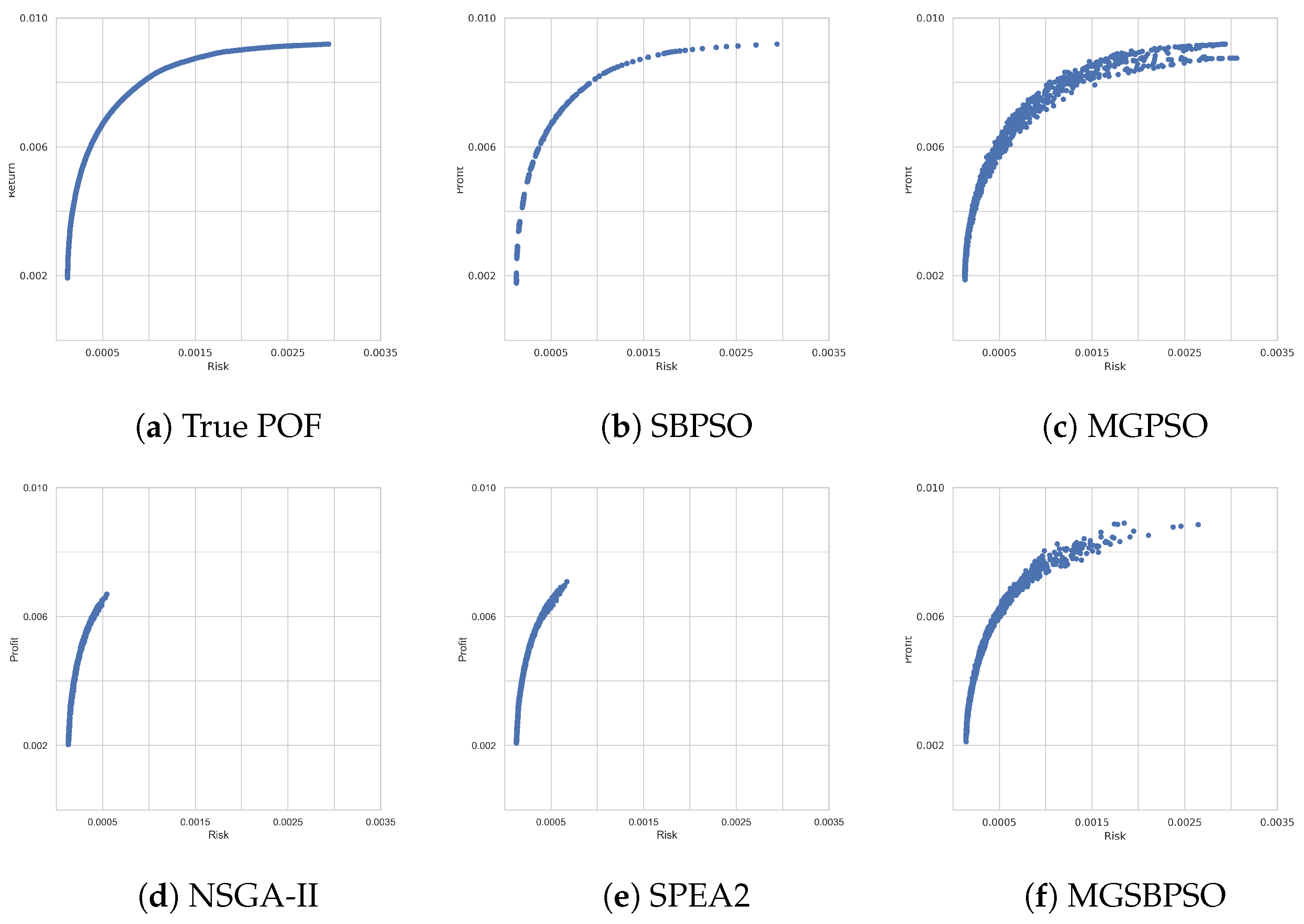

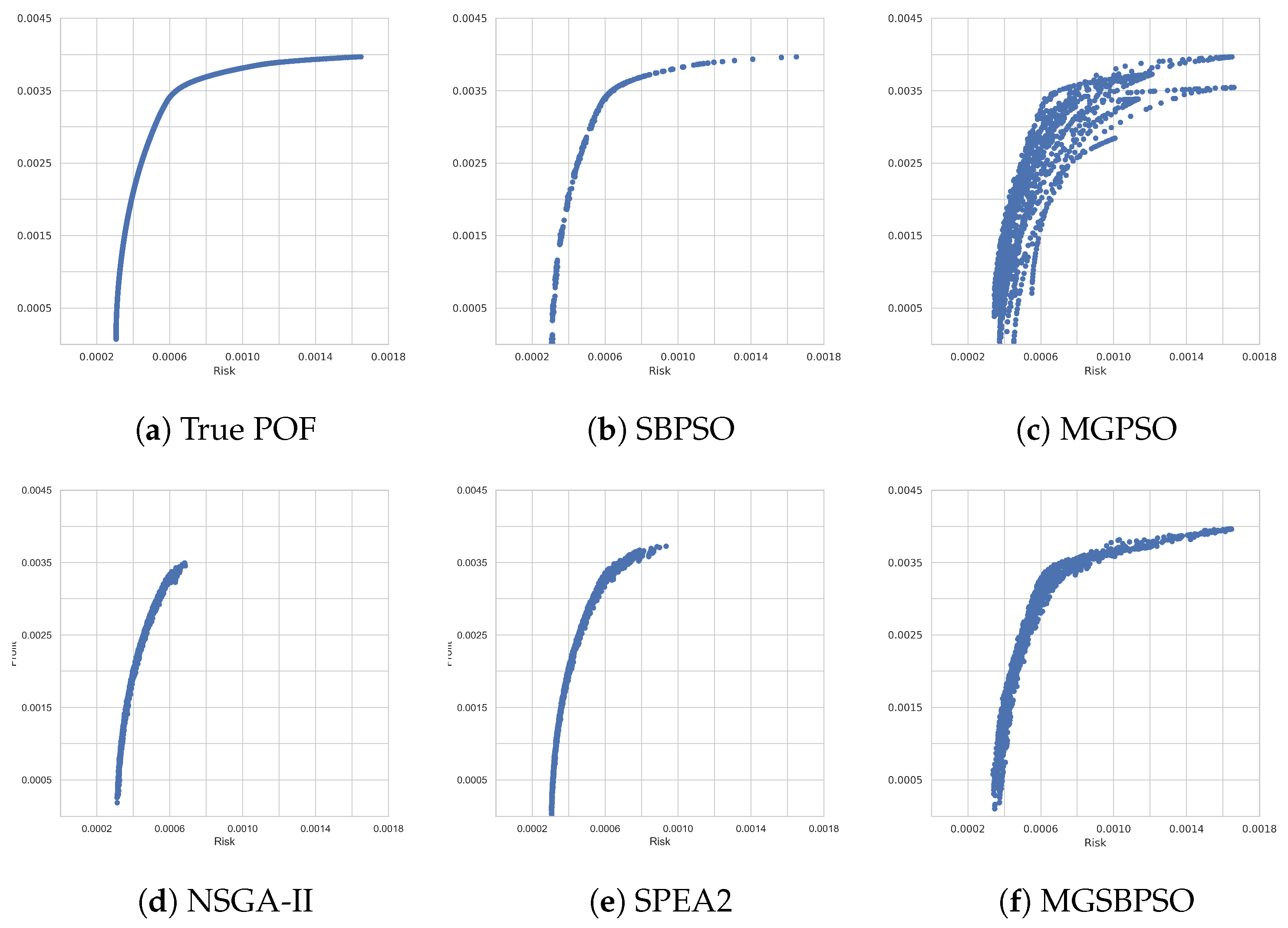

The results showed that all algorithms, in general, were able to approximate the true Pareto-optimal front (POF). NSGA-II and SPEA2 generally ranked quite high in comparison with the other algorithms. However, visual analysis of the POF obtained by NSGA-II and SPEA2 shows that these algorithms were only able to approximate part of the true POF. SBPSO, MGPSO, and MGSBPSO were able to approximate the full true POF for each benchmark problem, with the exception of MGPSO for the last (and largest) benchmark problem. MGPSO did not scale to the largest portfolio problem as well as MGSBPSO and SBPSO, which redefined portfolio optimization as a set-based optimization problem. SBPSO optimized the mean-variance portfolio model of Equation (3) for a given risk–return tradeoff value. By optimizing for multiple tradeoff values, SBPSO obtained a variety of solutions with differing risk and return characteristics. Thus, an advantage of MGSBPSO over its single-objective counterpart is that MGSBPSO does not require multiple runs to obtain a diverse set of optimal solutions, as risk (minimized) and return (maximized) are optimized independently of each other. It should also be noted that MGPSO without control parameter tuning performed similarly to or better than NSGA-II and SPEA2 with tuned parameters.

Overall, the results show that the benefits of redefining the portfolio optimization problem as a set-based problem are also applicable to multi-objective portfolio optimization.