Abstract

This paper is concerned with the construction and convergence analysis of novel implicit Peer triplets of two-step nature with four stages for nonlinear ODE constrained optimal control problems. We combine the property of superconvergence of some standard Peer method for inner grid points with carefully designed starting and end methods to achieve order four for the state variables and order three for the adjoint variables in a first-discretize-then-optimize approach together with A-stability. The notion triplets emphasize that these three different Peer methods have to satisfy additional matching conditions. Four such Peer triplets of practical interest are constructed. In addition, as a benchmark method, the well-known backward differentiation formula BDF4, which is only -stable, is extended to a special Peer triplet to supply an adjoint consistent method of higher order and BDF type with equidistant nodes. Within the class of Peer triplets, we found a diagonally implicit -stable method with nodes symmetric in to a common center that performs equally well. Numerical tests with four well established optimal control problems confirm the theoretical findings also concerning A-stability.

Keywords:

implicit Peer two-step methods; BDF-methods; nonlinear optimal control; first-discretize-then-optimize; discrete adjoints MSC:

34H05; 49J15; 65L05; 65L06

1. Introduction

The design of efficient time integrators for the numerical solution of optimal control problems constrained by systems of ordinary differential equations (ODEs) is still an active research field. Such systems typically arise from semi-discretized partial differential equations describing, e.g., the dynamics of heat and mass transfer or fluid flow in complex physical systems. Symplectic one-step Runge–Kutta methods [1,2] exploit the Hamiltonian structure of the first-order optimality system—the necessary conditions to find an optimizer—and automatically yield a consistent approximation of the adjoint equations, which can be used to compute the gradient of the objective function. The first-order symplectic Euler, second-order Störmer–Verlet and higher-order Gauss methods are prominent representatives of this class, which are all implicit for general Hamiltonian systems, see the monograph [3]. Moreover, compositions of basic integrators with different steps sizes and splitting methods have been investigated. Generalized partitioned Runge–Kutta methods which allow one to compute exact gradients with respect to the initial condition are studied in [4]. To avoid the solution of large systems of nonlinear equations, semi-explicit W-methods [5] and stabilized explicit Runge–Kutta–Chebyshev methods [6] have been proposed, too. However, as all one-step methods, also symplectic Runge–Kutta schemes join the structural suffering of order reductions, which may lead to poor results in their application, e.g., to boundary control problems such as external cooling and heating in a manufacturing process; see [7,8] for a detailed study of this behaviour.

In contrast, multistep methods including Peer two-step methods avoid order reductions and allow a simple implementation [9,10]. However, the discrete adjoint schemes of linear multistep methods are in general not consistent or show a significant decrease of their approximation order [11,12]. Recently, we have developed implicit Peer two-step methods [13] with three stages to solve ODE constrained optimal control problems of the form

with the state , the control , , the objective function , where the set of admissible controls is closed and convex. Introducing for any the normal cone mapping

the first-order Karush–Kuhn–Tucker (KKT) optimality system [14,15] reads

In this paper, we assume the existence of a unique local solution of the KKT system with sufficient regularity properties to justify the use of higher order Peer triplets, see, e.g., the smoothness assumption in Section 2 of [14].

Remark 1.

The objective function in (1) is specified in the so-called Mayer form using terminal solution values only. Terms given in the Lagrange form

can be equivalently reduced to the Mayer form by introducing an additional state , the new state vector , and an additional differential equation with initial values . Then, the Lagrange term (8) simply reduces to .

Following a first-discretize-and-then-optimize approach, we apply an s-stage implicit Peer two-step method to (2) and (3) with approximations and , on an equi-spaced time grid with step size and nodes , which are fixed for all time steps, to get the discrete constraint nonlinear optimal control problem

with long vectors , , and . Further, , , and are additional parameter vectors at both interval ends, , and being the identity matrix. We will use the same symbol for a coefficient matrix like A and its Kronecker product as a mapping from the space to itself. In contrast to one-step methods, Peer two-step methods compute from the previous stage vector . Hence, also a starting method, given in (10), for the first time interval is required. On each subinterval, Peer methods may be defined by three coefficient matrices , where and are assumed to be nonsingular. For practical reasons, this general version will not be used, the coefficients in the inner grid points will belong to a fixed Peer method , . The last forward step has the same form as the standard steps but uses exceptional coefficients to allow for a better approximation of the end conditions.

The KKT conditions (5)–(7) for ODE-constrained optimal control problems on a time interval lead to a boundary value problem for a system of two differential equations, see Section 2.1. The first one corresponds to the original forward ODE for the state solution and the second one is a linear, adjoint ODE for a Lagrange multiplier . It is well known that numerical methods for such problems may have to satisfy additional order conditions for the adjoint equation [2,5,14,16,17,18]. While these additional conditions are rather mild for one-step methods they may lead to severe restrictions for other types of methods such as multistep and Peer methods, especially at the right-hand boundary at the end point T. Here, the order for the approximation of the adjoint equation may be limited to one.

For Peer methods, this question was discussed first in [19] and the adjoint boundary condition at T was identified as the most critical point. In a more recent article [13], these bottlenecks could be circumvented by two measures. First, equivalent formulations of the forward method are not equivalent for the adjoint formulation and using a redundant formulation of Peer methods with three coefficient matrices adds additional free parameters. The second measure is to consider different methods for the first and last time interval. Hence, instead of one single Peer method (which will be called the standard method) we discuss triplets of Peer methods consisting of a common standard method for all subintervals of the grid from the interior of , plus a starting method for the first subinterval and an end method for the last one. These two boundary methods may have lower order than the standard method since they are used only once.

The present work extends the results from [13] which considered methods with stages only, in two ways. We will now concentrate on methods with four stages and better stability properties such as A-stability. The purpose of an accurate solution of the adjoint equation increases the number of conditions on the parameters of the method. Requiring high order of convergence s for the state variable and order for the adjoint variable —which we combine to the pair from now on—a variant of the method BDF3 was identified in [13] as the most attractive standard method. However, this method is not A-stable, with a stability angle of . In order to obtain A-stability, we will reduce the required orders by one. Still, we will show that convergence with the orders can be regained by a superconvergence property.

The paper is organized as follows: In Section 2.1, the boundary value problem arising from the KKT system by eliminating the control and its discretization by means of discrete adjoint Peer two-step triplets are formulated. An extensive error analysis concentrating on the superconvergence effect is presented in Section 2.2. The restrictions imposed by the starting and end method on the standard Peer two-step method is studied in Section 2.3. The following Section 2.4 describes the actual construction principles of Peer triplets. Numerical tests are undertaken in Section 3. The paper concludes with a discussion in Section 4.

2. Materials and Methods

2.1. The Boundary Value Problem

Following the usual Lagrangian approach applied in [13], the first order discrete optimality conditions now consist of the forward Equations (10)–(12), the discrete adjoint equations, acting backwards in time,

and the control conditions

Here, and the Jacobians of F are block diagonal matrices and . The generalized normal cone mapping is defined by

The discrete KKT conditions (10)–(16) should be good approximations to the continuous ones (5)–(7). In what follows, we assume sufficient smoothness of the optimal control problem such that a local solution of the KKT system (1)–(3) exists. Furthermore, let the Hamiltonian satisfy a coercivity assumption, which is a strong form of a second-order condition. Then the first-order optimality conditions are also sufficient [14]. If is sufficiently close to , the control uniqueness property introduced in [14] yields the existence of a locally unique minimizer of the Hamiltonian over all . Substituting u in terms of in (5) and (6), gives then the two-point boundary value problem

with the source functions defined by

The same arguments apply to the discrete first-order optimality system (10)–(16). Substituting the discrete controls in terms of and defining

the approximations for the forward and adjoint differential equations read in a compact form

Here, the value of is determined by an interpolant with of appropriate order. In a next step, these discrete equations are now treated as a discretization of the two-point boundary value problem (18) and (19). We will derive order conditions and give bounds for the global error.

2.2. Error Analysis

2.2.1. Order Conditions

The local error of the standard Peer method and the starting method is easily analyzed by Taylor expansion of the stage residuals, if the exact ODE solutions are used as stages. Hence, defining for the forward Peer method, where , local order means that

In all steps of the Peer triplet, requiring local order for the state variable and order for the adjoint solution leads to the following algebraic conditions from [13]. These conditions depend on the Vandermonde matrix , the Pascal matrix and the nilpotent matrix which commutes with . For the different steps (22)–(24) and (25)–(27), and in the same succession we write down the order conditions from [13] when is diagonal. The forward conditions are

with the cardinal basis vectors , . The conditions for the adjoint methods are given by

2.2.2. Bounds for the Global Error

In this section, the errors , , , are analyzed. According to [13], the equation for the errors and is a linear system of the form

where the matrix has a -block structure and denote the corresponding truncation errors. Deleting all h-depending terms from , the block structure of the remaining matrix is given by

with and a mean value of the symmetric Hessian matrix of C. The index ranges of all three matrices are copied from the numbering of the grid, , for convenience. In fact, has rank one only with . The diagonal blocks of are nonsingular and its inverse has the form

The diagonal blocks have a bi-diagonal block structure with identity matrices in the diagonal. The individual -blocks of their inverses are easily computed with having lower triangular block form and upper triangular block form, with blocks

with the abbreviations , and , . Empty products for mean the identity .

Defining and rewriting (35) in fixed-point form

it has been shown in the proof of Theorem 4.1 of [13] for smooth right hand sides f and that

in suitable norms, where these norms are discussed in more detail in Section 2.2.3 below. Moreover, due to the lower triangular block structure of the estimate for the error in the state variable may be refined (Lemma 4.2 in [13]) to

with some constant L. Without additional conditions, estimates of the terms on the right-hand side of (40) in the form lead to the loss of one order in the global error. However, this loss may be avoided by one additional superconvergence condition on the forward and the adjoint method each, which will be considered next.

In our convergence result Theorem 1 below, we will assume the existence of the discrete solution satisfying (22)–(27), for simplicity. However, at least for quadratic objective functions C, this existence follows quite simply along the same lines used in this paragraph here.

Lemma 1.

Let the right-hand side f of (2) be smooth with bounded second derivatives and let the function C be a polynomial of degree two, at most. Let the coefficients of the standard scheme satisfy

where denotes the absolutely second largest eigenvalue of the matrix . Then, there exists a unique solution of the system (22)–(27) for small enough stepsizes h.

Proof.

Since is constant, in (37) is a fixed matrix and similar to (39) the Equations (22)–(27) may be written as a fixed-point problem

where the function is also smooth, having the Jacobian considered in (39). Due to (42) there exist norms such that , hold. In this case, the proof of Theorem 4.1 in [13] shows the bound with a constant L depending on the derivatives of g and . Hence, by the mean value theorem, the right-hand side of (43) has as a Lipschitz constant and the map is a contraction for , establishing the existence of a unique fixed point solution. □

2.2.3. Superconvergence of the Standard Method

For s-stage Peer methods, global order s may be attained in many cases if other properties of the method have lower priority. For optimal stiff stability properties like A-stability, however, it may be necessary to sacrifice one order of consistency as in [20,21]. Accordingly, in this paper the order conditions for the standard method are lowered by one compared to the requirements in the recent paper [13] to local orders , see Table 1. Still, the higher global orders may be preserved to some extent by the concept of superconvergence which prevents the order reduction in the global error by cancellation of the leading error term.

Superconvergence is essentially based on the observation that the powers of the forward stability matrix may converge to a rank-one matrix which maps the leading error term of to zero. This is the case if the eigenvalue 1 of is isolated. Indeed, if the eigenvalues of the stability matrix satisfy

then its powers converge to the rank-one matrix since and are the right and left eigenvectors having unit inner product due to the preconsistency conditions and of the forward and backward standard Peer method, see (30) and (33). The convergence is geometric, i.e.,

for any . Some care has to be taken here since the error estimate (40) depends on the existence of special norms satisfying , resp. . Concentrating on the forward error , a first transformation of is considered with the matrix

where . Since and , the matrix is block-diagonal with the dominating eigenvalue 1 in the first diagonal entry. Due to (44) there exists an additional nonsingular matrix such that the lower diagonal block of has norm smaller one, i.e.

Hence, with the matrix

the required norm is found, satisfying and in (45). Using this norm in (40) and (41) for , it is seen with (38) that

Only for a slight modification is required and the factors in the second sum have to be replaced by for with norms still of size . Now, in all cases the loss of one order in the first sum in (49) may be avoided if the leading -term of is canceled in the product with the left eigenvector, i.e., if . An analogous argument may be applied to the second term in (40). The adjoint stability matrix possesses the same eigenvalues as and its leading eigenvectors are also known: and by preconsistency and an analogous construction applies.

Under the conditions corresponding to local orders the leading error terms in and are given by

Considering now in (49) and similarly the following result is obtained.

Theorem 1.

Let the Peer triplet with stages satisfy the order conditions collected in Table 1 and let the solutions satisfy , . Let the coefficients of the standard Peer method satisfy the conditions

and let (44) be satisfied. Assume, that a Peer solution exists and that f and C have bounded second derivatives. Then, for stepsizes the error of these solutions is bounded by

.

Proof.

Remark 2.

The estimate (49) shows that superconvergence may be a fragile property and may be impaired if is too close to one, leading to very large values in the bound

for the second term in (49). In fact, numerical tests showed that the value plays a crucial role. While was appropriate for the three-stage method AP3o32f which shows order 3 in the tests in Section 3, for two four-stage methods with superconvergence was not seen in any of our three test problems below. Reducing farther with additional searches we found that a value below may be safe to achieve order 4 in practice. Hence, the value will be one of the data listed in the properties of the Peer methods developed below.

Remark 3.

By Theorem 1, weakening the order conditions from the local order pair to the present pair combined with fewer conditions for superconvergence preserves global order for the state variable. However, it also leads to a more complicated structure of the leading error. Extending the Taylor expansion for and applying the different bounds, a more detailed representation of the state error may be derived,

with some modified constant . Obviously, the leading error depends on four different derivatives of the solutions. Since the dependence on p is rather indirect, we may concentrate on the first line in (55). Both derivatives there may not be correlated and it may be difficult to choose a reasonable combination of both error constants as objective function in the construction of efficient methods. Still, in the local error the leading term is obviously , with defined in (50), and it may be propagated through non-linearity and rounding errors. Hence, in the search for methods,

is used as the leading error constant.

2.2.4. Adjoint Order Conditions for General Matrices

The number of order conditions for the boundary methods is so large that they may not be fulfilled with the restriction to diagonal coefficient matrices for . Hence, it is convenient to make the step to full matrices in the boundary methods and the order conditions for the adjoint schemes have to be derived for this case. Unfortunately, for such matrices the adjoint schemes (27) and (26) for have a rather unfamiliar form. Luckily, the adjoint differential equation is linear. We abbreviate the initial value problem for this equation as

with and for some boundary index , we consider the matrices , . Starting the analysis with the simpler end step (27), we have

which is some kind of half-one-leg form since it evaluates the Jacobian J and the solution p at different time points. This step must be analyzed for the linear Equation (57) only. Expressions for the higher derivatives of the solution p follow easily:

Lemma 2.

Proof.

Considering the residual of the scheme with the exact solution , Taylor expansion at and the Leibniz rule yield

where all derivatives are evaluated at . The second term can be further reformulated as

Looking at the factors of separately leads to the order conditions

Remark 4.

Condition (60) is the standard version for diagonal from [13]. Hence, the half-one-leg form of (58) introduces s additional conditions (61), only, for order 3 while the boundary coefficients , , may now contain additional elements. In fact, a similar analysis for step (26) with reveals again (61) as the only condition in addition to (33).

2.3. Existence of Boundary Methods Imposes Restrictions on the Standard Method

In the previous paper [13], the combination of forward and adjoint order conditions for the standard method into one set of equations relating only A and K already gave insight on some background of these methods such as the advantages of using symmetric nodes. It also simplifies the actual construction of methods leading to shorter expressions during the elimination process with algebraic software tools. For ease of reference, this singular Sylvester type equation is reproduced here,

A similar combination of the order conditions for the boundary methods, however, reveals crucial restrictions: the triplet of methods , , has to be discussed together since boundary methods of an appropriate order may not exist for any standard method , only for those satisfying certain compatibility conditions required by the boundary methods. Knowing these conditions allows one to design the standard method alone without the ballast of two more methods with many additional unknowns. This decoupled construction also greatly reduces the dimension of the search space if methods are optimized by automated search routines. We start with the discussion of the end method.

2.3.1. Combined Conditions for the End Method

We remind that we now are looking for methods having local order and everywhere, which we abbreviate from now on as . In particular this means that for the standard method. Looking for bottlenecks in the design of these methods, we try to identify crucial necessary conditions and consider the three order conditions for the end method in combination

From these conditions the matrices may be eliminated, revealing the first restrictions on B. Here, the singular matrix map

plays a crucial role.

Lemma 3.

A necessary condition for a boundary method to satisfy (63) is

Proof.

Unfortunately, this lemma leads to several restrictions on the design of the methods due to the properties of the map . Firstly, for diagonal matrices the image has a very restricted shape.

Lemma 4.

If is a diagonal matrix, then and are Hankel matrices with constant entries along anti-diagonals.

Proof.

With , we have , showing Hankel form of for , . Now, , which shows again Hankel form. □

This lemma means that an end method with diagonal only exists if also on the right-hand side of (65) has Hankel structure. Unfortunately it was observed that for standard methods with definite K this is the case for only (there exist methods with an explicit stage ).

Remark 5.

Trying to overcome this bottleneck with diagonal matrices , one might consider adding additional stages of the end method. However, using general end nodes with does not remove this obstacle. The corresponding matrix with appropriate Vandermonde matrices still has Hankel form.

However, even with a full end matrix , Lemma 3 and the Fredholm alternative enforce restrictions on the standard method due to the singularity of . This is discussed for the present situation with , only. The matrix belonging to the map is and its transpose is . Hence, the adjoint of the map is given by

Component-wise the map acts as

with elements having indices or being zero. For one gets

It is seen that the kernel of has dimension 3 and is given by

In (65), the Fredholm condition leads to restrictions on the matrix B from the standard scheme. However, since the matrix A should have triangular form, it is the more natural variable in the search for good methods and an equivalent reformulation of these conditions for A is of practical interest.

Lemma 5.

Assume that the standard method has local order . Then, end methods of order only exist if the standard method satisfies the following set of three conditions, either for B or for A,

Proof.

Multiplying equation (65) by from the right and using , an equivalent form is

with by the binomial formula. The Fredholm alternative requires that for all X from (66). We now frequently use the identities and with . The kernel in (66) is spanned by three basis elements. The first, (with the convention ) leads to

The second basis element is . Here and

For the third element, , one gets .

The third condition on B is

The versions for A follow from the order conditions. Let again . The first columns of (30) and (33) show and , which gives . The second column of (30) reads and leads to showing also . Hence, the second condition in (67) is equivalent with

In order to show the last equivalence in (67), we have to look ahead at the forward condition (30) for order 3, which is . This leads to , which is equivalent to . Now, this expression is required in the last reformulation which also uses the second adjoint order condition , yielding

□

Remark 6.

It can be shown that only the first condition in (67) is required if the matrix K is diagonal. This first condition is merely a normalization fixing the free common factor in the class of equivalent methods. The other two conditions are consequences of the order conditions on the standard method with diagonal K. However, the proof is rather lengthy and very technical and is omitted.

The restrictions (67) on the standard method also seem to be sufficient with (61) posing no further restrictions. In fact, with (67) the construction of boundary methods was always possible in Section 2.4.

2.3.2. Combined Conditions for the Starting Method

The starting method has to satisfy only two conditions

The first two columns of the first equation may be solved for and leading to the reduced conditions

The presence of the projection leads to changes in the condition compared to the end method.

Lemma 6.

A necessary condition for the starting method to satisfy (68) is

Proof.

Transposing the second condition from (68) and multiplying with gives

and may now be eliminated from both equations, yielding

Again, may be moved to the left side and leads to (69) since it commutes with . □

The situation is now similar to the one for the end method (also concerning a diagonal form of ) and we consider again the Fredholm condition. The matrix belonging to the matrix product is and it has the transpose . This matrix belongs to the map

For , images of this map are given by

Here, the map alone introduces no new kernel elements, the kernel of (70) coincides with that of the map given by matrices of the form . Since the right-hand side of (69) is , the condition for solvability

is always satisfied since . Hence, no additional restrictions on the standard method are introduced by requiring the existence of starting methods.

2.4. Construction of Peer Triplets

The construction of Peer triplets requires the solution of the collected order conditions from Table 1 and additional optimization of stability and error properties. However, it has been observed that some of these conditions may be related in non-obvious ways, see e.g., Remark 6. This means that the accuracy of numerical solutions may be quite poor due to large and unknown rank deficiencies. Instead, all order conditions were solved here exactly by algebraic manipulation with rational coefficients as far as possible.

The construction of the triplets was simplified by the compatibility conditions (67) allowing the isolated construction of the standard method without the many additional parameters of the boundary methods. Furthermore, an elimination of the matrix B from forward and adjoint conditions derived in [13], see (62), reduces the number of parameters in to elements with additional parameters from the nodes. This is so since is invariant under a common shift of nodes and we chose the increments , as parameters. Still, due to the mentioned dependencies between conditions, for a six-parameter family of methods exists which has been derived explicitly (with quite bulky expressions).

However, optimization of stability properties such as -stability or error constants from (56) was not possible in Maple with six free parameters. Instead, the algebraic expressions were copied to Matlab scripts for some Monte-Carlo-type search routines. The resulting coefficients of the standard method were finally approximated by rational expressions and brought back to Maple for the construction of the two boundary methods.

At first glance, having the full six-parameter family of standard methods at hand may seem to be a good work base. However, the large dimension of the search space may prevent optimal results with reasonable effort. This can be seen below, where the restriction to symmetric nodes or singly-implicit methods yielded methods with smaller than automated global searches.

-stability of the method may be checked [13] by considering the eigenvalue problem for the stability matrix , i.e.,

as an eigenvalue problem for where runs along the unit circle. Since we focus on A-stable methods, exact verification of this property would have been preferable, of course, but an algebraic proof of A-stability seemed to be out of reach. It turned out that the algebraic criterion of the second author [22] is rather restrictive (often corresponding to norm estimates, Lemma 2.8 ibd). On the other hand, application of the exact Schur criterion [20,23] is not straight-forward and hardly feasible, since the (rational) coefficients of the stability polynomial are prohibitively large for optimized parameters (dozens of decimal places of the numerators).

2.4.1. Requirements for the Boundary Methods

Since Lemma 5 guarantees the existence of the two boundary methods and , their construction can follow after that of the standard method. Requirements for these two members of the triplet may also be weakened since they are applied once only. This relaxation applies to the order conditions as shown in Table 1, but also to stability requirements. Still, the number of conditions at the boundaries is so large that the diagonal triangular forms of and respectively have to be sacrificed and replaced by some triangular block structure. Compared to the computational effort of the complete boundary value problem, the additional complexity of the two boundary steps should not be an issue. However, for non-diagonal matrices and , the four additional one-leg-conditions (61) have to be obeyed.

Weakened stability requirements mean that the last forward Peer step (23) for and the two adjoint Peer steps (26) for and need not be A-stable and only nearly zero stable if the corresponding stability matrices have moderate norms. This argument also applies to the two Runge–Kutta steps without solution output (22) and (27). However, the implementation of these steps should be numerically safe for stiff problems and arbitrary step sizes. These steps require the solution of two linear systems with the matrices or, rather

where are block diagonal matrices of Jacobians. These Jacobians are expected to have absolutely large eigenvalues in the left complex half-plane. For such eigenvalues, non-singularity of the matrices (72) is assured under the following eigenvalue condition:

In Table 2 the constants are displayed for all designed Peer triplets as well as the spectral radii and for the boundary steps of the Peer triplet. In the search for the boundary methods with their exact algebraic parameterizations, these spectral radii were minimized, and if they were close to one the values were maximized.

Table 2.

Properties of the boundary methods of Peer triplets.

2.4.2. -Stable Four-Stage Methods

Although our focus is on A-stable methods, we also shortly consider -stable methods. We like to consider BDF4 (backward differentiation formulas) as a benchmark, since triplets based on BDF3 were the most efficient ones in [13]. In order to distinguish the different methods, we denote them in the form APsoaaa, where AP stands for Adjoint Peer method followed by the stage number and the smallest forward and adjoint orders and in the triplet. The trailing letters are related to properties of the method like diagonal or singly diagonal implicitness.

The Peer triplet AP4o43bdf based on BDF4 has equi-spaced nodes , yielding . The coefficients of the full triplet are given in Appendix A.1. Obviously the method is singly-implicit and its well-known stability angle is . We also monitor the norm of the zero-stability matrix , which may be a measure for the propagation of rounding errors. Its value is . Since BDF4 has full global order 4, the error constant from (56) is . Still, the end methods were constructed with the local orders according to Table 1. The matrices of the corresponding starting method have a leading block and a separated last stage. We abbreviate this as block structure . All characteristics of the boundary method are given in Table 2.

Motivated by the beneficial properties of Peer methods with symmetric nodes seen in [13,19], the nodes of the next triplet with the diagonally-implicit standard method were chosen symmetric to a common center, i.e., . Unfortunately, searches for large stability angles with such nodes in the interval did not find A-stable methods, but the following method AP4o43dif with flip symmetric nodes and , which is an improvement of 10 degrees over BDF4. Its coefficients are given in Appendix A.2. Although there exist A-stable methods with other nodes in , this method is of its own interest since its error constant is surprisingly small. The node vector of AP4o43dif includes , leading again to . Further properties of the standard method are and the damping factor . See Table 2 for the boundary methods.

2.4.3. A-Stable Methods

By extensive searches with the full six-parameter family of diagonally-implicit four-stage methods many A-stable methods were found even with nodes in . In fact, regions with A-stable methods exist in at least three of the eight octants in -space. Surprisingly, however, for none of these methods the last node was the rightmost one. In addition, it may be unexpected that some of the diagonal elements of A and K have negative signs. This does not cause stability problems if , see also (72). A-stability assured, the search procedure tried to minimize a linear combination of the error constant and the norm of the stability matrix with small to account for the different magnitudes of these data. As mentioned in Remark 2, it was also necessary to include the damping factor in the minimization process. One of the best A-stable standard methods with general nodes was named AP4o43dig. Its coefficients are given in Appendix A.3. It has an error constant and damping factor , see Table 3. No block structure was chosen in the boundary methods in order to avoid large norms of the mixed stability matrices , see Table 2.

Table 3.

Properties of the standard methods of Peer triplets.

It was observed that properties of the methods may improve, if the nodes have a wider spread than the standard interval . In our setting, the general vector w allows for an end evaluation at any place between the nodes. Since an evaluation roughly in the middle of all nodes may have good properties, in a further search the nodes were restricted to the interval . Indeed, all characteristic data of the method AP4o43die with extended nodes presented in Appendix A.4 have improved. As mentioned before, the standard method is invariant under a common node-shift and a nearly minimal error constant was obtained with the node increments , and . Then, the additional freedom in the choice of was needed for the boundary methods, since the conditions (73) could only be satisfied in a small interval around . The full node vector with this choice has alternating node increments since . The method is A-stable, its error constant is almost half as large as for the method AP4o43dig, and and are smaller, too. The data of the boundary methods can be found in Table 2.

For medium-sized ODE problems, where direct solvers for the stage equations may be used, an additional helpful feature is diagonal singly-implicitness of the standard method. In our context this means that the triangular matrices and have a constant value in the main diagonal. Using the ansatz

for and , the order conditions from Table 1 lead to a cubic equation for with no rational solutions, in general. In order to avoid pollution of the algebraic elimination through superfluous terms caused by rounding errors, numerical solutions for this cubic equations were not used until the very end. This means that also the order conditions for the boundary methods were solved with as a free parameter, only the final evaluation of the coefficients in Appendix A.5 was performed with its numerical value. In addition, in the Matlab-search for A-stable methods, the cubic equation was solved numerically and it turned out that the largest positive solution gave the best properties. Hence, this Peer triplet was named AP4o43sil. For a first candidate with nearly minimal , the damping factor was again too close to one to ensure superconvergence in numerical tests, see Remark 2. However, further searches nearby minimizing the damping factor found a better standard method with . Its nodes are , the diagonal parameter is the largest zero of the cubic equation

Further properties of the standard method are A-stability, nodes in with , norm , and an error constant . The end method has full matrices , see Table 2.

For the sake of completeness, we also present an A-stable diagonally-implicit three-stage method, since in the previous paper [13] we could not find such methods with reasonable parameters. After relaxing the order conditions by using superconvergence, such methods exist. Applying all conditions for forward order and adjoint order , there remains a five-parameter family depending on the node differences , and three elements of A or K. A-stable methods exist in all four corners of the square in the -plane, the smallest errors were observed in the second quadrant. The method AP3o32f with has a node vector with . The coefficients can be found in Appendix A.6. The characteristic data are , , . The starting method is of standard form with lower triangular and diagonal .

3. Results

We present numerical results for all Peer triplets listed in Table 3 and compare them with those obtained for the third-order four-stage one-step W-method Ros3wo proposed in [5] which is linearly implicit (often called semi-explicit in the literature) and also A-stable. All calculations have been carried out with Matlab-Version R2019a, using the nonlinear solver fsolve to approximate the overall coupled scheme (22)–(27) with a tolerance . To illustrate the rates of convergence, we consider three nonlinear optimal control problems, the Rayleigh problem, the van der Pol oscillator, and a controlled motion problem. A linear wave problem is studied to demonstrate the practical importance of A-stability for larger time steps.

3.1. The Rayleigh Problem

The first problem is taken from [24] and describes the behaviour of a tunnel-diode oscillator. With the electric current and the transformed voltage at the generator , the Rayleigh problem reads

Introducing and eliminating the control yields the following nonlinear boundary value problem (see [5] for more details):

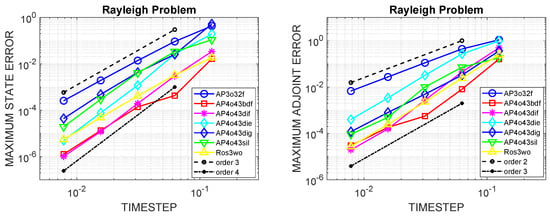

To study convergence orders of our new methods, we compute a reference solution at the discrete time points by applying the classical fourth-order RK4 with steps. Numerical results for the maximum state and adjoint errors are presented in Figure 1 for . AP3o32f and Ros3wo show their expected orders and for state and adjoint solutions, respectively. Order three for the adjoint solutions is achieved by all new four-stage Peer methods. The smaller error constants of AP4o43bdf, AP4o43dif and Ros3wo are clearly visible. The additional superconvergence order four for the state solutions shows up for AP4o43die and AP4o43sil and nearly for AP4o43dif and AP4o43dig. AP4o43bdf does not reach its full order four here, too.

Figure 1.

Rayleigh Problem: Convergence of the maximal state errors − (left) and adjoint errors − (right), .

3.2. The van der Pol Oscillator

Our second problem is the following optimal control problem for the van der Pol oscillator:

Introducing Lienhardt’s coordinates , , and eliminating the control , we finally get the following nonlinear boundary value problem in (see again [5] for more details):

The van der Pol equation with small positive values of gives rise to very steep profiles in , requiring variable step sizes for an efficient numerical approximation. Since the factor appears in the adjoint equations, the boundary value problem is even harder to solve. In order to apply constant step sizes with , we consider the mildly stiff case with for our convergence study.

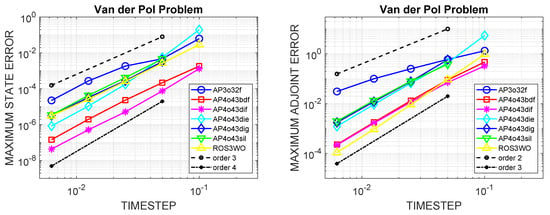

Numerical results for the maximum state and adjoint errors are presented in Figure 2, where a reference solution is computed with AP4o43bdf for . Order three for the adjoint solutions is achieved by all new four-stage Peer methods and also by Ros3wo. The three-stage Peer method AP3o32f drops down to order . For AP4o43dig and AP4o43sil applied with , Matlab’s fsolve was not able to deliver a solution. The additional superconvergence order four for the state solutions is visible for AP4o43die and nearly for AP4o43dif which performs best and beats AP4o43bdf by a factor five. AP4o43bdf does not reach its full order four here. The methods AP4o43sil and AP4o43dig show order three only, whereas AP3o32f and Ros3wo reach their theoretical order three.

Figure 2.

Van der Pol Problem: Convergence of the maximal state errors − (left) and adjoint errors − (right), .

3.3. A Controlled Motion Problem

This problem was studied in [1]. The motion of a damped oscillator is controlled in a double-well potential, where the control acts only on the velocity . The optimal control problem reads

where is the damping parameter and the target final position. As in [1], we set , , and .

Eliminating the scalar control yields the following nonlinear boundary value problem:

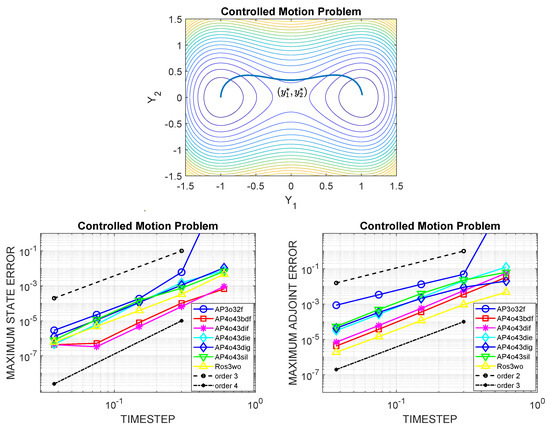

The optimal control must accelerate the motion of the particle to follow an optimal path through the total energy field , shown in Figure 3 on the top, in order to reach the final target behind the saddle point. The cost obtained from a reference solution with is , which is in good agreement with the lower order approximation in [1]. Numerical results for the maximum state and adjoint errors are presented in Figure 3 for . Worthy of mentioning is the repeated excellent performance of AP4o43bdf and AP4o43dif, but also the convincing results achieved by the third-order method Ros3wo. All theoretical orders are well observable, except for AP4o43dig, which tends to order three for the state solutions. A closer inspection reveals that this is caused by the second state , while the first one asymptotically converges with fourth order. However, the three methods AP4o43die, AP4o43dig, and AP4o43sil perform quite similar. Observe that AP3o32f has convergence problems for .

Figure 3.

Controlled Motion Problem: optimal path through the total energy field +− visualized by isolines and exhibiting a saddle point structure (top). Convergence of the maximal state errors − (bottom left) and adjoint errors − (bottom right), .

The stagnation of the state errors for the finest step sizes is due to the limited accuracy of Matlab’s fsolve—a fact which was already reported in [17].

3.4. A Wave Problem

The fourth problem is taken from [25] and demonstrates the practical importance of A-stability. We consider the optimal control problem

where is used. Introducing and eliminating the control yields the following linear boundary value problem:

The exact solutions are given by

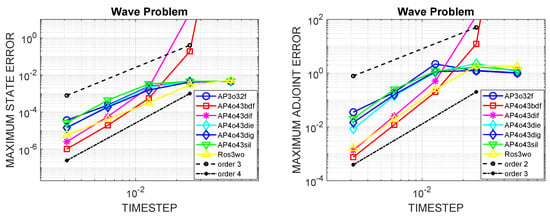

and the optimal control is . The key observation here is that the eigenvalues of the Jacobian of the right-hand side in (106)–(111) are and , which requests appropriate step sizes for only the )-stable methods AP4o43bdf and AP4o34dif due to their stability restrictions along the imaginary axis. Indeed, a closer inspection of the stability region of the (multistep) BDF4 method near the origin reveals that is a minimum requirement to achieve acceptable approximations for problems with imaginary eigenvalues and moderate time horizon. For the four-stage AP4o43bdf with step size , this yields and hence for the wave problem considered. A similar argument applies to AP4o34dif, too. Numerical results for the maximum state and adjoint errors are plotted in Figure 4 for . They clearly show that both methods deliver first feasible results for and below only, but then again outperform the other Peer methods. Once again, Ros3wo performs remarkably well. The orders of convergence for the adjoint solutions are one better than the theoretical values, possibly due to the overall linear structure of the boundary value problem.

Figure 4.

Wave Problem: Convergence of the maximal state errors − (left) and adjoint errors − (right), .

4. Discussion

We have extended our three-stage adjoint Peer two-step methods constructed in [13] to four stages to not only improve the convergence order of the methods but also their stability. Combining superconvergence of a standard Peer method with a careful design of starting and end Peer methods with appropriately enhanced structure, discrete adjoint A-stable Peer methods of order (4,3) could be found. Still, new requirements had to be dealt with for the higher order pair (4,3). The property of A-stability comes with larger error constants and a few other minor structural disadvantages. As long as A-stability is not an issue to solve the boundary value problem arising from eliminating the control from the system of KKT conditions, a Peer variant AP4o43bdf of the -stable BDF4 and the -stable AP4o43dif are the most attractive methods, which perform equally well depending on the problem type. The A-stable methods AP4o43dig and AP4o43die with diagonally implicit standard Peer methods are very good alternatives if eigenvalues close or on the imaginary axis are existent. We have also constructed the A-stable method AP4o43sil with a singly-diagonal main part as an additional option if large linear systems can be still solved by a direct solver and hence the property of requesting one LU-decomposition only is highly valuable. In future work, we plan to train our novel methods in a projected gradient approach to also tackle large-scale PDE constrained optimal control problems with semi-discretizations in space. In these applications, Peer triplets may have to satisfy even more severe requirements.

Author Contributions

Conceptualization, J.L. and B.A.S.; investigation, J.L. and B.A.S.; software, J.L. and B.A.S. All authors have read and agreed to the published version of the manuscript.

Funding

The first author is supported by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) within the collaborative research center TRR154 “Mathematical modeling, simulation

and optimisation using the example of gas networks” (Project-ID 239904186, TRR154/2-2018, TP B01).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

In what follows, we will give the coefficient matrices which define the Peer triplets discussed above. We have used the symbolic option in Maple as long as possible to avoid any roundoff errors which would pollute the symbolic manipulations by a great number of superfluous terms. If possible, we provide exact rational numbers for the coefficients and give numbers with 16 digits otherwise. It is sufficient to only show pairs and the node vector , since all other parameters can be easily computed from the following relations:

with and the special matrices

Appendix A.1. Coefficients of AP4o54bdf

Appendix A.2. Coefficients of AP4o43dif

Appendix A.3. Coefficients of AP4o43dig

Appendix A.4. Coefficients of AP4o43die

Appendix A.5. Coefficients of AP4o43sil

Appendix A.6. Coefficients of AP3o32f

References

- Liu, X.; Frank, J. Symplectic Runge-Kutta discretization of a regularized forward-backward sweep iteration for optimal control problems. J. Comput. Appl. Math. 2021, 383, 113133. [Google Scholar] [CrossRef]

- Sanz-Serna, J. Symplectic Runge–Kutta schemes for adjoint equations, automatic differentiation, optimal control, and more. SIAM Rev. 2016, 58, 3–33. [Google Scholar] [CrossRef]

- Hairer, E.; Wanner, G.; Lubich, C. Geometric Numerical Integration, Structure-Preserving Algorithms for Ordinary Differential Equations; Springer Series in Computational Mathematic; Springer: Berlin/Heidelberg, Germany, 1970; Volume 31. [Google Scholar]

- Matsuda, T.; Miyatake, Y. Generalization of partitioned Runge–Kutta methods for adjoint systems. J. Comput. Appl. Math. 2021, 388, 113308. [Google Scholar] [CrossRef]

- Lang, J.; Verwer, J. W-Methods in Optimal Control. Numer. Math. 2013, 124, 337–360. [Google Scholar] [CrossRef][Green Version]

- Almuslimani, I.; Vilmart, G. Explicit stabilized integrators for stiff optimal control problems. SIAM J. Sci. Comput. 2021, 43, A721–A743. [Google Scholar] [CrossRef]

- Lubich, C.; Ostermann, A. Runge-Kutta approximation of quasi-linear parabolic equations. Math. Comp. 1995, 64, 601–627. [Google Scholar] [CrossRef]

- Ostermann, A.; Roche, M. Runge-Kutta methods for partial differential equations and fractional orders of convergence. Math. Comp. 1992, 59, 403–420. [Google Scholar] [CrossRef]

- Gerisch, A.; Lang, J.; Podhaisky, H.; Weiner, R. High-order linearly implicit two-step peer—Finite element methods for time-dependent PDEs. Appl. Numer. Math. 2009, 59, 624–638. [Google Scholar] [CrossRef]

- Gottermeier, B.; Lang, J. Adaptive Two-Step Peer Methods for Incompressible Navier-Stokes Equations. In Proceedings of the ENUMATH 2009, the 8th European Conference on Numerical Mathematics and Advanced Applications, Uppsala, Sweden, 29 June–3 July 2009; Kreiss, G., Lötstedt, P., Malqvist, A., Neytcheva, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 387–395. [Google Scholar]

- Albi, G.; Herty, M.; Pareschi, L. Linear multistep methods for optimal control problems and applications to hyperbolic relaxation systems. Appl. Math. Comput. 2019, 354, 460–477. [Google Scholar] [CrossRef]

- Sandu, A. Reverse automatic differentiation of linear multistep methods. In Advances in Automatic Differentiation; Lecture Notes in Computational Science and Engineering; Bischof, C., Bücker, H., Hovland, P., Naumann, U., Utke, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; Volume 64, pp. 1–12. [Google Scholar]

- Lang, J.; Schmitt, B. Discrete adjoint implicit peer methods in optimal control. J. Comput. Appl. Math. 2022, 416, 114596. [Google Scholar] [CrossRef]

- Hager, W. Runge-Kutta methods in optimal control and the transformed adjoint system. Numer. Math. 2000, 87, 247–282. [Google Scholar] [CrossRef]

- Troutman, J. Variational Calculus and Optimal Control; Springer: New York, NY, USA, 1996. [Google Scholar]

- Bonnans, F.; Laurent-Varin, J. Computation of order conditions for symplectic partitioned Runge-Kutta schemes with application to optimal control. Numer. Math. 2006, 103, 1–10. [Google Scholar] [CrossRef]

- Herty, M.; Pareschi, L.; Steffensen, S. Implicit-Explicit Runge-Kutta schemes for numerical discretization of optimal control problems. SIAM J. Numer. Anal. 2013, 51, 1875–1899. [Google Scholar] [CrossRef]

- Sandu, A. On the properties of Runge-Kutta discrete adjoints. Lect. Notes Comput. Sci. 2006, 3394, 550–557. [Google Scholar]

- Schröder, D.; Lang, J.; Weiner, R. Stability and consistency of discrete adjoint implicit peer methods. J. Comput. Appl. Math. 2014, 262, 73–86. [Google Scholar] [CrossRef]

- Montijano, J.; Podhaisky, H.; Randez, L.; Calvo, M. A family of L-stable singly implicit Peer methods for solving stiff IVPs. BIT 2019, 59, 483–502. [Google Scholar] [CrossRef]

- Schmitt, B.; Weiner, R. Efficient A-stable peer two-step methods. J. Comput. Appl. Math. 2017, 316, 319–329. [Google Scholar] [CrossRef]

- Schmitt, B. Algebraic criteria for A-stability of peer two-step methods. Technical Report. arXiv 2015, arXiv:1506.05738. [Google Scholar]

- Jackiewicz, Z. General Linear Methods for Ordinary Differential Equations; John Wiley&Sons: Hoboken, NJ, USA, 2009. [Google Scholar]

- Jacobson, D.; Mayne, D. Differential Dynamic Programming; American Elsevier Publishing: New York, NY, USA, 1970. [Google Scholar]

- Dontchev, A.; Hager, W.; Veliov, V. Second-order Runge-Kutta approximations in control constrained optimal control. SIAM J. Numer. Anal. 2000, 38, 202–226. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).