Abstract

In this work, we propose D³-Tree, a dynamic distributed deterministic structure for data management in decentralized networks, obtained by engineering and extending an existing decentralized structure. Through an extensive experimental study, we verify that the implemented structure outperforms other well-known hierarchical tree-based structures, since it provides better complexities for load-balancing operations. More specifically, the structure achieves an $O(\log N)$ amortized bound (N is the number of nodes present in the network), using an efficient deterministic load-balancing mechanism that is general enough to be applied to other hierarchical tree-based structures. Moreover, our structure achieves $O(\log N)$ worst-case search performance. Last but not least, we investigate the structure's fault tolerance, which has not been sufficiently addressed in previous work, both theoretically and through rigorous experimentation. We prove that D³-Tree is highly fault-tolerant and achieves $O(\log N)$ amortized search cost under massive node failures, accompanied by a high success rate. Afterwards, by incorporating this novel balancing scheme into the ART (Autonomous Range Tree) structure, we go one step further to achieve sub-logarithmic complexity and propose the ART+ structure. ART+ achieves an $O(\log_b^2 \log N)$ communication cost for query and update operations (b is a double-exponential power of 2 and N is the total number of nodes). Moreover, ART+ is a fully dynamic and fault-tolerant structure, which supports join/leave node operations in $O(\log\log N)$ expected w.h.p. (with high probability) number of hops and performs load-balancing in $O(\log\log N)$ amortized cost.

1. Introduction

Decentralized systems have generated worldwide interest in the computer networking community. Although they have existed for many years, they have become prevalent nowadays and are promoted as the future of Internet networking. They are widely used for sharing resources and storing extensive data sets, using small computers instead of large, costly servers. Typical examples include cloud computing environments, peer-to-peer (P2P) systems, and the Internet.

According to [1], in a decentralized network, individual nodes act as both suppliers and consumers of resources, without the need for centralized coordination by servers, in contrast to the centralized client-server model, where client nodes request access to resources provided by central servers.

In decentralized systems, data are stored at the network nodes, and the most crucial operations are data search and data updates. A decentralized network is represented by a graph, a logical overlay network, whose nodes correspond to the network nodes, whereas its edges may correspond not to existing communication links but to communication paths.

We assume constant-size messages between nodes through links and asynchronous communication. Furthermore, we assume that the network provides an upper bound on the time needed for a node to send a message and receive an acknowledgement. In this way, the network provides a mechanism to identify communication problems, which may refer to communication links or nodes that are down. The complexity (cost) of an operation is measured by the number of messages issued during its execution (internal computations at nodes are considered insignificant).

With respect to its structure, the overlay supports the operations Join (of a new node v; v communicates with an existing node u in order to be inserted into the overlay) and Departure (of an existing node u; u leaves the overlay announcing its intent to other nodes of the overlay).

Moreover, the overlay implements an indexing scheme for the stored data, supporting the operations Insert (a new element), Delete (an existing element), Search (for an element), and Range Query (for elements in a specific range).

Range query processing in decentralized network environments is a problem that is hard to solve efficiently and scalably. In cloud infrastructures, an important requirement is the monitoring of thousands of computer nodes, which often requires support for range queries: consider range queries issued to identify under-utilized nodes in order to assign them more tasks, or to identify overloaded nodes in order to avoid bottlenecks in the cloud. For example, we wish to execute range queries such as:

SELECT NodeID FROM CloudNodes WHERE Low < utilization < High

or both single and range queries such as:

SELECT NodeID FROM CloudNodes WHERE Low < utilization < High AND os = UNIX

Moreover, in cloud infrastructures that support social network services like Facebook, stored user profiles are distributed in several nodes and we wish to retrieve user activity information, executing range queries such as:

SELECT COUNT(userID) FROM CloudNodes WHERE 3/1/2015 < time < 3/31/2015 AND userID = 123456 AND NodeID IN Facebook

An acceptable solution for processing range queries in such large-scale decentralized environments must scale in terms of the number of nodes and the number of data items stored.

For very large volume data (trillions of data items at millions of nodes), the classic logarithmic complexity offered by solutions in literature is still too expensive for single and range queries.

Further, all available solutions incur large overheads concerning other critical operations, such as join/leave of nodes and insertion/deletion of items.

Our aim in this work is to provide a comprehensive solution that outperforms related work for all primary operations (search of elements, join/departure of nodes, insertion/deletion of elements, and load-balancing), as well as w.r.t. the routing state that must be maintained to support these operations. In particular, our ultimate goal is to achieve sub-logarithmic complexity for all the above operations.

2. Related Work

Extensive work has been done on search and update techniques, which are essential operations for distributed data in decentralized networks. In general, decentralized systems can be classified into two broad categories: Distributed Hash Table (DHT)-based systems and tree-based systems. Examples of the former, which constitute the majority, include Chord, CAN, Pastry, Symphony, Tapestry [2], and P-Ring [3]. DHT-based systems generally support exact-match queries well and (successfully) use probabilistic methods to distribute the workload equally among nodes.

Since hashing destroys the ordering of keys, DHT-based systems typically cannot straightforwardly support range queries or more complex queries based on data ordering (e.g., nearest-neighbour and string prefix queries). Some efforts towards addressing range queries have been made in [4,5]; however, they return approximate answers and make exact searching highly inefficient.

Pagoda [6] achieves constant node degree but has polylogarithmic complexity for the majority of operations. SHELL [7] maintains large routing tables but achieves constant amortized cost for the majority of operations. Both are complicated hybrid structures, and their practicality (especially concerning fault-tolerant operations) is questionable.

P-Ring [3] is a fully distributed and fault-tolerant system that supports both exact-match and range queries, achieving $O(\log_d N + k)$ range search performance, where d is the order of the ring (the maximum fanout of the hierarchical structure on top of the ring; at the lowest level of the hierarchy, each node maintains a list of its first d successors in the ring) and k is the answer size. It also provides load-balancing by maintaining a load imbalance factor of at most $2+\epsilon$ in a stable system, for any given constant $\epsilon > 0$, and has a stabilization process for fixing inconsistencies caused by node failures and updates.

FMapper [8] is a novel distributed read mapper that employs asynchronous I/O and parallel computations between different compute elements of the core groups, and uses dynamic task scheduling and asynchronous data transfers. However, this highly scalable system focuses on optimal scheduling; being hash-based, it investigates neither range queries nor the load-balancing performance of the whole cluster.

Tree-based systems are based on hierarchical structures. Since they maintain the ordering of data, they support range queries more naturally and efficiently, as well as a broader range of operations. On the other hand, they lack the simplicity of DHT-based systems, and they do not always guarantee data locality and load balancing in the whole system.

Important examples of such systems include Family Trees [2], BATON [9], BATON* [10], SPIS [11] and Skip List-based schemes like Skip Graphs (SG), NoN SG, SkipNet (SN), Deterministic SN, Bucket SG, Skip Webs, Rainbow Skip Graphs (RSG) and Strong RSG [2], which use randomized techniques to create and maintain the hierarchical structure.

We should emphasize that, w.r.t. load-balancing, the solutions provided in the literature are either heuristics, or provide expected bounds under certain assumptions, or provide amortized bounds at the expense of increasing the memory size per node. In particular, BATON [9] provides a decentralized overlay with load-balancing based on data migration. However, its $O(\log N)$ amortized bound (N is the number of nodes in the network) is valid only subject to a probabilistic assumption about the number of nodes taking part in the data migration process; thus, it is an amortized expected bound. Moreover, its successor, BATON*, exploits the advantages of a higher fanout (number of children per node) to achieve a reduced search cost of $O(\log_m N)$, where m is the fanout. However, the higher fanout leads to a larger update and load-balancing cost of $O(m \log_m N)$.

SPIS [11] is the decentralized version of the Interpolation Search Tree (IST), the most recent version of which was presented in [12], and its concurrent version in [13]. It exploits the Apache Spark engine, the state-of-the-art solution for big data management and analytics. In particular, it is a Spark-based indexing scheme that supports range queries in large-scale decentralized environments and scales well w.r.t. the number of nodes and the number of data items stored. Several solutions towards this goal have been proposed in recent years; however, they turn out to be inadequate at the envisaged scale, since the classic linear or even logarithmic complexity (for point queries) is still too expensive, and range query processing is even more demanding. SPIS goes one step further and supports range query processing with sub-logarithmic complexity. However, the weak points of this structure are load balancing and fault tolerance after massive failures. For more details, see [14].

In the last decade, a deterministic decentralized tree structure, called D²-Tree [15], was presented that overcomes many of the aforementioned weaknesses of tree-based systems. In particular, D²-Tree achieves $O(\log N)$ searching cost, amortized update cost both for element updates and for node joins and departures, and a deterministic amortized $O(\log N)$ bound for load-balancing. Its practicality, however, has not been tested so far.

As far as fault tolerance is concerned, P-Ring [3] is considered highly fault-tolerant, using Chord's fault-tolerant algorithms [16]. BATON [9] maintains vertical and horizontal routing information not only for efficient search but also to offer a large number of alternative paths between two nodes. In its successor, BATON* [10], fault tolerance is greatly improved due to the higher fanout.

For a small fanout, approximately 25% of nodes must fail before the structure becomes partitioned, while increasing the fanout up to 10 leads to higher fault tolerance (a larger fraction of failed nodes is needed to partition the structure). D²-Tree can tolerate the failure of a few nodes, but cannot afford a massive number of node failures.

3. Our Contribution

In this work, we focus on hierarchical tree-based overlay networks that directly support range and more complex queries. We introduce a promising deterministic decentralized structure for distributed data, called D³-Tree. D³-Tree is an extension of D²-Tree [15] that adopts all of its strengths and extends it in two respects: it introduces an enhanced fault-tolerant mechanism, and it can efficiently answer search queries when massive node failures occur. D³-Tree achieves the same deterministic worst-case $O(\log N)$ bound as D²-Tree for search operations, and amortized bounds for update and load-balancing operations, using an efficient deterministic weight-based load-balancing mechanism that is general enough to be applied to other hierarchical tree-based structures. Moreover, D³-Tree answers search queries in $O(\log N)$ amortized cost under massive node failures.

Note that all previous structures provided only empirical evidence of their capability to deal with massive node failures; no previous structure provided a theoretical guarantee for searching in such a case.

Our second contribution is an implementation of D³-Tree and a subsequent comparative experimental evaluation (cf. Section 7) against its main competitors BATON, BATON* and P-Ring.

Our experimental study verified the theoretical results (as well as those of D²-Tree) and showed that D³-Tree outperforms other state-of-the-art hierarchical tree-based structures. Furthermore, our experiments demonstrated that D³-Tree has a significantly low redistribution rate (structure redistributions after node joins/departures), while element load-balancing is rarely necessary.

We also investigated the structure’s fault tolerance in case of massive node failures and showed that it achieves a significant success rate in element queries.

Afterwards, by incorporating this novel balancing scheme into ART (Autonomous Range Tree), the state-of-the-art hierarchical distributed structure presented in [17], we go one step further to achieve sub-logarithmic complexity and propose the ART+ structure.

ART+ builds its outer level by grouping clusters of nodes, and its communication cost for query and update operations is $O(\log_b^2 \log N)$ hops, where the base b is a double-exponential power of two and N is the total number of nodes. Moreover, ART+ is a fully dynamic and fault-tolerant structure, which supports join/leave node operations in $O(\log\log N)$ expected w.h.p. number of hops and performs load-balancing in $O(\log\log N)$ amortized cost.

Each cluster node of ART+ is organized as a D³-Tree. A comparison of the state-of-the-art decentralized structures with our contribution is given in Table 1.

Table 1.

Comparison of P-Ring, BATON, BATON*, D²-Tree, D³-Tree, ART and ART+.

As noted earlier, preliminary parts of this work were presented in [18,19]. In this work, we further contribute by providing additional technical details regarding the key operations of D³-Tree: node and element updates, load-balancing, and search queries with and without massive node failures.

We also provide the proof for Lemma 2 about the amortized search cost in case of massive node failures. Moreover, we include a thorough presentation of the redistribution, load-balancing and extension-contraction operations, omitted in our previously published work.

We have also included 11 algorithms in pseudo-code to clarify the most significant operations of our structure. The evaluation of D³-Tree is further extended with additional experiments on (a) node and element updates, taking into account different values of specific invariants of D³-Tree (described in Section 4), and (b) the detailed behaviour of D³-Tree in case of massive node failures.

The corresponding section also includes a brief presentation of the graphical interface of the D³-Tree simulator.

The rest of this paper is organized as follows. The weight-based mechanism used in D³-Tree is briefly described in Section 4. Then, Section 5 presents our proposed structure by describing its theoretical background and discussing the enhancements and implementation aspects. In Section 6, we present the ART+ structure, which exploits the performance of D³-Tree. Section 7 hosts the performance evaluation for both structures. The paper concludes in Section 8.

4. A Weight-Based Load-Balancer

In this section, we describe a weight-based load-balancing mechanism that provides an efficient solution for element updates in hierarchical tree-based structures. All definitions used in this section and throughout this paper are compiled in Table 2. The main idea of this mechanism is to distribute elements almost equally among nodes by means of weights, a metric that shows how uneven the load among nodes is. When the load is uneven, a data migration process is initiated to distribute the elements equally. The method has two parts: (a) it provides an efficient, local update of weight information in a tree when elements are added to or removed from the leaves, using virtual weights; and (b) it provides an efficient load-balancing mechanism, which is activated when necessary.

Table 2.

Symbols and Definitions.

More specifically, when an element is added to or removed from a leaf u in a tree structure T, the weights on the path from u to the root must be updated. This is a costly operation when it is performed on every element update. Instead of updating weights every time, a new metric is defined, the virtual weight. Assume that node v lies at height h and its children are at height $h-1$. The virtual weight of v is defined as the weight stored in node v. In particular, for node v the following invariants are maintained:

Invariant 1.

.

Invariant 2.

.

where . The constants and are chosen such that for all nodes the virtual weight will be within a constant factor of the real weight, i.e., .

When an update takes place at leaf u, the mechanism traverses the path from u to the root, updating the weights by $\pm 1$, until a node z is found for which Invariants 1 and 2 hold. Let v be the child of z on this path, for which Invariant 1 or 2 does not hold. All weights on the path from u to v are then recomputed: for each node on this path, its weight is updated by taking the sum of the weights of its children plus the number of elements that the node itself carries.

Another invariant, which is crucial for the load-balancing mechanism, involves the criticality of two brother nodes p and q (representing the difference in their densities). The invariant guarantees that there are no large differences between densities:

Invariant 3.

For two brothers p and q, it holds that .

For example, choosing we get that the density of any node can be at most twice or half of that of its brother.

When an update takes place at leaf u, weights are updated as described above. Then, the load-balancing mechanism redistributes the elements among leaves when the load is not distributed evenly enough. In particular, starting from u, the highest ancestor w that is unbalanced w.r.t. its brother z (i.e., Invariant 3 is violated) is located. Finally, the elements in the subtree of their father v are redistributed uniformly so that the densities of the two brothers become equal; this procedure is henceforth called redistribution of node v.
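The uniform redistribution step can be illustrated with a minimal Python sketch. It assumes, for illustration only, that the subtree of v is given as a flat list of per-node sorted element lists in in-order, and it ignores weights, messages and the Invariant 3 trigger (whose formula is not reproduced here); `redistribute_elements` is a hypothetical name.

```python
from typing import List


def redistribute_elements(nodes: List[List[int]]) -> List[List[int]]:
    """Spread the elements of a subtree evenly over its nodes.

    `nodes` lists the nodes of the subtree in in-order, each with its sorted
    elements. Concatenating in in-order and re-slicing keeps the global sort
    order, so every node still holds a contiguous sub-range afterwards.
    """
    if not nodes:
        return []
    elements = [x for node in nodes for x in node]  # in-order concatenation
    base, extra = divmod(len(elements), len(nodes))
    result, start = [], 0
    for i in range(len(nodes)):
        size = base + (1 if i < extra else 0)  # first `extra` nodes take one more
        result.append(elements[start:start + size])
        start += size
    return result


if __name__ == "__main__":
    print(redistribute_elements([[1, 2, 3, 4, 5], [6], []]))  # [[1, 2], [3, 4], [5, 6]]
```

Node loads differ by at most one element afterwards, which is what makes the densities of the two brothers (almost) equal.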

The weight-based mechanism is slightly modified to be applied also in node updates. Virtual size is defined and the same mechanism is applied using invariants similar to Invariants 1 and 2. Moreover, a new invariant is defined, involving node criticality.

Invariant 4.

The node criticality of all nodes is in the range .

Invariant 4 implies that the number of nodes in the left subtree of a node v is at least half and at most twice the corresponding number of its right subtree.

The weight-based mechanism described above (and its slight modification) achieves amortized bounds for weight updates and an $O(\log N)$ amortized cost for redistribution. It is general enough to be applied to other hierarchical tree-based structures and was proposed and thoroughly described in [15].

5. The D³-Tree

In this section, we present our proposed structure, D³-Tree, which introduces several enhancements over the solutions in the literature and over its predecessor, D²-Tree [15].

In general, a D³-Tree structure with N nodes and n data elements residing on them achieves: (i) $O(\log N)$ space per node; (ii) $O(\log N)$ deterministic searching cost; (iii) deterministic amortized update cost both for element updates and for node joins and departures; (iv) a deterministic amortized $O(\log N)$ bound for load-balancing; (v) $O(\log N)$ deterministic amortized search cost in case of massive node failures.

5.1. The Structure

Let N be the number of nodes present in the network and let n denote the size of the data. The structure consists of two levels. The upper level is a Perfect Binary Tree (PBT) of height $O(\log N)$. The leaves of this tree are representatives of the buckets that constitute the lower level of the D³-Tree. Each bucket is a set of nodes structured as a doubly linked list.

The number of nodes of the PBT is independent of the number of elements stored in the structure. Each node v of the D³-Tree maintains five sets of links: the standard links that form the tree and additional links to other nodes.

The first four sets are inherited from the D²-Tree, while the fifth set is new and contributes to a better fault-tolerance mechanism.

- Links to its father and its children.

- Links to its adjacent nodes based on an in-order traversal of the tree.

- Links to nodes at the same level as v. The links are distributed in exponential steps: the first link points to a node (if there is one) $2^0$ positions to the left (right), the second $2^1$ positions to the left (right), and the i-th link $2^{i-1}$ positions to the left (right). These links constitute the routing table of v and require $O(\log N)$ space per node.

- Links to leftmost and rightmost leaf of its subtree. These links accelerate the search process and contribute to the structure’s fault tolerance when a considerable number of nodes fail.

- For leaf nodes only, links to the buckets of the nodes in their routing tables. The first link points to a bucket $2^0$ positions to the left (right), the second $2^1$ positions to the left (right), and the i-th link $2^{i-1}$ positions to the left (right). These links require $O(\log N)$ space per node and keep the structure fault-tolerant, since each bucket has multiple links to the PBT.
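The exponential link pattern of the routing tables can be made concrete with a small Python sketch. It assumes, for illustration, that nodes on a level are identified by 0-based positions from left to right; `routing_links` is a hypothetical helper, not part of the original implementation.

```python
from typing import Dict, List


def routing_links(position: int, level_size: int) -> Dict[str, List[int]]:
    """Same-level links of the node at `position` (0-based) on a level of
    `level_size` nodes: the i-th link (i = 1, 2, ...) points 2**(i-1)
    positions to the left and to the right, if such a node exists."""
    left: List[int] = []
    right: List[int] = []
    step = 1
    while step < level_size:
        if position - step >= 0:
            left.append(position - step)
        if position + step < level_size:
            right.append(position + step)
        step *= 2  # exponential steps: 1, 2, 4, 8, ...
    return {"left": left, "right": right}


if __name__ == "__main__":
    print(routing_links(5, 8))  # {'left': [4, 3, 1], 'right': [6, 7]}
```

At most two links are created per power of two below the level size, which gives the $O(\log N)$ space bound. Since each leaf represents exactly one bucket, applying the same offsets to leaf positions would also yield the bucket links of the fifth set.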

The next lemma captures some important properties of the routing tables.

Lemma 1.

(i) If a node v contains a link to node u in its routing table, then the parent of v also contains a link to the parent of u, unless u and v have the same father. (ii) If a node v contains a link to node u in its routing table, then the left (right) sibling of v also contains a link to the left (right) sibling of u, unless there are no such nodes. (iii) Every non-leaf node has two adjacent nodes in the in-order traversal, which are leaves.
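Part (i) of Lemma 1 can be sanity-checked by brute force on small trees. The sketch below assumes a heap-like numbering (node p on a level has parent p // 2 on the level above) together with the exponential offsets of the routing tables; it is an illustrative check, not a proof, and all names are hypothetical.

```python
from typing import List


def routing_targets(position: int, level_size: int) -> List[int]:
    """All same-level nodes at distance 2**(i-1) from `position`."""
    targets = []
    step = 1
    while step < level_size:
        for t in (position - step, position + step):
            if 0 <= t < level_size:
                targets.append(t)
        step *= 2
    return targets


def is_power_of_two(x: int) -> bool:
    return x > 0 and x & (x - 1) == 0


def check_lemma1_part_i(height: int) -> bool:
    """Check Lemma 1(i) on every level of a perfect binary tree of `height`.

    The parent level has half as many nodes, so its routing tables contain
    exactly the nodes at a power-of-two distance; the lemma therefore holds
    when the parents of v and u are a power of two apart.
    """
    for level in range(1, height + 1):
        size = 2 ** level
        for v in range(size):
            for u in routing_targets(v, size):
                pv, pu = v // 2, u // 2  # heap-style parent positions
                if pv == pu:
                    continue  # same father: the lemma makes no claim
                if not is_power_of_two(abs(pv - pu)):
                    return False
    return True


if __name__ == "__main__":
    print(check_lemma1_part_i(8))  # True
```

The check succeeds because a link of length $2^k$ with $k \ge 1$ maps to a parent-level distance of exactly $2^{k-1}$, while a link of length 1 either stays under the same father or maps to distance 1.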

Regarding the index structure of the D³-Tree, the range of all values stored in it is partitioned into sub-ranges, each of which is assigned to a node of the overlay. An internal node v with range $[x_v, x'_v]$ may have a left child u and a right child w with ranges $[x_u, x'_u]$ and $[x_w, x'_w]$ respectively, such that $x_u < x'_u < x_v < x'_v < x_w < x'_w$. Ranges are dynamic in the sense that they depend on the values maintained by the node.

5.2. Node Joins and Departures

5.2.1. Handling Node Updates

When a node z makes a join request (Algorithm 1) to v, v forwards the request to an adjacent leaf u. If v is a binary node, the request is forwarded to its left adjacent node w.r.t. the in-order traversal, which is always a leaf (unless v is itself a leaf). If v is a bucket node, the request is forwarded to the bucket representative, which is a leaf. Then, node z is added to the doubly linked list of the bucket represented by u. For node joins, we make the simplifying assumption that the new node carries no elements.

Algorithm 1. Join(Node newNode)

The new node could be inserted anywhere in the bucket (as the first, the last, or an intermediate node), but we prefer to place it after the most loaded node of the bucket. Thus, the load is shared, and the new node stores half of the elements of the most loaded node. Finally, the left and right adjacent nodes of the newly inserted node update their next and previous links, respectively, to point to z, and z creates new links to both of them.
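The placement rule for a joining node can be sketched in Python as follows. The sketch assumes that a bucket's doubly linked list is modelled as a Python list in in-order and that each node keeps its elements sorted; `BucketNode` and `join_bucket` are hypothetical names, and link and weight updates are not modelled.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BucketNode:
    name: str
    elements: List[int] = field(default_factory=list)  # kept sorted


def join_bucket(bucket: List[BucketNode], new_node: BucketNode) -> int:
    """Insert `new_node` right after the most loaded node of a non-empty bucket.

    The new node takes the upper half of the host's sorted elements, so the
    in-order sequence of elements is unchanged. Returns the new node's index.
    """
    if not bucket:
        raise ValueError("the sketch assumes a non-empty bucket")
    host_idx = max(range(len(bucket)), key=lambda i: len(bucket[i].elements))
    host = bucket[host_idx]
    keep = (len(host.elements) + 1) // 2  # host keeps the lower half (one more if odd)
    new_node.elements = host.elements[keep:]
    host.elements = host.elements[:keep]
    bucket.insert(host_idx + 1, new_node)
    return host_idx + 1


if __name__ == "__main__":
    b = [BucketNode("A", [1, 2]), BucketNode("B", [3, 4, 5, 6, 7]), BucketNode("C", [8])]
    join_bucket(b, BucketNode("D"))
    print([(n.name, n.elements) for n in b])
    # [('A', [1, 2]), ('B', [3, 4, 5]), ('D', [6, 7]), ('C', [8])]
```

Handing the upper half to the successor, rather than the lower half, is what keeps the elements in in-order sequence without any further shifting.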

When a node v leaves the network, it is replaced by an existing node, to preserve the in-order adjacency. All navigation data are copied from the departing node v to the replacement node, along with the elements of v. If v is an internal binary node, then it is replaced by its right adjacent node, which is a leaf and which in turn is replaced by the first node z in its bucket. On the other hand, if v is a leaf, it is directly replaced by z.

If the departing node belongs to a bucket, no replacement occurs; all elements stored at v are simply copied to the previous node of v (or to the next node, if the former does not exist). Then v is free to depart.
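The departure of a bucket node can be sketched as below. The sketch assumes each bucket node is modelled only by its sorted element list, with the bucket listed in in-order; the replacement chain for leaves and internal binary nodes is not modelled, and `depart_bucket_node` is a hypothetical name.

```python
from typing import List


def depart_bucket_node(bucket: List[List[int]], idx: int) -> None:
    """Remove the bucket node at `idx`, handing its sorted elements to the
    previous node in the bucket, or to the next node if there is no previous one.

    Appending to the previous node (or prepending to the next one) keeps the
    global in-order sequence of elements.
    """
    if len(bucket) < 2:
        # Not modelled here: a lone bucket node has no bucket neighbour.
        raise ValueError("the sketch needs another node in the bucket")
    leaving = bucket.pop(idx)
    if idx > 0:
        bucket[idx - 1].extend(leaving)  # previous node precedes v in in-order
    else:
        bucket[0][:0] = leaving  # the old next node is now at index 0


if __name__ == "__main__":
    b = [[1, 2], [3], [4, 5]]
    depart_bucket_node(b, 1)
    print(b)  # [[1, 2, 3], [4, 5]]
```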

After a node join or departure, the modified weight-based mechanism is activated and updates the sizes by $\pm 1$ on the path from leaf u to the root (Algorithm 2), as long as Invariant 1 or 2 does not hold. When the first node w is accessed for which Invariants 1 and 2 hold, the nodes in the subtree of its child q on the path have their sizes recomputed (Algorithm 3).

Algorithm 2. UpdateVirtualSize(BinaryNode leaf, NodeUpdate operation)

Algorithm 3. ComputeSizeInSubtree()

Afterwards, the mechanism traverses the path from leaf u to the root, in order to find the first node (if such a node exists) for which Invariant 4 is violated (Algorithm 4) and performs a redistribution in its subtree.
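The leaf-to-root check for Invariant 4 can be sketched in Python. Since the invariant's formula is not reproduced here, the sketch encodes the ratio condition stated after Invariant 4 (the left subtree holds at least half and at most twice as many nodes as the right one); the path and size inputs, and both function names, are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def satisfies_invariant4(left_size: int, right_size: int) -> bool:
    """Left subtree holds at least half and at most twice as many nodes as the right one."""
    return 2 * left_size >= right_size and left_size <= 2 * right_size


def first_violation(path: List[str], sizes: Dict[str, Tuple[int, int]]) -> Optional[str]:
    """Walk a leaf-to-root `path` and return the first node violating Invariant 4.

    `sizes[node]` = (nodes in left subtree, nodes in right subtree), bucket
    nodes included. Returns None if every node on the path is balanced.
    """
    for node in path:
        left, right = sizes[node]
        if not satisfies_invariant4(left, right):
            return node
    return None


if __name__ == "__main__":
    sizes = {"p1": (3, 4), "p2": (10, 4), "root": (14, 14)}
    print(first_violation(["p1", "p2", "root"], sizes))  # p2
```

In the structure, the returned node is the one whose subtree would undergo a node redistribution.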

Algorithm 4. CheckNodeCriticality(BinaryNode leaf)

5.2.2. Node Redistribution

The redistribution guarantees that if there are z nodes in total in the y buckets of the subtree of v, then after the redistribution each bucket maintains either $\lfloor z/y \rfloor$ or $\lceil z/y \rceil$ nodes. The amortized redistribution cost is $O(\log N)$, which is verified through the experiments presented in this work.

The redistribution in the subtree of v is carried out as follows (Algorithm 5). We assume that the subtree of v at height h has K buckets. A traversal of all buckets is performed in order to determine the exact number $n_v$ of nodes in the buckets of the subtree of v. Then, the first k buckets will contain $\lfloor n_v/K \rfloor + 1$ nodes after redistribution, where $k = n_v \bmod K$. The remaining $K - k$ buckets will contain $\lfloor n_v/K \rfloor$ nodes. The redistribution starts from the rightmost bucket b and is performed in an in-order fashion, so that the elements in the nodes are not affected.

We assume that b has q extra nodes, which must be transferred to other buckets. Bucket b maintains a link dest to the next bucket $b'$ on the left, in which the q extra nodes should be put. The q extra nodes are removed from b and added to $b'$. The crucial step in this procedure is that, during the transfer of nodes, internal nodes of the PBT are also updated, since the in-order traversal must remain untouched. More specifically, the representative z of b and its left in-order adjacent w are replaced by nodes of bucket b, and then z and w, along with the remaining extra nodes of b, are added to the tail of bucket $b'$. Afterwards, the horizontal and vertical links of nodes that point to the replaced binary nodes are updated. Finally, bucket b informs $b'$ to take over, and the same procedure applies again with $b'$ as the source bucket.

The case where q nodes must be transferred to bucket b from bucket $b'$ is completely symmetric. In general, q nodes are removed from the tail of bucket $b'$; two of them replace nodes w and z, and the remaining nodes are added to the head of b. The intriguing part comes when $b'$ contains fewer nodes than the q that b needs. In this case, b has to find the remaining nodes in the buckets further to the left, so dest travels towards the leftmost bucket of the subtree until $\sum_{i=1}^{j} |b_i| \ge q$, where $|b_i|$ is the size of the i-th bucket on the left (so $b_1 = b'$). Then, nodes of $b_j$ move to $b_{j-1}$, nodes from $b_{j-1}$ are transferred to $b_{j-2}$, and so on, until dest goes back to $b_1$ and q nodes are moved from $b_1$ into b.
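The target bucket sizes and the net effect of the right-to-left transfers can be computed with the following Python sketch. It only counts nodes per bucket, assumes at least one bucket, and does not model the replacement of the representatives z and w or the link updates; the function names are hypothetical. A negative flow corresponds to the symmetric case in which a bucket borrows nodes from the left, possibly cascading through several buckets as dest walks leftwards.

```python
from typing import List


def target_bucket_sizes(total_nodes: int, num_buckets: int) -> List[int]:
    """First k = total mod K buckets get floor(total/K) + 1 nodes, the rest floor(total/K)."""
    base, k = divmod(total_nodes, num_buckets)
    return [base + 1] * k + [base] * (num_buckets - k)


def boundary_flows(sizes: List[int]) -> List[int]:
    """Net number of nodes crossing each boundary between adjacent buckets.

    flows[i] > 0: that many nodes move from bucket i+1 to bucket i (leftwards);
    flows[i] < 0: nodes move from bucket i to bucket i+1 (rightwards).
    Boundaries are scanned right to left, as the redistribution starts at the
    rightmost bucket.
    """
    targets = target_bucket_sizes(sum(sizes), len(sizes))
    flows = [0] * (len(sizes) - 1)
    surplus = 0
    for i in range(len(sizes) - 1, 0, -1):
        surplus += sizes[i] - targets[i]  # excess accumulated right of the boundary
        flows[i - 1] = surplus
    return flows


def apply_flows(sizes: List[int], flows: List[int]) -> List[int]:
    result = list(sizes)
    for i, f in enumerate(flows):
        result[i] += f
        result[i + 1] -= f
    return result


if __name__ == "__main__":
    sizes = [1, 2, 9]
    flows = boundary_flows(sizes)
    print(flows)  # [3, 5]
    print(apply_flows(sizes, flows))  # [4, 4, 4]
```

For sizes [9, 2, 1], the flows are [-5, -3]: the middle bucket holds only 2 nodes but must pass 3 to the right, so nodes have to come from further left, which mirrors the leftward walk of dest.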

5.2.3. Extension–Contraction

Throughout joins and departures of nodes, the size of buckets can increase undesirably, or can decrease so much that some buckets may become empty. Either situation violates the D³-Tree principle for the bucket size:

where .

Algorithm 5. RedistributeSubtree()

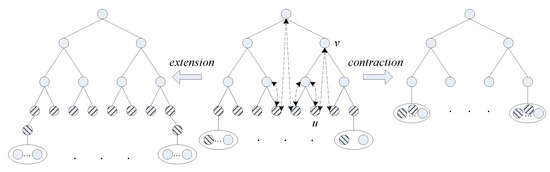

The structure guarantees that (1) is always true, by employing two operations on the PBT, extension and contraction (Figure 1).

Figure 1.

The initial D³-Tree structure (middle) and the operations of extension (left) and contraction (right).

These operations are activated when a redistribution occurs at the root of the PBT; they add one level to the PBT when the bucket size exceeds the upper bound of (1), or delete one level from the PBT when it falls below the lower bound of (1).

The extension (Algorithm 6) is carried out as follows: the last level of the PBT is affected, and a new level of leaves, as well as a new set of buckets, is created using nodes from the old buckets. In particular, each leaf u and its bucket $B = \langle x_1, \dots, x_m \rangle$ are replaced by a 2-level binary subtree $T_u$, preserving at the same time the in-order adjacency. Thus, the left leaf of $T_u$ is the old leaf u, the root of $T_u$ is node $x_k$ of B, where k is a split index near the middle of B, the bucket of u contains nodes $x_1, \dots, x_{k-1}$, the right leaf of $T_u$ is node $x_{k+1}$ of B, and its bucket contains the remaining nodes $x_{k+2}, \dots, x_m$ of the old bucket B. During the process, all in-order adjacency links and father-child links are updated. After the extension has been carried out, leaves and nodes at height 1 reconstruct their routing tables, and all binary nodes update the link to the rightmost leaf of their subtree (the leftmost leaf is not affected).
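The node split performed by an extension can be sketched as follows. The split index $k = \lceil m/2 \rceil$ is an assumption made here for illustration (it gives two new buckets of almost equal size), and nodes are plain string labels; `TwoLevelSubtree` and `extend_leaf` are hypothetical names.

```python
from typing import List, NamedTuple


class TwoLevelSubtree(NamedTuple):
    left_leaf: str
    left_bucket: List[str]
    root: str
    right_leaf: str
    right_bucket: List[str]


def extend_leaf(leaf: str, bucket: List[str]) -> TwoLevelSubtree:
    """Replace leaf u and its bucket B = [x1, ..., xm] by a 2-level subtree.

    Assumed split index k = ceil(m / 2) (1-based): x_k becomes the root and
    x_{k+1} the right leaf, so the two new buckets have almost equal size.
    """
    m = len(bucket)
    if m < 2:
        raise ValueError("a bucket needs at least two nodes to be split")
    k = (m + 1) // 2
    return TwoLevelSubtree(
        left_leaf=leaf,
        left_bucket=bucket[: k - 1],  # x1 .. x_{k-1}
        root=bucket[k - 1],  # x_k
        right_leaf=bucket[k],  # x_{k+1}
        right_bucket=bucket[k + 1:],  # x_{k+2} .. x_m
    )


def inorder(t: TwoLevelSubtree) -> List[str]:
    # left leaf, its bucket, root, right leaf, its bucket
    return [t.left_leaf, *t.left_bucket, t.root, t.right_leaf, *t.right_bucket]


if __name__ == "__main__":
    t = extend_leaf("u", ["x1", "x2", "x3", "x4", "x5", "x6"])
    print(t.root, t.right_leaf)  # x3 x4
```

The `inorder` helper makes the key property checkable: the new subtree lists exactly the old sequence u, x1, ..., xm, so the in-order adjacency is preserved.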

Algorithm 6. PerformExtension()

The contraction (Algorithm 7) is carried out as follows: the last level of the PBT is deleted and every pair of adjacent buckets is merged into one bucket. In particular, each 2-level subtree $T$ whose root is at height 1, together with its buckets $B_1$ and $B_2$, is replaced by a leaf u and a bucket B, preserving at the same time the in-order adjacency. The leaf u is the left leaf of $T$, and the new bucket B contains the nodes of $B_1$, the root of $T$, the right leaf of $T$ and, finally, the nodes of $B_2$. During the process, all in-order adjacency links and father-child links are updated. After the contraction has been carried out, the leaves reconstruct their routing tables and all binary nodes update their link to the rightmost leaf.
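The merge performed by a contraction is the inverse of the extension split and can be sketched in a few lines. Nodes are plain string labels and `contract_subtree` is a hypothetical name; link and routing-table updates are not modelled.

```python
from typing import List, Tuple


def contract_subtree(left_leaf: str, left_bucket: List[str], root: str,
                     right_leaf: str, right_bucket: List[str]) -> Tuple[str, List[str]]:
    """Merge a 2-level subtree and its two buckets into one leaf and one bucket.

    The left leaf survives as leaf u; the new bucket lists B1, the old root,
    the old right leaf and B2, which is exactly their previous in-order.
    """
    return left_leaf, [*left_bucket, root, right_leaf, *right_bucket]


if __name__ == "__main__":
    print(contract_subtree("u", ["a", "b"], "c", "d", ["e"]))  # ('u', ['a', 'b', 'c', 'd', 'e'])
```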

Algorithm 7. PerformContraction()

It is obvious that these two operations are quite costly since they involve a reconstruction of the overlay, but this reconstruction rarely happens.

5.3. Single and Range Queries

The search for an element a may be initiated from any node v at level l. If v is a bucket node and its range contains a, the search terminates; otherwise, the search is forwarded to the bucket representative, which is a binary node. In case v is a node of the PBT (Algorithm 8), let z be the node whose range of values contains a, and assume w.l.o.g. that a is larger than all values in the range of v. The case where a is smaller is completely symmetric. First, we perform a horizontal binary search at level l of v using the routing tables, searching for a node u with right sibling w (if such a sibling exists) such that a is larger than all values of u and smaller than all values of w, unless a is found in the range of u or of any visited node of level l, in which case the search terminates.

More specifically, we keep travelling to the right using the rightmost links of the routing tables of nodes in level l (the most distant ones), until we find a node q whose values are all larger than a, or until we have reached the rightmost node of level l. In the first case, a lies somewhere between q and the last visited node to the left of q, so we start travelling to the left, decreasing our travelling step by 1. We continue travelling left and right, gradually decreasing the travelling step, until we find the siblings u and w mentioned above at step 0. In the second case, a is larger than all values of the rightmost node of level l, and according to the in-order traversal the search is confined to the right subtree of that node.
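The horizontal phase described above can be approximated by the following Python sketch over one level, whose nodes are given as (low, high) ranges from left to right. It departs from the text in one respect, stated here as an assumption: instead of repeatedly following the most distant link and then travelling back and forth, it tries each power-of-two step once in decreasing order (binary lifting), which moves only rightwards but also needs $O(\log N)$ hops. It returns adjacent nodes on the level rather than the siblings u and w, and the vertical phase is not modelled; `horizontal_search` is a hypothetical name.

```python
from typing import List, Tuple


def horizontal_search(level: List[Tuple[int, int]], start: int, a: int) -> Tuple[int, bool, int]:
    """Binary-lifting search along one PBT level using power-of-two links.

    `level` holds the (low, high) range of each node on the level, left to
    right; `start` is the index of the initiating node, whose range lies left
    of `a` (the w.l.o.g. case). Returns (index u of the last node whose range
    starts at or before a, whether a lies inside u's range, hops used).
    If a is not inside u's range, it lies between u and its right neighbour.
    """
    n = len(level)
    pos, hops = start, 0
    step = 1
    while step * 2 < n:
        step *= 2  # longest link any node on this level can have
    while step >= 1:
        nxt = pos + step
        if nxt < n and level[nxt][0] <= a:
            pos, hops = nxt, hops + 1  # follow the link of length `step`
        step //= 2  # decrease the travelling step
    low, high = level[pos]
    return pos, low <= a <= high, hops


if __name__ == "__main__":
    level = [(20 * i, 20 * i + 5) for i in range(16)]
    print(horizontal_search(level, 0, 213))  # (10, False, 2)
    print(horizontal_search(level, 0, 222))  # (11, True, 3)
```

In the first call, 213 falls in the gap between nodes 10 and 11, so in the full structure the vertical search would continue in the subtrees between them.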

Algorithm 8. Search(integer element)

Having located nodes u and w, the horizontal search procedure terminates and the vertical search is initiated. Node z is either the common ancestor of u and w (Algorithm 9), or in the right subtree rooted at u (Algorithm 10), or in the left subtree rooted at w. If u is a binary node, it contacts the rightmost leaf y of its subtree. If a does not exceed the largest value of y, an ordinary top-down search from node u suffices to find z. Otherwise, node z is in the bucket of y, or is its right in-order adjacent (which is also the common ancestor of u and w), or lies in the subtree of w. If z belongs to the subtree of w, a symmetric search is performed.

Algorithm 9. Search(SearchAncestor(integer element))

Once z is located, the search is successful if a is stored in z; otherwise, a is not stored in the structure. The search for an element a is carried out in steps, as verified through the experiments presented in this work.

A range query [a, b] initiated at node v invokes a search operation for element a. The node z whose range contains a returns to v all of its elements that fall in [a, b]. If all elements of z are reported, the range query is forwarded to the right in-order adjacent node, and this continues until an element larger than b is reached for the first time.
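The forwarding rule of the range query can be simulated on plain lists. The sketch assumes that the nodes are given as sorted element lists in in-order and that index z is the node located by the initial search for a; `range_query` is an illustrative name, and the returned hop count covers only the forwards to right in-order adjacents.

```python
from typing import List, Tuple


def range_query(nodes: List[List[int]], z: int, a: int, b: int) -> Tuple[List[int], int]:
    """Report all elements in [a, b], starting at node z (sketch).

    nodes: sorted element lists of all nodes, in in-order.
    """
    result: List[int] = []
    hops = 0
    for i in range(z, len(nodes)):
        if i > z:
            hops += 1  # query forwarded to the right in-order adjacent
        for x in nodes[i]:
            if x > b:  # first element larger than b: the query stops
                return result, hops
            if x >= a:
                result.append(x)
    return result, hops
```

For instance, with nodes [1, 3, 5], [7, 9], [11, 13], [15], z = 0 and the range [3, 12], the sketch reports 3, 5, 7, 9 and 11 after two forwards.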

5.4. Element Insertions and Deletions

5.4.1. Handling Element Updates

Assume that an update operation (insertion/deletion) involving element a is initiated at node v. By invoking a search operation, the node u whose range contains a is located, and the update is performed on u: a is inserted into or deleted from u, depending on the request.

To apply the weight-based mechanism for load balancing, the element should be inserted into a bucket node (as in node joins) or into a leaf. However, u can be any node in the structure, even an internal node of the PBT. Therefore, if u is a bucket node or a leaf, a is inserted into u and no further action is necessary.

If u is an internal node of the PBT, a is inserted into u. Then the first element of u (elements within a node are kept sorted) is removed from u and inserted into q, the last node of the bucket of the left in-order adjacent of u, which preserves the order of elements in the in-order traversal. In this way, the insertion is shifted to a bucket node. Element deletion is handled similarly.
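A minimal sketch of the shift, assuming that elements are integers kept in sorted Python lists. The deletion counterpart (moving the largest element of q to the front of u) is our reading of "handled similarly", not a rule stated in the text; both helper names are hypothetical.

```python
import bisect
from typing import List


def insert_at_internal(u: List[int], q: List[int], a: int) -> None:
    """Insert a into internal PBT node u and shift u's smallest element to q.

    q is the last node of the bucket of u's left in-order adjacent, so every
    element of q precedes every element of u in the in-order sequence.
    """
    bisect.insort(u, a)  # elements inside a node are kept sorted
    q.append(u.pop(0))   # u's smallest element becomes q's largest


def delete_at_internal(u: List[int], q: List[int], a: int) -> None:
    """Assumed symmetric case: delete a from u and refill u from the end of q."""
    if not q:
        raise ValueError("q must be non-empty in this sketch")
    u.remove(a)          # raises ValueError if a is not stored in u
    u.insert(0, q.pop()) # q's largest element becomes u's smallest
```

After either operation, q followed by u is still sorted, and u keeps its original number of elements, so the change in load lands on the bucket node q.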

After an element update in leaf u or in its bucket, the weight-based mechanism is activated and updates the weights on the path from leaf u to the root, as long as Invariant 1 or Invariant 2 does not hold. When the first node w for which Invariants 1 and 2 hold is reached, the weights of the nodes in the subtree of its child q on the path are recomputed. Afterwards, the mechanism traverses the path from leaf u to the root to find the first node (if any) that violates Invariant 3, and performs load-balancing in its subtree.

Algorithm 10. Search(SearchSubtree(integer element))

5.4.2. Load Balancing

The load-balancing mechanism guarantees that, if the subtree of v contains $e$ elements in total and has size $|T_v|$ (the total number of nodes in the subtree of v, including v), then after load-balancing each node stores either $\lfloor e/|T_v| \rfloor$ or $\lceil e/|T_v| \rceil$ elements. The load-balancing cost is , which is verified through experiments.

The load-balancing in the subtree of v is carried out as follows (Algorithm 11). We assume that v has $|T_v|$ nodes in its subtree, storing $e$ elements in total. A bottom-up computation of the weights of all nodes in the subtree is performed to determine the weight of v. Then, after load-balancing, the first k nodes will contain $\lceil e/|T_v| \rceil$ elements, where $k = e \bmod |T_v|$, and the remaining $|T_v| - k$ nodes will contain $\lfloor e/|T_v| \rfloor$ elements. The redistribution starts from the rightmost node w of the rightmost bucket b and is performed in an in-order fashion.
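The target loads follow from a single integer division. A minimal sketch, where `e` is the total number of elements in the subtree of v and `size` is the number of nodes $|T_v|$; the function name `balanced_targets` is hypothetical.

```python
from typing import List


def balanced_targets(e: int, size: int) -> List[int]:
    """Number of elements each of the `size` nodes should hold after balancing.

    The first k = e mod size nodes get ceil(e / size) elements and the rest
    get floor(e / size), so the counts sum to e.
    """
    base, k = divmod(e, size)  # base = floor(e / size), k = e mod size
    return [base + 1] * k + [base] * (size - k)
```

For e = 10 elements and $|T_v| = 3$ nodes, k = 1, so the targets are [4, 3, 3].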

Algorithm 11. Search(LoadBalanceSubtree())

We assume that w has m extra elements that must be transferred to other nodes. Hence, node w needs a link to a node, dest, into which the m additional elements will be inserted. To locate dest, we consider the following cases: (i) if w is a bucket node, dest is its left node in the bucket, unless w is the first node of the bucket, in which case dest is the bucket representative; (ii) if w is a leaf, dest is the left in-order adjacent of w; and (iii) if w is an internal binary node, its left in-order adjacent is a leaf, and dest is the last node of that leaf's bucket. Having located dest, the first m elements of w are removed from w and appended to the end of the element queue of dest, to preserve the indexing structure of the tree. Then, the ranges of w and dest are updated accordingly.

The case where m elements must be transferred to w from dest is completely symmetric: the last m elements of dest are removed from dest and inserted into the first m places of the element queue of w. The intriguing case is when dest contains fewer than the m elements that w needs. In this case, dest travels towards the leftmost node of the subtree, following the in-order traversal, until enough elements are available to cover the shortfall, taking into account the number of elements of each node on the left. Then, the required elements are transferred rightwards from each visited node to its right in-order neighbour, as dest moves backwards, until dest returns to its initial node and m elements are moved from it into w.
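The right-to-left transfers can be simulated on plain lists. This is a simplified variant of the procedure described above, under stated assumptions: each node is a sorted list, the lists are given in in-order, `targets` holds the desired number of elements per node, and whenever dest lacks elements, the shortfall is first pulled from further left recursively, so some elements may be moved more than once. All names are hypothetical.

```python
from typing import List


def redistribute(nodes: List[List[int]], targets: List[int]) -> List[List[int]]:
    """Rebalance in-order node lists in place so nodes[i] holds targets[i] elements.

    Only boundaries between in-order neighbours move, so the global sorted
    order of elements is preserved.
    """
    assert len(nodes) == len(targets)
    assert sum(len(n) for n in nodes) == sum(targets)

    def give(j: int, m: int) -> None:
        # Move the last m elements of nodes[j] (dest) to the front of nodes[j + 1].
        shortfall = m - len(nodes[j])
        if shortfall > 0:
            # dest is too small: travel further left and pull the shortfall first.
            assert j > 0, "cannot happen when the totals match"
            give(j - 1, shortfall)
        cut = len(nodes[j]) - m
        nodes[j + 1][:0] = nodes[j][cut:]
        del nodes[j][cut:]

    # Start from the rightmost node and move left, one boundary at a time.
    for j in range(len(nodes) - 2, -1, -1):
        w = nodes[j + 1]
        m = len(w) - targets[j + 1]
        if m > 0:
            # w has m extra elements: its first m go to the end of dest.
            nodes[j].extend(w[:m])
            del w[:m]
        elif m < 0:
            # w needs -m elements: take the last -m elements of dest.
            give(j, -m)
    return nodes
```

Because every node to the right of the current boundary already holds its target, the nodes to the left always contain enough elements in total, which is why the recursive pull never runs past the leftmost node.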

5.5. Fault Tolerance

Searches and updates in the D³-Tree do not favour any particular node, in particular nodes near the root; these nodes are therefore not critical, and their failure causes no more problems than the failure of any other node. However, a single node can easily be disconnected from the overlay when all the nodes it is connected to fail. This means that four failures (two adjacent nodes and two children) are enough to disconnect the root. The nodes most easily disconnected are those near the root, since their routing tables are small.

When a node w discovers that v is unreachable, the network initiates a node withdrawal procedure by reconstructing the routing tables of v, so that v is removed smoothly, as if it were departing. If v belongs to a bucket, it is removed from the structure and the links of its adjacent nodes are updated.

If v is an internal binary node, its right adjacent node u is first located, using Lemma 1, so that u can replace v. More specifically, assume that node w discovered that v is unreachable during some operation.

Considering all possible relative positions of w and v, we distinguish the following cases, in each of which we want to locate the right child q of v, which leads to u. First, if w and v are on the same level, we locate q by Lemma 1(i), and the right adjacent node u of v is then the leftmost leaf in the subtree of q. More precisely, if w is connected to v by the i-th link of its routing table, then the right child of w is connected to q by the (i+1)-th link of its routing table.

Second, if w is the father of v, then, by Lemma 1(i), its other child p has a link to the missing node v, and the right child of p has a link to q, so u is located. Third, if w is a child of v, then u is easily located.
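The link arithmetic behind the same-level case can be checked numerically. The sketch assumes a numbering convention of our own: the nodes of each level are indexed from 0, left to right, the children of node p are 2p and 2p + 1, and link i of a routing table spans a horizontal distance of 2^i. Under these assumptions, if w reaches v through link i, the right child of w reaches the right child q of v through link i + 1, because the distance between the right children is exactly twice the distance between their parents. The function names are hypothetical.

```python
from typing import Tuple


def children(p: int) -> Tuple[int, int]:
    # Left and right child positions on the next level (assumed numbering).
    return 2 * p, 2 * p + 1


def link_index(distance: int) -> int:
    # Link i spans distance 2**i; the distance must be a power of two.
    assert distance > 0 and distance & (distance - 1) == 0
    return distance.bit_length() - 1


def child_link(w: int, v: int) -> Tuple[int, int]:
    """Return (i, j): w reaches v via link i; w's right child reaches q via link j."""
    i = link_index(abs(v - w))
    j = link_index(abs(children(v)[1] - children(w)[1]))
    return i, j


# Every same-level pair joined by a routing link obeys j == i + 1.
for w in range(64):
    for i in range(6):
        for v in (w - 2 ** i, w + 2 ** i):
            if v >= 0:
                assert child_link(w, v) == (i, i + 1)
```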

If v is a leaf, it is replaced by the first node u of its bucket. However, in the predecessor of the D³-Tree, if a leaf was found unreachable, its bucket could not be contacted, since the only link between v and its bucket had been lost. The D³-Tree eliminates this weakness by maintaining multiple links towards each bucket, distributed in exponential steps (in the same way as the horizontal adjacency links). In this way, when w is unable to contact v, it directly contacts the first node u of v's bucket, and u replaces v.

In any case, the elements stored in v are lost. Moreover, the navigation data of u (the right adjacent of v) are copied to the first node z of its bucket, which takes its place, and the routing tables of u are recomputed.

5.6. Single Queries with Node Failures

Searching for an element in a network where several nodes have failed raises some very intriguing issues. The problem can be considered two-dimensional, since the search must be both successful and cost-effective. A successful search for element a is one that locates the target node z whose range contains a.

A search is unsuccessful when (i) z is unreachable, or (ii) a path to z exists, but the search algorithm cannot follow it to locate z because of failures of intermediate nodes. Unfortunately, the predecessor of the D³-Tree does not provide a search algorithm for the case of node failures, since it does not sufficiently address the structure's fault tolerance. In the following, we present the key features of our search algorithm, mainly through examples, owing to the complexity of its implementation.

The search procedure is similar to the simple search described in Section 5.3. One difference in the horizontal search is that, if the most distant right adjacent of v is unreachable, v keeps contacting its right adjacent nodes, decreasing the step by 1 each time, until it finds a reachable node q.

If all right adjacents are unreachable, v contacts its left adjacents; afterwards, it tries to contact its children and its father; as a last resort, when all other nodes have failed, it contacts its left/right in-order adjacents and its leftmost/rightmost leaf. Contacting children, in-order adjacents or leaves means changing the search level.
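The order in which v falls back to other contacts can be expressed as a priority list. The sketch is illustrative only: node identifiers are plain strings, `is_alive` stands in for whatever reachability test the network uses, the routing links are assumed to be stored nearest first (index i at distance 2^i), and the most-distant-first order for left adjacents is our assumption, since the text does not fix it. The function name `next_contact` is hypothetical.

```python
from typing import Callable, Optional, Sequence


def next_contact(
    right_links: Sequence[str],
    left_links: Sequence[str],
    children: Sequence[str],
    father: Optional[str],
    inorder_adjacents: Sequence[str],
    extreme_leaves: Sequence[str],
    is_alive: Callable[[str], bool],
) -> Optional[str]:
    """Return the first reachable node in the fallback order, or None."""
    candidates = [
        *reversed(right_links),  # right adjacents, most distant first, step - 1 each time
        *reversed(left_links),   # left adjacents (most-distant-first is an assumption)
        *children,               # changes the search level downwards
        *([father] if father is not None else []),
        *inorder_adjacents,      # last resort: left/right in-order adjacents
        *extreme_leaves,         # ... and the leftmost/rightmost leaf
    ]
    for node in candidates:
        if is_alive(node):
            return node
    return None
```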

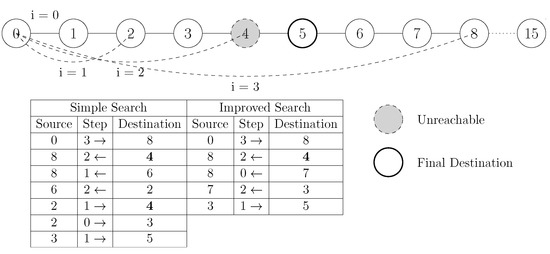

If a is greater than the values of q, the search continues to the right using the most distant right adjacent of q; otherwise, it continues to the left, and q contacts its most distant left adjacent p that lies to the right of v. If p is unreachable, q does not decrease the travelling step by 1, but directly contacts its nearest left adjacent (at step = 0) and asks it to search to the left. This improvement reduces the number of messages that are bound to fail, given the exponential positions of nodes in routing tables and the nature of binary horizontal search. For example, in Figure 2, search starts from and contacts since has fallen. No node contacts from then on, and the number of messages is reduced by 2.

Figure 2.

Example of binary horizontal search with node failures.

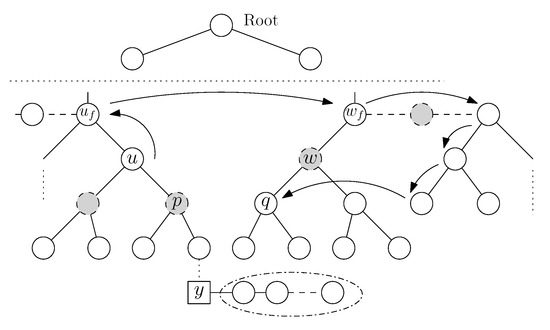

A vertical search to locate z is always initiated between two siblings u and w that are either both active or one of which is unreachable, as in Figure 3, where the left sibling u is active and the right sibling w is unreachable. In both cases, we first search the subtree of the active sibling. Next, we contact the common ancestor, and then, if the other sibling is unreachable, the active sibling tries to reach the corresponding child of the unreachable sibling (the right child of a left sibling, or the left child of a right sibling). When this child is found, the search is forwarded to its subtree.

Figure 3.

Example of vertical search between u and unreachable w.

Assume w.l.o.g. that the left sibling u is active and the right sibling w is unreachable, as shown in Figure 3. First, u contacts the rightmost leaf y of its subtree. If y is reachable and a does not exceed the largest value in the range of y, or if y is unreachable, an ordinary top-down search from u is initiated to find z. If a node on the search path is unreachable, the mechanism described below is activated to contact its children. Otherwise, if a exceeds the range of y, node z is in the bucket of y, or is the right in-order adjacent of y (which is also the common ancestor of u and w), or lies in the left subtree of w.

In general, when node u wants to contact the left (right) child of an unreachable node w, the contact is made through the routing table of u's own left (right) child. If u's child is also unreachable (Figure 3), u contacts its father, which in turn contacts the father of w using Lemma 1(i). Then the father of w, applying Lemma 1(ii) twice in succession, contacts its grandchild through its left and right adjacents and their grandchildren.

If the initial node v is a bucket node, the search terminates if its range contains a; otherwise, the search is forwarded to the bucket representative. If the bucket representative has failed, the bucket contacts its other representatives to the right or left until it finds a reachable one. The procedure then continues as described above for the case of a binary node.

The following lemma gives the amortized upper bound for the search cost in case of massive failures of nodes.

Lemma 2.

The amortized search cost in case of massive node failures is .

Proof.

Let be the number of failed nodes in a network of N nodes and n elements. In general , and we may assume w.l.o.g. that , for some (large) constant expressing the space capacity of each node (i.e., how many data elements can be stored in it).

In the worst case, we want to execute search operations. If a node v cannot be contacted during the search procedure, the network initiates a node withdrawal procedure to remove v. In case of massive failures, node withdrawals will not be negligible; hence the network will often activate the redistribution mechanism to avoid the structure’s imbalance. The redistribution cost is bounded by the amortized bound .

In the worst case, the maximum number of redistributions will be (equal to the number of failed nodes), so the whole search process will require hops, where

The amortized cost will then be

where is some constant with .

□

6. The ART+ Structure

In this section, we briefly describe ART+ and present its theoretical background. ART+ is similar to its predecessor, ART [17], regarding the structure's outer level. Their difference, which yields the performance enhancements, is that each cluster node of ART+ is structured as a D³-Tree [18] instead of the BATON* [10] structure used as the inner level of ART.

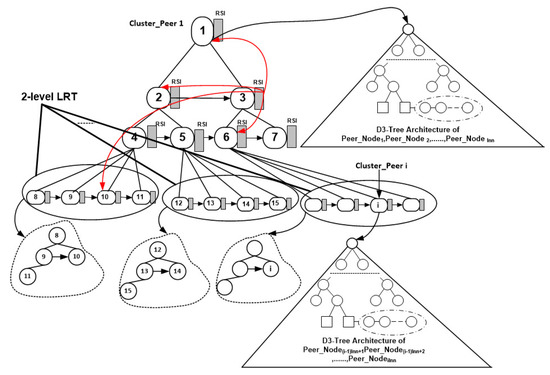

6.1. Building the ART+ Structure

The backbone structure of ART+ is similar to LRT (Level Range Tree), with some modifications that improve its performance and increase the robustness of the whole system. ART+ is built by grouping cluster nodes that have the same ancestor and recursively organizing them in a tree structure. A cluster node is defined as a bucket of ordered nodes. The innermost level of nesting (recursion) is characterized by a tree in which no more than b cluster nodes share the same direct ancestor, where b is a double-exponential power of two (e.g., 2, 4, 16, …). Thus, multiple independent trees are imposed on the collection of cluster nodes. The height of ART+ is in the worst case. The ART+ structure remains unchanged WHP. Figure 4 illustrates a simple example, where b = 2.

Figure 4.

The ART structure for b = 2.

The degree of the cluster nodes at level is , where indicates the number of cluster nodes at level i. It holds that and . At initialization step, the 1st node, the node, the node and so on are chosen as bucket representatives, according to the balls in bins combinatorial game presented in [20].

Let n be w-bit keys, N be the total number of nodes and be the total number of cluster nodes. Each node with label i (where ) of a random cluster, stores ordered keys that belong in the range , where . Each cluster node with label (where ) stores ordered nodes with sorted keys belonging in the range , where or is the number of cluster nodes.

ART+ stores cluster nodes only, each of which is structured as an independent decentralized architecture that changes dynamically after node join/leave and element insert/delete operations inside it. In contrast to its predecessor, ART, whose inner level was structured as a BATON*, each cluster node of ART+ is structured as a D³-Tree.

Each cluster node is equipped with a routing table named Random Spine Index (RSI), which stores pointers (red arrows in Figure 4) to cluster nodes belonging to a random spine of the tree (instead of the Left Spine Index (LSI) of LRT, which stores pointers to the nodes of the leftmost spine). Moreover, instead of using fat Collection Index (CI) tables, which store pointers to the collections of nodes present at the same level, the appropriate collection of cluster nodes is accessed using a 2-level LRT structure. In ART, the overlay of cluster nodes remains unaffected WHP in the expected case when nodes join or leave the network.

6.2. Load Balancing

The join/leave of nodes inside a cluster node is modelled as the combinatorial game of balls and bins presented in [20]. In this way, for a random sequence of join/leave node operations, the load of each cluster node never exceeds a polylogarithmic size and never becomes zero, WHP in the expected case. In skewed sequences, though, the load of each cluster node may become in the worst case.

The load-balancing mechanism of the D³-Tree, as described previously, has an amortized cost of , where K is the total number of nodes in the D³-Tree. Thus, in an ART+ structure, the cost of load-balancing is amortized.

6.3. Routing Overhead

We overcome the problem of fat CI tables with routing overhead of in the worst case, using a 2-level LRT structure. The 2-level LRT is an LRT structure over buckets each of which organizes collections in an LRT manner, where Z is the number of collections at the current level and c is a big positive constant. As a consequence, the routing information overhead becomes in the worst case.

6.4. Search Algorithms

Since the structure’s maximum number of nesting levels is and at each nesting level i we have to apply the standard LRT structure in collections, the whole searching process requires hops. Then, we have to locate the target node by searching the respective decentralized structure. Through the polylogarithmic load of each cluster node, the total query complexity follows. Exploiting now the order of keys on each node, range queries require hops, where is the answer size.

6.5. Join/Leave Operations

A node u can make a join/leave request to a node v located at cluster node W. Since the size of W is bounded by a polylogarithmic size WHP in the expected case, the node join/leave can be carried out in hops.

The outer structure of ART+ remains unchanged WHP, as mentioned before, but each D³-Tree structure changes dynamically after node join/leave operations. According to the performance evaluation of the D³-Tree, the node join/leave can be carried out in hops.

6.6. Node Failures and Network Restructuring

In the ART+ structure, as in ART, the overlay of cluster nodes remains unchanged WHP in the expected case, so the algorithms for node failure and network restructuring within each cluster node are those of the decentralized architecture used. Finally, the D³-Tree is a highly fault-tolerant structure, since it supports procedures for node withdrawal and handles massive node failures efficiently.

7. Experimental Study

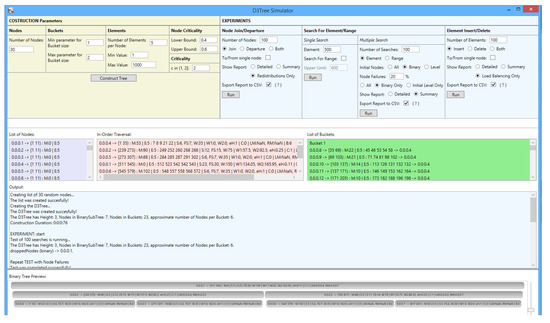

To evaluate the performance of the D³-Tree, we built a simulator with a user-friendly interface and a graphical representation of the structure (a standalone desktop application developed in Visual Studio 2010, available at https://github.com/sourlaef/d3-tree-sim, accessed on 5 March 2022).

Figure 5 presents the user interface of the D³-Tree simulator. At the top left, the user can construct a new tree structure after setting the tree parameters. At the top right, the user can conduct experiments on node joins/departures, element insertions/deletions, and single and range queries, setting the parameters for each experiment. The center of the screen displays helpful information about the tree construction and the experiments. Finally, a graphical representation of the structure is shown at the bottom.

Figure 5.

The D³-Tree simulator user interface.

7.1. Performance Evaluation for D³-Tree

To evaluate the cost of operations, we ran experiments with numbers of nodes N ranging from 1000 to 10,000, and compared the D³-Tree directly to BATON, BATON*, and P-Ring. BATON* is a state-of-the-art decentralized architecture, and P-Ring outperforms DHT-based structures in range queries and achieves slightly better load-balancing performance than BATON. For a structure of N nodes, 1000 × N elements were inserted. We used the number of messages passed to measure the system's performance.

7.1.1. Cost of Node Joins/Departures

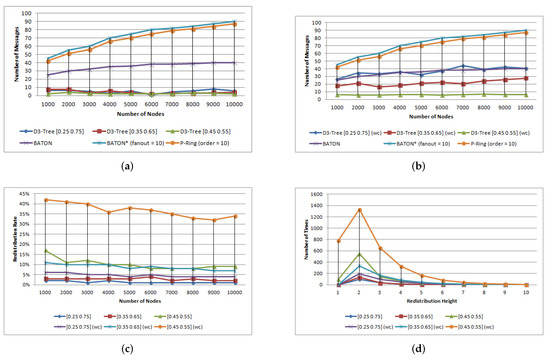

To measure the network performance for node updates, we conducted experiments for three different value ranges of node criticality: [0.25, 0.75], [0.35, 0.65] and [0.45, 0.55].

For a network of N initial nodes, we performed node updates. In a preliminary set of experiments with mixed operations (joins/departures), we observed that redistributions rarely occurred, leading to negligible node update costs. Hence, we decided to perform only one type of update, namely joins, which are expected to cause several redistributions.

Figure 6a shows the average amortized redistribution cost, while Figure 6b depicts the same metric in the worst case, where the same node (the leftmost leaf) is the host node for all node joins. Note that we considered only the amortized cost of node joins that cause redistributions, since otherwise the amortized cost is negligible. Figure 6c shows the redistribution rate of the D³-Tree in the average and worst cases.

Figure 6.

Node update operations. (a) average case, (b) worst case, (c) redistribution rate of winner D³-Tree, (d) redistribution height of winner D³-Tree.

Our experiments show that, even in the worst-case scenario, the node update and redistribution mechanism of the D³-Tree achieves a lower amortized redistribution cost than those of BATON, BATON*, and P-Ring.

We also observed that, in the average case, Invariant 4 is rarely violated during node joins, so redistributions are rarely invoked (Figure 6c). This also depends on the range to which node criticality belongs. When the range is narrowed, more redistributions take place during node joins, but the amortized cost is low, since the majority of redistributions occur in subtrees of low height, as shown in Figure 6d. This is more evident in the worst case. When we use wide ranges, more node joins take place before a redistribution occurs, making the redistribution operation more costly, since many nodes have accumulated in the bucket of the leftmost leaf.

Figure 6d shows in detail the distribution of redistribution heights for different node criticality ranges in a network of 10,000 initial nodes. We observe that, in the worst case, the number of redistributions is more than twice that of the average case.

7.1.2. Cost of Element Insertions/Deletions

To measure the network performance for element updates, we conducted experiments for three different value ranges of criticality: [0.90, 1.10], [0.67, 1.50] and [0.53, 1.90], formed from the values 1.1, 1.5 and 1.9 of the constant c, respectively. For a network of N nodes and n elements, we performed n element updates.

In a preliminary set of experiments with mixed operations (insertions/deletions), we observed that load-balancing operations rarely occurred, leading to negligible element update costs. Hence, we decided to perform only one type of update, namely n insertions.

Figure 7a shows the average amortized load-balancing cost, while Figure 7b shows the load-balancing rate. Both the average and the worst cases are depicted in the same graph. The average cases for c = 1.5 and c = 1.9 led to negligible amortized cost, so they were omitted. In the worst case, the same node (the leftmost leaf) is the host node for all element insertions. Note that we considered only the amortized cost of element insertions that cause load-balancing operations, since otherwise the amortized cost is negligible.

Figure 7.

Element update operations. (a) average messages, (b) load-balancing rate of winner D³-Tree, (c) load-balancing height of winner D³-Tree.

Our experiments show that, in the average case, the D³-Tree outperforms BATON, BATON*, and P-Ring. However, in the worst case, the load-balancing performance of the D³-Tree degrades compared to BATON* with fanout = 10 and P-Ring.

Moreover, we observed that, in the average case, Invariant 3 is rarely violated during element insertions, so the load-balancing mechanism is rarely invoked (Figure 7b). This also depends on the value range of criticality. When the range is narrowed, more load-balancing operations occur during element insertions, but the amortized cost is low, since the subtree is not very imbalanced. However, the majority of load-balancing operations occur in subtrees of large height, as shown in Figure 7c. On the other hand, when we use wide ranges, more element insertions occur before the load-balancing mechanism is activated, leading to less frequent but more costly load-balancing operations.

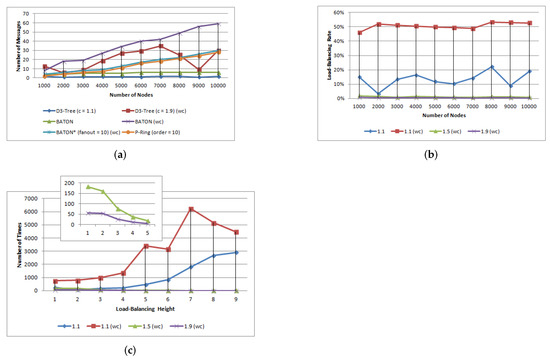

7.1.3. Cost of Element Search with/without Node Failures

To measure the network performance for single queries, we conducted experiments in which, for each N, we performed (M is the number of binary nodes) searches. The search cost is depicted in Figure 8a. An interesting observation is that, although the search cost of the D³-Tree does not exceed , it is higher than that of BATON, BATON*, and P-Ring. This is because, when the target node is a bucket node, the search algorithm, after locating the correct leaf, performs a serial search in its bucket to locate it.

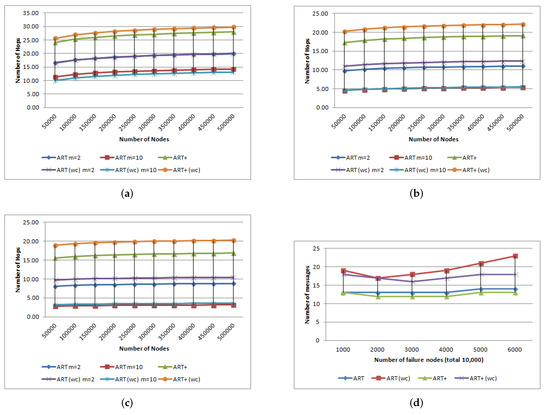

Figure 8.

Single queries without/with node failures. (a) average messages without failures, (b) effect of massive failure, (c) average messages of D³-Tree, (d) success percentage of D³-Tree.

To measure the network performance for the operation of element search with node failures, we conducted experiments for different percentages of node failures: 10%, 20%, 30%, 50% and 75%. For each N and node failure percentage, we performed searches divided into four groups, each of searches. To better estimate the search cost, we forced a different set of nodes to fail in each group.

Figure 8b depicts the increase in search cost when massive node failures occur in the D³-Tree, BATON, BATON* with different fanouts, and P-Ring. We observe that the D³-Tree maintains a lower search cost than the other structures, even for failure percentages ≥ 30%.

Details about the behaviour of the enhanced search mechanism of the D³-Tree in case of node failures are depicted in Figure 8c,d, which show the average number of messages required and the success percentage, respectively.

We observed that, when the node failure percentage is small (10% to 15%), most of the single queries that fail are those whose elements belong to failed nodes. As the number of failed nodes increases, single queries are not always successful, since the search mechanism may fail to find a path to the target node even though the node is reachable. However, even for the significant node failure percentage of , our search algorithm is successful, thus confirming our claim that the proposed structure is highly fault-tolerant.

7.2. Performance Evaluation for ART+

We also evaluated the performance of the ART+ structure and compared it to its predecessor, ART [17]. Each cluster node of ART and ART+ is a BATON* and a D³-Tree structure, respectively. BATON* was implemented and evaluated in [10], while ART was evaluated in [17], using the distributed Java simulator D-P2P-Sim presented in [21]. The source code of the whole evaluation process, which showcases the improved performance, scalability, and robustness of ART over BATON, is publicly available (http://code.google.com/p/d-p2p-sim/, accessed on 5 December 2021). For the performance evaluation of ART+, we used the D³-Tree simulator.

To evaluate the performance of ART and ART+ for search and load-balancing operations, we ran experiments with total numbers of nodes N ranging from 50,000 to 500,000. As proved in [17], each cluster node stores no more than nodes in smooth distributions (normal, beta, uniform) and no more than nodes in non-smooth distributions (power-law, zipfian, weibull). Moreover, we inserted a number of elements equal to 2000 times the network size, drawn from the universe [1 … 1,000,000,000]. Finally, we used the number of messages passed to measure the performance.

Note that, as proved in [17], ART outperforms BATON in search operations, except for the case where . Moreover, ART achieves better load-balancing than BATON, since the cluster node overlay remains unaffected WHP by node joins/departures, and load-balancing is restricted to within a cluster node. Consequently, in this work, ART+ is compared directly to ART.

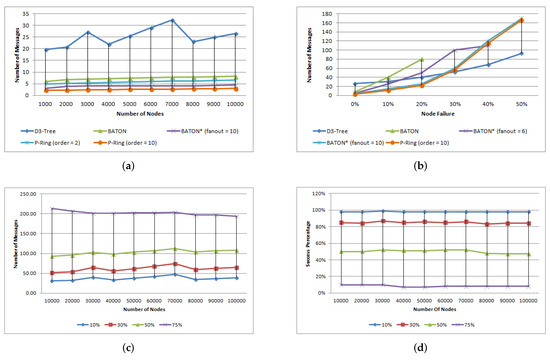

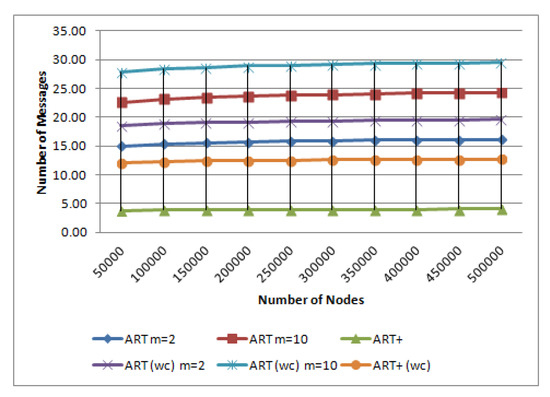

7.2.1. Cost of Search Operations

To measure the network performance for search operations (single and range queries), we conducted experiments for different values of b (2, 4 and 16). For each N, we executed 1000 single queries and 1000 range queries. The search cost is depicted in Figure 9. Both the normal cases (beta, uniform) and the worst cases (power-law, zipfian, weibull) are depicted in the same graph.

Figure 9.

Cost of search operations. (a) Case b = 2, (b) case b = 4, (c) case b = 16, (d) massive failures.

Experiments confirm that the query performance of ART and ART+ is , and the slight performance differences arise because BATON*, the inner structure of ART's cluster nodes, performs better than the D³-Tree in search operations.

In the case of massive failures, the search algorithm finds alternative paths around the unreachable nodes; thus, an increase in node failures increases the search cost. To evaluate the system under massive failures, we initialized the system with 10,000 nodes and let them fail randomly without recovering. At each step, we checked whether the network was partitioned. Since the backbones of ART and ART+ remain unaffected WHP, the search cost is confined to a cluster node (BATON* or D³-Tree, respectively), meaning that the parameter b does not affect the overall expected cost. Figure 9d illustrates the effect of massive failures.

We observe that both structures are fault-tolerant, since the failure percentage has to reach the threshold of to partition them. Moreover, even in the worst-case scenario, ART+ maintains a lower search cost than ART, since the D³-Tree handles node failures more effectively than BATON*.

7.2.2. Cost of Load-Balancing Operations

To evaluate the cost of load-balancing, we tested the network with a variety of distributions. For a network of N total nodes, we performed node updates. Both ART and ART+ remain unaffected WHP when nodes join or leave the network; thus, load-balancing is confined to a cluster node (BATON* or D³-Tree, respectively), meaning that the parameter b does not affect the overall expected cost. The load-balancing cost is depicted in Figure 10. Both the normal and the worst cases are depicted in the same graph.

Figure 10.

Cost of load-balancing operation.

Experiments confirm that ART+ has a load-balancing performance of , compared with the ART performance of . Thus, even in the worst-case scenario, ART+ outperforms ART, since the D³-Tree has a more efficient load-balancing mechanism than BATON* (Figure 10).

8. Conclusions

In this work, we presented a dynamic distributed deterministic structure called D³-Tree. Our proposed structure introduces many enhancements over existing solutions in the literature and over its predecessor regarding load-balancing and fault tolerance.

Confirming the theory, we have shown experimentally that the D³-Tree outperforms other well-known tree-based structures by achieving an amortized bound in load-balancing, its most costly operation, even in a worst-case scenario.

Moreover, investigating the structure's fault tolerance both theoretically and through experiments, we showed that the D³-Tree is highly fault-tolerant, since, even for massive node failures of , it achieves a significant success rate of 85% in element search without considerably increasing the cost.

Afterwards, we went one step further to achieve sub-logarithmic complexity and proposed the ART+ structure, exploiting the excellent performance of the D³-Tree.

We proved that the communication costs of query operations, element updates, and node join/leave operations of ART+ scale sub-logarithmically WHP in the expected case. Moreover, the amortized cost of the load-balancing operation is sub-logarithmic. Experimental comparison with its predecessor, ART, showed slightly lower efficiency in search operations (single and range queries), but improved performance for load-balancing and for search operations in the presence of node failures. Moreover, experiments confirmed that ART+ is highly fault-tolerant under massive failures. Note that, so far, ART+ outperforms the state-of-the-art decentralized structures. In the near future, we would like to incorporate new fuzzy randomized load-balancing techniques [22] based on machine learning, as well as parallelism and concurrency [23], to increase the performance of all operations. Finally, embedding our novel techniques in real P2P architectures currently used in social networking, while taking into account data privacy and anonymity issues [24], is a big challenge. In addition, we will incorporate algorithms and techniques recently presented for the following decentralized systems [25,26,27,28,29].

Author Contributions

S.S., E.S., K.T., G.V. and C.Z. conceived of the idea, designed and performed the experiments, analyzed the results, drafted the initial manuscript and revised the final manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Competitiveness, Entrepreneurship and Innovation Operational Program (co-financed by EU and Greek national funds), under contract no. T2EDK-03472 (project iDeliver).

Acknowledgments

We are indebted to the anonymous reviewers whose comments helped us to improve the presentation.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Schollmeier, R. A Definition of Peer-to-Peer Networking for the Classification of Peer-to-Peer Architectures and Applications. In Proceedings of the 1st International Conference on Peer-to-Peer Computing, Linköping, Sweden, 27–29 August 2001.

- Ozsu, M.T.; Valduriez, P. Principles of Distributed Database Systems; Springer: Berlin/Heidelberg, Germany, 2011.

- Crainiceanu, A.; Linga, P.; Machanavajjhala, A.; Gehrke, J.; Shanmugasundaram, J. Load Balancing and Range Queries in P2P Systems Using P-Ring. ACM Trans. Internet Technol. 2011, 10, 16:1–16:30.

- Gupta, A.; Agrawal, D.; Abbadi, A.E. Approximate Range Selection Queries in Peer-to-Peer Systems. In Proceedings of the 1st Biennial Conference on Innovative Data Systems Research (CIDR 2003), Asilomar, CA, USA, 5–8 January 2003.

- Sahin, O.; Gupta, A.; Agrawal, D.; Abbadi, A.E. A peer-to-peer framework for caching range queries. In Proceedings of the 20th Conference on Data Engineering (ICDE 2004), Boston, MA, USA, 2 April 2004; pp. 165–176.

- Bhargava, A.; Kothapalli, K.; Riley, C.; Scheideler, C.; Thober, M. Pagoda: A Dynamic Overlay Network for Routing, Data Management, and Multicasting. In SPAA ’04, Proceedings of the 16th Annual ACM Symposium on Parallelism in Algorithms and Architectures, Barcelona, Spain, 27–30 June 2004; ACM: New York, NY, USA, 2004; pp. 170–179.

- Scheideler, C.; Schmid, S. A Distributed and Oblivious Heap. In Automata, Languages and Programming; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5556, pp. 571–582.

- Xu, K.; Duan, X.; Müller, A.; Kobus, R.; Schmidt, B.; Liu, W. FMapper: Scalable read mapper based on succinct hash index on SunWay TaihuLight. J. Parallel Distrib. Comput. 2022, 161, 72–82.

- Jagadish, H.V.; Ooi, B.C.; Vu, Q.H. BATON: A Balanced Tree Structure for Peer-to-Peer Networks. In Proceedings of the 31st Conference on Very Large Databases (VLDB ’05), Trondheim, Norway, 30 August–2 September 2005; pp. 661–672.

- Jagadish, H.V.; Ooi, B.C.; Tan, K.; Vu, Q.H.; Zhang, R. Speeding up Search in P2P Networks with a Multi-way Tree Structure. In Proceedings of the ACM International Conference on Management of Data (SIGMOD 2006), Chicago, IL, USA, 27–29 June 2006; pp. 1–12.

- Papadopoulos, A.N.; Sioutas, S.; Zaroliagis, C.D.; Zacharatos, N. Efficient Distributed Range Query Processing in Apache Spark. In Proceedings of the 19th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing, CCGRID 2019, Larnaca, Cyprus, 14–17 May 2019; pp. 569–575.

- Kaporis, A.C.; Makris, C.; Sioutas, S.; Tsakalidis, A.K.; Tsichlas, K.; Zaroliagis, C.D. Dynamic Interpolation Search revisited. Inf. Comput. 2020, 270, 104465.

- Brown, T.; Prokopec, A.; Alistarh, D. Non-blocking interpolation search trees with doubly-logarithmic running time. In PPoPP ’20: 25th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, San Diego, CA, USA, 22–26 February 2020; Gupta, R., Shen, X., Eds.; ACM: New York, NY, USA, 2020; pp. 276–291.

- Sioutas, S.; Vonitsanos, G.; Zacharatos, N.; Zaroliagis, C. Scalable and Hierarchical Distributed Data Structures for Efficient Big Data Management. In Algorithmic Aspects of Cloud Computing-5th International Symposium, ALGOCLOUD 2019, Munich, Germany, 10 September 2019; Revised Selected Papers; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2019; Volume 12041, pp. 122–160.

- Brodal, G.; Sioutas, S.; Tsichlas, K.; Zaroliagis, C. D2-Tree: A New Overlay with Deterministic Bounds. In International Symposium on Algorithms and Computation; Springer: Berlin/Heidelberg, Germany, 2014; pp. 1–22.

- Stoica, I.; Morris, R.; Karger, D.; Kaashoek, M.F.; Balakrishnan, H. Chord: A Scalable Peer-to-peer Lookup Service for Internet Applications. SIGCOMM Comput. Commun. Rev. 2001, 31, 149–160.

- Sioutas, S.; Triantafillou, P.; Papaloukopoulos, G.; Sakkopoulos, E.; Tsichlas, K. ART: Sub-Logarithmic Decentralized Range Query Processing with Probabilistic Guarantees. J. Distrib. Parallel Databases (DAPD) 2012, 31, 71–109.

- Sioutas, S.; Sourla, E.; Tsichlas, K.; Zaroliagis, C. D3-Tree: A Dynamic Deterministic Decentralized Structure. In Proceedings of the Algorithms-ESA 2015-23rd Annual European Symposium, Lecture Notes in Computer Science. Patras, Greece, 14–16 September 2015; Volume 9294, pp. 989–1000.

- Sioutas, S.; Sourla, E.; Tsichlas, K.; Zaroliagis, C. ART+: A Fault-Tolerant Decentralized Tree Structure with Ultimate Sub-logarithmic Efficiency. In Proceedings of the Algorithms-ALGOCLOUD 2015-1st International Workshop on Algorithmic Aspects of Cloud Computing, Lecture Notes in Computer Science. Patras, Greece, 14–15 September 2015; Volume 9511, pp. 126–137.

- Kaporis, A.; Makris, C.; Sioutas, S.; Tsakalidis, A.; Tsichlas, K.; Zaroliagis, C. Improved bounds for finger search on a ram. In Proceedings of the 11th Annual European Symposium on Algorithms (ESA), Budapest, Hungary, 16–19 September 2003; Volume 2832, pp. 325–336.

- Sioutas, S.; Papaloukopoulos, G.; Sakkopoulos, E.; Tsichlas, K.; Manolopoulos, Y. A novel Distributed P2P Simulator Architecture: D-P2P-Sim. In CIKM ’09, Proceedings of the 18th ACM Conference on Information and Knowledge Management, Hong Kong, China, 2–6 November 2009; ACM CIKM: New York, NY, USA, 2009; pp. 2069–2070.

- Sagar, S.; Ahmed, M.; Husain, M.Y. Fuzzy Randomized Load Balancing for Cloud Computing. In Advances on P2P, Parallel, Grid, Cloud and Internet Computing-Proceedings of the 16th International Conference on P2P, Parallel, Grid, Cloud and Internet Computing, 3PGCIC 2021, Fukuoka, Japan, 28–30 October 2021; Barolli, L., Ed.; Lecture Notes in Networks and Systems; Springer: Berlin/Heidelberg, Germany, 2021; Volume 343, pp. 18–29.

- Ren, Y.; Parmer, G. Scalable Data-structures with Hierarchical, Distributed Delegation. In Proceedings of the 20th International Middleware Conference, Middleware 2019, Davis, CA, USA, 9–13 December 2019; ACM: New York, NY, USA, 2019; pp. 68–81.

- Moualkia, Y.; Amad, M.; Baadache, A. Hierarchical and scalable peer-to-peer architecture for online social network. J. King Saud Univ.-Comput. Inf. Sci. 2021, in press.

- Güting, R.H.; Behr, T.; Nidzwetzki, J.K. Distributed arrays: An algebra for generic distributed query processing. Distrib. Parallel Databases 2021, 39, 1009–1064.

- Kläbe, S.; Sattler, K.U.; Baumann, S. PatchIndex: Exploiting approximate constraints in distributed databases. Distrib. Parallel Databases 2021, 39, 833–853.

- Bilidas, D.; Koubarakis, M. In-memory parallelization of join queries over large ontological hierarchies. Distrib. Parallel Databases 2021, 39, 545–582.

- Lim, W.Y.B.; Ng, J.S.; Xiong, Z.; Jin, J.; Zhang, Y.; Niyato, D.; Leung, C.; Miao, C. Decentralized edge intelligence: A dynamic resource allocation framework for hierarchical federated learning. IEEE Trans. Parallel Distrib. Syst. 2021, 33, 536–550.

- Weng, T.; Zhou, X.; Li, K.; Peng, P.; Li, K. Efficient Distributed Approaches to Core Maintenance on Large Dynamic Graphs. IEEE Trans. Parallel Distrib. Syst. 2021, 33, 129–143.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).