1. Introduction

Recent years have seen remarkable progress in Natural Language Processing (NLP) towards models that understand human languages. Text generation is one of the main challenges in the field, and it has advanced considerably since the introduction of the Transformer [1]. The Transformer uses an encoder–decoder architecture, self-attention, and positional encodings to facilitate parallel training. The GPT-2 model developed by OpenAI [2] was the first model with remarkable text generation capabilities. GPT-2 was trained to predict the next token in a sequence and could easily be adapted to specific tasks. Its successor, GPT-3 [3], has more than 10 times as many parameters and deduces the task only from the provided prompt. There have been several open-source variations of the model, such as GPT-Neo [4] and GPT-J [5]. Other architectures, such as the Text-To-Text Transfer Transformer (T5) [6], adopt a unified framework that converts text-based language problems into a text-to-text format. This model can perform zero-shot learning and deduce the task from the context of the prompt received as the input, even if the task was not presented in the training stage.

For the Romanian language, there are not many specific resources (i.e., pre-trained models and datasets), although there has been significant progress in recent years. The most notable models for Romanian rely on the BERT architecture (e.g., RoBERT [7], BERT-base-ro [8], Distil-BERT [9]) and the GPT-2 architecture (e.g., RoGPT2 [10]) and were developed in the last 2 years. Romanian has only one available benchmark, namely LiRo [11]. However, the models are small compared to their English counterparts, and there are no available datasets for common NLP tasks. Overall, Romanian remains a low-resource language with low international usage (https://www.worlddata.info/languages/romanian.php; last accessed on 20 October 2022), despite recent efforts in terms of datasets and models; as such, we argue for the necessity of our efforts to develop tools tailored to this language.

Text summarization is a task of particular importance in NLP centered on extracting critical information from a text, and it follows two approaches. First, extractive summarization involves selecting the most important phrases or sentences that include the main ideas of a text. Second, abstractive summarization considers the generation of a new summary starting from the text. One of the most popular English datasets used for this task is CNN/Daily Mail [12], with a total of 280,000 examples; the dataset was afterward extended to other languages, including French, German, Spanish, Russian, and Turkish, thus generating the large-scale multilingual corpus MLSUM [13]. Another dataset used in studies on abstractive summarization is Extreme Summarization (X-Sum) [14], whose goal is to generate a short, one-sentence summary for each news article; X-Sum was derived from BBC news and consists of 220,000 examples. Another dataset is the Webis-TLDR-17 Corpus [15], with approximately three million examples constructed with the support of the Reddit community. Extractive summarization in Romanian has been previously tackled by Cioaca et al. [16] and Dutulescu et al. [17] with small evaluation datasets. We now introduce the first dataset for Romanian abstractive summarization (https://huggingface.co/datasets/readerbench/ro-text-summarization; last accessed on 20 October 2022).

A wide variety of architectures has been employed for text summarization, including general Transformer-based models [6,18,19,20] and specific models such as BRIO [21], ProphetNet [22], or PEGASUS [23]. We aim to provide a baseline abstractive summarizer for Romanian built on top of RoGPT2 [10] and to control the characteristics of the generated text. This is an additional step to better imitate human capabilities by considering one or more specifications that improve the summary. As such, we assessed the extent to which text generation is influenced by control tokens specified in the prompt received by the model to induce specific characteristics of a text. The idea of specifying control tokens directly in the prompt was first exploited in MUSS [24] and CONTROL PREFIXES [25]. The GPT-2 model was also used in combination with BERT [26]; however, to our knowledge, the generation task has not been tackled until now in combination with control tokens to manipulate the characteristics of the generated summary.

Following the introduction of various models for text summarization, evaluating the quality of a generated text is a critical challenge, which can be even more difficult than the text generation task itself. Text evaluation is generally performed using automated metrics originally developed for machine translation and summarization, such as Bilingual Evaluation Understudy (BLEU) [27], Recall-Oriented Understudy for Gisting Evaluation (ROUGE) [28], or Metric for Evaluation of Translation with Explicit Ordering (METEOR) [29]; however, these metrics are limited as they focus on lexical overlap. Newer metrics based on Transformers, such as BERTScore [30], BARTScore [31], or Bilingual Evaluation Understudy with Representations from Transformers (BLEURT) [32], are much more accurate than the classical metrics. Still, they require more resources (i.e., pre-trained models and higher computing power) and have longer processing times. Besides comparing automated similarity metrics, Celikyilmaz et al. [33] argued that human evaluation is the gold standard for evaluating a Natural Language Generation (NLG) task; nevertheless, it is the most expensive and cumbersome to accomplish.

Thus, our research objective is threefold: create a dataset for summarization in Romanian, train a model that generates coherent texts, and introduce control tokens to manipulate the output easily. Following this objective, our main contributions are the following:

2. Method

This section presents the dataset created for the summarization task, the model architecture, the training method with the control tokens, as well as the methods employed to evaluate the generated text.

2.1. Corpus

The dataset for the summarization task was constructed by crawling all articles published on the AlephNews website (https://alephnews.ro/; last accessed on 20 October 2022) until July 2022. For most articles, the site presents a section with the news summary as bullet points, with sentences representing the main ideas. This peculiarity of the site enabled the automatic creation of a reasonably high-quality dataset for abstractive summarization. News articles that did not have a summary or were too short were eliminated by imposing a minimum length of 20 characters. This resulted in 42,862 collected news articles. The news and summary texts were cleaned using several heuristics: repairing diacritics, eliminating special characters and emoticons, fixing punctuation (e.g., handling repeated periods and adding a period at the end of a sentence that has no punctuation mark), and eliminating words such as “UPDATE”, “REPORT”, “AUDIO”, etc. The dataset was split into 3 partitions (i.e., train, dev, and test) with proportions of 90%–5%–5%. After analyzing the dataset and based on the limitations regarding the sequence length of a context, the maximum size was set to 724 tokens. Articles with a maximum of 715 tokens based on the RoGPT2 tokenizer were selected for the test partition; out of the 724 tokens, 9 were reserved for the control tokens. For entries from the training and dev partitions whose combined article and summary length exceeded 724 tokens, the article content was divided into a maximum of 3 distinct fragments, each obtained by removing the last sentences; this was applied to approximately 10% of the entries to increase the number of examples and to keep the beginning of the news, which contains key information.

We chose not to apply this augmentation technique to the entries in the test partition, as this would have altered the content of the original texts and would have generated multiple artificial test entries; moreover, we limited the text to the first 715 tokens so that control tokens could also be added when running various configurations. The total number of examples for each partition was: 47,525 for training, 132 for validation, and 2143 for testing.
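The punctuation and marker-word heuristics described above can be sketched as follows. This is a minimal illustration, not the authors' cleaning pipeline: the function names, the regular expressions, and the choice to apply the 20-character limit to the article body are assumptions, and diacritic repair and emoticon removal are omitted.

```python
import re

# Marker words named in the text; the full list is not given, so this is partial.
MARKERS = ("UPDATE", "REPORT", "AUDIO")


def clean_text(text: str) -> str:
    """Apply simplified versions of the marker and punctuation heuristics."""
    # Drop editorial marker words (optionally followed by a colon).
    for marker in MARKERS:
        text = re.sub(rf"\b{marker}\b:?", " ", text)
    # Collapse runs of periods into a single period (assumed reading of the rule).
    text = re.sub(r"\.{2,}", ".", text)
    # Normalize whitespace.
    text = re.sub(r"\s+", " ", text).strip()
    # Add a final period when the text has no closing punctuation mark.
    if text and text[-1] not in ".!?":
        text += "."
    return text


def keep_article(article: str, summary: str, min_chars: int = 20) -> bool:
    """Keep entries that have a summary and an article of at least min_chars
    characters (applying the limit to the article is an assumption)."""
    return bool(summary.strip()) and len(article.strip()) >= min_chars


print(clean_text("UPDATE: Guvernul a anunțat noi măsuri..."))
```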

2.2. RoGPT2 Model for Summarization

The model was trained to predict the next token using the previous sequence, similar to the RoGPT2 [

10] training for the Romanian language. The model architecture consists of several decoder layers of architecture Transformers [

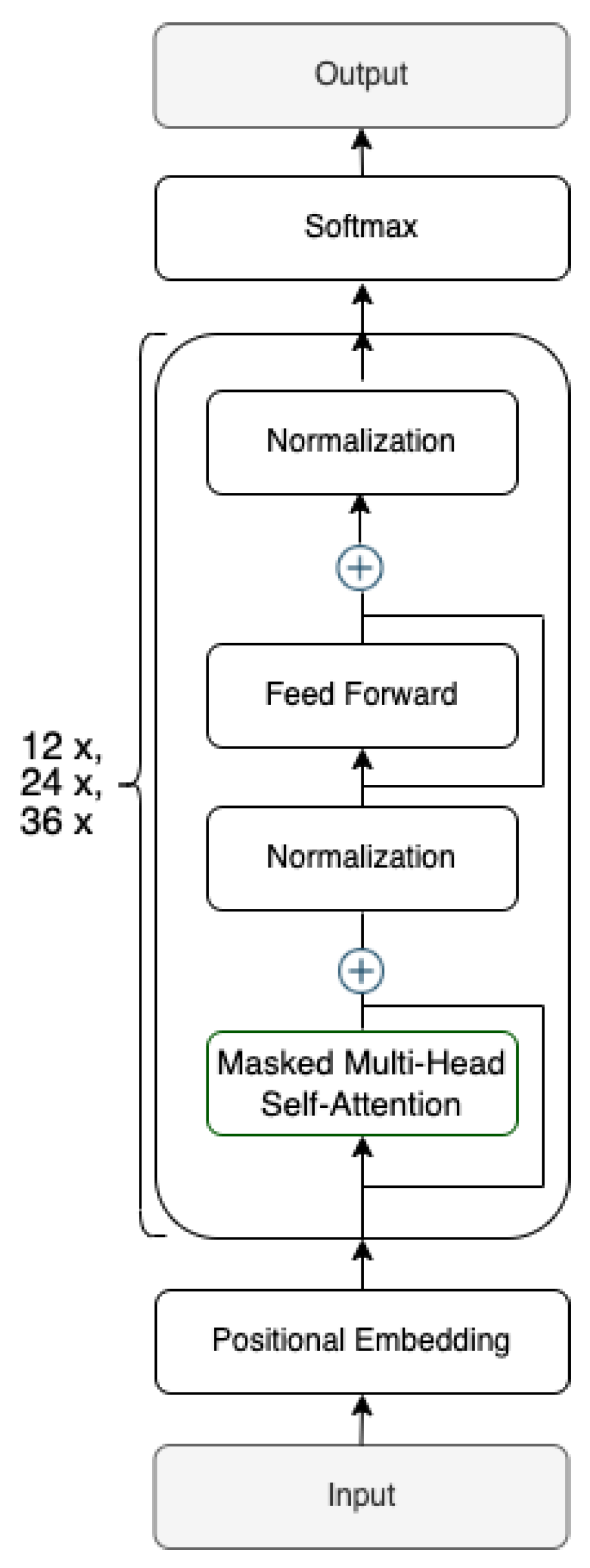

1], as presented in

Figure 1. There are 3 versions of the model, each with a different number of decoder layers: 12 layers were used for the base version, 24 layers for the medium version, and 36 layers for the large version.

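Control tokens were used to indicate the task and the characteristics of the generated text, which are presented in the following subsections. This assumes that the model maximizes the probability of a subword depending on the context and the previously generated subwords:

$$P(w_{1:T} \mid W_0) = \prod_{t=1}^{T} P(w_t \mid w_{1:t-1}, W_0)$$

where $W_0$ is the context (i.e., the prompt) and $w_{1:t-1}$ are the previously generated subwords.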

Cross-entropy was the loss function for the supervised learning task:

$$\mathcal{L} = -\sum_{i} y_i \log(p_i)$$

where $y_i$ is the label and $p_i$ is the probability of the $i$th class; more specifically, a class corresponds to the id of a token.
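A small numeric illustration of the next-token objective and of the cross-entropy loss may help. The sketch below assumes a one-hot label (the correct token id), in which case the loss reduces to the negative log-probability of that id; the toy probabilities and function names are hypothetical.

```python
import math
from typing import Sequence


def sequence_log_prob(step_probs: Sequence[float]) -> float:
    """Log-probability of a sequence: the sum of the log-probabilities that the
    model assigned to each chosen subword given its prefix."""
    return sum(math.log(p) for p in step_probs)


def cross_entropy(probs: Sequence[float], target_id: int) -> float:
    """Cross-entropy for a one-hot label: only the term of the target id remains."""
    return -math.log(probs[target_id])


# Toy distribution over a 3-token vocabulary; the correct token has id 1.
toy_probs = [0.1, 0.7, 0.2]
print(round(cross_entropy(toy_probs, target_id=1), 4))
print(round(math.exp(sequence_log_prob([0.5, 0.5])), 4))
```

A higher probability on the correct token id yields a lower loss, which is what training drives towards at every position of the sequence.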

Three decoding methods for text generation were considered; each chooses the next token depending on the tokens generated up to that point and the probability distribution over the vocabulary.

Greedy search: This strategy is based on choosing a local optimum, in this case the token with the highest probability, which converges to a local maximum. First, the probability distribution is generated, and then, the next token is selected by choosing the highest probability. The procedure continues until the desired length is achieved or the token indicating the end is found. An advantage of this method is that it is efficient and intuitive, but it does not guarantee finding a global optimum for the generated sequence; this can lead to the non-exploration of some branches with a higher probability.
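The greedy procedure can be sketched on a toy model. The transition table below is hypothetical and stands in for the probability distribution a language model would produce; it is constructed so that the locally best first token does not lead to the most probable sequence.

```python
from typing import Dict, List

EOS = "<eos>"

# Hypothetical toy model: maps the last token to next-token probabilities.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "<bos>": {"a": 0.6, "b": 0.4},
    "a": {"x": 0.55, "y": 0.45},
    "b": {"z": 0.9, "x": 0.1},
    "x": {EOS: 1.0},
    "y": {EOS: 1.0},
    "z": {EOS: 1.0},
}


def greedy_decode(model: Dict[str, Dict[str, float]], max_len: int = 10) -> List[str]:
    """Pick the most probable next token at every step until EOS or max_len."""
    tokens = ["<bos>"]
    for _ in range(max_len):
        probs = model[tokens[-1]]
        best = max(probs, key=probs.get)
        tokens.append(best)
        if best == EOS:
            break
    return tokens[1:]


print(greedy_decode(TOY_MODEL))
```

Here greedy decoding follows "a" then "x" (sequence probability 0.6 × 0.55 = 0.33), although the branch "b" then "z" has a higher probability (0.4 × 0.9 = 0.36), which illustrates the unexplored-branch issue mentioned above.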

Beam search: Beam search [35] partially addresses the global optimum problem by keeping the best beam-width sequences, i.e., those with the highest total probability. Multiple candidate continuations are generated at each step, and the sequences with the highest probability are retained. The advantage of this method is that it obtains better results even for relatively small beam widths, but it requires more memory for a larger beam width or longer sequences, and the generated text does not vary much, being quite monotone. Beam search also does not guarantee finding the global optimum. It works quite well when the length of the generated text can be approximated, but has issues when the corresponding length varies greatly. Holtzman et al. [36] argued that people do not choose the phrase with the highest probability, as the element of unpredictability is important.
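A minimal beam search sketch on a hypothetical toy model is given below; the transition table and function name are assumptions made for illustration, and length normalization and other refinements used by real libraries are left out.

```python
import math
from typing import Dict, List, Tuple

EOS = "<eos>"

# Hypothetical toy model: maps the last token to next-token probabilities.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "<bos>": {"a": 0.6, "b": 0.4},
    "a": {"x": 0.55, "y": 0.45},
    "b": {"z": 0.9, "x": 0.1},
    "x": {EOS: 1.0},
    "y": {EOS: 1.0},
    "z": {EOS: 1.0},
}


def beam_search(model: Dict[str, Dict[str, float]], beam_width: int = 2,
                max_len: int = 10) -> Tuple[List[str], float]:
    """Keep the beam_width most probable partial sequences at every step."""
    beams: List[Tuple[float, List[str]]] = [(0.0, ["<bos>"])]  # (log-prob, tokens)
    for _ in range(max_len):
        candidates: List[Tuple[float, List[str]]] = []
        for logp, tokens in beams:
            if tokens[-1] == EOS:
                candidates.append((logp, tokens))  # finished hypotheses carry over
                continue
            for tok, p in model[tokens[-1]].items():
                candidates.append((logp + math.log(p), tokens + [tok]))
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_width]
        if all(tokens[-1] == EOS for _, tokens in beams):
            break
    best_logp, best_tokens = beams[0]
    return best_tokens[1:], math.exp(best_logp)


print(beam_search(TOY_MODEL, beam_width=2))
```

With a beam width of 2, the sketch recovers the sequence "b", "z" (probability 0.36), which a width of 1 (equivalent to greedy search) misses; memory grows with the beam width because every retained hypothesis is expanded at each step.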

Top-p (nucleus) sampling: This method involves choosing the smallest subset of words whose cumulative probability reaches p. The probability distribution is then renormalized over this subset, and the next token is sampled from it. The advantage of this method is that it achieves results quite close to human ones and does not require many resources. The disadvantage is that p is fixed and not dynamic.
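The nucleus filtering step can be sketched as follows. The toy distribution and helper names are hypothetical; the sketch keeps the most probable tokens until their cumulative probability reaches p, renormalizes, and samples.

```python
import random
from typing import Dict


def nucleus(probs: Dict[str, float], p: float) -> Dict[str, float]:
    """Return the renormalized distribution over the smallest set of tokens
    whose cumulative probability reaches p."""
    kept: Dict[str, float] = {}
    total = 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = prob
        total += prob
        if total >= p:
            break
    return {tok: prob / total for tok, prob in kept.items()}


def sample_top_p(probs: Dict[str, float], p: float, rng: random.Random) -> str:
    """Sample one token from the nucleus of the distribution."""
    filtered = nucleus(probs, p)
    tokens = list(filtered)
    weights = [filtered[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


toy = {"a": 0.5, "b": 0.3, "c": 0.15, "d": 0.05}
print(nucleus(toy, p=0.75))
print(sample_top_p(toy, p=0.75, rng=random.Random(0)))
```

Because p is fixed, the size of the nucleus depends only on how peaked the distribution is at each step: a confident distribution keeps one or two tokens, a flat one keeps many.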

2.3. Control Tokens

Starting from previous studies presented in the Introduction and related to the specifics of the summarization task, we chose to specify a set of 4 control tokens representative of various characteristics of the text, namely:

NoSentences indicates the number of sentences that the summary should have;

NoWords indicates the number of words to be generated within the summary;

RatioTokens reflects the ratio between the number of tokens in the summary and the number of tokens in the input;

LexOverlap is the ratio of 4-grams from the summary that also appear in the reference text; stop words and punctuation marks are omitted.

The first 3 control tokens are purely quantitative and reflect different use-case scenarios: a summary containing at most a specific number of sentences, a summary having an imposed number of words, or a compression ratio to be used globally. The last control token ensures a lower or higher degree of lexical overlap between the two texts.
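The four values could be computed from an article and its summary roughly as follows. This is a sketch under stated assumptions: the sentence splitter, the word tokenizer, the tiny stop-word list, and the direction of the token ratio (summary length divided by input length) are all illustrative choices, not the authors' implementation.

```python
import re
from typing import List, Set, Tuple

# Hypothetical, tiny stop-word list used only for illustration.
STOP_WORDS: Set[str] = {"și", "de", "la", "în", "a", "cu", "pe"}


def words(text: str) -> List[str]:
    """Lowercased word tokens; punctuation is dropped."""
    return re.findall(r"\w+", text.lower())


def no_sentences(summary: str) -> int:
    return len([s for s in re.split(r"[.!?]+", summary) if s.strip()])


def no_words(summary: str) -> int:
    return len(words(summary))


def ratio_tokens(summary_tokens: List[str], input_tokens: List[str]) -> float:
    # Assumed direction: summary length relative to input length.
    return len(summary_tokens) / len(input_tokens)


def ngrams(tokens: List[str], n: int = 4) -> Set[Tuple[str, ...]]:
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def lex_overlap(summary: str, source: str, n: int = 4) -> float:
    """Share of the summary's n-grams that also occur in the source text."""
    s_grams = ngrams([w for w in words(summary) if w not in STOP_WORDS], n)
    r_grams = ngrams([w for w in words(source) if w not in STOP_WORDS], n)
    if not s_grams:
        return 0.0
    return len(s_grams & r_grams) / len(s_grams)


print(lex_overlap("alpha beta gamma delta epsilon", "alpha beta gamma delta zeta"))
```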

The prompt for the summarization task was the following:

The model learns that, after the control token “Summary:”, it must generate the summary of the text preceding that token. Control tokens are specified before the token that indicates the input (i.e., marked by the Text token), while the token specific to the task is placed after the end of the input. The prompt used for a training item from the dataset is the following:

where FeatureToken is <NoSentences>, <NoWords>, <RatioTokens>, or <LexOverlap>.
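The ordering described above (control tokens first, then the input marked by the Text token, then the task token) can be illustrated with a small helper. The literal delimiters used here, such as the "<Name>: value" rendering and the single-space separators, are assumptions made for the sketch and may differ from the prompt actually used.

```python
from typing import Dict, Optional


def build_prompt(article: str, controls: Dict[str, object],
                 summary: Optional[str] = None) -> str:
    """Assemble a prompt in the order: control tokens, input text, task token.

    The exact string layout is hypothetical; only the ordering follows the text.
    """
    # Control tokens come first, one "<Name>: value" pair per feature.
    parts = [f"<{name}>: {value}" for name, value in controls.items()]
    # The input is introduced by the Text token.
    parts.append(f"Text: {article}")
    # The task token closes the prompt; at training time the summary follows it.
    parts.append("Summary:" if summary is None else f"Summary: {summary}")
    return " ".join(parts)


print(build_prompt("Articol de test.", {"NoWords": 25}))
print(build_prompt("Articol de test.", {"NoWords": 4, "NoSentences": 1},
                   summary="Un rezumat foarte scurt."))
```

At inference time the summary is left out, so that the model continues the text after the task token.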

Following the initial experimentation, we noticed that the model learns best when subsequent entries have the same input text, but with different values for the control tokens and a different text to be generated; the latter is obtained by extracting fragments from the original summary and using them as the output. This variation is reflected in the text to be generated and was used for the <NoSentences>, <NoWords>, and <RatioTokens> control tokens. Multiple variations were generated if the summary text had more than 3 sentences; thus, incremental examples were generated by adding sentences and recalculating the value of the control token each time. For example, a summary comprising 4 sentences $s_1$, $s_2$, $s_3$, and $s_4$, paired with <NoWords>, would yield two entries in the training dataset: the first item would consist of the first 3 sentences and the corresponding <NoWords> for this shorter summary, and the second item would add the sentence $s_4$ and set <NoWords> to the total count of words in the summary.
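The incremental construction for <NoWords> can be sketched as below. The sentence splitter and the whitespace-based word count are simplifying assumptions, and the function name is hypothetical.

```python
import re
from typing import List, Tuple


def split_sentences(text: str) -> List[str]:
    """Naive splitter: a sentence ends at '.', '!' or '?'."""
    return [s.strip() for s in re.findall(r"[^.!?]+[.!?]", text)]


def incremental_examples(summary: str, min_sentences: int = 3) -> List[Tuple[int, str]]:
    """Return (NoWords value, target summary) pairs.

    Summaries with more than min_sentences sentences yield one entry per
    prefix, starting from the first min_sentences sentences; shorter
    summaries yield a single entry.
    """
    sentences = split_sentences(summary)
    start = min_sentences if len(sentences) > min_sentences else len(sentences)
    examples = []
    for k in range(start, len(sentences) + 1):
        partial = " ".join(sentences[:k])
        examples.append((len(partial.split()), partial))
    return examples


for n_words, target in incremental_examples("A b. C d e. F. G h i j."):
    print(n_words, "|", target)
```

For the 4-sentence toy summary, the sketch yields two entries: the first 3 sentences with their word count, and the full summary with the total word count.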

Besides training the summarization model with each control token individually, we also considered combinations of 2 control tokens, namely: <NoWords>-<NoSentences>, <RatioTokens>-<NoSentences>, and <LexOverlap>-<NoWords>. The combination <NoWords>-<NoSentences> was chosen because it reflects the most straightforward manner for an end user to manually enforce the length of the summary (i.e., specify an approximate number of words and the number of sentences that the generated summary should have). <RatioTokens> follows the same idea as <NoWords>, but it is much more difficult for the model to learn, as it represents the ratio between the length of the news and that of the summary. The combination <LexOverlap>-<NoWords> is interesting because it forces the model to generate a text with an approximate number of words that, at the same time, must not match the text received as input. <NoWords> indicates how many words the summary should have, while <LexOverlap> restricts the percentage of word combinations that are present in both the news and the text generated by the model; a small value for <LexOverlap> indicates that the model must reformulate an idea from the news, whereas a large value makes the model extract the most important phrases within a word limit.

2.4. Evaluation Metrics

Our evaluations considered both automated and human evaluations of the generated summaries. To make the evaluation robust, three evaluation methods were used: Recall-Oriented Understudy for Gisting Evaluation (ROUGE) [28], a classic metric used in the majority of research on abstractive summarization; BERTScore [30], a metric that uses a pre-trained model to represent the generated text and the reference and thus provide a better comparison; and human evaluation. To evaluate how well the characteristics imposed by the control tokens were respected, the following metrics were used: Mean Absolute Error (MAE) and Mean-Squared Error (MSE) for <NoSentences> and <NoWords>, and the Pearson and Spearman correlation coefficients for <RatioTokens> and <LexOverlap>.
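The control-token metrics compare the requested value with the value measured on the generated text. A dependency-free sketch is shown below; the function names and toy values are hypothetical, and in practice a library such as SciPy would typically be used for the correlation coefficients.

```python
import math
from typing import List, Sequence


def mae(target: Sequence[float], produced: Sequence[float]) -> float:
    return sum(abs(t - p) for t, p in zip(target, produced)) / len(target)


def mse(target: Sequence[float], produced: Sequence[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(target, produced)) / len(target)


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))


requested = [3, 2, 4]   # e.g., requested number of sentences
generated = [3, 3, 2]   # number of sentences actually produced
print(mae(requested, generated), mse(requested, generated))
```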

2.4.1. BERTScore

Metrics based on Transformers [1], such as BERTScore [30], have been introduced to better capture the similarity between texts. BERTScore shows how good and realistic a text generated by a model is at the semantic level (i.e., the metric considers the meaning of the text by computing the cosine similarity between the token embeddings of the generated sentences and those of the sentences given as a reference). The token embeddings are the numerical representations of the subwords produced by the BERT [37] tokenizer. The precision, recall, and F1 scores are computed based on the scalar product between the embeddings in the two texts. Precision refers to the generated text and is calculated as the average, over the tokens of the generated sentence, of the largest scalar product between each token embedding and the embeddings of the reference sentence; in contrast, recall is centered on the reference text and is computed in an equivalent manner, matching each reference embedding against the embeddings of the generated sentence. The original paper showed good correlations with human evaluations. Even if BERTScore is more accurate than classical metrics that account for the overlap between words using n-grams or synonyms (e.g., BLEU, ROUGE), the metric requires a language model for the targeted language. We used the implementation offered by HuggingFace (https://huggingface.co/spaces/evaluate-metric/bertscore; last accessed on 20 October 2022), which considers mBERT [37] for the Romanian language. The performance metrics are computed as follows:

$$P = \frac{1}{|\hat{x}|} \sum_{\hat{x}_j \in \hat{x}} \max_{x_i \in x} x_i^{\top} \hat{x}_j$$

$$R = \frac{1}{|x|} \sum_{x_i \in x} \max_{\hat{x}_j \in \hat{x}} x_i^{\top} \hat{x}_j$$

$$F_1 = 2 \, \frac{P \cdot R}{P + R}$$

where $x$ denotes the normalized token embeddings of the reference text and $\hat{x}$ denotes the normalized token embeddings of the generated text.
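The greedy matching behind BERTScore can be sketched with toy vectors. In the actual metric, the embeddings are contextual representations produced by a pre-trained model (mBERT in this study); the 2-dimensional vectors and the function name below are hypothetical and serve only to show how precision, recall, and F1 are derived.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def normalize(v: Vector) -> List[float]:
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def greedy_match_scores(reference: Sequence[Vector],
                        candidate: Sequence[Vector]) -> Tuple[float, float, float]:
    """Precision, recall, and F1 from best-match cosine similarities."""
    ref = [normalize(v) for v in reference]
    cand = [normalize(v) for v in candidate]

    def sim(u: Vector, v: Vector) -> float:
        return sum(a * b for a, b in zip(u, v))

    # Precision: each generated token is matched to its closest reference token.
    precision = sum(max(sim(c, r) for r in ref) for c in cand) / len(cand)
    # Recall: each reference token is matched to its closest generated token.
    recall = sum(max(sim(r, c) for c in cand) for r in ref) / len(ref)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# The generated text covers only one of the two reference "tokens".
print(greedy_match_scores(reference=[[1, 0], [0, 1]], candidate=[[1, 0]]))
```

In this toy case, precision is 1.0 because the single generated token has a perfect match, while recall is 0.5 because one reference token has no counterpart.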

2.4.2. Human Evaluation

Human evaluation is considered the gold standard in measuring the quality of generated text [33], but it is costly and difficult to achieve. The most common method is to create a form in which respondents are asked to evaluate the generated text. In our case, respondents were asked to assess the generated text in terms of five metrics: main idea (i.e., the main idea of the article is present within the summary), details (i.e., the key information is found in the generated text, without irrelevant ideas), cohesion (i.e., phrases and ideas are logically connected), wording/paraphrasing (i.e., the text is not identical to that of the news, and the model made changes), and language beyond the source text (i.e., there is a varied range of lexical and syntactic structures). The scores ranged from 1 to 4, the best being 4. The summary scoring rubric is based on the studies of Taylor [38] and Westley, Culatta, Lawrence, and Hall-Kenyon [39]. The raters were asked to evaluate 5 examples chosen randomly from the texts generated using the 3 decoding methods and the 3 variants of the model; in total, 45 questions were included in the form. The Intraclass Correlation Coefficient (ICC3) [40] was calculated for each configuration (i.e., model version and decoding method) to measure the consistency of the evaluations. The form was sent to people collaborating with our research laboratory to obtain relevant results, primarily due to the complexity of the 5 metrics used.
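As an illustration of the consistency measure, the sketch below computes the single-rater, consistency form of the coefficient, ICC(3,1), from a two-way layout of items by raters. Whether the study used the single-rater or the averaged variant is not stated, so this choice, the function name, and the toy ratings are assumptions.

```python
from typing import List


def icc3_single(ratings: List[List[float]]) -> float:
    """ICC(3,1): rows are rated items, columns are raters (fixed set of raters)."""
    n = len(ratings)       # number of rated items
    k = len(ratings[0])    # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    # Two-way ANOVA decomposition of the total sum of squares.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)


# Two raters who differ by a constant offset are perfectly consistent.
print(round(icc3_single([[1, 2], [2, 3], [3, 4]]), 4))
```

Because ICC3 treats raters as fixed and measures consistency rather than absolute agreement, a rater who is systematically stricter by one point does not lower the coefficient.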

2.5. Experimental Setup

The Adam [34] optimizer started from a learning rate equal to 1 × 10

and was reduced to 4 × 10

using the ReduceLROnPlateau callback, with a patience of 2 and a factor of 1/e. The patience parameter was set to 1 for combinations of control tokens due to the task’s complexity and the dataset’s size; in this case, the training was more aggressive, modifying the learning rate if there were no improvements after an epoch. The training was stopped if no improvements were noticed after 3 epochs for baseline summarization or 4 epochs for the control tokens. A context size of 724 was considered, and the batch size varied for each model version: 128 for the base, 24 for the medium, and 16 for the large models. Three decoding methods were used for text generation: greedy, beam search, and top-p sampling. The experiments were performed on TPU v3.8 for training, while the NVIDIA Tesla A100 and NVIDIA Tesla P100 were used for text generation and evaluation. The model received prompts that contained the summary token and the tokens that specified the characteristics of the text to be generated.
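The behavior of a plateau-based schedule with a factor of 1/e can be simulated in a few lines. This is a simplified model of such a callback, not the library implementation; the function name, the validation losses, and the initial and minimum learning rates used in the example are hypothetical.

```python
import math
from typing import List, Sequence


def simulate_lr(val_losses: Sequence[float], initial_lr: float, min_lr: float,
                patience: int = 2, factor: float = 1 / math.e) -> List[float]:
    """Return the learning rate in effect after each epoch.

    The rate is multiplied by factor once the validation loss has failed to
    improve for patience consecutive epochs, and never drops below min_lr.
    """
    lr = initial_lr
    best = float("inf")
    wait = 0
    history = []
    for loss in val_losses:
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                lr = max(lr * factor, min_lr)
                wait = 0
        history.append(lr)
    return history


# Hypothetical validation losses and learning-rate bounds.
losses = [1.0, 0.9, 0.95, 0.96, 0.97, 0.98]
print(simulate_lr(losses, initial_lr=1.0, min_lr=0.2))
```

Setting the patience to 1, as done for the combinations of control tokens, makes the rate drop after a single epoch without improvement.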

4. Discussion

The baseline model achieved good results (see Table 1) for the summarization task; the best results for ROUGE-L (34.67%) and BERTScore (74.34%) were obtained by the medium version with the beam search decoding method. It is worth noting that the best results were obtained with beam search regardless of the considered model. The poorer results obtained by the large version can be explained by the relatively small size of the dataset.

Results from the human evaluations (see Table 2) were also consistent, based on the obtained ICC3 score. The best score for the main idea was obtained by the large model with greedy decoding (3.73/4), followed by the medium version with beam search with a score of 3.43/4, thus suggesting that the models managed to identify the main idea from the news. In terms of the provided details, the best score (3.36/4) was achieved by the medium model with beam search decoding (see Appendix A.1 for an example). The model managed to produce coherent sentences with an elevated language; this was also shown in the paper that introduced RoGPT2 [10]. The large model obtained the highest overall score in terms of cohesion with greedy decoding (3.27/4), followed by the medium model with beam search with a score of 3.13/4; this lower score is justifiable since the contents of some randomly sampled news articles were challenging to summarize (see Appendix A.2 for a horoscope example). Paraphrasing was the main problem of the generated texts since the models mostly repeated information from the reference text. Nevertheless, the results obtained by the model are impressive, considering that the human-evaluated news articles originated from a dataset on which the model was not trained.

The summaries using control tokens obtained better scores than the baseline summarization task (see Table 3). The small differences indicate that a winning configuration cannot be determined with certainty, as the largest difference was up to 2%; however, we observed that beam search consistently obtained the best results. Although <LexOverlap> is the most complex token, it yielded the largest improvement in BERTScore (1.08%). The worst results for controlling text characteristics were obtained by <NoSentences>, whereas <RatioTokens> obtained a lower BERTScore than <NoWords> because it is a token that is more difficult for the model to learn.

Lower performance for combinations of tokens was expected because the dataset is relatively small and the task difficulty was higher. Then, comparing the performance of the models on each control token individually, we noticed that a higher performance was obtained for the second token specified in the prompt; this suggests that the model was influenced more by the second token from the prompt. The combination <NoWords>-<LexOverlap> obtained the best overall results, highlighting the benefits of complementarity between control tokens. Overall, the best decoding method was beam search.

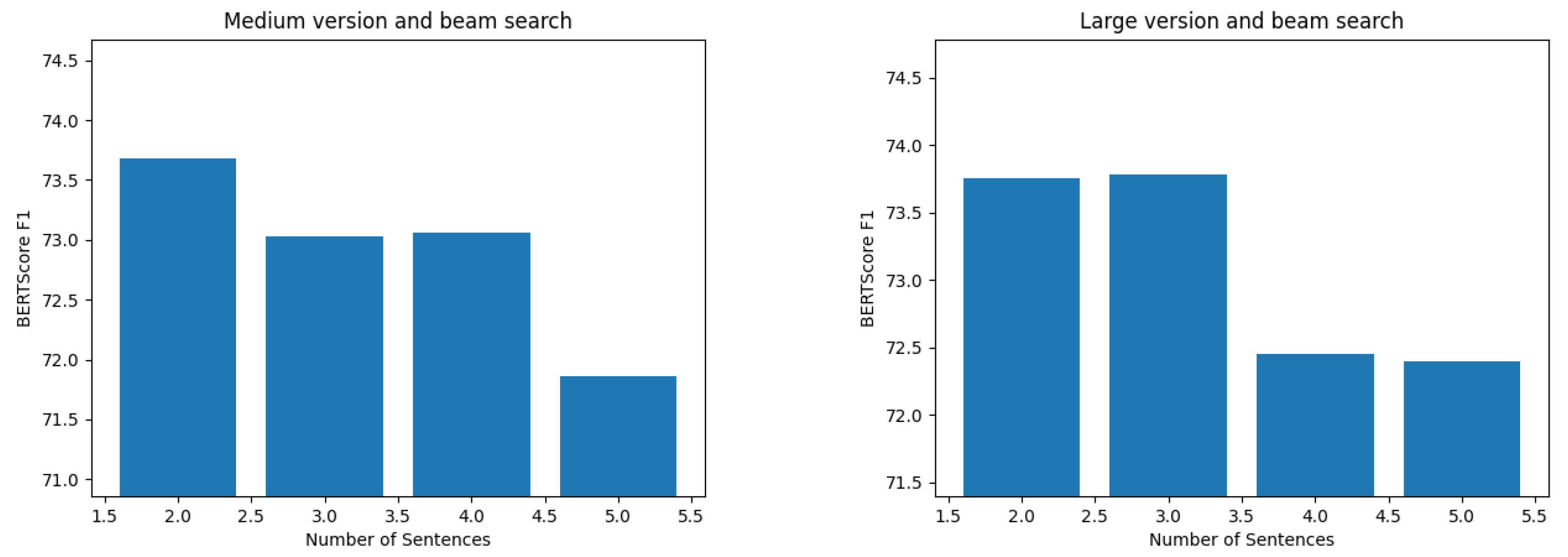

When considering the exploratory analysis, the best results when varying the number of sentences were obtained for values of 2 and 3; this was expected as most summaries had 3 sentences. The example from Appendix C.1 highlights that the model seems to only extract sentences from the original text without paraphrasing. With <NoSentences> set to three, the model copied a central sentence and reiterated it based on a repetition present in the source text (i.e., the news article contained “Roxana Ispas este fondatoarea brandului Ronna Swimwear.” and “Roxana Ispas, fondatoare Ronna Swimwear”, which confused the model). Furthermore, there was a problem when setting the control token to 5, as the model failed to generate five sentences; nevertheless, it generated considerably longer sentences than in the previous use case with only four sentences.

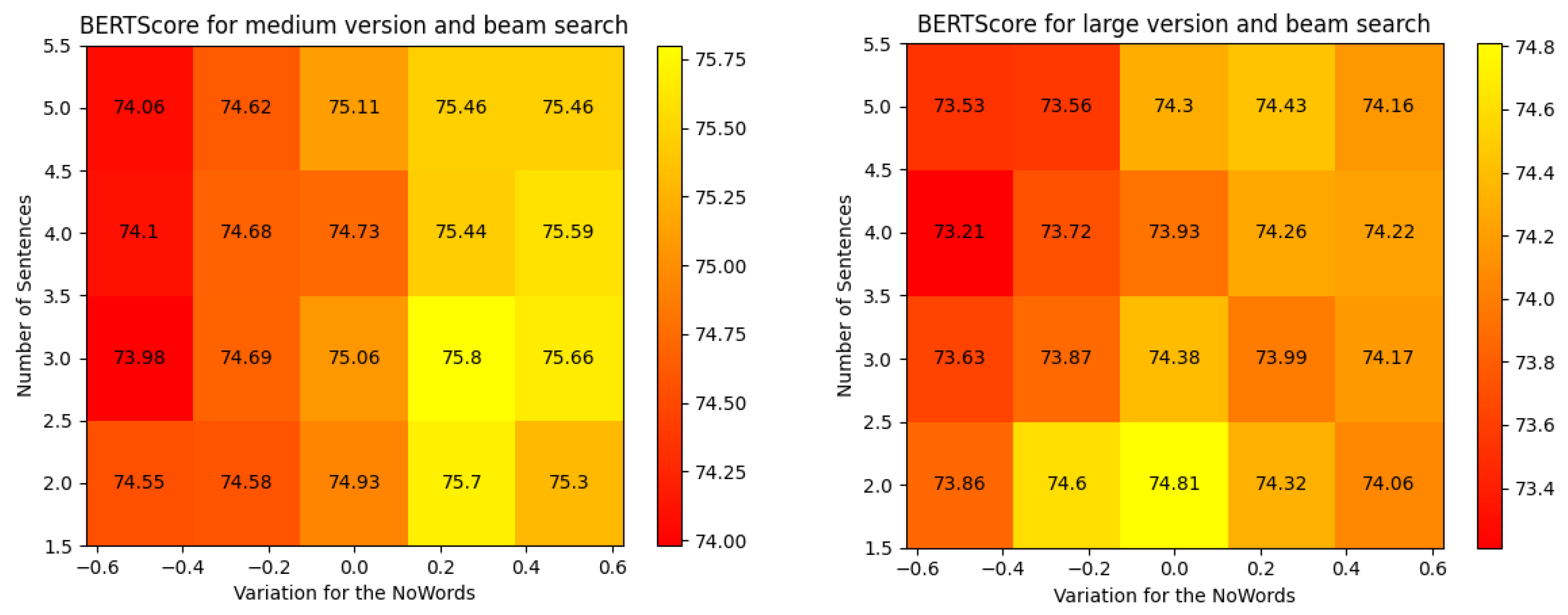

The best results for the experiment with the <NoSentences>-<NoWords> combination were obtained when the number of sentences was equal to 2 or 3 and the number of words was 25% or 50% higher than in the original summary. The best BERTScore was obtained for the medium version with <NoSentences> = 3 and <NoWords> = +25%, followed by a similar scenario with <NoSentences> = 2 and the same value for <NoWords>. As exemplified in Appendix C.2, the model takes into account the number of words that must be generated, i.e., there is a proportional relationship between the length of the summary and the value of the control token. Furthermore, a higher compression rate given by a smaller number of words forced the model to generate one sentence fewer than specified.