Abstract

Gradient-based methods are popularly used in training neural networks and can be broadly categorized into first and second order methods. Second order methods have been shown to have better convergence than first order methods, especially in solving highly nonlinear problems. The BFGS quasi-Newton method is the most commonly studied second order method for neural network training. Recent methods have been shown to speed up the convergence of the BFGS method using Nesterov’s accelerated gradient and momentum terms. The SR1 quasi-Newton method, though less commonly used in training neural networks, is known to have interesting properties and to provide good Hessian approximations when used with a trust-region approach. Thus, this paper aims to investigate accelerating the Symmetric Rank-1 (SR1) quasi-Newton method with Nesterov’s gradient for training neural networks, and to briefly discuss its convergence. The performance of the proposed method is evaluated on a function approximation problem and an image classification problem.

1. Introduction

Neural networks have been shown to have great potential in several applications. Hence, there is a great demand for large scale algorithms that can train neural networks effectively and efficiently. Neural network training poses several challenges such as ill-conditioning, hyperparameter tuning, exploding and vanishing gradients, and saddle points. Thus, the optimization algorithm plays an important role in training neural networks. Gradient-based algorithms have been widely used in training neural networks and can be broadly categorized into first order methods (e.g., SGD, Adam) and higher order methods (e.g., the Newton method, quasi-Newton methods), each with its own pros and cons. Much progress has been made in the last 20 years in designing and implementing robust and efficient methods suitable for deep learning and neural networks. While several works focus on sophisticated update strategies for improving the performance of the optimization algorithm, others propose acceleration techniques such as incorporating momentum, Nesterov’s acceleration or Anderson acceleration. Furthermore, second order methods have been shown to converge faster than first order methods, even without acceleration techniques. While most of the second order quasi-Newton methods used in training neural networks are rank-2 update methods, rank-1 methods are not widely used since they do not perform as well as the rank-2 update methods. In this paper, we investigate whether Nesterov’s acceleration can be applied to the rank-1 update methods of the quasi-Newton family to improve their performance.

Related Works

First order methods are most commonly used due to their simplicity and low computational complexity. Several works have been devoted to first order methods such as gradient descent [1,2] and its variance-reduced forms [3,4,5], Nesterov’s Accelerated Gradient Descent (NAG) [6], AdaGrad [7], RMSprop [8] and Adam [9]. However, second order methods have been shown to have better convergence, their only drawbacks being high computational and storage costs. Thus, several approximations have been proposed under Newton [10,11] and quasi-Newton [12] methods to use second order information efficiently while keeping the computational load minimal. Recently, there has been a surge of interest in designing efficient second order quasi-Newton variants that are better suited for large scale problems, such as [13,14,15,16], since in addition to better convergence, second order methods are more suitable for parallel and distributed training. Notably, among the quasi-Newton methods, the Broyden-Fletcher-Goldfarb-Shanno (BFGS) method is the most widely studied for training neural networks. The Symmetric Rank-1 (SR1) quasi-Newton method, though less commonly used in training neural networks, is known to have interesting properties and to provide good Hessian approximations when used with a trust-region approach [17,18]. Several works in optimization [19,20,21] have shown SR1 quasi-Newton methods to be efficient. Recent works such as [22,23] have proposed sampled LSR1 (limited memory SR1) quasi-Newton updates for machine learning and describe efficient implementations for distributed training. Recent studies such as [24,25] have shown that the BFGS method can be accelerated by using Nesterov’s accelerated gradient and momentum terms. In this paper, we explore whether Nesterov’s acceleration can be applied to the LSR1 quasi-Newton method as well. We thus propose a new limited memory Nesterov’s accelerated symmetric rank-1 method (L-SR1-N) for training neural networks.
We show that the performance of the LSR1 quasi-Newton method can be significantly improved using the trust-region approach and Nesterov’s acceleration.

2. Background

Training in neural networks is an iterative process in which the parameters are updated in order to minimize an objective function. Given a mini-batch $X \subseteq T_r$ of input-output pair samples $(\mathbf{x}_p, \mathbf{d}_p) \in X$ drawn at random from the training set $T_r$, and an error function $E_p(\cdot)$ parameterized by a vector $\mathbf{w} \in \mathbb{R}^d$, the objective function to be minimized is defined as

$$E(\mathbf{w}) = \frac{1}{b} \sum_{p \in X} E_p(\mathbf{w}), \tag{1}$$

where $b = |X|$ is the batch size. In full batch training, $X = T_r$ and $b = n$, where $n = |T_r|$. In gradient based methods, the objective function $E(\mathbf{w})$ is minimized by the iterative formula

$$\mathbf{w}_{k+1} = \mathbf{w}_k + \mathbf{v}_{k+1}, \tag{2}$$

where $k$ is the iteration count and $\mathbf{v}_{k+1}$ is the update vector, which is defined for each gradient algorithm.
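As a minimal sketch of the mini-batch objective (1), the snippet below draws a random mini-batch $X$ of size $b$ without replacement and averages a per-sample error over it. The linear model and the squared-error `per_sample_error` are hypothetical stand-ins for a neural network and its loss; with $b = n$ the value reduces to the full-batch objective.

```python
import numpy as np

def minibatch_objective(w, inputs, targets, per_sample_error, batch_size, rng):
    """E(w) = (1/b) * sum_{p in X} E_p(w), with X drawn at random without replacement."""
    idx = rng.choice(len(inputs), size=batch_size, replace=False)
    return float(np.mean([per_sample_error(w, inputs[i], targets[i]) for i in idx]))

# Hypothetical per-sample error: squared error of a linear model (stand-in for a network).
def per_sample_error(w, x, t):
    return 0.5 * (w @ x - t) ** 2

rng = np.random.default_rng(0)
inputs = rng.standard_normal((20, 3))   # n = 20 samples, d = 3 parameters
targets = rng.standard_normal(20)
w = np.zeros(3)

mini = minibatch_objective(w, inputs, targets, per_sample_error, batch_size=5, rng=rng)
full = minibatch_objective(w, inputs, targets, per_sample_error, batch_size=20, rng=rng)
```

Because every sample is drawn when `batch_size` equals the number of samples, `full` does not depend on the random draw, whereas `mini` changes from call to call; this is the source of the sampling noise discussed in Section 5.2.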

Notations: We briefly define the notations used in this paper. In general, all vectors are denoted by boldface lowercase characters, matrices by boldface uppercase characters and scalars by simple lowercase characters. The scalars, vectors and matrices at each iteration bear the corresponding iteration index k as a subscript. Below is a list of notations used.

- $k$ is the iteration index.

- $n$ is the total number of samples in the training set $T_r$ and is given by $n = |T_r|$.

- $b$ is the number of samples in the mini-batch $X$ and is given by $b = |X|$.

- $d$ is the number of parameters of the neural network.

- $m$ is the limited memory size.

- $\alpha_k$ is the learning rate or step size.

- $\mu_k$ is the momentum coefficient, chosen in the range (0,1).

- $E(\mathbf{w}_k)$ is the error evaluated at $\mathbf{w}_k$.

- $\nabla E(\mathbf{w}_k)$ is the gradient of the error function evaluated at $\mathbf{w}_k$.

In the following sections, we briefly discuss the common first and second order gradient based methods.

2.1. First-Order Gradient Descent and Nesterov’s Accelerated Gradient Descent Methods

The gradient descent (GD) method is one of the earliest and simplest gradient based algorithms. The update vector is given as

$$\mathbf{v}_{k+1} = -\alpha_k \nabla E(\mathbf{w}_k). \tag{3}$$

The learning rate $\alpha_k$ determines the step size along the negative gradient direction $-\nabla E(\mathbf{w}_k)$. The step size is usually fixed or set to a simple decay schedule.

The Nesterov’s Accelerated Gradient (NAG) method [6] is a modification of the gradient descent method in which the gradient is computed at $\mathbf{w}_k + \mu_k \mathbf{v}_k$ instead of $\mathbf{w}_k$. Thus, the update vector is given by

$$\mathbf{v}_{k+1} = \mu_k \mathbf{v}_k - \alpha_k \nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k), \tag{4}$$

where $\nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k)$ is the gradient at $\mathbf{w}_k + \mu_k \mathbf{v}_k$ and is referred to as Nesterov’s accelerated gradient. The momentum coefficient $\mu_k$ is a hyperparameter chosen in the range (0,1). Several adaptive momentum and restart schemes have also been proposed for the choice of the momentum [26,27]. The GD and NAG algorithms are shown in Algorithm 1 and Algorithm 2, respectively.

Algorithm 1 GD Method

Require: $\varepsilon$ and $k_{\max}$. Initialize: $\mathbf{w}_k \in \mathbb{R}^d$.

Algorithm 2 NAG Method

Require: $0 < \mu_k < 1$, $\varepsilon$ and $k_{\max}$. Initialize: $\mathbf{w}_k \in \mathbb{R}^d$ and $\mathbf{v}_k = \mathbf{0}$.
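The update rules (3) and (4) can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in this paper: the ill-conditioned quadratic test function, the fixed learning rate `alpha = 0.02` and the fixed momentum `mu = 0.9` are assumptions chosen only to make the difference between the two updates visible.

```python
import numpy as np

def gd_step(w, grad_fn, alpha):
    """GD: v_{k+1} = -alpha * grad E(w_k), then w_{k+1} = w_k + v_{k+1} as in (2)."""
    v_next = -alpha * grad_fn(w)
    return w + v_next

def nag_step(w, v, grad_fn, alpha, mu):
    """NAG (4): the gradient is evaluated at the look-ahead point w_k + mu * v_k."""
    v_next = mu * v - alpha * grad_fn(w + mu * v)
    return w + v_next, v_next

# Hypothetical test problem: E(w) = 0.5 * w^T A w with an ill-conditioned diagonal A.
A = np.diag([1.0, 50.0])
grad = lambda w: A @ w

w_gd = np.array([1.0, 1.0])
w_nag, v_nag = np.array([1.0, 1.0]), np.zeros(2)
for _ in range(100):
    w_gd = gd_step(w_gd, grad, alpha=0.02)
    w_nag, v_nag = nag_step(w_nag, v_nag, grad, alpha=0.02, mu=0.9)
```

With the same learning rate, the look-ahead gradient lets NAG shrink the error along the low-curvature direction much faster than plain GD on this toy problem.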

2.2. Second-Order Quasi-Newton Methods

Second order methods such as Newton’s method have better convergence than first order methods. The update vector of second order methods takes the form

$$\mathbf{v}_{k+1} = -\alpha_k \nabla^2 E(\mathbf{w}_k)^{-1} \nabla E(\mathbf{w}_k). \tag{5}$$

However, computing the inverse of the Hessian matrix $\nabla^2 E(\mathbf{w}_k)$ incurs a high computational cost, especially for large-scale problems. Thus, quasi-Newton methods, in which the inverse of the Hessian matrix is approximated iteratively, are widely used.

2.2.1. BFGS Quasi-Newton Method

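The Broyden-Fletcher-Goldfarb-Shanno (BFGS) algorithm is one of the most popular quasi-Newton methods for unconstrained optimization. The update vector of the BFGS quasi-Newton method is given as $\mathbf{v}_{k+1} = \alpha_k \mathbf{g}_k$, where $\mathbf{g}_k = -\mathbf{H}_k \nabla E(\mathbf{w}_k)$ is the search direction. The Hessian matrix $\mathbf{H}_k$ (here, an approximation of the inverse Hessian) is symmetric positive definite and is iteratively updated by the following BFGS rank-2 formula [28]:

$$\mathbf{H}_{k+1} = \left(\mathbf{I} - \frac{\mathbf{s}_k \mathbf{y}_k^{\mathrm{T}}}{\mathbf{y}_k^{\mathrm{T}} \mathbf{s}_k}\right) \mathbf{H}_k \left(\mathbf{I} - \frac{\mathbf{y}_k \mathbf{s}_k^{\mathrm{T}}}{\mathbf{y}_k^{\mathrm{T}} \mathbf{s}_k}\right) + \frac{\mathbf{s}_k \mathbf{s}_k^{\mathrm{T}}}{\mathbf{y}_k^{\mathrm{T}} \mathbf{s}_k}, \tag{6}$$

where $\mathbf{I}$ denotes the identity matrix, and

$$\mathbf{s}_k = \mathbf{w}_{k+1} - \mathbf{w}_k, \qquad \mathbf{y}_k = \nabla E(\mathbf{w}_{k+1}) - \nabla E(\mathbf{w}_k). \tag{7}$$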
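A minimal NumPy sketch of the rank-2 update (6) follows. It assumes that the curvature condition $\mathbf{y}_k^{\mathrm{T}}\mathbf{s}_k > 0$ holds (in practice this is typically enforced by the line search), and the quadratic used to generate the pair $(\mathbf{s}_k, \mathbf{y}_k)$ is hypothetical. The final checks confirm the secant property $\mathbf{H}_{k+1}\mathbf{y}_k = \mathbf{s}_k$ and that symmetry and positive definiteness are preserved.

```python
import numpy as np

def bfgs_inverse_update(H, s, y):
    """Rank-2 BFGS update (6) of the inverse-Hessian approximation H_k."""
    ys = y @ s
    if ys <= 0:
        raise ValueError("curvature condition y^T s > 0 violated")
    rho = 1.0 / ys
    V = np.eye(len(s)) - rho * np.outer(s, y)   # I - s y^T / (y^T s)
    return V @ H @ V.T + rho * np.outer(s, s)   # V.T = I - y s^T / (y^T s)

# Hypothetical quadratic E(w) = 0.5 w^T A w, so y_k = A s_k exactly.
A = np.array([[3.0, 1.0], [1.0, 2.0]])
s = np.array([1.0, -0.5])
y = A @ s
H1 = bfgs_inverse_update(np.eye(2), s, y)
```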

2.2.2. Nesterov’s Accelerated Quasi-Newton Method

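The Nesterov’s Accelerated Quasi-Newton (NAQ) method [24] introduces Nesterov’s acceleration to the BFGS quasi-Newton method by approximating the quadratic model of the objective function at $\mathbf{w}_k + \mu_k \mathbf{v}_k$ and by incorporating Nesterov’s accelerated gradient $\nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k)$ in its Hessian update. The update vector of NAQ can be written as

$$\mathbf{v}_{k+1} = \mu_k \mathbf{v}_k + \alpha_k \hat{\mathbf{g}}_k, \tag{8}$$

where $\hat{\mathbf{g}}_k = -\hat{\mathbf{H}}_k \nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k)$ is the search direction and the Hessian update equation is given as

$$\hat{\mathbf{H}}_{k+1} = \left(\mathbf{I} - \frac{\mathbf{p}_k \mathbf{q}_k^{\mathrm{T}}}{\mathbf{q}_k^{\mathrm{T}} \mathbf{p}_k}\right) \hat{\mathbf{H}}_k \left(\mathbf{I} - \frac{\mathbf{q}_k \mathbf{p}_k^{\mathrm{T}}}{\mathbf{q}_k^{\mathrm{T}} \mathbf{p}_k}\right) + \frac{\mathbf{p}_k \mathbf{p}_k^{\mathrm{T}}}{\mathbf{q}_k^{\mathrm{T}} \mathbf{p}_k}, \tag{9}$$

where

$$\mathbf{p}_k = \mathbf{w}_{k+1} - (\mathbf{w}_k + \mu_k \mathbf{v}_k), \qquad \mathbf{q}_k = \nabla E(\mathbf{w}_{k+1}) - \nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k). \tag{10}$$

Equation (9) is derived from the secant condition and the rank-2 updating formula [24]. It is proven in [24] that the Hessian matrix updated by (9) is a positive definite symmetric matrix, given that $\hat{\mathbf{H}}_k$ is initialized to the identity matrix. It is also shown in [24] that NAQ has convergence properties similar to those of BFGS.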

The BFGS and NAQ algorithms are shown in Algorithm 3 and Algorithm 4, respectively. Note that in NAQ the gradient is computed twice in one iteration, at $\mathbf{w}_k + \mu_k \mathbf{v}_k$ and at $\mathbf{w}_{k+1}$. This increases the computational cost compared to the BFGS quasi-Newton method. However, due to acceleration by the momentum and Nesterov’s gradient term, NAQ converges faster than BFGS. As the scale of the neural network model increases, the $O(d^2)$ cost of storing and updating the Hessian matrices $\mathbf{H}_k$ and $\hat{\mathbf{H}}_k$ becomes expensive. Hence, the limited memory variants LBFGS and LNAQ were proposed, in which the respective Hessian matrices are updated using only the last $m$ curvature information pairs $\{\mathbf{s}_i, \mathbf{y}_i\}$ and $\{\mathbf{p}_i, \mathbf{q}_i\}$, where $m$ is the limited memory size and is chosen such that $m \ll d$.

Algorithm 3 BFGS Method

Require: $\varepsilon$ and $k_{\max}$. Initialize: $\mathbf{w}_k \in \mathbb{R}^d$ and $\mathbf{H}_k = \mathbf{I}$.

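Algorithm 4 NAQ Method

Require: $0 < \mu_k < 1$, $\varepsilon$ and $k_{\max}$. Initialize: $\mathbf{w}_k \in \mathbb{R}^d$, $\hat{\mathbf{H}}_k = \mathbf{I}$ and $\mathbf{v}_k = \mathbf{0}$.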
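To make the two gradient evaluations per NAQ iteration concrete, here is a sketch of one iteration following (8) to (10) with a dense $\hat{\mathbf{H}}_k$. The fixed step `alpha`, the fixed momentum `mu`, the helper names and the rule of skipping the update when $\mathbf{q}_k^{\mathrm{T}}\mathbf{p}_k \leq 0$ are simplifying assumptions; Algorithm 4 may choose $\alpha_k$ and $\mu_k$ differently.

```python
import numpy as np

def bfgs_inverse_update(H, p, q):
    """Rank-2 update (9) using the Nesterov curvature pair (p_k, q_k)."""
    rho = 1.0 / (q @ p)
    V = np.eye(len(p)) - rho * np.outer(p, q)
    return V @ H @ V.T + rho * np.outer(p, p)

def naq_step(w, v, H, grad_fn, alpha, mu):
    """One NAQ iteration (hypothetical helper); returns (w_{k+1}, v_{k+1}, H_{k+1})."""
    w_hat = w + mu * v                      # look-ahead point w_k + mu_k v_k
    g_hat = grad_fn(w_hat)                  # gradient evaluation 1: Nesterov's gradient
    v_next = mu * v - alpha * (H @ g_hat)   # (8) with g_hat_k = -H_k grad E(w_k + mu_k v_k)
    w_next = w + v_next                     # (2)
    p = w_next - w_hat                      # (10)
    q = grad_fn(w_next) - g_hat             # gradient evaluation 2, at w_{k+1}
    if q @ p > 0:                           # assumption: skip the update otherwise
        H = bfgs_inverse_update(H, p, q)
    return w_next, v_next, H

# Hypothetical quadratic test problem E(w) = 0.5 w^T A w.
A = np.diag([1.0, 4.0])
grad = lambda w: A @ w
w0, v0, H0 = np.array([1.0, 1.0]), np.zeros(2), np.eye(2)
w1, v1, H1 = naq_step(w0, v0, H0, grad, alpha=0.1, mu=0.8)
w2, v2, H2 = naq_step(w1, v1, H1, grad, alpha=0.1, mu=0.8)
```

After each step the updated matrix satisfies the secant condition for the Nesterov pair, i.e. $\hat{\mathbf{H}}_{k+1}\mathbf{q}_k = \mathbf{p}_k$ with $\mathbf{p}_k$ measured from the look-ahead point rather than from $\mathbf{w}_k$.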

2.2.3. SR1 Quasi-Newton Method

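While the BFGS and NAQ methods update the Hessian using rank-2 updates, the Symmetric Rank-1 (SR1) method performs rank-1 updates [28]. The Hessian update of the SR1 method is given as

$$\mathbf{B}_{k+1} = \mathbf{B}_k + \frac{(\mathbf{y}_k - \mathbf{B}_k \mathbf{s}_k)(\mathbf{y}_k - \mathbf{B}_k \mathbf{s}_k)^{\mathrm{T}}}{(\mathbf{y}_k - \mathbf{B}_k \mathbf{s}_k)^{\mathrm{T}} \mathbf{s}_k}, \tag{11}$$

where

$$\mathbf{s}_k = \mathbf{w}_{k+1} - \mathbf{w}_k, \qquad \mathbf{y}_k = \nabla E(\mathbf{w}_{k+1}) - \nabla E(\mathbf{w}_k). \tag{12}$$

Unlike in the BFGS or NAQ method, the Hessian approximation generated by the SR1 update may not always be positive definite. Also, the denominator $(\mathbf{y}_k - \mathbf{B}_k \mathbf{s}_k)^{\mathrm{T}} \mathbf{s}_k$ can vanish or become close to zero. Thus, SR1 methods are not popularly used in neural network training. However, SR1 methods are known to converge towards the true Hessian faster than the BFGS method, and have computational advantages for sparse problems [17]. Furthermore, several strategies have been introduced to overcome the drawbacks of the SR1 method, resulting in performance almost on par with, if not better than, that of the BFGS method.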
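The small example below illustrates both drawbacks mentioned above, using a sketch of (11) with a safeguard that skips the update when the denominator is too small relative to $\|\mathbf{s}_k\|\,\|\mathbf{y}_k - \mathbf{B}_k\mathbf{s}_k\|$. The threshold `r = 1e-8` is an assumed value, and the hand-picked pair with $\mathbf{y}_k^{\mathrm{T}}\mathbf{s}_k < 0$ is hypothetical.

```python
import numpy as np

def sr1_update(B, s, y, r=1e-8):
    """SR1 update (11); returns B unchanged when the denominator is (nearly) zero."""
    u = y - B @ s
    denom = u @ s
    if abs(denom) <= r * np.linalg.norm(s) * np.linalg.norm(u):
        return B                        # skip: update undefined or numerically unsafe
    return B + np.outer(u, u) / denom

B0 = np.eye(2)
s = np.array([1.0, 0.0])
y = np.array([-1.0, 0.0])               # negative curvature along s
B1 = sr1_update(B0, s, y)               # B1 == [[-1, 0], [0, 1]]: indefinite
B_skip = sr1_update(B0, s, B0 @ s)      # y = B s gives a zero denominator: skipped
```

Starting from the positive definite $\mathbf{B}_0 = \mathbf{I}$, a single update already yields an indefinite matrix that still satisfies $\mathbf{B}_1\mathbf{s} = \mathbf{y}$, which BFGS would not allow.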

Thus, in this paper, we investigate whether the SR1 method can be accelerated using Nesterov’s gradient. We propose a new limited memory Nesterov’s accelerated symmetric rank-1 (L-SR1-N) method and evaluate its performance in comparison to the conventional limited memory symmetric rank-1 (LSR1) method.

3. Proposed Method

Second order quasi-Newton (QN) methods build an approximation of a quadratic model recursively using the curvature information along a generated trajectory. In this section, we first show that Nesterov’s acceleration, when applied to QN, satisfies the secant condition, and then derive the proposed Nesterov Accelerated Symmetric Rank-1 Quasi-Newton Method.

Nesterov Accelerated Symmetric Rank-1 Quasi-Newton Method

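Suppose that $E: \mathbb{R}^d \to \mathbb{R}$ is continuously differentiable and that $\mathbf{d} \in \mathbb{R}^d$. Then, from the Taylor series, the quadratic model of the objective function at an iterate $\mathbf{w}_k$ is given as

$$m_k(\mathbf{d}) = E(\mathbf{w}_k) + \nabla E(\mathbf{w}_k)^{\mathrm{T}} \mathbf{d} + \frac{1}{2} \mathbf{d}^{\mathrm{T}} \nabla^2 E(\mathbf{w}_k) \mathbf{d}. \tag{13}$$

In order to find the minimizer $\mathbf{d}_k$, we equate $\nabla m_k(\mathbf{d}) = \mathbf{0}$ and thus have

$$\mathbf{d}_k = -\nabla^2 E(\mathbf{w}_k)^{-1} \nabla E(\mathbf{w}_k) \approx -\mathbf{B}_k^{-1} \nabla E(\mathbf{w}_k). \tag{14}$$

The new iterate is given as

$$\mathbf{w}_{k+1} = \mathbf{w}_k + \alpha_k \mathbf{d}_k, \tag{15}$$

and the quadratic model at the new iterate is given as

$$m_{k+1}(\mathbf{d}) = E(\mathbf{w}_{k+1}) + \nabla E(\mathbf{w}_{k+1})^{\mathrm{T}} \mathbf{d} + \frac{1}{2} \mathbf{d}^{\mathrm{T}} \mathbf{B}_{k+1} \mathbf{d}, \tag{16}$$

where $\alpha_k$ is the step length, and $\mathbf{B}_k$ and its consecutive updates $\mathbf{B}_{k+1}$ are symmetric positive definite matrices satisfying the secant condition. Nesterov’s acceleration approximates the quadratic model at $\mathbf{w}_k + \mu_k \mathbf{v}_k$ instead of the iterate at $\mathbf{w}_k$. Here $\mathbf{v}_k = \mathbf{w}_k - \mathbf{w}_{k-1}$ and $\mu_k$ is the momentum coefficient in the range (0,1). Thus we have the new iterate given as

$$\mathbf{w}_{k+1} = \mathbf{w}_k + \mu_k \mathbf{v}_k + \alpha_k \hat{\mathbf{d}}_k, \qquad \hat{\mathbf{d}}_k = -\mathbf{B}_k^{-1} \nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k).$$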

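In order to show that the Nesterov accelerated updates also satisfy the secant condition, we require that the gradient of $m_{k+1}$ match the gradient of the objective function at the last two iterates $\mathbf{w}_{k+1}$ and $\mathbf{w}_k + \mu_k \mathbf{v}_k$. In other words, we impose the following two requirements on $\mathbf{B}_{k+1}$:

$$\nabla m_{k+1}(\mathbf{0}) = \nabla E(\mathbf{w}_{k+1}), \tag{19}$$

$$\nabla m_{k+1}\big((\mathbf{w}_k + \mu_k \mathbf{v}_k) - \mathbf{w}_{k+1}\big) = \nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k). \tag{20}$$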

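From (16),

$$\nabla m_{k+1}(\mathbf{d}) = \nabla E(\mathbf{w}_{k+1}) + \mathbf{B}_{k+1} \mathbf{d}. \tag{21}$$

Substituting $\mathbf{d} = \mathbf{0}$ in (21), the condition in (19) is satisfied. From (20) and substituting $\mathbf{d} = (\mathbf{w}_k + \mu_k \mathbf{v}_k) - \mathbf{w}_{k+1}$ in (21), we have

$$\nabla E(\mathbf{w}_{k+1}) + \mathbf{B}_{k+1}\big((\mathbf{w}_k + \mu_k \mathbf{v}_k) - \mathbf{w}_{k+1}\big) = \nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k).$$

On rearranging the terms, we have the secant condition

$$\mathbf{B}_{k+1} \mathbf{p}_k = \mathbf{q}_k, \tag{24}$$

where

$$\mathbf{p}_k = \mathbf{w}_{k+1} - (\mathbf{w}_k + \mu_k \mathbf{v}_k), \qquad \mathbf{q}_k = \nabla E(\mathbf{w}_{k+1}) - \nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k).$$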

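We have thus shown that the Nesterov accelerated QN update satisfies the secant condition. The update equation of $\mathbf{B}_{k+1}$ for SR1-N can be derived similarly to that of the classic SR1 update [28]. The secant condition requires $\mathbf{B}_k$ to be updated with a symmetric matrix such that $\mathbf{B}_{k+1}$ is also symmetric and satisfies the secant condition. The update of $\mathbf{B}_{k+1}$, defined using a symmetric rank-1 matrix formed by an arbitrary vector $\mathbf{u}_k$, is given as

$$\mathbf{B}_{k+1} = \mathbf{B}_k + \sigma_k \mathbf{u}_k \mathbf{u}_k^{\mathrm{T}}, \tag{26}$$

where $\sigma_k \in \{-1, +1\}$ and $\mathbf{u}_k$ are chosen such that they satisfy the secant condition in (24). Substituting (26) in (24), we get

$$\mathbf{q}_k = \mathbf{B}_k \mathbf{p}_k + \left[\sigma_k \mathbf{u}_k^{\mathrm{T}} \mathbf{p}_k\right] \mathbf{u}_k.$$

Since $\sigma_k \mathbf{u}_k^{\mathrm{T}} \mathbf{p}_k$ is a scalar, we can deduce that $\mathbf{u}_k$ is a scalar multiple of $\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k$, i.e., $\mathbf{u}_k = \delta (\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k)$, and thus have

$$\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k = \sigma_k \delta^2 \left[\mathbf{p}_k^{\mathrm{T}} (\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k)\right] (\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k),$$

where

$$\sigma_k = \operatorname{sign}\left[\mathbf{p}_k^{\mathrm{T}} (\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k)\right], \qquad \delta = \pm \left|\mathbf{p}_k^{\mathrm{T}} (\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k)\right|^{-1/2}.$$

Thus the proposed Nesterov accelerated symmetric rank-1 (L-SR1-N) update is given as

$$\mathbf{B}_{k+1} = \mathbf{B}_k + \frac{(\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k)(\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k)^{\mathrm{T}}}{(\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k)^{\mathrm{T}} \mathbf{p}_k}. \tag{30}$$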

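Note that the Hessian update is performed only if the condition below is satisfied; otherwise, $\mathbf{B}_{k+1} = \mathbf{B}_k$:

$$\left|\mathbf{p}_k^{\mathrm{T}} (\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k)\right| \geq r \, \|\mathbf{p}_k\| \, \|\mathbf{q}_k - \mathbf{B}_k \mathbf{p}_k\|, \tag{31}$$

where $r \in (0,1)$ is a small constant.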

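By applying the Sherman-Morrison-Woodbury formula [28], we can find $\mathbf{H}_{k+1} = \mathbf{B}_{k+1}^{-1}$ as

$$\mathbf{H}_{k+1} = \mathbf{H}_k + \frac{(\mathbf{p}_k - \mathbf{H}_k \mathbf{q}_k)(\mathbf{p}_k - \mathbf{H}_k \mathbf{q}_k)^{\mathrm{T}}}{(\mathbf{p}_k - \mathbf{H}_k \mathbf{q}_k)^{\mathrm{T}} \mathbf{q}_k}, \tag{32}$$

where

$$\mathbf{p}_k = \mathbf{w}_{k+1} - (\mathbf{w}_k + \mu_k \mathbf{v}_k), \qquad \mathbf{q}_k = \nabla E(\mathbf{w}_{k+1}) - \nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k). \tag{33}$$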
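A dense (not limited memory) sketch of the SR1-N update is shown below: the update of $\mathbf{B}_{k+1}$, the skip rule, and the inverse update of $\mathbf{H}_{k+1}$. The threshold `r`, the hypothetical function name `sr1n_update` and the hand-picked pair $(\mathbf{p}_k, \mathbf{q}_k)$ are assumptions for illustration; in the method itself the pair comes from the look-ahead point $\mathbf{w}_k + \mu_k \mathbf{v}_k$.

```python
import numpy as np

def sr1n_update(B, H, p, q, r=1e-8):
    """Update B_k by the SR1-N rank-1 formula and H_k = B_k^{-1} by its inverse form.

    Returns (B, H) unchanged when the safeguard |p^T (q - B p)| >= r ||p|| ||q - B p|| fails.
    """
    u = q - B @ p
    if abs(u @ p) <= r * np.linalg.norm(p) * np.linalg.norm(u):
        return B, H                                     # safeguard violated: skip
    B_new = B + np.outer(u, u) / (u @ p)
    z = p - H @ q
    zq = z @ q
    if abs(zq) <= r * np.linalg.norm(z) * np.linalg.norm(q):
        # for symmetric invertible B, z^T q = 0 exactly when B_new is singular
        raise np.linalg.LinAlgError("updated B is (nearly) singular")
    H_new = H + np.outer(z, z) / zq
    return B_new, H_new

B = np.diag([2.0, 3.0, 4.0])
H = np.linalg.inv(B)
p = np.array([1.0, -1.0, 0.5])           # hypothetical p_k
q = np.array([3.0, -1.75, 2.0])          # hypothetical q_k
B1, H1 = sr1n_update(B, H, p, q)
```

Keeping $\mathbf{H}_k$ alongside $\mathbf{B}_k$ avoids an explicit matrix inversion; the checks below confirm that both the direct and inverse secant conditions hold.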

The proposed algorithm is shown in Algorithm 5. We implement the proposed method in its limited memory form, where the Hessian is updated using the $m$ most recent curvature information pairs $\{\mathbf{p}_i, \mathbf{q}_i\}$ satisfying (31). Here $m$ denotes the limited memory size and is chosen such that $m \ll d$. The proposed method uses the trust-region approach, where the subproblem is solved using the CG-Steihaug method [28] as shown in Algorithm 6. Also note that the proposed L-SR1-N requires two gradient computations per iteration. Nesterov’s gradient can be approximated [25,29] as a linear combination of past gradients as shown below:

$$\nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k) \approx (1 + \mu_k) \nabla E(\mathbf{w}_k) - \mu_k \nabla E(\mathbf{w}_{k-1}). \tag{34}$$

Approximating Nesterov’s gradient in L-SR1-N in this way yields the momentum accelerated symmetric rank-1 (L-MoSR1) method, which avoids the explicit evaluation of $\nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k)$.
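The gradient approximation used by L-MoSR1 is a first-order extrapolation from the last two gradients. The sketch below assumes $\mathbf{v}_k = \mathbf{w}_k - \mathbf{w}_{k-1}$ and uses a hypothetical quadratic objective, whose gradient is affine in $\mathbf{w}$; in that case the approximation reproduces Nesterov’s gradient exactly, while for a general $E$ it is only an approximation traded for one fewer gradient evaluation.

```python
import numpy as np

def approx_nesterov_grad(g_k, g_km1, mu):
    """grad E(w_k + mu v_k) ~= (1 + mu) grad E(w_k) - mu grad E(w_{k-1})."""
    return (1.0 + mu) * g_k - mu * g_km1

# Hypothetical quadratic E(w) = 0.5 w^T A w - b^T w, whose gradient is affine in w.
A = np.array([[2.0, 0.5], [0.5, 1.0]])
b = np.array([1.0, -1.0])
grad = lambda w: A @ w - b

w_prev = np.array([0.3, -0.2])
w_k = np.array([0.1, 0.4])
mu = 0.9
v_k = w_k - w_prev
exact = grad(w_k + mu * v_k)                                  # Nesterov's gradient
approx = approx_nesterov_grad(grad(w_k), grad(w_prev), mu)    # reuses stored gradients
```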

Algorithm 5 Proposed Algorithm

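Algorithm 6 CG-Steihaug

Require: Gradient $\nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k)$, tolerance $\varepsilon_k > 0$, and trust-region radius $\Delta_k$. Initialize: Set $\mathbf{z}_0 = \mathbf{0}$, $\mathbf{r}_0 = \nabla E(\mathbf{w}_k + \mu_k \mathbf{v}_k)$ and $\mathbf{d}_0 = -\mathbf{r}_0$.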
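For reference, here is a sketch of the CG-Steihaug procedure as described by Nocedal and Wright [28] for the subproblem $\min_{\mathbf{d}} \mathbf{g}^{\mathrm{T}}\mathbf{d} + \frac{1}{2}\mathbf{d}^{\mathrm{T}}\mathbf{B}\mathbf{d}$ subject to $\|\mathbf{d}\| \leq \Delta$. It uses a dense matrix `B` and assumed defaults for the tolerance and iteration cap; details of Algorithm 6 (for example, which boundary point is taken on negative curvature) may differ. Here the boundary point with the lower model value is chosen.

```python
import numpy as np

def _boundary_roots(z, d, delta):
    """Roots tau_- <= tau_+ of ||z + tau d|| = delta (requires ||z|| < delta)."""
    a, b, c = d @ d, 2.0 * (z @ d), z @ z - delta ** 2
    sq = np.sqrt(b * b - 4.0 * a * c)
    return (-b - sq) / (2.0 * a), (-b + sq) / (2.0 * a)

def cg_steihaug(B, g, delta, tol=1e-8, max_iter=None):
    """Approximately minimize g^T d + 0.5 d^T B d subject to ||d|| <= delta."""
    model = lambda x: g @ x + 0.5 * x @ B @ x
    max_iter = len(g) if max_iter is None else max_iter
    z = np.zeros_like(g)
    r = g.copy()
    d = -r
    if np.linalg.norm(r) < tol:
        return z
    for _ in range(max_iter):
        dBd = d @ B @ d
        if dBd <= 0:                               # negative curvature: go to the boundary
            t_lo, t_hi = _boundary_roots(z, d, delta)
            return min(z + t_lo * d, z + t_hi * d, key=model)
        alpha = (r @ r) / dBd
        z_next = z + alpha * d
        if np.linalg.norm(z_next) >= delta:        # step leaves the region: stop on boundary
            _, t_hi = _boundary_roots(z, d, delta)
            return z + t_hi * d
        r_next = r + alpha * (B @ d)
        if np.linalg.norm(r_next) < tol:           # interior (approximate) minimizer found
            return z_next
        beta = (r_next @ r_next) / (r @ r)
        d = -r_next + beta * d
        z, r = z_next, r_next
    return z

B = np.diag([1.0, 2.0, 4.0])
g = np.array([1.0, 1.0, 1.0])
d_big = cg_steihaug(B, g, delta=10.0)      # interior: equals the Newton step -B^{-1} g
d_small = cg_steihaug(B, g, delta=0.1)     # truncated on the boundary
d_neg = cg_steihaug(np.diag([-2.0, 1.0]), np.array([1.0, 1.0]), delta=0.5)
```

Because the SR1-N matrix may be indefinite, the negative-curvature exit is what lets the solver still return a useful step instead of failing.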

4. Convergence Analysis

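In this section we discuss the convergence of the proposed Nesterov accelerated Symmetric Rank-1 (L-SR1-N) algorithm in its limited memory form. As mentioned earlier, Nesterov’s acceleration approximates the quadratic model at $\mathbf{w}_k + \mu_k \mathbf{v}_k$ instead of the iterate at $\mathbf{w}_k$. For ease of representation, we write $\hat{\mathbf{w}}_k = \mathbf{w}_k + \mu_k \mathbf{v}_k$. In the limited memory scheme, the Hessian matrix can be implicitly constructed using the $m$ most recent curvature information pairs $\{\mathbf{p}_i, \mathbf{q}_i\}$. At a given iteration $k$, we define matrices $\mathbf{P}_k$ and $\mathbf{Q}_k$ of dimensions $d \times m$ as

$$\mathbf{P}_k = [\mathbf{p}_{k-m}, \ldots, \mathbf{p}_{k-1}], \qquad \mathbf{Q}_k = [\mathbf{q}_{k-m}, \ldots, \mathbf{q}_{k-1}], \tag{35}$$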

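where the curvature pairs $(\mathbf{p}_i, \mathbf{q}_i)$ are each vectors of dimensions $d \times 1$. The Hessian approximation in (30) can be expressed in its compact representation form [30] as

$$\mathbf{B}_k = \mathbf{B}_0 + (\mathbf{Q}_k - \mathbf{B}_0 \mathbf{P}_k)(\mathbf{D}_k + \mathbf{L}_k + \mathbf{L}_k^{\mathrm{T}} - \mathbf{P}_k^{\mathrm{T}} \mathbf{B}_0 \mathbf{P}_k)^{-1} (\mathbf{Q}_k - \mathbf{B}_0 \mathbf{P}_k)^{\mathrm{T}}, \tag{36}$$

where $\mathbf{B}_0$ is the initial Hessian matrix, $\mathbf{L}_k$ is a lower triangular matrix and $\mathbf{D}_k$ is a diagonal matrix as given below:

$$(\mathbf{L}_k)_{i,j} = \begin{cases} \mathbf{p}_{k-m-1+i}^{\mathrm{T}} \mathbf{q}_{k-m-1+j} & \text{if } i > j, \\ 0 & \text{otherwise}, \end{cases} \qquad \mathbf{D}_k = \operatorname{diag}\left[\mathbf{p}_{k-m}^{\mathrm{T}} \mathbf{q}_{k-m}, \ldots, \mathbf{p}_{k-1}^{\mathrm{T}} \mathbf{q}_{k-1}\right]. \tag{37}$$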
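The compact form can be checked numerically against the recursive rank-1 update. The sketch below stores the pairs column-wise as $\mathbf{P}_k$ and $\mathbf{Q}_k$, assembles $\mathbf{L}_k$ and $\mathbf{D}_k$ from $\mathbf{P}_k^{\mathrm{T}}\mathbf{Q}_k$, and compares the result with $m$ successive updates. The random quadratic (for which $\mathbf{q}_i = \mathbf{A}\mathbf{p}_i$) and the sizes are hypothetical; they are chosen so that no update needs to be skipped, and $\mathbf{B}_0 = \mathbf{0}$ is used as the initial matrix.

```python
import numpy as np

def sr1_recursive(B0, P, Q):
    """Apply the rank-1 update once per column pair (p_i, q_i), without skipping."""
    B = B0.copy()
    for p, q in zip(P.T, Q.T):
        u = q - B @ p
        B = B + np.outer(u, u) / (u @ p)
    return B

def sr1_compact(B0, P, Q):
    """Compact representation: B0 + U (D + L + L^T - P^T B0 P)^{-1} U^T, U = Q - B0 P."""
    PQ = P.T @ Q                         # (i, j) entry: p_i^T q_j
    D = np.diag(np.diag(PQ))
    L = np.tril(PQ, k=-1)                # strictly lower triangular part
    M = D + L + L.T - P.T @ B0 @ P
    U = Q - B0 @ P
    return B0 + U @ np.linalg.solve(M, U.T)

rng = np.random.default_rng(0)
d, m = 6, 3
G = rng.standard_normal((d, d))
A = G @ G.T + d * np.eye(d)              # hypothetical SPD Hessian of a quadratic
P = rng.standard_normal((d, m))          # columns p_{k-m}, ..., p_{k-1}
Q = A @ P                                # q_i = A p_i for a quadratic objective
B0 = np.zeros((d, d))

B_rec = sr1_recursive(B0, P, Q)
B_cpt = sr1_compact(B0, P, Q)
```

With $\mathbf{B}_0 = \mathbf{0}$ the resulting matrix has rank at most $m$, so only a small $m \times m$ system has to be solved instead of forming a $d \times d$ matrix.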

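Let $\Omega$ be the level set such that $\Omega = \{\mathbf{w} \in \mathbb{R}^d : E(\mathbf{w}) \leq E(\mathbf{w}_0)\}$, and let $\{\mathbf{w}_k\}$ and $\{\hat{\mathbf{w}}_k\}$ denote the sequences generated by the explicit trust-region algorithm, where $\Delta_k > 0$ is the trust-region radius of the successful update step. We choose $\mathbf{B}_0 = \mathbf{0}$. Since the curvature information pairs $(\mathbf{p}_k, \mathbf{q}_k)$ given by (33) are stored in $\mathbf{P}_k$ and $\mathbf{Q}_k$ only if they satisfy the condition in (31), the matrix $(\mathbf{D}_k + \mathbf{L}_k + \mathbf{L}_k^{\mathrm{T}} - \mathbf{P}_k^{\mathrm{T}} \mathbf{B}_0 \mathbf{P}_k)$ is invertible and positive semi-definite.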

Assumption 1.

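The sequences of iterates $\{\mathbf{w}_k\}$ and $\{\hat{\mathbf{w}}_k\}$ remain in the closed and bounded set $\Omega$, on which the objective function is twice continuously differentiable and has a Lipschitz continuous gradient, i.e., there exists a constant $L > 0$ such that

$$\|\nabla E(\mathbf{w}) - \nabla E(\mathbf{w}')\| \leq L \|\mathbf{w} - \mathbf{w}'\| \quad \forall\, \mathbf{w}, \mathbf{w}' \in \Omega. \tag{38}$$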

Assumption 2.

The Hessian matrix is bounded and well-defined, i.e., there exist constants ρ and M such that

and for each iteration k

Assumption 3.

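Let $\mathbf{B}_k$ be any symmetric matrix and $\mathbf{d}_k^*$ be an optimal solution to the trust-region subproblem at $\hat{\mathbf{w}}_k = \mathbf{w}_k + \mu_k \mathbf{v}_k$,

$$\min_{\mathbf{d} \in \mathbb{R}^d} \; m_k(\mathbf{d}) = E(\hat{\mathbf{w}}_k) + \nabla E(\hat{\mathbf{w}}_k)^{\mathrm{T}} \mathbf{d} + \frac{1}{2} \mathbf{d}^{\mathrm{T}} \mathbf{B}_k \mathbf{d} \quad \text{subject to} \quad \|\mathbf{d}\| \leq \Delta_k, \tag{41}$$

where $\hat{\mathbf{w}}_k + \mathbf{d}$ lies in the trust region. Then for all $k$,

$$m_k(\mathbf{0}) - m_k(\mathbf{d}_k^*) \geq \frac{1}{2} \|\nabla E(\hat{\mathbf{w}}_k)\| \min\left\{\Delta_k, \frac{\|\nabla E(\hat{\mathbf{w}}_k)\|}{\|\mathbf{B}_k\|}\right\}. \tag{42}$$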

This assumption ensures that the subproblem solved by the trust-region method yields a sufficiently optimal solution at every iteration. Its proof is similar to Powell’s proof for trust-region methods.

Lemma 1.

Proof.

We begin with the proof for the general case [31], where the Hessian is bounded by

The proof for (43) is given by mathematical induction. Let be the limited memory size and be the curvature information pairs given by (33) at the kth iteration for . For , we can see that (43) holds true. Let us assume that (43) holds true for some . Thus for we have

Since we use the limited memory scheme, , where is the limited memory size. Therefore, the Hessian approximation at the iteration satisfies

We choose $\mathbf{B}_0 = \mathbf{0}$, as it removes the hyperparameter for the initial Hessian and also ensures that the CG subproblem solver (Algorithm 6) terminates in at most $m$ iterations [22]. Thus the Hessian approximation at the $k$th iteration satisfies (54) and is still bounded.

This completes the inductive proof. □

Theorem 1.

Given a level set $\Omega$ that is bounded, let $\{\mathbf{w}_k\}$ be the sequence of iterates generated by Algorithm 5. If assumptions (A1) to (A3) hold, then

$$\lim_{k \to \infty} \|\nabla E(\mathbf{w}_k)\| = 0.$$

Proof.

From the derivation of the proposed L-SR1-N algorithm, Nesterov’s acceleration applied to the quasi-Newton method satisfies the secant condition. The proposed algorithm ensures that the Hessian update is well defined, since the curvature pairs used in the update satisfy (31) for all $k$. The sequence of updates is generated by solving the subproblem (41) with the trust-region method, where $\mathbf{d}_k^*$ is the optimal solution to the subproblem in (41). From Theorem 2.2 in [32], it can be shown that the updates made by the trust-region method converge to a stationary point. Since $\mathbf{B}_k$ is bounded (Lemma 1), it follows from that theorem that, as $k \to \infty$, $\mathbf{w}_k$ converges to a point $\mathbf{w}^*$ such that $\|\nabla E(\mathbf{w}^*)\| = 0$. □

5. Simulation Results

We evaluate the performance of the proposed Nesterov accelerated symmetric rank-1 quasi-Newton (L-SR1-N) method in its limited memory form in comparison to conventional first order methods and second order methods. We illustrate the performances in both full batch and stochastic/mini-batch setting. The hyperparameters are set to their default values. The momentum coefficient is set to 0.9 in NAG and 0.85 in oLNAQ [33]. For L-NAQ [34], L-MoQ [35], and the proposed methods, the momentum coefficient is set adaptively. The adaptive is obtained from the following equations, where and .

5.1. Results of the Levy Function Approximation Problem

Consider the following Levy function approximation problem to be modeled by a neural network.

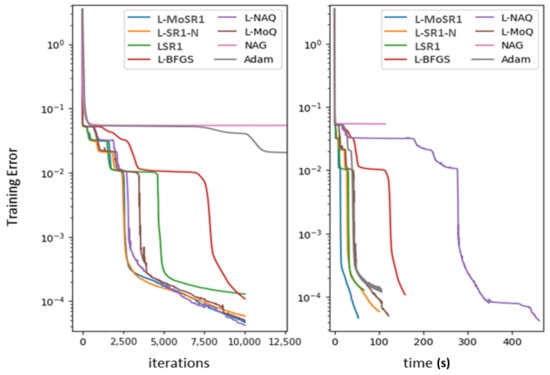

The performance of the proposed L-SR1-N and L-MoSR1 is evaluated on the Levy function (58) where . Therefore the inputs to the neural network are . We use a single hidden layer with 50 hidden neurons. The neural network architecture is thus . We terminate the training after $k_{\max} = 10{,}000$ iterations, and set and . Sigmoid and linear activation functions are used for the hidden and output layers, respectively. The mean squared error function is used as the loss. The number of parameters is . Note that we use full batch training in this example and the number of training samples is . Figure 1 shows the average results of 30 independent trials. The results confirm that the proposed L-SR1-N and L-MoSR1 perform better than the first order methods as well as the conventional LSR1 and rank-2 LBFGS quasi-Newton methods. Furthermore, incorporating Nesterov’s gradient in LSR1 significantly improves its performance, bringing it almost on par with the rank-2 Nesterov accelerated L-NAQ and momentum accelerated L-MoQ methods. Thus, the limited memory symmetric rank-1 quasi-Newton method can be significantly accelerated using Nesterov’s gradient. From the iterations vs. training error plot, we observe that L-SR1-N and L-MoSR1 perform almost identically. This verifies that the approximation of L-SR1-N used in L-MoSR1 is valid here, and L-MoSR1 has an advantage in terms of computation wall time. This can be seen in the time vs. training error plot, where the L-MoSR1 method converges much faster than the other first and second order methods under comparison.
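The Levy function itself appears only as an image in this version of the text. Assuming it is the standard $d$-dimensional Levy benchmark, it can be sketched as below; the input dimension and the sampling range used to generate the training data are not reproduced here, so the example only evaluates the function.

```python
import numpy as np

def levy(x):
    """Standard d-dimensional Levy function (assumed form); global minimum 0 at x = (1, ..., 1)."""
    x = np.asarray(x, dtype=float)
    w = 1.0 + (x - 1.0) / 4.0
    term1 = np.sin(np.pi * w[0]) ** 2
    wi = w[:-1]
    term2 = np.sum((wi - 1.0) ** 2 * (1.0 + 10.0 * np.sin(np.pi * wi + 1.0) ** 2))
    term3 = (w[-1] - 1.0) ** 2 * (1.0 + np.sin(2.0 * np.pi * w[-1]) ** 2)
    return term1 + term2 + term3

f_min = levy(np.ones(5))     # numerically zero at the global minimizer
f_origin = levy(np.zeros(3))
```

The many sine terms make the surface highly multimodal, which is why it serves as a demanding function approximation target.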

Figure 1.

Average results on the Levy function approximation problem (full batch).

5.2. Results of MNIST Image Classification Problem

In large scale optimization problems, owing to the massive amount of data and the large number of parameters of the neural network model, training the neural network using full batch is not feasible. Hence a stochastic approach, in which the neural networks are trained using a relatively small subset of the training data, is more desirable, as it significantly reduces the computational and memory requirements. However, getting second order methods to work in a stochastic setting is a challenging task. A common problem in stochastic/mini-batch training is the sampling noise that arises because the gradients are estimated on different mini-batch samples at each iteration. In this section, we evaluate the performance of the proposed L-SR1-N and L-MoSR1 methods in the stochastic/mini-batch setting. We use the MNIST handwritten digit image classification problem for the evaluation. The MNIST dataset used here consists of 50,000 training and 10,000 test samples of 28 × 28 pixel images of handwritten digits from 0 to 9 that need to be classified. We evaluate the performance of this image classification task on a simple fully connected neural network and on the LeNet-5 architecture. In a stochastic setting, the conventional LBFGS method is known to be affected by sampling noise; to alleviate this issue, [16] proposed the oLBFGS method, which computes two gradients per iteration. We thus compare the performance of our proposed method against both the naive stochastic LBFGS (denoted here as oLBFGS-1) and the oLBFGS proposed in [16].

5.2.1. Results of MNIST on Fully Connected Neural Networks

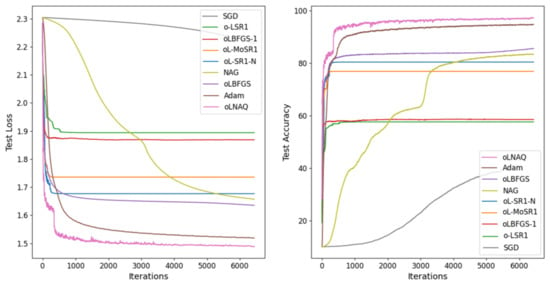

We first consider a simple fully connected neural network with two hidden layers with 100 and 50 hidden neurons, respectively. Thus, the neural network architecture used is 784-100-50-10. The hidden layers use the ReLU activation function and the loss function is the softmax cross-entropy loss. Figure 2 shows the performance comparison with batch size $b$ and limited memory size $m$. It can be observed that the second order quasi-Newton methods converge faster than the first order methods in the first 500 iterations. The results show that even though the stochastic L-SR1-N (oL-SR1-N) and stochastic L-MoSR1 (oL-MoSR1) do not perform the best on this small network, they significantly improve on the stochastic LSR1 (oLSR1) method and perform better than the oLBFGS-1 method. Since our aim is to investigate the effectiveness of Nesterov’s acceleration on SR1, we focus on the performance comparison of oLBFGS-1, oLSR1 and the proposed oL-SR1-N and oL-MoSR1 methods. As seen from Figure 2, oLBFGS-1 and oLSR1 do not further improve the test accuracy or test loss after 1000 iterations. However, incorporating Nesterov’s acceleration significantly improves the performance compared to the conventional oLSR1 and oLBFGS-1, thus confirming the effectiveness of Nesterov’s acceleration on LSR1 in the stochastic setting.

Figure 2.

Results of MNIST on the fully connected neural network with batch size $b$ and limited memory size $m$.

5.2.2. Results of MNIST on LeNet-5 Architecture

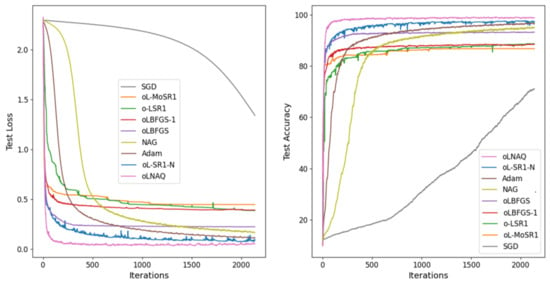

Next, we evaluate the performance of the proposed methods on a bigger network with convolutional layers. The LeNet-5 architecture consists of two sets of convolutional and average pooling layers, followed by a flattening convolutional layer, then two fully connected layers and finally a softmax classifier. The number of parameters is 61,706. Figure 3 shows the performance comparison when trained with batch size $b$ and limited memory size $m$. From the results, we observe that oLNAQ performs the best. However, the proposed oL-SR1-N method performs better than the first order SGD, NAG and Adam methods and the second order oLSR1, oLBFGS-1 and oLBFGS methods. This confirms that incorporating Nesterov’s gradient can accelerate and significantly improve the performance of the conventional LSR1 method, even in the stochastic setting.

Figure 3.

Results of MNIST on the LeNet-5 architecture with batch size $b$ and limited memory size $m$.

6. Conclusions and Future Works

Acceleration techniques such as Nesterov’s acceleration have been shown to speed up convergence, as in the cases of NAG accelerating GD and NAQ accelerating BFGS. Second order methods are said to achieve better convergence than first order methods and are more suitable for parallel and distributed implementations. While the BFGS quasi-Newton method is the most extensively studied method in the context of deep learning and neural networks, there are other methods in the quasi-Newton family, such as the Symmetric Rank-1 (SR1) method, which have been shown to be effective in optimization but have not been extensively studied in the context of neural networks. SR1 methods converge towards the true Hessian faster than BFGS and have computational advantages for sparse or partially separable problems [17]. Thus, investigating acceleration techniques for the SR1 method is significant. Nesterov’s acceleration has been shown to accelerate convergence, as in the case of NAQ improving the performance of BFGS. We investigate whether Nesterov’s acceleration can improve the performance of other quasi-Newton methods such as SR1, and compare the performance among second order Nesterov’s accelerated variants. To this end, we have introduced a new limited memory Nesterov accelerated symmetric rank-1 (L-SR1-N) method for training neural networks. We compared the results with LNAQ to show how Nesterov’s acceleration affects the two methods of the quasi-Newton family, namely BFGS and SR1. The results confirm that the performance of the LSR1 method can be significantly improved in both the full batch and the stochastic settings by introducing Nesterov’s accelerated gradient. Furthermore, the proposed L-SR1-N method is competitive with LNAQ and is substantially better than the first order methods and the second order LSR1 and LBFGS methods.
It is shown both theoretically and empirically that the proposed L-SR1-N converges to a stationary point. The results also show that, unlike in the full batch example, the performances of oL-SR1-N and oL-MoSR1 do not correlate well in the stochastic setting. This can be attributed to sampling noise, as in the case of oLBFGS-1 and oLBFGS. In the stochastic setting, the curvature information vector of oL-MoSR1 is approximated based on gradients computed on different mini-batch samples. This could introduce sampling noise and hence make oL-MoSR1 a poor approximation of the stochastic oL-SR1-N method. Future work could involve solving the sampling noise problem with multi-batch strategies such as in [36], and further improving the performance of L-SR1-N. Furthermore, a detailed study on larger networks and problems with different hyperparameter settings could test the limits of the proposed method.

Author Contributions

Conceptualization, S.I. and S.M.; methodology, S.I.; software, S.I.; formal analysis, S.I., S.M. and H.N.; validation, S.I., S.M., H.N. and T.K.; writing—original draft preparation, S.I.; writing—review and editing, S.I. and S.M.; resources, H.A.; supervision, H.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Source code is available on: https://github.com/indra-ipd/sr1-n (accessed on 23 December 2021).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bottou, L.; Cun, Y.L. Large scale online learning. Adv. Neural Inf. Process. Syst. 2004, 16, 217–224.

- Bottou, L. Large-scale machine learning with stochastic gradient descent. In Proceedings of COMPSTAT’2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 177–186.

- Robbins, H.; Monro, S. A stochastic approximation method. Ann. Math. Stat. 1951, 22, 400–407.

- Peng, X.; Li, L.; Wang, F.Y. Accelerating minibatch stochastic gradient descent using typicality sampling. IEEE Trans. Neural Networks Learn. Syst. 2019, 31, 4649–4659.

- Johnson, R.; Zhang, T. Accelerating stochastic gradient descent using predictive variance reduction. Adv. Neural Inf. Process. Syst. 2013, 26, 315–323.

- Nesterov, Y.E. A method for solving the convex programming problem with convergence rate O(1/k^2). Dokl. Akad. Nauk SSSR 1983, 269, 543–547.

- Duchi, J.; Hazan, E.; Singer, Y. Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 2011, 12, 2121–2159.

- Tieleman, T.; Hinton, G. Lecture 6.5-rmsprop: Divide the gradient by a running average of its recent magnitude. Neural Netw. Mach. Learn. 2012, 4, 26–31.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Martens, J. Deep learning via Hessian-free optimization. ICML 2010, 27, 735–742.

- Roosta-Khorasani, F.; Mahoney, M.W. Sub-sampled Newton methods I: Globally convergent algorithms. arXiv 2016, arXiv:1601.04737.

- Dennis, J.E., Jr.; Moré, J.J. Quasi-Newton methods, motivation and theory. SIAM Rev. 1977, 19, 46–89.

- Mokhtari, A.; Ribeiro, A. RES: Regularized stochastic BFGS algorithm. IEEE Trans. Signal Process. 2014, 62, 6089–6104.

- Mokhtari, A.; Ribeiro, A. Global convergence of online limited memory BFGS. J. Mach. Learn. Res. 2015, 16, 3151–3181.

- Byrd, R.H.; Hansen, S.L.; Nocedal, J.; Singer, Y. A stochastic quasi-Newton method for large-scale optimization. SIAM J. Optim. 2016, 26, 1008–1031.

- Schraudolph, N.N.; Yu, J.; Günter, S. A stochastic quasi-Newton method for online convex optimization. Artif. Intell. Stat. 2007, 26, 436–443.

- Byrd, R.H.; Khalfan, H.F.; Schnabel, R.B. Analysis of a symmetric rank-one trust region method. SIAM J. Optim. 1996, 6, 1025–1039.

- Brust, J.; Erway, J.B.; Marcia, R.F. On solving L-SR1 trust-region subproblems. Comput. Optim. Appl. 2017, 66, 245–266.

- Spellucci, P. A modified rank one update which converges Q-superlinearly. Comput. Optim. Appl. 2001, 19, 273–296.

- Modarres, F.; Hassan, M.A.; Leong, W.J. A symmetric rank-one method based on extra updating techniques for unconstrained optimization. Comput. Math. Appl. 2011, 62, 392–400.

- Khalfan, H.F.; Byrd, R.H.; Schnabel, R.B. A theoretical and experimental study of the symmetric rank-one update. SIAM J. Optim. 1993, 3, 1–24.

- Jahani, M.; Nazari, M.; Rusakov, S.; Berahas, A.S.; Takáč, M. Scaling up quasi-newton algorithms: Communication efficient distributed sr1. In Proceedings of the International Conference on Machine Learning, Optimization, and Data Science, Siena, Italy, 19–23 July 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 41–54.

- Berahas, A.; Jahani, M.; Richtarik, P.; Takáč, M. Quasi-Newton methods for machine learning: Forget the past, just sample. Optim. Methods Softw. 2021, 36, 1–37.

- Ninomiya, H. A novel quasi-Newton-based optimization for neural network training incorporating Nesterov’s accelerated gradient. Nonlinear Theory Its Appl. IEICE 2017, 8, 289–301.

- Mahboubi, S.; Indrapriyadarsini, S.; Ninomiya, H.; Asai, H. Momentum acceleration of quasi-Newton based optimization technique for neural network training. Nonlinear Theory Its Appl. IEICE 2021, 12, 554–574.

- Sutskever, I.; Martens, J.; Dahl, G.E.; Hinton, G.E. On the importance of initialization and momentum in deep learning. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; Volume 28, pp. 1139–1147.

- O’Donoghue, B.; Candes, E. Adaptive restart for accelerated gradient schemes. Found. Comput. Math. 2015, 15, 715–732.

- Nocedal, J.; Wright, S.J. Numerical Optimization, 2nd ed.; Springer Series in Operations Research; Springer: Berlin/Heidelberg, Germany, 2006.

- Mahboubi, S.; Indrapriyadarsini, S.; Ninomiya, H.; Asai, H. Momentum Acceleration of Quasi-Newton Training for Neural Networks. In Pacific Rim International Conference on Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2019; pp. 268–281.

- Byrd, R.H.; Nocedal, J.; Schnabel, R.B. Representations of quasi-Newton matrices and their use in limited memory methods. Math. Program. 1994, 63, 129–156.

- Lu, X.; Byrd, R.H. A Study of the Limited Memory Sr1 Method in Practice. Ph.D. Thesis, University of Colorado at Boulder, Boulder, CO, USA, 1996.

- Shultz, G.A.; Schnabel, R.B.; Byrd, R.H. A family of trust-region-based algorithms for unconstrained minimization with strong global convergence properties. SIAM J. Numer. Anal. 1985, 22, 47–67.

- Indrapriyadarsini, S.; Mahboubi, S.; Ninomiya, H.; Asai, H. A Stochastic Quasi-Newton Method with Nesterov’s Accelerated Gradient. In ECML-PKDD; Springer: Berlin/Heidelberg, Germany, 2019.

- Mahboubi, S.; Ninomiya, H. A Novel Training Algorithm based on Limited-Memory quasi-Newton method with Nesterov’s Accelerated Gradient in Neural Networks and its Application to Highly-Nonlinear Modeling of Microwave Circuit. IARIA Int. J. Adv. Softw. 2018, 11, 323–334.

- Indrapriyadarsini, S.; Mahboubi, S.; Ninomiya, H.; Takeshi, K.; Asai, H. A modified limited memory Nesterov’s accelerated quasi-Newton. In Proceedings of the NOLTA Society Conference, IEICE, Online, 6–8 December 2021.

- Crammer, K.; Kulesza, A.; Dredze, M. Adaptive regularization of weight vectors. Adv. Neural Inf. Process. Syst. 2009, 22, 414–422.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).