K-Means Clustering Algorithm Based on Chaotic Adaptive Artificial Bee Colony

Abstract

1. Introduction

2. Related Work

2.1. Artificial Bee Colony Algorithm

2.1.1. Initialization Stage

2.1.2. Employed Bee Stage

2.1.3. The Probability of Selecting the New Food Source

2.1.4. Scout Bee Stage

2.2. K-Means Clustering Algorithm

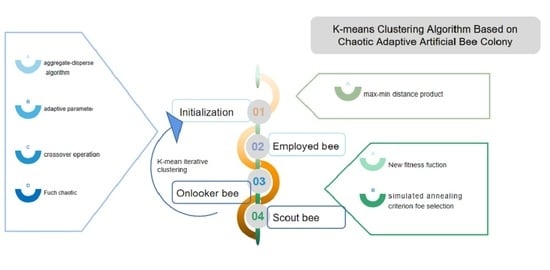

3. Chaotic Adaptive Artificial Bee Colony (CAABC) for Clustering

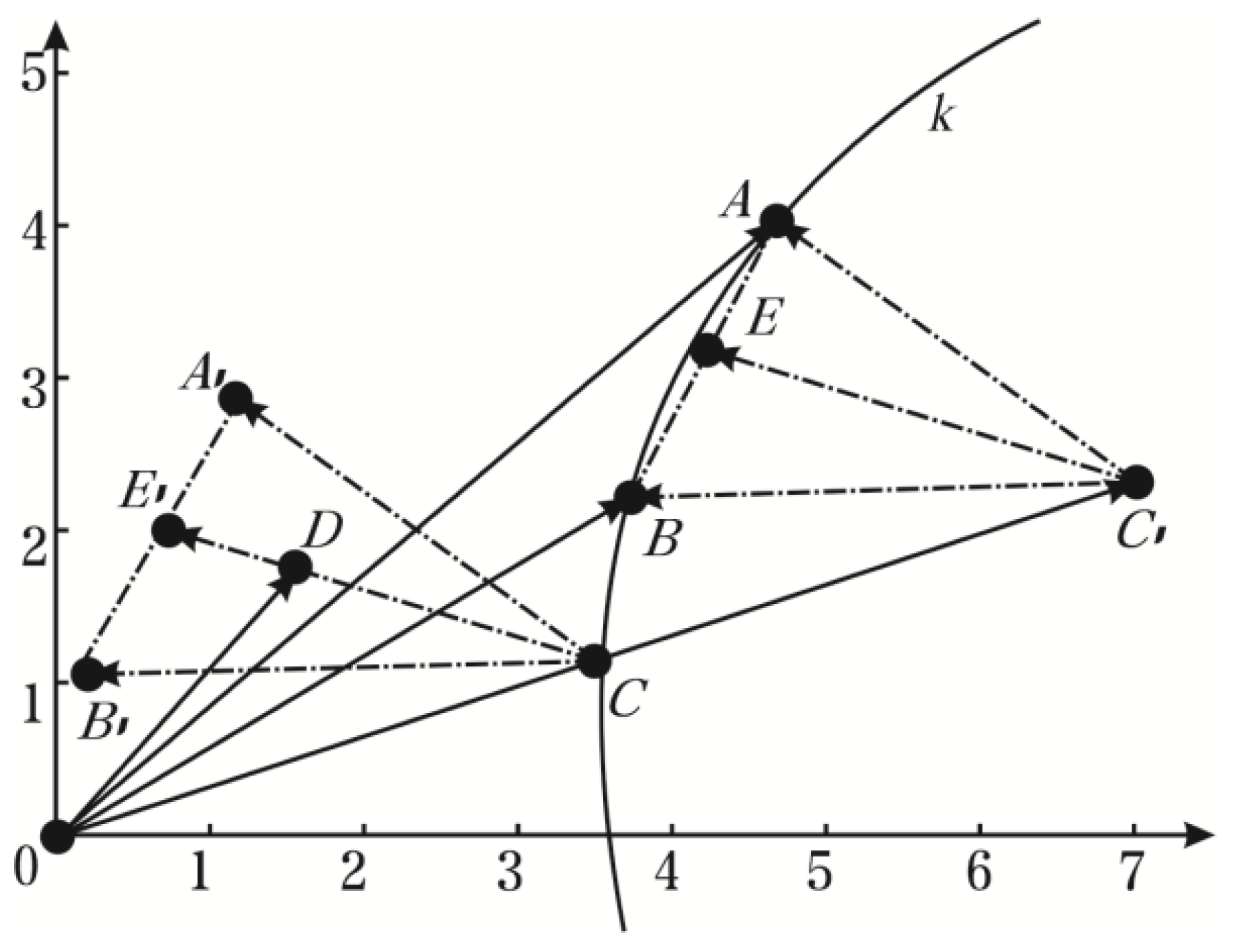

3.1. Max–Min Distance Product Algorithm

3.2. New Fitness Function

3.3. New Position Update Rules

3.3.1. Aggregate-Disperse Algorithm

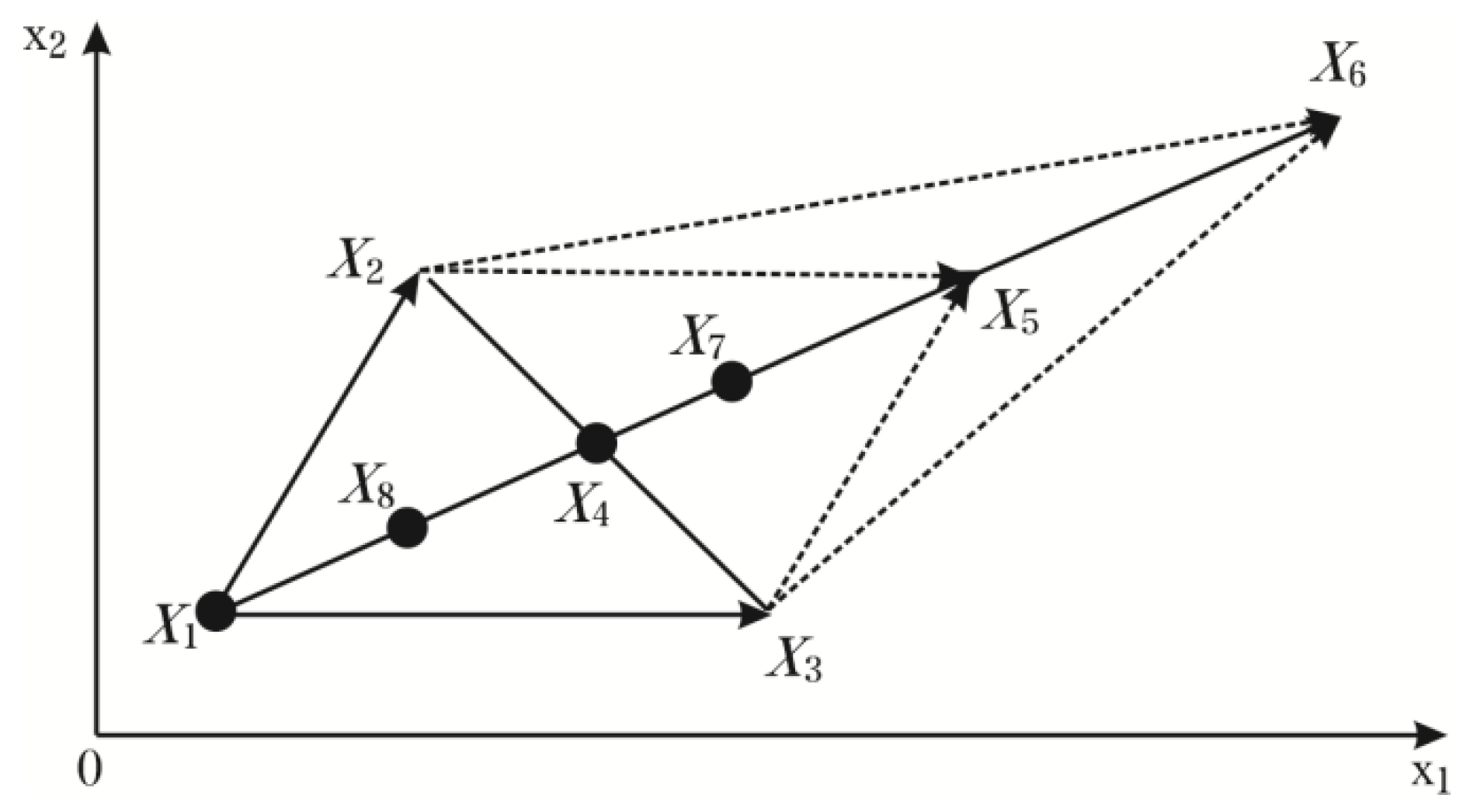

- The Simplex Method

- (1)

- means is the worst solution, and is the best one. The algorithm should search in the opposite direction to find the minimum. is the midpoint of , is on the extension line of , and is called the reflection point of with respect to , where is the reflection coefficient, which is usually set to 1. The geometric relationship is shown in Figure 2:

- (2)

- denotes that the search direction is correct, so the algorithm should keep going in this direction. Let . If , is replaced by to form a new simplex; otherwise is dropped.

- (3)

- means that the search is going in the right direction but does not need to expand.

- (4)

- indicates that has gone too far and needs to be retracted.

- (5)

- If , needs to be retracted toward .

- Aggregate and Disperse Operator

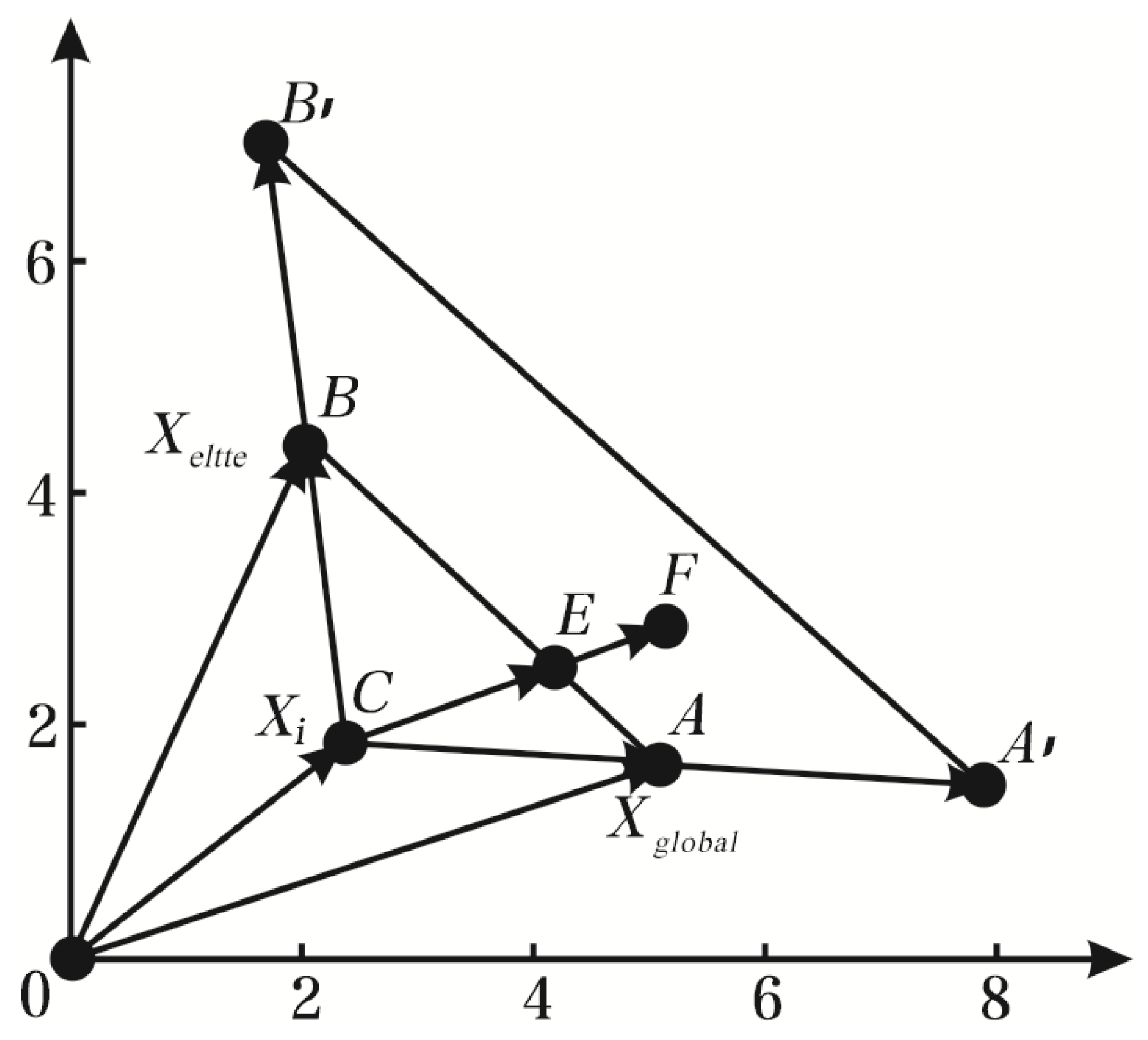
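The simplex-method steps listed earlier in this subsection (reflection, expansion, and the two retraction cases) follow the familiar Nelder-Mead pattern, so they can be illustrated with a minimal sketch. The function name `simplex_step`, the helper `along`, and the coefficient names `alpha`, `gamma`, and `beta` are hypothetical labels chosen for this illustration. The reflection coefficient of 1 comes from the text; the expansion coefficient 2, the contraction coefficient 0.5, and the final shrink-toward-best fallback are conventional Nelder-Mead defaults assumed here, not values taken from the paper.

```python
from typing import Callable, List

Point = List[float]


def simplex_step(f: Callable[[Point], float], simplex: List[Point],
                 alpha: float = 1.0, gamma: float = 2.0,
                 beta: float = 0.5) -> List[Point]:
    """One Nelder-Mead-style update of a simplex for minimising f.

    alpha = 1 follows the text; gamma and beta are assumed defaults.
    """
    pts = sorted(simplex, key=f)  # best vertex first, worst vertex last
    best, second_worst, worst = pts[0], pts[-2], pts[-1]
    dim = len(best)
    # Midpoint (centroid) of every vertex except the worst one.
    centroid = [sum(p[i] for p in pts[:-1]) / (len(pts) - 1) for i in range(dim)]

    def along(a: Point, b: Point, t: float) -> Point:
        """Return a + t * (b - a)."""
        return [ai + t * (bi - ai) for ai, bi in zip(a, b)]

    # Reflection: mirror the worst vertex through the centroid.
    reflected = along(centroid, worst, -alpha)
    f_r = f(reflected)

    if f_r < f(best):
        # Direction is promising: try to expand further along it.
        expanded = along(centroid, reflected, gamma)
        new = expanded if f(expanded) < f_r else reflected
    elif f_r < f(second_worst):
        # Right direction, but no expansion needed.
        new = reflected
    else:
        if f_r < f(worst):
            # Reflection overshot: retract it toward the centroid.
            new = along(centroid, reflected, beta)
        else:
            # Reflection is no better than the worst: retract the worst vertex.
            new = along(centroid, worst, beta)
        if f(new) >= min(f_r, f(worst)):
            # Assumed fallback: shrink every vertex halfway toward the best one.
            return [best] + [along(best, p, 0.5) for p in pts[1:]]
    return pts[:-1] + [new]
```

Calling `simplex_step` repeatedly on a starting simplex, for example three points in the plane for a two-variable objective, moves the simplex toward a local minimum. In the context of this section it would play the role of a local search operator, not a replacement for the bee colony search.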

3.3.2. Adaptive Adjustment

3.3.3. Genetic Crossover

3.4. New Chaotic Disturbance

3.5. New Selection Probability Based on SA

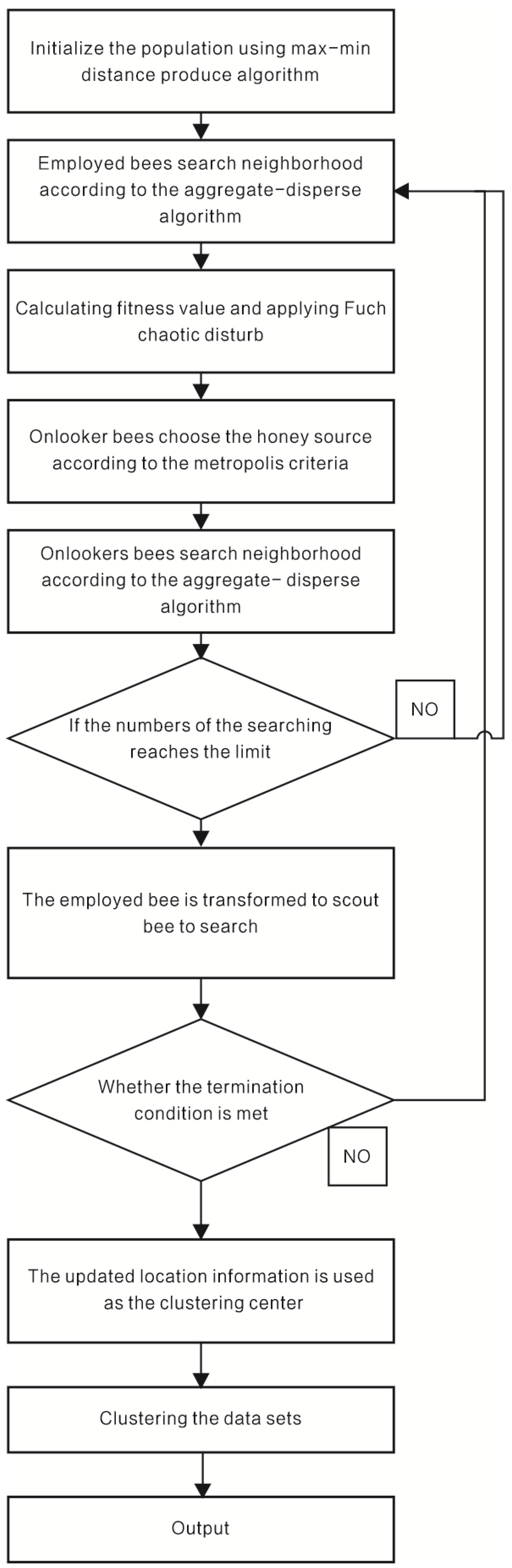

3.6. The Procedures of CAABC-K-means

- Initial parameters are set as follows: represents the population size, denotes the dimension of the space vector, is the maximum number of iterations, and cross parameter . is the threshold for the maximum number of optimization attempts, and the annealing coefficient . The initial population is obtained according to the max–min distance product algorithm.

- The fitness value is obtained according to Equation (3), and the solution then approaches the global optimal solution. At the same time, chaotic perturbations are added to the elite solution, which is selected randomly from the preponderant solution set, and to the infeasible solutions in the bottom 15%, according to Equation (16). The position is updated according to Equation (14) or Equation (15). Eventually, the location of the honey source is extended to the D-dimensional space. Whether the new solution is accepted depends on the Metropolis criterion.

- The onlooker bees perform the same operations as the employed bees, and the neighborhood search is carried out under the same criteria.

- The updated positions, obtained after all the onlooker bees have completed their search, are used as the clustering centers. A K-means iterative clustering is performed on the data set, and the clustering center of each class is refreshed according to the resulting partition.

- If for abandonment is reached, the employed bee determines whether the number of updates has reached the limit. If the limit is reached, the employed bee whose food source has been exhausted becomes a scout, and a new round of honey source searching begins.

- If the number of iterations has reached the maximum “”, the optimal solution is output; otherwise, the algorithm goes back to step 2.

- The K-means algorithm is executed to obtain the results.
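Two building blocks of the procedure above, the Metropolis acceptance test and the K-means refinement of candidate centers, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the CAABC-K-means algorithm itself: all function names are hypothetical, the initial centers are drawn at random instead of by the max–min distance product algorithm, a Gaussian jitter stands in for the position updates of Equations (14) and (15) and for the chaotic perturbation of Equation (16), the sum of squared errors stands in for the fitness of Equation (3), and a geometric cooling schedule is assumed for the annealing coefficient.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Sequence[float]


def sq_dist(a: Point, b: Point) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def sse(data: Sequence[Point], centers: Sequence[Point]) -> float:
    """Sum of squared distances to the nearest center (assumed cost)."""
    return sum(min(sq_dist(x, c) for c in centers) for x in data)


def kmeans_refine(data: Sequence[Point], centers: Sequence[Point]) -> List[List[float]]:
    """One K-means iteration: assign samples, then recompute the means."""
    groups: List[List[Point]] = [[] for _ in centers]
    for x in data:
        nearest = min(range(len(centers)), key=lambda k: sq_dist(x, centers[k]))
        groups[nearest].append(x)
    refined = []
    for c, g in zip(centers, groups):
        # An empty cluster keeps its previous center.
        refined.append([sum(col) / len(g) for col in zip(*g)] if g else list(c))
    return refined


def metropolis_accept(old_cost: float, new_cost: float,
                      temperature: float, rng: random.Random) -> bool:
    """Always accept improvements; accept worse moves with prob. exp(-delta/T)."""
    if new_cost <= old_cost:
        return True
    return rng.random() < math.exp(-(new_cost - old_cost) / temperature)


def hybrid_search(data: Sequence[Point], k: int, iterations: int = 50,
                  t0: float = 1.0, cooling: float = 0.9,
                  seed: int = 0) -> Tuple[List[List[float]], float]:
    rng = random.Random(seed)
    # Assumption: random seeding, not the max-min distance product algorithm.
    centers = [list(x) for x in rng.sample(list(data), k)]
    cost = sse(data, centers)
    best, best_cost = centers, cost
    t = t0
    for _ in range(iterations):
        # Assumption: Gaussian jitter replaces the paper's update equations.
        cand = [[v + rng.gauss(0.0, 0.1) for v in c] for c in centers]
        cand = kmeans_refine(data, cand)
        cand_cost = sse(data, cand)
        if metropolis_accept(cost, cand_cost, t, rng):
            centers, cost = cand, cand_cost
            if cost < best_cost:
                best, best_cost = centers, cost
        t *= cooling  # assumed geometric cooling schedule
    return best, best_cost
```

For a small data set, `hybrid_search(data, k)` returns the best centers found and their cost. Because the best solution is tracked separately, accepting an occasional worse candidate early on cannot degrade the final answer; that acceptance only gives the search a chance to leave a poor local optimum.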

4. Numerical Experiments

4.1. Test Environment and Parameter Settings

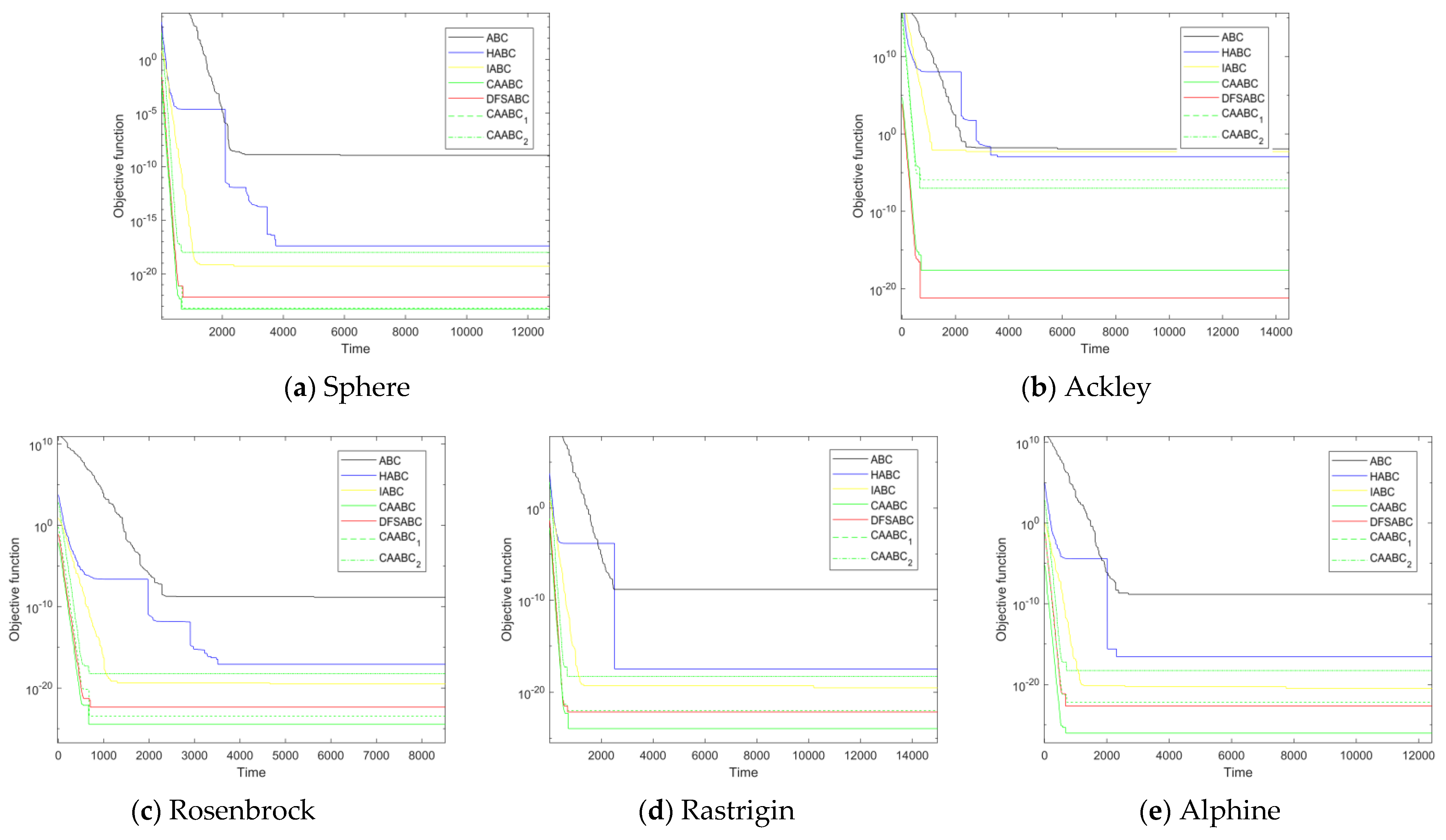

4.2. CAABC Performance Analysis

4.3. CAABC-K-means Performance Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Number | Equation | Name | Domain |
|---|---|---|---|
| 1 | | Alpine | |
| 2 | | Schwefel2.22 | |
| 3 | | Schwefel2.21 | |
| 4 | | QuarticWN | |
| 5 | | Quartic | |
| 6 | | SumPower | |
| 7 | | ShiftedSphere | |
| 8 | | Step | |
| 9 | | Zakharov | |
| 10 | | SumSquares | |
| 11 | | SumDifference | |
| 12 | | Schwefel2.26 | |
| 13 | | ShiftedRosenbrock | |
| 14 | | Schwefel1.2 | |
| 15 | | Ackley | |
| 16 | | Griewank | |
| 17 | | Rastrigin | |
| 18 | | Schaffer | |
| 19 | | Rosenbrock | |
| 20 | | Sphere | |

| Algorithm | Parameter |
|---|---|
| DFSABC | , , |
| IABC | , , , , |
| CAABC | , , |
| ABC | , , |
| HABC | M = 3, , , |
| PSO+K-means | , , , , |

| No. | Metric | ABC | IABC | HABC | CAABC | DFSABC_elite | CAABC1 | CAABC2 |
|---|---|---|---|---|---|---|---|---|
| f1 | Mean | 1.37 × 10^−16 | 2.10 × 10^−16 | 1.15 × 10^−15 | 3.17 × 10^−29 | 8.91 × 10^−25 | 4.55 × 10^−25 | 6.90 × 10^−16 |
| | Std. | 1.14 × 10^−16 | 5.39 × 10^−16 | 3.04 × 10^−15 | 2.39 × 10^−145 | 6.24 × 10^−25 | 5.19 × 10^−105 | 1.82 × 10^−15 |
| | CPU time | 25.23 | 8.45 | 6.25 | 4.03 | 6.49 | 5.36 | 7.02 |
| f2 | Mean | 8.94 × 10^−186 | 1.04 × 10^−30 | 3.76 × 10^−183 | 3.73 × 10^−195 | 7.98 × 10^−193 | 3.73 × 10^−195 | 2.26 × 10^−183 |
| | Std. | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time | 16.44 | 5.26 | 7.68 | 3.60 | 4.02 | 5.92 | 6.74 |
| f3 | Mean | 2.30 × 10^−180 | 1.99 × 10^−9 | 3.04 × 10^−179 | 1.45 × 10^−179 | 8.94 × 10^−175 | 6.25 × 10^−177 | 5.37 × 10^−175 |
| | Std. | 0.00 | 4.84 × 10^−3 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time | 15.50 | 6.64 | 7.65 | 4.67 | 5.63 | 7.45 | 8.34 |
| f4 | Mean | 7.52 × 10^−4 | 1.95 × 10^−4 | 2.42 × 10^−4 | 2.95 × 10^−4 | 6.92 × 10^−4 | 2.92 × 10^−4 | 5.61 × 10^−4 |
| | Std. | 4.28 × 10^−6 | 4.33 × 10^−6 | 4.80 × 10^−6 | 2.30 × 10^−6 | 3.07 × 10^−6 | 3.33 × 10^−6 | 4.72 × 10^−6 |
| | CPU time | 52.42 | 25.21 | 24.43 | 11.53 | 16.43 | 15.92 | 42.53 |
| f5 | Mean | 4.76 × 10^−228 | 6.01 × 10^−4 | 9.95 × 10^−217 | 1.49 × 10^−237 | 1.95 × 10^−230 | 1.82 × 10^−230 | 5.97 × 10^−217 |
| | Std. | 0.00 | 0.00 | 6.67 × 10^127 | 0.00 | 3.01 × 10^−197 | 1.01 × 10^−197 | 4.00 × 10^127 |
| | CPU time | 40.05 | 30.21 | 25.34 | 18.77 | 23.43 | 19.52 | 20.32 |
| f6 | Mean | 1.86 × 10^−189 | 7.00 × 10^−32 | 4.43 × 10^−198 | 4.83 × 10^−218 | 1.41 × 10^−199 | 4.00 × 10^−200 | 2.74 × 10^−198 |
| | Std. | 0.00 | 8.85 × 10^−32 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time | 59.32 | 31.46 | 29.56 | 10.42 | 28.45 | 25.64 | 35.28 |
| f7 | Mean | 3.47 × 10^−118 | 3.03 × 10^−6 | 3.06 × 10^−120 | 2.67 × 10^−124 | 8.68 × 10^−123 | 1.02 × 10^−124 | 1.84 × 10^−120 |
| | Std. | 7.77 × 10^−218 | 6.21 × 10^−7 | 9.18 × 10^−120 | 3.53 × 10^−124 | 8.33 × 10^−122 | 1.03 × 10^−122 | 5.56 × 10^−120 |
| | CPU time | 17.04 | 10.26 | 12.46 | 7.53 | 12.64 | 12.02 | 13.31 |
| f8 | Mean | 4.72 × 10^−121 | 8.83 × 10^−6 | 1.12 × 10^−123 | 2.04 × 10^−124 | 1.06 × 10^−123 | 2.04 × 10^−124 | 1.31 × 10^−123 |
| | Std. | 1.05 × 10^−124 | 2.19 × 10^−7 | 2.39 × 10^−123 | 2.93 × 10^−124 | 2.06 × 10^−123 | 2.83 × 10^−123 | 2.67 × 10^−123 |
| | CPU time | 5.66 | 3.61 | 4.73 | 2.63 | 3.76 | 3.32 | 4.47 |
| f9 | Mean | 3.81 × 10^−69 | 5.35 × 10^−26 | 3.73 × 10^−69 | 3.87 × 10^−69 | 3.20 × 10^−68 | 3.97 × 10^−69 | 2.14 × 10^−68 |
| | Std. | 9.36 × 10^−67 | 1.54 × 10^−27 | 9.69 × 10^−68 | 9.74 × 10^−69 | 9.74 × 10^−69 | 9.74 × 10^−67 | 1.17 × 10^−68 |
| | CPU time | 47.32 | 35.22 | 33.52 | 23.38 | 26.71 | 28.41 | 32.25 |
| f10 | Mean | 2.21 × 10^−5 | 2.73 × 10^−5 | 3.36 × 10^−258 | 1.09 × 10^−237 | 3.39 × 10^−236 | 1.22 × 10^−238 | 2.22 × 10^−235 |
| | Std. | 1.32 × 10^−6 | 1.90 × 10^−6 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time | 34.75 | 15.75 | 14.75 | 12.82 | 16.43 | 15.76 | 17.34 |
| f11 | Mean | 5.56 × 10^−184 | 7.83 × 10^−32 | 1.19 × 10^−190 | 1.00 × 10^−192 | 1.39 × 10^−192 | 1.99 × 10^−190 | 9.06 × 10^−181 |
| | Std. | 0.00 | 2.73 × 10^−33 | 0.00 | 2.97 × 10^−161 | 6.97 × 10^−159 | 3.57 × 10^−159 | 6.64 × 10^−144 |
| | CPU time | 24.69 | 15.67 | 14.39 | 11.71 | 14.04 | 13.52 | 15.43 |
| f12 | Mean | 4.32 × 10^−5 | 3.05 × 10^−5 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | Std. | 2.98 × 10^−6 | 3.63 × 10^−7 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time | 23.53 | 13.24 | 12.88 | 7.54 | 12.67 | 13.85 | 27.48 |
| f13 | Mean | 1.94 × 10^−238 | 2.61 × 10^−8 | 8.74 × 10^−286 | 1.65 × 10^−294 | 8.24 × 10^−286 | 1.44 × 10^−288 | 5.74 × 10^−285 |
| | Std. | 0.00 | 2.21 × 10^−9 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time | 25.65 | 19.56 | 17.55 | 10.59 | 17.66 | 18.95 | 19.05 |
| f14 | Mean | 1.36 × 10^−56 | 1.46 | 5.95 × 10^−102 | 3.50 × 10^−106 | 5.95 × 10^−103 | 4.90 × 10^−105 | 3.93 × 10^−102 |
| | Std. | 3.00 × 10^−56 | 1.29 × 10^−2 | 4.75 × 10^−102 | 6.99 × 10^−106 | 6.75 × 10^−106 | 7.99 × 10^−106 | 2.85 × 10^−102 |
| | CPU time | 18.64 | 15.45 | 15.04 | 6.24 | 8.75 | 9.77 | 13.94 |
| f15 | Mean | 8.86 × 10^−17 | 4.49 × 10^−16 | 2.21 × 10^−17 | 1.54 × 10^−20 | 9.81 × 10^−19 | 7.91 × 10^−20 | 1.39 × 10^−17 |
| | Std. | 1.15 × 10^−16 | 5.02 × 10^−17 | 3.12 × 10^−17 | 1.76 × 10^−20 | 3.45 × 10^−19 | 1.40 × 10^−19 | 1.89 × 10^−17 |
| | CPU time | 9.86 | 9.07 | 8.52 | 6.87 | 8.05 | 7.92 | 8.09 |
| f16 | Mean | 3.09 × 10^−16 | 2.83 × 10^−17 | 3.44 × 10^−17 | 3.30 × 10^−20 | 3.54 × 10^−19 | 3.58 × 10^−19 | 2.09 × 10^−17 |
| | Std. | 2.03 × 10^−17 | 3.53 × 10^−17 | 3.64 × 10^−17 | 4.16 × 10^−20 | 6.67 × 10^−18 | 6.63 × 10^−19 | 2.59 × 10^−17 |
| | CPU time | 20.34 | 14.35 | 17.45 | 12.86 | 15.99 | 17.63 | 18.63 |
| f17 | Mean | 1.81 × 10^−19 | 3.32 × 10^−17 | 7.86 × 10^−17 | 2.32 × 10^−20 | 2.09 × 10^−20 | 3.00 × 10^−18 | 4.72 × 10^−17 |
| | Std. | 2.59 × 10^−17 | 2.53 × 10^−17 | 3.34 × 10^−17 | 3.85 × 10^−20 | 3.97 × 10^−18 | 3.97 × 10^−18 | 2.24 × 10^−17 |
| | CPU time | 12.96 | 10.32 | 8.44 | 7.56 | 8.94 | 8.07 | 9.30 |
| f18 | Mean | 3.39 × 10^−12 | 1.47 × 10^−242 | 2.21 × 10^−242 | 6.81 × 10^−251 | 4.38 × 10^−247 | 4.38 × 10^−247 | 1.33 × 10^−242 |
| | Std. | 2.22 × 10^−13 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time | 22.37 | 20.73 | 19.55 | 18.83 | 20.08 | 20.44 | 21.56 |
| f19 | Mean | 1.36 × 10^−15 | 4.21 × 10^−17 | 2.09 × 10^−17 | 7.68 × 10^−21 | 6.59 × 10^−19 | 8.51 × 10^−20 | 1.29 × 10^−17 |
| | Std. | 8.02 × 10^−16 | 6.04 × 10^−17 | 3.42 × 10^−17 | 2.62 × 10^−25 | 3.75 × 10^−19 | 2.25 × 10^−19 | 2.08 × 10^−17 |
| | CPU time | 16.32 | 13.44 | 14.35 | 13.01 | 15.33 | 15.53 | 16.22 |
| f20 | Mean | 1.36 × 10^−15 | 6.25 × 10^−17 | 3.09 × 10^−17 | 7.68 × 10^−21 | 2.53 × 10^−18 | 1.51 × 10^−18 | 2.01 × 10^−17 |
| | Std. | 8.02 × 10^−16 | 8.84 × 10^−17 | 3.02 × 10^17 | 7.70 × 10^−21 | 3.94 × 10^−19 | 3.75 × 10^−19 | 1.84 × 10^−17 |
| | CPU time | 4.78 | 4.14 | 4.02 | 3.63 | 3.98 | 4.02 | 4.64 |

| No. | Metric | ABC | IABC | HABC | CAABC | DFSABC_elite | CAABC1 | CAABC2 |
|---|---|---|---|---|---|---|---|---|
| f1 | Mean | 1.52 × 10^−14 | 4.93 × 10^−17 | 4.53 × 10^−19 | 2.78 × 10^−22 | 1.04 × 10^−19 | 5.36 × 10^−20 | 3.61 × 10^−19 |
| | Std. | 2.99 × 10^−14 | 2.06 × 10^−17 | 6.22 × 10^−19 | 2.16 × 10^−22 | 6.17 × 10^−19 | 3.17 × 10^−19 | 8.03 × 10^−19 |
| | CPU time (s) | 34.36 | 11.55 | 12.25 | 8.09 | 9.99 | 7.56 | 11.03 |
| f2 | Mean | 3.92 × 10^−210 | 6.88 × 10^−61 | 7.14 × 10^−210 | 9.17 × 10^−253 | 5.45 × 10^−240 | 2.80 × 10^−240 | 4.63 × 10^−210 |
| | Std. | 0.00 | 1.70 × 10^−61 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time (s) | 25.40 | 11.23 | 13.60 | 5.69 | 7.32 | 8.92 | 11.04 |
| f3 | Mean | 4.54 × 10^−179 | 4.45 × 10^−2 | 5.96 × 10^−178 | 2.26 × 10^−181 | 8.32 × 10^−180 | 4.40 × 10^−180 | 3.92 × 10^−178 |
| | Std. | 0.00 | 5.29 × 10^−3 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time (s) | 27.50 | 13.64 | 15.63 | 8.97 | 10.43 | 12.55 | 15.32 |
| f4 | Mean | 2.03 × 10^−4 | 1.22 × 10^−4 | 6.04 × 10^−4 | 2.02 × 10^−4 | 2.04 × 10^−4 | 2.09 × 10^−4 | 2.09 × 10^−4 |
| | Std. | 3.36 × 10^−7 | 9.67 × 10^−7 | 9.54 × 10^−6 | 6.08 × 10^−7 | 2.11 × 10^−7 | 4.21 × 10^−7 | 6.32 × 10^−6 |
| | CPU time (s) | 80.47 | 39.21 | 37.43 | 20.33 | 26.42 | 30.02 | 34.53 |
| f5 | Mean | 2.52 × 10^−229 | 2.02 × 10^−4 | 4.08 × 10^−237 | 0.00 | 8.15 × 10^−242 | 4.19 × 10^−242 | 2.64 × 10^−237 |
| | Std. | 0.00 | 3.07 × 10^−5 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time (s) | 79.05 | 59.34 | 60.32 | 37.08 | 40.02 | 43.22 | 69.02 |
| f6 | Mean | 4.05 × 10^−195 | 3.05 × 10^−62 | 6.66 × 10^−205 | 1.47 × 10^−249 | 4.56 × 10^−224 | 2.35 × 10^−224 | 4.32 × 10^−205 |
| | Std. | 0.00 | 7.49 × 10^−63 | 6.02 × 10^−157 | 0.00 | 3.32 × 10^−189 | 1.71 × 10^−189 | 3.90 × 10^−157 |
| | CPU time (s) | 106.99 | 43.42 | 39.53 | 18.02 | 36.43 | 37.66 | 49.58 |
| f7 | Mean | 3.70 × 10^−121 | 4.25 × 10^−6 | 7.08 × 10^−120 | 1.49 × 10^−122 | 1.33 × 10^−120 | 6.92 × 10^−121 | 5.45 × 10^−120 |
| | Std. | 7.66 × 10^−121 | 2.20 × 10^−8 | 4.10 × 10^−119 | 3.08 × 10^−122 | 2.10 × 10^−119 | 1.08 × 10^−119 | 4.02 × 10^−119 |
| | CPU time (s) | 23.44 | 15.42 | 15.33 | 10.93 | 13.41 | 13.02 | 16.33 |
| f8 | Mean | 1.08 × 10^−120 | 3.56 × 10^−6 | 9.05 × 10^−119 | 2.19 × 10^−121 | 5.65 × 10^−120 | 3.02 × 10^−120 | 6.23 × 10^−119 |
| | Std. | 2.23 × 10^−120 | 6.24 × 10^−7 | 2.85 × 10^−118 | 4.54 × 10^−121 | 1.27 × 10^−120 | 8.87 × 10^−121 | 1.85 × 10^−118 |
| | CPU time (s) | 11.73 | 7.61 | 7.52 | 3.32 | 4.66 | 5.32 | 6.45 |
| f9 | Mean | 9.34 × 10^−254 | 1.37 × 10^−28 | 2.66 × 10^−258 | 2.54 × 10^−265 | 9.06 × 10^−258 | 4.66 × 10^−258 | 7.59 × 10^−258 |
| | Std. | 0.00 | 1.24 × 10^−29 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time (s) | 87.77 | 55.22 | 53.88 | 27.31 | 29.75 | 30.41 | 58.22 |
| f10 | Mean | 6.63 × 10^−178 | 5.40 × 10^−6 | 9.12 × 10^−123 | 1.29 × 10^−123 | 9.01 × 10^−123 | 5.30 × 10^−123 | 1.17 × 10^−122 |
| | Std. | 1.65 × 10^−128 | 1.38 × 10^−7 | 1.84 × 10^−122 | 2.33 × 10^−132 | 9.84 × 10^−123 | 6.26 × 10^−123 | 1.83 × 10^−122 |
| | CPU time (s) | 46.64 | 19.75 | 24.56 | 18.62 | 19.04 | 19.35 | 20.53 |
| f11 | Mean | 6.63 × 10^−178 | 8.67 × 10^−62 | 1.86 × 10^−204 | 4.01 × 10^−220 | 9.86 × 10^−214 | 5.07 × 10^−214 | 1.21 × 10^−204 |
| | Std. | 1.65 × 10^−128 | 6.90 × 10^−63 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time (s) | 31.72 | 23.42 | 19.34 | 18.21 | 20.35 | 20.42 | 23.22 |
| f12 | Mean | 3.98 × 10^−5 | 1.83 × 10^−5 | 2.04 × 10^−5 | 2.07 × 10^−5 | 2.31 × 10^−5 | 2.25 × 10^−5 | 2.82 × 10^−5 |
| | Std. | 8.19 × 10^−6 | 3.31 × 10^−6 | 3.87 × 10^−7 | 3.84 × 10^−5 | 5.99 × 10^−7 | 5.06 × 10^−7 | 9.00 × 10^−8 |
| | CPU time (s) | 34.13 | 18.34 | 17.15 | 12.66 | 17.68 | 18.97 | 20.88 |
| f13 | Mean | 6.10 × 10^−235 | 1.32 × 10^−8 | 6.29 × 10^−236 | 1.08 × 10^−241 | 5.96 × 10^−238 | 3.07 × 10^−238 | 4.11 × 10^−236 |
| | Std. | 0.00 | 1.12 × 10^−9 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time (s) | 35.95 | 30.66 | 27.64 | 20.59 | 28.43 | 27.05 | 28.43 |
| f14 | Mean | 8.43 × 10^−136 | 2.00 | 4.13 × 10^−103 | 1.74 × 10^−145 | 5.13 × 10^−131 | 2.64 × 10^−131 | 2.68 × 10^−103 |
| | Std. | 8.00 × 10^−9 | 5.62 × 10^−2 | 8.54 × 10^−103 | 3.61 × 10^−145 | 8.04 × 10^−113 | 4.14 × 10^−113 | 5.53 × 10^−103 |
| | CPU time (s) | 28.14 | 16.85 | 19.44 | 10.32 | 12.75 | 12.42 | 15.64 |
| f15 | Mean | 9.96 × 10^−18 | 2.24 × 10^−18 | 2.57 × 10^−1 | 1.54 × 10^−20 | 8.96 × 10^−21 | 4.92 × 10^−4 | 1.67 × 10^−1 |
| | Std. | 1.15 × 10^−16 | 2.24 × 10^−2 | 5.76 × 10^−1 | 1.76 × 10^−20 | 9.96 × 10^−9 | 5.12 × 10^−9 | 3.73 × 10^−1 |
| | CPU time (s) | 12.43 | 10.77 | 10.42 | 7.04 | 9.65 | 7.32 | 10.04 |
| f16 | Mean | 3.09 × 10^−16 | 5.83 × 10^−17 | 1.68 × 10^−17 | 2.17 × 10^−22 | 4.97 × 10^−18 | 2.56 × 10^−18 | 1.41 × 10^−17 |
| | Std. | 2.03 × 10^−17 | 3.93 × 10^−17 | 1.55 × 10^−17 | 2.24 × 10^−22 | 1.35 × 10^−19 | 6.96 × 10^−20 | 1.01 × 10^−17 |
| | CPU time (s) | 30.03 | 27.44 | 26.42 | 24.60 | 25.56 | 25.60 | 29.33 |
| f17 | Mean | 3.06 × 10^−16 | 3.33 × 10^−17 | 6.94 × 10^−17 | 3.84 × 10^−24 | 6.44 × 10^−20 | 3.31 × 10^−20 | 4.50 × 10^−17 |
| | Std. | 2.59 × 10^−17 | 2.53 × 10^17 | 1.41 × 10^−16 | 4.91 × 10^−24 | 3.43 × 10^−22 | 1.79 × 10^−22 | 9.14 × 10^−17 |
| | CPU time (s) | 18.06 | 17.32 | 15.84 | 15.01 | 17.33 | 16.87 | 18.05 |
| f18 | Mean | 1.56 × 10^−12 | 2.00 × 10^−216 | 5.33 × 10^−242 | 2.09 × 10^−248 | 5.03 × 10^−245 | 2.59 × 10^−245 | 3.46 × 10^−242 |
| | Std. | 2.05 × 10^−13 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | CPU time (s) | 27.56 | 23.77 | 23.05 | 22.85 | 22.98 | 23.05 | 24.63 |
| f19 | Mean | 3.76 × 10^−15 | 7.03 × 10^−17 | 6.20 × 10^−18 | 6.00 × 10^−24 | 3.42 × 10^−19 | 1.76 × 10^−19 | 4.24 × 10^−18 |
| | Std. | 6.25 × 10^−15 | 6.66 × 10^−17 | 8.07 × 10^−18 | 6.91 × 10^−24 | 3.07 × 10^−21 | 1.58 × 10^−21 | 5.23 × 10^−18 |
| | CPU time (s) | 21.93 | 17.46 | 17.55 | 17.02 | 17.39 | 17.53 | 18.27 |
| f20 | Mean | 3.82 × 10^−16 | 3.21 × 10^−17 | 1.24 × 10^−17 | 4.21 × 10^−25 | 3.77 × 10^−21 | 1.94 × 10^−21 | 8.04 × 10^−18 |
| | Std. | 1.63 × 10^−16 | 2.36 × 10^−17 | 5.71 × 10^−17 | 4.39 × 10^−25 | 3.01 × 10^−19 | 1.55 × 10^−19 | 3.71 × 10^−17 |
| | CPU time (s) | 9.36 | 4.55 | 4.23 | 3.04 | 3.99 | 3.54 | 5.03 |

| Datasets | Samples | Dimensions | Classes |
|---|---|---|---|
| Iris | 150 | 4 | 3 |
| Balance-scale | 625 | 4 | 3 |
| Glass | 214 | 10 | 6 |
| Wine | 178 | 13 | 3 |
| ECOLI | 336 | 7 | 8 |
| Abalone | 4177 | 8 | 28 |
| Musk | 6598 | 166 | 2 |
| Pendigits | 10,992 | 16 | 10 |
| Skin Seg. | 245,057 | 3 | 2 |
| CMC | 1473 | 9 | 3 |
| Cancer | 683 | 9 | 2 |

| Datasets | K-Means | ABC+K-Means | PSO+K-Means | CAABC-K-Means | PAM | GPAM |
|---|---|---|---|---|---|---|
| Iris | 70.22 | 71.32 | 73.32 | 79.08 | 77.39 | 79.06 |
| Balance-scale | 52.33 | 53.21 | 56.33 | 59.03 | 53.49 | 57.83 |
| Glass | 39.44 | 40.32 | 40.36 | 49.32 | 48.23 | 49.03 |
| Wine | 38.09 | 40.30 | 53.20 | 57.20 | 56.32 | 57.04 |
| ECOLI | 57.00 | 57.30 | 57.40 | 60.32 | 56.03 | 58.33 |
| Abalone | 49.00 | 47.30 | 49.50 | 68.09 | 48.22 | 57.34 |
| Musk | 50.02 | 53.40 | 59.32 | 59.98 | 47.56 | 54.99 |
| Pendigits | 40.05 | 40.78 | 49.03 | 58.93 | 40.00 | 56.09 |
| Skin Seg. | 78.00 | 79.03 | 83.01 | 88.7 | 63.04 | 80.37 |
| CMC | 68.04 | 79.03 | 83.07 | 89.00 | 69.34 | 74.95 |
| Cancer | 53.99 | 58.34 | 56.83 | 59.99 | 57.09 | 59.03 |

| Datasets | K-Means | ABC+K-Means | PSO+K-Means | CAABC-K-Means | PAM | GPAM |
|---|---|---|---|---|---|---|
| Iris | 50.28 | 54.32 | 53.02 | 59.06 | 58.99 | 59.00 |
| Balance-scale | 50.33 | 52.29 | 51.03 | 60.01 | 54.04 | 58.32 |
| Glass | 60.44 | 59.32 | 58.96 | 69.32 | 68.33 | 69.08 |
| Wine | 89.10 | 89.19 | 90.43 | 93.11 | 92.84 | 92.94 |
| ECOLI | 83.76 | 84.30 | 85.29 | 89.04 | 86.00 | 87.04 |
| Abalone | 70.99 | 72.02 | 74.91 | 84.11 | 84.01 | 84.05 |
| Musk | 60.73 | 69.93 | 68.34 | 70.00 | 68.35 | 69.68 |
| Pendigits | 50.82 | 50.01 | 59.11 | 63.47 | 53.06 | 62.44 |
| Skin Seg. | 70.93 | 72.38 | 80.93 | 81.02 | 60.99 | 75.64 |
| CMC | 59.02 | 59.24 | 59.15 | 62.03 | 58.37 | 60.75 |
| Cancer | 49.03 | 53.04 | 53.75 | 59.23 | 57.98 | 59.00 |

| Datasets | K-Means | ABC+K-Means | PSO+K-Means | CAABC-K-Means | PAM | GPAM |
|---|---|---|---|---|---|---|
| Iris | 0.35 | 0.49 | 0.27 | 0.23 | 0.33 | 0.25 |
| Balance-scale | 0.78 | 0.79 | 0.7 | 0.49 | 0.79 | 0.52 |
| Glass | 0.98 | 1.03 | 0.9 | 0.79 | 1.03 | 0.96 |
| Wine | 1.06 | 1.79 | 0.99 | 0.61 | 1.11 | 0.85 |
| ECOLI | 0.73 | 0.95 | 0.7 | 0.57 | 0.95 | 0.60 |
| Abalone | 3.90 | 3.07 | 2.33 | 0.93 | 7.99 | 0.95 |
| Musk | 2.02 | 1.68 | 1.42 | 0.61 | 10.04 | 3.02 |
| Pendigits | 2.97 | 2.03 | 1.93 | 0.38 | 9.73 | 1.04 |
| Skin Seg. | 3.01 | 2.93 | 4.09 | 0.46 | 6.83 | 1.97 |
| CMC | 1.92 | 1.31 | 1.77 | 0.58 | 2.98 | 0.82 |
| Cancer | 0.34 | 0.32 | 0.28 | 0.15 | 0.44 | 0.19 |

| Datasets | K-Means P | K-Means R | K-Means F | ABC+K-Means P | ABC+K-Means R | ABC+K-Means F | PSO+K-Means P | PSO+K-Means R | PSO+K-Means F | CAABC-K-Means P | CAABC-K-Means R | CAABC-K-Means F | PAM P | PAM R | PAM F | GPAM P | GPAM R | GPAM F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Iris | 0.90 | 0.88 | 0.90 | 0.90 | 0.90 | 0.90 | 0.91 | 0.92 | 0.92 | 0.99 | 0.97 | 0.98 | 0.94 | 0.96 | 0.94 | 0.97 | 0.97 | 0.97 |
| Balance-scale | 0.93 | 0.92 | 0.93 | 0.91 | 0.92 | 0.93 | 0.94 | 0.95 | 0.95 | 1.00 | 1.00 | 1.00 | 0.98 | 0.96 | 0.94 | 0.98 | 0.99 | 0.94 |
| Glass | 0.84 | 0.83 | 0.85 | 0.84 | 0.83 | 0.80 | 0.82 | 0.83 | 0.8 | 0.90 | 0.91 | 0.95 | 0.82 | 0.83 | 0.8 | 0.9 | 0.88 | 0.82 |
| Wine | 0.93 | 0.94 | 0.94 | 0.93 | 0.93 | 0.94 | 0.97 | 0.98 | 0.96 | 1.00 | 1.00 | 1.00 | 0.96 | 0.96 | 0.92 | 0.98 | 0.96 | 0.94 |
| ECOLI | 0.76 | 0.77 | 0.79 | 0.80 | 0.84 | 0.83 | 0.82 | 0.84 | 0.83 | 0.89 | 0.89 | 0.89 | 0.80 | 0.84 | 0.83 | 0.83 | 0.85 | 0.84 |
| Abalone | 0.29 | 0.34 | 0.32 | 0.23 | 0.24 | 0.22 | 0.19 | 0.24 | 0.22 | 0.49 | 0.39 | 0.42 | 0.22 | 0.24 | 0.22 | 0.29 | 0.34 | 0.32 |
| Musk | 0.73 | 0.72 | 0.70 | 0.63 | 0.60 | 0.67 | 0.57 | 0.52 | 0.60 | 0.83 | 0.82 | 0.80 | 0.53 | 0.50 | 0.50 | 0.63 | 0.72 | 0.70 |
| Pendigits | 0.70 | 0.72 | 0.73 | 0.77 | 0.72 | 0.75 | 0.78 | 0.77 | 0.78 | 0.83 | 0.83 | 0.83 | 0.70 | 0.70 | 0.73 | 0.79 | 0.79 | 0.73 |
| Skin Seg. | 0.66 | 0.63 | 0.64 | 0.76 | 0.72 | 0.74 | 0.60 | 0.63 | 0.64 | 0.88 | 0.85 | 0.85 | 0.66 | 0.63 | 0.64 | 0.71 | 0.73 | 0.71 |
| CMC | 0.79 | 0.74 | 0.78 | 0.82 | 0.82 | 0.82 | 0.79 | 0.79 | 0.79 | 0.94 | 0.94 | 0.94 | 0.79 | 0.74 | 0.76 | 0.83 | 0.84 | 0.83 |
| Cancer | 0.69 | 0.69 | 0.69 | 0.73 | 0.73 | 0.73 | 0.79 | 0.76 | 0.77 | 0.90 | 0.89 | 0.89 | 0.69 | 0.69 | 0.59 | 0.83 | 0.79 | 0.89 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jin, Q.; Lin, N.; Zhang, Y. K-Means Clustering Algorithm Based on Chaotic Adaptive Artificial Bee Colony. Algorithms 2021, 14, 53. https://doi.org/10.3390/a14020053