Abstract

Chimp Optimization Algorithm (ChOA), a novel meta-heuristic algorithm, has been proposed in recent years. It divides the population into four different levels for the purpose of hunting. However, it still has some defects that can cause it to fall into local optima. To overcome these defects, an Enhanced Chimp Optimization Algorithm (EChOA) is developed in this paper. Highly Disruptive Polynomial Mutation (HDPM) is introduced to further explore the population space and increase population diversity. Then, Spearman’s rank correlation coefficient between the chimps with the highest fitness and those with the lowest fitness is calculated. To avoid local optima, the Beetle Antennae Search Algorithm (BAS) is applied to the chimps with low fitness values, giving them a form of visual ability. Together, these three strategies enhance the exploration and exploitation abilities of the population. On this basis, this paper proposes an EChOA-SVM model, which optimizes the SVM parameters while selecting features, so that the highest possible classification accuracy is achieved with as few features as possible. To verify its effectiveness, the proposed method is compared with seven common methods, including the original algorithm. Seventeen benchmark datasets from the UCI machine learning repository are used to evaluate the accuracy, number of features, and fitness of these methods. Experimental results show that the classification accuracy of the proposed method is better than that of the other methods on most data sets, and that it requires fewer features than the other algorithms.

1. Introduction

As society becomes increasingly digitalized, how to extract useful information effectively from large and complex data has become a focus of research in recent years. Machine learning is one of the important research fields for recognizing information and patterns in data sets [1], and it is widely used because of its strong data processing ability. Machine learning grew out of computer science [2] and is a multidisciplinary field: it draws on probability theory, statistics, approximation theory, and complex algorithms, and it can be used to design efficient and accurate prediction algorithms [3]. According to the learning mode, it can be divided into supervised learning and unsupervised learning [4]. The Support Vector Machine (SVM) is one of the most widely used supervised learning algorithms, and Vapnik and other researchers laid its foundations [5]. SVM is widely used in pattern recognition [6,7], text recognition [8], biomedicine [9,10,11], medical imaging [12], anomaly detection [13], and other fields. SVM separates the feature space into two classes and provides a non-parametric method for finding the decision boundary [14]. It has shown excellent performance on many problems [15]: SVMs can improve generalization performance and computational efficiency, reduce running time, and produce very accurate classification models [16,17]. SVMs can solve both linear and nonlinear classification problems. When a data set is not linearly separable, a kernel function is usually used to map the data into a higher-dimensional feature space, which turns the nonlinear problem into a linear one. An optimal hyperplane can then be constructed in that feature space so that it can be divided into two classes more easily [18]. However, when machine learning is applied to high-dimensional data sets, problems such as noise and redundant data arise [19].
In this case, feature selection [20] can be used to reduce the number of features.
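The kernel mapping mentioned above can be made concrete with a short example. The paper does not say at this point which kernel it uses. As an assumption, the sketch below uses the Gaussian (RBF) kernel, a common choice whose width parameter plays the role of the kernel parameter g tuned later. It only computes kernel values and does not train an SVM.

```python
import math
from typing import List, Sequence, Tuple

def rbf_kernel(x: Sequence[float], z: Sequence[float], g: float) -> float:
    """Gaussian (RBF) kernel K(x, z) = exp(-g * ||x - z||^2).

    g > 0 sets the kernel width: a larger g gives a narrower, more local kernel.
    """
    sq_dist = sum((xi - zi) ** 2 for xi, zi in zip(x, z))
    return math.exp(-g * sq_dist)

def gram_matrix(points: List[Tuple[float, ...]], g: float) -> List[List[float]]:
    """Pairwise kernel values; an SVM uses them in place of explicit inner
    products in the implicit higher-dimensional feature space."""
    return [[rbf_kernel(p, q, g) for q in points] for p in points]

# XOR-style points: no straight line separates {(0,0), (1,1)} from {(0,1), (1,0)}
# in 2-D, but in the RBF feature space a separating hyperplane exists.
xor_points = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0), (1.0, 0.0)]
K = gram_matrix(xor_points, g=1.0)
for row in K:
    print([round(v, 3) for v in row])
```

The diagonal is always 1, and off-diagonal values shrink as points move apart. This is why g has such a strong influence on how flexible the resulting decision boundary is.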

Feature selection selects the optimal feature subset from the original data set [21]. According to how the selection is performed, feature selection methods can be divided into three types: filter, wrapper, and embedded [22,23,24]. The wrapper-based approach is used in this article. The wrapper method, which relies on a classifier [25], is generally more effective than the other two, but it is also more computationally intensive [26]. The filter method [27] uses various indicators to evaluate and rank features according to their discriminant attributes [28], and then selects the most informative subset [29]. The main idea of the wrapper approach is to treat subset selection as a search optimization problem: it generates different feature combinations, evaluates each of them with the classifier, and compares them with one another. The wrapper method is often used in feature selection because of the quality of its results and because it interacts directly with the classifier [30]. It can also be effectively combined with meta-heuristic optimization algorithms to achieve better results in practice.
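The short Python example below makes the wrapper's loop of generating, evaluating, and comparing subsets concrete with a minimal exhaustive search. The `evaluate` callback stands in for "train the classifier on these features and return its accuracy". `toy_accuracy` is a hypothetical scorer, not real data. Because the search visits all 2^N − 1 non-empty subsets, it is only feasible for a handful of features. This is why meta-heuristics such as ChOA are used to search the subset space instead.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Tuple

def wrapper_search(n_features: int,
                   evaluate: Callable[[FrozenSet[int]], float]) -> Tuple[FrozenSet[int], float]:
    """Exhaustive wrapper feature selection.

    Every non-empty subset is generated, scored by `evaluate`, and compared
    with the best subset found so far. Subsets are visited from small to
    large, and a strict '>' keeps the earlier (smaller) subset on ties.
    """
    best_subset, best_score = frozenset(), float("-inf")
    for size in range(1, n_features + 1):
        for subset in combinations(range(n_features), size):
            score = evaluate(frozenset(subset))
            if score > best_score:
                best_subset, best_score = frozenset(subset), score
    return best_subset, best_score

# Hypothetical stand-in for "train an SVM and measure accuracy":
# features 0 and 2 are informative, and every extra feature costs a little.
def toy_accuracy(subset: FrozenSet[int]) -> float:
    return 0.5 + 0.2 * (0 in subset) + 0.2 * (2 in subset) - 0.01 * len(subset)

print(wrapper_search(4, toy_accuracy))
```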

In recent years, many researchers have combined optimization algorithms with SVM to solve problems. Following this line of work, this paper combines ChOA with SVM for feature selection and parameter optimization. ChOA is a meta-heuristic optimization algorithm proposed by Khishe and Mosavi in 2019 [31]. It is inspired by the hierarchical hunting behavior of chimps in nature and was designed to alleviate slow convergence and entrapment in local optima when solving high-dimensional problems. However, ChOA still has some shortcomings. First, the population diversity of the algorithm is insufficient in the initial stage. Second, there is a risk of falling into a local optimum in the final search stage. Therefore, an Enhanced Chimp Optimization Algorithm is proposed in this paper. Three strategies are introduced successively: highly disruptive polynomial mutation [32], Spearman’s rank correlation coefficient, and the Beetle Antennae Search Algorithm [33]. In the initial stage, HDPM is used to increase the diversity of the population, which improves the exploration ability of the algorithm. Because each chimp's position update is determined by the chimps at every level, this paper improves the global search ability of the population by improving the position updating of lower-level chimps. The algorithm first uses Spearman’s rank correlation coefficient to measure the distance between low-level and high-level chimps. For the lower-level chimps that are far from the higher-level chimps, the Beetle Antennae Search Algorithm is introduced. It gives the chimps with low fitness a form of visual ability, so that they can change their movement direction according to the surrounding environment. This strategy improves both the local and global search abilities of the chimps with lower fitness. On this basis, this paper proposes an EChOA-SVM model.
This model is used for feature selection and parameter optimization at the same time to obtain good classification accuracy and performance. The main contributions of this paper are summarized as follows:

- An Enhanced Chimp Optimization Algorithm is proposed to solve the shortcomings of the ChOA and make it better applied to feature selection problems.

- The HDPM strategy is introduced in the initial stage to enhance population diversity.

- Spearman’s rank correlation coefficient helps to identify the chimps that need to be improved. Then BAS is introduced to improve their position-updating ability.

- The EChOA-SVM model is used for feature selection and SVM parameter optimization simultaneously. The model is evaluated on 17 benchmark data sets from the UCI machine learning repository [34]. To verify the effectiveness of this method, it is compared with seven optimization algorithms: ChOA [31], GWO [35], WOA [36], ALO [37], GOA [38], MFO [39], and SSA [40].

2. Literature Review

Optimization algorithms have been widely used in fields such as medicine, multi-objective optimization, data classification, feature selection, and Support Vector Machine optimization. Zhao and Zhang proposed a Learning-based Evolutionary Multi-objective Algorithm [41]. In a comparison of five algorithms, it was significantly superior to the others in terms of convergence and approximation of the Pareto front. Dulebenets proposed an Adaptive Polyploid Memetic Algorithm to solve the vehicle scheduling problem at cross-docking terminals (CDTs) [42], which can support sound operational planning of CDTs. Liu, Wang and Huang proposed an Alternative Algorithm for multi-objective optimization problems [43]. It was shown to outperform other many-objective evolutionary algorithms and can easily be extended to constrained multi-objective optimization problems. Junayed et al. established an optimization model and a solution algorithm to optimize the factory-in-a-box supply chain [44]. Gianni et al. used machine learning methods to establish specific rules or formulas that distinguish bacterial from viral meningitis [45], and the method even achieved 100% accuracy in detecting bacterial meningitis. Panda and Majhi used the Salp Swarm Algorithm to train a Multilayer Perceptron for data classification [46], and this method showed clear advantages over other classical optimization algorithms.

This paper mainly studies the application of optimization algorithms to feature selection and support vector machine optimization. Feature selection selects the optimal feature subset from the original data set and can be regarded as an optimization problem [47]. To obtain better classification accuracy, the SVM must be trained with optimal parameters on the selected feature subset. Conversely, the choice of feature subset also affects the optimal SVM parameters. Therefore, to obtain ideal classification accuracy, both feature selection and SVM parameter optimization are required [48]. Huang and Wang [49] proposed a GA-based method for simultaneous feature selection and SVM parameter optimization, which achieves higher classification accuracy with fewer features than other methods. Shih-Wei Lin et al. [50] proposed a feature selection and parameter optimization method based on simulated annealing (SA) and compared it with grid search. The results showed that the proposed method could significantly improve classification accuracy. Heming Jia and Kangjian Sun proposed an IBMO-SVM classification model [51], which helps SVM find the optimal feature subset and parameters at the same time. Compared with other optimization algorithms, this model performs well on both low- and high-dimensional data sets. The EChOA-SVM model proposed in this paper is inspired by these methods.

3. Chimp Optimization Algorithm (ChOA)

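ChOA is a mathematical model based on the diverse intelligence of chimps in group hunting [31]. Hunting consists of four actions (driving, blocking, chasing, and attacking), which are carried out by four types of chimps: drivers, barriers, chasers, and attackers. The four hunting actions are completed in two stages. The first stage is exploration, which includes driving, blocking, and chasing the prey. The second stage is exploitation, in which the prey is attacked. Driving and chasing are represented by Equations (1) and (2).

$$d = \left| c \cdot x_{prey}(t) - m \cdot x_{chimp}(t) \right| \tag{1}$$

$$x_{chimp}(t+1) = x_{prey}(t) - a \cdot d \tag{2}$$

where $x_{prey}$ is the vector of prey position, $x_{chimp}$ is the vector of chimp position, $t$ is the number of the current iteration, and $a$, $c$, and $m$ are coefficient vectors obtained through Equations (3)–(5).

$$a = 2 \cdot f \cdot r_1 - f \tag{3}$$

$$c = 2 \cdot r_2 \tag{4}$$

$$m = \text{Chaotic\_value} \tag{5}$$

where $f$ decreases non-linearly from 2.5 to 0 over the iterations, $r_1$ and $r_2$ are random vectors in $[0, 1]$, and $m$ is a chaotic vector. The dynamic coefficient $f$ can follow different curves and slopes, so chimps can use different abilities to search for the prey.

Chimps update their positions based on the attacker, barrier, chaser, and driver, and this mathematical model is represented by Equations (6)–(8).

$$d_{Attacker} = \left| c_1 x_{Attacker} - m_1 x \right|,\quad d_{Barrier} = \left| c_2 x_{Barrier} - m_2 x \right|,\quad d_{Chaser} = \left| c_3 x_{Chaser} - m_3 x \right|,\quad d_{Driver} = \left| c_4 x_{Driver} - m_4 x \right| \tag{6}$$

$$x_1 = x_{Attacker} - a_1 d_{Attacker},\quad x_2 = x_{Barrier} - a_2 d_{Barrier},\quad x_3 = x_{Chaser} - a_3 d_{Chaser},\quad x_4 = x_{Driver} - a_4 d_{Driver} \tag{7}$$

$$x(t+1) = \frac{x_1 + x_2 + x_3 + x_4}{4} \tag{8}$$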
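The following plain-Python sketch shows one ChOA position update (Equations (1)–(8)) for a single chimp. Here a = 2·f·r1 − f, c = 2·r2, m comes from a chaotic map, and the new position is the average of the four leader-guided positions. Two details are assumptions and are not taken from this paper. First, f decays quadratically from 2.5 to 0. Second, m comes from the logistic map, with one scalar state per leader as a simplification. The leaders are passed in the order attacker, barrier, chaser, driver.

```python
import random
from typing import List

Vector = List[float]

def f_schedule(t: int, max_iter: int) -> float:
    # Assumed non-linear decay of f from 2.5 (t = 0) to 0 (t = max_iter).
    return 2.5 * (1.0 - (t / max_iter) ** 2)

def logistic_map(x: float) -> float:
    # Assumed chaotic map for m; stays in (0, 1) for typical seeds in (0, 1).
    return 4.0 * x * (1.0 - x)

def choa_step(x: Vector, leaders: List[Vector], t: int, max_iter: int,
              chaos: List[float], rng: random.Random) -> Vector:
    """One ChOA update for a single chimp.

    leaders: [attacker, barrier, chaser, driver] positions.
    chaos:   one chaotic state per leader, advanced in place.
    """
    f = f_schedule(t, max_iter)
    candidates = []
    for k, leader in enumerate(leaders):
        chaos[k] = logistic_map(chaos[k])
        new = []
        for j in range(len(x)):
            a = 2.0 * f * rng.random() - f        # Eq. (3)
            c = 2.0 * rng.random()                # Eq. (4)
            m = chaos[k]                          # Eq. (5)
            d = abs(c * leader[j] - m * x[j])     # Eq. (6)
            new.append(leader[j] - a * d)         # Eq. (7)
        candidates.append(new)
    # Eq. (8): average of the four leader-guided positions.
    return [sum(col) / len(candidates) for col in zip(*candidates)]

rng = random.Random(0)
leaders = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
print(choa_step([0.0, 0.0], leaders, t=5, max_iter=10, chaos=[0.3, 0.4, 0.6, 0.7], rng=rng))
```

At the last iteration f = 0, so every a is 0 and the update reduces to the plain average of the four leaders. This gives a quick sanity check of Equation (8).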

4. Enhanced Chimp Optimization Algorithm (EChOA)

4.1. Highly Disruptive Polynomial Mutation (HDPM)

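Highly disruptive polynomial mutation is an improved version of the polynomial mutation (PM) method. It overcomes the shortcoming that PM may fall into a local optimum when a variable is close to the search boundary [32]. Equations (9)–(12) describe how HDPM mutates the position $x$ of a chimp.

$$\delta_1 = \frac{x - lb}{ub - lb} \tag{9}$$

$$\delta_2 = \frac{ub - x}{ub - lb} \tag{10}$$

$$\delta_k = \begin{cases} \left[2r + (1 - 2r)(1 - \delta_1)^{\eta_m + 1}\right]^{\frac{1}{\eta_m + 1}} - 1, & r \le 0.5 \\ 1 - \left[2(1 - r) + 2(r - 0.5)(1 - \delta_2)^{\eta_m + 1}\right]^{\frac{1}{\eta_m + 1}}, & r > 0.5 \end{cases} \tag{11}$$

$$x' = x + \delta_k (ub - lb) \tag{12}$$

where $ub$ and $lb$ represent the upper and lower boundaries of the search space, $r$ is a random number between 0 and 1, and $\eta_m$ is the distribution index, a non-negative number. As can be seen from these formulas, $\delta_k$ reaches $-\delta_1$ when $r = 0$ and approaches $\delta_2$ as $r \to 1$, so the mutated position can fall anywhere in $[lb, ub]$. HDPM can therefore explore the entire search space, even when $x$ lies on a boundary.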
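Below is a single-variable HDPM sketch in Python. It assumes the standard HDPM form with δ1 = (x − lb)/(ub − lb) and δ2 = (ub − x)/(ub − lb), applied as x' = x + δk(ub − lb). The distribution index η_m = 20 in the usage lines is a hypothetical value. The final clipping only guards against floating-point round-off, because the update itself already stays within [lb, ub].

```python
import random

def hdpm_mutate(x: float, lb: float, ub: float, eta: float, rng: random.Random) -> float:
    """Highly disruptive polynomial mutation of one variable (Eqs. (9)-(12))."""
    span = ub - lb
    delta1 = (x - lb) / span                      # Eq. (9): relative distance to lb
    delta2 = (ub - x) / span                      # Eq. (10): relative distance to ub
    r = rng.random()
    power = 1.0 / (eta + 1.0)
    if r <= 0.5:
        val = 2.0 * r + (1.0 - 2.0 * r) * (1.0 - delta1) ** (eta + 1.0)
        delta_k = val ** power - 1.0              # Eq. (11), r <= 0.5: move toward lb
    else:
        val = 2.0 * (1.0 - r) + 2.0 * (r - 0.5) * (1.0 - delta2) ** (eta + 1.0)
        delta_k = 1.0 - val ** power              # Eq. (11), r > 0.5: move toward ub
    # Eq. (12); clipping only protects against round-off.
    return min(max(x + delta_k * span, lb), ub)

rng = random.Random(42)
# A variable sitting exactly on the upper bound can still be moved inward.
print([round(hdpm_mutate(10.0, 0.0, 10.0, eta=20.0, rng=rng), 3) for _ in range(5)])
```

Unlike plain polynomial mutation, the step here is scaled by the whole range (ub − lb) rather than by the distance to the nearest bound. This is what lets a boundary variable jump back into the interior.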

4.2. Spearman’s Rank Correlation Coefficient

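Spearman’s rank correlation coefficient is a statistical index that measures the strength of the monotonic relationship between two groups of variables. It is computed from the ranks of the values rather than from the values themselves, as given in Equation (13).

$$\rho = 1 - \frac{6 \sum_{i=1}^{n} d_i^2}{n(n^2 - 1)} \tag{13}$$

where $d_i$ is the difference between the ranks of the $i$-th pair of samples and $n$ is the dimension of the series. The value of $\rho$ lies between −1 and 1. If $|\rho| = 1$, the two series are perfectly monotonically related (increasing for $\rho = 1$, decreasing for $\rho = -1$). If $\rho = 0$, there is no monotonic relationship between them, and intermediate values indicate a weaker monotonic association.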
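The function below implements Equation (13) in Python, assigning average ranks to tied values. In EChOA, the two series would be the position vectors of two chimps (for example, the driver and the attacker), and n would be the problem dimension. The closed form is exact only when there are no ties. Library routines such as SciPy's `spearmanr` instead compute the Pearson correlation of the ranks, which also handles ties exactly.

```python
from typing import List, Sequence

def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of the positions they occupy."""
    s = sorted(values)
    first = {}
    for i, v in enumerate(s):
        first.setdefault(v, i)                    # first 0-based position of each value
    return [first[v] + (s.count(v) + 1) / 2.0 for v in values]

def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Eq. (13): rho = 1 - 6 * sum(d_i^2) / (n * (n^2 - 1))."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two series of equal length n >= 2")
    d2 = sum((rx - ry) ** 2 for rx, ry in zip(average_ranks(x), average_ranks(y)))
    return 1.0 - 6.0 * d2 / (n * (n * n - 1))

print(spearman_rho([1, 2, 3, 4], [1, 4, 9, 16]))  # monotone but non-linear → 1.0
print(spearman_rho([1, 2, 3, 4], [4, 3, 2, 1]))   # reversed order → -1.0
```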

4.3. Beetle Antennae Search Algorithm (BAS)

The long-horned beetle has two long antennae, which sense the scent of prey, expand its detection range, and act as a protective alarm mechanism [33]. The beetle explores nearby areas by swinging its antennae on either side of its body to sense odor, and it moves toward the side where it detects the higher odor concentration, as shown in Figure 1. The Beetle Antennae Search Algorithm is designed based on this behavior.

Figure 1.

Schematic diagram of beetle movement [52].

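The search direction of the beetle is represented by Equation (14).

$$\vec{b} = \frac{\mathrm{rnd}(k, 1)}{\left\| \mathrm{rnd}(k, 1) \right\|} \tag{14}$$

where $\vec{b}$ is the direction vector of the beetle, rnd is a random function, and $k$ is the dimension of the position.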

Next, Equation (15) gives the positions of the right and left antennae, which simulate the beetle's search on each side.

$$x_r = x^t + d^t \vec{b}, \qquad x_l = x^t - d^t \vec{b} \tag{15}$$

where $x_r$ and $x_l$ represent the locations within the right and left search areas, $x^t$ represents the beetle’s position at the $t$-th time instant, and $d^t$ represents the sensing length of the antennae, which gradually decreases over time.

The position update of the beetle is represented by Equation (16).

$$x^{t+1} = x^t + \delta^t \vec{b} \, \mathrm{sign}\left( f(x_r) - f(x_l) \right) \tag{16}$$

where $\delta^t$ is the step size of the search, whose initial value should be comparable to the size of the search area, and sign is the sign function. The odor concentration at $x$ is expressed by $f(x)$, which is also known as the fitness function.
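The following plain-Python sketch of BAS follows Equations (14)–(16) and maximizes the odor f, as in Equation (16). Two details are assumptions because the schedule is not specified here. First, the direction is drawn uniformly from [−1, 1]^k and then normalized. Second, both the antenna length d and the step δ shrink by a constant factor at each iteration. To minimize a fitness instead, pass its negative as f.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Odour = Callable[[Sequence[float]], float]

def bas_step(x: Sequence[float], f: Odour, d: float, delta: float,
             rng: random.Random) -> List[float]:
    """One Beetle Antennae Search move that increases the odour f."""
    # Eq. (14): random unit direction b.
    b = [rng.uniform(-1.0, 1.0) for _ in x]
    norm = math.sqrt(sum(v * v for v in b)) or 1.0
    b = [v / norm for v in b]
    # Eq. (15): right and left antenna positions.
    x_r = [xi + d * v for xi, v in zip(x, b)]
    x_l = [xi - d * v for xi, v in zip(x, b)]
    # Eq. (16): step toward the antenna that senses the stronger odour.
    diff = f(x_r) - f(x_l)
    sign = (diff > 0) - (diff < 0)
    return [xi + delta * v * sign for xi, v in zip(x, b)]

def bas(f: Odour, x0: Sequence[float], d0: float = 2.0, delta0: float = 2.0,
        decay: float = 0.95, iters: int = 200, seed: int = 0) -> Tuple[List[float], float]:
    """Run BAS and return the best position and odour value found."""
    rng = random.Random(seed)
    x, d, delta = list(x0), d0, delta0
    best, best_val = list(x), f(x)
    for _ in range(iters):
        x = bas_step(x, f, d, delta, rng)
        val = f(x)
        if val > best_val:
            best, best_val = list(x), val
        d *= decay       # antenna length shrinks over time (assumed schedule)
        delta *= decay   # step size shrinks over time (assumed schedule)
    return best, best_val

odour = lambda v: -sum((vi - 3.0) ** 2 for vi in v)   # strongest odour at (3, 3)
print(bas(odour, [0.0, 0.0]))
```

Each move costs only two extra function evaluations (one per antenna), whatever the dimension k. This makes BAS cheap enough to apply to selected chimps inside another optimizer.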

4.4. Improvement Strategy

Although the original ChOA divides the chimps into four levels to complete the hunting process, the algorithm still has two obvious defects when applied to higher-dimensional problems. First, the population lacks diversity in the initial stage. Second, there is a risk of falling into a local optimum in the final search stage. Therefore, this article proposes an Enhanced Chimp Optimization Algorithm that can be better applied to feature selection problems.

Three strategies (HDPM, Spearman’s rank correlation coefficient, and the BAS algorithm) are added to the original algorithm to form EChOA. First, the HDPM strategy is introduced to enhance population diversity in the initialization phase. Traditional polynomial mutation (PM) has almost no effect when a variable is close to the boundary. The HDPM strategy uses Equation (12) to generate the mutated position of each chimp, which helps to explore both the interior and the boundaries of the initial search space. In this way, even if variables lie on the search boundary, the search space can be fully utilized and population diversity is preserved. Second, Spearman’s rank correlation coefficient between the driver and the attacker is calculated. As can be seen from Equations (7) and (8), the position update is jointly determined by the chimps of all levels, so improving the position of the lower-level chimp helps the population avoid local optima. Equation (13) gives the Spearman’s rank correlation coefficient between the driver and the attacker, which indicates whether the driver is close to or far from the attacker. When the coefficient is less than or equal to 0, the two chimps are negatively correlated or uncorrelated, and the driver is regarded as far from the attacker. Finally, BAS is applied to a driver that is far from the attacker. Its position is updated using Equation (16), which gives it visual ability: it can sense the surrounding environment and decide its direction of movement. This improves the performance of the driver chimp and helps avoid local optima.
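The second and third strategies can be combined into a single decision rule, sketched below under assumptions. The rule computes ρ between the driver and the attacker with Equation (13). Only when ρ ≤ 0 does it move the driver with one BAS step (Equations (14)–(16)). The function name `refine_driver` is hypothetical. The minimization convention (the odor in Equation (16) is taken as the negative fitness) and the parameter values in the usage lines are also illustrative assumptions, not the authors' code.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

def _ranks(v: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    s = sorted(v)
    first = {}
    for i, val in enumerate(s):
        first.setdefault(val, i)
    return [first[val] + (s.count(val) + 1) / 2.0 for val in v]

def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Eq. (13) applied to two position vectors of the same dimension n."""
    n = len(x)
    d2 = sum((a - b) ** 2 for a, b in zip(_ranks(x), _ranks(y)))
    return 1.0 - 6.0 * d2 / (n * (n * n - 1))

def refine_driver(driver: Sequence[float], attacker: Sequence[float],
                  fitness: Callable[[Sequence[float]], float],
                  d: float, delta: float, rng: random.Random) -> Tuple[List[float], bool]:
    """Apply one BAS move to the driver only if rho(driver, attacker) <= 0.

    Returns the (possibly) updated driver and whether BAS was applied.
    """
    if spearman(driver, attacker) > 0:
        return list(driver), False                # driver counts as "close": keep it
    b = [rng.uniform(-1.0, 1.0) for _ in driver]
    norm = math.sqrt(sum(v * v for v in b)) or 1.0
    b = [v / norm for v in b]                     # Eq. (14)
    x_r = [x + d * v for x, v in zip(driver, b)]  # Eq. (15), right antenna
    x_l = [x - d * v for x, v in zip(driver, b)]  # Eq. (15), left antenna
    # Eq. (16) with odour = -fitness: step toward the lower-fitness antenna.
    diff = fitness(x_l) - fitness(x_r)
    sign = (diff > 0) - (diff < 0)
    return [x + delta * v * sign for x, v in zip(driver, b)], True

sphere = lambda v: sum(t * t for t in v)          # fitness to minimise
rng = random.Random(0)
print(refine_driver([1.0, 2.0, 3.0, 4.0], [4.0, 3.0, 2.0, 1.0], sphere, 0.5, 0.5, rng))
```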

4.5. EChOA for Optimizing SVM and Feature Selection

EChOA-SVM first uses the optimization algorithm to search for the optimal feature subset, and then uses SVM to classify the data restricted to that subset. For feature selection, each position component of a chimp is mapped to 0 or 1 by a logistic function. If a feature corresponds to 1, it is selected; if it corresponds to 0, it is not. Since the choice of kernel function and its parameters affects the performance of SVM, EChOA is also used to optimize these parameters in order to obtain better classification accuracy. The EChOA-SVM model generates the initial agents together with the penalty parameter c and the kernel parameter g, and calculates the fitness value to evaluate each chimp’s position. Finally, it outputs the optimal values of c and g and the best accuracy to obtain the final classification result.
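One possible way to encode an EChOA-SVM agent is sketched below, purely for illustration. The following elements are all assumptions and are not specified in this paper:

- the layout [c_raw, g_raw, s_1, …, s_N];
- the search ranges for c and g;
- the 0.5 threshold on the logistic function;
- the weighted fitness to minimize (error rate plus a small feature-count penalty with weight α = 0.99).

`accuracy_of` is a placeholder for training an SVM with (c, g) on the selected columns and returning its accuracy.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical search ranges for the SVM penalty c and kernel parameter g.
C_RANGE = (0.01, 100.0)
G_RANGE = (0.0001, 10.0)

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def scale(u: float, lo: float, hi: float) -> float:
    """Map u in [0, 1] linearly onto [lo, hi], clipping u first."""
    u = min(max(u, 0.0), 1.0)
    return lo + u * (hi - lo)

def decode(position: Sequence[float]) -> Tuple[float, float, List[int]]:
    """Assumed layout: [c_raw, g_raw, s_1, ..., s_N] with c_raw, g_raw in [0, 1]."""
    c = scale(position[0], *C_RANGE)
    g = scale(position[1], *G_RANGE)
    # Logistic transfer: feature j is selected (1) when sigmoid(s_j) >= 0.5.
    mask = [1 if sigmoid(s) >= 0.5 else 0 for s in position[2:]]
    return c, g, mask

def fitness(position: Sequence[float],
            accuracy_of: Callable[[float, float, List[int]], float],
            alpha: float = 0.99) -> float:
    """Hypothetical fitness to minimise: weighted error rate plus feature ratio."""
    c, g, mask = decode(position)
    n_selected = sum(mask)
    if n_selected == 0:            # an empty subset cannot train a classifier
        return float("inf")
    acc = accuracy_of(c, g, mask)  # e.g. cross-validated SVM accuracy
    return alpha * (1.0 - acc) + (1.0 - alpha) * n_selected / len(mask)

print(decode([0.5, 0.25, 2.0, -1.0, 0.5]))
```

Putting c and g in the same vector as the feature bits lets one chimp represent a complete candidate (feature subset plus SVM parameters). This matches the idea of optimizing both simultaneously.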

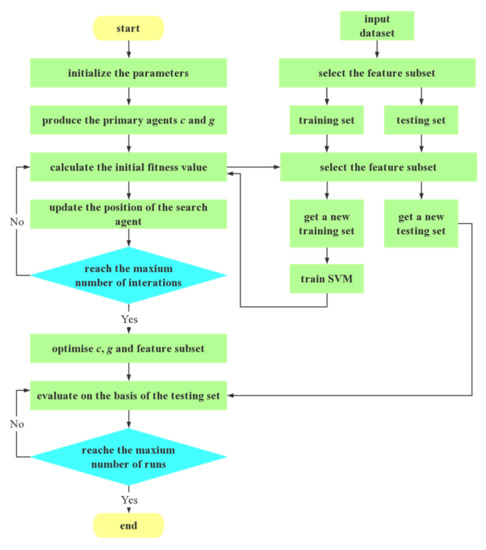

The description of the EChOA-SVM is shown as follows. The flow chart is shown in Figure 2.

Figure 2.

The flow chart of EChOA-SVM.

- Initialize the population, positions, and fitness values of the chimps.

- Randomly generate initial values of the SVM parameters c and g, and calculate the initial fitness value.

- Train the SVM on the training set to obtain the classification model.

- Sort the population by fitness to obtain the sorted agent positions and fitness values.

5. Experimental Results and Discussion

5.1. Datasets Details

The proposed algorithm is implemented in Matlab. Seventeen data sets from the University of California at Irvine (UCI) machine learning repository [34] have been selected to evaluate the proposed EChOA-SVM approach. Table 1 gives the number of instances, features, and classes of each data set. The seventeen data sets differ in size and dimensionality so that the performance of the algorithm can be observed at different scales.

Table 1.

List of data sets.

All experiments are carried out in Matlab R2016a on a computer with an Intel(R) Core(TM) i5-1035G1 CPU @ 1.00 GHz (1.19 GHz) running Microsoft Windows 10. Each experiment is run ten times independently to reduce the influence of randomness. In addition, the general parameters are set as follows: the population size is 30, and each run stops after 100 iterations.

5.2. Parameters Setting

In order to verify the optimization performance of EChOA-SVM, this paper compares the algorithm with different meta-heuristic algorithms such as ChOA [31], GWO [35], WOA [36], ALO [37], GOA [38], MFO [39] and SSA [40].

All settings and parameters that are used in the experiments for all algorithms are presented in Table 2.

Table 2.

Parameter settings of different algorithms.

5.3. Results and Discussion

To compare the different feature selection methods with the method proposed in this paper, three metrics are used [53].

- Accuracy: the ratio of the number of correctly classified samples to the total number of samples, which reflects how accurate the classifier's results are. It is an important index for evaluating algorithm performance;

- The number of features: the number of selected features reflects the ability to eliminate redundant features and shows whether the method can find an optimal feature subset;

- Fitness value: the fitness value reflects the quality of the solution selected by the classifier. A better fitness value indicates a better solution.

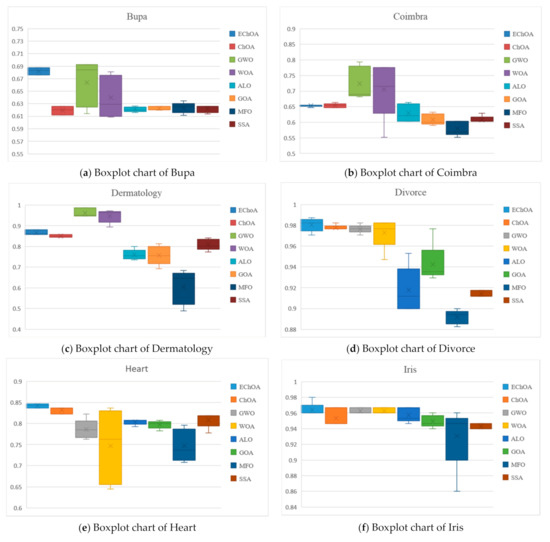

Table 3 shows the mean and standard deviation of the accuracy. Figure 3 presents boxplots of the accuracy on eight of the data sets. Each box summarizes the ten classification accuracies that an algorithm obtains at the end of SVM training over the ten independent runs. The boxplots indicate the improved performance of EChOA for optimizing the SVM.

Table 3.

Mean and standard deviation of classification accuracy for all data sets with applying feature selection.

Figure 3.

Boxplot charts for the accuracy of EChOA and other algorithms based on 8 data sets.

EChOA outperforms the other optimization algorithms in terms of average accuracy. It obtains the best performance on 13 of the 17 data sets (76.5%) and remains highly competitive on the other four. Although EChOA does not achieve the highest accuracy on Coimbra, Dermatology, and Glass, its classification accuracy ranks third among the eight optimization algorithms, behind only GWO and WOA. Notably, the accuracy of EChOA is 10 percent higher than that of ChOA on the Bupa data set. It is also 12 percent higher than that of GWO on the Knowledge data set and 18 percent higher than that of GWO on the Lymphography data set. In addition, the boxplots show the ten accuracies that each algorithm obtains over ten runs on each data set. Judging from these distributions, the accuracy of EChOA is very stable and hardly changes from run to run. On the Coimbra and Heart data sets in particular, EChOA remains very stable even though the accuracy of almost all the other algorithms varies considerably between runs. All of this shows the superiority of the EChOA algorithm.

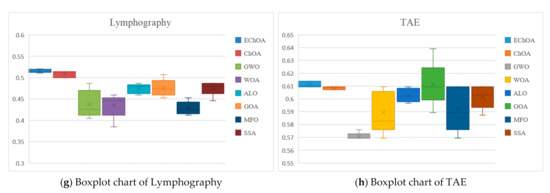

Table 4 shows the mean and standard deviation of the number of features, and Figure 4 presents a histogram of the number of features for all data sets. The number of features required by EChOA is almost always below the average over all algorithms. EChOA fails to obtain the best result on only two data sets (Bupa and Transfusion), which is only 11.8 percent of the total. On the Liver data set, EChOA requires only one feature. This indicates that EChOA uses the feature space more efficiently. The accuracy analysis above found that EChOA's classification accuracy is lower than that of GWO and WOA on Coimbra, Dermatology, and Glass. However, Table 4 shows that EChOA requires fewer features than GWO and WOA on these three data sets. On the Dermatology data set in particular, EChOA requires nearly seven fewer features than GWO and WOA. As Figure 4 shows, on the Coimbra, Dermatology, Divorce, and Liver data sets, two or three optimization algorithms require far more features than the others, but this never happens with EChOA. It should also be noted that ChOA requires many more features than EChOA on the Dermatology and Wine data sets. This further indicates the advantage of EChOA over ChOA and the other algorithms in terms of the number of features.

Table 4.

Mean and standard deviation of the number of features for all data sets with applying feature selection.

Figure 4.

Histogram for the number of features of EChOA and other algorithms based on 17 data sets.

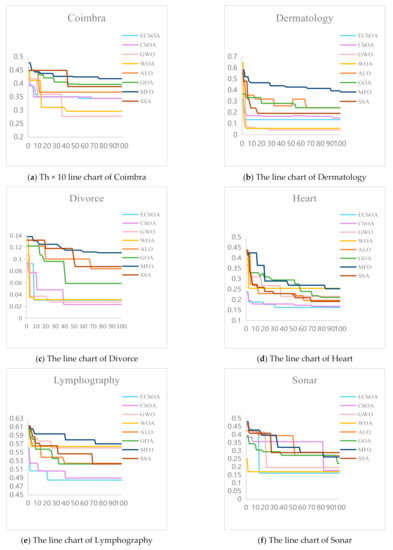

Table 5 shows the mean and standard deviation of the fitness. Figure 5 presents line charts of the fitness for six of the data sets, where each line is the convergence curve of the corresponding algorithm. The fitness value of EChOA is the lowest on 12 data sets, and it converges quickly. On the Coimbra, Dermatology, Diagnostic, Divorce, and Heart data sets, EChOA converges faster than ChOA, and some of its fitness values are also lower than those of ChOA. At the same time, the convergence curves of EChOA are relatively flat, and it performs well on every data set, with fitness values almost always below the average. Unlike GOA on the Liver data set, EChOA never has a fitness value much higher than those of the other algorithms on any data set. As can be seen from Figure 5, the convergence curve of EChOA on the Heart data set is smoother than those of the other algorithms, which suggests that the local search ability of the algorithm has been improved. On the Dermatology and Divorce data sets, EChOA reaches its best fitness value in fewer than 10 iterations, earlier than the other optimization algorithms, which indicates an improved convergence rate. Overall, EChOA is better and more stable than most of the compared algorithms.

Table 5.

Mean and standard deviation of fitness values for all data sets with applying feature selection.

Figure 5.

The line chart for the fitness of EChOA and other algorithms based on 17 data sets.

6. Conclusions

This work presents a novel hybrid method for optimizing SVM based on EChOA. The proposed approach tunes the parameters of the SVM kernel while simultaneously selecting a feature subset, in order to achieve the best classification accuracy. Experimental results show that the proposed algorithm effectively improves the classification accuracy of SVM. EChOA also has certain advantages over the other seven optimization algorithms in terms of accuracy, number of features, and fitness value, and its overall performance is relatively stable. All of this indicates that the EChOA algorithm is very competitive.

Although EChOA effectively improves the exploration and exploitation of ChOA, introducing three strategies at the same time increases the complexity of the algorithm. Therefore, it is worth considering how to remove less effective improvement strategies to alleviate this problem. In subsequent studies, parallel strategies such as a co-evolutionary mechanism could be introduced.

In future research, EChOA can be applied to multi-objective problems, social manufacturing optimization, and video coding optimization. The EChOA-SVM model proposed in this paper can also be studied on a larger scale and applied to other practical problems such as data mining.

Author Contributions

Conceptualization, D.W. and H.J.; methodology, D.W. and H.J.; software, D.W. and W.Z.; validation, W.Z., X.L.; formal analysis, D.W. and W.Z.; investigation, D.W. and W.Z.; writing—original draft preparation, D.W. and W.Z.; writing—review and editing, H.J.; visualization, D.W. and H.J.; funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Sanming University Introduces High-level Talents to Start Scientific Research Funding Support Project (21YG01S), Bidding project for higher education research of Sanming University (SHE2101), Fujian Natural Science Foundation Project, the Guiding Science and Technology Projects in Sanming City (2021-S-8), the Educational Research Projects of Young and Middle-aged Teachers in Fujian Province (JAT200618), and the Scientific Research and Development Fund of Sanming University (B202009), Open Research Fund of Key Laboratory of Agricultural Internet of Things in Fujian Province (ZD2101), Ministry of Education Cooperative Education Project (202002064014), School level education and teaching reform project of Sanming University (J2010305), Higher education research project of Sanming University (SHE2013), Fundamental research funds for the Central Universities (2572018BF11).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Raju, B.; Bonagiri, R. A cavernous analytics using advanced machine learning for real world datasets in research implementations. Mater. Today Proc. 2020. [Google Scholar] [CrossRef]

- Jiang, J.; Yu, C.; Xu, X.; Ma, Y.; Liu, J. Achieving better connections between deposited lines in additive manufacturing via machine learning. Math. Biosci. Eng. 2020, 17, 3382–3394. [Google Scholar] [CrossRef] [PubMed]

- Kline, A.S.; Kline, T.J.B.; Lee, J. Item response theory as a feature selection and interpretation tool in the context of machine learning. Med. Biol. Eng. Comput. 2021, 59, 471–482. [Google Scholar] [CrossRef]

- Ebrahimi-Khusfi, Z.; Nafarzadegan, A.R.; Dargahian, F. Predicting the number of dusty days around the desert wetlands in southeastern Iran using feature selection and machine learning techniques. Ecol. Indic. 2021, 125, 1–15. [Google Scholar] [CrossRef]

- Tanveer, M. Robust and Sparse Linear Programming Twin Support Vector Machines. Cogn. Comput. 2015, 7, 137–149. [Google Scholar] [CrossRef]

- Yang, P.; Li, Z.; Yu, Y.; Shi, J.; Sun, M. Studies on fault diagnosis of dissolved oxygen sensor based on GA-SVM. Math. Biosci. Eng. 2021, 18, 386–399. [Google Scholar] [CrossRef] [PubMed]

- Aziz, W.; Hussain, L.; Khan, I.R.; Alowibdi, J.S.; Alkinani, M.H. Machine learning based classification of normal, slow and fast walking by extracting multimodal features from stride interval time series. Math. Biosci. Eng. 2021, 18, 495–517. [Google Scholar] [CrossRef]

- Hussain, L.; Aziz, W.; Khan, I.R.; Alkinani, M.H.; Alowibdi, J.S. Machine learning based congestive heart failure detection using feature importance ranking of multimodal features. Math. Biosci. Eng. 2021, 18, 69–91. [Google Scholar] [CrossRef] [PubMed]

- Brown, M.P.S.; Grundy, W.N.; Lin, D.; Cristianini, N.; Sugnet, C.W.; Furey, T.; Ares, M.; Haussler, D. Knowledge-based analysis of microarray gene expression data by using support vector machines. Proc. Natl. Acad. Sci. USA. 2000, 97, 262–267. [Google Scholar] [CrossRef] [Green Version]

- Takeuchi, K.; Collier, N. Bio-medical entity extraction using support vector machines. Artif. Intell. Med. 2005, 33, 125–137. [Google Scholar] [CrossRef] [PubMed]

- Babaoğlu, I.; Findik, O.; Bayrak, M. Effects of principle component analysis on assessment of coronary artery diseases using support vector machine. Expert Syst. Appl. 2010, 37, 2182–2185. [Google Scholar] [CrossRef]

- Du, Z.; Yang, Q.; He, H.; Qiu, M.; Chen, Z.; Hu, Q.; Wang, Q.; Zhang, Z.; Lin, Q.; Huang, L.; et al. A comprehensive health classification model based on support vector machine for proseal laryngeal mask and tracheal catheter assessment in herniorrhaphy. Math. Biosci. Eng. 2020, 17, 1838–1854. [Google Scholar] [CrossRef]

- Sotiris, V.A.; Tse, P.W.; Pecht, M. Anomaly Detection through a Bayesian Support Vector Machine. IEEE Trans. Reliab. 2010, 59, 277–286. [Google Scholar] [CrossRef]

- Rostami, O.; Kaveh, M. Optimal feature selection for SAR image classification using biogeography-based optimization (BBO), artificial bee colony (ABC) and support vector machine (SVM): A combined approach of optimization and machine learning. Comput. Geosci. 2021, 25, 911–930. [Google Scholar] [CrossRef]

- Joachims, T. Making Large-Scale Support Vector Machine Learning Practical; MIT Press: Cambridge, MA, USA, 1999; pp. 169–184. [Google Scholar]

- Weston, J.; Mukherjee, S.; Chapelle, O. Feature selection for SVMs. Adv. Neural Inf. Process Syst. 2000, 13, 668–674. [Google Scholar]

- Nguyen, M.H.; Torre, F.D.L. Optimal feature selection for support vector machines. Pattern Recognit. 2010, 43, 584–591.

- Shahbeig, S.; Helfroush, M.S.; Rahideh, A. A fuzzy multi-objective hybrid TLBO–PSO approach to select the associated genes with breast cancer. Signal Process. 2017, 131, 58–65.

- Wu, Q.; Ma, Z.; Fan, J.; Xu, G.; Shen, Y. A feature selection method based on hybrid improved binary quantum particle swarm optimization. IEEE Access 2019, 7, 80588–80601.

- Souza, T.A.; Souza, M.A.; Costa, W.C.D.A.; Costa, S.C.; Correia, S.E.N.; Vieira, V.J.D. Feature Selection based on Binary Particle Swarm Optimization and Neural Networks for Pathological Voice Detection. Int. J. Bio-Inspired Comput. 2018, 11, 2.

- Wang, H.; Niu, B.; Tan, L. Bacterial colony algorithm with adaptive attribute learning strategy for feature selection in classification of customers for personalized recommendation. Neurocomputing 2021, 452, 747–755.

- Jha, K.; Saha, S. Incorporation of multimodal objective optimization in designing a filter based feature selection technique. Appl. Soft Comput. 2021, 98, 106823.

- Han, M.; Liu, X. Feature selection techniques with class separability for multivariate time series. Neurocomputing 2013, 110, 29–34.

- Nithya, B.; Ilango, V. Evaluation of machine learning based optimized feature selection approaches and classification methods for cervical cancer prediction. SN Appl. Sci. 2019, 1, 641.

- Kohavi, R.; John, G.H. Wrappers for feature subset selection. Artif. Intell. 1997, 97, 273–324.

- Pourpanah, F.; Lim, C.P.; Wang, X.; Tan, C.J.; Seera, M.; Shi, Y. A hybrid model of fuzzy min–max and brain storm optimization for feature selection and data classification. Neurocomputing 2019, 333, 440–451.

- Liu, H.; Setiono, R. A probabilistic approach to feature selection-a filter solution. In Proceedings of the 9th International Conference on Industrial and Engineering Applications of AI and ES, Fukuoka, Japan, 4–7 June 1996.

- Wang, J.; Wu, L.; Kong, J.; Li, Y.; Zhang, B. Maximum weight and minimum redundancy: A novel framework for feature subset selection. Pattern Recognit. 2013, 46, 1616–1627.

- Sihwail, R.; Omar, K.; Ariffin, K.A.Z.; Tubishat, M. Improved Harris Hawks Optimization Using Elite Opposition-Based Learning and Novel Search Mechanism for Feature Selection. IEEE Access 2020, 8, 121127–121145.

- Elgamal, Z.M.; Yasin, N.B.M.; Tubishat, M.; Alswaitti, M.; Mirjalili, S. An Improved Harris Hawks Optimization Algorithm with Simulated Annealing for Feature Selection in the Medical Field. IEEE Access 2020, 8, 186638–186652.

- Khishe, M.; Mosavi, M. Chimp optimization algorithm. Expert Syst. Appl. 2020, 149, 113338.

- Abed-Alguni, B. Island-based Cuckoo Search with Highly Disruptive Polynomial Mutation. Int. J. Artif. Intell. 2019, 17, 57–82.

- Jiang, X.; Li, S. BAS: Beetle Antennae Search Algorithm for Optimization Problems. arXiv 2017, arXiv:1710.10724.

- Lichman, M. UCI Machine Learning Repository. Available online: http://archive.ics.uci.edu/ml (accessed on 15 August 2013).

- Renita, D.B.; Christopher, C.S. Novel real time content based medical image retrieval scheme with GWO-SVM. Multimed. Tools Appl. 2020, 79, 17227–17243.

- Yin, X.; Hou, Y.; Yin, J.; Li, C. A novel SVM parameter tuning method based on advanced whale optimization algorithm. J. Phys. Conf. Ser. 2019, 1237, 022140.

- Zhao, S.; Gao, L.; Dongmei, Y.; Jun, T. Ant Lion Optimizer with Chaotic Investigation Mechanism for Optimizing SVM Parameters. J. Front. Comput. Sci. Technol. 2016, 10, 722–731.

- Aljarah, I.; Al-Zoubi, A.M.; Faris, H.; Hassonah, M.A.; Mirjalili, S.; Saadeh, H. Simultaneous Feature Selection and Support Vector Machine Optimization Using the Grasshopper Optimization Algorithm. Cogn. Comput. 2018, 10, 478–495.

- Lin, G.Q.; Li, L.L.; Tseng, M.L.; Liu, H.M.; Yuan, D.D.; Tan, R.R. An improved moth-flame optimization algorithm for support vector machine prediction of photovoltaic power generation. J. Clean. Prod. 2020, 253, 119966.

- Sivapragasam, C.; Liong, S.-Y.; Pasha, M.F.K. Rainfall and runoff forecasting with SSA–SVM approach. J. Hydroinformatics 2001, 3, 141–152.

- Zhao, H.; Zhang, C. An online-learning-based evolutionary many-objective algorithm. Inf. Sci. 2020, 509, 1–21.

- Dulebenets, M.A. An Adaptive Polyploid Memetic Algorithm for scheduling trucks at a cross-docking terminal. Inf. Sci. 2021, 565, 390–421.

- Liu, Z.-Z.; Wang, Y.; Huang, P.-Q. AnD: A many-objective evolutionary algorithm with angle-based selection and shift-based density estimation. Inf. Sci. 2020, 509, 400–419.

- Pasha, J.; Dulebenets, M.A.; Kavoosi, M.; Abioye, O.F.; Wang, H.; Guo, W. An Optimization Model and Solution Algorithms for the Vehicle Routing Problem with a “Factory-in-a-Box”. IEEE Access 2020, 8, 134743–134763.

- D’Angelo, G.; Pilla, R.; Tascini, C.; Rampone, S. A proposal for distinguishing between bacterial and viral meningitis using genetic programming and decision trees. Soft Comput. 2019, 23, 11775–11791.

- Panda, N.; Majhi, S.K. How effective is the salp swarm algorithm in data classification. In Computational Intelligence in Pattern Recognition; Springer: Singapore, 2020; pp. 579–588.

- Alwan, H.B.; Mahamud, K. Mixed-variable ant colony optimisation algorithm for feature subset selection and tuning support vector machine parameter. Int. J. Bio-Inspired Comput. 2017, 9, 53–63.

- Fröhlich, H.; Chapelle, O.; Schölkopf, B. Feature Selection for Support Vector Machines by Means of Genetic Algorithms. In Proceedings of the 15th IEEE International Conference on Tools with Artificial Intelligence (ICTAI 2003), Sacramento, CA, USA, 5 November 2003; IEEE: New York, NY, USA, 2003.

- Huang, C.-L.; Wang, C.-J. A GA-based feature selection and parameters optimization for support vector machines. Expert Syst. Appl. 2006, 31, 231–240.

- Lin, S.-W.; Tseng, T.-Y.; Chen, S.-C.; Huang, J.-F. A SA-Based Feature Selection and Parameter Optimization Approach for Support Vector Machine. Pervasive Comput. IEEE 2006.

- Jia, H.; Sun, K. Improved barnacles mating optimizer algorithm for feature selection and support vector machine optimization. Pattern Anal. Appl. 2021, 24, 1249–1274.

- Slipinski, A.; Escalona, H. Australian Longhorn Beetles (Coleoptera: Cerambycidae); CSIRO Publishing: Clayton, Australia, 2013; Volume 1.

- Mafarja, M.M.; Mirjalili, S. Hybrid Whale Optimization Algorithm with simulated annealing for feature selection. Neurocomputing 2017, 260, 302–312.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).