An Image Hashing Algorithm for Authentication with Multi-Attack Reference Generation and Adaptive Thresholding

Abstract

1. Introduction

2. Problem Statement and Contributions

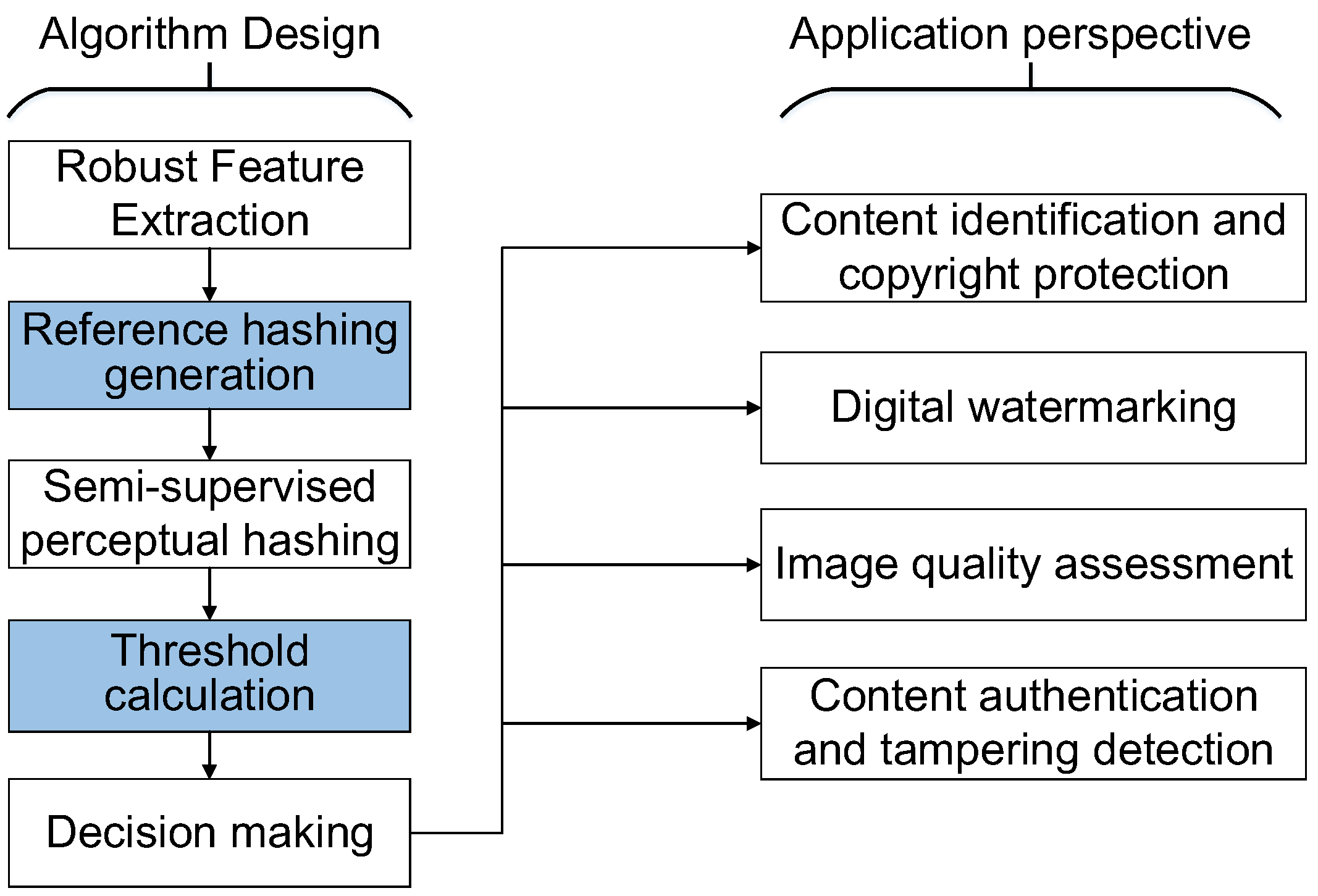

- (1) We build a prior information set by applying multiple virtual prior distortions and attacks to the original images. From this prior image set, we infer the clustering centroids used to generate the reference hash, which then serves as the basis for the similarity measure.

- (2) We incorporate semi-supervised information into perceptual image hash learning. Instead of fixing the metric distance from the training results alone, we determine the hashing-distance threshold by considering how it varies across different images.

- (3) To account for variations in the features extracted from different images, we consider the pairwise variations among different original/received image pairs. The resulting adaptive thresholds better separate malicious tampering from content-preserving operations, which improves the tamper detection rate.
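
The three contributions above can be illustrated with a minimal sketch. It is not the authors' implementation: the attack set (`virtual_attacks`), the toy block-mean feature (`block_mean_features`), the single-cluster centroid, and the `margin` parameter are all assumptions chosen only to show how a reference hash can be derived from virtually attacked copies and how a per-image threshold can follow from their spread.

```python
import numpy as np


def virtual_attacks(image, rng):
    """Return the image plus a few simulated content-preserving distortions.

    The three distortions are illustrative stand-ins, not the paper's attack set.
    """
    h, w = image.shape
    noisy = np.clip(image + rng.normal(0.0, 0.02, image.shape), 0.0, 1.0)
    brighter = np.clip(image * 1.1, 0.0, 1.0)
    padded = np.pad(image, 1, mode="edge")
    # 3x3 mean filter built from nine shifted views of the padded image.
    blurred = sum(padded[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0
    return [image, noisy, brighter, blurred]


def block_mean_features(image, grid=4):
    """Toy hash feature (assumption): mean intensity of each cell in a grid x grid layout."""
    h, w = image.shape
    bh, bw = h // grid, w // grid
    cropped = image[:bh * grid, :bw * grid]
    return cropped.reshape(grid, bh, grid, bw).mean(axis=(1, 3)).ravel()


def reference_hash(image, rng):
    """Centroid of the attacked copies' features, plus their largest distance to it."""
    feats = np.stack([block_mean_features(v) for v in virtual_attacks(image, rng)])
    centroid = feats.mean(axis=0)  # single-cluster centroid (simplifying assumption)
    spread = float(np.linalg.norm(feats - centroid, axis=1).max())
    return centroid, spread


def is_authentic(received, centroid, spread, margin=1.5):
    """Accept when the received image lies within margin * spread of the reference hash."""
    distance = float(np.linalg.norm(block_mean_features(received) - centroid))
    return distance <= margin * spread, distance
```

Because `spread` is computed separately for every original image, the acceptance threshold adapts to that image instead of being a single global constant. In this toy setting, a lightly noised copy stays well inside the threshold, while overwriting a quarter of the image pushes the distance far outside it.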

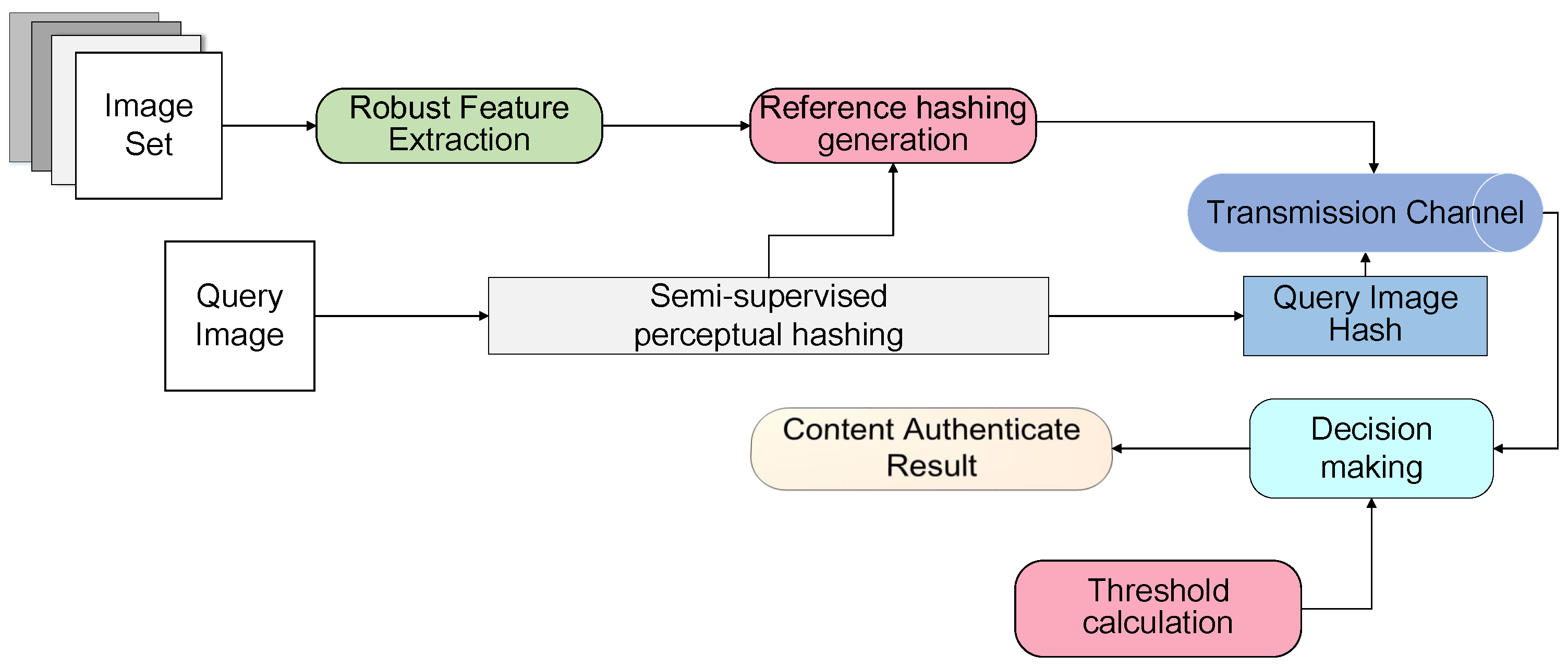

3. Proposed Method

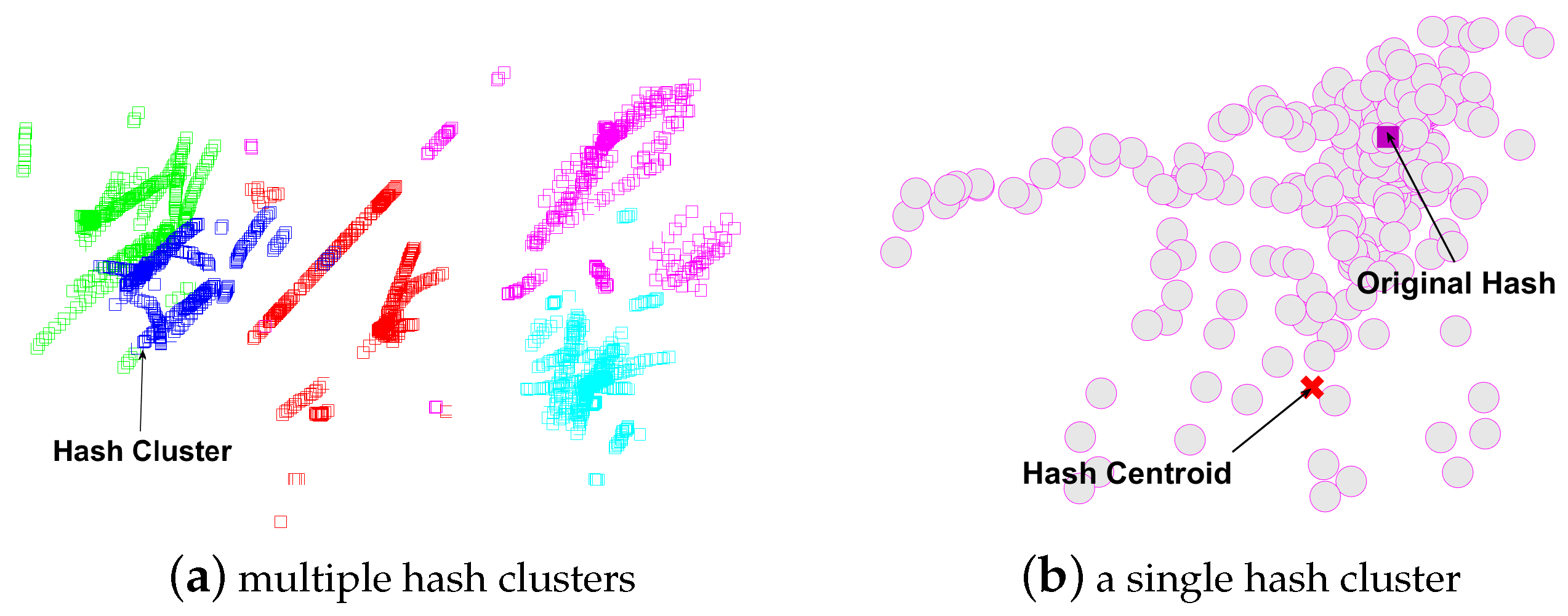

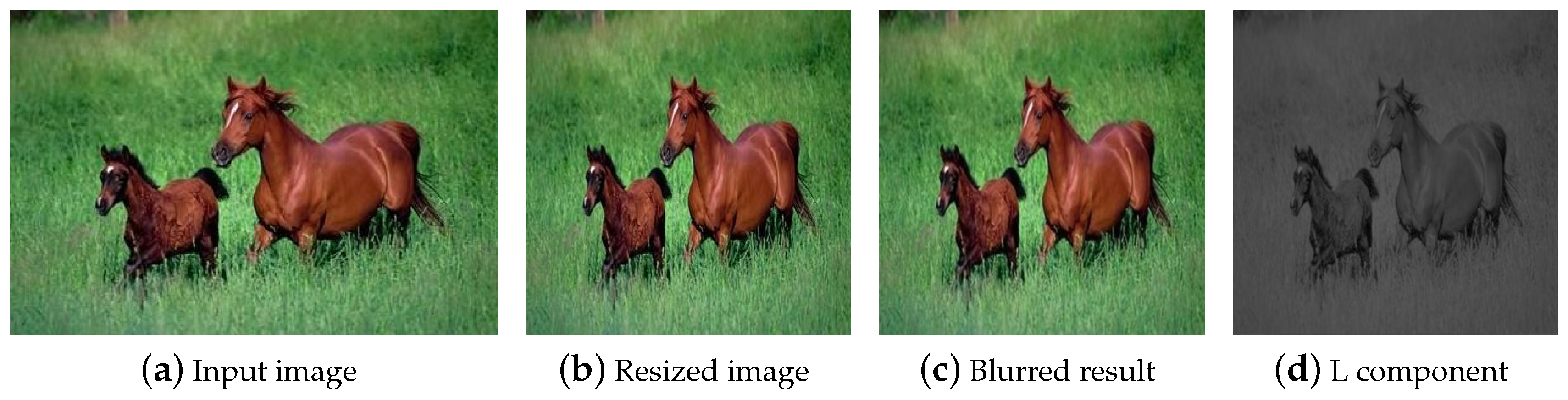

3.1. Multi-Attack Reference Hashing

3.2. Semi-Supervised Hashing Code Learning

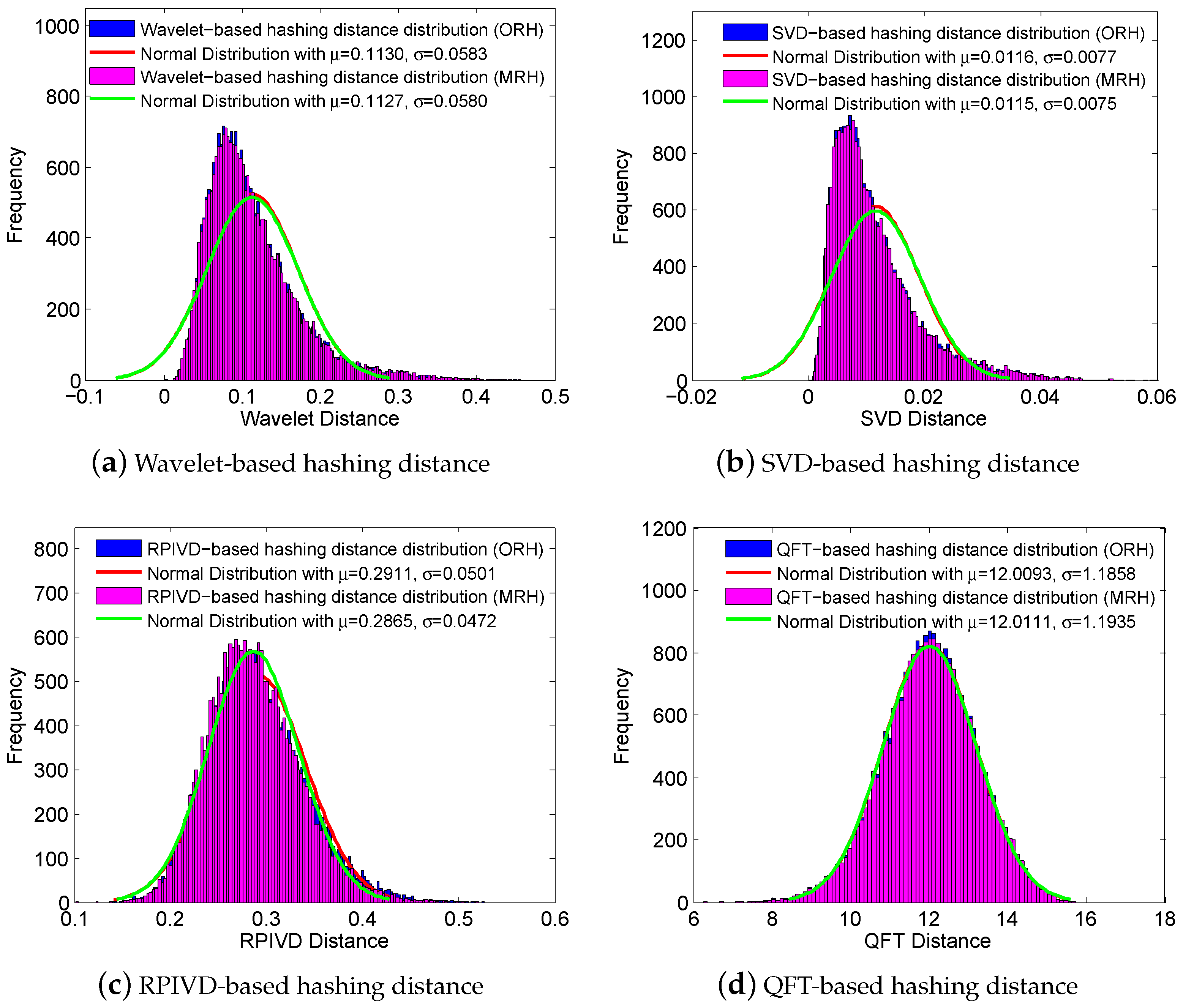

3.3. Adaptive Thresholds-Based Decision Making

4. Experiments

4.1. Data

4.2. Baselines

4.3. Perceptual Robustness

4.4. Discriminative Capability

4.5. Authentication Results

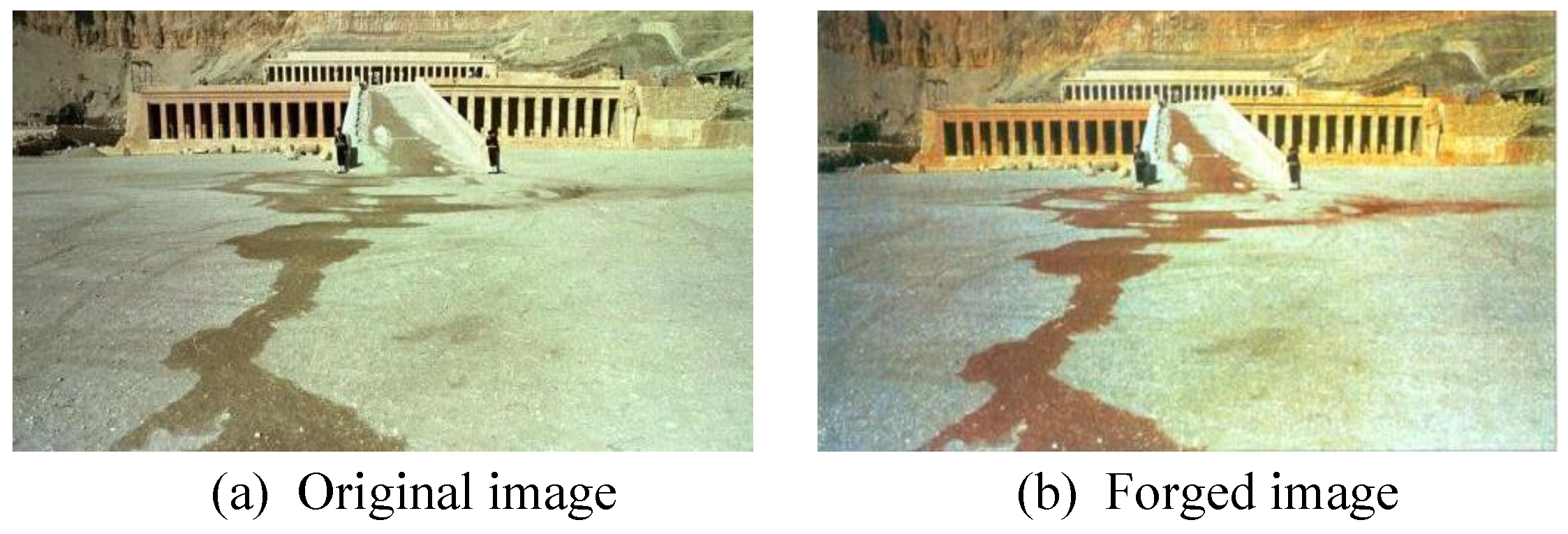

5. Domains of Application

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Method | Manipulation | ORH Max | ORH Min | ORH Mean | MRH Max | MRH Min | MRH Mean |
|---|---|---|---|---|---|---|---|
| Wavelet | Gaussian noise | 0.02828 | 0.00015 | 0.00197 | 0.02847 | 0.00014 | 0.00196 |
| Wavelet | Salt&Pepper | 0.01918 | 0.00021 | 0.00252 | 0.01918 | 0.00024 | 0.00251 |
| Wavelet | Gaussian blurring | 0.00038 | 0.00005 | 0.00017 | 0.00067 | 0.00006 | 0.00019 |
| Wavelet | Circular blurring | 0.00048 | 0.00006 | 0.00022 | 0.00069 | 0.00006 | 0.00021 |
| Wavelet | Motion blurring | 0.00034 | 0.00006 | 0.00015 | 0.00065 | 0.00005 | 0.00016 |
| Wavelet | Average filtering | 0.00071 | 0.00007 | 0.00033 | 0.00071 | 0.00009 | 0.00030 |
| Wavelet | Median filtering | 0.00704 | 0.00006 | 0.00099 | 0.00753 | 0.00007 | 0.00099 |
| Wavelet | Wiener filtering | 0.00101 | 0.00008 | 0.00028 | 0.00087 | 0.00008 | 0.00028 |
| Wavelet | Image sharpening | 0.00906 | 0.00009 | 0.00115 | 0.00906 | 0.00010 | 0.00114 |
| Wavelet | Image scaling | 0.00039 | 0.00005 | 0.00013 | 0.00064 | 0.00006 | 0.00018 |
| Wavelet | Illumination correction | 0.08458 | 0.00447 | 0.02759 | 0.08458 | 0.00443 | 0.02757 |
| Wavelet | JPEG compression | 0.00143 | 0.00009 | 0.00026 | 0.00275 | 0.00013 | 0.00051 |
| SVD | Gaussian noise | 0.00616 | 0.00007 | 0.00031 | 0.00616 | 0.00007 | 0.00030 |
| SVD | Salt&Pepper | 0.00339 | 0.00008 | 0.00034 | 0.00338 | 0.00007 | 0.00033 |
| SVD | Gaussian blurring | 0.00017 | 0.00007 | 0.00010 | 0.00113 | 0.00007 | 0.00011 |
| SVD | Circular blurring | 0.00018 | 0.00006 | 0.00010 | 0.00114 | 0.00006 | 0.00011 |
| SVD | Motion blurring | 0.00017 | 0.00007 | 0.00010 | 0.00113 | 0.00006 | 0.00011 |
| SVD | Average filtering | 0.00025 | 0.00007 | 0.00011 | 0.00111 | 0.00006 | 0.00012 |
| SVD | Median filtering | 0.00166 | 0.00007 | 0.00015 | 0.00190 | 0.00007 | 0.00016 |
| SVD | Wiener filtering | 0.00035 | 0.00005 | 0.00011 | 0.00113 | 0.00007 | 0.00012 |
| SVD | Image sharpening | 0.00104 | 0.00007 | 0.00018 | 0.00099 | 0.00007 | 0.00018 |
| SVD | Image scaling | 0.00016 | 0.00007 | 0.00010 | 0.00114 | 0.00007 | 0.00011 |
| SVD | Illumination correction | 0.00662 | 0.00014 | 0.00149 | 0.00674 | 0.00014 | 0.00150 |
| SVD | JPEG compression | 0.00031 | 0.00007 | 0.00010 | 0.00053 | 0.00008 | 0.00012 |
| RPIVD | Gaussian noise | 0.25827 | 0.00864 | 0.03086 | 0.29081 | 0.01115 | 0.03234 |
| RPIVD | Salt&Pepper | 0.22855 | 0.01131 | 0.02993 | 0.25789 | 0.01191 | 0.03033 |
| RPIVD | Gaussian blurring | 0.03560 | 0.00411 | 0.01471 | 0.14023 | 0.00545 | 0.01786 |
| RPIVD | Circular blurring | 0.06126 | 0.00447 | 0.01713 | 0.13469 | 0.00565 | 0.01924 |
| RPIVD | Motion blurring | 0.03570 | 0.00362 | 0.01432 | 0.18510 | 0.00473 | 0.01825 |
| RPIVD | Average filtering | 0.07037 | 0.00543 | 0.02109 | 0.20190 | 0.00591 | 0.02237 |
| RPIVD | Median filtering | 0.06126 | 0.00512 | 0.02234 | 0.18360 | 0.00625 | 0.02465 |
| RPIVD | Wiener filtering | 0.07156 | 0.00421 | 0.01803 | 0.20421 | 0.00581 | 0.02041 |
| RPIVD | Image sharpening | 0.06324 | 0.00609 | 0.02442 | 0.18283 | 0.00706 | 0.02765 |
| RPIVD | Image scaling | 0.03311 | 0.00275 | 0.01154 | 0.18233 | 0.00381 | 0.01761 |
| RPIVD | Illumination correction | 0.11616 | 0.00769 | 0.02864 | 0.20944 | 0.01047 | 0.02920 |
| RPIVD | JPEG compression | 0.07037 | 0.00543 | 0.02109 | 0.06180 | 0.00707 | 0.02155 |
| QFT | Gaussian noise | 6.97151 | 0.13508 | 0.73563 | 6.30302 | 0.11636 | 0.60460 |
| QFT | Salt&Pepper | 7.63719 | 0.16998 | 0.66200 | 7.50644 | 0.15073 | 0.63441 |
| QFT | Gaussian blurring | 0.26237 | 0.00513 | 0.02519 | 0.10820 | 0.00318 | 0.01449 |
| QFT | Circular blurring | 0.26529 | 0.00712 | 0.03163 | 0.17937 | 0.00460 | 0.02075 |
| QFT | Motion blurring | 0.26408 | 0.00465 | 0.02286 | 0.10729 | 0.00300 | 0.01318 |
| QFT | Average filtering | 0.30154 | 0.00976 | 0.04403 | 0.30719 | 0.00760 | 0.03263 |
| QFT | Median filtering | 0.95120 | 0.03084 | 0.19822 | 0.87149 | 0.02706 | 0.19345 |
| QFT | Wiener filtering | 0.64373 | 0.01746 | 0.08046 | 0.68851 | 0.01551 | 0.07616 |
| QFT | Image sharpening | 6.55606 | 0.05188 | 1.52398 | 6.55596 | 0.05189 | 1.52398 |
| QFT | Image scaling | 0.51083 | 0.04031 | 0.10067 | 0.52404 | 0.02800 | 0.09827 |
| QFT | Illumination correction | 4.37001 | 0.27357 | 0.84280 | 4.36692 | 0.27348 | 0.84170 |
| QFT | JPEG compression | 7.55523 | 0.13752 | 1.29158 | 13.1816 | 0.13585 | 1.46682 |

| Manipulation | Wavelet Precision | Wavelet Recall | Wavelet F1 | Wavelet AUC | SVD Precision | SVD Recall | SVD F1 | SVD AUC | RPIVD Precision | RPIVD Recall | RPIVD F1 | RPIVD AUC | QFT Precision | QFT Recall | QFT F1 | QFT AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Original image-based reference hashing | | | | | | | | | | | | | | | | |
| Gaussian noise | 0.6257 | 0.9500 | 0.7545 | 0.8442 | 0.8537 | 0.4773 | 0.6122 | 0.8501 | 0.8326 | 0.8211 | 0.8268 | 0.8991 | 0.8978 | 0.7591 | 0.8227 | 0.9241 |
| Salt&Pepper | 0.5485 | 0.9773 | 0.7026 | 0.8043 | 0.8537 | 0.4773 | 0.6122 | 0.8507 | 0.8806 | 0.8119 | 0.7449 | 0.9088 | 0.8851 | 0.7727 | 0.8252 | 0.9184 |
| Gaussian blurring | 1.0000 | 0.8727 | 0.9320 | 0.9866 | 1.0000 | 0.4409 | 0.6120 | 0.9874 | 1.0000 | 0.7465 | 0.8549 | 0.9557 | 1.0000 | 0.7227 | 0.8391 | 0.9948 |
| Circular blurring | 1.0000 | 0.8733 | 0.9346 | 0.9787 | 1.0000 | 0.4364 | 0.6076 | 0.9852 | 0.9821 | 0.7604 | 0.8571 | 0.9447 | 1.0000 | 0.7227 | 0.8391 | 0.9948 |
| Motion blurring | 1.0000 | 0.8727 | 0.9346 | 0.9787 | 1.0000 | 0.4273 | 0.5987 | 0.9868 | 1.0000 | 0.7477 | 0.8556 | 0.9572 | 1.0000 | 0.7227 | 0.8391 | 0.9949 |
| Average filtering | 1.0000 | 0.8864 | 0.9398 | 0.9665 | 1.0000 | 0.4318 | 0.6032 | 0.9790 | 0.9598 | 0.7661 | 0.8520 | 0.9351 | 1.0000 | 0.7227 | 0.8391 | 0.9948 |
| Median filtering | 0.6967 | 0.9500 | 0.8038 | 0.9012 | 0.9898 | 0.4409 | 0.6101 | 0.9544 | 0.9399 | 0.7890 | 0.8579 | 0.9212 | 1.0000 | 0.7409 | 0.8512 | 0.9721 |
| Wiener filtering | 0.9847 | 0.8773 | 0.9279 | 0.9713 | 1.0000 | 0.4318 | 0.6032 | 0.9822 | 0.9880 | 0.7569 | 0.8571 | 0.9427 | 1.0000 | 0.7227 | 0.8391 | 0.9950 |
| Image sharpening | 0.7178 | 0.9364 | 0.8126 | 0.8872 | 0.9709 | 0.4545 | 0.6192 | 0.9368 | 0.8980 | 0.8073 | 0.8502 | 0.9155 | 0.8851 | 0.8537 | 0.8537 | 0.8537 |
| Image scaling | 1.0000 | 0.8773 | 0.9346 | 0.9892 | 1.0000 | 0.4318 | 0.6032 | 0.9873 | 1.0000 | 0.7385 | 0.8496 | 0.9677 | 0.8851 | 0.7727 | 0.8252 | 0.9184 |
| Illumination correction | 0.5000 | 1.0000 | 0.6667 | 0.5593 | 0.5479 | 0.8318 | 0.6606 | 0.6754 | 0.9021 | 0.8028 | 0.8495 | 0.9073 | 0.6429 | 0.9000 | 0.7500 | 0.8498 |
| JPEG compression | 1.0000 | 0.4909 | 0.6585 | 0.9271 | 1.0000 | 0.4318 | 0.6032 | 0.9846 | 1.0000 | 0.3073 | 0.4702 | 0.9408 | 0.9273 | 0.6955 | 0.7948 | 0.9015 |
| Multi-attack reference hashing | | | | | | | | | | | | | | | | |
| Gaussian noise | 0.8345 | 0.5273 | 0.6462 | 0.8465 | 0.8462 | 0.3000 | 0.4430 | 0.8846 | 0.9600 | 0.3303 | 0.4915 | 0.8948 | 0.9279 | 0.8773 | 0.9019 | 0.9588 |
| Salt&Pepper | 0.7619 | 0.5818 | 0.6598 | 0.8046 | 0.9507 | 0.6136 | 0.7459 | 0.9263 | 0.9706 | 0.3028 | 0.4615 | 0.9057 | 0.9500 | 0.6909 | 0.8000 | 0.9355 |
| Gaussian blurring | 1.0000 | 0.6000 | 0.7500 | 0.9955 | 1.0000 | 0.6045 | 0.7535 | 0.9904 | 0.9927 | 0.6415 | 0.7794 | 0.9880 | 1.0000 | 0.6818 | 0.8108 | 0.9952 |
| Circular blurring | 1.0000 | 0.4955 | 0.6626 | 0.9811 | 1.0000 | 0.6045 | 0.7535 | 0.9904 | 0.9926 | 0.6368 | 0.7759 | 0.9870 | 1.0000 | 0.6818 | 0.8108 | 0.9953 |
| Motion blurring | 1.0000 | 0.4909 | 0.6585 | 0.9849 | 1.0000 | 0.6000 | 0.7500 | 0.9955 | 0.9855 | 0.6415 | 0.7771 | 0.9857 | 1.0000 | 0.6818 | 0.8108 | 0.9952 |
| Average filtering | 1.0000 | 0.4955 | 0.6626 | 0.9709 | 1.0000 | 0.6091 | 0.7571 | 0.9955 | 0.9714 | 0.3119 | 0.4722 | 0.9270 | 1.0000 | 0.6818 | 0.8108 | 0.9952 |
| Median filtering | 0.9590 | 0.5318 | 0.6842 | 0.9013 | 0.9926 | 0.6091 | 0.7549 | 0.9803 | 1.0000 | 0.3211 | 0.4861 | 0.9258 | 1.0000 | 0.6818 | 0.8108 | 0.9809 |
| Wiener filtering | 1.0000 | 0.4909 | 0.6585 | 0.9703 | 1.0000 | 0.6045 | 0.7535 | 0.9901 | 0.9854 | 0.6368 | 0.7736 | 0.9858 | 1.0000 | 0.6864 | 0.8140 | 0.9950 |
| Image sharpening | 0.8986 | 0.5636 | 0.6927 | 0.8884 | 1.0000 | 0.2864 | 0.4452 | 0.9313 | 0.9722 | 0.3211 | 0.4828 | 0.9071 | 0.9167 | 0.7000 | 0.7938 | 0.9011 |
| Image scaling | 1.0000 | 0.4955 | 0.6626 | 0.9828 | 1.0000 | 0.6000 | 0.7500 | 0.9955 | 0.9855 | 0.6415 | 0.6415 | 0.9868 | 0.9494 | 0.6818 | 0.7937 | 0.9607 |
| Illumination correction | 0.5046 | 1.0000 | 0.6707 | 0.7791 | 0.6376 | 0.8318 | 0.7219 | 0.7848 | 0.9714 | 0.3119 | 0.4722 | 0.9062 | 0.7500 | 0.7909 | 0.7699 | 0.8405 |
| JPEG compression | 1.0000 | 0.4909 | 0.6585 | 0.9256 | 1.0000 | 0.6045 | 0.7535 | 0.9900 | 1.0000 | 0.3073 | 0.4702 | 0.9264 | 0.9403 | 0.8591 | 0.8979 | 0.9598 |
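
As a reading aid for the Precision, Recall, and F1 columns, the sketch below shows how such scores can be derived from hashing distances and a decision threshold. The helper name `precision_recall_f1`, the choice of "distance at or below the threshold" as the positive decision, and the sample distances are assumptions for illustration; the paper's exact evaluation protocol is not reproduced here.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def precision_recall_f1(distances, is_positive, threshold):
    """Score a thresholded decision: predict positive when distance <= threshold.

    is_positive marks the ground-truth positives (assumed labelling convention).
    """
    predicted = [d <= threshold for d in distances]
    tp = sum(p and y for p, y in zip(predicted, is_positive))
    fp = sum(p and not y for p, y in zip(predicted, is_positive))
    fn = sum((not p) and y for p, y in zip(predicted, is_positive))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)
```

For example, the Wavelet entry for Gaussian noise under original image-based reference hashing reports precision 0.6257 and recall 0.9500; `round(f1_score(0.6257, 0.9500), 4)` gives 0.7545, which matches the F1 value listed in that row.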

| Manipulation | Wavelet Precision | Wavelet Recall | Wavelet F1 | Wavelet AUC | SVD Precision | SVD Recall | SVD F1 | SVD AUC | RPIVD Precision | RPIVD Recall | RPIVD F1 | RPIVD AUC | QFT Precision | QFT Recall | QFT F1 | QFT AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Original image-based reference hashing | | | | | | | | | | | | | | | | |
| Gaussian noise | 0.7451 | 0.6623 | 0.8010 | 0.7909 | 0.8385 | 0.7015 | 0.7639 | 0.8825 | 0.9782 | 0.6830 | 0.8044 | 0.9021 | 0.8802 | 0.8965 | 0.8883 | 0.9520 |
| Salt&Pepper | 0.8128 | 0.6481 | 0.7212 | 0.8307 | 0.8978 | 0.6983 | 0.7855 | 0.9164 | 0.9699 | 0.6329 | 0.7660 | 0.9282 | 0.8837 | 0.8856 | 0.8847 | 0.9572 |
| Gaussian blurring | 0.9694 | 0.5861 | 0.7305 | 0.9434 | 0.9937 | 0.6852 | 0.8811 | 0.9512 | 0.9502 | 0.6452 | 0.7685 | 0.8981 | 1.0000 | 0.8638 | 0.9269 | 0.9989 |
| Circular blurring | 0.9399 | 0.5959 | 0.7293 | 0.9526 | 0.9696 | 0.6939 | 0.8089 | 0.8274 | 0.8124 | 0.6856 | 0.7436 | 0.8467 | 1.0000 | 0.8638 | 0.9269 | 0.9989 |
| Motion blurring | 0.9745 | 0.5817 | 0.7285 | 0.9526 | 0.9952 | 0.6797 | 0.8078 | 0.9642 | 0.9827 | 0.6201 | 0.7604 | 0.9161 | 1.0000 | 0.8638 | 0.9269 | 0.9989 |
| Average filtering | 0.8786 | 0.6231 | 0.7291 | 0.8917 | 0.8728 | 0.7179 | 0.7878 | 0.8835 | 0.6562 | 0.7738 | 0.7101 | 0.7739 | 1.0000 | 0.8638 | 0.9269 | 0.9989 |
| Median filtering | 0.8838 | 0.6503 | 0.7307 | 0.8457 | 0.9269 | 0.7048 | 0.8007 | 0.9047 | 0.7296 | 0.7216 | 0.7256 | 0.8080 | 1.0000 | 0.8649 | 0.9276 | 0.9939 |
| Wiener filtering | 0.8997 | 0.6155 | 0.7309 | 0.9055 | 0.9485 | 0.7015 | 0.8065 | 0.9212 | 0.8227 | 0.6921 | 0.7539 | 0.8506 | 1.0000 | 0.8638 | 0.9269 | 0.9980 |
| Image sharpening | 0.7194 | 0.7197 | 0.7186 | 0.7878 | 0.8089 | 0.7702 | 0.7891 | 0.8656 | 0.6526 | 0.8268 | 0.7295 | 0.8014 | 0.6565 | 0.9390 | 0.7727 | 0.8653 |
| Image scaling | 0.9868 | 0.5719 | 0.7241 | 0.9640 | 0.9952 | 0.6808 | 0.8085 | 0.9672 | 0.9581 | 0.6234 | 0.7553 | 0.9180 | 1.0000 | 0.8627 | 0.9263 | 0.9986 |
| Illumination correction | 0.5008 | 0.9978 | 0.6669 | 0.6063 | 0.6256 | 0.8573 | 0.7233 | 0.7541 | 0.9941 | 0.5579 | 0.7147 | 0.9810 | 0.8854 | 0.9085 | 0.8968 | 0.9616 |
| JPEG compression | 1.0000 | 0.4909 | 0.6585 | 0.9271 | 0.9676 | 0.6830 | 0.8008 | 0.9580 | 0.9565 | 0.6495 | 0.7736 | 0.9076 | 0.7148 | 0.9281 | 0.8076 | 0.8861 |
| Multi-attack reference hashing | | | | | | | | | | | | | | | | |
| Gaussian noise | 0.7604 | 0.6569 | 0.7049 | 0.7993 | 0.8647 | 0.6961 | 0.7713 | 0.8902 | 0.9429 | 0.8638 | 0.9016 | 0.9578 | 0.9130 | 0.8922 | 0.9025 | 0.9646 |
| Salt&Pepper | 0.8415 | 0.6362 | 0.7246 | 0.8407 | 0.9261 | 0.6961 | 0.7948 | 0.9202 | 0.9738 | 0.8497 | 0.9075 | 0.9693 | 0.8906 | 0.8954 | 0.8930 | 0.9614 |
| Gaussian blurring | 1.0000 | 0.5664 | 0.7232 | 0.9797 | 1.0000 | 0.6634 | 0.7976 | 0.9807 | 0.9584 | 0.8046 | 0.8748 | 0.9481 | 1.0000 | 0.8758 | 0.9338 | 0.9989 |
| Circular blurring | 0.9943 | 0.5708 | 0.7253 | 0.9624 | 0.9951 | 0.6645 | 0.7969 | 0.9644 | 0.8596 | 0.8155 | 0.8370 | 0.9081 | 1.0000 | 0.8758 | 0.9338 | 0.9989 |
| Motion blurring | 1.0000 | 0.5654 | 0.7223 | 0.9800 | 1.0000 | 0.6656 | 0.7992 | 0.9857 | 0.9867 | 0.8079 | 0.8884 | 0.9618 | 1.0000 | 0.8758 | 0.9338 | 0.9989 |
| Average filtering | 0.9451 | 0.6002 | 0.7342 | 0.9201 | 0.9574 | 0.6852 | 0.7987 | 0.9203 | 0.6915 | 0.8328 | 0.7556 | 0.8349 | 1.0000 | 0.8758 | 0.9338 | 0.9989 |
| Median filtering | 0.7954 | 0.6438 | 0.7116 | 0.8366 | 0.9077 | 0.6961 | 0.7879 | 0.9038 | 0.7795 | 0.8297 | 0.8038 | 0.8851 | 1.0000 | 0.8769 | 0.9344 | 0.9958 |
| Wiener filtering | 0.9818 | 0.5871 | 0.7348 | 0.9369 | 0.9842 | 0.6776 | 0.8026 | 0.9542 | 0.8659 | 0.8177 | 0.8411 | 0.9195 | 1.0000 | 0.8769 | 0.9344 | 0.9984 |
| Image sharpening | 0.7271 | 0.7081 | 0.7174 | 0.7958 | 0.7901 | 0.7789 | 0.7844 | 0.8581 | 0.6722 | 0.9292 | 0.7801 | 0.8982 | 0.6579 | 0.9434 | 0.7749 | 0.8685 |
| Image scaling | 0.9923 | 0.5599 | 0.7159 | 0.9521 | 0.9952 | 0.6754 | 0.8047 | 0.9657 | 0.9716 | 0.8210 | 0.8899 | 0.9640 | 1.0000 | 0.8780 | 0.9350 | 0.9988 |
| Illumination correction | 0.5008 | 0.9978 | 0.6669 | 0.6043 | 0.6003 | 0.8638 | 0.7084 | 0.7389 | 0.9973 | 0.8111 | 0.8946 | 0.9915 | 0.8843 | 0.9161 | 0.8999 | 0.9649 |
| JPEG compression | 0.9925 | 0.5763 | 0.7292 | 0.9420 | 0.9779 | 0.6754 | 0.7990 | 0.9537 | 0.9720 | 0.8368 | 0.8994 | 0.9627 | 0.7145 | 0.9270 | 0.8070 | 0.8859 |

| Method | Similar Images | Tampered Images |
|---|---|---|
| DWT | 95.64% | 95.81% |
| Semi-Supervised (DWT) | 95.65% | 95.78% |
| Ours (DWT) | 96.19% | 97.14% |
| SVD | 84.97% | 84.92% |
| Semi-Supervised (SVD) | 85.12% | 85.08% |
| Ours (SVD) | 86.06% | 85.46% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Du, L.; He, Z.; Wang, Y.; Wang, X.; Ho, A.T.S. An Image Hashing Algorithm for Authentication with Multi-Attack Reference Generation and Adaptive Thresholding. Algorithms 2020, 13, 227. https://doi.org/10.3390/a13090227
