Abstract

Image hashing-based authentication methods have been widely studied, with continuous advances owing to their speed and memory efficiency. However, reference hash generation and threshold setting, which are used to measure the similarity between original images and their distorted versions, are important but have received little attention in most existing models. In this paper, we propose an image hashing method based on multi-attack reference generation and adaptive thresholding for image authentication. We build a prior information set with the help of multiple virtual prior attacks and present a multi-attack reference generation method based on hashing clusters. A perceptual hashing algorithm is applied to the reference and queried images to obtain the hashing codes for authentication. Furthermore, we introduce the concept of adaptive thresholding to account for variations in hashing distance. Extensive experiments on benchmark datasets validate the effectiveness of the proposed method.

1. Introduction

With the aid of sophisticated photo-editing software, multimedia content authentication is becoming increasingly important. Images edited with Photoshop may mislead people and cause a social crisis of confidence. In recent years, image manipulation has been widely criticized for altering the appearance of image content to the point of making it unrealistic. Hence, tampering detection, which verifies the integrity and authenticity of digital multimedia data, has emerged as an important research topic. Perceptual image hashing [1,2,3,4] supports image content authentication by representing the semantic content in a compact signature that should be sensitive to content-altering modifications but robust against content-preserving manipulations such as blur, noise and illumination correction [5,6,7].

A perceptual image hashing system generally consists of three pipeline stages: pre-processing, hash generation and decision making. The main purpose of pre-processing is to enhance the robustness of the features by suppressing the effects of certain distortions. The reference hashes are then generated and transmitted through a secure channel. The same perceptual hashing process is applied to the queried image to be authenticated. Once its hash has been generated, authentication is carried out in the decision-making stage: the reference hash is compared with the hashes of the images in the test database using a selected distance metric, such as the Hamming distance. Currently, most perceptual hashing algorithms for authentication can be roughly divided into five categories: invariant feature transform-based methods [8,9,10,11,12,13], local feature point-based schemes [14,15,16,17,18,19,20,21,22,23], dimension reduction-based hashing [24,25,26,27,28,29], statistical feature-based hashing [30,31,32,33,34,35] and learning-based hashing [36,37,38,39].

For the decision-making stage of perceptual hashing-based image authentication, only a few studies have addressed reference generation and threshold selection. For reference hash generation, Lv et al. [36] proposed obtaining an optimal estimate of the hash centroid using kernel density estimation (KDE), taking as the centroid the value that maximizes the estimated density. Its major drawback is that the binary codes are obtained with a data-independent method; because such methods generate hashes independently of the data, they may ignore the characteristics of the data distribution. More researchers are now turning to data-dependent learning methods for image tamper detection. Data-dependent methods with learning [40,41,42,43] can be trained to fit data distributions and specific objective functions optimally, producing hashing codes that better preserve local similarity. In our previous work [44], we proposed a clustering-based reference hashing method, motivated by the observation that the hash of the original image may not actually be the centroid of its cluster. Learning the reference hashing code is therefore an important topic in current multimedia security research. For authentication decision making, the simplest approach is a threshold-based classifier. In practice, however, the perceptual differences caused by the same manipulation vary across images with different textures. Traditional authentication identifies tampered images from the distances between image codes using a single fixed threshold, yet in many real-world cases no single fixed threshold classifies every image correctly.
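The KDE idea attributed to Lv et al. [36] can be illustrated with a small sketch: each candidate hash is scored by a Gaussian kernel density estimate built from all hashes in a cluster, and the highest-scoring candidate is returned. Restricting the candidates to the observed hashes, the bandwidth, and the helper name `kde_centroid` are simplifying assumptions rather than details taken from [36].

```python
import numpy as np

def kde_centroid(hashes: np.ndarray, bandwidth: float = 1.0) -> np.ndarray:
    """Return the row of `hashes` (shape n x m) with the highest estimated density.

    The density at each candidate is an unnormalized Gaussian KDE built from
    all n hashes; only observed hashes are considered as candidates.
    """
    # Pairwise squared Euclidean distances between all hash vectors.
    diff = hashes[:, None, :] - hashes[None, :, :]
    sq_dist = np.sum(diff ** 2, axis=-1)
    # Gaussian KDE evaluated at every observed hash.
    density = np.exp(-sq_dist / (2.0 * bandwidth ** 2)).sum(axis=1)
    return hashes[np.argmax(density)]

# Toy cluster: three nearby hashes and one outlier.
cluster = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [3.0, 3.0]])
print(kde_centroid(cluster))
```

The outlier contributes almost nothing to the density of the other points, so the estimate is pulled toward the dense part of the cluster rather than toward its arithmetic mean.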

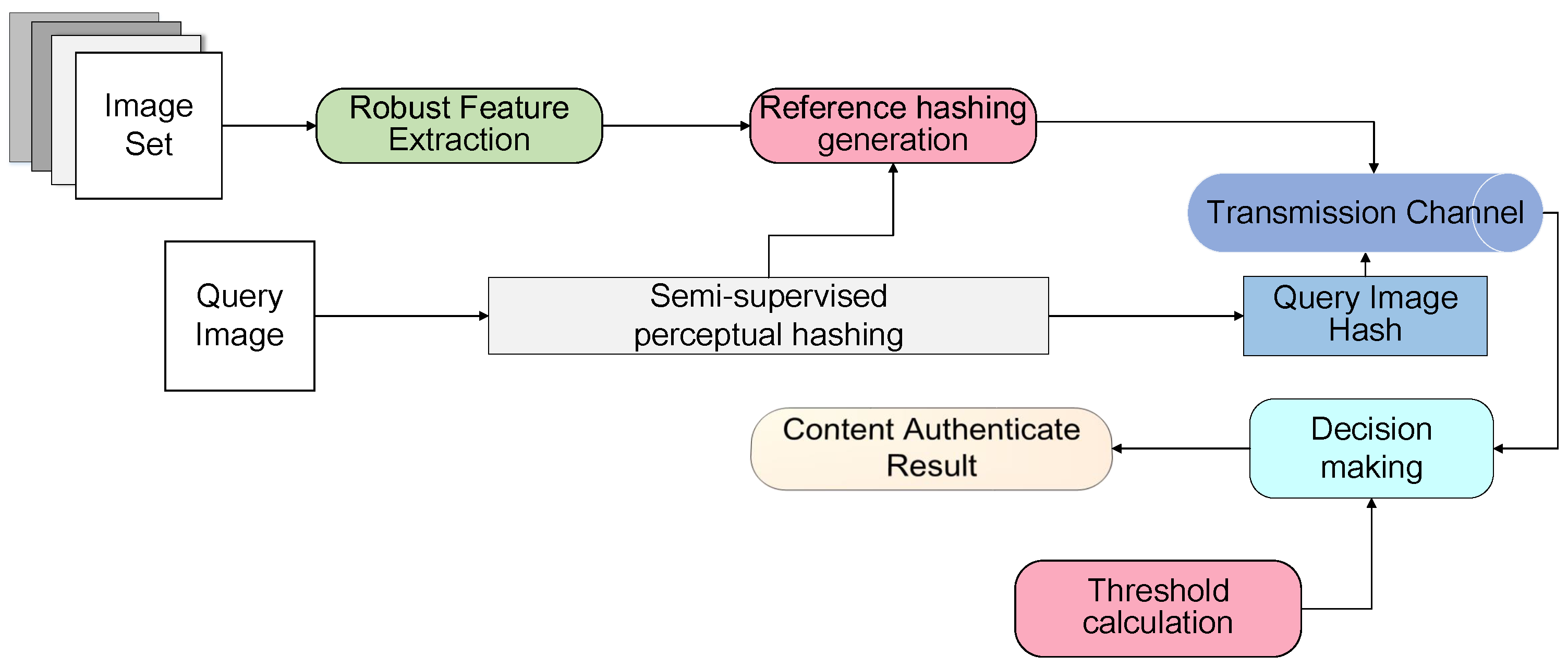

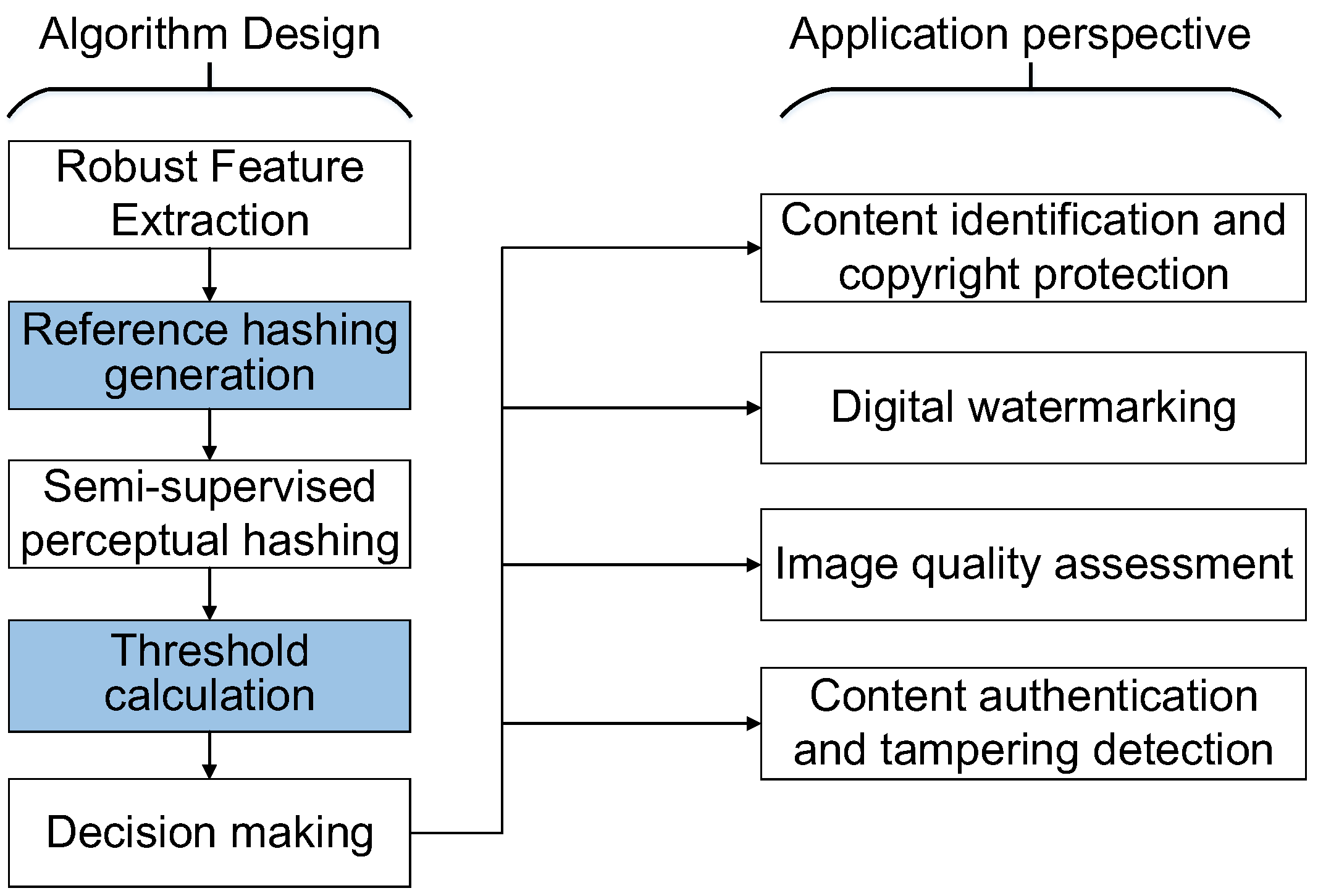

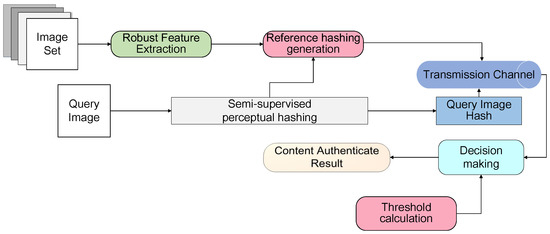

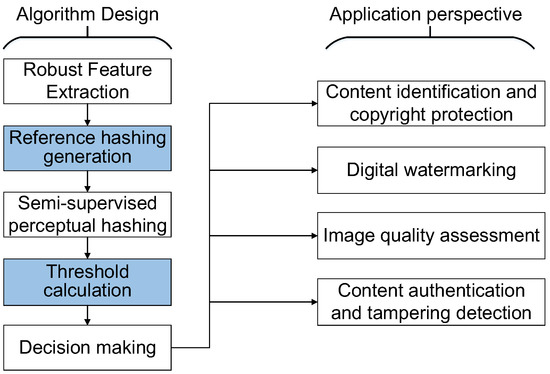

In this paper, we extend our previous work [44] and propose an image hashing framework for authentication with multi-attack reference generation and adaptive thresholding. Following the requirements of authentication applications, we build a prior information set with the help of multiple virtual prior attacks, produced by applying virtual prior distortions and attacks to the original images. Unlike the traditional image authentication task, we address this uncertainty by introducing adaptive thresholding to account for variations in hashing distance. The main difference is that a separate threshold is computed for each image, which makes the decision more robust to changes in image manipulations. We propose a data-dependent, semi-supervised image authentication scheme that generates the hashing code together with an attack-specific adaptive threshold. This threshold tag is embedded in the transmitted hashing code and can be reliably extracted at the receiver. The framework of our algorithm is shown in Figure 1. We first introduce the proposed multi-attack reference hashing algorithm. Then, we describe how the original reference images were generated for the experiments. After that, the perceptual hashing process is applied to the reference and queried images to obtain their hashing codes. Finally, the reference hashes are compared with the queried image hashes in the test database for content authentication.

Figure 1.

Block diagram of the proposed algorithm.

2. Problem Statement and Contributions

Authentication is an important issue in multimedia data protection: it makes it possible to trace the author of multimedia data and to determine whether the original content has been altered in any way since it was recorded. The hash value is a compact abstract of the content. We can regenerate a hash value from the received content and compare it with the original hash value; if they match, the content is considered authentic. In the proposed algorithm, we aim to compute a common hashing function $H(\cdot)$ for image authentication. Let $D(\cdot,\cdot)$ denote a decision-making function for comparing two hash values. For given thresholds $\tau_1$ and $\tau_2$, perceptual image hashing for tamper detection should satisfy the following criteria. If two images $x$ and $y$ are perceptually similar, their hashes should be highly correlated, i.e., $D(H(x), H(y)) < \tau_1$. Otherwise, if $z$ is a tampered version of $x$, we should have $D(H(x), H(z)) > \tau_2$.
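The two criteria can be illustrated with a minimal sketch. Here the decision function is assumed to be a normalized Hamming distance between binary hashes, and the threshold values are hypothetical; distances falling between the two thresholds are reported as undecided.

```python
def normalized_hamming(h1, h2):
    """Fraction of positions at which two equal-length binary hashes differ."""
    if len(h1) != len(h2):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(h1, h2)) / len(h1)

def decide(h_ref, h_query, tau1=0.1, tau2=0.2):
    """D < tau1 -> similar, D > tau2 -> tampered, otherwise undecided.

    tau1 and tau2 are hypothetical values chosen for illustration.
    """
    d = normalized_hamming(h_ref, h_query)
    if d < tau1:
        return "similar"
    if d > tau2:
        return "tampered"
    return "undecided"

ref = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1]
print(decide(ref, [1, 0, 1, 1, 0, 0, 1, 0, 1, 1]))  # identical hash
print(decide(ref, [0, 1, 0, 1, 0, 0, 1, 0, 1, 1]))  # 3 of 10 bits flipped
```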

The main contributions can be summarized as follows:

- (1) We build a prior information set with the help of multiple virtual prior attacks, obtained by applying virtual prior distortions and attacks to the original images. From this prior image set, we infer the cluster centroids used to generate the reference hashes for similarity measurement.

- (2) We incorporate semi-supervised information into perceptual image hash learning. Instead of determining the metric distance from training results alone, we explore the hashing distance for thresholding by considering its effect on different images.

- (3) To account for variations in the features extracted from different images, we take into account the pairwise variations among different original/received image pairs. The resulting adaptive thresholds better discriminate malicious tampering from content-preserving operations, leading to a high tamper detection rate.

3. Proposed Method

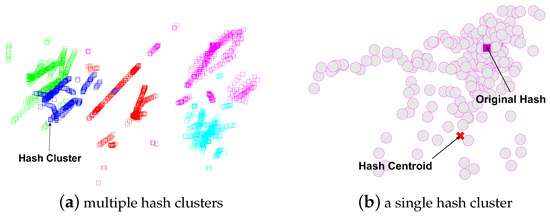

3.1. Multi-Attack Reference Hashing

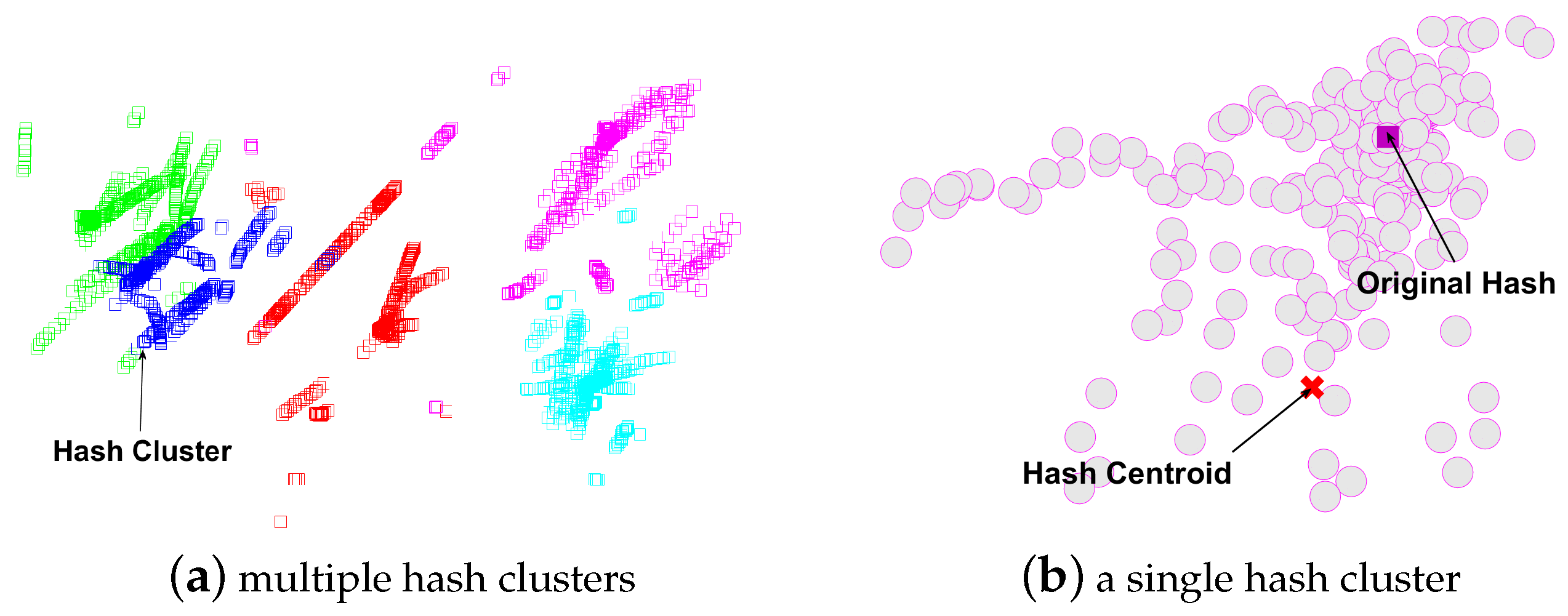

Currently, most image hashing methods take the original image as the reference. However, the hash of the original image may not be the centroid of the hashes of its distorted copies. As shown in Figure 2a, we applied 15 classes of attacks to five original images and represented the hashes of both the original images and their distorted copies in a 2-dimensional space. Five clusters can be observed in the hashing space. Zooming into one hash cluster (Figure 2b) shows that the hash of the original image may not actually be the centroid of its cluster.
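A small numerical sketch of this observation: when content-preserving attacks tend to shift the hash in a common direction, the centroid of the distorted copies moves away from the original image's hash, and the copies are on average closer to that centroid than to the original. The 2-D data and the shared offset are invented for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 2-D hash of one original image.
original = np.array([0.0, 0.0])
# Simulated hashes of 15 distorted copies. The shared offset models attacks that
# push the hash in a similar direction (an assumption), plus small scatter.
copies = original + np.array([0.5, 0.3]) + 0.05 * rng.standard_normal((15, 2))

centroid = copies.mean(axis=0)
dist_to_original = np.linalg.norm(copies - original, axis=1).mean()
dist_to_centroid = np.linalg.norm(copies - centroid, axis=1).mean()
print(f"original-to-centroid gap: {np.linalg.norm(original - centroid):.3f}")
print(f"mean distance of copies to original: {dist_to_original:.3f}")
print(f"mean distance of copies to centroid: {dist_to_centroid:.3f}")
```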

Figure 2.

Examples of hash clusters.

For the l original images in the dataset, we apply V types of content-preserving attacks with different parameter settings to generate simulated distorted copies. Let us denote the feature matrix of the attacked instances in set v as $X_v \in \mathbb{R}^{m \times t}$, where m is the dimensionality of the data features and t is the number of instances for attack v. The feature matrices for all n instances are then $X = [X_1, \dots, X_V] \in \mathbb{R}^{m \times n}$, where $n = Vt$. Note that the feature matrices are zero-centered.
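The construction of the per-attack feature matrices can be sketched as follows, with one column per attacked instance so that each `X_v` is m × t and the stacked matrix is m × n. The sizes, the perturbation model standing in for real attacks plus feature extraction, and zero-centering each view separately are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
m, l, V, settings = 8, 5, 3, 4   # feature dim, originals, attack types, settings per attack

# Hypothetical features of the l original images, one column per image.
originals = rng.standard_normal((m, l))

X_views = []
for v in range(V):
    # Placeholder for "attack, then re-extract features": each instance is the
    # original's feature vector plus an attack-dependent perturbation (assumed).
    cols = [originals[:, i] + 0.1 * (v + 1) * rng.standard_normal(m)
            for i in range(l) for _ in range(settings)]
    X_v = np.stack(cols, axis=1)                              # shape (m, t), t = l * settings
    X_views.append(X_v - X_v.mean(axis=1, keepdims=True))     # zero-center each feature row

X = np.concatenate(X_views, axis=1)                           # shape (m, n), n = V * t
print([Xv.shape for Xv in X_views], X.shape)
```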

By considering the total reconstruction errors of all the training objects, we have the following minimization problem in a matrix form, which jointly exploits the information from various content preserving multi-attack data.

where is the shared latent multi-attack feature representation. The matrix can be viewed as the basis matrix, which maps the input multi-attack features onto the corresponding latent features. Parameter is a nonnegative weighting vector to balance the significance.

From the information-theoretic point of view, the variance over all data is measured, and taken as a regularization term:

where is a nonnegative constant parameter.

The image reference for authentication is actually an infinite clustering problem. The reference is usually generated based on the cluster centroid image. Therefore, we also consider keeping the cluster structures. We formulate this objective function as:

where and are the clustering centroid and indicator.

Finally, the formulation can be written as:

Our objective function simultaneously learns the feature representations and finds the mapping matrix , the cluster centroid and indicator . The iterative optimization algorithm is as follows.

Fix all variables but optimize : The optimization problem (Equation (4)) reduces to:

By setting the derivative to zero, we have:

Fix all variables but optimize : Similarly, we solve the following optimization problem:

which has a closed-form optimal solution:

Fix all variables but and : For the cluster centroid and indicator , we obtain the following problem:

Inspired by the optimization algorithm ADPLM (adaptive discrete proximal linear method) [45], we initialize and update as follows:

where , = 0.001, denote the p-th iteration.

The indicator matrix at indices is obtained by:

where is the distance between the i-th feature codes and the s-th cluster centroid .
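The alternation between cluster centroids and the indicator matrix can be illustrated with a simplified stand-in. The sketch below uses ordinary Lloyd (k-means) iterations on the latent features: each column is assigned to its nearest centroid, as in the indicator update above, and each centroid is then recomputed as the mean of its members. It does not implement the ADPLM update of [45]; the function name, the initialization from chosen columns (for example, one per original image) and the fixed iteration count are assumptions.

```python
import numpy as np

def lloyd_clusters(F: np.ndarray, init_centroids: np.ndarray, n_iter: int = 20):
    """Alternate indicator and centroid updates on latent features F (d x n).

    Returns centroids C (d x k) and a one-hot indicator matrix G (n x k).
    Plain k-means (Lloyd) iterations, used as a stand-in for ADPLM.
    """
    if n_iter < 1:
        raise ValueError("n_iter must be at least 1")
    C = np.array(init_centroids, dtype=float)
    k, n = C.shape[1], F.shape[1]
    for _ in range(n_iter):
        # Indicator step: squared distance of every column of F to every centroid.
        dist = ((F[:, :, None] - C[:, None, :]) ** 2).sum(axis=0)   # (n, k)
        G = np.zeros((n, k))
        G[np.arange(n), dist.argmin(axis=1)] = 1.0
        # Centroid step: mean of the assigned columns; empty clusters keep
        # their previous centroid.
        counts = G.sum(axis=0)
        filled = counts > 0
        C[:, filled] = (F @ G)[:, filled] / counts[filled]
    return C, G

# Toy latent features: two well-separated groups of 10 columns each.
rng = np.random.default_rng(2)
F = np.concatenate([rng.normal(0.0, 0.1, (2, 10)), rng.normal(5.0, 0.1, (2, 10))], axis=1)
C, G = lloyd_clusters(F, init_centroids=F[:, [0, 10]])
print(C.round(2))
```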

After inferring the cluster centroids and the multi-attack feature representations, we generate the corresponding l reference images. The basic idea is to compare the hashing distances between the nearest content-preserving attacked neighbors of each original image and the corresponding cluster centroid.
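One possible reading of this step is sketched below: within each cluster, the attacked instance whose feature vector lies closest to the cluster centroid is selected as the reference. The helper name, the Euclidean distance and the nearest-to-centroid selection rule are assumptions, since the text does not spell out the exact rule.

```python
import numpy as np

def select_references(F: np.ndarray, C: np.ndarray, labels: np.ndarray) -> list:
    """For each cluster s, return the index of the column of F (d x n) closest to
    centroid C[:, s] among the columns assigned to cluster s (None if empty)."""
    refs = []
    for s in range(C.shape[1]):
        members = np.flatnonzero(labels == s)
        if members.size == 0:
            refs.append(None)          # empty cluster: no reference can be chosen
            continue
        d = np.linalg.norm(F[:, members] - C[:, [s]], axis=0)
        refs.append(int(members[np.argmin(d)]))
    return refs

# Toy example: 2-D features of 6 attacked instances in two clusters.
F = np.array([[0.0, 0.2, 0.4, 5.0, 5.1, 6.0],
              [0.0, 0.1, 0.2, 5.0, 5.1, 6.0]])
labels = np.array([0, 0, 0, 1, 1, 1])
C = np.stack([F[:, labels == s].mean(axis=1) for s in range(2)], axis=1)
print(select_references(F, C, labels))
```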

3.2. Semi-Supervised Hashing Code Learning

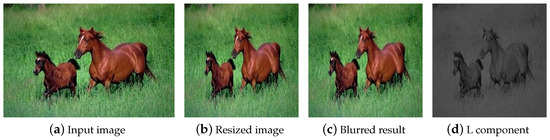

For the reference and received images, we use a semi-supervised learning algorithm for hashing code generation and image authentication. First, each input image is resized to a normalized size using bilinear interpolation; this resizing makes the hash robust against image rescaling. Then, a Gaussian low-pass filter is applied to blur the resized image, which reduces the influence of high-frequency components such as noise contamination or filtering. Let $T(i,j)$ be the element in the i-th row and the j-th column of the convolution mask. It is calculated by

$$T(i,j) = \frac{T^{(1)}(i,j)}{\sum_{i}\sum_{j} T^{(1)}(i,j)} \tag{12}$$

in which $T^{(1)}(i,j)$ is defined as

$$T^{(1)}(i,j) = e^{-\frac{i^2 + j^2}{2\sigma^2}} \tag{13}$$

where $\sigma$ is the standard deviation of all elements in the convolution mask.
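The normalized mask can be computed directly from the two definitions. In the sketch below, the 3 × 3 size and σ = 1 are hypothetical values, and the indices i, j are assumed to run symmetrically around the mask center.

```python
import numpy as np

def gaussian_mask(size: int = 3, sigma: float = 1.0) -> np.ndarray:
    """Normalized Gaussian low-pass mask T(i, j) = T1(i, j) / sum(T1).

    `size` should be odd; i and j run from -(size // 2) to size // 2.
    """
    r = size // 2
    i, j = np.mgrid[-r:r + 1, -r:r + 1]
    t1 = np.exp(-(i ** 2 + j ** 2) / (2.0 * sigma ** 2))   # unnormalized T1(i, j)
    return t1 / t1.sum()                                    # elements sum to 1

mask = gaussian_mask(3, 1.0)
print(mask.round(4))
```

Normalizing by the sum keeps the overall brightness of the image unchanged after filtering. The mask would then be convolved with the resized image, for example with `scipy.ndimage.convolve`.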

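Next, the RGB color image is converted into the CIE LAB space, and the image is represented by its L component, because the L component closely matches human perception of lightness. The RGB color image is first converted into the XYZ color space by the following formula:

$$\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = \begin{bmatrix} 0.412453 & 0.357580 & 0.180423 \\ 0.212671 & 0.715160 & 0.072169 \\ 0.019334 & 0.119193 & 0.950227 \end{bmatrix} \begin{bmatrix} R \\ G \\ B \end{bmatrix} \tag{14}$$

where R, G and B are the red, green and blue components of the color pixel. It is then converted into the CIE LAB space by the following equations:

$$L = 116\, f\!\left(\frac{Y}{Y_w}\right) - 16, \quad a = 500\left[f\!\left(\frac{X}{X_w}\right) - f\!\left(\frac{Y}{Y_w}\right)\right], \quad b = 200\left[f\!\left(\frac{Y}{Y_w}\right) - f\!\left(\frac{Z}{Z_w}\right)\right] \tag{15}$$

where $X_w = 0.950456$, $Y_w = 1.0$ and $Z_w = 1.088754$ are the CIE XYZ tristimulus values of the reference white point, and $f(t)$ is determined by:

$$f(t) = \begin{cases} t^{1/3}, & t > 0.008856 \\ 7.787\, t + 16/116, & \text{otherwise} \end{cases} \tag{16}$$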
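The conversion chain can be sketched in a few lines. The RGB-to-XYZ matrix below is assumed to be the standard linear-RGB (D65) matrix, chosen because its row sums reproduce the reference white values quoted in the text; R, G and B are assumed to be linear values in [0, 1], with no gamma decoding.

```python
import numpy as np

# Assumed standard linear-RGB -> XYZ (D65) matrix; its row sums equal the
# reference white (0.950456, 1.0, 1.088754) quoted in the text.
RGB_TO_XYZ = np.array([[0.412453, 0.357580, 0.180423],
                       [0.212671, 0.715160, 0.072169],
                       [0.019334, 0.119193, 0.950227]])
WHITE = np.array([0.950456, 1.0, 1.088754])

def f(t):
    """CIE LAB companding function: cube root above 0.008856, linear below."""
    return np.where(t > 0.008856, np.cbrt(t), 7.787 * t + 16.0 / 116.0)

def rgb_to_lab(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 array of RGB values in [0, 1] to CIE LAB."""
    xyz = rgb @ RGB_TO_XYZ.T                      # per-pixel matrix product
    fx, fy, fz = (f(xyz[..., c] / WHITE[c]) for c in range(3))
    L = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b = 200.0 * (fy - fz)
    return np.stack([L, a, b], axis=-1)

white_pixel = np.ones((1, 1, 3))
print(rgb_to_lab(white_pixel).round(3))  # L close to 100, a and b close to 0
```

Only the first channel, `rgb_to_lab(img)[..., 0]`, would be kept as the L component used for hashing.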

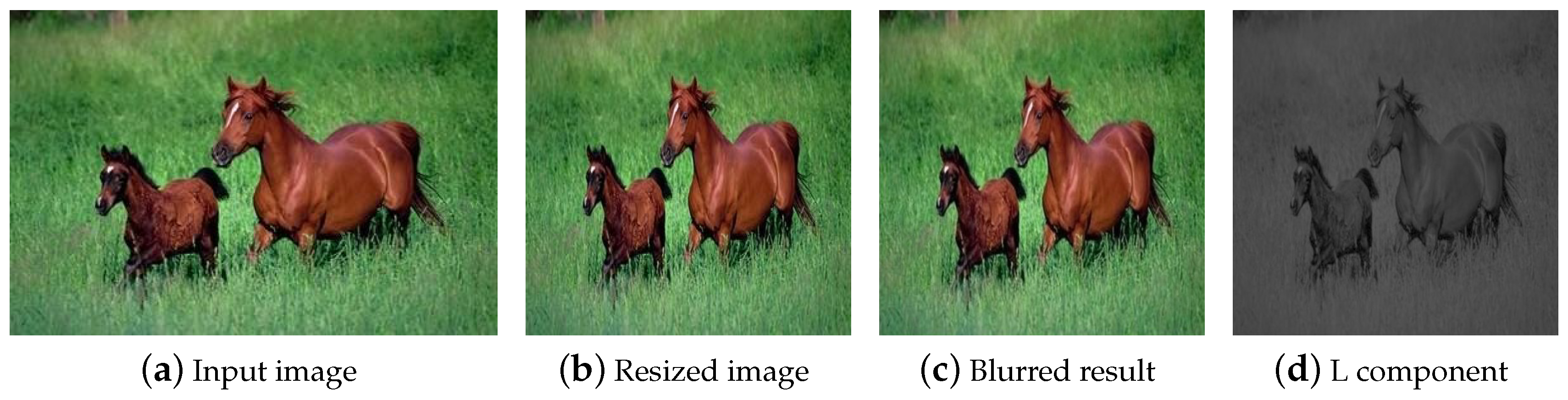

Figure 3 illustrates an example of the preprocessing.

Figure 3.

An example of preprocessing.

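Suppose we have N images in our training set, L of which are selected as labeled images. The features of a single image are expressed as $x_i \in \mathbb{R}^{M}$, where M is the extracted feature length. The features of all images are represented as $X = [x_1, \dots, x_N] \in \mathbb{R}^{M \times N}$, and the features of the labeled images as $X_l \in \mathbb{R}^{M \times L}$. Note that these feature matrices are zero-centered. The goal of our algorithm is to learn K hash functions that map X to a compact representation in a low-dimensional Hamming space, where K is the code length. Our hash function is defined as:

$$H(X) = \operatorname{sgn}(W^{\top} X), \qquad W = [w_1, \dots, w_K] \in \mathbb{R}^{M \times K} \tag{17}$$

The hash function of a single image is defined as:

$$h_k(x_i) = \operatorname{sgn}(w_k^{\top} x_i), \qquad k = 1, \dots, K \tag{18}$$

To learn a W that simultaneously maximizes the empirical accuracy on the labeled images and the variance of the hash bits over all images, the empirical accuracy on the labeled images is defined as:

$$J(W) = \sum_{k=1}^{K} \Big[ \sum_{(x_i, x_j) \in \mathcal{M}} h_k(x_i)\, h_k(x_j) - \sum_{(x_i, x_j) \in \mathcal{C}} h_k(x_i)\, h_k(x_j) \Big] \tag{19}$$

where $\mathcal{M}$ and $\mathcal{C}$ are the sets of perceptually similar and perceptually different labeled pairs. The matrix $S \in \mathbb{R}^{L \times L}$ encodes this classification of the labeled image pairs as follows:

$$S_{ij} = \begin{cases} 1, & (x_i, x_j) \in \mathcal{M} \\ -1, & (x_i, x_j) \in \mathcal{C} \\ 0, & \text{otherwise} \end{cases} \tag{20}$$

Specifically, a pair is labeled perceptually similar when the two images are the same image or attacked versions of the same image, and perceptually different when the two images are different images or when one of them has suffered malicious manipulation or a perceptually significant attack.

Using S, Equation (19) can be written compactly as $J(W) = \frac{1}{2}\operatorname{tr}\{H(X_l)\, S\, H(X_l)^{\top}\}$. Replacing the sign function with its signed magnitude gives the relaxed form:

$$J(W) = \frac{1}{2}\operatorname{tr}\{W^{\top} X_l S X_l^{\top} W\} \tag{21}$$

This relaxation is quite intuitive: similar images should not only have the same sign but also large projection magnitudes, while the projections of dissimilar images should not only have different signs but also be as different as possible.

Moreover, to maximize the amount of information per hash bit, we maximize the variance of the hash bits over all images and use it as a regularization term of the hash function:

$$R(W) = \sum_{k=1}^{K} \operatorname{var}[h_k(x)] = \sum_{k=1}^{K} \operatorname{var}[\operatorname{sgn}(w_k^{\top} x)] \tag{22}$$

Because this function is non-differentiable, its extremum is difficult to compute. However, the maximum variance of a hash function is lower-bounded by the scaled variance of the projected data, so the information-theoretic regularization is represented as:

$$R(W) = \frac{1}{2}\operatorname{tr}\{W^{\top} X X^{\top} W\} \tag{23}$$

Finally, the overall semi-supervised objective function combines the relaxed empirical fitness term from Equation (21) and the regularization term from Equation (23):

$$J(W) = \frac{1}{2}\operatorname{tr}\{W^{\top} X_l S X_l^{\top} W\} + \frac{\eta}{2}\operatorname{tr}\{W^{\top} X X^{\top} W\} = \frac{1}{2}\operatorname{tr}\{W^{\top} Q W\} \tag{24}$$

where $\eta$ is a tradeoff parameter and $Q = X_l S X_l^{\top} + \eta X X^{\top}$. The optimization problem is as follows:

$$\max_{W} \; J(W) \quad \text{s.t.} \quad W^{\top} W = I \tag{25}$$

where the constraint $W^{\top} W = I$ makes the projection directions orthogonal. The optimal projection W is obtained by eigenvalue decomposition of Q: its columns are the eigenvectors of Q associated with the K largest eigenvalues.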
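The eigendecomposition solution can be sketched with NumPy. Following the semi-supervised hashing formulation above, W collects the eigenvectors of Q = X_l S X_l^T + ηXX^T with the K largest eigenvalues, and the codes are sgn(W^T X). The random data, the pair labels and the values of K and η below are hypothetical; a real system would use extracted image features and labeled image pairs.

```python
import numpy as np

def ssh_projection(X, Xl, S, K, eta=1.0):
    """Top-K eigenvectors of Q = Xl S Xl^T + eta X X^T, returned as columns of W (M x K).

    X: M x N zero-centered features of all images; Xl: M x L labeled features;
    S: symmetric L x L pair-label matrix with entries in {-1, 0, 1}.
    """
    Q = Xl @ S @ Xl.T + eta * (X @ X.T)
    # Q is symmetric, so eigh returns real eigenvalues in ascending order
    # together with orthonormal eigenvectors.
    eigvals, eigvecs = np.linalg.eigh(Q)
    top = np.argsort(eigvals)[::-1][:K]
    return eigvecs[:, top]

def hash_codes(W, X):
    """H = sgn(W^T X); exact zeros are mapped to +1 so every bit is +/-1."""
    H = np.sign(W.T @ X)
    H[H == 0] = 1
    return H

rng = np.random.default_rng(3)
X = rng.standard_normal((16, 200))
X -= X.mean(axis=1, keepdims=True)        # zero-center each feature
Xl = X[:, :20]                            # first 20 images treated as labeled
S = np.zeros((20, 20))
for i in range(0, 20, 2):                 # hypothetical similar pairs (i, i + 1)
    S[i, i + 1] = S[i + 1, i] = 1
S[0, 2] = S[2, 0] = -1                    # one hypothetical dissimilar pair
W = ssh_projection(X, Xl, S, K=8, eta=1.0)
H = hash_codes(W, X)
print(W.shape, H.shape)                   # (16, 8) (8, 200)
print(np.allclose(W.T @ W, np.eye(8)))    # True: orthonormal projection directions
```

Because Q is symmetric, `numpy.linalg.eigh` is the appropriate solver; it guarantees real eigenvalues and orthonormal eigenvectors, which satisfies the constraint W^T W = I directly.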

3.3. Adaptive Thresholds-Based Decision Making

To measure the similarity between the hashes of original and attacked/tampered images, the metric distance between two hashing codes is calculated by:

where and are two image hashes. In general, the more similar the images, the smaller the distance. The greater the difference, the greater the distance.

Then, the threshold T is defined to judge whether the image is a similar image or a tampered image.

If the distance is less than a given threshold, the two images are judged as visually identical images. Otherwise, they are judged as distinct images.

Traditional image tamper detection algorithms use a fixed value as the threshold for judging whether images are similar or tampered. However, because image characteristics differ, some images cannot be judged correctly with a fixed threshold. In our adaptive thresholding algorithm, we first find, for each original image, the maximum distance among its similar images and the minimum distance among its tampered images. To prevent these two values from being too extreme, we set the following limits:

where is the distance between the similar image and the original image; is the distance between the tampered image and the original image. and are two constants set experimentally. Then, the resulting maximum and minimum values are compared with fixed thresholds:

where is a fixed threshold obtained experimentally, is the adaptive threshold suitable for this image. Then, all images have their own thresholds, which are represented as:
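Because the limiting and comparison equations are not reproduced here, the sketch below is a hypothetical reading of the procedure rather than the authors' exact rule: the largest similar-image distance is capped at a constant `alpha`, the smallest tampered-image distance is floored at a constant `beta`, and the per-image threshold is placed midway between them when the two groups are separable, otherwise falling back to the fixed threshold `t_fixed`. All three constants are illustrative.

```python
def adaptive_threshold(similar_d, tampered_d, t_fixed=0.12, alpha=0.2, beta=0.05):
    """Hypothetical per-image threshold (not the authors' exact rule).

    similar_d: distances from content-preserving copies to one original image.
    tampered_d: distances from tampered copies to the same original image.
    alpha, beta, t_fixed: illustrative constants.
    """
    d_max = min(max(similar_d), alpha)   # cap the largest similar-image distance
    d_min = max(min(tampered_d), beta)   # floor the smallest tampered-image distance
    if d_max < d_min:
        # The two groups are separable: put the threshold midway between them.
        return (d_max + d_min) / 2.0
    # The groups overlap: fall back to the fixed threshold.
    return t_fixed

# One threshold per original image.
thresholds = {
    "img_a": adaptive_threshold([0.02, 0.05, 0.08], [0.30, 0.41]),
    "img_b": adaptive_threshold([0.02, 0.15], [0.10, 0.40]),
}
print(thresholds)
```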

Finally, we prepend the adaptive threshold to the hash code and transmit the two together. Thus, the final hash code is represented as:
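One way to prepend the threshold to the hash for transmission is sketched below with Python's `struct` module. The byte layout (a big-endian 32-bit float threshold, a 32-bit bit count, then the bits packed most-significant-bit first) is an assumption, as is storing the bit count; no wire format is specified in the text. Storing the threshold as a 32-bit float rounds it slightly.

```python
import struct

def pack_hash(threshold: float, bits: list) -> bytes:
    """Prepend a 32-bit float threshold and a bit count to bits packed into bytes."""
    header = struct.pack(">fI", threshold, len(bits))   # 4-byte float + 4-byte count
    payload = bytearray((len(bits) + 7) // 8)
    for i, bit in enumerate(bits):
        if bit:
            payload[i // 8] |= 0x80 >> (i % 8)          # most significant bit first
    return header + bytes(payload)

def unpack_hash(data: bytes):
    """Inverse of pack_hash: return (threshold, bits) at the receiver."""
    threshold, n_bits = struct.unpack(">fI", data[:8])
    payload = data[8:]
    bits = [(payload[i // 8] >> (7 - i % 8)) & 1 for i in range(n_bits)]
    return threshold, bits

msg = pack_hash(0.19, [1, 0, 1, 1, 0, 0, 1, 0, 1, 1])
t, bits = unpack_hash(msg)
print(round(t, 4), bits)
```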

4. Experiments

4.1. Data

Our experiments were carried out on two real-world datasets. The first is CASIA [46], which contains 918 image pairs consisting of 384 × 256 real images and their corresponding distorted images with different texture characteristics. The second is RTD [47,48], which contains 220 real images and their corresponding distorted images at a resolution of 1920 × 1080.

To ensure that the training images differed from the testing images, we selected 301 non-repetitive original images and their corresponding tampered images to generate 66,231 images as our training data. Of these 66,231 images, 10,000 were randomly selected as a labeled subset. We used 226 repetitive original images and their corresponding sets of tampered images to determine the threshold of each image. The remaining images in the CASIA and RTD datasets were used to test performance.

4.2. Baselines

We compared our proposed algorithm with a number of baselines. In particular, we compared it with:

Wavelet-based image hashing [49]: an invariant feature transform-based method that builds an image hash from the sub-bands of a wavelet decomposition of the image, which conveniently transforms the image from the spatial domain to the frequency domain.

SVD-based image hashing [24]: a dimension reduction-based method that uses spectral matrix invariants as embodied by singular value decomposition. Invariant features based on matrix decomposition show good robustness against noise addition, blurring and compression attacks.

RPIVD-based image hashing [30]: incorporates ring partition and invariant vector distance into image hashing by computing image statistics, including the mean, variance, standard deviation and kurtosis.

Quaternion-based image hashing [12]: considers multiple features and constructs a quaternion image, to which a quaternion Fourier transform is applied for hash generation.

4.3. Perceptual Robustness

To validate the perceptual robustness of the proposed algorithm, we applied twelve types of content-preserving operations: (a) Gaussian noise addition with a variance of 0.005; (b) salt-and-pepper noise addition with a density of 0.005; (c) Gaussian blurring with a filter standard deviation of 10; (d) circular blurring with a radius of 2; (e) motion blurring with a linear motion length of 3 and a motion angle of 45 degrees; (f) average filtering with a filter size of 5; (g) median filtering with a filter size of 5; (h) Wiener filtering with a filter size of 5; (i) image sharpening with alpha = 0.49; (j) image scaling by a factor of 1.2; (k) illumination correction with gamma = 1.18; and (l) JPEG compression with a quality factor of 20.
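Three of these operations can be sketched with NumPy on grayscale images scaled to [0, 1], using the parameter values listed above. Interpreting the illumination correction as I^γ (rather than I^(1/γ)) and clipping noisy values to [0, 1] are assumptions; the remaining operations would typically come from an image processing library.

```python
import numpy as np

rng = np.random.default_rng(4)

def gaussian_noise(img, var=0.005):
    """(a) Add zero-mean Gaussian noise with the given variance, then clip to [0, 1]."""
    return np.clip(img + rng.normal(0.0, np.sqrt(var), img.shape), 0.0, 1.0)

def salt_and_pepper(img, density=0.005):
    """(b) Set about a `density` fraction of pixels to 0 or 1 with equal probability."""
    out = img.copy()
    mask = rng.random(img.shape) < density
    out[mask] = rng.integers(0, 2, size=int(mask.sum())).astype(img.dtype)
    return out

def gamma_correction(img, gamma=1.18):
    """(k) Power-law illumination change I ** gamma (direction of gamma assumed)."""
    return img ** gamma

img = np.full((64, 64), 0.5)
attacked = {"gaussian": gaussian_noise(img),
            "salt_pepper": salt_and_pepper(img),
            "gamma": gamma_correction(img)}
for name, out in attacked.items():
    print(name, float(np.abs(out - img).mean()))
```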

We extracted reference hashing codes based on the original image (ORH) and on our proposed multi-attack reference hashing (MRH). For the content-preserving distorted images, we calculated the distances between the reference hashing codes and the hashing codes of the distorted images. The statistical results under the different attacks are presented in Table 1. As shown, the hashing distances for all four baseline methods are sufficiently small. In our experiments, we set the threshold to 0.12 to distinguish similar images from forged images in the CASIA dataset for the RPIVD method. Similarly, for the other three methods, we set the thresholds to 1.2, 0.0012 and 0.008, respectively, which gave their best results.

Table 1.

Hashing distances under different content-preserving manipulations.

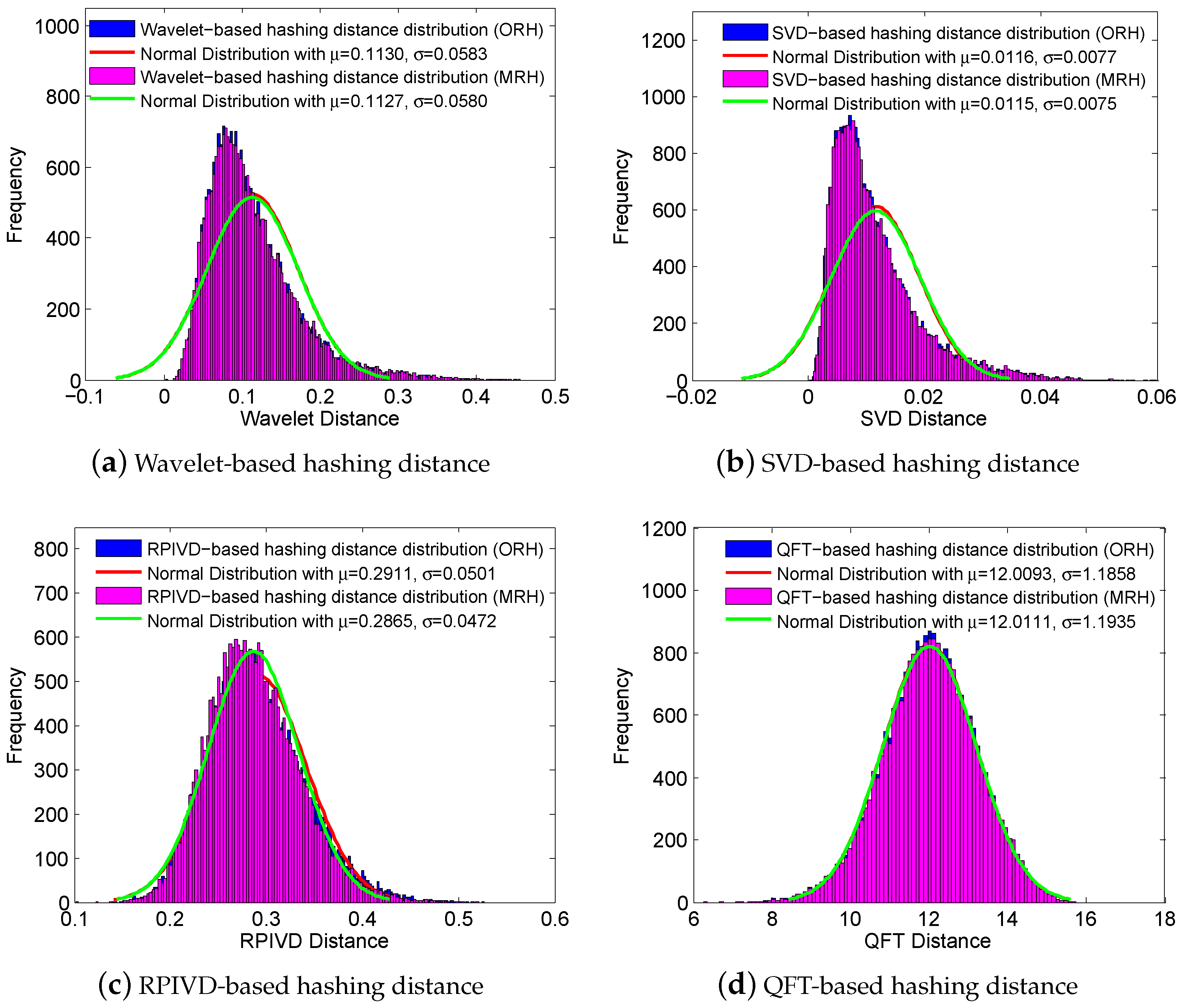

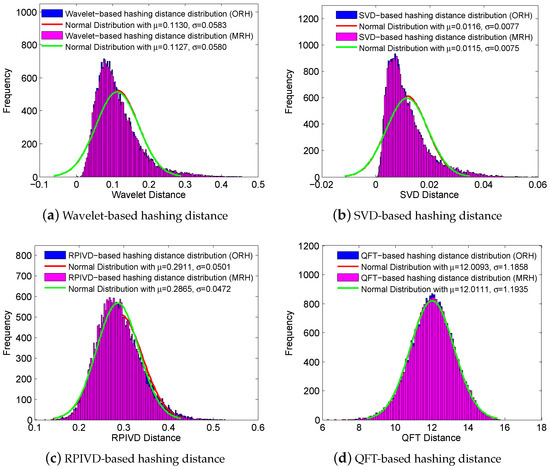

4.4. Discriminative Capability

The discriminative capability of an image hashing scheme means that visually distinct images should have significantly different hashes; in other words, two visually distinct images should have a very low probability of generating similar hashes. Here, the RTD dataset, consisting of 220 different uncompressed color images, was used to validate the discriminative capability of our proposed multi-attack reference hashing algorithm. We first extracted reference hashing codes for all 220 images in RTD and then calculated the hashing distance between each image and the other 219 images, obtaining 220 × (220 − 1)/2 = 24,090 hashing distances. Figure 4 shows the distribution of these 24,090 hashing distances with varying thresholds, where the abscissa is the hashing distance and the ordinate is its frequency. The histogram clearly shows that the proposed method has good discriminative capability. For instance, with 0.12 as the threshold (the value used on the CASIA dataset when extracting the reference hashing with the RPIVD method), the minimum hashing distance was 0.1389, which is above the threshold. The results show that multi-attack reference hashing can replace original image-based reference hashing with good discrimination.
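The pair count follows from comparing every unordered pair of distinct images exactly once. The sketch below reproduces the count with 220 random 64-bit hashes standing in for the RTD reference hashes; the hashes, the normalized Hamming distance and the 0.12 threshold are illustrative assumptions, so the resulting distances say nothing about the proposed method itself.

```python
import random
from itertools import combinations

def pairwise_distances(hashes, distance):
    """Distance for every unordered pair of distinct images: n * (n - 1) / 2 values."""
    return [distance(a, b) for a, b in combinations(hashes, 2)]

def normalized_hamming(a, b):
    """Fraction of differing bits between two equal-length binary hashes."""
    return sum(x != y for x, y in zip(a, b)) / len(a)

rng = random.Random(0)
# 220 hypothetical 64-bit hashes standing in for the RTD reference hashes.
hashes = [[rng.randint(0, 1) for _ in range(64)] for _ in range(220)]
dists = pairwise_distances(hashes, normalized_hamming)
threshold = 0.12   # illustrative value
print(len(dists))                                   # 24090 = 220 * 219 / 2
print(min(dists), sum(d < threshold for d in dists))
```

A method discriminates well when the minimum of these distances stays above the chosen threshold, so that no pair of distinct images is mistaken for a similar pair.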

Figure 4.

Distribution of hashing distances between hashing pairs with varying thresholds.

4.5. Authentication Results

To evaluate reference hashing performance for authentication, we compared the proposed multi-attack reference hashing (MRH) with original image-based reference hashing (ORH) on four baseline image hashing methods (wavelet-based, SVD-based, RPIVD-based and QFT-based image hashing) under twelve content-preserving operations. The results are shown in Table 2 and Table 3; higher values indicate better performance for all metrics. The proposed MRH algorithm outperformed ORH by a clear margin, irrespective of the content-preserving operation and the dataset (RTD or CASIA). This is particularly evident for illumination correction: for instance, compared with original image-based reference hashing, multi-attack reference hashing increased the AUC under illumination correction by 21.98% on the RTD dataset when the reference hashing was obtained with the wavelet method (Table 2). For the QFT approach, the proposed multi-attack reference hashing was more stable and performed better than the corresponding original image-based reference hashing. Because the QFT robust image hashing technique processes all three channels of a color image, the chrominance information is preserved and the image features are more distinctive. Consequently, multi-attack reference hashing is better able to resist geometric attacks and content-preserving operations; for instance, it increased the precision under Gaussian noise by 3.28% on the RTD dataset.

Table 2.

Comparisons between the original image-based reference hashing and the proposed multi-attack reference hashing (RTD dataset).

Table 3.

Comparisons between original image-based reference hashing and the proposed multi-attack reference hashing (CASIA dataset).

For performance analysis, we used wavelet-based and SVD-based image hashing to extract features and trained the semi-supervised method for each content-preserving manipulation. The experimental results are summarized in Table 4, which shows the probability of true authentication of the proposed method compared with the wavelet-based and SVD-based features and the corresponding semi-supervised method. Here, for the wavelet-based method, and ; and for SVD-based method, and . The "similar image" column gives the rate at which similar images are correctly judged, which indicates the robustness of the algorithm; the "tampering image" column gives the rate at which tampered images are correctly judged, which indicates its discrimination. Higher values mean better robustness and discrimination. Only our approach uses adaptive thresholds; the other approaches use a fixed threshold that balances robustness and discrimination.
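The two Table 4 columns can be read as two rates: the fraction of similar images whose distance falls below the applicable threshold, and the fraction of tampered images whose distance does not. The sketch below computes both for per-image thresholds; the toy distances and thresholds are invented, and the rule that a distance below the threshold means "similar" follows the decision rule of Section 3.3.

```python
def true_authentication_rates(similar_d, tampered_d, thresholds):
    """Return (similar images accepted, tampered images rejected) as fractions.

    similar_d, tampered_d: dict image_id -> distances to that image's reference hash.
    thresholds: dict image_id -> threshold; a distance below it means "similar".
    """
    n_sim = sum(len(ds) for ds in similar_d.values())
    n_tam = sum(len(ds) for ds in tampered_d.values())
    accepted = sum(d < thresholds[k] for k, ds in similar_d.items() for d in ds)
    rejected = sum(d >= thresholds[k] for k, ds in tampered_d.items() for d in ds)
    return accepted / n_sim, rejected / n_tam

similar = {"a": [0.02, 0.05], "b": [0.15, 0.18]}
tampered = {"a": [0.10, 0.30], "b": [0.35, 0.40]}
fixed = {"a": 0.12, "b": 0.12}      # one threshold shared by all images
adaptive = {"a": 0.08, "b": 0.25}   # hypothetical per-image thresholds
print(true_authentication_rates(similar, tampered, fixed))     # (0.5, 0.75)
print(true_authentication_rates(similar, tampered, adaptive))  # (1.0, 1.0)
```

In this toy case, image "b" has naturally larger distances for its similar copies, so a shared threshold rejects them while a per-image threshold accepts them without admitting any tampered copy.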

Table 4.

Result for the probability of true authentication capability.

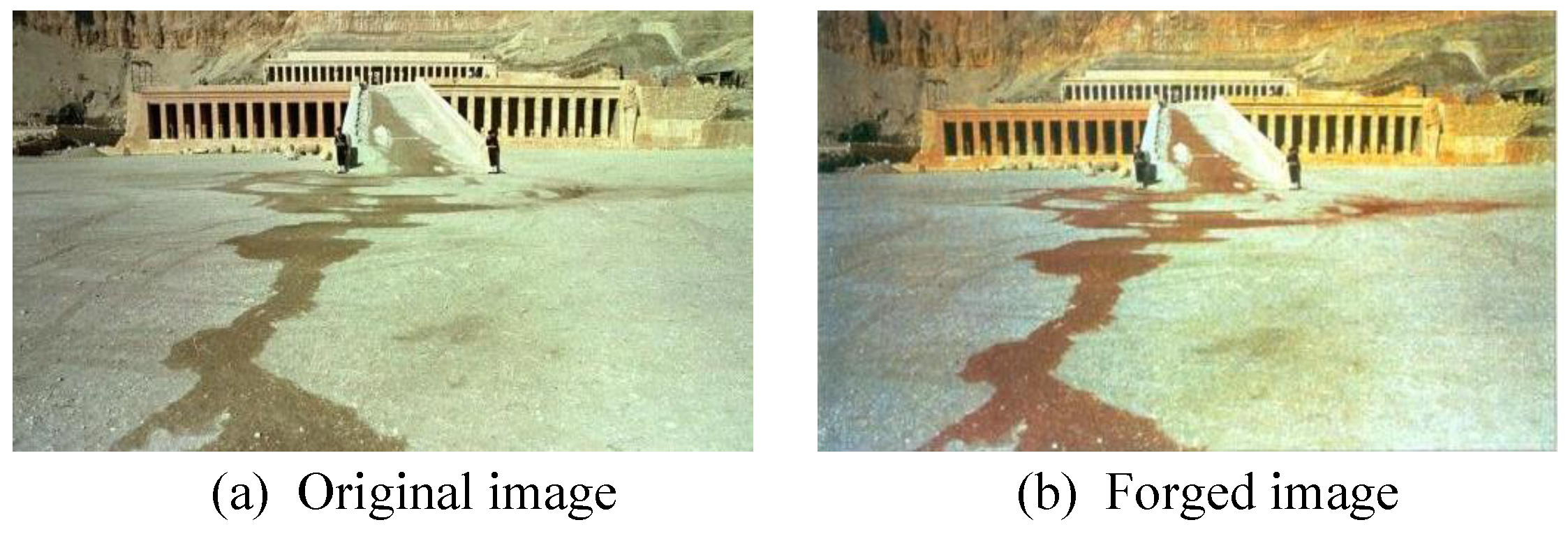

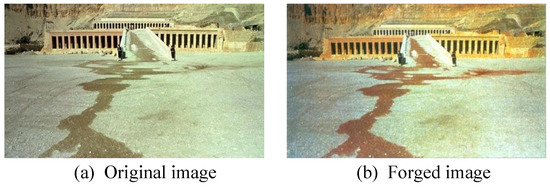

5. Domains of Application

With the aid of sophisticated photo-editing software, multimedia content security is becoming increasingly important. Using image editing tools such as Photoshop, counterfeiters can easily tamper with color attributes to distort the actual meaning of images. Figure 5 shows a real example of image tampering. Such edited images spread over social networks, not only disturbing our daily lives but also seriously threatening social harmony and stability. If tampered images are widely used in official media, scientific discovery or even forensic evidence, their trustworthiness will undoubtedly be reduced, with a serious impact on many aspects of society.

Figure 5.

The German-language daily tabloid Blick altered the color of the flood water to blood red and distributed the falsified image to news channels.

Many image hashing algorithms are widely used in image authentication, image copy detection, digital watermarking, image quality assessment and other fields, as shown in Figure 6. Perceptual image hashing aims to be smoothly invariant to small changes in the image (rotation, crop, gamma correction, noise addition, adding a border). This is in contrast to cryptographic hash functions that are designed for non-smoothness and to change entirely if any single bit changes. Our proposed perceptual image hashing algorithm is mainly for image authentication applications. Our technique is suitable for processing large image data, making it a valuable tool for image authentication applications.
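The contrast between the two kinds of hash can be shown with a toy example: changing a single pixel by one intensity level alters a SHA-256 digest completely, while a simple average hash (one bit per pixel, set when the pixel exceeds the image mean) stays the same. The 4 × 4 image and the average hash are illustrative choices, not the hashing method proposed here.

```python
import hashlib

def average_hash(pixels):
    """Toy perceptual hash: 1 where a pixel exceeds the image mean, else 0."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]

def sha256_of(pixels):
    """Cryptographic digest of the raw 8-bit pixel values."""
    return hashlib.sha256(bytes(p for row in pixels for p in row)).hexdigest()

img = [[10, 200, 30, 220],
       [15, 210, 25, 230],
       [12, 205, 35, 225],
       [18, 215, 20, 235]]
# Same image with one pixel nudged by a single intensity level.
tweaked = [row[:] for row in img]
tweaked[0][0] += 1

print(sha256_of(img) == sha256_of(tweaked))        # False: digest changes completely
print(average_hash(img) == average_hash(tweaked))  # True: perceptual hash unchanged
```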

Figure 6.

A generic framework of image hashing and an application perspective.

6. Conclusions

In this paper, we have proposed a hashing algorithm based on multi-attack reference generation and adaptive thresholding for image authentication. We simultaneously exploited supervised content-preserving images and multiple attacks for feature generation and hash learning. We specifically took into account the pairwise variations among different original/received image pairs, which makes the threshold more adaptable and its value more reasonable. We performed extensive experiments on two image datasets and compared our results with state-of-the-art hashing baselines; the experimental results demonstrate that the proposed method yields superior performance. For image hashing-based authentication, a scheme with both high computational efficiency and reasonable authentication performance is desirable. Compared with original image-based reference generation, the limitation of our work is that the clustering operation is time-consuming. In future work, we will design co-regularized hashing for multiple features, which is expected to perform even better.

Author Contributions

Conceptualization, L.D.; methodology, L.D., Z.H. and Y.W.; software, L.D., Z.H. and X.W.; validation, Z.H. and Y.W.; formal analysis, L.D., X.W. and A.T.S.H.; data curation, Z.H. and Y.W.; writing-original draft preparation, L.D., Z.H. and Y.W.; writing-review and editing, X.W., and A.T.S.H.; funding acquisition, L.D. and X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (grant number 61602344), and the Science and Technology Development Fund of Tianjin Education Commission for Higher Education, China (grant number 2017KJ091, 2018KJ222).

Conflicts of Interest

The authors declare no conflict of interest regarding the publication of this article.

References

- Kalker, T.; Haitsma, J.; Oostveen, J.C. Issues with Digital Watermarking and Perceptual Hashing. Proc. SPIE 2001, 4518, 189–197.

- Tang, Z.; Chen, L.; Zhang, X.; Zhang, S. Robust Image Hashing with Tensor Decomposition. IEEE Trans. Knowl. Data Eng. 2018, 31, 549–560.

- Yang, H.; Yin, J.; Yang, Y. Robust Image Hashing Scheme Based on Low-Rank Decomposition and Path Integral LBP. IEEE Access 2019, 7, 51656–51664.

- Tang, Z.; Yu, M.; Yao, H.; Zhang, H.; Yu, C.; Zhang, X. Robust Image Hashing with Singular Values of Quaternion SVD. Comput. J. 2020.

- Tang, Z.; Ling, M.; Yao, H.; Qian, Z.; Zhang, X.; Zhang, J.; Xu, S. Robust Image Hashing via Random Gabor Filtering and DWT. CMC Comput. Mater. Contin. 2018, 55, 331–344.

- Karsh, R.K.; Saikia, A.; Laskar, R.H. Image Authentication Based on Robust Image Hashing with Geometric Correction. Multimed. Tools Appl. 2018, 77, 25409–25429.

- Gharde, N.D.; Thounaojam, D.M.; Soni, B.; Biswas, S.K. Robust Perceptual Image Hashing Using Fuzzy Color Histogram. Multimed. Tools Appl. 2018, 77, 30815–30840.

- Tang, Z.; Yang, F.; Huang, L.; Zhang, X. Robust image hashing with dominant DCT coefficients. Optik Int. J. Light Electron Opt. 2014, 125, 5102–5107.

- Lei, Y.; Wang, Y.-G.; Huang, J. Robust image hash in radon transform domain for authentication. Signal Process. Image Commun. 2011, 26, 280–288.

- Tang, Z.; Dai, Y.; Zhang, X.; Huang, L.; Yang, F. Robust image hashing via colour vector angles and discrete wavelet transform. IET Image Process. 2014, 8, 142–149.

- Ouyang, J.; Coatrieux, G.; Shu, H. Robust hashing for image authentication using quaternion discrete Fourier transform and log-polar transform. Digit. Signal Process. 2015, 41, 98–109.

- Yan, C.-P.; Pun, C.-M.; Yuan, X. Quaternion-based image hashing for adaptive tampering localization. IEEE Trans. Inf. Forensics Secur. 2016, 11, 2664–2677. [Google Scholar] [CrossRef]

- Yan, C.-P.; Pun, C.-M. Multi-scale difference map fusion for tamper localization using binary ranking hashing. IEEE Trans. Inf. Forensics Secur. 2017, 12, 2144–2158. [Google Scholar] [CrossRef]

- Wang, P.; Jiang, A.; Cao, Y.; Gao, Y.; Tan, R.; He, H.; Zhou, M. Robust image hashing based on hybrid approach of scale-invariant feature transform and local binary patterns. In Proceedings of the 2018 IEEE 23rd International Conference on Digital Signal Processing (DSP), Shanghai, China, 19–21 November 2018; pp. 1–5. [Google Scholar]

- Qin, C.; Hu, Y.; Yao, H.; Duan, X.; Gao, L. Perceptual image hashing based on Weber local binary pattern and color angle representation. IEEE Access 2019, 7, 45460–45471.

- Yan, C.-P.; Pun, C.-M.; Yuan, X.-C. Adaptive local feature based multi-scale image hashing for robust tampering detection. In Proceedings of the TENCON 2015 – 2015 IEEE Region 10 Conference, Macao, China, 1–4 November 2015; pp. 1–4.

- Yan, C.-P.; Pun, C.-M.; Yuan, X.-C. Multi-scale image hashing using adaptive local feature extraction for robust tampering detection. Signal Process. 2016, 121, 1–16.

- Pun, C.-M.; Yan, C.-P.; Yuan, X. Robust image hashing using progressive feature selection for tampering detection. Multimed. Tools Appl. 2017, 77, 11609–11633.

- Qin, C.; Chen, X.; Luo, X.; Zhang, X.; Sun, X. Perceptual image hashing via dual-cross pattern encoding and salient structure detection. Inf. Sci. 2018, 423, 284–302.

- Monga, V.; Evans, B. Robust perceptual image hashing using feature points. In Proceedings of the 2004 International Conference on Image Processing, Singapore, 24–27 October 2004; pp. 677–680.

- Qin, C.; Chen, X.; Dong, J.; Zhang, X. Perceptual image hashing with selective sampling for salient structure features. Displays 2016, 45, 26–37.

- Qin, C.; Sun, M.; Chang, C.-C. Perceptual hashing for color images based on hybrid extraction of structural features. Signal Process. 2018, 142, 194–205.

- Anitha, K.; Leveenbose, P. Edge detection based salient region detection for accurate image forgery detection. In Proceedings of the IEEE International Conference on Computational Intelligence and Computing Research, Coimbatore, India, 18–20 December 2015; pp. 1–4.

- Kozat, S.S.; Mihcak, K.; Venkatesan, R. Robust perceptual image hashing via matrix invariances. In Proceedings of the 2004 International Conference on Image Processing, Singapore, 24–27 October 2004; pp. 3443–3446.

- Ghouti, L. Robust perceptual color image hashing using quaternion singular value decomposition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Florence, Italy, 4–9 May 2014; pp. 3794–3798.

- Abbas, S.Q.; Ahmed, F.; Zivic, N.; Ur-Rehman, O. Perceptual image hashing using SVD based noise resistant local binary pattern. In Proceedings of the International Congress on Ultra Modern Telecommunications and Control Systems and Workshops, Lisbon, Portugal, 18–20 October 2016; pp. 401–407.

- Tang, Z.; Ruan, L.; Qin, C.; Zhang, X.; Yu, C. Robust image hashing with embedding vector variance of LLE. Digit. Signal Process. 2015, 43, 17–27.

- Sun, R.; Zeng, W. Secure and robust image hashing via compressive sensing. Multimed. Tools Appl. 2014, 70, 1651–1665.

- Liu, H.; Xiao, D.; Xiao, Y.; Zhang, Y. Robust image hashing with tampering recovery capability via low-rank and sparse representation. Multimed. Tools Appl. 2016, 75, 7681–7696.

- Tang, Z.; Zhang, X.; Li, X.; Zhang, S. Robust image hashing with ring partition and invariant vector distance. IEEE Trans. Inf. Forensics Secur. 2016, 11, 200–214.

- Srivastava, M.; Siddiqui, J.; Ali, M.A. Robust image hashing based on statistical features for copy detection. In Proceedings of the IEEE Uttar Pradesh Section International Conference on Electrical, Computer and Electronics Engineering, Varanasi, India, 9–11 December 2017; pp. 490–495.

- Huang, Z.; Liu, S. Robustness and discrimination oriented hashing combining texture and invariant vector distance. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Korea, 22–26 October 2018; pp. 1389–1397.

- Zhang, D.; Chen, J.; Shao, B. Perceptual image hashing based on Zernike moment and entropy. Electron. Sci. Technol. 2015, 10, 12.

- Chen, Y.; Yu, W.; Feng, J. Robust image hashing using invariants of Tchebichef moments. Optik Int. J. Light Electron Opt. 2014, 125, 5582–5587.

- Hosny, K.M.; Khedr, Y.M.; Khedr, W.I.; Mohamed, E.R. Robust image hashing using exact Gaussian-Hermite moments. IET Image Process. 2018, 12, 2178–2185.

- Lv, X.; Wang, A. Compressed binary image hashes based on semisupervised spectral embedding. IEEE Trans. Inf. Forensics Secur. 2013, 8, 1838–1849.

- Bondi, L.; Lameri, S.; Güera, D.; Bestagini, P.; Delp, E.J.; Tubaro, S. Tampering detection and localization through clustering of camera-based CNN features. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1855–1864.

- Yarlagadda, S.K.; Güera, D.; Bestagini, P.; Zhu, F.M.; Tubaro, S.; Delp, E.J. Satellite image forgery detection and localization using GAN and one-class classifier. arXiv 2018, arXiv:1802.04881.

- Du, L.; Chen, Z.; Ke, Y. Image hashing for tamper detection with multi-view embedding and perceptual saliency. Adv. Multimed. 2018, 2018, 4235268.

- Wang, Y.; Zhang, L.; Nie, F.; Li, X.; Chen, Z.; Wang, F. WeGAN: Deep Image Hashing with Weighted Generative Adversarial Networks. IEEE Trans. Multimed. 2020, 22, 1458–1469.

- Wang, Y.; Ward, R.; Wang, Z.J. Coarse-to-Fine Image DeHashing Using Deep Pyramidal Residual Learning. IEEE Signal Process. Lett. 2020, 22, 1295–1299.

- Jiang, C.; Pang, Y. Perceptual image hashing based on a deep convolution neural network for content authentication. J. Electron. Imaging 2018, 27, 043055.

- Peng, Y.; Zhang, J.; Ye, Z. Deep Reinforcement Learning for Image Hashing. IEEE Trans. Multimed. 2020, 22, 2061–2073.

- Du, L.; Wang, Y.; Ho, A.T.S. Multi-attack Reference Hashing Generation for Image Authentication. In Proceedings of the Digital Forensics and Watermarking – 18th International Workshop (IWDW 2019), Chengdu, China, 2–4 November 2019; pp. 407–420.

- Zhang, Z.; Liu, L. Binary Multi-View Clustering. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1774–1782.

- Dong, J.; Wang, W. CASIA image tampering detection evaluation database. In Proceedings of the 2013 IEEE China Summit and International Conference on Signal and Information Processing, Beijing, China, 6–10 July 2013; pp. 422–426.

- Korus, P.; Huang, J. Evaluation of random field models in multi-modal unsupervised tampering localization. In Proceedings of the 2016 IEEE International Workshop on Information Forensics and Security, Abu Dhabi, UAE, 4–7 December 2016; pp. 1–6.

- Korus, P.; Huang, J. Multi-scale analysis strategies in PRNU-based tampering localization. IEEE Trans. Inf. Forensics Secur. 2017, 12, 809–824.

- Venkatesan, R.; Koon, S.M.; Jakubowski, M.H.; Moulin, P. Robust image hashing. In Proceedings of the IEEE International Conference on Image Processing, Thessaloniki, Greece, 7–10 October 2001; pp. 664–666.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).