Groundwater Prediction Using Machine-Learning Tools

Abstract

1. Introduction

2. Background on Groundwater Prediction

3. Techniques Used

3.1. Multivariate Linear Regression

3.2. Multilayer Perceptron

3.3. Random Forest

3.4. eXtreme Gradient Boosting

3.5. Support Vector Machine and Support Vector Regression

3.6. Gaussian Mixture Models

3.7. Performance Metrics

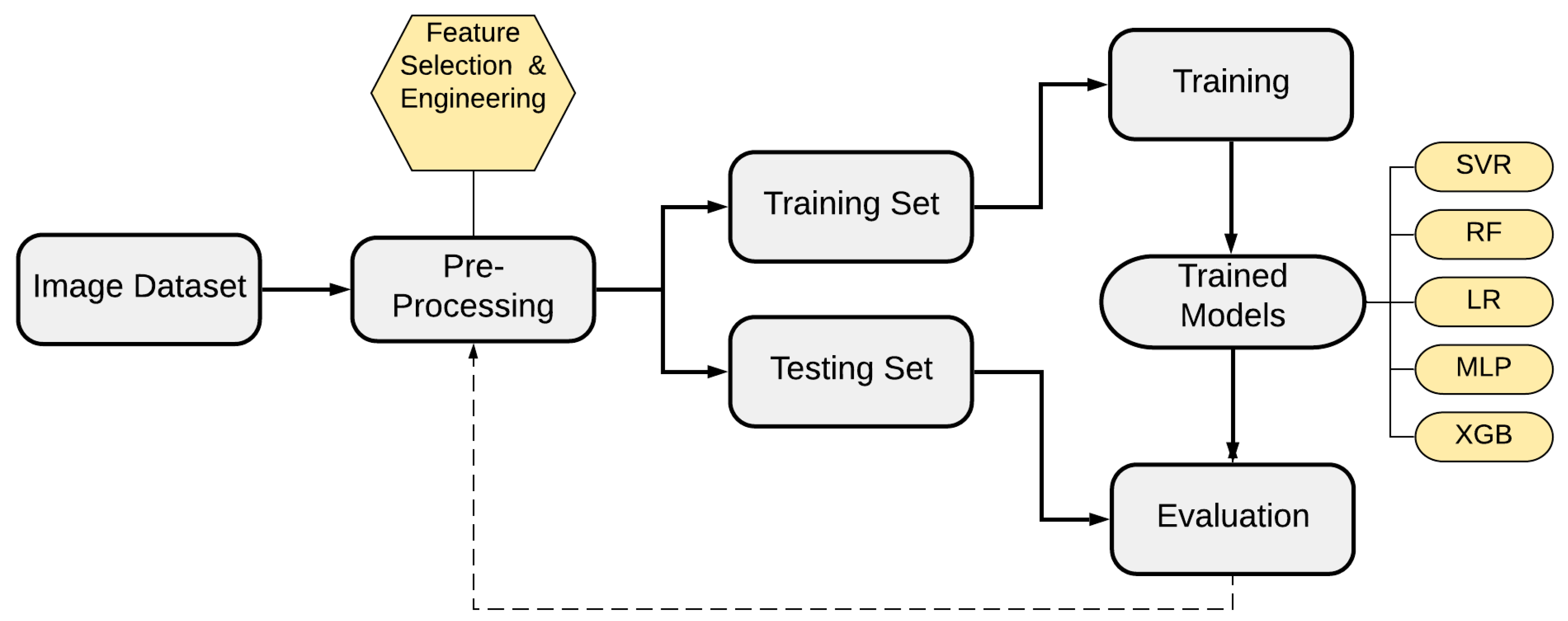
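The results tables report two error measures, MAE (mean absolute error) and RMSE (root mean square error). As a minimal sketch using their standard definitions, the two can be computed as below. The function names and the sample values are hypothetical and chosen only for illustration; they are not data from the study.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: the average of |y_i - yhat_i|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error: square root of the average squared residual."""
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))


# Hypothetical values for illustration only (not data from the study).
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.0, 2.0, 3.0, 8.0]

print(mae(observed, predicted))   # 1.0
print(rmse(observed, predicted))  # 2.0
```

A single large residual raises RMSE more than MAE, which is consistent with the RMSE columns in the results tables being larger than the MAE columns throughout.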

4. Groundwater Prediction Methodology

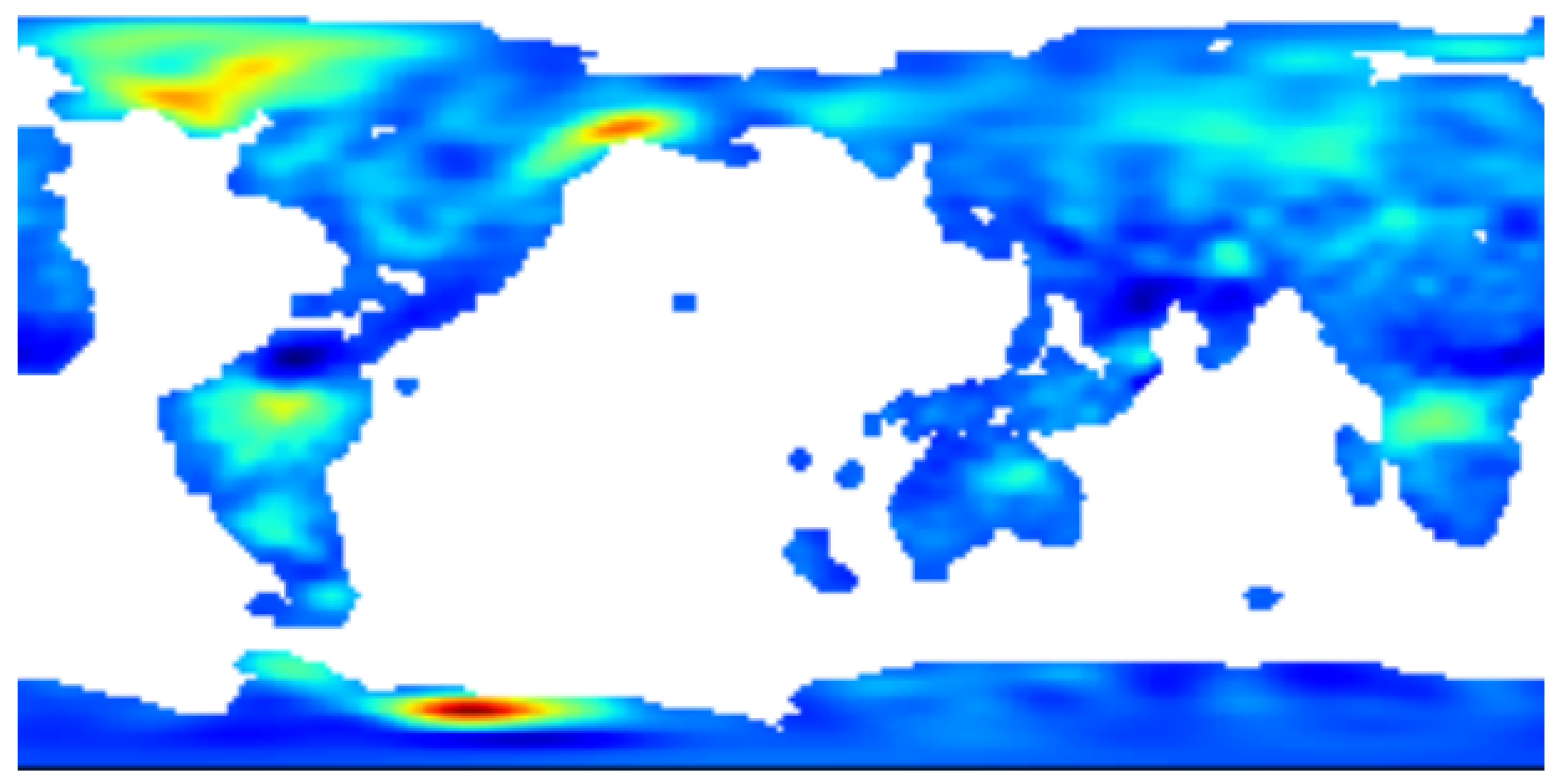

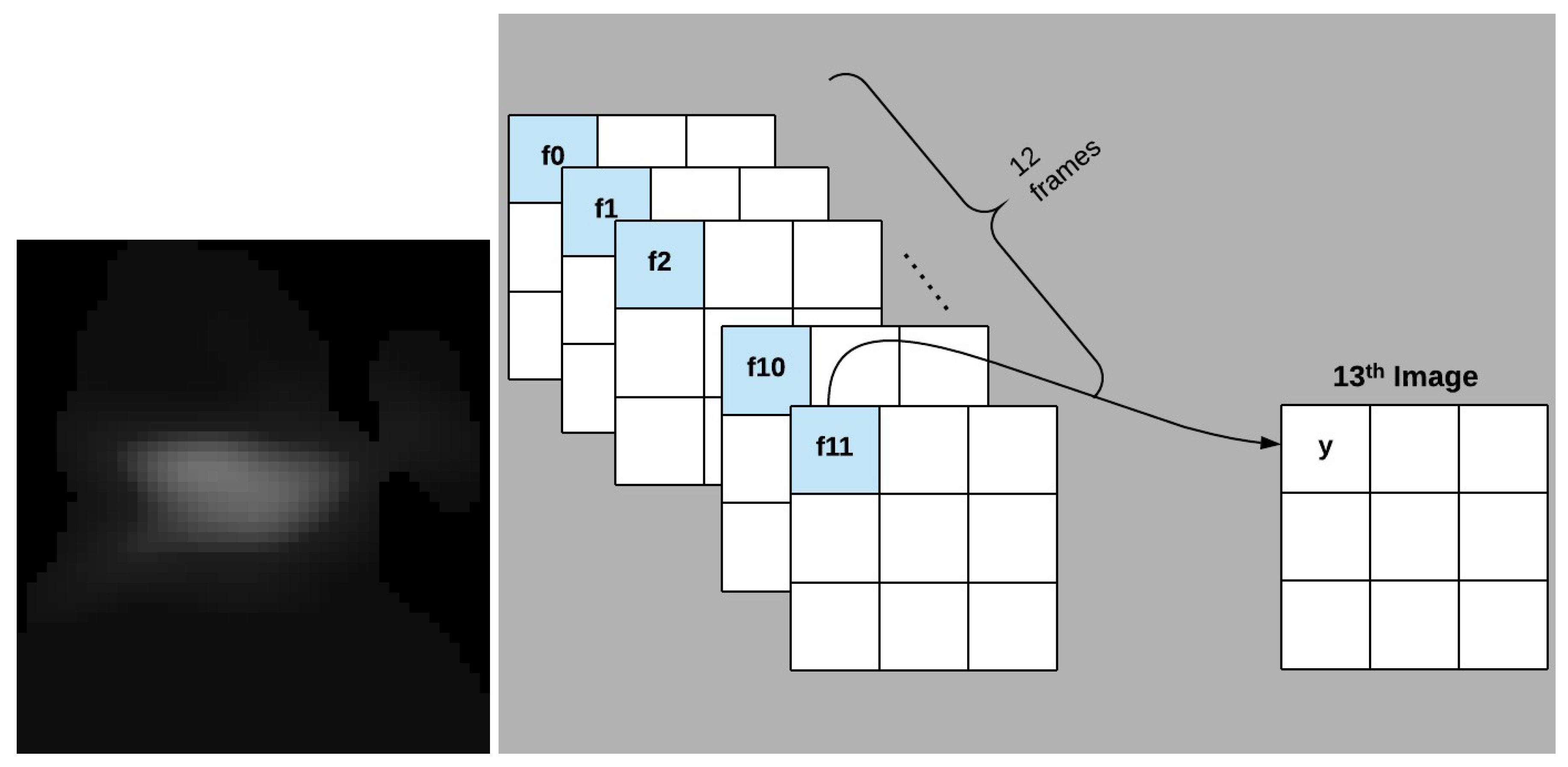

4.1. Monthly Groundwater Data Set

4.2. Image Pre-Processing

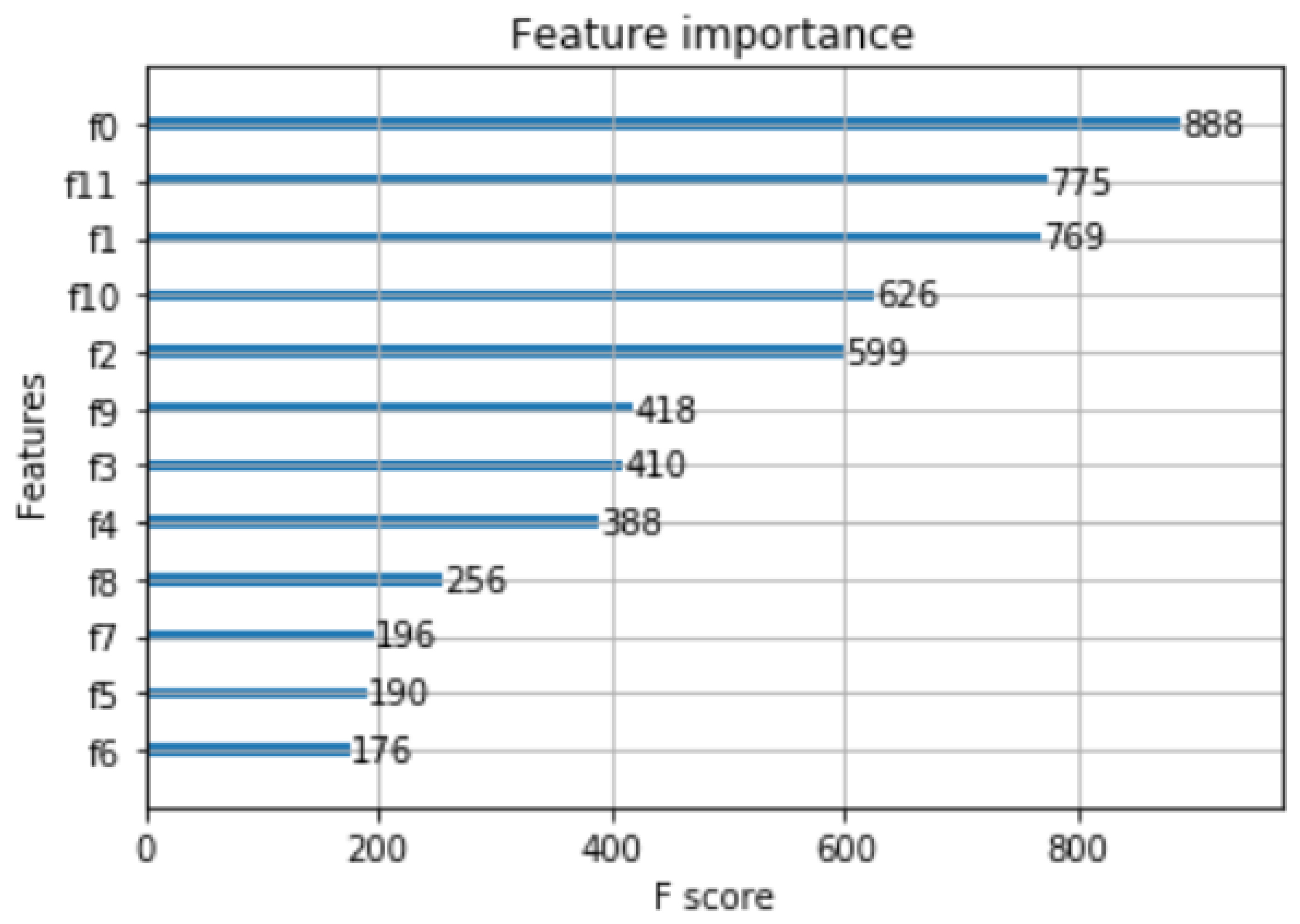

4.3. Feature Selection

4.3.1. Same-Pixel Features

- a = f(0, 11)

- b = f(0, 11, 1)

- c = f(0, 11, 1, 10)

- d = f(0, 11, 1, 10, 2)

- e = f(0, 11, 1, 10, 2, 9)

- f = f(0, 11, 1, 10, 2, 9, 3)

- g = f(0, 11, 1, 10, 2, 9, 3, 8, 4)
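The feature sets a to g listed above can be sketched as index selections. This sketch assumes that the numbers 0 to 11 index positions in a 12-month window of values for a single pixel; which end of the window is the most recent month is not stated here, so the code leaves the ordering to the caller. The names `FEATURE_SETS` and `same_pixel_features` are hypothetical.

```python
# Index lists copied from the feature sets a-g in the list above.
FEATURE_SETS = {
    "a": (0, 11),
    "b": (0, 11, 1),
    "c": (0, 11, 1, 10),
    "d": (0, 11, 1, 10, 2),
    "e": (0, 11, 1, 10, 2, 9),
    "f": (0, 11, 1, 10, 2, 9, 3),
    "g": (0, 11, 1, 10, 2, 9, 3, 8, 4),
}


def same_pixel_features(window, name):
    """Pick the values of one pixel at the indices of the named feature set.

    `window` is assumed to hold 12 consecutive monthly values for the pixel.
    """
    if len(window) != 12:
        raise ValueError("expected a 12-month window")
    return [window[k] for k in FEATURE_SETS[name]]


# Hypothetical pixel history: the value at index k is 10 * k.
window = [10 * k for k in range(12)]
print(same_pixel_features(window, "c"))  # [0, 110, 10, 100]
```

Each set extends the previous one, so the sets are nested and results for a larger set can be compared directly against the smaller sets it contains.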

4.3.2. Other Local Spatiotemporal Features, and Rescaling

- Pixel coordinates (i, j);

- Time stamp t (0 = January, …, 11 = December).
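As a rough illustration of how the pixel coordinate (written i, j in the results tables) and the month index t might be rescaled before being appended to the same-pixel features, the sketch below uses min-max scaling to the range [0, 1]. The choice of min-max scaling, the grid size, and the names `rescale` and `local_features` are assumptions made for illustration; the rescaling actually used is not specified in this outline.

```python
def rescale(value, lo, hi):
    """Min-max rescaling of `value` from [lo, hi] to [0, 1] (assumed scheme)."""
    if hi == lo:
        raise ValueError("range must be non-empty")
    return (value - lo) / (hi - lo)


def local_features(i, j, t, n_rows, n_cols):
    """Return rescaled row, column, and month index for one pixel.

    i, j are zero-based pixel coordinates; t is the month (0 = January,
    11 = December). n_rows and n_cols describe a hypothetical image grid.
    """
    return [
        rescale(i, 0, n_rows - 1),
        rescale(j, 0, n_cols - 1),
        rescale(t, 0, 11),
    ]


# Hypothetical 11 x 21 grid; centre pixel in December.
print(local_features(i=5, j=10, t=11, n_rows=11, n_cols=21))  # [0.5, 0.5, 1.0]
```

Putting all three features on a common scale keeps any one of them from dominating distance-based or gradient-based learners such as SVR and MLP.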

4.3.3. Global Feature Generation Using Gaussian Mixture Models

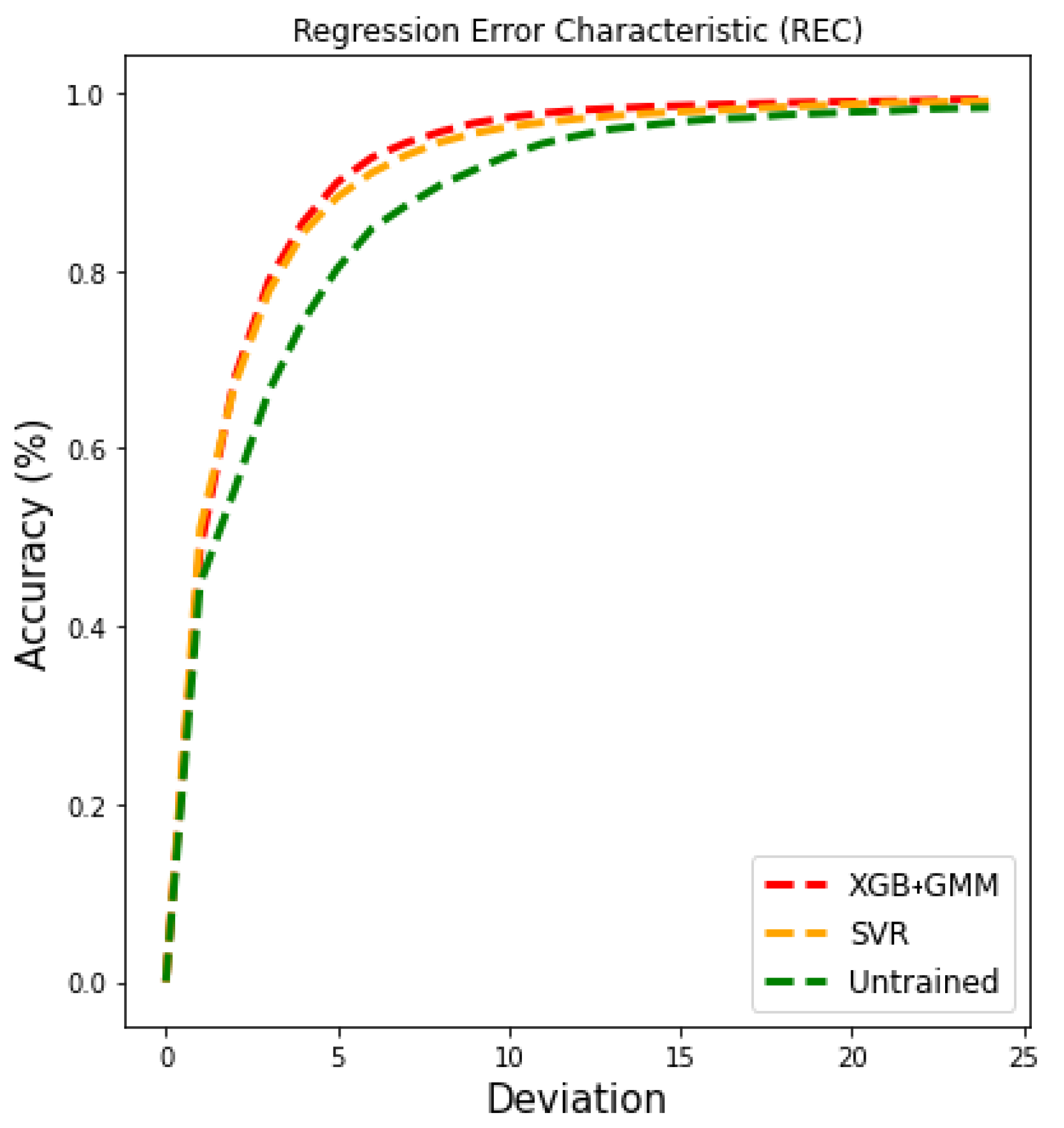

5. Performance Results and Discussion

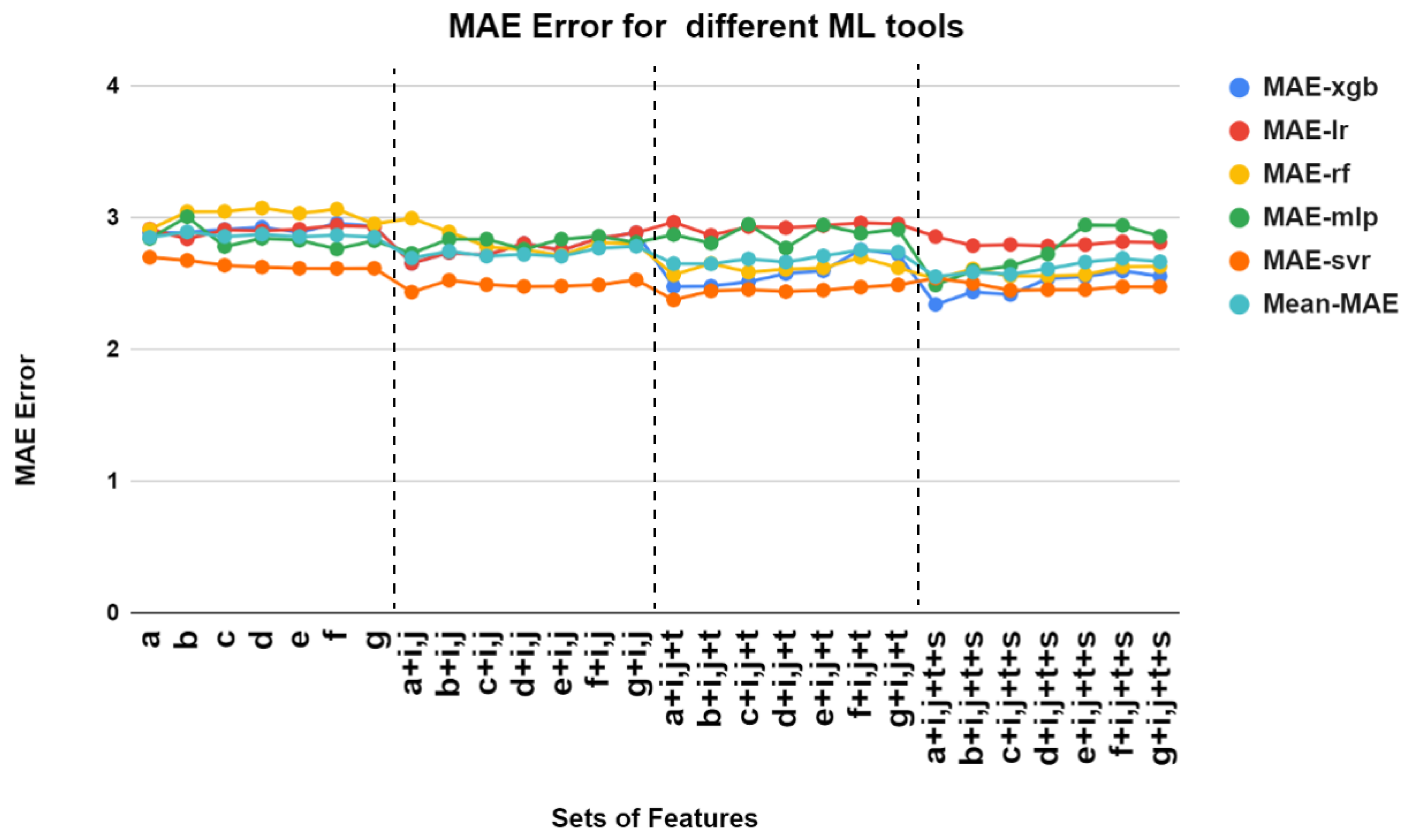

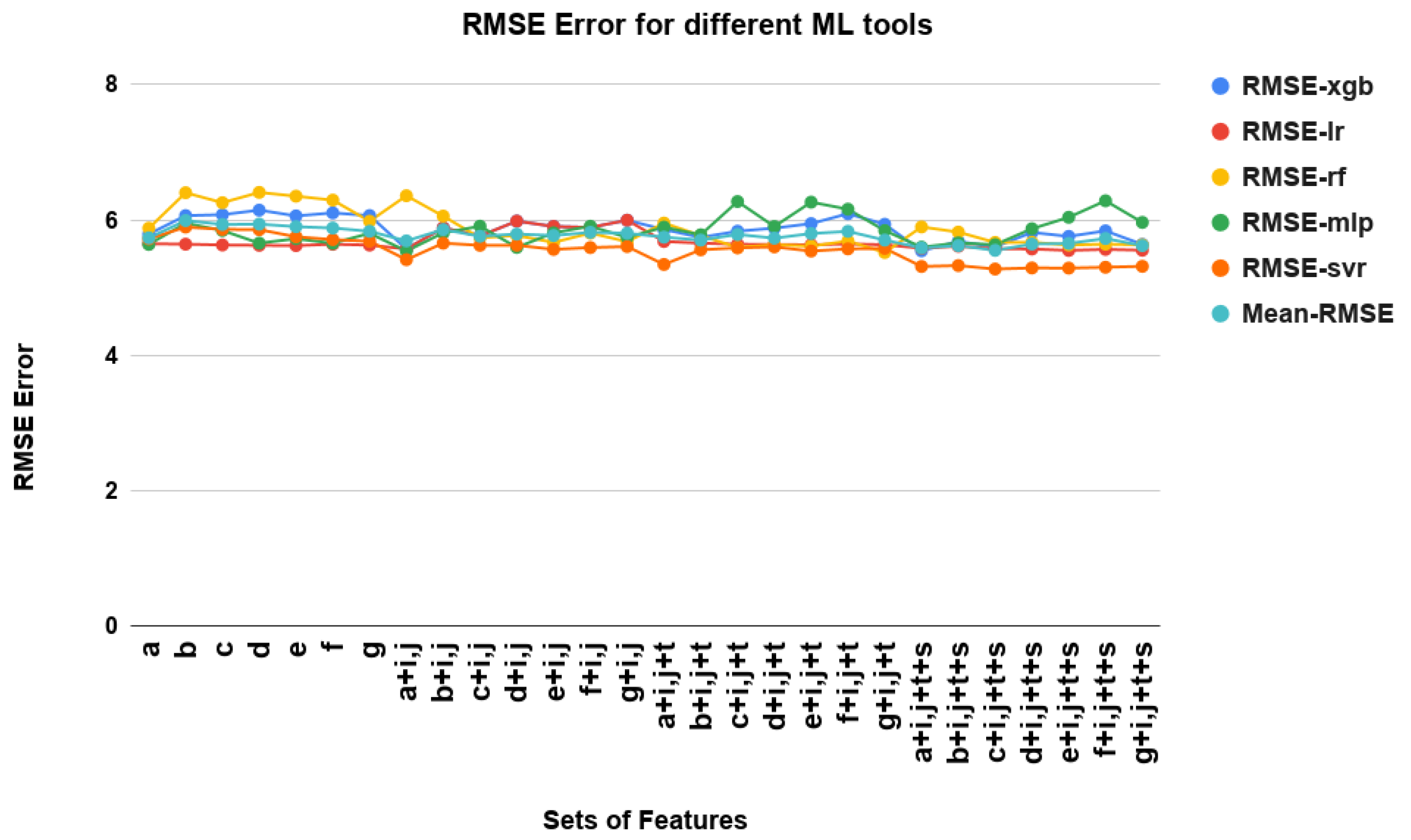

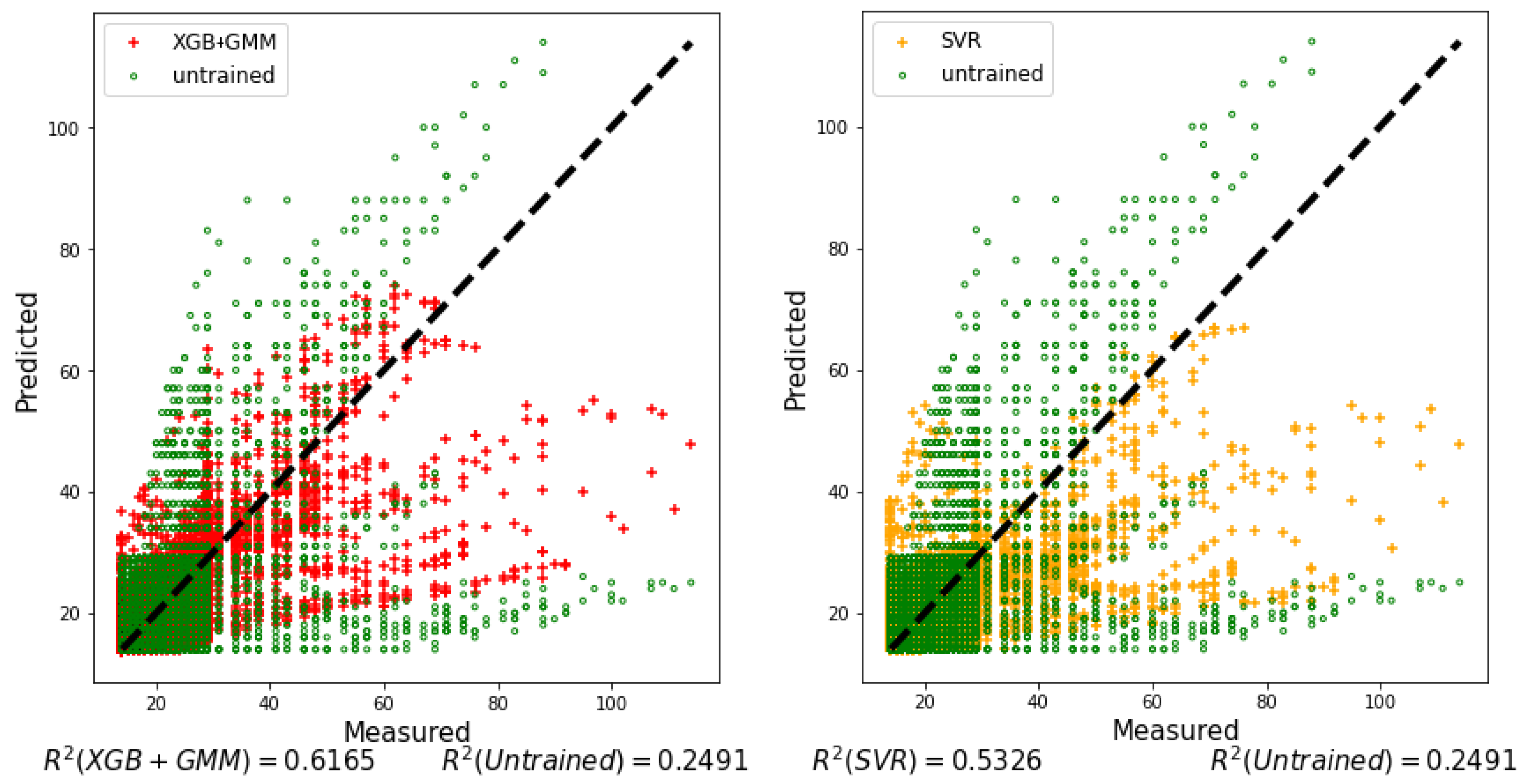

5.1. Performance Results

5.2. Performance Comparisons

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Features | MAE XGB | RMSE XGB | MAE LR | RMSE LR | MAE RF | RMSE RF | MAE MLP | RMSE MLP | MAE SVR | RMSE SVR | MAE Mean | RMSE Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| a | 2.887 | 5.790 | 2.915 | 5.649 | 2.911 | 5.878 | 2.843 | 5.639 | 2.700 | 5.720 | 2.851 | 5.735 |
| b | 2.890 | 6.064 | 2.840 | 5.642 | 3.047 | 6.402 | 3.008 | 5.952 | 2.677 | 5.895 | 2.892 | 5.991 |
| c | 2.912 | 6.078 | 2.909 | 5.630 | 3.048 | 6.255 | 2.782 | 5.844 | 2.640 | 5.861 | 2.858 | 5.933 |
| d | 2.928 | 6.145 | 2.900 | 5.625 | 3.074 | 6.407 | 2.844 | 5.657 | 2.626 | 5.857 | 2.874 | 5.938 |
| e | 2.890 | 6.060 | 2.913 | 5.621 | 3.034 | 6.351 | 2.829 | 5.723 | 2.617 | 5.751 | 2.856 | 5.901 |
| f | 2.957 | 6.104 | 2.942 | 5.641 | 3.065 | 6.293 | 2.763 | 5.655 | 2.616 | 5.710 | 2.868 | 5.880 |
| g | 2.936 | 6.065 | 2.933 | 5.628 | 2.954 | 5.981 | 2.826 | 5.803 | 2.617 | 5.685 | 2.853 | 5.832 |
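The "MAE Mean" and "RMSE Mean" columns appear to be the arithmetic mean over the five models (XGB, LR, RF, MLP, SVR). That reading is inferred from the numbers rather than stated in the text, and it can be checked for row a of the table above with a short calculation. The variable names are hypothetical.

```python
from statistics import mean

# (MAE, RMSE) per model, copied from row "a" of the table above.
row_a = {
    "XGB": (2.887, 5.790),
    "LR": (2.915, 5.649),
    "RF": (2.911, 5.878),
    "MLP": (2.843, 5.639),
    "SVR": (2.700, 5.720),
}

# Average each metric over the five models and round to three decimals.
mae_mean = round(mean(m for m, _ in row_a.values()), 3)
rmse_mean = round(mean(r for _, r in row_a.values()), 3)

print(mae_mean, rmse_mean)  # 2.851 5.735
```

Both values match the Mean columns reported for row a.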

| Features | MAE XGB | RMSE XGB | MAE LR | RMSE LR | MAE RF | RMSE RF | MAE MLP | RMSE MLP | MAE SVR | RMSE SVR | MAE Mean | RMSE Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| a + i, j | 2.655 | 5.571 | 2.655 | 5.571 | 2.996 | 6.358 | 2.73 | 5.540 | 2.436 | 5.413 | 2.694 | 5.690 |
| b + i, j | 2.736 | 5.884 | 2.736 | 5.884 | 2.893 | 6.057 | 2.838 | 5.809 | 2.526 | 5.657 | 2.745 | 5.858 |
| c + i, j | 2.716 | 5.763 | 2.716 | 5.763 | 2.781 | 5.736 | 2.838 | 5.908 | 2.493 | 5.625 | 2.708 | 5.750 |
| d + i, j | 2.805 | 5.983 | 2.805 | 5.983 | 2.759 | 5.770 | 2.760 | 5.594 | 2.479 | 5.626 | 2.721 | 5.791 |
| e + i, j | 2.753 | 5.904 | 2.753 | 5.904 | 2.714 | 5.668 | 2.838 | 5.809 | 2.481 | 5.565 | 2.707 | 5.770 |
| f + i, j | 2.844 | 5.890 | 2.844 | 5.890 | 2.811 | 5.806 | 2.860 | 5.907 | 2.491 | 5.592 | 2.770 | 5.817 |
| g + i, j | 2.887 | 5.996 | 2.887 | 5.996 | 2.804 | 5.679 | 2.813 | 5.742 | 2.529 | 5.607 | 2.784 | 5.804 |

| Features | MAE XGB | RMSE XGB | MAE LR | RMSE LR | MAE RF | RMSE RF | MAE MLP | RMSE MLP | MAE SVR | RMSE SVR | MAE Mean | RMSE Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| a + i, j + t | 2.478 | 5.859 | 2.967 | 5.682 | 2.567 | 5.954 | 2.872 | 5.893 | 2.377 | 5.342 | 2.652 | 5.746 |
| b + i, j + t | 2.481 | 5.742 | 2.867 | 5.658 | 2.653 | 5.769 | 2.807 | 5.781 | 2.445 | 5.559 | 2.650 | 5.701 |
| c + i, j + t | 2.514 | 5.834 | 2.933 | 5.641 | 2.587 | 5.595 | 2.95 | 6.272 | 2.456 | 5.588 | 2.680 | 5.786 |
| d + i, j + t | 2.576 | 5.879 | 2.924 | 5.637 | 2.609 | 5.634 | 2.771 | 5.903 | 2.440 | 5.602 | 2.660 | 5.731 |
| e + i, j + t | 2.598 | 5.946 | 2.94 | 5.633 | 2.620 | 5.613 | 2.945 | 6.263 | 2.451 | 5.540 | 2.710 | 5.799 |
| f + i, j + t | 2.758 | 6.092 | 2.962 | 5.645 | 2.700 | 5.689 | 2.882 | 6.159 | 2.474 | 5.573 | 2.755 | 5.831 |
| g + i, j + t | 2.724 | 5.936 | 2.954 | 5.634 | 2.621 | 5.519 | 2.912 | 5.843 | 2.491 | 5.580 | 2.740 | 5.702 |

| Features | MAE XGB | RMSE XGB | MAE LR | RMSE LR | MAE RF | RMSE RF | MAE MLP | RMSE MLP | MAE SVR | RMSE SVR | MAE Mean | RMSE Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| a + i, j + t + s | 2.342 | 5.544 | 2.857 | 5.582 | 2.536 | 5.897 | 2.490 | 5.598 | 2.542 | 5.313 | 2.553 | 5.586 |
| b + i, j + t + s | 2.438 | 5.682 | 2.788 | 5.612 | 2.612 | 5.821 | 2.598 | 5.661 | 2.503 | 5.326 | 2.587 | 5.620 |
| c + i, j + t + s | 2.417 | 5.602 | 2.797 | 5.575 | 2.558 | 5.668 | 2.633 | 5.634 | 2.450 | 5.275 | 2.571 | 5.550 |
| d + i, j + t + s | 2.539 | 5.816 | 2.785 | 5.571 | 2.557 | 5.670 | 2.726 | 5.870 | 2.455 | 5.291 | 2.612 | 5.643 |
| e + i, j + t + s | 2.554 | 5.757 | 2.796 | 5.550 | 2.569 | 5.629 | 2.945 | 6.039 | 2.455 | 5.289 | 2.663 | 5.652 |
| f + i, j + t + s | 2.596 | 5.839 | 2.818 | 5.565 | 2.628 | 5.642 | 2.942 | 6.283 | 2.477 | 5.301 | 2.692 | 5.726 |
| g + i, j + t + s | 2.557 | 5.639 | 2.811 | 5.553 | 2.631 | 5.632 | 2.859 | 5.964 | 2.477 | 5.315 | 2.667 | 5.620 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hussein, E.A.; Thron, C.; Ghaziasgar, M.; Bagula, A.; Vaccari, M. Groundwater Prediction Using Machine-Learning Tools. Algorithms 2020, 13, 300. https://doi.org/10.3390/a13110300