Recognition of Cross-Language Acoustic Emotional Valence Using Stacked Ensemble Learning

Abstract

1. Introduction

- A unique contribution of this study is the use of a random forest recursive feature elimination (RF-RFE) algorithm to select highly discriminating features from an existing set of acoustic features, so that positive and negative valence emotions can be recognized in a cross-language environment. Few existing methods reported in the literature use feature selection for cross-language speech emotion recognition, yet it is essential to identify the features that best distinguish valence emotions across different languages and accents.

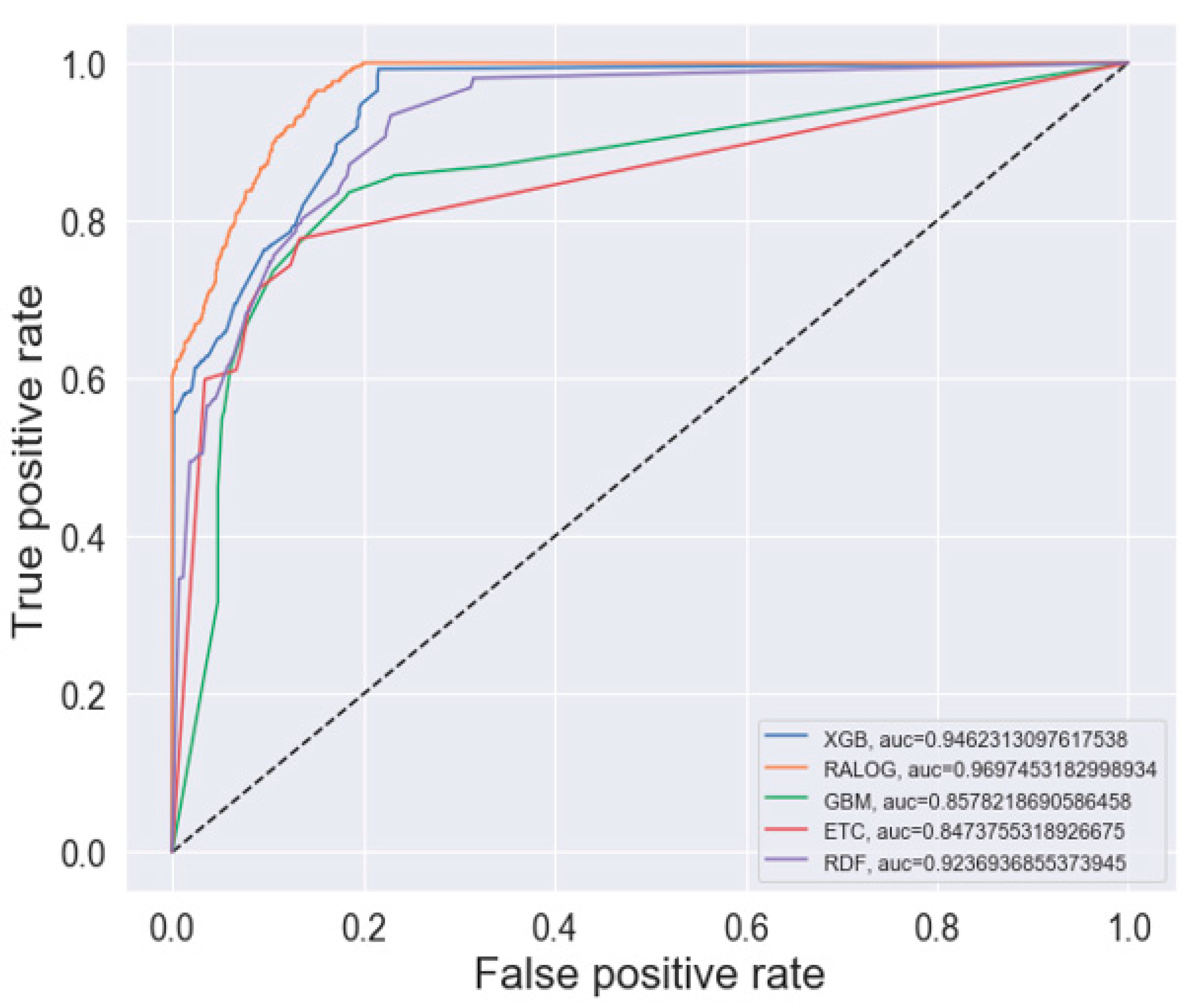

- Another important contribution of this study is the development of RALOG, a stacked ensemble learning algorithm that recognizes positive and negative valence emotions in human spoken utterances with high precision, recall, F1 score, and accuracy. Moreover, the training time of the algorithm was greatly reduced through feature scaling, which improves its efficiency.

- An essential contribution of this research work is the experimental comparison of the proposed RALOG algorithm with several benchmark ensemble learning algorithms on the selected acoustic features, to test the effectiveness of RALOG.
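The RF-RFE idea in the first contribution can be sketched as a loop. The loop repeatedly fits a model, ranks the surviving features by importance, and discards the weakest until the desired number remain. This is a dependency-free illustration under stated assumptions. It z-scores the features first, which is the kind of feature scaling mentioned above. In place of random forest importance, it uses the magnitude of logistic-regression coefficients as the importance score. It is therefore RFE with a swapped-in ranking model, not the paper's RF-RFE. The synthetic data and the names `standardize`, `fit_logistic` and `rfe` are hypothetical. With scikit-learn available, a closer analogue would wrap a `RandomForestClassifier` in `sklearn.feature_selection.RFE`.

```python
import math
import random


def standardize(X):
    """Z-score each column (feature scaling); constant columns are only centred."""
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    stds = [math.sqrt(sum((row[j] - means[j]) ** 2 for row in X) / n) or 1.0 for j in range(d)]
    return [[(row[j] - means[j]) / stds[j] for j in range(d)] for row in X]


def fit_logistic(X, y, epochs=300, lr=0.1):
    """Binary logistic regression by batch gradient descent; returns weights with the bias last."""
    d = len(X[0])
    w = [0.0] * (d + 1)
    for _ in range(epochs):
        grad = [0.0] * (d + 1)
        for row, target in zip(X, y):
            z = sum(wj * xj for wj, xj in zip(w, row)) + w[-1]
            err = 1.0 / (1.0 + math.exp(-z)) - target
            for j, xj in enumerate(row):
                grad[j] += err * xj
            grad[-1] += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w


def rfe(X, y, n_keep, step=1):
    """Recursive feature elimination: refit, drop the `step` least important features, repeat."""
    remaining = list(range(len(X[0])))
    while len(remaining) > n_keep:
        sub = [[row[j] for j in remaining] for row in X]
        w = fit_logistic(sub, y)
        # Stand-in importance: |coefficient| on standardized features (NOT random forest importance)
        order = sorted(range(len(remaining)), key=lambda k: abs(w[k]))
        drop = set(order[:min(step, len(remaining) - n_keep)])
        remaining = [j for k, j in enumerate(remaining) if k not in drop]
    return remaining


# Synthetic data: columns 0 and 2 depend on the label, columns 1 and 3 are pure noise
random.seed(0)
y = [i % 2 for i in range(200)]
X = [[2.0 * t + random.gauss(0, 0.5), random.gauss(0, 1),
      -1.5 * t + random.gauss(0, 0.5), random.gauss(0, 1)] for t in y]
print(rfe(standardize(X), y, n_keep=2))
```

The model is refitted after every elimination, so each surviving feature's importance is re-estimated among the features that remain. This is what distinguishes RFE from ranking all features once.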

2. Related Literature

3. Materials and Methods

3.1. Speech Emotion Corpora

3.2. Feature Extraction

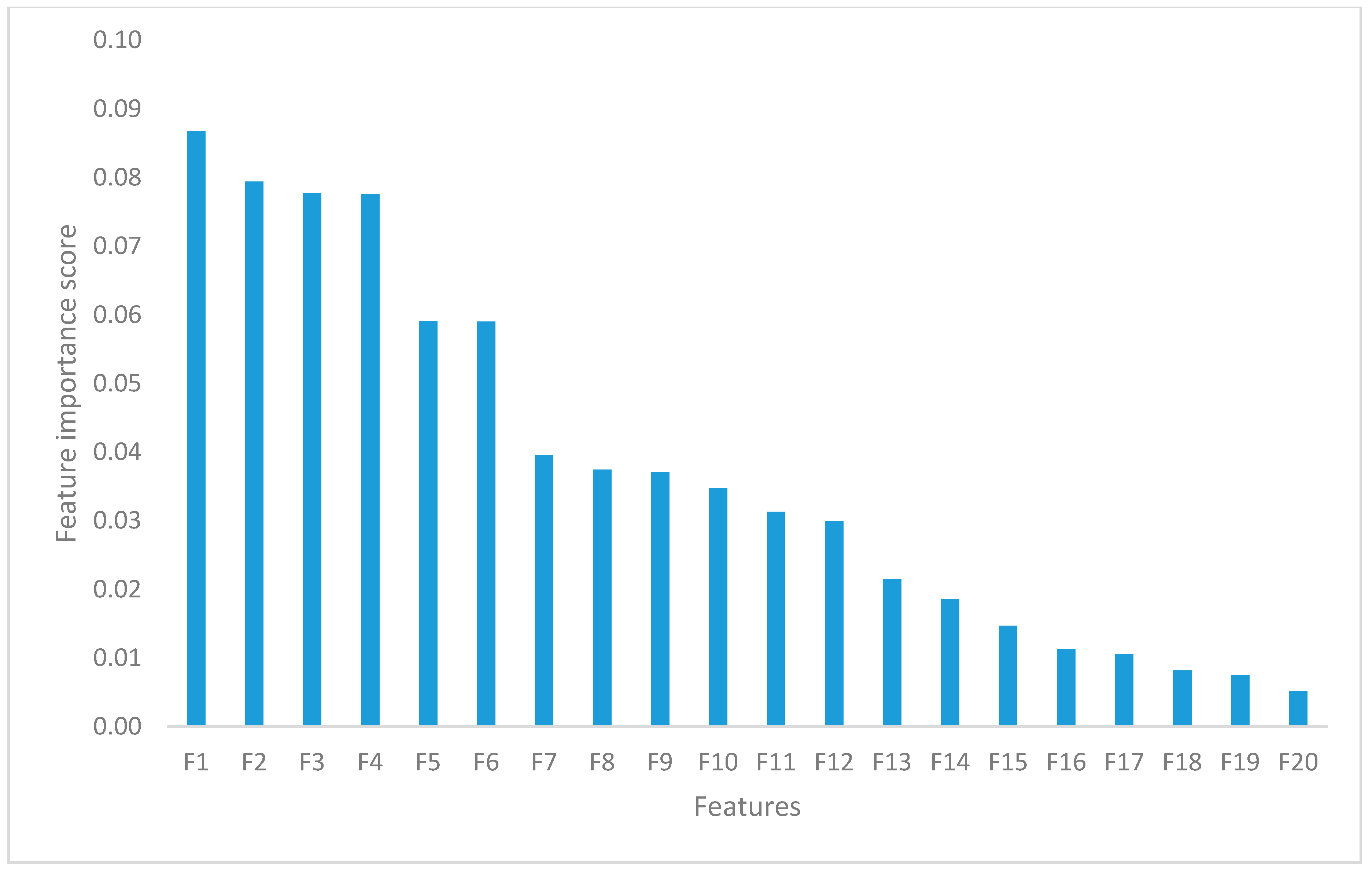

3.3. Feature Selection

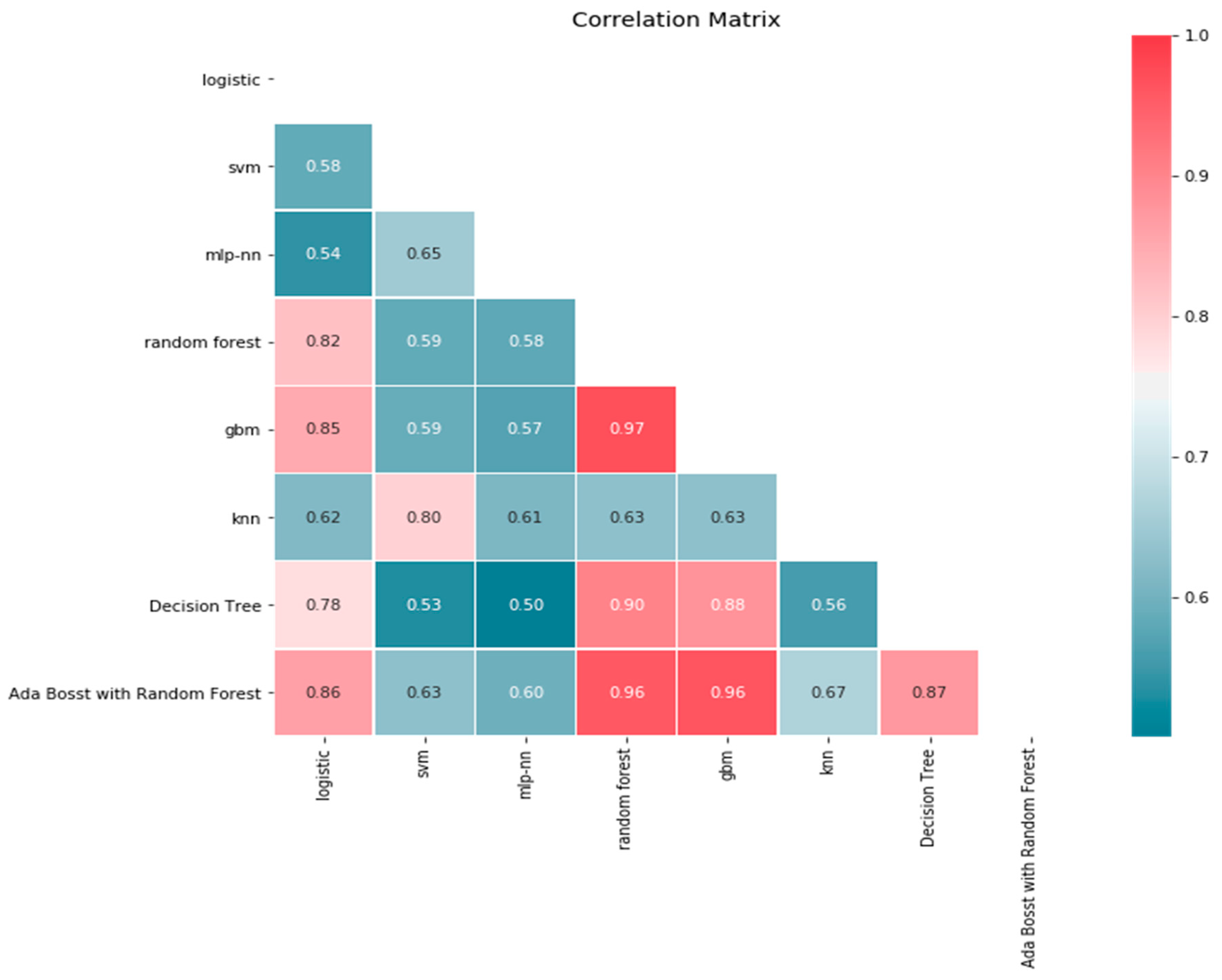

3.4. Ensemble Creation

| Algorithm 1: Stacking Ensemble Algorithm |
|---|
| Input: D = {(x_i, y_i) : x_i ∈ χ, y_i ∈ 𝘠, i = 1, …, m} |
| Output: An ensemble algorithm A |
| Step 1: Learn first-level learning algorithms |
| for s ← 1 to S do: learn a base learning algorithm a_s based on D; end |
| Step 2: Construct a new dataset from D |
| for i ← 1 to m do: add {x_i^new, y_i} to the new dataset, where x_i^new = {a_j(x_i) : j = 1, …, S}; end |
| Step 3: Learn a second-level learning algorithm |
| Learn a new learning algorithm a_new based on the newly constructed dataset |
| Return A(x) = a_new(a_1(x), a_2(x), …, a_S(x)) |
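Algorithm 1 maps onto three steps, one per stage. The following is a minimal sketch with toy components. The base learners are a nearest-centroid classifier and two one-feature threshold stumps. The second-level learner is a lookup table that predicts the most frequent label for each pattern of base predictions. These components stand in for, and are not, the components of RALOG, and all class and function names are hypothetical. As in Algorithm 1, the second-level learner is trained on base predictions for the same examples the base learners were fitted on. In practice, out-of-fold predictions are often used instead to limit overfitting. scikit-learn's `StackingClassifier` does this through its `cv` parameter.

```python
from collections import Counter, defaultdict
from statistics import mean


class NearestCentroid:
    """Base learner: predict the class whose mean feature vector is closest."""
    def fit(self, X, y):
        self.centroids = {c: [mean(col) for col in zip(*[x for x, t in zip(X, y) if t == c])]
                          for c in set(y)}
        return self

    def predict(self, x):
        return min(self.centroids,
                   key=lambda c: sum((a - b) ** 2 for a, b in zip(x, self.centroids[c])))


class Stump:
    """Base learner for 0/1 labels: threshold one feature, keeping the best training accuracy."""
    def __init__(self, feature):
        self.feature = feature

    def fit(self, X, y):
        best = None
        for thr in sorted({x[self.feature] for x in X}):
            for above in (0, 1):
                acc = sum((above if x[self.feature] > thr else 1 - above) == t for x, t in zip(X, y))
                if best is None or acc > best[0]:
                    best = (acc, thr, above)
        _, self.thr, self.above = best
        return self

    def predict(self, x):
        return self.above if x[self.feature] > self.thr else 1 - self.above


class LookupMeta:
    """Second-level learner: most frequent label for each pattern of base predictions."""
    def fit(self, Z, y):
        votes = defaultdict(Counter)
        for z, t in zip(Z, y):
            votes[tuple(z)][t] += 1
        self.table = {z: c.most_common(1)[0][0] for z, c in votes.items()}
        self.default = Counter(y).most_common(1)[0][0]
        return self

    def predict(self, z):
        return self.table.get(tuple(z), self.default)


def stack(base_learners, meta, X, y):
    # Step 1: learn the first-level learners a_1, ..., a_S on D
    fitted = [a.fit(X, y) for a in base_learners]
    # Step 2: build the new dataset, x_i^new = (a_1(x_i), ..., a_S(x_i))
    Z = [[a.predict(x) for a in fitted] for x in X]
    # Step 3: learn the second-level learner a_new on {(x_i^new, y_i)}
    meta.fit(Z, y)
    # Return A(x) = a_new(a_1(x), ..., a_S(x))
    return lambda x: meta.predict([a.predict(x) for a in fitted])


# Toy binary data: 1 = positive valence, 0 = negative valence (illustrative only)
X = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [1.0, 1.0], [0.9, 0.8], [0.8, 0.9]]
y = [0, 0, 0, 1, 1, 1]
model = stack([NearestCentroid(), Stump(0), Stump(1)], LookupMeta(), X, y)
print(model([0.05, 0.1]), model([0.95, 0.9]))
```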

3.5. Recognition of Valence

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Okuboyejo, D.A.; Olugbara, O.O.; Odunaike, S.A. Automating skin disease diagnosis using image classification. World Congr. Eng. Comput. Sci. 2013, 2, 850–854.

- Karthik, R.; Hariharan, M.; Anand, S.; Mathikshara, P.; Johnson, A. Attention embedded residual CNN for disease detection in tomato leaves. Appl. Soft Comput. J. 2019, 89, 105933.

- Vadovsky, M.; Paralic, J. Parkinson’s disease patients classification based on the speech signals. In Proceedings of the IEEE 15th International Symposium on Applied Machine Intelligence and Informatics (SAMI), Herl’any, Slovakia, 26–28 January 2017; pp. 000321–000326.

- Jiang, W.; Wang, Z.; Jin, J.S.; Han, X.; Li, C. Speech emotion recognition with heterogeneous feature unification of deep neural network. Sensors (Switz.) 2019, 19, 1–15.

- Ram, R.; Palo, H.K.; Mohanty, M.N. Emotion recognition with speech for call centres using LPC and spectral analysis. Int. J. Adv. Comput. Res. 2013, 3, 182–187.

- Tursunov, A.; Kwon, S.; Pang, H.S. Discriminating emotions in the valence dimension from speech using timbre features. Appl. Sci. (Switz.) 2019, 9, 2470.

- Lisetti, C.L. Affective computing. Pattern Anal. Appl. 1998, 1, 71–73.

- Mencattini, A.; Martinelli, E.; Costantini, G.; Todisco, M.; Basile, B.; Bozzali, M.; Di Natale, C. Speech emotion recognition using amplitude modulation parameters and a combined feature selection procedure. Knowl. Based Syst. 2014, 63, 68–81.

- Rasool, Z.; Masuyama, N.; Islam, M.N.; Loo, C.K. Empathic interaction using the computational emotion model. In Proceedings of the 2015 IEEE Symposium Series on Computational Intelligence, SSCI 2015, Cape Town, South Africa, 7–10 December 2015; pp. 109–116.

- Gunes, H.; Pantic, M. Automatic, dimensional and continuous emotion recognition. Int. J. Synth. Emot. 2010, 1, 68–99.

- Charland, L.C. The natural kind status of emotion. Br. J. Philos. Sci. 2002, 53, 511–537.

- Tan, J.W.; Andrade, J.W.; Li, H.; Walter, S.; Hrabal, D.; Rukavina, S.; Limbrecht-Ecklundt, K.; Hoffman, H.; Traue, H.C. Recognition of intensive valence and arousal affective states via facial electromyographic activity in young and senior adults. PLoS ONE 2016, 11, 1–14.

- Jokinen, J.P.P. Emotional user experience: Traits, events, and states. Int. J. Hum. Comput. Stud. 2015, 76, 67–77.

- Huang, Z.; Dong, M.; Mao, Q.; Zhan, Y. Speech emotion recognition using CNN. In Proceedings of the ACM International Conference on Multimedia—MM ’14, Orlando, FL, USA, 3–7 November 2014; pp. 801–804.

- Lee, C.M.; Narayanan, S.S. Toward detecting emotions in spoken dialogs. IEEE Trans. Speech Audio Process. 2005, 13, 293–303.

- Rong, J.; Chen, Y.-P.P.; Chowdhury, M.; Li, G. Acoustic features extraction for emotion recognition. In Proceedings of the 6th IEEE/ACIS International Conference on Computer and Information Science (ICIS 2007), Melbourne, Australia, 11–13 July 2007; pp. 419–424.

- Pampouchidou, A.; Simantiraki, O.; Vazakopoulou, C.M.; Chatzaki, C.; Pediaditis, M.; Maridaki, A.; Marias, K.; Simos, P.; Yang, F.; Meriaudeau, F.; et al. Facial geometry and speech analysis for depression detection. In Proceedings of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Seogwipo, South Korea, 11–15 July 2017; pp. 1433–1436.

- Hossain, M.S.; Muhammad, G. Emotion-aware connected healthcare big data towards 5G. IEEE Internet Things J. 2017, 4662, 1–8.

- Wang, W.; Klinger, K.; Conapitski, C.; Gundrum, T.; Snavely, J. Machine audition: Principles, algorithms. In Machine Audition: Principles, Algorithms and Systems; IGI Global Press: Hershey, PA, USA, 2010; Chapter 17; pp. 398–423.

- Junger, J.; Pauly, K.; Bröhr, S.; Birkholz, P.; Neuschaefer-Rube, C.; Kohler, C.; Schneider, F.; Derntl, B.; Habel, U. Sex matters: Neural correlates of voice gender perception. NeuroImage 2013, 79, 275–287.

- Yang, P. Cross-corpus speech emotion recognition based on multiple kernel learning of joint sample and feature matching. J. Electr. Comput. Eng. 2017, 2017, 1–6.

- Feraru, S.M.; Schuller, D.; Schuller, B. Cross-language acoustic emotion recognition: An overview and some tendencies. In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction, ACII 2015, Xi’an, China, 21–24 September 2015; Volume 1, pp. 125–131.

- Kim, J.; Englebienne, G.; Truong, K.P.; Evers, V. Towards speech emotion recognition “in the wild” using aggregated corpora and deep multi-task learning. In Proceedings of the Annual Conference of the International Speech Communication Association, Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; pp. 1113–1117.

- Schuller, B.; Vlasenko, B.; Eyben, F.; Wollmer, M.; Stuhlsatz, A.; Wendemuth, A.; Rigoll, G. Cross-corpus acoustic emotion recognition: Variances and strategies (Extended abstract). In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction, ACII 2015, Xi’an, China, 21–24 September 2015; Volume 1, pp. 470–476.

- Latif, S.; Qayyum, A.; Usman, M.; Qadir, J. Cross lingual speech emotion recognition: Urdu vs. Western languages. In Proceedings of the 2018 International Conference on Frontiers of Information Technology, FIT 2018, Islamabad, Pakistan, 17–19 December 2018; pp. 88–93.

- Shah, M.; Chakrabarti, C.; Spanias, A. Within and cross-corpus speech emotion recognition using latent topic model-based features. EURASIP J. Audio Speech Music Process. 2015, 1, 4.

- Gideon, J.; Provost, E.M.; McInnis, M. Mood state prediction from speech of varying acoustic quality for individuals with bipolar disorder. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Shanghai, China, 20–25 March 2016; pp. 2359–2363.

- Anagnostopoulos, C.N.; Iliou, T.; Giannoukos, I. Features and classifiers for emotion recognition from speech: A survey from 2000 to 2011. Artif. Intell. Rev. 2012, 43, 155–177.

- Akçay, M.B.; Oğuz, K. Speech emotion recognition: Emotional models, databases, features, preprocessing methods, supporting modalities, and classifiers. Speech Commun. 2020, 116, 56–76.

- Adetiba, E.; Olugbara, O.O. Lung cancer prediction using neural network ensemble with histogram of oriented gradient genomic features. Sci. World J. 2015, 2015, 1–17.

- Zvarevashe, K.; Olugbara, O.O. Gender voice recognition using random forest recursive feature elimination with gradient boosting machines. In Proceedings of the 2018 International Conference on Advances in Big Data, Computing and Data Communication Systems, icABCD 2018, Durban, South Africa, 6–7 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Abe, B.T.; Olugbara, O.O.; Marwala, T. Hyperspectral Image classification using random forests and neural networks. In Proceedings of the World Congress on Engineering and Computer Science, San Francisco, CA, USA, 24–26 October 2012; Volume I; pp. 522–527.

- Oyewole, S.A.; Olugbara, O.O. Product image classification using eigen colour feature with ensemble machine learning. Egypt. Inform. J. 2018, 19, 83–100.

- Zhang, Z.; Weninger, F.; Wöllmer, M.; Schuller, B. Unsupervised learning in cross-corpus acoustic emotion recognition. In Proceedings of the 2011 IEEE Workshop on Automatic Speech Recognition and Understanding, ASRU 2011, Waikoloa, HI, USA, 11–15 December 2011; pp. 523–528.

- Schuller, B.; Zhang, Z.; Weninger, F.; Rigoll, G. Using multiple databases for training in emotion recognition: To unite or to vote? In Proceedings of the Annual Conference of the International Speech Communication Association, Interspeech 2011, Florence, Italy, 28–31 August 2011; pp. 1553–1556.

- Latif, S.; Rana, R.; Younis, S.; Qadir, J.; Epps, J. Transfer learning for improving speech emotion classification accuracy. In Proceedings of the Annual Conference of the International Speech Communication Association, Interspeech 2018, Hyderabad, India, 2–6 September 2018; pp. 257–261.

- Ocquaye, E.N.N.; Mao, Q.; Xue, Y.; Song, H. Cross lingual speech emotion recognition via triple attentive asymmetric convolutional neural network. Int. J. Intell. Syst. 2020, 1–19.

- Mustaqeem; Kwon, S. A CNN-assisted enhanced audio signal processing for speech emotion recognition. Sensors (Switz.) 2020, 20, 183.

- Liu, N.; Zong, Y.; Zhang, B.; Liu, L.; Chen, J.; Zhao, G.; Zhu, J. Unsupervised cross-corpus speech emotion recognition using domain-adaptive subspace learning. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing, ICASSP 2018, Calgary, AB, Canada, 15–20 April 2018; pp. 5144–5148.

- Li, Y.; Zhao, T.; Kawahara, T. Improved end-to-end speech emotion recognition using self attention mechanism and multitask learning. In Proceedings of the Conference of the International Speech Communication Association, Interspeech 2019, Graz, Austria, 15–19 September 2019.

- Deng, J.; Zhang, Z.; Eyben, F.; Schuller, B. Autoencoder-based unsupervised domain adaptation for speech emotion recognition. IEEE Signal Process. Lett. 2014, 21, 1068–1072.

- Parry, J.; Palaz, D.; Clarke, G.; Lecomte, P.; Mead, R.; Berger, M.; Hofer, G. Analysis of deep learning architectures for cross-corpus speech emotion recognition. In Proceedings of the Annual Conference of the International Speech Communication Association, Interspeech 2019, Graz, Austria, 15–19 September 2019; pp. 1656–1660.

- Livingstone, S.R.; Russo, F.A. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A dynamic, multimodal set of facial and vocal expressions in North American English. PLoS ONE 2018, 13, e0196391.

- Costantini, G.; Iadarola, I.; Paoloni, A.; Todisco, M. EMOVO corpus: An Italian emotional speech database. In Proceedings of the 9th International Conference on Language Resources and Evaluation, LREC 2014, Reykjavik, Iceland, 26–31 May 2014; pp. 3501–3504.

- Sierra, H.; Cordova, M.; Chen, C.S.J.; Rajadhyaksha, M. CREMA-D: Crowd-sourced emotional multimodal actors dataset. J. Investig. Dermatol. 2015, 135, 612–615.

- Zvarevashe, K.; Olugbara, O.O. Ensemble learning of hybrid acoustic features for speech emotion recognition. Algorithms 2020, 70, 1–24.

- McEnnis, D.; McKay, C.; Fujinaga, I.; Depalle, P. jAudio: A feature extraction library. In Proceedings of the International Conference on Music Information Retrieval, London, UK, 11–15 September 2005; pp. 600–603.

- Yan, J.; Wang, X.; Gu, W.; Ma, L. Speech emotion recognition based on sparse representation. Arch. Acoust. 2013, 38, 465–470.

- Song, P.; Ou, S.; Du, Z.; Guo, Y.; Ma, W.; Liu, J. Learning corpus-invariant discriminant feature representations for speech emotion recognition. IEICE Trans. Inf. Syst. 2017, E100D, 1136–1139.

- Thu, P.P.; New, N. Implementation of emotional features on satire detection. In Proceedings of the 18th IEEE/ACIS International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing, SNPD 2017, Kanazawa, Japan, 26–28 June 2017; pp. 149–154.

- Ribeiro, M.H.D.M.; dos Santos Coelho, L. Ensemble approach based on bagging, boosting and stacking for short-term prediction in agribusiness time series. Appl. Soft Comput. J. 2020, 86, 105837.

- Raschka, S. MLxtend: Providing machine learning and data science utilities and extensions to Python’s scientific computing stack. J. Open Source Softw. 2018, 3, 638.

- Bhavan, A.; Chauhan, P.; Hitkul; Shah, R.R. Bagged support vector machines for emotion recognition from speech. Knowl. Based Syst. 2019, 184, 104886.

- Dong, X.; Yu, Z.; Cao, W.; Shi, Y.; Ma, Q. A survey on ensemble learning. Front. Comput. Sci. 2020, 14, 241–258.

- Khorram, S.; McInnis, M.; Mower Provost, E. Jointly aligning and predicting continuous emotion annotations. IEEE Trans. Affect. Comput. 2019.

- Li, X.; Akagi, M. Improving multilingual speech emotion recognition by combining acoustic features in a three-layer model. Speech Commun. 2019, 110, 1–12.

| Reference | Corpora | Languages | Emotion Mapping | Recognition Method | Result |
|---|---|---|---|---|---|
| Latif et al. [25] | FAU-AIBO, IEMOCAP, EMO-DB, EMOVO, SAVEE | German, English, Italian | Positive valence: Surprise, motherese, joyful/happy, neutral, rest, excited; Negative valence: Angry, touchy, sadness, emphatic, reprimanding, boredom, disgust, fear | eGeMAPS + DBN + leave-one-out | 80.00% (Accuracy) |
| Latif et al. [36] | EMOVO, URDU, SAVEE, EMO-DB | British English, Italian, German, Urdu | Positive valence: Neutral, happiness, surprise; Negative valence: Anger, sadness, fear, boredom, disgust | eGeMAPS + SVM | 70.98% (Accuracy) |
| Ocquaye et al. [37] | FAU-AIBO, IEMOCAP, EMO-DB, EMOVO, SAVEE | German, English, Italian | Positive valence: Surprise, motherese, joyful/happy, neutral, rest, excited; Negative valence: Angry, touchy, sadness, emphatic, reprimanding, boredom, disgust, fear | Triple attentive asymmetric CNN model | 73.11% (Accuracy) |
| Mustaqeem et al. [38] | RAVDESS, IEMOCAP | North American English | Anger, happy, neutral, sad | Clean spectrograms + DSCNN | 56.50% (Accuracy) |
| Liu et al. [39] | eNTERFACE, EMO-DB | English, German | Angry, disgust, fear, happy, sad, surprise | DALSR + SVM | 52.27% (UAR) |
| Li et al. [40] | FUJITSU, EMO-DB, CASIA | Japanese, German, Chinese | Neutral, happy, angry, sad | IS16 + MSF + LMT (logistic model trees) | 82.63% (F-measure) |
| Parry et al. [41] | RAVDESS, IEMOCAP, EMO-DB, EMOVO, SAVEE, EPST | English, German, Italian | Positive valence: Elation, excitement, happiness, joy, pleasant surprise, pride, surprise; Negative valence: Anger, anxiety, cold anger, contempt, despair, disgust, fear, frustration, hot anger, panic, sadness, shame; Neutral valence: Boredom, calm, interest, neutral | CNN | 55.11% (Average accuracy) |
| Deng et al. [42] | ABC, FAU AEC | German | Positive valence: Cheerful, neutral, rest, medium stress; Negative valence: Tired, aggressive, intoxicated, nervous, screaming, fear, high stress | INTERSPEECH 2009 Emotion Challenge baseline feature set + A-DAE (adaptive denoising autoencoders) | 64.18% (UAR) |
| Proposed Model | EMO-DB, SAVEE, RAVDESS, EMOVO, CREMA-D | German, British English, North American English, Italian | Positive valence: Neutral, happiness, surprise; Negative valence: Anger, sadness, fear, disgust, boredom | RF-RFE selected features + RALOG | 96.60% (Accuracy), 96.00% (Recall) |

| Corpus | Language | Total Utterances (Male) | Total Utterances (Female) | Training Utterances (Male) | Training Utterances (Female) | Testing Utterances (Male) | Testing Utterances (Female) | Negative Valence | Positive Valence |
|---|---|---|---|---|---|---|---|---|---|
| EMO-DB | German | 400 | 400 | 320 | 320 | 80 | 80 | Anger, Sadness, Fear, Disgust, Boredom | Neutral, Happiness |
| SAVEE | British English | 480 | 0 | 384 | 0 | 96 | 0 | Anger, Sadness, Fear, Disgust | Neutral, Happiness, Surprise |
| RAVDESS | North American English | 720 | 720 | 576 | 576 | 144 | 144 | Anger, Sadness, Fear, Disgust | Neutral, Happiness, Surprise, Calm |
| EMOVO | Italian | 294 | 294 | 235 | 235 | 59 | 59 | Anger, Sadness, Fear, Disgust | Neutral, Happiness, Surprise |
| CREMA-D | English (actors of African American, Asian, Caucasian, Hispanic, and unspecified ethnicity) | 3579 | 3207 | 2863 | 2566 | 716 | 641 | Anger, Sadness, Fear, Disgust | Neutral, Happiness |
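The valence grouping and partition sizes in the corpus table can be checked mechanically. The sketch below uses the table's per-corpus valence groups and assumes lower-case label strings such as `anger` and `happiness`. The corpora encode their labels in their own ways, so a per-corpus normalization step would be needed before calling a helper like this. All names are hypothetical. Every row's training and testing counts agree with an 80/20 split rounded to the nearest utterance, which the loop verifies. The table itself does not say how utterances were assigned to each side.

```python
# Valence groups taken from the corpus table (union over the five corpora)
NEGATIVE = {"anger", "sadness", "fear", "disgust", "boredom"}
POSITIVE = {"neutral", "happiness", "surprise", "calm"}

# (total, training, testing) utterances per corpus and speaker sex, copied from the table
COUNTS = {
    ("EMO-DB", "male"): (400, 320, 80),
    ("EMO-DB", "female"): (400, 320, 80),
    ("SAVEE", "male"): (480, 384, 96),
    ("RAVDESS", "male"): (720, 576, 144),
    ("RAVDESS", "female"): (720, 576, 144),
    ("EMOVO", "male"): (294, 235, 59),
    ("EMOVO", "female"): (294, 235, 59),
    ("CREMA-D", "male"): (3579, 2863, 716),
    ("CREMA-D", "female"): (3207, 2566, 641),
}


def to_valence(emotion):
    """Map an emotion label to binary valence: 1 = positive, 0 = negative."""
    label = emotion.strip().lower()
    if label in POSITIVE:
        return 1
    if label in NEGATIVE:
        return 0
    raise ValueError(f"no valence mapping for {emotion!r}")


def split_sizes(total, train_fraction=0.8):
    """Training/testing sizes for a fraction-based split, rounded to whole utterances."""
    n_train = round(total * train_fraction)
    return n_train, total - n_train


for key, (total, n_train, n_test) in COUNTS.items():
    print(key, split_sizes(total) == (n_train, n_test))
```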

| Group | Type | Feature | Number |
|---|---|---|---|
| Prosodic | Energy | Logarithm of Energy | 10 |
| Prosodic | Pitch | Fundamental Frequency | 70 |
| Prosodic | Times | Zero Crossing Rate | 24 |
| Spectral | Cepstral | MFCC | 133 |
| Spectral | Shape | Spectral Roll-off Point | 12 |
| Spectral | Amplitude | Spectral Flux | 12 |
| Spectral | Moment | Spectral Centroid | 22 |
| Spectral | Audio | Spectral Compactness | 10 |
| Spectral | Frequency | Fast Fourier Transform | 9 |
| Spectral | Signature | Spectral Variability | 21 |
| Spectral | Envelope | LPCC | 81 |
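To make a few of the prosodic and spectral entries concrete, the sketch below computes log energy, zero crossing rate and spectral centroid for a single frame. It uses common textbook definitions. It is an assumption that these match jAudio's implementations, which may differ in windowing, normalization and units; a centroid could, for example, be expressed in bins rather than Hz. The function names are hypothetical. A naive DFT is used only to keep the sketch dependency-free.

```python
import cmath
import math


def log_energy(frame, eps=1e-10):
    """Natural log of the frame energy (sum of squared samples); eps avoids log(0)."""
    return math.log(sum(s * s for s in frame) + eps)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum((a >= 0) != (b >= 0) for a, b in zip(frame, frame[1:]))
    return crossings / (len(frame) - 1)


def magnitude_spectrum(frame):
    """|X[k]| for k = 0..N/2 via a naive O(N^2) DFT (clarity over speed)."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency of the frame's spectrum, in Hz."""
    mags = magnitude_spectrum(frame)
    freqs = [k * sample_rate / len(frame) for k in range(len(mags))]
    total = sum(mags)
    return sum(f * m for f, m in zip(freqs, mags)) / total if total else 0.0


# A 64-sample frame of a 1 kHz sine sampled at 8 kHz (exactly 8 periods)
sr = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(64)]
print(round(spectral_centroid(tone, sr), 3), zero_crossing_rate([1, -1, 1, -1]))
```

A pure tone that fits an integer number of periods into the frame puts almost all of its energy in one DFT bin, so its centroid lands on the tone's frequency.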

| Key | Feature Description |
|---|---|
| F1 | Derivative of standard deviation of area method of moments overall standard deviation (6th variant) |
| F2 | Derivative of standard deviation of relative difference function overall standard deviation standard (1st variant) |
| F3 | Area method of moments overall standard deviation (7th variant) |
| F4 | Peak detection overall average (10th variant) |
| F5 | Standard deviation of area method of moments overall standard deviation (8th variant) |
| F6 | Derivative of area method of moments overall standard deviation (8th variant) |
| F7 | Area method of moments of Mel frequency cepstral coefficients overall standard deviation (1st variant) |
| F8 | Derivative of area method of moments overall standard deviation (9th variant) |
| F9 | Derivative of standard deviation of area method of moments overall standard deviation (4th variant) |
| F10 | Standard deviation of method of moments overall average (2nd variant) |
| F11 | Peak detection overall average (10th variant) |
| F12 | Peak detection overall average (9th variant) |
| F13 | Peak detection overall average (7th variant) |
| F14 | Derivative of standard deviation of area method of moments overall standard deviation (6th variant) |
| F15 | Method of moments overall average (3rd variant) |
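The feature names in this table read like jAudio's compositional naming. In that scheme, per-window features are wrapped in metafeatures and then summarized across a recording. One plausible reading is given here as an assumption, not as jAudio's documented behaviour:

- "Derivative of X" is the window-to-window difference of X.
- "Standard deviation of X" is a running standard deviation over the last few windows.
- "Overall average" and "overall standard deviation" summarize a track over the whole utterance.

The sketch composes these helpers to decode a name such as F1. All names are hypothetical, the running-window length `span` is an assumed parameter, and the track values are made up.

```python
from statistics import fmean, pstdev


def derivative(track):
    """Window-to-window difference of a per-window feature track."""
    return [b - a for a, b in zip(track, track[1:])]


def running_std(track, span):
    """Population standard deviation over the last `span` windows (fewer at the start)."""
    return [pstdev(track[max(0, i - span + 1): i + 1]) for i in range(len(track))]


def overall_average(track):
    """Mean of a track over the whole recording."""
    return fmean(track)


def overall_std(track):
    """Population standard deviation of a track over the whole recording."""
    return pstdev(track)


# Hypothetical per-window values of one coefficient of some spectral feature
track = [1.0, 2.0, 4.0, 7.0, 11.0]
# One reading of "Derivative of standard deviation of <feature> overall standard deviation"
f1_like = overall_std(derivative(running_std(track, span=2)))
print(overall_average(derivative(track)), f1_like)
```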

| Stage | RALOG | RDF | GBM | ETC | XGB |
|---|---|---|---|---|---|
| Before RFE | 43.314 | 5.290 | 60.495 | 0.168 | 0.004 |
| After RFE | 9.050 | 0.459 | 16.228 | 0.244 | 0.001 |
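The effect of RFE on training cost can be summarized as a before/after ratio per algorithm. The sketch copies the times from the table. The table does not state the unit, so a unitless ratio is the safer summary; a ratio above 1 means training became faster after RFE. ETC is the one algorithm whose reported time increased. The XGB times are so small at three decimal places that their ratio is sensitive to rounding. The names `BEFORE`, `AFTER` and `speedups` are hypothetical.

```python
# Training times copied from the table (unit not stated there)
BEFORE = {"RALOG": 43.314, "RDF": 5.290, "GBM": 60.495, "ETC": 0.168, "XGB": 0.004}
AFTER = {"RALOG": 9.050, "RDF": 0.459, "GBM": 16.228, "ETC": 0.244, "XGB": 0.001}


def speedups(before, after):
    """Ratio of training time before RFE to after RFE; > 1 means RFE made training faster."""
    return {name: before[name] / after[name] for name in before}


for name, ratio in speedups(BEFORE, AFTER).items():
    print(f"{name}: {ratio:.2f}x")
```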

| Algorithm | Stage | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| RALOG | Before RFE | 82 (±0.007) | 83 (±0.007) | 83 (±0.007) | 82 (±0.007) |
| RALOG | After RFE | 90 (±0.006) | 90 (±0.006) | 91 (±0.006) | 92 (±0.005) |
| RDF | Before RFE | 72 (±0.009) | 82 (±0.007) | 77 (±0.008) | 80 (±0.008) |
| RDF | After RFE | 80 (±0.008) | 88 (±0.006) | 82 (±0.007) | 85 (±0.007) |
| GBM | Before RFE | 69 (±0.009) | 73 (±0.009) | 71 (±0.009) | 73 (±0.009) |
| GBM | After RFE | 79 (±0.008) | 82 (±0.007) | 80 (±0.008) | 85 (±0.007) |
| XGB | Before RFE | 71 (±0.009) | 81 (±0.008) | 75 (±0.008) | 78 (±0.008) |
| XGB | After RFE | 78 (±0.008) | 85 (±0.007) | 81 (±0.006) | 87 (±0.007) |
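All four scores in this table can be derived from true and predicted labels. The text here does not state how per-class scores were averaged into a single figure. The sketch therefore assumes macro averaging, an unweighted mean over the negative and positive classes; weighted averaging would instead weight each class by its number of examples. Function names and the toy labels are hypothetical.

```python
def class_scores(y_true, y_pred, cls):
    """Precision, recall and F1 for one class treated as the target label."""
    tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
    fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
    fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def report(y_true, y_pred, labels=("negative", "positive")):
    """Per-class scores, their macro averages, and overall accuracy."""
    per_class = {c: class_scores(y_true, y_pred, c) for c in labels}
    macro = tuple(sum(s[i] for s in per_class.values()) / len(labels) for i in range(3))
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return per_class, macro, accuracy


y_true = ["negative", "negative", "negative", "positive", "positive"]
y_pred = ["negative", "negative", "positive", "positive", "negative"]
per_class, macro, accuracy = report(y_true, y_pred)
print(per_class, macro, accuracy)
```

Macro F1 is the mean of the per-class F1 scores. It is not, in general, the harmonic mean of macro precision and macro recall, so an averaged F1 column cannot always be recomputed from the averaged precision and recall columns.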

| Algorithm | Stage | Precision (Negative) | Precision (Positive) | Recall (Negative) | Recall (Positive) | F1-score (Negative) | F1-score (Positive) | Accuracy (Negative) | Accuracy (Positive) |
|---|---|---|---|---|---|---|---|---|---|
| RALOG | Before RFE | 88 | 76 | 86 | 79 | 88 | 77 | 86 | 78 |
| RALOG | After RFE | 93 | 87 | 94 | 86 | 94 | 87 | 97 | 86 |
| RDF | Before RFE | 83 | 59 | 84 | 78 | 82 | 67 | 82 | 74 |
| RDF | After RFE | 97 | 86 | 97 | 62 | 88 | 87 | 92 | 72 |
| GBM | Before RFE | 81 | 56 | 78 | 64 | 80 | 62 | 79 | 71 |
| GBM | After RFE | 92 | 66 | 89 | 74 | 90 | 70 | 91 | 79 |
| XGB | Before RFE | 84 | 60 | 85 | 79 | 83 | 71 | 84 | 76 |
| XGB | After RFE | 95 | 61 | 88 | 82 | 91 | 70 | 94 | 80 |

| Algorithm | Valence | Before RFE: Negative | Before RFE: Positive | After RFE: Negative | After RFE: Positive |
|---|---|---|---|---|---|
| RALOG | Negative | 88 | 12 | 93 | 7 |
| RALOG | Positive | 24 | 76 | 13 | 87 |
| RDF | Negative | 83 | 17 | 97 | 3 |
| RDF | Positive | 41 | 59 | 38 | 62 |
| GBM | Negative | 81 | 19 | 92 | 8 |
| GBM | Positive | 41 | 59 | 34 | 66 |
| ETC | Negative | 80 | 20 | 93 | 7 |
| ETC | Positive | 43 | 57 | 37 | 63 |
| XGB | Negative | 84 | 16 | 95 | 5 |
| XGB | Positive | 40 | 60 | 39 | 61 |

| Corpus | Stage | Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|---|---|
| EMO-DB | Before RFE | 95 | 96 | 95 | 95 |
| EMO-DB | After RFE | 97 | 96 | 96 | 96 |
| SAVEE | Before RFE | 97 | 96 | 96 | 96 |
| SAVEE | After RFE | 98 | 98 | 98 | 98 |
| RAVDESS | Before RFE | 94 | 94 | 94 | 95 |
| RAVDESS | After RFE | 97 | 97 | 97 | 97 |
| EMOVO | Before RFE | 92 | 92 | 93 | 92 |
| EMOVO | After RFE | 94 | 94 | 94 | 95 |
| CREMA-D | Before RFE | 88 | 89 | 88 | 89 |
| CREMA-D | After RFE | 85 | 95 | 89 | 92 |

| Stage | EMO-DB | SAVEE | RAVDESS | EMOVO | CREMA-D |
|---|---|---|---|---|---|
| Before RFE | 3.440 | 2.070 | 6.190 | 2.540 | 29.200 |
| After RFE | 0.780 | 0.470 | 1.380 | 0.580 | 6.050 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zvarevashe, K.; Olugbara, O.O. Recognition of Cross-Language Acoustic Emotional Valence Using Stacked Ensemble Learning. Algorithms 2020, 13, 246. https://doi.org/10.3390/a13100246
