Abstract

We describe an algorithm computing an optimal prefix free code for n unsorted positive weights in time within $O(n(1+\lg\alpha)) \subseteq O(n\lg n)$, where the alternation $\alpha \in [1..n-1]$ approximates the minimal amount of sorting required by the computation. This asymptotic complexity is within a constant factor of the optimal in the algebraic decision tree computational model, in the worst case over all instances of size n and alternation $\alpha$. Such results refine the state of the art complexity of $\Theta(n\lg n)$ in the worst case over instances of size n in the same computational model, a landmark in compression and coding since 1952. Besides the new analysis technique, this improvement is obtained by combining a new algorithm, inspired by van Leeuwen’s algorithm to compute optimal prefix free codes from sorted weights (known since 1976), with a relatively minor extension of Karp et al.’s deferred data structure to partially sort a multiset according to the queries performed on it (known since 1988). Preliminary experimental results on text compression by words show $\alpha$ to be polynomially smaller than n, which suggests improvements by at most a constant multiplicative factor in the running time for such applications.

1. Introduction

Given n positive weights $W[1..n]$ coding for the frequencies of n messages (we use the conveniently concise and general terminology of messages for the input and symbols for the output, as introduced by Huffman [] himself, which should not be confused with other terminologies found in the literature, such as input symbols, letters, or words for the input, and output symbols, or bits in the binary case, for the output), and a constant number D of (output) symbols, an optimal prefix free code [] is a set of n code strings on the alphabet $[1..D]$, of variable lengths $L[1..n]$, such that no string is a prefix of another and the average length of a code is minimized (i.e., $\sum_{i=1}^{n} L[i]\,W[i]$ is minimal). The particularity of such codes is that even though the code strings assigned to the messages can differ in length (assigning shorter ones to more frequent messages yields compression), the prefix free property ensures non-ambiguous decoding.

Such optimal codes, known since 1952 [], are used in “all the mainstream compression formats” [] (e.g., PNG, JPEG, MP3, MPEG, GZIP, and PKZIP). “Huffman’s algorithm for computing minimum-redundancy prefix-free codes has almost legendary status in the computing disciplines” (Moffat []). The concept is “one of the fundamental ideas that people in computer science and data communications are using all the time” (Knuth []), and the code itself is “one of the enduring techniques of data compression. It was used in the venerable PACK compression program, authored by Szymanski in 1978, and remains no less popular today” (Moffat et al. [] in 1997).

Even though some compression methods use only precomputed tables coding for an Optimal Binary Prefix Free Code built into the compression method, there are still many applications that require the computation of such codes for each instance (e.g., BZIP2 [], JPEG [], etc.). In the current state of the art, the running time of such algorithms is almost never the bottleneck of the compression process, but it is nevertheless worth studying, if only for theoretical sake, and potentially for future applications less directly related to compression: Takaoka [,,] described an adaptive version of merge sort which scans for sorted runs in the input, and merges them according to a scheme which is exactly the Huffman code tree; and Barbay and Navarro [] described how to use such a code tree to compute the optimal shape of a wavelet tree for runs-based compression of permutations. This, in turn, has applications to the compression of general texts, via the compression of the permutations appearing in the Burrows-Wheeler transform of the text.

1.1. Background

Any prefix free code can be computed in time linear in the input size from a set of code lengths satisfying the Kraft inequality $\sum_{i=1}^{n} D^{-L[i]} \le 1$. The original description of the code by Huffman [] yields a heap-based algorithm performing $O(n\lg n)$ algebraic operations, using the bijection between D-ary prefix free codes and D-ary cardinal trees []. In order to consider the optimality of this running time, one must notice that this algorithm is not in the comparison model (as it performs sums on elements of its input), but still in a quite restricted computational model, dubbed the algebraic decision tree computational model [], composed of algorithms which can be modeled as a decision tree where decision nodes are based only on algebraic operations with a finite number of operators. In the algebraic decision tree computational model, the complexity of the algorithm suggested by Huffman [] is asymptotically optimal for any constant value of D, in the worst case over instances composed of n positive weights, as computing the optimal prefix free code for the weights $D^{x_1}, \ldots, D^{x_n}$ is equivalent to sorting the positive integers $x_1, \ldots, x_n$, a task proven to require $\Omega(n\lg n)$ operations (as D is a constant) in the algebraic decision tree model, by a simple argument of information theory.
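To make the heap-based algorithm and the Kraft inequality concrete, here is a minimal Python sketch for the binary case $D = 2$ only. The function names `huffman_code_lengths` and `kraft_sum` are ours, not from the literature; the sketch assumes at least one weight and returns codelengths (from which a code can be built in linear time) rather than code strings.

```python
import heapq
from fractions import Fraction


def huffman_code_lengths(weights):
    """Binary (D = 2) Huffman codelengths, one per weight, in O(n lg n) time."""
    n = len(weights)
    if n == 1:
        return [1]  # convention: a lone message still gets a one-symbol code
    # Leaves are node ids 0..n-1; internal nodes get ids n..2n-2 in creation order.
    heap = [(w, i) for i, w in enumerate(weights)]
    heapq.heapify(heap)
    parent = [0] * (2 * n - 1)
    next_id = n
    while len(heap) > 1:
        w1, a = heapq.heappop(heap)  # the two nodes of minimal weight...
        w2, b = heapq.heappop(heap)
        parent[a] = parent[b] = next_id  # ...become siblings under a new node
        heapq.heappush(heap, (w1 + w2, next_id))
        next_id += 1
    # A parent always has a larger id than its children, so scanning ids
    # downwards from the root (id 2n-2, depth 0) computes every depth in O(n).
    depth = [0] * (2 * n - 1)
    for v in range(2 * n - 3, -1, -1):
        depth[v] = depth[parent[v]] + 1
    return depth[:n]


def kraft_sum(lengths, D=2):
    """Exact value of sum_i D**(-L[i]); it is at most 1 for any prefix free code."""
    return sum(Fraction(1, D ** length) for length in lengths)


weights = [1, 2, 3, 4, 5, 5, 6, 7]
lengths = huffman_code_lengths(weights)
print(lengths, kraft_sum(lengths), sum(l * w for l, w in zip(lengths, weights)))
# prints [4, 4, 3, 3, 3, 3, 3, 2] 1 95
```

Huffman trees are full binary trees, so the Kraft sum is exactly 1 here, and the weighted length 95 equals the sum of the weights of the internal nodes created.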

Yet, not all instances require the same amount of work to compute an optimal code (see Table 1 for a partial list of relevant results):

Table 1.

A selection of results on the computational complexity of optimal prefix free codes. n is the number of weights in the input; σ is the number of distinct weights in the input; k is the number of distinct codelengths produced; and α is the difficulty measure introduced in this work, the number of alternations between External nodes and Internal nodes in an execution of the algorithm suggested by Huffman [] or of van Leeuwen’s algorithm [] (see Section 3.1 for the formal definition).

- When the weights are given in sorted order, van Leeuwen [] showed in 1976 that an optimal code can be computed using within $O(n)$ algebraic operations.

- When the weights consist of σ distinct values and are given in a sorted, compressed form, Moffat and Turpin [] showed in 1998 how to compute an optimal binary prefix free code using within $O(\sigma(1+\lg(n/\sigma)))$ algebraic operations, which is sublinear in n when $\sigma \in o(n)$.

- In the case where the weights are given unsorted, Belal and Elmasry [,] described in 2006 many families of instances for which an optimal binary prefix free code can be computed in linear time.

Such examples of “easy instances” suggest that it could be possible to compute optimal prefix free codes in much less time by taking advantage of some measure of “easiness”, and indeed Belal and Elmasry [,] proposed an algorithm claimed to perform within $O(kn)$ algebraic operations, in the worst case over instances formed by n weights such that the binary prefix free code obtained by Huffman’s method [] has exactly k distinct code lengths. The proof included in the proceedings supports only a weaker bound, and the claim of a complexity within $O(kn)$ was later downgraded to a weaker bound in extended versions of their article published on public repositories []. Such a result is asymptotically better than the state of the art when k is a constant, but worse for larger values of k; see our own experimental results in Table 3 for the values of k observed in practice.

1.2. Question

In the context described above, we wondered about the existence of an algorithm taking advantage of small values of k, while behaving more reasonably than Belal and Elmasry’s solution [,] for large values of k. Kirkpatrick [] defined a dovetailing combination of several algorithms as running all of them in parallel (not necessarily at the same rate) and stopping them all as soon as one of them reaches the answer. We wondered whether there is an algorithm performing better than a mere “dovetailing” combination of Belal and Elmasry’s solution [,] with a solution running in time within $O(n\lg n)$.

Given n positive integer weights, can one compute an optimal binary prefix free code in time within $o(n\lg n)$ in the algebraic decision tree computational model for some general class of instances?

1.3. Contributions

We answer in the affirmative for many classes of instances (extending and formalizing the proofs of the same theoretical results previously described in 2016 []), identified by the “alternation” measure assigning a difficulty to each instance (formally defined in Section 3.1):

Theorem 1.

The computational complexity of optimal binary prefix free codes is within $\Theta(n(1+\lg\alpha))$ in the algebraic decision tree computational model, in the worst case over instances of size n and alternation α.

Proof.

We describe in Lemma 2 of Section 2.2 a deferred data structure which supports q queries of type rank, select and partialSum in time within $O(n(1+\lg q))$, all within the algebraic decision tree computational model. We describe in Section 2.3 the Group–Dock–Mix (GDM) algorithm, inspired by van Leeuwen’s algorithm [] and modified to use this deferred data structure to compute optimal prefix free codes given an unsorted input, and we prove its correctness in Lemma 3. We show in Lemma 8 that any algorithm A in the algebraic decision tree computational model performs within $\Omega(n(1+\lg\alpha))$ algebraic operations in the worst case over instances of size n and alternation α. We show in Lemma 5 that the GDM algorithm performs such queries, which yields in Corollary 6 a complexity within , all within the algebraic decision tree computational model. As and for this range (Lemma 7), the asymptotic optimality ensues. □

When α is at its maximum (i.e., $\alpha = n-1$), this complexity matches the tight asymptotic computational complexity bound of $\Theta(n\lg n)$ for algorithms in the algebraic decision tree computational model in the worst case over all instances of size n. When α is substantially smaller than n, more precisely when $\lg\alpha \in o(\lg n)$ (we discuss the practicality of such values in Section 4), the GDM algorithm performs within $o(n\lg n)$ operations, down to linear in n for constant values of α.

Another natural question is whether practical instances present values of parameters such as k and α small enough that taking advantage of them makes a difference. Through a preliminary set of experiments on word-based compression of English texts, we tentatively answer in the negative (this experimentation is a new addition to the previous presentation of this work []). The alternation α of practical instances is much smaller than the number n of weights, with ratios $n/\alpha$ (see Table 3 for more similar values) from 77 ( and ) to 154 ( and 67,780). However, α does not seem to be small enough to make a meaningful difference in terms of running time on this data set (see Table 3), which suggests that such instances are not “easy enough” for such techniques to pay off. We obtain similar results about the parameter k: on all instances of the data set, its values render Belal and Elmasry’s solution [,] non-competitive with both the state of the art solution running in time within $O(n\lg n)$ and the solution proposed in this work running in time within $O(n(1+\lg\alpha))$.

In the next section (Section 2), we describe our solution in three parts: the intuition behind the general strategy in Section 2.1, the deferred data structure which maintains a partially sorted list of weights while supporting rank, select and partialSum queries in Section 2.2, and the algorithm which uses those operators to compute an optimal prefix free code in Section 2.3. Our most technical contribution consists in the analysis of the running time of this solution, described in Section 3: the formal definition of the parameter of the analysis in Section 3.1, the upper bound in Section 3.2 and the matching lower bound in Section 3.3. In Section 4, we compare the experimental values of the difficulty measures over unordered instances of the optimal prefix free code computation on a sample of texts, the alternation introduced in this work and the number k of distinct code lengths proposed by Belal and Elmasry [,]. We conclude with a theoretical comparison of our results with those from Belal and Elmasry [,] in Section 5, along with a discussion of potential themes for further research.

2. Solution

The solution that we describe is a combination of two results: some results about deferred data structures for multisets, which support queries in a “lazy” way; and some results about optimal prefix free codes themselves, relating the computational cost of partially sorting a set of positive integers to the computational cost of computing a binary optimal prefix free code for the corresponding frequency distribution. We describe the general intuition of our solution in Section 2.1, the deferred data structure in Section 2.2, and the algorithm in Section 2.3.

2.1. General Intuition

Observing that the algorithm suggested by Huffman [] in 1952 always creates the internal nodes in increasing order of weight, van Leeuwen [] described in 1976 an algorithm to compute optimal prefix free codes in linear time when the input (i.e., the weights of the external nodes) is given in sorted order. A close look at the execution of van Leeuwen’s algorithm [] reveals a sequence of sequential searches for the insertion rank r of the weight of some internal node in the list of weights of external nodes. Such a sequential search could be replaced by a more efficient search algorithm in order to reduce the number of comparisons performed (e.g., a doubling search [] would find such a rank r in $O(1+\lg r)$ comparisons instead of the r comparisons spent by a sequential search). Of course, this would reduce the number of comparisons performed, but it would not reduce the number of algebraic operations (in this case, sums) performed, and hence it would not significantly reduce the total running time of the algorithm either.
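The following minimal sketch illustrates the two-queue formulation of van Leeuwen’s algorithm, assuming the binary case and weights already sorted in non-decreasing order (the function name `van_leeuwen_code_lengths` is hypothetical). Because internal nodes are created in non-decreasing order of weight, two FIFO queues replace the heap; each selection compares the two queue fronts, which is where the sequential searches described above take place.

```python
from collections import deque


def van_leeuwen_code_lengths(sorted_weights):
    """Binary codelengths in O(n) time, assuming non-decreasing input order."""
    n = len(sorted_weights)
    if n == 1:
        return [1]
    externals = deque((w, i) for i, w in enumerate(sorted_weights))
    # Internal nodes are created in non-decreasing order of weight,
    # so a plain FIFO queue keeps them sorted without any heap.
    internals = deque()
    parent = [0] * (2 * n - 1)
    next_id = n

    def pop_min():
        # At most one comparison between the two queue fronts; ties favor externals.
        if not internals or (externals and externals[0][0] <= internals[0][0]):
            return externals.popleft()
        return internals.popleft()

    while len(externals) + len(internals) > 1:
        w1, a = pop_min()
        w2, b = pop_min()
        parent[a] = parent[b] = next_id
        internals.append((w1 + w2, next_id))
        next_id += 1
    depth = [0] * (2 * n - 1)
    for v in range(2 * n - 3, -1, -1):  # parents have larger ids than children
        depth[v] = depth[parent[v]] + 1
    return depth[:n]
```

For instance, `van_leeuwen_code_lengths([1, 2, 4, 8])` returns `[3, 3, 2, 1]`.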

Example 1.

Consider an instance for the computation of an optimal prefix free code formed by n sorted positive weights $W[1..n]$ such that the first internal node created has larger weight than the largest weight in the original array (i.e., $W[1]+W[2] > W[n]$). On such an instance, van Leeuwen’s algorithm [] starts by performing a linear number of comparisons (one against each of $W[3], \ldots, W[n]$) in the equivalent of a sequential search in W for $W[1]+W[2]$: a binary search would perform within $O(\lg n)$ comparisons instead, and a doubling search [] no more than within $O(\lg n)$ comparisons as well.


As mentioned above, any algorithm must access (and sum) each weight at least once in order to compute an optimal prefix free code for the input, so that reducing the number of comparisons does not reduce the running time of van Leeuwen’s algorithm on a sorted input, but it illustrates how instances with clustered external and internal nodes are “easier” than instances in which they are interleaved.
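The comparison counts of Example 1 can be checked with a minimal sketch of doubling search (also known as exponential or galloping search), written here under our own naming (`doubling_search` is hypothetical). It probes positions 1, 2, 4, 8, … until it overshoots the target, then binary searches the bracketed interval, using $O(1+\lg r)$ comparisons to find a rank r.

```python
def doubling_search(sorted_values, x):
    """Return (r, comparisons), where r is the number of entries strictly
    smaller than x, found by doubling search followed by binary search."""
    n = len(sorted_values)
    comparisons = 0
    hi = 1
    # Doubling phase: probe positions 1, 2, 4, 8, ... (1-based) until an entry >= x.
    while hi <= n:
        comparisons += 1
        if sorted_values[hi - 1] >= x:
            break
        hi *= 2
    lo = hi // 2  # the first lo entries are all known to be < x
    hi = min(hi, n)
    # Binary phase: find the first entry >= x among positions lo..hi-1 (0-based).
    while lo < hi:
        mid = (lo + hi) // 2
        comparisons += 1
        if sorted_values[mid] < x:
            lo = mid + 1
        else:
            hi = mid
    return lo, comparisons


# Example 1 with n = 100 sorted weights such that W[1] + W[2] > W[n].
W = list(range(100, 200))
r, c = doubling_search(W[2:], W[0] + W[1])
print(r, c)  # prints 98 12
```

A sequential scan would spend 98 comparisons on the same search; as noted above, the sums still dominate the running time.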

The algorithm suggested by Huffman [] starts with a heap of external nodes, selects the two nodes of minimal weight, pairs them into a new node which it adds to the heap, and iterates until only one node is left. Whereas the type of the nodes selected, external or internal, does not matter in the analysis of the complexity of Huffman’s algorithm, we claim that the computational cost of optimal prefix free codes can be greatly reduced on instances where many external nodes are selected consecutively. We define the “EI signature” of an instance as the first step toward the characterization of such instances:

Definition 1 (EI signature).

Given an instance of the optimal prefix free code problem formed by n positive weights $W[1..n]$, its EI signature is a string of length $2n-1$ over the alphabet $\{E, I\}$ (where E stands for “external” and I for “internal”) marking, at each step of the algorithm suggested by Huffman [], whether an external or an internal node is chosen as the minimum (including the last node returned by the algorithm, for simplicity).
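A minimal sketch computing the EI signature and its number of maximal blocks of E (the names `ei_signature` and `alternation` are ours). Huffman’s description leaves ties unspecified, so this sketch assumes, as a labeled choice, that ties between equal weights are broken in favor of external nodes; the signature string can depend on that choice.

```python
import heapq


def ei_signature(weights):
    """EI signature of a binary Huffman execution (assumes at least two weights).

    One letter per node selected as a minimum (E = external, I = internal),
    plus a final I for the root returned at the end. Assumption: ties between
    equal weights are broken in favor of external nodes."""
    EXTERNAL, INTERNAL = 0, 1  # kind is the tie-breaker: externals pop first
    heap = [(w, EXTERNAL, i) for i, w in enumerate(weights)]
    heapq.heapify(heap)
    letters = []
    next_id = len(weights)
    while len(heap) > 1:
        w1, kind1, _ = heapq.heappop(heap)
        w2, kind2, _ = heapq.heappop(heap)
        letters.append("EI"[kind1])
        letters.append("EI"[kind2])
        heapq.heappush(heap, (w1 + w2, INTERNAL, next_id))
        next_id += 1
    letters.append("I")  # the root, internal as soon as n >= 2
    return "".join(letters)


def alternation(signature):
    """Number of maximal blocks of Es, i.e. occurrences of "EI"."""
    return signature.count("EI")


for ws in ([1, 2, 3, 4, 5, 5, 6, 7], [4, 4, 4, 4], [1, 2, 4, 8]):
    s = ei_signature(ws)
    print(s, alternation(s))
# prints EEEIEEEEIEIIIII 3, then EEEEIII 1, then EEIEIEI 3
```

Since the signature starts with E and ends with I, counting occurrences of “EI” counts exactly the maximal blocks of consecutive Es.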

The analysis described in Section 3 is based on the number of maximal blocks of consecutive positions formed only of E in the EI signature of the instance. We can already show some basic properties of this measure:

Lemma 1.

Given the EI signature of $n \ge 2$ unsorted positive weights $W[1..n]$,

1. The number of occurrences of E in the signature is $n$;

2. The number of occurrences of I in the signature is $n-1$;

3. The length of the signature is their sum, $2n-1$;

4. The signature starts with two E;

5. The signature finishes with one I;

6. The number of occurrences of EI in the signature is one more than the number of occurrences of IE in it;

7. The number of occurrences of EI in the signature is at least 1 and at most $n-1$.

Proof.

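The first three properties are simple consequences of basic properties of binary trees: each of the n external nodes is selected exactly once, and each of the $n-1$ internal nodes of a binary tree with n leaves is selected exactly once (the last one being the root returned by the algorithm). The signature starts with two E as the first two nodes paired are always external. It finishes with one I as the last node returned is always (for $n \ge 2$) an internal node. The last two properties are simple consequences of the fact that the signature is a binary string starting with an E and finishing with an I (and, for the upper bound of the last property, of the fact that its first block of E has length at least two). □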

Example 2.

For example, consider the text “ABBCCCDDDDEEEEEFFFFFGGGGGGHHHHHHH” formed by the concatenation of one occurrence of “A”, two occurrences of “B”, three occurrences of “C”, four occurrences of “D”, five occurrences of “E”, five (again) occurrences of “F”, six occurrences of “G” and seven occurrences of “H”, so that the corresponding frequencies are $W = \{1, 2, 3, 4, 5, 5, 6, 7\}$. It corresponds to an instance of size $n = 8$, whose EI signature (e.g., “EEEIEEEEIEIIIII” when ties are broken in favor of external nodes) has length 15, starts with EE, finishes with I, and contains only three occurrences of EI, corresponding to a decomposition into three maximal blocks of consecutive Es, out of a maximal potential number of 7 for the alphabet size $n = 8$.


Instances such as the one presented in Example 2, with very few blocks of E (more than in Example 3, but many fewer than in the worst case), are easier to solve than instances with many such blocks. For example, an instance W of length n whose EI signature is composed of a single run of n Es followed by a single run of $n-1$ Is (such as the one described in Figure 1) can be solved in linear time, and in particular without sorting the weights: it is enough to assign the codelength $\lfloor \lg n \rfloor$ to the $2^{\lceil \lg n \rceil} - n$ largest weights and the codelength $\lceil \lg n \rceil$ to the $2n - 2^{\lceil \lg n \rceil}$ smallest weights. Separating those weights is a simple select operation, supported in amortized linear time by the data structures described in the following section. We describe two other extreme examples, starting with one where all the weights are equal (as a particular case of when they are all within a factor of two of each other).
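A minimal sketch of this linear-time assignment, assuming (without checking it) that the EI signature of the input is a single run of n Es followed by $n-1$ Is: codelength $\lfloor \lg n \rfloor$ for the $2^{\lceil \lg n \rceil} - n$ largest weights and $\lceil \lg n \rceil$ for the others. For illustration we swap in a plain randomized quickselect (expected linear time) in place of the deferred data structure of Section 2.2; both function names are hypothetical.

```python
import random


def kth_smallest(values, k):
    """k-th smallest value (1-based, with multiplicity) by randomized quickselect:
    expected O(n) time, no sorting."""
    items = list(values)
    while True:
        pivot = random.choice(items)
        smaller = [v for v in items if v < pivot]
        n_equal = sum(1 for v in items if v == pivot)
        if k <= len(smaller):
            items = smaller
        elif k <= len(smaller) + n_equal:
            return pivot
        else:
            k -= len(smaller) + n_equal
            items = [v for v in items if v > pivot]


def single_block_code_lengths(weights):
    """Codelengths for an instance whose EI signature is E^n I^(n-1) (assumed, not checked)."""
    n = len(weights)
    if n == 1:
        return [1]
    floor_lg = n.bit_length() - 1     # floor(lg n)
    ceil_lg = (n - 1).bit_length()    # ceil(lg n), valid for n >= 2
    n_long = 2 * n - (1 << ceil_lg)   # how many smallest weights get ceil(lg n)
    if n_long == n:                   # n is a power of two: a uniform code
        return [ceil_lg] * n
    threshold = kth_smallest(weights, n_long)
    lengths = [floor_lg] * n
    remaining = n_long
    for i, w in enumerate(weights):   # weights strictly below the threshold
        if w < threshold:
            lengths[i] = ceil_lg
            remaining -= 1
    for i, w in enumerate(weights):   # ties with the threshold fill the rest
        if remaining > 0 and w == threshold:
            lengths[i] = ceil_lg
            remaining -= 1
    return lengths
```

For instance, on the weights 10, 11, 12, 13, 14 (all within a factor of two of each other) it returns `[3, 3, 2, 2, 2]`, the same codelengths as Huffman’s algorithm, after a single selection.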

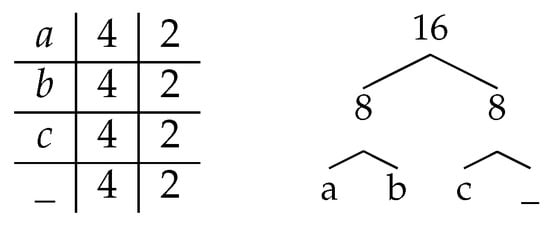

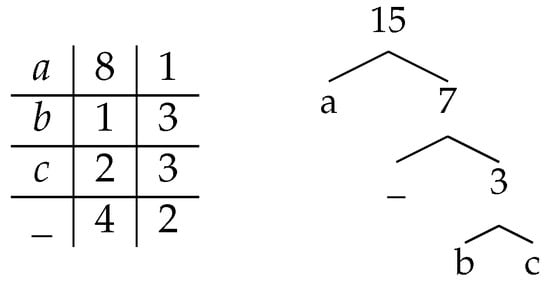

Figure 1.

Illustrations for the instance based on the text “ba_bb_caca_ba_cc”, minimizing the number of occurrences of “EI” in its EI signature “EEEEIII”. The columns of the array respectively list the messages, their numbers of occurrences and the code lengths assigned to each.

Example 3.

Consider the text “ba_bb_caca_ba_cc” from Figure 1. Each of the four messages (input symbols) of its alphabet occurs exactly 4 times, so that an optimal prefix free code assigns a uniform codelength of 2 bits to all messages (see Figure 1). There is no need to sort the messages by frequency (and the prefix free code does not yield any information about the order in which the messages would be sorted by monotone frequencies), and accordingly the EI signature of this text, “EEEEIII”, has a single block of Es, indicating a very easy instance. The same holds if the frequencies of the messages are all within a factor of two of each other.

On the other hand, some instances present the opposite extreme, where no weight is within a factor of two of any other, which “forces” any algorithm computing optimal prefix free codes to sort the weights.

Example 4.

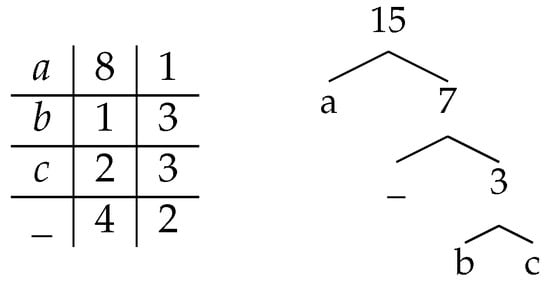

Consider the text “aaaaaaaabcc____” from Figure 2 (composed of one occurrence of “b”, two occurrences of “c”, four occurrences of “_”, and eight occurrences of “a”), such that the frequencies of its messages follow an exponential distribution, so that an optimal prefix free code assigns different codelengths to almost all messages (see the third column of the array in Figure 2). Any optimal prefix free code for this instance yields all the information required to sort the messages by frequencies. Accordingly, the EI signature “EEIEIEI” of this instance has three blocks of Es (out of three possible ones) for this value $n = 4$ of the alphabet size, indicating a more difficult instance. The same holds with more general distributions, as long as no two message frequencies are within a factor of two of each other.

Figure 2.

Illustrations for the instance based on the text “aaaaaaaabcc____”, maximizing the number of occurrences of “EI” in its EI signature “EEIEIEI”. The columns of the array respectively list the messages, their numbers of occurrences and the code lengths assigned to each.

Those various examples should give an intuition of the features of the instance that our techniques aim to take advantage of. We describe those techniques more formally in the two following sections, starting with the deferred data structure used to partially sort the weights in Section 2.2, and following with the algorithm itself in Section 2.3.

2.2. Partial Sum Deferred Data Structure

Given a multiset $W[1..n]$ of size n on an alphabet of size σ, Karp et al. [] defined the first deferred data structure supporting, for all $x \in \mathbb{R}$ and $r \in [1..n]$, queries such as $\mathrm{rank}(W, x)$, the number of elements which are strictly smaller than x in W; and $\mathrm{select}(W, r)$, the r-th smallest value (counted with multiplicity) in W. Their data structure supports q queries in time within $O(n(1+\lg q))$, all in the comparison model (Karp et al.’s result [] is actually better than this formula when the number q of queries is larger than the size n of the multiset, but such a configuration does not occur in the computation of an optimal prefix free code, where the number q of queries is always smaller than or equal to n: for simplicity, we summarize their result as $O(n(1+\lg q))$). To achieve this result, the data structure partially sorts its data in order to minimize the computational cost of future queries, but avoids sorting all of the data if the set of queries does not require it: the queries thus become operators in some sense (they modify the data representation). Note that whereas the running time of each individual query depends on the state of the data representation, the answer to each query is independent of it.

Karp et al.’s data structure [] supports only rank and select queries in the comparison model, whereas the computation of optimal prefix free codes requires summing pairs of weights from the input, and the algorithm that we propose in Section 2.3 requires summing weights from a range in the input. Such a requirement can be reduced to partialSum queries. Whereas partialSum queries have been defined in the literature based on the positions in the input array, we define such queries here in a way that depends only on the content of the multiset (as opposed to a definition depending on the order in which the multiset is given in the input), so that it can be generalized to deferred data structures.

Definition 2 (Partial sum data structure).

Given n unsorted positive weights $W[1..n]$, a partial sum data structure supports the following queries:

- $\mathrm{rank}(W, x)$, the number of elements which are strictly smaller than x in W;

- $\mathrm{select}(W, r)$, the r-th smallest value (counted with multiplicity) in W;

- $\mathrm{partialSum}(W, r)$, the sum of the r smallest elements (counted with multiplicity) in W.
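To pin down the semantics of these three queries (not their efficient implementation), here is a minimal reference sketch that simply sorts W up front; the class name `NaivePartialSum` and the sample array are hypothetical. This costs $\Theta(n\lg n)$ before the first query, which is precisely what a deferred data structure avoids.

```python
from bisect import bisect_left
from itertools import accumulate


class NaivePartialSum:
    """Reference semantics of rank, select and partialSum, by fully sorting W.

    Sorting costs Theta(n lg n) before the first query, which is precisely what
    a deferred data structure avoids; this class only pins down the answers."""

    def __init__(self, weights):
        self.sorted = sorted(weights)
        self.prefix = [0, *accumulate(self.sorted)]  # prefix[r] = sum of r smallest

    def rank(self, x):
        return bisect_left(self.sorted, x)  # number of elements strictly smaller than x

    def select(self, r):
        return self.sorted[r - 1]  # r-th smallest (1-based), counted with multiplicity

    def partial_sum(self, r):
        return self.prefix[r]  # sum of the r smallest elements


W = NaivePartialSum([5, 1, 4, 4, 2, 8, 3])  # hypothetical array, for illustration
print(W.rank(5), W.select(6), W.partial_sum(2))  # prints 5 5 3
```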

Example 5.

Given the array  ,


- the number of elements strictly smaller than 5 is $\mathrm{rank}(W, 5)$,

- the sixth smallest value (counted with multiplicity) is $\mathrm{select}(W, 6)$, and

- the sum of the two smallest elements is $\mathrm{partialSum}(W, 2)$.

We describe below how to extend Karp et al.’s deferred data structure [], which already supports rank and select queries on multisets, in order to add support for partialSum queries, with an amortized running time within a constant factor of the asymptotic time of the original solution. Note that the operations performed by the data structure are no longer within the comparison model, but rather in the algebraic decision tree computational model, as they introduce algebraic operations (additions) on the elements of the multiset. The result is a direct extension of Karp et al. [], adding a sub-task taking linear time (updating partial sums in an interval of positions) to a sub-task which already took linear time (partitioning this same interval by a pivot):

Lemma 2.

Given n unsorted positive weights $W[1..n]$, there is a partial sum deferred data structure which supports q operations of type rank, select, and partialSum in time within $O(n(1+\lg q))$, all within the algebraic decision tree computational model.

Proof.

Karp et al. [] described a deferred data structure which supports the rank and select queries (but not partialSum queries). It is based on median computations and -trees, and performs q queries on n values in time within $O(n(1+\lg q))$, all within the comparison model (and hence in the less restricted algebraic decision tree computational model). We describe below how to modify their data structure in a simple way, so as to support partialSum queries with asymptotically negligible additional cost.

At the initialization of the data structure, compute the n partial sums corresponding to the n positions of the unsorted array. After each median computation and partitioning in a rank or select query, recompute the partial sums on the range of positions newly partitioned: as partitioning only permutes values inside this range, the partial sums at all positions outside of it remain valid, and the recomputation takes time linear in the size of the range, which increases the cost of the query only by a constant factor. When answering a query $\mathrm{partialSum}(W, r)$, perform the query $\mathrm{select}(W, r)$, which leaves the r smallest values in the first r positions, and then return the partial sum stored at position r: the asymptotic complexity is within a constant factor of the one described by Karp et al. []. □
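The construction in this proof can be illustrated by a minimal Python sketch. It is not Karp et al.’s exact data structure: as labeled assumptions, it uses random pivots instead of median computations, and a sorted list of “boundaries” (closer in spirit to the single-array structure of Barbay et al. mentioned below) instead of a tree; the class and method names are hypothetical. What it does show is the extension itself: prefix sums computed once, then recomputed only inside each newly partitioned range.

```python
import random
from bisect import bisect_left, insort


class DeferredPartialSum:
    """Sketch of a deferred multiset answering rank, select and partialSum.

    Assumptions (ours): a non-empty input, random pivots instead of median
    computations, and a sorted list of "boundaries" instead of a tree.
    A boundary b guarantees that A[:b] holds the b smallest values of W."""

    def __init__(self, weights):
        self.A = list(weights)
        n = len(self.A)
        self.P = [0] * (n + 1)  # P[i] = A[0] + ... + A[i-1], computed once
        for i, v in enumerate(self.A):
            self.P[i + 1] = self.P[i] + v
        self.bounds = [0, n]
        self.mins = {0: min(self.A)}  # minimum of each interval, keyed by its start

    def _partition(self, lo, hi, pivot):
        """Three-way partition A[lo:hi] around pivot; return the two cut positions."""
        seg = self.A[lo:hi]
        parts = ([v for v in seg if v < pivot], [v for v in seg if v == pivot],
                 [v for v in seg if v > pivot])
        self.A[lo:hi] = parts[0] + parts[1] + parts[2]
        # Only values inside [lo, hi) moved, so P[lo] and P[hi] stay valid:
        # recomputing the partial sums inside costs as much as the partition.
        for i in range(lo, hi):
            self.P[i + 1] = self.P[i] + self.A[i]
        start = lo
        for part in parts:
            if part:
                if start > lo:
                    insort(self.bounds, start)
                self.mins[start] = min(part)
                start += len(part)
        return lo + len(parts[0]), lo + len(parts[0]) + len(parts[1])

    def _make_boundary(self, r):
        """Refine the partial order until position r (0 <= r <= n) is a boundary."""
        while True:
            j = bisect_left(self.bounds, r)
            if self.bounds[j] == r:
                return
            lo, hi = self.bounds[j - 1], self.bounds[j]
            a, b = self._partition(lo, hi, random.choice(self.A[lo:hi]))
            if a < r < b:  # r cuts a run of equal values: any cut there is valid
                insort(self.bounds, r)
                self.mins[r] = self.A[r]
                return

    def rank(self, x):
        """Number of values strictly smaller than x."""
        starts = self.bounds[:-1]
        # Interval minima are non-decreasing, so only the last interval whose
        # minimum is below x can contain values on both sides of x.
        j = bisect_left([self.mins[s] for s in starts], x) - 1
        if j < 0:
            return 0
        cut, _ = self._partition(starts[j], self.bounds[j + 1], x)
        return cut

    def select(self, r):
        """r-th smallest value (1 <= r <= n), counted with multiplicity."""
        self._make_boundary(r - 1)
        self._make_boundary(r)
        return self.A[r - 1]

    def partial_sum(self, r):
        """Sum of the r smallest values (0 <= r <= n)."""
        self._make_boundary(r)
        return self.P[r]


d = DeferredPartialSum([5, 1, 4, 4, 2, 8, 3])
print(d.rank(5), d.select(6), d.partial_sum(2))  # prints 5 5 3
```

The answers do not depend on the random pivots, only the amount of partial sorting performed does, which mirrors the remark above that each answer is independent of the state of the data representation.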

Barbay et al. [] further improved Karp et al.’s result [] with a simpler data structure (a single binary array) and a finer analysis taking into account the gaps between the positions hit by the queries. Barbay et al.’s results [] can similarly be augmented in order to support partialSum queries while increasing the computational complexity by only a constant factor. This finer result is not relevant to the analysis described in Section 3, given the lack of specific features of the distribution of the gaps between the positions hit by the queries generated by the GDM algorithm described in Section 2.3.

Such a deferred data structure is sufficient to simply execute van Leeuwen’s algorithm [] on an unsorted array of positive integers, but would not result in an improvement in the computational complexity: such a simple variant of van Leeuwen’s algorithm [] is simply performing n select operations on the input, effectively sorting the unsorted array. We describe in the next section an algorithm which uses the deferred data structure described above to batch the operations on the external nodes, and to defer the computation of the weights of some internal nodes to later, so that for many instances the input is not completely sorted at the end of the execution, which indeed reduces the total cost of the execution of the algorithm.

2.3. Algorithm “Group–Dock–Mix” (GDM) for the Binary Case

There are five main phases in the GDM algorithm: the initialization, three phases (grouping, docking, and mixing, giving the name “GDM” to the algorithm) inside a loop running until only internal nodes are left to process, and the conclusion:

- In the initialization phase, initialize the partial sum deferred data structure with the input, and create the first internal node by pairing the two smallest weights of the input.

- In the grouping phase, detect and group the weights smaller than the smallest internal node: this corresponds to a run of consecutive E in the EI signature of the instance.

- In the docking phase, pair the consecutive positions of those weights (as opposed to the weights themselves, which can be reordered by future operations) into internal nodes, and pair those internal nodes until the weight of at least one such internal node becomes equal to or larger than the smallest remaining weight: this corresponds to a run of consecutive I in the EI signature of the instance.

- In the mixing phase, rank the smallest unpaired weight among the weights of the available internal nodes, and pair the internal nodes of smaller weight two by two, leaving the largest one unpaired: this corresponds to an occurrence of IE in the EI signature of the instance. This is the most complicated (and most costly) phase of the algorithm.

- In the conclusion phase, with i internal nodes left to process (and no external node left), assign codelength $\lfloor \lg i \rfloor$ to the $2^{\lceil \lg i \rceil} - i$ largest ones and codelength $\lceil \lg i \rceil$ to the $2i - 2^{\lceil \lg i \rceil}$ smallest ones: this corresponds to the last run of consecutive I in the EI signature of the instance.

The algorithm and its complexity analysis distinguish two types of internal nodes: pure nodes, whose descendants were all paired during the same grouping phase; and mixed nodes, each of which either is the ancestor of a mixed node, or pairs a pure internal node with an external node, or pairs two pure internal nodes produced at distinct phases of the GDM algorithm. The distinction is important as the algorithm computes the weight of any mixed node at its creation (potentially generating several data structure operations), whereas it defers the computation of the weight of some pure nodes to later, and never computes the weight of some others. We will discuss this further in Section 5, about the lack of instance optimality of the solution presented.

Before describing each phase more in detail, it is important to observe the following invariant of the algorithm:

Property 1.

Given an instance of the optimal binary prefix free code problem formed by n positive weights $W[1..n]$, between each phase of the algorithm, all unpaired internal nodes have weights within a factor of two of each other (i.e., the maximal weight of an unpaired internal node is strictly smaller than twice the minimal weight of an unpaired internal node).

Proof.

The property is proven by checking that each phase preserves it:

- Initialization: there is only one internal node at the end of this phase, hence the property trivially holds.

- Grouping: no internal node is created, hence the property is preserved.

- Docking: pairing until at least one internal node has weight equal to or larger than the smallest remaining weight (future external node) ensures the property.

- Mixing: as this phase pairs all internal nodes except possibly the one of largest weight, the property is preserved.

- Conclusion: A single node is left at the end of the phase, hence the property.

As the initialization phase establishes the property and each other phase preserves it, the property holds throughout the execution of the algorithm. □

We now proceed to describe each phase in more detail:

- Initialization: Initialize the deferred data structure partial sum with the input; compute the weight currentMinInternal of the first internal node through the operation $\mathrm{partialSum}(W, 2)$ (the sum of the two smallest weights); create this internal node, of weight currentMinInternal and children 1 and 2 (the positions of the first and second weights, in any order); compute the weight currentMinExternal of the first unpaired weight (i.e., the first available external node) by the operation $\mathrm{select}(W, 3)$; set up the variables nbInternals = 1 and nbExternalProcessed = 2.

- Grouping: Compute the position r of the first unpaired weight larger than the smallest unpaired internal node, through the operation $\mathrm{rank}(W, \mathit{currentMinInternal})$; pair two by two the indices of the unpaired weights smaller than the first unpaired internal node, to form pure internal nodes, leaving the last one out if their number is odd; in that case, select the r-th weight through a select operation, compute the weight of the first unpaired internal node, compare it with the next unpaired weight, and form one mixed node by combining the smaller of the two with the extraneous weight.

- Docking: Pair all internal nodes in batches (by Property 1, their weights are all within a factor of two of each other, so all internal nodes of a generation are processed before any internal node of the next generation); after each batch, compare the weight of the largest such internal node (computed through partialSum on its range if it is a pure node; otherwise it is already known) with the first unpaired weight: if smaller, pair another batch; if larger, the phase is finished.

- Mixing: Rank the smallest unpaired weight among the weights of the available internal nodes, by a doubling search starting from the beginning of the list of internal nodes. For each comparison, if the internal node’s weight is not already known, compute it through a partialSum operation on the corresponding range (if it is a mixed node, it is already known). If the number r of internal nodes of weight smaller than the unpaired weight is odd, pair all but one, compute the weight of the last one and pair it with the unpaired weight. If r is even, pair all of the r internal nodes of weight smaller than the unpaired weight, compare the weight of the next unpaired internal node with the weight of the next unpaired external node, and pair the minimum of the two with the first unpaired weight. If there are some unpaired weights left, go back to the Grouping phase, otherwise continue to the Conclusion phase.

- Conclusion: There are only internal nodes left, and their weights are all within a factor of two from each other. Pair the nodes two by two in batch as in the docking phase, computing the weight of an internal node only when the number of internal nodes of a batch is odd.
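The doubling search of the Mixing phase can be sketched as follows, assuming the available internal nodes are listed in nondecreasing order of weight and that their weights are exposed through a callable standing in for the partialSum computations, so that only the probed weights are ever computed. The function name doubling_rank is hypothetical. The number of probes is within O(1 + lg r), where r is the returned count, which keeps the Mixing phase cheap when few internal nodes precede the unpaired weight.

```python
def doubling_rank(weight, length, x):
    """Count the indices j in [0, length) with weight(j) < x by doubling search.

    Assumes weight(j) is nondecreasing in j. weight is called lazily (it stands
    in for a partialSum query), and the number of calls is returned as well.
    """
    probes = 0

    def probe(j):
        nonlocal probes
        probes += 1
        return weight(j)

    lo, hi = 0, 1  # invariant: every index below lo has weight < x
    # Galloping phase: probe indices 0, 1, 3, 7, ... until a weight >= x is found.
    while hi <= length and probe(hi - 1) < x:
        lo, hi = hi, 2 * hi
    hi = min(hi, length)  # the answer now lies in [lo, hi]
    # Binary search for the first index in [lo, hi) whose weight is >= x.
    while lo < hi:
        mid = (lo + hi) // 2
        if probe(mid) < x:
            lo = mid + 1
        else:
            hi = mid
    return lo, probes
```

For instance, with internal weights 2, 3, 3, 5, 8, 9, 12 and an unpaired weight 5, the sketch returns r = 3; the parity of r then decides, as described above, whether one internal node is left out of the pairing.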

The combination of those phases forms the GDM algorithm, which computes an optimal prefix free code given an unsorted set of positive weights.
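The three queries used by the phases above (rank, select and partialSum) can be illustrated by a minimal sketch of a deferred multiset that partitions its input only as far as the queries require. This is not Karp et al.’s structure, nor the extension described in Section 2.2: it uses the middle element of an interval as pivot instead of its median (so it offers no worst-case guarantee), and partialSum sums a slice instead of maintaining one sum per interval. The class name DeferredMultiset, the 1-indexed select, and the convention that rank(x) counts the weights strictly smaller than x are assumptions made for illustration.

```python
import bisect


class DeferredMultiset:
    """Hypothetical, simplified deferred multiset: sorts only as much as queries require."""

    def __init__(self, weights):
        if not weights:
            raise ValueError("need at least one weight")
        self.a = list(weights)
        # Sorted cut positions c such that every element of a[:c] is <= every element of a[c:].
        self.cuts = [0, len(self.a)]

    def _cut_at(self, k):
        """Refine the partition, quickselect-style, until position k is a cut."""
        while True:
            j = bisect.bisect_left(self.cuts, k)
            if self.cuts[j] == k:
                return
            lo, hi = self.cuts[j - 1], self.cuts[j]
            seg = self.a[lo:hi]
            pivot = seg[len(seg) // 2]  # middle element, NOT the median: no worst-case bound
            less = [w for w in seg if w < pivot]
            equal = [w for w in seg if w == pivot]
            more = [w for w in seg if w > pivot]
            self.a[lo:hi] = less + equal + more
            e0, e1 = lo + len(less), lo + len(less) + len(equal)
            for c in (e0, e1):
                if lo < c < hi:
                    bisect.insort(self.cuts, c)
            if e0 < k < e1:  # k falls inside a block of equal values: also a valid cut
                bisect.insort(self.cuts, k)

    def select(self, i):
        """Return the i-th smallest weight (1-indexed)."""
        self._cut_at(i - 1)
        self._cut_at(i)
        return self.a[i - 1]

    def partial_sum(self, i):
        """Return the sum of the i smallest weights."""
        self._cut_at(i)
        return sum(self.a[:i])  # a real implementation would cache one sum per interval

    def rank(self, x):
        """Return the number of weights strictly smaller than x."""
        for lo, hi in zip(self.cuts, self.cuts[1:]):
            seg = self.a[lo:hi]
            if max(seg) < x:
                continue  # the whole interval is smaller than x
            if min(seg) >= x:
                return lo
            # Split only this interval around x and remember the new cut.
            less = [w for w in seg if w < x]
            rest = [w for w in seg if w >= x]
            self.a[lo:hi] = less + rest
            bisect.insort(self.cuts, lo + len(less))
            return lo + len(less)
        return len(self.a)
```

On the weights 5, 1, 4, 1, 3, for example, partial_sum(2) returns 2 (the weight of the first internal node built by the Initialization phase) and select(3) returns 3 (the first unpaired weight).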

Lemma 3.

The tree returned by the GDM algorithm describes an optimal binary prefix free code for its input.

In the next section, we analyze the number q of rank, select and partialSum queries performed by the GDM algorithm, and deduce from it the complexity of the algorithm in terms of algebraic operations.

3. Analysis

The GDM algorithm runs in time within $O(n\lg n)$ in the worst case over instances of size n, which is optimal (although not a new result) in the algebraic decision tree computational model. However, it runs much faster on instances with few blocks of consecutive Es in the EI signature of the instance. We formalize this concept by defining the alternation of the instance in Section 3.1. We then proceed in Section 3.2 to show upper bounds on the number of queries to the deferred data structure and of algebraic operations on the data performed by the GDM algorithm in the worst case over instances of fixed size n and alternation α. We finish in Section 3.3 with a matching lower bound on the number of operations performed by any algorithm in the algebraic decision tree model.

3.1. Parameter Alternation

We suggested in Section 2.1 that the number of blocks of consecutive Es in the EI signature of an instance can be used to measure its difficulty. Indeed, some “easy” instances have few such blocks, and the instance used to prove the lower bound on the computational complexity of optimal prefix free codes in the algebraic decision tree computational model, in the worst case over instances of size n, has the maximum possible number of such blocks for an instance of size n. We formally define this measure as the “alternation” of the instance (it measures how many times the algorithm suggested by Huffman [] or van Leeuwen’s algorithm [] “alternates” from an external node to an internal node in its iterative selection of nodes of minimum weight) and denote it by the parameter α:

Definition 3 (Alternation).

Given an instance W of the optimal binary prefix free code problem formed by n positive weights, its alternation α is the number of occurrences of the substring “EI” in its EI signature.

In other words, the alternation of W is the number of times that the algorithm suggested by Huffman [] or van Leeuwen’s algorithm [] selects an internal node immediately after selecting an external node.
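To make the definition concrete, the following sketch computes the EI signature and the alternation with van Leeuwen’s two-queue method. Section 2.1 gives the formal definition of the EI signature; this sketch assumes, consistently with the remarks in this section (the signature starts with two Es and finishes with an I), that the signature lists the 2n−1 nodes in the order in which they are selected, the root last, and it breaks ties in favor of external nodes. Both conventions and the function names are assumptions. Since it sorts the weights first, it is only a reference computation, not the GDM algorithm.

```python
from collections import deque


def ei_signature(weights):
    """EI signature under the assumed convention: node types in selection order, root last."""
    if len(weights) < 2:
        raise ValueError("need at least two weights")
    external = deque(sorted(weights))  # leaves (E), in nondecreasing order
    internal = deque()                 # internal nodes (I), created in nondecreasing order
    signature = []

    def pop_min():
        # Ties are broken in favor of the external node (an assumption).
        if internal and (not external or internal[0] < external[0]):
            signature.append("I")
            return internal.popleft()
        signature.append("E")
        return external.popleft()

    while len(external) + len(internal) > 1:
        a = pop_min()
        b = pop_min()
        internal.append(a + b)
    signature.append("I")  # the root, which is never paired
    return "".join(signature)


def alternation(weights):
    """Number of occurrences of "EI" in the EI signature."""
    return ei_signature(weights).count("EI")
```

On the weights 1, 1, 5 this sketch yields the signature EEIEI and alternation 2 = n − 1, the largest possible value; on four equal weights it yields EEEEIII and alternation 1.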

Note that counting the number of blocks of consecutive Es is equivalent to counting the number of blocks of consecutive Is: they are the same, because the EI signature starts with two Es and finishes with an I, and each new I-block ends an E-block and vice versa. Also, the choice between measuring the number of occurrences of “EI” or the number of occurrences of “IE” is arbitrary, as they are within an additive term of 1 of each other (see Section 3.1): counting the number of occurrences of “EI” just gives a nicer range of α ∈ [1..n−1] (as opposed to [0..n−2]). This number is of particular interest as it measures the number of iterations of the main loop in the GDM algorithm:

Lemma 4.

Given an instance of the optimal prefix free code problem of alternation α, the GDM algorithm performs α iterations of its main loop.

Proof.

This is a direct consequence of the definition of the alternation on one hand, and of the definition of the algorithm GDM on the other hand.

The main loop consists of three phases, respectively named grouping, docking, and mixing. The grouping phase corresponds to the detection and grouping of the weights smaller than the smallest internal node: this corresponds to a run of consecutive Es in the EI signature of the instance. The docking phase corresponds to the pairing of the consecutive positions of those weights into internal nodes, and of the internal nodes thus produced, recursively, until the weight of at least one such internal node becomes equal to or larger than the smallest remaining weight: this corresponds to a run of consecutive Is in the EI signature of the instance. The mixing phase corresponds to the ranking of the smallest unpaired weight among the weights of the available internal nodes: this corresponds to the boundary between a run of consecutive Is and the next run of consecutive Es in the EI signature of the instance.

As the iterations of the main loop of the GDM algorithm are in bijection with the runs of consecutive Es in the EI signature of the instance, the number of such iterations is the number of such runs. □

In the next section, we refine this result to the number of data structure operations and algebraic operations performed by the GDM algorithm.

3.2. Running Time Upper Bound

In order to measure the number of queries performed by the GDM algorithm, we detail how many queries are performed in each phase of the algorithm.

- The initialization corresponds to a constant number of data structure operations: a select operation to find the third smallest weight (and separate it from the two smallest ones), and a simple partialSum operation to sum the two smallest weights of the input.

- Each grouping phase corresponds to a constant number of data structure operations: a partialSum operation to compute the weight of the smallest internal node if needed, and a rank operation to identify the unpaired weights which are smaller or equal to that of this node.

- The number of operations performed by each docking and mixing phase is better analyzed jointly: if there are i “I”s in the I-block corresponding to this phase in the EI signature, and if the internal nodes are grouped on h levels before generating an internal node of weight larger than the smallest unpaired weight, the docking phase corresponds to at most h partialSum operations, whereas the mixing phase corresponds to at most $\lg(i/2^h)$ partialSum operations, which develops to $\lg i - h$, for a total of $h + (\lg i - h) = \lg i$ data structure operations.

- The conclusion phase corresponds to a number of data structure operations logarithmic in the size of the last block of Is in the EI signature of the instance: in the worst case, the weight of one pure internal node is computed for each batch, through one single partialSum operation each time.

Lemma 4 and the concavity of the logarithm function yield the total number of data structure operations performed by the GDM algorithm:

Lemma 5.

Given an instance of the optimal binary prefix free code problem of alternation α, the GDM algorithm performs within data structure operations on the deferred data structure given as input.

Proof.

For , let be the number of internal nodes at the beginning of the i-th docking phase. According to Lemma 4 and the analysis of the number of data structure operations performed in each phase, the GDM algorithm performs in total within data structure operations. Since there are at most internal nodes and the sum , by concavity of the logarithm the number of queries is within . □
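The concavity step can be spelled out as follows. Write $n_i$ (a name introduced here for illustration) for the number of internal nodes at the beginning of the i-th docking phase, and assume, as in the per-phase analysis above, that the i-th iteration costs at most a constant times $1+\lg n_i$ queries and that $\sum_{i=1}^{\alpha} n_i \le n$. Jensen’s inequality for the concave function $\lg$ then gives:

$$\sum_{i=1}^{\alpha}\left(1+\lg n_i\right) \;=\; \alpha + \alpha\cdot\frac{1}{\alpha}\sum_{i=1}^{\alpha}\lg n_i \;\le\; \alpha + \alpha\lg\left(\frac{1}{\alpha}\sum_{i=1}^{\alpha} n_i\right) \;\le\; \alpha\left(1+\lg\frac{n}{\alpha}\right).$$

For instance, with $n = 2^{20}$ and $\alpha = 2^{10}$, the right-hand side is $2^{10}\cdot 11 = 11264$, far fewer queries than the $n$ weights.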

Combining this result with the complexity of the partialSum deferred data structure from Lemma 2 directly yields the complexity of the GDM algorithm in algebraic operations (and running time):

Lemma 6.

Given an instance of the optimal binary prefix free code problem of alternation α, the GDM algorithm runs in time within , all within the algebraic decision tree computational model.

Proof.

Let q be the number of queries performed by the GDM algorithm. Lemma 5 implies that . Plugging this into the expression from Lemma 2 yields a complexity within . □

Some simple functional analysis further simplifies the expression to our final upper bound:

Lemma 7.

Given two positive integers and ,

Proof.

Given two positive integers and , and . A simple rewriting yields and . Then, implies , which yields the result. □

In the next section, we show that this complexity is indeed optimal in the algebraic decision tree computational model, in the worst case over instances of fixed size n and alternation α.

3.3. Lower Bound

A complexity within $O(n(1+\lg\alpha))$ is exactly what one could expect, by analogy with multiset sorting: there are α groups of weights, so that the order within each group does not matter much, but the order between weights from different groups does matter. We combine two results:

- a linear time reduction from multiset sorting to the computation of optimal prefix free codes; and

- the lower bound within $\Omega(n(1+\lg\alpha))$ (tight in the comparison model) suggested by information theory for the computational complexity of multiset sorting in the worst case over multisets of size n with at most α distinct elements.

This yields a lower bound within $\Omega(n(1+\lg\alpha))$ on the computational complexity of computing optimal binary prefix free codes in the worst case over instances of size n and alternation α.
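The counting argument behind the second result can be made concrete with a short sketch: the number of ways to assign α distinct values with multiplicities $m_1,\ldots,m_\alpha$ to n input positions is the multinomial coefficient $n!/(m_1!\cdots m_\alpha!)$, and its base-2 logarithm is the information-theoretic quantity that any comparison-based multiset sorting algorithm must pay, up to a constant factor, in the worst case. Since this coefficient is at most $\alpha^n$, the quantity never exceeds $n\lg\alpha$, and for groups of equal sizes it approaches $n\lg\alpha$ up to lower-order terms. The function name is hypothetical.

```python
import math


def multiset_sorting_bits(multiplicities):
    """Return lg(n! / (m_1! * ... * m_alpha!)), where n = m_1 + ... + m_alpha.

    This is the base-2 logarithm of the number of distinct ways to place the
    alpha distinct values on the n input positions; any comparison-based
    algorithm sorting such multisets needs, in the worst case, at least a
    constant fraction of this many comparisons.
    """
    n = sum(multiplicities)
    # lgamma(k + 1) = ln(k!), which avoids computing huge factorials exactly.
    log_e = math.lgamma(n + 1) - sum(math.lgamma(m + 1) for m in multiplicities)
    return log_e / math.log(2)


if __name__ == "__main__":
    n = 1024
    for alpha in (2, 32, 1024):
        bits = multiset_sorting_bits([n // alpha] * alpha)
        print(f"alpha={alpha}: counting bound {bits:.0f} bits, n lg alpha = {n * math.log2(alpha):.0f}")
```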

Lemma 8.

Given the integers n and α, for any correct algorithm A computing optimal binary prefix free codes in the algebraic decision tree computational model, there is a set of n positive weights of alternation α such that A performs within $\Omega(n(1+\lg\alpha))$ algebraic operations.

Proof.

For any multiset A of n values from an alphabet of α distinct values, define the instance of size n, so that computing an optimal prefix free code for W, sorted by code length, provides an ordering for A. W has alternation α: for any two distinct values x and y from A, the algorithm suggested by Huffman [] as well as van Leeuwen’s algorithm [] pair all the weights of value before pairing any weight of value , so that the EI signature of W has α blocks of consecutive Es. The lower bound then results from the classical lower bound on sorting multisets in the comparison model in the worst case over multisets of size n with α distinct values [], itself based on the number of possible such multisets. □

Having shown that the GDM algorithm takes optimal advantage of the alternation α, we are left to check whether the values of α found in practice are small enough for the improvements of the GDM algorithm to be worth noticing. We show in the next section that, at least for one application, this does not seem to be the case.

4. Preliminary Experimentations

There are many mature implementations [,] to compute optimal prefix free codes: Huffman’s solution [] to the problem of optimal prefix free codes is not only old (67 years from 1952 to 2019), but also still in wide use. In 1991, Gary Stix stated that “products that use Huffman code might fill a consumer electronics store” []. In 2010, the answer to the question “what are the real-world applications of Huffman coding?” on the website Stack Exchange [] stated that “Huffman is widely used in all the mainstream compression formats that you might encounter—from GZIP, PKZIP (winzip etc.) and BZIP2, to image formats such as JPEG and PNG.” In 2019, the Wikipedia page on Huffman coding still states that “ prefix codes nevertheless remain in wide use because of their simplicity, high speed, and lack of patent coverage. They are often used as a “back-end” to other compression methods. DEFLATE (PKZIP’s algorithm) and multimedia codecs such as JPEG and MP3 have a front-end model and quantization followed by the use of prefix codes (...).” []. Presenting an implementation competitive with industrial ones is well beyond the scope of this (theoretical) work, but it is possible to perform some preliminary experiments in order to predict the potential practical impact of algorithms taking advantage of the various difficulty measures introduced so far. We realized such an implementation (whose sources are publicly available, see Supplementary Materials) and present preliminary experimental results obtained with it.

Although the computation of optimal prefix free codes occurs in many applications (e.g., BZIP2 [], JPEG [], etc.) (in several applications, optimal prefix free codes are fixed once and for all, but there are still many applications for which such codes are computed anew for each instance), the alphabet size does not necessarily increase with the size of the data to be compressed. In order to evaluate the potential improvement of adaptive techniques such as those presented in this work and others [,], we consider the application of optimal prefix free codes to the word-based compression of natural language texts, cited as an example of a “large alphabet” application by Moffat [] and studied by Moura et al. []. As for the natural language texts themselves, we considered a random selection of nine texts from the Gutenberg project [], listed in Table 2.

Table 2.

Data sets used for the experimentation measures, all from the Project Gutenberg.

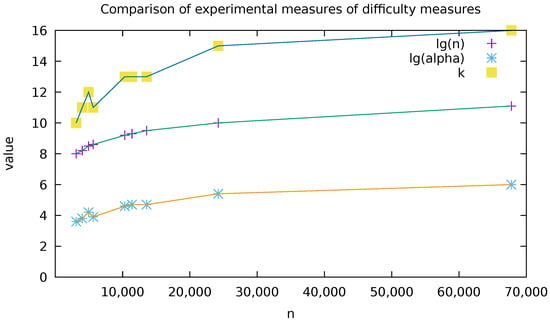

Compared to the many difficulty measures known for sorting [,], there are only a few for the computation of optimal prefix free codes, introduced respectively by Moffat and Turpin [], Milidiú et al. [], Belal and Elmasry [,], and ourselves in this work: Table 3 describes those measures of difficulty, and the experimental values of the most relevant ones on the texts listed in Table 2. Even if optimized implementations might shave some constant factor off the running time (in particular concerning the computation of the median of a set of values), studying the ratio between theoretical complexities gives an idea of how large such a constant factor must be to make a difference.

Table 3.

Experimental values of various difficulty factors on the data sets listed in Table 2, sorted by n, along with their logarithms (truncated to one decimal) and relevant combinations. denotes the number of words in the document (i.e., the sum of the frequencies); n denotes the number of distinct words (i.e., the number of frequencies in the input); k denotes the number of distinct codelengths, a notation introduced by Belal and Elmasry []; α denotes the alternation of the instance, introduced in this work. The experimental values of k (number of distinct code lengths) are much smaller than those of α (the alternation of the instance), themselves much smaller than those of n (the number of frequencies in the input). A more interesting comparison of k with and is given in Figure 3.

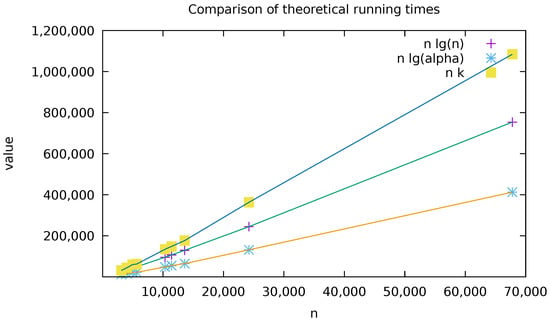

The experimental measures listed in Table 3 suggest that the advantage potentially gained by the GDM algorithm by taking advantage of the alternation of the input (at the cost of repeated median computations) will be only a constant factor (see Figure 3 and Figure 4 for how lg α is never less than half of lg n). Concerning the number k of distinct codelengths, the proven complexity of is completely impractical (the values for are not shown in Table 3 for lack of space), and even the claimed complexity of would yield at best a constant factor improvement, as on the data tested. In the next section we discuss, among other things, how such results affect research perspectives on this topic.

Figure 3.

Plots comparing the experimental values of , and k from Table 3: is roughly half of , itself roughly half of k. This implies that the experimental values of the alternation are only polynomially smaller than the number n of weights, and that experimental values of k are exponentially smaller than both the number n of weights and the alternation α. The consequences for predicted running times are described more explicitly in Figure 4.

Figure 4.

Plots comparing the experimental values of , and from the array of Table 3: is roughly half of , itself roughly half of . The experimental values of , and are all within a constant factor of each other.

5. Discussion

We described an algorithm computing an optimal prefix free code for n unsorted positive weights in time within $O(n(1+\lg\alpha))$, where the alternation α roughly measures the amount of sorting required by the computation of such an optimal prefix free code. This result is a combination of a new algorithm inspired by van Leeuwen’s 1976 algorithm [], and of a minor extension of Karp et al.’s 1988 results about deferred data structures supporting rank and select queries on multisets []. In theory, such a result has the potential to improve over previous results, whether the $O(n\lg n)$ complexity suggested by Huffman in 1952 or more recent improvements for specific classes of instances [,]. In practice, it does not seem very promising, at least when computing optimal binary prefix free codes for plain texts: on such data, preliminary experiments show the alternation to be polynomial in the input size, the number n of weights (their logarithms are within a constant factor of each other). The situation might be different for some other practical application, but in any application, the alternation would have to be asymptotically smaller than every polynomial of the input size n for the GDM algorithm to yield an improvement in running time by more than a constant factor.

The results described above yield many new questions, of which we discuss only a few in the following sections: how those results relate to previous results (Section 5.1); about the potential (lack of) practical applications of our results on the practical computation of optimal binary prefix free codes (Section 5.2); and about problems similar in essence to the computation of optimal prefix free codes, but where optimizing the computational complexity might have more of a practical impact (Section 5.3).

5.1. Relation to Previous Work

The work presented here presents similarities with various previous works, which we discuss here: Belal and Elmasry’s work [], which inspired ours (Section 5.1.1); the definition of other deferred data structures obtained since 1988 (Sections 5.1.2 and 5.1.3); and the potential for instance optimality results for the computation of optimal binary prefix free codes (Section 5.1.4).

5.1.1. Previous Work on Optimal Prefix Free Codes

In 2006, Belal and Elmasry [] described a variant of Milidiú et al.’s algorithm [,] to compute optimal prefix free codes, potentially performing algebraic operations when the weights are not sorted, where k is the number of distinct code lengths in the optimal prefix free code computed by the algorithm suggested by Huffman []. They describe an algorithm running in time within when the weights are unsorted, and propose to improve the complexity to by partitioning the weights into smaller groups, each corresponding to a disjoint interval of weight values. The claimed complexity of is asymptotically better than the one suggested by Huffman when .

Like the GDM algorithm, the algorithm suggested by Belal and Elmasry [,] for the unsorted case is based on several computations of the median of the weights within a given interval, in particular in order to select the weights smaller than some well-chosen value. The essential difference between the two works is the use of a deferred data structure in the GDM algorithm, which simplifies both the algorithm and the analysis of its complexity.

While an algorithm running in time within would improve over the running time within $O(n(1+\lg\alpha))$ of our proposed solution, such an algorithm has not been defined yet, and for any complexity within is a strong improvement over the complexity class suggested by Belal and Elmasry [,], in addition to being a much more formal statement of the algorithm and of the analysis of its running time on a dynamically changing set of weights.

5.1.2. Applicability of Dynamic Results on Deferred Data Structures

Karp et al. [], when they defined the first deferred data structures, supporting rank and select on multisets and other queries on convex hull, left as an open problem the support of dynamic operators such as insert and delete: Ching et al. [] quickly demonstrated how to add such support in good amortized time.

The dynamic addition and deletion of elements in a deferred data structure (added by Ching et al. [] to Karp et al. []’s results) does not seem to have any application to the computation of optimal prefix free codes: even if the list of weights were dynamic, as in an online version of the computation of optimal prefix free codes, extensive additional work would be required in order to build a deferred data structure supporting something like “prefix free code queries”.

5.1.3. Applicability of Refined Results on Deferred Data Structures

Karp et al.’s analysis [] of the complexity of the deferred data structure is expressed as a function of the total number q of queries and operators, while Kaligosi et al. [] analyzed the complexity of an offline version as a function of the sizes of the gaps between the positions of the queries. Barbay et al. [] combined the three results into a single deferred data structure for multisets which supports the operators rank and select in amortized time proportional to the entropy of the distribution of the sizes of the gaps between the positions of the queries.

At first sight, one could hope to generalize the refined entropy analysis (introduced by Kaligosi et al. [] on the static version and applied by Barbay et al. [] to the online version) of deferred data structures for multisets supporting rank and select to the computational complexity of optimal prefix free codes: a complexity proportional to the entropy of the distribution of codelengths in the output would nicely match the lower bound of suggested by information theory, where the output contains codes of length , for some integer vector of distinct codelengths and some integer k measuring the number of distinct codelengths. Our current analysis does not yield such a result: the gap lengths in the list of weights between the positions hit by the queries generated by the GDM algorithm are not as regular as , so that the entropy of such gaps seems unrelated to the entropy of the number of codes of a given length for each .

5.1.4. Instance Optimality

The refinement of analysis techniques from the worst case over instances of fixed size to the worst case over more restricted classes of instances has yielded interesting results on a multitude of problems, such as sorting permutations [], sorting multisets [], and computing convex hulls and maxima sets []. Afshani et al. [] described how minor variants of Kirkpatrick and Seidel’s algorithms [] to compute convex hulls and maxima sets in two dimensions take optimal advantage of the positions of the input points, to the point that those algorithms are actually instance optimal among algorithms ignoring the input order (formally, they are “input order oblivious instance optimal” in the algebraic decision tree model).

As we refined the analysis of the computation of optimal binary prefix free codes from the worst case over instances of fixed size n to the worst case over instances of fixed size n and alternation α, it is natural to ask whether further refinements are possible, or whether the GDM algorithm is instance optimal. There are two parts to the answer: one about the input order, and one about the input structure. While the GDM algorithm does not take into account the input order (and hence cannot be truly instance optimal), replacing Karp et al.’s deferred data structure [] by one which takes optimal advantage of the input order [] in the extension described in Section 2.2 does yield a solution taking advantage of some measure of input order. The tougher issue is that of taking optimal advantage of the input structure, in this case the values of the input frequencies. In order to simplify both its expression and the analysis of its running time, the GDM algorithm immediately computes the weights of “mixed” nodes (pairing a pure internal node with an external node, or two pure internal nodes produced at distinct phases of the algorithm; see Section 2.3 for the formal definition). This might not be necessary on some instances, making the GDM algorithm non-competitive compared to others on such instances. Designing another algorithm which postpones the computation of the weights of mixed nodes would be a prerequisite to instance optimality.

5.2. Potential (Lack of) Practical Impact

We expect the impact of our faster algorithm on the execution time of techniques based on optimal prefix free codes to be of little importance in most cases: compressing a sequence of messages from an input alphabet of size n requires not only computing the code (in time within $O(n(1+\lg\alpha))$ using our solution), but also computing the weights of the messages (in time linear in the length of the sequence), and encoding the sequence itself using the computed code (also in time linear in the length of the sequence), where the latter usually dominates the total running time.

5.2.1. In Classical Computational Models and Applications

Improving the code computation time will improve the compression time only in cases where the number n of distinct messages in the input S is very large compared to the length of such input. One such application is the compression of texts in natural language, where the input alphabet is composed of all the natural words [] (which we partially explored in Section 4 with relatively disappointing results). Another potential application is the boosting technique from Ferragina et al. [], which divides the input sequence into very short subsequences and computes a prefix free code for each subsequence on the input alphabet of the whole sequence.

A logical next step would be to identify, among the communication solutions using an optimal prefix free code computed offline, those which could now afford to compute a new optimal prefix free code more frequently, and thus see their compression performance improved by a faster prefix free code algorithm. Another logical step would be to study, among the compression algorithms computing an optimal prefix free code on each new instance (e.g., JPEG [], BZIP [], MP3, MPEG), which ones get a better time performance by using a faster algorithm to compute optimal prefix free codes.

Another argument for the potential lack of practical impact of our result is that there exist algorithms computing optimal prefix free codes in time within $O(n\lg\lg n)$ in the RAM model (the algorithm proposed by van Leeuwen [] reduces, in time linear in the number of messages of the alphabet, the computation of an optimal prefix free code to the sorting of their frequencies, and Han [] described how to sort a set of n integers (which message frequencies are) in time within $O(n\lg\lg n)$ in the RAM model): a time complexity within $O(n(1+\lg\alpha))$ is an improvement only for values of α substantially smaller than $\lg n$, which does not seem to be the case in practice according to the experiments described in Section 4.
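Ignoring the constant factors hidden in both bounds (a simplification), comparing $O(n(1+\lg\alpha))$ with the $O(n\lg\lg n)$ bound obtained through integer sorting in the RAM model reduces to the following inequality:

$$n(1+\lg\alpha) \le n\lg\lg n \iff \lg(2\alpha) \le \lg\lg n \iff \alpha \le \tfrac{1}{2}\lg n.$$

For example, with $n = 2^{20}$ distinct words, $\lg n = 20$, so the alternation would have to be at most 10 for the comparison-based bound to win.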

5.2.2. Generalisation to Non Binary Output Alphabets

Huffman [] described both how to compute optimal prefix free codes in the case of two output symbols, and how to generalize this method to more output symbols. Moura et al. [] showed that compressing to 256 output symbols (encoded in bytes) provides some advantages in terms of indexing the compressed output, with a relatively minor cost in terms of the compression ratio.

Keeping the same definition of the EI signature and alternation of an instance, the GDM algorithm described in Section 2.3 (and its analysis) can be extended to the case of D output symbols with very minor changes, and will result in an algorithm whose running time is already adaptive to , with the same running time as if there were only output symbols. Yet this would not be optimal for large values of D: on instances where , the optimal D-ary prefix free code is uniform and returned in time at most linear in the input size, whereas an algorithm ignoring the value of D could spend time within .

Further improvements could be achieved by extending the concepts described in this work to take into account the value of D, such as to D-ary-EI signature and D-ary alternation. Such improvements in running time would be at most by a multiplicative factor of , and seem theoretically interesting, if probably of limited interest in practice.

5.2.3. External Memory

Sibeyn [] described an efficient algorithm to support select queries on a multiset in external memory. Barbay et al. [] described an efficient deferred data structure supporting rank and select queries on a multiset in external memory with a competitive ratio in the number of external memory accesses performed within (i.e., it asymptotically performs within times the number of memory accesses performed by the best offline solution). In Section 2.2, we described how to add support for partialSum queries to Karp et al.’s data structure [], at the cost of only an additional constant factor in the complexity.

The same technique as that described in Section 2.2, if applied to Barbay et al.’s deferred data structure [], yields a deferred data structure supporting rank, select and partialSum queries in external memory, of competitive ratio in the number of external memory accesses within , and hence an efficient algorithm to compute optimal binary prefix free codes in external memory. Further work would be required to properly analyze and optimize the behavior of the GDM algorithm to take into account caching of external memory. It is not clear whether the computation of optimal prefix free codes in external memory has practical applications.

5.3. Variants of the Optimal Prefix Free Code Problem

Another promising line of research is given by variants of the original problem, such as optimal bounded length prefix free codes [,,], where the maximal length of each word of the prefix free code must be less than or equal to a parameter l, while still minimizing the average length of the code; or such as the order constrained prefix free codes, where the order of the words of the codes is constrained to be the same as the order of the weights: this problem is equivalent to the computation of optimal alphabetical search trees [,]. Both problems have complexity in the worst case over instances of fixed input size n, while having linear complexity when all the weights are within a factor of two of each other, exactly as for the computation of optimal prefix free codes.

Supplementary Materials

Sources and Data Sets are available at https://gitlab.com/FineGrainedAnalysis/PrefixFreeCodes.

Funding

This research was partially funded by the Millennium Nucleus RC130003 “Information and Coordination in Networks”.

Acknowledgments

The author would like to thank Peyman Afshani and Seth Pettie for interesting discussions during the author’s visit to the center MADALGO in January 2014; Jouni Siren for detecting a central error in a previous version of this work; Gonzalo Navarro for suggesting the application to the boosting technique from Ferragina et al. []; Charlie Clarke, Gordon Cormack, and J. Ian Munro for helping to clarify the history of van Leeuwen’s algorithm []; Renato Cerro for various English corrections; various people who have reviewed and commented on various preliminary drafts and presentations of related work: Carlos Ochoa, Francisco Claude-Faust, Javiel Rojas, Peyman Afshani, Roberto Konow, Seth Pettie, Timothy Chan, and Travis Gagie.

Conflicts of Interest

The author declares no conflict of interest.

References

- Huffman, D.A. A Method for the Construction of Minimum-Redundancy Codes. Proc. Inst. Radio Eng. (IRE) 1952, 40, 1098–1101.

- Chen, C.; Pai, Y.; Ruan, S. Low power Huffman coding for high performance data transmission. In Proceedings of the International Conference on Hybrid Information Technology ICHIT, Cheju Island, Korea, 9–11 November 2006; Volume 1, pp. 71–77.

- Moffat, A. Huffman Coding. ACM Comput. Surv. 2019, 52, 85:1–85:35.

- Chandrasekaran, M.N. Discrete Mathematics; PHI Learning Pvt. Ltd.: Delhi, India, 2010.

- Moffat, A.; Turpin, A. On the implementation of minimum redundancy prefix codes. IEEE Trans. Commun. TCOM 1997, 45, 1200–1207.

- Wikipedia. bzip2. Available online: https://en.wikipedia.org/wiki/Bzip2 (accessed on 20 December 2019).

- Wikipedia. JPEG. Available online: https://en.wikipedia.org/wiki/JPEG#Entropy_coding (accessed on 20 December 2019).

- Takaoka, T.; Nakagawa, Y. Entropy as Computational Complexity. J. Inf. Process. JIP 2010, 18, 227–241.

- Takaoka, T. Partial Solution and Entropy. In Proceedings of the 34th International Symposium on Mathematical Foundations of Computer Science 2009 (MFCS 2009), Novy Smokovec, High Tatras, Slovakia, 24–28 August 2009; Královič, R., Niwiński, D., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 700–711.

- Takaoka, T. Minimal Mergesort. Technical Report, University of Canterbury. 1997. Available online: http://ir.canterbury.ac.nz/handle/10092/9676 (accessed on 23 August 2016).

- Barbay, J.; Navarro, G. On Compressing Permutations and Adaptive Sorting. Theor. Comput. Sci. TCS 2013, 513, 109–123.

- Even, S.; Even, G. Graph Algorithms, 2nd ed.; Cambridge University Press: Cambridge, UK, 2012; pp. 1–189.

- Aho, A.V.; Hopcroft, J.E.; Ullman, J. Data Structures and Algorithms; Addison-Wesley Longman Publishing Company: Massachusetts, MA, USA, 1983.

- Van Leeuwen, J. On the construction of Huffman trees. In Proceedings of the International Colloquium on Automata, Languages and Programming ICALP, Edinburgh, UK, 20–23 July 1976; pp. 382–410.

- Moffat, A.; Turpin, A. Efficient Construction of Minimum-Redundancy Codes for Large Alphabets. IEEE Trans. Inf. Theory TIT 1998, 44, 1650–1657.

- Belal, A.A.; Elmasry, A. Distribution-Sensitive Construction of Minimum-Redundancy Prefix Codes. In Proceedings of the International Symposium on Theoretical Aspects of Computer Science STACS, Marseille, France, 23–25 February 2006; Lecture Notes in Computer Science. Durand, B., Thomas, W., Eds.; Springer: Berlin, Germany, 2006; Volume 3884, pp. 92–103.

- Belal, A.A.; Elmasry, A. Distribution-Sensitive Construction of Minimum-Redundancy Prefix Codes. arXiv 2010, arXiv:cs/0509015v4. Available online: https://arxiv.org/pdf/cs/0509015.pdf (accessed on 29 June 2012).

- Moffat, A.; Katajainen, J. In-Place Calculation of Minimum-Redundancy Codes. In Proceedings of the International Workshop on Algorithms and Data Structures WADS, Kingston, ON, Canada, 16–18 August 1995; Lecture Notes in Computer Science. Springer: London, UK, 1995; Volume 955, pp. 393–402.

- Milidiú, R.L.; Pessoa, A.A.; Laber, E.S. Three space-economical algorithms for calculating minimum-redundancy prefix codes. IEEE Trans. Inf. Theory TIT 2001, 47, 2185–2198.

- Kirkpatrick, D. Hyperbolic dovetailing. In Proceedings of the Annual European Symposium on Algorithms ESA, Copenhagen, Denmark, 7–9 September 2009; pp. 516–527.

- Barbay, J. Optimal Prefix Free Codes with Partial Sorting. In Proceedings of the Annual Symposium on Combinatorial Pattern Matching CPM, Tel Aviv, Israel, 27–29 June 2016; LIPIcs. Grossi, R., Lewenstein, M., Eds.; Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik: Wadern, Germany, 2016; Volume 54, pp. 29:1–29:13.

- Bentley, J.L.; Yao, A.C.C. An almost optimal algorithm for unbounded searching. Inf. Process. Lett. IPL 1976, 5, 82–87.

- Karp, R.; Motwani, R.; Raghavan, P. Deferred Data Structuring. SIAM J. Comput. SJC 1988, 17, 883–902.

- Barbay, J.; Gupta, A.; Jo, S.; Rao, S.S.; Sorenson, J. Theory and Implementation of Online Multiselection Algorithms. In Proceedings of the Annual European Symposium on Algorithms ESA, Sophia Antipolis, France, 2–4 September 2013.

- Munro, J.I.; Spira, P.M. Sorting and Searching in Multisets. SIAM J. Comput. SICOMP 1976, 5, 1–8.

- Stix, G. Profile: David A. Huffman. Sci. Am. SA 1991, 54–58. Available online: http://www.huffmancoding.com/my-uncle/scientific-american (accessed on 29 June 2012).

- Stack Overflow. What Are the Real-World Applications of Huffman Coding? 2010. Available online: http://stackoverflow.com/questions/2199383/what-are-the-real-world-applications-of-huffman-coding (accessed on 25 October 2012).

- Wikipedia. Huffman Coding. Available online: https://en.wikipedia.org/wiki/Huffman_coding (accessed on 26 December 2019).

- Moura, E.; Navarro, G.; Ziviani, N.; Baeza-Yates, R. Fast and Flexible Word Searching on Compressed Text. ACM Trans. Inf. Syst. TOIS 2000, 18, 113–139.

- Hart, M. Gutenberg Project. Available online: https://www.gutenberg.org/ (accessed on 27 May 2018).

- Moffat, A.; Petersson, O. An Overview of Adaptive Sorting. Aust. Comput J ACJ 1992, 24, 70–77.

- Estivill-Castro, V.; Wood, D. A Survey of Adaptive Sorting Algorithms. ACM Comput. Surv. ACMCS 1992, 24, 441–476.

- Milidiú, R.L.; Pessoa, A.A.; Laber, E.S. A Space-Economical Algorithm for Minimum-Redundancy Coding; Technical Report; Departamento de Informática, PUC-RJ: Rio de Janeiro, Brazil, 1998.

- Ching, Y.T.; Mehlhorn, K.; Smid, M.H. Dynamic deferred data structuring. Inf. Process. Lett. IPL 1990, 35, 37–40.

- Kaligosi, K.; Mehlhorn, K.; Munro, J.I.; Sanders, P. Towards Optimal Multiple Selection. In Proceedings of the International Colloquium on Automata, Languages and Programming ICALP, Lisbon, Portugal, 11–15 July 2005; pp. 103–114.

- Kirkpatrick, D.G.; Seidel, R. The Ultimate Planar Convex Hull Algorithm? SIAM J. Comput. SJC 1986, 15, 287–299.

- Afshani, P.; Barbay, J.; Chan, T.M. Instance-Optimal Geometric Algorithms. J. ACM 2017, 64, 3:1–3:38.

- Barbay, J.; Ochoa, C.; Satti, S.R. Synergistic Solutions on MultiSets. In Proceedings of the Annual Symposium on Combinatorial Pattern Matching CPM, Warsaw, Poland, 4–6 July 2017; Leibniz International Proceedings in Informatics (LIPIcs). Kärkkäinen, J., Radoszewski, J., Rytter, W., Eds.; Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik: Dagstuhl, Germany, 2017; Volume 78, pp. 31:1–31:14.

- Ferragina, P.; Giancarlo, R.; Manzini, G.; Sciortino, M. Boosting textual compression in optimal linear time. J. ACM 2005, 52, 688–713.

- Han, Y. Deterministic sorting in O(n log log n) time and linear space. J. Algorithms JALG 2004, 50, 96–105.

- Sibeyn, J.F. External selection. J. Algorithms JALG 2006, 58, 104–117.

- Barbay, J.; Gupta, A.; Rao, S.S.; Sorenson, J. Dynamic Online Multiselection in Internal and External Memory. In Proceedings of the International Workshop on Algorithms and Computation WALCOM, Chennai, India, 13–15 February 2014.

- Milidiú, R.L.; Pessoa, A.A.; Laber, E.S. Efficient Implementation of the WARM-UP Algorithm for the Construction of Length-Restricted Prefix Codes. In Proceedings of the Workshop on Algorithm Engineering and Experiments ALENEX, Baltimore, MD, USA, 15–16 January 1999; Lecture Notes in Computer Science. Goodrich, M.T., McGeoch, C.C., Eds.; Springer: Berlin, Germany, 1999; Volume 1619, pp. 1–17.

- Milidiú, R.L.; Pessoa, A.A.; Laber, E.S. In-Place Length-Restricted Prefix Coding. In Proceedings of the 11th Symposium on String Processing and Information Retrieval SPIRE, Santa Cruz de la Sierra, Bolivia, 9–11 September 1998; pp. 50–59.

- Milidiú, R.L.; Laber, E.S. The WARM-UP Algorithm: A Lagrangean Construction of Length Restricted Huffman Codes; Technical Report; Departamento de Informática, PUC-RJ: Rio de Janeiro, Brazil, 1996.

- Knuth, D.E. Art of Computer Programming, Volume 3: Sorting and Searching, 2nd ed.; Addison-Wesley Professional: Massachusetts, MA, USA, 1998.

- Hu, T.; Tucker, P. Optimal alphabetic trees for binary search. Inf. Process. Lett. IPL 1998, 67, 137–140.

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).