1. Introduction

An artificial neural network is a framework in which many different machine learning algorithms work together to process complex data inputs; it is vaguely inspired by the biological neural networks that constitute animal brains [1]. Neural networks have been widely used in many application areas, such as system identification [2] and signal processing [3,4]. Furthermore, several variants of neural networks have been derived for specific applications. The convolutional neural network is one of the most popular variants; it is composed of one or more convolutional layers together with pooling layers and fully connected layers [5]. The deep belief network (DBN) can be viewed as a composition of simple learning modules, each of which makes up one layer [6]: several restricted Boltzmann machines [7] are stacked and trained greedily to form the DBN. The recurrent neural network, as described by Grossberg in 2013 [8], is a class of artificial neural network in which connections between nodes form a directed graph along a sequence. In its bidirectional form, the output layer can obtain information from past and future states simultaneously.

However, how to improve the performance of a neural network remains an open question. From the viewpoint of information theory, mutual information has been used to optimize neural networks. In one ensemble method, two neural networks are forced to convey different information about features of their input by minimizing the mutual information between the variables extracted by the two networks [9]. In this method, mutual information measures the correlation between two hidden neurons. In 2010, an adaptive merging and splitting algorithm (AMSA) pruned hidden neurons by merging correlated ones and added hidden neurons by splitting existing ones [10]; here, too, mutual information serves as the measure of correlation between two hidden neurons. In 2015, Berglund et al. proposed measuring the usefulness of hidden units in Boltzmann machines with mutual information [11]. However, this measure is not suitable as the sole criterion for model selection. Moreover, because the measure was shown to correlate well with entropy, it conflicts with the well-known observation that sparse features, which generally have low entropy, are good for many machine learning tasks, including classification. A neural network trained with a maximum mutual information objective was proposed to solve the two-class classification problem [12]; it maximizes the difference between the estimated probabilities of the two classes. However, when the two classes are completely separable, this algorithm cannot outperform traditional maximum likelihood estimation. To address these problems, we introduce the theory of information geometry into neural networks.

Information geometry is a branch of mathematics that applies the theory of Riemannian manifolds to probability theory [13]. Using the Fisher information [14], the probability distributions of a statistical model are treated as the points of a Riemannian manifold. This approach studies the geometrical structures of the parameter spaces of distributions. Information geometry has found applications in many fields, such as control theory [15], signal processing [16], the expectation–maximization algorithm [17], and many others [18,19]. Information geometry has also been used to study neural networks. In 1991, Amari [20] studied the dualistic geometry of the manifold of higher-order neurons. Furthermore, the natural gradient works efficiently in the learning of neural networks [21]: natural gradient learning can effectively overcome some disadvantages of the training process, such as slow learning and the existence of plateaus. In 2005, a novel variable selection criterion for multilayer perceptron networks based on information geometry was described [22]. It is based on projections of the Riemannian manifold defined by a multilayer perceptron network onto submanifolds with reduced input dimension.

In this paper, we propose a new algorithm based on mutual information and information geometry. We quantify the information captured by the whole neural network via mutual information. In the proposed method, the learning of the neural network attempts to keep as much information as possible while maximizing the likelihood. Then, using the theory of information geometry, the optimization of the mutual information is turned into minimizing the divergence between the estimated distribution and the original distribution. The distributions are mapped to points on a manifold, and the Fisher distance is a proper measure of the length of a path between these points. We then obtain a unique solution for the mutual information term by minimizing the Fisher distance between the estimated distribution and the original distribution along the shortest path (geodesic line).

2. Materials and Methods

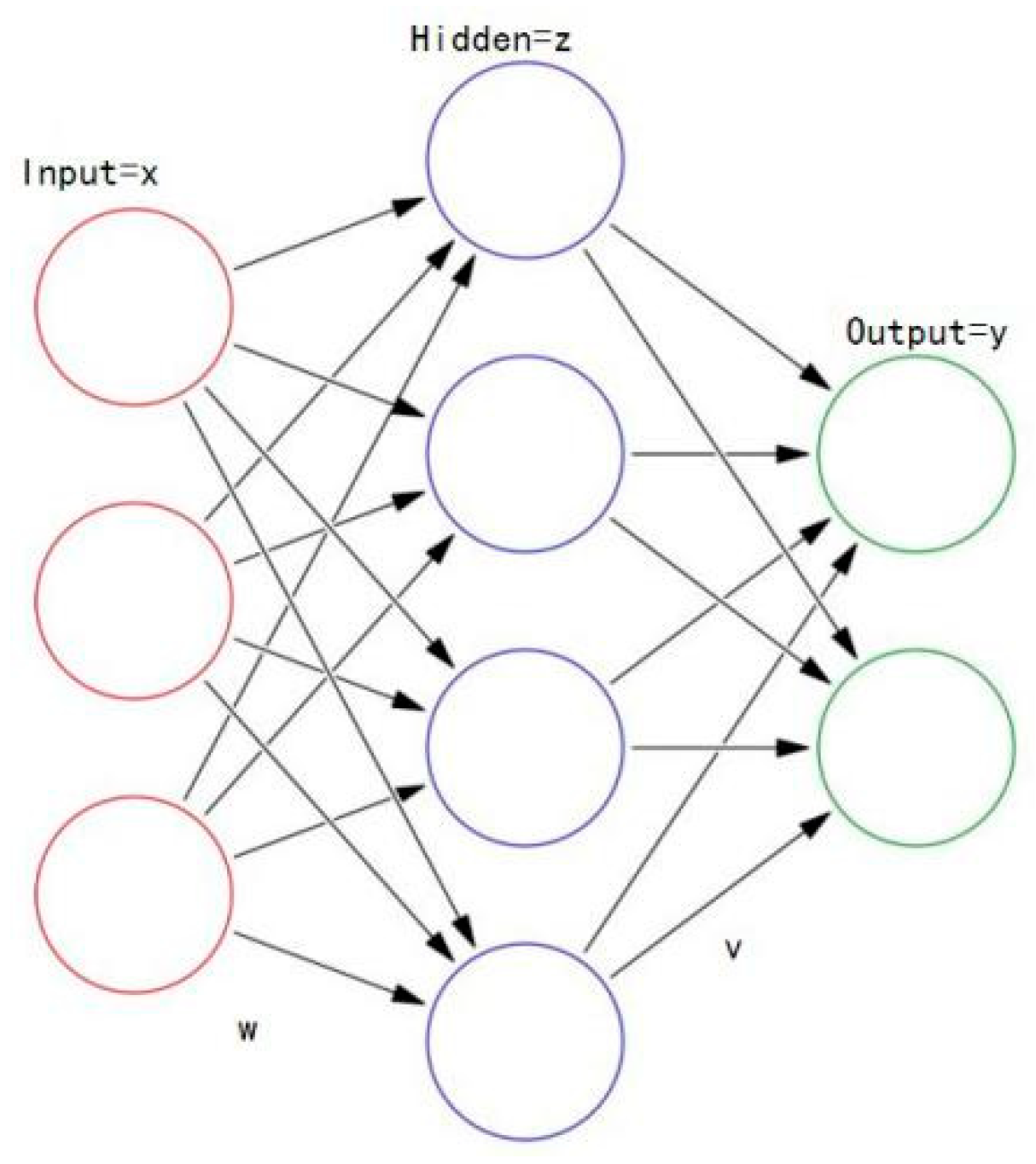

Let us consider a neural network with one input layer, one hidden layer, and one output layer. One set of weights acts on the input, and another set acts on the hidden neurons. Here, x is the input, the network produces the actual output, and z is the activation of the hidden neurons. The concatenation of the output and the hidden activations is represented as a single vector.

Figure 1 illustrates the architecture of the neural network.

The neural network is a static and usually nonlinear model, given in (1), whose parameters are the input and hidden-neuron weights described above.

The mutual information (MI) [23] of two random variables quantifies the “amount of information” obtained about one random variable by observing the other. In the proposed algorithm, we treat the input x and the concatenated output–hidden vector as two random variables. Correspondingly, the MI quantifies the “amount of information” about the input that is retained by the output and hidden variables. The learning of the neural network attempts to keep as much information as possible while maximizing the likelihood. We therefore define a novel objective function, (2), consisting of two terms, in which y is the desired output (i.e., the true value of the label). The first term is the objective function of traditional backpropagation neural networks, while the second term is the MI of x and the concatenated output–hidden vector. With the first term, we maximize the likelihood, as traditional neural networks do. With the second term, the proposed method helps the network to keep information from the input. The second term is weighted by a constant that is determined empirically.
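A minimal sketch of how the two terms of the objective might be combined. It assumes, as a hypothetical choice, that the first term is the mean squared error between the desired and actual outputs and that the objective is the first term plus the weighting constant times the MI; the function names are illustrative, and the MI value is passed in precomputed.

```python
def mean_squared_error(desired, actual):
    """First term (assumed form): mean squared error between desired and actual outputs."""
    if len(desired) != len(actual):
        raise ValueError("desired and actual must have the same length")
    return sum((d - a) ** 2 for d, a in zip(desired, actual)) / len(desired)


def composite_objective(desired, actual, mutual_info, lam=-0.1):
    """Hypothetical form of the objective: data-fit term + lam * MI term.

    With lam < 0, a larger mutual information lowers the objective,
    so minimizing it favors representations that keep input information.
    """
    return mean_squared_error(desired, actual) + lam * mutual_info


y = [1.0, 0.0, 1.0]        # desired outputs
y_hat = [0.9, 0.2, 0.7]    # actual network outputs
print(composite_objective(y, y_hat, mutual_info=0.5))
```

The default `lam=-0.1` mirrors the constant used in the experiments; under this assumed form, the sign determines whether the MI term is rewarded or penalized.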

Many methods exist for minimizing the first term, such as the conjugate gradient method [24]. Therefore, this paper only discusses how to handle the second term. By the definitions of MI and the Kullback–Leibler divergence (KLD) [25], the mutual information of x and the concatenated output–hidden vector is given by (3), where the integration domains are the value domain of x and the value domain of the concatenated vector, respectively.
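To make the KLD form of the MI concrete, the sketch below treats both variables as discrete (an illustrative assumption, so the integrals in (3) become sums) and computes the MI as the KL divergence between the joint distribution and the product of its marginals. The function name is hypothetical.

```python
import math


def mutual_information(joint):
    """MI in nats of a discrete joint distribution given as a 2-D list.

    I(X; H) = KL( p(x, h) || p(x) p(h) )
            = sum_{x,h} p(x, h) * log( p(x, h) / (p(x) p(h)) )
    """
    px = [sum(row) for row in joint]          # marginal of the row variable
    ph = [sum(col) for col in zip(*joint)]    # marginal of the column variable
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxh in enumerate(row):
            if pxh > 0.0:                     # convention: 0 * log 0 = 0
                mi += pxh * math.log(pxh / (px[i] * ph[j]))
    return mi


independent = [[0.25, 0.25], [0.25, 0.25]]   # knowing x says nothing about h
copied = [[0.5, 0.0], [0.0, 0.5]]            # h is an exact copy of a fair bit x
print(mutual_information(independent), mutual_information(copied))
```

The two cases bracket the behavior: independent variables give zero MI, while a perfect copy of a fair binary variable gives log 2 nats.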

In general, the underlying density is unknown. Because we work with a finite dataset of size N, we make a finite-sample approximation, under which the mutual information in (3) is calculated as (4), where each term of the sum corresponds to one input sample of the dataset.

In addition, we have (5), in which the hidden-neuron vector takes the value produced by the network for each input sample, and the integration runs over the value domain of the hidden-neuron vector.

The original distribution can also be derived from a finite-sample approximation: it is the average of the conditional distributions over the N input samples, which gives (6).
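A minimal sketch of the finite-sample idea, assuming a discrete hidden/output vector so that integrals become sums. Each input sample gets weight 1/N, the marginal is the mean of the conditionals as in (6), and the MI of (3) becomes the average KL divergence of each conditional from that marginal. The names `empirical_marginal` and `finite_sample_mi` are hypothetical.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) in nats for discrete distributions on the same support."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def empirical_marginal(conditionals):
    """Marginal p(h) approximated by the mean of p(h | x_n) over the N samples."""
    n = len(conditionals)
    return [sum(col) / n for col in zip(*conditionals)]


def finite_sample_mi(conditionals):
    """Estimate I(x; h) = (1/N) * sum_n KL( p(h | x_n) || p(h) )."""
    marginal = empirical_marginal(conditionals)
    return sum(kl_divergence(c, marginal) for c in conditionals) / len(conditionals)


# Two input samples, each inducing a distribution over a binary hidden state.
conds = [[0.9, 0.1], [0.1, 0.9]]
print(empirical_marginal(conds), finite_sample_mi(conds))
```

If every sample induces the same conditional, the marginal equals that conditional and the estimate is zero, which is the expected behavior when the hidden/output vector carries no information about the input.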

Next, we show an efficient way to calculate the corresponding term in (5) using the theory of information geometry.

A probability density belongs to the exponential family [26] if it can be written in the form (7), where the normalization term is a function of the parameters alone.
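For reference, a common way of writing the exponential family is shown below. The symbols (natural parameter $\theta$, sufficient statistic $T$, log-partition function $\psi$, base measure $C$) are assumed notation and may differ from those of (7). The Bernoulli case is included because it describes a sigmoid unit:

$$
p(x;\theta) = \exp\!\big(\theta^{\top} T(x) - \psi(\theta) + C(x)\big),
\qquad
\psi(\theta) = \log \int \exp\!\big(\theta^{\top} T(x) + C(x)\big)\,dx .
$$

$$
p(z;\theta) = \exp\!\big(\theta z - \log(1 + e^{\theta})\big),\quad z \in \{0,1\}
\;\;\Longrightarrow\;\;
p(z=1;\theta) = \frac{1}{1+e^{-\theta}},
\qquad
\frac{d\psi}{d\theta} = \frac{1}{1+e^{-\theta}} = \mathbb{E}[z].
$$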

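As an approximation, the probability of the activation of each hidden unit is modeled with a distribution from the exponential family [27]. For each hidden unit, this gives (8), where the natural parameter is a function of the linear weighted input of that unit, i.e., the inner product of the input with the corresponding column of the input weight matrix (one column per hidden unit, so the number of columns equals the size of the hidden layer). Additionally, the normalization term is a function of the natural parameter. Assuming that the activations of the hidden neurons are independent given the input, the probability of z is the product of the per-unit probabilities, (9). Thus, the distribution of z also belongs to the exponential family: its natural parameters are functions of the linear inputs of the hidden units, and its normalization term is a function of these natural parameters.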
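The factorization can be checked numerically under an illustrative assumption: independent Bernoulli (sigmoid) hidden units with no bias, whose natural parameters are simply their linear inputs. The product of the per-unit probabilities is then a single exponential-family density whose log-partition function is the sum of the per-unit ones. All names and weight values below are hypothetical.

```python
import itertools
import math


def natural_params(weight_columns, x):
    """theta_j = w_j . x for each hidden unit (assumed: no bias, identity link).

    weight_columns[j] holds the j-th column of the input weight matrix.
    """
    return [sum(w * xi for w, xi in zip(col, x)) for col in weight_columns]


def log_partition(theta):
    """psi(theta) = sum_j log(1 + exp(theta_j)) for independent Bernoulli units."""
    return sum(math.log1p(math.exp(t)) for t in theta)


def hidden_prob(z, theta):
    """p(z | x) = exp(theta . z - psi(theta)) for a binary hidden vector z."""
    return math.exp(sum(t * zj for t, zj in zip(theta, z)) - log_partition(theta))


W_cols = [[0.5, -1.0], [2.0, 0.3], [-0.7, 0.8]]   # 3 hidden units, 2 inputs
x = [1.0, 2.0]
theta = natural_params(W_cols, x)
# Summing over all 2**3 binary hidden states should give 1 (proper normalization).
total = sum(hidden_prob(z, theta) for z in itertools.product([0, 1], repeat=len(theta)))
print(theta, total)
```

Each probability equals the product of per-unit sigmoid terms, e.g. `hidden_prob((1, 0, 1), theta)` is σ(θ₁)(1 − σ(θ₂))σ(θ₃), which is what the independence assumption requires.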

A classic parametric space for this family of probability density functions (PDFs) is given by (10).

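For the distributions in the exponential family, there are two dual coordinate systems, the natural parameters and the expectation parameters, on the manifold of the parameters [28] (a manifold being a topological space that locally resembles Euclidean space near each point). The expectation parameters are given by (11). With (9) and (11), we calculate the expectation parameters of the conditional distribution for each input sample. Because (6) averages the conditional distributions over the inputs, and expectations are linear in the distribution, the expectation parameters of the original distribution are the mean of the conditional ones over the input samples. Thus, with (11), the parameters of the density in (6) are approximated by (12).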
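A minimal sketch of this step, assuming independent Bernoulli (sigmoid) hidden units, for which the expectation parameters are η = σ(θ). The expectation parameters are averaged over the samples and then mapped back to natural parameters with the logit, i.e., the inverse of (11) in this case. The function names are hypothetical.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def logit(p):
    return math.log(p / (1.0 - p))


def marginal_natural_params(thetas):
    """Approximate the natural parameters of the averaged distribution (6).

    thetas[n][j] is the natural parameter of hidden unit j given input sample n.
    Expectation parameters eta = sigmoid(theta) are averaged over the samples
    (they are linear in the distribution), then mapped back with logit.
    Averaging theta directly would NOT give the same result.
    """
    n = len(thetas)
    etas = [sum(sigmoid(t) for t in col) / n for col in zip(*thetas)]
    return [logit(e) for e in etas]


thetas = [[-1.5, 2.6], [0.4, -0.2], [1.0, 0.0]]   # 3 samples, 2 hidden units
print(marginal_natural_params(thetas))
```

The design choice is moment matching: the approximating distribution reproduces each unit's average activation probability over the dataset.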

For distributions in the exponential family, we also have (13).

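For a distribution with several parameters, the Fisher distance between two points of the parameter space reflects the dissimilarity between the associated PDFs; for two-parameter families such as the univariate normal distribution, this space is a half-plane. The Fisher distance is defined as the minimal integral (14) [29], where the minimum is taken over curves that connect the two points and are parameterized by a scalar, and the integrand involves the Fisher information matrix, defined as (15), whose indices run over the elements of the parameter vector.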

The curve that satisfies the minimization condition in (14) is called a geodesic line.
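As a sanity check of the definition (14), consider a single Bernoulli (sigmoid) unit, chosen here purely for illustration. Its Fisher information in the natural parameter is σ(θ)(1 − σ(θ)), and its Fisher distance has the closed form 2|arcsin√p₂ − arcsin√p₁| with p = σ(θ). In one dimension every monotone path is a geodesic, so integrating along the segment gives the minimum. In higher dimensions, the minimizing curve generally has to be found from the geodesic equations. All function names are hypothetical.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def fisher_info_bernoulli(theta):
    """Fisher information of a Bernoulli unit with respect to its natural parameter."""
    p = sigmoid(theta)
    return p * (1.0 - p)


def fisher_distance_numeric(theta1, theta2, steps=1000):
    """Fisher length of the segment theta1 -> theta2 (composite Simpson rule).

    In one dimension any monotone path attains the minimum in the definition,
    so this length is the Fisher distance.
    """
    if steps % 2:
        steps += 1                      # Simpson's rule needs an even count
    h = (theta2 - theta1) / steps

    def speed(t):                       # sqrt(g(theta)) for a unit-speed parameter
        return math.sqrt(fisher_info_bernoulli(t))

    total = speed(theta1) + speed(theta2)
    for k in range(1, steps):
        total += (4 if k % 2 else 2) * speed(theta1 + k * h)
    return abs(total * h / 3.0)


def fisher_distance_closed_form(theta1, theta2):
    """2 * |arcsin(sqrt(p2)) - arcsin(sqrt(p1))| with p = sigmoid(theta)."""
    p1, p2 = sigmoid(theta1), sigmoid(theta2)
    return 2.0 * abs(math.asin(math.sqrt(p2)) - math.asin(math.sqrt(p1)))


print(fisher_distance_numeric(-1.0, 2.0), fisher_distance_closed_form(-1.0, 2.0))
```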

Theorem 1 [30]. For the distributions in the exponential family, the Fisher distance is the Kullback–Leibler divergence between the two distributions.

With (11) to (13), we obtain a dual description of this divergence in terms of the two coordinate systems.

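In (14), let the two endpoints be the parameters of the estimated distribution and those of the original distribution. With (5), we then obtain (20).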

Substituting (20) into (3) yields the second term in (2). By the definition of the Fisher distance (14) and Theorem 1, minimizing the second term in (2) is equivalent to minimizing the Fisher distance between the estimated distribution and the original distribution along the shortest path (geodesic line). To describe the geodesic line in local coordinates, we must solve the geodesic equations given by the Euler–Lagrange equations, (21),

where the coefficients are the Christoffel symbols derived from the Fisher information matrix. Solving (21) yields a unique solution for the mutual information term by minimizing the Fisher distance along the shortest path (geodesic line). In practice, we replace this step with natural gradient descent [31], which updates the parameters in the second term of (2) along the steepest-descent direction with respect to the Fisher metric. Here, the update direction of the parameters is given by (22), in which the ordinary gradient is premultiplied by the inverse of the Fisher information matrix.
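A toy illustration of the natural gradient direction, in an assumed setting rather than the network-level update of (22): a single Bernoulli natural parameter is fitted to a target mean by minimizing a KL divergence. In one dimension, premultiplying by the inverse Fisher matrix reduces to dividing the ordinary gradient by the scalar Fisher information. The function name and step size are hypothetical.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def fit_bernoulli(target, theta=0.0, lr=0.5, natural=True, tol=1e-10, max_iter=100000):
    """Minimize KL(target || Bernoulli(sigmoid(theta))) over theta.

    Ordinary gradient: dL/dtheta = sigmoid(theta) - target.
    Natural gradient: that gradient divided by the Fisher information
    sigmoid(theta) * (1 - sigmoid(theta)).
    Returns the fitted theta and the number of iterations used.
    """
    for it in range(max_iter):
        p = sigmoid(theta)
        grad = p - target
        if abs(grad) < tol:
            return theta, it
        step = grad / (p * (1.0 - p)) if natural else grad
        theta -= lr * step
    return theta, max_iter


theta_nat, iters_nat = fit_bernoulli(0.95, natural=True)
theta_gd, iters_gd = fit_bernoulli(0.95, natural=False)
print(iters_nat, iters_gd)
```

In this toy case, the Fisher information equals the second derivative of the loss, so the natural gradient step coincides with a damped Newton step. That is why it needs far fewer iterations than plain gradient descent when the target lies in the saturated region of the sigmoid.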

3. Results

The proposed algorithm is used to improve a shallow neural network (NN) and a DBN [31]. The architectures of the networks are determined by the method in [32]. We use three popular datasets from the UCI Machine Learning Repository (http://archive.ics.uci.edu/ml/datasets.html), namely, Iris, Poker Hand, and Forest Fires. With these datasets, we design experiments on two typical machine learning problems: classification and regression.

3.1. Classification

In machine learning, classification is the problem of assigning instances to one of two or more classes. For this task, accuracy is defined as the proportion of correctly classified test samples.

We designed neural networks as classifiers to solve the classification problems of two datasets, Iris and Poker Hand. For each dataset, 50% of the samples are used for training, and among these training samples, the percentage of labeled samples ranges from 10% to 50%. From the remainder of the dataset, 10% of the samples are randomly selected as test data. The weighting constant of the second term in (2) is set to −0.1.
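The data protocol above can be sketched as follows. The random seed, the use of a shuffled index list, and rounding down with `int()` are assumptions, since the text does not specify them, and `split_dataset` is a hypothetical helper. The example uses 150 samples, the size of the Iris dataset.

```python
import random


def split_dataset(n_samples, labeled_fraction, seed=0):
    """Hypothetical split following the protocol described in the text.

    - 50% of the samples form the training pool;
    - labeled_fraction of that pool keeps its labels (the rest is unlabeled);
    - 10% of the remaining samples are drawn at random as test data.
    Returns index lists (labeled, unlabeled, test).
    """
    rng = random.Random(seed)
    idx = list(range(n_samples))
    rng.shuffle(idx)
    n_train = n_samples // 2
    train, rest = idx[:n_train], idx[n_train:]
    n_labeled = int(labeled_fraction * n_train)     # assumed: round down
    labeled, unlabeled = train[:n_labeled], train[n_labeled:]
    test = rng.sample(rest, int(0.1 * len(rest)))   # assumed: round down
    return labeled, unlabeled, test


labeled, unlabeled, test = split_dataset(150, 0.3)
print(len(labeled), len(unlabeled), len(test))
```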

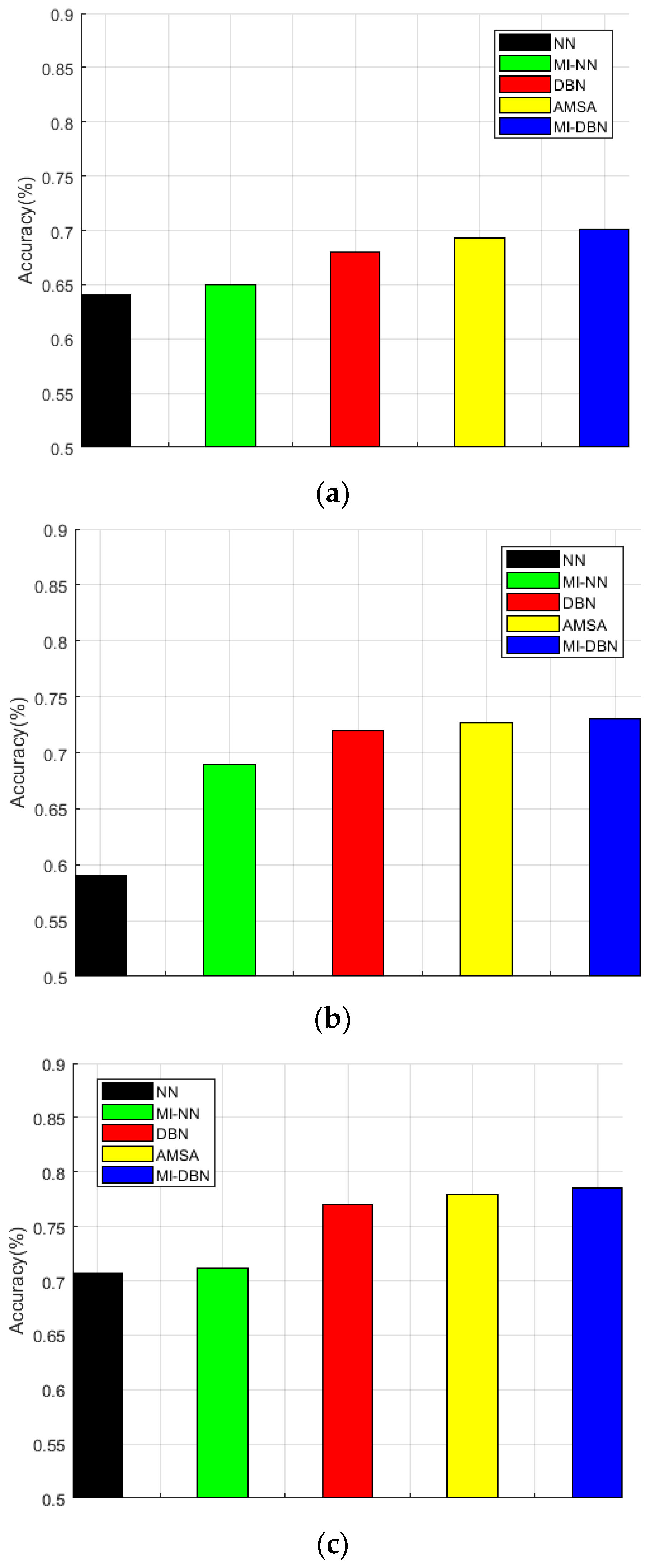

The test accuracies on the Iris dataset for NN, DBN, AMSA, MI-NN, and MI-DBN (“MI-” denotes the proposed methods) are shown in Figure 2. The network structures used in this experiment are 4-3-3 and 4-6-7-3, which correspond to the shallow networks (i.e., NN and MI-NN) and the deep networks (i.e., DBN, AMSA, and MI-DBN), respectively. Both the activation function and the output function are sigmoid.

The test accuracies on the Poker Hand dataset for NN, DBN, AMSA, MI-NN, and MI-DBN (“MI-” denotes the proposed methods) are shown in Figure 3. The network structures used in this experiment are 85-47-10 and 85-67-48-10, which correspond to the shallow networks (i.e., NN and MI-NN) and the deep networks (i.e., DBN, AMSA, and MI-DBN), respectively. Both the activation function and the output function are sigmoid.

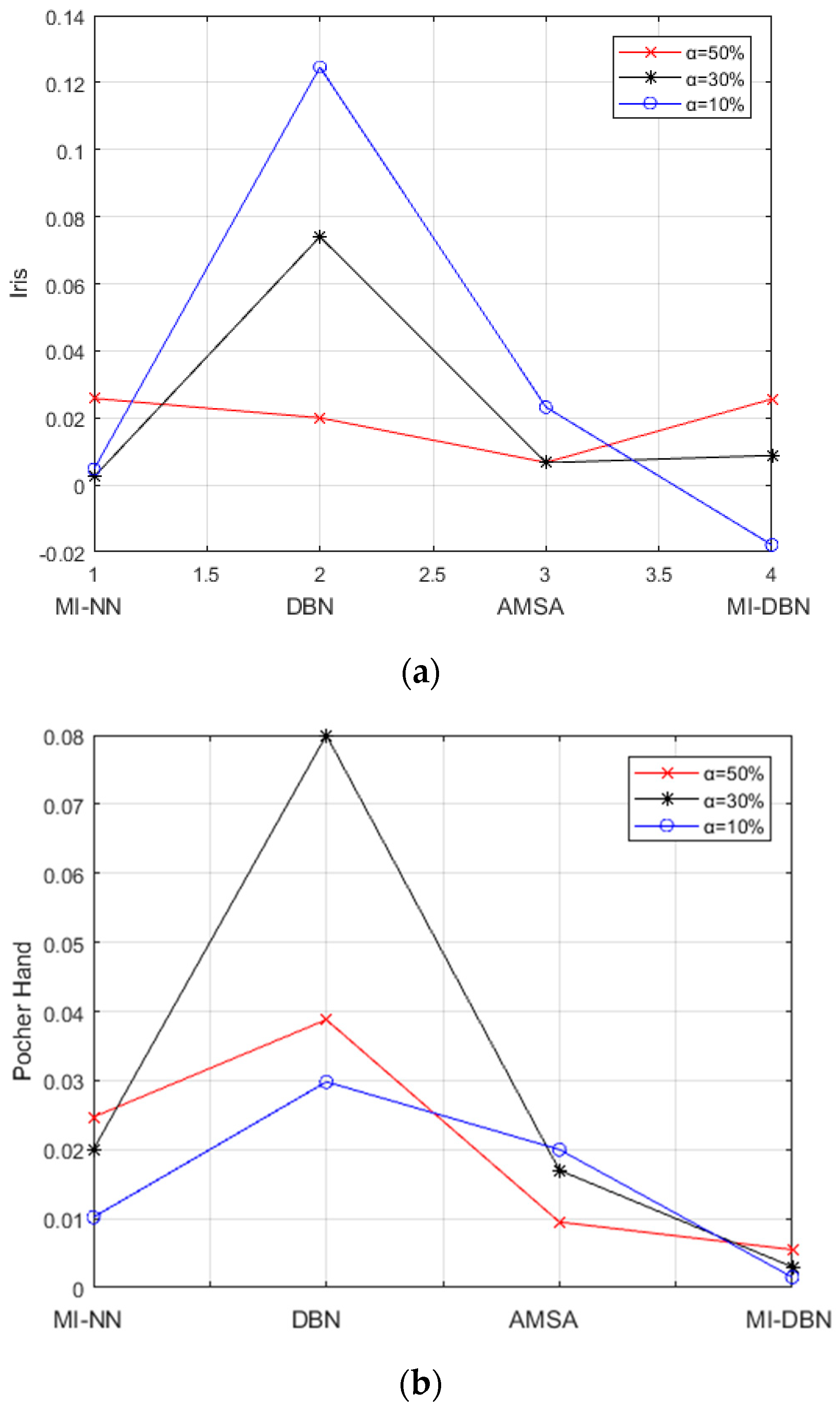

The proposed algorithm outperforms the previous methods on both datasets, with a single exception: MI-NN is slightly worse than AMSA on Iris at one percentage of labeled samples. This suggests that the proposed algorithm is applicable to classification problems. In addition, the proposed algorithm consistently improves on the traditional algorithms as the percentage of labeled training samples changes. To study the correlation between the performance and the percentage of labeled samples, Figure 4 shows the difference between the proposed and traditional methods as this percentage varies. According to Figure 4, the improvement brought by the proposed method is positively correlated with the percentage of labeled samples. A plausible explanation is that more labeled data allows the proposed method to absorb more information that is useful for classification. This trend does not hold for the other algorithms.

3.2. Regression

In machine learning, regression is a set of statistical processes for estimating the relationships among variables. Here, accuracy is defined as 1 minus the regression error rate.

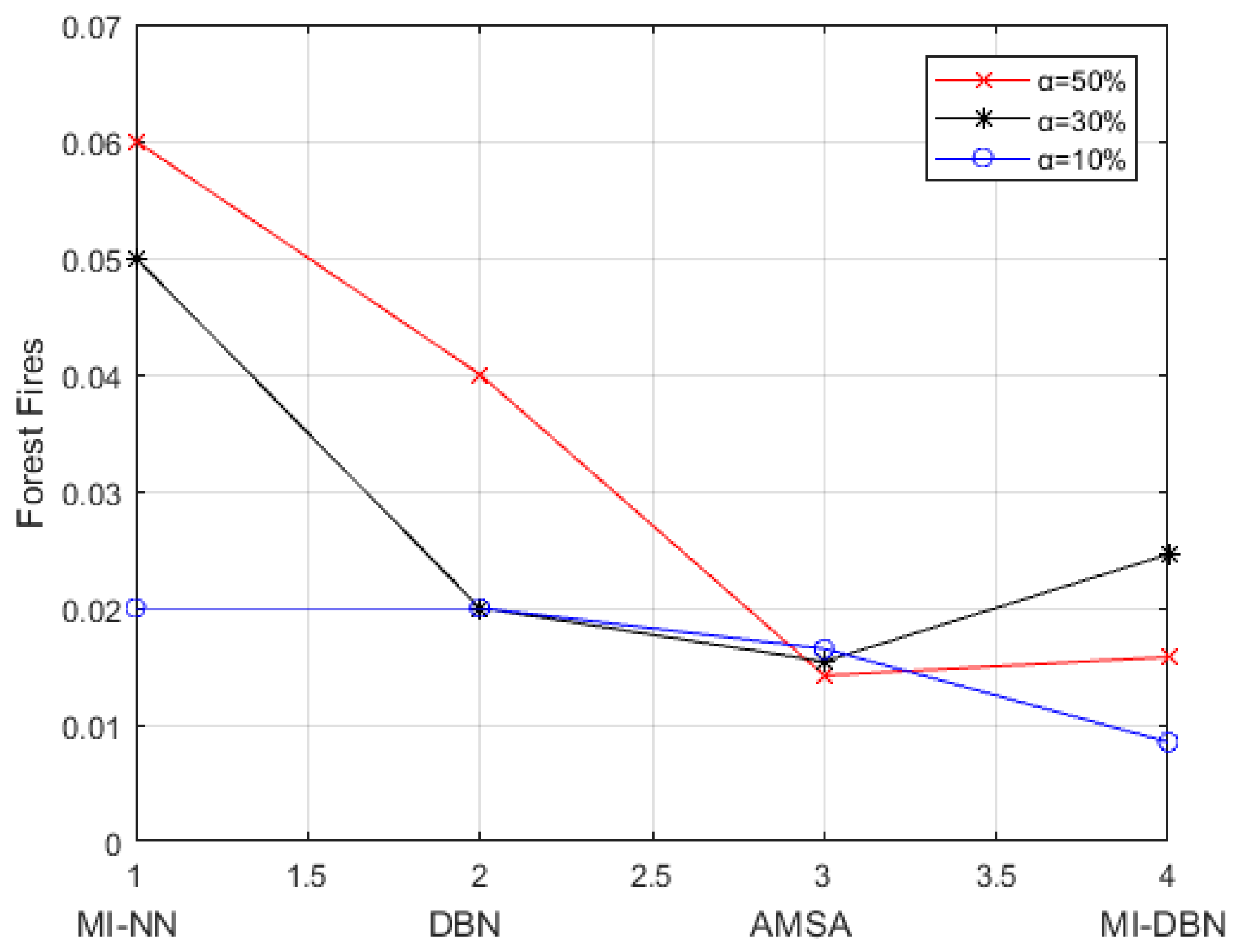

We designed neural networks as regression functions to solve the regression problem of the Forest Fires dataset. For this dataset, 50% of the samples are used for training, and among these training samples, the percentage of labeled samples ranges from 10% to 50%. From the remainder of the dataset, 10% of the samples are randomly selected as test data. The weighting constant of the second term in (2) is set to −0.1.

The test accuracies on the Forest Fires dataset for NN, DBN, AMSA, MI-NN, and MI-DBN (“MI-” denotes the proposed methods) are shown in Figure 5. The network structures used in this experiment are 13-15-1 and 13-15-17-1, which correspond to the shallow networks (i.e., NN and MI-NN) and the deep networks (i.e., DBN, AMSA, and MI-DBN), respectively. The activation function is sigmoid, and the output function is linear.

The proposed algorithm outperforms the previous methods on this dataset, which suggests that it is also applicable to regression problems. In addition, the proposed algorithm consistently improves on the traditional algorithms as the percentage of labeled training samples changes. To study the correlation between the performance and the percentage of labeled samples, Figure 6 shows the difference between the proposed and traditional methods as this percentage varies. According to Figure 6, the improvement brought by the proposed method is positively correlated with the percentage of labeled samples. A plausible explanation is that more labeled data allows the proposed method to absorb more information that is useful for regression. This trend does not hold for the other algorithms.