Salt and Pepper Noise Removal with Multi-Class Dictionary Learning and L0 Norm Regularizations

Abstract

1. Introduction

2. Methods

2.1. Typical Sparsity-Based Impulse Noise Removal Method

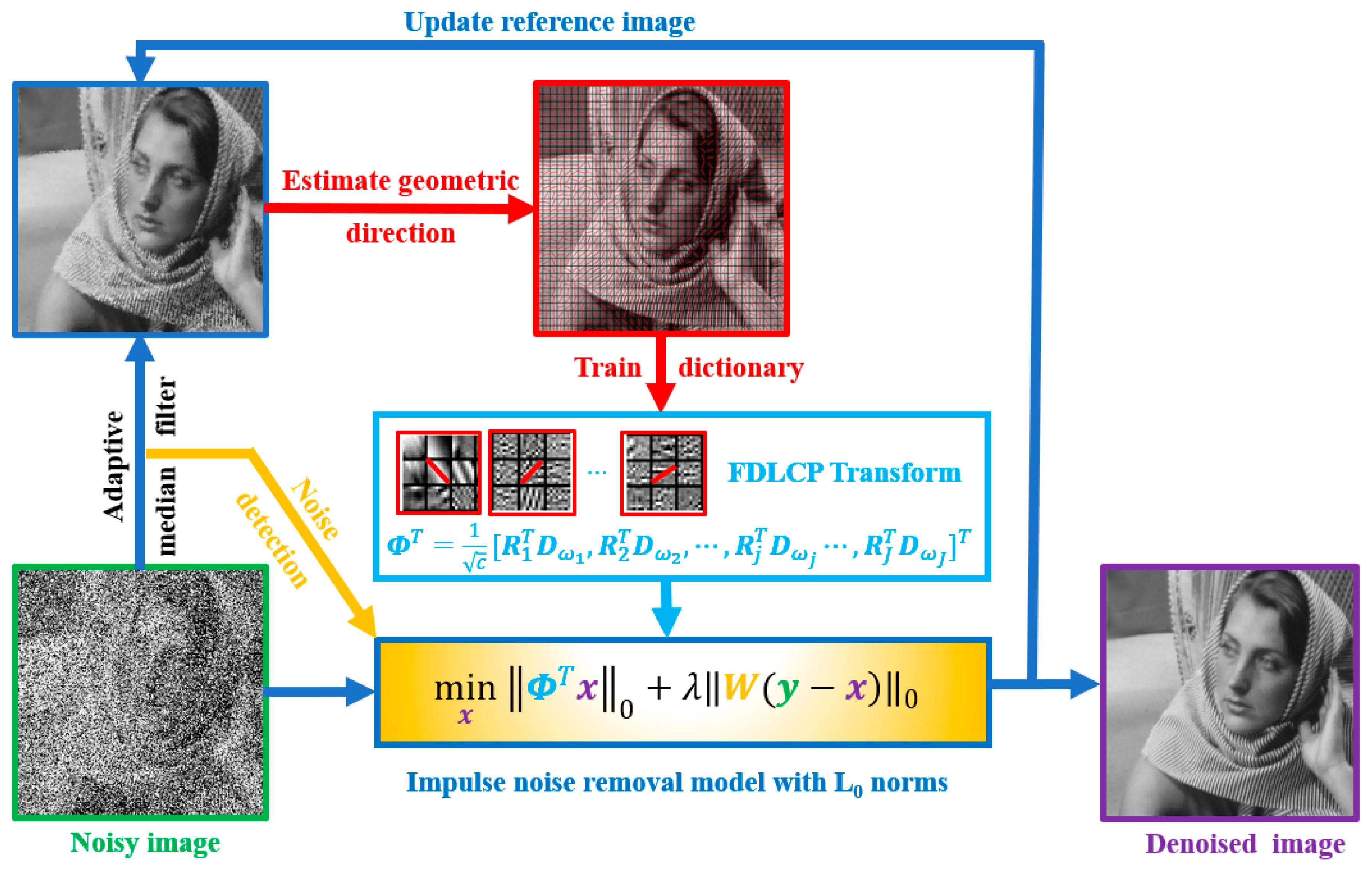

2.2. Proposed Method

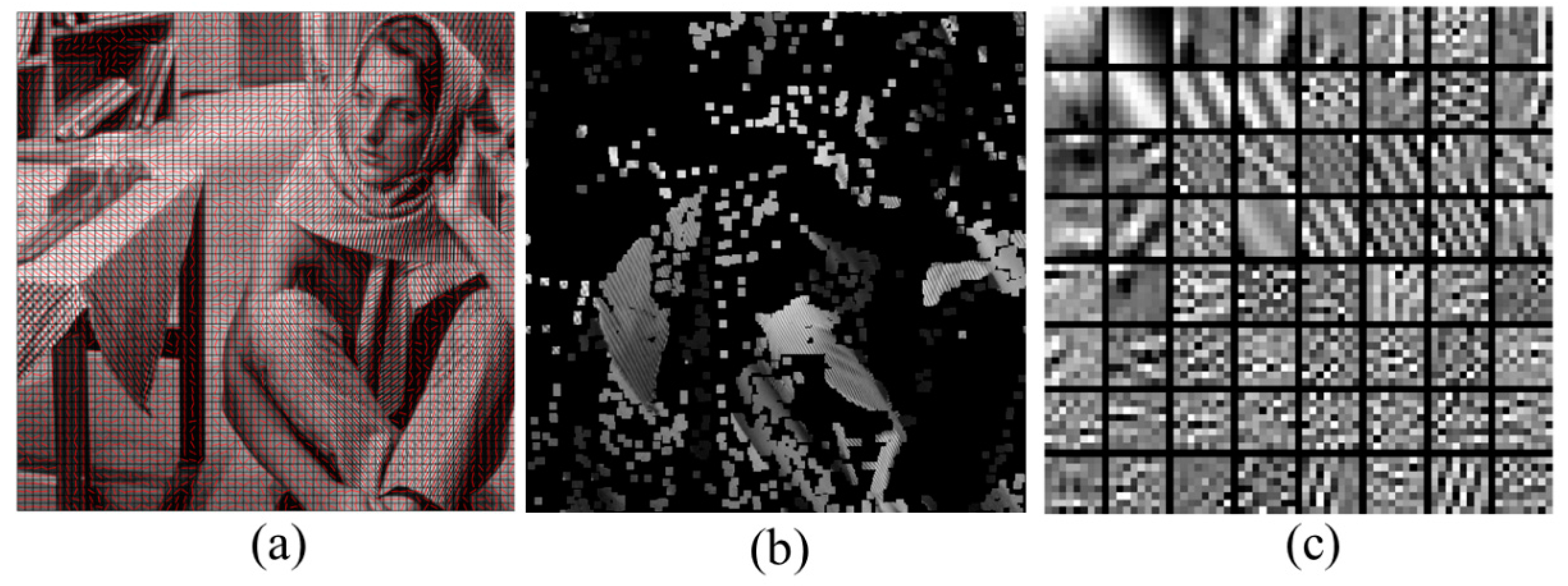

2.2.1. Adaptive Dictionary Learning in Salt and Pepper Noise Removal

2.2.2. FDLCP-Based Image Reconstruction with the L0 Norm Regularizations

2.2.3. Numerical Algorithm

| Algorithm 1. ADMC Algorithm for the L0 Norm Minimizations. |
|---|
| Input: the noisy image, the diagonal matrix, the adaptive dictionaries, and the regularization parameter. |
| Initialize: k = 1 and the initial values of the remaining variables. |
| Main: While the outer stopping criterion is not met, repeat steps (a) to (d) until convergence: |
| (a) Perform the hard thresholding update according to Equation (16), using the hard thresholding operator with a threshold as in [20]. |
| (b) Solve the subproblem in Equation (18). |
| (c) Compute the update given by Equation (20). |
| (d) Evaluate the difference between successive reconstructions. |
| (e) If the difference exceeds the tolerance, go to (a); otherwise, update the parameters and the iteration index k. |
| End While |
| Output: the reconstructed image. |
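Reading ADMC as alternating direction minimization with continuation (our assumption), the loop in Algorithm 1 alternates a hard thresholding step with a data-consistency solve and gradually tightens a penalty parameter. The Python/NumPy sketch below illustrates that general pattern on a toy 1-D signal; it is not the authors' method and does not reproduce Equations (16) to (20). Assumptions: a single orthonormal DCT stands in for the learned multi-class dictionaries, the diagonal matrix is taken to be a binary mask of trusted (noise-free) pixels, the model is ||W x||_0 + (lam/2)||mask * (x - y)||^2 split as a = W x with a quadratic penalty beta, and the names `l0_restore`, `lam`, `beta`, `beta_max`, and `tol` are hypothetical.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix; row k holds the k-th cosine atom."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    W = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    W[0, :] /= np.sqrt(2.0)
    return W


def hard_threshold(v, t):
    """Hard thresholding: keep entries with |v| > t, set the rest to zero."""
    return np.where(np.abs(v) > t, v, 0.0)


def l0_restore(y, mask, W, lam=1e6, beta=1e-2, beta_max=1e3, tol=1e-4, max_inner=200):
    """Hypothetical sketch: approximately minimize
    ||W x||_0 + (lam/2) ||mask * (x - y)||^2 via the splitting a = W x,
    alternating updates of a and x while beta is doubled (continuation)."""
    x = y.astype(np.float64).copy()
    while beta < beta_max:
        for _ in range(max_inner):
            # a-update: exact minimizer of ||a||_0 + (beta/2) ||a - W x||^2
            a = hard_threshold(W @ x, np.sqrt(2.0 / beta))
            # x-update: closed form because W is orthonormal and mask is binary
            x_new = (lam * mask * y + beta * (W.T @ a)) / (lam * mask + beta)
            # relative change between successive reconstructions
            diff = np.linalg.norm(x_new - x) / max(np.linalg.norm(x_new), 1e-12)
            x = x_new
            if diff < tol:
                break
        beta *= 2.0  # continuation: enforce a ≈ W x more strictly
    return x


# Toy example: a 3-sparse DCT signal with values in (0, 1), hit by salt (1) and pepper (0).
rng = np.random.default_rng(0)
n = 64
W = dct_matrix(n)
coeffs = np.zeros(n)
coeffs[[0, 3, 7]] = [0.5 * np.sqrt(n), 1.0, 1.0]  # mean 0.5 plus two cosines
x_true = W.T @ coeffs
y = x_true.copy()
idx = rng.choice(n, size=13, replace=False)
y[idx] = rng.choice([0.0, 1.0], size=13)
mask = ((y != 0.0) & (y != 1.0)).astype(np.float64)  # 1 = trusted pixel
x_hat = l0_restore(y, mask, W)
noisy_err = np.linalg.norm(y - x_true)
rec_err = np.linalg.norm(x_hat - x_true)
```

The large `lam` keeps trusted pixels essentially fixed, and the decreasing threshold sqrt(2/beta) admits the strongest coefficients first, which is one common way continuation helps an L0 scheme avoid poor local minima.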

3. Results

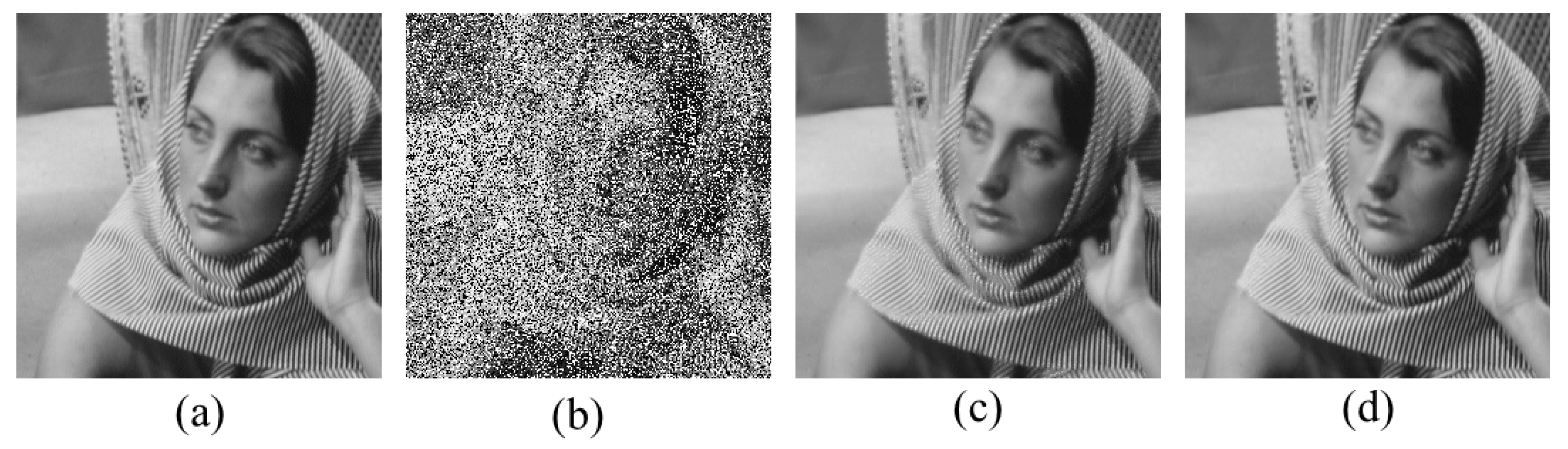

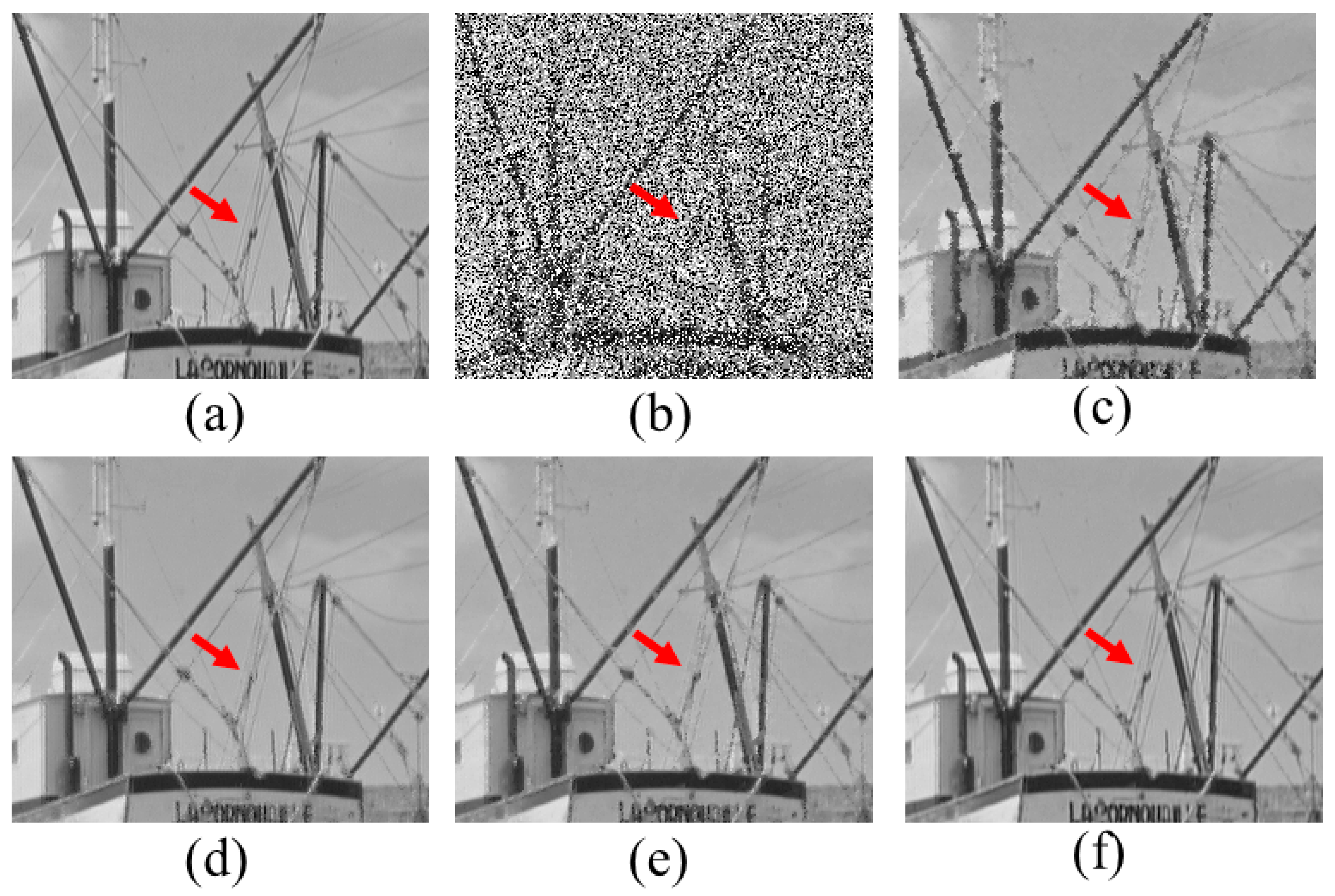

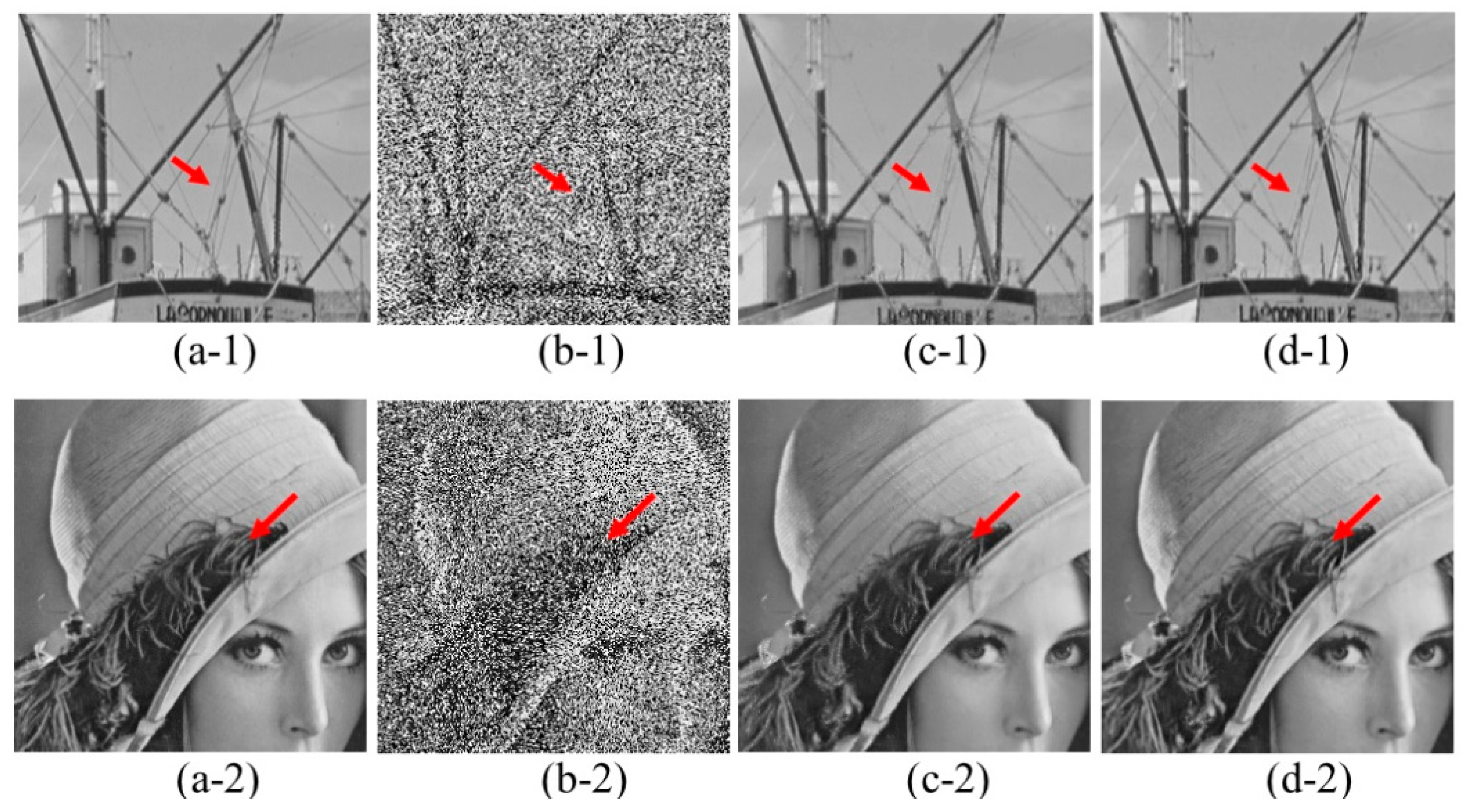

3.1. Denoising Performance under a Fixed Noise Level

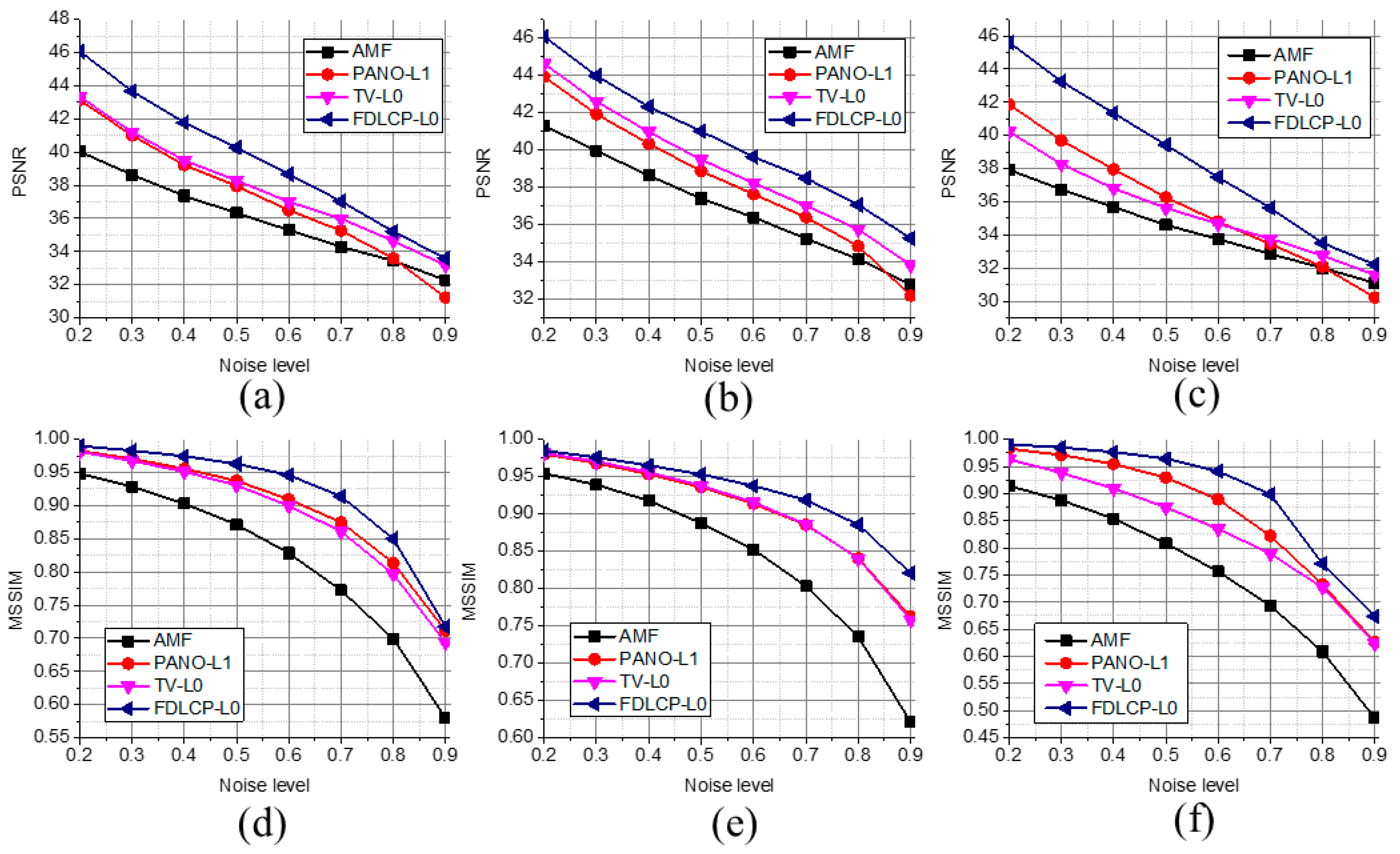

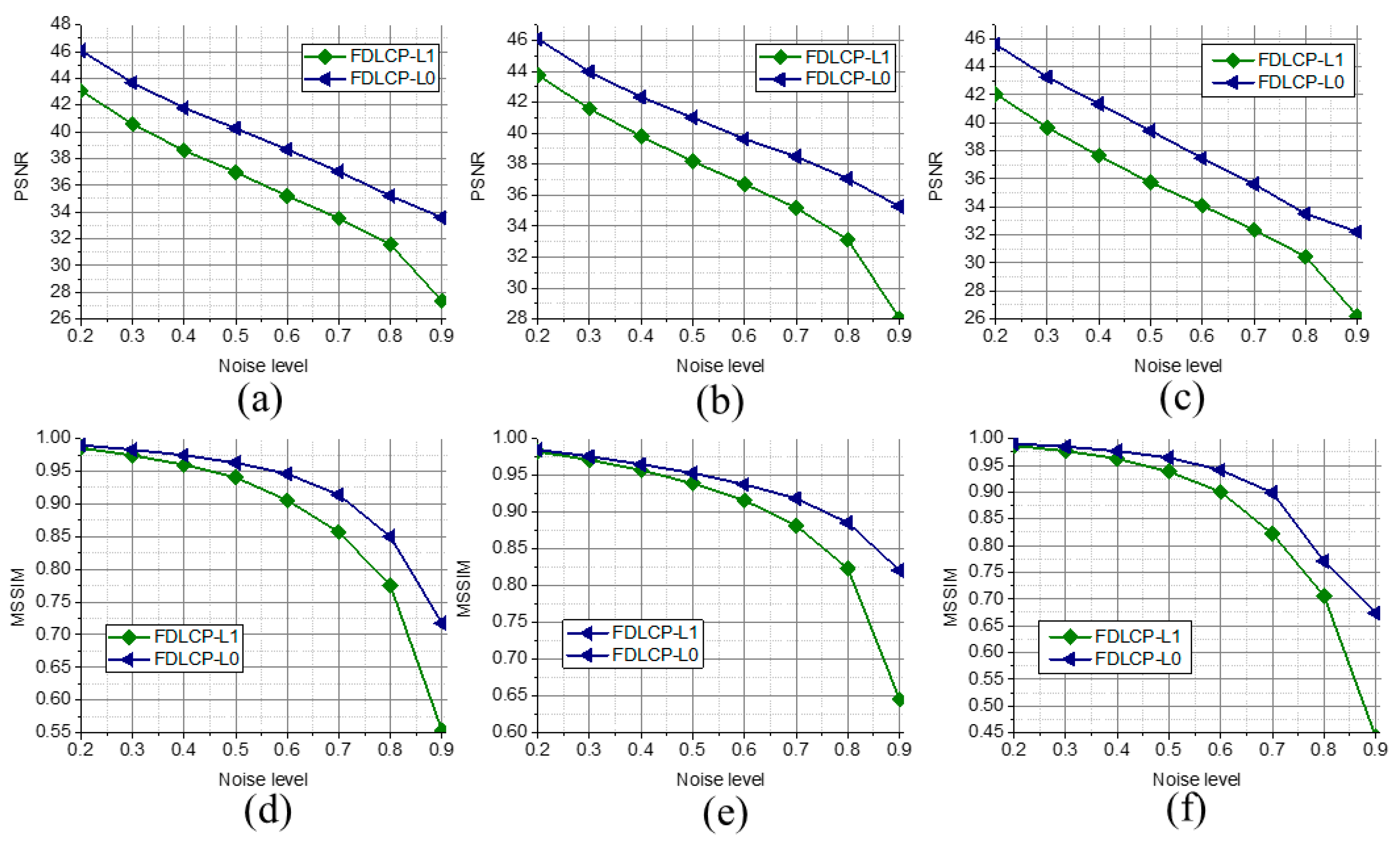

3.2. Denoising Performance under Different Noise Levels

4. Discussions

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing; Prentice Hall Press: Upper Saddle River, NJ, USA, 2002.

- Hwang, H.; Haddad, R. Adaptive median filters: New algorithms and results. IEEE Trans. Image Process. 1995, 4, 499–502.

- Sree, P.S.J.; Kumar, P.; Siddavatam, R.; Verma, R. Salt-and-pepper noise removal by adaptive median-based lifting filter using second-generation wavelets. Signal Image Video Process. 2013, 7, 111–118.

- Adeli, A.; Tajeripoor, F.; Zomorodian, M.J.; Neshat, M. Comparison of the Fuzzy-based wavelet shrinkage image denoising techniques. Int. J. Comput. Sci. 2012, 9, 211–216.

- Mafi, M.; Martin, H.; Cabrerizo, M.; Andrian, J.; Barreto, A.; Adjouadi, M. A comprehensive survey on impulse and Gaussian denoising filters for digital images. Signal Process. 2018.

- Huang, S.; Zhu, J. Removal of salt-and-pepper noise based on compressed sensing. Electron. Lett. 2010, 46, 1198–1199.

- Wang, X.-L.; Wang, C.-L.; Zhu, J.-B.; Liang, D.-N. Salt-and-pepper noise removal based on image sparse representation. Opt. Eng. 2011, 50, 097007.

- Xiao, Y.; Zeng, T.; Yu, J.; Ng, M.K. Restoration of images corrupted by mixed Gaussian-impulse noise via l1–l0 minimization. Pattern Recognit. 2011, 44, 1708–1720.

- Wang, S.; Liu, Q.; Xia, Y.; Dong, P.; Luo, J.; Huang, Q.; Feng, D.D. Dictionary learning based impulse noise removal via L1–L1 minimization. Signal Process. 2013, 93, 2696–2708.

- Guo, D.; Qu, X.; Du, X.; Wu, K.; Chen, X. Salt and pepper noise removal with noise detection and a patch-based sparse representation. Adv. Multimed. 2014, 2014.

- Guo, D.; Qu, X.; Wu, M.; Wu, K. A modified iterative alternating direction minimization algorithm for impulse noise removal in images. J. Appl. Math. 2014, 2014, 595782.

- Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

- Cai, J.-F.; Ji, H.; Shen, Z.; Ye, G.-B. Data-driven tight frame construction and image denoising. Appl. Comput. Harmonic Anal. 2014, 37, 89–105.

- Ravishankar, S.; Bresler, Y. MR image reconstruction from highly undersampled k-space data by dictionary learning. IEEE Trans. Med. Imaging 2011, 30, 1028–1041.

- Zhan, Z.; Cai, J.F.; Guo, D.; Liu, Y.; Chen, Z.; Qu, X. Fast multiclass dictionaries learning with geometrical directions in MRI reconstruction. IEEE Trans. Biomed. Eng. 2016, 63, 1850–1861.

- Qu, X.; Hou, Y.; Lam, F.; Guo, D.; Zhong, J.; Chen, Z. Magnetic resonance image reconstruction from undersampled measurements using a patch-based nonlocal operator. Med. Image Anal. 2014, 18, 843–856.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

- Yuan, G.; Ghanem, B. L0TV: A new method for image restoration in the presence of impulse noise. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition-CVPR 2015, Boston, MA, USA, 7–12 June 2015; pp. 5369–5377.

- Trzasko, J.; Manduca, A. Highly undersampled magnetic resonance image reconstruction via homotopic L0-minimization. IEEE Trans. Med. Imaging 2009, 28, 106–121.

- Ning, B.; Qu, X.; Guo, D.; Hu, C.; Chen, Z. Magnetic resonance image reconstruction using trained geometric directions in 2D redundant wavelets domain and non-convex optimization. Magn. Reson. Imaging 2013, 31, 1611–1622.

- Qu, X.; Cao, X.; Guo, D.; Hu, C.; Chen, Z. Compressed sensing MRI with combined sparsifying transforms and smoothed L0 norm minimization. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing-ICASSP 2010, Dallas, TX, USA, 14–19 March 2010; pp. 626–629.

- Qu, X.; Qiu, T.; Guo, D.; Lu, H.; Ying, J.; Shen, M.; Hu, B.; Orekhov, V.; Chen, Z. High-fidelity spectroscopy reconstruction in accelerated NMR. Chem. Commun. 2018, 54, 10958–10961.

- Qu, X.; Guo, D.; Cao, X.; Cai, S.; Chen, Z. Reconstruction of self-sparse 2D NMR spectra from undersampled data in the indirect dimension. Sensors 2011, 11, 8888–8909.

- Qu, X.; Cao, X.; Guo, D.; Chen, Z. Compressed sensing for sparse magnetic resonance spectroscopy. In Proceedings of the 18th Scientific Meeting on International Society for Magnetic Resonance in Medicine-ISMRM 2010, Stockholm, Sweden, 1–7 May 2010; p. 3371.

- Li, Q.; Liang, S. Weak fault detection of tapered rolling bearing based on penalty regularization approach. Algorithms 2018, 11, 184.

- Zhu, Z.; Yin, H.; Chai, Y.; Li, Y.; Qi, G. A novel multi-modality image fusion method based on image decomposition and sparse representation. Inf. Sci. 2018, 432, 516–529.

- Zhu, Z.; Qi, G.; Chai, Y.; Chen, Y. A novel multi-focus image fusion method based on stochastic coordinate coding and local density peaks clustering. Future Internet 2016, 8, 53.

- Qi, G.; Zhu, Z.; Chen, Y.; Wang, J.; Zhang, Q.; Zeng, F. Morphology-based visible-infrared image fusion framework for smart city. Int. J. Simul. Process Modell. 2018, 13, 523–536.

- Nikolova, M. A variational approach to remove outliers and impulse noise. J. Math. Imaging Vis. 2004, 20, 99–120.

- Cai, J.-F.; Chan, R.; Nikolova, M. Fast two-phase image deblurring under impulse noise. J. Math. Imaging Vis. 2010, 36, 46–53.

- Huang, J.; Zhang, T. The benefit of group sparsity. Ann. Stat. 2010, 38, 1978–2004.

- Wang, L.; Chen, Y.; Lin, F.; Chen, Y.; Yu, F.; Cai, Z. Impulse noise denoising using total variation with overlapping group sparsity and Lp-pseudo-norm shrinkage. Appl. Sci. 2018, 8, 2317.

- Chen, P.; Selesnick, I.W. Group-sparse signal denoising: Non-convex regularization, convex optimization. IEEE Trans. Signal Process. 2014, 62, 3464–3478.

- Alpago, D.; Zorzi, M.; Ferrante, A. Identification of sparse reciprocal graphical models. IEEE Control Syst. Lett. 2018, 2, 659–664.

- Lesage, S.; Gribonval, R.; Bimbot, F.; Benaroya, L. Learning unions of orthonormal bases with thresholded singular value decomposition. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing-ICASSP′05, Philadelphia, PA, USA, 18–23 March 2005; Volume 5, pp. 293–296.

- Liu, Y.; Zhan, Z.; Cai, J.F.; Guo, D.; Chen, Z.; Qu, X. Projected iterative soft-thresholding algorithm for tight frames in compressed sensing magnetic resonance imaging. IEEE Trans. Med. Imaging 2016, 35, 2130–2140.

- Lai, Z.; Qu, X.; Liu, Y.; Guo, D.; Ye, J.; Zhan, Z.; Chen, Z. Image reconstruction of compressed sensing MRI using graph-based redundant wavelet transform. Med. Image Anal. 2016, 27, 93–104.

- Yang, J.; Zhang, Y.; Yin, W. A fast TVL1-L2 Minimization Algorithm for Signal Reconstruction from Partial Fourier Data. Rice University. 2009. Available online: ftp://ftp.math.ucla.edu/pub/camreport/cam09-24.pdf (accessed on 1 October 2018).

- Yang, J.; Zhang, Y.; Yin, W. A fast alternating direction method for TV l1-l2 signal reconstruction from partial fourier data. IEEE J. Sel. Top. Signal Process. 2010, 4, 288–297.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Portilla, J.; Strela, V.; Wainwright, M.J.; Simoncelli, E.P. Image denoising using scale mixtures of Gaussians in the wavelet domain. IEEE Trans. Image Process. 2003, 12, 1338–1351.

- Elad, M.; Aharon, M. Image denoising via sparse and redundant representations over learned dictionaries. IEEE Trans. Image Process. 2006, 15, 3736–3745.

- Computational Imaging and Visual Image Processing. Available online: http://www.io.csic.es/PagsPers/JPortilla/image-processing/bls-gsm/63-test-images (accessed on 1 October 2018).

| Images | Quantitative Measure | AMF | PANO-L1 | TV-L0 | FDLCP-L0 |
|---|---|---|---|---|---|
| Boat | PSNR (dB) | 36.32 | 37.95 | 38.29 | 40.26 |
| | MSSIM | 0.8715 | 0.9375 | 0.9307 | 0.9631 |
| Lena | PSNR (dB) | 37.42 | 38.87 | 39.48 | 41.01 |
| | MSSIM | 0.8878 | 0.9360 | 0.9382 | 0.9531 |
| Barbara | PSNR (dB) | 34.61 | 36.28 | 35.63 | 39.44 |
| | MSSIM | 0.8080 | 0.9293 | 0.8746 | 0.9644 |
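PSNR is conventionally reported in decibels. A minimal NumPy sketch of the standard definition, assuming 8-bit images with peak value 255 (the exact peak convention used for the tables is an assumption here), together with the arithmetic for turning a PSNR gap into an MSE ratio:

```python
import numpy as np


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak**2 / MSE)."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))


# A PSNR gap of d dB means the MSE differs by a factor of 10 ** (d / 10).
gap_db = 39.44 - 35.63          # Barbara: FDLCP-L0 vs. TV-L0 in the table above
mse_ratio = 10 ** (gap_db / 10)  # roughly 2.4
```

So the 3.81 dB advantage on Barbara corresponds to roughly 2.4 times lower mean squared error, and every 3 dB is close to a halving of the MSE.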

| Images | Methods | PSNR (dB), noise level 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 |
|---|---|---|---|---|---|---|---|---|---|
| Boat | L1 | 43.07 | 40.58 | 38.60 | 36.99 | 35.20 | 33.54 | 31.58 | 27.35 |
| | L0 | 46.07 | 43.68 | 41.78 | 40.26 | 38.67 | 37.04 | 35.21 | 33.59 |
| Lena | L1 | 43.75 | 41.58 | 39.80 | 38.17 | 36.71 | 35.18 | 33.12 | 28.04 |
| | L0 | 46.09 | 43.97 | 42.32 | 41.01 | 39.63 | 38.49 | 37.07 | 35.27 |
| Barbara | L1 | 42.06 | 39.67 | 37.65 | 35.74 | 34.08 | 32.38 | 30.45 | 26.21 |
| | L0 | 45.62 | 43.27 | 41.35 | 39.44 | 37.48 | 35.63 | 33.52 | 32.22 |
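The noise levels 0.2 to 0.9 in the table above are read here as the fraction of corrupted pixels, which is the usual salt-and-pepper model but an assumption on our part. The sketch below generates such noise and applies a simple extreme-value detector that yields a binary trusted-pixel mask. The helper names `add_salt_and_pepper` and `extreme_value_mask` are hypothetical, and this detector is not necessarily how the diagonal matrix of Algorithm 1 is built.

```python
import numpy as np


def add_salt_and_pepper(img, level, rng, low=0, high=255):
    """Corrupt roughly a fraction `level` of pixels; each corrupted pixel
    becomes `low` (pepper) or `high` (salt) with equal probability."""
    noisy = img.copy()
    corrupted = rng.random(img.shape) < level
    salt = rng.random(img.shape) < 0.5
    noisy[corrupted & salt] = high
    noisy[corrupted & ~salt] = low
    return noisy


def extreme_value_mask(noisy, low=0, high=255):
    """Hypothetical detector: 1.0 where a pixel is trusted, 0.0 where it sits
    at an extreme value and is treated as salt or pepper."""
    return ((noisy != low) & (noisy != high)).astype(np.float64)


rng = np.random.default_rng(0)
# Clean test image whose values avoid 0 and 255, so detection is exact here.
clean = rng.integers(10, 246, size=(128, 128)).astype(np.uint8)
for level in (0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9):
    noisy = add_salt_and_pepper(clean, level, rng)
    mask = extreme_value_mask(noisy)
    print(f"level {level:.1f}: detected fraction {1 - mask.mean():.3f}")
```

Real images can contain genuine 0 or 255 pixels, which this naive detector would misclassify as noise; detectors based on local statistics, such as the adaptive median filter, are one way to reduce such false detections.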

| Images | Methods | MSSIM, noise level 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 |
|---|---|---|---|---|---|---|---|---|---|
| Boat | L1 | 0.9856 | 0.9743 | 0.9601 | 0.9407 | 0.9055 | 0.8571 | 0.7752 | 0.5537 |
| | L0 | 0.9900 | 0.9830 | 0.9745 | 0.9631 | 0.9461 | 0.9139 | 0.8501 | 0.7178 |
| Lena | L1 | 0.9822 | 0.9711 | 0.9569 | 0.9391 | 0.9159 | 0.8819 | 0.8232 | 0.6458 |
| | L0 | 0.9849 | 0.9759 | 0.9650 | 0.9531 | 0.9376 | 0.9188 | 0.8856 | 0.8208 |
| Barbara | L1 | 0.9867 | 0.9769 | 0.9624 | 0.9385 | 0.9001 | 0.8220 | 0.7058 | 0.4427 |
| | L0 | 0.9909 | 0.9849 | 0.9766 | 0.9644 | 0.9412 | 0.8987 | 0.7709 | 0.6736 |
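MSSIM is the mean of the local SSIM map defined in the 2004 IEEE Trans. Image Process. paper "Image quality assessment: From error visibility to structural similarity" listed in the references. A compact NumPy sketch, assuming a 7 × 7 uniform window, the usual constants K1 = 0.01 and K2 = 0.03, and a dynamic range of 255; the original SSIM paper uses an 11 × 11 Gaussian window, so values from this simplified version will differ somewhat from those in the tables.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def mssim(reference, estimate, peak=255.0, win=7, k1=0.01, k2=0.03):
    """Mean SSIM over all valid win x win windows (uniform weights, no padding)."""
    x = np.asarray(reference, dtype=np.float64)
    y = np.asarray(estimate, dtype=np.float64)
    c1, c2 = (k1 * peak) ** 2, (k2 * peak) ** 2

    def local_mean(a):
        # Average over every win x win window of `a`.
        return sliding_window_view(a, (win, win)).mean(axis=(-2, -1))

    mu_x, mu_y = local_mean(x), local_mean(y)
    var_x = local_mean(x * x) - mu_x ** 2
    var_y = local_mean(y * y) - mu_y ** 2
    cov_xy = local_mean(x * y) - mu_x * mu_y
    ssim_map = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
    return float(ssim_map.mean())
```

The three factors compared locally are luminance (means), contrast (variances), and structure (covariance), which is why MSSIM can rank methods differently from PSNR, as with PANO-L1 and TV-L0 on Boat in the first table.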

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Guo, D.; Tu, Z.; Wang, J.; Xiao, M.; Du, X.; Qu, X. Salt and Pepper Noise Removal with Multi-Class Dictionary Learning and L0 Norm Regularizations. Algorithms 2019, 12, 7. https://doi.org/10.3390/a12010007