Diabetes mellitus (DM) has become a serious healthcare problem and is reported to be the fourth leading cause of death. Recently, the number of diabetic patients worldwide was 336 million, and this number is expected to reach 592 million by 2035 [1]. Diabetic retinopathy (DR) is a microvascular complication of diabetes that causes vision loss in working-age adults across ethnic groups. To date, 80% of DR cases have been recorded in patients who have had diabetes for more than 10 years. With early screening and consistent treatment, the progression of DR toward severe stages can be slowed. Clinicians categorize DR into two classes: nonproliferative DR (NPDR) and proliferative DR (PDR). According to the clinical grading of retinal impairment in Reference [2], NPDR is further divided into mild, moderate, and severe stages; therefore, early screening of DR severity is usually performed using these NPDR subclasses. PDR is the advanced stage, in which retinal anatomical features such as the optic disc, the cup, and the retinal vasculature become enlarged and distorted [3,4].

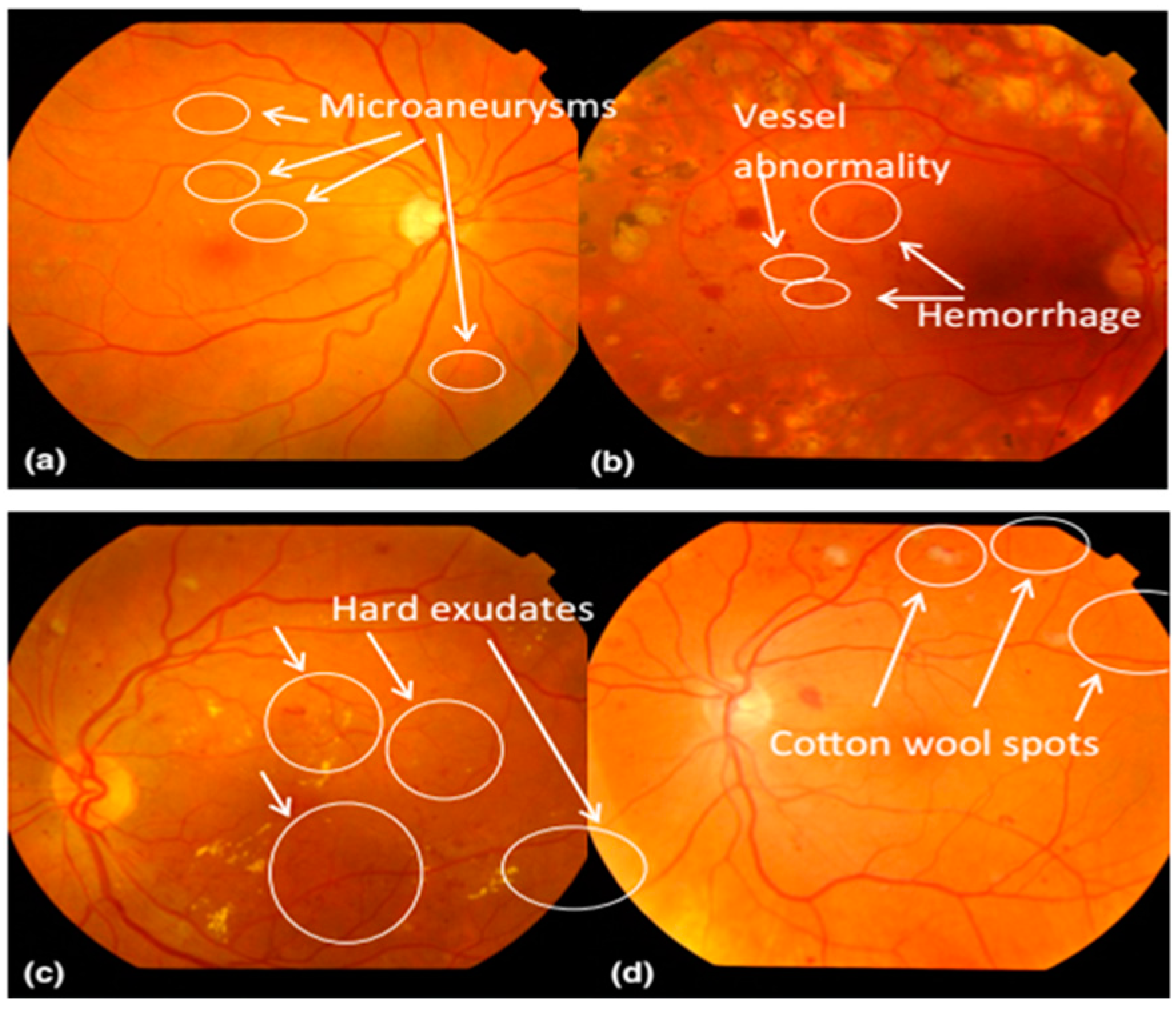

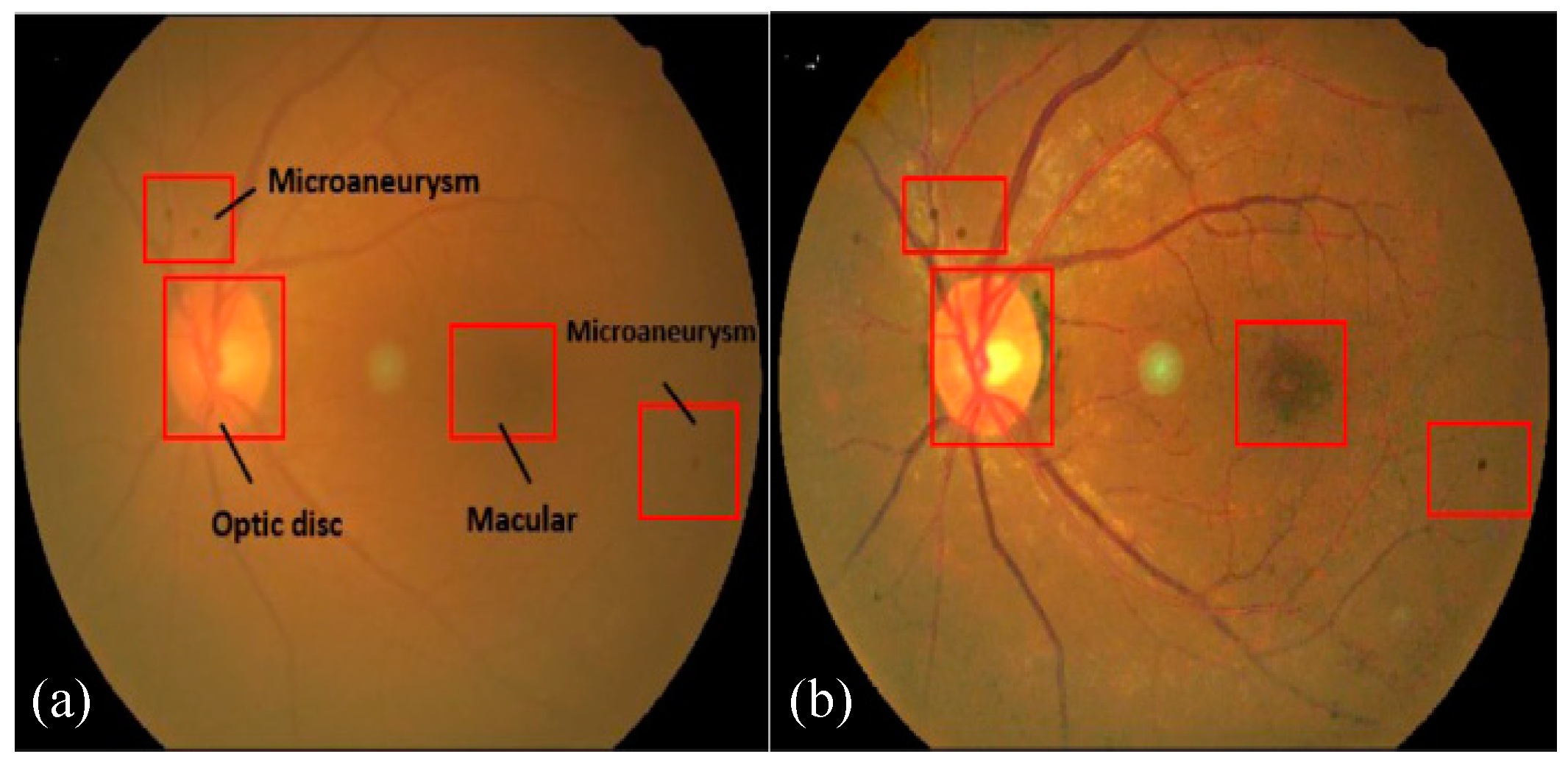

In modern ophthalmology, digital fundus photography is regarded as a powerful imaging modality used by ophthalmologists and computer-aided diagnosis (CAD) systems to detect retinal disorders such as glaucoma, diabetic retinopathy, macular degeneration, and retinal neoplasms [5,6]. Fundus images typically capture the central and posterior retinal surface, including bright regions (optic disc and cup) and dark regions (macula and fovea). DR clinical features such as microaneurysms (MAs), hemorrhages (HEMs), exudates (EXs), and cotton wool spots (CWSs) are also visible in fundus images. Clinical experts and screening algorithms analyze these images to grade and interpret the severity of DR in patients [7].

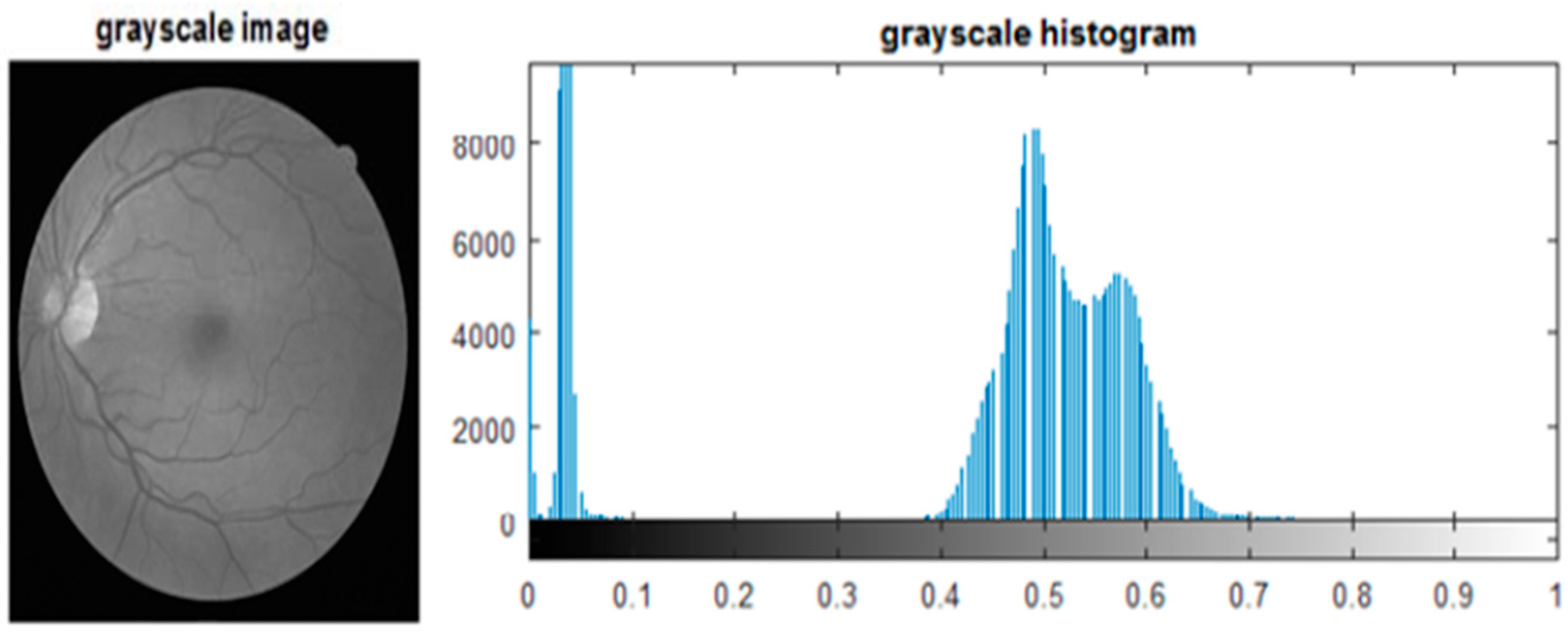

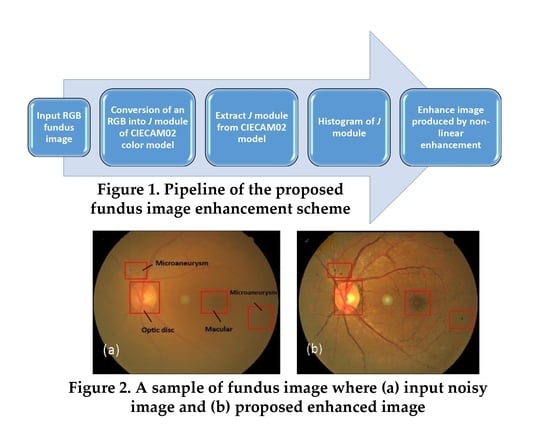

Figure 1 presents an example of a fundus image with DR-related indicators.

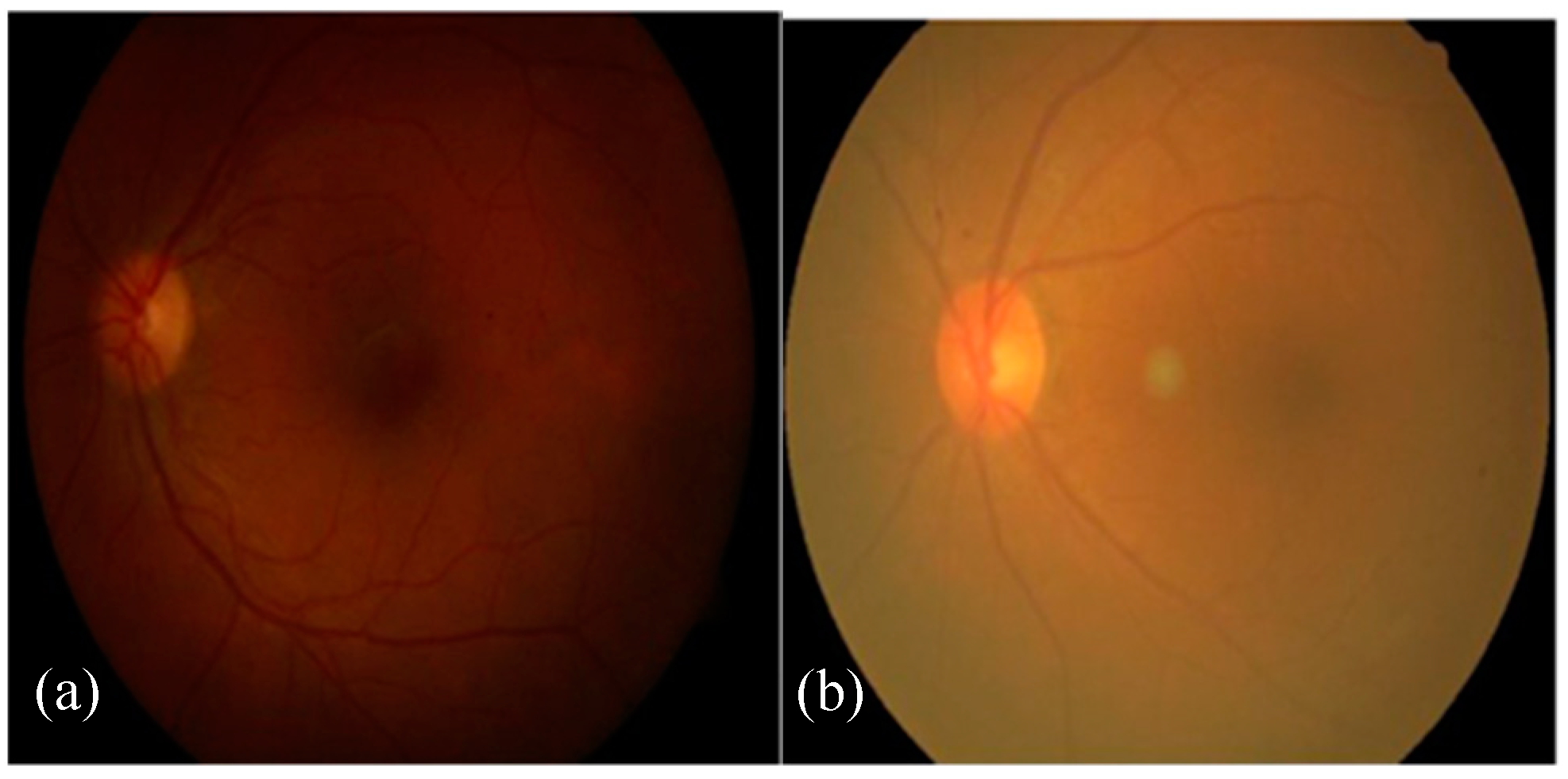

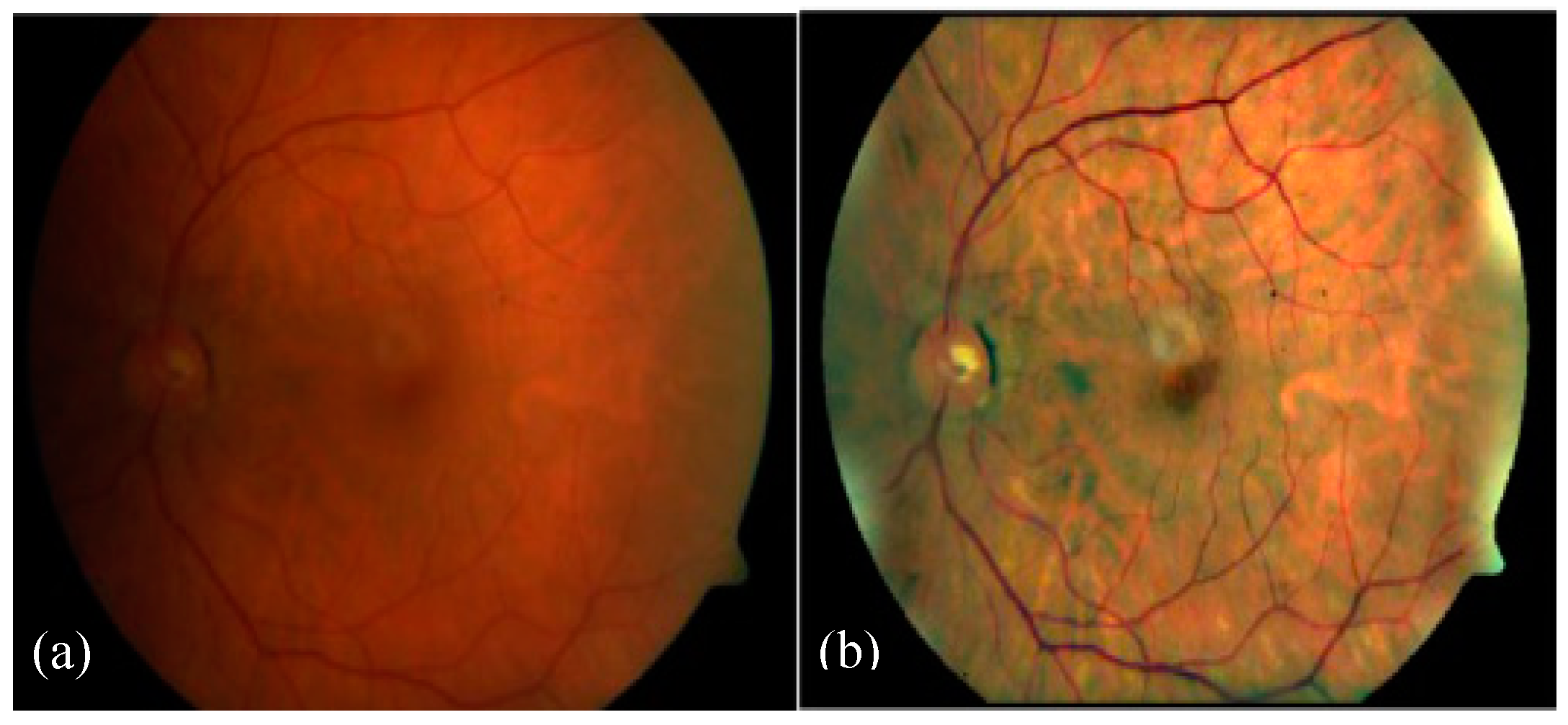

Fundus images are acquired with a fundus camera under varying lighting conditions and angles. As a result, these images often suffer from inadequate brightness and contrast, which degrades segmentation and classification performance in DR assessment.

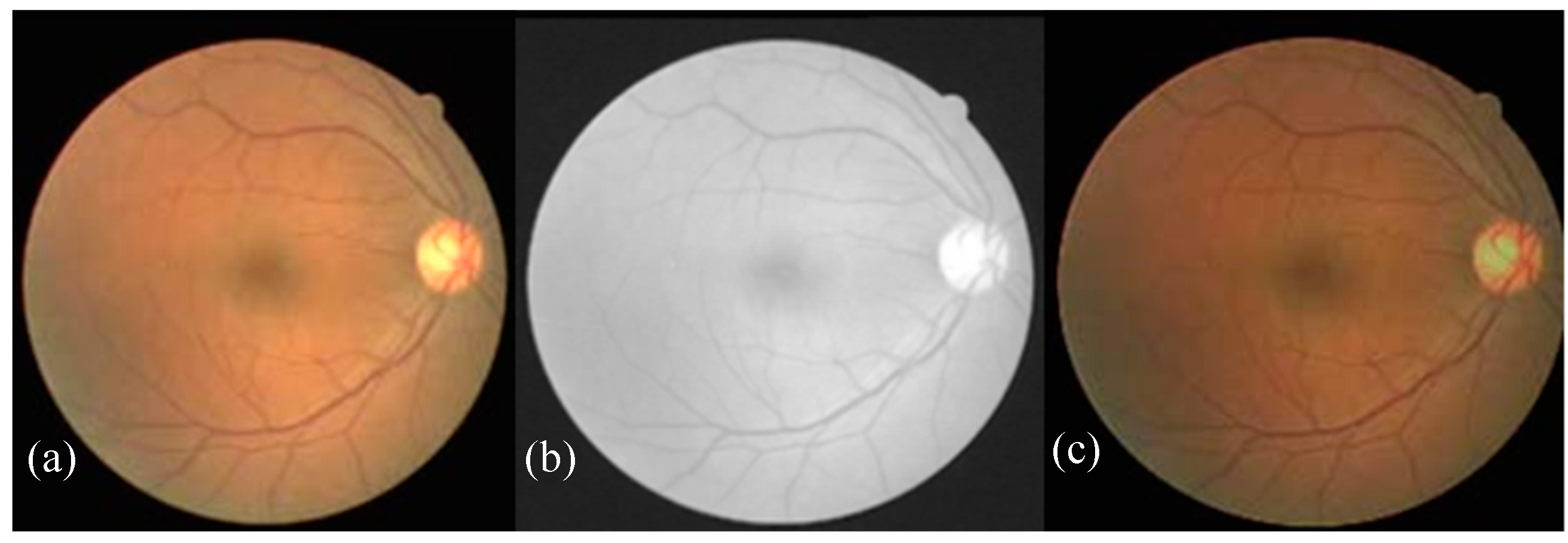

Figure 2a illustrates the uneven illumination of fundus images, whereas Figure 2b shows a noisy, low-contrast fundus image [8]. Automated diagnosis of DR relies on identifying DR-related features in fundus images. This is challenging because these lesions vary in contrast, appearance, and structure.

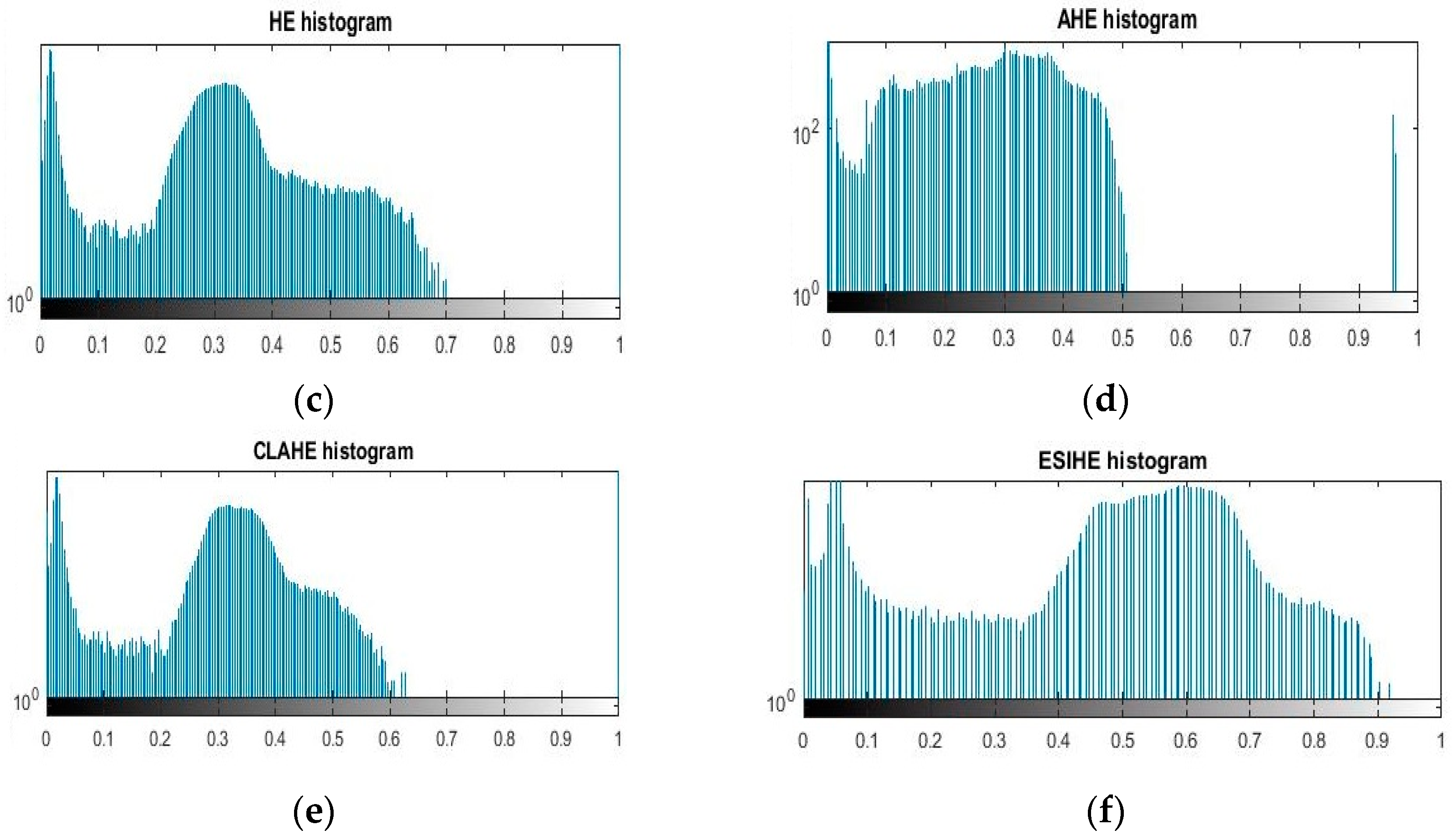

Histogram equalization (HE) is a widely used image enhancement technique owing to its simple implementation. Moreover, a number of HE variants in the literature have shown promise in enhancing images across a wide range of modalities, each with its own merits and drawbacks [11]. Adaptive histogram equalization (AHE) was later introduced to overcome the shortcomings of HE, i.e., the over-enhancement of artifacts and intensity saturation. Instead of one global histogram, AHE computes histograms over local regions around each pixel to boost regional contrast. AHE produces good results, but it is slow and strongly amplifies noise [12]. To address these problems, a contrast-limited adaptive histogram equalization (CLAHE) procedure was proposed in Reference [13], which has proven effective at suppressing high levels of noise, artifacts, and over-enhancement of bright regions. Owing to its popularity, various CLAHE extensions have been proposed [14,15]. The key problem of CLAHE is that it does not use the complete range of the histogram for enhancement and it suppresses some fine details. In addition to this non-holistic use of the histogram, the performance of CLAHE depends heavily on the choice of contextual regions, tile size, number of histogram bins, the distribution of tiles, and the properties of the image being enhanced. Tuning these parameters can be computationally expensive, and the resulting CLAHE output can even be worse than the original image [16]. Later, exposure-based sub-image histogram equalization (ESIHE) was developed to control the enhancement rate, preserve brightness, and reduce noise [12]. Yang et al. [17] proposed a bin underflow–bin overflow histogram equalization (BUBOHE) scheme to solve the HE problems. Their scheme succeeded in image enhancement, but it did not address the problem of decreased local contrast.

Another approach, based on adaptive gamma correction (AGC) and proposed by Chiu et al. [18], was used to enhance the quality of dimly lit images. They applied a weighting distribution to pixel intensities to improve image contrast. Their approach achieved significant contrast gains but over-enhanced the bright areas of the image. Saravanan et al. [19] applied adaptive histogram equalization to the green plane of the RGB image to improve contrast. First, the green channel was extracted because of its rich color and content details. Then, background subtraction was performed using a median-filtered grayscale image, and finally, AHE was applied to normalize brightness and intensity. Similarly, a shade correction method [20] was employed to subtract background details and improve the contrast of color images. However, these mechanisms produced spot noise and increased the luminance of bright features, i.e., the optic disc and cup. Intajag et al. [21] used a fuzzy set with a derivation equation to partition the histogram into multiple modes for image enhancement. They applied a fuzzy set to each histogram mode to obtain an optimal crossover point of the S-function with the highest probability of the fuzzy index, which was then used for enhancement. Their scheme enhanced images successfully, but the over-enhancement of noise remained unaddressed.
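
The global HE mapping and the contrast-limiting (clip-and-redistribute) step that CLAHE adds can be sketched in Python with NumPy as follows. This is a minimal illustration that assumes 8-bit grayscale input, and the function names are hypothetical. The tiling and the bilinear interpolation between tiles that full CLAHE performs [13] are omitted, so `clipped_equalize` is a global contrast-limited HE rather than CLAHE itself.

```python
import numpy as np

def equalize_histogram(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest occupied level
    total = cdf[-1]
    if total == cdf_min:  # single gray level: nothing to stretch
        return img.copy()
    # Map each level so that the output CDF is approximately uniform.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    return np.clip(lut, 0, 255).astype(np.uint8)[img]


def clip_histogram(hist: np.ndarray, clip_limit: float) -> np.ndarray:
    """Cap every bin at clip_limit and spread the excess uniformly over all bins.

    Single-pass redistribution; some CLAHE implementations repeat it because
    the redistributed counts can push bins slightly above the limit again.
    """
    hist = hist.astype(np.float64)
    excess = np.maximum(hist - clip_limit, 0.0).sum()
    return np.minimum(hist, clip_limit) + excess / hist.size


def clipped_equalize(img: np.ndarray, clip_limit: float) -> np.ndarray:
    """Global contrast-limited HE: equalize using the clipped histogram."""
    hist = clip_histogram(np.bincount(img.ravel(), minlength=256), clip_limit)
    cdf = np.cumsum(hist) / hist.sum()
    return np.round(cdf * 255).astype(np.uint8)[img]
```

Lowering `clip_limit` moves the mapping toward the identity and limits noise amplification, while a very large `clip_limit` approaches plain HE. This is the trade-off that CLAHE exposes through its clip-limit parameter.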

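The ESIHE idea [12] can be sketched as follows. The method uses the mean intensity to split the histogram into under- and over-exposed sub-histograms, clips bins at the mean bin height, and then equalizes each part within its own intensity range. This is a simplified reading and not the published algorithm verbatim. The function name is hypothetical, and the exact index conventions of the threshold and transfer functions in [12] may differ.

```python
import numpy as np

LEVELS = 256  # gray levels of an 8-bit image

def esihe(img: np.ndarray) -> np.ndarray:
    """Simplified exposure-based sub-image histogram equalization (8-bit grayscale)."""
    hist = np.bincount(img.ravel(), minlength=LEVELS).astype(np.float64)
    k = np.arange(LEVELS)
    # Normalized exposure in [0, 1): mean intensity divided by the number of levels.
    exposure = np.sum(hist * k) / (LEVELS * hist.sum())
    # Split threshold; a dark image (low exposure) gets a high threshold.
    xa = int(np.clip(round(LEVELS * (1.0 - exposure)), 1, LEVELS - 1))
    # Clip each bin at the mean bin height to limit the enhancement rate.
    hc = np.minimum(hist, hist.mean())

    lower, upper = hc[:xa], hc[xa:]
    c_low = np.cumsum(lower) / max(lower.sum(), 1e-12)
    c_up = np.cumsum(upper) / max(upper.sum(), 1e-12)
    lut = np.empty(LEVELS)
    lut[:xa] = (xa - 1) * c_low                # lower part stays in [0, xa - 1]
    lut[xa:] = xa + (LEVELS - 1 - xa) * c_up   # upper part stays in [xa, 255]
    return np.round(lut).astype(np.uint8)[img]
```

Each sub-histogram is equalized only within its own range, so no pixel crosses the threshold `xa`. This is how this family of methods limits the shift in mean brightness.
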
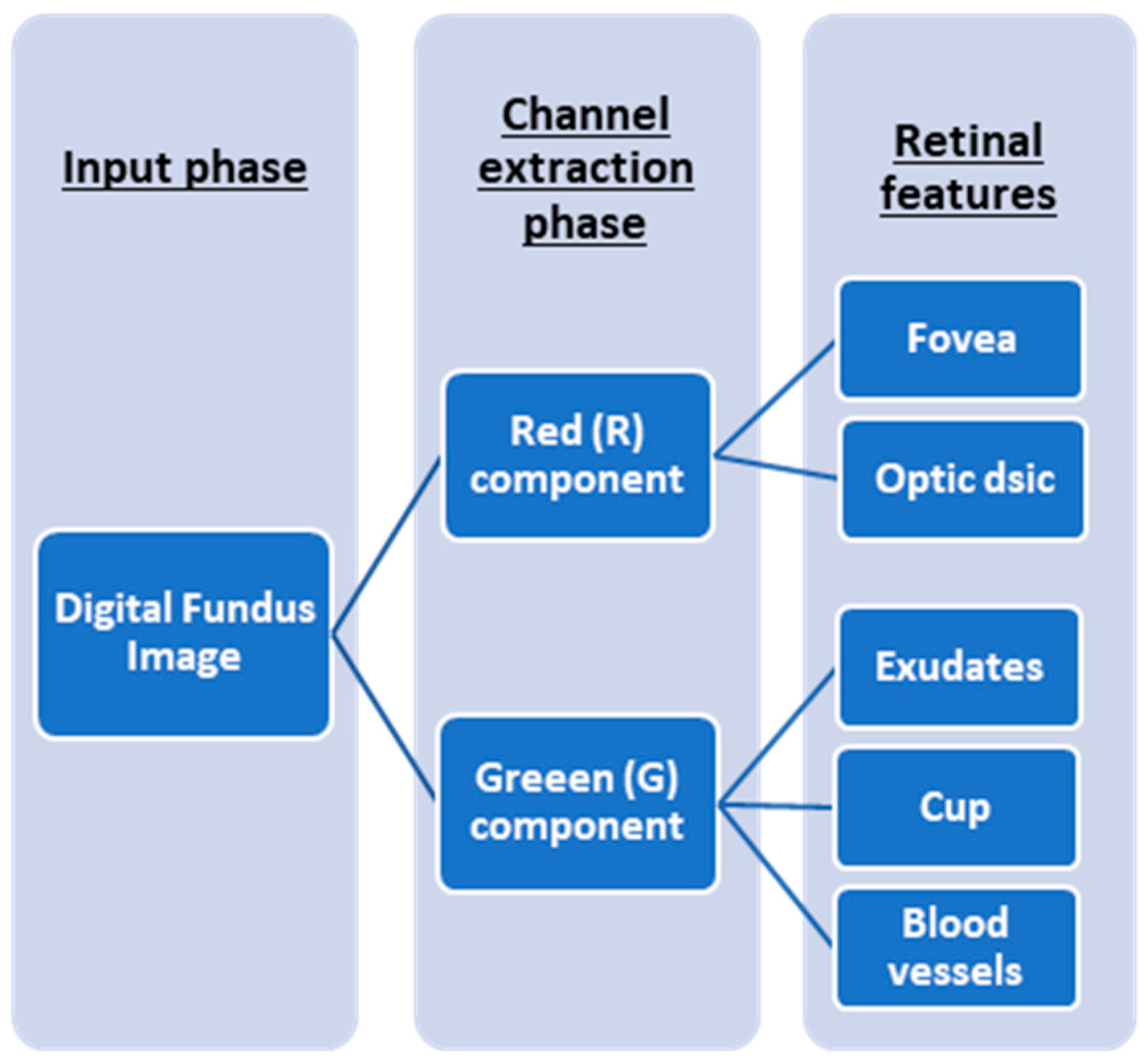

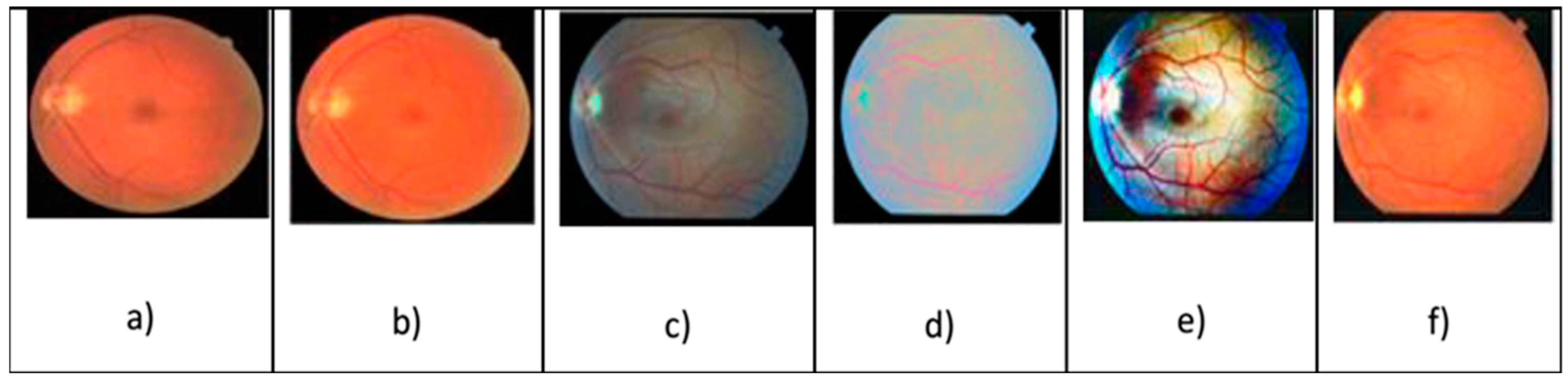
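
Adaptive gamma correction with a weighting distribution, the idea attributed to [18] above, can be sketched as follows. The sketch follows a commonly cited formulation: a weighted PDF, its normalized CDF, and a per-level gamma of 1 - CDF. Whether this matches [18] in every detail is an assumption. The function name and the default `alpha` are illustrative.

```python
import numpy as np

def agcwd(img: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Adaptive gamma correction with weighting distribution (8-bit grayscale)."""
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    pdf = hist / hist.sum()
    pdf_min, pdf_max = pdf.min(), pdf.max()
    if pdf_max == pdf_min:  # perfectly flat histogram: leave the image unchanged
        return img.copy()
    # Weighted PDF: alpha < 1 lifts rare levels relative to dominant ones.
    pdf_w = pdf_max * ((pdf - pdf_min) / (pdf_max - pdf_min)) ** alpha
    cdf_w = np.cumsum(pdf_w)
    cdf_w /= cdf_w[-1]  # normalize so the last value is exactly 1
    # Per-level gamma = 1 - cdf_w(l) lies in [0, 1], so levels are never darkened.
    levels = np.arange(256) / 255.0
    lut = 255.0 * levels ** (1.0 - cdf_w)
    return np.round(lut).astype(np.uint8)[img]
```

The sketch also suggests why such methods can saturate bright regions. The highest occupied levels receive a gamma near 0, which pushes them toward 255.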

Figure 3 illustrates how the individual RGB components are used to extract diagnostic parameters for DR assessment. Datta et al. [22] computed a fuzzy histogram of the green channel and partitioned it into subhistograms. Contrast enhancement was then achieved by equalizing the intensity levels of each subhistogram while minimizing the difference in mean brightness with respect to the original image. However, the problem of excessive luminosity in the bright regions of the image remained unsolved. A green plane modification [23] and a multiscale image analysis with a Laplacian pyramid [24] were found to be effective for image normalization and contrast enhancement. Two further studies [25,26] presented a comprehensive review of the HE, AHE, CLAHE, and Mahalanobis distance techniques investigated for image enhancement. These approaches produced reasonable enhancement results but were less effective at eliminating the over-enhancement of noise and luminance in the bright parts of the image.
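
Several of the methods above ([19], [22], [23]) start from the green plane. The green-channel preprocessing described for [19] extracts the green plane, estimates the slowly varying background with a median filter, and subtracts it. This can be sketched with NumPy alone under the following assumptions: RGB channel order, a hypothetical odd `kernel` size, and a background estimated from the green channel itself. The final AHE step is not shown. The pure-NumPy median copies every window, so for full-size fundus images `scipy.ndimage.median_filter` is the practical choice.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def green_background_subtraction(rgb: np.ndarray, kernel: int = 25) -> np.ndarray:
    """Green plane minus its median-filtered background, rescaled to 0..255."""
    if kernel % 2 == 0:
        raise ValueError("kernel must be odd")
    green = rgb[..., 1].astype(np.float64)  # assumes RGB channel order
    pad = kernel // 2
    padded = np.pad(green, pad, mode="reflect")
    # Every kernel x kernel neighbourhood; its median estimates the background.
    windows = sliding_window_view(padded, (kernel, kernel))
    background = np.median(windows, axis=(-2, -1))
    detail = green - background
    lo, hi = detail.min(), detail.max()
    if hi == lo:  # no structure left after subtraction
        return np.zeros(green.shape, dtype=np.uint8)
    return np.round((detail - lo) / (hi - lo) * 255).astype(np.uint8)
```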

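Multiscale enhancement with a Laplacian pyramid [24] decomposes an image into band-pass detail layers plus a coarse residual. Amplifying the detail layers before reconstruction boosts local contrast. The sketch below is a simplification and makes three assumptions. First, it uses 2x2 block averaging and nearest-neighbour upsampling instead of the Gaussian filters of a classic Laplacian pyramid. Second, it requires image sides divisible by 2**levels. Third, its `gain` parameter is a hypothetical uniform detail gain, not the scheme of [24].

```python
import numpy as np

def downsample(img: np.ndarray) -> np.ndarray:
    """Halve each side by averaging 2x2 blocks (a crude low-pass plus decimation)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def upsample(img: np.ndarray) -> np.ndarray:
    """Double each side by nearest-neighbour repetition."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)

def build_pyramid(img: np.ndarray, levels: int) -> list:
    current = img.astype(np.float64)
    pyramid = []
    for _ in range(levels):
        smaller = downsample(current)
        pyramid.append(current - upsample(smaller))  # detail lost by smoothing
        current = smaller
    pyramid.append(current)  # coarsest low-pass residual
    return pyramid

def reconstruct(pyramid: list) -> np.ndarray:
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        current = upsample(current) + detail
    return current

def enhance(img: np.ndarray, levels: int = 3, gain: float = 1.5) -> np.ndarray:
    """Amplify every detail layer by `gain`, rebuild, and clip to the 8-bit range."""
    pyramid = build_pyramid(img, levels)
    boosted = [gain * d for d in pyramid[:-1]] + [pyramid[-1]]
    return np.clip(np.round(reconstruct(boosted)), 0, 255).astype(np.uint8)
```

With `gain=1.0` the image is reproduced up to rounding, which quickly checks that the decomposition is lossless.
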
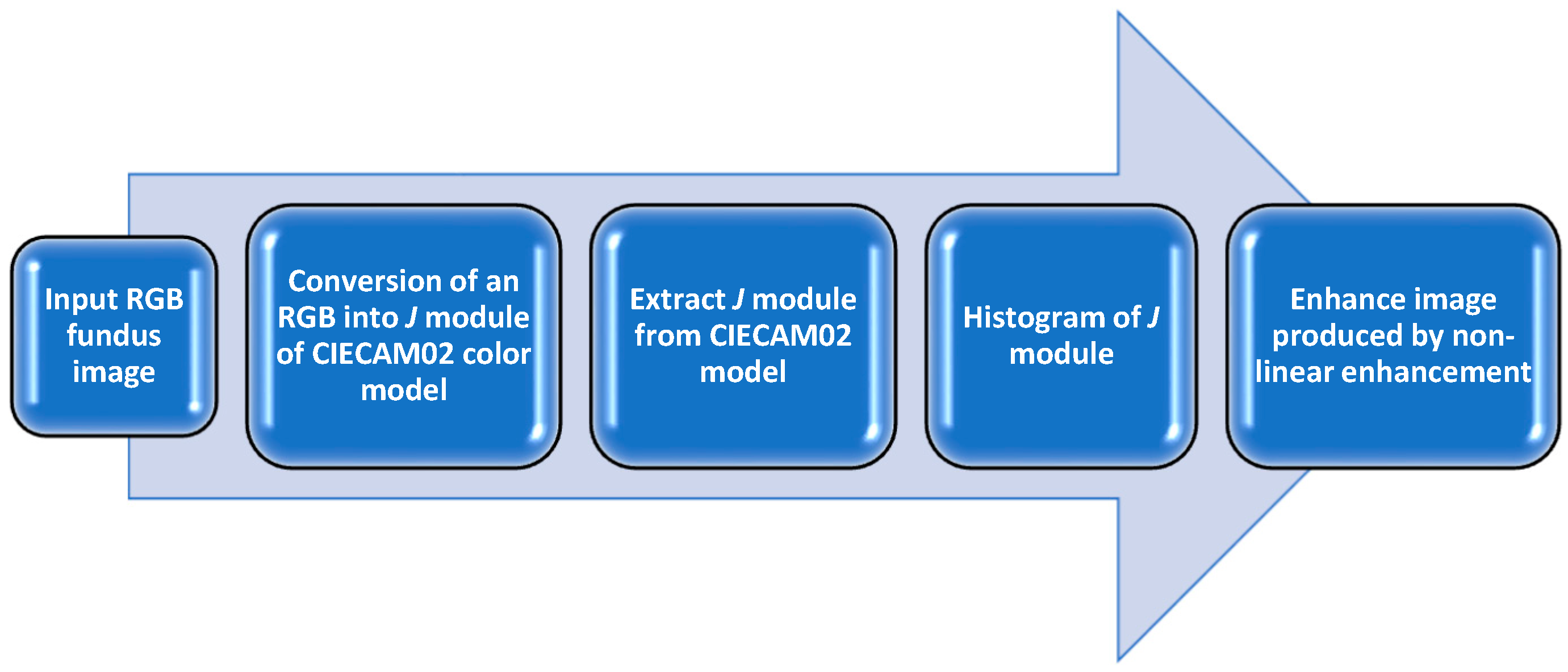

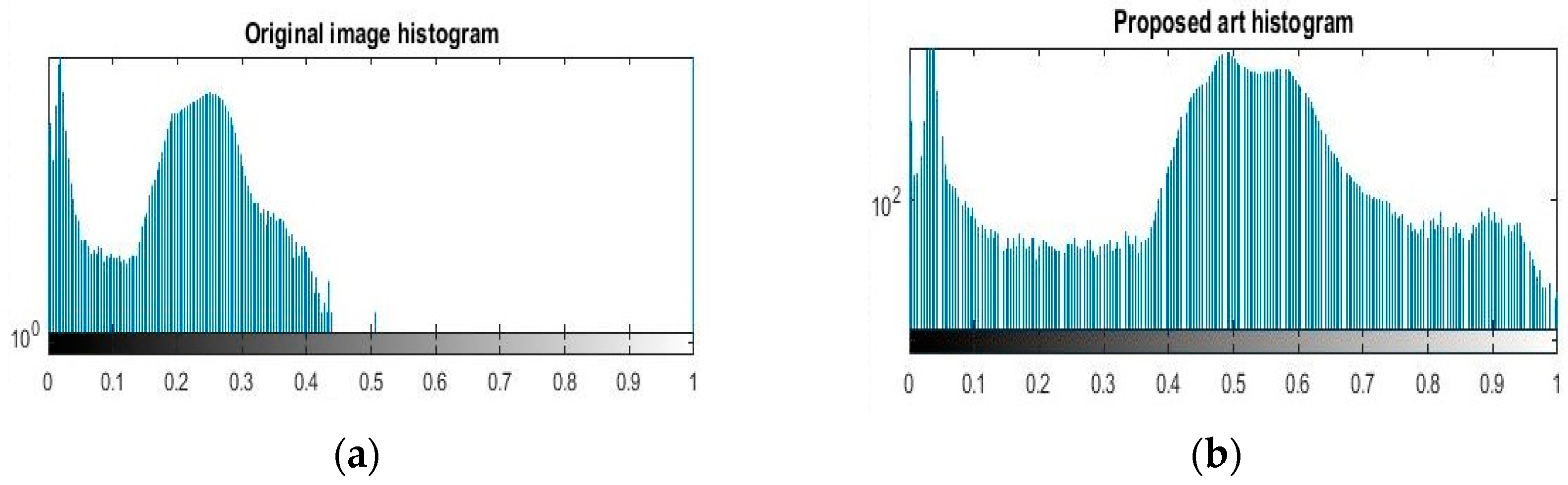

Given the drawbacks of the above enhancement techniques, the aim of this work was to develop a real-time image enhancement tool that boosts the contrast of fundus images holistically. The tool requires no pre- or postprocessing steps and does not distort the mean brightness or color information of the image. Finally, the results of the presented technique were compared with those of histogram-based methods and performed well in addressing the limitations of these methods.