Abstract

The Small Set Expansion Hypothesis is a conjecture which roughly states that it is NP-hard to distinguish between a graph with a small subset of vertices whose (edge) expansion is almost zero and one in which all small subsets of vertices have expansion almost one. In this work, we prove conditional inapproximability results with essentially optimal ratios for the following graph problems based on this hypothesis: Maximum Edge Biclique, Maximum Balanced Biclique, Minimum k-Cut and Densest At-Least-k-Subgraph. Our hardness results for the two biclique problems are proved by combining a technique developed by Raghavendra, Steurer and Tulsiani to avoid locality of gadget reductions with a generalization of Bansal and Khot’s long code test whereas our results for Minimum k-Cut and Densest At-Least-k-Subgraph are shown via elementary reductions.

1. Introduction

Since the PCP theorem was proved two decades ago [1,2], our understanding of the approximability of combinatorial optimization problems has grown enormously; tight inapproximability results have been obtained for fundamental problems such as Max-3SAT [3], Max Clique [4] and Set Cover [5,6]. Yet, for other problems, including Vertex Cover and Max Cut, known NP-hardness of approximation results fall short of matching the best known algorithms.

Khot’s introduction of the Unique Games Conjecture (UGC) [7] propelled another wave of development in hardness of approximation that saw many of these open problems resolved (see e.g., [8,9]). Alas, some problems continue to elude even attempts at proving UGC-hardness of approximation. For a class of such problems, the failure stems from the fact that typical reductions are local in nature; many reductions from unique games to graph problems could produce disconnected graphs. If we try to use such reductions for problems that involve some forms of expansion of graphs (e.g., Sparsest Cut), we are out of luck.

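One approach to overcome the aforementioned issue is through the Small Set Expansion Hypothesis (SSEH) of Raghavendra and Steurer [10]. To describe the hypothesis, let us introduce some notation. Throughout the paper, we represent an undirected edge-weighted graph $G = (V, E, w)$ by a vertex set V, an edge set E and a weight function $w: E \to \mathbb{R}_{\geq 0}$. We call G d-regular if $\sum_{v: (u, v) \in E} w(u, v) = d$ for every $u \in V$. For a d-regular weighted graph G, the edge expansion $\Phi(S)$ of $S \subseteq V$ is defined as

$$\Phi(S) = \frac{E(S, V \setminus S)}{d \min\{|S|, |V \setminus S|\}}$$

where $E(S, V \setminus S)$ is the total weight of edges across the cut $(S, V \setminus S)$. The small set expansion problem SSE($\delta, \eta$), where $\eta, \delta$ are two parameters that lie in (0, 1), can be defined as follows.

Definition 1

(SSE($\delta, \eta$)). Given a regular edge-weighted graph $G = (V, E, w)$, distinguish between:

- (Completeness) There exists $S \subseteq V$ of size $\delta|V|$ such that $\Phi(S) \leq \eta$.

- (Soundness) For every $S \subseteq V$ of size $\delta|V|$, $\Phi(S) \geq 1 - \eta$.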
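To make the quantity in Definition 1 concrete, the following is a minimal sketch that computes the edge expansion of a vertex subset in a small regular weighted graph. It assumes the usual normalization (cut weight divided by d times the size of the smaller side); the function name `edge_expansion` and the dictionary encoding of the graph are illustrative choices and do not come from the paper.

```python
def edge_expansion(weights, vertices, subset):
    """Edge expansion of `subset` in a d-regular weighted graph.

    `weights` maps frozenset({u, v}) to the weight of edge {u, v}.
    Assumed normalization: cut weight / (d * min(|S|, |V \\ S|)).
    """
    s = set(subset)
    # Weighted degree of an arbitrary vertex; regularity makes this well defined.
    v0 = next(iter(vertices))
    d = sum(w for e, w in weights.items() if v0 in e)
    # Total weight of edges with exactly one endpoint in the subset.
    cut = sum(w for e, w in weights.items() if len(e & s) == 1)
    return cut / (d * min(len(s), len(vertices) - len(s)))


# Hypothetical example: a 4-cycle with unit weights (2-regular).
V = [0, 1, 2, 3]
W = {frozenset({i, (i + 1) % 4}): 1.0 for i in range(4)}
phi_adjacent = edge_expansion(W, V, {0, 1})  # two of the four edges leave {0, 1}
phi_opposite = edge_expansion(W, V, {0, 2})  # all four edges leave {0, 2}
```

In this toy graph the set {0, 2} expands perfectly while {0, 1} does not, which is the kind of contrast the completeness and soundness cases formalize for small sets.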

Roughly speaking, SSEH asserts that it is NP-hard to distinguish between a graph that has a small non-expanding subset of vertices and one in which all small subsets of vertices have almost perfect edge expansion. More formally, the hypothesis can be stated as follows.

Conjecture 1

(SSEH [10]). For every $\eta > 0$, there is $\delta = \delta(\eta) > 0$ such that SSE($\delta, \eta$) is NP-hard.

Interestingly, SSEH not only implies UGC [10], but it is also equivalent to a strengthened version of the latter, in which the graph is required to have almost perfect small set expansion [11].

Since its proposal, SSEH has been used as a starting point for inapproximability of many problems whose hardness is not otherwise known. Most relevant to us is the work of Raghavendra, Steurer and Tulsiani (henceforth RST) [11] who devised a technique that exploited structures of SSE instances to avoid locality in reductions. In doing so, they obtained inapproximability of Minimum Bisection, Minimum Balanced Separator, and Minimum Linear Arrangement, all of which are not known to be hard to approximate under UGC.

1.1. Maximum Edge Biclique and Maximum Balanced Biclique

Our first result is an adaptation of RST technique to prove inapproximability of Maximum Edge Biclique (MEB) and Maximum Balanced Biclique (MBB). For both problems, the input is a bipartite graph. The goal for the former is to find a complete bipartite subgraph that contains as many edges as possible whereas, for the latter, the goal is to find a balanced complete bipartite subgraph that contains as many vertices as possible.
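The two objectives can be compared on a tiny instance with a brute-force search. This is only an illustrative sketch (exponential time, hypothetical function names), not an algorithm from the paper; it reports the largest number of edges in a biclique and the largest t such that a balanced biclique with t vertices per side exists.

```python
from itertools import combinations


def is_biclique(edges, left_part, right_part):
    """True if every left/right pair in the two parts is an edge."""
    return all((u, v) in edges for u in left_part for v in right_part)


def brute_force_bicliques(left, right, edges):
    """Return (max edge count, max balanced side size) by exhaustive search.

    Exponential time; intended only for tiny illustrative instances.
    """
    best_edges, best_balanced = 0, 0
    for a in range(1, len(left) + 1):
        for A in combinations(left, a):
            for b in range(1, len(right) + 1):
                for B in combinations(right, b):
                    if is_biclique(edges, A, B):
                        best_edges = max(best_edges, a * b)
                        if a == b:
                            best_balanced = max(best_balanced, a)
    return best_edges, best_balanced


# Hypothetical instance: "a" sees x, y, z while "b" sees only x.
example_edges = {("a", "x"), ("a", "y"), ("a", "z"), ("b", "x")}
example_result = brute_force_bicliques(["a", "b"], ["x", "y", "z"], example_edges)
```

Here the best edge biclique is the star around "a" with three edges, whereas the best balanced biclique is a single edge, so the two optima can be attained by different subgraphs.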

Both problems are NP-hard. MBB was stated (without proof) to be NP-hard in Garey and Johnson’s seminal book (p. 196 [12]); several proofs of this exist such as one provided in [13]. For MEB, it was proved to be NP-hard more recently by Peeters [14]. Unfortunately, much less is known when it comes to approximability of both problems. Similar to Maximum Clique, folklore algorithms give approximation ratio for both MBB and MEB, and no better algorithm is known. However, not even NP-hardness of approximation of some constant ratio is known for the problems. This is in stark contrast to Maximum Clique for which strong inapproximability results are known [4,15,16,17]. Fortunately, the situation is not completely hopeless as the problems are known to be hard to approximate under stronger complexity assumptions.

Feige [18] showed that, assuming that random 3SAT formulae cannot be refuted in polynomial time, both problems cannot be approximated to within of the optimum in polynomial time for some . (While Feige only stated this for MBB, the reduction clearly works for MEB too.) Later, Feige and Kogan [19] proved ratio inapproximability for both problems for some , assuming that 3SAT ∉ DTIME() for some . Moreover, Khot [20] showed, assuming 3SAT ∉ BPTIME() for some , that no polynomial time algorithm achieves -approximation for MBB for some . Ambühl et al. [21] subsequently built on Khot’s result and showed a similar hardness for MEB. Recently, Bhangale et al. [22] proved that both problems are hard to approximate to within factor for every , assuming a certain strengthened version of UGC and NP ≠ BPP. (In [22], the inapproximability ratio is only claimed to be for some . However, it is not hard to see that their result in fact implies factor hardness of approximation as well.) In addition, while not stated explicitly, the author’s recent reduction for Densest k-Subgraph [23] yields ratio inapproximability for both problems under the Exponential Time Hypothesis [24] (3SAT ∉ DTIME()) and this ratio can be improved to for any under the stronger Gap Exponential Time Hypothesis [25,26] (no time algorithm can distinguish a fully satisfiable 3SAT formula from one which is only -satisfiable for some ); these ratios are better than those in [19] but worse than those in [20,21,22].

Finally, it is worth noting that, assuming the Planted Clique Hypothesis [27,28] (no polynomial time algorithm can distinguish between a random graph and one with a planted clique of size ), it follows (by partitioning the vertex set into two equal sets and deleting all the edges within each partition) that Maximum Balanced Biclique cannot be approximated to within ratio in polynomial time. Interestingly, this does not give any hardness for Maximum Edge Biclique, since the planted clique has only edges, which is less than that in a trivial biclique consisting of any vertex and all of its neighbors.

In this work, we prove strong inapproximability results for both problems, assuming SSEH:

Theorem 1.

Assuming the Small Set Expansion Hypothesis, there is no polynomial time algorithm that approximates MEB or MBB to within $n^{1-\varepsilon}$ factor of the optimum for every $\varepsilon > 0$, unless NP ⊆ BPP.

We note that the only part of the reduction that is randomized is the gap amplification via randomized graph product [29,30]. If one is willing to assume only that NP ≠ P (and SSEH), our reduction still implies that both are hard to approximate to within any constant factor.

Only Bhangale et al.’s result [22] and our result achieve the inapproximability ratio of for every ; all other results achieve at most ratio for some . Moreover, only Bhangale et al.’s reduction and ours are candidate NP-hardness reductions, whereas each of the other reductions either uses superpolynomial time [19,20,21,23] or relies on an average-case assumption [18]. It is also worth noting here that, while both Bhangale et al.’s result and our result are based on assumptions which can be viewed as stronger variants of UGC, the two assumptions are incomparable and, to the best of our knowledge, Bhangale et al.’s technique does not apply to SSEH. A discussion on the similarities and differences between the two assumptions can be found in Appendix C.

Along the way, we prove inapproximability of the following hypergraph bisection problem, which may be of independent interest: given a hypergraph find a bisection of such that the number of uncut hyperedges is maximized. ( is a bisection of if and .) We refer to this problem as Max UnCut Hypergraph Bisection (MUCHB). Roughly speaking, we show that, assuming SSEH, it is hard to distinguish a hypergraph whose optimal bisection cuts only fraction of hyperedges from one in which every bisection cuts all but fraction of hyperedges:

Lemma 1.

Assuming the Small Set Expansion Hypothesis, for every , it is NP-hard to, given a hypergraph , distinguish between the following two cases:

- (Completeness) There is a bisection of s.t. .

- (Soundness) For every set of size at most , .

Here denotes the set of hyperedges that lie completely inside of the set .
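The MUCHB objective is easy to evaluate for a given bisection. The sketch below counts hyperedges that lie completely on one side; the function name and the encoding of hyperedges as Python sets are assumptions made for illustration, and hyperedges are assumed to be non-empty.

```python
def uncut_hyperedges(hyperedges, side0):
    """Count hyperedges lying entirely on one side of a bisection.

    `hyperedges` is a list of non-empty vertex sets; `side0` is one side of
    the bisection, and the other side is implicitly its complement.
    """
    side0 = set(side0)
    inside0 = sum(1 for e in hyperedges if set(e) <= side0)
    inside1 = sum(1 for e in hyperedges if not (set(e) & side0))
    return inside0 + inside1


# Hypothetical hypergraph on vertices 0..3.
H = [{0, 1}, {2, 3}, {1, 2}, {0, 1, 2}]
uncut_good = uncut_hyperedges(H, {0, 1})  # {0, 1} and {2, 3} stay uncut
uncut_bad = uncut_hyperedges(H, {0, 2})   # every hyperedge is cut
```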

Our result above is similar to Khot’s quasi-random PCP [20]. Specifically, Khot’s quasi-random PCP can be viewed as a hardness for MUCHB in the setting where the hypergraph is d-uniform; roughly speaking, Khot’s result states that it is hard (if 3SAT ∉ BPTIME()) to distinguish between a d-uniform hypergraph where fraction of hyperedges are uncut in the optimal bisection from one where roughly fraction of hyperedges are uncut in any bisection. Note that the latter is the fraction of uncut hyperedges in random d-uniform hypergraphs and hence the name “quasi-random”. In this sense, Khot’s result provides better soundness at the expense of worse completeness compared to Lemma 1.

1.2. Minimum k-Cut

In addition to the above biclique problems, we prove an inapproximability result for the Minimum k-Cut problem, in which a weighted graph is given and the goal is to find a set of edges with minimum total weight whose removal partitions the graph into (at least) k connected components. The Minimum k-Cut problem has long been studied. When $k = 2$, the problem can be solved in polynomial time simply by solving Minimum $s$-$t$ cut for every possible pair of s and t. In fact, for any fixed k, the problem was proved to be in P by Goldschmidt and Hochbaum [31], who also showed that, when k is part of the input, the problem is NP-hard. To circumvent this, Saran and Vazirani [32] devised two simple polynomial time $(2 - 2/k)$-approximation algorithms for the problem. In the ensuing years, different approximation algorithms [33,34,35,36,37] have been proposed for the problem, none of which is able to achieve an approximation ratio of $(2 - \varepsilon)$ for some $\varepsilon > 0$ in polynomial time. In fact, Saran and Vazirani themselves conjectured that $(2 - \varepsilon)$-approximation is intractable for the problem [32]. In this work, we show that their conjecture is indeed true, if the SSEH holds:

Theorem 2.

Assuming the Small Set Expansion Hypothesis, it is NP-hard to approximate Minimum k-Cut to within $(2 - \varepsilon)$ factor of the optimum for every constant $\varepsilon > 0$.

Note that the problem was claimed to be APX-hard in [32]. However, to the best of our knowledge, the proof has never been published and no other inapproximability is known.

1.3. Densest At-Least-k-Subgraph

Our final result is a hardness of approximating the Densest At-Least-k-Subgraph (DALkS) problem, which can be stated as follows. Given an edge-weighted graph, find a subset S of at least k vertices such that the induced subgraph on S has maximum density, which is defined as the ratio between the total weight of edges and the number of vertices. The problem was first introduced by Andersen and Chellapilla [38] who also gave a 3-approximation algorithm for the problem. Shortly after, 2-approximation algorithms for the problem were discovered by Andersen [39] and independently by Khuller and Saha [40]. We show that, assuming SSEH, this approximation guarantee is essentially the best we can hope for:

Theorem 3.

Assuming the Small Set Expansion Hypothesis, it is NP-hard to approximate Densest At-Least-k-Subgraph to within $(2 - \varepsilon)$ factor of the optimum for every constant $\varepsilon > 0$.

After our manuscript was made available online, we were informed that Theorem 3 was also proved independently by Bergner [41]. To the best of our knowledge, this is the only known hardness of approximation result for DALkS. We remark that DALkS is a variant of the Densest k-Subgraph (DkS) problem, which is the same as DALkS except that the desired set S must have size exactly k. DkS has been extensively studied dating back to the early 90s [10,18,20,23,42,43,44,45,46,47,48,49,50,51,52]. Despite these considerable efforts, its approximability is still wide open. In particular, even though lower bounds have been shown under stronger complexity assumptions [10,18,20,23,50,52] and for LP/SDP hierarchies [49,53,54], not even constant factor NP-hardness of approximation for DkS is known. On the other hand, the best polynomial time algorithm for DkS achieves only -approximation [49]. Since any inapproximability result for DALkS translates directly to DkS, even proving some constant factor NP-hardness of approximating DALkS would advance our understanding of approximability of DkS.
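The difference between the "at least k" and "exactly k" constraints can be seen on a toy instance. The following brute-force sketch is only illustrative (exponential time, hypothetical names); it uses the density defined above, namely the total weight of edges inside the set divided by the number of vertices in the set.

```python
from itertools import combinations


def density(weights, subset):
    """Total weight of edges inside `subset` divided by |subset|."""
    s = set(subset)
    inside = sum(w for (u, v), w in weights.items() if u in s and v in s)
    return inside / len(s)


def best_density(vertices, weights, k, exactly=False):
    """Brute-force optimum over subsets of size >= k (or == k if `exactly`)."""
    sizes = [k] if exactly else range(k, len(vertices) + 1)
    return max(density(weights, S)
               for size in sizes
               for S in combinations(vertices, size))


# Hypothetical instance: a unit-weight triangle plus an isolated vertex.
V = [0, 1, 2, 3]
W = {(0, 1): 1.0, (1, 2): 1.0, (0, 2): 1.0}
dalks_opt = best_density(V, W, 2)               # may use the whole triangle
dks_opt = best_density(V, W, 2, exactly=True)   # restricted to two vertices
```

With k = 2, the at-least-k version picks the triangle (density 1), while the exactly-k version can only pick a single edge (density 1/2).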

2. Inapproximability of Minimum k-Cut

We now proceed to prove our main results. Let us start with the simplest: Minimum k-Cut.

Proof of Theorem 2.

The reduction from SSE() to Minimum k-Cut is simple; the graph G remains the input graph for Minimum k-Cut and we let where .

Completeness. If there is of size such that , then we partition the graph into k groups where the first group is and each of the other groups contains one vertex from S. The edges cut are the edges across the cut and the edges within the set S itself. The total weight of edges of the former type is and that of the latter type is at most . Hence, the total weight of edges cut in this partition is at most .
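The partition used in the completeness case can be checked numerically on a small graph. This is a minimal sketch with hypothetical helper names; it builds the partition described above (the complement of S as one group, plus one singleton group per vertex of S) and sums the weight of the edges it cuts.

```python
def cut_weight(weights, parts):
    """Total weight of edges whose endpoints lie in different parts."""
    part_of = {v: i for i, part in enumerate(parts) for v in part}
    return sum(w for (u, v), w in weights.items() if part_of[u] != part_of[v])


def completeness_partition(vertices, subset):
    """V \\ S as one group, plus one singleton group per vertex of S."""
    s = set(subset)
    rest = [v for v in vertices if v not in s]
    return [rest] + [[v] for v in subset]


# Hypothetical example: unit-weight 4-cycle 0-1-2-3-0 with S = {0, 1}.
W = {(0, 1): 1.0, (1, 2): 1.0, (2, 3): 1.0, (3, 0): 1.0}
parts = completeness_partition([0, 1, 2, 3], [0, 1])
total_cut = cut_weight(W, parts)
```

In this example the cut edges are the two edges crossing between S and its complement plus the one edge inside S, matching the two edge types counted in the completeness argument.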

Soundness. Suppose that, for every of size , . Let be any k-partition of the graph. Assume without loss of generality that . Let where i is the maximum index such that .

We claim that . To see that this is the case, suppose for the sake of contradiction that . Since , we have . Moreover, since , we have . As a result, we have , which is a contradiction. Hence, .

Now, note that, for every of size , implies that where denotes the total weight of all edges within S. Since , we also have . As a result, the total weight of edges across the cut , all of which are cut by the partition, is at least

For every sufficiently small constant , by setting and , the ratio between the two cases is at least , which concludes the proof of Theorem 2. ☐

3. Inapproximability of Densest At-Least-k-Subgraph

We next prove our inapproximability result for Densest At-Least-k-Subgraph, which is also very simple. For this reduction and the subsequent reductions, it will be more convenient for us to use a different (but equivalent) formulation of SSEH. To state it, we first define a variant of SSE() called SSE(); the completeness remains the same whereas the soundness is strengthened to include all S of size in .

Definition 2

(SSE()). Given a regular edge-weighted graph , distinguish between:

- (Completeness) There exists of size such that .

- (Soundness) For every with , .

The new formulation of the hypothesis can now be stated as follows.

Conjecture 2.

For every , there is such that SSE is NP-hard.

Raghavendra et al. [11] showed that this formulation is equivalent to the earlier formulation (Conjecture 1); please refer to Appendix A.2 of [11] for a simple proof of this equivalence.

Proof of Theorem 3.

Given an instance of SSE(), we create an input graph for Densest At-Least-k-Subgraph as follows. consists of all the vertices in V and an additional vertex . The weight function remains the same as w for all edges in V whereas has only a self-loop with weight . (If we would like to avoid self-loops, we can replace with two vertices with an edge of weight between them.) In other words, and . Finally, let where .

Completeness. If there is of size such that , consider the set . We have and the density of is where denotes the total weight of edges within S. This can be written as

Soundness. Suppose that for every of size . Consider any set of size at least k. Let and let denote the total weight of edges within T. Observe that the density of S is at most . Let us consider the following two cases.

- . In this case, and we have

- . In this case, we have

Hence, in both cases, the density of is at most .

For every sufficiently small constant , by picking and , the ratio between the two cases is at least , concluding the proof of Theorem 3. ☐

4. Inapproximability of MEB and MBB

Let us now turn our attention to MEB and MBB. First, note that we can reduce MUCHB to MEB/MBB by just letting the two sides of the bipartite graph be and creating an edge iff . This immediately shows that Lemma 1 implies the following:

Lemma 2.

Assuming SSEH, for every , it is NP-hard to, given a bipartite graph with , distinguish between the following two cases:

- (Completeness) G contains as a subgraph.

- (Soundness) G does not contain as a subgraph.

Here denotes the complete bipartite graph in which each side contains t vertices.
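The reduction from MUCHB to the biclique problems sketched at the start of this section can be written in a few lines. The construction below is an assumption about the elided details: it takes both sides of the bipartite graph to be copies of the hyperedge set and joins two hyperedges exactly when they are disjoint. The function name is hypothetical.

```python
def muchb_to_bipartite(hyperedges):
    """Build the bipartite graph on two copies of the hyperedge set.

    Assumed rule: the left copy of e1 is adjacent to the right copy of e2
    exactly when e1 and e2 share no vertex. Edges are index pairs (i, j).
    """
    sets = [frozenset(e) for e in hyperedges]
    return {(i, j)
            for i, e1 in enumerate(sets)
            for j, e2 in enumerate(sets)
            if not (e1 & e2)}


# Hypothetical hypergraph: only {0, 1} and {2, 3} are disjoint.
bip_edges = muchb_to_bipartite([{0, 1}, {2, 3}, {1, 2}])
```

Under this rule, the hyperedges inside one side of a bisection and those inside the other side form a biclique, since any two such hyperedges are disjoint.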

We provide the full proof of Lemma 2 in Appendix A. We also note that Theorem 1 follows from Lemma 2 by gap amplification via randomized graph product [29,30]. Since this has been analyzed before even for biclique (Appendix D [20]), we defer the full proof to Appendix B.

We are now only left to prove Lemma 1; we devote the rest of this section to this task.

4.1. Preliminaries

Before we continue, we need additional notations and preliminaries. For every graph and every vertex v, we write to denote the distribution on its neighbors weighted according to w. (That is, is if and is zero otherwise.) Moreover, we sometimes abuse the notation and write to denote a random edge of G weighted according to w.

While our reduction can be understood without the notion of unique games, it is best described in the context of unique games reductions. We provide a definition of unique games below.

Definition 3

(Unique Game (UG)). A unique game instance consists of an edge-weighted graph , a label set , and, for each , a permutation . The goal is to find an assignment such that is maximized; we call an edge such that satisfied.
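As an illustration of Definition 3, the value of an assignment can be computed directly. The sketch below is hedged: the direction of the permutation constraint (apply the permutation to the label of the first endpoint and compare with the label of the second) is an assumed convention, and the function name and data layout are hypothetical.

```python
def ug_value(constraints, assignment):
    """Weighted fraction of satisfied edges of a unique game.

    `constraints` maps an edge (u, v) to a pair (weight, pi), where pi is a
    permutation of the label set given as a dict. Assumed convention:
    edge (u, v) is satisfied when pi[assignment[u]] == assignment[v].
    """
    total = sum(w for w, _ in constraints.values())
    satisfied = sum(w for (u, v), (w, pi) in constraints.items()
                    if pi[assignment[u]] == assignment[v])
    return satisfied / total


# Hypothetical instance: a triangle over labels {0, 1} with one "swap" edge.
identity = {0: 0, 1: 1}
swap = {0: 1, 1: 0}
game = {("a", "b"): (1.0, identity),
        ("b", "c"): (1.0, identity),
        ("c", "a"): (1.0, swap)}
value = ug_value(game, {"a": 0, "b": 0, "c": 0})
```

In this toy game the all-zero assignment satisfies the two identity constraints and violates the swap constraint.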

Khot’s Unique Games Conjecture (UGC) [7] states that, for every , it is NP-hard to distinguish between a unique game in which there exists an assignment satisfying at least fraction of edges from one in which every assignment satisfies at most fraction of edges.

Finally, we need some preliminaries in discrete Fourier analysis. We state here only the few facts that we need. We refer interested readers to [55] for more details about the topic.

For any discrete probability space , can be written as where is the product Fourier basis of (see [55] (Chapter 8.1)). The degree-d influence on the j-th coordinate of f is where . It is well known that (see [56] (Proposition 3.8)).
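For intuition, low-degree influences can be computed by brute force in the simplest special case. The sketch below assumes the uniform measure on {-1, 1}^n with the parity basis, which is only one instance of the general product spaces considered here; the function names are hypothetical. The low-degree influence of coordinate j is taken to be the sum of squared Fourier coefficients over sets of size at most d that contain j.

```python
from itertools import combinations, product


def fourier_coefficients(f, n):
    """Brute-force coefficients of f: {-1, 1}^n -> R in the parity basis."""
    points = list(product([-1, 1], repeat=n))
    coeffs = {}
    for size in range(n + 1):
        for S in combinations(range(n), size):
            total = 0.0
            for x in points:
                chi = 1
                for i in S:
                    chi *= x[i]
                total += f(x) * chi
            coeffs[S] = total / len(points)
    return coeffs


def low_degree_influence(coeffs, j, d):
    """Sum of squared coefficients over sets containing j of size <= d."""
    return sum(c * c for S, c in coeffs.items() if j in S and len(S) <= d)


# Hypothetical example: a dictator on coordinate 0 with values in {0, 1}.
coeffs = fourier_coefficients(lambda x: (1 + x[0]) / 2, 3)
influences = [low_degree_influence(coeffs, j, 1) for j in range(3)]
```

For the dictator, only coordinate 0 has non-zero influence, and the influences sum to at most d, consistent with the bound cited above.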

We also need the following theorem. It follows easily from the so-called “It Ain’t Over Till It’s Over” conjecture, which is by now a theorem ([56] (Theorem 4.9)). For more details on how this version follows from there, please refer to [57] (p. 769).

Theorem 4

([56]). For any , there exists and such that, if any functions where Ω is a probability space whose probability of each atom is at least β satisfy

then

where is a random subset of where each is included independently w.p. , and is a shorthand for .

We remark that the constant 0.99 in Theorem 4 could be replaced by any constant less than one; we use it only to avoid introducing more parameters.

4.2. Bansal-Khot Long Code Test and A Candidate Reduction

Theorem 4 leads us nicely to the Bansal-Khot long code test [58]. For UGC hardness reductions, one typically needs a long code test (aka dictatorship gadget) which, on input , has the following properties:

- (Completeness) If is a long code, the test accepts with large probability. (A long code is simply j-junta (i.e. a function that depends only on the ) for some .)

- (Soundness) If are balanced (i.e. ) and are “far from being a long code”, then the test accepts with low probability. A widely used notion of “far from being a long code”, and one we will use here, is that the functions do not share a coordinate with large low degree influences, i.e., for every and every , at least one of and is small.

Bansal-Khot long code test works by first picking and . Then, test whether evaluates to 1 on the whole . This can be viewed as an “algorithmic” version of Theorem 4; specifically, the theorem (with ) immediately implies the soundness property of this test. On the other hand, it is obvious that, if is a long code, then the test accepts with probability .
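The following sketch simulates a subcube test of this flavor exactly on a small cube. It rests on several simplifying assumptions that are not taken from the text: a single function on uniform bits {0, 1}^R, a random set D that contains each coordinate independently with probability `eps`, and acceptance when the function equals 1 on every point that agrees with x outside D. All names are hypothetical.

```python
from itertools import product


def subcube(x, D):
    """All points that agree with x outside the coordinate set D."""
    free = sorted(D)
    for bits in product([0, 1], repeat=len(free)):
        y = list(x)
        for i, b in zip(free, bits):
            y[i] = b
        yield tuple(y)


def acceptance_probability(f, R, eps):
    """Exact acceptance probability by enumerating all x and all D."""
    prob = 0.0
    for x in product([0, 1], repeat=R):
        for mask in product([0, 1], repeat=R):
            D = [i for i in range(R) if mask[i]]
            p_D = 1.0
            for m in mask:
                p_D *= eps if m else 1 - eps
            if all(f(y) == 1 for y in subcube(x, D)):
                prob += p_D / 2 ** R
    return prob


# A dictator on coordinate 0 passes when x[0] = 1 and 0 is not in D.
p_dictator = acceptance_probability(lambda y: y[0], 3, 0.25)
p_zero = acceptance_probability(lambda y: 0, 3, 0.25)
```

Under these assumptions a dictator is accepted with probability (1 - eps)/2, which is 0.375 for eps = 0.25, while the constant-zero function is never accepted.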

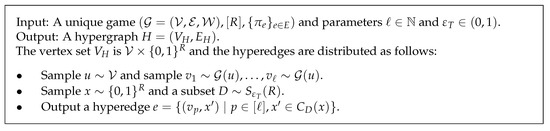

Bansal and Khot used this test to prove tight hardness of approximation of Vertex Cover. The reduction is via a natural composition of the test with unique games. Their reduction also gives a candidate reduction from UG to MUCHB, which is stated below in Figure 1.

Figure 1.

A Candidate Reduction from UG to MUCHB.

As is typical for gadget reductions, for , we view the indicator function for each as the intended long code. If there exists an assignment to the unique game instance that satisfies nearly all the constraints, then the bisection corresponding to cuts only a small fraction of edges, which yields the completeness of MUCHB.

As for the soundness, we want to decode a UG assignment from of size at most which contains at least fraction of hyperedges. In terms of the tests, this corresponds to a collection of functions such that and the Bansal-Khot test on passes with probability at least where are sampled as in Figure 1. Now, if we assume that for all , then such decoding is possible via a method similar to that of [58] since Theorem 4 can be applied here.

Unfortunately, the assumption does not hold for an arbitrary and the soundness property indeed fails. For instance, imagine the constraint graph of the starting UG instance consisting of two disconnected components of equal size; let be the set of vertices in the two components. In this case, the bisection does not even cut a single edge! This is regardless of whether there exists an assignment to the UG that satisfies a large fraction of edges.

4.3. RST Technique and The Reduction from SSE to MUCHB

The issue described above is common for graph problems that involve some form of expansion of the graph. The RST technique [11] was in fact invented specifically to circumvent this issue. It works by first reducing SSE to UG and then exploiting the structure of the constructed UG instance when composing it with a long code test; this allows them to avoid extreme cases such as the one above. There are four parameters in the reduction: and such that R is divisible by k. Before we describe the reduction, let us define additional notation:

- Let denote the R-tensor graph of ; the vertex set of is and, for every , the edge weight between is the product of in G for all .

- For each , denote the distribution on where the i-th coordinate is set to with probability and is uniformly randomly sampled from V otherwise.

- Let denote the set of all permutations ’s of such that, for each , .

- Let denote the probability space such that the probability for are both and the probability for ⊥ is .
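Two of the objects defined above, the tensor graph weights and the noise distribution on tuples, can be sketched as follows. The parameter name `keep_prob` stands in for the elided probability and is a hypothetical name, as are the function names; the example graph is an illustrative choice.

```python
import random


def tensor_weight(weight, A, B):
    """Weight between tuples A and B: product of coordinate-wise weights."""
    result = 1.0
    for a, b in zip(A, B):
        result *= weight(a, b)
    return result


def noisy_copy(A, vertices, keep_prob, rng):
    """Keep each coordinate with probability keep_prob, else resample it
    uniformly from `vertices` (keep_prob is a hypothetical parameter name)."""
    return tuple(a if rng.random() < keep_prob else rng.choice(vertices)
                 for a in A)


def cycle_weight(u, v):
    """Unit weights on the 4-cycle 0-1-2-3-0 (hypothetical example graph)."""
    return 1.0 if (u - v) % 4 in (1, 3) else 0.0


w_edge = tensor_weight(cycle_weight, (0, 1), (1, 2))     # both coordinates adjacent
w_non_edge = tensor_weight(cycle_weight, (0, 1), (2, 2)) # 0 and 2 are not adjacent
unchanged = noisy_copy((0, 1, 2), [0, 1, 2, 3], 1.0, random.Random(0))
```

A pair of tuples receives positive weight in the tensor graph only if every coordinate pair is an edge of the base graph.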

The first step of reduction takes an SSE() instance and produces a unique game where and the edges are distributed as follows:

- Sample and .

- Sample and .

- Sample two random .

- Output an edge with .

Here is a small constant, k is large and should be thought of as . When there exists a set of size with small edge expansion, the intended assignment is to, for each , find the first block such that where denotes the multiset and let be the coordinate of the vertex in that intersection. If no such j exists, we assign arbitrarily. Note that, since , is constant, which means that only fraction of vertices are assigned arbitrarily. Moreover, it is not hard to see that, for the other vertices, their assignments rarely violate constraints as and are small. This yields the completeness. In addition, the soundness was shown in [10,11], i.e., if every of size has near perfect expansion, no assignment satisfies many constraints in (see Lemma 7).

The second step is to reduce this UG instance to a hypergraph . Instead of making the vertex set as in the previous candidate reduction, we will instead make where and is a small constant. This does not seem to make much sense from the UG reduction standpoint because we typically want to assign which side of the bisection is according to but could be ⊥ in this construction. However, it makes sense when we view this as a reduction from SSE directly: let us discard all coordinates i’s such that and define . If there exists a block such that , then let be the first such block (i.e. ), let be the coordinate in the intersection between and S, and assign to . (Recall that is the intended bisection.) Otherwise, if no such block exists, assign arbitrarily.

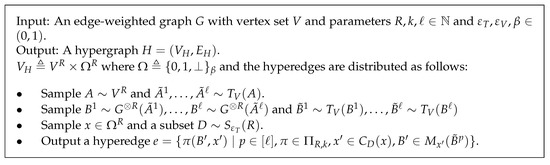

Observe that, in the intended solution, the side that is assigned to does not change if (1) is modified for some s.t. or (2) we apply some permutation to both A and x. In other words, we can “merge” two vertices and that are equivalent through these changes together in the reduction. For notational convenience, instead of merging vertices, we will just modify the reduction so that, if is included in some hyperedge, then every reachable from by these operations is also included in the hyperedge. More specifically, if we define corresponding to the first operation, then we add to the hyperedge for every and . The full reduction is shown in Figure 2.

Figure 2.

Reduction from SSE to Max UnCut Hypergraph Bisection.

Note that the test we apply here is slightly different from Bansal-Khot test as our test is on instead of used in [58]. Another thing to note is that now our vertices and hyperedges are weighted, the vertices according to the product measure of and the edges according to the distribution produced from the reduction. We write to denote the measure on the vertices, i.e., for , , and we abuse the notation and use it to denote the probability that a hyperedge as generated in Figure 2 lies completely in T. We note here that, while the MUCHB as stated in Lemma 1 is unweighted, it is not hard to see that we can go from weighted version to unweighted by copying each vertex and each edge proportional to their weights. (Note that this is doable since we can pick to be rational.)
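The remark about passing from the weighted to the unweighted version can be illustrated with a small helper that turns rational weights into integer copy counts. This is only a sketch of the counting step under the stated rationality assumption; the function name is hypothetical, and it does not construct the copied hypergraph itself.

```python
from fractions import Fraction
from math import lcm


def copies_from_weights(weights):
    """Integer copy counts proportional to rational weights.

    `weights` maps each item (vertex or hyperedge) to a Fraction.
    """
    # Scaling by the least common multiple of the denominators clears fractions.
    common = lcm(*(w.denominator for w in weights.values()))
    return {item: int(w * common) for item, w in weights.items()}


# Hypothetical weights summing to one.
copies = copies_from_weights({"a": Fraction(1, 2),
                              "b": Fraction(1, 3),
                              "c": Fraction(1, 6)})
```

The resulting counts (3, 2 and 1 copies) preserve the ratios between the original weights.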

The advantage of this reduction is that the vertex “merging” makes gadget reduction non-local; for instance, it is clear that even if the starting graph V has two connected components, the resulting hypergraph is now connected. In fact, Raghavendra et al. [11] show a much stronger quantitative bound. To state this, let us consider any with . From how the hyperedges are defined, we can assume w.l.o.g. that, if , then for every and every . Again, let . The following bound on the variance of is implied by the proof of Lemma 6.6 in [11]:

The above bound implies that, for most A’s, the mean of cannot be too large. This will indeed allow us to ultimately apply Theorem 4 on a certain fraction of the tuples in the reduction, which leads to a UG assignment with non-negligible value.

4.4. Completeness

In the completeness case, we define a bisection similar to that described above. This bisection indeed cuts only a small fraction of hyperedges; quantitatively, this yields the following lemma.

Lemma 3.

If there is a set such that and where , then there is a bisection of such that where and hide only absolute constants.

Proof.

Suppose that there exists of size where and . For , we will use the following notations throughout this proof:

- For , let denote the set of all coordinates i in j-th block such that and , i.e., .

- Let denote the first block j with , i.e., . Note that if such block does not exist, we set .

- Let be the only element in . If , let .

To define , we start by constructing and as follows: assign each such that to . Finally, we assign the rest of the vertices arbitrarily to and in such a way that . Since , it suffices to show the desired bound for . Due to symmetry, it suffices to bound . Recall that where e is generated as detailed in Figure 2.

To compute , it will be most convenient to make a block-by-block analysis. In particular, for each block , we define to denote the event that for some . We will be interested in bounding the following conditional probabilities:

Here and throughout the proof, are as sampled by our reduction in Figure 2. Note also that it is clear that do not depend on j.

Before we bound , let us see how these probabilities can be used to bound .

The probability that is in fact simply

Moreover, can be written as

Plugging these two back into Equation (1), we have

With Equation (2) in mind, we will proceed to bound . Before we do so, let us state two inequalities that will be useful: for every , we have

and

The first inequality comes from the fact that, for to be in S when , at least one of the following events must occur: (1) and , (2) and , (3) . Each of the first two occurs with probability whereas the last event occurs with probability at most . On the other hand, for the second inequality, at least one of the following events must occur: (1) , (2) , (3) . Each of the first two occurs with probability whereas the last event occurs with probability at most .

Bounding . To compute , observe that is the probability that, for exactly one i in the j-th block, and . For a fixed i, this happens with probability . Hence, . Since , we can conclude that is simply a constant (i.e. ).

Bounding . We next bound . If , we know that . Let us consider the following two cases:

- . Observe that, if occurs, then there exist and such that , and or . For brevity, below we denote the conditional event by E. By union bound, our observation gives the following bound. We can now bound the first term by Consider the other term in Equation (5). We can rearrange it as follows.

- . Let and be two different (arbitrary) elements of . Again, for convenience, we use E to denote the conditional event . Now, let us first split as follows. Observe that, when , for every . Hence, for to occur, there must be such that at least one of is not in S. In other words, Combining this with Equation (8), we have .

As a result, we can conclude that is at most .

Bounding . Finally, let us bound . First, note that the probability that is and that the probability that is . This means that

where E is the event .

Moreover, since , from Equation (4) and from union bound, we have

From the above two inequalities, we have

Conditioned on the above event, implies that there exists and some in this (j-th) block such that , and or . We have bounded an almost identical probability before in the case when we bound . Similarly, here we have an upper bound of on this probability. Hence,

By combining our bounds on with Equation (2), we immediately arrive at the desired bound:

☐

4.5. Soundness

Let us consider any set T such that . We would like to give an upper bound on . From how we define hyperedges, we can assume w.l.o.g. that if and only if for every and . We call such T -invariant.

Let denote the indicator function for T, i.e., if and only if . Note that . Following notation from [11], we write as a shorthand for . In addition, for each , we will write as a shorthand for generated randomly by sampling , and respectively. Let us restate Raghavendra et al.’s [11] lemma regarding the variance of in a more convenient formulation below.

Lemma 4

([11] (Lemma 6.6)). For every , let . We have

Note that Lemma 6.6 in [11] requires a symmetrization of f’s, but we do not need it here since T is -invariant.

To see how the above lemma helps us decode a UG assignment, observe that, if our test accepts on , then it also accepts on any subset of the functions (with the same ); hence, to apply Theorem 4, it suffices that t of the functions have means . We will choose ℓ to be large compared to t. Using the above lemma and a standard tail bound, we can argue that Theorem 4 is applicable for almost all tuples , as stated below.

Lemma 5.

For any positive integer ,

Proof.

First, note that, since , we can use Chebyshev’s inequality and Lemma 4 to arrive at the following bound, which is analogous to Lemma 6.7 in [11]:

Let us call such that bad and the rest of good.

For any good , Markov’s inequality implies that . As a result, an application of the Chernoff bound gives the following inequality.

4.5.1. Decoding a Unique Games Assignment

With Lemma 5 ready, we can now decode a UG assignment via a technique similar to that of [58].

Lemma 6.

For any , let and be as in Theorem 4. For any integer , if there exists of such that and , then there exists such that

Proof.

The decoding procedure is as follows. For each , we construct a set of candidate labels . We generate F randomly by, with probability 1/2, setting to be a random element of and, with probability 1/2, sampling and setting to be a random element from . Note that, if the candidate set is empty, then we simply pick an arbitrary assignment.
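The two-branch sampling in the decoding procedure can be illustrated with a minimal sketch. The candidate sets are taken as given (their defining threshold is not reproduced here), and the names `decode_label`, `sample_neighbor`, `relabel` and `fallback` are hypothetical: `sample_neighbor` is assumed to return a random neighbor together with a map that translates the neighbor's labels into labels of the current vertex.

```python
import random


def decode_label(vertex, candidates, sample_neighbor, fallback, rng):
    """Pick a label for `vertex` following the two-branch rule in the proof.

    candidates      : dict mapping each vertex to its set of candidate labels
    sample_neighbor : hypothetical callable (vertex, rng) -> (neighbor, relabel),
                      where relabel maps the neighbor's labels to labels of `vertex`
    fallback        : arbitrary label used when the chosen candidate pool is empty
    """
    if rng.random() < 0.5:
        # Branch 1: a uniformly random candidate of the vertex itself.
        pool = sorted(candidates.get(vertex, ()))
    else:
        # Branch 2: a uniformly random candidate of a random neighbor,
        # translated back to the label space of `vertex` (an assumption).
        neighbor, relabel = sample_neighbor(vertex, rng)
        pool = sorted(relabel[c] for c in candidates.get(neighbor, ()))
    # An empty candidate pool falls back to an arbitrary label.
    return rng.choice(pool) if pool else fallback
```

When both branches lead to candidate sets that agree after relabeling, the decoded label lands in that common set regardless of which branch is taken, which is the situation the good tuples in the analysis are meant to capture.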

From our assumption that T is -invariant, it follows that, for every and , . In other words, we have

Next, note that, from how our reduction is defined, can be written as

From and from Lemma 5, we can conclude that

From Markov’s inequality, we have

A tuple is said to be good if and . For such a tuple, Theorem 4 implies that there exist s.t. . This means that .

Hence, if we sample a tuple at random, and then sample two different randomly from , then the tuple is good with probability at least and, with probability , we have . This gives the following bound:

Now, observe that and above are distributed in the same way as if we pick both of them independently with respect to . Recall that, with probability 1/2, is a random element of where and, with probability 1/2, is a random element of . Moreover, since the sum of degree d-influence is at most d (Proposition 3.8 [56]), the candidate sets are of sizes at most . As a result, the above bound yields

which, together with Equation (12), concludes the proof of the lemma. ☐

4.5.2. Decoding a Small Non-Expanding Set

To relate our decoded UG assignment back to a small non-expanding set in G, we use the following lemma of [11], which roughly states that, with the right parameters, the soundness case of SSEH implies that only a small fraction of constraints in the UG instance can be satisfied.

Lemma 7

([11] (Lemma 6.11)). If there exists such that

then there exists a set with with .

By combining the above lemma with Lemma 6, we immediately arrive at the following:

Lemma 8.

For any , let and be as in Theorem 4. For any integer and any , if there exists with such that , then there exists a set with with where .

4.6. Putting Things Together

We can now deduce inapproximability of MUCHB by simply picking appropriate parameters.

Proof of Lemma 1.

The parameters are chosen as follows:

- Let , and so that the term in Lemma 3 is .

- Let so that the error term in Lemma 3 is at most .

- Let and be as in Theorem 4.

- Let be as in Lemma 8 and let .

- Let where so that the error term in Lemma 3 is at most .

- Let so that the error term in Lemma 3 is at most .

- Let .

- Finally, let where is the parameter from the SSEH (Conjecture 2).

Let be an instance of SSE() and let be the hypergraph resulting from our reduction. If there exists of size of expansion at most , Lemma 3 implies that there is a bisection of such that .

As for the soundness, Lemma 8 with our choice of parameters implies that, if there exists a set with and , there exists with whose expansion is less than . The contrapositive of this yields the soundness property. ☐

5. Conclusions

In this work, we prove essentially tight inapproximability of MEB, MBB, Minimum k-Cut and DALkS based on SSEH. Our results, especially for the biclique problems, further demonstrate the applications of the hypothesis, and particularly of the RST technique [11], in proving hardness of graph problems that involve some form of expansion. Given that the technique has been employed for only a handful of problems [11,59], an obvious but intriguing research direction is to try to apply the technique to other problems. One plausible candidate problem to this end is the 2-Catalog Segmentation Problem [60], since a natural candidate reduction for this problem fails due to a counterexample similar to the one in Section 4.2.

Another interesting question is whether the graph product used in the gap amplification step for biclique problems can be derandomized. For Maximum Clique, this step has been derandomized before [17,61]; in particular, Zuckerman [17] derandomized Håstad’s result [4] to achieve ratio NP-hardness for approximating Maximum Clique. Without going into too much detail, we would like to note that Zuckerman’s result is based on a construction of dispersers with certain parameters; properties of dispersers then imply soundness of the reduction whereas completeness is trivial from the construction since Håstad’s PCP has perfect completeness. Unfortunately, our PCP does not have perfect completeness and, in order to use Zuckerman’s approach, additional properties are required in order to argue about completeness of the reduction.

Acknowledgments

I am grateful to Prasad Raghavendra for providing his insights on the Small Set Expansion problem and techniques developed in [10,11] and Luca Trevisan for lending his expertise in PCPs with small free bits. I would also like to thank Haris Angelidakis for useful discussions on Minimum k-Cut and Daniel Reichman for inspiring me to work on the two biclique problems. Furthermore, I thank ICALP 2017 anonymous reviewers for their useful comments and, more specifically, for pointing me to [22]. Finally, I wish to thank Aditya Bhaskara for pointing me to Bergner’s thesis [41] and Lilli Bergner for providing me with a copy of the thesis. This material is based upon work supported by the National Science Foundation under Grants No. CCF 1540685 and CCF 1655215.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. Reduction from MUCHB to Biclique Problems

Proof of Lemma 2 from Lemma 1.

The reduction from MUCHB to the two biclique problems is simple. Given a hypergraph , we create a bipartite graph by letting and creating an edge for iff .

Completeness. If there is a bisection of such that , then and induce a complete bipartite subgraph with at least vertices on each side in G.

Soundness. Suppose that, for every of size at most , we have . We will show that G does not contain as a subgraph. Suppose for the sake of contradiction that G contains as a subgraph. Consider one such subgraph; let and be the vertices of the subgraph. From how the edges are defined, and are disjoint. At least one of the two sets is of size at most , assume without loss of generality that . This is a contradiction since contains at least hyperedges .

By picking and , we arrive at the desired hardness result. ☐
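The construction in the proof above can be sketched in a few lines. The sketch assumes that both sides of the bipartite graph are copies of the hyperedge set and that two hyperedges are adjacent exactly when they share no vertex; this reading is suggested by the soundness argument, which uses disjointness of the two sides, but the function names and the input encoding are illustrative assumptions.

```python
from itertools import product


def muchb_to_bipartite(hyperedges):
    """Build the bipartite graph of the reduction (assumed form).

    Both sides are indexed by hyperedges; (i, j) is an edge iff hyperedge i
    and hyperedge j are disjoint as vertex sets.
    """
    edge_sets = [frozenset(e) for e in hyperedges]
    indices = range(len(edge_sets))
    edges = {
        (i, j)
        for i, j in product(indices, indices)
        if edge_sets[i].isdisjoint(edge_sets[j])
    }
    return list(indices), list(indices), edges


def uncut_hyperedges(hyperedges, part):
    """Indices of hyperedges that lie entirely inside `part`."""
    part = set(part)
    return [i for i, e in enumerate(hyperedges) if set(e) <= part]


def is_biclique(edges, side_a, side_b):
    """Check that every pair (a, b) with a in side_a, b in side_b is an edge."""
    return all((a, b) in edges for a in side_a for b in side_b)


# Small example: a bisection whose two sides each contain two hyperedges.
H = [{0, 1}, {1, 2}, {3, 4}, {4, 5}, {2, 3}]
_, _, E = muchb_to_bipartite(H)
inside_t0 = uncut_hyperedges(H, {0, 1, 2})
inside_t1 = uncut_hyperedges(H, {3, 4, 5})
print(is_biclique(E, inside_t0, inside_t1))
```

In the example, the hyperedges inside one side of the bisection are all disjoint from those inside the other side, so they induce a complete bipartite subgraph, mirroring the completeness argument.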

Appendix B. Gap Amplification via Randomized Graph Product

In this section, we provide a full proof of the gap amplification step for biclique problems, thereby proving Theorem 1. The argument provided below is almost the same as the randomized graph product-based analysis of Khot (Appendix D [20]), which is in turn based on the analysis of Berman and Schnitger [29], except that we modify the construction slightly so that the reduction time is polynomial. (Using Khot’s result directly would result in the construction time being quasi-polynomial.)

Specifically, we will prove the following statement, which immediately implies Theorem 1.

Lemma A1.

Assuming SSEH and NP ≠ BPP, for every , no polynomial time algorithm can, given a bipartite graph with , distinguish between the following two cases:

- (Completeness) contains as a subgraph.

- (Soundness) does not contain as a subgraph.

Proof.

Let be any constant. Assume without loss of generality that . Consider any bipartite graph with . Let and . We construct a graph where as follows. For , pick random elements and , and add them to and respectively. Finally, for every and , there is an edge between U and V in if and only if there is an edge in the original graph G between and for every .
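A minimal sketch of this randomized graph product is given below. The parameter names `k` (number of sampled elements per new vertex) and `n_new` (number of new vertices per side) are placeholders for the quantities fixed in the proof, and sampling with replacement is an assumption of the sketch.

```python
import random


def randomized_graph_product(left, right, edges, k, n_new, seed=None):
    """Sample a randomized graph product of a bipartite graph.

    Each new left (resp. right) vertex is a set of k elements drawn
    independently at random from `left` (resp. `right`). Two new vertices
    U and V are adjacent iff every pair (u, v) with u in U and v in V is an
    edge of the original graph.
    """
    rng = random.Random(seed)
    edge_set = set(edges)
    new_left = [frozenset(rng.choice(left) for _ in range(k)) for _ in range(n_new)]
    new_right = [frozenset(rng.choice(right) for _ in range(k)) for _ in range(n_new)]
    new_edges = {
        (a, b)
        for a, U in enumerate(new_left)
        for b, V in enumerate(new_right)
        if all((u, v) in edge_set for u in U for v in V)
    }
    return new_left, new_right, new_edges
```

New vertices are referred to by their index in `new_left` and `new_right`, so repeated samples of the same set are kept as separate vertices. Building the edge set takes time polynomial in `n_new` and `k`, which is the point of sampling only a bounded number of new vertices instead of taking all tuples.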

Completeness. Suppose that the original graph G contains as a subgraph. Let one such subgraph be where and . Observe that induces a biclique in . For each , note that with probability independent of each other. As a result, from Chernoff bound, with high probability. Similarly, we also have with high probability. Thus, contains as a subgraph with high probability.

Soundness. Suppose that G does not contain as a subgraph. We will show that, with high probability, does not contain as a subgraph. To do so, we will first prove the following proposition.

Proposition A1.

For any set A, let denote the power set of A. Moreover, let be the “flattening” operation defined by . Then, with high probability, we have for every subset of size and for every subset of size .

Proof of Proposition A1.

Let us consider the probability that there exists a set of size such that . This can be bounded as follows.

Observe that, for each , is simply an independent Bernoulli random variable with mean . Hence, by Chernoff bound, we have

as desired.

Analogously, we also have for every subset of size with high probability, thereby concluding the proof of Proposition A1. ☐

With Proposition A1 ready, let us proceed with our soundness proof. Suppose that the event in Proposition A1 occurs. Consider any subset of size and any subset of size . Since and G does not contain as a subgraph, there exists and such that . From the definition of , this implies that and do not induce a biclique in . As a result, does not contain as a subgraph. From this and from Proposition A1, does not contain as a subgraph with high probability, concluding our soundness argument.
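The role of the flattening operation in the soundness argument can be made concrete with a short sketch. It assumes that flattening a family of sets means taking the union of its members; the helper name `missing_pair` is hypothetical.

```python
def flatten(family):
    """Union of all sets in the family (assumed meaning of 'flattening')."""
    return set().union(*family)


def missing_pair(edges, family_left, family_right):
    """Find a non-edge (u, v) of the original graph between the flattened families.

    Returns None when the flattened sets induce a biclique in the original
    graph. Any returned pair certifies that the two families do not induce
    a biclique in the product graph, since some member of `family_left`
    contains u and some member of `family_right` contains v.
    """
    for u in sorted(flatten(family_left)):
        for v in sorted(flatten(family_right)):
            if (u, v) not in edges:
                return (u, v)
    return None
```

If the flattened sets are large and the original graph has no large biclique, such a pair must exist, which is exactly the step used in the paragraph above.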

Since Lemma 2 asserts that distinguishing between the two cases above is NP-hard (assuming SSEH) and the above reduction takes polynomial time, we can conclude that, assuming SSEH and NP ≠ BPP, no polynomial time algorithm can distinguish the two cases stated in the lemma. ☐

Appendix C. Comparison Between SSEH and Strong UGC

In this section, we briefly discuss the similarities and differences between the classical Unique Games Conjecture [7], the Small Set Expansion Hypothesis [10] and the Strong Unique Games Conjecture [58]. Let us start by stating the Unique Games Conjecture, proposed by Khot in his influential work [7]:

Conjecture A1

(Unique Games Conjecture (UGC) [7]). For every , there exists such that, given a UG instance such that is regular, it is NP-hard to distinguish between the following two cases:

- (Completeness) There exists an assignment such that .

- (Soundness) For every assignment , .
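As a small illustration of the quantity that both cases refer to, the following sketch computes the fraction of constraints satisfied by an assignment. It assumes unweighted constraints and the convention that a constraint (u, v, π) is satisfied when π maps the label of u to the label of v; the encoding of permutations as dictionaries is an illustrative choice.

```python
def ug_value(constraints, assignment):
    """Fraction of unique-games constraints satisfied by `assignment`.

    constraints : list of (u, v, pi), where pi is a dict encoding a
                  permutation of the label set
    assignment  : dict mapping each vertex to a label
    """
    satisfied = sum(
        1 for u, v, pi in constraints if pi[assignment[u]] == assignment[v]
    )
    return satisfied / len(constraints)


# Three constraints over labels {0, 1}.
identity = {0: 0, 1: 1}
swap = {0: 1, 1: 0}
constraints = [("a", "b", identity), ("b", "c", swap), ("a", "c", identity)]
print(ug_value(constraints, {"a": 0, "b": 0, "c": 1}))
```

In this example no assignment satisfies all three constraints, because the first two force the labels of a and c to differ while the third forces them to agree.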

In other words, Khot’s UGC states that it is NP-hard to distinguish between a UG instance that is almost satisfiable and one in which only a small fraction of edges can be satisfied. While SSEH as stated in Conjecture 1 is not directly a statement about a UG instance, it has a strong connection with the UGC. Raghavendra and Steurer [10], in the same work in which they proposed the conjecture, observed that SSEH is implied by a variant of UGC in which the soundness is strengthened so that the constraint graph is also required to be a small-set expander (i.e., every small set has near perfect edge expansion). In a subsequent work, Raghavendra, Steurer and Tulsiani [11] showed that the two conjectures are in fact equivalent. More formally, the following variant of UGC is equivalent to SSEH:

Conjecture A2

(UGC with Small-Set Expansion (UGC with SSE) [10]). For every , there exist and such that, given a UG instance such that is regular, it is NP-hard to distinguish between the following two cases:

- (Completeness) There exists an assignment such that .

- (Soundness) For every assignment , . Moreover, satisfies for every of size .

While our result is based on SSEH (which is equivalent to UGC with SSE), Bhangale et al. [22] rely on another strengthened version of the UGC, which requires the following additional properties:

- There is not only an assignment that satisfies almost all constraints, but also a partial assignment to almost the whole graph such that every constraint between two assigned vertices is satisfied.

- The graph in the soundness case has to satisfy the following vertex expansion property: for every not too small subset of , its neighborhood spans almost the whole graph.

More formally, the conjecture can be stated as follows.

Conjecture A3

(Strong UGC (SUGC) [58]). For every , there exists such that, given a UG instance such that is regular, it is NP-hard to distinguish between the following two cases:

- (Completeness) There exists a subset of size at least and a partial assignment such that every edge inside S is satisfied.

- (Soundness) For every assignment , . Moreover, satisfies for every of size where denotes the set of all neighbors of S.
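The two additional ingredients of this conjecture, a partial assignment that satisfies every constraint inside the assigned set and the neighborhood of a vertex subset, can be sketched as follows. The constraint encoding (u, v, π) with π given as a dictionary, and the convention that a constraint is satisfied when π maps the label of u to the label of v, are assumptions of the sketch.

```python
def partial_assignment_consistent(constraints, partial):
    """Check that every constraint between two assigned vertices is satisfied.

    constraints : list of (u, v, pi), with pi a dict encoding a permutation
    partial     : dict assigning labels to a subset of the vertices
    """
    for u, v, pi in constraints:
        if u in partial and v in partial and pi[partial[u]] != partial[v]:
            return False
    return True


def neighborhood(edges, subset):
    """Set of all vertices adjacent to at least one vertex of `subset`."""
    subset = set(subset)
    result = set()
    for u, v in edges:
        if u in subset:
            result.add(v)
        if v in subset:
            result.add(u)
    return result
```

The completeness case asks for a large set on which `partial_assignment_consistent` holds, while the soundness case bounds the size of `neighborhood` from below for every subset that is not too small.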

The conjecture was first formulated by Bansal and Khot [58]. We note here that the name “Strong UGC” was not given by Bansal and Khot, but was coined by Bhangale et al. [22]. In fact, the name “Strong UGC” was used earlier by Khot and Regev [8] to denote a different variant of UGC, in which the completeness is strengthened to be the same as in Conjecture A3 but the soundness does not include the vertex expansion property. Interestingly, this variant of UGC is equivalent to the original version of the conjecture [8]. Moreover, as pointed out in [58], it is not hard to see that the soundness property of SUGC can also be achieved by simply adding a complete graph with negligible weight to the constraint graph. In other words, both the completeness and soundness properties of SUGC can be achieved separately. However, it is not known whether SUGC is implied by UGC.

To the best of our knowledge, it is not known whether either of Conjecture A2 and Conjecture A3 implies the other. In particular, while the soundness cases of both conjectures require certain expansion properties of the graphs, Conjecture A2 deals with edge expansion whereas Conjecture A3 deals with vertex expansion; even though these notions are closely related, they do not imply each other. Moreover, as pointed out earlier, the completeness property of SUGC is stronger than that of UGC with SSE; we are not aware of any reduction from SSE to UG that achieves this while maintaining the same soundness as in Conjecture A2.

Finally, we note that both soundness and completeness properties of SUGC are crucial for Bhangale et al.’s reduction [22]. Hence, it is unlikely that their technique applies to SSEH. Similarly, our reduction relies crucially on edge expansion properties of the graph and, thus, is unlikely to be applicable to SUGC.

References

- Arora, S.; Lund, C.; Motwani, R.; Sudan, M.; Szegedy, M. Proof Verification and the Hardness of Approximation Problems. J. ACM 1998, 45, 501–555.

- Arora, S.; Safra, S. Probabilistic Checking of Proofs: A New Characterization of NP. J. ACM 1998, 45, 70–122.

- Håstad, J. Some Optimal Inapproximability Results. J. ACM 2001, 48, 798–859.

- Håstad, J. Clique is Hard to Approximate within n^{1−ε}; Springer: Berlin/Heidelberg, Germany, 1996; pp. 627–636.

- Moshkovitz, D. The Projection Games Conjecture and the NP-Hardness of ln n-Approximating Set-Cover. Theory Comput. 2015, 11, 221–235.

- Dinur, I.; Steurer, D. Analytical approach to parallel repetition. In Proceedings of the STOC ’14, Forty-Sixth Annual ACM Symposium on Theory of Computing, New York, NY, USA, 31 May–3 June 2014; pp. 624–633.

- Khot, S. On the Power of Unique 2-prover 1-round Games. In Proceedings of the STOC ’02, Thirty-Fourth Annual ACM Symposium on Theory of Computing, Montreal, QC, Canada, 19–21 May 2002; pp. 767–775.

- Khot, S.; Regev, O. Vertex cover might be hard to approximate to within 2 − ε. J. Comput. Syst. Sci. 2008, 74, 335–349.

- Khot, S.; Kindler, G.; Mossel, E.; O’Donnell, R. Optimal Inapproximability Results for MAX-CUT and Other 2-Variable CSPs? SIAM J. Comput. 2007, 37, 319–357.

- Raghavendra, P.; Steurer, D. Graph Expansion and the Unique Games Conjecture. In Proceedings of the STOC ’10, Forty-Second ACM Symposium on Theory of Computing, Cambridge, MA, USA, 5–8 June 2010; pp. 755–764.

- Raghavendra, P.; Steurer, D.; Tulsiani, M. Reductions Between Expansion Problems. In Proceedings of the CCC ’12, 27th Conference on Computational Complexity, Porto, Portugal, 26–29 June 2012; pp. 64–73.

- Garey, M.R.; Johnson, D.S. Computers and Intractability: A Guide to the Theory of NP-Completeness; W. H. Freeman & Co.: New York, NY, USA, 1979.

- Johnson, D.S. The NP-completeness Column: An Ongoing Guide. J. Algorithms 1987, 8, 438–448.

- Peeters, R. The Maximum Edge Biclique Problem is NP-complete. Discrete Appl. Math. 2003, 131, 651–654.

- Khot, S. Improved Inapproximability Results for MaxClique, Chromatic Number and Approximate Graph Coloring. In Proceedings of the 42nd IEEE Symposium on Foundations of Computer Science, Las Vegas, NV, USA, 14–17 October 2001; pp. 600–609.

- Khot, S.; Ponnuswami, A.K. Better Inapproximability Results for MaxClique, Chromatic Number and Min-3Lin-Deletion. In Proceedings of the International Colloquium on Automata, Languages, and Programming, Venice, Italy, 10–14 July 2006; pp. 226–237.

- Zuckerman, D. Linear Degree Extractors and the Inapproximability of Max Clique and Chromatic Number. Theory Comput. 2007, 3, 103–128.

- Feige, U. Relations Between Average Case Complexity and Approximation Complexity. In Proceedings of the STOC ’02, Thirty-Fourth Annual ACM Symposium on Theory of Computing, Montreal, QC, Canada, 19–21 May 2002; pp. 534–543.

- Feige, U.; Kogan, S. Hardness of Approximation of the Balanced Complete Bipartite Subgraph Problem; Technical Report; Weizmann Institute of Science: Rehovot, Israel, 2004.

- Khot, S. Ruling Out PTAS for Graph Min-Bisection, Dense k-Subgraph, and Bipartite Clique. SIAM J. Comput. 2006, 36, 1025–1071.

- Ambühl, C.; Mastrolilli, M.; Svensson, O. Inapproximability Results for Maximum Edge Biclique, Minimum Linear Arrangement, and Sparsest Cut. SIAM J. Comput. 2011, 40, 567–596.

- Bhangale, A.; Gandhi, R.; Hajiaghayi, M.T.; Khandekar, R.; Kortsarz, G. Bicovering: Covering Edges With Two Small Subsets of Vertices. SIAM J. Discrete Math. 2016, 31, 2626–2646.

- Manurangsi, P. Almost-Polynomial Ratio ETH-Hardness of Approximating Densest k-Subgraph. In Proceedings of the STOC ’17, 49th Annual ACM SIGACT Symposium on Theory of Computing, Montreal, QC, Canada, 19–23 June 2017; pp. 954–961.

- Impagliazzo, R.; Paturi, R.; Zane, F. Which Problems Have Strongly Exponential Complexity? J. Comput. Syst. Sci. 2001, 63, 512–530.

- Dinur, I. Mildly exponential reduction from gap 3SAT to polynomial-gap label-cover. ECCC Electron. Colloq. Comput. Complex. 2016, 23, 128.

- Manurangsi, P.; Raghavendra, P. A Birthday Repetition Theorem and Complexity of Approximating Dense CSPs. In Proceedings of the ICALP ’17, 44th International Colloquium on Automata, Languages, and Programming, Warsaw, Poland, 10–14 July 2017; pp. 78:1–78:15.

- Jerrum, M. Large Cliques Elude the Metropolis Process. Random Struct. Algorithms 1992, 3, 347–359.

- Kučera, L. Expected Complexity of Graph Partitioning Problems. Discrete Appl. Math. 1995, 57, 193–212.

- Berman, P.; Schnitger, G. On the Complexity of Approximating the Independent Set Problem. Inf. Comput. 1992, 96, 77–94.

- Blum, A. Algorithms for Approximate Graph Coloring. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1991.

- Goldschmidt, O.; Hochbaum, D.S. A polynomial algorithm for the k-cut problem for fixed k. Math. Oper. Res. 1994, 19, 24–37.

- Saran, H.; Vazirani, V.V. Finding k Cuts Within Twice the Optimal. SIAM J. Comput. 1995, 24, 101–108.

- Naor, J.S.; Rabani, Y. Tree Packing and Approximating k-cuts. In Proceedings of the SODA ’01, Twelfth Annual ACM-SIAM Symposium on Discrete Algorithms, Washington, DC, USA, 7–9 January 2001; pp. 26–27.

- Zhao, L.; Nagamochi, H.; Ibaraki, T. Approximating the minimum k-way cut in a graph via minimum 3-way cuts. J. Comb. Optim. 2001, 5, 397–410.

- Ravi, R.; Sinha, A. Approximating k-cuts via Network Strength. In Proceedings of the SODA ’02, Thirteenth Annual ACM-SIAM Symposium on Discrete Algorithms, San Francisco, CA, USA, 6–8 January 2002; pp. 621–622.

- Xiao, M.; Cai, L.; Yao, A.C.C. Tight approximation ratio of a general greedy splitting algorithm for the minimum k-way cut problem. Algorithmica 2011, 59, 510–520.

- Gupta, A.; Lee, E.; Li, J. An FPT Algorithm Beating 2-Approximation for k-Cut. arXiv, 2018; arXiv:1710.08488.

- Andersen, R.; Chellapilla, K. Finding Dense Subgraphs with Size Bounds. In Proceedings of the International Workshop on Algorithms and Models for the Web-Graph 2009, Barcelona, Spain, 12–13 February 2009; pp. 25–37.

- Andersen, R. Finding large and small dense subgraphs. arXiv, 2007; arXiv:cs/0702032.

- Khuller, S.; Saha, B. On Finding Dense Subgraphs. In Proceedings of the International Colloquium on Automata, Languages, and Programming, Rhodes, Greece, 5–12 July 2009; pp. 597–608.

- Bergner, L. Small Set Expansion. Master’s Thesis, École Polytechnique Fédérale de Lausanne, Lausanne, Switzerland, 2013.

- Kortsarz, G.; Peleg, D. On Choosing a Dense Subgraph (Extended Abstract). In Proceedings of the 34th Annual Symposium on Foundations of Computer Science, Palo Alto, CA, USA, 3–5 November 1993; pp. 692–701.

- Feige, U.; Seltser, M. On the Densest k-Subgraph Problem; Technical Report; Weizmann Institute of Science: Rehovot, Israel, 1997.

- Srivastav, A.; Wolf, K. Finding Dense Subgraphs with Semidefinite Programming. In Proceedings of the International Workshop on Approximation Algorithms for Combinatorial Optimization, Aalborg, Denmark, 18–19 July 1998; pp. 181–191.

- Feige, U.; Langberg, M. Approximation Algorithms for Maximization Problems Arising in Graph Partitioning. J. Algorithms 2001, 41, 174–211.

- Feige, U.; Kortsarz, G.; Peleg, D. The Dense k-Subgraph Problem. Algorithmica 2001, 29, 410–421.

- Asahiro, Y.; Hassin, R.; Iwama, K. Complexity of finding dense subgraphs. Discrete Appl. Math. 2002, 121, 15–26.

- Goldstein, D.; Langberg, M. The Dense k Subgraph problem. arXiv, 2009; arXiv:0912.5327.

- Bhaskara, A.; Charikar, M.; Chlamtac, E.; Feige, U.; Vijayaraghavan, A. Detecting high log-densities: An O(n^{1/4}) approximation for densest k-subgraph. In Proceedings of the STOC ’10, 42nd ACM Symposium on Theory of Computing, Cambridge, MA, USA, 5–8 June 2010; pp. 201–210.

- Alon, N.; Arora, S.; Manokaran, R.; Moshkovitz, D.; Weinstein, O. Inapproximability of Densest k-Subgraph from Average Case Hardness. Unpublished Manuscript. 2018.

- Bhaskara, A.; Charikar, M.; Vijayaraghavan, A.; Guruswami, V.; Zhou, Y. Polynomial Integrality Gaps for Strong SDP Relaxations of Densest k-subgraph. In Proceedings of the SODA ’12, Twenty-Third Annual ACM-SIAM Symposium on Discrete Algorithms, Kyoto, Japan, 17–19 January 2012; pp. 388–405.

- Braverman, M.; Ko, Y.K.; Rubinstein, A.; Weinstein, O. ETH Hardness for Densest-k-Subgraph with Perfect Completeness. In Proceedings of the SODA ’17, Twenty-Eighth Annual ACM-SIAM Symposium on Discrete Algorithms, Barcelona, Spain, 16–19 January 2017; pp. 1326–1341.

- Manurangsi, P. On Approximating Projection Games. Master’s Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2015.

- Chlamtác, E.; Manurangsi, P.; Moshkovitz, D.; Vijayaraghavan, A. Approximation Algorithms for Label Cover and The Log-Density Threshold. In Proceedings of the SODA ’17, Twenty-Eighth Annual ACM-SIAM Symposium on Discrete Algorithms, Barcelona, Spain, 16–19 January 2017; pp. 900–919.

- O’Donnell, R. Analysis of Boolean Functions; Cambridge University Press: Cambridge, UK, 2014.

- Mossel, E.; O’Donnell, R.; Oleszkiewicz, K. Noise stability of functions with low influences: Invariance and optimality. Ann. Math. 2010, 171, 295–341.

- Svensson, O. Hardness of Vertex Deletion and Project Scheduling. Theory Comput. 2013, 9, 759–781.

- Bansal, N.; Khot, S. Optimal Long Code Test with One Free Bit. In Proceedings of the FOCS ’09, 50th Annual IEEE Symposium on Foundations of Computer Science, Atlanta, GA, USA, 25–27 October 2009; pp. 453–462.

- Louis, A.; Raghavendra, P.; Vempala, S. The Complexity of Approximating Vertex Expansion. In Proceedings of the 2013 IEEE 54th Annual Symposium on Foundations of Computer Science (FOCS), Berkeley, CA, USA, 26–29 October 2013; pp. 360–369.

- Kleinberg, J.; Papadimitriou, C.; Raghavan, P. Segmentation Problems. J. ACM 2004, 51, 263–280.

- Alon, N.; Feige, U.; Wigderson, A.; Zuckerman, D. Derandomized Graph Products. Comput. Complex. 1995, 5, 60–75.

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).