2.1.1. Reversible Invisible Watermarking Scheme for Hyperspectral Images

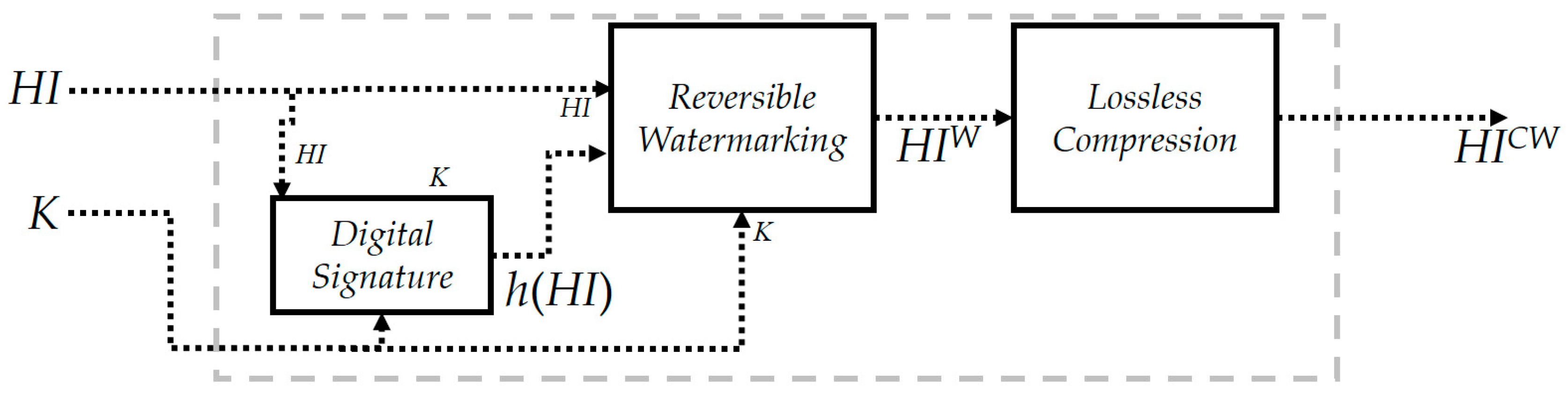

In [13], we proposed a preliminary version of a reversible invisible watermarking scheme for hyperspectral images. This scheme relies on the approaches outlined in [9,14] and belongs to the category of additive schemes. In an additive scheme, the watermark signal w (i.e., a watermark string) is directly added to the input signal, namely the pixels of the input hyperspectral image HI. In this way, the output (i.e., the watermarked hyperspectral image HIW) contains both signals (the one that represents HI and the one that represents the watermark w). A secret key, K, is used to perform the embedding phase.

It is important to note that this scheme is reversible. Therefore, it is possible to restore the original HI and to extract the watermark w. In addition, this scheme is fragile: a simple modification of HIW might cause the disappearance of the embedded watermark, w.

The basic objective of our scheme is to spread the bits of w among all the bands of HI. More precisely, each bit of w (referred to as bw) is embedded into a set of four pixels, SP. These pixels are pseudo-randomly selected by means of a pseudo-random number generator (PRNG) seeded with the secret key, K.
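The key-driven pixel selection can be illustrated with a minimal Python sketch. It is not the implementation of [13]: the function name `select_pixel_sets`, the use of Python's `random.Random` as the PRNG, and the choice of drawing all sets at once by sampling without replacement are assumptions made for illustration only.

```python
import random

def select_pixel_sets(height, width, key, num_sets):
    """Pick `num_sets` disjoint sets of four pixel coordinates from one band.

    The key seeds the PRNG, so an embedder and an extractor that share the
    key visit the same coordinates in the same order.
    """
    # Assumption: random.Random stands in for the keyed PRNG of the scheme.
    # It is not cryptographically secure.
    rng = random.Random(key)
    coords = [(r, c) for r in range(height) for c in range(width)]
    if 4 * num_sets > len(coords):
        raise ValueError("not enough pixels for the requested number of sets")
    # Sampling without replacement guarantees that no pixel is selected twice.
    chosen = rng.sample(coords, 4 * num_sets)
    return [chosen[i:i + 4] for i in range(0, len(chosen), 4)]
```

Calling the function twice with the same key returns the same sets, which is the property the extraction phase relies on.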

Some sets SP cannot be used to carry bw, because the extraction algorithm might be unable to extract the hidden bit. It is therefore necessary to classify the sets into two categories: “carrier sets” and “non-carrier sets”. A carrier set is a set in which a bit, bw, can be embedded, while a non-carrier set is a set in which a bit, bw, cannot be embedded.

When the algorithm identifies a carrier set SP, a bit bw can be embedded by means of the “embedWatermarkBit” procedure, reported in Algorithm 1, which returns as output the watermarked set, i.e., the set SP in which bw is embedded. To classify a set SP, the relationship between SP and its estimation is exploited. This estimation is computed by means of a linear combination of the pixels of SP, as explained in the “estimate” procedure (see Algorithm 2). By using the estimation, the extraction algorithm can classify a set SP in two steps. Furthermore, the extraction algorithm can restore the original pixel values of a carrier set by manipulating the corresponding watermarked carrier set. In this manner, the reversibility property is obtained.

| Algorithm 1 The “embedWatermarkBit” procedure (pseudo-code from [13]) |

| procedure embedWatermarkBit (SP, bw) |

if then ; ; ; ; else ; ; ; ; endif ; return ;

|

| end procedure |

Algorithm 3 reports the pseudo-code of the “embed” procedure, which embeds the watermark string w into the input hyperspectral image HI by using the secret key K.

In detail, the pseudo-random number generator (PRNG) G is initialized by using K as a seed. Subsequently, w is subdivided into M substrings, where M is the number of bands of HI. The ith substring, wi, is embedded into the ith band of HI, denoted as HI(i). Therefore, each band carries at least ⌊N/M⌋ bits, where N denotes the length of w.
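The subdivision of w can be sketched as follows. The text does not say how the N bits are distributed when N is not a multiple of M, so this sketch assumes that every substring receives ⌊N/M⌋ bits and that the first N mod M substrings receive one extra bit. The function name `split_watermark` is hypothetical.

```python
def split_watermark(w, num_bands):
    """Split the bit string `w` into `num_bands` contiguous substrings.

    Assumption: every substring gets floor(N / M) bits, and the first
    N mod M substrings get one extra bit, so that all N bits are assigned.
    """
    base, extra = divmod(len(w), num_bands)
    parts, start = [], 0
    for i in range(num_bands):
        size = base + (1 if i < extra else 0)
        parts.append(w[start:start + size])
        start += size
    return parts
```

For example, a 10-bit watermark spread over 4 bands yields substrings of 3, 3, 2, and 2 bits.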

The algorithm considers each substring wi and repeats the following steps until all the bits composing wi are embedded into HI(i). Four pixels are pseudo-randomly selected by using the PRNG G to compose a set SP. Subsequently, the estimation of SP (composed of four estimated pixels) is calculated. This estimation is computed by a linear combination of the pixels of SP, as shown in the “estimate” procedure of Algorithm 2. In order to classify the set SP, the difference D, in absolute value, between SP and its estimation is computed. If D is less than 1, the set SP is classified as a carrier set and, therefore, the “embedWatermarkBit” procedure (Algorithm 1) is performed in order to embed bw into SP. Thus, the processing of bit bw is complete. The coordinates of the four selected pixels are no longer selectable, and the algorithm proceeds by embedding the next bit of wi.

| Algorithm 2 The “estimate” procedure (pseudo-code from [13]) |

| procedure estimate (SP) |

; ; ; ; ; return ;

|

| end procedure |

If SP is instead classified as a non-carrier set, the values of the pixels of SP are modified in order to increase the difference D. In this manner, the extraction algorithm is able to correctly recognize that SP is a non-carrier set. As a consequence, the bit bw cannot be embedded into the set SP, and four other pixels (different from the ones previously selected) are selected to compose a new set SP, in order to try the embedding again.

| Algorithm 3 The “embed” procedure (pseudo-code from [13]) |

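| procedure embed (HI, w, K) |

Let G be a PRNG;
Initialize G by using K as a seed;
Subdivide w into w1, …, wM;
for i = 1 to M do
  repeat
    By using G along with K, select four pixels from HI(i) to compose the set SP;
    Compute the estimation of SP by using estimate(SP);
    Compute D, the difference in absolute value between SP and its estimation;
    if D < 1 then
      Embed the next bit bw of wi into SP by using embedWatermarkBit(SP, bw);
    else
      Modify SP, by using embedWatermarkBit(SP, 0) or embedWatermarkBit(SP, 1), in order to increase the value of D;
    endif
    Set the coordinates of the four selected pixels as no longer selectable;
  until all the bits of wi are embedded;
end for
Copy all modified and unmodified pixels to HIW;
return HIW;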

|

| end procedure |
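The control flow of the “embed” procedure for a single band can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the implementation of [13]. The callables `estimate`, `distance`, `embed_bit`, and `push_away` are hypothetical stand-ins for the estimation, the computation of D, the embedding into a carrier set, and the modification of a non-carrier set. The caller supplies them, and `random.Random` stands in for the PRNG G.

```python
import random

def embed_band(band, bits, key, estimate, distance, embed_bit, push_away):
    """Embed `bits` into `band`, a dict mapping coordinate -> pixel value.

    estimate, distance, embed_bit and push_away are caller-supplied,
    hypothetical stand-ins for the operations described in the text.
    Returns the watermarked band and the coordinates of the carrier sets.
    """
    rng = random.Random(key)       # assumption: stand-in for the keyed PRNG G
    free = sorted(band)            # coordinates that are still selectable
    out = dict(band)               # watermarked copy; the input is untouched
    carriers = []
    for bit in bits:
        embedded = False
        while not embedded:
            if len(free) < 4:
                raise ValueError("band exhausted before all bits were embedded")
            coords = rng.sample(free, 4)
            for c in coords:
                free.remove(c)     # these pixels are no longer selectable
            sp = [band[c] for c in coords]
            if distance(sp, estimate(sp)) < 1:   # carrier set: embed the bit
                new_values = embed_bit(sp, bit)
                carriers.append(coords)
                embedded = True
            else:                                # non-carrier set: increase D, retry
                new_values = push_away(sp)
            for c, v in zip(coords, new_values):
                out[c] = v
    return out, carriers
```

Passing the four operations as parameters keeps the loop independent of the concrete estimation and embedding formulas, which are defined by Algorithms 1 and 2.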

2.1.2. Multiband Lossless Compression of Hyperspectral Images

The predictive-based multiband lossless compression for hyperspectral images (LMBHI) [11] algorithm exploits the inter-band correlation (i.e., the correlation among the neighboring pixels of contiguous bands) as well as the intra-band correlation (i.e., the correlation among the neighboring pixels of the same band), by using a predictive coding model.

Each pixel of the input hyperspectral image HI is predicted by using one of the following predictive structures: the 2-D linearized median predictor (2-D LMP) [15] and the 3-D multiband linear predictor (3D-MBLP).

2-D LMP considers only the intra-band correlation and is used only for the pixels of the first band, for which there are no previous reference bands.

3D-MBLP instead exploits both the intra-band and the inter-band correlations and is used to predict the pixels of all the bands except for the first one.

Once the prediction step is performed, the prediction error, e, is modelled and coded. In particular, e is obtained by subtracting the predicted value of the current pixel from its actual value.
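The role of the prediction error in a lossless scheme can be illustrated with a short Python sketch. The predictor used here, `previous_pixel`, is a toy stand-in chosen for brevity and is neither 2-D LMP nor 3D-MBLP. All function names are hypothetical. The point is only that a decoder that applies the same predictor can rebuild every pixel exactly from e.

```python
def prediction_errors(pixels, predict):
    """Return e[t] = pixels[t] - predict(pixels[:t]) for every pixel."""
    return [x - predict(pixels[:t]) for t, x in enumerate(pixels)]

def reconstruct(errors, predict):
    """Invert prediction_errors: rebuild each pixel from its prediction error."""
    pixels = []
    for e in errors:
        pixels.append(predict(pixels) + e)
    return pixels

def previous_pixel(history):
    """Toy predictor (NOT 2-D LMP or 3D-MBLP): repeat the last pixel, or 0."""
    return history[-1] if history else 0
```

For the row `[100, 102, 101, 105]`, the errors are `[100, 2, -1, 4]`. After the first pixel they are small values, which is what makes them cheaper to code than the pixels themselves.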

The 3D-MBLP predictor uses a three-dimensional prediction context, which is defined by two parameters, B and N: B indicates the number of previous reference bands, and N indicates the number of pixels of the current band and of the previous B reference bands that are included in the prediction context.

In order to permit an efficient and relative indexing of the pixels that form the prediction context of the 3D-MBLP, an enumeration, E, is defined. We denote with the ith pixel (according to the enumeration E) of the jth band. In addition, we suppose that the current band is the th band. In this manner, is referred to the pixel that has the same spatial coordinates of the current pixel (denoted as ), in the jth band.

The 3D-MBLP predictor is based on the least squares optimization technique, and the prediction is computed as in Equation (1).

It is important to point out that the prediction coefficients are chosen to minimize the energy of the prediction error, as in Equation (2).

Equation (2) can be rewritten by using the matrix notation.

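Subsequently, by computing the derivative of the prediction error energy with respect to the coefficients and setting it to zero, the optimal coefficients can be obtained as the solution of a linear system (Equation (3)).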
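For orientation, a standard least squares formulation consistent with the description above can be written as follows. The notation is assumed for illustration and may differ from that of [11]: $x_{i,j}$ is the ith pixel (according to E) of the jth band, $k$ is the index of the current band, $\alpha_1, \dots, \alpha_B$ are the prediction coefficients, $C$ is the $N \times B$ matrix with entries $C_{i,j} = x_{i,k-j}$, and $X = (x_{1,k}, \dots, x_{N,k})^{T}$.

```latex
\begin{align*}
\hat{x}_{0,k} &= \sum_{j=1}^{B} \alpha_j \, x_{0,k-j} \\
P &= \sum_{i=1}^{N} \left( x_{i,k} - \hat{x}_{i,k} \right)^{2}
   = (C\alpha - X)^{T} (C\alpha - X) \\
\frac{\partial P}{\partial \alpha} &= 2\, C^{T} (C\alpha - X) = 0
   \;\Longrightarrow\; (C^{T} C)\, \alpha = C^{T} X
\end{align*}
```

Here $\hat{x}_{i,k} = \sum_{j=1}^{B} \alpha_j \, x_{i,k-j}$ is the ith entry of $C\alpha$, so the sum of squared errors and the matrix form of $P$ are the same quantity.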

Once the coefficients that solve the linear system of Equation (3) are computed, the prediction of the current pixel can be calculated.
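A least squares predictor of this kind can be sketched numerically in pure Python. The sketch assumes that the context is given as a matrix `c` with one row per context pixel and one column per reference band, and as a vector `x` holding the corresponding pixels of the current band. This layout and all function names are illustrative assumptions, not the implementation of [11]. The coefficients are obtained by solving the normal equations (cᵀc)α = cᵀx.

```python
from fractions import Fraction

def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with exact rational arithmetic."""
    n = len(a)
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("singular system: the context does not determine the coefficients")
        m[col], m[pivot] = m[pivot], m[col]
        m[col] = [v / m[col][col] for v in m[col]]   # scale the pivot row to 1
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [row[n] for row in m]

def least_squares_coefficients(c, x):
    """Return alpha minimizing the squared error of c*alpha - x (normal equations)."""
    cols = list(zip(*c))
    ctc = [[sum(p * q for p, q in zip(u, v)) for v in cols] for u in cols]
    ctx = [sum(p * q for p, q in zip(u, x)) for u in cols]
    return solve_linear_system(ctc, ctx)

def predict(alpha, co_located):
    """Predict the current pixel from the co-located pixels of the reference bands."""
    return sum(a * p for a, p in zip(alpha, co_located))
```

Exact rational arithmetic is used only to keep the example deterministic. A practical implementation would more likely rely on floating-point linear algebra and round the prediction to an integer.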